Power Line Monitoring through Data Integrity Analysis with Q-Learning Based Data Analysis Network

Abstract

1. Introduction

2. Overview of Classification Approaches Based on Power Line Data Analysis

Problem Definition

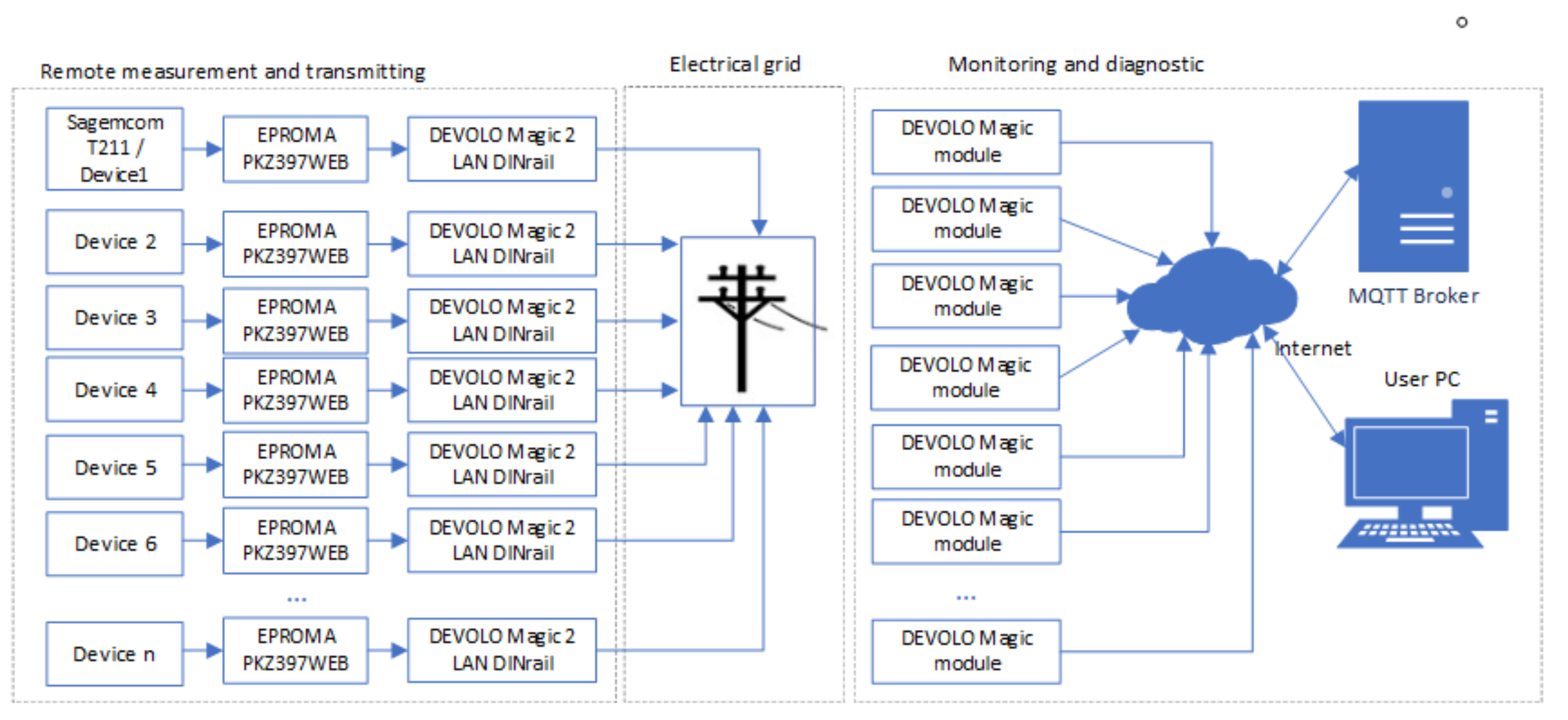

- Data. How much data will be collected, and of what kind.

- Transfer of data. Which technologies will be used and how often data will be transferred.

- Data flow broker. Whether a data flow broker will be used, as is the case, for example, with IoT power meters.

- Data collection. How data will be collected.

- Data pre-processing. How the data will be filtered and how often it will be submitted for analysis.

- Data monitoring. Real-time or batch presentation of incoming data.

- System training. Annotating the collected data and training the neural network model.

- Diagnostics of the situation. The received data are used to diagnose the operation of the systems and their individual devices and to identify potential risks.

- Re-training of the system. Training with continuously augmented data and adjusting the previously trained network.

- Does the system work in real time?

- Does the system work at a fixed time interval, accumulating data packets?

- If data is collected, for how long must it be collected?

- If data is collected, how much needs to be collected?

- How should the system account for data that is not received for a certain period of time?

- Is data filtering or normalization necessary?

- Does data collection on the server include all other processes of the full neural network training and deployment pipeline, or is it more of a web front end with secure access to the internal neural network analysis resources, similar to REST-based services?
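Two of the questions above, how to account for data that does not arrive for some time and whether normalization is needed, can be made concrete with a small sketch. The function names, the `tolerance` factor, and the sample values below are illustrative assumptions and are not taken from the paper; z-score scaling is only one possible normalization.

```python
from statistics import mean, pstdev


def find_gaps(timestamps, expected_interval, tolerance=1.5):
    """Return (previous, current) timestamp pairs whose spacing exceeds
    tolerance * expected_interval (hypothetical threshold)."""
    gaps = []
    for prev, curr in zip(timestamps, timestamps[1:]):
        if curr - prev > tolerance * expected_interval:
            gaps.append((prev, curr))
    return gaps


def zscore_normalize(values):
    """Scale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant signal carries no spread; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Made-up readings: seconds since start and a voltage-like measurement.
timestamps = [0, 10, 20, 50, 60]
readings = [230.0, 231.0, 229.0, 230.0, 230.0]

gaps = find_gaps(timestamps, expected_interval=10)
normalized = zscore_normalize(readings)
```

Here the gap list records where the meter was silent for longer than expected, so a later stage can decide whether to interpolate, discard the window, or raise an alert.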

3. Methodology

3.1. Proposed Data Analysis Model

3.2. Q-Learning Based Data Analysis Network

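- Initialize $Q(s, a)$ arbitrarily.

- For each step: choose the action $a$ for the current state $s$. Take action $a$, observe the reward $r$ and the next state $s'$, and update $Q(s, a)$ accordingly.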
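The steps above follow the usual tabular Q-learning loop, which can be sketched as below. This is a minimal illustration under stated assumptions, not the network used in the paper: the epsilon-greedy exploration, the values of `alpha`, `gamma`, and `epsilon`, and the toy `chain_step` environment are all hypothetical. The update applied is the standard rule $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$.

```python
import random
from collections import defaultdict


def q_learning(step, actions, start_state, episodes=500, max_steps=50,
               alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = defaultdict(float)  # Q(s, a); the arbitrary initial value is 0.0
    for _ in range(episodes):
        state = start_state
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                # Exploit; shuffle first so that ties are broken at random.
                shuffled = rng.sample(actions, len(actions))
                action = max(shuffled, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def chain_step(state, action):
    """Toy 4-state chain (hypothetical): action 1 moves right, action 0 moves
    left; reaching state 3 ends the episode with reward 1."""
    next_state = min(state + 1, 3) if action == 1 else max(state - 1, 0)
    done = next_state == 3
    return next_state, (1.0 if done else 0.0), done


q_table = q_learning(chain_step, actions=[0, 1], start_state=0)
```

After training on this toy chain, the learned table prefers moving right in every non-terminal state, which is the expected greedy policy for an environment whose only reward sits at the right end.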

4. Experimental Setup

5. Results

5.1. Analysis of Real Data in Small Scale Scenario

5.2. Analysis of Simulated Full Data Flow of a Whole District

5.3. Analysis of Real Data in Power Line Failure Scenarios

- Due to network issues, data from the meter is arriving with a larger delay than intended and at irregular intervals.

- The data does not reach the broker for an extended length of time, indicating a malfunction in the system.
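The two failure scenarios above can be told apart from message arrival times alone. The sketch below is one possible way to do so; `jitter_factor`, `outage_factor`, and the labels are hypothetical and are not thresholds reported in the paper.

```python
def classify_arrivals(timestamps, expected_interval, now,
                      jitter_factor=1.5, outage_factor=10.0):
    """Label a meter's message stream as 'normal', 'irregular', or 'outage'.

    timestamps: arrival times at the broker, in seconds, in ascending order.
    now: current time in the same units.
    """
    # No message at all, or prolonged silence since the last message: outage.
    if not timestamps:
        return "outage"
    if now - timestamps[-1] > outage_factor * expected_interval:
        return "outage"
    # Any spacing well above the intended interval: delayed, irregular delivery.
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if any(i > jitter_factor * expected_interval for i in intervals):
        return "irregular"
    return "normal"


status_ok = classify_arrivals([0, 10, 20, 30], expected_interval=10, now=35)
status_late = classify_arrivals([0, 10, 35, 42], expected_interval=10, now=45)
status_down = classify_arrivals([0, 10, 20], expected_interval=10, now=200)
```

Checking the outage condition first means a long silence is never reported as mere irregularity, which matches the distinction drawn between the two scenarios.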

5.4. Classification of Power Line Failures

5.5. Computational Performance

6. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SDN | Software-defined networking |
| MQTT | Message Queuing Telemetry Transport |
| SVM | Support vector machine |
| DL | Deep learning |
| QoS | Quality of Service |
| ADWIN | Adaptive Windowing approach |
| MDP | Markov decision process |
| Q-learning | Model-free reinforcement learning algorithm |
| Sagemcom T211 | Model of smart power meter |
| TCP/IP | Transmission Control Protocol/Internet Protocol |
| Mosquitto MQTT | Open-source (EPL/EDL licensed) message broker software |
| NTP | Network Time Protocol |
| MSE | Mean square error |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maskeliūnas, R.; Pomarnacki, R.; Khang Huynh, V.; Damaševičius, R.; Plonis, D. Power Line Monitoring through Data Integrity Analysis with Q-Learning Based Data Analysis Network. Remote Sens. 2023, 15, 194. https://doi.org/10.3390/rs15010194
