Siam-Sort: Multi-Target Tracking in Video SAR Based on Tracking by Detection and Siamese Network

Abstract

1. Introduction

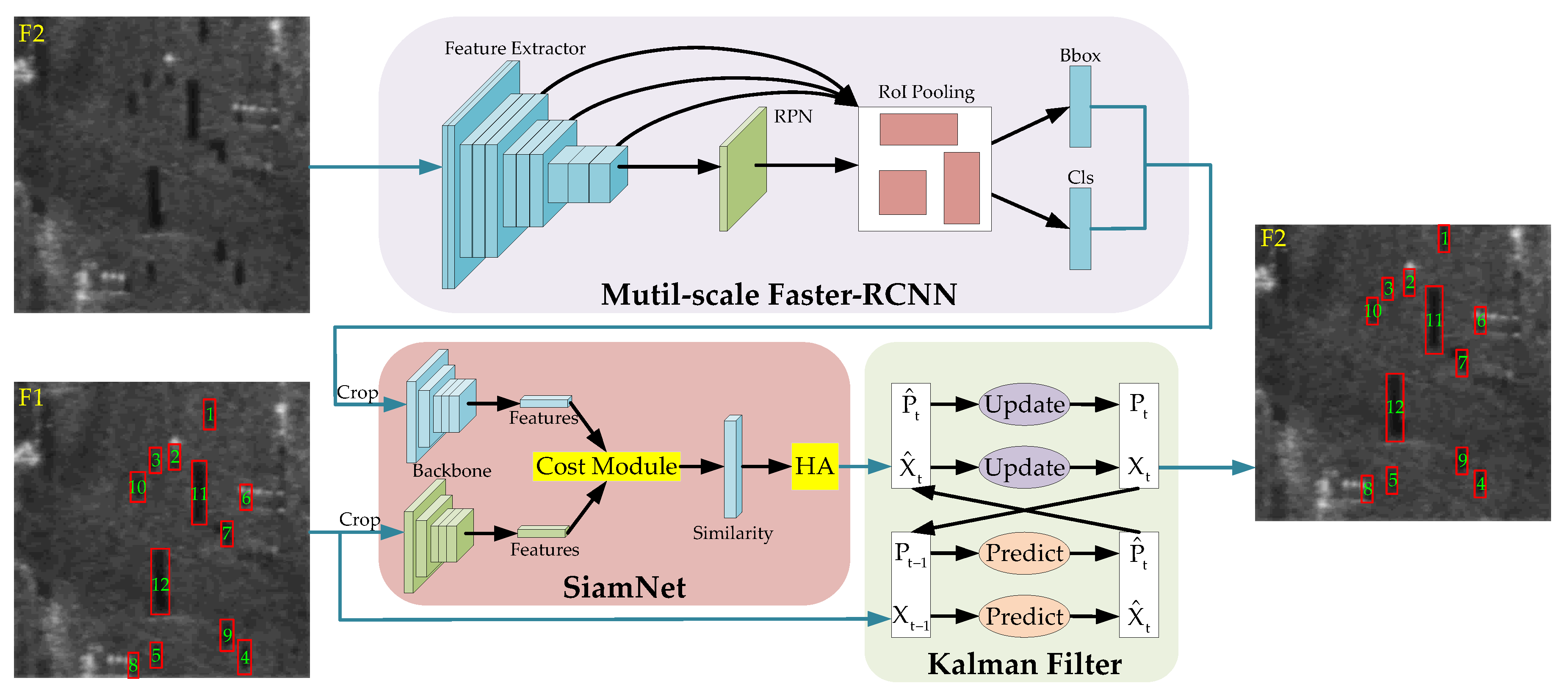

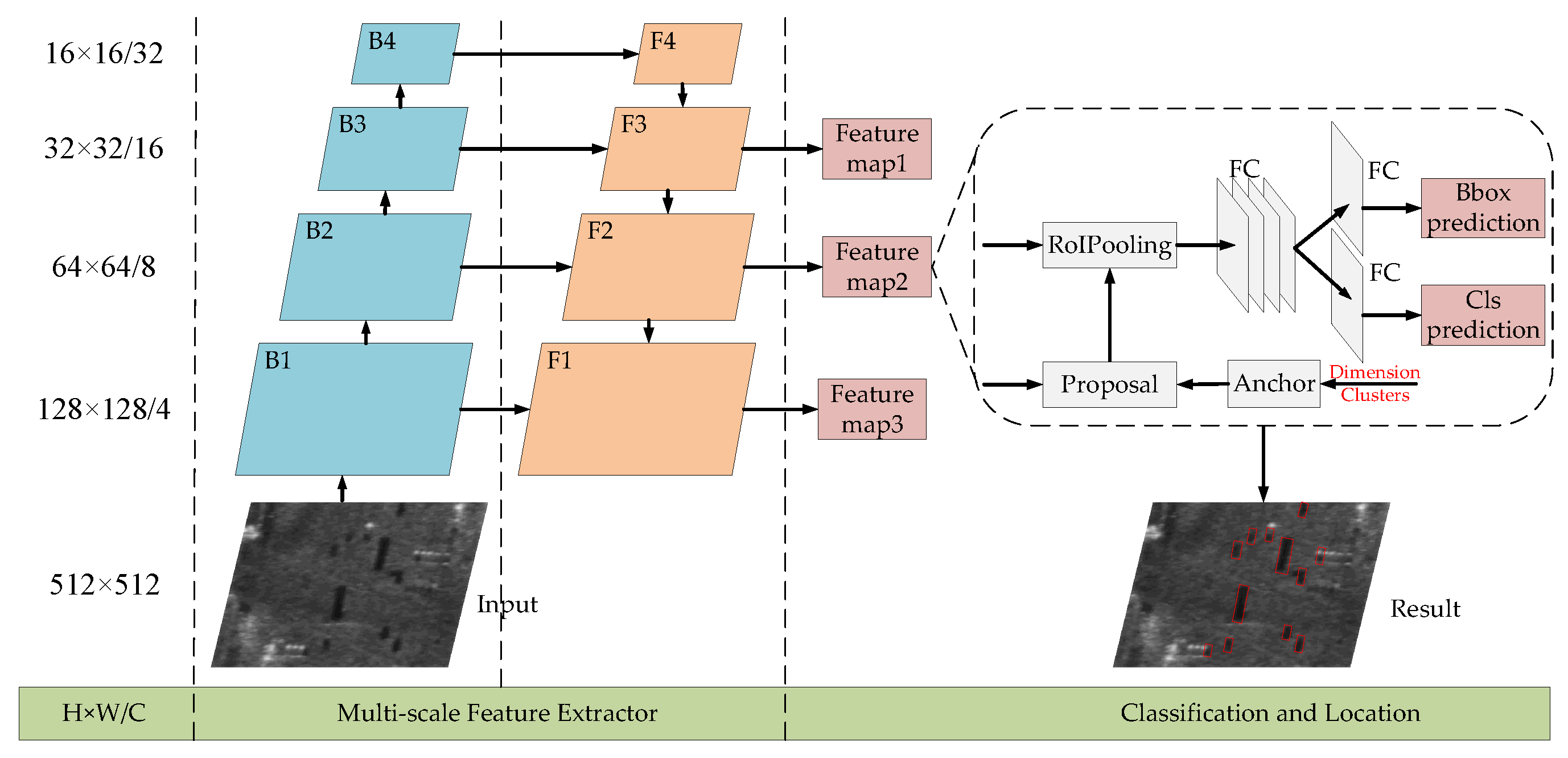

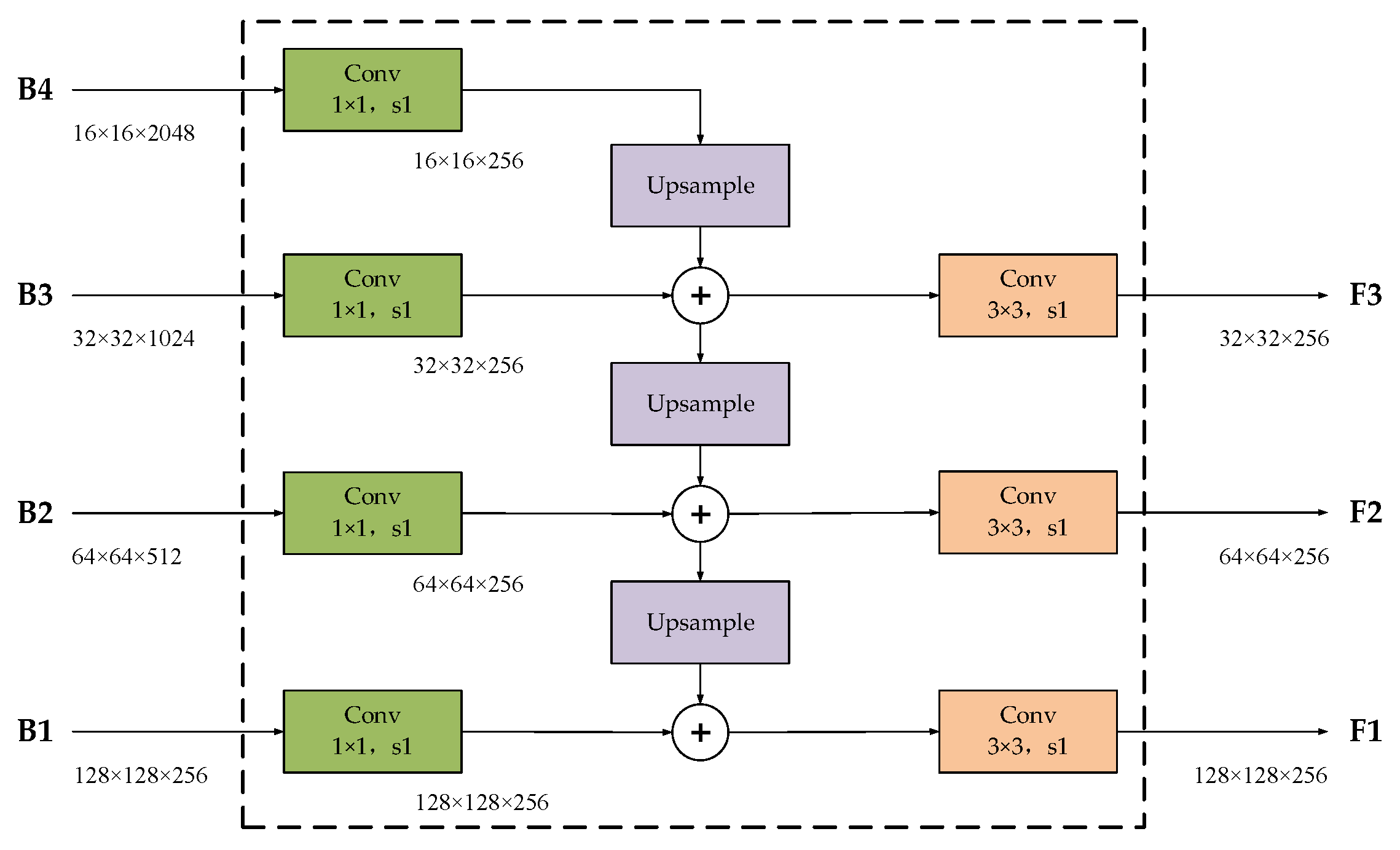

- Multi-scale Faster-RCNN is proposed to detect the shadows of moving targets. We modify the feature extractor of Faster-RCNN so that the multi-layer features of the network are fused, which enhances its feature representation capability. Moreover, we predict the target shadows on feature maps of multiple scales to improve the detection accuracy.

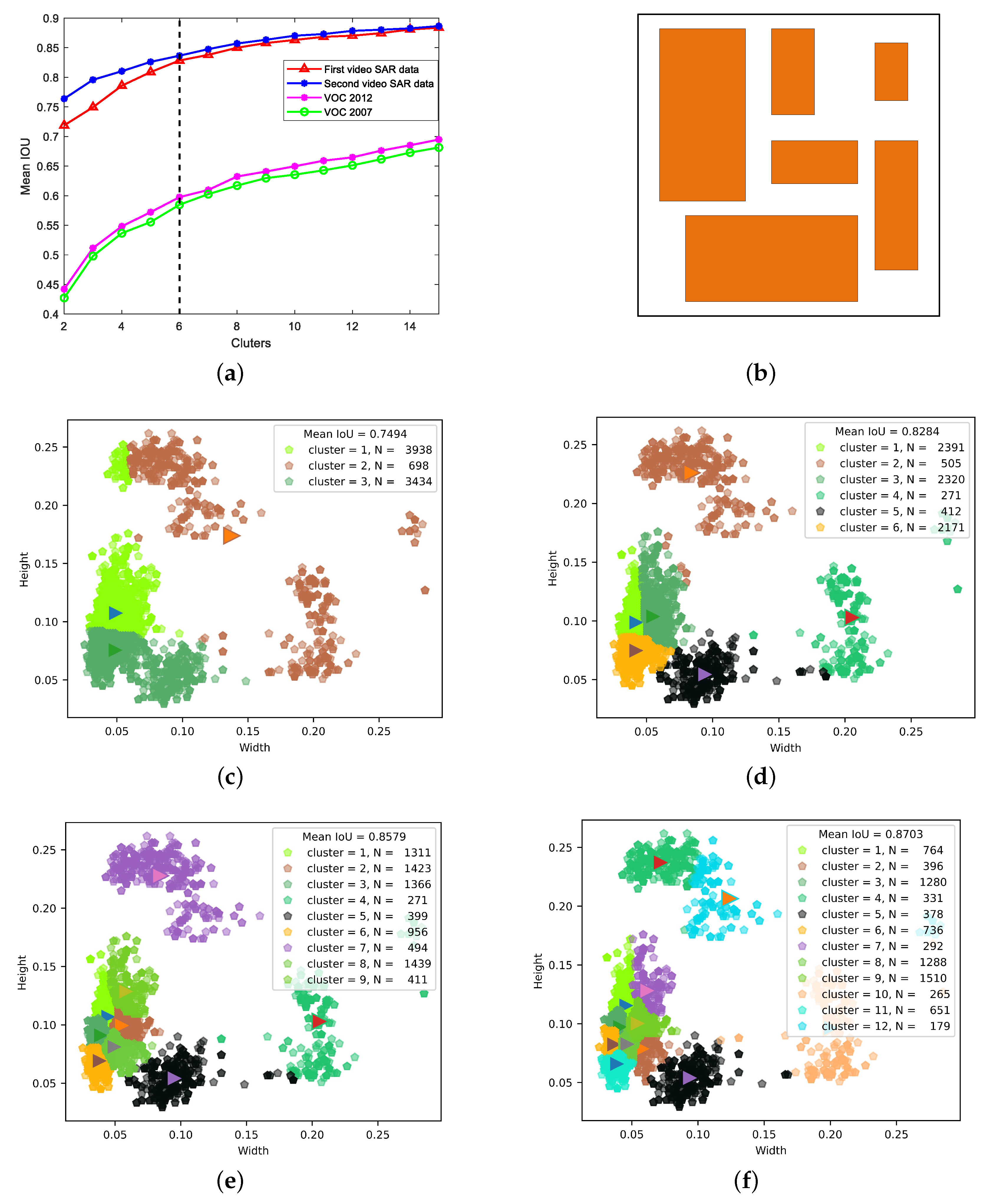

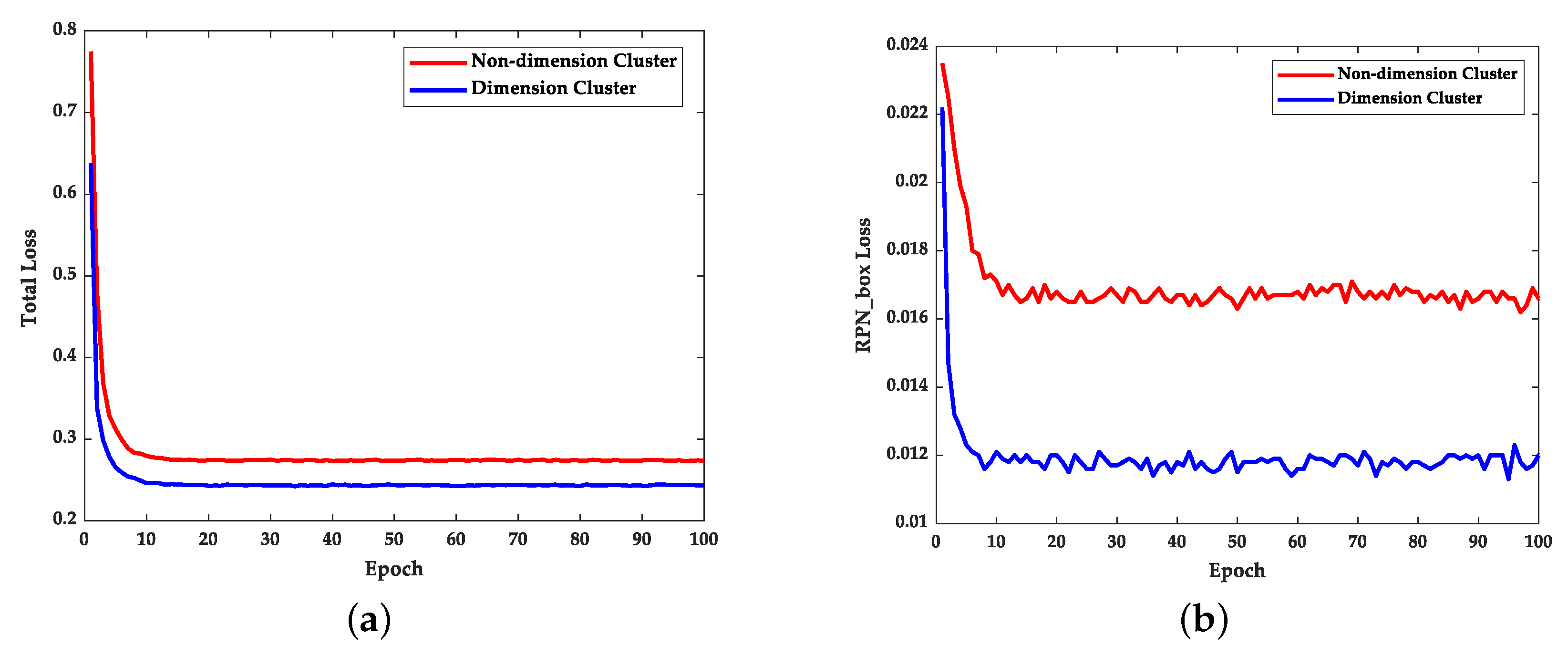

- We use dimension clusters to optimize the anchor generation strategy of multi-scale Faster-RCNN and to accelerate the convergence of the model during training, which further improves the detection performance of multi-scale Faster-RCNN.

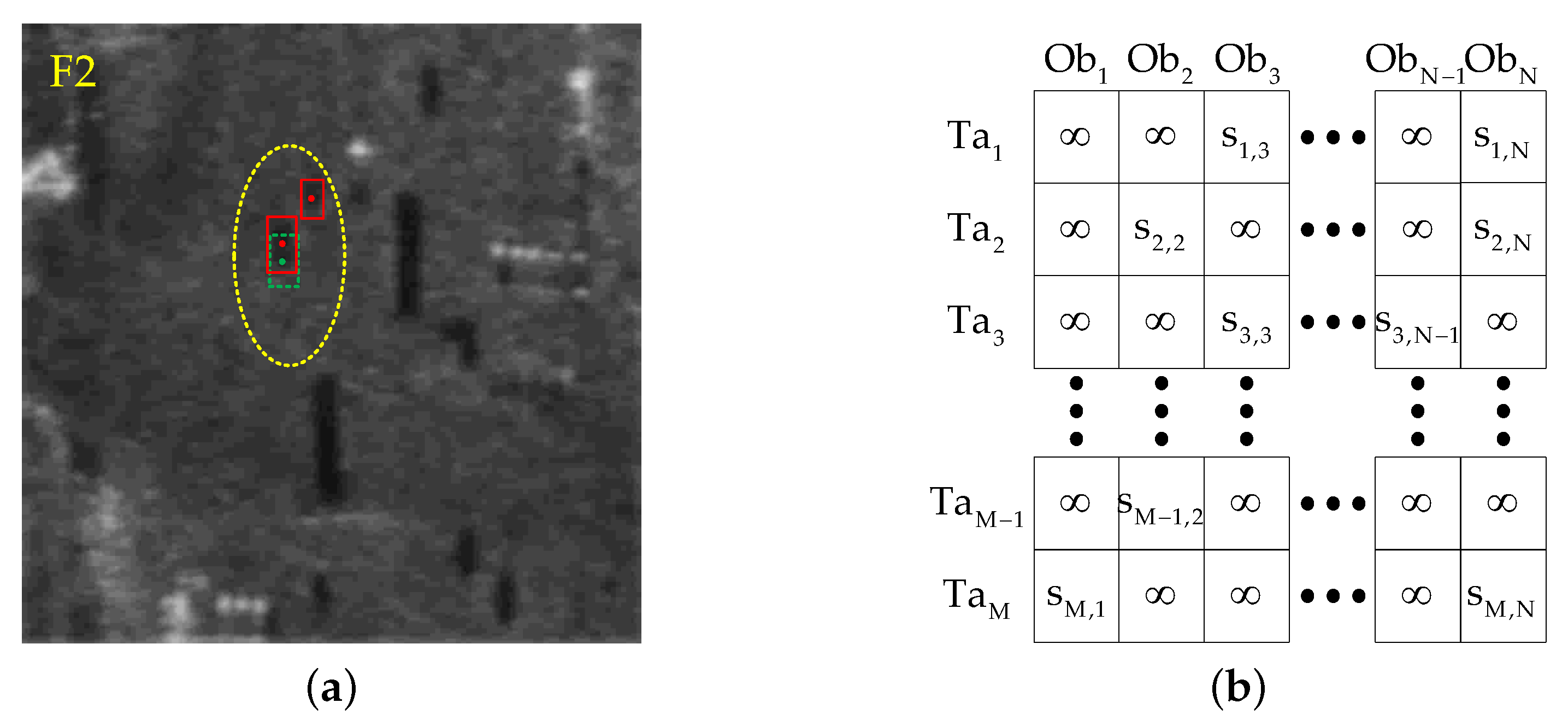

- SiamNet is proposed for data association. We first extract the features of the target shadows and of the observations. Then, we calculate the similarities between these features to build a similarity matrix. Finally, the Hungarian algorithm uses this matrix to match the targets with the observations. Compared with other methods, this significantly reduces matching errors.
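The association step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes each target and each observation is already represented by a SiamNet embedding vector, uses the Euclidean distance between embeddings as the matching cost (so the similarity matrix becomes a cost matrix), and applies a hypothetical gating threshold `max_dist` to reject weak matches. The function names `hungarian` and `associate` are illustrative. The solver is a compact Kuhn-Munkres (Hungarian) implementation with dual potentials; in practice `scipy.optimize.linear_sum_assignment` solves the same problem.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def hungarian(cost):
    """Minimum-cost assignment (Kuhn-Munkres with potentials) for an n x m matrix, n <= m.
    Returns result where result[i] is the column assigned to row i."""
    n, m = len(cost), len(cost[0])
    INF = float("inf")
    u, v = [0.0] * (n + 1), [0.0] * (m + 1)   # dual potentials for rows and columns
    p, way = [0] * (m + 1), [0] * (m + 1)     # p[j]: 1-based row matched to column j (0 = free)
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [INF] * (m + 1), [False] * (m + 1)
        while True:                            # grow an alternating tree until a free column is reached
            used[j0] = True
            i0, delta, j1 = p[j0], INF, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:                              # flip the matching along the augmenting path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    result = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            result[p[j] - 1] = j - 1
    return result

def associate(track_feats, det_feats, max_dist):
    """Match tracked targets to new observations; returns (track, detection) index pairs."""
    if not track_feats or not det_feats:
        return []
    cost = [[euclidean(t, d) for d in det_feats] for t in track_feats]
    transposed = len(track_feats) > len(det_feats)
    if transposed:                             # the solver expects rows <= columns
        cost = [list(col) for col in zip(*cost)]
    pairs = [(c, r) if transposed else (r, c) for r, c in enumerate(hungarian(cost))]
    # Gate: reject assignments whose embeddings are too far apart (hypothetical threshold).
    return [(t, d) for t, d in pairs if euclidean(track_feats[t], det_feats[d]) <= max_dist]

tracks = [[0.0, 0.0], [5.0, 5.0]]
detections = [[5.1, 5.0], [0.2, 0.0], [20.0, 20.0]]
print(sorted(associate(tracks, detections, max_dist=1.0)))  # [(0, 1), (1, 0)]
```

Detections left unmatched after gating (here the third one) would typically start new tracks, and unmatched tracks would be kept or terminated by the tracking logic; those rules are not specified here.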

2. Methodology

2.1. Multi-Scale Faster-RCNN

2.1.1. Multi-Scale Feature Extractor
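The multi-layer feature fusion can be illustrated with the top-down pathway of a feature pyramid network (FPN), which the detection results list as the extractor of the proposed detector (Resnet50 + FPN). The NumPy sketch below is an assumption-laden illustration following Lin et al.: nearest-neighbour 2× upsampling, 1 × 1 lateral convolutions written as channel-projection matrices, and element-wise addition. The toy channel counts, random weights, and function names are hypothetical, and the 3 × 3 smoothing convolutions FPN applies after each merge are omitted.

```python
import numpy as np

def lateral(feat, weight):
    """1 x 1 convolution: project a (C_in, H, W) map to (C_out, H, W) with weight (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, feat)

def upsample2x(feat):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return feat.repeat(2, axis=1).repeat(2, axis=2)

def fpn_top_down(features, weights):
    """features: bottom-up maps ordered fine -> coarse, each half the size of the previous one.
    Returns the fused maps in the same order, all with C_out channels."""
    fused = [lateral(features[-1], weights[-1])]           # start from the coarsest level
    for feat, w in zip(reversed(features[:-1]), reversed(weights[:-1])):
        # Add the projected finer map to the upsampled coarser (semantically stronger) map.
        fused.append(lateral(feat, w) + upsample2x(fused[-1]))
    return fused[::-1]

rng = np.random.default_rng(0)
c3, c4, c5 = (rng.standard_normal((c, s, s)) for c, s in [(8, 16), (16, 8), (32, 4)])
ws = [rng.standard_normal((4, c)) for c in (8, 16, 32)]
p3, p4, p5 = fpn_top_down([c3, c4, c5], ws)
print(p3.shape, p4.shape, p5.shape)  # (4, 16, 16) (4, 8, 8) (4, 4, 4)
```

Each fused level can then host its own set of anchors, which is what allows shadows of different sizes to be predicted on feature maps of multiple scales.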

2.1.2. Dimension Clusters

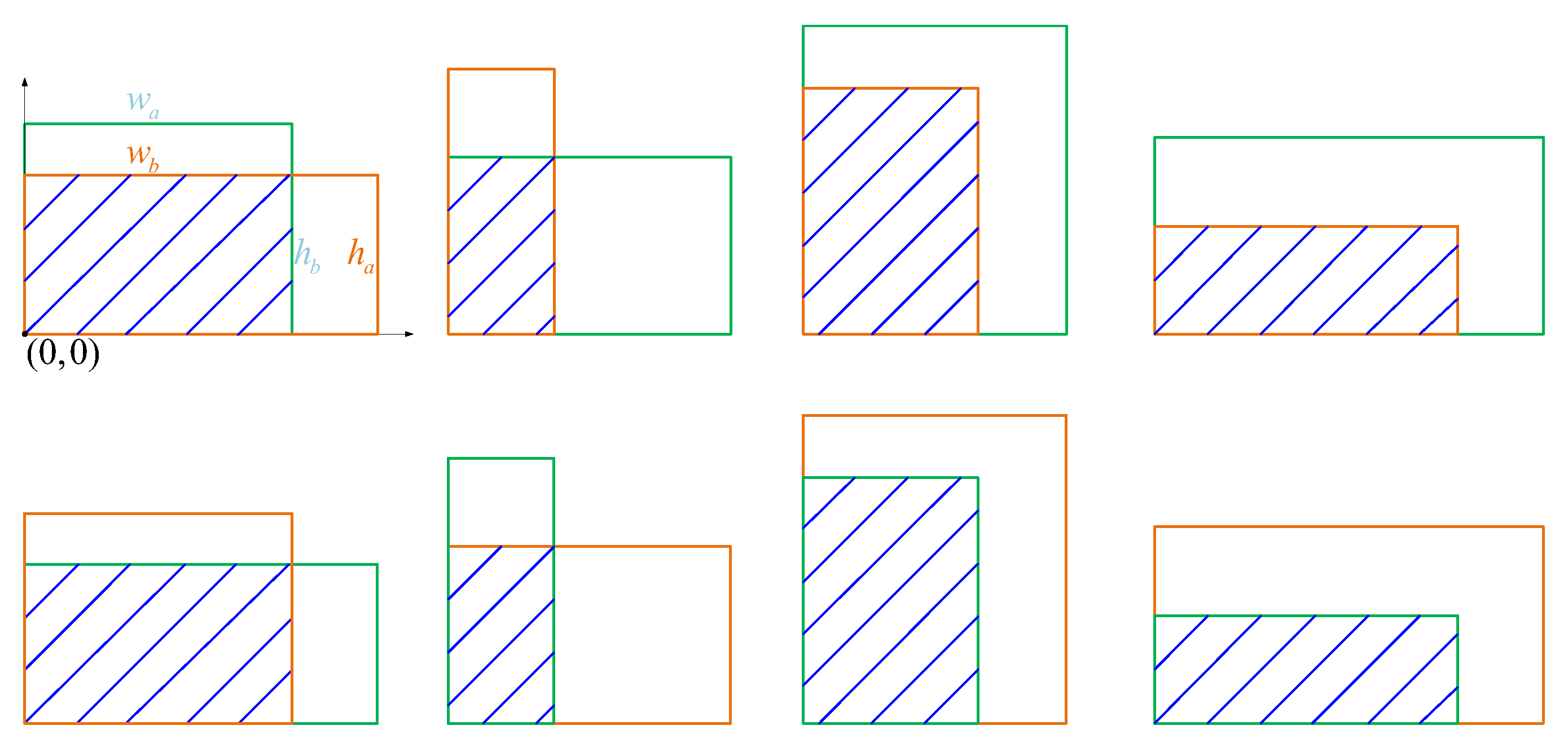

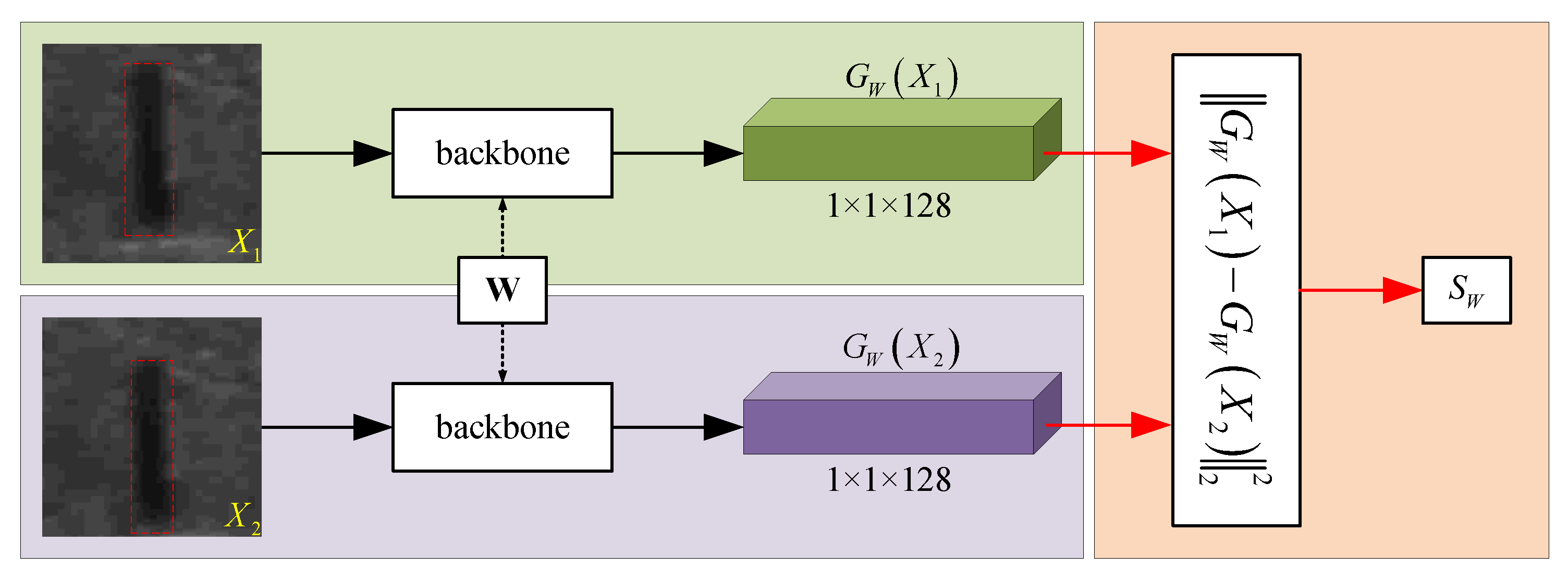

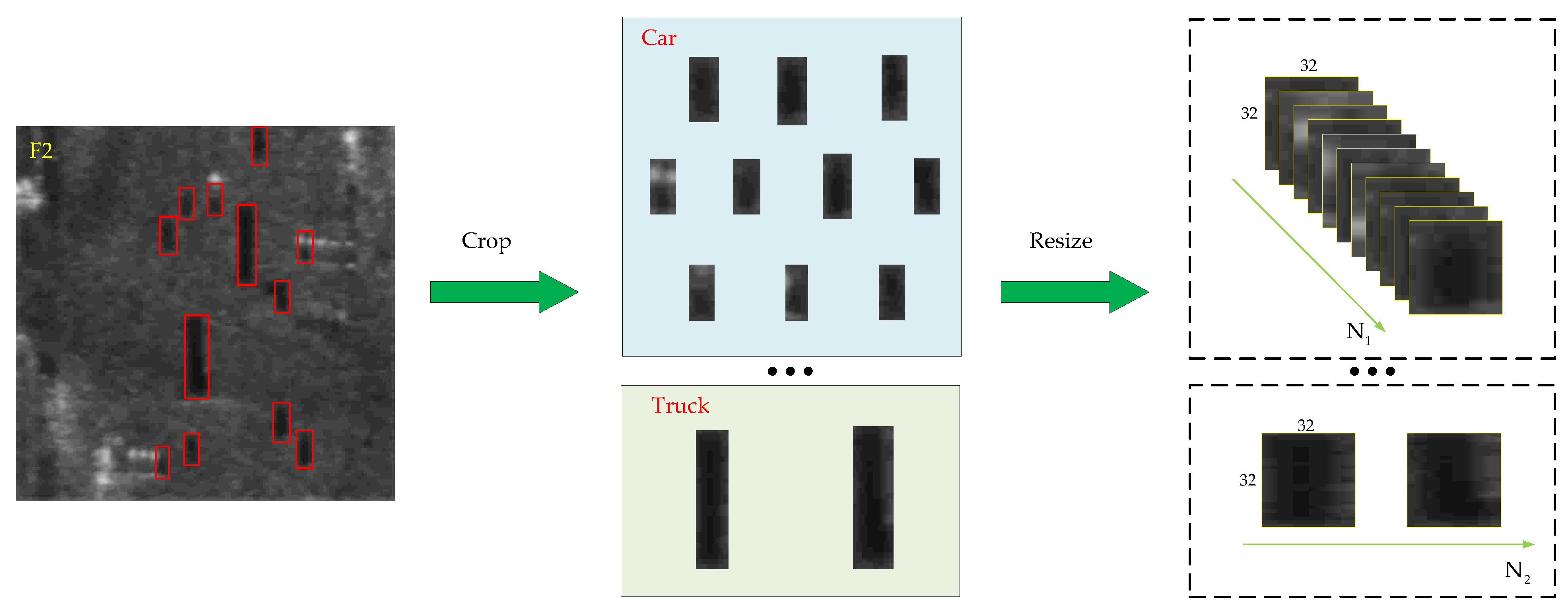

2.2. SiamNet

| Algorithm 1: Dimension clusters on the dataset |
|---|
| **Input:** the error threshold $\varepsilon$ and the set of bounding boxes of all targets in the dataset, $B = \{b_1, \dots, b_N\}$. |
| **Begin** |
| 1. Initialize: let $t = 0$ and randomly select $K$ boxes as the initial anchors $A^{(0)} = \{a_1, \dots, a_K\}$. |
| 2. IOU calculation: calculate the IOU between each box and each anchor to generate the distance matrix $D$. For $i = 1$ to $N$ do: for $j = 1$ to $K$ do: $d_{ij} = 1 - \mathrm{IOU}(b_i, a_j)$; end for; end for. |
| 3. Boxes allocation: allocate each bounding box to its closest anchor and generate the set of $K$ clusters. |
| 4. Anchors updating: let $t = t + 1$, calculate the mean width and height of all boxes in each cluster, and update the anchors $A^{(t)}$. If the change between $A^{(t)}$ and $A^{(t-1)}$ is smaller than $\varepsilon$, the algorithm ends; otherwise, return to Step 2. |
| **End** |
| **Output:** the $K$ anchors $A^{(t)}$. |
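Algorithm 1 amounts to k-means clustering of box widths and heights with $1 - \mathrm{IOU}$ as the distance, as in YOLO9000. The Python sketch below is a minimal illustration, not the authors' code. It assumes boxes are given as `(w, h)` pairs compared as if they shared the same centre, and it uses the largest per-anchor change in width plus height as the convergence test against `eps`; the exact stopping test of the paper is not recoverable from this section. The function names and the `max_iter` and `seed` parameters are hypothetical.

```python
import random

def wh_iou(box, anchor):
    """IOU of two (w, h) boxes, assuming they share the same centre."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union

def dimension_clusters(boxes, k, eps=1e-6, max_iter=100, seed=0):
    """k-means on (w, h) with distance d = 1 - IOU; returns k anchors."""
    anchors = random.Random(seed).sample(boxes, k)            # step 1: random initial anchors
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for box in boxes:                                      # steps 2-3: assign to nearest anchor
            nearest = min(range(k), key=lambda j: 1.0 - wh_iou(box, anchors[j]))
            clusters[nearest].append(box)
        updated = []
        for anchor, members in zip(anchors, clusters):         # step 4: mean width and height
            if members:
                updated.append((sum(w for w, _ in members) / len(members),
                                sum(h for _, h in members) / len(members)))
            else:
                updated.append(anchor)                         # keep the anchor of an empty cluster
        shift = max(abs(a[0] - u[0]) + abs(a[1] - u[1]) for a, u in zip(anchors, updated))
        anchors = updated
        if shift < eps:                                        # converged
            break
    return anchors

boxes = [(10, 20), (12, 22), (11, 19), (50, 30), (52, 28), (49, 31)]
print([(round(w, 2), round(h, 2)) for w, h in sorted(dimension_clusters(boxes, k=2))])
# [(11.0, 20.33), (50.33, 29.67)]
```

Using $1 - \mathrm{IOU}$ rather than the Euclidean distance on $(w, h)$ keeps large boxes from dominating the clustering error, which is the usual motivation for this distance.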

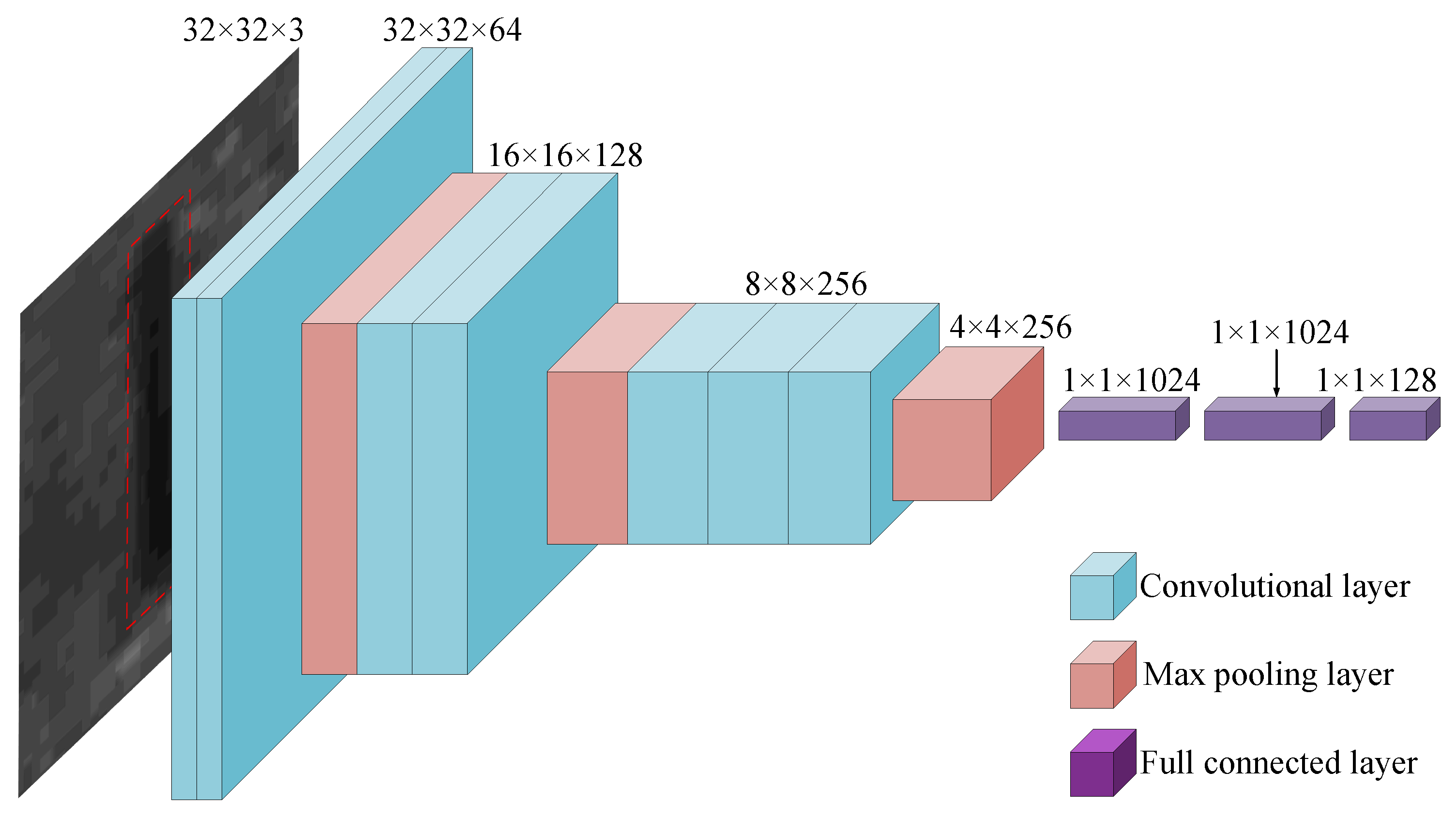

2.2.1. Backbone

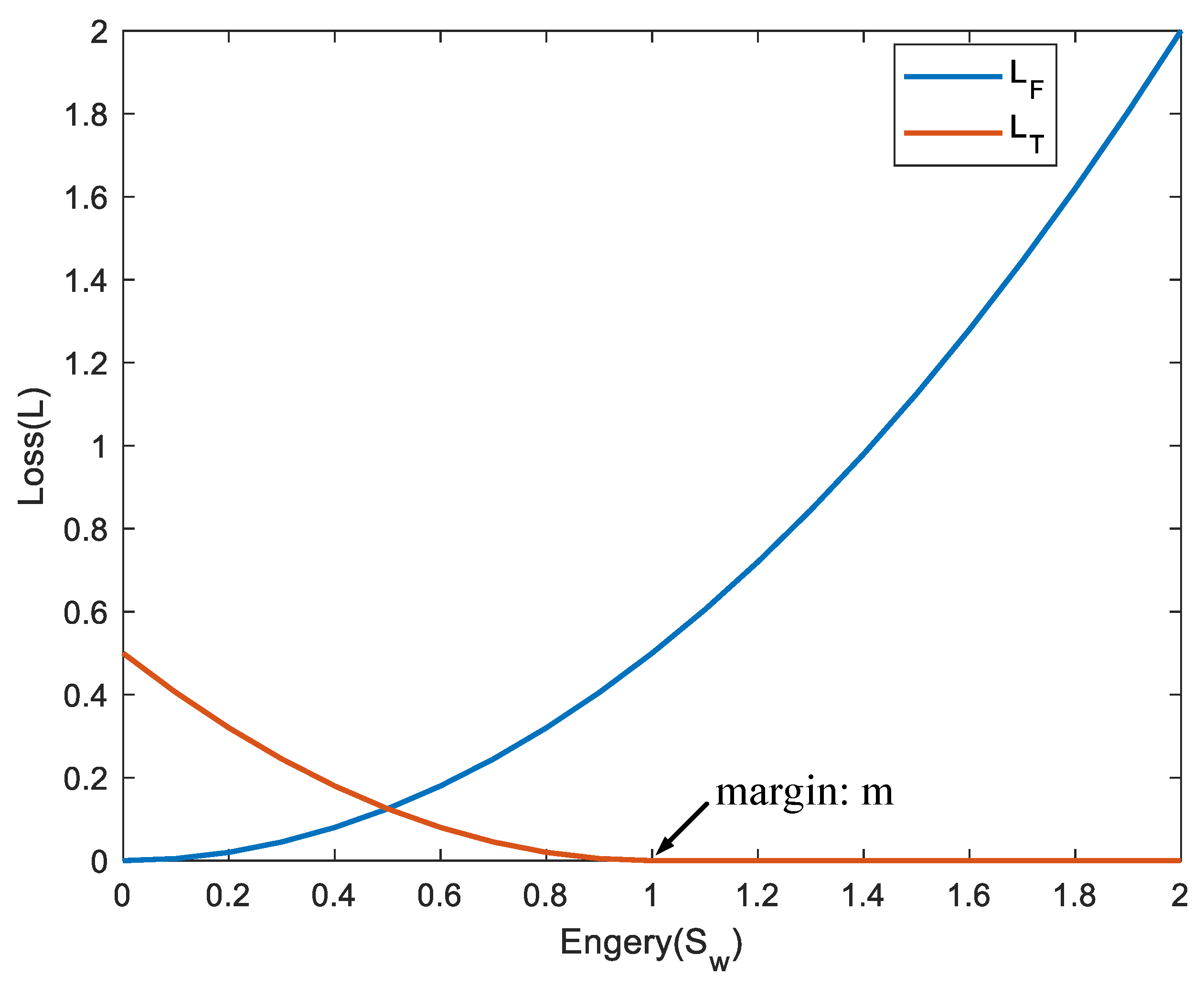

2.2.2. Contrastive Loss Function
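For reference, a common form of the contrastive loss (Hadsell et al.) for a pair of embeddings at Euclidean distance $D$, with label $y = 1$ for the same target and $y = 0$ otherwise, is $L = \frac{1}{2} y D^2 + \frac{1}{2} (1 - y) \max(0, m - D)^2$, where $m$ is a margin. The sketch below implements that form; the exact variant and margin used for SiamNet are not given in this section, so `margin=1.0` and the function names are assumptions.

```python
import math

def contrastive_loss(f1, f2, same, margin=1.0):
    """Contrastive loss for one pair of embeddings.
    same=True pulls the pair together; same=False pushes it at least `margin` apart."""
    d = math.dist(f1, f2)                      # Euclidean distance between the embeddings
    if same:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2     # dissimilar pairs beyond the margin cost nothing

def batch_contrastive_loss(pairs, margin=1.0):
    """Mean loss over (f1, f2, same) triples."""
    return sum(contrastive_loss(a, b, s, margin) for a, b, s in pairs) / len(pairs)

print(contrastive_loss((0, 0), (3, 4), same=True))    # 12.5
print(contrastive_loss((0, 0), (0, 0.5), same=False)) # 0.125
```

Because dissimilar pairs stop contributing once they are farther apart than the margin, training concentrates on hard negatives, and the learned distance can be used directly as the association cost.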

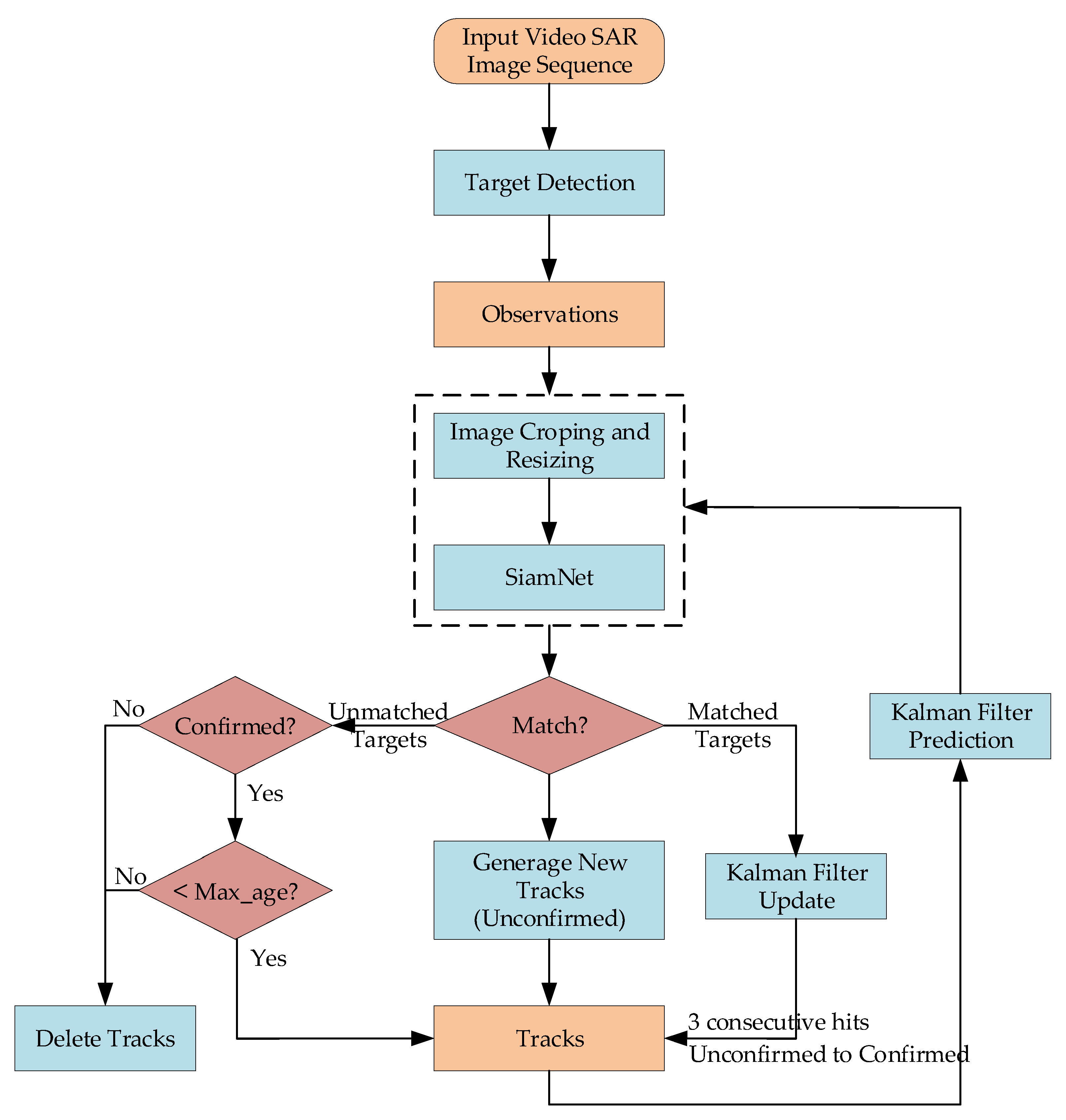

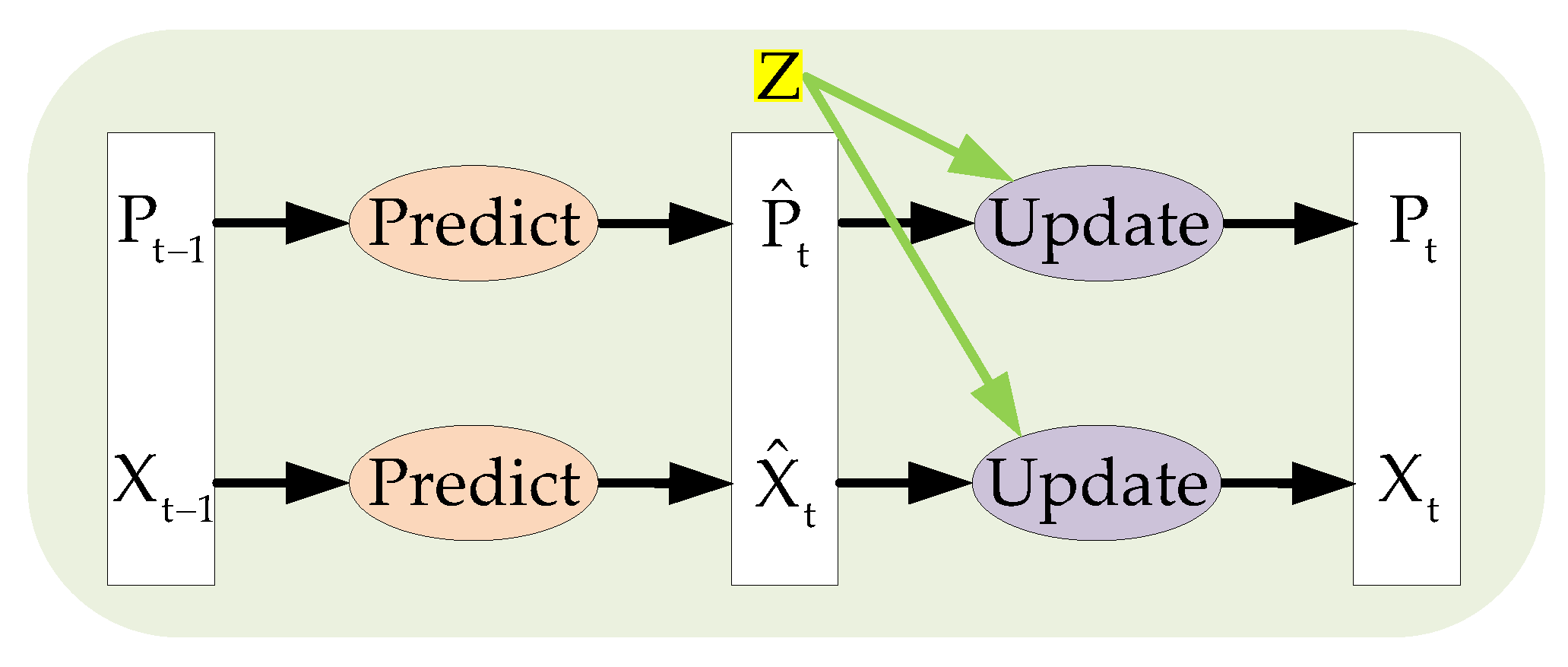

2.3. Tracking Process

3. Results

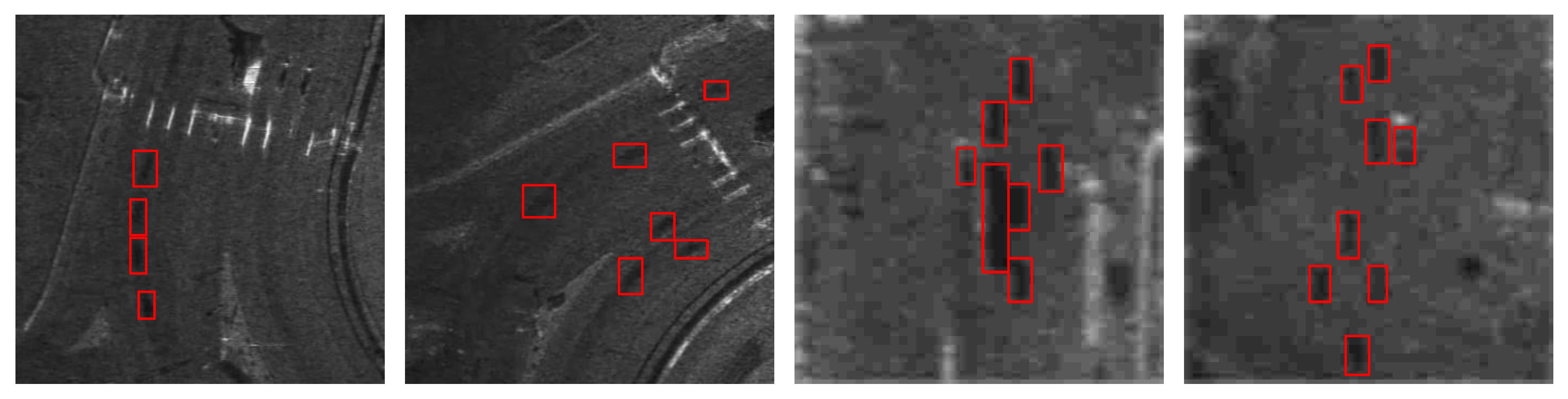

3.1. Experimental Data

3.2. Evaluation Indicators

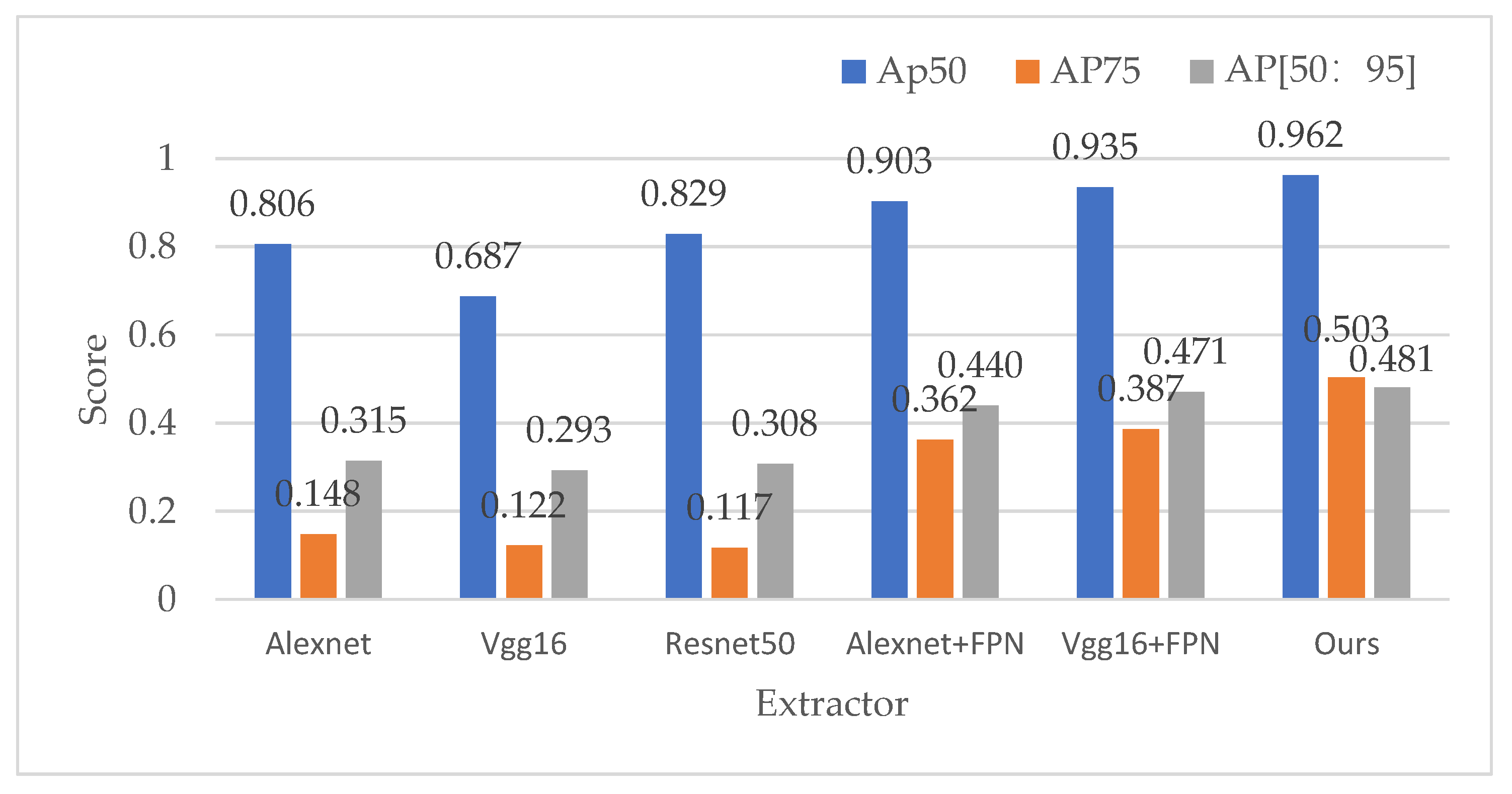

3.2.1. Detection Indicators

- Average precision @50 (AP@50): The average precision for a target category at an IOU threshold of 0.5;

- Average precision @75 (AP@75): The average precision for a target category at an IOU threshold of 0.75;

- Average precision @[50:95] (AP@[50:95]): The average precision for a target category, averaged over IOU thresholds from 0.5 to 0.95.
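To make these definitions concrete, here is a minimal single-image, single-class sketch. It assumes boxes given as `(x1, y1, x2, y2)`, VOC-style greedy matching of score-sorted detections to ground truth, all-point interpolation of the precision-recall curve, and the COCO convention of IOU thresholds in steps of 0.05 for AP@[50:95]. The authors' evaluation code may differ in these details, and the function names are illustrative.

```python
def iou(a, b):
    """IOU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(dets, gts, thr):
    """dets: list of (score, box); gts: list of ground-truth boxes; thr: IOU threshold."""
    dets = sorted(dets, key=lambda d: d[0], reverse=True)
    matched = [False] * len(gts)
    precisions, recalls, tp = [], [], 0
    for rank, (_, box) in enumerate(dets, start=1):
        overlaps = [iou(box, g) for g in gts]
        j = max(range(len(gts)), key=overlaps.__getitem__) if gts else -1
        if j >= 0 and overlaps[j] >= thr and not matched[j]:
            matched[j] = True                  # true positive; otherwise a false positive
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / len(gts) if gts else 0.0)
    # All-point interpolation: precision at recall r is the best precision at any recall >= r.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):      # area under the interpolated curve
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def ap_50_95(dets, gts):
    """Mean AP over IOU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.7, (50, 50, 60, 60))]
print(average_precision(dets, gts, 0.5), average_precision(dets, gts, 0.75))  # 1.0 0.5
```

In this example the second detection overlaps its ground truth with an IOU of about 0.68, so it counts at the 0.5 threshold but not at 0.75; this is why AP@75 and AP@[50:95] reward tighter boxes than AP@50.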

3.2.2. Tracking Indicators

- Multi-target tracking accuracy (MTTA): The overall tracking accuracy, which accounts for false negatives, false positives, and identity switches;

- Multi-target tracking precision (MTTP): The overall tracking precision, calculated from the bounding-box overlap between the labeled and the detected positions of matched targets;

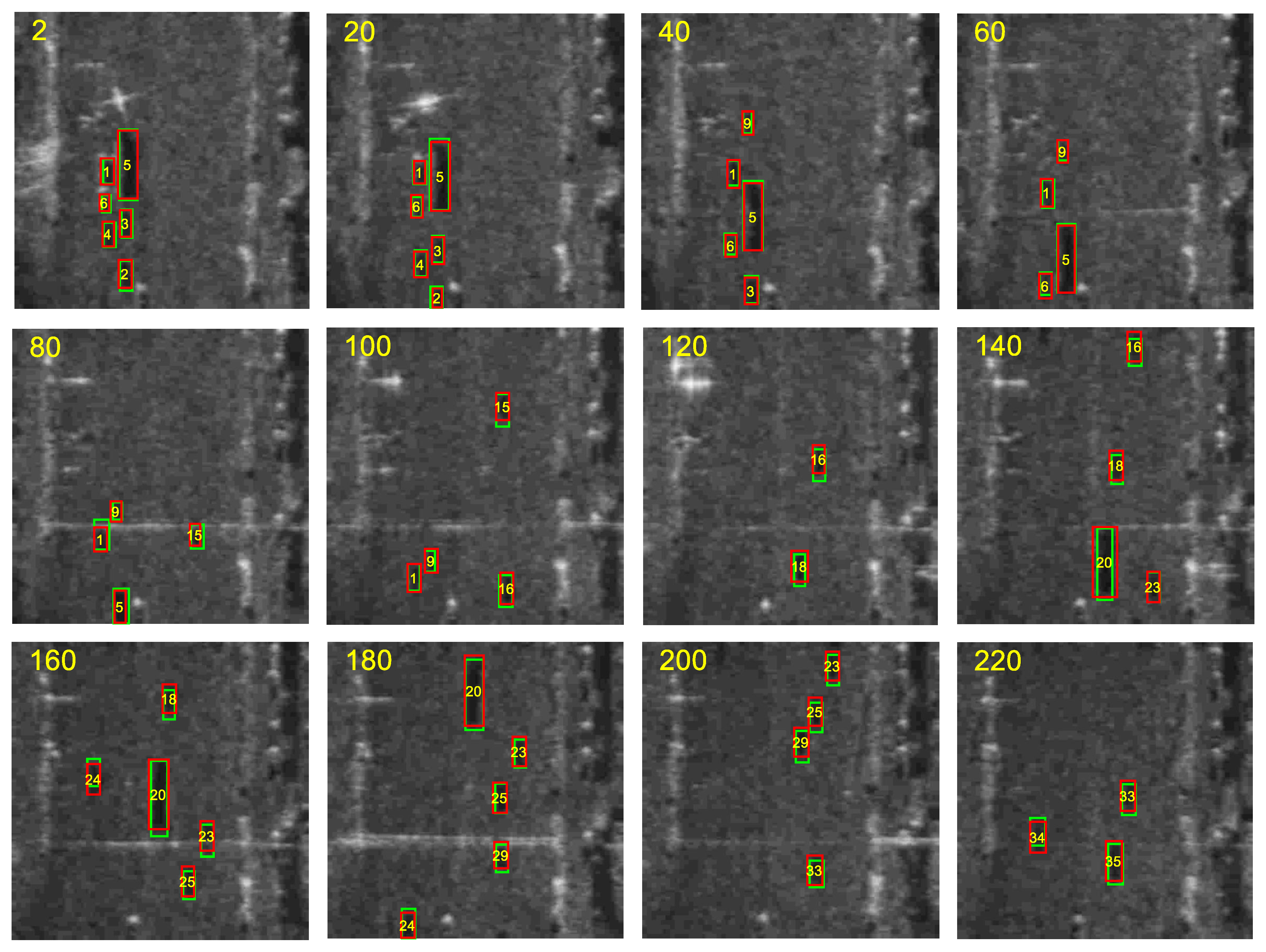

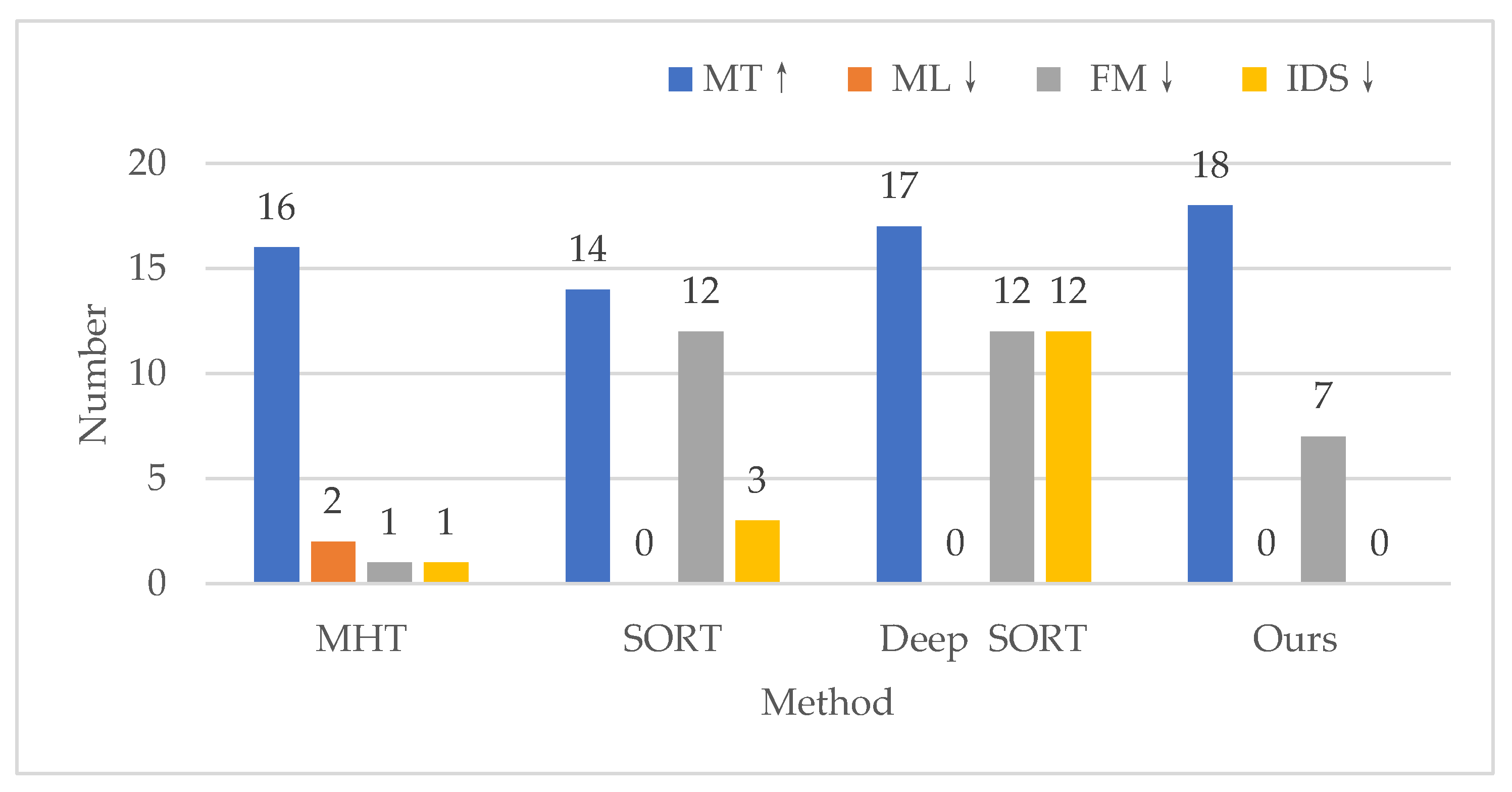

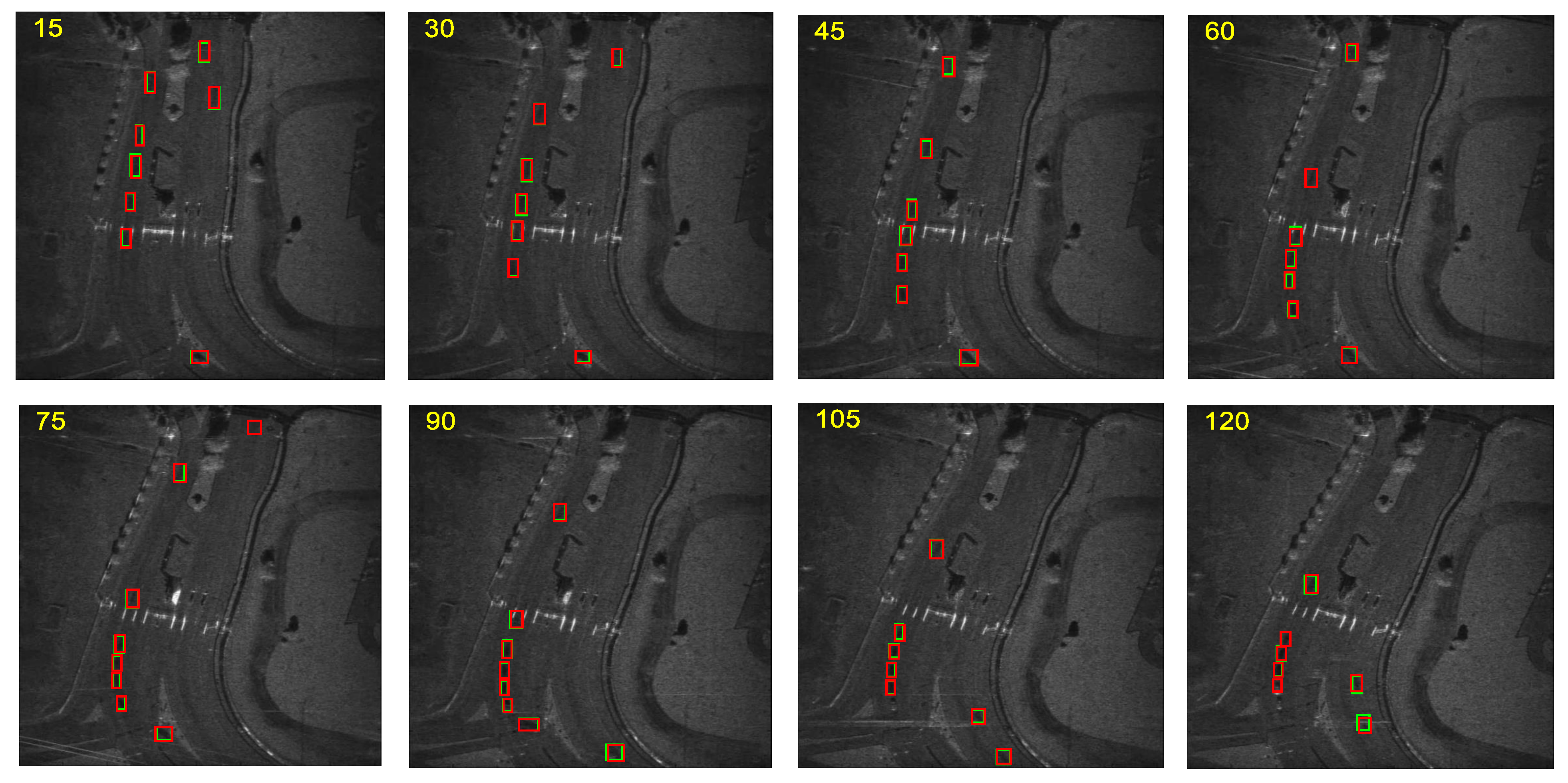

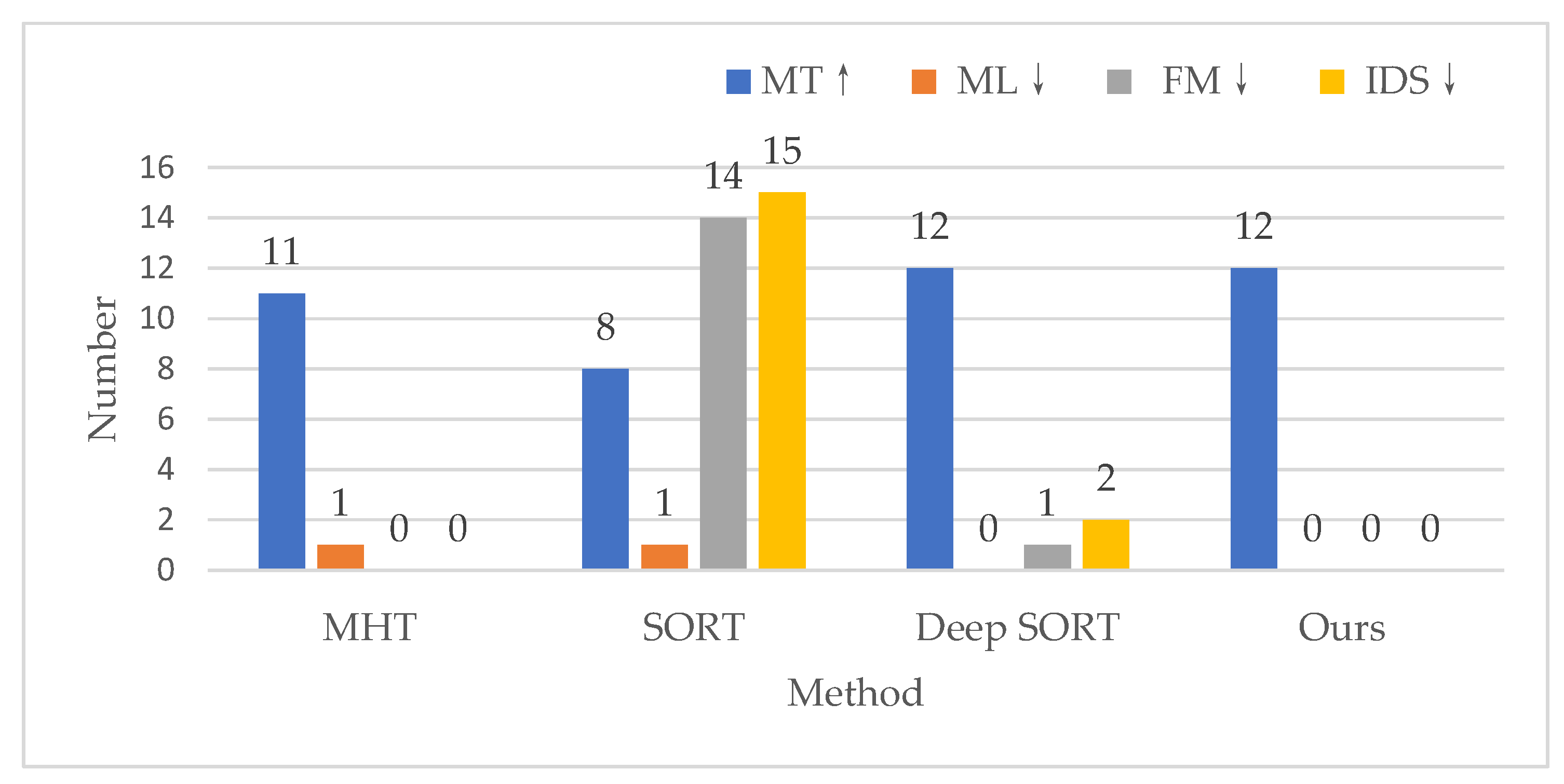

- Mostly tracked (MT): Number of ground-truth targets that have the same label for at least 80% of their lifetime;

- Mostly lost (ML): Number of ground-truth targets that are tracked for at most 20% of their lifetime;

- Identity switches (IDS): Number of changes in the identity of a ground-truth target;

- Fragmentation (FM): Number of times a track is interrupted due to missed detections;

- Frames per second (FPS): The number of frames processed by the tracking algorithm per second, i.e., the inverse of its average running time per frame.
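Assuming MTTA and MTTP follow the CLEAR MOT definitions of MOTA and MOTP (Bernardin and Stiefelhagen), with bounding-box overlap measured by IOU, the indicators above can be computed from per-frame counts as sketched below: $\mathrm{MTTA} = 1 - \sum_t (\mathrm{FN}_t + \mathrm{FP}_t + \mathrm{IDS}_t) / \sum_t \mathrm{GT}_t$ and MTTP is the mean overlap of all matched pairs. The function names and input layout are hypothetical; the 80% and 20% thresholds for MT and ML come from the definitions above.

```python
def mtta(fn, fp, ids, gt):
    """MOTA-style accuracy: 1 - sum_t(FN_t + FP_t + IDS_t) / sum_t(GT_t).
    Each argument is a list with one count per frame."""
    return 1.0 - (sum(fn) + sum(fp) + sum(ids)) / sum(gt)

def mttp(overlaps):
    """MOTP-style precision: mean IOU over all matched (label, detection) pairs.
    `overlaps` holds one list of IOU values per frame."""
    flat = [o for frame in overlaps for o in frame]
    return sum(flat) / len(flat)

def track_status(tracked_frames, lifetime):
    """Classify a ground-truth target as mostly tracked (MT), mostly lost (ML) or partially tracked (PT)."""
    ratio = tracked_frames / lifetime
    if ratio >= 0.8:
        return "MT"
    if ratio <= 0.2:
        return "ML"
    return "PT"

def fps(num_frames, total_seconds):
    """Frames processed per second, i.e., the inverse of the mean per-frame running time."""
    return num_frames / total_seconds

print(round(mtta(fn=[1, 0, 2], fp=[0, 1, 0], ids=[0, 0, 1], gt=[10, 10, 10]), 3))  # 0.833
```

Note that MTTA can become negative when errors outnumber ground-truth objects, which is one reason it is reported alongside MTTP and the per-target MT, ML, FM, and IDS counts.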

3.3. Results of the First Real Video SAR Dataset

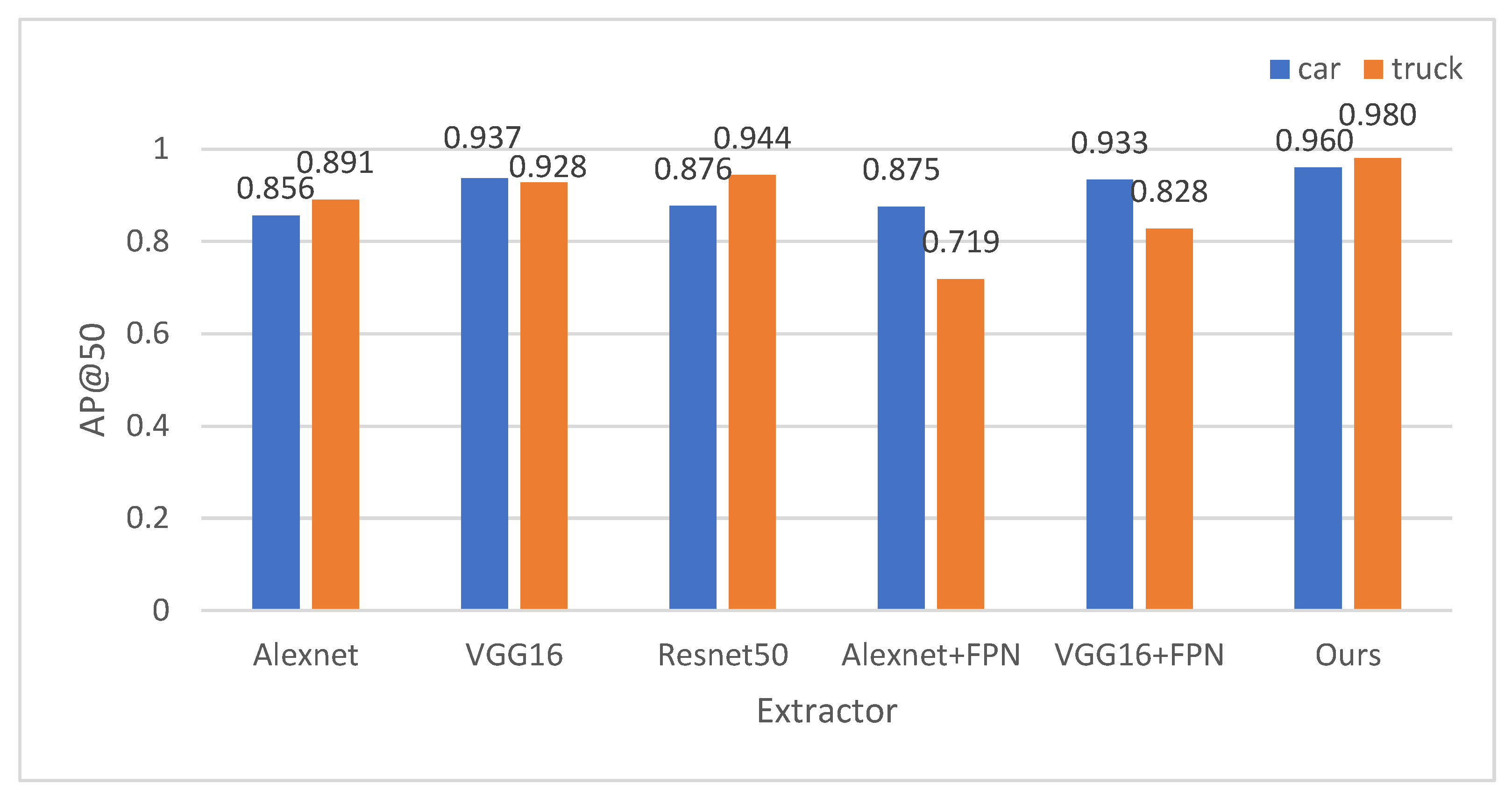

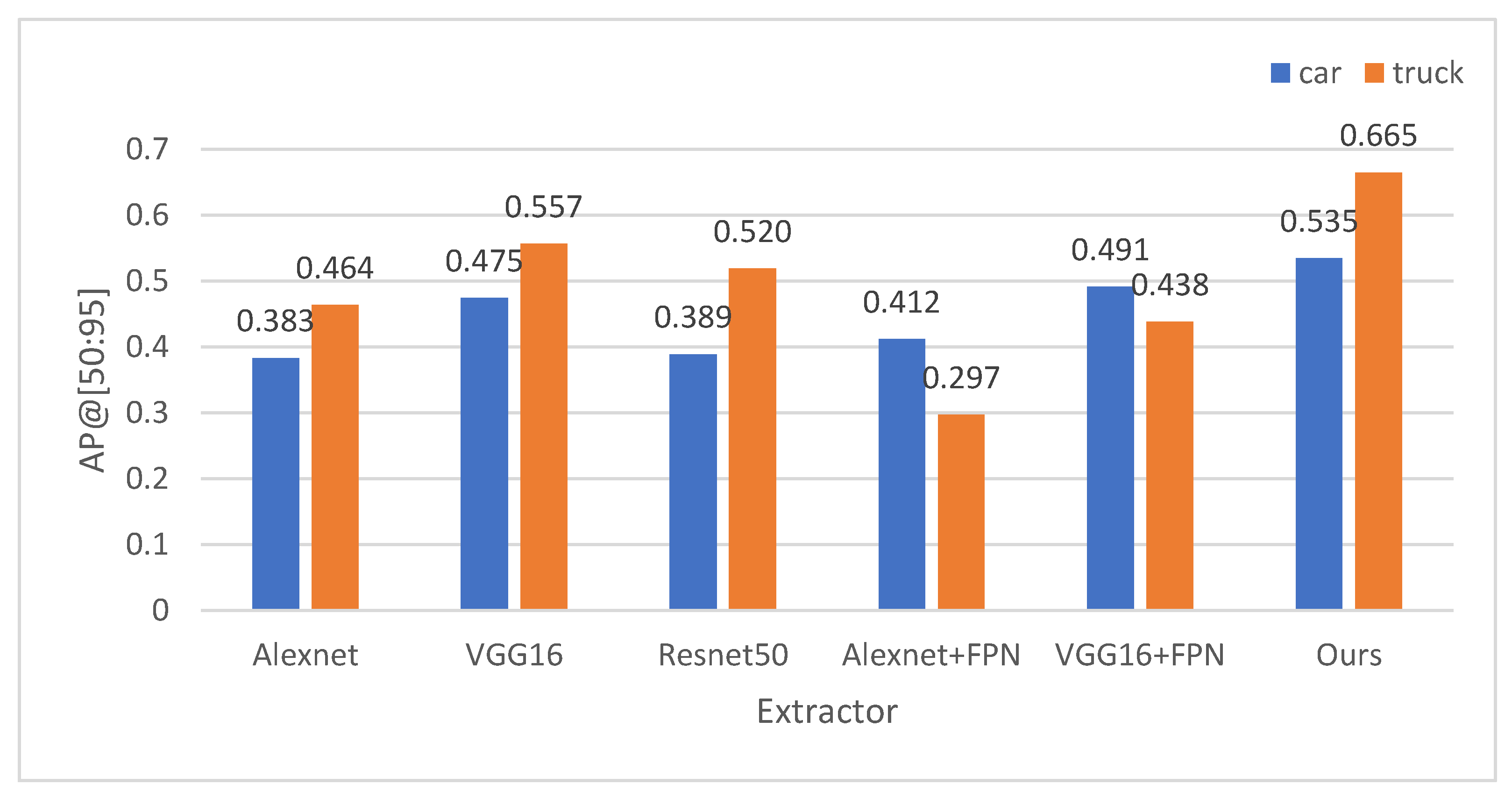

3.3.1. Detection Results

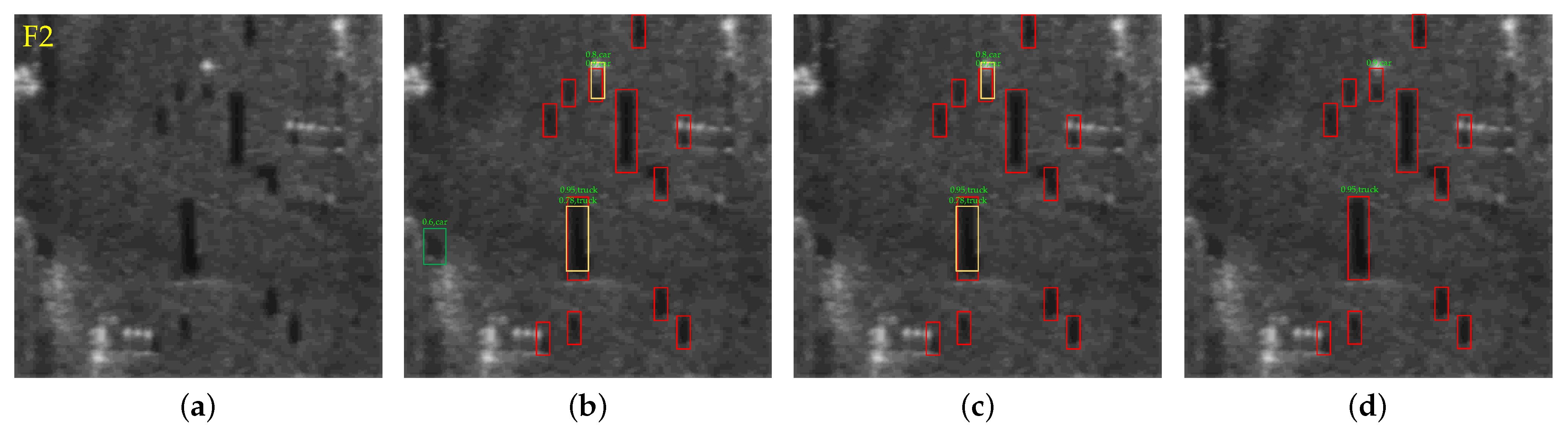

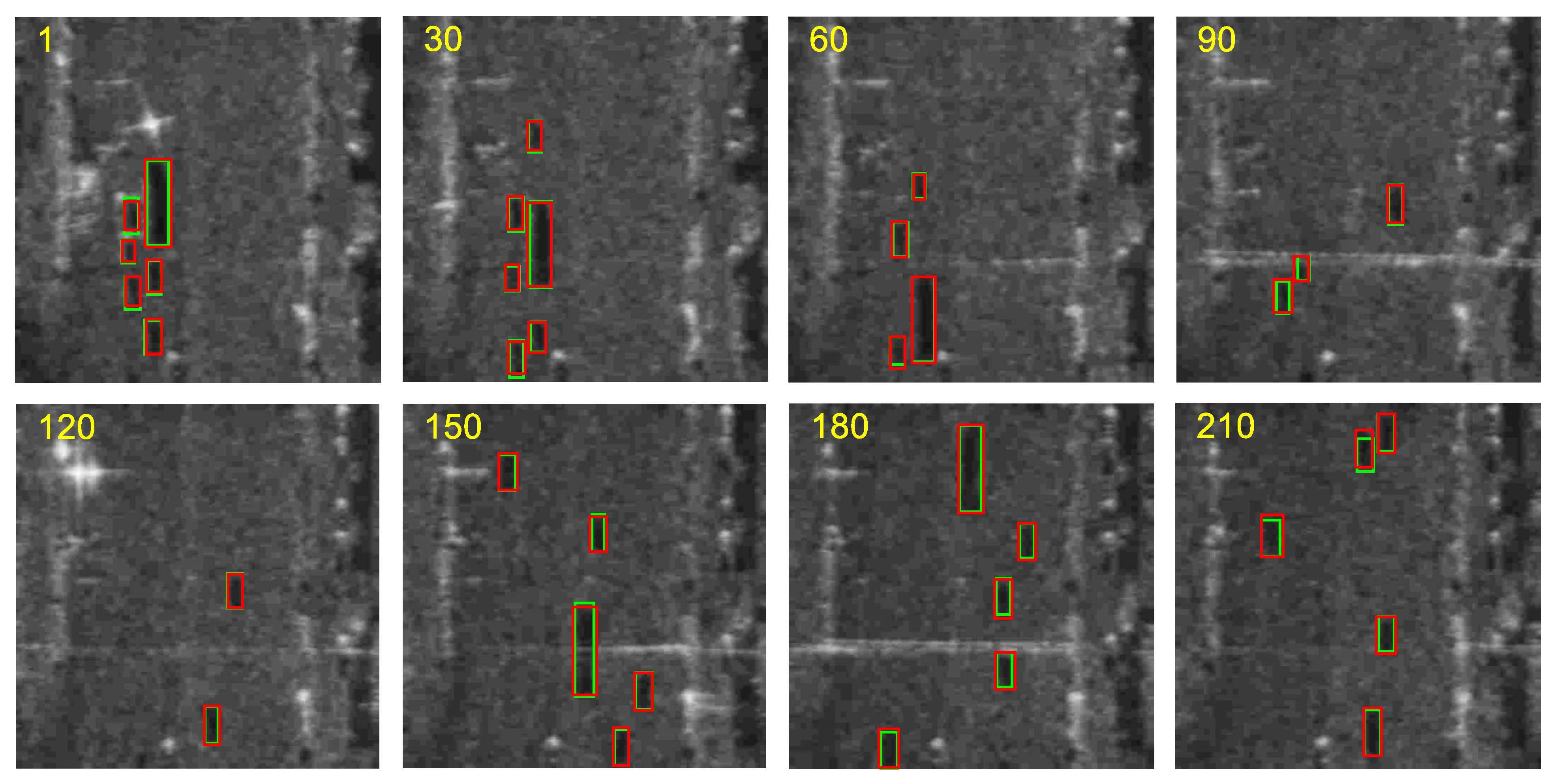

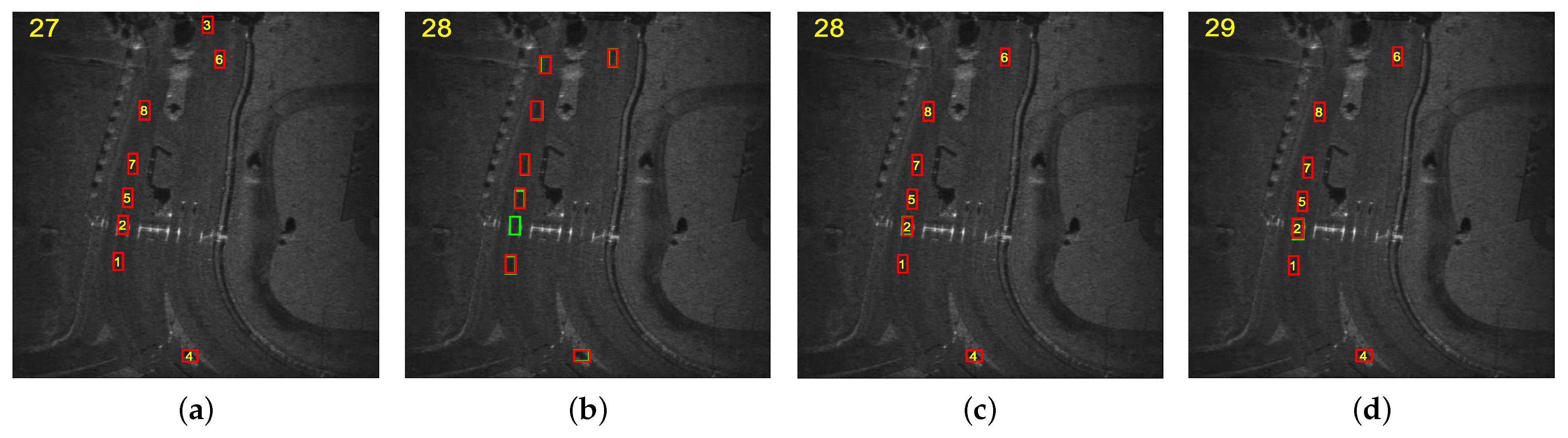

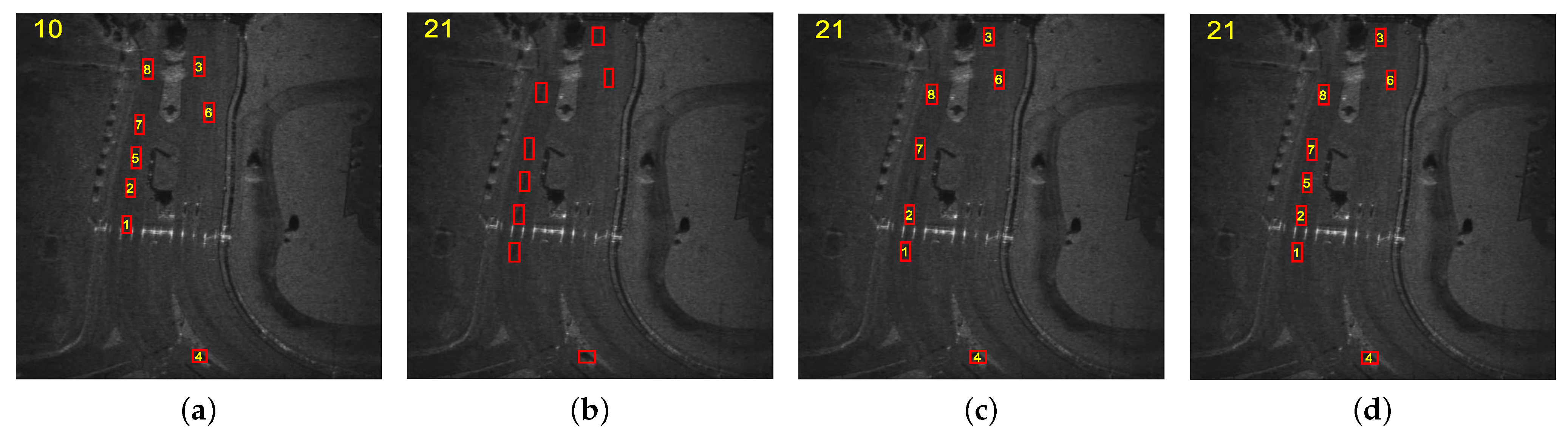

3.3.2. Tracking Results

3.4. Results of the Second Real Video SAR Dataset

3.4.1. Detection Results

3.4.2. Tracking Results

4. Discussion

4.1. Choice of K Value in the Dimension Clusters

4.2. Research on Background Occlusion

4.3. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Qin, S.; Ding, J.; Wen, L.; Jiang, M. Joint track-before-detect algorithm for high-maneuvering target indication in video SAR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8236–8248.

- Raynal, A.M.; Bickel, D.L.; Doerry, A.W. Stationary and moving target shadow characteristics in synthetic aperture radar. In Radar Sensor Technology XVIII; SPIE: Bellingham, WA, USA, 2014; pp. 413–427.

- Miller, J.; Bishop, E.; Doerry, A.; Raynal, A. Impact of ground mover motion and windowing on stationary and moving shadows in synthetic aperture radar imagery. In Algorithms for Synthetic Aperture Radar Imagery XXII; SPIE: Bellingham, WA, USA, 2015; pp. 92–109.

- Xu, Z.; Zhang, Y.; Li, H.; Mu, H.; Zhuang, Y. A new shadow tracking method to locate the moving target in SAR imagery based on KCF. In Proceedings of the International Conference in Communications, Signal Processing, and Systems, Harbin, China, 14 July 2017; pp. 2661–2669.

- Yang, X.; Shi, J.; Zhou, Y.; Wang, C.; Hu, Y.; Zhang, X. Ground moving target tracking and refocusing using shadow in video-SAR. Remote Sens. 2020, 12, 3083.

- Zhang, Y.; Yang, S.; Li, H.; Xu, Z. Shadow tracking of moving target based on CNN for video SAR system. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22 July 2018; pp. 4399–4402.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17 September 2017; pp. 3645–3649.

- Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. Fairmot: On the fairness of detection and re-identification in multiple object tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087.

- Zhou, X.; Koltun, V.; Krähenbühl, P. Tracking objects as points. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23 August 2020; pp. 474–490.

- Gao, S. Graph Theory and Network Flow Theory; Higher Education Press: Beijing, China, 2009.

- Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23 June 2014; pp. 580–587.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5 October 2015; pp. 234–241.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8 October 2016; pp. 850–865.

- Viteri, M.C.; Aguilar, L.R.; Sánchez, M. Statistical Monitoring of Water Systems. Comput. Aided Chem. Eng. 2015, 31, 735–739.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8 October 2016; pp. 21–37.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 26 July 2017; pp. 7263–7271.

- Zhang, J.; Xing, M.; Xie, Y. FEC: A feature fusion framework for SAR target recognition based on electromagnetic scattering features and deep CNN features. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2174–2187.

- Sun, X.; Wang, P.; Wang, C.; Liu, Y.; Fu, K. PBNet: Part-based convolutional neural network for complex composite object detection in remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 173, 50–65.

- He, Q.; Sun, X.; Yan, Z.; Fu, K. DABNet: Deformable contextual and boundary-weighted network for cloud detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

- Goyal, A.; Bochkovskiy, A.; Deng, J.; Koltun, V. Non-deep networks. arXiv 2021, arXiv:2110.07641.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June 2016; pp. 770–778.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 26 July 2017; pp. 2117–2125.

- MacQueen, J. Classification and analysis of multivariate observations. In 5th Berkeley Symp. Math. Statist. Probability; Statistical Laboratory of the University of California: Berkeley, CA, USA, 1967; pp. 281–297.

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A k-means clustering algorithm. J. R. Stat. Soc. Ser. C (Appl. Stat.) 1979, 28, 100–108.

- Selim, S.Z.; Ismail, M.A. K-means-type algorithms: A generalized convergence theorem and characterization of local optimality. IEEE Trans. Pattern Anal. Mach. Intell. 1984, PAMI-6, 81–87.

- Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature verification using a “siamese” time delay neural network. In Advances in Neural Information Processing Systems; Morgan Kaufmann Publishers Inc.: Denver, CO, USA, 1993; Volume 6.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20 June 2005; pp. 539–546.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17 June 2006; pp. 1735–1742.

- Hu, F.; Xia, G.-S.; Hu, J.; Zhang, L. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery. Remote Sens. 2015, 7, 14680–14707.

- Malmgren-Hansen, D.; Kusk, A.; Dall, J.; Nielsen, A.A.; Engholm, R.; Skriver, H. Improving SAR automatic target recognition models with transfer learning from simulated data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1484–1488.

- Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20 August 2006; pp. 850–855.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

- Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The clear mot metrics. Eurasip J. Image Video Process. 2008, 2008, 1–10.

- Jin, Z.; Yu, D.; Song, L.; Yuan, Z.; Yu, L. You Should Look at All Objects. arXiv 2022, arXiv:2207.07889.

- Blackman, S.S. Multiple hypothesis tracking for multiple target tracking. IEEE Aerosp. Electron. Syst. Mag. 2004, 19, 5–18.

- Yan, H.; Mao, X.; Zhang, J.; Zhu, D. Frame rate analysis of video synthetic aperture radar (ViSAR). In Proceedings of the 2016 International Symposium on Antennas and Propagation (ISAP), Okinawa, Japan, 24 October 2016; pp. 446–447.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20 June 2005; pp. 886–893.

| No. | Layer | Size | Number | Stride | Output |
|---|---|---|---|---|---|
| 0 | Input | - | - | - | 32 × 32 |
| 1 | Conv | 3 × 3 | 64 | 1 × 1 | 32 × 32 |
| 2 | Conv | 3 × 3 | 64 | 1 × 1 | 32 × 32 |
| 3 | Pool | 2 × 2 | - | 2 × 2 | 16 × 16 |
| 4 | Conv | 3 × 3 | 128 | 1 × 1 | 16 × 16 |
| 5 | Conv | 3 × 3 | 128 | 1 × 1 | 16 × 16 |
| 6 | Pool | 2 × 2 | - | 2 × 2 | 8 × 8 |
| 7 | Conv | 3 × 3 | 256 | 1 × 1 | 8 × 8 |
| 8 | Conv | 3 × 3 | 256 | 1 × 1 | 8 × 8 |
| 9 | Conv | 3 × 3 | 256 | 1 × 1 | 8 × 8 |
| 10 | Pool | 2 × 2 | - | 2 × 2 | 4 × 4 |
| 11 | Fc | 4 × 4 × 256 | 1024 | - | - |
| 12 | Fc | 1024 | 1024 | - | - |
| 13 | Fc | 1024 | 128 | - | - |
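As a consistency check on the backbone table, the short script below walks layers 1 to 10 and reproduces their output sizes. It assumes "same" padding (1 pixel) for the 3 × 3 convolutions, which is what lets each convolution preserve the spatial size shown in the table; the padding is not stated in the source, and the names `LAYERS`, `FC_UNITS`, and `output_shapes` are hypothetical. The flattened size of the last pooling output, 4 × 4 × 256 = 4096, matches the input of layer 11, and the final layer yields a 128-dimensional embedding.

```python
# Layers 1-10 of the table: (type, kernel size, number of kernels, stride).
LAYERS = [
    ("conv", 3, 64, 1), ("conv", 3, 64, 1), ("pool", 2, None, 2),
    ("conv", 3, 128, 1), ("conv", 3, 128, 1), ("pool", 2, None, 2),
    ("conv", 3, 256, 1), ("conv", 3, 256, 1), ("conv", 3, 256, 1), ("pool", 2, None, 2),
]
FC_UNITS = [1024, 1024, 128]  # layers 11-13

def output_shapes(size=32):
    """Return (channels, height, width) after each of layers 1-10 for a size x size input."""
    shapes, channels = [], None
    for kind, k, num, stride in LAYERS:
        pad = (k - 1) // 2 if kind == "conv" else 0   # assumed 'same' padding for convolutions
        size = (size + 2 * pad - k) // stride + 1
        if kind == "conv":
            channels = num                             # pooling keeps the channel count
        shapes.append((channels, size, size))
    return shapes

shapes = output_shapes()
c, h, w = shapes[-1]
print(shapes[-1], c * h * w, FC_UNITS[-1])  # (256, 4, 4) 4096 128
```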

| Framework | Extractor | mAP@50 | mAP@75 | mAP@[50:95] |
|---|---|---|---|---|
| Faster-RCNN | Alexnet | 0.874 | 0.327 | 0.424 |
| | VGG16 | 0.933 | 0.503 | 0.516 |
| | Resnet50 | 0.910 | 0.393 | 0.454 |
| | Alexnet + FPN | 0.797 | 0.214 | 0.354 |
| | VGG16 + FPN | 0.880 | 0.432 | 0.465 |
| | Resnet50 + FPN (Ours) | 0.970 | 0.660 | 0.600 |

| Target | MT? | ML? | FM | IDS |
|---|---|---|---|---|
| 1 | Yes | No | 0 | 0 |
| 2 | Yes | No | 0 | 0 |
| 3 | Yes | No | 0 | 0 |
| 4 | Yes | No | 0 | 0 |
| 5 | Yes | No | 0 | 0 |
| 6 | Yes | No | 2 | 0 |
| 7 | Yes | No | 4 | 0 |
| 8 | Yes | No | 0 | 0 |
| 9 | Yes | No | 0 | 0 |
| 10 | Yes | No | 0 | 0 |
| 11 | Yes | No | 1 | 0 |
| 12 | Yes | No | 0 | 0 |
| 13 | Yes | No | 0 | 0 |
| 14 | Yes | No | 0 | 0 |
| 15 | Yes | No | 0 | 0 |
| 16 | Yes | No | 0 | 0 |
| 17 | Yes | No | 0 | 0 |
| 18 | Yes | No | 0 | 0 |
| Total | 18 | 0 | 7 | 0 |

| Method | MTTA | MTTP | FPS |
|---|---|---|---|
| MHT | 0.277 | 0.743 | 9.771 |
| SORT | 0.862 | 0.831 | 14.501 |
| Deep SORT | 0.885 | 0.855 | 10.816 |
| Siam-Sort (Ours) | 0.947 | 0.869 | 12.505 |

| Target | MT? | ML? | FM | IDS |
|---|---|---|---|---|
| 1 | Yes | No | 0 | 0 |
| 2 | Yes | No | 0 | 0 |
| 3 | Yes | No | 0 | 0 |
| 4 | Yes | No | 0 | 0 |
| 5 | Yes | No | 0 | 0 |
| 6 | Yes | No | 0 | 0 |
| 7 | Yes | No | 0 | 0 |
| 8 | Yes | No | 0 | 0 |
| 9 | Yes | No | 0 | 0 |
| 10 | Yes | No | 0 | 0 |
| 11 | Yes | No | 0 | 0 |
| 12 | Yes | No | 0 | 0 |
| Total | 12 | 0 | 0 | 0 |

| Method | MTTA | MTTP | FPS |
|---|---|---|---|
| MHT | 0.798 | 0.620 | 6.670 |
| SORT | 0.890 | 0.642 | 9.501 |
| Deep SORT | 0.950 | 0.825 | 8.779 |
| Siam-Sort (Ours) | 0.954 | 0.867 | 9.340 |

| Dimension Clusters | mAP@50 | mAP@75 | mAP@[50:95] |
|---|---|---|---|
| ✗ | 0.958 | 0.598 | 0.556 |
| ✓ | 0.970 | 0.660 | 0.600 |

| Schemes | MT | ML | FM | IDS | MTTA | MTTP |
|---|---|---|---|---|---|---|
| Baseline | 14 | 0 | 18 | 16 | 0.877 | 0.831 |
| Baseline + A | 17 | 0 | 12 | 11 | 0.884 | 0.854 |
| Baseline + A + B | 18 | 0 | 7 | 0 | 0.947 | 0.869 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fang, H.; Liao, G.; Liu, Y.; Zeng, C. Siam-Sort: Multi-Target Tracking in Video SAR Based on Tracking by Detection and Siamese Network. Remote Sens. 2023, 15, 146. https://doi.org/10.3390/rs15010146
