Abstract

The construction of diverse dictionaries for sparse representation in hyperspectral image (HSI) classification has been a hot topic over the past few years. However, compared with convolutional neural network (CNN) models, dictionary-based models cannot extract deeper spectral information, which reduces their performance in HSI classification. Moreover, dictionary-based methods have low discriminative capability, which leads to less accurate classification. To solve these problems, we propose a deep learning-based structure dictionary for HSI classification. The core ideas are threefold: (1) To extract abundant spectral information, we incorporate deep residual neural networks into dictionary learning and represent input signals in the deep feature domain. (2) To enhance the discriminative ability of the proposed model, we optimize the structure of the dictionary and design a sharing constraint on the sub-dictionaries, so that the general and specific features of HSI samples can be learned separately. (3) To further enhance classification performance, we design two kinds of loss functions: a coding loss and a discriminating loss. The coding loss realizes the group sparsity of the code coefficients, so that within-class spectral samples can be represented intensively and effectively. The Fisher discriminating loss enforces sparse representation coefficients with large between-class scatter. Extensive tests on hyperspectral datasets show that the developed method is effective and outperforms other existing methods.

1. Introduction

Hyperspectral images (HSIs) record spectral data sampled from hundreds of contiguous narrow spectral bands [1]. The greatly increased dimensionality of HSI data provides discriminative spectral information in each pixel, but it also makes accurate analysis of various land covers in remote sensing highly challenging [2,3,4] because of intrinsic and extrinsic spectral variability. HSI feature learning [5,6,7,8], which aims to extract invariant characteristics, has become a crucial step in HSI analysis and has been widely used in different applications (e.g., classification, target detection, and image fusion). Therefore, the focus of our framework is extracting effective spectral features for HSI classification.

Generally, existing HSI feature extraction (FE) methods can be classified into linear and nonlinear methods. Common linear FE models are band-clustering and merging-based approaches [9,10], which split highly correlated spectral bands into several groups and derive a representative band or feature for each group. In general, these techniques have low computational cost and can be used extensively in real applications. Kumar et al. [9] proposed a band-clustering approach based on discriminative bases that takes all classes into account simultaneously. Rashwan et al. [10] used the Pearson correlation coefficient of adjacent bands to perform band splitting.
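As a rough illustration of correlation-based band splitting, the sketch below groups adjacent bands while the Pearson correlation between neighbouring bands stays above a threshold, and then averages each group. It is not the exact procedure of [9] or [10]; the function names `split_bands` and `merge_bands`, the threshold of 0.9, and the averaging step are assumptions made for illustration.

```python
import numpy as np

def split_bands(pixels, threshold=0.9):
    """Group adjacent bands whose Pearson correlation is >= threshold.

    pixels: array of shape (n_pixels, n_bands).
    Returns a list of (start, end) band-index pairs, end exclusive.
    """
    n_bands = pixels.shape[1]
    corr = np.corrcoef(pixels, rowvar=False)  # (n_bands, n_bands)
    groups, start = [], 0
    for b in range(1, n_bands):
        # Start a new group when neighbouring bands decorrelate.
        if corr[b - 1, b] < threshold:
            groups.append((start, b))
            start = b
    groups.append((start, n_bands))
    return groups

def merge_bands(pixels, groups):
    """Average the bands inside each group to get one feature per group."""
    return np.stack([pixels[:, s:e].mean(axis=1) for s, e in groups], axis=1)

# Toy data: bands 0-1 are perfectly correlated, as are bands 2-3.
t = np.arange(6, dtype=float)
u = np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])
toy_pixels = np.stack([t, 2 * t + 1, u, 3 * u], axis=1)
toy_groups = split_bands(toy_pixels)
toy_features = merge_bands(toy_pixels, toy_groups)
```

On the toy data, the four bands collapse into two features, one per correlated group.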

The other linear FE models are projection models, which linearly project or transform the spectral information into a feature space with lower dimensions. Principal component analysis (PCA) [11] projects the samples onto the eigenvectors of the covariance matrix to capture the maximum variance and has been widely used for hyperspectral investigation [12]. Green et al. [13] proposed a maximum noise fraction (MNF) model that performs the projection by maximizing the signal-to-noise ratio (SNR). Despite their low complexity, linear models have limited representation capability and fail to handle inherently nonlinear hyperspectral data.
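The PCA projection described above can be sketched with NumPy as follows. This is a minimal illustration of the standard eigendecomposition route; the function name `pca_project` and the pixel-matrix layout (one spectrum per row) are assumptions.

```python
import numpy as np

def pca_project(pixels, n_components):
    """Project spectra onto the top eigenvectors of the band covariance matrix.

    pixels: array of shape (n_pixels, n_bands).
    Returns the projected features and the corresponding eigenvalues.
    """
    centered = pixels - pixels.mean(axis=0)
    cov = np.cov(centered, rowvar=False)          # (n_bands, n_bands)
    eigvals, eigvecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]                # (n_bands, n_components)
    return centered @ components, eigvals[order]
```

The variance of each projected feature equals the corresponding eigenvalue, which is why keeping the leading eigenvectors retains the maximum variance.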

A nonlinear model handles hyperspectral data with a nonlinear transformation. Such nonlinear features are likely to perform better than linear features because of the presence of nonlinear class boundaries. One of the most widely used nonlinear models is the kernel-based method, which maps the data into a higher-dimensional space to achieve better separability. Kernel versions of linear algorithms, e.g., kernel PCA (KPCA) [14] and kernel ICA (KICA) [15], were proposed and used for HSI classification [16] and change detection [17]. The support vector machine (SVM) [18] is a representative kernel-based approach and has shown effective performance in HSI classification [19]. Bruzzone et al. [20] proposed a hierarchical SVM to capture features in a semi-supervised manner. Recently, a spectral-spatial SVM-based multi-layer learning algorithm was designed for HSI classification [21]. However, the above methods usually lack a theoretical foundation for kernel selection and may not produce satisfactory results in practical applications.

Deep learning is another nonlinear approach with great potential for learning features [22]. Chen et al. designed complex frameworks [23,24] that combine PCA, a deep learning architecture, and logistic regression, and used them to verify the suitability of a stacked auto-encoder (SAE) and a deep belief network (DBN) for HSI classification. Recurrent neural networks (RNNs) [25,26] process the full set of spectral bands as a sequence and use a flexible network configuration for classifying HSIs. Rasti et al. [27] provided a technical overview of the existing techniques for HSI classification, in particular deep learning models. Although deep learning models offer powerful information extraction ability, their discriminative ability needs to be improved through a rational design of loss functions.

Dictionary-based methods have emerged in recent years for HSI feature learning. To extract features effectively, these methods represent high-dimensional spectral data as a linear combination of dictionary atoms, with a low reconstruction error and a high sparsity level in the abundance matrix. Sparse representation-based classification (SRC) [28] constructed an unsupervised dictionary that was used for HSI classification in [29], and it was one of the first methods to perform classification with the help of dictionary coding. SRC performs impressively in face recognition and is robust to different kinds of noise [28]; however, its redundant atoms and disordered structure make it unsuitable for intricate HSI classification [29,30]. Yang et al. [31] constructed a class-specific dictionary to overcome the shortcomings of SRC, but it does not consider the discriminative ability between different coefficients, resulting in low classification accuracy. Yang et al. [32] proposed a complicated model called Fisher discriminant dictionary learning (FDDL), which uses the Fisher criterion to learn a structured dictionary, but this model is time consuming and its reconstructive ability needs to be improved. Gu et al. [33] designed an efficient dictionary pair learning (DPL) model that replaces the sparsity constraint with a block-diagonal constraint to reduce the computational cost, but the linear projection of the analysis dictionary restricts the classification performance. Akhtar et al. [34] used a Bayesian framework to learn discriminative dictionaries for hyperspectral classification. Tu et al. [35] combined a discriminative sub-dictionary with a multi-scale super-pixel strategy and achieved a significant improvement in classification. Dictionary-based methods are promising for representing HSI features. However, the above dictionaries have difficulty extracting deeper spectral information and suffer from poor discriminative ability.

The latest works [36,37] incorporated a deep learning module into a dictionary algorithm and achieved impressive results for target detection. Nevertheless, the code coefficients of these models require more powerful constraints, and the form of the combination needs to be improved. To address the aforementioned issues, we propose a deep learning-based structure dictionary model for HSI classification. The main contributions of this paper are as follows:

- (1) We devise an effective feature learning framework that adopts convolutional neural networks (CNNs) to capture abundant spectral information and constructs a structure dictionary to predict HSI samples.

- (2) We design a novel shared constraint on the sub-dictionaries. In this way, the common and specific features of HSI samples are learned separately to represent features in a more discriminative manner.

- (3) We carefully design two kinds of loss functions, i.e., a coding loss and a discriminating loss, for the code coefficients to enhance the classification performance.

- (4) Extensive experiments conducted on several hyperspectral datasets demonstrate the superiority of the proposed method in terms of performance and efficiency in comparison with state-of-the-art techniques.

The rest of the paper is organized as follows. Section 2 first briefly describes the experimental datasets, which are widely used in HSI classification, and then details the proposed method. Section 3 and Section 4 present the experimental results and the corresponding discussion to demonstrate the effectiveness of the proposed method. Finally, conclusions are drawn in Section 5.

2. Materials and Methodology

In this section, we first introduce the experimental datasets and then elaborate the framework for our deep learning-based structure dictionary method.

2.1. Experimental Datasets

Alongside the research progress in designing robust algorithms for HSI classification, some researchers have devoted themselves to constructing publicly available datasets, which provide the community with fair comparisons across different algorithms. In this subsection, we give a brief review of the band range, image resolution, and classes of interest of four popular datasets, which are also employed in this article to compare our method with other existing methods.

Center of Pavia [38]: This dataset is acquired by the reflective optics system imaging spectrometer (ROSIS) with 115 spectral bands ranging from 0.43 to 0.86 μm over the urban area in Pavia. It should be mentioned that the noisy and water absorption bands are discarded by the authors in [38] and finally obtaining HSIs with dimension of and nine land cover classes.

Botswana [39]: This dataset was captured by the NASA earth observing one (EO-1) with 145 bands ranging from 0.4 to 2.5 μm over the Okavango Delta, Botswana. The dataset contains pixels and 14 classes of interests.

Houston 2013 [40]: This dataset was collected by the compact airborne spectrographic imager (CASI) with 144 bands ranging from 0.38 to 1.05 μm over the campus of the University of Houston and the neighboring urban area. The dataset contains pixels and 15 classes of interest.

Houston 2018 [41]: This dataset was acquired by the same CASI sensor with 48 bands covering wavelengths between 0.38 and 1.05 μm over the same region as Houston 2013. Houston 2018 contains pixels and 20 classes of interest.

2.2. Methodology

Dictionary learning aims to learn a set of atoms, called visual words in the computer vision community, in which a few atoms can be linearly combined to approximate a given sample well [42]. However, the role of sparse coding in classification is still an open problem: the code coefficients of recent models require more powerful constraints, and the form of the combination needs to be improved. Therefore, we propose a deep learning-based structure dictionary model in this paper.

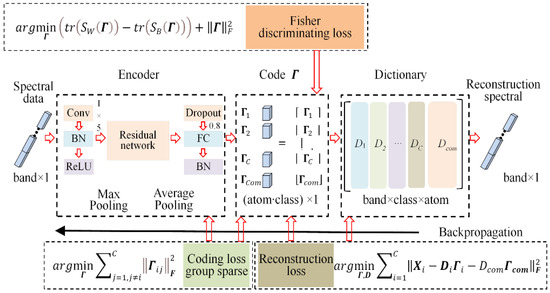

Figure 1 presents the pipeline of the developed framework, in which a CNN encodes the spectral information and a structured dictionary is established to classify HSIs. Spectral data are first encoded by the CNN model, in which residual networks are used to optimize the main network. The group sparse code is obtained from the fully connected layer. Meanwhile, two loss functions, i.e., the coding loss and the Fisher discriminating loss, are calculated to optimize the code coefficients. More importantly, a discriminative dictionary is constructed through the reconstruction loss to enhance the discriminative ability of the developed model.

Figure 1.

Workflow of the proposed feature extraction model.

2.2.1. Residual Networks Encoder

Compared with the common dictionary-based models using sparse constraints, the group sparsity models have more intensive and effective code coefficients, i.e., the coefficient values converge to the diagonal of the matrix [33] and achieve more effective results in classification applications. Therefore, we replace the sparsity constraint with a group sparsity constraint for the dictionary model and construct an encoder to acquire the group sparsity effect.

Suppose is coding coefficients of discriminative dictionary for spectral samples . We want to construct an encoder , and apply , to project the spectral samples into a nearly null space, i.e.,

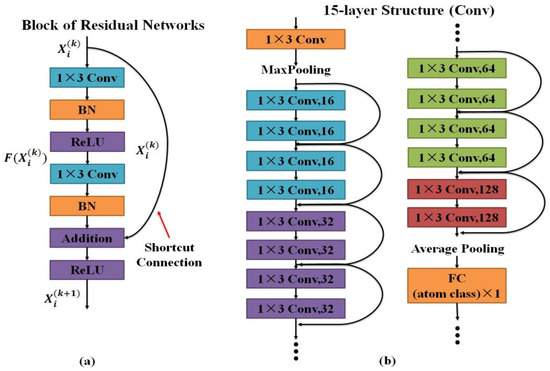

Considering the poor performance of linear projection, a CNN encoder is designed to perform the transformation, which greatly enhances the performance of dictionary learning. He et al. [43] observed that deeper networks suffer from a degradation problem: as the network depth increases, accuracy becomes saturated and then degrades rapidly. Therefore, residual networks are used to address the degradation problem and increase the convergence rate. As depicted in Figure 2, the building block of the residual networks (Figure 2a) contains two convolutional layers (Conv), two batch normalization layers (BN), and two leaky ReLU layers. The output of residual networks is calculated as follows:

Figure 2.

Network architectures for our encoder: (a) a block of residual networks, (b) main structure of CNNs.

We explicitly let the stacked nonlinear layers fit the residual function $F(x) := H(x) - x$, so that the original mapping $H(x)$ is recast into $F(x) + x$. The formulation of Equation (2) can be realized by feedforward neural networks with “shortcut connections” (Figure 2a). Shortcut connections [43,44] are those skipping one or more layers. The entire network can still be trained end-to-end by stochastic gradient descent (SGD) with backpropagation.
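The shortcut connection can be illustrated with a small NumPy forward pass. The sketch below is a single-channel, 1-D simplification and not the network in Figure 2: the layer ordering (Conv, BN, leaky ReLU, Conv, BN, add, leaky ReLU), the absence of learned BN parameters, and all function names are assumptions.

```python
import numpy as np

def leaky_relu(x, slope=0.01):
    return np.where(x > 0, x, slope * x)

def batch_norm(x, eps=1e-5):
    """Normalise over the batch axis (no learned scale or shift in this sketch)."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

def conv1d_same(x, kernel):
    """Single-channel 1-D convolution along the spectral axis with 'same' padding.

    np.convolve flips the kernel (true convolution), which does not matter here.
    """
    return np.stack([np.convolve(row, kernel, mode="same") for row in x])

def residual_block(x, k1, k2):
    """Compute leaky_relu(F(x) + x), where F is Conv-BN-LeakyReLU-Conv-BN."""
    out = leaky_relu(batch_norm(conv1d_same(x, k1)))
    out = batch_norm(conv1d_same(out, k2))
    return leaky_relu(out + x)  # the shortcut connection adds the input back
```

When the second kernel is all zeros, the residual branch contributes nothing and the block reduces to the activation of its input, which shows why an identity mapping is easy for such a block to represent.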

Based on the above residual block, we construct a 15-layer encoder (counted in convolutional layers), as presented in Figure 2b. We first employ a convolutional layer to capture a large receptive field [45], and max pooling is used to select the most salient values. Then, we stack seven residual blocks, in which a convolutional layer is used to acquire an effective receptive field. More importantly, the number of feature maps increases from 16 to 128 across the convolutional kernels to acquire abundant spectral information. Finally, we adopt average pooling to compress the spectral information and employ a fully connected (FC) layer to output the coding coefficients. The number of FC outputs depends on the product of the number of spectral bands and the number of sub-dictionary atoms. For backpropagation, we use SGD with a batch size of 8. The learning rate starts from 0.1, and the number of epochs is 500.

We apply the encoder P to extract spectral information from the HSI and enforce the code coefficients to be group sparse. To enhance the discriminative ability of our model, we build a structured dictionary, where each sub-dictionary can be directly used to represent the samples of a specific class, i.e., the dictionaries are interpretable. The structure dictionary can be calculated as follows:

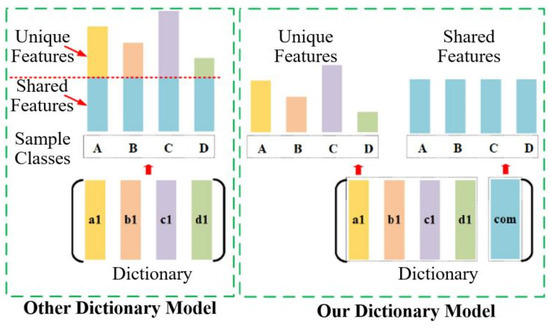

The first term of Equation (3) is the reconstruction loss, which is used to construct the structure dictionary. Each sub-dictionary is established and learned from the ith-class samples. The second term of Equation (3) is the coding loss. However, the structure of the existing dictionary needs optimization to learn the common and specific features of HSI samples. As depicted in Figure 3, the test samples contain common characteristics, i.e., the features they share. To solve this problem, we design a shared constraint for the sub-dictionaries. Our shared constraint (the com sub-dictionary) is built to describe duplicated information (the common characteristics in Figure 3). Then, the discriminative characteristics are “amplified” relative to the original characteristics.

Figure 3.

Overview of the built dictionary of the developed model. Shared constraints are used to describe the common features of all classes of HSI samples.

2.2.2. Dictionary Learning

Here, we design a sub-dictionary to calculate the class-shared characters as follows:

where denotes the shared (common) sub-dictionary. Each sub-dictionary (both specific and common ones) contains atoms, and each atom is column vector. The matrix of specific and common sub-dictionaries is randomly initialized, and corresponding atoms are constantly updated according to the objective function. The corresponding objective function is modified as follows:

where is the coding coefficient for common sub-dictionary. With the calculation of term , the results of term tend to be closer to zero, and the corresponding reconstructive ability of the structured dictionary will be improved. Meanwhile, the coding loss and discriminating loss will facilitate the construction of shared (common) sub-dictionaries.
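To make the role of the shared sub-dictionary concrete, the sketch below reconstructs a sample from one class-specific sub-dictionary plus the common sub-dictionary and assigns the class with the smallest residual. This is the classical residual-based rule of SRC-style methods and is only an illustration: the codes come from least squares (a stand-in for the CNN encoder), and the names `class_residuals`, `classify`, `sub_dicts`, and `d_com` are hypothetical.

```python
import numpy as np

def class_residuals(x, sub_dicts, d_com):
    """Squared residual of x when reconstructed from [D_i, D_com] for each class i.

    x: spectrum of shape (n_bands,).
    sub_dicts: list of class-specific sub-dictionaries, each (n_bands, k).
    d_com: shared (common) sub-dictionary of shape (n_bands, k_com).
    """
    residuals = []
    for d_i in sub_dicts:
        d = np.hstack([d_i, d_com])                  # atoms available to class i
        code, *_ = np.linalg.lstsq(d, x, rcond=None)  # stand-in for the encoder
        residuals.append(np.sum((x - d @ code) ** 2))
    return np.array(residuals)

def classify(x, sub_dicts, d_com):
    """Assign the class whose atoms (plus the shared atoms) reconstruct x best."""
    return int(np.argmin(class_residuals(x, sub_dicts, d_com)))
```

Because every class may use the shared atoms, information common to all classes no longer has to be duplicated in each class-specific sub-dictionary.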

In our framework, the dictionary is calculated by the single convolutional layer, and the convolutional weights serve as dictionary coefficients. Therefore, the dictionary is updated by the back-propagation (BP) algorithm. We design additional channels for shared (common) sub-dictionaries that will also be updated during BP processing. The main difference from the CNN model (end-to-end) is that we design a dictionary module, which contains various sub-dictionaries, to optimize the discriminative ability. Moreover, we design coding and discriminating loss for the middle variable (code coefficients ), which is a very different algorithm structure.

2.2.3. Loss Functions

To enhance the discriminative ability of the developed model, we design two kinds of loss functions for the proposed model. First, we design the coding loss to realize a fast and effective spectral data encoding.

Then, Fisher discriminative loss is designed to enhance the discriminative ability of our model. Meanwhile, a reconstruction loss is also used to build the structure dictionary. Overall, we apply three loss functions to optimize the classification performance.
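A Fisher-type criterion on code coefficients can be sketched as follows. The version below uses the common trace-based form found in FDDL-style methods (within-class scatter minus between-class scatter); this exact form and the function name `fisher_loss` are assumptions and are not necessarily identical to the loss used in the proposed model.

```python
import numpy as np

def fisher_loss(codes, labels):
    """Return tr(S_W) - tr(S_B) for codes of shape (n_samples, n_atoms)."""
    overall_mean = codes.mean(axis=0)
    within, between = 0.0, 0.0
    for c in np.unique(labels):
        class_codes = codes[labels == c]
        class_mean = class_codes.mean(axis=0)
        # Within-class scatter: spread of codes around their class mean.
        within += np.sum((class_codes - class_mean) ** 2)
        # Between-class scatter: spread of class means around the overall mean.
        between += len(class_codes) * np.sum((class_mean - overall_mean) ** 2)
    return within - between

toy_codes = np.array([[0.0, 0.0], [0.0, 0.2], [4.0, 4.0], [4.0, 4.2]])
separated = fisher_loss(toy_codes, np.array([0, 0, 1, 1]))
mixed = fisher_loss(toy_codes, np.array([0, 1, 0, 1]))
```

Minimizing this quantity pulls codes of the same class together and pushes class means apart, so `separated` is much smaller than `mixed` on the toy codes.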

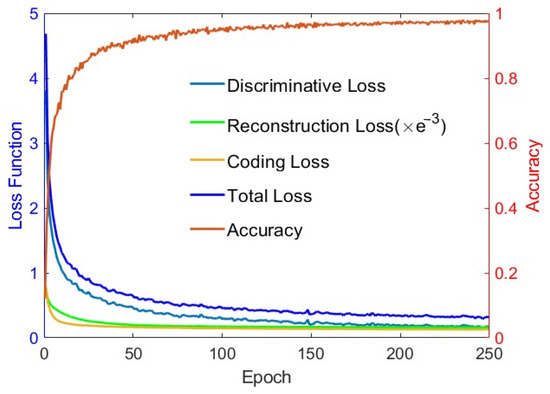

Two kinds of discriminative loss functions can be implemented by programming. One is Fisher discriminative loss and the other is cross-entropy loss . Both loss functions have been implemented in the program, and we will provide two versions of the final program for researchers. In this paper, all of the results are calculated with cross-entropy loss. Figure 4 shows the variation trend of three loss function values and classification accuracy with increasing epoch. All loss functions are convergent, and our approach achieves excellent classification accuracy. Therefore, the final objective function is as follows:

where and are the scalar constants and they will be discussed in experiments.

Figure 4.

The loss function value of training samples and classification accuracy of the developed model versus the number of epochs.

3. Experimental Results and Analysis

In this section, we quantitatively and qualitatively evaluate the classification performance of the proposed model on four public datasets, namely Pavia [38], Botswana [39], Houston 2013 [40], and Houston 2018 [41]. We compare the performance of the proposed method with other existing algorithms, including SVM [18,21], FDDL [32], DPL [33], ResNet [43], AE [27], RNN [27], CNN [27], and CRNN [27], for HSI classification. We report the overall accuracy (OA), average accuracy (AA), and kappa coefficient [46] on the different datasets and present the corresponding classification maps. Furthermore, we analyze the classification performance in detail for each experimental dataset.
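For reference, the three reported indices can be computed from a confusion matrix as in the sketch below. The definitions are the standard ones; the function name `accuracy_metrics` and the convention that rows hold the true classes are assumptions.

```python
import numpy as np

def accuracy_metrics(conf):
    """Return OA, AA, and Cohen's kappa from a confusion matrix (rows: true classes)."""
    conf = np.asarray(conf, dtype=float)
    total = conf.sum()
    oa = np.trace(conf) / total                       # fraction of correct samples
    per_class = np.diag(conf) / conf.sum(axis=1)      # per-class accuracy (recall)
    aa = per_class.mean()
    # Agreement expected by chance from the row and column marginals.
    expected = np.sum(conf.sum(axis=0) * conf.sum(axis=1)) / total**2
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa

oa, aa, kappa = accuracy_metrics([[8, 2], [1, 9]])
```

For the two-class example matrix, OA and AA are both 0.85 and kappa is 0.7, since half of the agreement would be expected by chance.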

3.1. Sample Selection

We randomly choose 10% of the labeled samples in each dataset as the training data. To overcome the class imbalance issue, we adopt a weighted sample generation strategy so that every class has the same number of training samples. New samples are generated as follows:

where is the new sample generated by combining samples and , and is a random constant between 0 and 1. Samples and are randomly selected from the same class of training data. All the compared methods are trained on the dataset balanced with this strategy.
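A minimal sketch of such a balancing step is given below. It assumes that a new sample is a convex combination of two randomly chosen samples of the same class, with the random weight drawn uniformly from [0, 1], and that every class is oversampled to the size of the largest class; the function name `balance_classes` and these details are assumptions.

```python
import numpy as np

def balance_classes(samples, labels, rng):
    """Oversample every class to the size of the largest class.

    samples: array of shape (n_samples, n_bands); labels: (n_samples,).
    New samples are convex combinations of two random samples of the same class.
    """
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    new_x, new_y = [samples], [labels]
    for c, n in zip(classes, counts):
        pool = samples[labels == c]
        for _ in range(target - n):
            i, j = rng.integers(0, len(pool), size=2)
            lam = rng.uniform(0.0, 1.0)               # random weight in [0, 1]
            mixed = lam * pool[i] + (1.0 - lam) * pool[j]
            new_x.append(mixed[None, :])
            new_y.append(np.array([c]))
    return np.concatenate(new_x), np.concatenate(new_y)
```

Generated spectra always lie on the segment between two real spectra of the same class, so they stay within the range of that class in every band.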

3.2. Parameter Setting

In the proposed model, two groups of free parameters need to be adjusted: (1) the number of dictionary atoms and (2) regularization parameters and . The above parameter settings are a critical factor to ensure the performance of the model and will be analyzed.

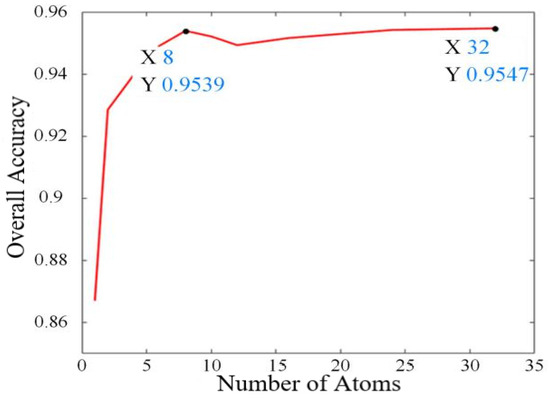

3.2.1. Number of Dictionary Atoms

We set all the sub-dictionaries to have the same number of atoms. The number of atoms is estimated on the Houston 2013 dataset, as depicted in Figure 5. The classification OA increases with the number of atoms per sub-dictionary while the number is below 8, whereas OA changes little as the number of atoms grows beyond 10. We therefore set the number of atoms to 8 for each dataset to train the developed model efficiently.

Figure 5.

The classification OA under different numbers of atoms for each sub-dictionary.

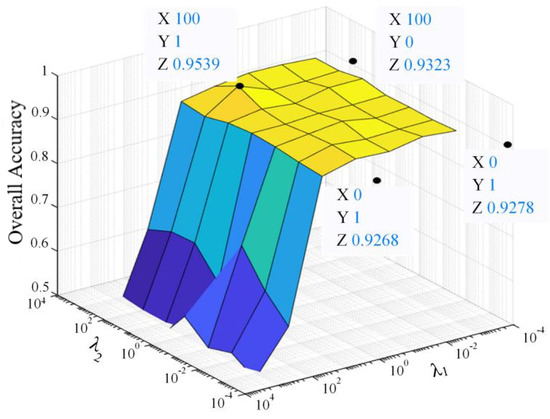

3.2.2. Constraint Coefficients and

and mainly balance the reconstruction and coding losses in Equation (6). We test the proposed model with regularization parameters and ranging from 0 to , and select the values with the highest accuracy. Figure 6 presents the results on the Houston 2013 dataset. The OA values decrease greatly with the increase of (beyond 10). This result demonstrates that our model is sensitive to the coding loss. We set and for the classification of the Houston 2013 dataset.

Figure 6.

The classification OA under different regularization parameters and .

3.3. Classification Performance Analysis for Different Datasets

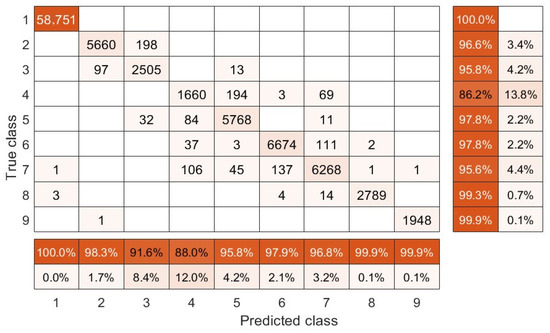

3.3.1. Center of Pavia

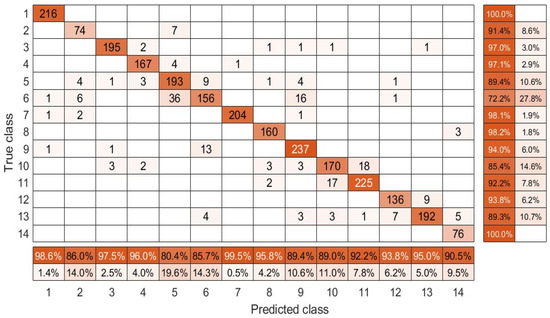

Table 1 shows the classification results of the compared algorithms. According to the presented scores, our method outperforms the other algorithms, especially on the Water, Tiles, and Bare Soil classes. Benefiting from the designed deep and sub-dictionary framework, the proposed method performs better than the other dictionary learning-based methods (e.g., FDDL). Compared with other CNN-based models, by involving the dictionary structure, our method shows better deep feature extraction ability and achieves higher OA, AA, and kappa coefficients with , and , respectively. In addition, Figure 7 presents the confusion matrix of the developed model, which also indicates an effective discrimination ability for surface regions.

Table 1.

Classification Accuracy for Center of Pavia Dataset. The red bold fonts and blue italic fonts indicate the best and the second best performance.

Figure 7.

The confusion matrix of the developed model on the Center of Pavia dataset.

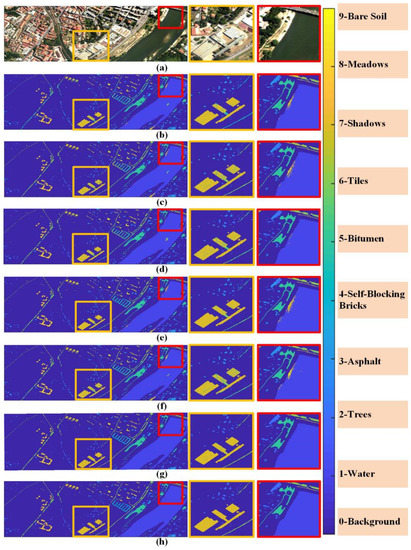

Figure 8 presents the classification maps acquired by various methods on the Center of Pavia dataset. Figure 8a,h are the pseudo-color image and ground truth map, and Figure 8b–g depict the corresponding classification maps of FDDL, DPL, ResNet, RNN, CNN, and the proposed model, respectively. Our method shows better visual performance than the dictionary-based and CNN-based algorithms in both the smoothness within regions of the same material and the sharpness of edges between regions of different materials. For better comparison, we highlight river bank and building regions with red and yellow rectangles in Figure 8. In the red rectangle, all of the methods except the CNN model and our method incorrectly classify some water samples into the Bare Soil class. In the yellow rectangle, our model achieves the smoothest classification results on the Bare Soil class, whereas the CNN method confuses some samples with the Bitumen class, which leads to an inferior score compared with our proposed algorithm.

Figure 8.

Classification maps of the Center of Pavia dataset with methods in comparison: (a) pseudo-color image; (b) FDDL; (c) DPL; (d) ResNet; (e) RNN; (f) CNN; (g) Ours; (h) ground truth. The yellow and red rectangles correspond to building and water areas.

3.3.2. Botswana

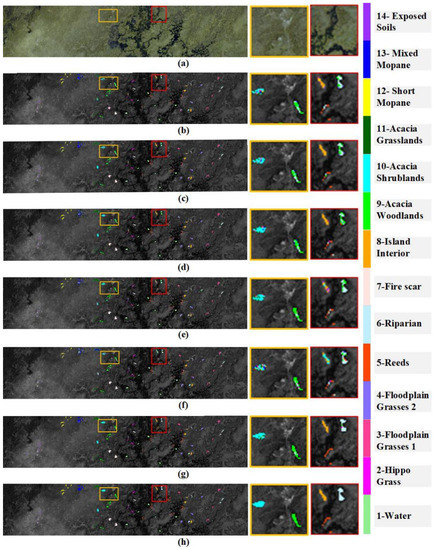

Table 2 presents the class-specific classification accuracy on the Botswana dataset, where our algorithm achieves the highest classification accuracy in over 50 percent of the classes (8 out of 14). In particular, our method performs better than the other methods on the Reeds, Acacia Woodlands, and Exposed Soils classes. To be more specific, for the Reeds class our method is almost higher than the others, and higher than comparison methods for the Exposed Soils class. In addition, according to the corresponding confusion matrix in Figure 9, our algorithm outperforms other methods in terms of OA, AA, and kappa coefficients with , , and , respectively. Such performance further demonstrates the superior discriminative capability of our model.

Table 2.

Classification Accuracy for Botswana Dataset. The red bold fonts and blue italic fonts indicate the best and the second best performance.

Figure 9.

Confusion matrix of the developed model on the Botswana dataset.

Figure 10 shows the classification maps for the Botswana dataset, where Figure 10a,h are the pseudo-color image and ground truth map, and Figure 10b–g are the corresponding classification results of FDDL, DPL, ResNet, RNN, CNN, and the proposed model. We mark the most misplaced regions with yellow and red rectangles for more obvious comparison. As shown in yellow rectangle region, Acacia Woodlands and Acacia Shrublands are wrongly classified into Exposed Soils or the Floodplain Grasses 1 class by other methods. As for the rectangles colored red, other methods almost lose the ability to distinguish between different land covers, i.e., the CNN method misclassified the Island Interior class into the Acacia Shrublands class. In contrast, our approach removed noisy scattered points and led to smoother classification results without blurring the boundaries. The superior performance could be owing to the effectiveness of the proposed structured dictionary learning model.

Figure 10.

Classification map for the Botswana dataset with methods in comparison: (a) pseudo-color image; (b) FDDL; (c) DPL; (d) ResNet; (e) RNN; (f) CNN; (g) ours; (h) ground truth. Rectangles colored as red and yellow represent mountain and grassland areas.

3.3.3. Houston 2013

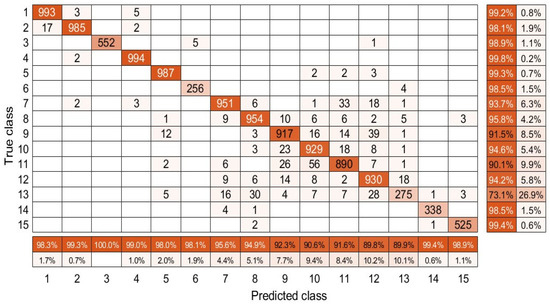

The classification results achieved by the compared methods on the Houston 2013 dataset are presented in Table 3, and Figure 11 presents the confusion matrix of the developed model. As shown in Table 3, the accuracy of our method is more than higher than that of the other methods on the Commercial, Road, Highway, and Parking Lot 1 classes, while it is competitive with the other methods on the remaining classes. Specifically, for the Stressed Grass class, our method is only lower than the AE method and lower than the CNN method, but almost two percent higher on average than the other methods. It is noteworthy that the developed algorithm generally outperforms the other methods in feature extraction in terms of OA, AA, and kappa, with , , and , respectively. Furthermore, the confusion matrix in Figure 11 also shows that the algorithm can effectively distinguish surface regions.

Table 3.

Classification Accuracy for Houston 2013 Dataset. The red bold fonts and blue italic fonts indicate the best and the second best performance.

Figure 11.

Confusion matrix of the developed model on the Houston 2013 dataset.

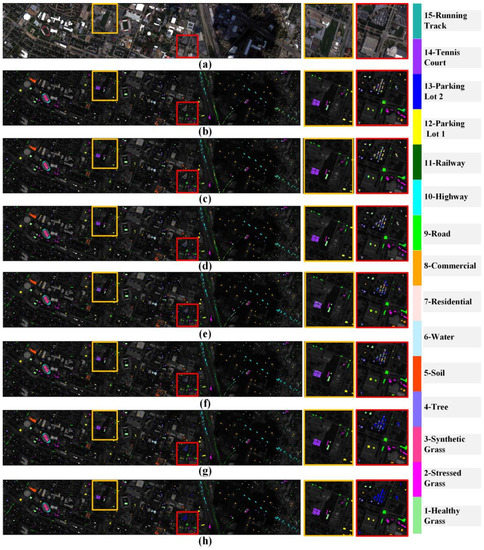

Figure 12 depicts the classification maps generated by the compared approaches on the Houston 2013 dataset. Figure 12a,h show the pseudo-color image and ground truth map, and Figure 12b–g show the corresponding classification results. We label the misclassified regions that contain buildings and cars with yellow and red rectangles, respectively. According to the ground truth, the yellow region contains a small Parking Lot 1 area, but it is misclassified by most of the methods. Specifically, the classification maps of DPL, RNN, and CNN contain many misclassified points, which leads to unsatisfactory classification results. In the red region, the other algorithms show significant misclassification of the Parking Lot 2 class; FDDL, DPL, and ResNet are unable to distinguish between the Parking Lot 2 and Parking Lot 1 classes. In contrast, owing to its robustness to local variation in the spectra, our method produces more complete and correct classification results, with higher accuracy in and around the parking lot. In addition, our method effectively removes salt-and-pepper noise from the classification map while maintaining significant objects and structures.

Figure 12.

Classification maps of the Houston 2013 dataset with the compared approaches: (a) pseudo-color image; (b) FDDL; (c) DPL; (d) ResNet; (e) RNN; (f) CNN; (g) ours; (h) ground truth. The red and yellow rectangles represent the parking lot space and building area.

3.3.4. Houston 2018

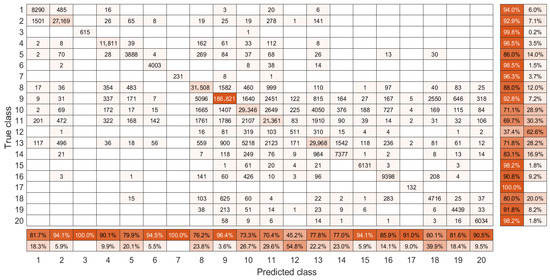

Table 4 and Figure 13 present the classification results and the confusion matrix on the Houston 2018 dataset, respectively. According to the results, the classification accuracy of every method decreases compared with the Houston 2013 dataset. This phenomenon is more obvious for sidewalk and crosswalk classes, where the highest scores are only and , respectively. We believe that the decrease can be attributed to the increase in land cover types and the reduction of spectral information (only 48 spectral bands). In addition, our method ranks first in 9 out of 20 land cover classes and outperforms the other algorithms by at least two points in terms of OA, AA, and kappa. Furthermore, our model also shows significant discriminative ability among the 20 classes according to the confusion matrix illustrated in Figure 13.

Table 4.

Classification Accuracy for Houston 2018 Dataset. The red bold fonts and blue italic fonts indicate the best and the second best performance.

Figure 13.

The confusion matrix of our model on the Houston 2018 dataset.

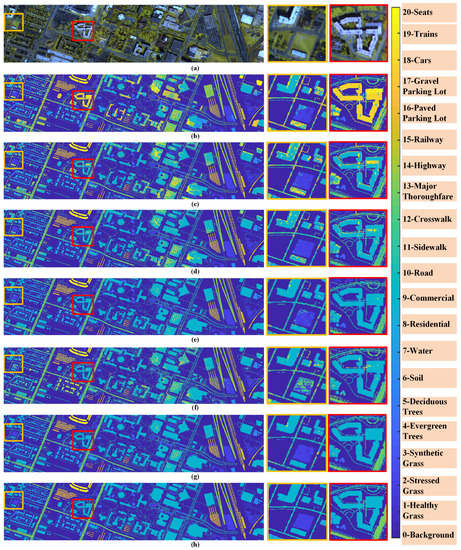

Figure 14 highlights the superiority of the proposed method by means of classification maps on the Houston 2018 dataset. Figure 14a,h show the pseudo-color image and ground truth map, and Figure 14b–g show the corresponding classification results of FDDL, DPL, ResNet, RNN, CNN, and the proposed model. For better visualization, we mark the Commercial, Road, Major Thoroughfares, and Stressed Grass regions with yellow and red rectangles. In the yellow rectangles, FDDL and DPL classify Commercial as the Seats class, and they fail to identify the Road class owing to their poor ability to extract deeper spectral information. In the red rectangle, RNN and CNN misclassify small Road areas as Cars. Moreover, there are some noisy points in the Commercial class. In contrast, our model produces smoother and more complete classification results.

Figure 14.

Classification maps of the Houston 2018 dataset with compared methods: (a) pseudo-color image; (b) FDDL; (c) DPL; (d) ResNet; (e) RNN; (f) CNN; (g) Ours; (h) ground truth. The yellow and red rectangles correspond to grassland and building areas.

According to the experimental results above, our approach achieved the highest OA, AA, and kappa coefficient on four benchmark datasets, which were collected by different sensors and cover various land cover types. Compared with other dictionary-based and CNN-based models, our model generally achieves smoother and more accurate classification results, especially for large areas of homogeneous land cover and for small, irregular areas. These experiments verify that, owing to the rationally designed structured classification layer (dictionary) and loss functions, our model can extract an intrinsic, invariant spectral feature representation from the HSI and thus achieves more effective feature extraction.
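For readers who want to reproduce the three indicators used throughout the comparison, the following is a minimal sketch of how OA, AA, and Cohen's kappa can be computed from a confusion matrix. It uses the standard textbook definitions; the function name `classification_metrics` and the toy matrix are illustrative assumptions and are not taken from the authors' code.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) for a square confusion matrix.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n_classes = len(confusion)
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(row[j] for row in confusion) for j in range(n_classes)]
    total = sum(row_totals)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")

    # Overall accuracy: fraction of all samples on the diagonal.
    oa = sum(confusion[i][i] for i in range(n_classes)) / total

    # Average accuracy: mean of per-class recall.
    # Classes without any true samples are skipped (assumption of this sketch).
    recalls = [
        confusion[i][i] / row_totals[i]
        for i in range(n_classes)
        if row_totals[i] > 0
    ]
    aa = sum(recalls) / len(recalls)

    # Cohen's kappa: agreement corrected for chance agreement.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    # Kappa is undefined when expected agreement is 1; this sketch returns 0.0.
    kappa = (oa - expected) / (1.0 - expected) if expected < 1.0 else 0.0
    return oa, aa, kappa


# Toy two-class example (hypothetical numbers, not from the paper).
toy = [[50, 10], [5, 35]]
oa, aa, kappa = classification_metrics(toy)
print(f"OA={oa:.4f}  AA={aa:.4f}  kappa={kappa:.4f}")
```

Because AA weights every class equally while OA weights every sample equally, the two can diverge noticeably on datasets with many small classes, which is why both are reported alongside kappa.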

4. Discussion

In the last section, we presented the results and analysis of the experiments on each dataset. In this section, we discuss the key factors that affect the performance of the algorithm and need to be considered in practical HSI classification applications.

4.1. Influence of Imbalanced Samples

Chang et al. [47] pointed out that imbalanced training data can significantly affect HSI classification. To explore this effect, we randomly chose 10% of the labeled samples of each class and performed an experiment on the Houston 2013 dataset with imbalanced data, as listed in Table 5. The imbalanced data reduce the classification performance, leading to a decrease of about 3–4% in classification accuracy. Our model still achieves the best performance when the training data are imbalanced, which confirms its strong classification ability.

Table 5.

Classification Results for Imbalanced Data on Houston 2013. The red bold fonts and blue italic fonts indicate the best and the second best performance.
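The sampling protocol described above (a fixed fraction of every class, so that the training set inherits the class imbalance of the labeled data) can be sketched as follows. This is an illustrative reconstruction under stated assumptions: the function name `sample_fraction_per_class`, the fixed seed, and the rule of keeping at least one training sample per class are choices of this sketch, not details given in the paper.

```python
import random
from collections import defaultdict


def sample_fraction_per_class(labels, fraction=0.10, seed=0):
    """Split sample indices into train/test, taking `fraction` of each class.

    Taking the same fraction from every class keeps the original class
    proportions, so large classes stay large and small classes stay small.
    """
    rng = random.Random(seed)

    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        # Assumption of this sketch: keep at least one training sample per class.
        n_train = max(1, round(fraction * len(indices)))
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return sorted(train), sorted(test)


# Toy labels with deliberately unequal class sizes (hypothetical data).
labels = [0] * 100 + [1] * 30 + [2] * 10
train_idx, test_idx = sample_fraction_per_class(labels, fraction=0.10)
print(len(train_idx), len(test_idx))
```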

4.2. Influence of Small Training Samples

To confirm the effectiveness of our framework in practical scenarios where labeled samples are scarce, we reduced the number of training samples to 10, 20, and 30 per class. As listed in Table 6, the models generally suffer from unstable classification performance across classes. Overall, the proposed model achieves the best results on most indices, with an increase of at least 4% in accuracy compared with CNN-based models. As expected, the classification results improve as the number of training samples increases.

Table 6.

Classification Results for a Small Number of Training Samples on Houston 2013. The red bold fonts and blue italic fonts indicate the best and the second best performance.
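One way to set up such a small-sample experiment is sketched below. The paper does not state how the 10-, 20-, and 30-sample subsets relate to each other; this sketch assumes nested subsets (the 10-sample set is contained in the 20-sample set, and so on), so that differences between budgets come only from the added samples. The function name `nested_training_sets` and the seed are hypothetical.

```python
import random
from collections import defaultdict


def nested_training_sets(labels, budgets=(10, 20, 30), seed=0):
    """Return {budget: (train_indices, test_indices)} with nested train sets.

    Each class is shuffled once; the first n indices of that shuffle form
    the training samples for budget n, and the rest are used for testing.
    """
    rng = random.Random(seed)

    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    largest = max(budgets)
    shuffled = {}
    for label, indices in by_class.items():
        # Require at least one test sample per class at the largest budget.
        if len(indices) <= largest:
            raise ValueError(
                f"class {label!r} has only {len(indices)} samples, "
                f"need more than {largest}"
            )
        order = indices[:]
        rng.shuffle(order)
        shuffled[label] = order

    splits = {}
    for n in budgets:
        train = sorted(i for order in shuffled.values() for i in order[:n])
        test = sorted(i for order in shuffled.values() for i in order[n:])
        splits[n] = (train, test)
    return splits


# Toy example: 3 classes with 50 samples each (hypothetical data).
labels = [c for c in range(3) for _ in range(50)]
for n, (train_idx, test_idx) in nested_training_sets(labels).items():
    print(n, len(train_idx), len(test_idx))
```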

4.3. Computational Cost

Another factor that affects the practical application of an HSI classification model is the efficiency of the algorithm. Therefore, we report the computation time of the compared algorithms.

All tests were performed on a desktop with an Intel Core i7-8700 CPU (3.20 GHz) and 16 GB of memory, and were implemented in Python on the Windows 10 operating system. Table 7 shows that the developed model runs more slowly than DPL, which applies a linear projection to extract spectral features. However, the developed model achieves a faster testing speed than the CNN-based models owing to its simpler convolutional structure.

Table 7.

Computational Costs for Different Classification Methods (In Seconds).
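The paper does not describe how the running times were measured. A common, minimal way to time a training or testing call in Python is sketched below using the standard-library `time.perf_counter`; the helper `time_call` and the stand-in function `dummy_predict` are hypothetical and only illustrate the measurement pattern.

```python
import time


def time_call(fn, *args, repeats=5, **kwargs):
    """Run fn(*args, **kwargs) `repeats` times.

    Returns (best_seconds, result_of_last_call). Reporting the best of
    several runs reduces the influence of background system activity.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    best = float("inf")
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)
    return best, result


def dummy_predict(samples):
    """Stand-in for a classifier's test-time call (hypothetical)."""
    return [sum(spectrum) > 0 for spectrum in samples]


# 1000 fake pixels with 46 bands each (the band count mentioned for Houston 2018).
samples = [[0.01 * band for band in range(46)] for _ in range(1000)]
seconds, predictions = time_call(dummy_predict, samples, repeats=3)
print(f"testing time: {seconds:.6f} s for {len(predictions)} samples")
```

When methods are compared this way, training and testing should be timed separately and on the same hardware, since a model that is slow to train may still be fast at test time.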

5. Conclusions

In this paper, we propose a novel deep learning-based structure dictionary model to extract spectral features from HSIs. Specifically, a residual network is combined with a dictionary learning framework to strengthen the feature representation of the original data. Sub-dictionaries with shared constraints are then introduced to extract the common features of samples with different class labels. Moreover, three kinds of loss functions are combined to enhance the discriminative ability of the overall model. Numerous tests were carried out on HSI datasets, and the qualitative and quantitative results showed that the proposed feature learning model requires much less computation time than other SVM- and CNN-based models, demonstrating its potential and superiority in HSI classification tasks.

Author Contributions

Funding acquisition, Y.H. and C.D.; Methodology, W.W. and Z.L.; Supervision, C.D.; Validation, Z.L.; Writing original draft, W.W. and Y.H. All authors have read and agreed to the published version of this manuscript.

Funding

This study was supported by the China Postdoctoral Science Foundation under Grant 2021TQ0177 and the National Natural Science Foundation of China (NSFC) under Grant 62171040.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found in references [38,39,40,41].

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A.J. Advanced spectral classifiers for hyperspectral images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32.

- Hong, D.; Yokoya, N.; Chanussot, J.; Zhu, X.X. An augmented linear mixing model to address spectral variability for hyperspectral unmixing. IEEE Trans. Image Process. 2018, 28, 1923–1938.

- Li, J.; Huang, X.; Gamba, P.; Bioucas-Dias, J.M.; Zhang, L.; Benediktsson, J.A.; Plaza, A. Multiple feature learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1592–1606.

- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More diverse means better: Multimodal deep learning meets remote-sensing imagery classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.

- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. Joint and progressive subspace analysis (JPSA) with spatial-spectral manifold alignment for semi-supervised hyperspectral dimensionality reduction. IEEE Trans. Cybern. 2021, 51, 3602–3615.

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph convolutional networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5966–5978.

- Hong, D.; Gao, L.; Yao, J.; Yokoya, N.; Chanussot, J.; Heiden, U.; Zhang, B. Endmember-guided unmixing network (EGU-Net): A general deep learning framework for self-supervised hyperspectral unmixing. IEEE Trans. Neural Netw. Learn. Syst. 2021.

- Liu, X.; Deng, C.; Chanussot, J.; Hong, D.; Zhao, B. StfNet: A two-stream convolutional neural network for spatiotemporal image fusion. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6552–6564.

- Kumar, S.; Ghosh, J.; Crawford, M.M. Best-bases feature extraction algorithms for classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1368–1379.

- Rashwan, S.; Dobigeon, N. A split-and-merge approach for hyperspectral band selection. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1378–1382.

- Jolliffe, I.T. Principal component analysis. Technometrics 2003, 45, 276.

- Senthilnath, J.; Omkar, S.; Mani, V.; Karnwal, N.; Shreyas, P. Crop stage classification of hyperspectral data using unsupervised techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 6, 861–866.

- Green, A.A.; Berman, M.; Switzer, P.; Craig, M.D. A transformation for ordering multispectral data in terms of image quality with implications for noise removal. IEEE Trans. Geosci. Remote Sens. 1988, 26, 65–74.

- Schölkopf, B.; Smola, A.; Müller, K.R. Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput. 1998, 10, 1299–1319.

- Mei, F.; Zhao, C.; Wang, L.; Huo, H. Anomaly detection in hyperspectral imagery based on kernel ICA feature extraction. In Proceedings of the 2008 Second International Symposium on Intelligent Information Technology Application, Shanghai, China, 20–22 December 2008; Volume 1, pp. 869–873.

- Fauvel, M.; Chanussot, J.; Benediktsson, J.A. Kernel principal component analysis for the classification of hyperspectral remote sensing data over urban areas. EURASIP J. Adv. Signal Process. 2009, 2009, 1–14.

- Marchesi, S.; Bruzzone, L. ICA and kernel ICA for change detection in multispectral remote sensing images. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; Volume 2.

- Cortes, C.; Vapnik, V. Support vector networks. Mach. Learn. 1995, 20, 273–297.

- Camps-Valls, G.; Bruzzone, L. Kernel-based methods for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1351–1362.

- Bruzzone, L.; Chi, M.; Marconcini, M. A novel transductive SVM for semisupervised classification of remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 3363–3373.

- Zhao, C.; Liu, W.; Xu, Y.; Wen, J. A spectral-spatial SVM-based multi-layer learning algorithm for hyperspectral image classification. Remote Sens. Lett. 2018, 9, 218–227.

- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep learning for hyperspectral image classification: An overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep learning-based classification of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- Chen, Y.; Zhao, X.; Jia, X. Spectral–spatial classification of hyperspectral data based on deep belief network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2381–2392.

- Shi, C.; Pun, C.M. Multi-scale hierarchical recurrent neural networks for hyperspectral image classification. Neurocomputing 2018, 294, 82–93.

- Hang, R.; Liu, Q.; Hong, D.; Ghamisi, P. Cascaded recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5384–5394.

- Rasti, B.; Hong, D.; Hang, R.; Ghamisi, P.; Kang, X.; Chanussot, J.; Benediktsson, J.A. Feature extraction for hyperspectral imagery: The evolution from shallow to deep: Overview and toolbox. IEEE Geosci. Remote Sens. Mag. 2020, 8, 60–88.

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 210–227.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- Gao, L.; Yu, H.; Zhang, B.; Li, Q. Locality-preserving sparse representation-based classification in hyperspectral imagery. J. Appl. Remote Sens. 2016, 10, 042004.

- Yang, M.; Zhang, L.; Yang, J.; Zhang, D. Metaface learning for sparse representation based face recognition. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 1601–1604.

- Yang, M.; Zhang, L.; Feng, X.; Zhang, D. Fisher discrimination dictionary learning for sparse representation. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 543–550.

- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Projective dictionary pair learning for pattern classification. Adv. Neural Inf. Process. Syst. 2014, 27.

- Akhtar, N.; Mian, A. Nonparametric coupled Bayesian dictionary and classifier learning for hyperspectral classification. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 4038–4050.

- Tu, X.; Shen, X.; Fu, P.; Wang, T.; Sun, Q.; Ji, Z. Discriminant sub-dictionary learning with adaptive multiscale superpixel representation for hyperspectral image classification. Neurocomputing 2020, 409, 131–145.

- Tang, H.; Liu, H.; Xiao, W.; Sebe, N. When dictionary learning meets deep learning: Deep dictionary learning and coding network for image recognition with limited data. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 2129–2141.

- Tao, L.; Zhou, Y.; Jiang, X.; Liu, X.; Zhou, Z. Convolutional neural network-based dictionary learning for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1776–1780.

- Liu, Q.; Zhou, F.; Hang, R.; Yuan, X. Bidirectional-convolutional LSTM based spectral-spatial feature learning for hyperspectral image classification. Remote Sens. 2017, 9, 1330.

- Yang, H.L.; Crawford, M.M. Spectral and spatial proximity-based manifold alignment for multitemporal hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 51–64.

- Debes, C.; Merentitis, A.; Heremans, R.; Hahn, J.; Frangiadakis, N.; Kasteren, T.; Liao, W.; Bellens, R.; Pižurica, A.; Gautama, S. Hyperspectral and LiDAR Data Fusion: Outcome of the 2013 GRSS Data Fusion Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2405–2418.

- Xu, Y.; Du, B.; Zhang, L.; Cerra, D.; Pato, M.; Carmona, E.; Prasad, S.; Yokoya, N.; Hänsch, R.; Le Saux, B. Advanced Multi-Sensor Optical Remote Sensing for Urban Land Use and Land Cover Classification: Outcome of the 2018 IEEE GRSS Data Fusion Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1709–1724.

- Kong, S.; Wang, D. A brief summary of dictionary learning based approach for classification (revised). arXiv 2012, arXiv:1205.6544.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Luo, W.; Li, Y.; Urtasun, R.; Zemel, R. Understanding the effective receptive field in deep convolutional neural networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.

- Sinha, B.; Yimprayoon, P.; Tiensuwan, M. Cohen’s Kappa Statistic: A Critical Appraisal and Some Modifications. Math. Calcutta Stat. Assoc. Bull. 2006, 58, 151–170.

- Chang, C.I.; Ma, K.Y.; Liang, C.C.; Kuo, Y.M.; Chen, S.; Zhong, S. Iterative random training sampling spectral spatial classification for hyperspectral images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3986–4007.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).