1. Introduction

The Earth is constantly under threat from natural disasters such as wildfires, floods, and earthquakes. These disasters can cause extensive damage that may extend across regions. Of these, earthquakes have the greatest impact on human settlements, particularly in urban areas [1]. Therefore, monitoring and assessing damage levels in urban areas is vital for understanding this type of disaster [2].

Buildings make up a large portion of urban areas, and it is crucial that damaged buildings be properly identified after earthquakes [3]. In past decades, remote sensing (RS) has played a key role in providing wide-coverage surface data at minimal cost and time. As a result, RS data are used in many applications such as change detection [4], forest monitoring [5], soil monitoring [6], and crop mapping [7]. Another important application is damage detection and assessment, which makes use of RS datasets such as Light Detection and Ranging (Lidar) [8], nightlight datasets [9], multispectral datasets, Synthetic Aperture Radar (SAR) [3,10], and optical very high-resolution imagery [2,11]. Although these data types can be used for damage assessment, they have limitations:

SAR imagery has high backscatter in built-up areas and suffers from low temporal and spatial resolution. Furthermore, it is difficult to interpret and detect similar objects.

Nightlight data are deployed in rapid damage assessment scenarios. They suffer, however, from low spatial resolution. Furthermore, nightlight data can be affected by external factors that increase the potential for false alarms and missed detections.

Multispectral data have high spectral and temporal resolutions that can help detect damaged areas. However, such datasets also have low spatial resolution, which makes damage detection for individual buildings difficult.

Optical very high resolution (VHR) data are the most common type of dataset for building damage detection, as they make damaged areas easier to interpret and process. A common issue with this kind of data is that only damage to building roofs can be detected. Shadows are also reported to affect assessment results.

Lidar data are utilized widely in building damage assessment. The major limitation of these data is that they are difficult to interpret.

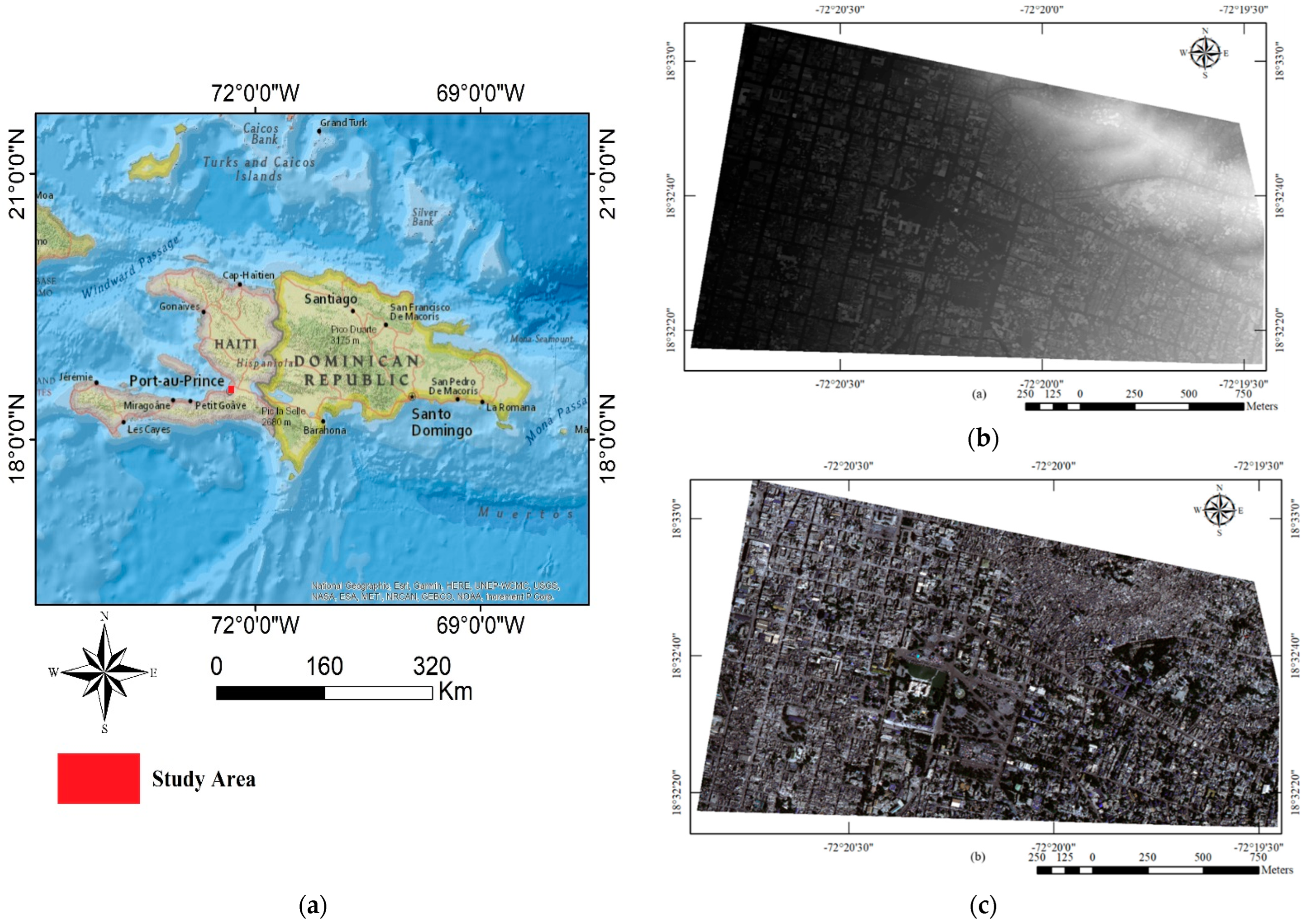

It is worth noting that optical and Lidar data have their own strengths and weaknesses in different scenarios. These are summarized in Figure 1, where the detection capability of each dataset depends on the type of detection required. We foresee that fusing both types of dataset can mitigate the limitations of each. Hence, this research explores the advantages of fusing optical VHR RS and Lidar datasets for mapping building damage.

According to [12], fusion can take place at three levels: (1) the pixel level, (2) the feature level, and (3) the decision level. In the literature, feature- and decision-level fusion are more commonly utilized for damage assessment [13].

As the name implies, pixel-level fusion combines both datasets at the pixel level; a well-known example is pansharpening. The gist is to enhance the spatial resolution of a low-resolution dataset by combining it with a higher-resolution panchromatic dataset. This process can be applied using either linear or non-linear transformation-based methods.
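To make the idea concrete, the following minimal sketch applies a Brovey-style ratio, one simple and widely known pansharpening variant, to a toy scene. It is only an illustration: the function name `brovey_pansharpen`, the nested-list data layout, and the sample values are assumptions, and the multispectral bands are assumed to be already resampled to the panchromatic grid.

```python
def brovey_pansharpen(ms_bands, pan, eps=1e-12):
    """Brovey-style pansharpening on nested lists (illustrative only).

    ms_bands: list of 2-D lists, one per multispectral band, assumed to be
              already resampled to the panchromatic grid.
    pan:      2-D list holding the panchromatic band.
    """
    rows, cols = len(pan), len(pan[0])
    fused = [[[0.0] * cols for _ in range(rows)] for _ in ms_bands]
    for r in range(rows):
        for c in range(cols):
            # Scale every band by pan / (sum of bands) at this pixel.
            total = sum(band[r][c] for band in ms_bands)
            ratio = pan[r][c] / (total + eps)
            for b, band in enumerate(ms_bands):
                fused[b][r][c] = band[r][c] * ratio
    return fused


# Hypothetical 1 x 2 scene with two multispectral bands.
ms = [[[2.0, 1.0]], [[2.0, 3.0]]]
pan = [[8.0, 2.0]]
sharp = brovey_pansharpen(ms, pan)
```

In this scheme the fused bands keep their relative proportions at each pixel, while their sum follows the panchromatic intensity.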

Feature-level fusion is widely used in classification, damage assessment, and change detection tasks. At this level, features are extracted from each modality and then ‘stacked’ to form the final representation. The more popular features used in RS work are: (1) spatial features, including texture features such as the Gray-level Co-occurrence Matrix (GLCM) [14] and the Gabor filter [15], as well as morphological attribute profiles (MAP) [16]; (2) spectral features obtained through linear and non-linear transformations, such as Principal Component Analysis (PCA) and the Normalized Difference Vegetation Index (NDVI); and (3) deep features, which are obtained through Convolutional Neural Networks (CNN).
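As a small illustration of stacking, the sketch below computes one spectral feature from an optical image, NDVI = (NIR − Red) / (NIR + Red), and stacks it with a Lidar-derived height map into a per-pixel feature vector. The helper names `ndvi` and `stack_features` and all sample values are hypothetical; a real workflow would typically operate on co-registered raster arrays rather than nested lists.

```python
def ndvi(nir, red, eps=1e-12):
    """NDVI = (NIR - Red) / (NIR + Red), computed per pixel."""
    return [
        [(n - r) / (n + r + eps) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]


def stack_features(*feature_maps):
    """Stack co-registered 2-D feature maps into one vector per pixel."""
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [[fm[r][c] for fm in feature_maps] for c in range(cols)]
        for r in range(rows)
    ]


# Hypothetical 1 x 2 scene: optical reflectances and Lidar heights (m).
nir = [[0.6, 0.2]]
red = [[0.2, 0.2]]
height = [[12.0, 0.5]]

stacked = stack_features(ndvi(nir, red), height)
# Each pixel now carries [ndvi, height], ready for a classifier.
```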

Decision-level fusion takes place when the results from more than one learning algorithm are fused [12,17]. Two types of fusion exist: (1) hard fusion, where the final classification result is determined from hard labels through methods such as majority voting, Borda count, and Bayesian fusion [18]; and (2) soft fusion, where the final decision is based on the probability of obtaining results (-value), with many methods proposed to this end, such as fuzzy fusion [19] or Dempster-Shafer (DS) fusion [20]. Since decision-level fusion requires the results of various classifiers, possibly from multi-source datasets, it can be time-consuming. Furthermore, at least two classifiers need to be tuned, as well as the parameters of the fusion algorithm.
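The difference between hard and soft fusion can be sketched with three hypothetical classifiers that each output class probabilities for one building. Hard fusion is shown as majority voting; for soft fusion, plain probability averaging is used here as a simple stand-in, not the fuzzy or DS fusion cited above. All names and numbers are assumptions for illustration.

```python
from collections import Counter


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)


def hard_fusion(prob_vectors):
    """Majority vote over each classifier's hard label."""
    votes = [argmax(p) for p in prob_vectors]
    return Counter(votes).most_common(1)[0][0]


def soft_fusion(prob_vectors):
    """Average the class probabilities, then pick the most probable class."""
    n = len(prob_vectors)
    mean = [sum(column) / n for column in zip(*prob_vectors)]
    return argmax(mean), mean


# Hypothetical outputs: [P(non-damaged), P(damaged)] from three classifiers.
probs = [[0.55, 0.45], [0.55, 0.45], [0.05, 0.95]]

hard_label = hard_fusion(probs)              # two weak votes win
soft_label, mean_probs = soft_fusion(probs)  # one confident vote dominates
```

With these numbers the two strategies disagree: majority voting follows the two marginal votes, while averaging is swayed by the single confident classifier.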

Among the types of fusion methods, feature-level fusion can be more compatible with Lidar and optical datasets, and it has low complexity in comparison to decision-level fusion. A disadvantage of traditional feature-level fusion algorithms is the difficulty of extracting suitable features: building damage mapping with these algorithms requires informative feature generation followed by feature selection, which is a time-consuming process. To this end, this research utilizes the advantages of feature-level fusion for generating building damage maps. The main purpose of this research is to take advantage of both Lidar and optical datasets for earthquake-induced building damage mapping in order to minimize the above-mentioned challenges. Thus, a multi-stream deep feature extractor method based on the CNN algorithm is proposed for building damage mapping using post-earthquake Lidar and optical data. In the proposed method, the deep features extracted for buildings are integrated through a fusion strategy and passed to a Multilayer Perceptron (MLP) classifier, which makes the final decision on whether a building is damaged.

The wide availability of RS datasets has led to the proposal of several damage assessment methods and frameworks. For building damage mapping, the most common datasets include SAR, optical VHR, nightlight, Lidar, and multispectral datasets. For instance, Adriano et al. [21] proposed a multimodal building damage detection framework by combining SAR, optical, and multi-temporal datasets. A U-Net architecture with two branches was used to detect building damage. In the encoder phase of the architecture, they used the optical and SAR datasets. The extracted deep features were fused at the pixel level and used in the decoder phase to generate the damage map. Additionally, Gokaraju et al. [22] proposed a change detection framework for disaster damage assessment based on multi-sensor data fusion. This study utilized a SAR dataset together with multispectral and panchromatic datasets. Specifically, several features such as multi-polarized radiometric and textural features were extracted, and then multivariate conditional copulas were utilized for binary classification to generate a binary damage map. In another work based on change detection, Trinder and Salah [23] fused bi-temporal aerial and Lidar datasets. They performed change detection using methods such as post-classification, image differencing, PCA, and minimum noise fraction. In the end, a simple majority vote was used for damage map generation. Finally, Hajeb et al. [24] proposed a building damage assessment framework based on integrating pre- and post-earthquake Lidar and SAR datasets. They first performed texture feature extraction on the Lidar dataset, and then change was detected on the original Lidar datasets and the extracted features. A coherence map was later generated, followed by coherence change detection on the SAR datasets. Finally, the damage map was generated through supervised classification with Random Forest (RF) and Support Vector Machine (SVM) classifiers.

The work in this paper is proposed based on the following motivations:

- (1) Most of the previous works had the researchers determine the relevant features manually.

- (2) Decision-level fusion methods can be difficult to implement and require many considerations. Additionally, the source data need to be classified separately, and the results are fused only at the end. An incorrect initial classification can therefore compromise the whole assessment task.

- (3) Most previous frameworks employ “traditional” machine learning methods. However, the performance of deep learning methods has been proven by many studies.

- (4) Most feature-level fusion methods are applied in many steps, which is time-consuming.

- (5) Most feature-level fusion methods are based on pre/post-event images, but it is difficult to obtain post-event imagery. Furthermore, multi-source datasets have improved the performance of classification and damage detection significantly.

- (6) Change detection-based damage assessment has shown promising results, but removing the effect of non-target changes (atmospheric conditions, man-made changes) is the most challenging aspect. To this end, it is necessary to propose a novel algorithm to minimize these challenges and improve the results of building damage detection.

Based on the reviewed studies, multi-source data can be integrated and used for building damage mapping. Among different data sources, SAR imagery has many advantages, such as operating in all weather conditions and penetrating clouds and rain. However, it suffers from noise, can be difficult to interpret, and has low spatial and spectral resolution. Based on Figure 1, optical and Lidar are shown to be complementary datasets for evaluating the damage to a building. Therefore, fusing these datasets is expected to improve the accuracy and quality of the resulting damage maps, provided a suitable fusion strategy is employed. Choosing a suitable fusion strategy depends on several factors, which will be explained in the next section.

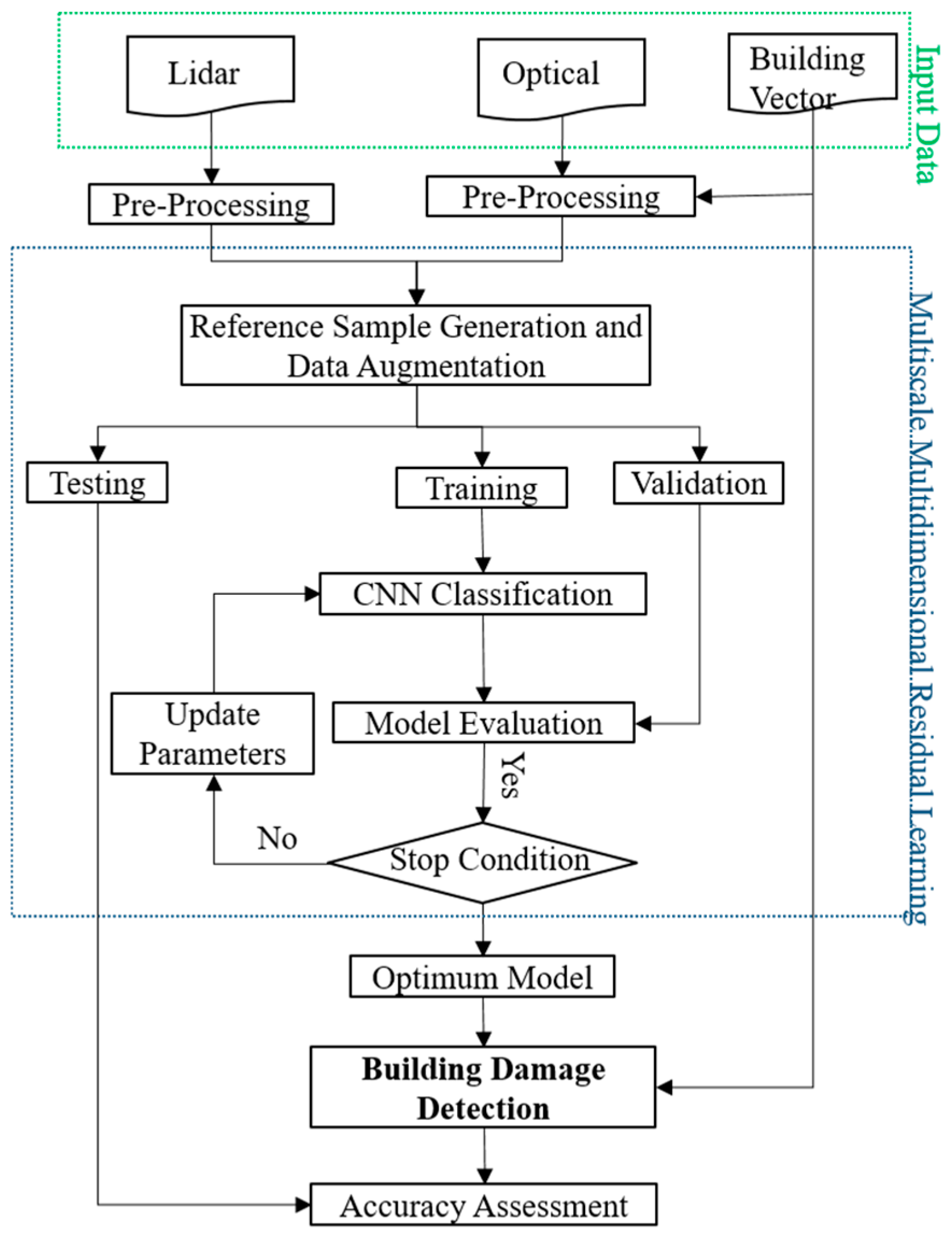

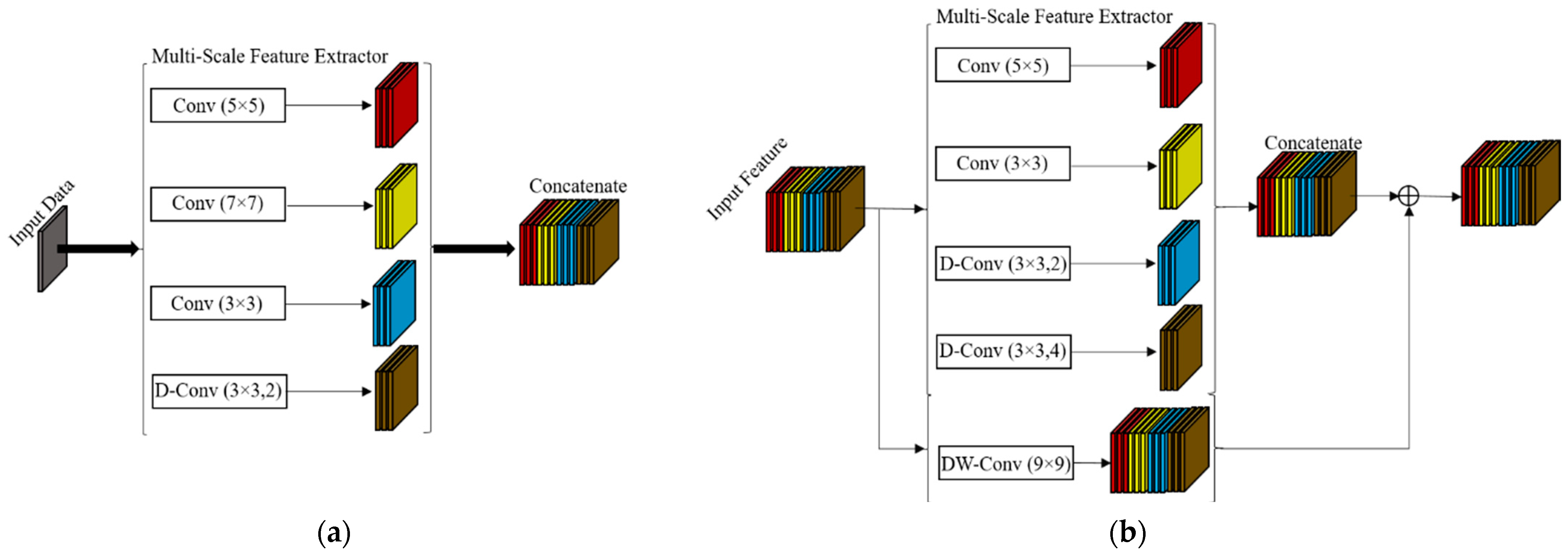

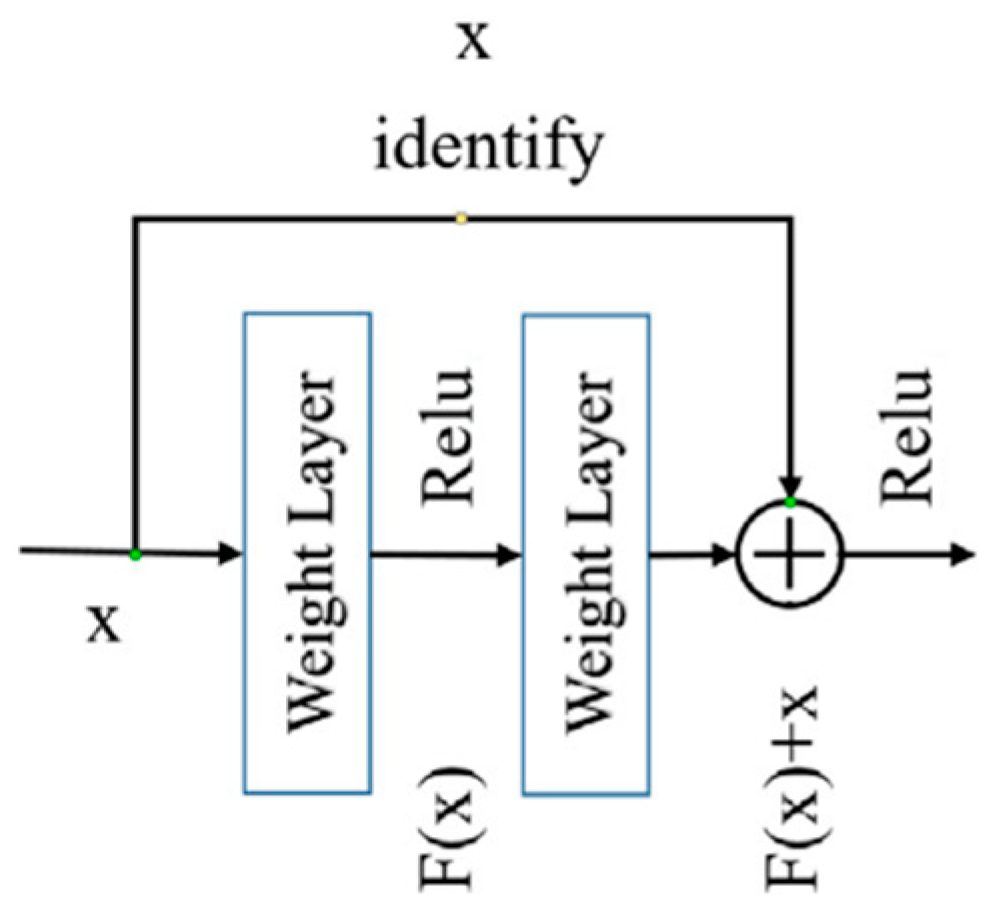

This research proposes a new framework for building damage detection based on deep learning. The proposed network, termed BDD-Net, is an end-to-end framework for damage assessment. It has three channels: two extract deep features from the optical and Lidar datasets, and the third is a fusion channel that fuses the deep features extracted by the first two. The main contributions of this research are to: (1) present a novel end-to-end fusion framework for building damage assessment based on deep learning, (2) propose a framework that takes advantage of residual multi-scale dilated kernel convolution and depth-wise kernel convolution, and (3) evaluate the performance on each dataset individually and compare it with that of the fused BDD-Net.
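The notion of a residual multi-scale dilated convolution can be illustrated in one dimension. The sketch below is not the BDD-Net implementation: the function names, the fixed kernel, the dilation rates, and the choice to sum (rather than concatenate) the branches are all assumptions, and an actual network would use learned 2-D kernels.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """'Same'-size 1-D cross-correlation with zero padding.

    Assumes an odd-length kernel; taps are spaced `dilation` samples apart.
    """
    half = (len(kernel) // 2) * dilation
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i - half + k * dilation
            if 0 <= j < len(signal):  # samples outside the signal count as 0
                acc += w * signal[j]
        out.append(acc)
    return out


def residual_multiscale_block(signal, kernel, dilations=(1, 2, 3)):
    """Sum responses at several dilation rates, then add the input back."""
    branches = [dilated_conv1d(signal, kernel, d) for d in dilations]
    fused = [sum(values) for values in zip(*branches)]
    return [x + f for x, f in zip(signal, fused)]


impulse = [0, 0, 0, 1, 0, 0, 0]
response = dilated_conv1d(impulse, [1, 1, 1], dilation=2)
block_out = residual_multiscale_block(impulse, [1, 1, 1])
```

With a three-tap kernel, dilation rates 1, 2, and 3 together cover seven samples around each position, which is how dilation enlarges the receptive field without adding kernel weights; the residual term keeps the original signal available to later layers.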

4. Discussion

4.1. Summary of Performance of BDD-Net in Different Scenarios

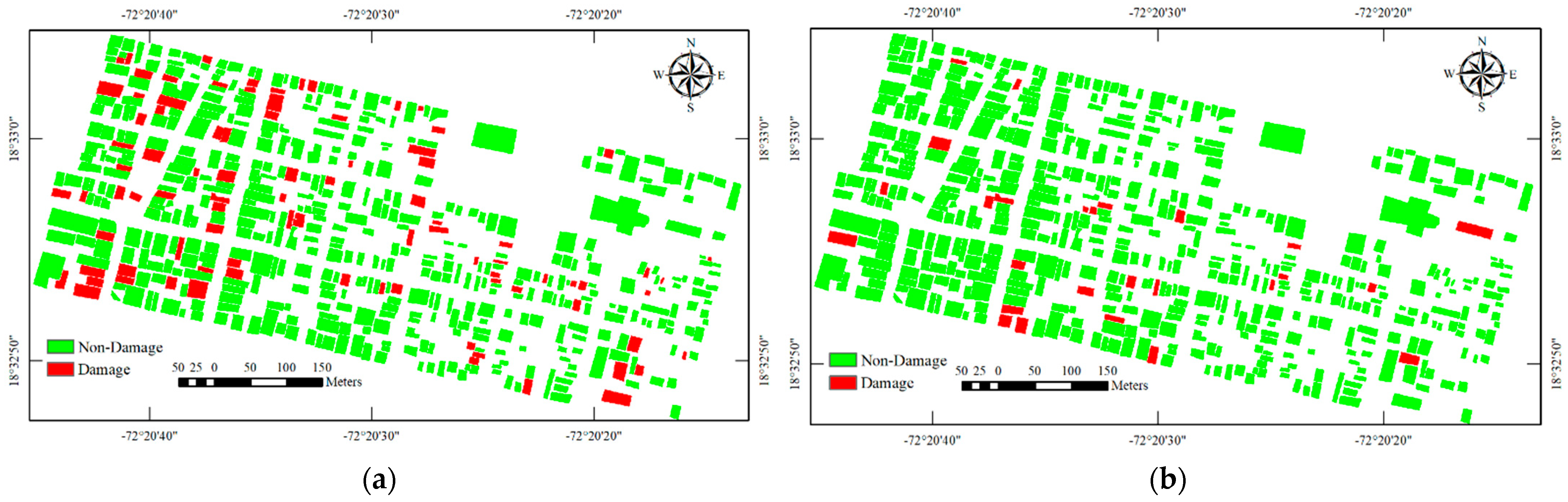

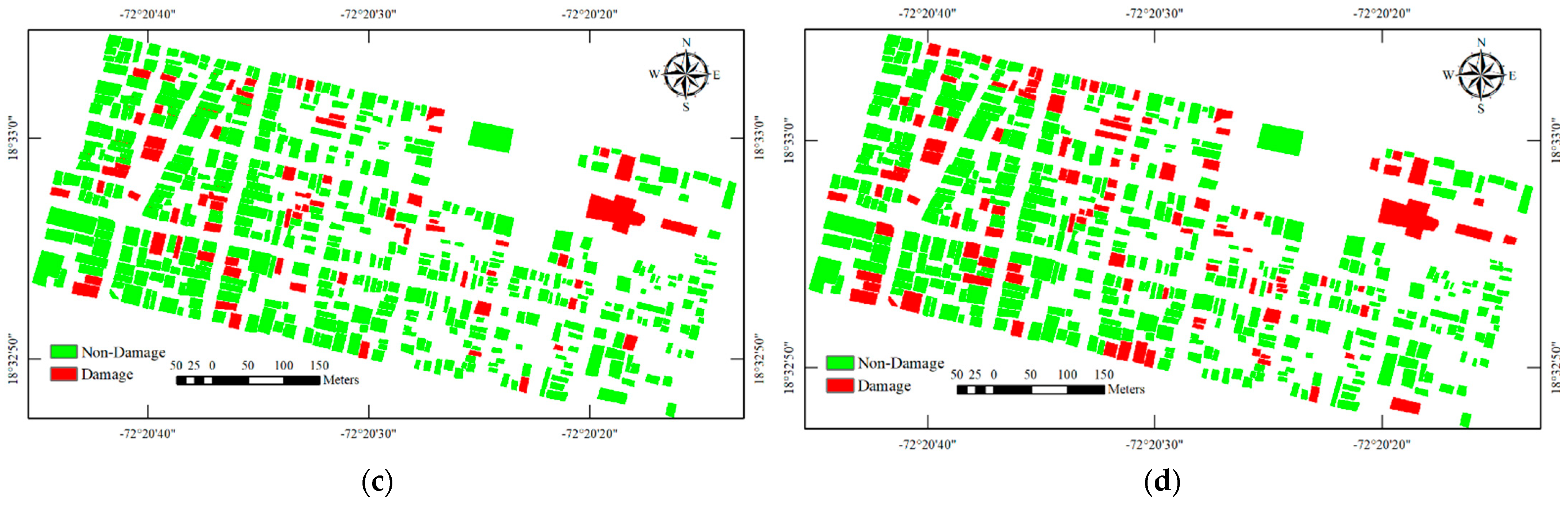

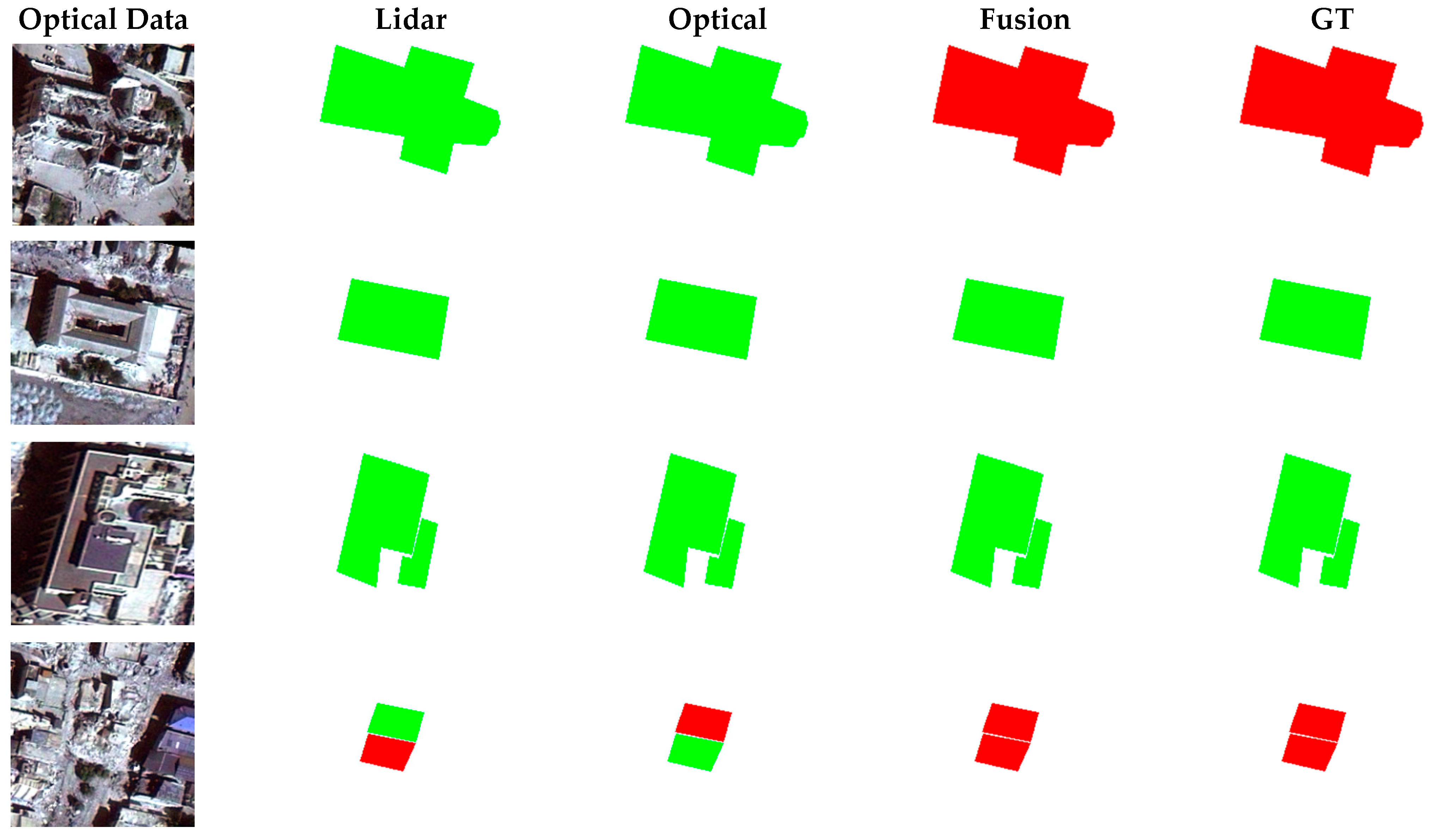

We have considered the problem of building damage detection (BDD) by taking either a single-modality approach (using optical or Lidar data alone) or a fusion approach in which both modalities are considered simultaneously. As per the results in Table 6 and Table 7, as well as the visual results in Figure 10, both single and fused modalities produced an accuracy of over 80%. However, the highest accuracy, even in the test area, was achieved through the fusion strategy. The effectiveness of fusion using BDD-Net is also reflected in the Matthews correlation coefficient (MCC), which is higher than 0.6. The justification for using the fusion strategy is further strengthened by the fact that BDD-Net fails to detect damaged buildings when using optical data alone (but is able to detect non-damaged buildings).
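The reason for reporting MCC alongside accuracy can be seen from a small calculation. The sketch below computes overall accuracy (OA) and MCC from binary confusion-matrix counts; the counts are hypothetical and are not results from this study.

```python
import math


def overall_accuracy(tp, tn, fp, fn):
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient; returns 0.0 if undefined."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical balanced case: both classes are detected reasonably well.
oa_balanced = overall_accuracy(tp=40, tn=45, fp=5, fn=10)
mcc_balanced = mcc(tp=40, tn=45, fp=5, fn=10)

# Hypothetical degenerate case: every building is labelled non-damaged.
oa_degenerate = overall_accuracy(tp=0, tn=90, fp=0, fn=10)
mcc_degenerate = mcc(tp=0, tn=90, fp=0, fn=10)
```

In the degenerate case the accuracy is still 0.9 because non-damaged buildings dominate, whereas MCC drops to 0, exposing the failure to detect any damaged building.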

4.2. Sample Data and Training Process

Training a supervised deep learning model normally requires huge amounts of quality sample data to enable the model to converge optimally [47,48]. However, collecting and labelling huge data samples can be time-consuming and labor-intensive. Therefore, our approach makes use of data augmentation, a process that adds artificial samples to the training data via specific geometric transformations. In all, only 603 polygons were used to train the model, which is a considerably small sample size.
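A minimal sketch of geometric augmentation is given below. The specific transformations used in this work are not listed in this section, so the flips and 90° rotations shown here, as well as the function names, are assumptions chosen for illustration.

```python
def flip_horizontal(patch):
    """Mirror the patch left to right."""
    return [row[::-1] for row in patch]


def flip_vertical(patch):
    """Mirror the patch top to bottom."""
    return [row[:] for row in patch[::-1]]


def rotate90(patch):
    """Rotate the patch 90 degrees clockwise."""
    return [list(row) for row in zip(*patch[::-1])]


def augment(patch):
    """Return the original patch plus flipped and rotated copies."""
    samples = [patch, flip_horizontal(patch), flip_vertical(patch)]
    rotated = patch
    for _ in range(3):  # 90, 180, and 270 degrees
        rotated = rotate90(rotated)
        samples.append(rotated)
    return samples


patch = [[1, 2], [3, 4]]  # hypothetical 2 x 2 building patch
samples = augment(patch)
```

When two modalities are used, the same transformation would need to be applied to the optical and Lidar patches of a building so that they stay aligned.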

Recently, some state-of-the-art methods based on semantic segmentation have been utilized for damage detection. For example, Gupta and Shah [49] proposed a building damage assessment framework (RescueNet) based on pre/post-event high-resolution optical datasets. These methods provide promising results in damage map generation, but they require a very large sample dataset for training. Collecting a large amount of reference sample data for such a problem is very difficult. Furthermore, training semantic segmentation models such as DeepLabv3+ [50], U-Net++ [51], and Rethinking BiSeNet [52] requires more time. Additionally, these frameworks need advanced processing tools for training the model and optimizing the hyperparameters. Thus, semantic segmentation methods are not cost-effective for BDD over small areas: training the proposed BDD-Net takes under 4 h, whereas the semantic segmentation methods require more time.

4.3. Generalization of BDD-Net

This research evaluated the performance of BDD-Net in two scenarios: (1) on the sample data and (2) on test areas. The buildings of the test areas do not contribute to the training of the model. The buildings in the test areas differ from those in the collected sample areas in some parameters (size, color, and elevation). Figure 11 shows some sample buildings that differ considerably from the buildings in the sample data.

Based on numerical and visual analysis, BDD-Net manages to perform well even for the test areas. A fusion of optical and Lidar seems to be the best strategy, where a high OA of more than 88% was reported. The good performance of BDD-Net on the test areas might be a good indication that it will be able to generalize well on unseen data.

4.4. Feature Extraction

Feature- and decision-level fusions are the most common strategies when dealing with multi-source remote sensing imagery. The BDD-Net framework is applied end-to-end and generates deep features from three feature extractor channels. BDD-Net can extract deep features from both the Lidar and optical modalities, while the third channel focuses on integrating the extracted features to obtain more details.

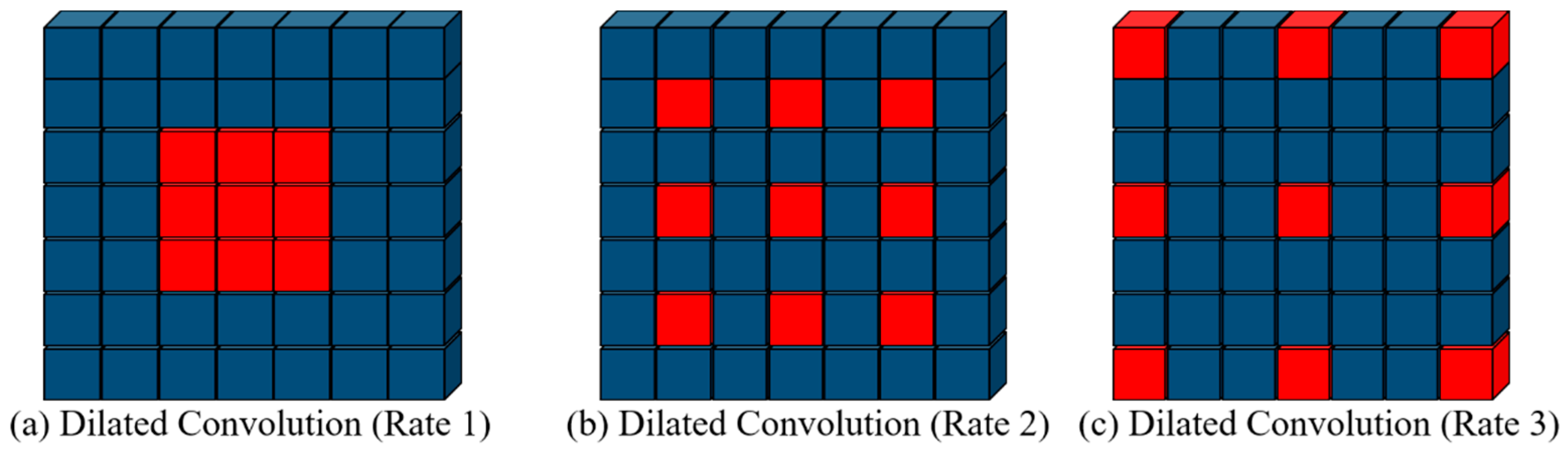

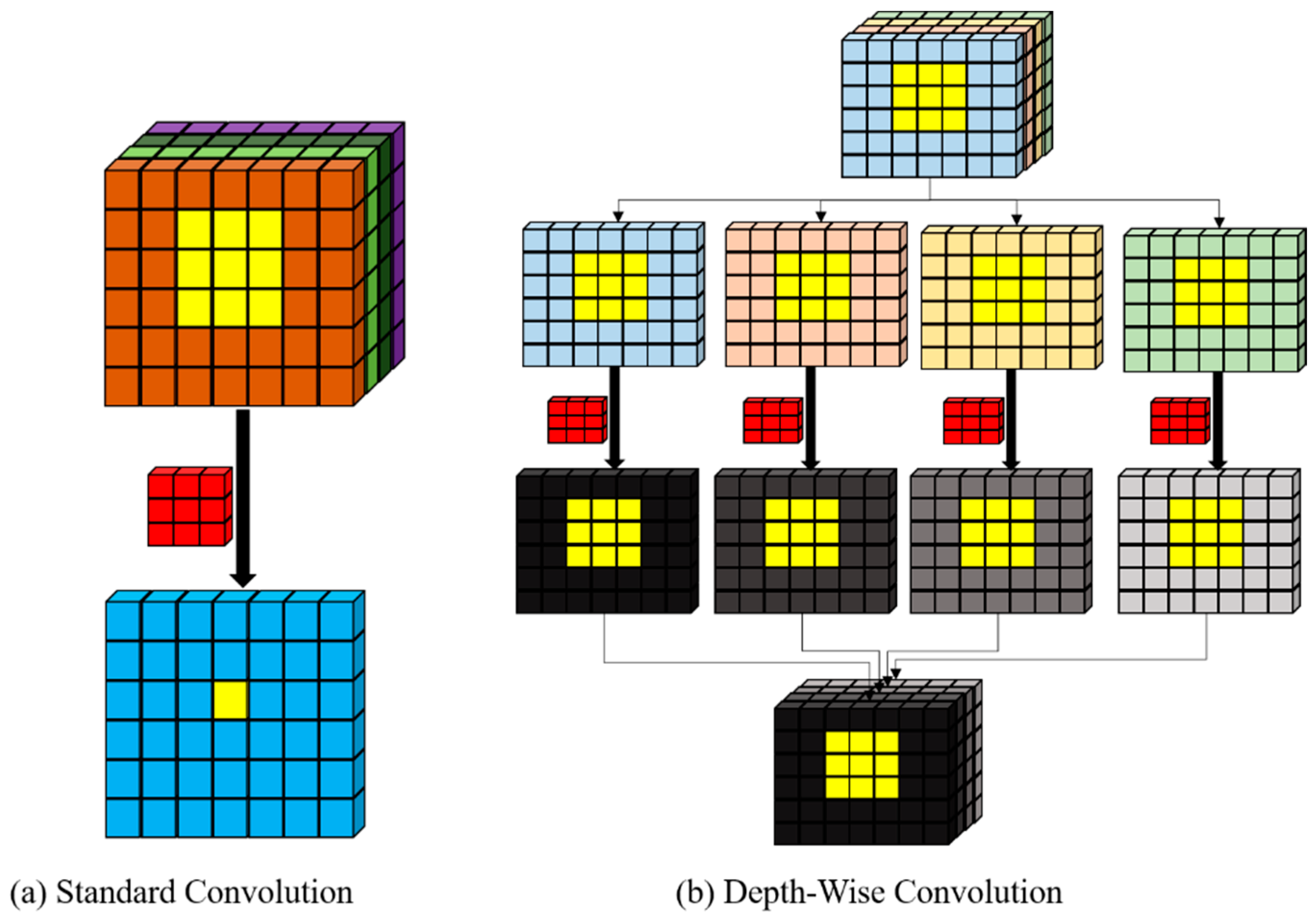

The proposed BDD-Net employs hybrid, robust deep-feature extractor convolution layers based on dilated convolution, residual blocks, depth-wise convolution, and multi-scale convolution layers. This design enables BDD-Net to produce good results in damage map generation. Furthermore, BDD-Net automatically extracts and selects the deep features by changing the hyperparameters, whereas many researchers have used traditional features such as textural features based on the GLCM [53,54,55,56] or morphological attribute profiles [57], which are time-consuming to compute and require the optimal features to be selected manually.

4.5. Fundamental Error

One of the most important challenges of classification based on multi-source imagery is registration error. For instance, change detection-based methods require accurate registration. This is difficult to achieve since control points for matching are not easily found, owing to the content of the data and the difference between resolutions. This can lead to a cascade of issues, as spurious changes originating from the registration error propagate to the pixel-based damage detection. BDD-Net can control this issue, and minimize the effect of registration error, by considering the building polygon in the decision making.

Height relief displacement is a fundamental error in the analysis of VHR satellite imagery that does not appear in the Lidar dataset. Its effect cannot be removed completely and may affect the results of building damage mapping. Because the proposed framework focuses on building footprints, it can reduce the effect of height relief displacement in comparison with pixel-to-pixel comparison methods.

4.6. Data Resolution

The ground resolutions of the available image and Lidar data were 0.5 m and 1 m, respectively. To match the image resolution, a Lidar DSM with the same ground resolution as the image was first generated by dividing each DSM grid cell (pixel) into four pixels before importing it into our framework. This simplified the implementation of the network. The size of the buildings in the selected test areas varied from 31 m² to 1880 m², equal to 124 to 7520 pixels on the image and 31 to 1880 pixels on the original Lidar DSM. It is worth mentioning that both the smallest and the largest buildings were detected as damaged buildings by our algorithm. Although a higher spatial resolution may provide more details about the buildings, it increases the computation cost during network training, since a larger building box must be imported into the network to prevent information loss. However, input images with higher resolution can be imported into the proposed framework by adding a scaling layer before the input layer, which can be tested in future studies.
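The resampling step and the pixel counts above can be reproduced with a short sketch. Splitting each 1 m cell into four 0.5 m cells is implemented here by plain value replication, which is an assumption, since the interpolation used is not stated; the function names are likewise hypothetical.

```python
def split_pixels(dsm, factor=2):
    """Replicate each DSM cell into factor x factor cells."""
    out = []
    for row in dsm:
        wide = [value for value in row for _ in range(factor)]
        out.extend([wide[:] for _ in range(factor)])
    return out


def area_to_pixels(area_m2, resolution_m):
    """Number of pixels covering an area at a given ground resolution."""
    return area_m2 / (resolution_m ** 2)


dsm = [[10.0, 12.0]]      # hypothetical 1 x 2 DSM at 1 m resolution
fine = split_pixels(dsm)  # 2 x 4 grid at 0.5 m resolution

smallest = area_to_pixels(31, 0.5)    # smallest building on the image
largest = area_to_pixels(1880, 0.5)   # largest building on the image
```

At 0.5 m resolution one pixel covers 0.25 m², so a footprint contains four times as many pixels as at 1 m, which matches the counts quoted above.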

4.7. Multi-Source Dataset

This study generated a damage map based on post-earthquake imagery only. Most work in the literature requires both pre- and post-event datasets. Furthermore, change detection-based BDD methods [58,59] may extract changes originating from other factors, which results in false-alarm pixels. In other words, the changed pixels in the bi-temporal dataset can be related to extraneous factors such as noise, atmospheric conditions, and urban development. Thus, one advantage of using only a post-earthquake dataset in BDD is the reduction of false-alarm pixels.

4.8. Future Work

We foresee that VHR SAR satellite imagery can also be utilized for BDD. In future work, the optical remote sensing dataset can be integrated with VHR SAR satellite imagery. Additionally, the proposed method focused on binary damage maps, whereas assessing multiple damage levels can help to characterize building damage in more detail. Therefore, future work can focus on the direct generation of multi-level damage maps.