Hyperspectral Anomaly Detection Using Deep Learning: A Review

Abstract

1. Introduction

1.1. Hyperspectral Image and Applications

1.2. Anomaly Detection

1.3. Hyperspectral Anomaly Detection

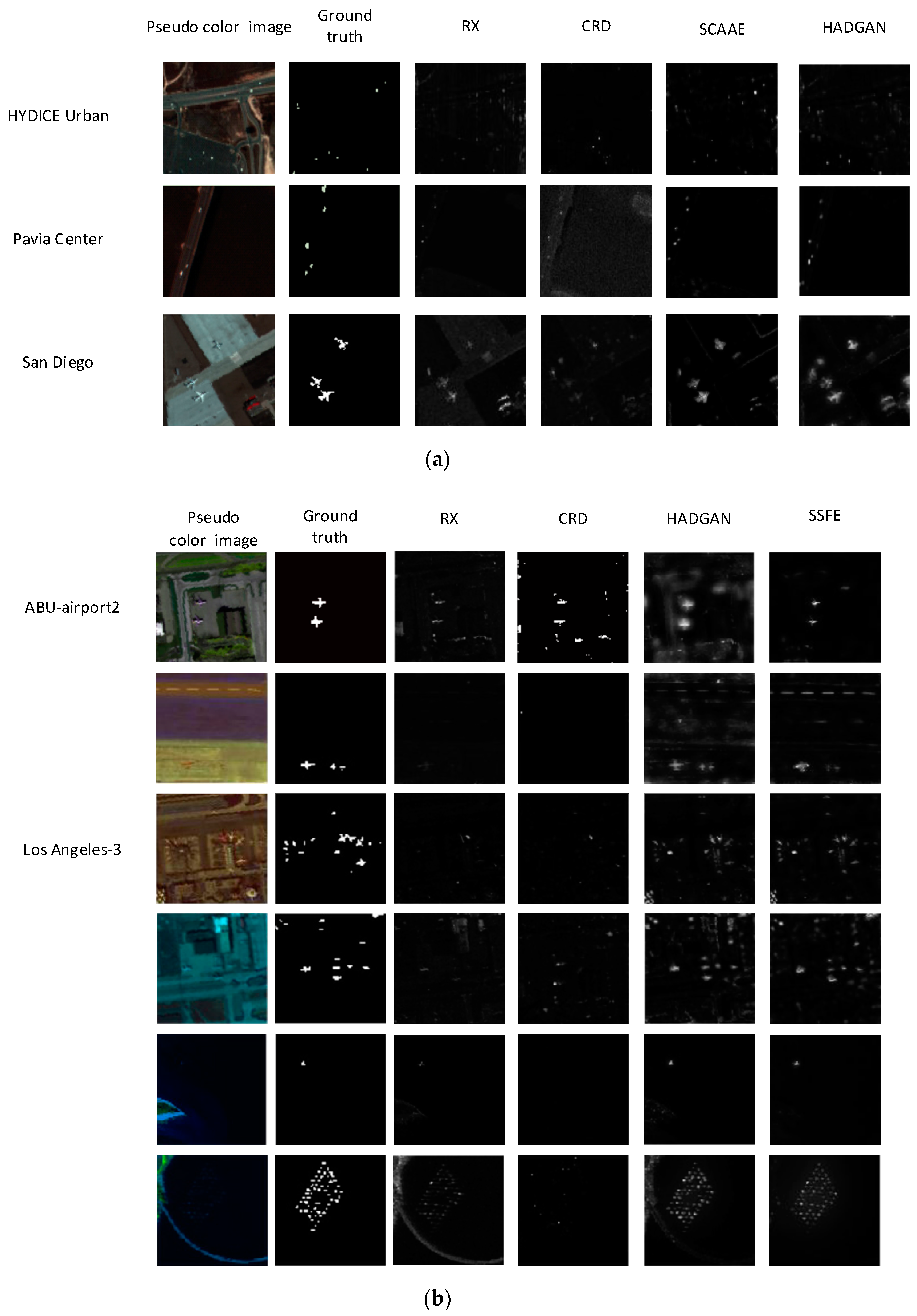

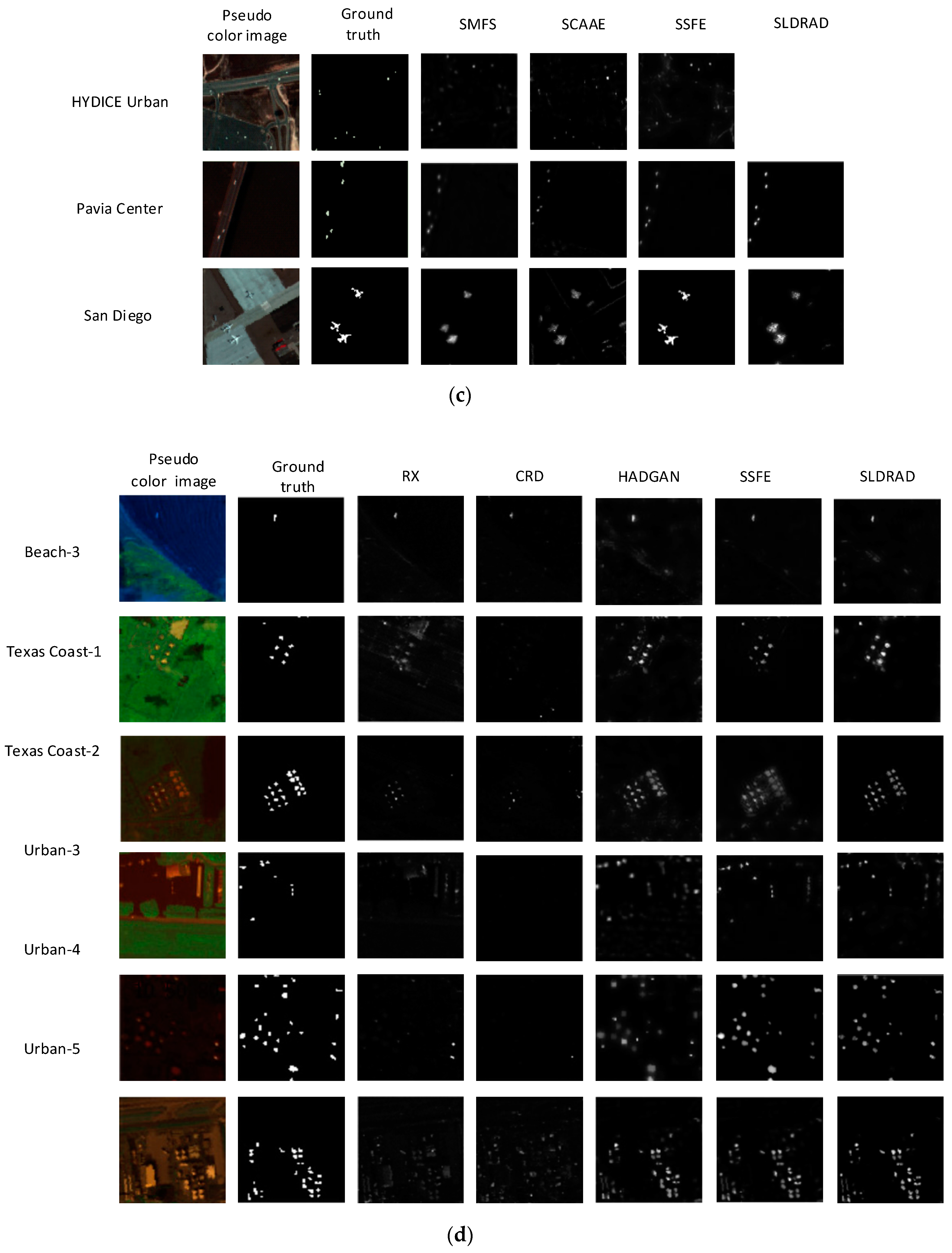

1.4. Contributions and Structure

2. Datasets

- (1) San Diego Dataset: real hyperspectral data of the San Diego area collected by the AVIRIS sensor. SDD-1 has 189 spectral bands and a spatial size of 100 × 100 pixels; its main backgrounds are hangars, tarmac, and soil, and three airplanes comprising 134 pixels are considered anomalies. SDD-2 has 193 spectral bands and a spatial size of 100 × 100 pixels; its main backgrounds are beach and seawater, and man-made objects in the water comprising 202 pixels are regarded as anomalies.

- (2) Cat Island Dataset: collected by the AVIRIS sensor, it contains 193 spectral bands and 100 × 100 pixels. The main backgrounds are seawater and islands, and a ship occupying 19 pixels is considered anomalous.

- (3) Pavia Dataset: a scene of the city of Pavia in northern Italy collected by the ROSIS-03 sensor. It includes 102 spectral bands and covers 150 × 150 pixels with a spatial resolution of 1.3 m/pixel; the main backgrounds are a bridge and water. Vehicles on the bridge account for a total of 68 pixels, and because their spectral characteristics differ from the background, they are regarded as anomalies.

- (4) HYDICE Urban Dataset: an urban scene in California with an image size of 80 × 100 pixels. The original data have 210 bands; after removing noisy and water-absorption bands, 162 bands are generally retained for subsequent processing and analysis. Ground features include roads, roofs, grassland, and trees, and 21 pixels are considered anomalous.

- (5) GulfPort Dataset: an image of the GulfPort area in the United States collected by the AVIRIS sensor. It contains 191 spectral bands covering 400–2500 nm, with 100 × 100 pixels and a spatial resolution of 3.4 m. Three airplanes of different sizes on the ground are regarded as anomalies.

- (6) Los Angeles Dataset: images of the Los Angeles urban area collected by the AVIRIS sensor, with 205 spectral bands, 100 × 100 pixels, and a spatial resolution of 7.1 m. In LA-1, buildings occupying 272 pixels are considered anomalous; in LA-2, houses occupying 232 pixels are considered anomalous.

- (7) Wuhan University (WHU-Hi) Dataset: acquired by an airborne hyperspectral imaging spectrometer (NANO-Hyperspec) carried by a UAV, it contains 270 bands and 4000 × 600 pixels, which is much larger than the commonly used hyperspectral anomaly detection datasets. The Station scene contains 1122 anomalous pixels and the Park scene contains 1510.

- (8) Texas Coast Dataset: images of the Texas coast in the United States collected by the AVIRIS sensor. Texas Coast-1 contains 204 spectral bands, 100 × 100 pixels, and a spatial resolution of 17.2 m, and 67 of its pixels are regarded as anomalies. Texas Coast-2 contains 207 spectral bands, 100 × 100 pixels, and a spatial resolution of 17.2 m; vehicles in a parking lot are labeled as anomalies.

- (9) Bay Champagne Dataset: an image of the Vanuatu region collected by the AVIRIS sensor, containing 188 spectral bands, 100 × 100 pixels, and a spatial resolution of 4.4 m. Thirteen pixels are regarded as anomalies, accounting for 0.13% of the entire scene.

- (10) El Segundo Dataset: an image of the El Segundo area in the United States collected by the AVIRIS sensor, containing 224 spectral bands, 250 × 300 pixels, and a spatial resolution of 7.1 m. The scene consists of oil refinery areas, residential areas, parks, and campuses, and oil storage tanks and towers are considered anomalies.

- (11) Grand Island Dataset: collected by the AVIRIS sensor, it contains 224 spectral channels with a spatial resolution of 4.4 m, and man-made objects in the water are regarded as anomalies.

- (12) Cuprite and Moffett Datasets: collected by the AVIRIS sensor, each contains 224 bands, a spatial size of 512 × 512 pixels, and a spatial resolution of 20 m; 46 and 59 pixels, respectively, are regarded as anomalies.
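The anomaly counts above translate into very small anomaly proportions, which is the class imbalance revisited in Section 7. The Python sketch below only recomputes the anomalous-pixel share from the numbers stated in this list (for Bay Champagne, 13 of 10,000 pixels, or 0.13%). The `DATASETS` table and the `anomaly_percentage` helper are illustrative names, and scenes without a stated pixel count are omitted.

```python
# Anomalous-pixel counts and scene sizes as stated in the dataset list above.
# Scenes whose anomaly pixel count is not given (GulfPort, Texas Coast-2,
# El Segundo, Grand Island) are left out.
DATASETS = {
    "SDD-1": (134, 100 * 100),
    "SDD-2": (202, 100 * 100),
    "Cat Island": (19, 100 * 100),
    "Pavia": (68, 150 * 150),
    "HYDICE Urban": (21, 80 * 100),
    "LA-1": (272, 100 * 100),
    "LA-2": (232, 100 * 100),
    "Texas Coast-1": (67, 100 * 100),
    "Bay Champagne": (13, 100 * 100),
    "WHU-Hi Station": (1122, 4000 * 600),
    "WHU-Hi Park": (1510, 4000 * 600),
    "Cuprite": (46, 512 * 512),
    "Moffett": (59, 512 * 512),
}


def anomaly_percentage(n_anomalous: int, n_pixels: int) -> float:
    """Share of anomalous pixels in a scene, in percent."""
    return 100.0 * n_anomalous / n_pixels


for name, (n_anom, n_pix) in DATASETS.items():
    print(f"{name:15s} {anomaly_percentage(n_anom, n_pix):.4f} %")
```

Every listed scene comes out below 3%, so a detector that labeled every pixel as background would already be more than 97% "accurate", which is one reason pixel accuracy is rarely used as the evaluation index in HAD.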

3. Machine Learning Models in HSI-AD

3.1. Traditional Methods

- (1) Anomalous spectral features are easy to distinguish in the spectral domain;

- (2) Anomalies usually occupy small areas;
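These two properties underlie the classic Reed-Xiaoli (RX) detector, the statistical baseline listed as "RX" in the comparison tables, which scores each pixel by its Mahalanobis distance from the background mean and covariance. A minimal global-RX sketch in NumPy follows. It assumes the cube is an (H, W, B) array, estimates background statistics from the whole scene, and adds a small diagonal loading `eps` (an assumption for numerical stability, not part of the original formulation); the helper name `global_rx` is hypothetical.

```python
import numpy as np


def global_rx(cube: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Global RX scores for an (H, W, B) hyperspectral cube.

    Returns an (H, W) map of squared Mahalanobis distances between each
    pixel spectrum and the scene-wide mean, using the scene-wide covariance.
    """
    h, w, b = cube.shape
    x = cube.reshape(-1, b).astype(np.float64)      # (N, B) pixel spectra
    xc = x - x.mean(axis=0)                          # center on the background mean
    cov = xc.T @ xc / (x.shape[0] - 1)               # (B, B) sample covariance
    cov_inv = np.linalg.inv(cov + eps * np.eye(b))   # diagonal loading (assumption)
    # Row-wise quadratic form xc_i^T * cov_inv * xc_i for every pixel i.
    scores = np.einsum("ij,jk,ik->i", xc, cov_inv, xc)
    return scores.reshape(h, w)


# Synthetic check: Gaussian background with one spectrally distinct pixel.
rng = np.random.default_rng(0)
cube = rng.normal(size=(50, 50, 20))
cube[10, 10] += 8.0
scores = global_rx(cube)
print(np.unravel_index(scores.argmax(), scores.shape))
```

Local (dual-window) RX variants instead estimate the mean and covariance from a neighborhood around each pixel, which suits property (2) better but requires one covariance inversion per pixel.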

3.2. Deep Learning-Based Methods

3.2.1. CNN

3.2.2. Autoencoder

3.3. DBN

3.4. GAN

3.5. RNN and LSTM

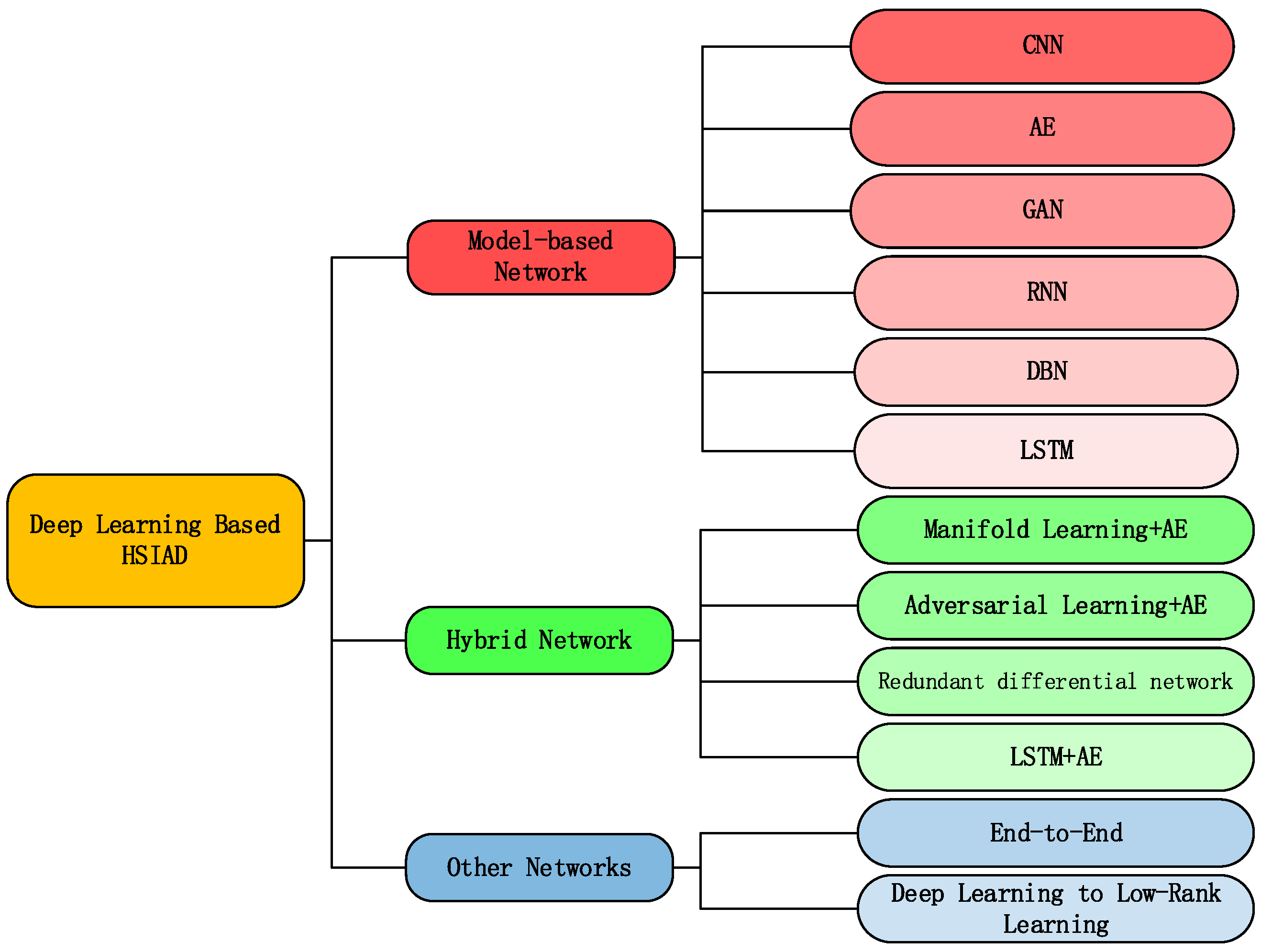

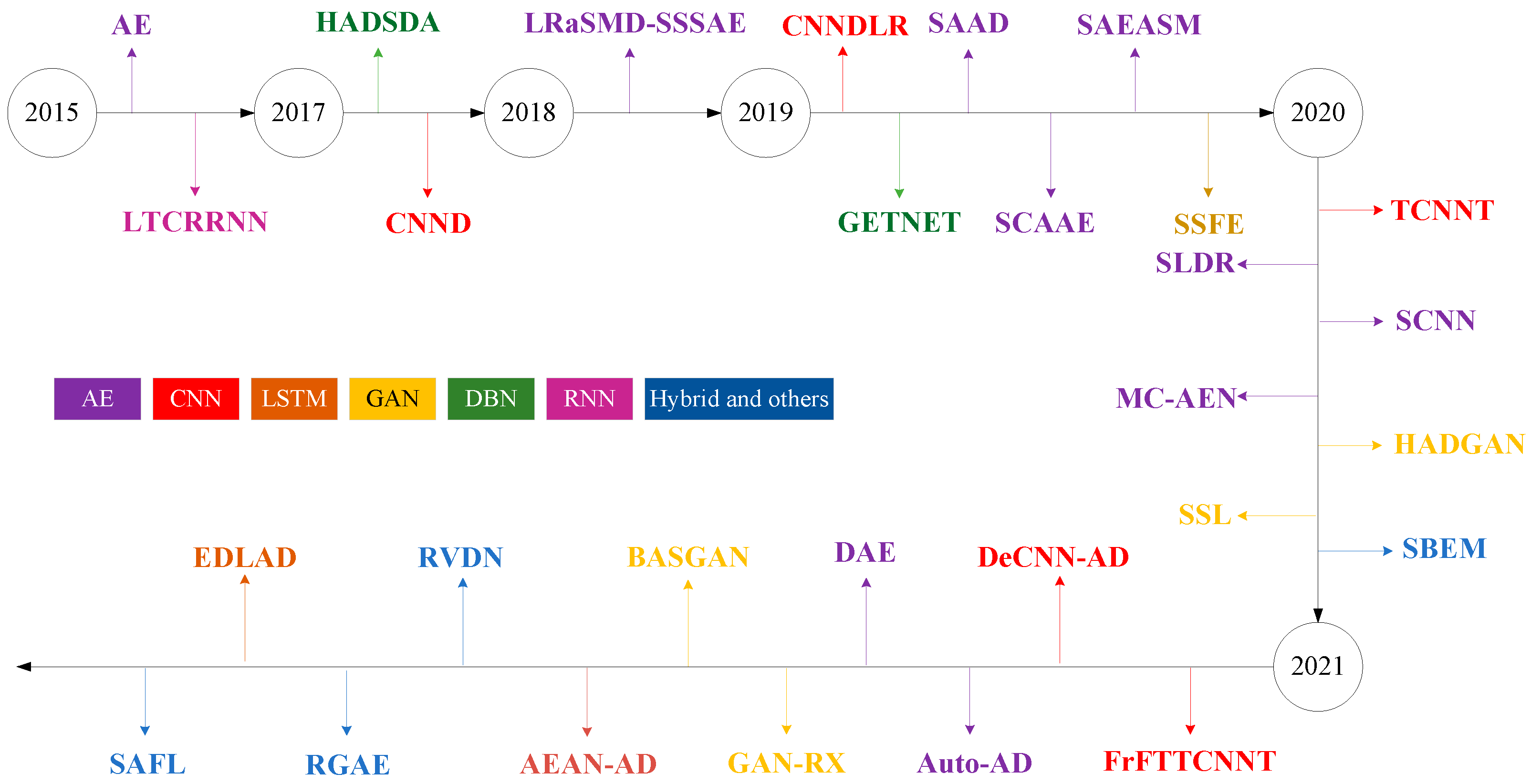

4. HSI-AD Based on Deep Learning

4.1. Model-Based Network

4.1.1. Based on Convolutional Neural Network

4.1.2. Based on Autoencoder

- (1) Can detection models designed for natural images be extended directly to HAD tasks without any background or anomaly training samples?

- (2) If not, how can the network architecture be designed in a spectrum-driven way that exploits the inherent spectral characteristics without supervision?

- (3) Can detection performance be improved by imposing discriminative constraints on the feature extraction network?
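Most autoencoder-based detectors share one mechanism: a network trained to reconstruct the (mostly background) spectra reconstructs anomalous spectra poorly, so the per-pixel reconstruction error serves as the anomaly score. The NumPy sketch below illustrates only that mechanism with a one-hidden-layer autoencoder on synthetic data. It is not any specific published network, and the layer size, learning rate, epoch count, initialization scale, and data generator are all assumptions; `train_autoencoder` is a hypothetical helper.

```python
import numpy as np


def train_autoencoder(x, hidden=3, lr=0.05, epochs=2000, seed=0):
    """Fit a one-hidden-layer autoencoder (tanh encoder, linear decoder) to the
    (N, B) spectra in x by full-batch gradient descent on the mean squared
    reconstruction error. Returns the trained reconstruction function."""
    rng = np.random.default_rng(seed)
    n, b = x.shape
    w1 = rng.normal(scale=0.3, size=(b, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(scale=0.3, size=(hidden, b))
    b2 = np.zeros(b)
    for _ in range(epochs):
        h = np.tanh(x @ w1 + b1)            # encoder: spectra -> hidden code
        x_hat = h @ w2 + b2                 # decoder: code -> reconstructed spectra
        g = 2.0 * (x_hat - x) / n           # d(loss)/d(x_hat) for the mean squared error
        gh = (g @ w2.T) * (1.0 - h ** 2)    # back-propagate through tanh (before updating w2)
        w2 -= lr * (h.T @ g)
        b2 -= lr * g.sum(axis=0)
        w1 -= lr * (x.T @ gh)
        b1 -= lr * gh.sum(axis=0)
    return lambda z: np.tanh(z @ w1 + b1) @ w2 + b2


# Synthetic scene: 1000 background spectra (20 bands) in a 3-D subspace,
# plus three anomalies offset along a direction outside that subspace.
rng = np.random.default_rng(1)
basis, _ = np.linalg.qr(rng.normal(size=(20, 20)))        # orthonormal columns
pixels = rng.normal(size=(1000, 3)) @ basis[:, :3].T
pixels[:3] += 8.0 * basis[:, 3]

reconstruct = train_autoencoder(pixels)
errors = np.sum((pixels - reconstruct(pixels)) ** 2, axis=1)  # anomaly score per pixel
print(sorted(np.argsort(errors)[-3:].tolist()))
```

Because the background occupies a three-dimensional subspace and the three implanted pixels lie well outside it, those pixels should receive the largest reconstruction errors.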

4.1.3. GAN

4.1.4. Recurrent Neural Network (RNN)

4.1.5. Deep Belief Network (DBN)

4.1.6. Based on Long Short-Term Memory Network

4.2. Based on Hybrid Network

4.2.1. Manifold Learning Constrained Autoencoder Network

4.2.2. Semi-Supervised Background Estimation Based on Adversarial Learning and Autoencoder

4.2.3. Redundant Difference Network

4.2.4. Adversarial Autoencoder Network Based on LSTM

4.3. Other Networks

4.3.1. End-to-End

4.3.2. Deep Learning Converted to Low-Rank Representation

5. Performance Evaluation Index for Anomaly Detection

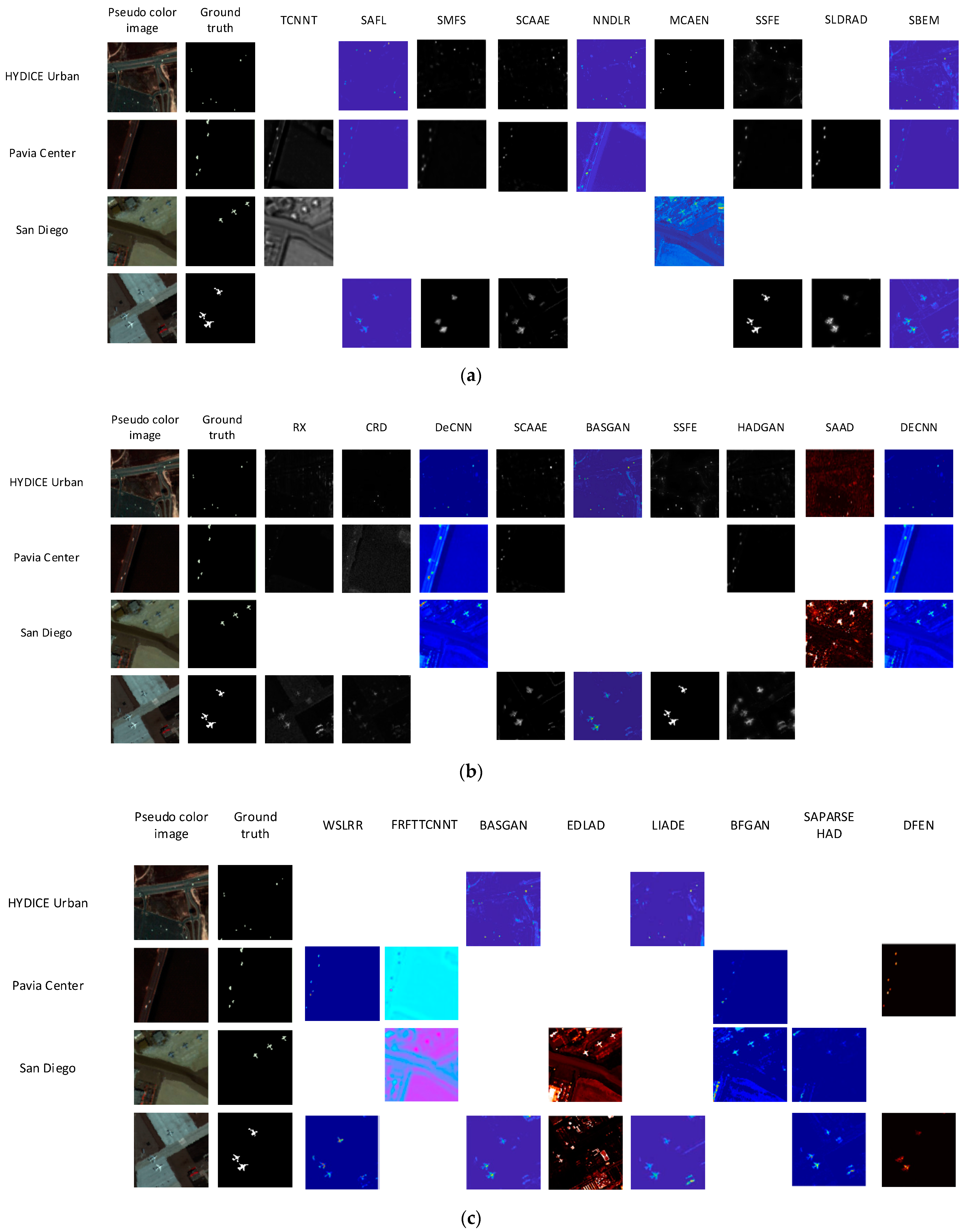

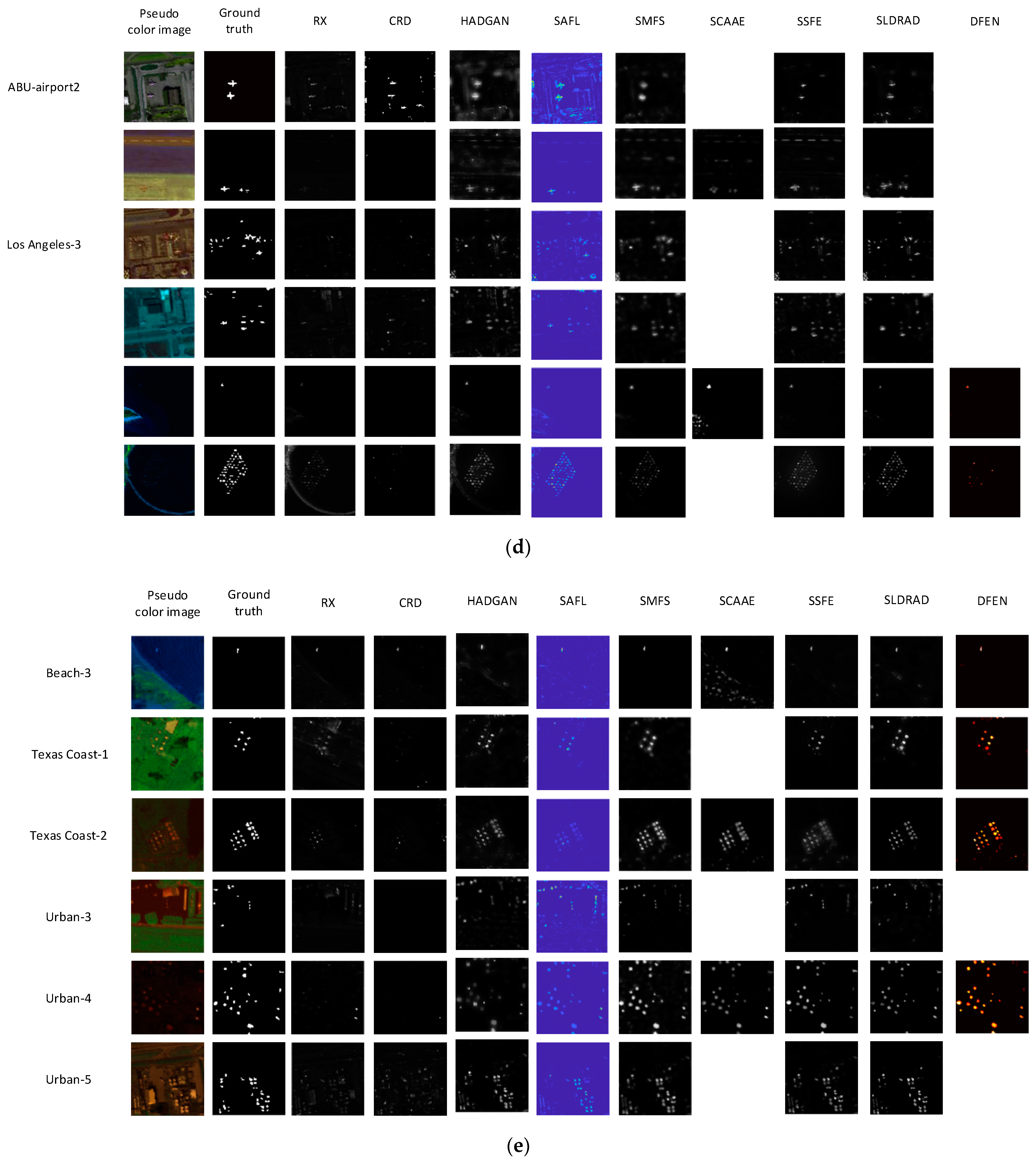
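Detection maps in HAD are commonly evaluated with the receiver operating characteristic (ROC) curve and the area under it (AUC). As a hedged sketch, the AUC can be computed without tracing the curve, through its equivalence with the Mann-Whitney U statistic: it equals the probability that a randomly chosen anomaly pixel scores higher than a randomly chosen background pixel, with ties counted as one half. The function name `roc_auc` is illustrative; scikit-learn's `sklearn.metrics.roc_auc_score` computes the same quantity.

```python
import numpy as np


def roc_auc(scores, labels) -> float:
    """Area under the ROC curve via the Mann-Whitney U statistic.

    scores: detection map of any shape; labels: same shape, 1 = anomaly,
    0 = background. Tied scores receive their average rank, so a tie
    counts as half of a correctly ordered (anomaly, background) pair.
    """
    s = np.asarray(scores, dtype=np.float64).ravel()
    y = np.asarray(labels).ravel().astype(bool)
    n_pos = int(y.sum())
    n_neg = y.size - n_pos
    # Average 1-based rank of each distinct score value.
    _, inverse, counts = np.unique(s, return_inverse=True, return_counts=True)
    avg_rank = np.cumsum(counts) - (counts - 1) / 2.0
    ranks = avg_rank[inverse]
    u = ranks[y].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))


print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

The rank formulation needs only a sort, so it scales to large scenes such as WHU-Hi, where comparing every anomaly pixel with every background pixel directly would be prohibitively memory-hungry.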

5.1. Based on Convolutional Neural Network

5.2. Box Plot
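Box plots are typically used in HAD papers to compare the distributions of normalized detection scores for background and anomaly pixels: the farther apart and the less the two boxes overlap, the better the detector separates the classes. The sketch below computes the statistics such a plot draws (quartiles and 1.5 × IQR whiskers, matplotlib's default) together with a simple gap measure. The helpers `box_stats` and `score_separation`, the gap definition, and the synthetic scores are illustrative assumptions rather than a standard index.

```python
import numpy as np


def box_stats(values):
    """Quartiles and 1.5 x IQR whisker ends, as drawn in a standard box plot."""
    v = np.asarray(values, dtype=np.float64).ravel()
    q1, median, q3 = np.percentile(v, [25, 50, 75])
    iqr = q3 - q1
    return {
        "whisker_lo": v[v >= q1 - 1.5 * iqr].min(),  # lowest point inside the lower fence
        "q1": q1, "median": median, "q3": q3,
        "whisker_hi": v[v <= q3 + 1.5 * iqr].max(),  # highest point inside the upper fence
    }


def score_separation(scores, labels):
    """Min-max normalize a detection map, then summarize each class.

    Returns (background stats, anomaly stats, gap), where gap is the anomaly
    box's lower edge minus the background box's upper edge; a positive gap
    means the two boxes do not overlap. Assumes the map is not constant.
    """
    s = np.asarray(scores, dtype=np.float64).ravel()
    y = np.asarray(labels).ravel().astype(bool)
    s = (s - s.min()) / (s.max() - s.min())
    bg, an = box_stats(s[~y]), box_stats(s[y])
    return bg, an, an["q1"] - bg["q3"]


# Synthetic detection map: 990 background scores and 10 clearly higher anomaly scores.
rng = np.random.default_rng(0)
scores = np.concatenate([rng.normal(0.0, 1.0, 990), rng.normal(6.0, 1.0, 10)])
labels = np.concatenate([np.zeros(990), np.ones(10)])
bg, an, gap = score_separation(scores, labels)
print(f"background box [{bg['q1']:.2f}, {bg['q3']:.2f}], "
      f"anomaly box [{an['q1']:.2f}, {an['q3']:.2f}], gap {gap:.2f}")
```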

6. Performance Comparison

7. Challenges

- (1) When feature extraction or band selection is used to reduce dimensionality, some discriminative features may be lost.

- (2) Because of noise and interference, anomaly detection on high-dimensional hyperspectral data struggles to achieve both high detection accuracy and a low false alarm rate. In addition, training samples are scarce and class-imbalanced.

- (3) Real-time anomaly detection can not only detect ground objects as they are imaged but also effectively relieve the pressure of data storage, so military defense and civilian applications urgently need real-time processing. However, current real-time processing algorithms remain unsatisfactory, and introducing new approaches, such as GPU acceleration, is a key issue for real-time processing.

- (4) HSI contains abundant spectral information, but because of illumination, scattering, and other effects, it is easily corrupted by noise during imaging. As a result, the original spectral features often fail to show clear separability between the background and anomalous targets.

- (5) Many anomaly detection methods exist, but few have been deployed in practical applications.

- (6) UAV-borne hyperspectral data have higher spatial resolution, and the large volume of existing UAV data should be fully exploited.

- (7) Deep learning-based hyperspectral anomaly detection with few samples remains a challenging problem.
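Challenge (1) can be made concrete with principal component analysis (PCA), a common dimensionality-reduction step. In the hedged NumPy sketch below, a synthetic background varies almost entirely within three directions, while a few anomaly pixels are offset along a direction the background barely uses. Keeping three components retains nearly all of the variance yet discards almost all of the anomaly offset. The data generator and the helper `pca_reduce` are illustrative assumptions.

```python
import numpy as np


def pca_reduce(x, k):
    """Project (N, B) spectra onto their top-k principal components.

    Returns the reduced data (N, k), the components (B, k), and the fraction
    of total variance that the k components retain.
    """
    xc = x - x.mean(axis=0)
    # Rows of vt are principal directions; s**2 is proportional to their variance.
    _, s, vt = np.linalg.svd(xc, full_matrices=False)
    comps = vt[:k].T
    retained = float((s[:k] ** 2).sum() / (s ** 2).sum())
    return xc @ comps, comps, retained


rng = np.random.default_rng(2)
basis, _ = np.linalg.qr(rng.normal(size=(20, 20)))             # orthonormal directions
pixels = rng.normal(size=(2000, 3)) @ (3.0 * basis[:, :3].T)   # dominant background
pixels += 0.1 * rng.normal(size=pixels.shape)                  # weak noise in all bands
signature = 2.0 * basis[:, 10]            # anomaly offset along a low-variance direction
pixels[:5] += signature

reduced, comps, retained = pca_reduce(pixels, k=3)
kept = np.linalg.norm(comps.T @ signature) / np.linalg.norm(signature)
print(f"variance retained: {retained:.3f}, anomaly offset retained: {kept:.3f}")
```

The retained-variance figure alone would suggest that little is lost, which is why variance-based reduction can be risky when anomalies are rare.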

8. Future Directions

- (1) Building on HSI's intrinsic characteristics and on the end-to-end training and high-level deep feature extraction of deep learning models, new deep architectures could be designed by combining deep learning with manifold learning, sparse representation, graph learning, and other theories to improve performance.

- (2) Design spatial-spectral joint deep networks that exploit the characteristics of HSI.

- (3) For model training, transfer learning, weakly supervised learning, self-supervised learning, and related paradigms can be introduced so that deep networks can be trained and optimized with only a small number of samples.

- (4) Focus on collaborative learning from multimodal and multitemporal data.

- (5) Real-time anomaly detection.

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Li, W.; Wu, G.; Du, Q. Transferred Deep Learning for Anomaly Detection in Hyperspectral Imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 597–601.
- Eismann, M.T.; Stocker, A.D.; Nasrabadi, N.M. Automated Hyperspectral Cueing for Civilian Search and Rescue. Proc. IEEE 2009, 97, 1031–1055.
- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5966–5978.
- Xie, W.; Lei, J.; Liu, B.; Li, Y.; Jia, X. Spectral constraint adversarial autoencoders approach to feature representation in hyperspectral anomaly detection. Neural Netw. 2019, 119, 222–234.
- Reed, I.S.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.
- Nasrabadi, N.M. Regularization for spectral matched filter and RX anomaly detector. Proc. SPIE 2008, 6966, 696604.
- Kwon, H.; Nasrabadi, N. Kernel RX-algorithm: A nonlinear anomaly detector for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 388–397.
- Carlotto, M. A cluster-based approach for detecting man-made objects and changes in imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 374–387.
- Banerjee, A.; Burlina, P.; Diehl, C. A support vector method for anomaly detection in hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2282–2291.
- An, J.; Cho, S. Variational Autoencoder based Anomaly Detection using Reconstruction Probability. Comput. Sci. 2015, 2, 1–18.
- Cheng, B.; Zhao, C.; Zhang, L. Research Advances of Anomaly Target Detection Algorithms for Hyperspectral Imagery. Electron. Opt. Control 2021, 28, 56–59+65.
- Geng, X.; Sun, K.; Ji, L.; Zhao, Y. A High-Order Statistical Tensor Based Algorithm for Anomaly Detection in Hyperspectral Imagery. Sci. Rep. 2014, 4, 6869.
- Li, S.; Wang, W.; Qi, H.; Ayhan, B.; Kwan, C.; Vance, S. Low-rank tensor decomposition based anomaly detection for hyperspectral imagery. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015.
- Xing, Z.; Wen, G.; Wei, D. A Tensor Decomposition-Based Anomaly Detection Algorithm for Hyperspectral Image. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5801–5820.
- Sun, J.; Wang, W.; Wei, X.; Fang, L.; Tang, X.; Xu, Y.; Yu, H.; Yao, W. Deep Clustering With Intraclass Distance Constraint for Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4135–4149.
- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More Diverse Means Better: Multimodal Deep Learning Meets Remote-Sensing Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.
- Hong, D.; He, W.; Yokoya, N.; Yao, J.; Gao, L.; Zhang, L.; Chanussot, J.; Zhu, X.X. Interpretable Hyperspectral Artificial Intelligence: When Non-Convex Modeling meets Hyperspectral Remote Sensing. IEEE Geosci. Remote Sens. Mag. 2021, 9, 52–87.
- Wu, X.; Hong, D.; Chanussot, J. Convolutional Neural Networks for Multimodal Remote Sensing Data Classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10.
- Hong, D.; Yokoya, N.; Chanussot, J.; Zhu, X.X. An Augmented Linear Mixing Model to Address Spectral Variability for Hyperspectral Unmixing. IEEE Trans. Image Process. 2019, 28, 1923–1938.
- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep Learning for Hyperspectral Image Classification: An Overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.
- Li, R.; Cui, K.; Chan, R.H.; Plemmons, R.J. Classification of Hyperspectral Images Using SVM with Shape-adaptive Reconstruction and Smoothed Total Variation. arXiv 2022, arXiv:2203.15619.
- Zhao, C.; Li, C.; Feng, S. A Spectral–Spatial Method Based on Fractional Fourier Transform and Collaborative Representation for Hyperspectral Anomaly Detection. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1259–1263.
- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A.J. Advanced Spectral Classifiers for Hyperspectral Images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32.
- Lei, J.; Fang, S.; Xie, W.; Li, Y.; Chang, C.-I. Discriminative Reconstruction for Hyperspectral Anomaly Detection With Spectral Learning. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7406–7417.
- Shi, Y. Anomaly intrusion detection method based on variational autoencoder and attention mechanism. J. Chongqing Univ. Posts Telecommun. (Nat. Sci. Ed.) 2021, 1–8. Available online: http://kns.cnki.net/kcms/detail/50.1181.n.20210824.1036.014.html (accessed on 12 March 2022).
- Makhzani, A.; Shlens, J.; Jaitly, N.; Goodfellow, I.; Frey, B. Adversarial Autoencoders. arXiv 2015, arXiv:1511.05644.
- Arisoy, S.; Nasrabadi, N.M.; Kayabol, K. GAN-based Hyperspectral Anomaly Detection. In Proceedings of the 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021.
- Jiang, T.; Li, Y.; Xie, W.; Du, Q. Discriminative Reconstruction Constrained Generative Adversarial Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4666–4679.
- Song, S.; Zhou, H.; Yang, Y.; Song, J. Hyperspectral Anomaly Detection via Convolutional Neural Network and Low Rank With Density-Based Clustering. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3637–3649.
- Zhang, L.; Cheng, B.; Deng, Y. A tensor-based adaptive subspace detector for hyperspectral anomaly detection. Int. J. Remote Sens. 2018, 39, 2366–2382.
- Du, B.; Zhang, M.; Zhang, L.; Hu, R.; Tao, D. PLTD: Patch-Based Low-Rank Tensor Decomposition for Hyperspectral Images. IEEE Trans. Multimed. 2017, 19, 67–79.
- Zhang, L.; Cheng, B. Fractional Fourier Transform and Transferred CNN Based on Tensor for Hyperspectral Anomaly Detection. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.
- Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a Fast and Flexible Solution for CNN based Image Denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.
- Fu, X.; Jia, S.; Zhuang, L.; Xu, M.; Zhou, J.; Li, Q. Hyperspectral Anomaly Detection via Deep Plug-and-Play Denoising CNN Regularization. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9553–9568.
- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.
- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted nuclear norm minimization with application to image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.
- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.
- Teodoro, A.M.; Bioucas-Dias, J.M.; Figueiredo, M.A.T. Image restoration and reconstruction using variable splitting and class-adapted image priors. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3518–3522.
- Zhuang, L.; Bioucas-Dias, J.M. Fast hyperspectral image denoising and inpainting based on low-rank and sparse representations. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 730–742.
- Dian, R.; Li, S.; Kang, X. Regularizing Hyperspectral and Multispectral Image Fusion by CNN Denoiser. IEEE Trans. Neural Networks Learn. Syst. 2020, 32, 1124–1135.
- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A General End-to-end Two-dimensional CNN Framework for Hyperspectral Image Change Detection. arXiv 2019, arXiv:1905.01662.
- Zhang, X.; Sun, Y.; Zhang, J.; Wu, P.; Jiao, L. Hyperspectral Unmixing via Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1755–1759.
- Bati, E.; Çalışkan, A.; Koz, A.; Alatan, A.A. Hyperspectral anomaly detection method based on auto-encoder. SPIE Remote Sens. 2015, 9643, 220–226.
- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep Learning-Based Classification of Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.
- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.
- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.
- Vincent, P.; Larochelle, H.; Bengio, Y. Extracting and Composing Robust Features with Denoising Autoencoders. In Proceedings of the Twenty-Fifth International Conference on Machine Learning (ICML), Helsinki, Finland, 5–9 June 2008.
- Zhao, W.; Guo, Z.; Yue, J.; Zhang, X.; Luo, L. On combining multiscale deep learning features for the classification of hyperspectral remote sensing imagery. Int. J. Remote Sens. 2015, 36, 3368–3379.
- Zhao, C.; Li, X.; Zhu, H. Hyperspectral anomaly detection based on stacked denoising autoencoders. J. Appl. Remote Sens. 2017, 11, 042605.
- Chang, S.; Du, B.; Zhang, L. A Sparse Autoencoder Based Hyperspectral Anomaly Detection Algorithm Using Residual of Reconstruction Error. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5488–5491.
- Van Veen, D.; Jalal, A.; Soltanolkotabi, M. Compressed Sensing with Deep Image Prior and Learned Regularization. arXiv 2018, arXiv:1806.06438.
- Zhao, C.; Zhang, L. Spectral-spatial stacked autoencoders based on low-rank and sparse matrix decomposition for hyperspectral anomaly detection. Infrared Phys. Technol. 2018, 92, 166–176.
- Wang, S.; Wang, X.; Zhang, L. Auto-AD: Autonomous Hyperspectral Anomaly Detection Network Based on Fully Convolutional Autoencoder. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5503314.
- Xie, W.; Lei, J.; Fang, S.; Li, Y.; Jia, X.; Li, M. Dual Feature Extraction Network for Hyperspectral Image Analysis. Pattern Recognit. 2021, 118, 107992.
- Fan, G.; Ma, Y.; Huang, J. Robust Graph Autoencoder for Hyperspectral Anomaly Detection. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 1830–1834.
- Cheng, T.; Wang, B. Graph and Total Variation Regularized Low-Rank Representation for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2019, 58, 391–406.
- Zhang, L.; Cheng, B. A stacked autoencoders-based adaptive subspace model for hyperspectral anomaly detection. Infrared Phys. Technol. 2018, 96, 52–60.
- Hosseiny, B.; Shah-Hosseini, R. A hyperspectral anomaly detection framework based on segmentation and convolutional neural network algorithms. Int. J. Remote Sens. 2020, 41, 6946–6975.
- Arisoy, S.; Nasrabadi, N.M.; Kayabol, K. Unsupervised Pixel-wise Hyperspectral Anomaly Detection via Autoencoding Adversarial Networks. IEEE Geosci. Remote Sens. Lett. 2021, 19, 5502905.
- Sabokrou, M.; Khalooei, M.; Fathy, M.; Adeli, E. Adversarially learned one-class classifier for novelty detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3379–3388.
- Akcay, S.; Atapour-Abarghouei, A.; Breckon, T.P. GANomaly: Semi-supervised Anomaly Detection via Adversarial Training. In Proceedings of the ACCV 2018, 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Jawahar, C.V., Li, H., Mori, G., Schindler, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2019.
- Nadeem, M.; Marshall, O.; Singh, S. Semi-Supervised Deep Neural Network for Network Intrusion Detection. In CCERP-2016; Kennesaw State University: Kennesaw, GA, USA, 2016.
- Wulsin, D.; Blanco, J.; Mani, R. Semi-Supervised Anomaly Detection for EEG Waveforms Using Deep Belief Nets. In Proceedings of the Ninth International Conference on Machine Learning and Applications, Washington, DC, USA, 12–14 December 2010.
- Song, H.; Jiang, Z.; Men, A.; Yang, B. A Hybrid Semi-Supervised Anomaly Detection Model for High-Dimensional Data. Comput. Intell. Neurosci. 2017, 2017, 8501683.
- Nguyen, A.T.; Park, M. Detection of DoH Tunneling using Semi-supervised Learning method. In Proceedings of the 2022 International Conference on Information Networking (ICOIN), Jeju-si, Korea, 12–15 January 2022; pp. 450–453.
- Wang, T.; Zhang, Z.; Tsui, K.-L. A Deep Generative Approach for Rail Foreign Object Detections via Semi-supervised Learning. IEEE Trans. Ind. Inform. 2022.
- Shao, L.; Zhang, E.; Ma, Q.; Li, M. Pixel-Wise Semisupervised Fabric Defect Detection Method Combined With Multitask Mean Teacher. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.
- Jiang, K.; Xie, W.; Li, Y.; Lei, J.; He, G.; Du, Q. Semisupervised Spectral Learning With Generative Adversarial Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5224–5236.
- Zhong, J.; Xie, W.; Li, Y.; Lei, J.; Du, Q. Characterization of Background-Anomaly Separability With Generative Adversarial Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6017–6028.
- Lyu, H.; Hui, L. Learning a transferable change detection method by Recurrent Neural Network. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016.
- Ning, M.; Yu, P.; Wang, S. An Unsupervised Deep Hyperspectral Anomaly Detector. Sensors 2018, 18, 693.
- Lei, J.; Xie, W.; Yang, J.; Li, Y.; Chang, C.-I. Spectral–Spatial Feature Extraction for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8131–8143.
- Zhu, D.; Du, B.; Zhang, L. DLAD: An Encoder-Decoder Long Short-Term Memory Network-Based Anomaly Detector for Hyperspectral Images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021; pp. 4412–4415.
- Lu, X.; Zhang, W.; Huang, J. Exploiting Embedding Manifold of Autoencoders for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2019, 58, 1527–1537.
- Xie, W.; Liu, B.; Li, Y.; Lei, J.; Du, Q. Autoencoder and Adversarial-Learning-Based Semisupervised Background Estimation for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5416–5427.
- Li, X.; Zhao, C.; Yang, Y. Hyperspectral anomaly detection based on the distinguishing features of a redundant difference-value network. Int. J. Remote Sens. 2021, 42, 5459–5477.
- Shah, R.; Säckinger, E. Signature Verification Using A “Siamese” Time Delay Neural Network. Int. J. Pattern Recognit. Artif. Intell. 1993, 7, 737–744.
- Alex, V.; KP, M.S.; Chennamsetty, S.S.; Krishnamurthi, G. Generative adversarial networks for brain lesion detection. Med. Imaging Image Process. Int. Soc. Opt. Photonics 2017, 10133, 101330G.
- Yang, Y.; Hinde, C.J.; Gillingwater, D. Improve neural network training using redundant structure. In Proceedings of the 2003 International Joint Conference on Neural Networks, Portland, OR, USA, 20–24 July 2003.
- Ouyang, T.; Wang, J.; Zhao, X.; Wu, S. LSTM-Adversarial Autoencoder for Spectral Feature Learning in Hyperspectral Anomaly Detection. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 2162–2165.
- Jiang, K.; Xie, W.; Lei, J.; Li, Z.; Li, Y.; Jiang, T.; Du, Q. E2E-LIADE: End-to-End Local Invariant Autoencoding Density Estimation Model for Anomaly Target Detection in Hyperspectral Image. IEEE Trans. Cybern. 2021, 1–12.
- Xie, W.; Zhang, X.; Li, Y. Weakly Supervised Low-Rank Representation for Hyperspectral Anomaly Detection. IEEE Trans. Cybern. 2021, 51, 3889–3990.

| Dataset | Sensor | Spectral Bands | Size (pixels) | Resolution | Anomalies |
|---|---|---|---|---|---|
| San Diego | AVIRIS | SDD-1: 189; SDD-2: 193 | 100 × 100 | 7.5 m | SDD-1: 3 planes, 134 pixels; SDD-2: 202-pixel artificial object |
| Cat Island | AVIRIS | 193 | 100 × 100 | 17.2 m | One ship: 19 pixels |
| Pavia | ROSIS-03 | 102 | 150 × 150 | 1.3 m | Vehicles: 68 pixels |
| HYDICE Urban | HYDICE | 210 | 80 × 100 | 3 m | 21 pixels |
| GulfPort | AVIRIS | 191 | 100 × 100 | 3.4 m | Three airplanes of different sizes, 68 pixels |
| Los Angeles | AVIRIS | 205 | 100 × 100 | 7.1 m | LA-1: buildings, 272 pixels; LA-2: houses, 232 pixels |
| Texas Coast | AVIRIS | TC-1: 204; TC-2: 207 | 100 × 100 | 17.2 m | TC-1: 67 pixels; TC-2: vehicles |
| Bay Champagne | AVIRIS | 188 | 100 × 100 | 4.4 m | Vehicle |
| El Segundo | AVIRIS | 224 | 250 × 300 | 7.1 m | Oil storage tanks and towers |
| WHU-Hi | NANO-Hyperspec (airborne) | Station: 270; Park: 270 | 4000 × 600 | 0.04 m / 0.08 m | Station: 1122 pixels; Park: 1510 pixels |
| Grand Island | AVIRIS | 224 | 300 × 480 | 4.4 m | Man-made objects in water |
| Cuprite | AVIRIS | 224 | 512 × 512 | 20 m | 46 pixels |
| Moffett | AVIRIS | 224 | 512 × 512 | 20 m | 59 pixels |

| Dataset | RX (statistics) | CRD (representation) | TBASD (tensor) | DeCNN (CNN) | SCAAE (AE) | BASGAN (GAN) | SSFE (DBN) | EDLAD (LSTM) |
|---|---|---|---|---|---|---|---|---|
| HYDICE Urban | 0.9857 | 0.9956 | - | 0.9976 | 0.9968 | 0.9987 | 0.9985 | - |
| San Diego | 0.909 | 0.9880 | - | 0.9932 | 0.9852 | 0.9954 | 0.9946 | 0.9917 |
| Pavia | 0.9543 | 0.9862 | 0.9828 | 0.9994 | 0.9992 | - | - | - |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, X.; Xie, C.; Fan, Z.; Duan, Q.; Zhang, D.; Jiang, L.; Wei, X.; Hong, D.; Li, G.; Zeng, X.; et al. Hyperspectral Anomaly Detection Using Deep Learning: A Review. Remote Sens. 2022, 14, 1973. https://doi.org/10.3390/rs14091973
