Abstract

Relative radiometric normalization (RRN) is important for pre-processing and analyzing multitemporal remote sensing (RS) images. Multitemporal RS images usually include different land use/land cover (LULC) types; therefore, considering an identical linear relationship during RRN modeling may result in potential errors in the RRN results. To resolve this issue, we proposed a new automatic RRN technique that efficiently selects the clustered pseudo-invariant features (PIFs) through a coarse-to-fine strategy and uses them in a fusion-based RRN modeling approach. In the coarse stage, an efficient difference index was first generated from the down-sampled reference and target images by combining the spectral correlation, spectral angle mapper (SAM), and Chebyshev distance. This index was then categorized into three groups of changed, unchanged, and uncertain classes using a fast multiple thresholding technique. In the fine stage, the subject image was first segmented into different clusters by the histogram-based fuzzy c-means (HFCM) algorithm. The optimal PIFs were then selected from unchanged and uncertain regions using each cluster’s bivariate joint distribution analysis. In the RRN modeling step, two normalized subject images were first produced using the robust linear regression (RLR) and cluster-wise-RLR (CRLR) methods based on the clustered PIFs. Finally, the normalized images were fused using the Choquet fuzzy integral fusion strategy for overwhelming the discontinuity between clusters in the final results and keeping the radiometric rectification optimal. Several experiments were implemented on four different bi-temporal satellite images and a simulated dataset to demonstrate the efficiency of the proposed method. The results showed that the proposed method yielded superior RRN results and outperformed other considered well-known RRN algorithms in terms of both accuracy level and execution time.

1. Introduction

Relative radiometric normalization (RRN) is the process of minimizing radiometric aberrations (i.e., gray-levels changes caused by variations in sun-target-sensor geometry, atmospheric conditions, illumination, and viewing angles) from one or more high/multispectral target images based on a high/multispectral reference image which are taken at different times from the same place [1,2,3,4,5]. It is a critical task because it is a prerequisite for the processing of multitemporal remote sensing (RS) images in several applications, such as automatic change detection [6,7] and image mosaicking [8].

A variety of RRN methods have been developed to radiometrically adjust RS images, mainly categorized into two main groups: dense RRN (DRRN) and sparse RRN (SRRN) [3]. DRRN methods adopt global image statistics to predict the relationship between image pairs, which are not feasible for image pairs with considerable noise and land use/land cover (LULC) changes [3,9,10]. In contrast, SRRN methods typically extract pseudo-invariant features (PIFs) from the target and reference images and use them to obtain the model transformation between the image pair [9,10]. Since the PIFs are partially invariant to illumination variation and changed regions, the SRRN can achieve more precise results than the DRRN methods in dealing with datasets with LULC regions [11]. Many SRRN methods have been developed in response to questions, such as: how to select PIFs and establish a reasonable relationship between PIFs? For example, Elvidge et al. [2] proposed a SRRN method based on an automatic scattergram-controlled regression (ASCR) to select the pixels close to the regression line. In this method, the regression line was determined by connecting the centers of water and land clusters at the scattergram between target and reference images. As a result, this strategy is operationally limited when image pairs do not include both clusters. Furthermore, due to the lack of PIF refinement in the ASCR approach, the radiometric resolution of the resulting normalized image may not be preserved. To address these limitations, a robust SRRN approach was introduced by Du et al. [12] based on the principal component analysis (PCA) and quality control for PIFs refinement. Additionally, Canty et al. [13] proposed a robust SRRN method in which PIFs were selected based on the multivariate alteration detection (MAD) transformations [14], which was invariant to linear transformation (e.g., affine and conformal) of the image pair gray-levels. Canty and Nielsen [15] further improved the robustness of the MAD method through an iterative reweighting scheme, named the iteratively reweighted (IR)-MAD method, which was affordable for radiometric adjustment of image pairs with significant seasonal changes. The MAD and IR-MAD methods have been widely used in the change detection process [16,17,18] and are frequently developed by researchers for RRN tasks [19,20]. For example, Byun et al. [21] developed a new MAD algorithm for RRN of very high-resolution (VHR) bitemporal images. Their algorithm utilized a weighting function derived from the normalized difference water index (NDWI) to calculate the MAD transform’s covariance matrices. Furthermore, Liu et al. [20] presented a robust SRRN for image mosaicking that extracted the optimal PIFs through the modified IRMAD and used them in an iteratively reweighted block adjustment.

Despite the advantages of IRMAD-based methods, they only use statistical analysis to select PIFs and do not take into account their physical properties, which may lead to potential errors in the RRN modeling process [4,22,23]. To address this, some rule-based SRRN methods have been suggested to consider the physical nature of land surfaces by adopting spectral indices over the PIFs selection process. For example, Zho et al. [22] proposed an automatic SRRN for multiple images with PIFs (MIPIFs) retrieved using step-by-step dark and bright sets selection based on the NDWI and some statistical sampling rules. Such PIFs selection is appropriate for the RRN of datasets acquired within the same time (e.g., season), but it cannot accurately handle the radiometric dispersion induced by seasonal fluctuations [22]. Furthermore, its results are highly reliant on the regulation of statistical sampling rules, which were employed to restrict the number of PIFs. To overcome these constraints, Ghanbari et al. [24] proposed a robust SRRN that took advantage of the Gaussian mixture modeling (GMM)-based change detection in PIFs selection and an error ellipsoid (EE) process in RRN modeling. Likewise, Moghimi et al. [3] employed a fast level set method (FLSM) and patch-based outlier detection to pick an ideal set of PIFs using a step-by-step unchanged sample selection strategy. With a similar idea, Yan et al. [25] employed a chi-square test to automatically extract the PIFs from the unchanged regions detected by an unsupervised autoencoder (AE) method. Although the mentioned methods yielded promising results, they were often computationally demanding in terms of both processing and memory storage.

The prior SRRN methods were mainly developed by assuming a linear relationship between the PIF values in the reference and target images, which was not feasible for datasets with nonlinear radiometric differences. Several RRN methods have been suggested that employ a nonlinear mapping function instead of a linear one in RRN modeling to cope with this problem [10,19,26,27]. For example, Sadeghi et al. [10] proposed an intelligent RRN technique using an artificial neural network (ANN) to approximate solutions of a nonlinear relation between PIFs (unchanged samples) in the reference and target images. This method had high flexibility for modeling the relationship between PIFs in the reference and subject images. Nevertheless, its performance depended on its ANN architecture/network topology and the quality of the training data. Seo, et al. [26] developed the ASCR method [2] by employing a random forest (RF) regression instead of linear regression for handling nonlinear radiometric and phenological differences. Although this method had a good performance in radiometric correction, it was highly prone to overfitting and required one to set the appropriate RF regression parameters. Bai et al. [19] also developed the IR-MAD method by exploiting the kernel version of canonical correlation analysis (kCCA) and cubic polynomial (degree 3), respectively, instead of linear methods to eliminate the regular nonlinear spectral and radiometric differences. Selecting optimal values for the kernel parameters regulation and kernel type were the challenges of this approach. In general, although nonlinear-based SRRN methods [10,19,26,27] can radiometrically reduce the nonlinear distortions between image pairs, they are prone to overfitting and are often computationally intensive [28].

Most of the mentioned SRRN studies have provided a great solution to address the limitations of RRN. However, they do not contribute to the type of ground surfaces/LULC of PIFs in the RRN modeling, leading to potential errors and bias in the final results [29]. Therefore, several SRRN methods have been proposed that employ PIFs from different LULCs. These methods aim to create a linear relationship between all LULCs in the image pairs, while for different LULCs, such a relationship is different [30,31]. For instance, Sadeghi et al. [29] proposed an automatic RRN method by categorizing unchanged pixels according to the histogram of subject images for each band using the Otsu thresholding technique and calculating relevant coefficients of piecewise linear regression. In another study, He et al. [31] improved a semi-supervised RRN method to select the high correlated histogram of oriented gradients (HOG) features from image pairs as PIFs in each ground object class. In this method, an object-based classification was applied to input images to generate LULC maps for the reference and target images. This study generated the normalized image during linear class-wise RRN modeling using the extracted PIFs. Although this method had superior results, its automation was low because it used a supervised classification in its process. In general, the studies of [29,31] produced valuable RRN findings, but they did not refine the PIFs from uncertain/imprecise samples, which might lead to an imperfect linear model for specific classes. Moreover, their performance depended on the accuracy of the supervised/unsupervised classification algorithms utilized in their processes. Furthermore, some discontinuities between adjacent classes were found in the normalized images generated by these methods, resulting in an imperfect normalized image in terms of vision inception.

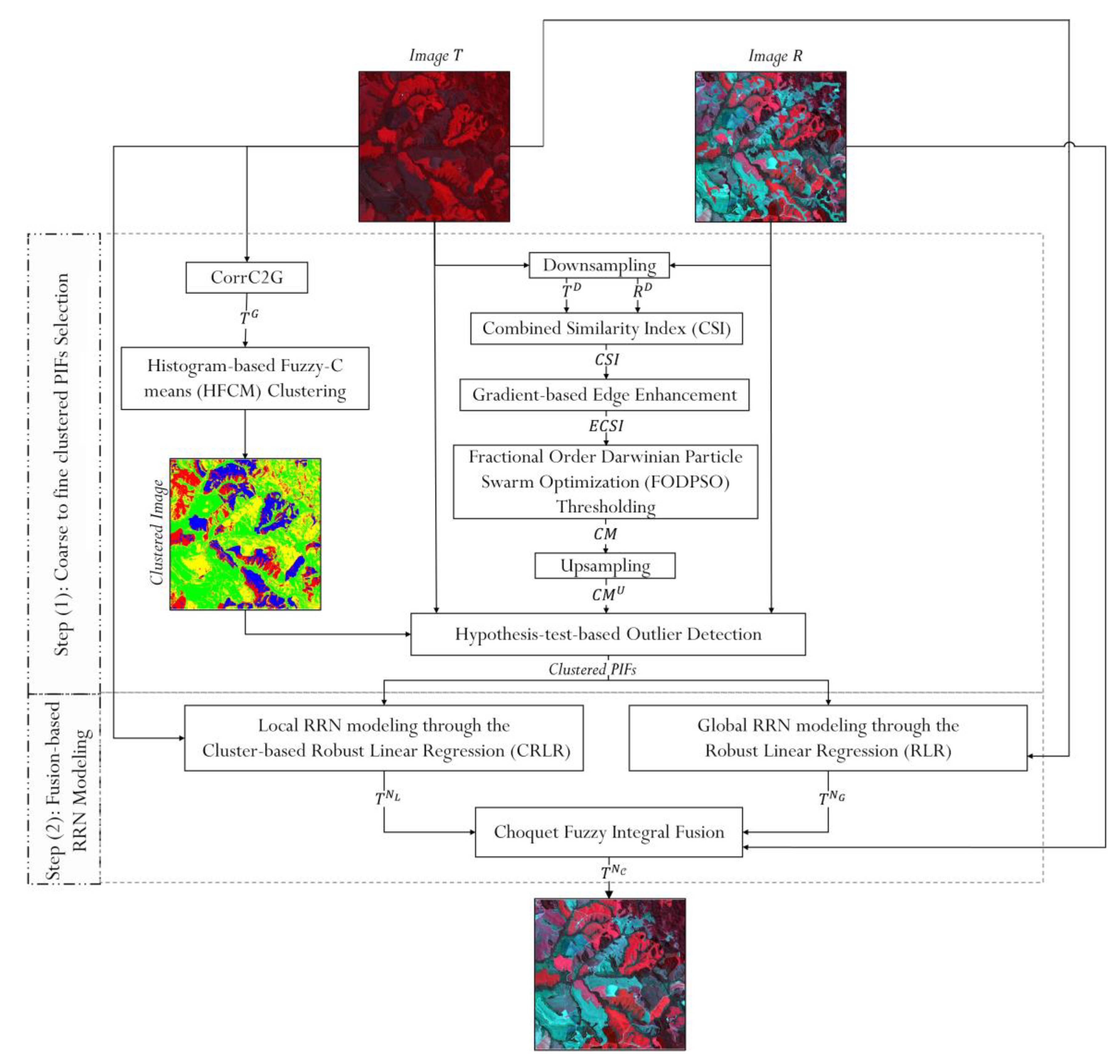

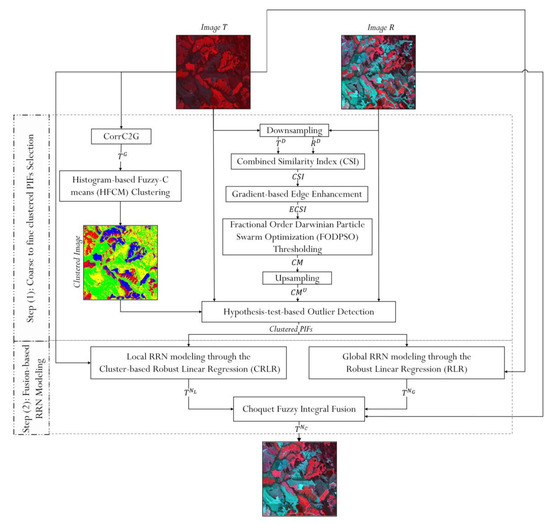

To address the constraints noted above, we present a novel SRRN technique that could efficiently extract reliable PIFs from various clusters and reduce discontinuities and bias in the final results by formulating the RRN modeling process with a fusion strategy. In the first step, the optimal PIFs are selected in a coarse-to-fine process. In the coarse stage of this process, the Pearson correlation, Chebyshev distance, and spectral angle mapper (SAM) are combined to construct a change index from the down-sampled input images in which changed regions were highlighted. This index is further pre-classified to three regions of changed, unchanged, and uncertain, using efficient multiple thresholding. In the fine level of the process, the target image is first clustered into different groups using the histogram-based fuzzy C-means (HFCM) [32]. Subsequently, the stable PIFs are collected from unchanged and uncertain pixels (generated by multiple thresholding pre-segmentation) for each cluster using a hypothesis-test-based outlier detection. In the next step, the retrieved clustered PIFs are then employed in the proposed fusion-based RRN modeling to provide a reliable normalized image. In this model, the two normalized images are initially generated using a standard robust linear regression (RLR) and the new cluster-wise version, named CRLR. The Choquet integral [33] was then utilized to fuse the produced normalized images because it is a flexible nonlinear aggregator operator that can efficiently model the relationships between fusion sources [34]. The performances of the proposed method were comprehensively evaluated on a simulated dataset and four different bi-temporal satellite images and compared to several existing state-of-the-art RRN methods. The key contributions of our work can be summarized as follows:

- A coarse-to-fine approach is designed to efficiently extract reliable and well-spatially distributed PIFs from distinct ground surface clusters. Moreover, a hypothesis-based outlier detection was developed and embedded in this approach toefficiently refine the PIF candidates by taking advantage of the probability contour of the bivariate normal (BVN) joint distribution.

- The cluster-wise-RLR (CRLR) is proposed for better modeling the complex relationship between target and reference images with different LULC types. This model also contains a weight matrix defined based on the distance to PIFs that can reduce the potential bias in the results of RRN.

- A novel fusion-based framework is presented for RRN modeling that can integrate multiple normalized images using the Choquet integral as well as handle potential uncertainties, such as discontinues and bias in the final results.

The rest of this manuscript is organized as follows. Section 2 describes the proposed RRN approach and its detailed descriptions, datasets, and quantitative measures utilized for performance evaluation. Section 3 presents the experimental results on the datasets to verify the feasibility of the proposed approach. Finally, the concluding remarks are summarized in Section 4.

2. Materials and Methods

2.1. Proposed SRRN Method

Consider two co/geo-registered satellite images R (i.e., Equation (1)) and T (i.e., Equation (2)), respectively, as the reference and target images with the same size, acquired from the same scene at different times.

where H × W refers to height and width in pixels, and N is the number of spectral bands of the images R and T. The primary goal of this research was to generate a dependable normalized target image (i.e., Equation (3)) computed using clustered PIFs extracted from input images R and T so that it is spectrally balanced with the reference image R.

We designed a novel RRN framework composed of two main steps to reach this goal, as shown in Figure 1. First, the clustered PIFs were selected and optimized through a coarse-to-fine no-change selection strategy. The selected PIFs were then used in fusion-based RRN modeling to generate an optimal normalized target image. The steps included are detailed in the following sections.

Figure 1.

The block diagram of the proposed SRRN method.

2.1.1. Step 1: Coarse-to-Fine Clustered PIFs Selection

As mentioned before, the first step of the proposed method was to generate reliable and well-spatially distributed PIFs from input images R and T over a coarse-to-fine no-change selection. To this end and for further faster processing, the grid size of input images was reduced to a coarser size P × Q, i.e.,

where the operator rounds its argument toward the nearest integer, s is the scaling factor of down-sampling, computed by

where φ is the user-defined positive integer (e.g., 128, 512, 720), min(.) and max(.) are respectively minimum and maximum operators. Accordingly, the input images R and T were down-sampled, respectively, to and which are defined as follows:

To generate a dependable input for the no-change selection process, down-sampled images and were then compared through a similarity index CSI which was combined from three metrics as follows:

where ρ, , and θ respectively refer to Pearson correlation, Chebyshev distance, and SAM [35] metrics, which are given by:

where and represent the gray-level pixel (p, q) in the spectral band bth of the down-sampled target and reference images, respectively. Such a combination can better reflect the characteristics of the changed and unchanged areas because it compares the reference and target images from different perspectives. It is worth noting that each metric was rescaled to [0, 1] by the Min-Max method before they were used in constructing .

To reinforce the boundaries of the changed/unchanged regions in the index CSI, gradient magnitude of the index was first calculated as:

where stands for the convolution of the CSI index with a Gaussian smoothing kernel , and ∇ denotes the gradient operator. The maximum operator was then used to supply complementary information from the index CSI and its gradient magnitude into the enhanced index ECSI which is given by:

The ECSI index makes a trade-off between geometrical detail preserving (edges and corners) and enhancing changed regions. It should be noted that rescaled to [0, 1] before applying the max operator.

Generally, due to the coarse resolution of ECSI and the complexity of land surface features, a binary segmentation result often fails to reflect the real changed/unchanged regions. To address this issue, an automatic multilevel thresholding was applied to the index CSI to generate the change map in which , (“0” and “1” indicate the pixel respectively belongs to the certain changed and unchanged classes, whereas “2” involves pixels belonging to the uncertain class. This process can be performed as follow:

where the low and high thresholds, and were determined by the fractional-order Darwinian particle swarm optimization (FODPSO) thresholding [36] due to its efficiency and fast process. The change map, CM, was further up-sampled to the original size of input images using the nearest-neighbor interpolation and indexed by .

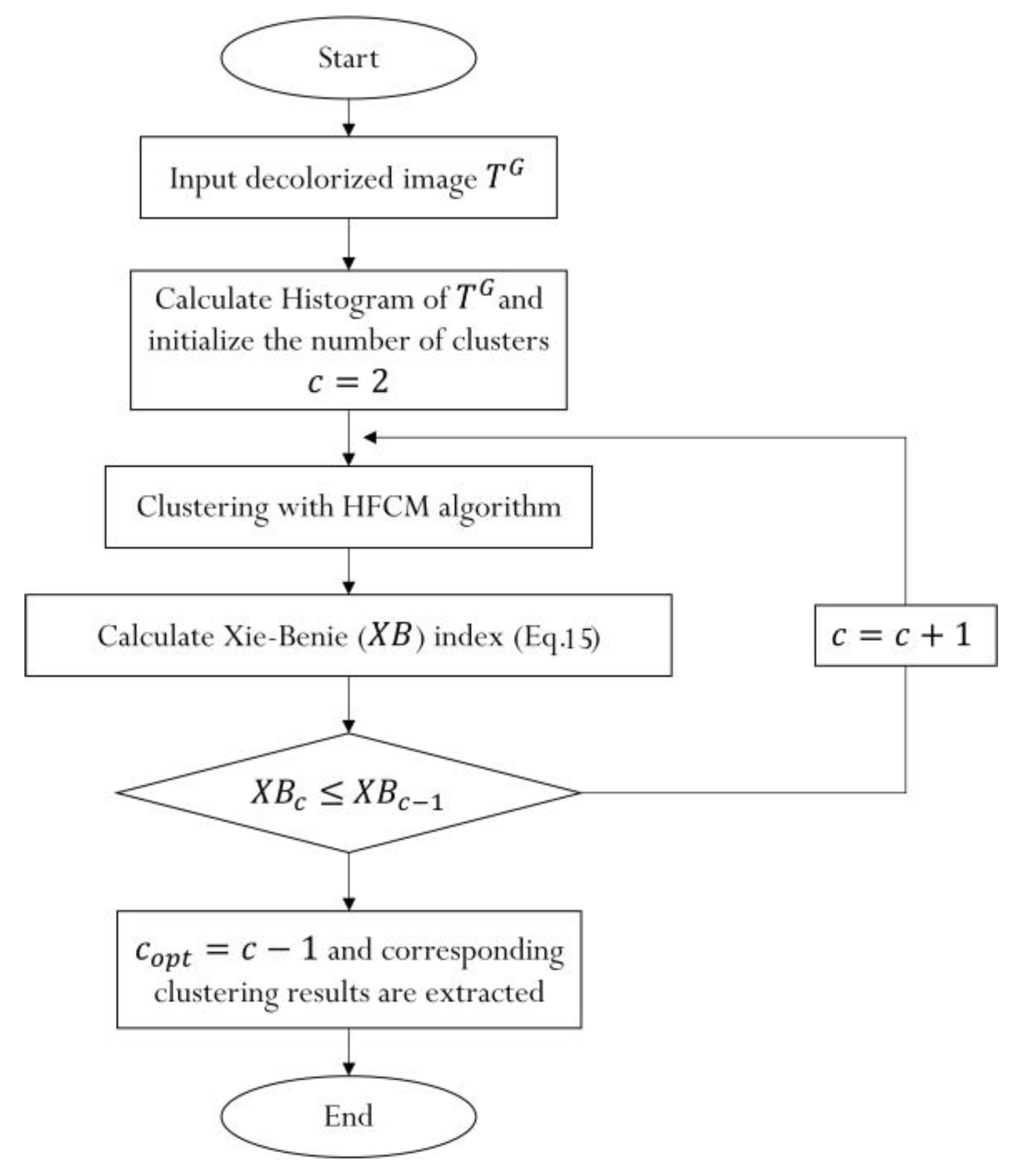

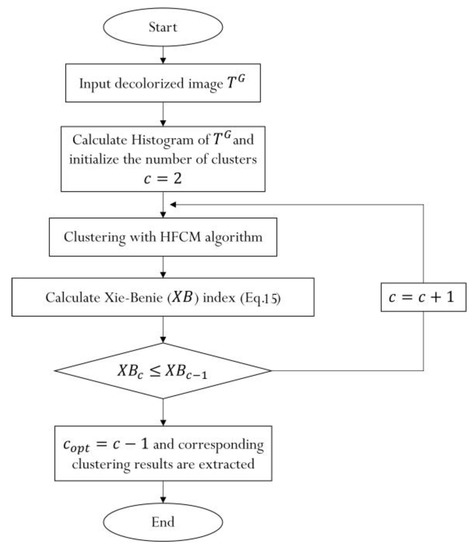

In fine no-change selection, a local outlier detection was introduced, inspired by [37], to select reliable and well-spatially distributed PIFs from different LULCs by making decisions based on a hypothesis test. To this end, the target image T, was first converted to the grayscale target image using CorrC2G [38], and it then partitioned into the cluster. Since satellite image typically includes multiple features with overlapped distributions, fuzzy clustering algorithms have been found to be very beneficial [39,40]. In this study, the HFCM clustering [32] was selected for this task because it operates on the histogram of an input image instead of the entire image, leading to a much faster process and reducing memory storage. The HFCM algorithm typically needs to know the number of clusters as an input which should be optimally determined. In this study, the optimum number of clusters was self-adaptively selected during the analysis of the Xie-Beni (XB) index [41] (i.e., Equation (15)) as the workflow of Figure 2.

where

and

where is the histogram of the image with L gray-levels, i.e., ; is the distance metric between two variables, is the cluster number, the membership function (i.e., Equation (16)) and cluster center (i.e., Equation (17)) are obtained as a result of applying the HFCM algorithm to the image . It is worth noting that each pixel value of the image was normalized according to Equation (18) to make sure that they are in the range of [0, 255], i.e., :

Figure 2.

The flowchart for determining the optimal number of clusters using the histogram-based fuzzy C-means (HFCM) clustering algorithm.

After partitioning the image into clusters, the pixel pairs of input images belonging to the unchanged class were first picked up from each cluster and considered as the definite PIFs for that cluster. The hypothesis-test-based method was then proposed to eliminate the outliers from pixels of the uncertain class in each cluster using statistical parameters estimated based on the definite PIFs. As a result of this process, more reliable PIFs could be extracted in each cluster, resulting in accurate modeling between the reference and target images.

Let’s consider that in each cluster, and are a vector of pixels values that belong to the uncertain class in each spectral band of reference and target images, respectively. Moreover, suppose that they follow a BVN distribution, and their joint probability density function (PDF) can be formulated as follows:

where and and are, respectively, the mean vector and covariance matrix generated from and in each cluster. Moreover, as is clear from Equation (19), represent the Mahalanobis distance of input samples, following a chi-square distribution with 2 degrees of freedom. Accordingly, the probability contour of the BVN distribution can be defined for each cluster as follows:

where ξ is the scale of the probability contour and determined as , in which is a given level of significance (e.g., the 95% probability contour corresponds to level of significance).

As can be seen from Equation (20), the performance of the probability contour is highly dependent on the estimation of its statistical parameters (i.e., and ). The uncertain class may include many noises and anomalies in each cluster, leading to incorrect statistical parameters estimation and distortion of RRN results. To address this problem, we used unchanged samples (i.e., definite PIFs) to correctly estimate the statistical parameters of μ and Σ for each cluster. Therefore, the probability contour at the given significance level was updated with such parameters and then directly adopted to form the critical region for the hypothesis test in each cluster. For each cluster, the hypothesis had a null hypothesis i.e., all of the uncertain pixel values which fall inside the critical region added to the set of PIFs in the corresponding band. Since this process was implemented band-by-band, a majority voting rule over spectral bands produced the final decision for selecting PIFs from uncertain pixels in each cluster.

2.1.2. Step 2: Fusion-Based RRN Modeling

This step aimed to adjust the target image to the reference image through a novel fusion-based RRN modeling. To this end, two normalized images of and were first generated, respectively, through the global and local RRN modeling based on the clustered PIFs. In fact, the relation between the target and reference images was once modeled globally through a band-by-band RLR as follows:

where and are respectively the global slope and intercept for the th spectral band, which were estimated using the iteratively reweighted least-squares (IRLS) method [42] based on the gray-levels of the clustered PIFs in images, T and R. The process of the IRLS method was started by considering the initial value for and . At each iteration τ, the non-negative weights of clustered PIFs were then estimated from the previous iteration based on the bisquare estimator [43]. In the next stage, the new coefficients were estimated as follows:

where is the total number of clustered PIFs, and are respectively the gray-levels for the th clustered PIF on the th spectral band of images T and R; and are the weighted means of gray-levels of clustered PIFs in the bth spectral band of images T and R, respectively, which are calculated from the previous iteration as follows:

The two last stages were repeated until the estimated normalization coefficients converged to optimal values.

The RLR is much more robust to existing outliers than the other conventional linear regression models due to an efficient weighting function in its procedure [42]. The global RRN modeling generates a uniform normalized image where the discontinuity problem is not observed. However, applying such an approach may be insufficient to model the complex relationship between target and reference images, especially in dealing with datasets with different LULC types. Additionally, when the number of PIFs in one of the clusters is high, the global RRN modeling results may be biased to that cluster. To address these problems, the CRLR was introduced as a local RRN modeling to locally estimate the relation between image pairs in a band-by-band manner, which is defined as

where and are respectively the slope and intercept for th cluster of the bth spectral band, which were calculated by the IRLS technique; refers to the weight matrix, which was separately computed for each cluster based on the inverse distance of pixel values of the target image from the corresponding cluster center as follows:

Such an RRN model can generate a normalized image that is well adjusted to the reference image in different LULC. Furthermore, the weight matrix embedded in this model can help decrease the potential bias in the RRN results. However, the normalized image generated by the local model is not as uniform as that provided by the global model, where discontinuity along the LULC’s edge boundaries could be observed in the results of this model. Thus, the global and local RRN approaches include advantages and weaknesses in producing the normalized image. Accordingly, we looked for a strategy that decreases the discontinuity along the LULC’s edge boundaries and reduces the bias model problem. This could be resolved by fusing information from the normalized images and . There are a variety of fusion approaches available in the literature for merging information from multiple sources. In this study, we used the Choquet integral operator [33] to construct the fused normalized image as it utilizes the fuzzy measures in its calculation, allowing it to consider all possible combinations of criteria in the decision-making process [44].

Suppose that we have normalized images, for fusion. Denote the gray-level of th normalized image, , on th pixel in the th spectral band, , as . The discrete Choquet integral on the instance, calculated by [45]:

where is rearranged so that refer to the fuzzy measure element value corresponding to the subset =, and . Note that when is an -fuzzy measure, values are determined by [34]:

where , the parameter refers to the degree of interaction between two components which is obtained with the condition as the following equation [45]:

As for the fusion between two normalized images, and the domain is defined as . In this domain and according to Equation (28), the pixel value for the fused image, can be obtained as follows:

where and are the fuzzy measures respectively for and which were determined based on the inverse of the absolute value of the gray-level difference between normalized and reference images on the th pixel in the th spectral band as follows:

By substituting and into Equation (29), the joint fuzzy measure can be determined as follow:

2.2. Datasets

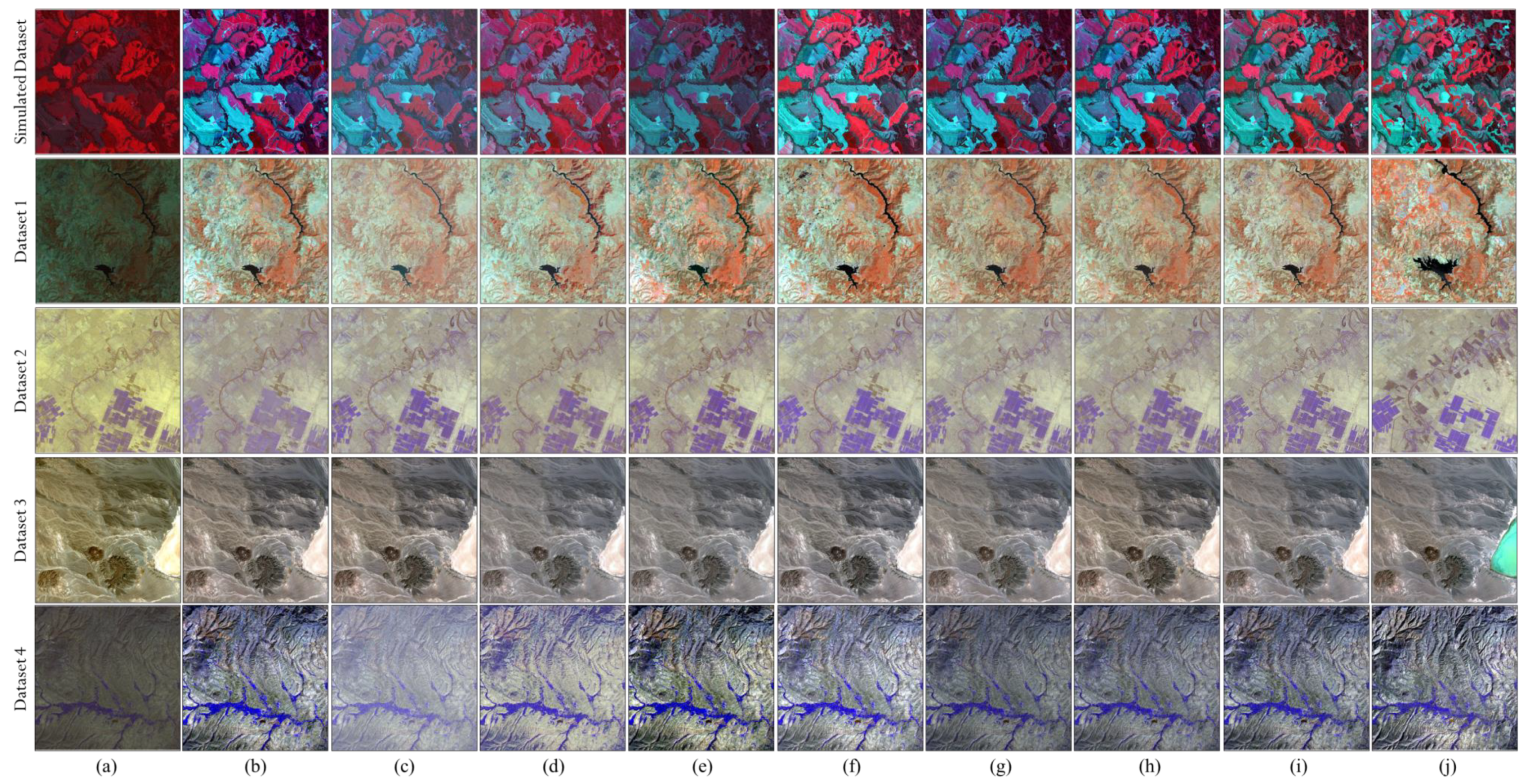

As part of this study, a simulated dataset and four real bi-temporal optical images taken by Landsat-7, Terra, Sentinel-2, and IRS satellites were used to evaluate the proposed SRRN method (see Figure 3). The key reasons for choosing these datasets were their diversity in terms of satellite sensor, geographical coverage, and various atmospheric conditions. The main characteristics of real datasets are summarized in Table 1.

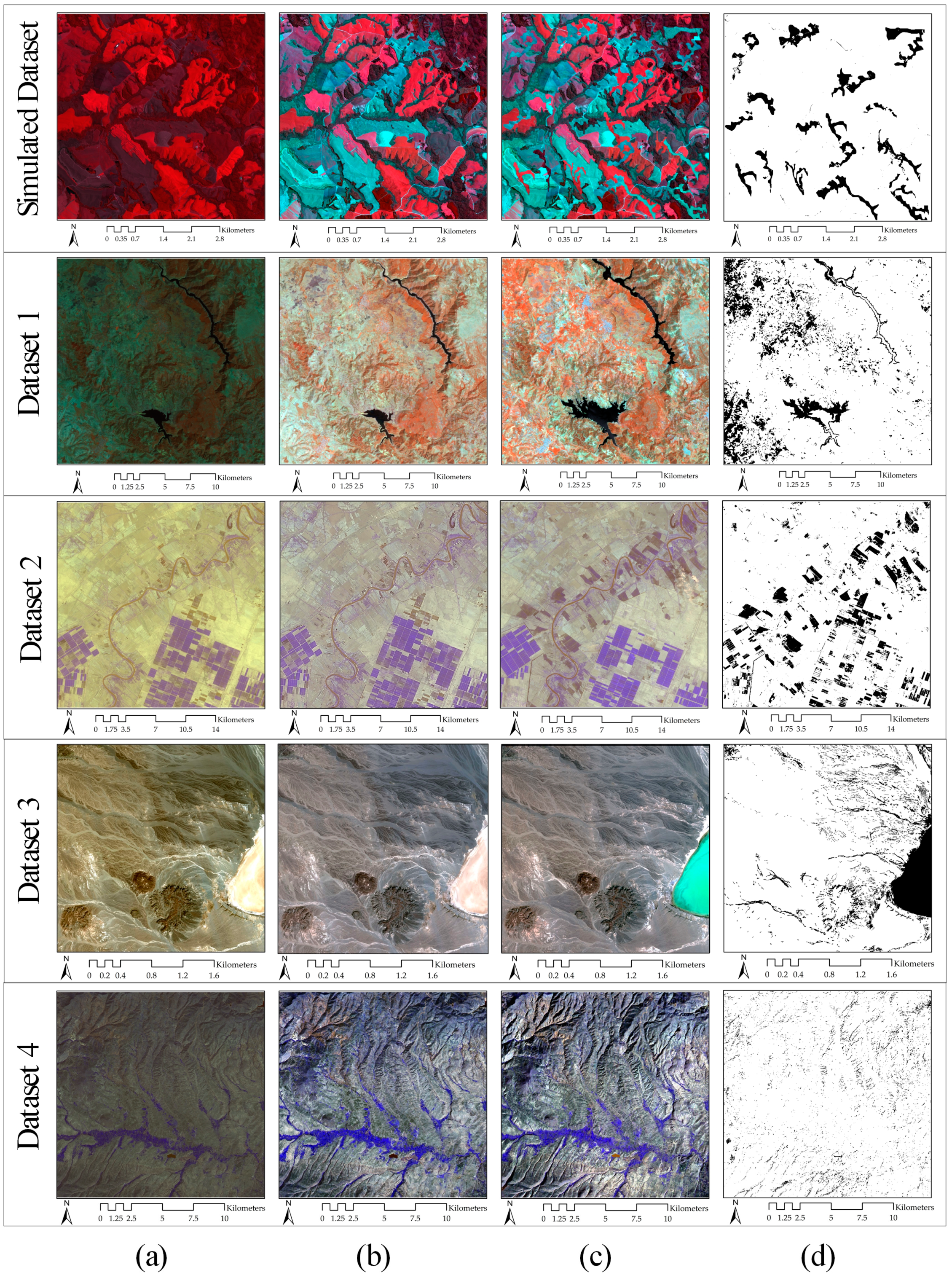

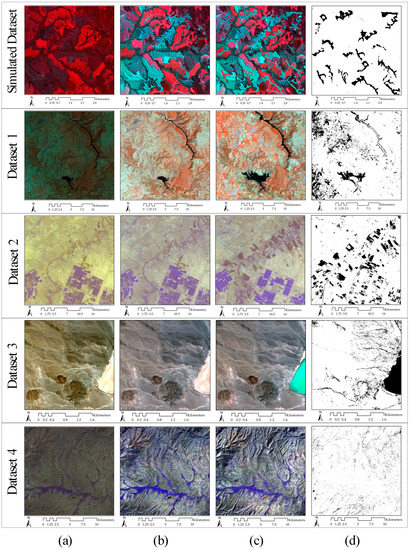

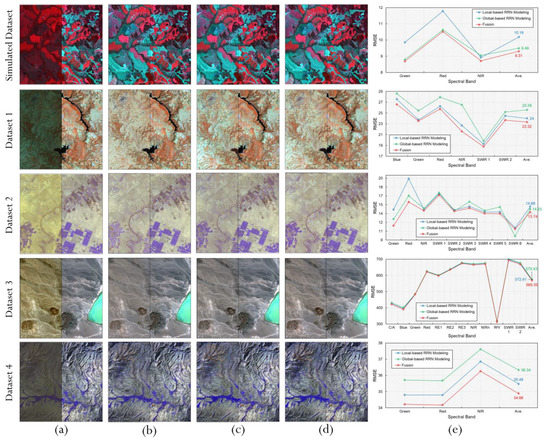

Figure 3.

The color composites of considered datasets, and the corresponding normalized target images and binary change maps. (a) target images, (b) normalized target images generated by the proposed method, (c) reference images, and (d) binary change maps (black = changed, white = unchanged). False-color composites (NIR/red/green) and (green/red/NIR) were respectively used for the simulated dataset and datasets 1, 2, and 4, whereas the natural color composite (Red/Green/Blue) was employed for datasets 3 for visual assessments.

Table 1.

Characteristics of the datasets used in this study.

The simulated dataset was made up of a portion of 1200 × 1200 pixels of an uncalibrated image acquired by the SPOT 7 satellite over the three channels (green, red, NIR) in November 2014 from Curitiba, Brazil, i.e., geographically limited between [24°34′18″ S, 49°43′44″ W] and [24°38′8″ S, 49°39′28″ W]. In this dataset, the uncalibrated image was considered as the target image, whereas its adjusted image (in terms of contrast and brightness) with some simulated changed areas was selected as the reference image (see Figure 3a,c first row). The target image includes the different cropland types with low contrast and brightness. Following RRN, the contrast and illumination of target images must be similar to those of the reference image, and croplands will be discernible similarly to those shown in simulated reference images.

Dataset 1 is composed of the bi-temporal Landsat 7 images over Lago Mulargia Lake in Italy (i.e., geographically limited between [39°45′01″ N, 9°9′30″ E] and [39°35′02″ N, 9°22′30″ E]) spanning a period of about one year (see Figure 3a,c second row). There are many differences among bitemporal data, including seasonal changes in different LULC, especially in vegetation, and varying illumination that could result in false changes in the final results of RS applications such as change detection and time series analysis. The Landsat 7 image acquired in September 2002 was considered the reference image because of its reasonable contrast and illumination. The one acquired in May 2003 was used as the target image due to its non-ideal spectral properties. As a result of RRN, the spectral response of various LULCs in the target image is expected to be similar to their response in the reference image.

Dataset 2 consists of the bi-temporal images acquired by the ASTER sensor over the south of Ahwaz, Iran (i.e., geographically limited between [31°20′04″ N, 48°10′02″ E] and [31°01′01″ N, 48°41′03″ E]) across a period of about two years (see Figure 3a,c third row). There are many croplands, vegetation, soil changes, and illumination differences among this bitemporal data. The ASTER image taken in July 2002 was used as the reference image because of its realistic illumination and spectral contents. The one acquired in July 2003 was considered the target image. After applying RRN, the target image is expected to be harmonized with the reference image in terms of contrast, brightness, and spectral content. It is worth noting that the spatial resolution of 30 m/pixel bands of the ASTER images was also sharpened to 15 m/pixel by the PCA-based PAN-sharpening algorithm [46].

Dataset 3 contains the bitemporal Sentinel 2 images over the Hamoon wetland, Iran-Afghanistan border (i.e., geographically limited between [31°35′38″ N, 60°57′42″ E] and [31°22′38″ N, 61°15′36″ E]) across a period of about two years (see Figure 3a,c forth row). These images were acquired in the same month but under different atmospheric conditions. There are significant water body changes as well as illumination variations caused by slightly different viewing angles among the bitemporal data. The Sentinel 2 image taken in April 2016 was employed as the reference image, and atmosphere, terrain, and cirrus corrections were performed on this image by the Sen2Cor model, which is available in SNAP software. The uncalibrated Sentinel 2 image (at processing Level-1C) acquired in April 2016 was also used as a target image. After applying RRN, the spectral content of the target image is intended to be rectified based on that of the reference image. Moreover, the spatial resolutions of 20 m/pixel and 60 m/pixel bands of the Sentinel 2 dataset were also enhanced to 10 m/pixel by the Sen2Res model [47], which is available in the Sentinel Application Platform (SNAP) open-source software.

Dataset 4 includes the bitemporal IRS images over Varzaqan, Iran (i.e., geographically limited between [38°40′24″ N, 46°29′25″ E] and [38°25′12″ N, 46°47′56″ E]) spanning a period of about ten years (see Figure 3a,c last row). There are many LULC changes as well as mountain offsets caused by different viewing angles among this bitemporal data. The IRS image taken in July 1998 was employed as the reference image due to its better brightness and contrast than the one acquired in May 2007. After applying RRN, the target image was expected to be well-adjusted with the reference image in terms of spectral content and visual point of view.

All spectral bands of image pairs, except the thermal, cirrus, and panchromatic bands, were used in the RRN process. The ground truth of the change maps, which is shown in Figure 3d, was generated by the post-classification comparison and manual analysis of the image pairs. It is worth noting that the unchanged pixels in these maps were considered for RRN validation (experiments of Section 3.1, Section 3.3, and Section 3.4) to have fair results.

2.3. Evaluation Metrics

To quantify the global performance of the proposed SRRN method and generate comparative experiments, the root mean square error (RMSE) was used in this study (Equation (35)).

where is the total number of unchanged pixels in the binary change map. A low RMSE describes the acceptable RRN results.

To locally validate the performance of the proposed SRRN method, the cross-correlation (CC) to average mean absolute percentage error (AMAPE) ratio index (CAMRI) is suggested, which is calculated for each exciting LULC as follows:

where denotes the number of test samples in a specific LULC, and are respectively the average values of these samples in the bth spectral band of the reference and normalized target images. The higher value of the CAMRI results in a better RRN.

3. Experimental Results

3.1. Analysis of the Coarse-to-Fine PIFs Selection

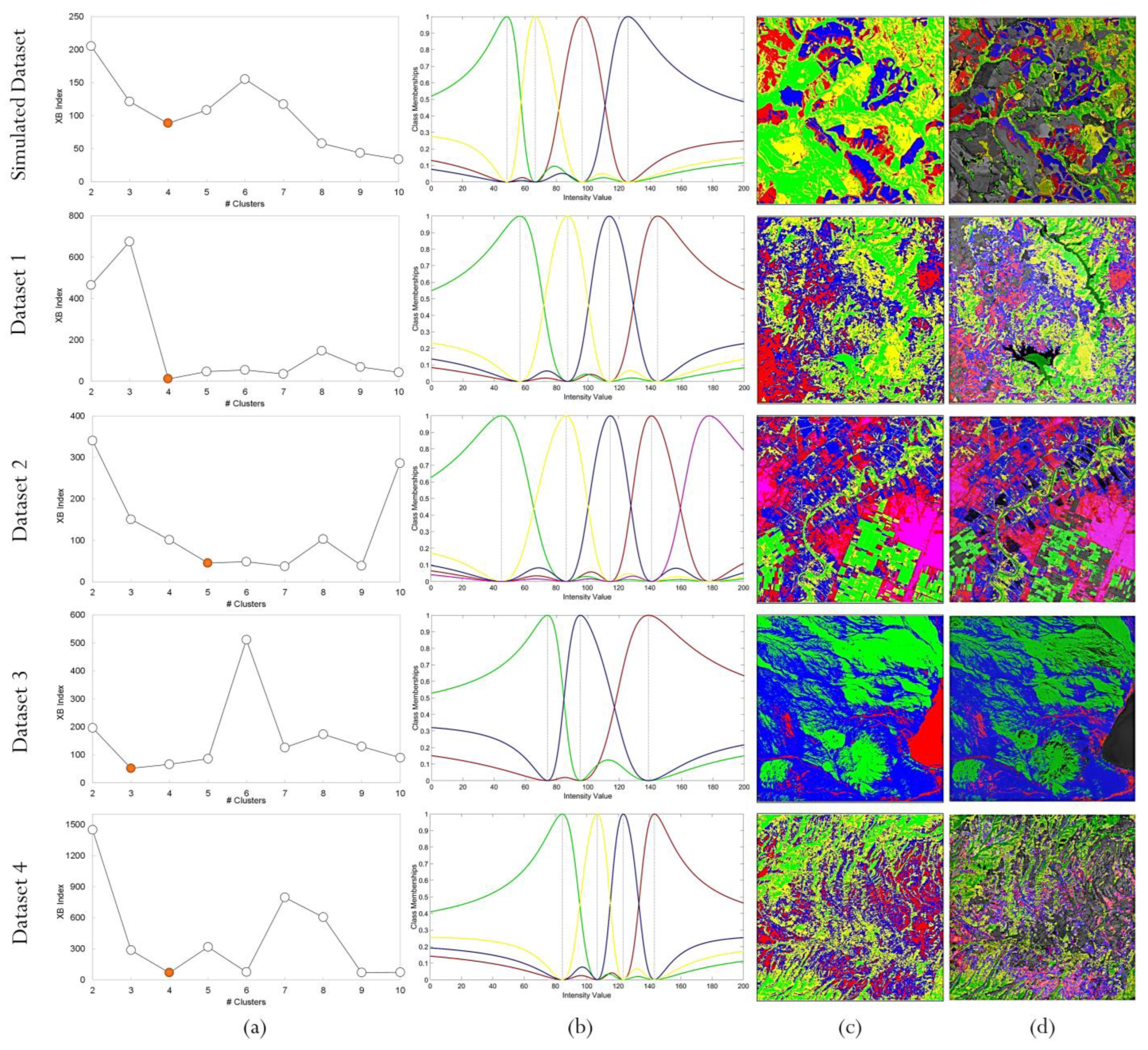

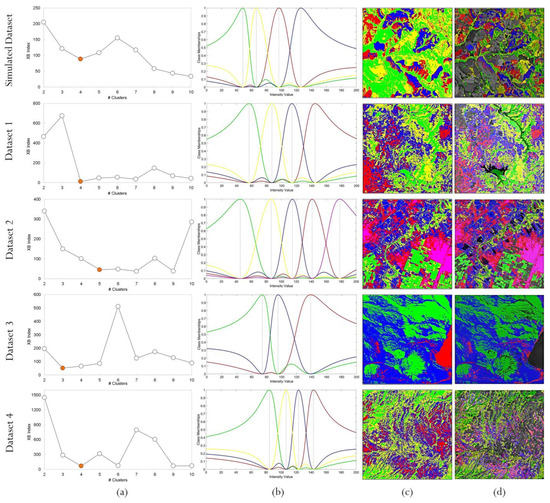

As clustering is an essential component in the proposed coarse-to-fine PIFs selection, we present the results of the HFCM algorithm for all datasets used in Figure 4a–c. In this way, the XB values for the number of clusters from 2 to 10 are shown in Figure 4a during the clustering process for each dataset. For the best cluster number in each dataset, the clustering results and membership maps are also shown in Figure 4b,c, whereas the selected PIFs in each cluster are illustrated in Figure 4d for all datasets.

Figure 4.

(a) The optimal cluster number selection through the HFCM clustering using the XB index for all datasets. (b) Membership function maps, (c) clustering results for all datasets, and (d) clustered PIFs extracted by the proposed coarse-to-fine strategy for all datasets.

As can be seen from the subplots in Figure 4a and according to the flowchart presented in Figure 2, the optimal cluster numbers were self-adaptively selected as 4, 4, 5, 3, and 4, respectively, for the simulated dataset and datasets 1–4. The achieved optimal cluster number for each dataset corresponds to the first minimum value of the XB index, which seemed to be consistent with the real number of clusters in the target image (see Figure 4b,c). As is evident from the clustering results and their membership maps, the decolorized target images were well categorized from dark to bright clusters. As a result, the robust and well-spatially distributed PIFs were collected from these clusters through the proposed hypothesis-test-based method, which was well fitted with the physical properties of ground surface types. For example, the clustered PIFs were extracted from dark and bright croplands of the simulated dataset using the proposed coarse-to-fine strategy (see Figure 4d first row). The PIFs were also collected from different LULC types of dataset 1, such as water bodies and wetlands (dark regions), dense vegetation (gray regions), sparse vegetation (light gray regions), and mountainous, as well as bare soil land cover (bright regions) (see Figure 4d second row). For dataset 2, the clustered PIFs mainly were selected from the river and dark-green farmlands (dark regions), wetlands (dark-gray regions), irrigated croplands (gray regions), harvested cropland areas (light-gray regions), and bare soil land covers (bright regions) (see Figure 4d third row). The clustered PIFs of dataset 3 were composed of the water bodies, sandy and rocky areas, and the dark, gray, and bright ground surfaces, respectively (see Figure 4d fourth row). They were also composed of dark to bright samples collected from the valleys, dense and sparse vegetation, and rocky areas included in dataset 4 (see Figure 4d last row).

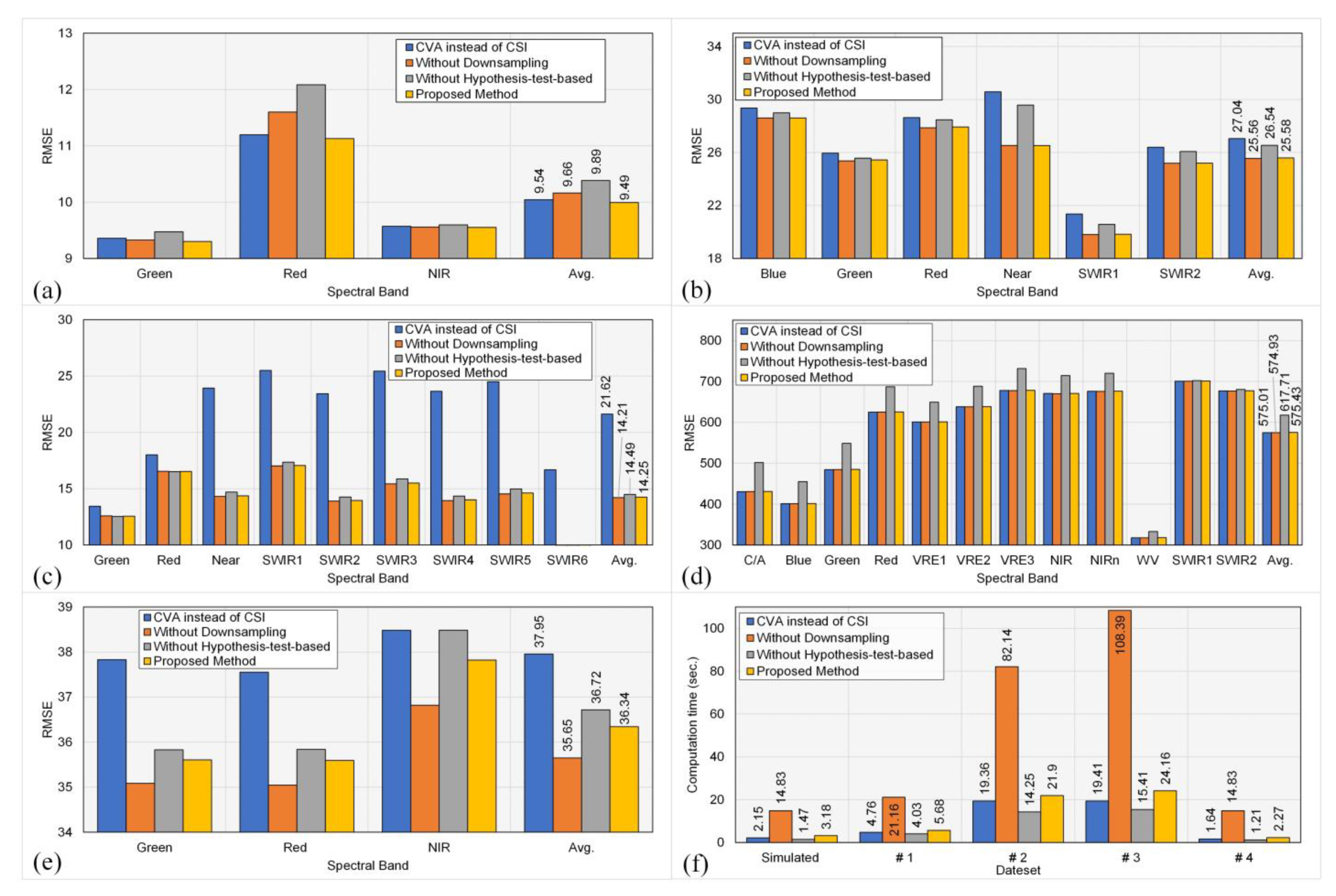

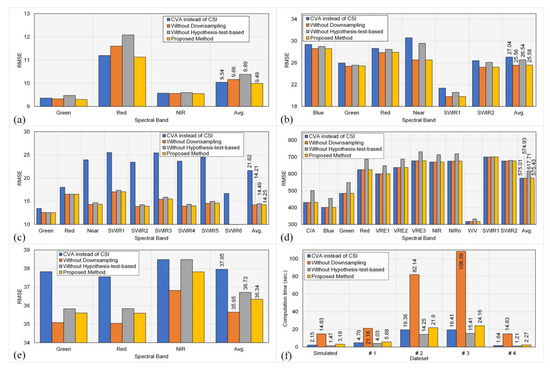

To analyze the efficiency of the proposed coarse-to-fine strategy, its RRN results were compared to those obtained using the same approach without the downscaling process, without the hypothesis-test-based method, and when using CVA instead of CSI in terms of RMSE and computing time (Figure 5). The linear RLR was selected as the core of RRN modeling in this experiment to provide a fair comparison and investigate the quality of clustered PIFs generated by the coarse-to-fine approach under the mentioned conditions. The experiments and the computation time analysis were conducted on all considered datasets by MATLAB (version 2020a) on an Intel CPU Core (TM) i7-3770 GHz with 16 GB of RAM.

Figure 5.

The RRN performance resulted from the proposed coarse-to-fine PIF selection and the same strategy without down-sampling, without hypothesis-test-based, and when CVA was used instead of the CSI for the simulated dataset (a) and datasets 1–4 (b–e) and (f) their processing time.

It is evident from bar charts Figure 5a–e that using CVA instead of CIS index in the proposed coarse-to-fine PIFs selection resulted in a significant reduction in RRN performance for most analyzed datasets. For example, the average RMSE was reduced by 7.37 and 0.05 in the best (simulated dataset) and worst (dataset 2) cases after using CVA instead of the CIS index, whereas it led to an increase in average RMSE by 0.42 only for dataset 3. These results could be due to the high sensitivity of CVA to radiometric differences between image pairs because it directly employs only information of spectral bands to generate a difference/change index. In contrast, the CSI index uses the integration of information obtained from distance, angle, and correlation between spectral bands of image pairs in the comparison process, which was less affected by radiometric distortions. However, the running time was reduced by almost 21% when using CVA in the PIF selection process, which could be due to the simplicity of CVA calculations (see Figure 5f).

After using the hypothesis-based test in the process of PIF selection, the average RMSEs were improved by 4.22% for the simulated dataset and 3.83%, 1.68%, 7.34%, and 1.05%, respectively, for datasets 1–4, indicating its efficacy in the PIF refinement (see Figure 5a–e). As expected, using a refinement algorithm raised the computational cost of the process. Knowing this, using a hypothesis-based-test algorithm increased the execution time of the proposed PIFs selection by almost 40% over most cases, which is worth considering for better results.

The PIFs selection without down-sampling yielded the best performance over most of the datasets, but there was no significant difference in its RRN accuracy and that of the proposed method. For example, when the down-sampling process was discarded from the proposed method, the average RMSE was reduced by only 0.11%. Furthermore, as compared to other approaches, the proposed PIF selection without down-sampling required the greatest processing time in all cases (see Figure 5f). These findings revealed that adopting down-sampling in the PIF selection process not only reduced execution time but also provided satisfactory RRN accuracy for most datasets.

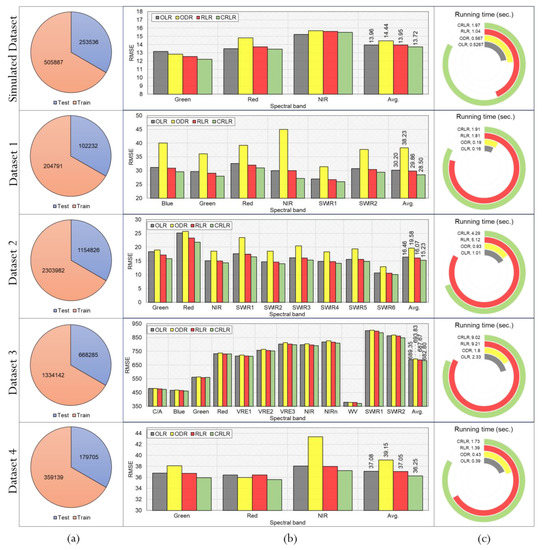

3.2. Comparative Results of the RRN Modelling

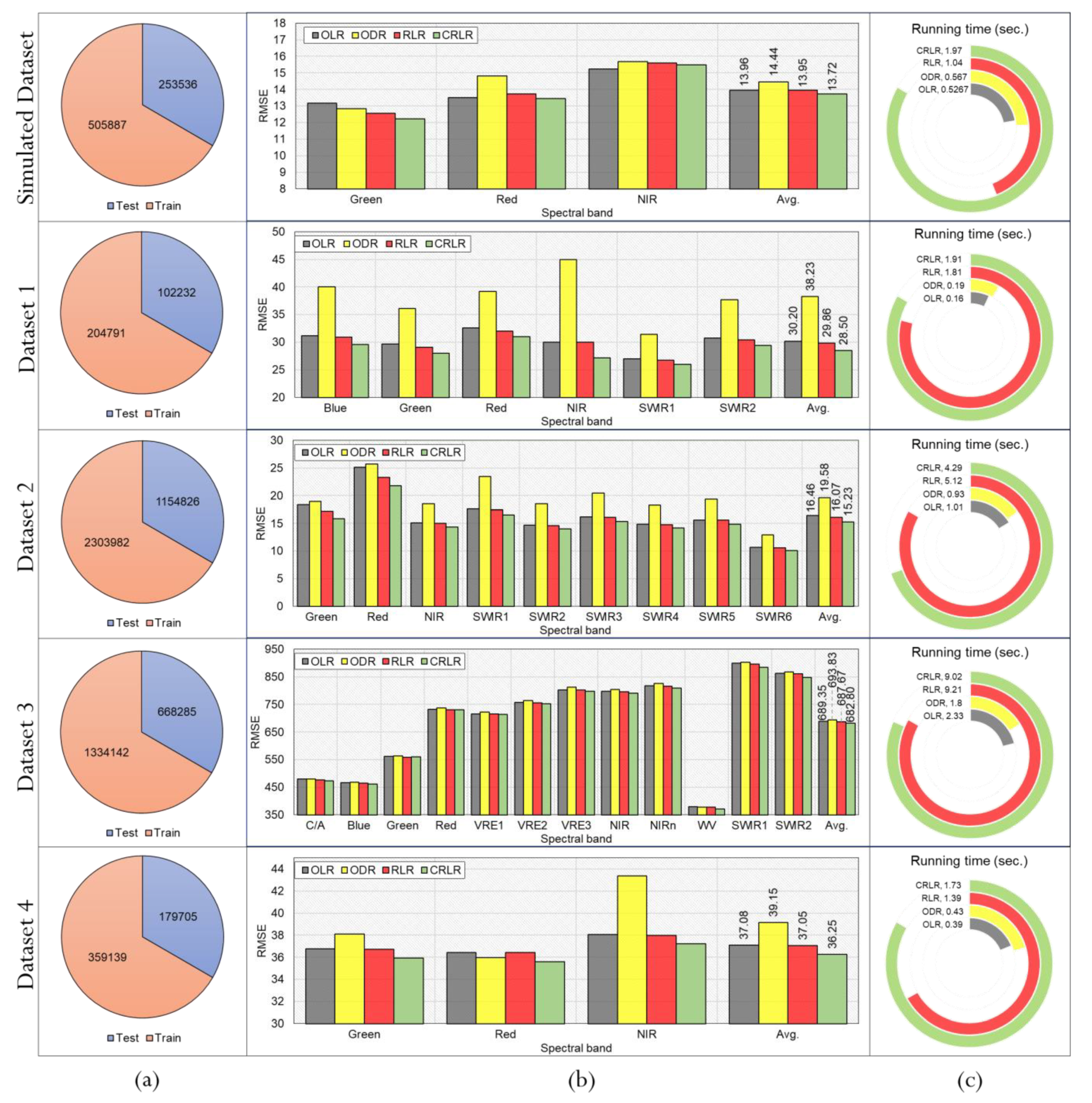

To evaluate the competence of the RLR and CRLR models, they were compared with the two most widely used models in the RRN process, including ordinary least squares (OLS) [48] and orthogonal distance regression (ODR) [49], in terms of RMSE and processing time (see Figure 6). For this experiment, 67% of the PIFs from each cluster were randomly selected for training, and the rest was used to test the models (see Figure 6a). In addition, we calculated the running time only for the modeling step (see Figure 6c).

Figure 6.

The comparative results of the OLR, ODR, RLR, and CRLR models in the proposed RRN process. (a) Number of training and test samples, (b) the RRN performance resulted from considered models, and (c) their processing time for the simulated dataset and datasets 1–4.

As depicted in Figure 6b, the proposed CRLR model obtained the best performance in RRN modeling of all datasets among all considered models, which indicates its robustness and effectiveness in model fitting. For example, the CRLR outperformed the RLR, ORD, and OLR, respectively, by 5.20%, 22.19%, and 7.48% in the best case (dataset 2) and by ~1.5% in the worst cases (dataset 3) in terms of average RMSE. Moreover, RLR had a somewhat better performance than the OLR in that it slightly improved the average RMSE of the OLR by ~1% for all datasets. This can be mainly because RLR, like CRLR, benefits from the bisquare weighting function, resulting in more robust results. Among all considered models, the ORD had poor RRN results for considered datasets where it conducted somewhat large RMSE compared with other models. The main reason may be related to a large number of training samples and their variety of errors, leading to errors in fitting and estimating coefficients. Although the CRLR and RLR had better quantitative results than ORD and OLR, both of them were computationally intensive. This was more obvious for datasets 2 and 3, which have more spectral bands than other datasets (see Figure 6c). In summary, The CRLR and RLR typically require a much larger computational volume than conventional models used in the RRN modeling phase, which can be seen as a weakness of these models.

3.3. Effects of the Fusion-Based RRN Modelling

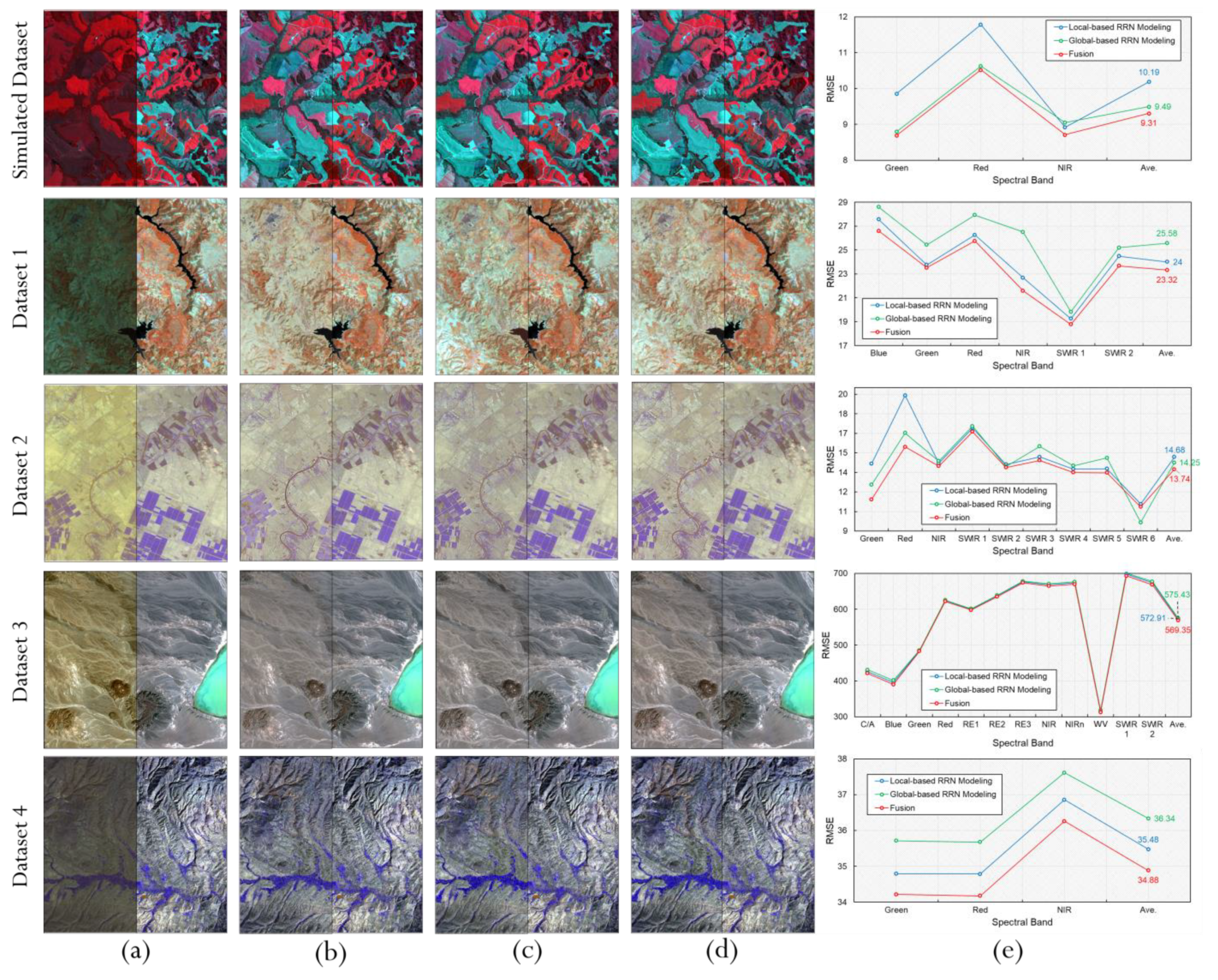

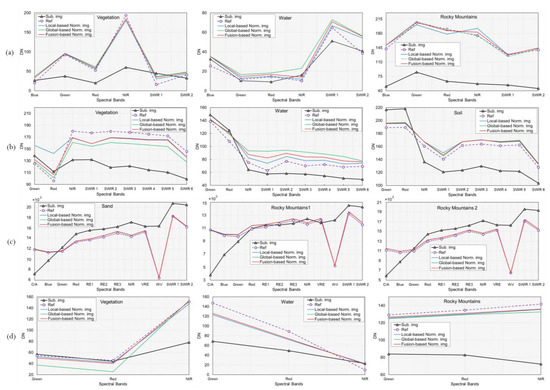

In this section, we evaluated the performance of the proposed fusion-based RRN model at the local and global levels over the analyzed datasets. The experiments were performed with the clustered PIFs selected by the proposed coarse-to-fine process. Figure 7 shows comparative results between the proposed fusion-based RRN model and local and RRN modeling in terms of accuracy and visual depth.

Figure 7.

The comparative results of the proposed SRRN method using the local, global and fusion-based RRN modeling. The mosaic images are composed of (a) subject images (left) and reference images (right), (b) normalized images generated through the local RRN model (left) and reference images (right), (c) normalized images generated through global RRN model (left) and reference images (right), and (d) normalized image generated through fusion-based RRN model (left) and reference images (right) for all analyzed datasets. (e) The performance resulted from local, global, and the proposed fusion strategy models over all spectral bands of analyzed datasets.

As demonstrated in Figure 7a–e, the normalized images produced by the proposed fusion strategy were more visually similar to the corresponding reference image than those generated by local and global models, indicating its effectiveness in the RRN process. Moreover, the proposed fusion-based strategy significantly reduced the amount of bias and discontinuities present in the normalized images of datasets 3 and 4, produced from local and global RRN models. For example, the local RRN modeling generated undesirable normalized images that were not well harmonized with the reference images in datasets 3 and 4, whereas global RRN modeling produced blurred normalized images. Notably, the fused normalized images in these two datasets were in good agreement with the relevant reference images (See Figure 7a–e second and third rows). On other datasets, there were not many visual differences between the normalized images generated by methods compared, where they all visually matched their reference images.

Based on the results illustrated in Figure 7e, the RMSEs were reduced over most of the spectral bands of the datasets after fusing the normalized images generated by the local and global models by the fuzzy Choquet integral. For example, when using the proposed fusion technique in RRN modeling instead of a single local model, like the cluster-wise RLR, the average RMSEs were reduced by 8.63% for the simulated dataset and 2.83%, 6.40%, 0.62%, and 1.69% for datasets 1–4, respectively. Moreover, the average RMSEs were improved by 1.90% for the simulated dataset and 8.84%, 3.57%, 1.06%, and 4.02%, respectively, for datasets 1–4 when the proposed fusion strategy in RRN modeling was used instead of the single global model like the RLR. This could mainly be due to the difference between the reference and normalized target images as the fuzzy Choquet integral memberships during the fusion process. In addition, local RRN modeling provided better results for datasets 1, 3, and 4, while global RRN modeling delivered better results for the simulated dataset and dataset 2. Based on our results, a single RRN model was not sufficient to achieve better qualitative and quantitative results, and a fused approach, such as the one proposed in this study, could lead to better results by optimally combining various normalized images.

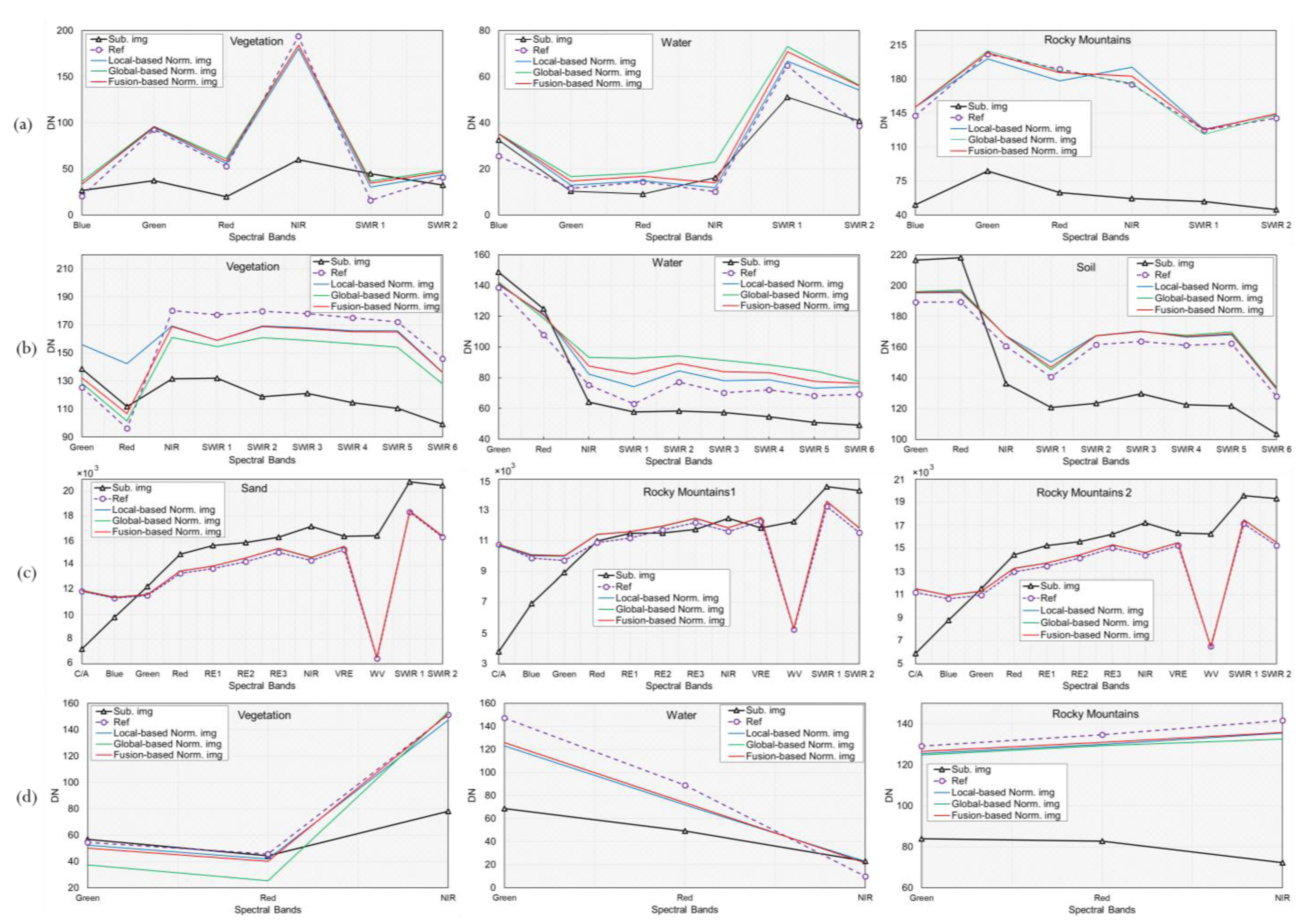

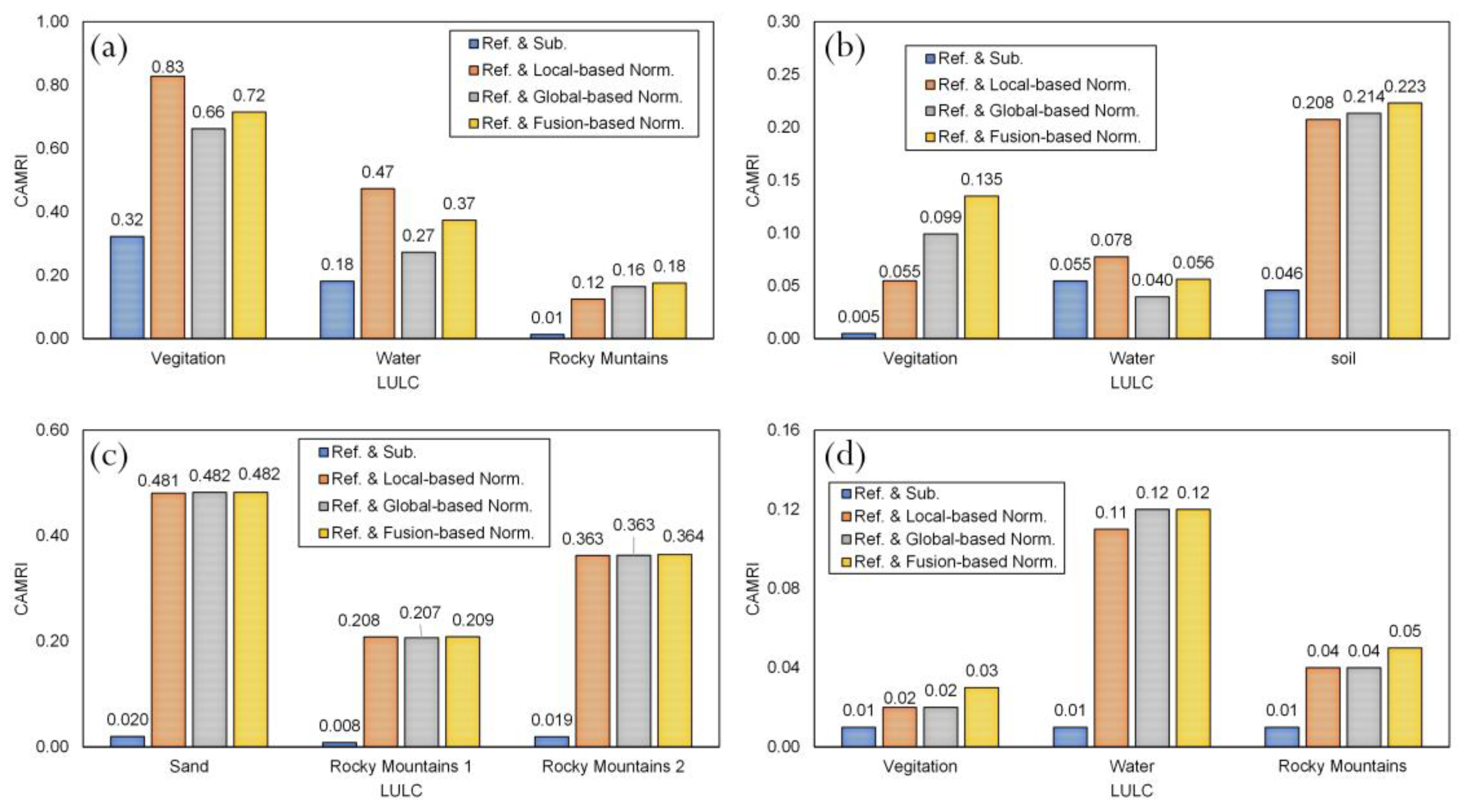

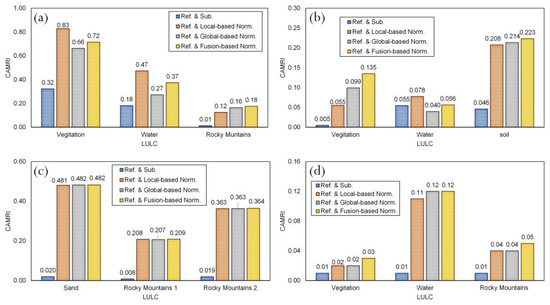

The spectral characteristics of different LULC types in multi-temporal images may be unexpectedly affected due to radiometric distortions. Thus, a local assessment can be a practical approach to assessing the effectiveness of the proposed RRN methods in preserving spectral characteristics of LULC types on images pairs. In this way, the spectral signatures of several LULC types (e.g., vegetation, water, rock mountains) were compared before and after normalization using the proposed SRRN method under the local, global, and fused-based RRN modeling (see Figure 8). The CAMRI values before and after normalization were also compared over the LULC classes (see Figure 9). Multiple polygons from the LULC classes were manually selected for these experiments on the unchanged areas of reference, target, and normalized images.

Figure 8.

Comparison between the spectral signatures of different LULCs before and after the normalization of datasets 1–4 (a–d) with local, global, and fusion RRN modeling.

Figure 9.

Comparison of the CAMRI values between the reference and target images and the reference and normalized target images generated by the proposed SRRN method with local, global, and fusion-based RRN modeling over different LULCs for datasets 1–4 (a–d).

As shown in Figure 8a–d, the spectral signatures of LULC types in the target images were well adjusted after normalization with the proposed SRRN method under different modeling. Moreover, these results were in good agreement with CAMRI values reported for datasets before and after RRN (see Figure 9a–d). In more detail, the spectral signatures of vegetation and water bodies in the normalized image generated by the local approach were slightly similar to those obtained in the reference image for dataset 1. The fusion-based method also had the best performance with a CMRI value of ~0.18 in rocky mountains areas of dataset 1. Similarly, the proposed fused approach provided spectrally better RRN results in the vegetation and soil LULC types, with the highest CMRI values. Nevertheless, the local model yielded better results for water bodies in dataset 2. The local, global, and fusion-based RRN modeling had the same performance for different LULCs included in dataset 3, as shown in Figure 8c and Figure 9c. Moreover, the local and global models also had nearly the same RRN performance for LULCs of dataset 4. In contrast, the proposed fusion-based model produced better results in spectral similarity and CAMRI values (see Figure 8d and Figure 9d).

Although the SRRN method under the mentioned models had locally acceptable results, small shifts were observed between the spectral signatures of some of the LULCs in the reference and normalized images. For example, such shifts were mainly observed in water bodies due to existing particles floating in these areas, such as phytoplankton, pollution, and sediments which affect the apparent color of the water between acquisition times of the reference-target image pair. Moreover, the differences between the spectral signatures of vegetation in the reference and normalized images were mostly observed for bi-temporal images acquired in different seasons (e.g., datasets 1, 2, and 4). This could be mainly due to differences in vegetation phenological properties.

3.4. Comparative Results of the SRRN Methods

To evaluate the efficiency of the proposed method, it was compared with our implementations of IRMAD [15], multi-Otsu-based [29], MPIF [22], ASCRRF-based [27], GMM-EE [24], HOG-based [31], and FLSM-based [3] in terms of quantitative and qualitative results, as well as operation time (see Table 2, Table 3, Table 4, Table 5 and Table 6 and Figure 10). These SRRN algorithms were set up as described in their respective works of literature. This study did not report the componential time of the HOG-based [31] SRRN technique since it is a semi-supervised SRRN approach that requires training data for its classification process.

Table 2.

Comparison between the accuracy and componential time of different SRRN methods and the proposed method for the simulated dataset.

Table 3.

Comparison between the accuracy and componential time of different SRRN methods and the proposed method for dataset 1.

Table 4.

Comparison between the accuracy and componential time of different SRRN methods and the proposed method for dataset 2.

Table 5.

Comparison between the accuracy and componential time of different SRRN methods and the proposed method for dataset 3.

Table 6.

Comparison between the accuracy and componential time of different SRRN methods and the proposed methods for simulated dataset 4.

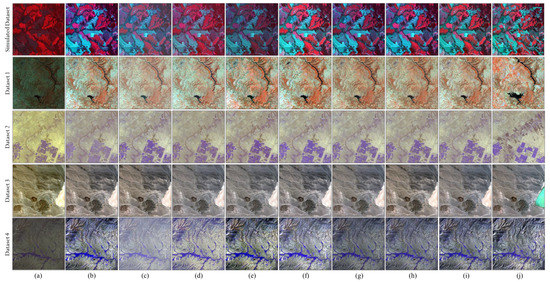

Figure 10.

Visualizations of the RRN results over the simulated dataset and real datasets 1 to 4, obtained by the proposed method and other well-known SRRN models. (a) target images; and normalized images generated by (b) IRMAD [15], (c) multi-Otsu-based [29], (d) MPIF [22], (e) ASCR-RF-based [27], (f) GMM-EE [24], (g) HOG-based [31], (h) FLSM-based [3], (i) the proposed method, and (j) the reference images.

As can be seen from Table 2, Table 3, Table 4, Table 5 and Table 6, RMSE values were significantly reduced after applying all considered SRRN methods over all datasets. Among these models, the proposed method obtained the highest accuracy in minimizing radiometric errors from the target images of the analyzed datasets. Specifically, the average raw RMSEs decreased significantly from 69.13 to 9.31 (~87%), from 88.82 to 23.32 (~74%), from 36.12 to 13.74 (~62%), from 2762.72 to 569.35 (~79%), and from 63.77 to 34.88 (~45%), respectively for the simulated dataset, and datasets 1–4. Compared with the implemented methods, the proposed methods also improved the average RMSEs by ~27% and ~1% in best and worst cases, i.e., the simulated dataset and dataset 3, respectively. This was mainly because the proposed method took advantage of the efficient coarse-to-fine PIFs selection and fusion-based RRN modeling in its process.

The multi-Otsu-based [29] had the worst RRN performance in most cases, except for dataset 3, in which the HOG-based [31] achieved a lower average RMSE compared with other well-known methods. This could be mainly because this method uses the Otsu thresholding as segmentation in its process, which is highly sensitive to the non-uniform illumination and imperfectly segment images with such distortions. The ASCRRF-based [27] also had an almost poor RRN performance in most cases because it uses the RF regression in RRN modeling, which is highly prone to overfitting and needs parameter tuning for each dataset. Although the MPIF [22] had a moderate RRN performance, it could not perfectly handle radiometric falsifications between image pairs in most cases, as shown in Table 2, Table 3, Table 4 and Table 5. This may be related to using several threshold-based sampling rules in PIFs selection that do not take LULC types into account, resulting in insufficient PIFs with an unsuitable spatial distribution.

The IRMAD [15], FLSM-based [3], and GMM-EE [24] methods were high-end techniques among the considered methods because they provided comparatively reasonable results for most of the analyzed datasets. Of course, the IRMAD [15] had an incredible performance of the simulated dataset in RRN compared to the FLSM-based [3] and GMM-EE [24] methods. The reason for this could be found in the nature of the IRMAD method, which uses only statistical properties for detecting PIFs. In general, the IRMAD [15], FLSM-based [3], and GMM-EE [24] methods do not consider the LULC types in the process of PIFs selection and optimization, resulting in poor RRN results compared with the proposed method. On the other hand, the HOG-based method [31] had an unstable RRN performance among the evaluated methods because it yielded reasonable results for the simulated dataset and datasets 2 and 4, while it had poor performance in RRN of datasets 1 and 3. This instability was attributed mainly because this method depends on the value of the correlation coefficient’s threshold and the accuracy of the classification, which may vary for different datasets.

As shown in Figure 10a–j, after normalization with the considered SRRN methods, the target images were significantly close to the relevant reference images from a visual point of view. Moreover, the visualizations of RRN results were in line with the quantitative results reported in Table 2, Table 3, Table 4 and Table 5. In more detail, the normalized images generated by the proposed method were more harmonized with the relevant reference images than other methods, indicating the high efficacy of the proposed method in reducing radiometric distortions from the target images (see Figure 10i). The HOG-based [31], FLSM-based [3], and GMM-EE [24] methods also generated reasonable normalized images, which were visually well-matched with the corresponding reference images in most cases (see Figure 10f–h). However, they produced contrast-distorted normalized images for the simulated dataset, as shown in the first row of Figure 10f–h. The IRMAD [15] also returned dependable normalized images with more contrast and saturation than the reference images in most cases. At the same time, it generated a low-brightness normalized image for dataset 2 (see Figure 10b).

The multi-Otsu-based [29] generated blurred normalized images for the simulated dataset and datasets 1 and 4, while it induced more appropriate normalized images for datasets 2 and 3 (see Figure 10c). This could be mainly due to the fluctuated performance of the Otsu algorithm in dealing with non-uniform illumination (e.g., simulated dataset and datasets 1 and 4). The MPIF [22] visually yielded unsatisfactory results, especially for the simulated dataset and dataset 4, where it generated the normalized images with artifact colors (see Figure 10d). This could be mainly due to the sensitivity of this method to the numerical thresholds of its sampling rules and the lack of considering different LULC types in its process. The normalized images of the ASCRRF-based [27] were composed of artifact colors in the simulated dataset and datasets 1 and 4. This is mainly because this method was over-fitted to estimate the relationship between their image pairs (see Figure 10e).

In terms of computation time, the proposed method was the most efficient method for the simulated dataset and dataset 4, while the multi-Otsu-based [29] was inexpensive on other analyzed datasets. A major reason for the efficiency of the proposed method could be attributed to the coarse-to-fine PIFs selection, which substantially reduced the execution time. In fact, a slight increase in time cost was observed when using the fused-based model in the proposed method, but did not lead to a heavy computational load (see Table 2, Table 3, Table 4, Table 5 and Figure 6f). The IRMAD [15] and MPIF [22] were also among the computationally efficient methods in RRN of the analyzed datasets, while the ASCRRF-based [27] and GMM-EE [24] were mid-range methods in terms of processing time. In all cases, the FLSM-based [3] method was more expensive, such that in the best and worst cases, its execution times were approximately twice and three times longer than the proposed method. This was mostly because the FLSM-based [3] included several image processing algorithms that demanded massive storage and componential time.

4. Conclusions

This study introduced a new SRRN method to provide robust and well-distributed PIFs and a reasonable normalized image. To be specific, a new coarse-to-fine strategy was embedded in the proposed method to efficiently select robust PIFs from different LULC types. A fusion-based model was also developed based on the fuzzy Choquet integral that effectively integrated two normalized images generated by the global (i.e., RLR model) and local (i.e., proposed CRLR model) models. The experimental results were evaluated on a simulated dataset and four real datasets, each of which was composed of a bi-temporal image pair acquired by different RS systems.

The experimental results demonstrated that the proposed coarse-to-fine approach successfully reduced uncertainties from PIFs and efficiently selected them from dark to bright regions, which aligned with the nature of LULC types. Moreover, the proposed fusion-based RRN modeling led to more accurate local and global RRN results than the RLR and CRLR models. In addition, the spectral signatures of different LULC types in the target images were closer to those of the reference image after normalization with the proposed SRRN method, indicating its high potential in preserving spectral characteristics of various LULC classes. The proposed method also outperformed the other well-known SRRN methods regarding accuracy, computation time, and visual point of view, indicating its high potential in reducing the radiometric differences between image pairs.

Although the current work presented an efficient coarse-to-fine strategy for PIF selection, it employed RLR and its cluster-wise variant in the core of the RRN modeling, which is computationally expensive. Such an issue reduces the operationality of the proposed SERN method in dealing with the big-size dataset. Therefore, it is recommended to use much more efficient and robust models in the RRN modeling process. Moreover, the proposed method was developed by assuming a linear relationship between image pairs and their LULC types. However, this relationship can be nonlinear, especially in image pairs with significant illumination and LULC changes. Therefore, the current fusion scheme could also be further improved by using multiple normalized images generated by more advanced nonlinear mapping functions.

Author Contributions

Conceptualization, A.M. (Armin Moghimi), T.C. and M.A.; methodology, A.M. (Armin Moghimi), A.M. (Ali Mohammadzadeh) and T.C.; investigation, A.M. (Armin Moghimi) and M.A.; writing—original draft preparation, A.M. (Armin Moghimi), A.M. (Ali Mohammadzadeh) and M.A.; writing—review and editing, all authors; visualization, A.M. (Armin Moghimi); supervision, A.M. (Ali Mohammadzadeh), T.C., M.A. and B.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These datasets can be found here: (https://scihub.copernicus.eu/ (accessed on 20 April 2019)) (https://earthexplorer.usgs.gov/ (accessed on 26 September 2017)), and (https://www.intelligence-airbusds.com/en/9317-sample-imagery-detail?product=35822 (accessed on 26 January 2022)). The dataset presented in this study can be available on request from the author.

Acknowledgments

The authors would like to thank the National Aeronautics and Space Administration (NASA), United States Geological Survey (USGS), European Space Agency (ESA), and Airbus team for freely providing multispectral remote sensing images.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hall, F.G.; Strebel, D.E.; Nickeson, J.E.; Goetz, S.J. Radiometric Rectification: Toward a Common Radiometric Response among Multidate, Multisensor Images. Remote Sens. Environ. 1991, 35, 11–27. [Google Scholar] [CrossRef]

- Elvidge, C.D.; Ding, Y.; Weerackoon, R.D.; Lunetta, R.S. Relative Radiometric Normalization of Landsat Multispectral Scanner (MSS) Data Using an Automatic Scattergram-Controlled Regression. Photogramm. Eng. Remote Sens. 1995, 61, 1255–1260. [Google Scholar]

- Moghimi, A.; Mohammadzadeh, A.; Celik, T.; Amani, M. A Novel Radiometric Control Set Sample Selection Strategy for Relative Radiometric Normalization of Multitemporal Satellite Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2503–2519. [Google Scholar] [CrossRef]

- Moghimi, A.; Celik, T.; Mohammadzadeh, A.; Kusetogullari, H. Comparison of Keypoint Detectors and Descriptors for Relative Radiometric Normalization of Bitemporal Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4063–4073. [Google Scholar] [CrossRef]

- Yuan, D.; Elvidge, C.D. Comparison of Relative Radiometric Normalization Techniques. ISPRS J. Photogramm. Remote Sens. 1996, 51, 117–126. [Google Scholar] [CrossRef]

- Mohsenifar, A.; Mohammadzadeh, A.; Moghimi, A.; Salehi, B. A Novel Unsupervised Forest Change Detection Method Based on the Integration of a Multiresolution Singular Value Decomposition Fusion and an Edge-Aware Markov Random Field Algorithm. Int. J. Remote Sens. 2021, 42, 9376–9404. [Google Scholar] [CrossRef]

- Bazi, Y.; Melgani, F.; Al-Sharari, H.D. Unsupervised Change Detection in Multispectral Remotely Sensed Imagery with Level Set Methods. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3178–3187. [Google Scholar] [CrossRef]

- Wei, J.; Tang, W.; He, C. Enblending Mosaicked Remote Sensing Images with Spatiotemporal Fusion of Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5891–5902. [Google Scholar] [CrossRef]

- Yang, X.; Lo, C.P. Relative Radiometric Normalization Performance for Change Detection from Multi-Date Satellite Images. Photogramm. Eng. Remote Sens. 2000, 66, 967–980. [Google Scholar]

- Sadeghi, V.; Ebadi, H.; Ahmadi, F.F. A New Model for Automatic Normalization of Multitemporal Satellite Images Using Artificial Neural Network and Mathematical Methods. Appl. Math. Model. 2013, 37, 6437–6445. [Google Scholar] [CrossRef]

- Moghimi, A.; Sarmadian, A.; Mohammadzadeh, A.; Celik, T.; Amani, M.; Kusetogullari, H. Distortion Robust Relative Radiometric Normalization of Multitemporal and Multisensor Remote Sensing Images Using Image Features. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–20. [Google Scholar] [CrossRef]

- Du, Y.; Teillet, P.M.; Cihlar, J. Radiometric Normalization of Multitemporal High-Resolution Satellite Images with Quality Control for Land Cover Change Detection. Remote Sens. Environ. 2002, 82, 123–134. [Google Scholar] [CrossRef]

- Canty, M.J.; Nielsen, A.A.; Schmidt, M. Automatic Radiometric Normalization of Multitemporal Satellite Imagery. Remote Sens. Environ. 2004, 91, 441–451. [Google Scholar] [CrossRef]

- Nielsen, A.A.; Conradsen, K.; Simpson, J.J. Multivariate Alteration Detection (MAD) and MAF Postprocessing in Multispectral, Bitemporal Image Data: New Approaches to Change Detection Studies. Remote Sens. Environ. 1998, 64, 1–19. [Google Scholar] [CrossRef]

- Canty, M.J.; Nielsen, A.A. Automatic Radiometric Normalization of Multitemporal Satellite Imagery with the Iteratively Re-Weighted MAD Transformation. Remote Sens. Environ. 2008, 112, 1025–1036. [Google Scholar] [CrossRef]

- Nielsen, A.A. The Regularized Iteratively Reweighted MAD Method for Change Detection in Multi- and Hyperspectral Data. IEEE Trans. Image Process. 2007, 16, 463–478. [Google Scholar] [CrossRef]

- Ma, L.; Zhenhong, J.; Yang, J.; Kasabov, N. Multi-Spectral Image Change Detection Based on Single-Band Iterative Weighting and Fuzzy C-Means Clustering. Eur. J. Remote Sens. 2020, 53, 1–13. [Google Scholar] [CrossRef]

- Xu, Q.; Liu, Z.; Li, F.; Yang, M.; Ren, H. The Regularized Iteratively Reweighted Object-Based MAD Method for Change Detection in Bi-Temporal, Multispectral Data. In Proceedings of the Hyperspectral Remote Sensing Applications and Environmental Monitoring and Safety Testing Technology, Beijing, China, 25 October 2016; Volume 10156. [Google Scholar] [CrossRef]

- Bai, Y.; Tang, P.; Hu, C. KCCA Transformation-Based Radiometric Normalization of Multi-Temporal Satellite Images. Remote Sens. 2018, 10, 432. [Google Scholar] [CrossRef]

- Liu, K.; Ke, T.; Tao, P.; He, J.; Xi, K.; Yang, K. Robust Radiometric Normalization of Multitemporal Satellite Images via Block Adjustment without Master Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6029–6043. [Google Scholar] [CrossRef]

- Byun, Y.; Han, D. Relative Radiometric Normalization of Bitemporal Very High-Resolution Satellite Images for Flood Change Detection. J. Appl. Remote Sens. 2018, 12, 26021. [Google Scholar] [CrossRef]

- Zhou, H.; Liu, S.; He, J.; Wen, Q.; Song, L.; Ma, Y. A New Model for the Automatic Relative Radiometric Normalization of Multiple Images with Pseudo-Invariant Features. Int. J. Remote Sens. 2016, 37, 4554–4573. [Google Scholar] [CrossRef]

- Xu, H.; Wei, Y.; Li, X.; Zhao, Y.; Cheng, Q. A Novel Automatic Method on Pseudo-Invariant Features Extraction for Enhancing the Relative Radiometric Normalization of High-Resolution Images. Int. J. Remote Sens. 2021, 42, 6153–6183. [Google Scholar] [CrossRef]

- Ghanbari, H.; Homayouni, S.; Ghamisi, P.; Safari, A. Radiometric Normalization of Multitemporal and Multisensor Remote Sensing Images Based on a Gaussian Mixture Model and Error Ellipse. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4526–4533. [Google Scholar] [CrossRef]

- Yan, L.; Yang, J.; Zhang, Y.; Zhao, A.; Li, X. Radiometric Normalization for Cross-Sensor Optical Gaofen Images with Change Detection and Chi-Square Test. Remote Sens. 2021, 13, 3125. [Google Scholar] [CrossRef]

- Seo, D.K.; Kim, Y.H.; Eo, Y.D.; Park, W.Y.; Park, H.C. Generation of Radiometric, Phenological Normalized Image Based on Random Forest Regression for Change Detection. Remote Sens. 2017, 9, 1163. [Google Scholar] [CrossRef]

- Seo, D.K.; Eo, Y.D. Multilayer Perceptron-Based Phenological and Radiometric Normalization for High-Resolution Satellite Imagery. Appl. Sci. 2019, 9, 4543. [Google Scholar] [CrossRef]

- Denaro, L.G.; Lin, C.H. Hybrid Canonical Correlation Analysis and Regression for Radiometric Normalization of Cross-Sensor Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 976–986. [Google Scholar] [CrossRef]

- Sadeghi, V.; Ahmadi, F.F.; Ebadi, H. A New Automatic Regression-Based Approach for Relative Radiometric Normalization of Multitemporal Satellite Imagery. Comput. Appl. Math. 2017, 36, 825–842. [Google Scholar] [CrossRef]

- Ya’allah, S.M.; Saradjian, M.R. Automatic Normalization of Satellite Images Using Unchanged Pixels within Urban Areas. Inf. Fusion 2005, 6, 235–241. [Google Scholar] [CrossRef]

- He, H.; Liu, X.; Shen, Y. Relative Radiometric Correction of High-Resolution Remote Sensing Images Based on Feature Category. Cluster Comput. 2019, 22, 7933–7941. [Google Scholar] [CrossRef]

- Lo, C.-C.; Wang, S.-J. Video Segmentation Using a Histogram-Based Fuzzy c-Means Clustering Algorithm. Comput. Stand. Interfaces 2001, 23, 429–438. [Google Scholar] [CrossRef]

- Murofushi, T.; Sugeno, M. An Interpretation of Fuzzy Measures and the Choquet Integral as an Integral with Respect to a Fuzzy Measure. Fuzzy sets Syst. 1989, 29, 201–227. [Google Scholar] [CrossRef]

- Du, X.; Zare, A. Multiple Instance Choquet Integral Classifier Fusion and Regression for Remote Sensing Applications. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2741–2753. [Google Scholar] [CrossRef]

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The Spectral Image Processing System (SIPS)—Interactive Visualization and Analysis of Imaging Spectrometer Data. Remote Sens. Environ. 1993, 44, 145–163. [Google Scholar] [CrossRef]

- Ghamisi, P.; Couceiro, M.S.; Martins, F.M.L.; Benediktsson, J.A. Multilevel Image Segmentation Based on Fractional-Order Darwinian Particle Swarm Optimization. IEEE Trans. Geosci. Remote Sens. 2013, 52, 2382–2394. [Google Scholar] [CrossRef]

- Teng, S.P.; Chen, Y.K.; Cheng, K.S.; Lo, H.C. Hypothesis-Test-Based Landcover Change Detection Using Multi-Temporal Satellite Images–A Comparative Study. Adv. Space Res. 2008, 41, 1744–1754. [Google Scholar] [CrossRef]

- Nafchi, H.Z.; Shahkolaei, A.; Hedjam, R.; Cheriet, M. CorrC2G: Color to Gray Conversion by Correlation. IEEE Signal Process. Lett. 2017, 24, 1651–1655. [Google Scholar] [CrossRef]

- Ghosh, A.; Mishra, N.S.; Ghosh, S. Fuzzy Clustering Algorithms for Unsupervised Change Detection in Remote Sensing Images. Inf. Sci. 2011, 181, 699–715. [Google Scholar] [CrossRef]

- Sadeghi, V.; Etemadfard, H. Optimal Cluster Number Determination of FCM for Unsupervised Change Detection in Remote Sensing Images. Earth Sci. Inform. 2022, 1–13. [Google Scholar] [CrossRef]

- Xie, X.L.; Beni, G. A Validity Measure for Fuzzy Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 841–847. [Google Scholar] [CrossRef]

- Holland, P.W.; Welsch, R.E. Robust Regression Using Iteratively Reweighted Least-Squares. Commun. Stat. Methods 1977, 6, 813–827. [Google Scholar] [CrossRef]

- Gross, A.M. Confidence Intervals for Bisquare Regression Estimates. J. Am. Stat. Assoc. 1977, 72, 341–354. [Google Scholar] [CrossRef]

- Abdullah, L.; Awang, N.A.; Othman, M. Application of Choquet Integral-Fuzzy Measures for Aggregating Customers’ Satisfaction. Adv. Fuzzy Syst. 2021, 2021, 2319004. [Google Scholar] [CrossRef]

- Wei, P.; Ball, J.E.; Anderson, D.T. Fusion of an Ensemble of Augmented Image Detectors for Robust Object Detection. Sensors 2018, 18, 894. [Google Scholar] [CrossRef] [PubMed]

- Shah, V.P.; Younan, N.H.; King, R.L. An Efficient Pan-Sharpening Method via a Combined Adaptive PCA Approach and Contourlets. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1323–1335. [Google Scholar] [CrossRef]

- Brodu, N. Super-Resolving Multiresolution Images with Band-Independent Geometry of Multispectral Pixels. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4610–4617. [Google Scholar] [CrossRef]

- Goldberger, A.S. Classical Linear Regression. Econom. Theory 1964, 156–212. [Google Scholar]

- Boggs, P.T.; Rogers, J.E. Orthogonal Distance Regression. Contemp. Math. 1990, 112, 183–194. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).