Automated Delineation of Microstands in Hemiboreal Mixed Forests Using Stereo GeoEye-1 Data

Abstract

1. Introduction

- Development of a modified JSEG segmentation [30] workflow that enables fusion of the CHM and multispectral data;

- Application of the JSEG workflow and of the freeware Generic Region Merging (GRM) solution provided by the Orfeo Toolbox to four-band GeoEye-1 images and to the CHM derived from the same GeoEye-1 stereo scene. A CHM produced in this way is temporally consistent with the spectral bands;

- Extensive accuracy assessment for hemiboreal forests in Latvia using (1) unsupervised and forest-specific metrics; (2) supervised, direct accuracy assessment against 2700 microstands delineated by an independent image analyst; and (3) system-level assessment based on stand volume estimation. All metrics were also calculated for regular grid cells to quantify the benefit of segmentation.

2. Materials and Methods

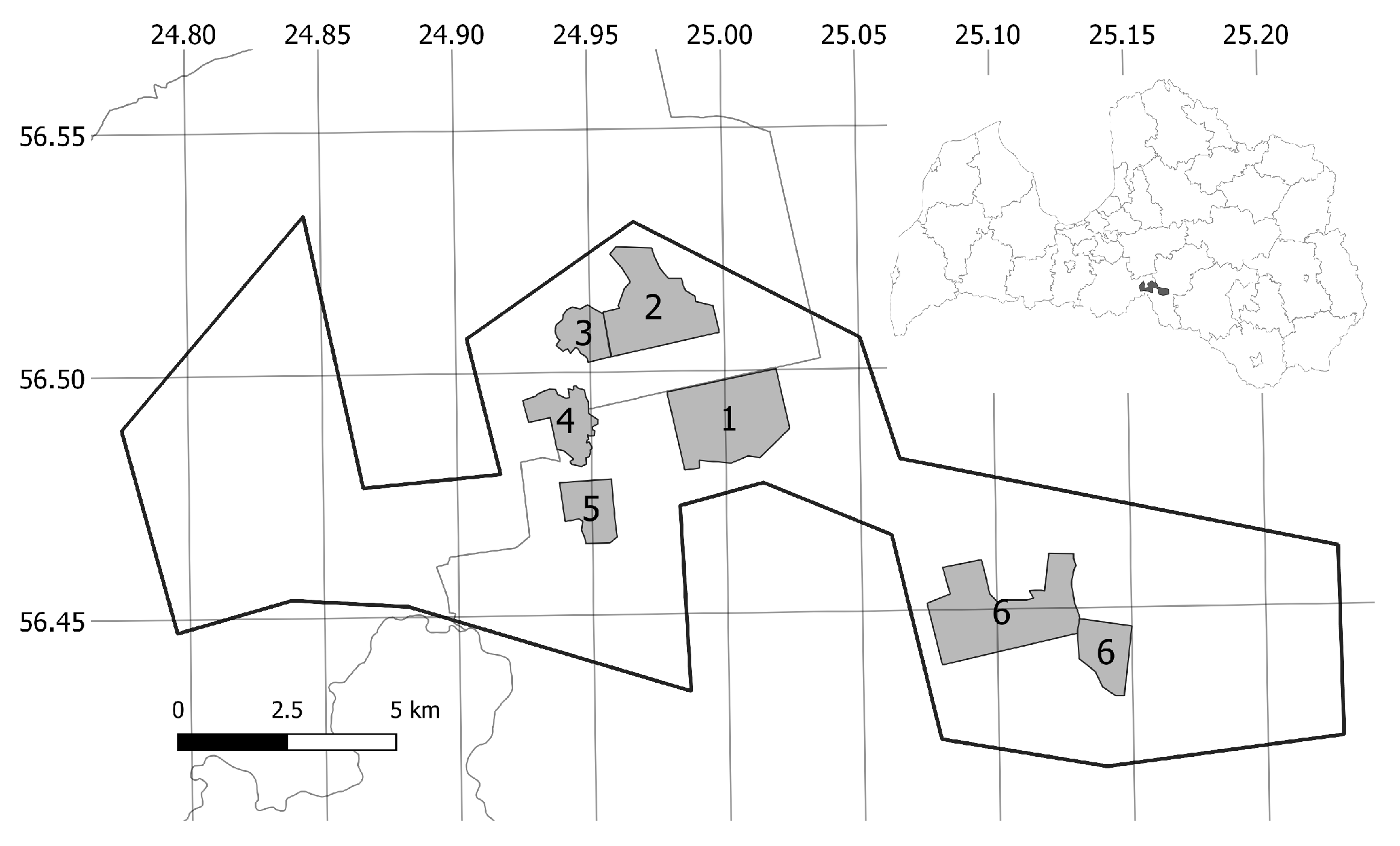

2.1. Study Site

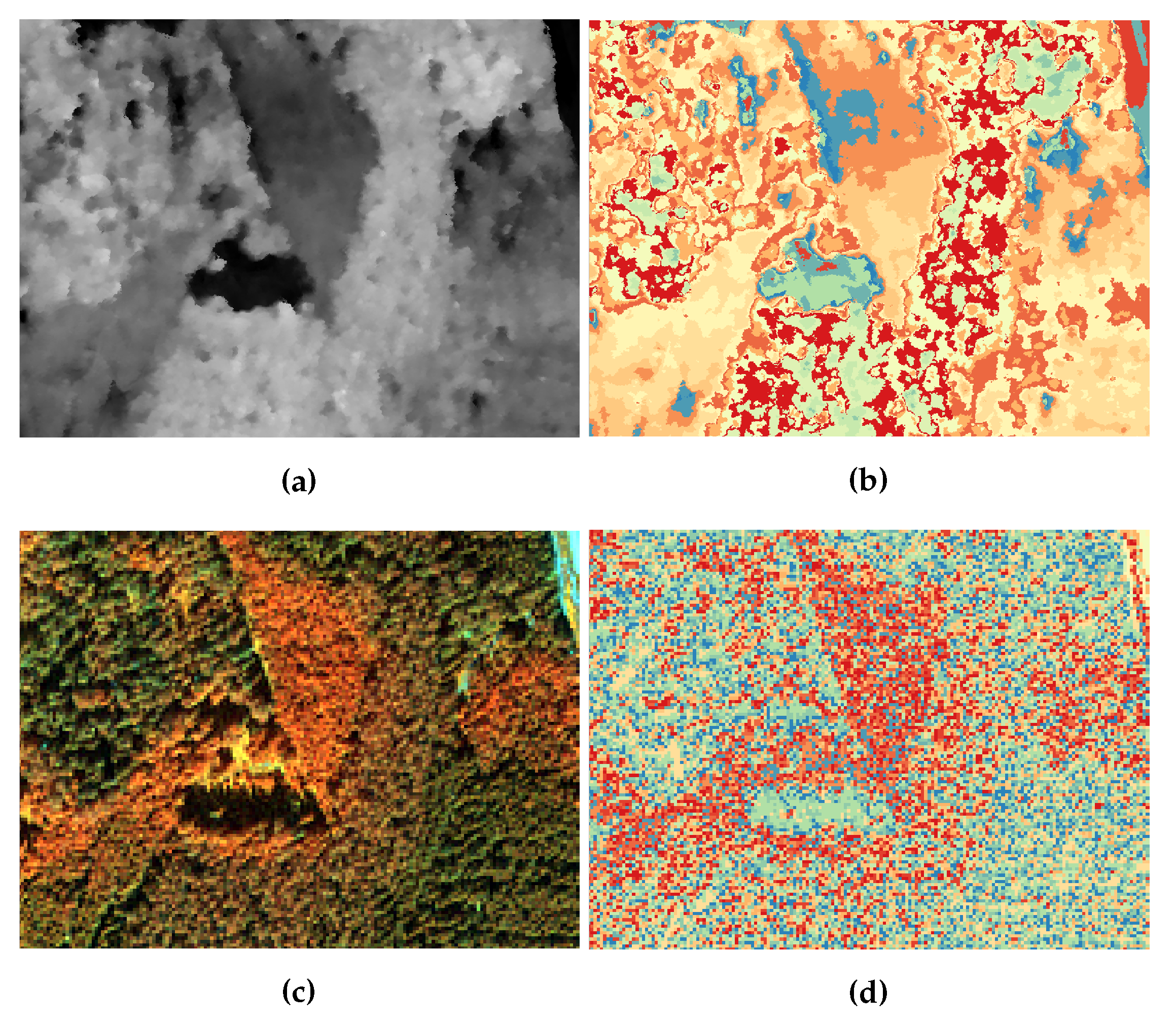

2.2. Remote Sensing and Reference Data

2.3. Methods

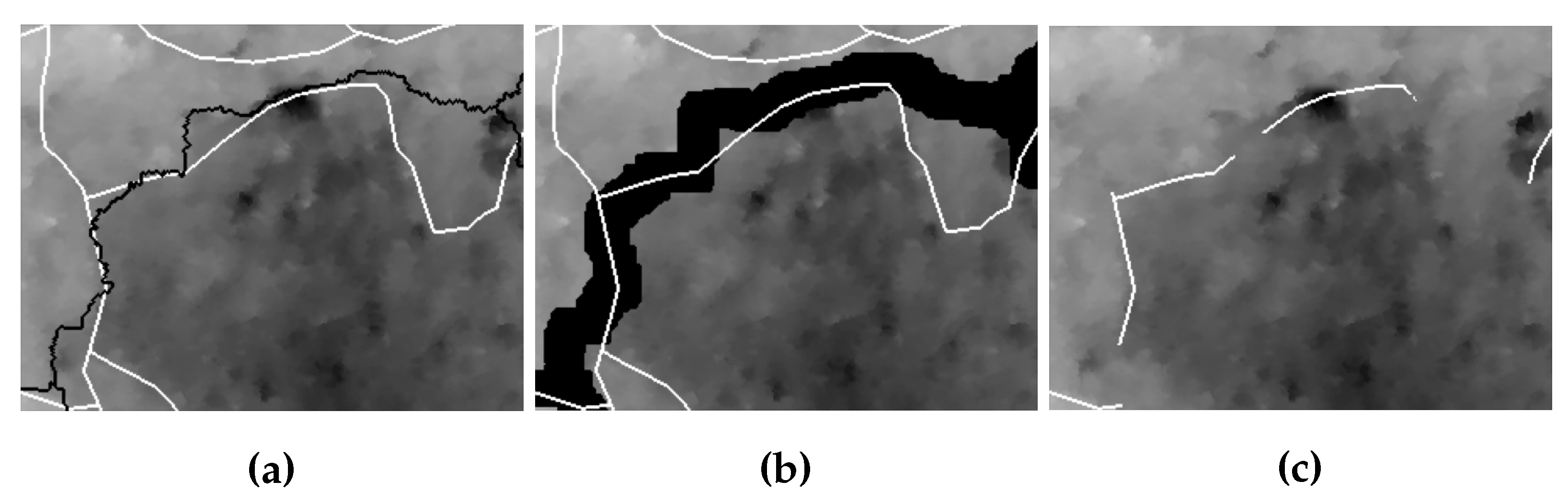

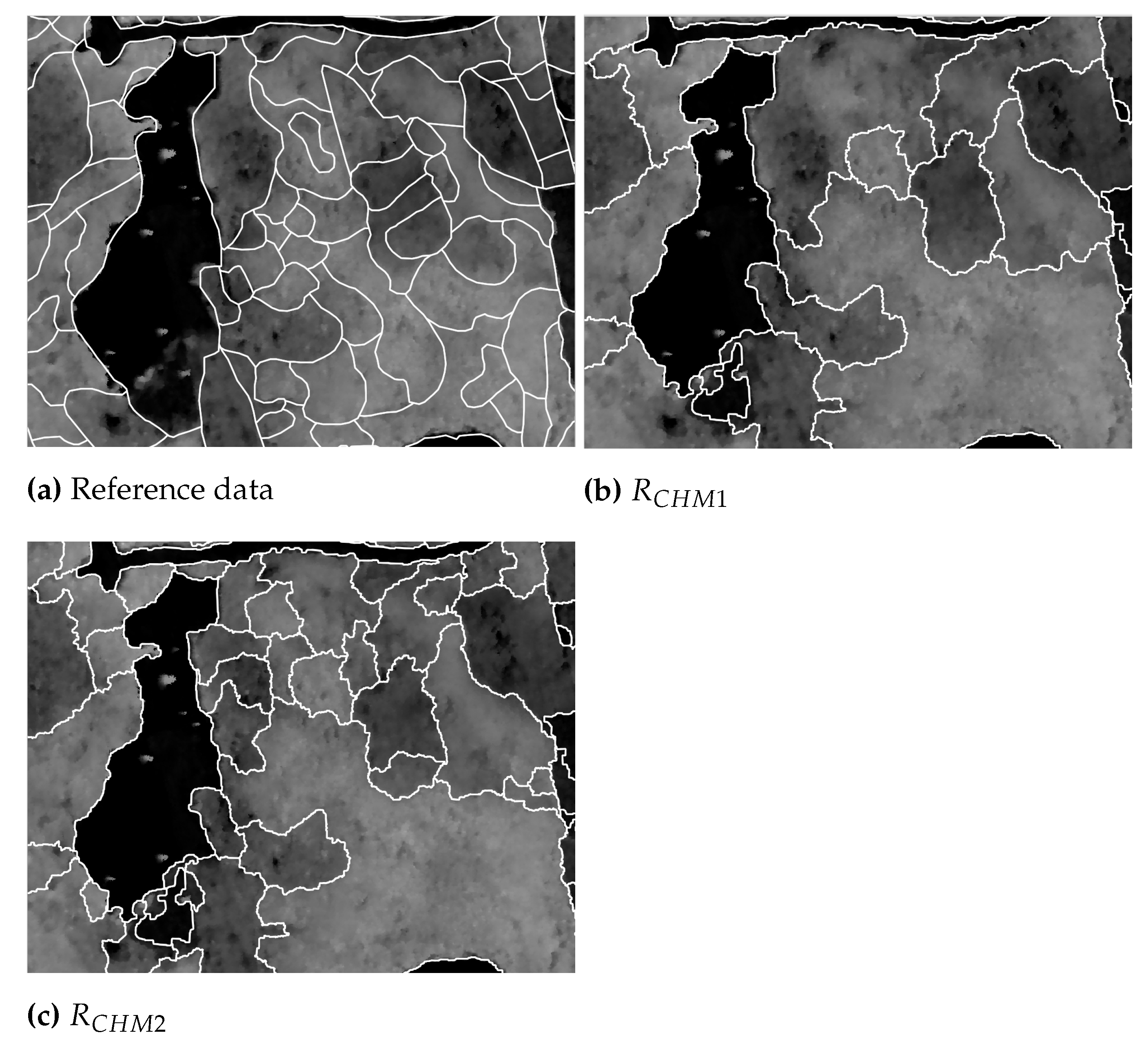

2.3.1. Modified JSEG Workflow

- Calculate J images for the clustered CHM (number of clusters ) at three scales with window sizes w = 33 px, 17 px, and 7 px, denoted as , , and . The number of clusters was set by trial and error, aiming to emphasise microstands distinguishable by visual assessment. To emphasise sharp boundaries, the morphological gradient of the CHM, computed with a square structuring element, was merged with the J images using the element-wise maximum operation;

- Perform multiscale segmentation of the J images. To reduce computation time, pixels with a CHM value below 3 m were excluded from further analysis, since only tree-covered areas were of interest;

  - Scale 1: find a seed image [30] using by setting the minimum allowed seed size to 512 px. Add homogeneous chunks of to the seed image, and perform pixel-by-pixel region growing using . The output is denoted as ;

  - Scale 2: find the refined seed image for using , with 128 px as the minimum allowed seed size; perform region growing by adding homogeneous chunks using ; then perform pixel-by-pixel region growing using . The output is denoted as ;

- Calculate J images for the clustered multispectral (MS) image () at three scales with the same window sizes, w = 33, 17, and 7 px, denoted as , , and ;

- Resegment each region in . The JSEG statistical measures were calculated separately for each region from , so that every region is processed individually, and segmentation was again performed at multiple scales:

  - (a) For the first scale , find new seeds for the region using , and apply pixel-by-pixel region growing using . The output is denoted as , where the first index shows the CHM segmentation scale, the second reflects the MS scale, and the remaining indices indicate the J images employed;

  - (b) For the second scale , find new seeds in the segmented image from Step (a) using , and apply pixel-by-pixel region growing using . The output is denoted as ;

  - (c) If three scales are employed, find new seeds (with 64 px as the minimum allowed seed size) in the output of Step (b) using , and apply pixel-by-pixel region growing using . The output is denoted as ;

- Optionally, merge regions smaller than a specified size threshold with their most similar neighbouring region, where similarity is measured as the Euclidean distance between the trimmed mean values of the two regions.
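A minimal sketch of three building blocks of the workflow above, under stated assumptions: the J criterion of Deng and Manjunath [30], J = (S_T − S_W) / S_W, evaluated in a sliding square window over a cluster-label image; the fusion of a J image with the CHM morphological gradient by an element-wise maximum, with pixels below 3 m excluded; and the optional merging of small regions by the Euclidean distance between trimmed means. The quantile binning used in place of the clustering step, the rescaling of both layers to [0, 1] before fusion, the 3 × 3 structuring element, and the 10 % trimming proportion are assumptions, not parameters given in the text, and JSEG [30] itself uses circular rather than square windows.

```python
import numpy as np
from scipy import ndimage, stats


def quantise(image, n_classes):
    """Stand-in for the clustering step (assumption): quantile binning into labels."""
    edges = np.quantile(image, np.linspace(0.0, 1.0, n_classes + 1)[1:-1])
    return np.digitize(image, edges)


def j_image(labels, w):
    """J criterion computed in a sliding w x w square window (w odd)."""
    rows, cols = np.indices((w, w))
    z = np.stack([rows.ravel(), cols.ravel()], axis=1).astype(float)
    s_t = ((z - z.mean(axis=0)) ** 2).sum()  # total spatial variance S_T

    def _j(values):
        # values holds the window's labels in row-major order, matching z
        s_w = 0.0
        for c in np.unique(values):
            zc = z[values == c]
            s_w += ((zc - zc.mean(axis=0)) ** 2).sum()  # within-class variance S_W
        if s_w == 0.0:
            return 0.0 if s_t == 0.0 else np.inf
        return (s_t - s_w) / s_w

    return ndimage.generic_filter(labels.astype(float), _j, size=w, mode="reflect")


def _rescale(a):
    a = a.astype(float)
    lo, hi = a.min(), a.max()
    return (a - lo) / (hi - lo) if hi > lo else np.zeros_like(a)


def fuse_with_gradient(j_img, chm, se_size=3, min_height=3.0):
    """Element-wise maximum of the J image and the CHM morphological gradient.

    Rescaling to [0, 1] and se_size=3 are assumptions. Pixels with
    CHM < min_height are set to NaN, i.e. excluded from further analysis.
    """
    grad = ndimage.morphological_gradient(chm, size=(se_size, se_size))
    fused = np.maximum(_rescale(j_img), _rescale(grad))
    fused[chm < min_height] = np.nan
    return fused


def merge_small_regions(segments, image, min_size, cut=0.1):
    """Merge regions smaller than min_size pixels into the most similar neighbour.

    Similarity is the Euclidean distance between trimmed means (proportion
    `cut` trimmed from each tail, an assumed value) of the image values.
    """
    seg = segments.copy()
    img = image[..., None] if image.ndim == 2 else image
    while True:
        ids, sizes = np.unique(seg, return_counts=True)
        if sizes.min() >= min_size:
            return seg
        r = ids[np.argmin(sizes)]  # process the smallest region first
        mask = seg == r
        ring = ndimage.binary_dilation(mask) & ~mask  # 4-connected neighbours
        neighbours = np.unique(seg[ring])
        if neighbours.size == 0:  # a single region covers the image
            return seg
        t_r = stats.trim_mean(img[mask], cut, axis=0)
        dists = [np.linalg.norm(t_r - stats.trim_mean(img[seg == n], cut, axis=0))
                 for n in neighbours]
        seg[mask] = neighbours[int(np.argmin(dists))]
```

Because J is close to zero inside homogeneous areas and rises near class boundaries, low-J pixels of the fused layer are the natural seed candidates for the region-growing steps.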

2.3.2. Generic Region Merging

2.3.3. Microstand Quality Assessment

2.3.4. Adjusting Workflow Parameters

- D: the lowest D score, indicating the best match with the segments delineated by the image analyst;

- RMSE: the lowest RMSE when the segments are used as the basic spatial units for stand volume estimation.
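The two selection criteria can be sketched as follows, assuming the over- and undersegmentation definitions of Clinton et al. [35]: for a reference microstand x and its matched segment y, OS = 1 − |x ∩ y| / |x|, US = 1 − |x ∩ y| / |y|, and D = sqrt((OS² + US²) / 2). Matching each reference polygon to the segment with the largest overlap, working on label rasters instead of polygons, and the dictionary layout of the hypothetical candidate parameter sets are simplifying assumptions for illustration.

```python
import numpy as np


def clinton_scores(reference, segments):
    """Mean oversegmentation, undersegmentation and summary score D.

    reference: label raster of analyst microstands (0 = unlabelled).
    segments: label raster of the automatic segmentation.
    Each reference object is matched to its largest-overlap segment (assumption).
    """
    os_vals, us_vals = [], []
    for r in np.unique(reference[reference > 0]):
        in_ref = reference == r
        seg_ids, counts = np.unique(segments[in_ref], return_counts=True)
        k = int(np.argmax(counts))
        overlap = counts[k]
        os_vals.append(1.0 - overlap / in_ref.sum())
        us_vals.append(1.0 - overlap / (segments == seg_ids[k]).sum())
    os_m, us_m = float(np.mean(os_vals)), float(np.mean(us_vals))
    return os_m, us_m, float(np.sqrt((os_m ** 2 + us_m ** 2) / 2.0))


def rmse(predicted, observed):
    """Root-mean-squared error, e.g. of stand volume estimated per segment."""
    p, o = np.asarray(predicted, float), np.asarray(observed, float)
    return float(np.sqrt(np.mean((p - o) ** 2)))


def select_best(candidates, criterion):
    """Return the candidate parameter set with the lowest 'D' or 'RMSE'."""
    return min(candidates, key=lambda c: c[criterion])
```

Here D is computed from the mean OS and US; averaging per-object D values instead would generally give a different number.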

3. Results

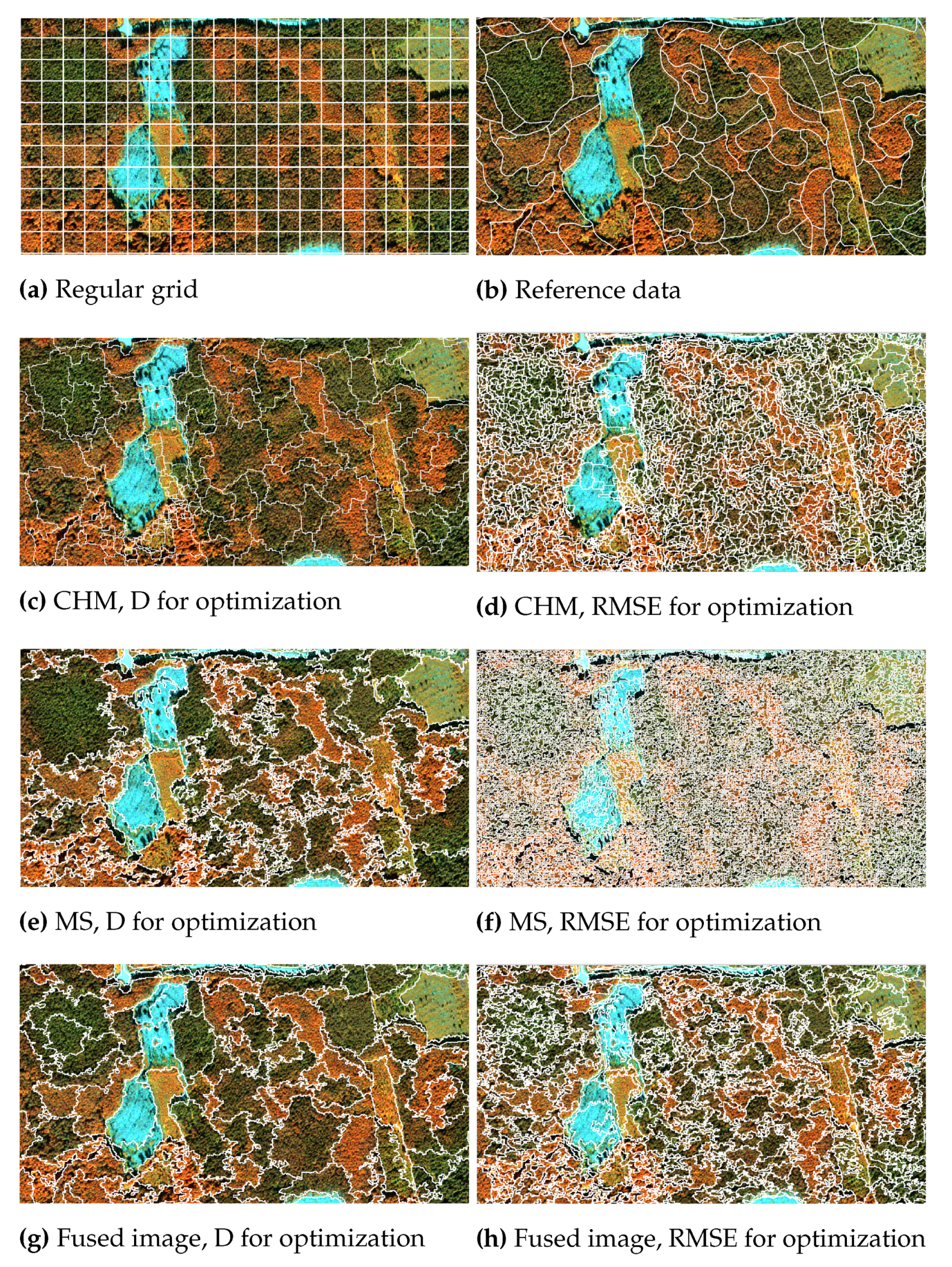

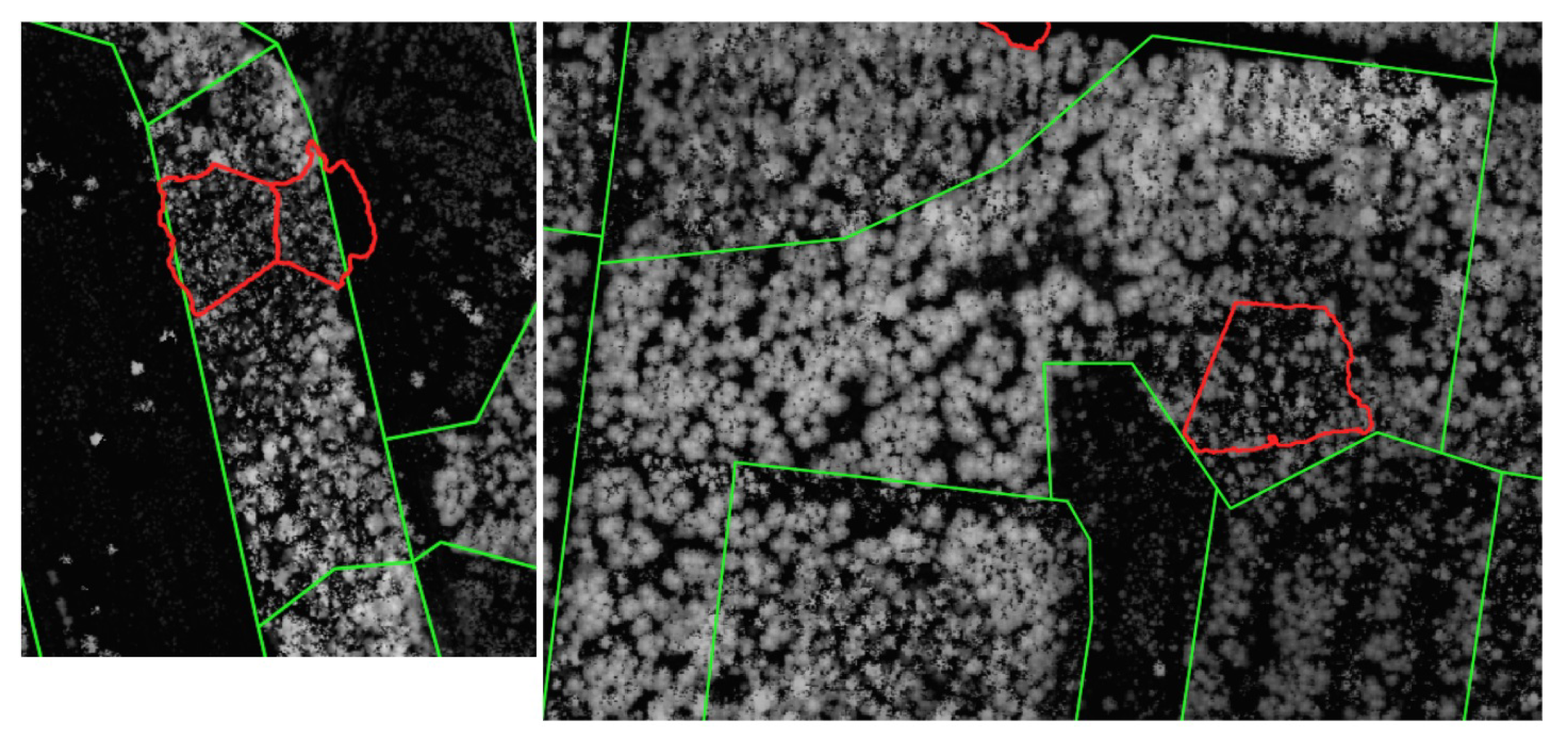

3.1. Examples of the Segmentation Results

3.2. Unsupervised Metrics

3.3. Supervised and System-Level Metrics

4. Discussion

4.1. Applicability for Microstand Border Refinement

4.2. Applicability to the Stand Volume Estimation Task

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Dechesne, C.; Mallet, C.; Le Bris, A.; Gouet-Brunet, V. Remote sensing technologies for enhancing forest inventories: A review. Can. J. Remote Sens. 2016, 42, 619–641.

2. Latvian Geospatial Information Agency. Ortofotokartes. Available online: https://www.lgia.gov.lv/lv/ortofotokartes-0 (accessed on 5 January 2022). (In Latvian)

3. Barrett, F.; McRoberts, R.E.; Tomppo, E.; Cienciala, E.; Waser, L.T. A questionnaire-based review of the operational use of remotely sensed data by national forest inventories. Remote Sens. Environ. 2016, 174, 279–289.

4. Schumacher, J.; Rattay, M.; Kirchhöfer, M.; Adler, P.; Kändler, G. Combination of multi-temporal Sentinel 2 images and aerial image based canopy height models for timber volume modelling. Forests 2019, 10, 746.

5. Kankare, V.; Holopainen, M.; Vastaranta, M.; Liang, X.; Yu, X.; Kaartinen, H.; Hyyppä, J. Outlook for the Single-Tree-Level Forest Inventory in Nordic Countries; Springer: New York, NY, USA, 2017.

6. Pascual, A.; Pukkala, T.; de Miguel, S.; Pesonen, A.; Packalen, P. Influence of size and shape of forest inventory units on the layout of harvest blocks in numerical forest planning. Eur. J. For. Res. 2019, 138, 111–123.

7. Bergseng, E.; Ørka, H.O.; Næsset, E.; Gobakken, T. Assessing forest inventory information obtained from different inventory approaches and remote sensing data sources. Ann. For. Sci. 2015, 72, 33–45.

8. Ke, Y.; Quackenbush, L.J. A review of methods for automatic individual tree-crown detection and delineation from passive remote sensing. Int. J. Remote Sens. 2011, 32, 4725–4747.

9. Tianyang, D.; Jian, Z.; Sibin, G.; Ying, S.; Jing, F. Single-tree detection in high-resolution remote-sensing images based on a cascade neural network. ISPRS Int. J. Geo-Inf. 2018, 7, 367.

10. Tianyang, D.; Jian, Z.; Sibin, G.; Ying, S.; Jing, F. Local pivotal method sampling design combined with micro stands utilizing airborne laser scanning data in a long term forest management planning setting. Silva Fenn. 2015, 50, 1414.

11. Legal Acts of the Republic of Latvia. Law on Forests. Available online: https://likumi.lv/ta/en/en/id/2825 (accessed on 5 January 2022).

12. Koch, B.; Straub, C.; Dees, M.; Wang, Y.; Weinacker, H. Airborne laser data for stand delineation and information extraction. Int. J. Remote Sens. 2009, 30, 935–963.

13. von Gadow, K.; Pukkala, T. Designing Green Landscapes; Springer Science & Business Media: New York, NY, USA, 2008; Volume 15.

14. Baatz, M.; Schape, A. Multi Resolution Segmentation: An Optimum Approach for High Quality Multi Scale Image Segmentation; Wichmann-Verlag: Heidelberg, Germany, 2000; pp. 12–23.

15. Ozkan, U.Y.; Demirel, T.; Ozdemir, I.; Saglam, S.; Mert, A. Examining lidar–WorldView-3 data synergy to generate a detailed stand map in a mixed forest in the north-west of Turkey. Adv. Space Res. 2020, 65, 2608–2621.

16. Rajbhandari, S.; Aryal, J.; Osborn, J.; Lucieer, A.; Musk, R. Leveraging machine learning to extend Ontology-driven Geographic Object-Based Image Analysis (O-GEOBIA): A case study in forest-type mapping. Remote Sens. 2019, 11, 503.

17. Varo-Martínez, M.Á.; Navarro-Cerrillo, R.M.; Hernández-Clemente, R.; Duque-Lazo, J. Semi-automated stand delineation in Mediterranean Pinus sylvestris plantations through segmentation of lidar data: The influence of pulse density. Int. J. Appl. Earth Obs. Geoinf. 2017, 56, 54–64.

18. Leppänen, V.J.; Tokola, T.; Maltamo, M.; Mehtätalo, L.; Pusa, T.; Mustonen, J. Automatic delineation of forest stands from lidar data. GEOBIA 2008, 1, 5–8.

19. Radoux, J.; Defourny, P. A quantitative assessment of boundaries in automated forest stand delineation using very high resolution imagery. Remote Sens. Environ. 2007, 110, 468–475.

20. Hernando, A.; Tiede, D.; Albrecht, F.; Lang, S. Spatial and thematic assessment of object-based forest stand delineation using an OFA-matrix. Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 214–225.

21. Sanchez-Lopez, N.; Boschetti, L.; Hudak, A.T. Semi-automated delineation of stands in an even-age dominated forest: A lidar-GEOBIA two-stage evaluation strategy. Remote Sens. 2018, 10, 1622.

22. Zhao, P.; Gao, L.; Gao, T. Extracting forest parameters based on stand automatic segmentation algorithm. Sci. Rep. 2020, 10, 1571.

23. Wu, Z.; Heikkinen, V.; Hauta-Kasari, M.; Parkkinen, J.; Tokola, T. ALS data based forest stand delineation with a coarse-to-fine segmentation approach. In Proceedings of the 2014 7th International Congress on Image and Signal Processing, Dalian, China, 14–16 October 2014; pp. 547–552.

24. Bruggisser, M.; Hollaus, M.; Wang, D.; Pfeifer, N. Adaptive framework for the delineation of homogeneous forest areas based on lidar points. Remote Sens. 2019, 11, 189.

25. Leckie, D. Stand delineation and composition estimation using semi-automated individual tree crown analysis. Remote Sens. Environ. 2003, 85, 355–369.

26. Dechesne, C.; Mallet, C.; Le Bris, A.; Gouet-Brunet, V. Forest stand extraction: Which optimal remote sensing data source(s)? In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7279–7282.

27. Jia, W.; Sun, Y.; Pukkala, T.; Jin, X. Improved cellular automaton for stand delineation. Forests 2020, 11, 37.

28. Räsänen, A.; Rusanen, A.; Kuitunen, M.; Lensu, A. What makes segmentation good? A case study in boreal forest habitat mapping. Int. J. Remote Sens. 2013, 34, 8603–8627.

29. Wulder, M.A.; White, J.C.; Hay, G.J.; Castilla, G. Towards automated segmentation of forest inventory polygons on high spatial resolution satellite imagery. For. Chron. 2008, 84, 221–230.

30. Deng, Y.; Manjunath, B. Unsupervised segmentation of color-texture regions in images and video. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 800–810.

31. Petrokas, R.; Baliuckas, V.; Manton, M. Successional categorization of European hemi-boreal forest tree species. Plants 2020, 9, 1381.

32. Wang, C.; Shi, A.-Y.; Wang, X.; Wu, F.M.; Huang, F.C.; Xu, L.Z. A novel multi-scale segmentation algorithm for high resolution remote sensing images based on wavelet transform and improved JSEG algorithm. Optik 2014, 125, 5588–5595.

33. European Space Imaging. GeoEye-1. Available online: https://www.euspaceimaging.com/geoeye-1/ (accessed on 5 January 2022).

34. Happ, P.; Ferreira, R.S.; Bentes, C.; Costa, G.A.O.P.; Feitosa, R.Q. Multiresolution segmentation: A parallel approach for high resolution image segmentation in multicore architectures. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2010, 38, C7.

35. Clinton, N.; Holt, A.; Scarborough, J.; Yan, L.I.; Gong, P. Accuracy assessment measures for object-based image segmentation goodness. Photogramm. Eng. Remote Sens. 2010, 76, 289–299.

36. Neubert, M.; Herold, H. Assessment of remote sensing image segmentation quality. Development 2008, 10, 2007.

37. Lucieer, A.; Stein, A. Existential uncertainty of spatial objects segmented from satellite sensor imagery. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2518–2521.

38. Scikit-Learn Developers. Scikit-Learn User Guide: Random Forest Regressor. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html (accessed on 5 January 2022).

39. Hay, G.J.; Castilla, G. Geographic object-based image analysis (GEOBIA): A new name for a new discipline. In Object-Based Image Analysis; Springer: New York, NY, USA, 2008; pp. 75–89.

40. Surový, P.; Kuželka, K. Acquisition of forest attributes for decision support at the forest enterprise level using remote-sensing techniques—A review. Forests 2019, 10, 273.

| No. | Area (km²) | Number of Microstands | Percentage of the Forested Area Owned by the State | Percentage of the Area Formed by Mixed Stands | Percentage of the Area Delineated with Low Confidence |
|---|---|---|---|---|---|
| 1 | 4.54 | 508 | 46 | 51.4 | 12.8 |
| 2 | 3.85 | 545 | 62 | 42.8 | 31.5 |
| 3 | 1.16 | 194 | 7 | 45.2 | 15.6 |
| 4 | 1.67 | 336 | 88 | 53.1 | 40.4 |
| 5 | 1.44 | 208 | 18 | 33.5 | 34.3 |
| 6 | 6.83 | 979 | 73 | 40.6 | 13.8 |

| Abbreviation | Metric | Group | Direction Indicating Higher Accuracy |
|---|---|---|---|
| | Normalised height variance | Direct, unsupervised | ↓ |
| | Average difference in mean height between adjacent microstands | Direct, unsupervised | ↑ |
| | Average Euclidean distance between the mean spectral vectors of adjacent microstands | Direct, unsupervised | ↑ |
| | Average difference in mean heights of local maxima between adjacent microstands | Direct, unsupervised | ↑ |
| | Oversegmentation | Direct, supervised | ↓ |
| | Undersegmentation | Direct, supervised | ↓ |
| D | Summary score | Direct, supervised | ↓ |
| | Boundary similarity | Direct, supervised | ↑ |
| RMSE | Root-mean-squared error for stand volume estimation | System-level, supervised | ↓ |
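A sketch of three of the unsupervised metrics listed above, under assumed definitions: normalised height variance is taken as the area-weighted mean within-segment CHM variance divided by the global CHM variance, and two segments count as adjacent when they share a 4-connected pixel edge. The same distance function yields the mean-height difference for a single-band CHM and the Euclidean distance between mean spectral vectors for a multiband array. The function names are hypothetical.

```python
import numpy as np


def normalised_height_variance(chm, segments):
    """Area-weighted within-segment CHM variance / global CHM variance (assumed)."""
    total_var = chm.var()
    if total_var == 0.0:
        return 0.0
    within = sum((segments == s).sum() * chm[segments == s].var()
                 for s in np.unique(segments))
    return float(within / segments.size / total_var)


def adjacent_pairs(segments):
    """Pairs of segment labels that share a 4-connected pixel edge."""
    pairs = set()
    for a, b in ((segments[:, :-1], segments[:, 1:]), (segments[:-1, :], segments[1:, :])):
        differ = a != b
        for u, v in zip(a[differ], b[differ]):
            pairs.add((int(min(u, v)), int(max(u, v))))
    return pairs


def mean_adjacent_distance(image, segments):
    """Average Euclidean distance between the mean values of adjacent segments.

    A 2-D image (e.g. the CHM) gives the mean height difference; an (H, W, B)
    multispectral array gives the distance between mean spectral vectors.
    """
    img = image[..., None] if image.ndim == 2 else image
    means = {int(s): img[segments == s].mean(axis=0) for s in np.unique(segments)}
    dists = [np.linalg.norm(means[u] - means[v]) for u, v in adjacent_pairs(segments)]
    return float(np.mean(dists)) if dists else 0.0
```

Passing a regular grid of labels to the same functions gives the grid-cell baseline against which the segmentations are compared.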

| Case | | | | |
|---|---|---|---|---|
| Regular grid m | 0.35 | 3.9 | 9.32 | 2.83 |
| Reference polygons | 0.24 | 5.68 | 934 | 4.2 |
| GRM CHM, D | 0.09 | 7.47 | 569 | 4.34 |
| GRM CHM, RMSE | 0.01 | 4.86 | 31 | 3.09 |
| JSEG CHM | 0.12 | 8.2 | 103 | 4.4 |
| GRM MS, D | 0.35 | 4.3 | 638 | 3.8 |
| GRM MS, RMSE | 0.3 | 2.63 | 23.7 | 2.4 |
| JSEG MS | 0.25 | 4.2 | 223 | 4.1 |
| GRM fused, D | 0.14 | 6.53 | 822 | 4.27 |
| GRM fused, RMSE | 0.1 | 4.92 | 173 | 3.18 |
| JSEG fused | 0.09 | 7.1 | 106 | 4.9 |

| Case | | | D | | | | |
|---|---|---|---|---|---|---|---|
| Regular grid m | 0.63 | 0.45 | 0.55 | 0.5 | 74.8 | 1369 | 0 |
| Reference polygons | - | - | - | - | 67.9 | 1352 | 362 |
| GRM CHM, D | 0.38 | 0.5 | 0.45 | 0.67 | 82 | 890 | 425 |
| GRM CHM, RMSE | 0.89 | 0.15 | 0.63 | 0.99 | 78.8 | 66 | 12 |
| JSEG CHM | 0.63 | 0.43 | 0.54 | 0.69 | 80.1 | 586 | 572 |
| GRM MS, D | 0.51 | 0.54 | 0.53 | 0.76 | 80.6 | 833 | 326 |
| GRM MS, RMSE | 0.94 | 0.11 | 0.67 | 0.99 | 78.9 | 38 | 8 |
| JSEG MS | 0.61 | 0.45 | 0.75 | 0.75 | 82 | 620 | 535 |
| GRM fused, D | 0.38 | 0.52 | 0.46 | 0.74 | 82 | 1104 | 416 |
| GRM fused, RMSE | 0.71 | 0.3 | 0.55 | 0.94 | 78.8 | 271 | 55 |
| JSEG fused | 0.71 | 0.43 | 0.54 | 0.79 | 76.0 | 651 | 114 |

| Site No. | Average for | Std. Dev. for | Average for | Std. Dev. for | Confidence Level of the Image Analyst |
|---|---|---|---|---|---|
| 1 | 0.49 | 0.04 | 0.56 | 0.03 | 0.73 |
| 2 | 0.41 | 0.08 | 0.51 | 0.06 | 0.70 |
| 3 | 0.35 | 0.05 | 0.44 | 0.01 | 0.67 |
| 4 | 0.34 | 0.1 | 0.43 | 0.08 | 0.67 |
| 5 | 0.41 | 0.05 | 0.49 | 0.03 | 0.7 |
| 6 | 0.45 | 0.07 | 0.52 | 0.07 | 0.72 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gulbe, L.; Zarins, J.; Mednieks, I. Automated Delineation of Microstands in Hemiboreal Mixed Forests Using Stereo GeoEye-1 Data. Remote Sens. 2022, 14, 1471. https://doi.org/10.3390/rs14061471
