Uncertainty-Guided Depth Fusion from Multi-View Satellite Images to Improve the Accuracy in Large-Scale DSM Generation

Abstract

1. Introduction


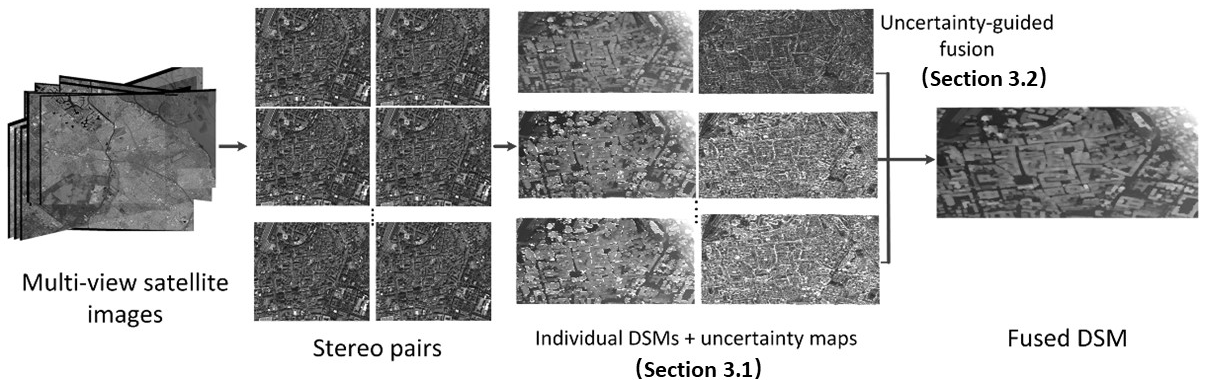

The Proposed Work

- A scalable procedure that uses the uncertainty information of the dense matching to better fuse depth maps;

- An evaluation of the performance of the proposed procedure on three different satellite datasets (WorldView-2, GeoEye-1, and Pleiades) acquired over a complex urban landscape;

- An RMSE improvement of 0.1–0.2 m over a typical median filter, measured against LiDAR reference data (a 5–10% relative accuracy improvement, given the final RMSE of 2–4 m across the evaluation cases).

2. State of the Art

3. Methodology

3.1. The Uncertainty Metric through Dense Image Matching

3.2. Uncertainty Guided DSM Fusion

| Algorithm 1: Pseudo code for the proposed fusion method |
|---|
| For in all pixels in |
| For j in |
| aggregate based on for (following Equation (2)) |
| collect height samples (following Equation (3)) |
| compute and (following Equations (4) and (5)) |
| if − > |
| = |
| else |
| = |
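The branch structure of Algorithm 1 can be illustrated with a minimal per-pixel sketch. It is not the authors' implementation: the symbols of Equations (2)–(5) are not reproduced in this text, so the sketch substitutes an uncertainty-weighted median for the aggregated estimate and a plain median for the fallback. The names `weighted_median` and `fuse_pixel`, the inverse-uncertainty weights, and the 1 m threshold are all assumptions made for illustration.

```python
from statistics import median


def weighted_median(values, weights):
    """Return the value at which the cumulative weight first reaches half the total."""
    pairs = sorted(zip(values, weights))
    half = sum(weights) / 2.0
    running = 0.0
    for value, weight in pairs:
        running += weight
        if running >= half:
            return value
    return pairs[-1][0]


def fuse_pixel(heights, uncertainties, threshold=1.0, eps=1e-6):
    """Fuse the height samples of one pixel taken from several DSMs.

    heights:       one height (m) per DSM, or None where a DSM has no data
    uncertainties: one matching uncertainty per DSM (lower = more reliable)
    threshold:     hypothetical switch (m) between the two estimates
    """
    # Assumption: weight each sample by the inverse of its uncertainty.
    samples = [(h, 1.0 / (u + eps))
               for h, u in zip(heights, uncertainties) if h is not None]
    if not samples:
        return None
    values = [h for h, _ in samples]
    weights = [w for _, w in samples]
    h_plain = median(values)
    h_weighted = weighted_median(values, weights)
    # Follow the uncertainty-guided estimate only when it clearly disagrees
    # with the plain median; otherwise keep the plain median.
    if abs(h_weighted - h_plain) > threshold:
        return h_weighted
    return h_plain
```

With three DSMs in which only the low-uncertainty one observes the street level, `fuse_pixel([2.0, 15.0, 15.3], [0.05, 0.9, 0.8])` follows the confident sample, whereas samples of equal uncertainty reduce to the plain median.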

4. Experiments and Analyses

4.1. Experiment Dataset and Setup

- (1) Fusion of DSMs generated by all pairs;

- (2) Fusion of only Pleiades pairs.

4.2. Accuracy Assessment

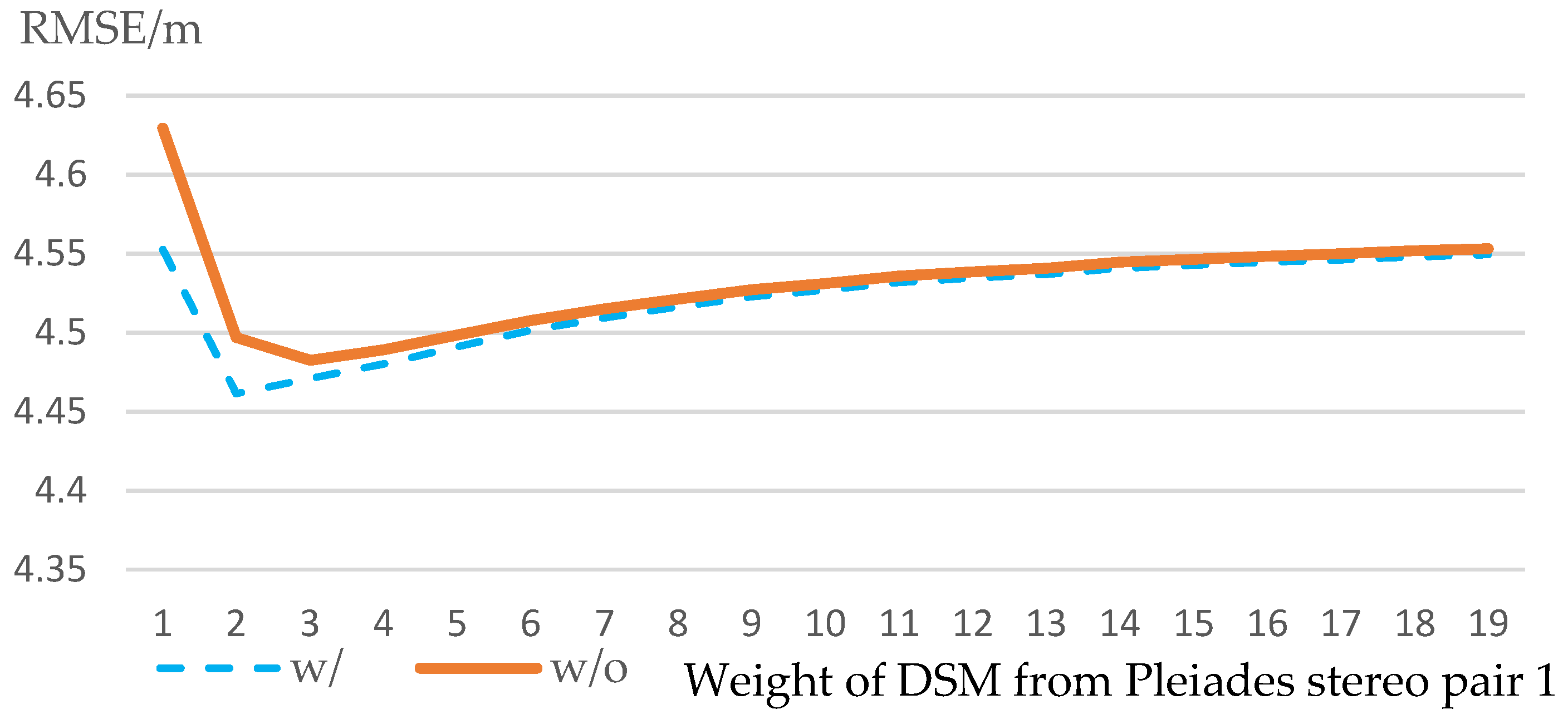

4.3. Weight and Contributions of Individual DSMs in Fusion

5. Discussions

- Sometimes the RMSE of a single pair (e.g., Pleiades pair 1 in AOI-1, Table 4) was better (lower) than that of a DSM fused with a median filter in the test regions, primarily because this pair has a very small intersection angle and can therefore capture narrow streets that the other pairs cannot. Our proposed algorithm adaptively incorporates the information of the individual DSMs and produced a fused DSM better than that of “Pleiades pair 1”;

- Deep and high-frequency relief differences, as found in city-center areas, remain challenging for satellite-based (high-altitude) mapping. Our accuracy analysis showed that the overall RMSE did not necessarily improve as the number of DSMs increased (Table 2), primarily because of the large errors on object borders and in narrow streets; only one DSM (the one with a very small intersection angle) reconstructed these deep relief variations correctly. For building objects, which lie closer to the sensor than the deep and narrow streets, the accuracy followed the intuitive expectation that the RMSE decreased as the number of DSMs increased;

- We also examined weighting the contributions of the individual DSMs (Section 4.3), which showed that the fusion results could be further improved by giving higher weights to DSMs of better quality. An optimal weighting may exist, but determining it is beyond the scope of the current study.
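One simple way to express "weighting DSMs of better quality" is to derive a global weight per DSM from an external quality measure. The sketch below uses inverse squared RMSE, which is an assumption of this example and not a weighting scheme evaluated in the study. The function name `inverse_variance_weights` is hypothetical, and the inputs are the AOI-1 RMSE values reported for the single-pair DSMs.

```python
def inverse_variance_weights(rmse_by_dsm):
    """Map each DSM name to a normalized weight proportional to 1 / RMSE^2."""
    inverse = {name: 1.0 / (rmse ** 2) for name, rmse in rmse_by_dsm.items()}
    total = sum(inverse.values())
    return {name: value / total for name, value in inverse.items()}


# AOI-1 RMSE (m) of the individual DSMs, copied from the accuracy table.
aoi1_rmse = {
    "Pleiades pair 1": 4.14,
    "Pleiades pair 2": 4.36,
    "Pleiades pair 3": 4.52,
    "GeoEye-1 pair": 4.34,
    "WorldView-2 pair": 4.84,
}

weights = inverse_variance_weights(aoi1_rmse)
for name, weight in weights.items():
    print(f"{name}: {weight:.3f}")
```

Such weights presuppose that a quality estimate is available for each DSM; in an operational setting without reference data, another quality indicator would have to take the place of the RMSE.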

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- Algorithm = SGM.

- Cost mode = The census transform mode.

- corr-kernel = 3 × 3. The default value is 25 × 25. The smaller kernel was used because it performed better in our experiments.

- subpixel-mode = 2. Notes from manual: when set to 2, it produces very accurate results, but it is about an order of magnitude slower.

- alignment-method = AffineEpipolar. Notes from manual: stereo will attempt to pre-align the images by detecting tie-points using feature matching, and using those to transform the images such that pairs of conjugate epipolar lines become collinear and parallel to one of the image axes. The effect of this is equivalent to rotating the original cameras which took the pictures.

- individually-normalize. Notes from manual: this option forces each image to be normalized to its own maximum and minimum valid pixel value. This is useful in the event that images have different and non-overlapping dynamic ranges.
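For reproducibility, the settings above can be kept in one place and rendered into command-line arguments. The sketch below is illustrative only: the `--key value` rendering and the helper name `to_cli_args` are assumptions, and the algorithm and cost-mode entries are left out because the text gives them only descriptively (SGM, census transform) and not as option values. The exact spellings should be checked against the manual of the stereo software in use.

```python
# Settings copied from the list above; keys follow the spellings given there.
STEREO_SETTINGS = {
    "corr-kernel": (3, 3),
    "subpixel-mode": 2,
    "alignment-method": "AffineEpipolar",
    "individually-normalize": True,  # a switch without a value
}


def to_cli_args(settings):
    """Render a settings dict as a flat list of command-line arguments."""
    args = []
    for key, value in settings.items():
        if value is True:
            args.append(f"--{key}")
        elif value is False or value is None:
            continue  # disabled switches are simply omitted
        elif isinstance(value, (tuple, list)):
            args.append(f"--{key}")
            args.extend(str(item) for item in value)
        else:
            args.extend([f"--{key}", str(value)])
    return args


print(" ".join(to_cli_args(STEREO_SETTINGS)))
```

Keeping the settings in a dictionary makes it straightforward to log the exact configuration next to each generated DSM.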

References

- Satellite Sensors and Specifications | Satellite Imaging Corp. Available online: https://www.satimagingcorp.com/satellite-sensors/ (accessed on 14 January 2022).

- Facciolo, G.; De Franchis, C.; Meinhardt-Llopis, E. Automatic 3D Reconstruction from Multi-Date Satellite Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 57–66.

- Bosch, M.; Kurtz, Z.; Hagstrom, S.; Brown, M. A Multiple View Stereo Benchmark for Satellite Imagery. In Proceedings of the 2016 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 18–20 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–9.

- Qin, R. A Critical Analysis of Satellite Stereo Pairs for Digital Surface Model Generation and a Matching Quality Prediction Model. ISPRS J. Photogramm. Remote Sens. 2019, 154, 139–150.

- Zhang, K.; Snavely, N.; Sun, J. Leveraging Vision Reconstruction Pipelines for Satellite Imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- Zhang, L. Automatic Digital Surface Model (DSM) Generation from Linear Array Images; ETH Zurich: Zurich, Switzerland, 2005.

- Qin, R. Automated 3D Recovery from Very High Resolution Multi-View Images Overview of 3D Recovery from Multi-View Satellite Images. In Proceedings of the ASPRS Conference (IGTF) 2017, Baltimore, MD, USA, 12–17 March 2017; pp. 12–16.

- Huang, X.; Qin, R. Multi-View Large-Scale Bundle Adjustment Method for High-Resolution Satellite Images. arXiv 2019, arXiv:1905.09152.

- Bhushan, S.; Shean, D.; Alexandrov, O.; Henderson, S. Automated Digital Elevation Model (DEM) Generation from Very-High-Resolution Planet SkySat Triplet Stereo and Video Imagery. ISPRS J. Photogramm. Remote Sens. 2021, 173, 151–165.

- IARPA Multi-View Stereo 3D Mapping Challenge. Available online: https://www.iarpa.gov/challenges/3dchallenge.html (accessed on 2 March 2022).

- Krauß, T.; d’Angelo, P.; Wendt, L. Cross-Track Satellite Stereo for 3D Modelling of Urban Areas. Eur. J. Remote Sens. 2019, 52, 89–98.

- Albanwan, H.; Qin, R. Enhancement of Depth Map by Fusion Using Adaptive and Semantic-Guided Spatiotemporal Filtering. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 3, 227–232.

- Yang, J.; Li, D.; Waslander, S.L. Probabilistic Multi-View Fusion of Active Stereo Depth Maps for Robotic Bin-Picking. IEEE Robot. Autom. Lett. 2021, 6, 4472–4479.

- Chen, J.; Bautembach, D.; Izadi, S. Scalable Real-Time Volumetric Surface Reconstruction. ACM Trans. Graph. (ToG) 2013, 32, 1–16.

- Hou, Y.; Kannala, J.; Solin, A. Multi-View Stereo by Temporal Nonparametric Fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 2651–2660.

- Kuhn, A.; Hirschmüller, H.; Mayer, H. Multi-Resolution Range Data Fusion for Multi-View Stereo Reconstruction. In Proceedings of the German Conference on Pattern Recognition, Saarbrücken, Germany, 3–6 September 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 41–50.

- Rothermel, M.; Haala, N.; Fritsch, D. A Median-Based Depthmap Fusion Strategy for the Generation of Oriented Points. In Proceedings of the ISPRS Annals of Photogrammetry, Remote Sensing & Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Elsevier: Amsterdam, The Netherlands; pp. 115–122.

- Rumpler, M.; Wendel, A.; Bischof, H. Probabilistic Range Image Integration for DSM and True-Orthophoto Generation. In Proceedings of the Scandinavian Conference on Image Analysis, Espoo, Finland, 17–20 June 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 533–544.

- Unger, C.; Wahl, E.; Sturm, P.; Ilic, S. Probabilistic Disparity Fusion for Real-Time Motion-Stereo. In Proceedings of the ACCV, Queenstown, New Zealand, 8–12 November 2010; Springer: Berlin/Heidelberg, Germany, 2010.

- Zhao, F.; Huang, Q.; Gao, W. Image Matching by Normalized Cross-Correlation. In Proceedings of the 2006 IEEE International Conference on Acoustics Speech and Signal Processing Proceedings, Toulouse, France, 14–19 May 2006; IEEE: Piscataway, NJ, USA, 2006; Volume 2, pp. 729–732.

- Hirschmuller, H. Accurate and Efficient Stereo Processing by Semi-Global Matching and Mutual Information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 2, pp. 807–814.

- Qin, R. RPC Stereo Processor (RSP)–A Software Package for Digital Surface Model and Orthophoto Generation from Satellite Stereo Imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 77.

- Zabih, R.; Woodfill, J. Non-Parametric Local Transforms for Computing Visual Correspondence. In Proceedings of the European Conference on Computer Vision, Stockholm, Sweden, 2–6 May 1994; Springer: Berlin/Heidelberg, Germany, 1994; pp. 151–158.

- Rothermel, M.; Wenzel, K.; Fritsch, D.; Haala, N. SURE: Photogrammetric Surface Reconstruction from Imagery. In Proceedings of the LC3D Workshop, Berlin, Germany, 4–5 December 2012; Volume 8.

- Poli, D.; Remondino, F.; Angiuli, E.; Agugiaro, G. Evaluation of Pleiades-1A Triplet on Trento Testfield. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 287–292.

- Agugiaro, G.; Poli, D.; Remondino, F. Testfield Trento: Geometric Evaluation of Very High Resolution Satellite Imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, B8.

- Akca, D. Co-Registration of Surfaces by 3D Least Squares Matching. Photogramm. Eng. Remote Sens. 2010, 76, 307–318.

- Lastilla, L.; Belloni, V.; Ravanelli, R.; Crespi, M. DSM Generation from Single and Cross-Sensor Multi-View Satellite Images Using the New Agisoft Metashape: The Case Studies of Trento and Matera (Italy). Remote Sens. 2021, 13, 593.

- Partovi, T.; Fraundorfer, F.; Bahmanyar, R.; Huang, H.; Reinartz, P. Automatic 3-D Building Model Reconstruction from Very High Resolution Stereo Satellite Imagery. Remote Sens. 2019, 11, 1660.

- Beyer, R.A.; Alexandrov, O.; McMichael, S. The Ames Stereo Pipeline: NASA’s Open Source Software for Deriving and Processing Terrain Data. Earth Space Sci. 2018, 5, 537–548.

| Datasets | Intersection Angle (degrees) | GSD (m) | Area (km²) | Year |
|---|---|---|---|---|
| Pleiades stereo pair 1 | 5.57 | 0.72 | 20 × 20 | 2012 |
| Pleiades stereo pair 2 | 27.85 | 0.72 | 20 × 20 | 2012 |
| Pleiades stereo pair 3 | 33.42 | 0.72 | 20 × 20 | 2012 |
| GeoEye-1 stereo pair | 30.30 | 0.50 | 10 × 10 | 2011 |
| WorldView-2 stereo pair | 33.71 | 0.51 | 17 × 17 | 2010 |

| Generated DSMs | AOI-1 Mean | AOI-1 STD | AOI-1 RMSE | AOI-2 Mean | AOI-2 STD | AOI-2 RMSE | AOI-3 Mean | AOI-3 STD | AOI-3 RMSE |
|---|---|---|---|---|---|---|---|---|---|
| Pleiades stereo pair 1 (int. ang. 5.57°) | 0.92 | 4.04 | 4.14 | 0.89 | 3.02 | 3.15 | 1.67 | 3.56 | 3.93 |
| Pleiades stereo pair 2 (int. ang. 27.85°) | 1.75 | 4.00 | 4.36 | 1.03 | 3.08 | 3.25 | 1.42 | 3.74 | 4.00 |
| Pleiades stereo pair 3 (int. ang. 33.42°) | 1.61 | 4.22 | 4.52 | 0.84 | 3.26 | 3.37 | 1.55 | 3.80 | 4.11 |
| GeoEye-1 stereo pair (int. ang. 30.30°) | 1.26 | 4.15 | 4.34 | 0.48 | 3.03 | 3.07 | 1.32 | 3.71 | 3.93 |
| WorldView-2 stereo pair (int. ang. 33.71°) | 2.01 | 4.40 | 4.84 | 1.47 | 3.39 | 3.70 | 2.22 | 4.26 | 4.80 |
| Fused Pleiades pairs w/uncertainty (ours) | 1.48 | 3.80 | 4.08 | 0.89 | 2.92 | 3.05 | 1.46 | 3.46 | 3.75 |
| Fused Pleiades pairs w/o uncertainty | 1.62 | 4.01 | 4.32 | 0.86 | 2.93 | 3.06 | 1.14 | 3.61 | 3.79 |
| Fused all pairs w/uncertainty (ours) | 1.49 | 3.90 | 4.17 | 0.87 | 2.86 | 3.00 | 1.54 | 3.49 | 3.82 |
| Fused all pairs w/o uncertainty | 1.49 | 3.98 | 4.25 | 0.82 | 2.90 | 3.01 | 1.23 | 3.60 | 3.81 |

| Generated DSMs | AOI-1 Mean | AOI-1 STD | AOI-1 RMSE | AOI-2 Mean | AOI-2 STD | AOI-2 RMSE | AOI-3 Mean | AOI-3 STD | AOI-3 RMSE |
|---|---|---|---|---|---|---|---|---|---|
| Pleiades stereo pair 1 (int. ang. 5.57°) | −0.17 | 2.27 | 2.28 | −0.35 | 1.94 | 1.97 | −0.46 | 2.52 | 2.56 |
| Pleiades stereo pair 2 (int. ang. 27.85°) | 0.69 | 2.10 | 2.21 | 0.50 | 1.74 | 1.81 | −0.12 | 2.70 | 2.71 |
| Pleiades stereo pair 3 (int. ang. 33.42°) | 0.51 | 2.28 | 2.33 | 0.23 | 2.01 | 2.02 | −0.02 | 2.62 | 2.62 |
| GeoEye-1 stereo pair (int. ang. 30.30°) | 0.11 | 2.23 | 2.23 | −0.08 | 1.64 | 1.64 | −0.28 | 2.27 | 2.28 |
| WorldView-2 stereo pair (int. ang. 33.71°) | 0.64 | 2.44 | 2.52 | 0.66 | 1.92 | 2.03 | 0.23 | 2.95 | 2.96 |
| Fusion Pleiades pairs w/uncertainty (ours) | 0.44 | 2.04 | 2.09 | 0.26 | 1.61 | 1.64 | −0.12 | 2.33 | 2.33 |
| Fusion Pleiades pairs w/o uncertainty | 0.50 | 2.09 | 2.15 | 0.31 | 1.69 | 1.72 | −0.60 | 3.06 | 3.12 |
| Fusion all pairs w/uncertainty (ours) | 0.28 | 2.06 | 2.08 | 0.16 | 1.58 | 1.59 | −0.17 | 2.20 | 2.21 |
| Fusion all pairs w/o uncertainty | 0.29 | 2.05 | 2.07 | 0.17 | 1.59 | 1.60 | −0.57 | 2.87 | 2.92 |
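The three statistics in these tables are linked: when STD is taken as the population standard deviation of the height errors, RMSE² = Mean² + STD². The sketch below computes the statistics from a DSM and a reference surface and checks this identity. The function name `error_stats`, the sign convention (DSM minus reference), and the use of the population standard deviation are assumptions of this example, since the text does not state them.

```python
import math


def error_stats(dsm_heights, reference_heights):
    """Return (mean, std, rmse) of the height errors DSM - reference."""
    errors = [d - r for d, r in zip(dsm_heights, reference_heights)]
    n = len(errors)
    mean = sum(errors) / n
    # Population standard deviation (divide by n, not n - 1).
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    rmse = math.sqrt(sum(e ** 2 for e in errors) / n)
    return mean, std, rmse


# Toy heights (m), made up purely to exercise the function.
mean, std, rmse = error_stats([101.0, 103.5, 99.0, 102.0],
                              [100.0, 101.0, 100.5, 100.0])
assert abs(rmse ** 2 - (mean ** 2 + std ** 2)) < 1e-9
print(f"mean={mean:.2f} std={std:.2f} rmse={rmse:.2f}")
```

The identity also offers a quick consistency check on reported rows. For example, the AOI-1 entry of Pleiades stereo pair 1 above (Mean −0.17, STD 2.27) gives √(0.17² + 2.27²) ≈ 2.28, which matches the reported RMSE.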

| Areas/Software | Pleiades Pair 1 (a Single Pair) | Median Filter | Adapt. Median [7] | Facciolo et al. [2] | Proposed |
|---|---|---|---|---|---|
| AOI-1/RSP | 4.14 | 4.27 | 4.32 | 4.76 | 4.08 |
| AOI-2/RSP | 3.15 | 3.17 | 3.06 | 3.64 | 3.05 |
| AOI-3/RSP | 3.93 | 3.87 | 3.79 | 5.30 | 3.75 |
| AOI-1/ASP | 5.79 | 5.89 | 5.88 | 6.18 | N.A. |
| AOI-2/ASP | 4.27 | 4.41 | 4.38 | 4.73 | N.A. |
| AOI-3/ASP | 5.46 | 6.28 | 6.22 | 5.95 | N.A. |
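The gain of the proposed method over the median filter can be read directly from the RSP rows of this table. The short sketch below computes the absolute and relative RMSE differences; the helper name `rmse_gain` is hypothetical, and the values are copied from the table, assuming RMSE is expressed in metres as in the rest of the text.

```python
# RMSE (m) from the RSP rows of the table above: (median filter, proposed).
RSP_RMSE = {
    "AOI-1": (4.27, 4.08),
    "AOI-2": (3.17, 3.05),
    "AOI-3": (3.87, 3.75),
}


def rmse_gain(baseline, proposed):
    """Return the absolute (m) and relative (%) RMSE reduction."""
    absolute = baseline - proposed
    relative_pct = 100.0 * absolute / baseline
    return absolute, relative_pct


for aoi, (median_rmse, proposed_rmse) in RSP_RMSE.items():
    gain_m, gain_pct = rmse_gain(median_rmse, proposed_rmse)
    print(f"{aoi}: {gain_m:.2f} m ({gain_pct:.1f}%)")
```

For these three rows the absolute reductions fall within the 0.1–0.2 m range stated in the list of contributions.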

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qin, R.; Ling, X.; Farella, E.M.; Remondino, F. Uncertainty-Guided Depth Fusion from Multi-View Satellite Images to Improve the Accuracy in Large-Scale DSM Generation. Remote Sens. 2022, 14, 1309. https://doi.org/10.3390/rs14061309
