Abstract

Next day wildfire prediction is an open research problem with significant environmental, social, and economic impact since it can produce methods and tools directly exploitable by fire services, assisting, thus, in the prevention of fire occurrences or the mitigation of their effects. It consists in accurately predicting which areas of a territory are at higher risk of fire occurrence each next day, exploiting solely information obtained up until the previous day. The task’s requirements in spatial granularity and scale of predictions, as well as the extreme imbalance of the data distribution render it a rather demanding and difficult to accurately solve the problem. This is reflected in the current literature, where most existing works handle a simplified or limited version of the problem. Taking into account the above problem specificities, in this paper, we present a machine learning methodology that effectively (sensitivity > 90%, specificity > 65%) and efficiently performs next day fire prediction, in rather high spatial granularity and in the scale of a country. The key points of the proposed approach are summarized in: (a) the utilization of an extended set of fire driving factors (features), including topography-related, meteorology-related and Earth Observation (EO)-related features, as well as historical information of areas’ proneness to fire occurrence; (b) the deployment of a set of state-of-the-art classification algorithms that are properly tuned/optimized on the setting; (c) two alternative cross-validation schemes along with custom validation measures that allow the optimal and sound training of classification models, as well as the selection of different models, in relation to the desired trade-off between sensitivity (ratio of correctly identified fire areas) and specificity (ratio of correctly identified non-fire areas). In parallel, we discuss pitfalls, intuitions, best practices, and directions for further investigation derived from our analysis and experimental evaluation.

1. Introduction

Wildfires are events that, although relatively rare, can have catastrophic impacts on the environment, economy and, on several occasions, on people’s lives. Climate change expectedly adds to the problem, increasing the frequency, severity, and consequences of wildfires. Thus, the analysis of such events has been gaining increased research interest, especially during the last decade, with a plethora of works examining different aspects and settings [1]. Indicatively, the research community has proposed methods for fire detection, fire risk and susceptibility prediction, fire index calculation, fire occurrence prediction, burnt area prediction estimation, etc.

In this work, we focus on the task of next day wildfire prediction (alt. fire occurrence prediction), proposing a machine learning methodology and models that allow the highly granular, scalable, and accurate prediction of next day fire occurrence in separate areas of a territory, utilizing information (features) obtained for each area up until the previous day. We need to emphasize here that the specific task is the most challenging among the discussed wildfire analysis tasks, and in particular compared to the (most similar) fire susceptibility prediction task, which estimates the danger of fire occurrence within a comparatively much larger upcoming time interval (i.e., from one month to one year ahead). The difficulty of the task lies in (a) the extreme imbalance of the data distribution with respect to the fire/no-fire instances, (b) the massive scale of the data, (c) the heterogeneity and potential concept drifts that lurk in the data, along with the factor of (d) the absence of fire phenomenon, namely the fact that different areas with almost identical characteristics might display different behavior with respect to fire occurrence.

With the advent of machine/deep learning methods (ML/DL), domain scientists are increasingly realizing the capacity of such techniques to capture more complex patterns in the data and exploiting them, on the task of fire prediction, as opposed to more traditional, theoretical/statistical methods. This is reflected in a plethora of recent works [1,2,3,4,5,6,7] that present ML-based methods for the tasks of fire prediction and susceptibility, instead of more traditional approaches (e.g., the calculation of fire indexes). Thus, machine learning is steadily becoming the de facto methodology applied in the task. However, most approaches in the current literature either solve a simplified version of the problem, with limited applicability in a real-world setting, or present methodological shortcomings that limit the generalizability of their findings, as described next.

One of the most significant shortcomings of current state-of-the-art works is that they ignore the fact that wildfire prediction is an extremely imbalanced classification problem. As a consequence, most approaches of the literature [2,3,4,5,8,9,10] focus on a balanced version of the problem, by under-sampling no-fire instances or over-sampling fire ones, or by considering specific non-fire areas as fire ones [6]. We need to emphasize here that the actual problem of next day fire prediction is merely handled by a handful of works; most methods cited handle other versions of the problem, such as weekly/monthly/yearly fire occurrence prediction, or fire susceptibility in general. Such approaches, although often demonstrating impressive prediction effectiveness in their experiments, are essentially detached from a real-world setting, since they assess their methods on different (re-sampled) test set distributions than the original, real-world ones. We note here that adopting a balanced dataset setting during model training or even validation is an acceptable procedure; the methodological issue of the aforementioned approaches lies in expanding the balanced setting also to the test set of their evaluation. In this work, we maintain the extremely imbalanced distribution of the data on the test set, essentially evaluating the methods’ expected real-world effectiveness. Further, we investigate how we can optimally select models trained in balanced training sets, with the aim to perform well when deployed on imbalanced test sets.

Another important omission of works in the literature [4,6,7,11] regards the fact that the instances of the problem (individual areas of a territory, often represented as grid cells) are spatio-temporally correlated, since they represent geographic areas through the course of time. Ignoring this fact by, e.g., performing shuffling of the data before splitting them into train/validation/test partitions, leads to misleading effectiveness results, since it allows almost identical instances to be shared between training and test sets, essentially performing information leakage. In this work we propose a strict scheme for partitioning the data during cross-validation, so as to avoid the aforementioned pitfall.

An additional shortcoming of several existing works [2,3,4,5,6,12] is the poor exploration of the model space of the classification algorithms that are applied. Each of these algorithms can be configured via a set of hyperparameters that, if properly set, can significantly change the algorithm’s effectiveness. However, selecting the proper set of hyperparameters for each algorithm is a highly data-driven process, and might lead to completely different hyperparameterizations (configurations) for different datasets. Most works in the literature deploy cross-validation exclusively for assessing a manually selected configuration for an algorithm, which, however, might be sub-optimal in the context of the task and the underlying data. In our work, we exploit cross-validation to perform extensive search of the hyperparameter space of each assessed algorithm, in order to select models that both perform well and are expected to generalize well.

The presented work builds on and significantly extends our previous two works on the field [13,14], by: (i) Implementing an extended set of training features (described in Section 2.3) for capturing the most important fire driving factors within the adopted ML models. (ii) Implementing two alternative cross-validation schemes, as well as task-specific evaluation measures (described in Section 3.2.2) for model selection during validation, in order to handle the large data scale and imbalance; the second cross-validation scheme, as well as the second task-specific evaluation measure comprise new contributions compared to [14]. (iii) Presenting an extended evaluation and discussion of the proposed methodology. The contributions of our work are summarized as follows:

- The proposed methods, including the extended feature set, the alternative cross-validation processes, and the task-specific evaluation measures, considerably improve sensitivity (recall of fire class) and specificity (recall of no-fire class) compared to our previous work [13]. To the best of our knowledge, the achieved effectiveness comprises the current state of the art in the problem of next day fire prediction, for the considered real-world setting, with respect to data scale and imbalance.

- The proposed methodology produces a range of models, allowing the selection of the most suitable model, with respect to the desirable trade-off between sensitivity and specificity.

- An extended analysis and discussion on the specificities of the task is performed, tying the proposed methods and schemes with specific gaps, shortcomings and errors of existing methodologies that handle the task. Further, insights, intuitions, and directions for further improving the proposed methods are discussed.

The paper is organized as follows. Section 2 first provides the problem definition and identifies the specificities of the task. Then, it describes in detail the study area, the derived evaluation dataset and the implemented training features. Section 3 presents the proposed methodology, including the deployed ML algorithms and the cross-validation/model selection procedures that are implemented. Section 4 presents an extensive experimental evaluation of the proposed methods. Section 5 summarizes our most important findings and discusses future steps on the task. Finally, Section 6 concludes the paper.

2. Materials

2.1. Problem Definition and Specificities

In this work, we handle the problem of next day fire prediction. Our dataset comprises a geographic grid of high granularity (each cell being 500 m wide) covering the whole territory of interest (in our case, the whole Greek territory). Each instance corresponds to the daily snapshot of each grid cell, and is represented by a set of characteristics (alt. fire driving factors, features) that are extracted for the specific area, for a specific day . Given a historical dataset annotated (labeled) with the existence or absence of fire, for each grid cell, for each day, each available historical instance carries a binary label for the day , denoting the existence (label:fire) or absence of fire (label:no-fire). It is important to point out that all the features of the instance , are available from the previous day because they either (1) are invariant in time (e.g., DEM), (2) have a slow variation (e.g., land cover), or (3) can be represented with satisfying accuracy by next day predictions (e.g., wind, temperature). Thus, our problem is formulated as a binary classification task; the goal is to learn, using historical data, a decision function , comprising a set of hyperparameters H to be properly selected and a set of parameters to be properly learned, that, given a new instance accurately predicts label .

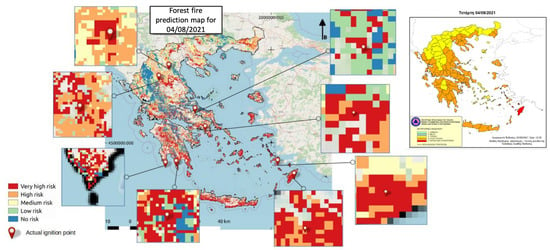

In our real-world operational setting, a fire service needs to be provided, each previous day, with a map that assigns fire predictions for the whole country’s territory, so that they can properly distribute their forces. In case the majority of the map is predicted as fire, this map is useless, even if all fires are eventually predicted correctly, since the available resources/forces are not adequate to cover the whole territory. On the other hand, assigning fire predictions to an adequately small part of the whole territory but missing e.g., half of the actual fires is obviously equally undesired; while predicting fire occurrences in a very small part would allow the fire service to efficiently distribute their forces there, there would exist several areas that would actually have a fire occurrence but no fire service forces to handle them. Thus, a proper evaluation measure in our task should favor models that identify the majority of the actual fire events, while, in the same time, not falsely classify the majority of a territory. This requires the joint inspection of the measures of sensitivity and specificity in order to evaluate the quality of a method. Sensitivity is defined as the recall of fire instances, i.e., the ratio of predicted (by the method) fire instances to the number of actual, total fire instances and specificity is defined as the recall of no-fire instances, i.e., the ratio of predicted (by the method) no-fire instances to the number of actual, total no-fire instances [15].

This is empirically, based on our work in the problem and our interactions/collaboration with the Greek Fire Service, translated to achieving sensitivity values of ∼90%, while, on the same time, specificity of at least . This of course does not comprise a fixed requirement and, depending on the exact real-world setting and need, another trade-off might be preferable, e.g., something closer to . We emphasize that the methods we propose are not tied to any specific requirement and provide the agility to learn different models that can aim at different sensitivity/specificity trade-offs, as demonstrated in Section 4 and in particular in Section 4.2.3.

We note that the above problem formulation fully aligns with the requirements of a real-world fire prediction system; the adopted spatial granularity of predictions (500 m wide cells) is more than sufficient for the fire services to organize and distribute their resources in a targeted and efficient manner, while the next day horizon provides adequate time for the aforementioned organization. As briefly outlined in Section 1, the task presents certain specificities that render it particularly challenging:

- Extreme data imbalance. Due to the fact that each instance of the dataset corresponds to a daily snapshot of an area (grid cell), it is evident that we end up with extreme imbalance in favor of the no-fire class. Consider for example a fire that spanned for two days of month August 2018 and through an area of 16 grid cells. This fire generates 32 fire instances and more than 3300 no-fire instances for year 2018, if we consider the whole seven-months period, for the specific grid cells. The imbalance becomes even larger given that fire occurrences naturally correspond to a small percentage of a whole territory (country) and that it is rather unusual to have a fire occurrence in the same area during consecutive (or even close) years. Indicatively, considering the whole Greek territory, one of the most prone countries to wildfires, for the 11-year period of 2010–2020, the ratio of fire to non-fire areas (grid cells) is in the order of :100,000. Note that the difference in data distribution to the much more widely uptaken task of fire susceptibility is vast, where even a single day’s fire occurrence in a grid cell generates one fire instance (but no no-fire instances) for the whole prediction interval, which might be e.g., monthly or yearly. As a consequence, most approaches in the literature handling fire susceptibility end up with balanced or slightly imbalanced (at most 1:10) datasets [2,3,4,5,6,8,9,10].

- Massive scale of data. In order to be exploitable by the fire service, a next day fire prediction system needs to produce individual daily predictions for areas that are adequately granular. Consider for example a system that produces predictions per prefecture; it is quite possible that during the summer period, several prefectures are predicted as having a fire, for the same day. Then, it is essentially impossible for a fire service to organize their resources in order to cover the whole range of them. Instead, if the predictions regard small enough areas, it is then feasible to distribute their forces to the areas with the highest risk, even if these individual areas are distributed through various prefectures. To satisfy the above requirement, in our work we consider grid cells 500 m wide, ending up with a total of 360 K grid cells (distinct areas 500 m wide) to cover the whole Greek territory. Considering that, each of these cells “generate” daily instances, for a 7-month fire period and for an 11-year interval, this amounts to a dataset of more than 830 M instances. Such scale makes the task of properly selecting and learning expressive ML models rather difficult, requiring high performance computing (HPC) infrastructure, which is hardly the case for fire services. Essentially, a significant amount of undersampling needs to be carefully performed to produce a realistically exploitable training set, upon which proper cross-validation/model selection processes can be executed.

- Heterogeneity and concept drifts (dataset shift). It is observed from our analysis that different months of each year can demonstrate significant differences with respect to the suitability and effectiveness of different ML models on the task, while different ML models are able to produce quite different prediction distributions, with respect to the sensitivity/specificity trade-off.

- Absence of fire. Finally, it is empirically known that fire occurrence can be caused by rather unpredictable factors (i.e., a person’s decision to start a fire, a cigarette thrown by a driver, a lightning), which are impossible to be captured and utilized as training features within the prediction algorithms; as a result any algorithm deployed to discriminate between fire and no-fire instances (areas) is bound to decide lacking such crucial information and is inevitably expected to classify instances based on their proneness on fire occurrence. Thus, several instances with “absence” of fire are areas that could as well have displayed a fire occurrence based on their characteristics, however, due to almost random factors did not. Such instances lead to significant restrictions of potentially any algorithm’s achieved specificity.

In the following sections we propose solutions that aim to handle, to a certain extent, the above specificities, as well as we discuss potential future steps for further addressing them.

2.2. Study Area and Evaluation Dataset

The area of interest is the whole Greek territory, situated on the southern tip of the Balkans. It is a typical Mediterranean country with a total area of 131,957 km2, out of which 130,647 km2 is land area. The climate of Greece is Mediterranean with usually hot and dry summers and mild and rainy winters with sunshine during the whole year. Due to the influence of the topography (great mountain chains along the central part), the upper part of Greece can be very cold in the winter with significant snowfall events, whereas the south of Greece and the islands experience much milder winters.

Eighty percent of Greece consists of mountains or hills, making the country one of the most mountainous in Europe. A major part, up to of the total surface, represents low altitude areas (0–500 m) which are prone to fire ignition according to [16]. More specifically, it is indicated that the great majority of burnt area falls within the 0–500 m elevation zone (61–86.5%), followed by the 500–1000 m elevation zone (13.4–33.6%).

According to [17], the mean annual rainfall depth ranges from less than 300 mm in Cyclades islands to more than 2000 mm in Pindos mountain range in central Greece. The lower mean monthly rainfall depth is less than 10 mm, in July and August in Aegean islands, Crete, coastal areas of Peloponnese, Athens, and south Euvoia. The average annual temperature ranges from less than 8 °C to 19.8 °C. The lowest temperatures occur at Northern Greece at the mountains’ peaks and the highest at the southern coasts of Crete and Athens mainly due to the urban islet phenomenon. The highest monthly average temperature is observed in July in the plains of central Macedonia, Thessaly, Agrinio, Kopaida, in the plain of Argos, in Tympaki, and in the Attica basin.

Concerning the average monthly sunshine, the highest rates are observed in July in southern Crete, northeast Rhodes and western Peloponnese. The lowest average values occur in northern Greece in the mountains of Rodopi, southeast Greece, and the peaks of Pindos [17].

The topography and the dominant north winds in combination with the vegetation types of central and southern parts of Greece are prime drivers for fire ignition during the summer period [16]. The vegetation cover that makes Greece particularly prone to fire hazard and fire risk, such as coniferous and mixed forests, sclerophyllous vegetation, natural grasslands, transitional woodlands, semi-natural, and pasture areas, corresponds to approximately of the total surface of the country [18].

As is it reported in [16] and other studies in Mediterranean Europe [19,20], wildfire activity in Greece is increased in the southern and more arid parts comparing to the northern and wetter parts, revealing a climatic gradient that strongly affects fire regime.

The construction of a reliable forest fire inventory can be a demanding task but is vital for the prediction of wildfire outbreaks, given that future fires are expected to occur under similar conditions. In this work an exhaustive forest fire inventory and burn scar maps were compiled by obtaining and fusing data from multiple sources, including the FireHub system of BEYOND (http://195.251.203.238/seviri/; accessed on 22 February 2022), NASA FIRMS (https://firms.modaps.eosdis.nasa.gov/active_fire/; accessed on 22 February 2022), and the European Forest Fire Information System (EFFIS) (https://effis.jrc.ec.europa.eu/; accessed on 22 February 2022). The FireHub system provides the diachronic burned scar mapping (BSM) service which maintains an archive of burned areas polygons in high resolution (30 and 10 m) for the last 35 years over the entire country. The burned areas are provided per year, but, given that the problem is defined as a daily fire risk prediction through the study of previous day’s conditions, each polygon should be assigned the exact outbreak date. Thus, given the seasonal FireHUB burned scars produced seasonally by BEYOND, we exploited the active fire and BSM products from NASA FIRMS and EFFIS for cross examination and exact fire date retrieval. More specifically, we validate each FireHUB scar, considering its intersection with a burned area from EFFIS or NASA and also the existence of active fires swarm inside it. The exact fire ignition date is then derived from the active fires product, since this product records the fire event at the time of the satellite pass, whereas the burned areas are recorded in later passes. Finally, a fire inventory for the years 2010–2020 was constructed in order to be used for training, validation, and testing [13,14].

2.3. Training Features

Twenty five forest fire influencing factors were considered, including topography-related, meteorology-related and Earth Observation (EO)-related variables. All factors were gathered and harmonized into a 500 m spatial resolution. Meteorology features had coarser granularity while topography-related (DEM) and land cover had finer granularity than 500 m. The former were processed and rescaled at the desired resolution by nearest neighbor interpolation [21]. From the latter, the DEM was downscaled via bilinear interpolation and land cover via the weighted majority resampling for continuous and categorical raster values. The weighted majority resampling was applied considering higher weights on more fire prone categories. We note that the initial resolution of each dataset is given in the column Source Spatial Resolution of Table 1. Next we present them in detail.

DEM. Topographic variables include the digital elevation model (DEM) which represents elevation and three more derivative factors: aspect, slope, curvature. For this, the European DEM ( https://land.copernicus.eu/imagery-in-situ/eu-dem; accessed on 22 February 2022) at 25 m resolution provided by Copernicus programme was employed. According to [16], the elevation distribution of the burnt areas shows a trend since the great majority falls within the 0–500 m elevation zone. The variables were downscaled via bilinear interpolation. The code names are DEM, aspect, slope, and curvature.

Land cover. Fire ignition and spread is strongly affected by land cover type as well. Sclerophyllous vegetation and natural grasslands form the main fuel sources of fires in Greece and the Mediterranean region generally. Copernicus’ Corine Land Cover (https://land.copernicus.eu/dashboards/clc-clcc-2000--2018; accessed on 22 February 2022) (CLC) datasets from the years 2012 and 2018, with spatial resolution 100 m, were retrieved and processed for the purposes of this study. Specifically, after applying weighted majority downscaling to the datasets, the Corine 2012 dataset was used to represent the corresponding variable (code named corine) for the instances up to the year 2015, while the Corine 2018 was used to represent the instances after 2015. Each land cover category is assigned with a distinct constant value, thus forming a feature with categorical nature, which was transformed to one-hot encoding features [22].

The factors of meteorology (like wind, temperature, precipitation) are crucial fire driving factors because their variability has impact both on the occurrence and the intensity of forest fires [23,24]. The meteorology variables (Temperature, Dewpoint, Wind speed, Wind direction, and Precipitation) were all extracted from ERA5-Land reanalysis datasets of Copernicus EO program and dowsncaled at 500 m via nearest neighbor interpolation. ERA5-Land provides hourly temporal resolution and enhanced native spatial resolution at 9 km. Each cell was divided and, as a result, the following feature groups were considered:

Temperature. From the hourly values of the 2 m temperature dataset, the minimum, maximum and mean aggregations for the temperature were calculated and introduced as the features Maximum temperature, Minimum temperature, Mean temperature in the dataset, with code names max_temp, min_temp, mean_temp.

Dewpoint. A feature with dewpoint temperature was added in the current work, compared to [14], which measures the humidity of the air. According to ERA5 Land definition it is the temperature at which, the air at 2 meters above the surface of the Earth would have to be cooled for saturation to occur (https://cds.climate.copernicus.eu/cdsapp#!/dataset/reanalysis-era5-land?tab=overview; accessed on 22 February 2022). Processing the hourly values of the dewpoint from ERA5 land, the minimum, maximum and mean aggregations for the dewpoint were extracted introducing the features Maximum dewpoint, Min dewpoint, Mean dewpoint in the data set with code names max_dew, min_dew, mean_dew.

Wind speed. The wind is long known to be one of the most influencing factors for fire ignition and spread [24], therefore the maximum wind velocity of the day was extracted from ERA5-Land reanalysis datasets at 10m for u, v components. We named this feature Maximum speed of the dominant direction (code name dom_vel) as it expresses the maximum wind speed for the direction of wind that is measured the majority of times (dominant direction).

Wind direction. Although wind direction cannot influence directly the primary ignition, it can help or prevent the fire to evolve in combination with other factors. To study and estimate the influence of the wind direction, the Wind direction of the maximum wind speed and Wind direction of the dominant wind speed features were introduced in the feature space with code names dirmax and domdir respectively. The former is the direction of the wind when during maximum wind speed within the day and the latter is the direction of the wind that was the dominant within the day. The eight major wind directions that were considered as values, formed a feature with categorical nature that was also transformed to one-hot encoding.

Seven-day accumulated precipitation. At regions that it recently rained, the soil moisture and humidity reduce the probability of fire ignition. To exploit this information, we formed the precipitation feature as an accumulation of the past seven days of the ERA5 land total precipitation variable. The code name of the feature is .

Vegetation indices. The existence and state of vegetation is another important factor for fire ignition as dry vegetation is more prone to fire than fresh. Such information can be derived by empirical remote sensing vegetation indices based on calculations of the light reflectance in specific frequencies of the spectrum [25,26]. The remote sensing index (Normalized Difference Vegetation Index) and (Enhanced Vegetation Index) [27] are two of the most utilized vegetation indices in many applications for which several analysis ready products are provided. For this work the two indices were collected from NASA products of the Moderate Resolution Imaging Spectroradiometer (MODIS), MYD13A1 and MOD13A1. Each pixel value of those products is an average of the daily products collected within the 16-day period, with 500 m spatial resolution (https://modis.gsfc.nasa.gov/data/dataprod/mod13.php; accessed on 22 February 2022).

LST. It is well known that prolonged heat leads vegetation to water stress conditions and increased plant temperature [28]. These conditions can be represented by the radiative skin temperature of the land surface (LST), a commonly used remote sensing index. For this reason MODIS MYD11A2 and MOD11A2 products of daytime and nighttime surface temperature (https://modis.gsfc.nasa.gov/data/dataprod/mod11.php; accessed on 22 February 2022) (LST-day, LST-night) were exploited to further assist fire risk prediction. Each pixel value of those products is an average of the daily LST products collected within the 8-day period, with 1 kilometer (km) spatial resolution which was downscaled at 500 m by nearest neighbor interpolation.

Fire history and Spatially smoothed fire history. Up to this point we considered the explicitly identifiable and measurable fire driving factors, like the vegetation type, the weather conditions, the altitude, etc. However, there exist factors that cannot be easily identified or measured, like human activity, difficulty in accessing the area by firefighting means, special ground morphology, etc. A historical frequency metric of the fire ignitions in an area during a large number of years would probably incorporate, to some extent, not only the identifiable and measurable factors but also the unidentifiable or difficult to measure influencing factors. To this end, we introduced two new features, Fire history and Spatially smoothed fire history with code names and respectively. Fire history is a count of all the appearances of fire from 1984 to 2009 in each grid cell, while Spatially smoothed fire history is the blurred (smoothed) image of the Fire history feature after applying a low-pass linear filter in order to smoothly disperse the frequency feature. Specifically, to apply this filter, an 81 × 81 kernel of the box blur type was used to calculate the convolution [29,30]. The source of the Fire history feature is the diachronic BSM service of Firehub from 1984 to 2009. The BSM polygons were mapped to the 500 m grid to annotate the fire cells for each single day in that time period. The BSM period used for creating this feature did not extend beyond the year 2009 for avoiding any interference with the training dataset which starts from the year 2010.

Cell coordinates. The coordinates of a region are features with similar purpose to the Fire history and Spatially smoothed fire history described previously, since they can be used to relate location with the fire ignition risk. In the case of x position and y position this relation is sought in the possible correlation of the coordinates with the output labels (fire and no-fire). For example, if in the north of the country (higher y coordinate) less wildfires occur, then there might be a substantial correlation between y coordinate and fire risk. Of course, a combination of reasons may be responsible for this, like the lack of forests, the different vegetation, the lower temperature or even a better organized civil protection service. In any case, these features, together with Fire history and Spatially smoothed fire history, can potentially assist in the prediction of the total fire risk from known and even unknown causes. The code names of the features are xpos and ypos.

Month of the year and Week day. As shown in related works [24,31], some calendar cycles (months, weeks) are related to the fire ignition risk. The months of the year differ significantly in the meteorological conditions, the vegetation status, and the type and intensity of human activities. Months with higher mean temperature and wind tend to be the months when the most fire ignitions occur. The differentiation in the week days that may affect the fire ignition regards mainly human activities. During weekends many people travel from large cities to rural areas, thus increasing the probability of human caused wildfire ignitions. However, the influence of the Week day is shown to be less important than that of the Month of the year [31]. The code feature names or these features are and . These categorical features were also transformed and introduced as one-hot encoding in the training dataset.

Table 1 summarizes the aforementioned features, denoting with bold the ones that were added in the current work, as compared to our previous one [14].

Table 1.

Synoptic presentation of features. The first column contains the type/category which corresponds to a suit of products from the same source, the second column is the name of the feature and the third contains the shorthands of the names. In the next two columns we find the spatial and temporal resolution of the feature’s source and the last column contains the reference of the source. The newly added features in this study are noted with bold lettering.

Table 1.

Synoptic presentation of features. The first column contains the type/category which corresponds to a suit of products from the same source, the second column is the name of the feature and the third contains the shorthands of the names. In the next two columns we find the spatial and temporal resolution of the feature’s source and the last column contains the reference of the source. The newly added features in this study are noted with bold lettering.

| Category | Feature | Code Name | Source Spatial Resolution | Source Temporal Resolution | Source |

|---|---|---|---|---|---|

| DEM | Elevation | dem | 25 m | - | Copernicus DEM |

| Slope | slope | ||||

| Curvature | curvature | ||||

| Aspect | aspect | ||||

| Land cover | Corine Land Cover | corine | 100 m | 3 years | Copernicus Corine Land Cover |

| Temperature | Maximum daily temperature | max_temp | 9 km | hourly | ERA5 land |

| Minimum daily temperature | min_temp | ||||

| Mean daily temperature | mean_temp | ||||

| Dewpoint | Maximum dewpoint temperature | max_dew | 9 km | hourly | ERA5 land |

| Minimum dewpoint temperature | min_dew | ||||

| Mean dewpoint temperature | mean_dew | ||||

| Wind speed | Maximum wind speed | dom_vel | 9 km | hourly | ERA5 land |

| Wind direction | Wind direction of the maximum wind speed | dir_max | 9 km | hourly | ERA5 land |

| Wind direction of the dominant wind speed | dom_dir | ||||

| Precipitation | 7 day accumulated precipitation | rain_7days | 9 km | hourly | ERA5 land |

| Vegetation indices | NDVI | ndvi | 500 m | 8 days | NASA MODIS |

| EVI | evi | ||||

| LST | LST-day | lst_day | 1 km | 8 days | NASA MODIS |

| LST-night | lst_night | ||||

| Fire history | Fire history | frequency | 500 m | daily | FireHub BSM |

| Spatially smoothed fire history | f81 | ||||

| Cell coordinates | x position | xpos | 500 m | daily | FireHub cell grid |

| y position | ypos | ||||

| Calendar cycles | Month of the year | month | 500 m | daily | Fire Inventory date field |

| Week day | wkd |

3. Method

The proposed methodology implements a machine learning pipeline that aims to learn scalable and accurate models for next day fire prediction, handling the large scale of available training data and, on the same, time ensuring the proper assessment and generalizability of the models. The first step of the pipeline comprises feature extraction, i.e., producing vector representations of the instances (grid cells/areas) derived from meteorological, topographical, vegetation, earth observation, and historical characteristics of the areas, as already described in Section 2.3.

The next step consists in performing a cross-validation procedure on the training dataset to compare a series of machine learning algorithms with respect to their effectiveness and generalizability on the task. We include several state-of-the-art tree ensemble algorithms (Random Forest, Extra Trees, XGBoost) and a series of shallow Neural Network architectures, as described in more detail in Section 3.1. The aforementioned algorithms, depending on the selection of their hyperparameters, can become adequately expressive, ensuring a low bias in our methods. Nevertheless, as discussed in Section 5, more complex/expressive models could potentially capture deeper patterns in our data and, thus, further increase the prediction effectiveness. An extensive hyperparameter search for each algorithm is performed via two alternative cross-validation schemes in order to identify the best performing models, with respect to different evaluation measures, as detailed in Section 3.2. We consider the measures of ROC-AUC, f-score (considering precision and recall of fire class), as well as hybrid measures that are derived by the weighted combinations of sensitivity (recall of fire class) and specificity (recall of no-fire class). In the following subsections, the proposed approach is described in detail.

3.1. ML Algorithms

We adopt a set of state-of-the-art classification algorithms, that includes tree ensembles (Random Forest, Extremely Randomized Trees, and XGBoost) and shallow NNs (up to five layers). Our goal is twofold: (a) to consider expressive algorithms, that are well proven on a plethora of classification tasks as well as on wildfire risk prediction [1] where instances are represented in the form of tabular data and (b) to represent relatively diverse algorithmic rationales. The latter is particularly significant since the handled problem is quite complex and different algorithms may perform better in different settings (e.g., see Section 4.2). Next, we briefly present the main characteristics of the considered algorithms.

Random Forest (RF) [32] is an ensemble of multiple decision trees (DT) that generally exhibits a substantial performance improvement over plain DT classifiers. The algorithm fits a number of DT classifiers using the bootstrap aggregating technique; it extracts random samples (with resampling) of training data points when training individual trees, considering random subsets of features when splitting nodes. Classification of unseen samples is performed through majority voting between the individual trees.

Extremely Randomized Trees (ET) [33] are also ensembles of multiple decision trees, similar to RF with two main differences; ET use the whole original sample to build the trees and the cut points are selected randomly instead of searching for the optimum split.

Extreme Gradient Boosting (XGBoost-XGB) [34] is an implementation of gradient boosted decision trees. In this case, trees are not independently but sequentially constructed and, at each iteration, more weight is put on instances that were misclassified by the learned trees of the previous step.

Neural Networks [35,36] are widely used algorithms that consist in stacking layers of nodes, connected with edges which are assigned weights during the training of the model, via back-propagation.

There exists a plethora of NN variations with respect to the architecture, optimization algorithms and hyperparameters used. In our setting, shallow NN architectures of up to five hidden layers, with Adam optimizer [37] and drop-out [38] were considered. Herein we denote them as and , when we use them without and with drop-out respectively. We note that, as detailed in Section 3.2, in this work the training of the models is performed in significantly undersampled (with respect to the no-fire class), balanced versions of the initially available training data. Thus, deploying deeper NNs is empirically not expected to increase the effectiveness of the models. Nevertheless, we consider as part of our future work the exploration of deeper architectures in training our models on the initial, vast-sized training data.

For each of the above algorithms, we consider several hyperparameters to search and optimize through the cross-validation process, the most important of which we briefly enumerate next. We note that the complete hyperparameter spaces that were searched for each model are provided in the Appendix A.

The most important arguments during hyperparameter search (performed within the cross-validation process) are the number of different hyperparameterizations to try (n_iter), and the number of folds to use for cross validation. In the tree ensembles case, we set n_iter to 200 and the number of folds to 10.

Hyperparameters of the Random Forest classifier include split criterion, to be used in individual trees (estimators), total number of estimators to be constructed (n_estimators), minimum number of samples in estimator leaf node (min_samples_leaf), maximum number of features to be considered in constructing individual estimators (max_features), minimum number of samples required to perform a split in estimator (min_samples_split), maximum depth of estimators (max_depth), and the function to measure the quality of a split (criterion). A wide range of values was tested for each of the aforementioned hyperparameters, i.e., 50 to 1500 estimators and 10 to 2000 or None for max_depth.

Hyperparameters of the Extremely Randomized Trees classifier are similar to those of RF. The hyperparameter search was based mainly on the number of decision trees in the ensemble (n_estimators), the number of input features to randomly select and consider for each split point and the minimum number of samples required in a node to create a new split point (min_samples_leaf).

Regarding the particular parameters of Extreme Gradient Boosting, gamma, lambda, and alpha parameters were tuned to increase the model performance. These parameters refer to the minimum loss reduction required to make a further partition on a leaf node of the tree, the L2 regularization and the L1 regularization respectively. Extensive search was performed with values that range from 1 to 21 for lambda, from 0 to 1000 for gamma and 0 to 100 for alpha. The higher the parameters’ values, the more conservative the algorithm will be.

In the case of Neural Networks we are using a fully connected architecture (FCNN) and the most important parameterization is the number of fully connected layers together with number of nodes in each layer. The parameter space contains two basic schemes of FCNN, one wide and one narrow. In the wide scheme, the FCNN is formed with minimum one to maximum four internal layers comprising from 100 to 2000 nodes at intervals of 100 nodes; in the narrow scheme the FCNN is formed from minimum one to maximum five internal layers comprising from 10 to 100 nodes at intervals of 10 nodes. Other important parameters for the FCNNs are the optimization algorithm, the loss function, the batch size, and the number of epochs to be trained. The number of epochs in our training scheme is dynamic and it depends on the Keras (https://keras.io/; accessed on 22 February 2022) framework’s class. Based on preliminary training runs, where only the parameters were tried, we have determined a combination of and to trigger the training stopping when the model learning stabilizes. The defines the difference of the monitored measure (e.g., , ) to be considered as improvement and the is the number of epochs to continue training without improvement. In the same way we determined the to be used, we derived the value from the best model performances from preliminary training runs, where only that parameter was varied. The optimization algorithm was also selected likewise, through dedicated preliminary runs for tuning that parameter. The best performing optimization algorithm found to be , using the default parameters from Keras library. Briefly, the algorithm is based on with the improvement of automatically adapting the which is constant in the latter algorithm [37]. The type of the output, a two node layer representing the two classes imposed the use of the standard loss function.

During the preliminary training-validation runs, alternative subsets of the input features were fed to the different models for getting a first evaluation of the performance achieved due to the newly added features. Those runs clearly showed that the FCNN models performance was considerably lower when the features concerning calendar cycles and wind direction were part of the feature subset (month, wkd, dir_max, dom_dir), while those features did not harm the ensemble trees algorithms. Consequently those features were removed from the input dataset used for the FCNN in our subsequent experiments, while the reasons for this different behavior between FCNN and ensemble trees is to be further investigated.

Particular emphasis needs to be given on the class_weights hyperparameter, which is common to all the deployed algorithms and allows them to behave in a cost-sensitive manner with respect to the minority class of our setting (fire class) [39]. This hyperparameter allows to adjust the misclassification cost of each instance (depending on the class that it belongs to) that is imposed on the error function being optimized during the training iterations. This way, assigning a higher weight to the instances of the fire class, the algorithm can potentially, to some extent, compensate for the extreme imbalance of the data. Of course, one could claim that since the training of all considered models is performed on balanced (undersampled) versions of the initial data, searching this hyperparameter’s space is unncesessary; nevertheless, our extensive experiments demonstrate the opposite. Nearly all the best performing models in the several experimental settings comprise a hyperparameterization that assigned higher weight to the fire class.

3.2. Cross-Validation Schemes and Measures

As discussed in Section 4.1, in our real-world operational setting, a fire service needs to be provided, each previous day, with a map that assigns fire predictions for the whole country’s territory, so that they can properly distribute their forces. This is empirically translated to achieving sensitivity (alternatively recall of the fire class) values of ∼90%, while, on the same time, specificity (alternatively recall of the no-fire class) of at least . In what follows, we first provide a short presentation of a typical cross-validation process, in order to point out its shortcoming with respect to our large scale and extremely imbalanced setting. We then present our proposed methodology, which comprises task specific evaluation measures (exclusively used for model selection on the validation sets and not for model assessment in the test sets) in combination with two alternative cross-validation schemes, aiming to produce ML models that satisfy the aforementioned empirical and practical requirements.

3.2.1. The Generic Methodology

In general, applying a cross-validation scheme [36] has a twofold purpose: (a) to search the hyperparameter space of the adopted ML algorithm(s), so as to identify configurations (hyperparameterizations) that optimize the effectiveness of the model with respect to a selected measure, and (b) to assess the generalizability of each configuration/model, considering the achieved effectiveness between the different partitions of the dataset (training/validation/test). The rationale is that the available dataset is split into three discrete partitions that are utilized for different purposes.

The training set is used to learn the parameters of the considered models (algorithms with specific hyperparameterizations), that best optimize an objective function that compares class predictions of the model and actual classes of the training instances (usually targeting to optimize the overall accuracy of the model, due to inherent restrictions of most algorithmic implementations [39]).

The validation set is utilized in order to assess the effectiveness of the learned models, not on the instances they have learned their parameters, but on separate ones. Depending on the specificities of the task to be solved (e.g., which classes are more important to be correctly predicted, the distribution of the data with respect to the classes) different measures might be selected in order to assess and compare the effectiveness of the models, such as ROC-AUC, f-score, sensitivity, etc. The comparative effectiveness of the assessed models on the validation set is examined in order to select the best achieving model for testing/deployment. Further, the absolute and relative effectiveness values achieved in the training and validation set are used to provide an estimation of the performance of the models with respect to overfitting and underfitting. If a model does not manage to reach high effectiveness values on the training set, it underfits the data, meaning that it not expressive (complex) enough to capture the desired patterns on the data. The lack of expressiveness (alt. low complexity or high bias) might be due to both the lack of proper features and/or the (low) complexity of the ML algorithm itself, i.e., what functions it applies on the input data/features. On the other hand, if a model achieves high effectiveness values on the training set, but considerably low ones on the validation set, this is an indication of overfitting: the model is more complex than required and, as a result, overfits the training set, essentially learning even the noise/variance of the training instances. A first verification of a model’s effectiveness and robustness is provided when the model performs well on the training set and, similarly well on the validation set. A more trustworthy estimation is provided via the utilization of the test set.

The test set is utilized in order to assess the effectiveness of the selected model on an unseen dataset. Since the test set is not involved in the process of selecting the model (in contrast to the validation set), the effectiveness reported on it comprises an unbiased estimation of the robustness of the model, regarding its effectiveness and generalization ability.

Cross validation schemes, through their several variations (plain train/validation/test split; cross-validation; nested cross-validation), normally require that the individual dataset partitions follow roughly the same data distributions. It is evident that a model that is trained and assessed on instances of a specific distribution, no matter how effective might be, is not guaranteed to perform similarly if deployed on a different distribution. We note here that distribution may regard both the characteristics of the instances (distributions of the individual training features values), as well as how the instances are shared through the classes of the task (in our case, through fire and no-fire classes).

The above requirement raises a significant issue in the next day fire prediction setting, due to the scale and the imbalance of the underlying data. In particular, if we regard a daily deployment basis, the considered models need to be deployed (equivalently tested) on ∼365 K instances, out of which, only a few tens might belong to the fire class. While the deployment of such models is relatively lightweight, the bottleneck lies in training them. In order to obtain a sufficient number of fire instances so that the classification algorithms are properly trained (i.e., a few thousand of fire instances), one needs to include in their training set daily instances spanning almost a decade. Simply put, if we consider only the seven months of each year (April–October for the Greek territory) for the fire season, a decade of daily instances summing up to more than 830 M instances needs to be used as training set, out of which the train/validation partitions will be derived, within a cross-validation process. The above number of instances is prohibitive for performing a hyperparameter search via cross validation, since training a single algorithm, with a single hyperparameterization on conventional hardware might take days to execute on data of such scale.

The most common practice in order to alleviate the above bottleneck is to perform undersampling of the majority class so that the training dataset is considerably reduced, rendering the execution of a proper cross-validation scheme feasible. The issue with this approach is that it drastically changes the distribution of no-fire instances in the training set. Indicatively, considering the aforementioned Greek territory dataset, the number of instances drops from ∼830 M to ∼13 K, if we consider the scenario where a balanced (containing almost equal number of fire and no-fire instances) train/validation setting is produced. As a consequence, a ML model trained, optimized and selected based on a specific evaluation measure (e.g., f-score) in this (balanced) train/validation dataset, cannot be expected to demonstrate the same effectiveness behavior on a test dataset that maintains the initial, real-world, extremely imbalanced distribution.

Finally, we emphasize that usually, in a typical cross-validation process, the same evaluation measures (e.g., f-score) are used both to perform model tuning and selection on the validation set and to assess the final models on the test set.

3.2.2. The Proposed Schemes

The proposed pipeline is realized via (a) two alternative cross-validation schemes, that differentiate on the validation set selection, thus implementing two model selection alternatives and (b) task specific, hybrid evaluation measures that are used for model selection during cross-validation with the aim to maximize the performance of the models (with respect to sensitivity and specificity) on the real-world test sets.

Default cross-validation scheme.Given the massive amount of training data, and the heavyweight processing required during the hyperparameter search in a cross-validation scheme, we perform undersampling on the initial training dataset (in particular, on the no-fire instances), and produce a balanced training set of 25 K instances in total. Undersampling is performed by examining the spatial and temporal attributes of no-fire instances. In particular, the instances are spatially sampled uniformly on the whole territory of interest, while temporally the no-fire instances are sampled according to the the yearly and monthly distribution of the fire instances. Then, a k-fold cross-validation process is performed, where the training dataset is split into k subsets (folds) and k training sessions are executed. In each training session k-1 folds are used for training, leaving each time a different fold to be used as validation set. Since the number of eventual training instances in the undersampled training set (∼25 K) allows it, we set . Further, to account for the strong spatial correlations in the data, which could lead to data leakage and model overfitting, a strict rule was followed in the 10-fold splitting process: cells of a specific day were not allowed to be distributed in more than one fold. This rule effectively prevented neighboring cells from the same day and the same fire event to be included in both the training and the validation folds during cross-validation. Omitting this rule would probably produce validation partitions easier to predict but it would also compromise the model generalization capability [13].

During the process, a large grid of hyperparameterizations for each algorithm is sampled, each creating a model that is trained on the training set and assessed on the validation set. The best performing models are selected based on the average validation performance on all folds with respect to several considered evaluation measures, as described next. We point out that the selected models are eventually assessed on a hold-out test set, which maintains the real-world, extremely imbalanced distribution of classes (1:100 K ratio of fire/no-fire instances).

Alternative cross-validation scheme. Inspired by time series validation, we consider a cross-validation scheme that comprises the following: (a) Each validation set chronologically succeeds the respective training set, considering yearly granularities. For example, if a training set comprises instances from years 2010 to 2013, then the respective validation set includes instances exclusively from year 2014. (b) At each iteration of the validation process, the training set is additively increased with the validation year of the previous iteration, while the next year becomes the next validation set. Continuing the example above, the second iteration of the process would comprise a training set from years 2010 to 2014 and a validation set from year 2015. (c) Training sets are always created by undersampling the initial datasets to a balanced set of fires and non-fires i.e., reducing the no-fire instances by a factor of 100 K; on the other hand, validation sets are slightly undersampled by just a factor of 10, aiming to maintain, as much as possible, the initial dataset distribution. We note here that in this scheme, ideally, the validation sets should not be undersampled at all, maintaining this way their exact size and distribution. However, validating a series of algorithms and hyperparameterizations on such sizes (indicatively, one month requires ∼11 M predictions), severely slows down the cross-validation process, inevitably leading to considerably less number of hyperparameterizations to be assessed. With this “intermediate” scheme that we propose, we aim at assessing a feasible scheme, that considers validation sets closer to their initial distribution and, on the same time, does not dramatically reduce the number of hyperparameterizations that are sampled and assessed.

The aforementioned scheme has a twofold purpose: (a) to allow us to select the best performing models on validation sets that are very close to the actual, real-world distribution of the data (and not on the severely undersampled version of the default cross-validation setting), and (b) to simulate a more real-world deployment/assessment of the models, where training is always performed on data of years preceding the deployment year.

Task-specific evaluation measures. A plethora of evaluation measures are defined for assessing the effectiveness of models on classification tasks, including accuracy, f-score, ROC-AUC, precision/recall, etc. Depending on the specific task that needs to be solved, different measures are more appropriate, while selecting an inappropriate measure, no matter how widely used it is, can lead to highly misleading conclusions. For example, accuracy, although one of the most widely used measures must not be used in our setting, due to the highly imbalanced nature of the data; a naive model that would classify all instances as no-fire would be assigned an almost perfect accuracy score. Similarly, more elaborate measures such as f-score cannot fully cover the informativeness needs of the task.

Further, in a typical cross-validation setting, an evaluation measure is used not only to assess the final model, but also to select the best model on the validation sets. Typically, exactly the same measure is used in both processes, which is directly related to the end goal of the task, as described above. However, in practice, the extremely imbalanced setting of our problem requires tuning the considered evaluation measure, in favor of the extremely rare, fire class, when used during model selection. To this end, apart from the widely used measures of ROC-AUC and f-score, and based on our empirical experimentation [14], we define a set of task-specific evaluation measures that combine the two most important effectiveness indicators for the task, i.e., sensitivity and specificity, and put additional weight on sensitivity, i.e., the percentage of fires that are identified by the model:

The first measure, ratio-based hybrid is inspired by f-score [40], however, instead of considering precision and recall of the fire class, it directly considers the recall of the two classes. This way, the importance of the two recall values (sensitivity and specificity) can be more intuitively weighted via factor k. This measure was first introduced and assessed in our previous work in [14].

The second measure, sum-based hybrid shybrid adjusts Youden’s index [41], so that, again, sensitivity can be boosted by factor k. Both measures target at the same goal: select the best models of the cross-validation process directly on their joint performance with respect to sensitivity and specificity, and boosting the relative importance of sensitivity by variable factors (k). As shown in more detail in Section 4, we treat these measures as meta-hyperparameters in our evaluation, meaning that different (configurations of the above) measures are used in conjunction with different classification algorithms to produce/select models that achieve the best results (with respect to sensitivity and specificity values) on the hold-out, test sets.

4. Results

In this section we present the results of our experimental analysis. First, the evaluation setting is presented (Section 4.1). Then, we present the evaluation results, including: (i) the considerable improvements we achieve in comparison with our previous work [14], which, to the best of our knowledge, is the only one that solves the specific problem, in its realistic basis with respect to the vast sizes and imbalance of the data (Section 4.2.1); (ii) the contribution of the additional training features that were implemented Section 4.2.2; (iii) the merits that are obtained for different algorithms by utilizing the proposed, problem specific evaluation measures for model selection and the two alternative cross-validation schemes (Section 4.2.3); (iv) how the best models’ performance further generalizes in different test years (Section 4.2.4).

4.1. Evaluation Setting

Table 2 presents a synopsis of the dataset used in our evaluation, derived from the study area presented in Section 2.2. It is comprised of daily instances of grid cells covering the Greek territory, for months June–September, for years 2010–2020. We can observe that the number of fires largely varies through consecutive years, considering both the yearly totals, as well as August individually, further indicating the complexity and multifactoriality of the problem. Another point is that, in most cases, the majority of the fire instances expectedly belong to August, increasing thus the importance of the particular month in the evaluation of the examined models.

Table 2.

Distribution of fire and no-fire instances in the dataset.

Instances from years 2010–2018 are used for training and validation of the examined models (via the two presented cross-validation schemes), while years 2019 and 2020 are used exclusively as hold-out, test sets for evaluating prediction effectiveness. For each algorithm (RF, XT, XGB, NN, NNd) a wide space of hyperparameters was searched during cross-validation, by applying the following hyperparameterization methodologies: (i) the Tree of Parzen Estimators (TPE) and random search from hyperopt library http://hyperopt.github.io/hyperopt/; accessed on 22 February 2022) and the random search of scikit learn library (https://scikit-learn.org/stable/modules/grid_search.html; accessed on 22 February 2022). To select the best performing models on validation sets, the following measures where considered:

- ROC-AUC. Area under the receiver operating characteristic curve [42] is a widely utilized evaluation measure, since it is a measure that summarizes the performance of a classification model over a range of different classification thresholds, that produce different sensitivity/specificity thresholds. Due to its definition, ROC-AUC is imbalance insensitive [39], which is a desirable property for out setting. However, a significant disadvantage of the measure is that it does not allow adjusting the relative importance of sensitivity and specificity values.

- F-score. This is also a widely used evaluation measure [40], that can also tackle data imbalance, since it produces a joint score by weighting precision and recall. Its downside in our setting is that weighting these two factors cannot be easily performed in an intuitive way, since, due to extreme imbalance in combination with the importance that is given on fire class recall (sensitivity), precision values are expected to be orders of magnitude lower than recall.

- rh-2, rh-5. Ratio-based hybrid, with setting weight k to values 2 and 5, are two instantiations our proposed measure (first introduced in [14]), that directly produces a joint score on sensitivity and specificity and allows boosting the importance of the former via parameter k.

- sh-2, sh-5, sh-10. Sum-based hybrid, with setting weight k to values 2, 5, and 10, are three instantiations our second proposed measure that target exactly the same goal as rh-k, but performs the weighting (boosting of sensitivity) in a more direct way, as presented in Section 3.2.

We remind that the aforementioned measures are exclusively used for model selection within the cross-validation process. Instead, for the final evaluation of the assessed methods in the test sets, sensitivity and specificity measures are exclusively utilized, since, as described in and Section 3.2, these two measures need to be jointly (but separately as two individual values) examined to assess our models. The core part of our evaluation examines how combinations of each algorithm’s cross-validation evaluation measure and cross-validation scheme perform on on average on the 2019 test set, as well as specifically on August 2019 in comparison with our previous work (IGARSS21 [14]). A complementary step, to further examine the generalizability of our methods, examines how the best performing models on the 2019 test set perform on average on the 2020 test set. More specifically, the best performing models of the tests on 2019 dataset were considered those with sensitivity score over and specificity over .

In order to facilitate the presentation of the results, next we briefly present the model naming notations that we use in the following subsections.

- Algorithms. The notation for the three tree ensembles, Random Forest, Extra Trees, and XGBoost are RF, XT, and XGB respectively. For Neural Networks, we consider two variations, without and with dropout, denoted NN and NNd, respectively.

- Cross-validation measure. In order to denote that a model has been selected based on a specific evaluation measure on the validation sets, we append the measure’s abbreviation (AUC, fscore, rh2, rh5, sh2, sh5, sh10) at the end of the model. For example, if a RF is selected via shybrid-5 is selected, then it is denoted as RF-sh5.

- Cross-validation scheme. In order to discriminate which of the two presented cross-validation schemes, we append the terms defCV or altCV respectively at the end of the model’s name. Thus, to further denote that the above model has been trained on the alternative cross-validation scheme, then we write it as RF-sh5-altCV.

4.2. Evaluation Results

4.2.1. Overall Effectiveness

Starting our analysis by comparing with our previous work [14], we present in Table 3 a list of models that achieved high performance with respect to sensitivity/specificity metrics from our previous ([14]—denoted igarss21) and our current work (current). Ref. [14] reports prediction values only for August 2019 due to limited computing resources, so comparison is only possible for this month. However, we also include average sensitivity and specificity results for months June–September from our current work We note that the test dataset also contains months April, May, and October, however, we excluded them to simplify our analysis, since the number of fire occurrences within these three months are marginal. We need to emphasize here that our experiments have demonstrated increased average effectiveness scores when including these months, meaning that the excluded months comprise “easier” cases for the proposed models.

Table 3.

Effectiveness (sensitivity/specificity) of the proposed models, compared to [14] on the 2019 test set. In the first column the model is referenced as “Algorithm-Cross Validation measure-Cross Validation scheme” (see Section 4.1) and in the parentheses next to the model we denote the origin of the results (current work or IGARSS 2021 [14]). The second column contains the sensitivity and specificity scores for August 2019 test set while the third column contains the same measures averaged on the most significant months of the wildfire period: June, July, August, and September.

For August predictions, the best models from the present study in most of the cases achieved scores over in sensitivity (models # 4, 5, 7, 8, 9 in Table 3), while they maintained specificity scores over 0.4 (models # 4, 5, 6, 7, 9) and reaching specificity over in one case (model # 4). These values considerably improve the best models of our previous work with respect to both measures.

Further, focusing on the average yearly performance of models of our current work, we can see that specificity is increased, ranging from to , while sensitivity is maintained in the same high levels, as compared to the respective August values. This is an expected behavior, since August is the most prone to fire occurrences month of the year due to various factors (both meteorological and human-induced). As a consequence, the particular month comprises the most challenging case for the assessed prediction models. Nevertheless, we observe that the proposed models essentially maintain the values for the most important measure, i.e., sensitivity, ensuring that, even in the most challenging deployment scenario, the largest percentage of fire occurrences is predicted. In brief, Table 3 demonstrates that the proposed methods, and in particular Neural Networks selected on the proposed hybrid measures can achieve high enough effectiveness values (sensitivity of , specificity ) to be exploitable in real-world deployment scenarios. Next, we present more detailed experiments, further analyzing the individual gains from the several components of the proposed methods.

4.2.2. Gains from New Training Features

In order to demonstrate the contribution of the additional features that were implemented in the current work, we exploit two different instruments that measure in different ways the importance of different features with respect to the response variable (fire occurrence): permutation importance and correlation with the response variable.

The permutation importance algorithm [32] computes each feature’s importance by comparing the model’s performance when having inserted noise in the feature (e.g., by shuffling the values) with the model’s performance when all the features are in their original state. This algorithm belongs to the category of the feature ranking algorithms in the utilized libraries, which means that any estimator can be used (as wrapped model) to compute the feature importance. In our case, we deployed the algorithm on three of the best models that emerged from the test results, one from FCNNs with dropout layer (NNd-nh2-defCV), one from RF (RF-nh5-defCV), and one from XGB (XGB-nh5-defCV). As scoring for the permutation importance we set the ROC-AUC metric. We note that, in this table, the one-hot encoded features were excluded, because the permutation importance algorithm handles each feature separately whereas in the case of one-hot encoded features, the numerous vectors that comprise the encoded categorical feature influence the model more as a whole than each one separately.

In Table 4 we present the first ten features from each of the three permutation rankings. The percentage values denote the drop in ROC-AUC when the specific feature is permuted. For the first two rankings (NNd, RF), the first three features (dom_vel, evi, f81) are identical. While it is naturally expected that wind speed and the EVI index are factors highly influencing the occurrence of fire, we can observe that the newly introduced feature of spatially smoothed fire history (f81) takes the third place in NN and RF and a 7th place in XGB, meaning that the fire history of an area plays an important role in considering the risk of a future occurrence. The coordinates of a cell in the grid (xpos, ypos) take a placement in the first ten most influencing features according the three rankings confirming that the location of the cell has a strong relation to fire risk. Furthermore, of the new features, the land surface temperature (lst_day) and the mean dew temperature (mean_dew_temp) are placed among the ten features, the first in the RF and the latter in the RF and XGB rankings. Overall, we can see that for three different models with good performance, four to five newly introduced features are found in the top-10 list of the most important ones.

Table 4.

Feature ranking using permutation importance with three of the best models emerged from tests for algorithms NNd (FCNN with dropout layer), RF and XGB.

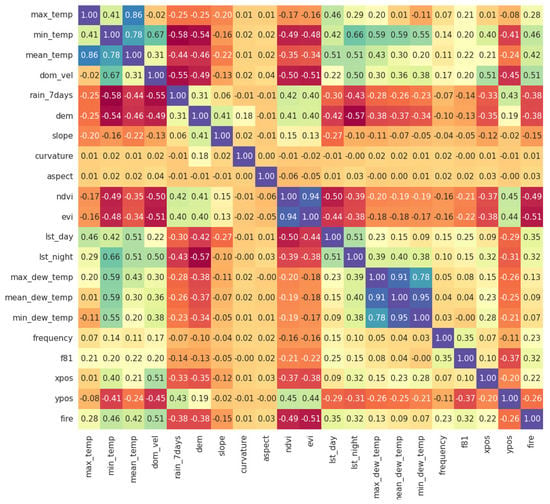

The above findings are further strengthened by examining the correlation between the features and the response variable. Figure 1 presents the values of Spearman’s correlation for all available numerical features. We can observe that almost all introduced features (except for dewpoint temperature ones) present absolute correlation values of more that , reaching up to .

Figure 1.

Spearman’s correlation values between problem’s independent (training features) and dependent (fire occurrence to be predicted) variables.

We note that the above schemes (permutation importance and feature correlation) can only serve as indication and not as proof of the (the degree of) importance of the examined features. For example, permutation importance can be problematic when there are strong correlations between training features. On the other hand, Spearman’s correlation is able to capture only monotonic correlations between variables, while the ML algorithms we deploy are able to identify and exploit more complex (e.g., non-monotonic) relations. Nevertheless, the findings on feature importance provided by the two above schemes, in conjunction with the comparative findings of Section 4.2.1, provide strong indications about the utility of the newly introduced training features.

4.2.3. Gains from Hybrid Measures and Alternative Cross Validation Scheme

In Table 5 we present the effectiveness (sensitivity/specificity) scores achieved by the proposed models on the 2019 test set. In this table, we analyze the contribution of the task specific, hybrid evaluation measures for model selection, as well as the alternative cross-validation scheme, that were presented in Section 3.2. The tested models were chosen from both cross validation schemes for each measure as described in Section 4.1. We emphasize here that the top row of the table refers to the measures that were used during cross-validation to select the best model, which is then assessed in terms of sensitivity/specificity (second row of the table) on the test set.

Table 5.

Comparative scores of the different measures from both the default and the alternative cross validation scheme on test set 2019.

The first observation is that the proposed hybrid measures perform much better than the traditional ones (AUC and f-score). Given the empirical and practical requirement of achieving sensitivity close to and specificity , the best models we can obtain from the two traditional measures achieve values of , , (RF-AUC-defCV, RF-fscore-defCV, NN-AUC-altCV respectively), with the latter being achieved via the proposed alternative cross-validation scheme. On the other hand, the hybrid measures provide a variety of models with better performance, indicatively marked with bold in Table 5. For example, all models based on sh5 measure and the default cross-validation consistently achieve at least sensitivity with specificity ranging from to , while NNd-sh2-defCV achieves values of , which can be considered the best performance according to the aforementioned empirical requirement.

Additionally, in general, we can observe a slightly better performance of NN models compared to the tree ensemble algorithms and in particular regarding the NN with drop-out. This can be potentially/partly justified by the fact that some hurtful features were removed from NNs early on on the experimental process, since they were empirically shown to severely hurt their performance (Section 3.1), while they were left as is for the tree ensembles, since they seemed more robust at handling them at the time. Nevertheless, a more in-depth analysis of this effect is part of our ongoing work.

Another observation regards the expected trade-off between sensitivity and specificity scores for each model, as higher values of the first lead to lower values of the second and vice versa. Given this, we can see that the proposed hybrid measures can be used to increasingly adjust this trade-off in favor of sensitivity; moving rightward in each row of the table, most of the times sensitivity increases while specificity decreases. This is a useful behavior that allows the configurable selection of different models, when the needs on effectiveness slightly differ.