A Ship Detection Method via Redesigned FCOS in Large-Scale SAR Images

Abstract

1. Introduction

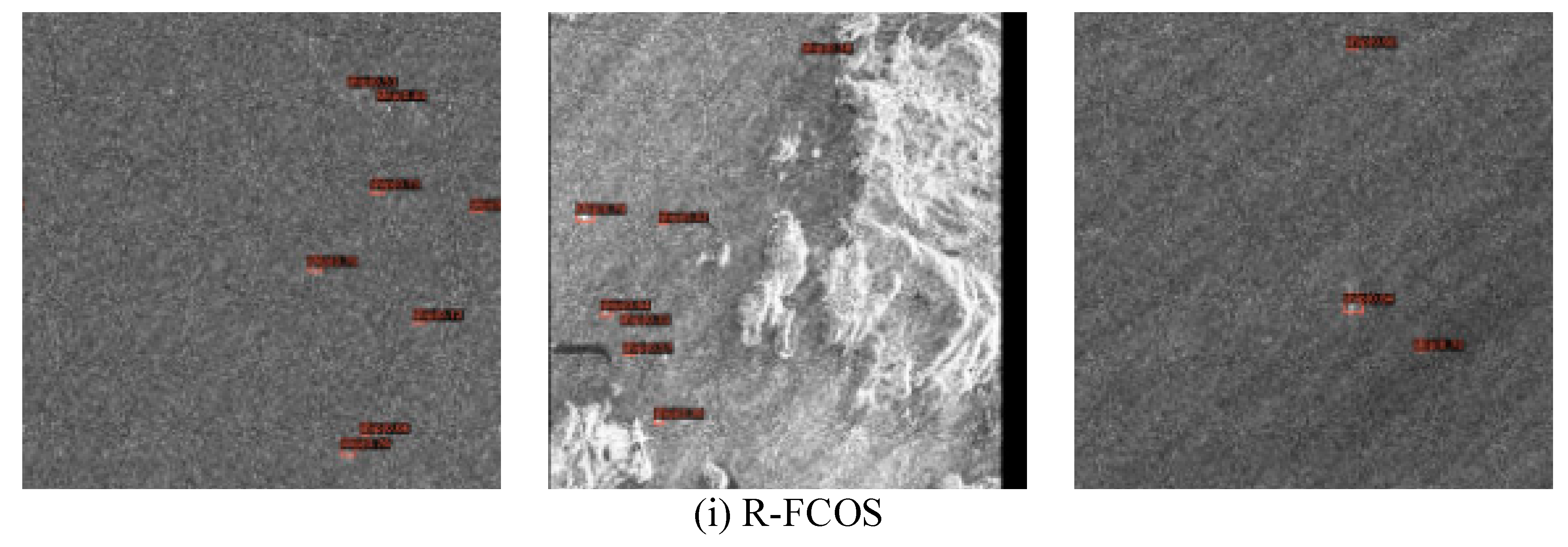

- R-FCOS is shown to eliminate the effect of anchors and to avoid missed detections of small ships.

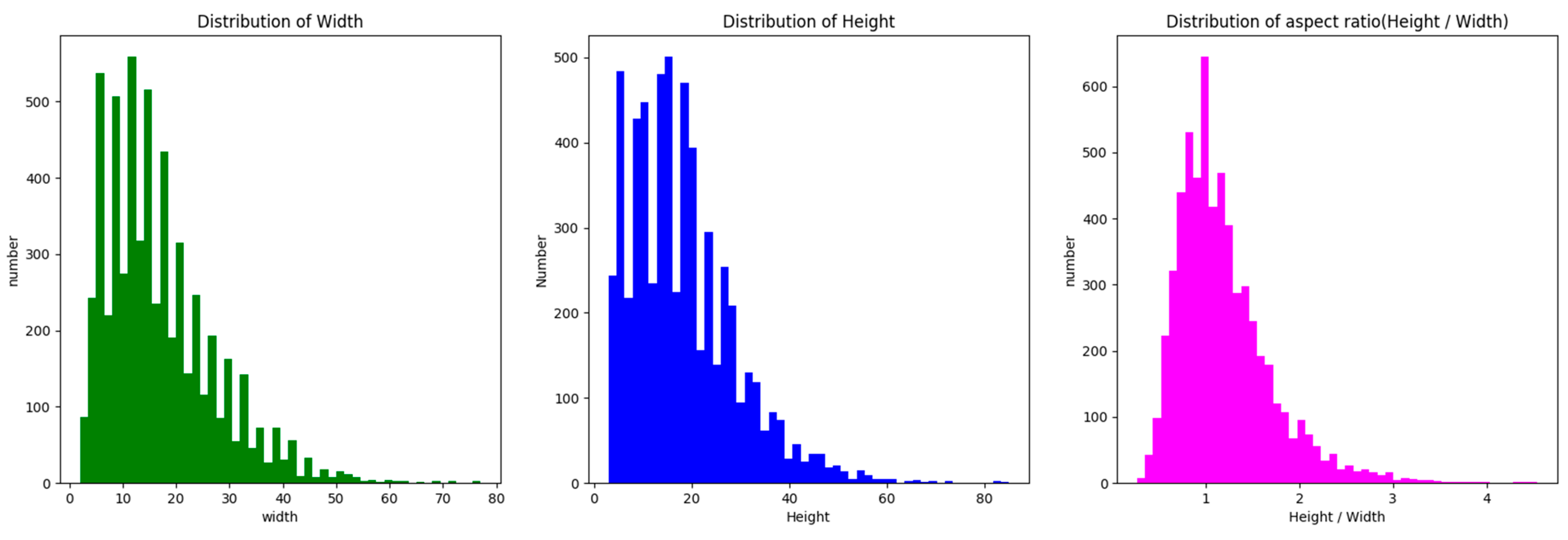

- To account for the particular properties of ships in SAR images, the sample definition was redesigned based on the statistical characteristics of these ships.

- The feature extraction was redesigned to improve the feature representation for dim and small ships.

- The classification and regression stages were redesigned by introducing an improved focal loss and bounding box refinement with complete intersection over union (CIoU) loss.
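The two loss terms named in the last contribution can be illustrated with a minimal sketch of the standard focal loss and the standard CIoU loss in plain Python. This is not the implementation used in this work, and the improved focal loss is not reproduced here. The function names, the (x1, y1, x2, y2) box format, and the default values of `alpha` and `gamma` (the commonly used settings for focal loss) are assumptions made for illustration.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-9):
    """Standard binary focal loss for one prediction (not the improved variant).

    p: predicted probability of the positive class, y: label (1 or 0).
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t)^gamma down-weights examples that are already well classified.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t + eps)


def ciou_loss(box, gt, eps=1e-9):
    """Standard CIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Overlap term: plain IoU.
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    union = w * h + gw * gh - inter
    iou = inter / (union + eps)

    # Distance term: squared center distance over squared diagonal
    # of the smallest box enclosing both boxes.
    rho2 = ((x1 + x2 - gx1 - gx2) ** 2 + (y1 + y2 - gy1 - gy2) ** 2) / 4.0
    enc_w = max(x2, gx2) - min(x1, gx1)
    enc_h = max(y2, gy2) - min(y1, gy1)
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Aspect-ratio term.
    v = (4.0 / math.pi ** 2) * (
        math.atan(gw / (gh + eps)) - math.atan(w / (h + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)

    return 1.0 - iou + rho2 / c2 + alpha * v


if __name__ == "__main__":
    # A confident correct prediction is penalized far less than a wrong one.
    print(focal_loss(0.9, 1), focal_loss(0.1, 1))
    # Unlike plain IoU loss, CIoU still separates disjoint boxes by distance.
    print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)), ciou_loss((0, 0, 1, 1), (5, 5, 6, 6)))
```

For small ships the distance term matters: two non-overlapping boxes both have IoU 0, yet the CIoU loss is larger for the box that lies farther from the ground truth, so the regression still receives a useful signal.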

2. Materials and Methods

2.1. Baseline

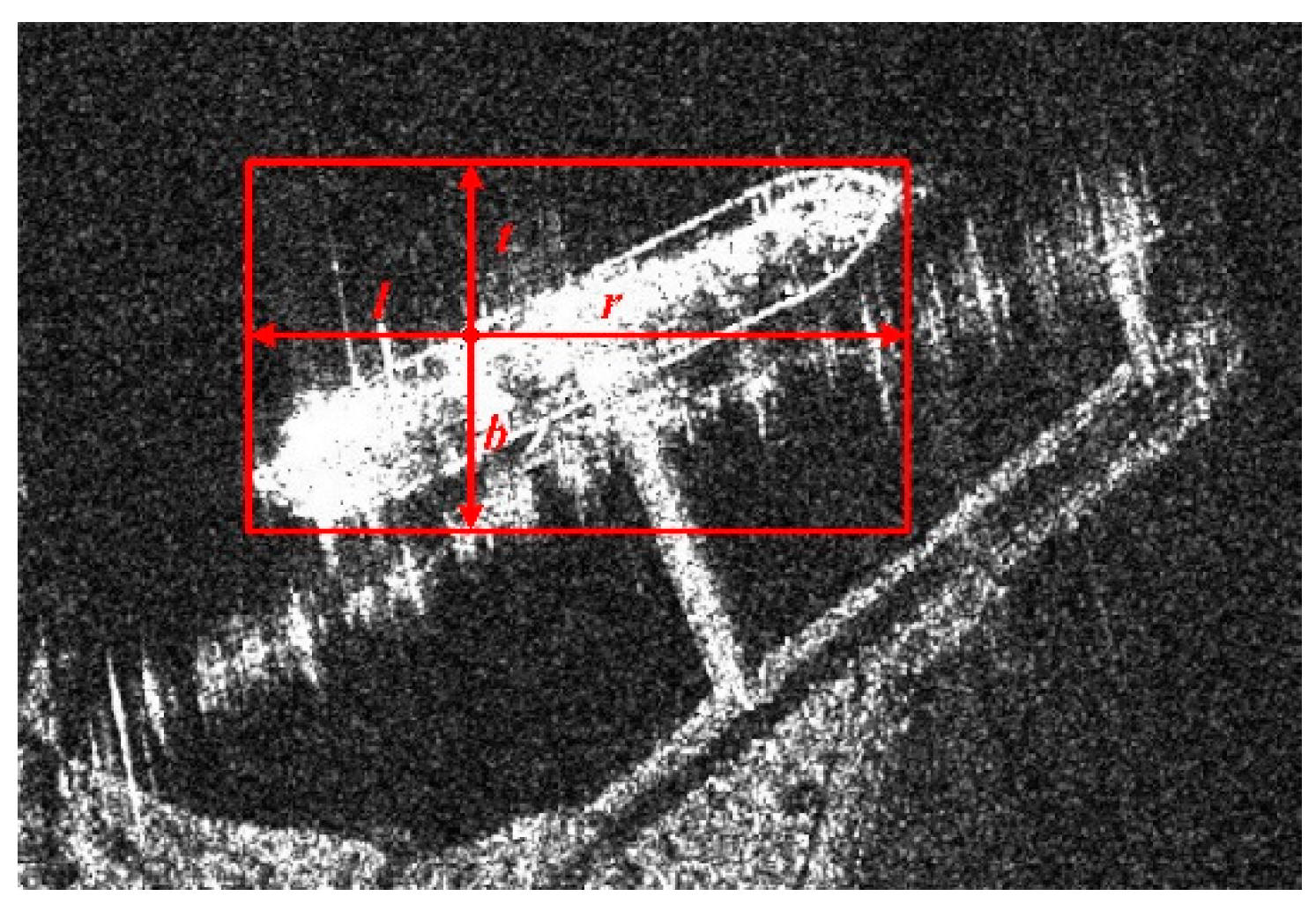

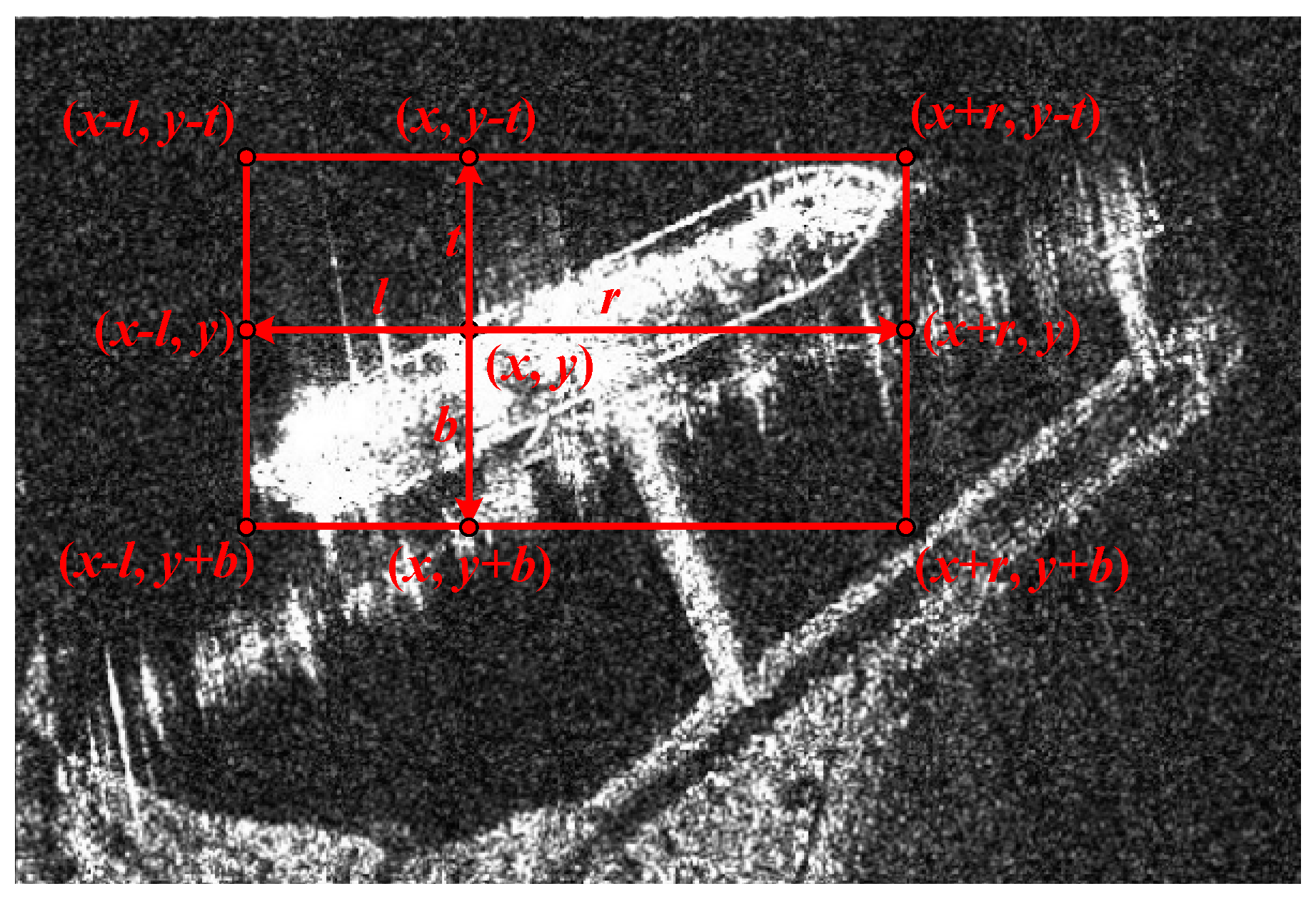

2.2. Sample Definition Redesign

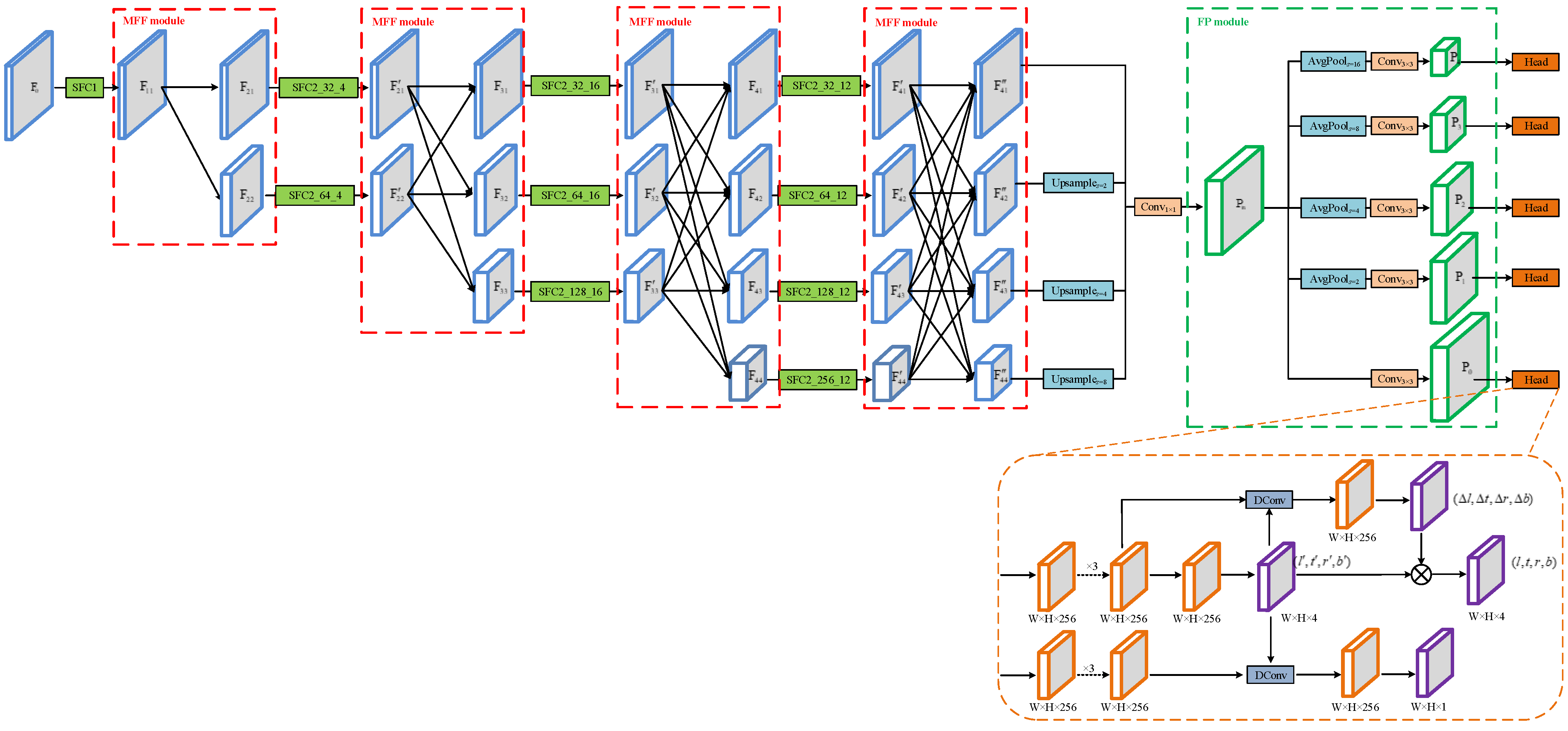

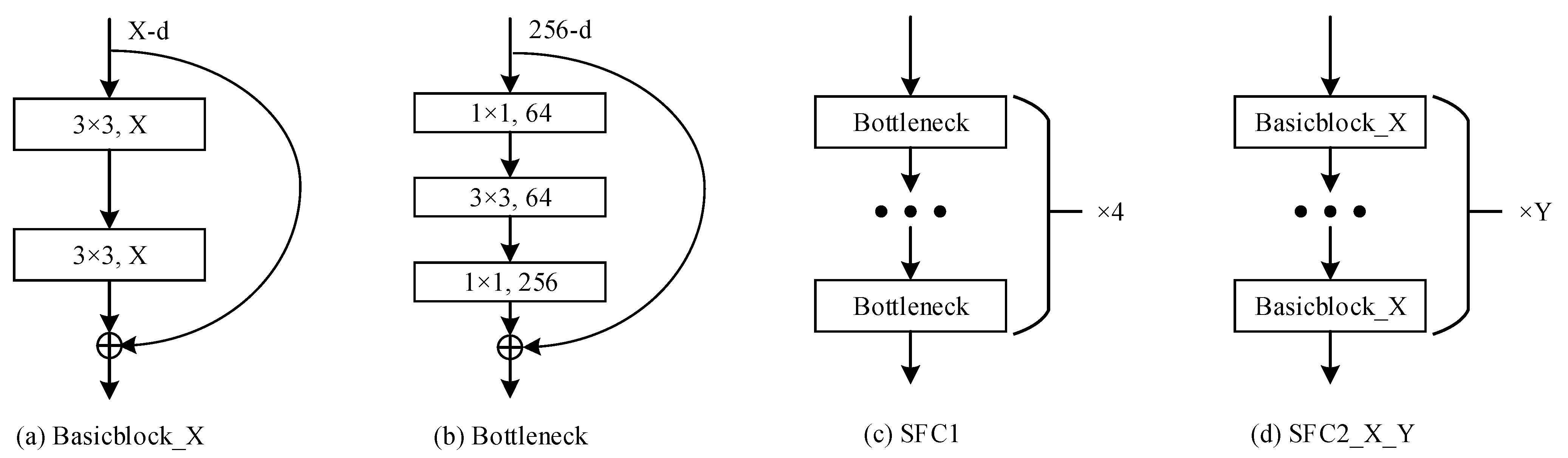

2.3. Feature Extraction Redesign

2.4. Classification and Regression Redesign

2.5. Loss Function

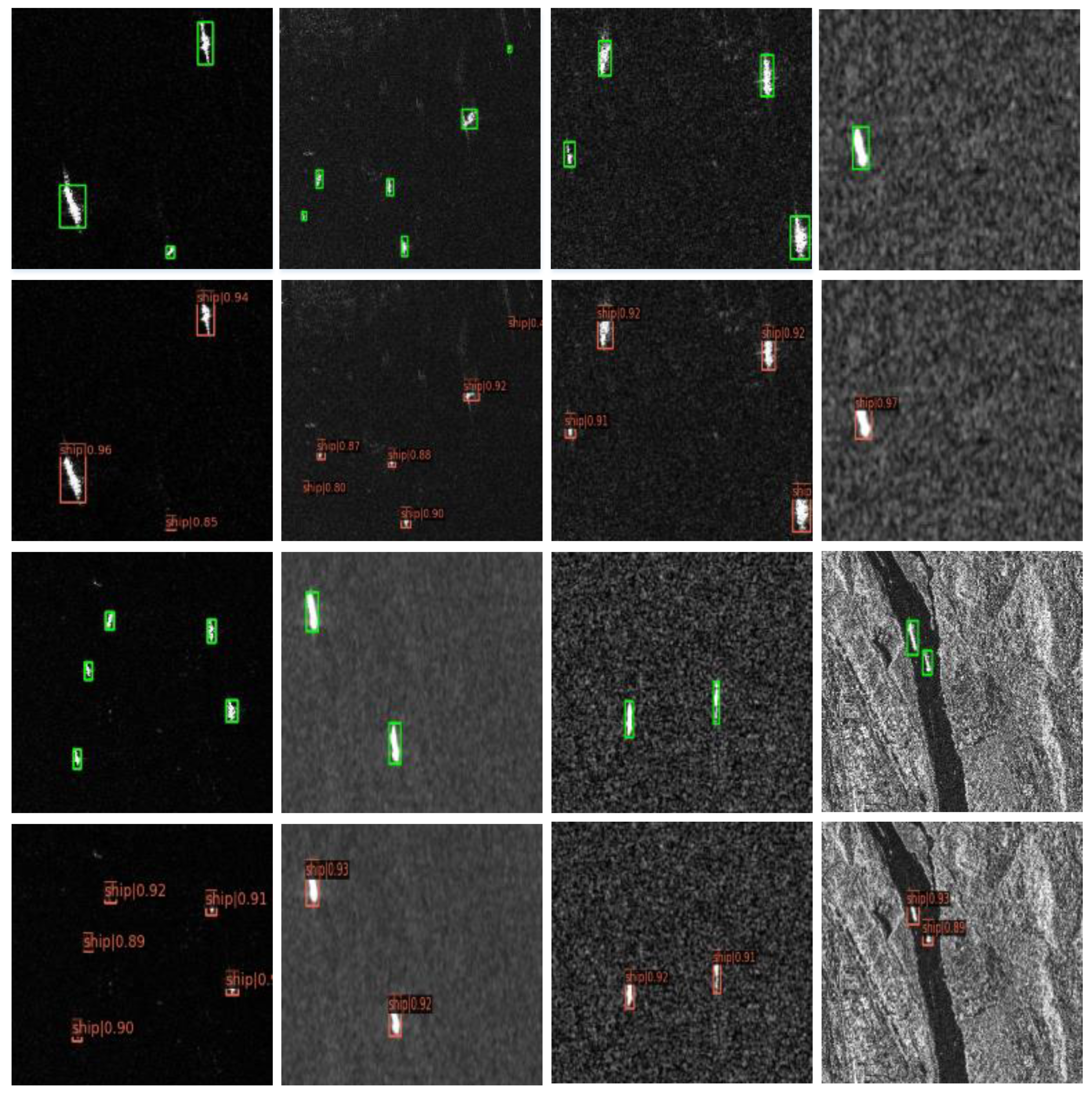

3. Results

3.1. Dataset

3.2. Ablation Study

3.2.1. Analysis of Sample Definition Redesign

3.2.2. Analysis of Feature Extraction Redesign

3.2.3. Analysis of Classification and Regression Redesign

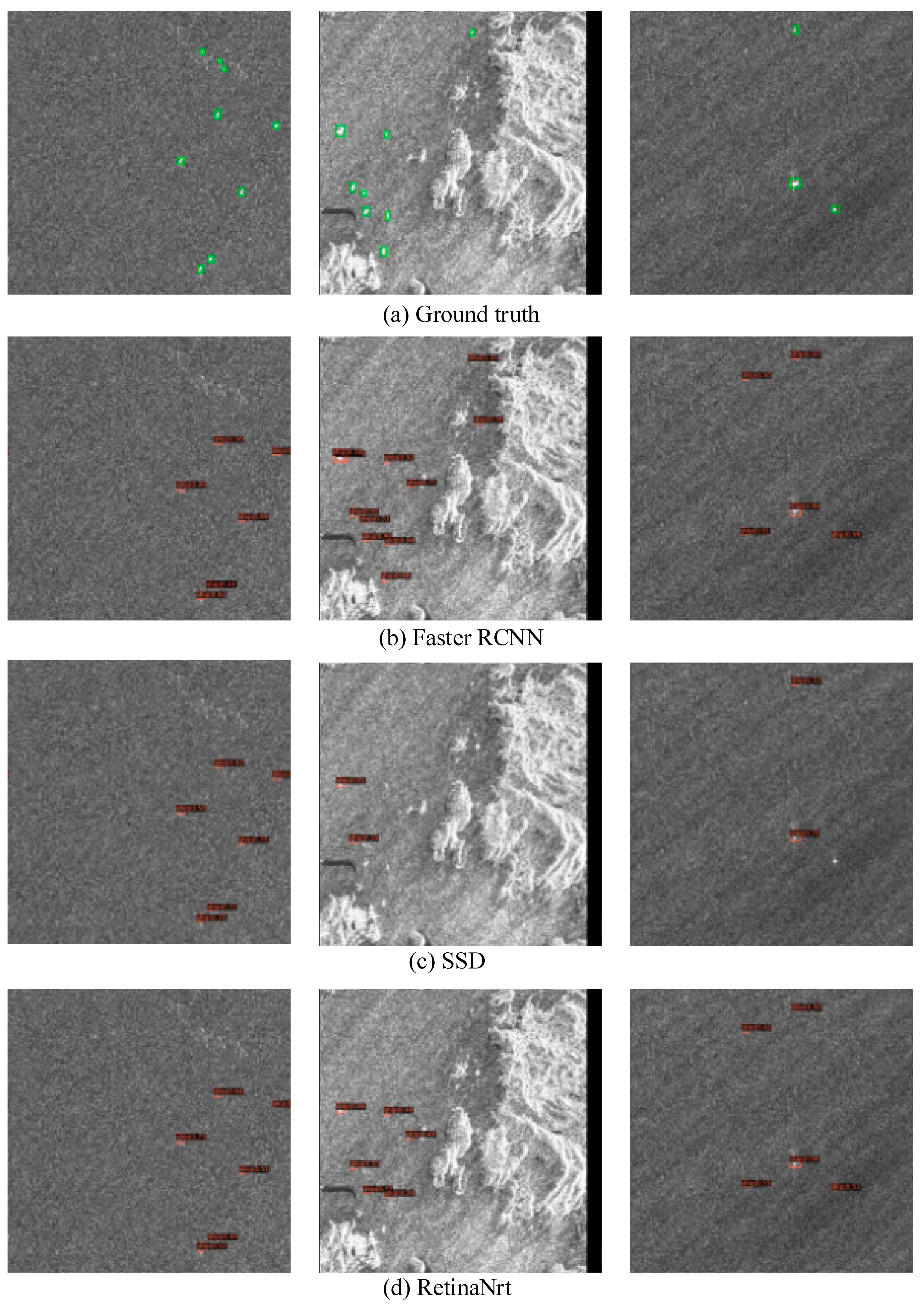

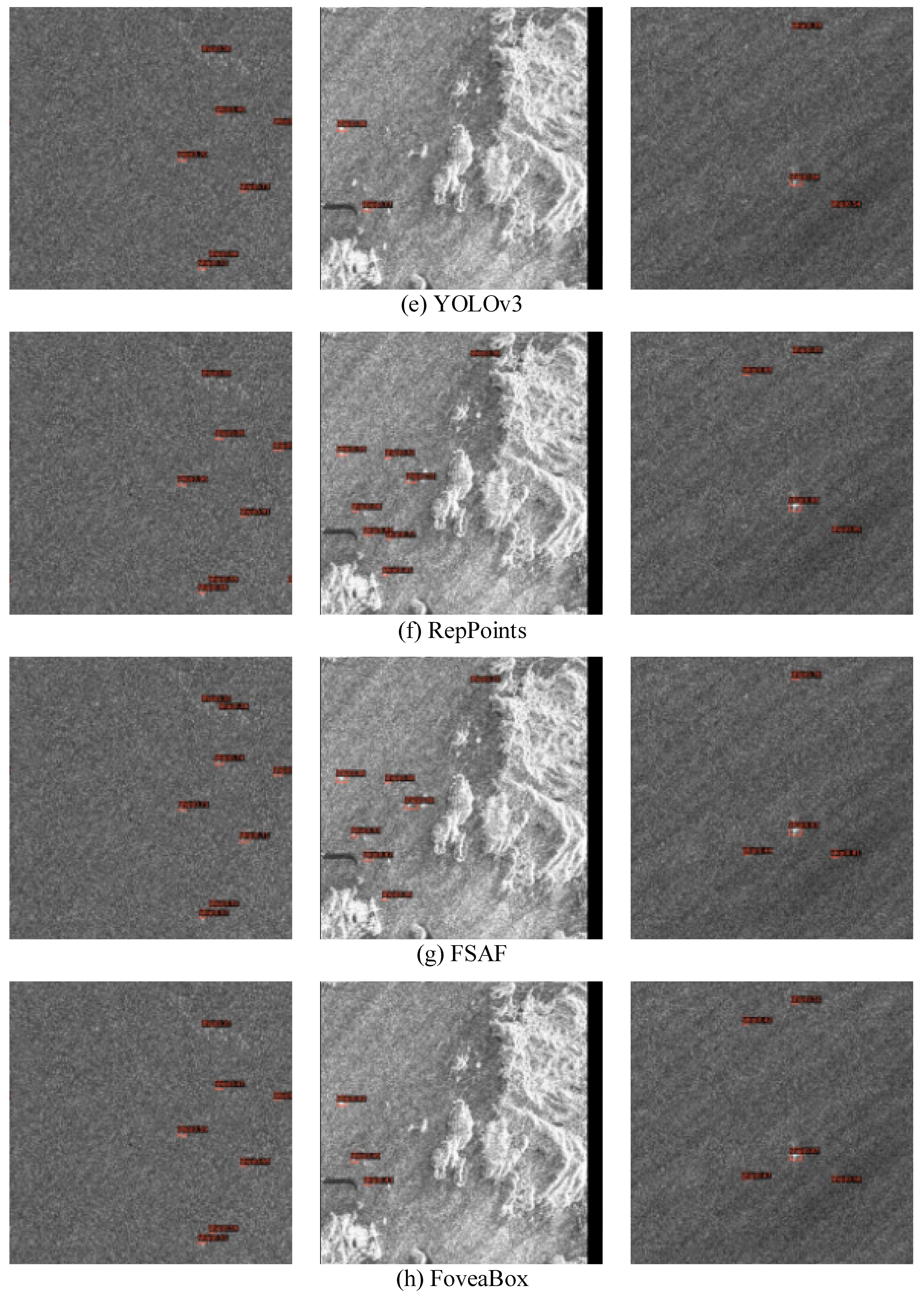

3.3. Comparison with Other Methods

- R-FCOS achieves the highest AP of all compared methods. Its AP is 75.5%, which is 9.2, 17.7, 4.7, 8.9, 3.2, 4.9, and 6.1 percentage points higher than that of Faster R-CNN, SSD, RetinaNet, YOLOv3, RepPoints, FSAF, and FoveaBox, respectively.

- SSD has the lowest AP, 17.7 percentage points below that of our method. Although SSD uses high-resolution features to detect small objects, these features carry little semantic information, which leads to unsatisfactory detection results. In addition, SSD resizes the input image to 300×300, which destroys object information in the image.

- The APs of the anchor-free methods (RepPoints, FSAF, FoveaBox, and R-FCOS) are generally better than those of the anchor-based methods, with the exception of RetinaNet. This suggests that anchor-free methods are better suited to ship detection.

- SSD has the highest FPS and Faster R-CNN the lowest. Although our method reaches only 52.0 FPS, this already meets real-time requirements.
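The margins quoted in this comparison imply the AP of each compared method. The short sketch below recovers those values by subtraction and ranks the methods. It assumes the quoted margins are absolute differences in percentage points. The 30 FPS real-time threshold is a hypothetical value chosen for illustration, not one stated in this paper.

```python
# AP of R-FCOS and the margin by which it exceeds each method (from the text).
R_FCOS_AP = 75.5
MARGINS = {
    "Faster R-CNN": 9.2,
    "SSD": 17.7,
    "RetinaNet": 4.7,
    "YOLOv3": 8.9,
    "RepPoints": 3.2,
    "FSAF": 4.9,
    "FoveaBox": 6.1,
}
ANCHOR_FREE = {"RepPoints", "FSAF", "FoveaBox", "R-FCOS"}


def implied_aps(reference_ap, margins):
    """Recover each method's AP as reference_ap minus its quoted margin."""
    return {name: round(reference_ap - m, 1) for name, m in margins.items()}


aps = implied_aps(R_FCOS_AP, MARGINS)
aps["R-FCOS"] = R_FCOS_AP

# Rank all methods by AP, best first.
for name, ap in sorted(aps.items(), key=lambda kv: kv[1], reverse=True):
    kind = "anchor-free" if name in ANCHOR_FREE else "anchor-based"
    print(f"{name:<13}{ap:5.1f}  {kind}")

# Per-frame latency implied by the reported frame rate.
FPS = 52.0
ASSUMED_REALTIME_FPS = 30.0  # hypothetical threshold, not from the paper
latency_ms = 1000.0 / FPS
print(f"latency: {latency_ms:.1f} ms per frame, "
      f"real-time under assumed threshold: {FPS >= ASSUMED_REALTIME_FPS}")
```

Under this reading, RetinaNet (70.8%) lies above FSAF (70.6%) and FoveaBox (69.4%), which is why it is singled out as the exception among the anchor-based methods.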

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| SAR | Synthetic Aperture Radar |
| FCOS | Fully Convolutional One-Stage Object Detection |
| CIoU | Complete Intersection over Union |
| CFAR | Constant False Alarm Rate |
| LCFVs | Local Contrast of Fisher Vectors |
| CNN | Convolutional Neural Network |
| Faster R-CNN | Faster Region-based CNN |
| YOLO | You Only Look Once |
| SSD | Single Shot Multi-Box Detector |
| P2P-CNN | Patch-to-Pixel CNN |
| RepPoints | Representative Points |
| FSAF | Feature Selective Anchor-Free |
| R-FCOS | Redesigned FCOS |
| FPN | Feature Pyramid Network |
| GIoU | Generalized Intersection over Union |
| IoU | Intersection over Union |
| DeconvNet | Deconvolution Network |
| SFC | Same-Resolution Feature Convolution |
| MFF | Multi-Resolution Feature Fusion |
| FP | Feature Pyramid |
| Dconv | Deformable Convolution |
| IS | IoU Score |
| AP | Average Precision |
| FPS | Frames Per Second |
| LS-SSDD-v1.0 | Large-Scale SAR Ship Detection Dataset-v1.0 |
| MS COCO | Microsoft Common Objects in COntext |
| FCOS+SDR | FCOS with Sample Definition Redesign |
| FCOS+SDR+FER | FCOS with SDR and Feature Extraction Redesign |
| FCOS+SDR+FER+CRR | FCOS with SDR, FER, and Classification and Regression Redesign |
| SSDD | SAR Ship Detection Dataset |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, M.; Hu, G.; Zhou, H.; Wang, S.; Feng, Z.; Yue, S. A Ship Detection Method via Redesigned FCOS in Large-Scale SAR Images. Remote Sens. 2022, 14, 1153. https://doi.org/10.3390/rs14051153
