Image Correction and In Situ Spectral Calibration for Low-Cost, Smartphone Hyperspectral Imaging

Abstract

1. Introduction

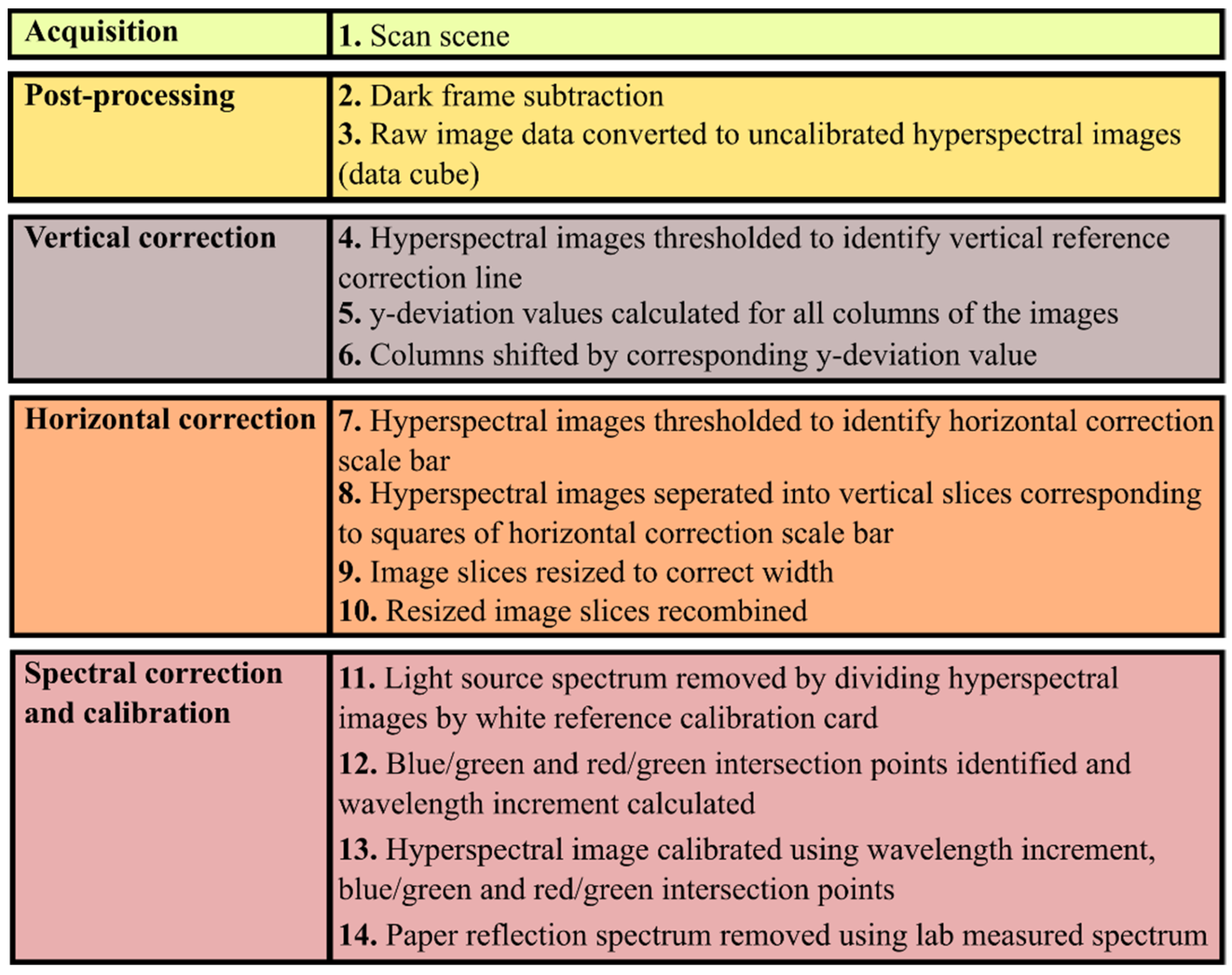

2. Materials and Methods

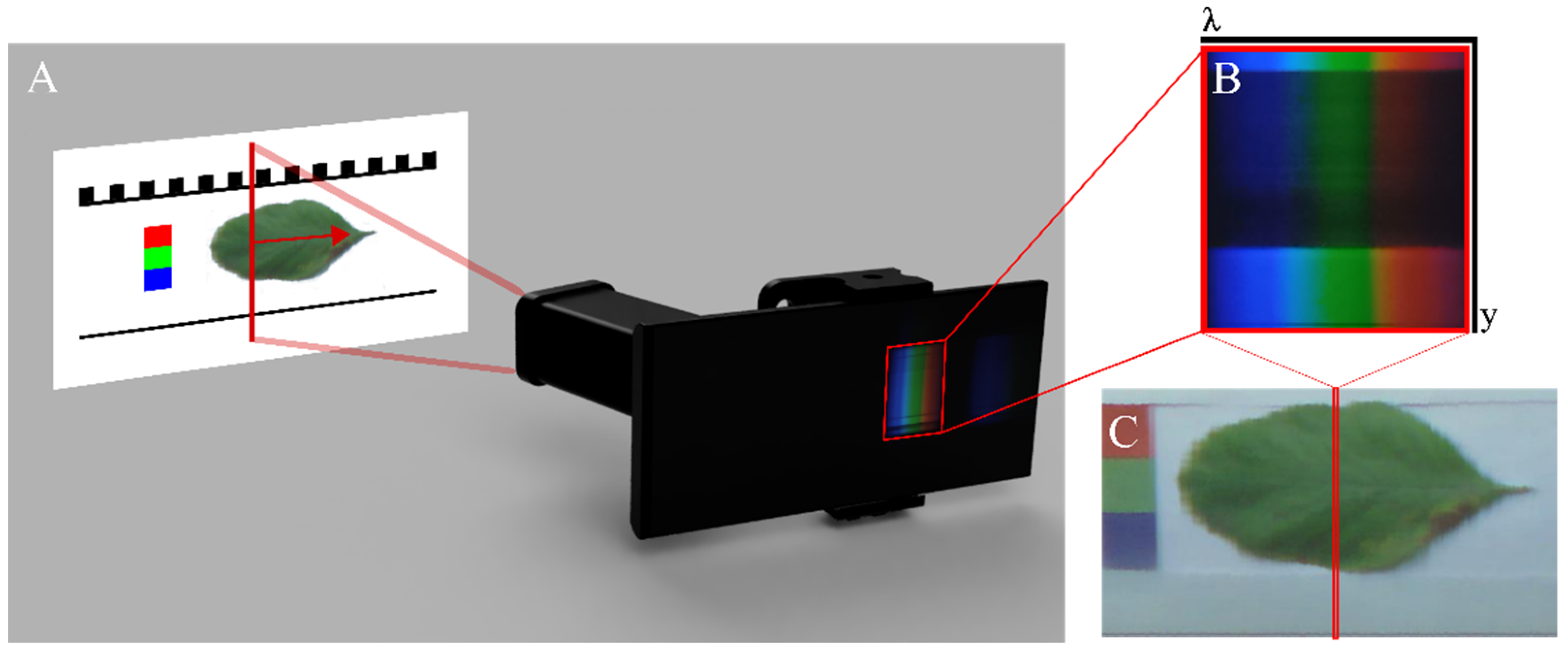

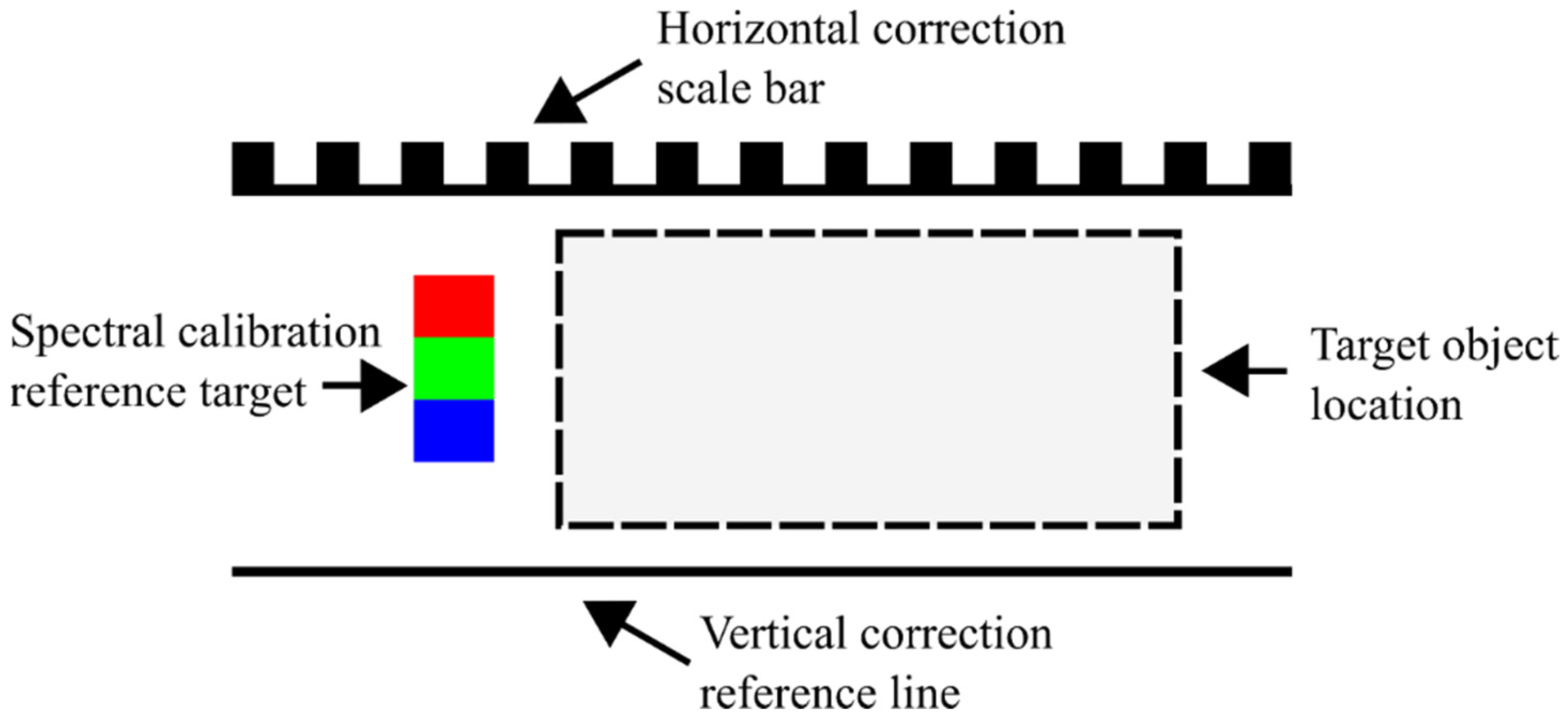

2.1. Image Acquisition

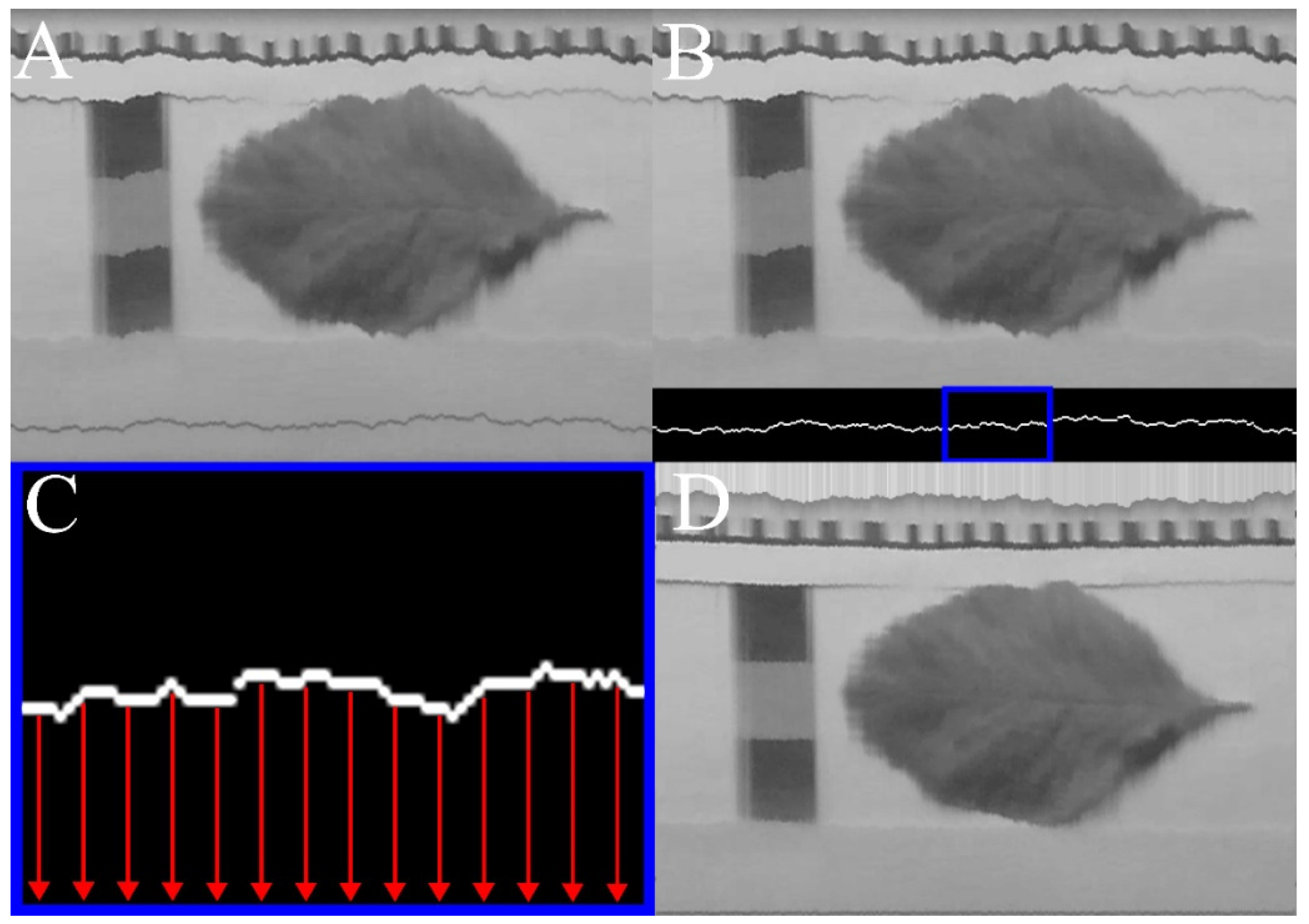

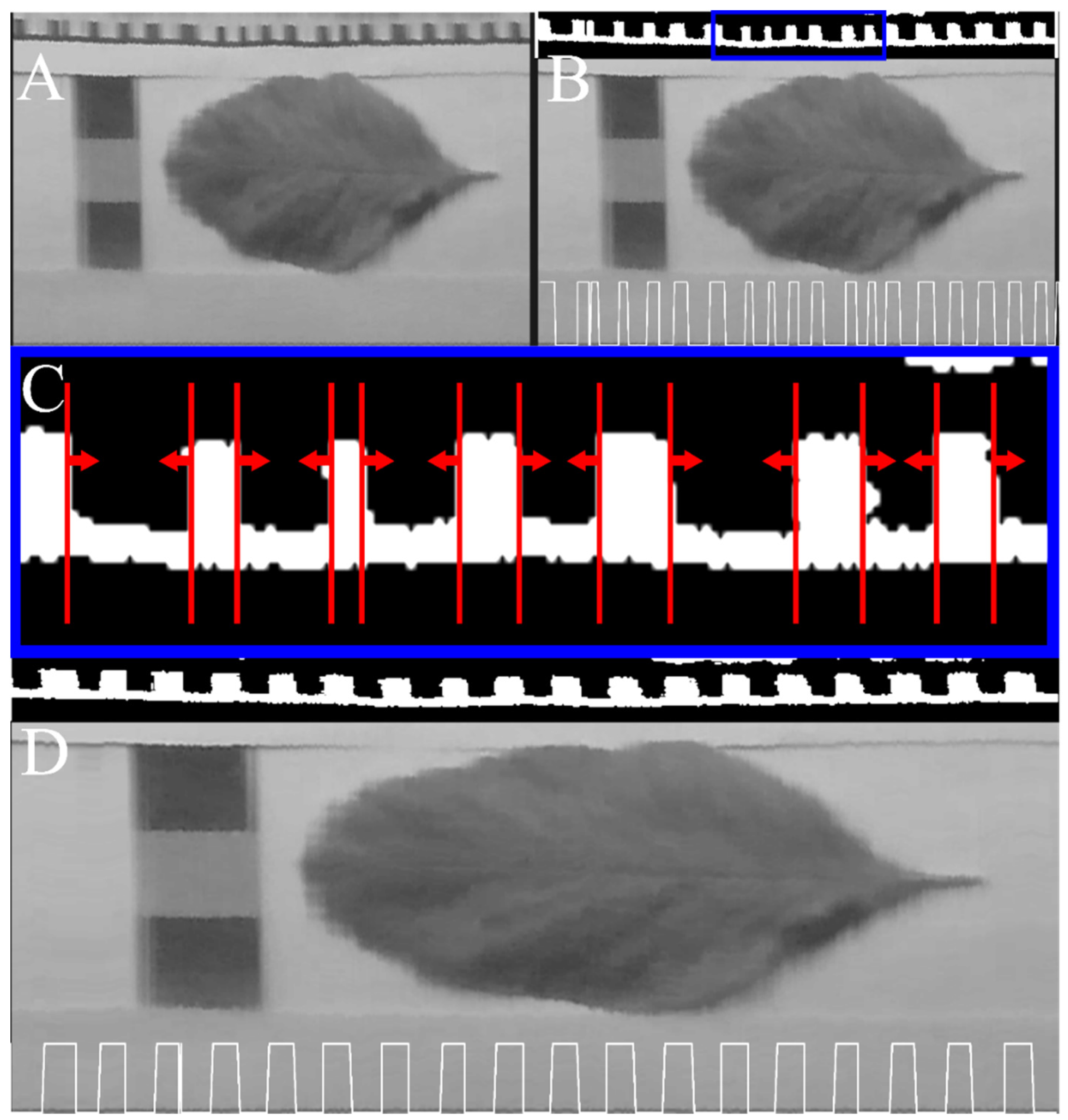

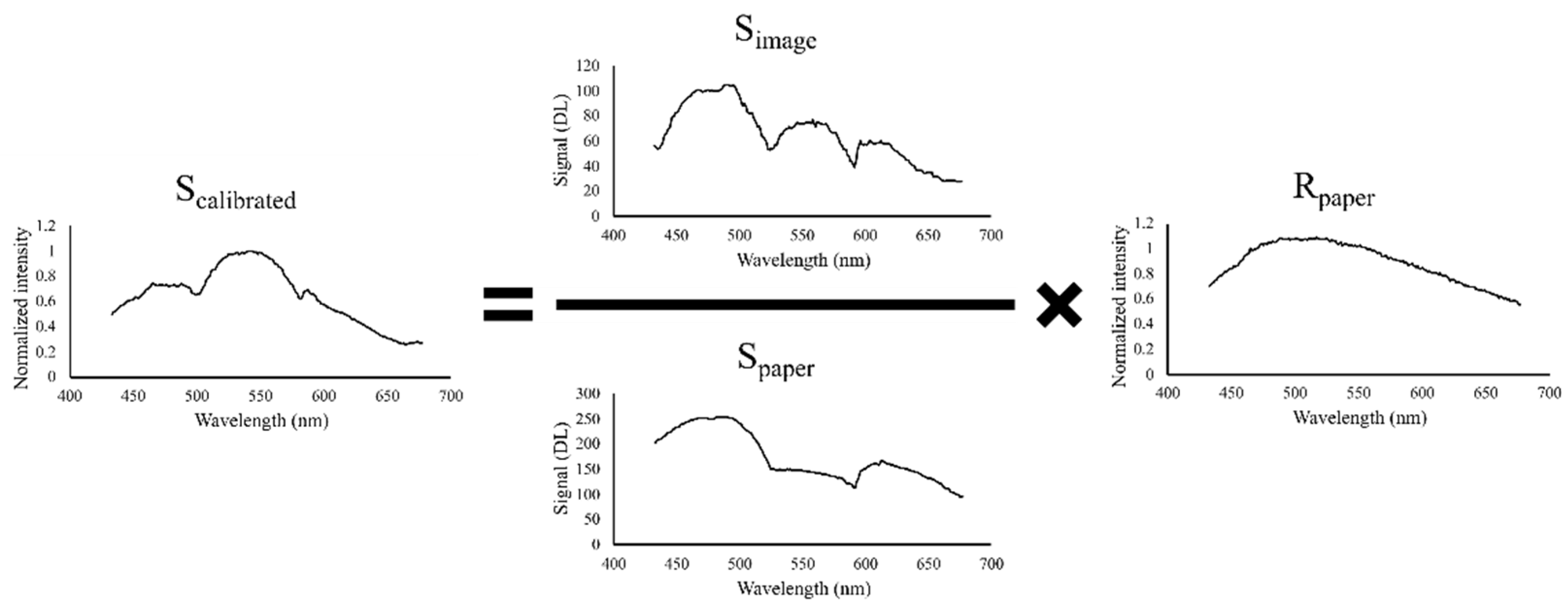

2.2. Bias Correction

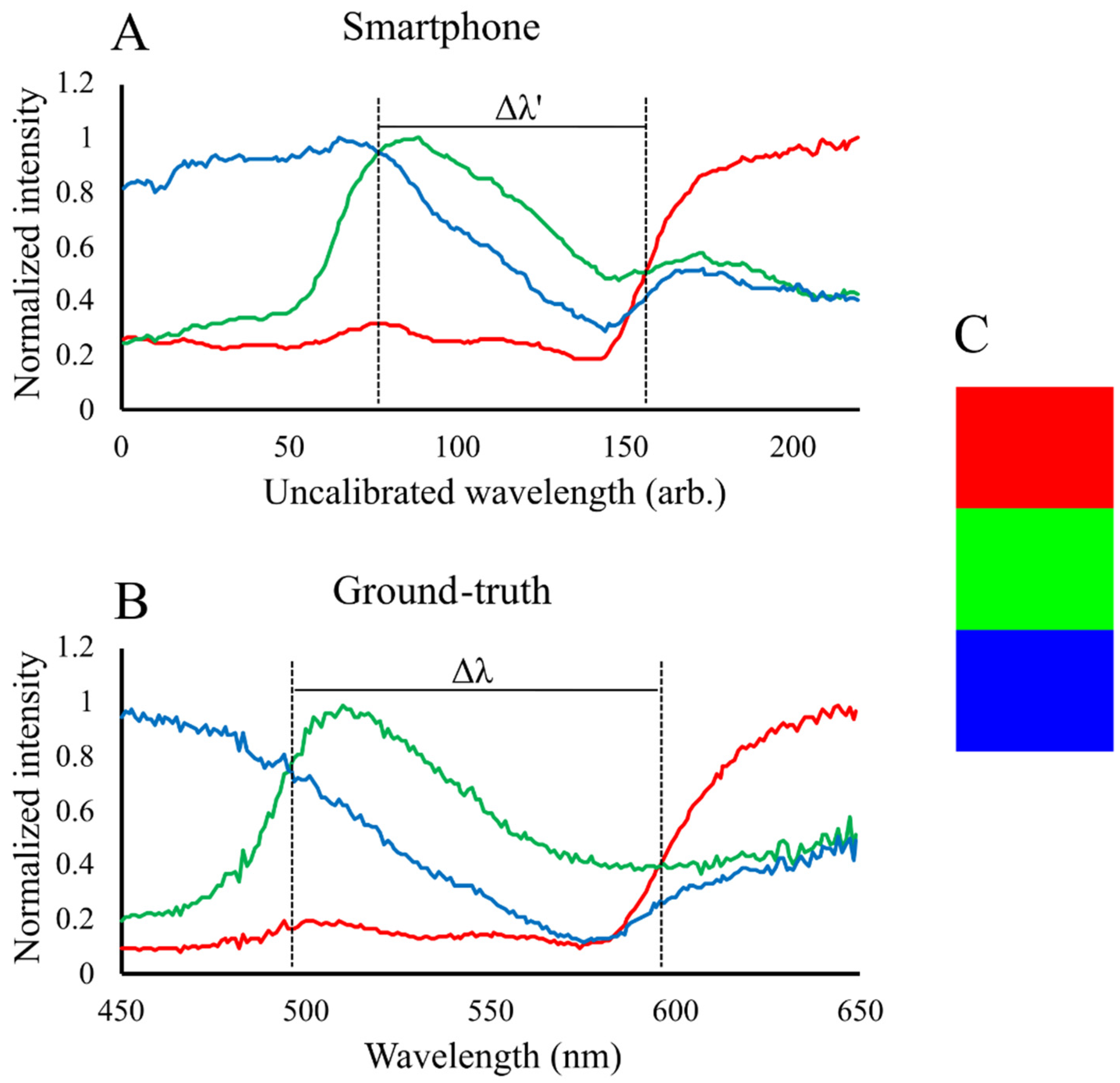

2.3. Spectral Calibration

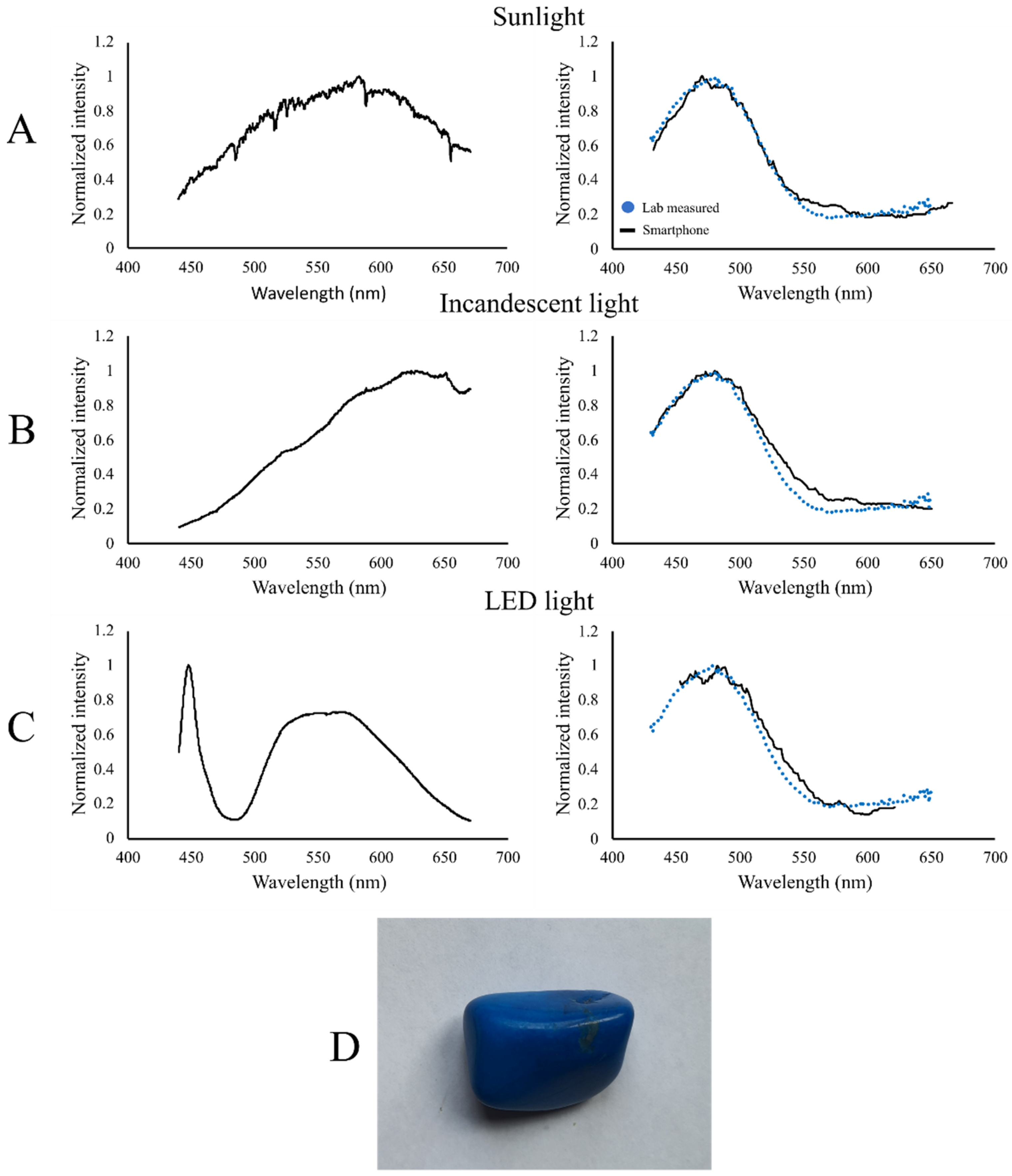

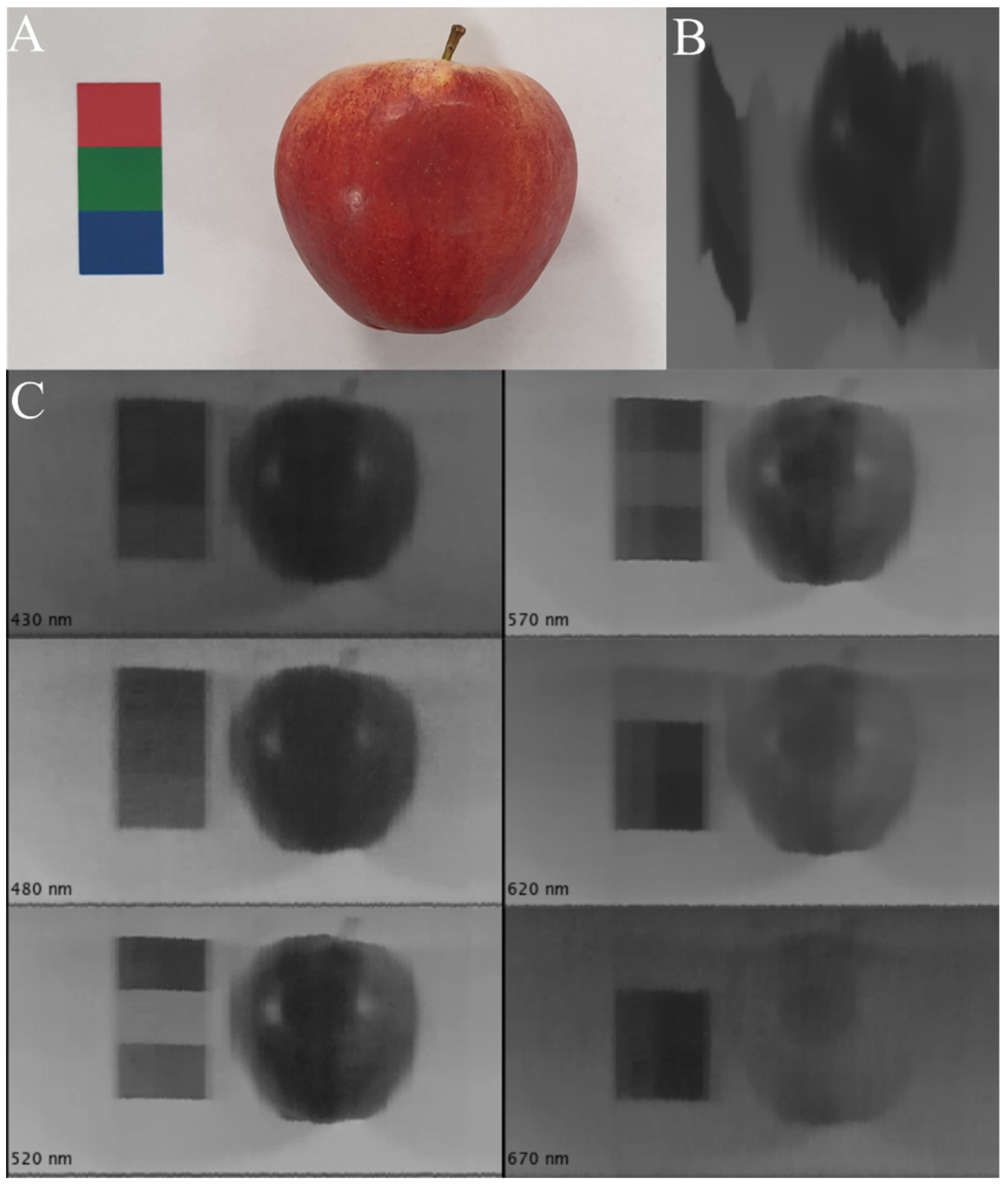

3. Results

4. Discussion

Example Applications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Davies, M.; Stuart, M.B.; Hobbs, M.J.; McGonigle, A.J.S.; Willmott, J.R. Image Correction and In Situ Spectral Calibration for Low-Cost, Smartphone Hyperspectral Imaging. Remote Sens. 2022, 14, 1152. https://doi.org/10.3390/rs14051152
