The “Fuzzy” Repair of Urban Building Facade Point Cloud Based on Distribution Regularity

Abstract

1. Introduction

- We propose a hole-repair principle for point cloud data of buildings whose facades exhibit distribution regularity. Such buildings are very common in urban scenes, and the repair results meet the modeling needs of the “Smart City”;

- We propose a “fuzzy” repair method that effectively reduces the complexity of the algorithm and remains applicable to data whose feature boundaries are difficult to extract;

- For classifying feature boundaries, we propose a simple and efficient rectangle translation classification algorithm that meets the follow-up requirements of this research.

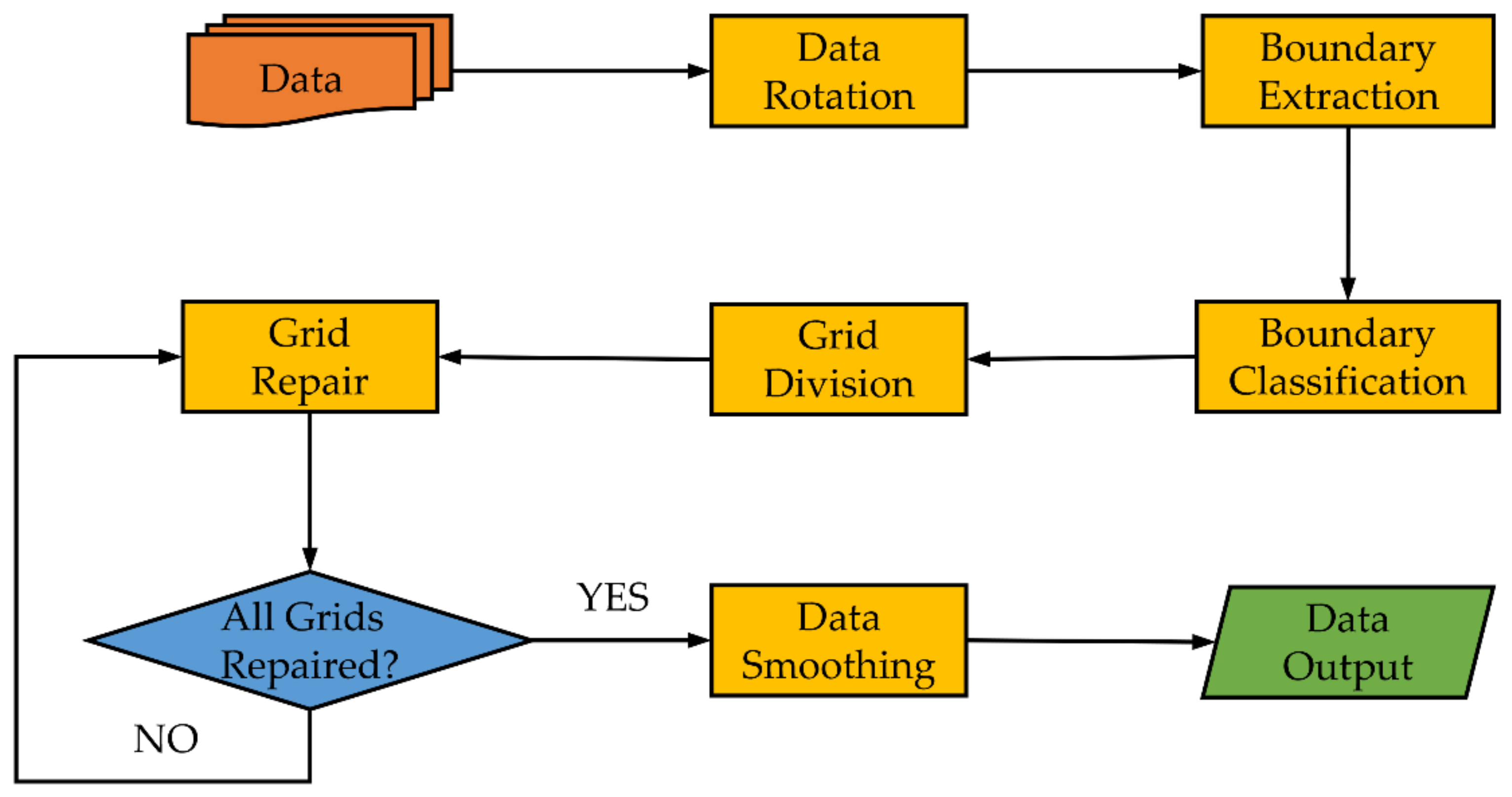

2. Materials and Methods

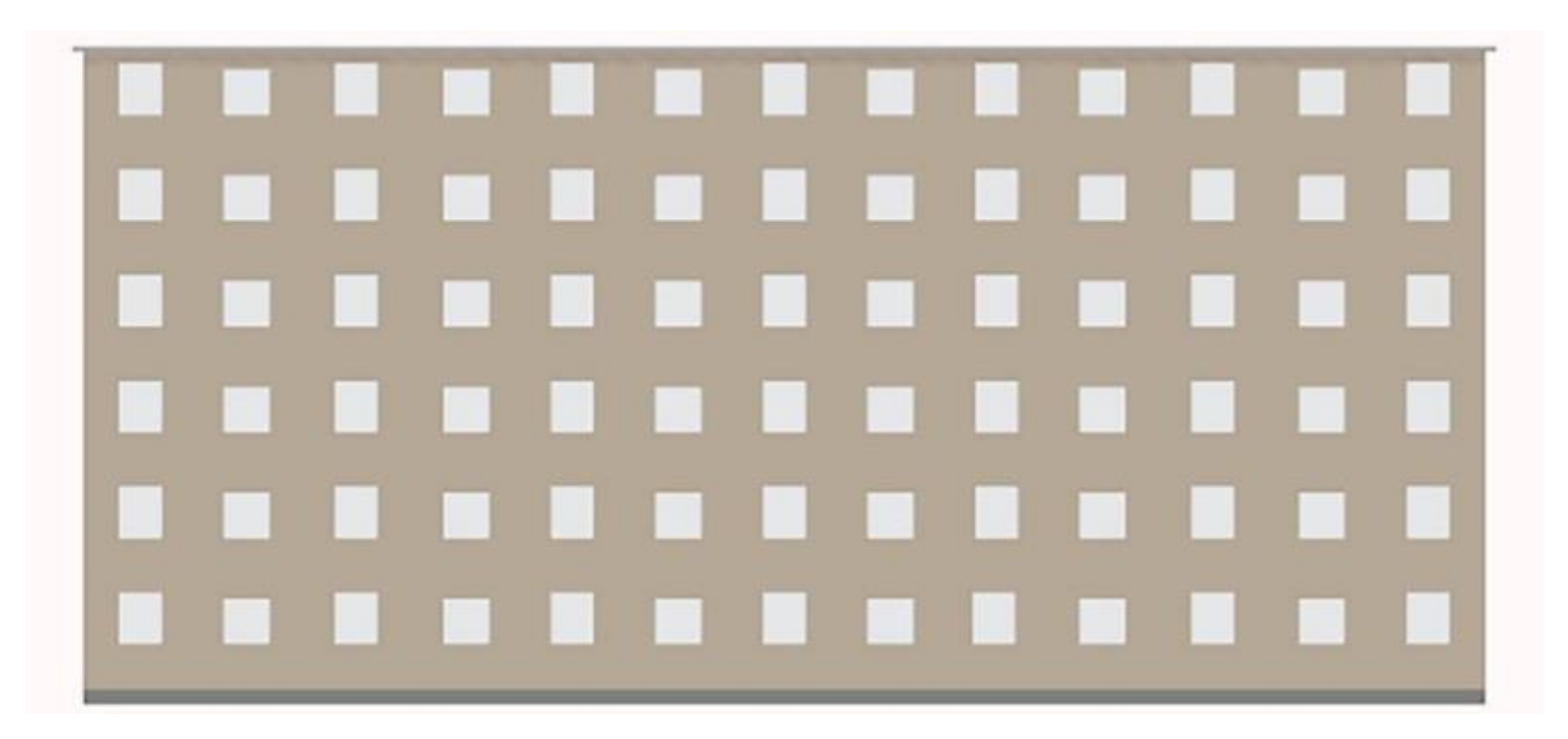

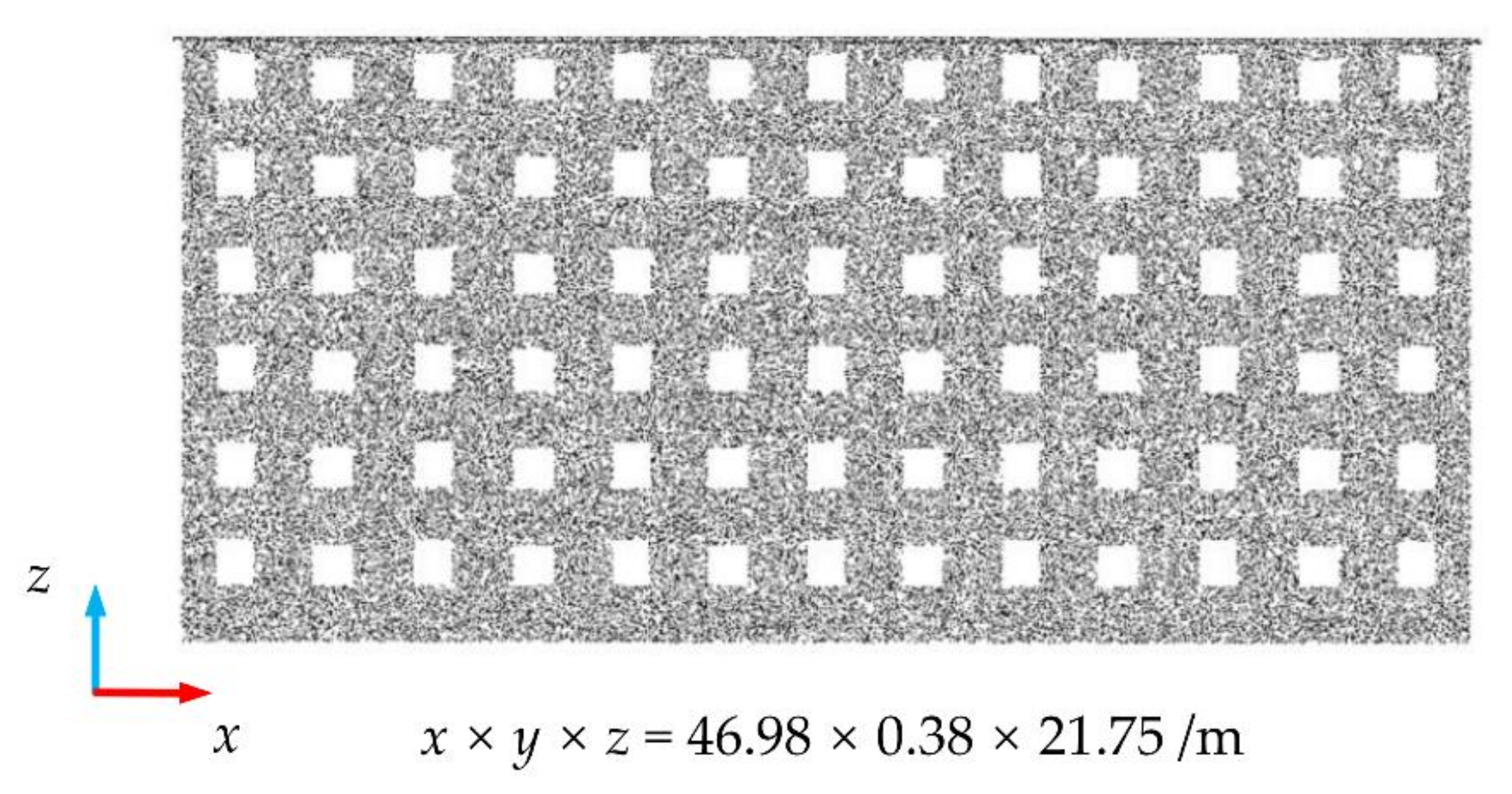

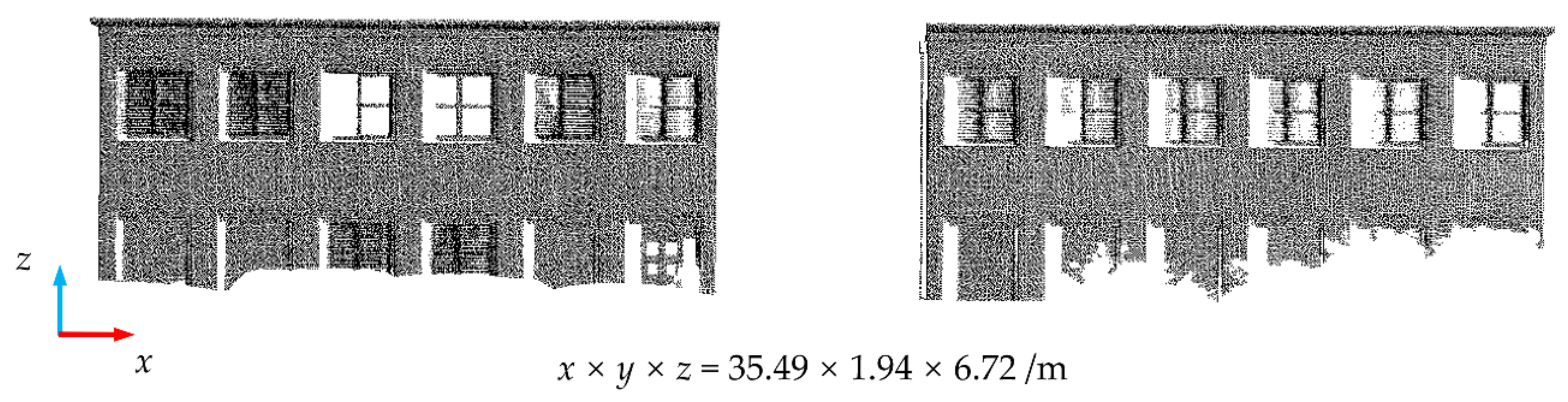

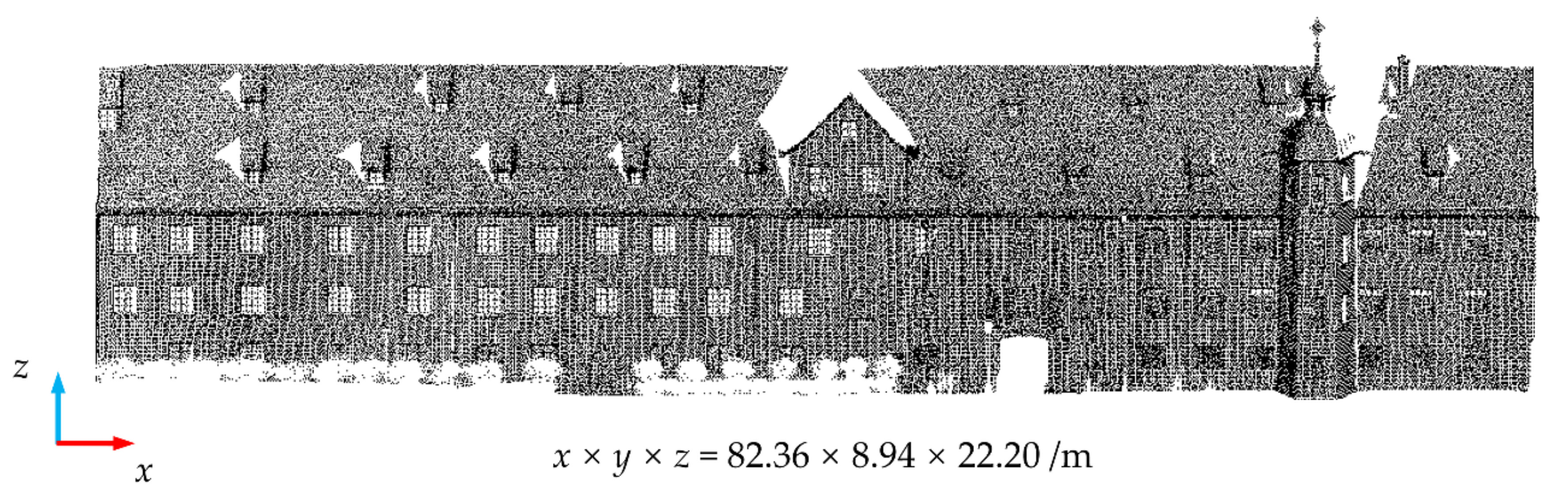

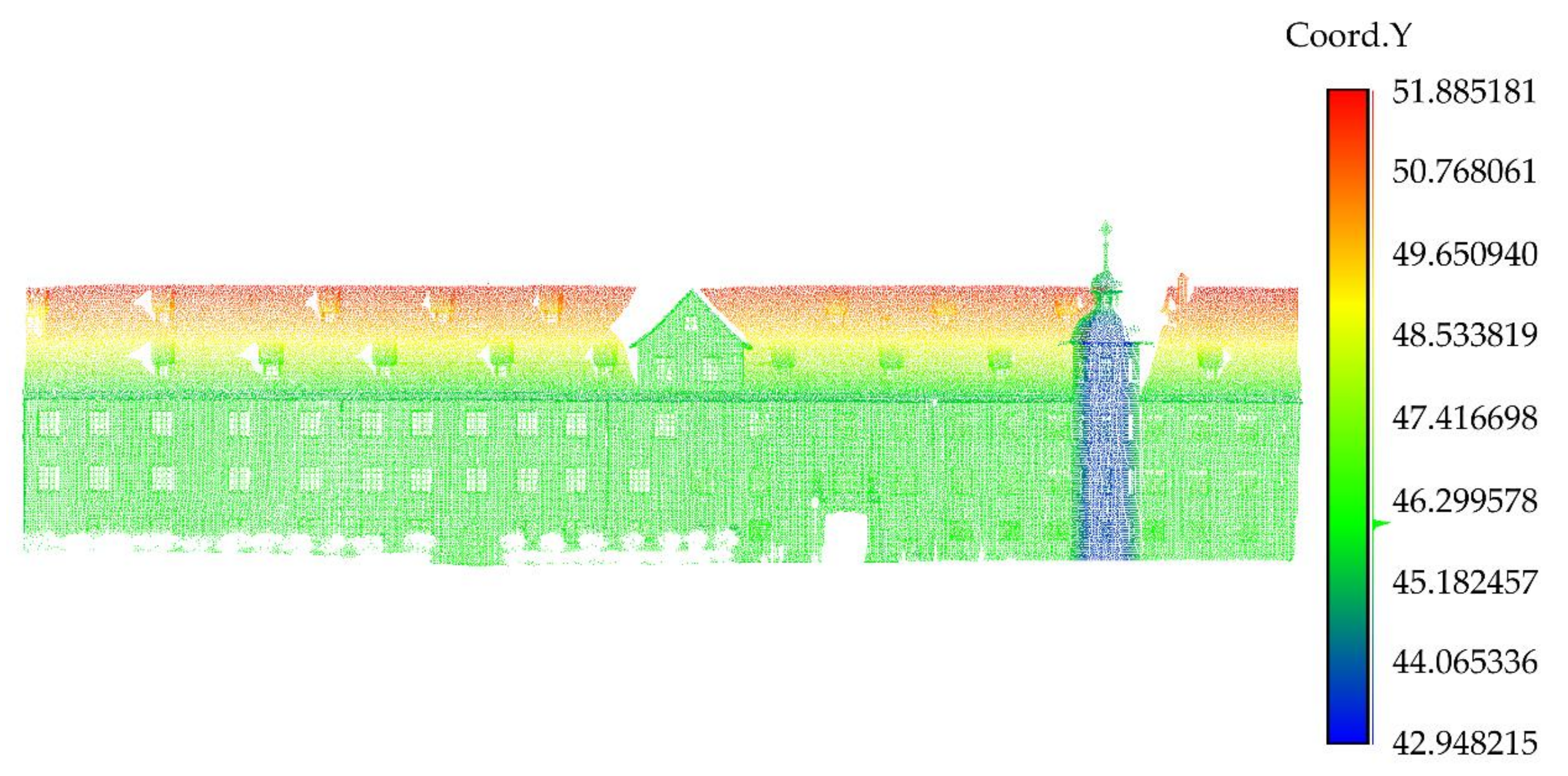

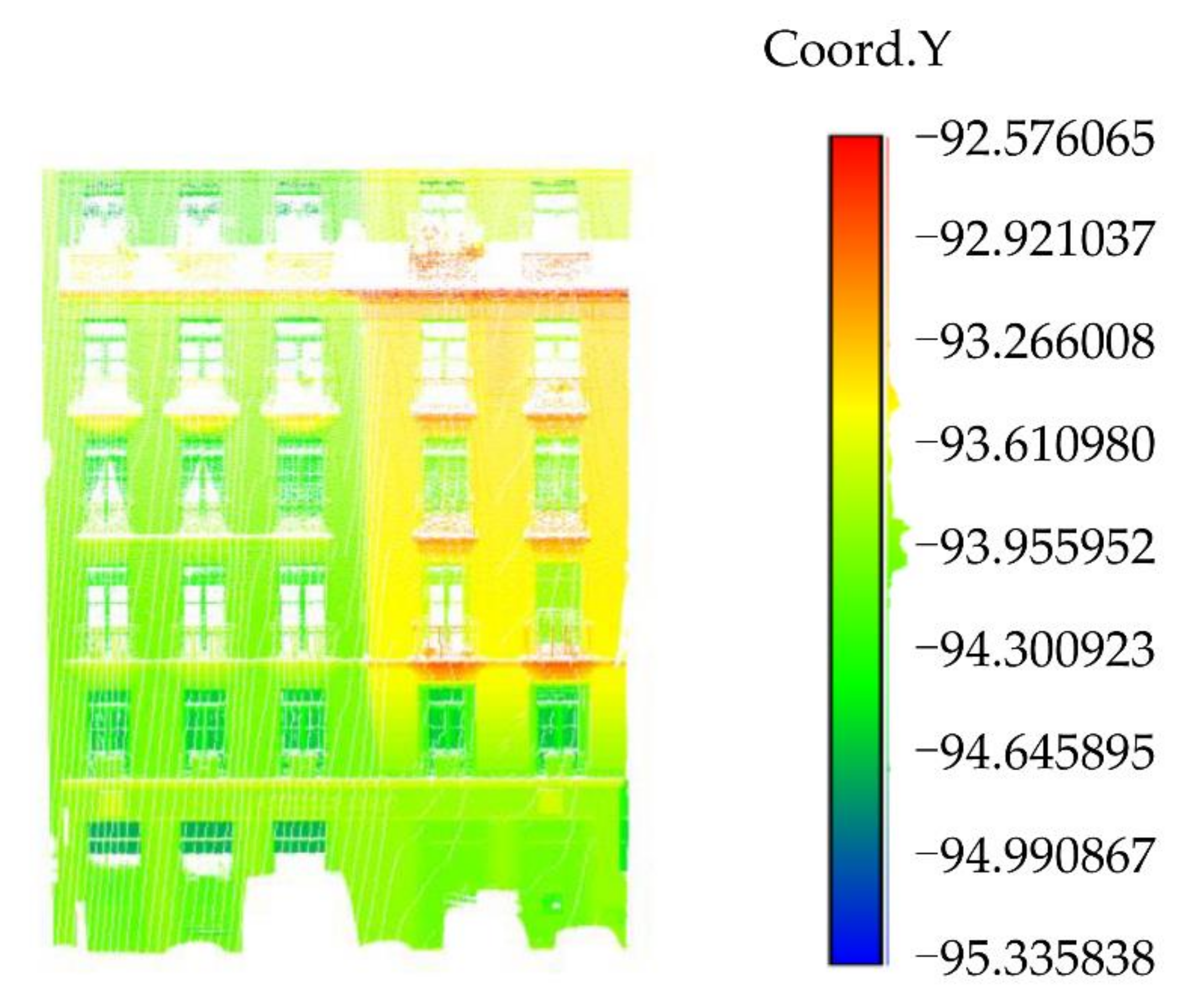

2.1. Research Data

2.2. Point Cloud Rotation

2.3. Feature Boundaries Extraction

- Traverse all points in the data. For each point $p_i$, search its r-neighborhood for local feature analysis and estimate the normal vector of that neighborhood. If the neighborhood radius r is too small, noise dominates and the estimated normal vector is prone to errors; if r is too large, the estimation becomes slow. Depending on the data density, r is generally selected in the range 0.1–1.0 m. The normal vector is estimated from Formula (2):

  $$M = \frac{1}{N}\sum_{j=1}^{N} \begin{bmatrix} x_j-\bar{x} \\ y_j-\bar{y} \\ z_j-\bar{z} \end{bmatrix} \begin{bmatrix} x_j-\bar{x} & y_j-\bar{y} & z_j-\bar{z} \end{bmatrix} \quad (2)$$

  where M is the covariance matrix of the N points in the r-neighborhood. The eigenvector corresponding to the minimum eigenvalue of M, normalized to unit length, is the normal vector. Here $\bar{x}$, $\bar{y}$, and $\bar{z}$ are the averages of the x, y, and z coordinates of all points in the point set.

- Because of measurement errors and environmental factors, terrestrial laser scanning (TLS) and mobile laser scanning (MLS) data contain outliers of varying sparseness. Outliers complicate local feature operations at point cloud sampling points, such as estimating the normal vector or the curvature change rate, and the resulting incorrect values cause post-processing failures. To solve this problem, we remove outliers with a distance-based filter: calculate the average distance between the point and all points in its r-neighborhood; if this distance is greater than the distance threshold, the point is an outlier and is removed; otherwise, go to step 3.

- Construct a KD-tree index on the point cloud data and, using the angle between normal directions as the criterion, calculate the normal angles between each point and its k neighborhood points. If the included angle is greater than the angle threshold, the point is classified as a boundary feature point. The angle threshold is usually set to or . The smaller the value of k, the more internal points are identified as boundary points and the worse the boundary identification accuracy. The larger the value of k, the better the accuracy of the final boundary identification. However, if k is too large, the boundary points of doors or windows may be missed. Data from many experiments indicate that k is usually 5–10 times the point spacing Rp.
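
The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: neighbors are found by brute force rather than with a KD-tree, `k` is treated as a neighborhood radius (in line with the rule of thumb that k is a multiple of the point spacing Rp), and the names `radius_neighbors`, `remove_outliers`, `pca_normal`, `boundary_mask`, `d_max`, and `angle_deg` are hypothetical.

```python
import numpy as np

def radius_neighbors(points, i, radius):
    """Return indices of points within `radius` of points[i] (excluding i) and all distances."""
    dist = np.linalg.norm(points - points[i], axis=1)
    idx = np.nonzero(dist <= radius)[0]
    return idx[idx != i], dist

def remove_outliers(points, r, d_max):
    """Distance filter: drop points with no r-neighbors or a mean neighbor distance above d_max."""
    keep = []
    for i in range(len(points)):
        idx, dist = radius_neighbors(points, i, r)
        if len(idx) > 0 and dist[idx].mean() <= d_max:
            keep.append(i)
    return points[np.array(keep, dtype=int)]

def pca_normal(neigh):
    """Normal = eigenvector of the neighborhood covariance with the smallest eigenvalue."""
    centered = neigh - neigh.mean(axis=0)
    cov = centered.T @ centered / len(neigh)
    _, eigvecs = np.linalg.eigh(cov)  # eigenvalues are returned in ascending order
    return eigvecs[:, 0]

def boundary_mask(points, r, k, angle_deg):
    """Flag points whose normal deviates by more than angle_deg from a neighbor's normal."""
    n = len(points)
    normals = np.zeros((n, 3))
    valid = np.zeros(n, dtype=bool)
    for i in range(n):
        idx, _ = radius_neighbors(points, i, r)
        if len(idx) >= 2:  # at least three points including i
            normals[i] = pca_normal(points[np.append(idx, i)])
            valid[i] = True
    mask = np.zeros(n, dtype=bool)
    for i in range(n):
        if not valid[i]:
            continue
        idx, _ = radius_neighbors(points, i, k)
        idx = idx[valid[idx]]
        if len(idx) == 0:
            continue
        # abs() makes the comparison independent of the normal's sign
        cos = np.clip(np.abs(normals[idx] @ normals[i]), 0.0, 1.0)
        mask[i] = np.degrees(np.arccos(cos)).max() > angle_deg
    return mask
```

For real data volumes the brute-force neighbor search would be replaced by a spatial index; the logic of the normal estimation and the angle test stays the same.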

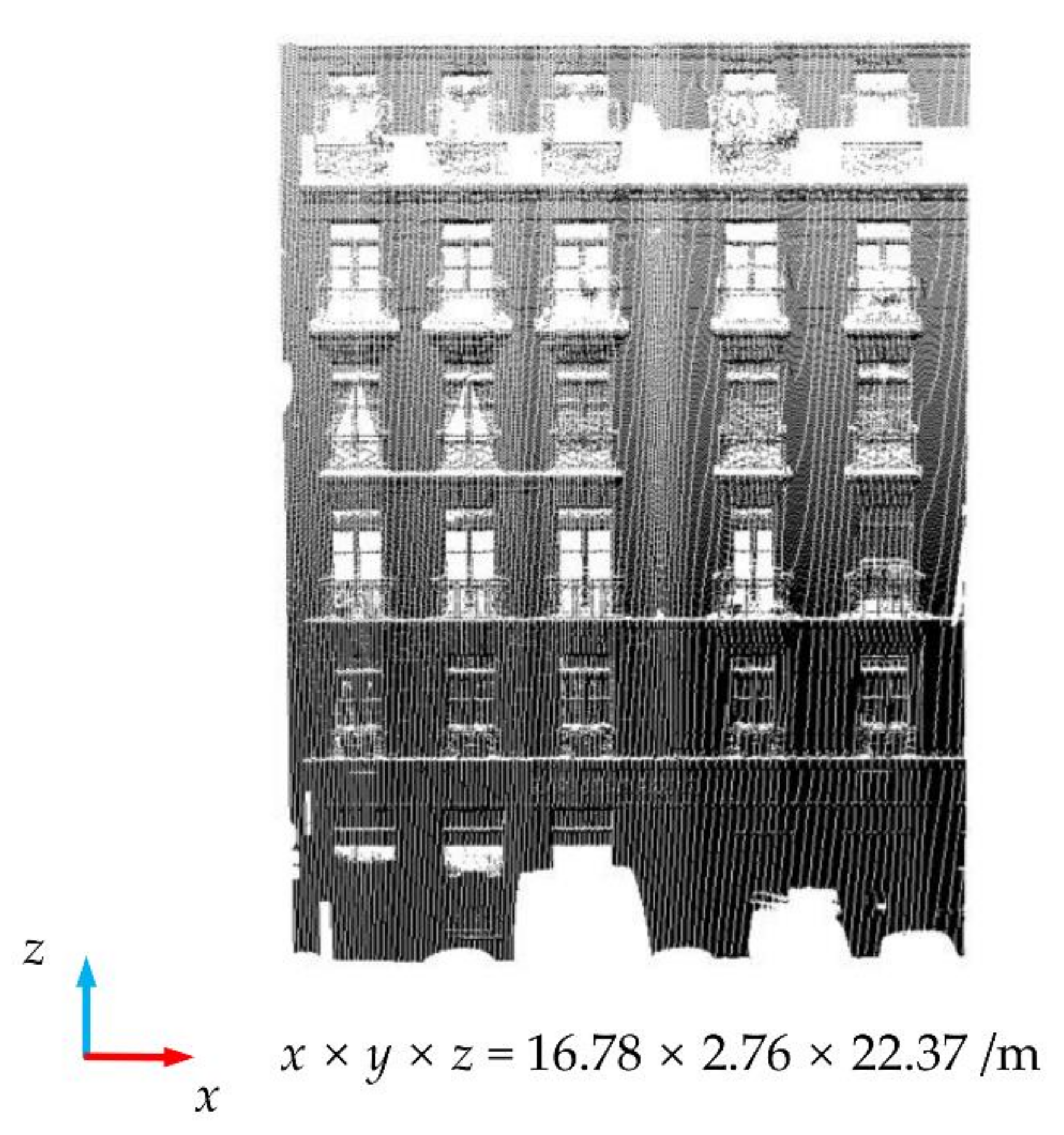

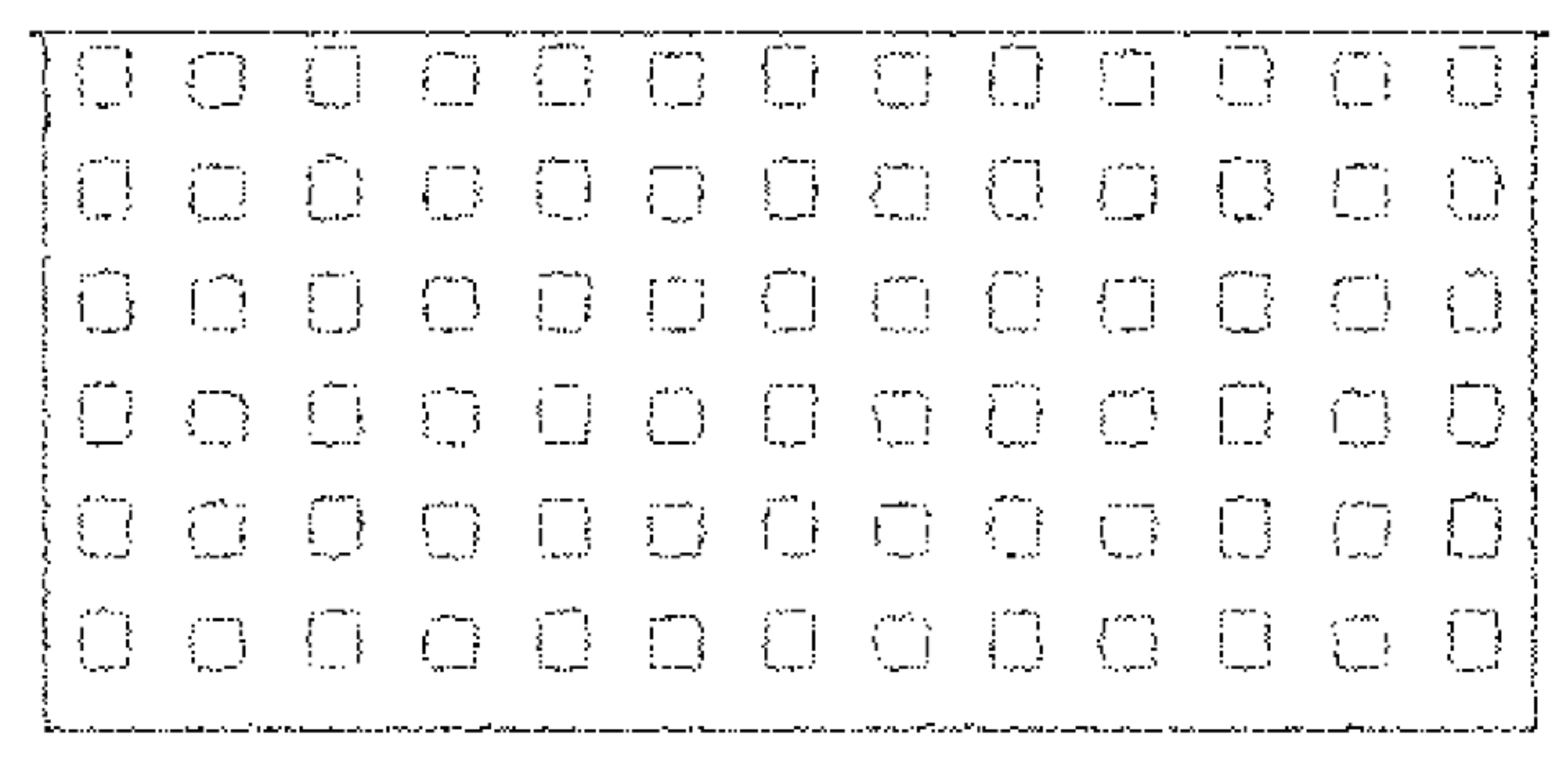

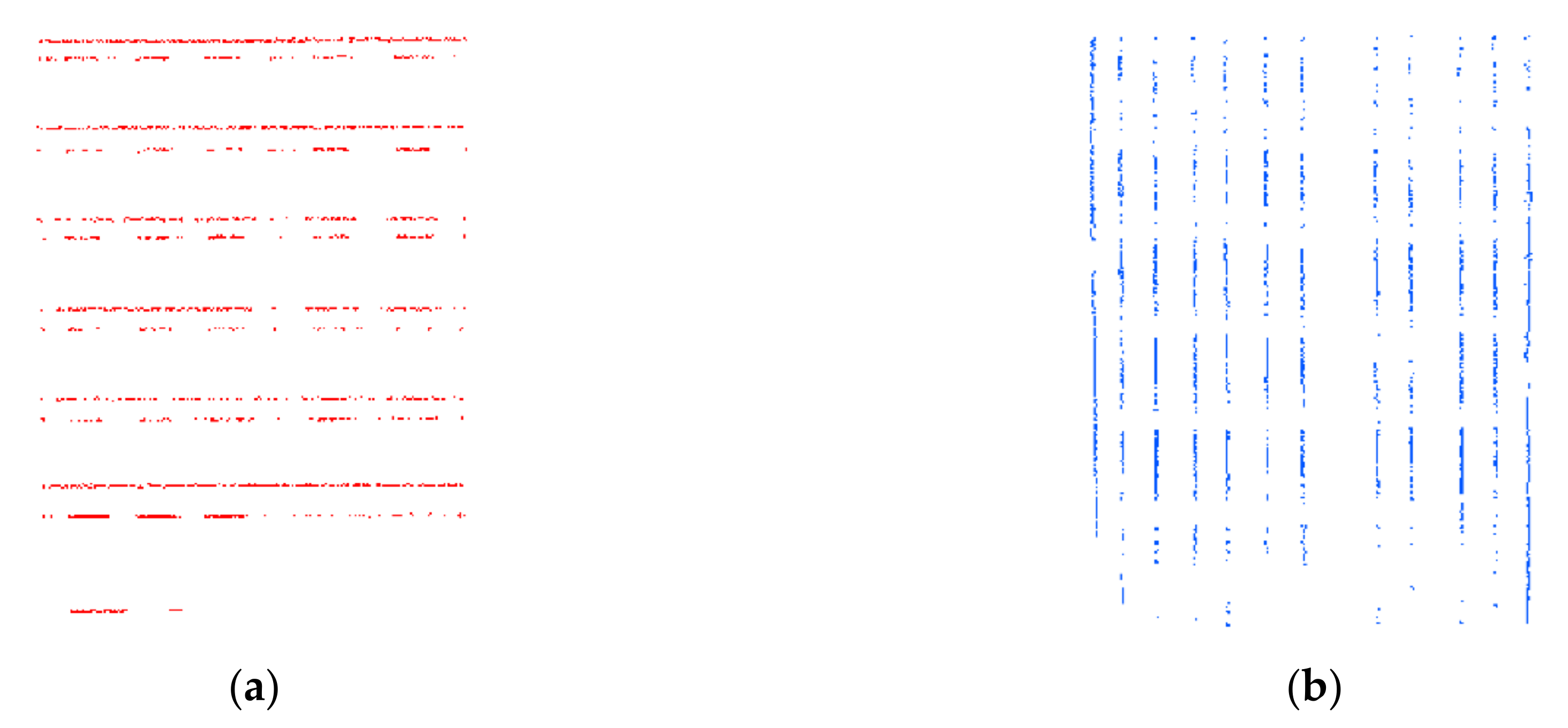

2.4. Classification of Rectangle Translation

- Project the feature boundaries onto the XOZ plane;

- Set the Rectangle radius rc and the translation step size s (generally, s = 2 × rc). When the vertical feature boundaries are extracted, the Rectangle height is the Z-axis length of the point cloud after the boundary extraction. The Rectangle is then translated along the X-axis from the minimum to the maximum X coordinate, traversing all the data. If the number of points in the Rectangle is less than the threshold value, these points are discarded. If it is greater than the threshold value, these points are saved. The saved points are all the required vertical feature boundaries;

- The process of extracting horizontal feature boundaries is similar to step 2. In this case, the height of the Rectangle is the X-axis length of the point cloud after the boundary extraction, and the Rectangle is translated along the Z-axis from the minimum to the maximum Z coordinate to traverse the entire data.
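
A minimal sketch of the rectangle translation idea follows. It assumes the boundary points are already projected to `(x, z)` tuples and that the step equals the rectangle width (s = 2 × rc), so translating the rectangle is equivalent to binning points along one axis. The function name `rectangle_translation` and the parameter `n_min` (the point-count threshold) are hypothetical.

```python
from collections import defaultdict

def rectangle_translation(points, axis, rc, n_min):
    """Keep the points of every rectangle position that holds more than n_min points.

    axis=0 slides along X (vertical boundaries); axis=1 slides along Z (horizontal boundaries).
    """
    lo = min(p[axis] for p in points)
    step = 2 * rc  # step size equals the rectangle width, so positions do not overlap
    bins = defaultdict(list)
    for p in points:
        bins[int((p[axis] - lo) // step)].append(p)
    kept = []
    for j in sorted(bins):
        if len(bins[j]) > n_min:  # dense rectangle: treat as a feature boundary
            kept.extend(bins[j])
    return kept
```

Sliding along X keeps point sets that are stacked vertically (many points share nearly the same X), while thin horizontal runs contribute only a few points per rectangle and are discarded. Sliding along Z does the reverse.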

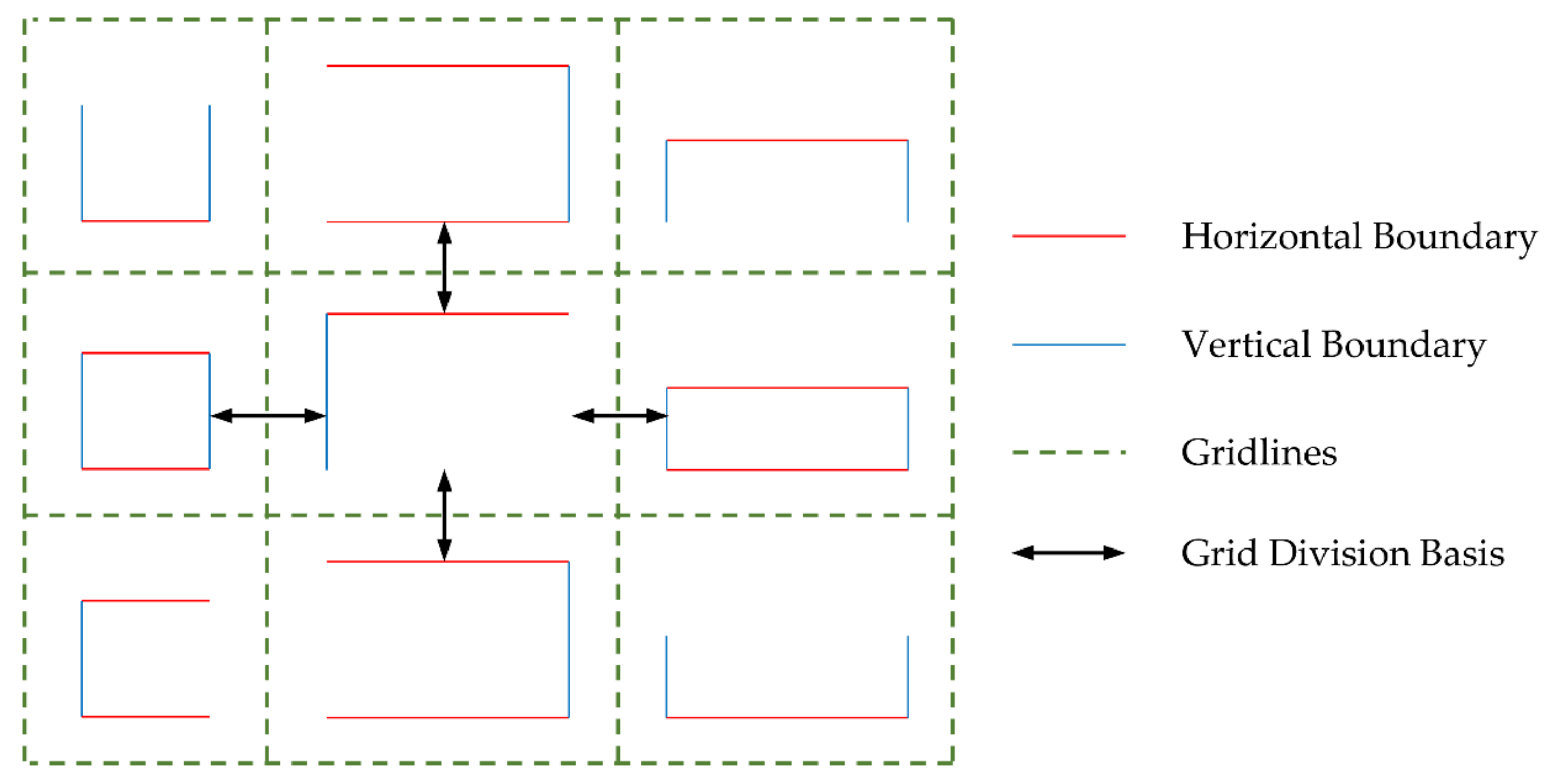

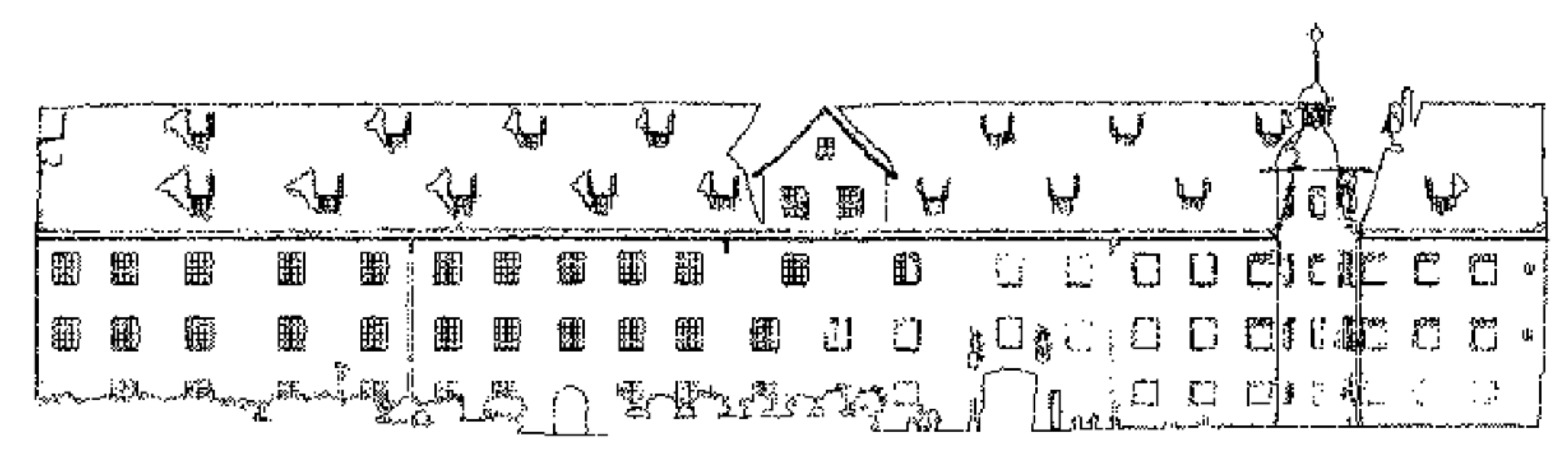

2.5. Grid Division and Repair Based on Distribution Regularity

- In the horizontal direction, two adjacent grids are separated according to the boundaries of the two adjacent windows (doors) on the left and on the right: the dividing line is placed at half of the distance between these two boundaries;

- In the vertical direction, as in Step 1, we take half of the boundary distance between the upper and the lower adjacent windows (doors) for division;

- When a window (door) does not extract the feature boundaries during boundary extraction, the division is based on the other window (door) boundaries in the same row or column;

- When there are two windows (doors) of different sizes in a row or a column, the division is carried out according to the boundary of the larger window (door).

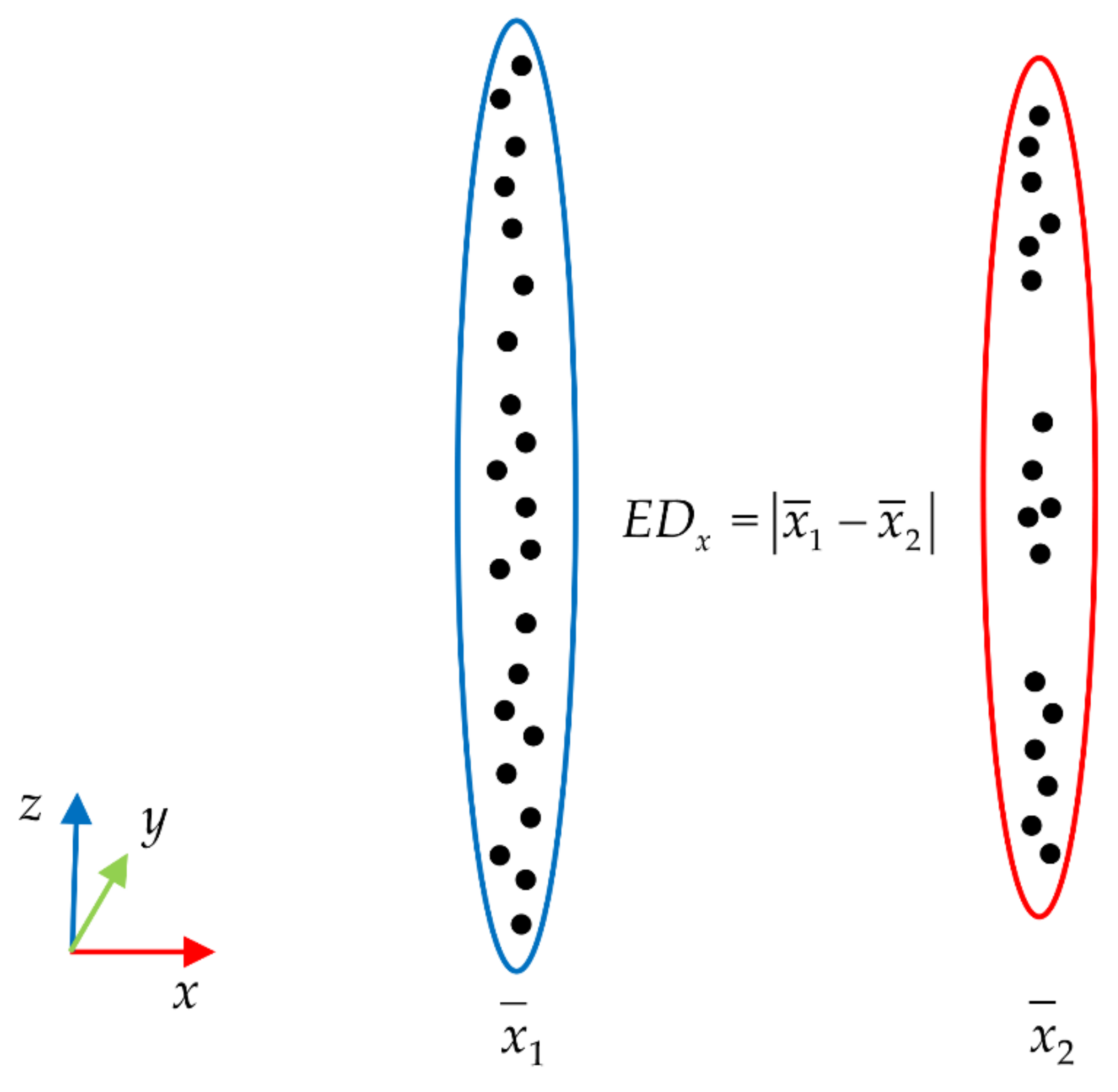

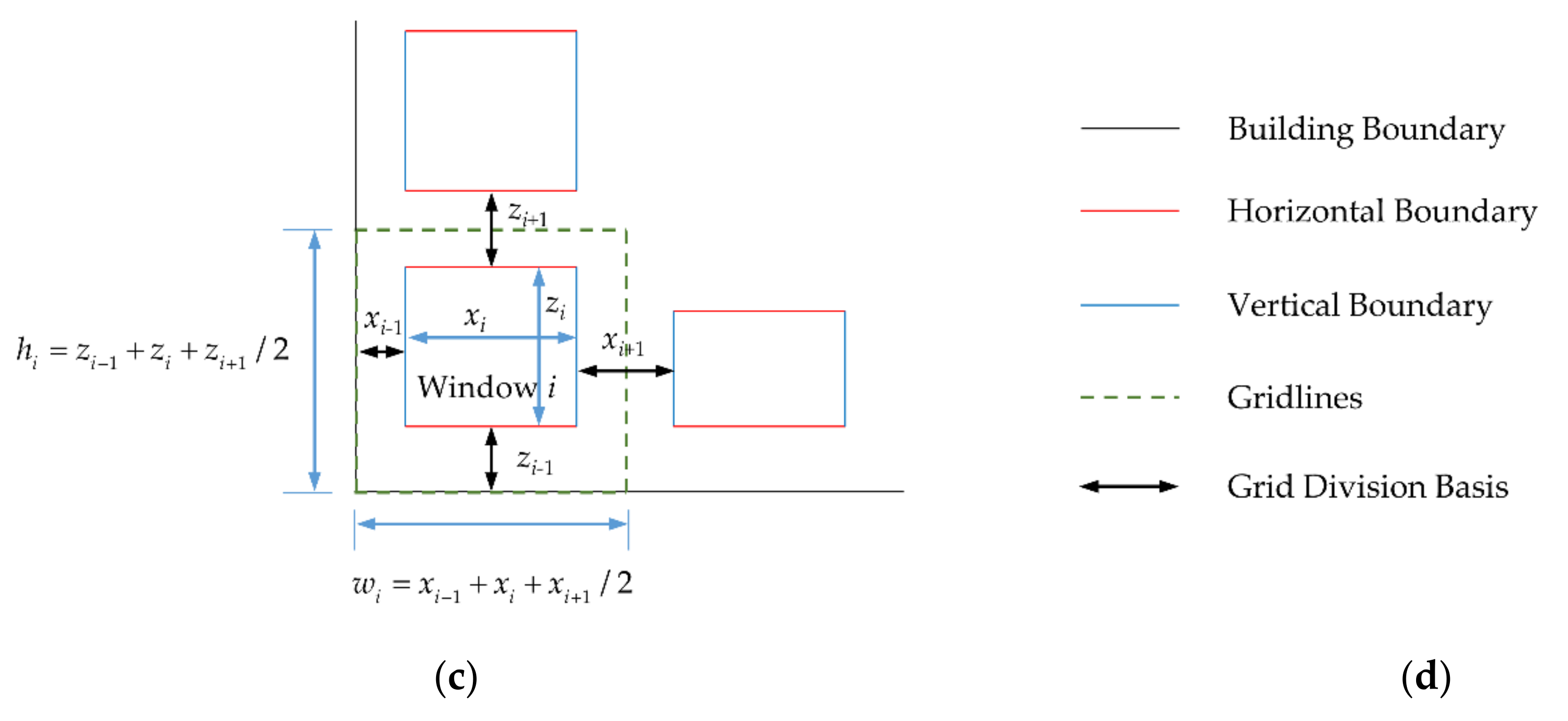

- When dividing the grids, we divide windows into three types: internal, boundary, and corner windows (only boundary doors exist). The grid division proceeds as follows. After the horizontal and vertical feature boundaries of the building are obtained in Section 2.4, the Euclidean distance between each pair of adjacent boundaries is calculated in the horizontal and in the vertical direction. As shown in Figure 10, this distance is the difference between the average X-axis (Z-axis) values of the point clouds of two adjacent feature boundaries;

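- When i is an internal window, take its width and height from its feature boundaries. Given the distance between it and window (i − 1) and the distance between it and window (i + 1), the grid width w is the window width plus half of each of these two distances. The grid height h of the window is obtained in the same way from the window height and the distances to the upper and lower adjacent windows;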

- When i is an upper or lower boundary window, its grid width w is determined in the same way as for an internal window. For a lower boundary window, given the distance between i and the bottom of the building and the distance between i and the upper window, the grid height h of i is the window height plus the full distance to the bottom of the building plus half of the distance to the upper window. When i is a left or right boundary window, the grid division is analogous: the grid width w extends to the building boundary on one side, and the grid height h is determined as for an internal window;

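- When i is a corner window, two of its sides face the building boundary. For a lower-left corner window, given the distance between it and the bottom of the building, the distance between it and the upper window, the distance between it and the left boundary of the building, and the distance between it and the right window (door), the grid width w is the window width plus the full distance to the left boundary plus half of the distance to the right window (door), and the grid height h is the window height plus the full distance to the bottom of the building plus half of the distance to the upper window.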
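
The grid sizes described above can be illustrated with one small helper that works along a single axis. The half-distance rule between adjacent windows comes from the division principles stated earlier; extending a grid by the full distance on a side that faces the building boundary is an assumption of this sketch. The function `grid_extent` and its parameters are hypothetical names.

```python
def grid_extent(size, gap_before, gap_after, before_is_edge=False, after_is_edge=False):
    """Grid length along one axis for a window of length `size`.

    gap_before / gap_after: distances to the neighboring window boundary (or building edge)
    on each side. A gap to a neighboring window is shared equally, so only half is added.
    Assumption: a gap to the building edge is added in full.
    """
    before = gap_before if before_is_edge else gap_before / 2
    after = gap_after if after_is_edge else gap_after / 2
    return size + before + after


# Internal window: 1.0 m wide, 1.0 m to the left window, 0.5 m to the right window.
w_internal = grid_extent(1.0, 1.0, 0.5)
# Lower boundary window: 1.0 m high, 0.5 m to the building bottom, 1.0 m to the upper window.
h_boundary = grid_extent(1.0, 0.5, 1.0, before_is_edge=True)
```

A corner window uses the same helper twice, once per axis, with the edge flag set on the side that faces the building boundary.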

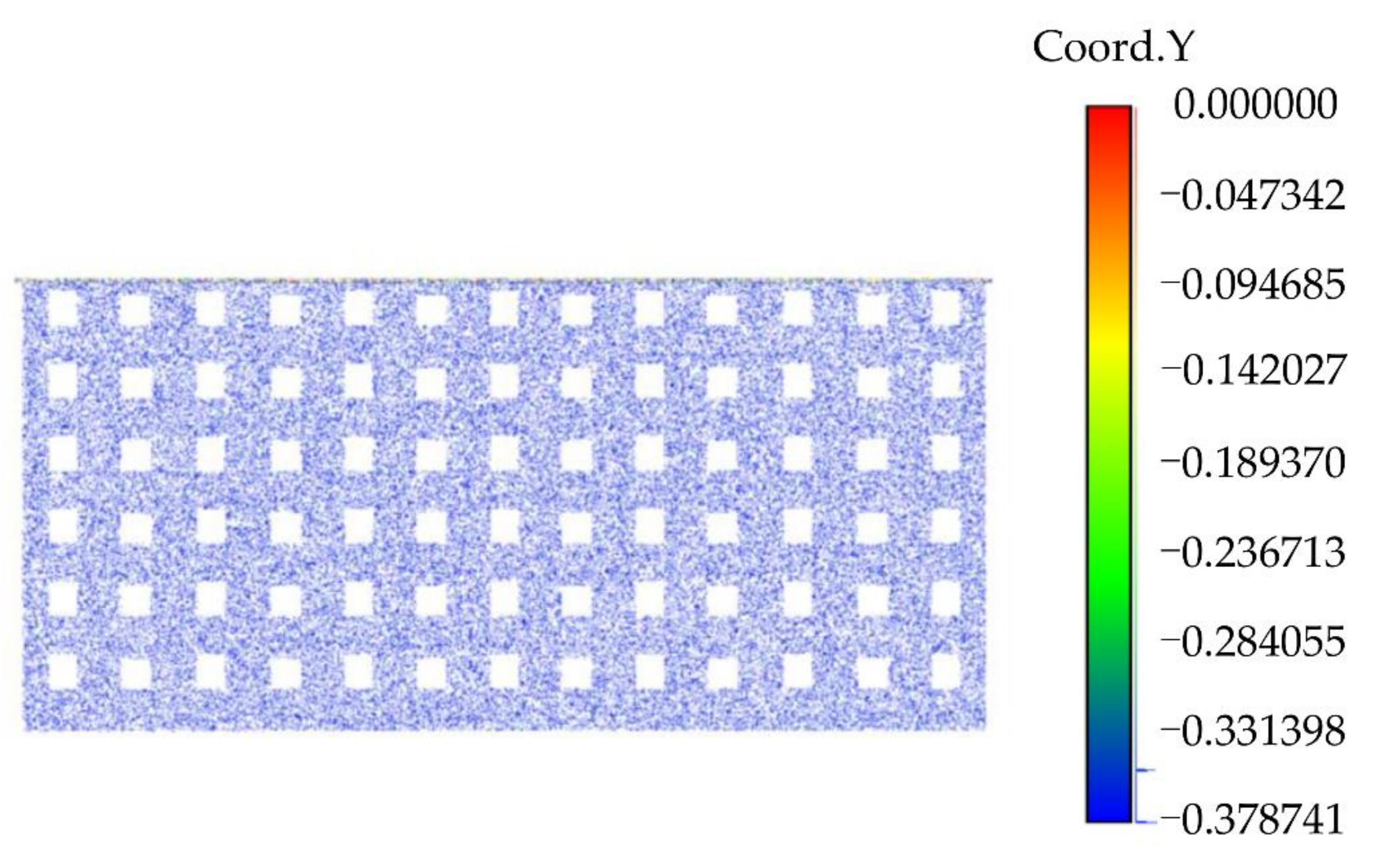

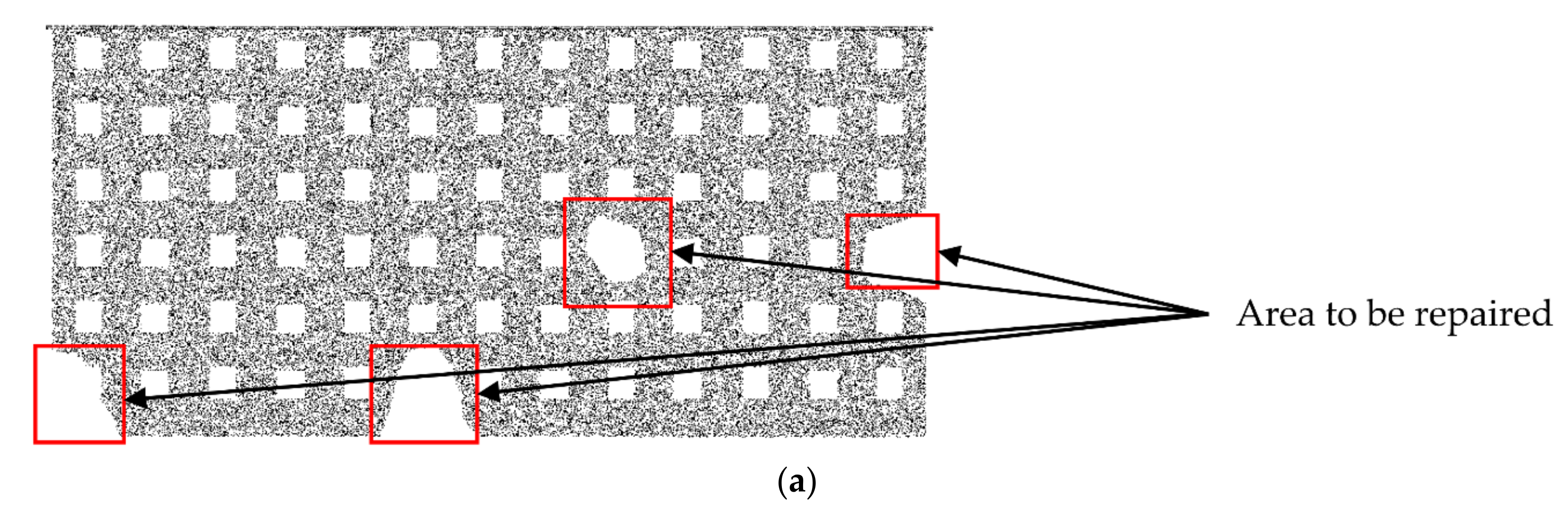

- Define a threshold on the number of points in a grid to determine whether the grid is to be repaired. The threshold is not fixed. Because the number of points in an occluded area is usually significantly lower than that in an unoccluded area, the threshold can be set according to the point density of all grids. Generally, it is set by Equation (3), which is expressed in terms of the total number of points in grid i, the volume of grid i, and the total number of grids n.
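
The sketch below shows one plausible reading of such a density-based threshold: the mean point density over all n grids, with a grid marked for repair when its own density falls below that mean. This is an assumption made for illustration, not a reproduction of Equation (3); the names `density_threshold` and `grids_to_repair` are hypothetical.

```python
def density_threshold(counts, volumes):
    """Mean point density (points per unit volume) over all grids. Assumed form of the threshold."""
    densities = [n / v for n, v in zip(counts, volumes)]
    return sum(densities) / len(densities)

def grids_to_repair(counts, volumes):
    """Indices of grids whose point density is below the threshold."""
    t = density_threshold(counts, volumes)
    return [i for i, (n, v) in enumerate(zip(counts, volumes)) if n / v < t]
```

Working with densities rather than raw counts keeps grids of different sizes comparable, which matters because boundary and corner grids differ in size from internal ones.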

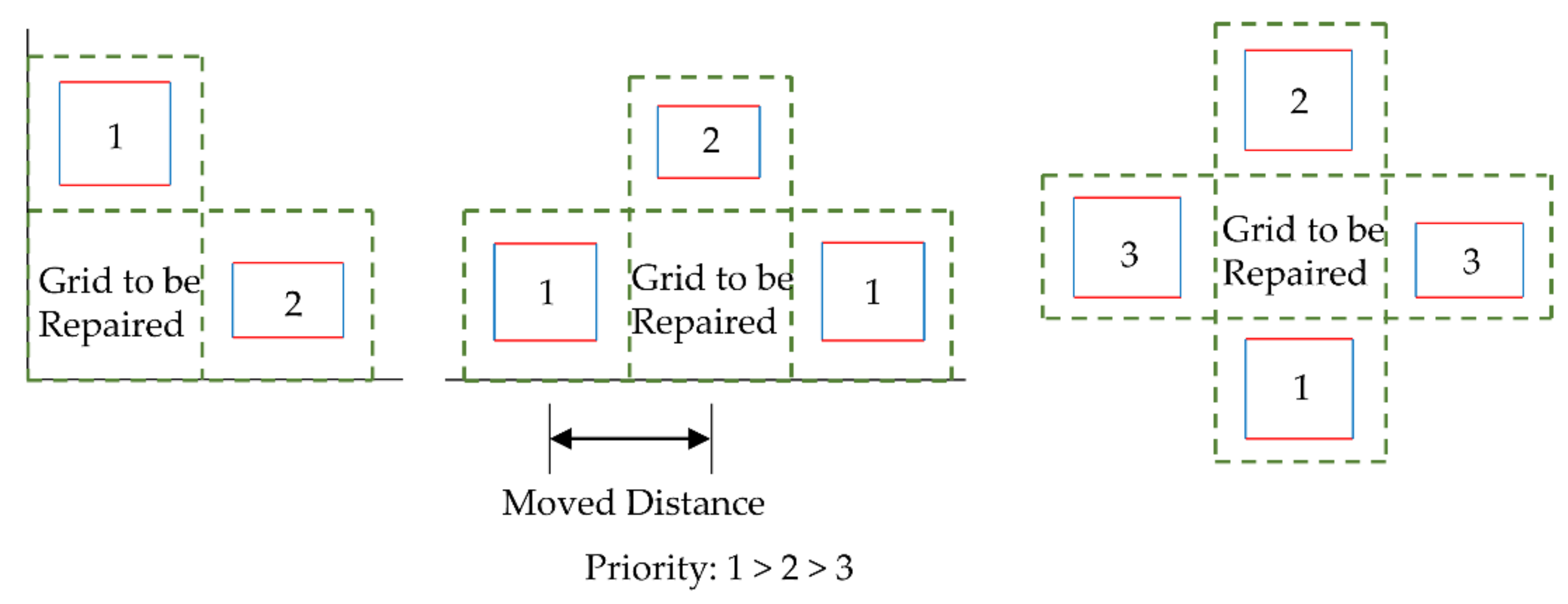

- When an internal grid is to be repaired, grids in the same column are selected first because the structural features within a column are similar. Owing to the characteristics of laser scanners, low-elevation point clouds are usually denser than high-elevation ones. Therefore, the lower adjacent grid in the column is selected first, and the upper adjacent grid second. When both the upper and the lower adjacent grids are themselves to be repaired, the left and the right adjacent grids in the row are selected. When all four adjacent grids are to be repaired, the grid is temporarily shelved and repaired in the next cycle, until all grids are repaired;

- When a boundary grid is to be repaired: for the upper and lower boundary grids, one side is close to the wall, so the grid height differs from that of the internal grids but is similar to that of the adjacent boundary grids. Therefore, the adjacent boundary grids are selected first for repair. When both adjacent boundary grids are themselves to be repaired, the adjacent internal grids are selected. When all the adjacent boundary grids and internal grids are to be repaired, the grid is temporarily shelved and repaired in the next cycle, until all grids are repaired. The repair principle for the left and right boundary grids is similar;

- When a corner grid is to be repaired, two sides of the grid are close to the wall, so its width usually differs from that of the upper and lower boundary grids and its height differs from that of the left and right boundary grids. This makes corner grids difficult to repair. We therefore first attempt a “copy–paste” repair from the adjacent grid in the same column, because door and window features within a column are usually similar or identical in most buildings, whereas features within a row may differ considerably. When the adjacent column grid is itself to be repaired, the adjacent row grid is selected. When all the adjacent column and row grids are to be repaired, the grid is temporarily shelved and repaired in the next cycle, until all grids are repaired;
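
The cyclic selection of a source grid can be sketched as a small scheduling loop. This is a simplified illustration that applies the internal-grid priority (lower, upper, left, right) to every grid and ignores the special handling of boundary and corner grids. The function `plan_repairs` and the boolean layout `needs_repair[row][col]`, with row 0 at the bottom of the facade, are hypothetical.

```python
def plan_repairs(needs_repair):
    """Return a list of (cycle, target, source) tuples, with grids addressed as (row, col)."""
    rows, cols = len(needs_repair), len(needs_repair[0])
    pending = {(r, c) for r in range(rows) for c in range(cols) if needs_repair[r][c]}
    plan = []
    cycle = 1
    while pending:
        done = []
        for (r, c) in sorted(pending):
            # Priority: lower, upper, left, right neighbor
            for sr, sc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                if 0 <= sr < rows and 0 <= sc < cols and (sr, sc) not in pending:
                    plan.append((cycle, (r, c), (sr, sc)))
                    done.append((r, c))
                    break
            # No usable neighbor: the grid is shelved until the next cycle
        if not done:
            break  # no intact grid is reachable, so nothing more can be repaired
        pending.difference_update(done)
        cycle += 1
    return plan
```

Because `pending` is only updated at the end of a cycle, a grid repaired in the current cycle becomes a valid source only in the next one, which mirrors the "shelve and retry" behavior described above.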

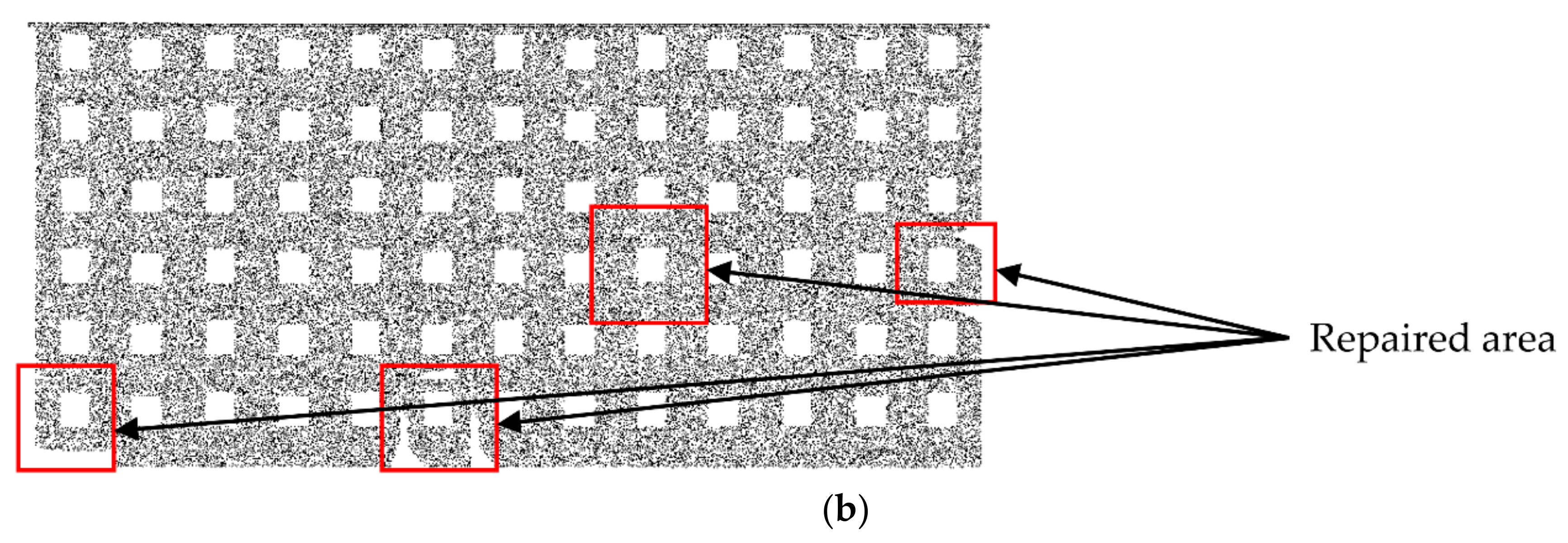

- After the point cloud repair principle is determined, for the grid to be repaired, we delete all points in the grid, copy all points in the adjacent grid, and fill these points into this grid. Finally, the repair of holes in the point cloud is realized through point cloud smoothing. Therefore, this method can also be regarded as a “copy–paste” repair method. Here, since the point cloud has been rotated to be parallel to the XOZ plane, when performing “copy–paste”, we need only to change the coordinates on a particular axis to complete the “paste”.
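
As a rough sketch of the “copy–paste” step, the function below deletes the points inside the target grid, copies the points of the source grid, and shifts them by the offset between the two grids in the facade plane. It assumes the facade is already parallel to the XOZ plane, that both grids have the same size, and it omits the final smoothing. The name `copy_paste` and the box layout `(x_min, x_max, z_min, z_max)` are hypothetical.

```python
def in_box(p, box):
    """True if point p = (x, y, z) lies inside box = (x_min, x_max, z_min, z_max)."""
    return box[0] <= p[0] < box[1] and box[2] <= p[2] < box[3]

def copy_paste(points, source_box, target_box):
    """Replace the content of target_box with a shifted copy of the content of source_box."""
    dx = target_box[0] - source_box[0]
    dz = target_box[2] - source_box[2]
    kept = [p for p in points if not in_box(p, target_box)]  # delete old target points
    pasted = [(x + dx, y, z + dz) for (x, y, z) in points if in_box((x, y, z), source_box)]
    return kept + pasted
```

When the source grid lies in the same row or column as the target, one of `dx` and `dz` is zero, so only the coordinates on a single axis change.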

2.6. Evaluation Indicators

3. Results

3.1. Point Cloud Rotation Results

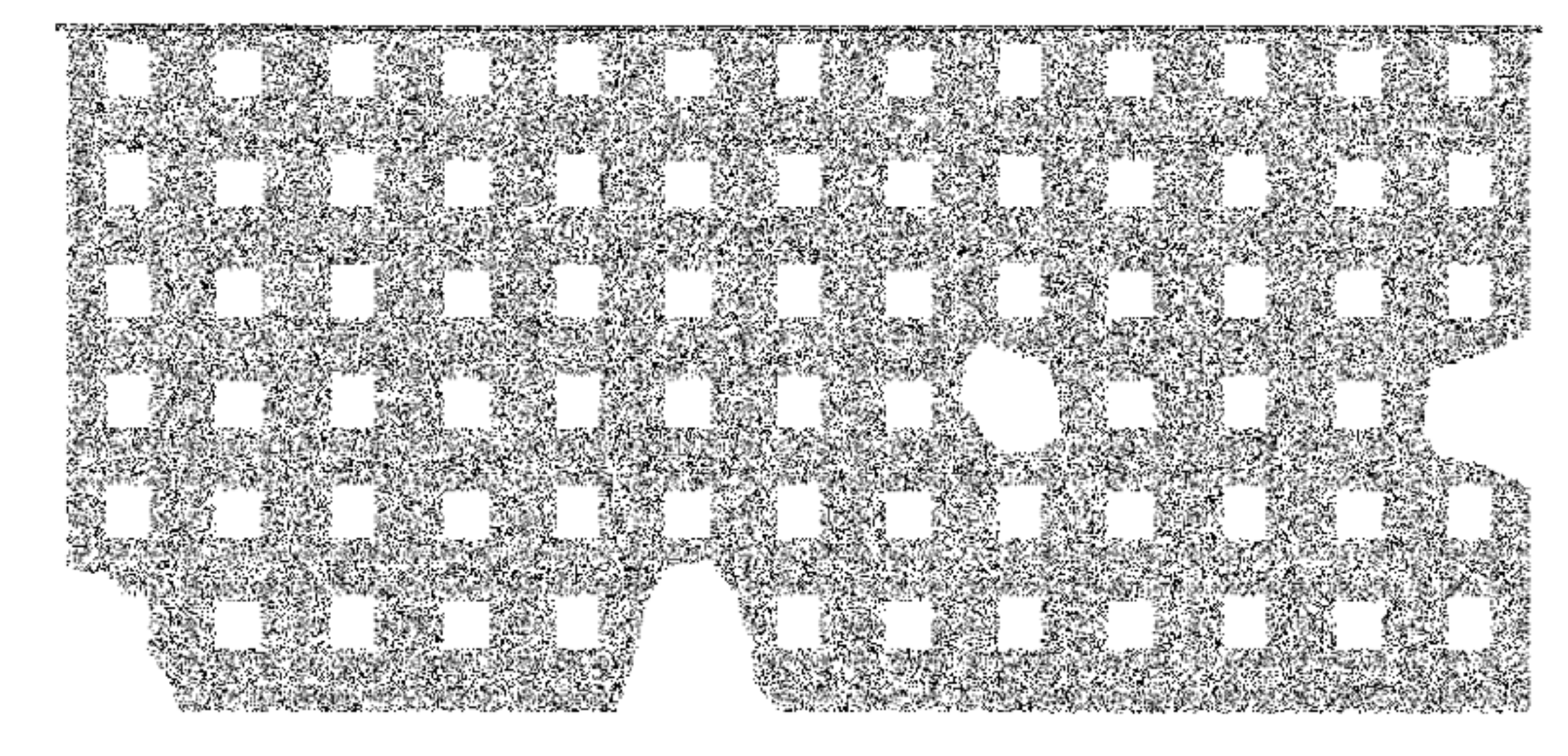

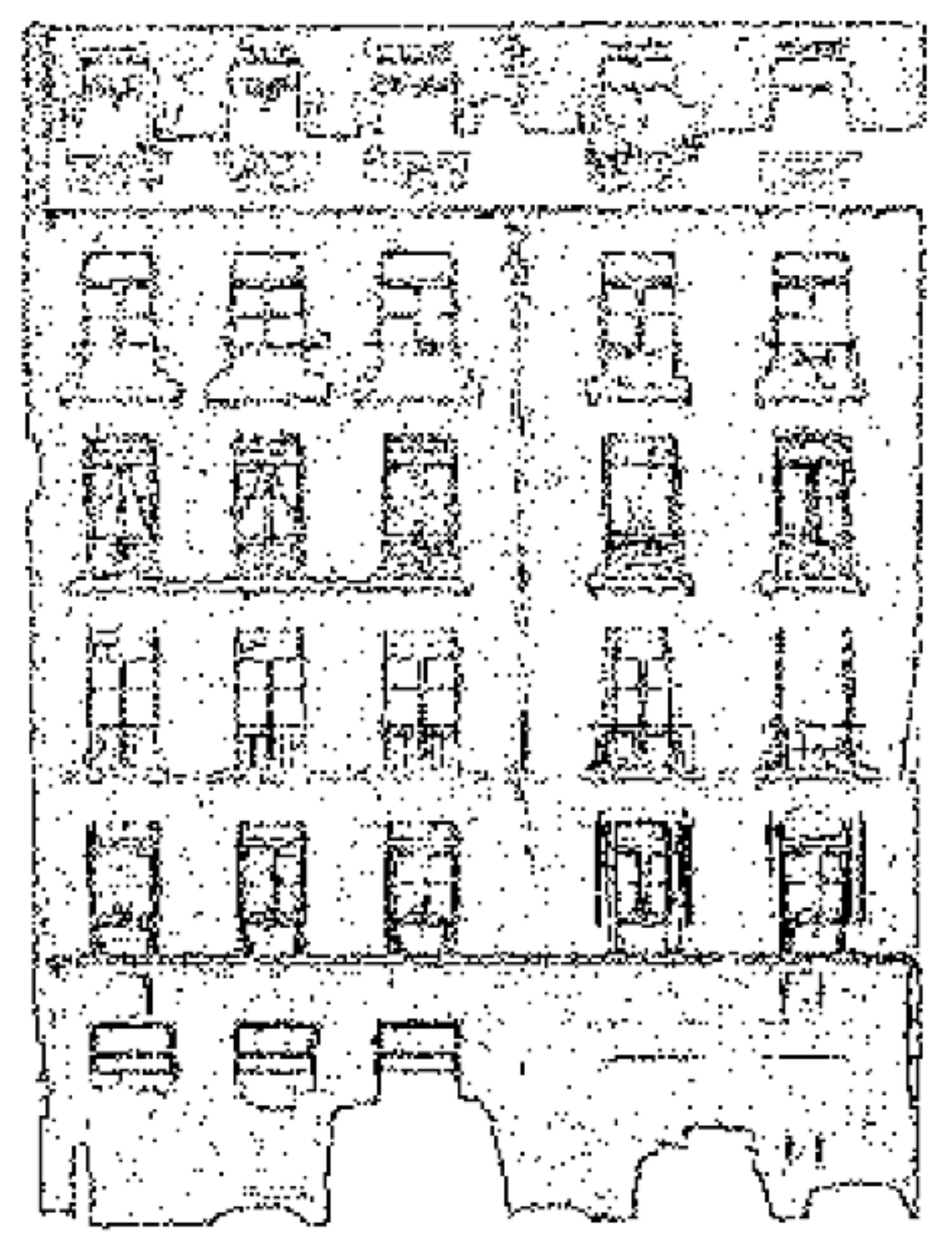

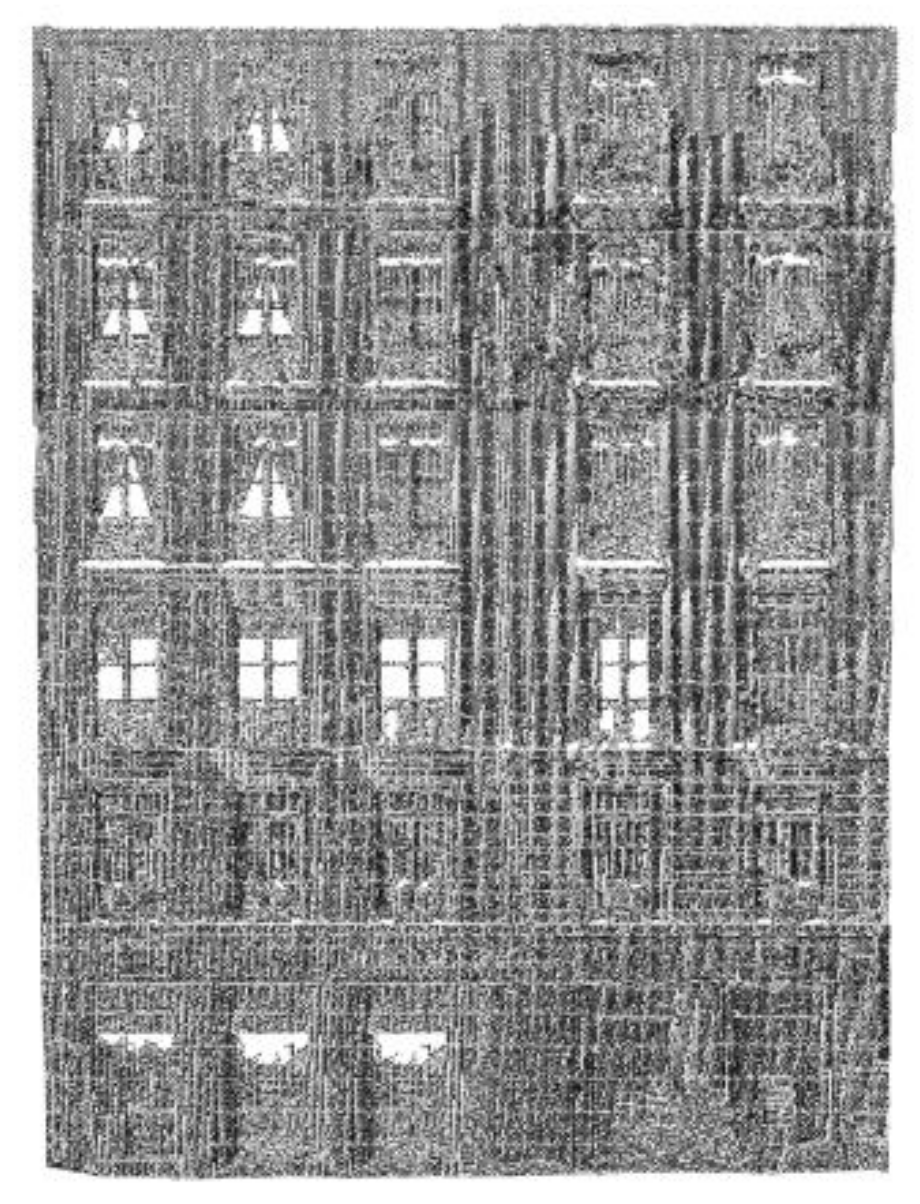

3.2. Feature Boundaries Extraction Results

3.3. Rectangle Translation Classification Results

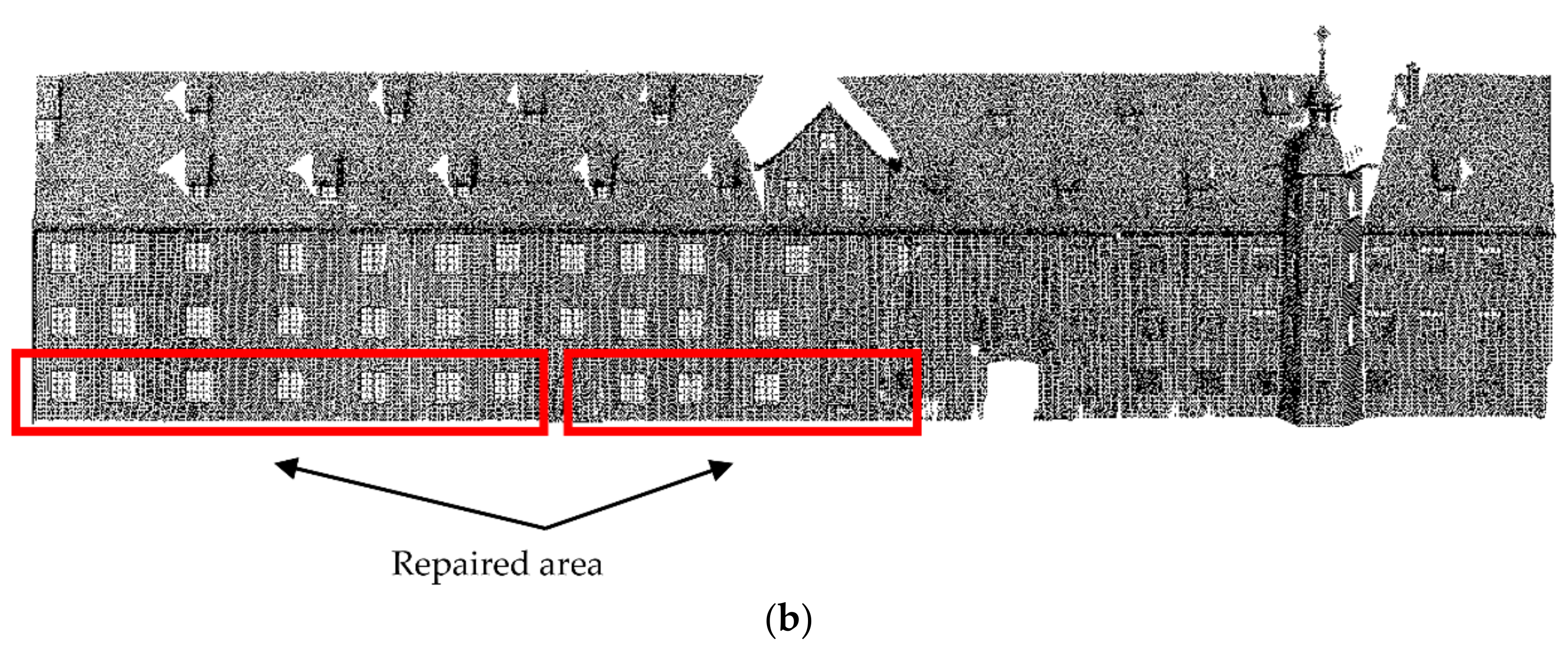

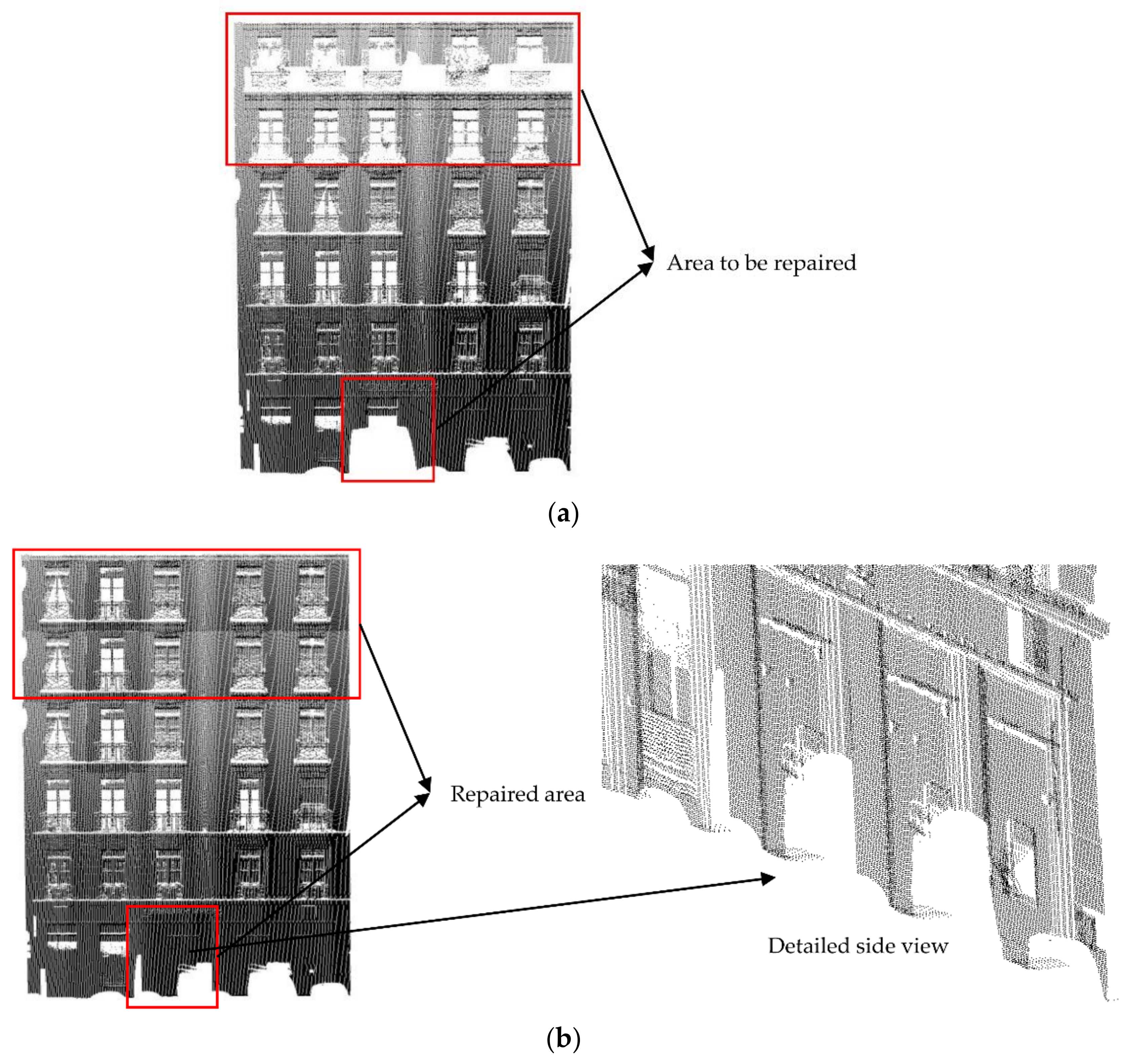

3.4. Point Cloud Repair Results

4. Discussion

4.1. The Discussion of Feature Boundaries Extraction Results

4.2. The Discussion of Point Cloud Repair Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Data | Point Number before Subsample | Point Number after Subsample | Point Number after Manual Hole | Point Spacing (Rp) after Subsample |
|---|---|---|---|---|
| Data 1 | 99,680 | 99,680 | 94,996 | 5.14 cm |
| Data 2 ¹ | 2,856,483 | 116,538 | | 3.83 cm |
| Data 3 ¹ | 1,611,887 | 124,080 | | 10.12 cm |
| Data 4 ² | 214,570 | 214,570 | | 3.56 cm |

| Data | Feature Boundaries Extraction: Parameter | Feature Boundaries Extraction: This Paper | Feature Boundaries Extraction: General | Classification: Parameter | Classification: This Paper | Classification: General | Grid-Division and Repair: This Paper | Grid-Division and Repair: General |
|---|---|---|---|---|---|---|---|---|
| Data 1 | r | 50 cm | (5–10) × Rp | s | 10 cm | s = 2 × rc | 13.36/m³ | Equation (3) |
| | k | 30 cm | (5–10) × Rp | rc | 5 cm | 5 cm | | |
| Data 2 | r | 30 cm | (5–10) × Rp | s | 10 cm | s = 2 × rc | 1823.48/m³ | Equation (3) |
| | k | 20 cm | (5–10) × Rp | rc | 5 cm | 5 cm | | |
| Data 3 | r | 50 cm | (5–10) × Rp | s | 10 cm | s = 2 × rc | 83.96/m³ | Equation (3) |
| | k | 50 cm | (5–10) × Rp | rc | 5 cm | 5 cm | | |
| Data 4 | r | 30 cm | (5–10) × Rp | s | 10 cm | s = 2 × rc | 511.93/m³ | Equation (3) |
| | k | 30 cm | (5–10) × Rp | rc | 5 cm | 5 cm | | |

| Data | Point Number after “Fuzzy” Repair | Point Number after Manual Repair | Integrity before Repair | Integrity after “Fuzzy” Repair |
|---|---|---|---|---|
| Data 1 | 98,279 | 99,680 | 95.30% | 98.59% |
| Data 2 | 124,770 | 127,865 | 91.14% | 97.58% |
| Data 3 | 128,691 | 128,929 | 96.24% | 99.82% |
| Data 4 | 243,768 | 297,188 | 72.20% | 82.02% |
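
The integrity values in this table are consistent with a simple ratio: the number of points in the data divided by the number of points after manual repair. The short check below recomputes them under that assumption. The point counts before repair are taken from the data table (after the manual hole for Data 1, after subsampling for Data 2–4), and the function name `integrity` is hypothetical.

```python
def integrity(n_points, n_reference):
    """Integrity in percent, assumed to be the point count relative to the manually repaired data."""
    return 100.0 * n_points / n_reference

# (points before repair, points after "fuzzy" repair, points after manual repair)
datasets = {
    "Data 1": (94996, 98279, 99680),
    "Data 2": (116538, 124770, 127865),
    "Data 3": (124080, 128691, 128929),
    "Data 4": (214570, 243768, 297188),
}

for name, (before, fuzzy, manual) in datasets.items():
    print(name, round(integrity(before, manual), 2), round(integrity(fuzzy, manual), 2))
```

Rounded to two decimals, the recomputed percentages match the tabulated ones for all four datasets.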

| Data 1 | Grid 1 | Grid 2 | Grid 3 | Grid 4 |
|---|---|---|---|---|
| Pangel | 0.00° | 0.00° | 0.02° | 0.21° |
| Pposition/cm | 0.03 | 0.05 | 0.03 | 0.03 |
| Data 2 | Grid 1 | Grid 2 | Grid 3 | Grid 4 |
| Pangel | 0.17° | 0.11° | 0.08° | 0.08° |
| Pposition/cm | 2.50 | 1.31 | 0.92 | 0.63 |
| | Grid 5 | Grid 6 | Grid 7 | Grid 8 |
| Pangel | 0.12° | 0.16° | 0.36° | 0.18° |
| Pposition/cm | 0.40 | 0.11 | 1.59 | 1.44 |
| | Grid 9 | Grid 10 | Grid 11 | Grid 12 |
| Pangel | 0.13° | 0.17° | 0.08° | 0.17° |
| Pposition/cm | 1.88 | 2.21 | 2.59 | 2.31 |
| Data 3 | Grid 1 | Grid 2 | Grid 3 | Grid 4 |
| Pangel | 0.19° | 0.19° | 0.22° | 0.35° |
| Pposition/cm | 0.56 | 0.41 | 0.18 | 0.14 |
| | Grid 5 | Grid 6 | Grid 7 | Grid 8 |
| Pangel | 0.54° | 0.41° | 0.18° | 0.14° |
| Pposition/cm | 0.22 | 0.19 | 0.09 | 0.81 |
| | Grid 9 | Grid 10 | Grid 11 | |
| Pangel | 0.19° | 0.21° | 0.23° | |
| Pposition/cm | 0.67 | 0.82 | 0.58 | |
| Data 4 | Grid 1 | Grid 2 | Grid 3 | Grid 4 |
| Pangel | 0.10° | 0.11° | 0.20° | 0.23° |
| Pposition/cm | 3.25 | 1.01 | 1.87 | 12.64 |
| | Grid 5 | Grid 6 | Grid 7 | Grid 8 |
| Pangel | 0.24° | 0.21° | 0.07° | 0.12° |
| Pposition/cm | 11.84 | 0.41 | 1.51 | 2.49 |
| | Grid 9 | Grid 10 | Grid 11 | |
| Pangel | 0.16° | 0.20° | 0.28° | |
| Pposition/cm | 16.86 | 15.79 | 0.62 | |

| Data | #1 | #2 | #3 | #4 | #5 | #6 | #7 |
|---|---|---|---|---|---|---|---|
| Data 1 | 2.76 | 8.90 | 0.16 | 12.41 | 1.14 | 25.37 | |
| Data 2 | 0.78 | 13.13 | 0.37 | 8.06 | 0.25 | 22.59 | 481.54 |
| Data 3 | 2.46 | 8.19 | 0.22 | 15.86 | 0.32 | 27.05 | 454.08 |
| Data 4 | 1.75 | 25.86 | 0.51 | 5.98 | 0.53 | 34.63 | 399.02 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Z.; Cheng, X.; Wu, J.; Zhang, L.; Li, Y.; Wu, Z. The “Fuzzy” Repair of Urban Building Facade Point Cloud Based on Distribution Regularity. Remote Sens. 2022, 14, 1090. https://doi.org/10.3390/rs14051090
