UAV Image Stitching Based on Optimal Seam and Half-Projective Warp

Abstract

1. Introduction

- We propose a new optimal seam algorithm built on a new difference matrix that combines color, structure, and line differences, so that it better reflects how much the two images differ in the overlapping region.

- We constrain the difference matrix with the minimum energy to further narrow the search range of the seam, and we design a seam search algorithm based on the minimum global energy, which increases the likelihood that the seam avoids structural objects.

- Guided by the position of the seam, we apply a half-projective warp to correct the image shape, so that more regions keep their original shape and the stitching quality improves.
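The difference matrix in the first contribution combines color, structure, and line differences. The paper's exact definitions are not reproduced in this section, so the following is only a minimal sketch under stated assumptions: the color term is the per-pixel RGB distance, the structure term is the difference of gradient magnitudes, the line term is a binary mask of pixels on detected line segments (for example from an LSD-style line detector), and the weights `w_color`, `w_struct`, and `w_line` as well as the function name `difference_matrix` are hypothetical.

```python
import numpy as np

def difference_matrix(a, b, line_mask=None, w_color=1.0, w_struct=1.0, w_line=1.0):
    """Hypothetical difference matrix for the overlap of two registered images.

    a, b: float arrays of shape (H, W, 3), the two images cropped to their
    common overlapping region (H, W >= 2).
    line_mask: optional boolean (H, W) array marking pixels that lie on line
    segments detected in either image.
    The three terms and the weights are illustrative assumptions.
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)

    # Color term: Euclidean distance between RGB values at each pixel.
    color = np.linalg.norm(a - b, axis=2)

    # Structure term: difference of gradient magnitudes of the gray images.
    def grad_mag(img):
        gy, gx = np.gradient(img.mean(axis=2))
        return np.hypot(gx, gy)

    structure = np.abs(grad_mag(a) - grad_mag(b))

    # Line term: a fixed penalty on pixels that belong to detected lines.
    if line_mask is None:
        line = np.zeros_like(color)
    else:
        line = np.asarray(line_mask, dtype=np.float64)

    return w_color * color + w_struct * structure + w_line * line
```

In this sketch, pixels on straight-line structures receive an extra cost, so a seam that minimizes the summed difference is discouraged from crossing them.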

2. Related Works

3. Materials and Methods

3.1. Optimal Seam Algorithm

- (1) Construct the difference matrix of the overlapping regions of two registered images;

- (2) Search for the optimal seam on the difference matrix.
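Step (2) can be illustrated with a generic minimum-cost path search. The sketch below treats the difference matrix as a grid of non-negative pixel costs and uses Dijkstra's algorithm over 8-connected neighbors to find the path between a start point and an end point with the lowest total cost, that is, the globally minimum-energy seam under this simple cost model. Dijkstra's algorithm is a swapped-in technique chosen for illustration, not necessarily the authors' search procedure, and the function name `min_energy_seam` is hypothetical.

```python
import heapq
import numpy as np

def min_energy_seam(D, start, end):
    """Return (path, cost) of the cheapest 8-connected path from start to end.

    D: 2-D array of non-negative per-pixel costs (e.g. a difference matrix).
    start, end: (row, col) tuples of ints; both endpoints' costs are counted.
    """
    D = np.asarray(D, dtype=np.float64)
    h, w = D.shape
    dist = np.full((h, w), np.inf)
    prev = {}
    dist[start] = D[start]
    heap = [(D[start], start)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if (r, c) == end:
            break  # first pop of the end point carries its final cost
        if d > dist[r, c]:
            continue  # stale heap entry
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < h and 0 <= nc < w:
                    nd = d + D[nr, nc]
                    if nd < dist[nr, nc]:
                        dist[nr, nc] = nd
                        prev[(nr, nc)] = (r, c)
                        heapq.heappush(heap, (nd, (nr, nc)))
    # Walk back from the end point to recover the seam.
    path = [end]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], float(dist[end])
```

For example, on a cost grid with a single cheap column, the returned seam follows that column from top to bottom.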

Algorithm 1: Calculation of search region

Input: difference matrix, start point, end point

Output: search region
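The steps of Algorithm 1 are not given in this section. One hypothetical way to turn a difference matrix, a start point, and an end point into a search region is to keep only the pixels that lie on some start-to-end path whose total cost is within a tolerance `tau` of the global minimum. The sketch below does this with two full Dijkstra passes, one from the start and one from the end; the criterion, `tau`, and the function names are assumptions made for illustration only.

```python
import heapq
import numpy as np

def _dijkstra_costs(D, source):
    """Cheapest 8-connected path cost from source to every pixel (both ends counted)."""
    h, w = D.shape
    dist = np.full((h, w), np.inf)
    dist[source] = D[source]
    heap = [(D[source], source)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if d > dist[r, c]:
            continue  # stale heap entry
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < h and 0 <= nc < w and d + D[nr, nc] < dist[nr, nc]:
                    dist[nr, nc] = d + D[nr, nc]
                    heapq.heappush(heap, (dist[nr, nc], (nr, nc)))
    return dist

def search_region(D, start, end, tau=0.2):
    """Boolean mask of pixels on near-optimal start-to-end paths (hypothetical criterion)."""
    D = np.asarray(D, dtype=np.float64)
    ds = _dijkstra_costs(D, start)
    de = _dijkstra_costs(D, end)
    # Cost of the best path forced through each pixel; D is counted in both ds and de.
    through = ds + de - D
    best = ds[end]
    return through <= (1.0 + tau) * best + 1e-9
```

With `tau = 0` the region shrinks to the pixels of the optimal path(s); larger values widen the band that a subsequent seam search is allowed to explore.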

3.2. Half-Projective Warps
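The third contribution applies a half-projective warp guided by the seam position. A shape-preserving half-projective warp generally keeps a homography in the overlapping region and changes gradually into a similarity transform away from it. The sketch below is a much simpler stand-in, not that formulation: it maps points with a homography `H` up to a column `x1` (which one might place at the seam), with a similarity `S` beyond a column `x2`, and linearly interpolates the two mapped positions in between. The matrices, thresholds, and function names are hypothetical.

```python
import numpy as np

def apply_homography(H, pts):
    """Map an (N, 2) array of (x, y) points with a 3x3 projective matrix."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    out = pts_h @ H.T
    return out[:, :2] / out[:, 2:3]

def half_projective_map(pts, H, S, x1, x2):
    """Hypothetical half-projective mapping controlled by two column positions.

    pts: (N, 2) array of (x, y) coordinates in the image being warped.
    H: 3x3 homography applied for x <= x1 (e.g. x1 placed at the seam).
    S: 3x3 similarity matrix applied for x >= x2 (requires x2 > x1).
    In between, the two mapped positions are linearly interpolated.
    """
    pts = np.asarray(pts, dtype=np.float64)
    # Weight 0 -> pure homography, weight 1 -> pure similarity.
    w = np.clip((pts[:, 0] - x1) / (x2 - x1), 0.0, 1.0)[:, None]
    return (1.0 - w) * apply_homography(H, pts) + w * apply_homography(S, pts)
```

Blending mapped positions like this can shear or fold the image when `H` and `S` disagree strongly, which is one reason published half-projective warps construct the transition region more carefully.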

4. Results

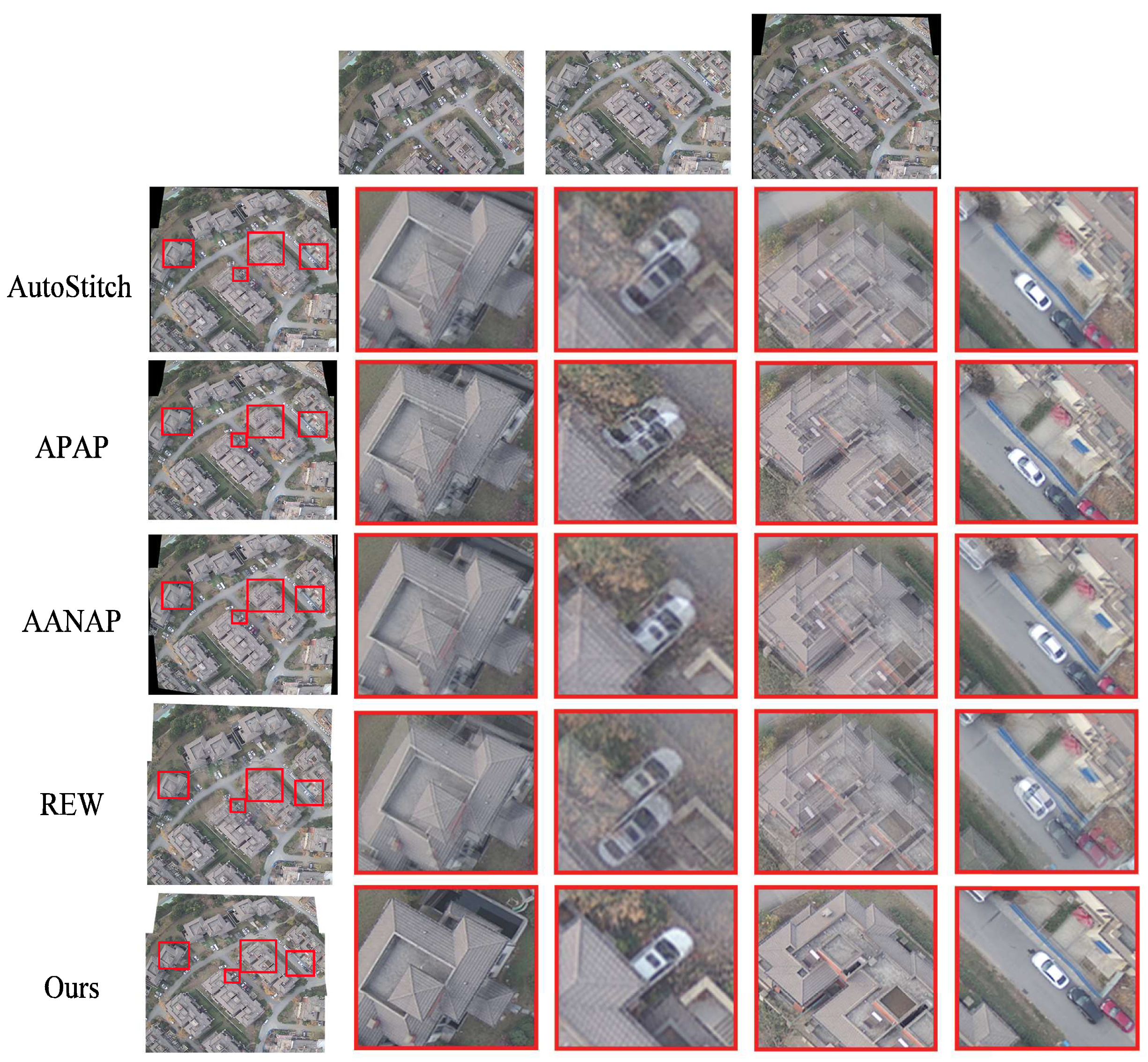

4.1. Visual Comparison

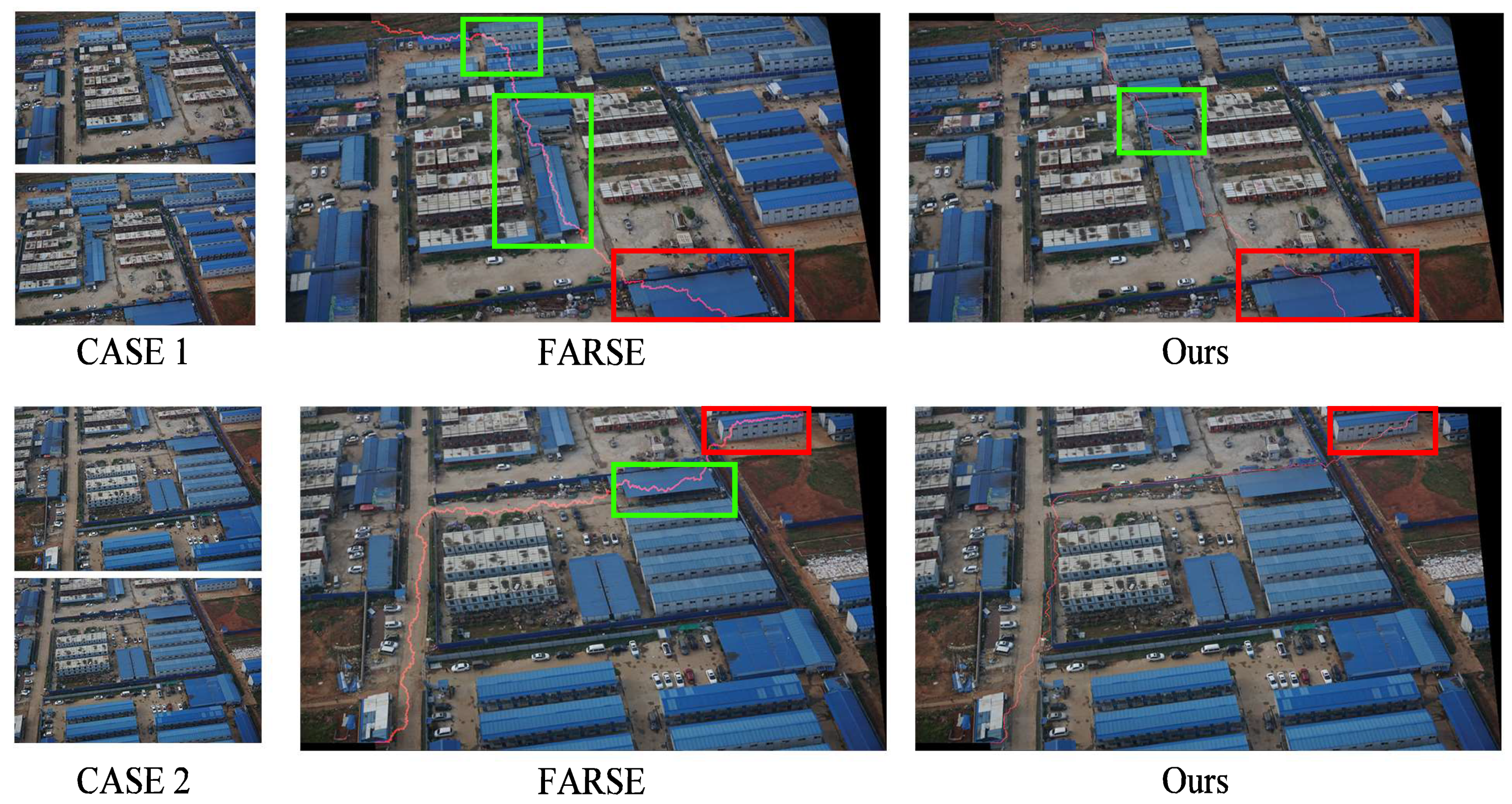

4.2. Seam Comparison

4.3. Shape Correction

4.4. Extended Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | FARSE | PSC | QEISE | Ours |
|---|---|---|---|---|
| Case 1 | 51.9395 | 46.2676 | 52.4685 | 53.1513 |
| Case 2 | 49.0071 | 35.1541 | 49.4543 | 51.8483 |
| Case 3 | 47.0600 | 34.7118 | 48.9986 | 50.3483 |
| Case 4 | 51.1492 | 49.1601 | 50.5044 | 53.3715 |

| | FARSE | PSC | QEISE | Ours |
|---|---|---|---|---|
| Case 1 | 0.0176 | 0.0276 | 0.0141 | 0.0133 |
| Case 2 | 0.0079 | 0.2391 | 0.0131 | 0.0073 |
| Case 3 | 0.0088 | 0.2039 | 0.0135 | 0.0080 |
| Case 4 | 0.0186 | 0.0253 | 0.0244 | 0.0154 |

| | FARSE | PSC | QEISE | Ours |
|---|---|---|---|---|
| Case 1 | 3.12 | 12.26 | 20.24 | 7.24 |
| Case 2 | 2.70 | 7.16 | 9.05 | 6.31 |
| Case 3 | 2.59 | 5.24 | 5.33 | 4.14 |
| Case 4 | 2.36 | 7.46 | 6.535 | 4.68 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Li, Z.; Peng, C.; Wang, Y.; Gong, W. UAV Image Stitching Based on Optimal Seam and Half-Projective Warp. Remote Sens. 2022, 14, 1068. https://doi.org/10.3390/rs14051068

Chen J, Li Z, Peng C, Wang Y, Gong W. UAV Image Stitching Based on Optimal Seam and Half-Projective Warp. Remote Sensing. 2022; 14(5):1068. https://doi.org/10.3390/rs14051068

Chicago/Turabian Style: Chen, Jun, Zixian Li, Chengli Peng, Yong Wang, and Wenping Gong. 2022. "UAV Image Stitching Based on Optimal Seam and Half-Projective Warp" Remote Sensing 14, no. 5: 1068. https://doi.org/10.3390/rs14051068

APA Style: Chen, J., Li, Z., Peng, C., Wang, Y., & Gong, W. (2022). UAV Image Stitching Based on Optimal Seam and Half-Projective Warp. Remote Sensing, 14(5), 1068. https://doi.org/10.3390/rs14051068