1. Introduction

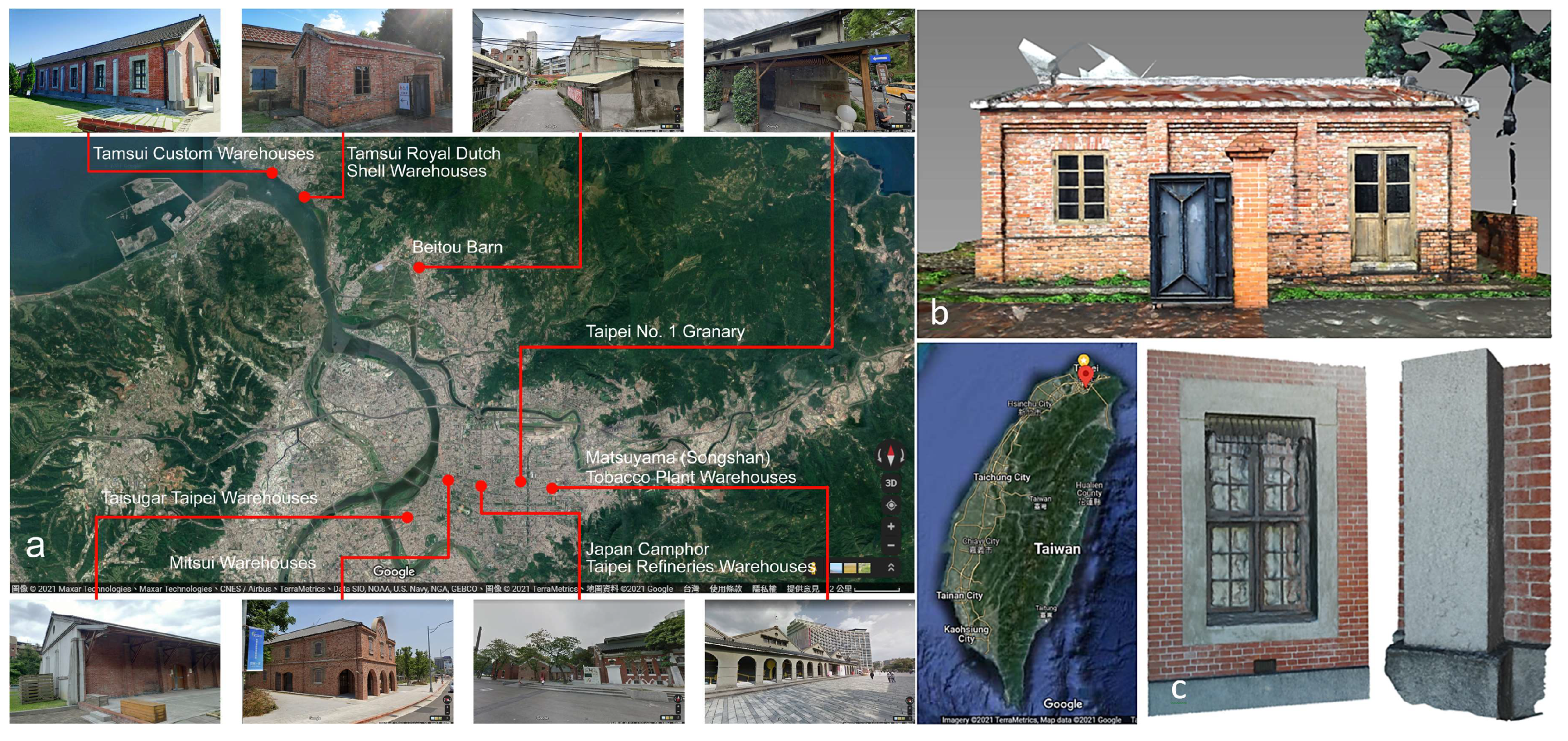

One of the best ways to explore the historical context of a city is through its industry-related constructions. Construction materials such as bricks contribute to the spatio-temporal background and geographic distribution of details by means of layout, style, and system, under the impact of industry, culture, and technology. Industry and brick warehouses represent two of the main entry points to understand the early history of Taiwan. Most industrial buildings were built with bricks. The manufacturing of bricks began in the Qing Dynasty (from 1644 to 1912). In contrast to the small quantity produced in early periods, new techniques from Japan led to mass production. Brick warehouses (Figure 1) were designed to process raw materials (e.g., sugar, alcohol, camphor, and tobacco), support heavy industry (e.g., ordnance and railroad), store goods (e.g., rice), and exchange resources at trade ports. The elucidation of the relationship between the two subjects can deepen our knowledge of tangible heritage according to different stages of development.

Construction materials and methods represent professional practices in the assessment of design quality and the systematic interface for adjacent components. Architectural drawings of details may be missing for old buildings, so fieldwork is required to reconstruct 3D models and enable inspection. The reconstruction process and the interaction platform constitute the two major aspects that must be appropriately managed.

The reconstruction of 3D models is conducted to reformulate spatial relationships for the observation, interaction, and comparison of reorganized building components. The reconstruction of industry and brick warehouses facilitates an architectural domain-specific inspection of brick and corresponding details, in which the models can be accessed from remote sites in various situations.

Photogrammetry has been applied to reconstruct 3D models using aerial images or images taken at ground level. This approach has a broad range of applications, from imagery taken by unmanned aerial systems (UASs) to artifacts documented at short range. The reconstructed model usually provides different levels of detail, which is of concern for situated studies either in a remote site or in social media. It is necessary to reformulate an environment to suit the role of 3D data, the related augmented reality (AR) interactions, and today’s communication needs. The traditional distance should be reinterpreted and extended to physical attributes geo-referenced in virtual space, without boundaries.

When objects are interacted with in an AR platform, an opportunity is provided to cross-reference the structural and visual details between building components from a remote site. This experience can be extended to remote video conferencing, with documented results. The documentation of brick warehouse details in AR can be challenging when parties on both ends of a video conference require the relative location of details in 3D, instead of annotations using 2D screenshots. Questions may be raised if the documentation and verification of the final AR result can be achieved based on a proper estimation of the relative location in a simulation.

If the AR-enabled interaction can be extended to architecture using the same manipulation process, construction details can be created and reactivated in the new 3D form to facilitate research inspections, especially in heritage buildings such as brick warehouses.

1.1. Research Goal

This study aimed to provide a method to document the relative locations of two models after manipulation in an AR platform, based on framed imagery or streamed videos in conferencing or broadcasting. This method compared brick details and documented the interacted result at the correct scale and relative location. To meet the abovementioned requirement, we applied photogrammetry modeling and smartphone AR for both the first and secondary reconstructions of the 3D brick warehouse details. These were applied to reconstruct a 3D base model as the first part of the paired comparison in the AR simulation. A secondary reconstruction was conducted using the inspection video from the comparison results, to assure each involved party that the geometries were feasible for verification and 3D prints.

The AR-based 3D reconstruction of multiple imagery and video resources should enable the documentation of the configuration of AR-interacted brick details on site, or in remote comparisons. In creating secondary photogrammetry modeling, we aimed to determine if the process could create feasible structural and visual details based on videos recorded from real-time conferencing, field screens, and broadcasting from an AR platform using a smartphone. In addition, a 3D printout of the simulated results should be provided to verify elements in physical form.

Since many workspaces have become decentralized due to the COVID-19 pandemic, video conferencing has become ubiquitous, and should be integrated with an effective 3D reconstruction method for content delivery to fulfill remote-based tasks in real time.

1.2. AR Studies

We mainly selected references published within the last five years to obtain more up-to-date information, although many earlier references also presented significant achievements. AR enables real and virtual information in an actual environment to be interacted with in real time [1,2,3]. Virtual content can be created from models originating in the physical world, with a highly realistic appearance. Users can explore reality within the real world [4], along with new layers of information, using mobile AR applications for novel interactive and highly dynamic experiences [5,6].

AR applications have been successfully implemented in a broad range of fields, including navigation, education, industry, medical practice, and landscape architecture [7,8,9,10,11]. Extended reality and informative models have been created for architectural heritage from scans, building information modeling (BIM), virtual reality (VR), and AR, among others [12]. Heritage-related BIM provides complexity in both surveying and preserving [13].

Construction-specific studies of the finer details of VR/AR environments have attracted significant attention [14], and researchers have simulated the environmental context and spatio-temporal constraints of various processes [15]. The dimensional inconsistencies of building design found by VR participants can be partially alleviated with AR, by adding an accurately scaled real object [16].

The operation of 3D objects should be straightforward, to facilitate thorough knowledge delivery and exploration implemented by AR, including learning [17,18], locating an object [19], understanding cultural aspects [20], enhancing laboratory learning environments [21], and implementing a mobile AR system for creative course subjects [22]. Links between real world objects and digital media should also provide a novel and beneficial tool for researchers.

1.3. Structural and Visual Details in AR

AR virtual models that represent subjects of interest have to be sufficiently detailed to attract users, or to effectively deliver specific instructions. Light detection and ranging (LiDAR) and photogrammetric modeling have gained attention in creating photorealistic 3D models. The former reconstructs models through 3D scans of an object in its as-built form. The latter, Structure-from-Motion (SfM) photogrammetry, has been used to capture complex topography and assess volume in an inexpensive, effective, and flexible approach [23], with minimal expensive equipment or specialist expertise [24]. Applications can be found in wood [25,26], archaeology [27], cultural heritage [28], architecture [29], construction [30], and construction progress tracking [31]. For large areas and irregular shapes, airborne photogrammetry and modeling has been applied to adapt architectural configurations to the steep slope of sites [32], or to assess stone excavation [33]. Geospatial surveys are associated with UAVs [34]; an example includes assessing the percentage of cover and mean height of pine saplings [26]. Photogrammetry can be applied for small regular objects in indoor scenes [35]. In addition to using consumer grade digital cameras for the low-cost acquisition of 3D data [36], camera performance today enables smartphones to be considered as promising devices to capture imagery.

AR applications have been proven to be feasible for varied cases [37,38,39,40,41] and scales [42,43], with awareness of both location and context [44]. It should be noted that documentation is related to information systems [45] and is part of a communication model [46] for exploration and dissemination [47]. Engagement and learning have also been assessed [48].

AR mobile solutions have evolved from online shopping experiences, and contribute to end-to-end scalable AR and 3D platforms for visualization and communication [49,50,51]. Solutions learned from ecommerce, field sales, education, and design can be utilized to reduce the cost of physical travel, logistics, validation, and prototyping processes [52,53]. Considering the similar natures of architectural representations of building components, structural and visual details can be applied in the same manner for visualization and communication.

1.4. Video Conferencing and Remote Collaboration with AR

Telework has dramatically increased since the beginning of the COVID-19 pandemic and will potentially continue into the future. Many professionals who used to work in person have switched to remote assistance, advice, or collaboration. This has led to a new trend of AR using on-screen smartphone instructions [54,55]. AR remote assistance, such as “see what I see” apps, can provide better knowledge transfer via a peer-to-peer connection that incorporates video, audio, or hand annotations [56,57,58]. Other studies of AR assistance have provided remote technical support, including those for maintenance [52], manufacturing [54,59], automotive [60], and utilities [61], with visual instructions or virtual user manuals [53,55] by means of annotations and content uploaded to phones, tablets, and AR glasses.

A synchronized first-person view offers the same perspective and enables video conferencing or online meetings across devices or platforms through, for example, Webex®, Google Meet®, Skype®, and Zoom®. In remote collaboration, different AR system approaches have been developed to improve communication efficiency by applying gaze-visualization platforms for physical tasks [62], 3D gesture and CAD models of mixed reality for training tasks [63], essential factors for remote collaboration [64], image- or live-video-based AR collaborations for industrial applications [65], remote diagnosis for complex equipment [66], 360° video cameras (360 cameras) in augmented virtual teleportation (AVT) for high-fidelity telecollaboration [67], web-based extended reality (XR) for physical environments with physical objects [68], Industry 4.0 environments [59], 2D/3D telecollaboration [69], and indoor construction monitoring [70].

2. Materials and Methods

In architectural field work, the content is communicated by means of 2D drawings, 3D models, or wall finish specifications. For 3D models, the quality of structural and visual details contributes to the level of reality that can be efficiently and effectively interacted and communicated. The diversity of the interacted results should be formulated in order to be accessed by multiple applications and communication platforms, to increase the efficiency of such media for users. Moreover, interactions with field data need to be verified or confirmed. As a result, real-time communication should be achieved by sharing the reality on both sides of an online meeting, via sufficiently detailed documentation.

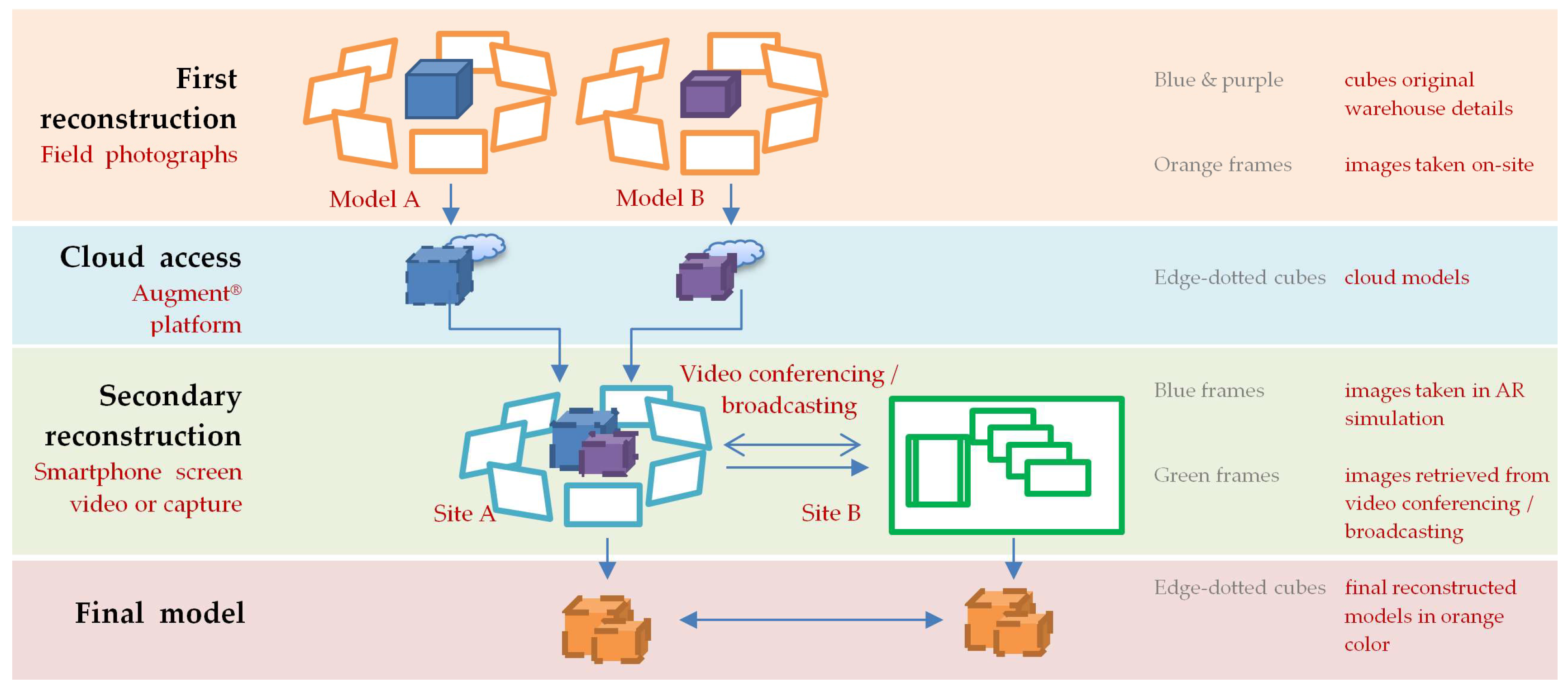

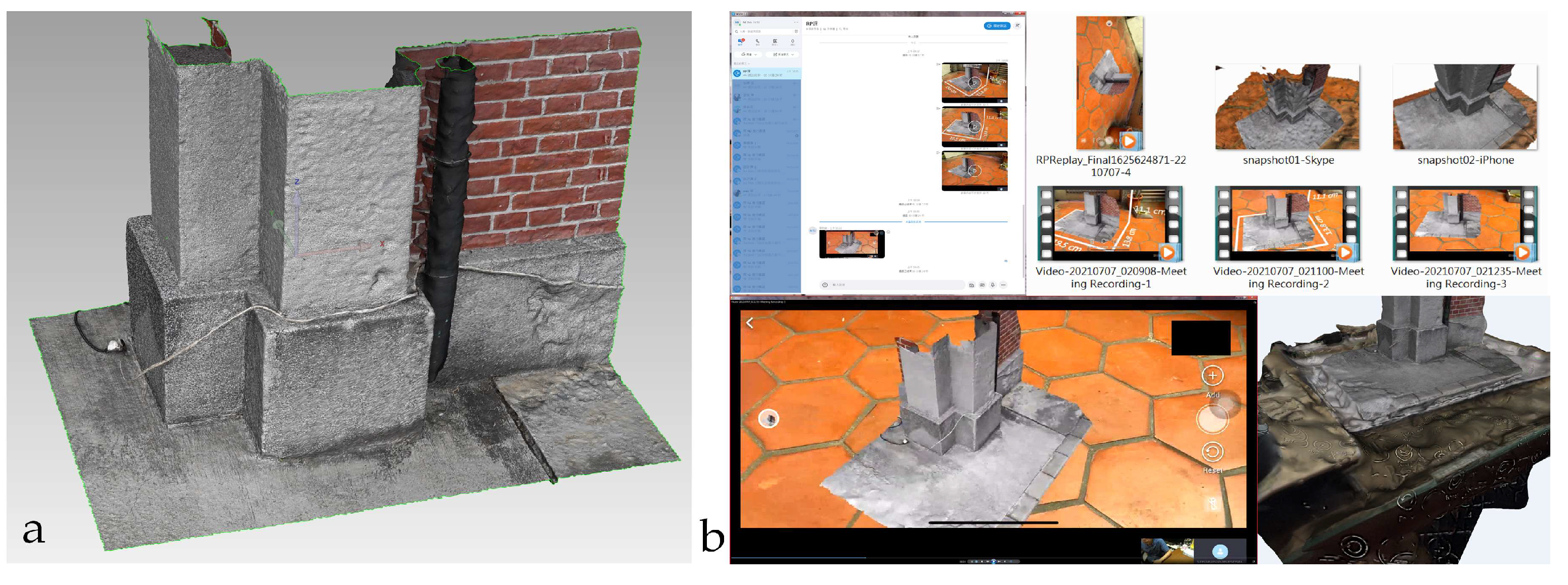

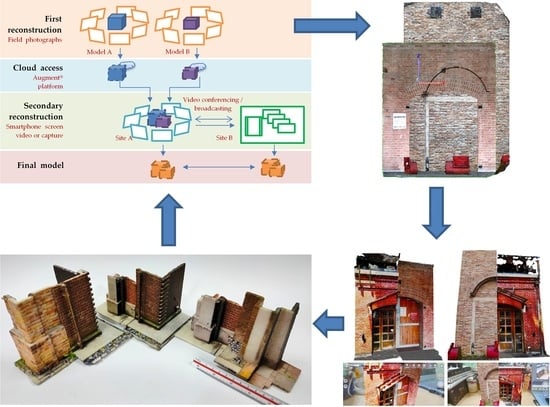

In total, 146 3D AR database models were created to enable cloud access from a smartphone (Figure 2). Photogrammetry modeling and smartphone AR were used for the first and secondary 3D reconstruction of brick warehouse details, and for comparison of the results, in Taipei, Taiwan. The first reconstructed model was used as the ground truth model for comparison with the second reconstructed model. The process applied multiple imagery resources from video conferences, broadcasts, and smartphone screen video of interacted models on the Augment® AR platform, through a smartphone. Augment® is a ready-made AR platform that was originally designed for commercial use. Comparisons were made on site, in a home office, and through video conferencing from a remote location to verify and document the final result.

This method provided collaborative assistance in a metaverse, by enabling free communication between the virtual and real world. By referring to the similar brick dimensions, we found that a sequential reconstruction process enabled cross-referencing between warehouses. AR enabled the study and documentation of cross-referenced results at the same time in the same environment. The construction complexity of inspected parts was interpreted by a simplified AR-based reconstruction process, with details in virtual and physical form. The method emphasized a novel representation and verification approach by using 3D color rapid prototyping (RP) models to conclude the result for pedagogical illustration.

2.1. The First 3D Reconstruction of Details and AR Models

We analyzed eight brick warehouses and 146 3D details. Three-dimensional models were first reconstructed with Zephyr®, AutoDesk Recap Photo®, or AliceVision Meshroom® using images taken with a smartphone, i.e., the same device used to interact with the 3D models. The reconstructed models were edited by trimming, decimation, color enhancement, or manifold checking prior to being used for comparison or being exported to the cloud-based Augment® platform. The cloud-uploaded models were further checked and edited within the AR platform by adjusting the origin location, orientation, surface normal, and dimensions for application feasibility. Each AR model was assigned a QR code for easy access from remote sites.

2.2. Interactions of Two Details

Interactions were made for two details, the base model and the intervention model, by adjusting relative locations to reveal differences. A base model, which was the target detail to be compared, was reconstructed first and acted as a reference in the background. A new intervention model, which was the new model to compare, was inserted next to the base in the same AR environment, to find the differences in dimension, layout, or construction method. Any first reconstructed 3D model can be used as a base model to support subsequent comparisons. Both models contributed to the second photogrammetric reconstruction, which created a new verification model to confirm and document relative locations.

Allocating two details side by side assisted us with discerning the changes made to the corresponding parts. The link between AR interaction and architectural study was verified based on the definition of building components and possible manipulation. The comparison was recorded in a building matrix of eight component types and 28 subelements (Table 1). The assessment was performed by classifying building parts, brick-related properties, construction-related details, and quality-related references. The building parts of this study were classified as the foundation, pavement, wall, opening, column, buttress, joint, finish, and molding. Brick-related properties included manufacturer, size, and surface attributes. Construction-related details included layout, trim, molding, edge/corner, ending, and joint. Quality-related references comprised the alignment, smoothness, tolerance, and vertical and horizontal alignment.

2.3. 3D Prints

A 3D-printed model was applied as an effective physical representation of the data. The second photogrammetric model was 3D-printed to document and verify results and details originating from the AR interaction. The color model was printed with layers of powder with inkjet dyes (ComeTrue® T10) for texture verification. It was a scaled model used to identify the relationships of the geometric attributes between two components. The models were initially printed using single-colored ABS filaments in fused deposition modeling (FDM) by UP Plus 2®. The 12 cm models illustrated brick layouts and joints with a 0.2 mm thickness, thin fill-in, and in fast mode. The detail presented in the first reconstruction could be improved to obtain a fine appearance by increasing the model resolution in the photogrammetry application, changing the settings by altering the layer thickness, and changing the output speed from fast to normal or slow.
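The effect of the layer-thickness setting can be illustrated with a small piece of arithmetic. The sketch below is only an illustration: it assumes that the stated 12 cm is the model height along the build axis, which the text does not specify, and the function name `layer_count` is hypothetical.

```python
def layer_count(height_mm: float, layer_mm: float) -> int:
    """Number of printed layers needed to reach height_mm, rounded up.

    Assumption: height_mm is measured along the build (Z) axis.
    """
    # Work in whole micrometres so that 0.2 mm steps carry no float error.
    height_um = round(height_mm * 1000)
    layer_um = round(layer_mm * 1000)
    if height_um <= 0 or layer_um <= 0:
        raise ValueError("height and layer thickness must be positive")
    return -(-height_um // layer_um)  # ceiling division on integers


if __name__ == "__main__":
    # 12 cm model printed at a 0.2 mm layer thickness, as in the text.
    print(layer_count(120.0, 0.2))
```

Under this assumption, a thinner layer setting raises the layer count in inverse proportion, which is one reason finer settings lengthen print time.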

The visual and structural details were self-explanatory. The first reconstruction, which usually presented better details in both plain and color 3D prints, contributed to the verification of AR models with additional friendly experiences to inspect paired instances.

3. Results

This method applied ready-made hardware and software environments to integrate model preparation, interaction, and documentation together in AR. Each model can be a base model and intervention model. This exclusive process contributed to the discovery that brick construction represents a systematic application of materials. A building component, such as a column or a window, constitutes a micro-system through which an interface was established to connect adjacent parts, such as walls, frames, canopies, moldings, structural elements, wainscots, ceilings, and the ground. The reconstructions of micro-systems were performed from a remote site through the control of screenshots or videos and software-assisted rendering in separate locations, including the laboratory, office, and home. Two different scenarios were applied: on site with a real background, and in a laboratory with two high-fidelity AR models.

3.1. 3D Reconstruction of Result and the Sources of Imagery

The AR interaction findings were verified by the second reconstruction. We found that the relative scale and location were better presented and confirmed through online meetings. A collaboration of tools and the environment was presented using video conferencing (Skype®), screen sharing (Skype®), and broadcasting (YouTube®) (Table 2). We first selected and recorded video from Skype® to enable the reconstruction, performed in collaboration between the office, home, and laboratory settings. The Skype®-based reconstruction enabled remote assistance in AR and verification by RP models in ABS or powder-based color prints. YouTube® broadcasts were applied using a smartphone with a 3840 × 1644 resolution, as a media-independent alternative for a better reconstructed model quality.

The findings, based on the interaction results, needed to be documented as proof of differences, so the second level of photogrammetric modeling was applied to the two models, or the model and the real background. The process was conducted and confirmed from a remote site through video conferencing, using the first-person view from a smartphone.

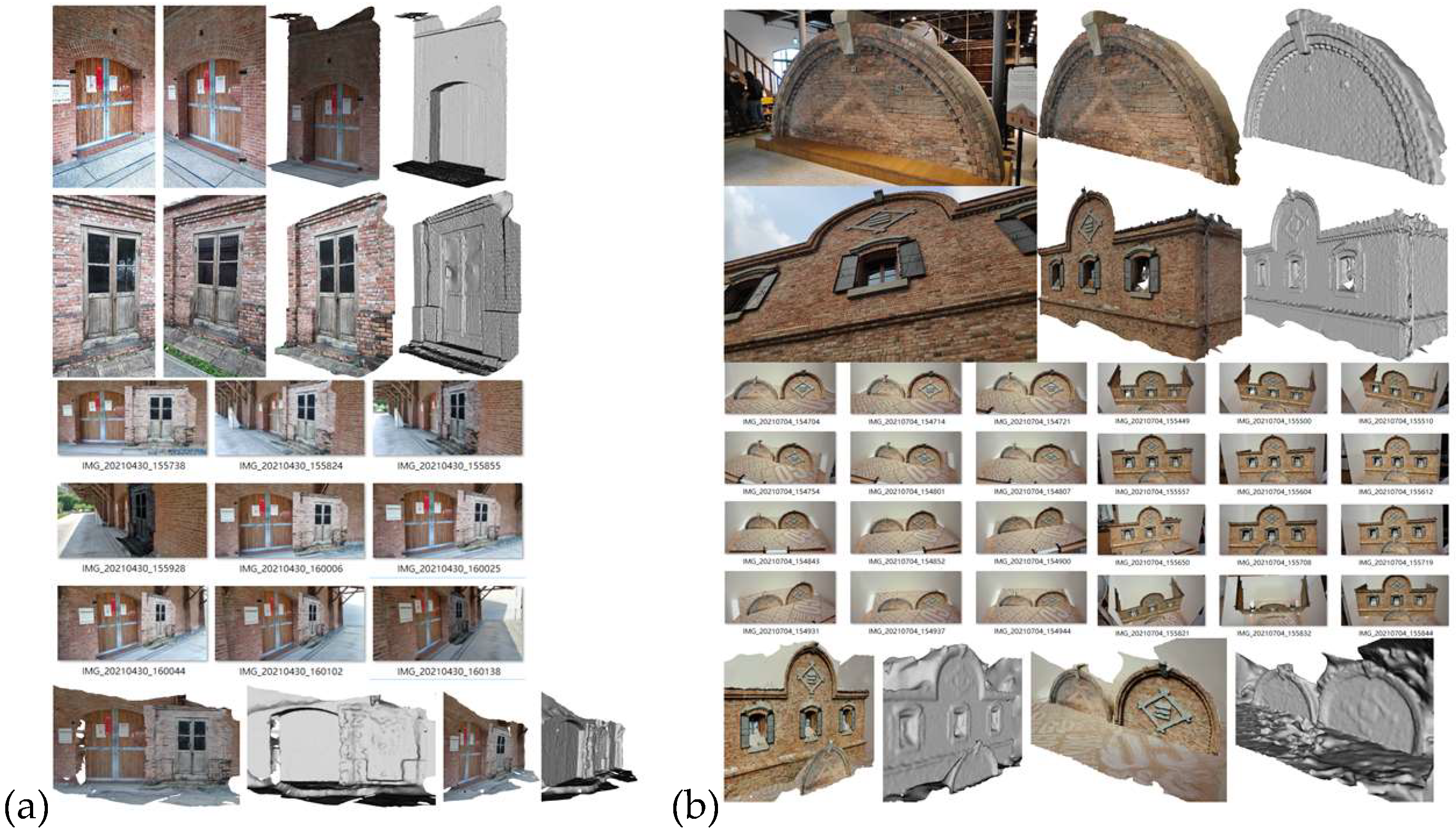

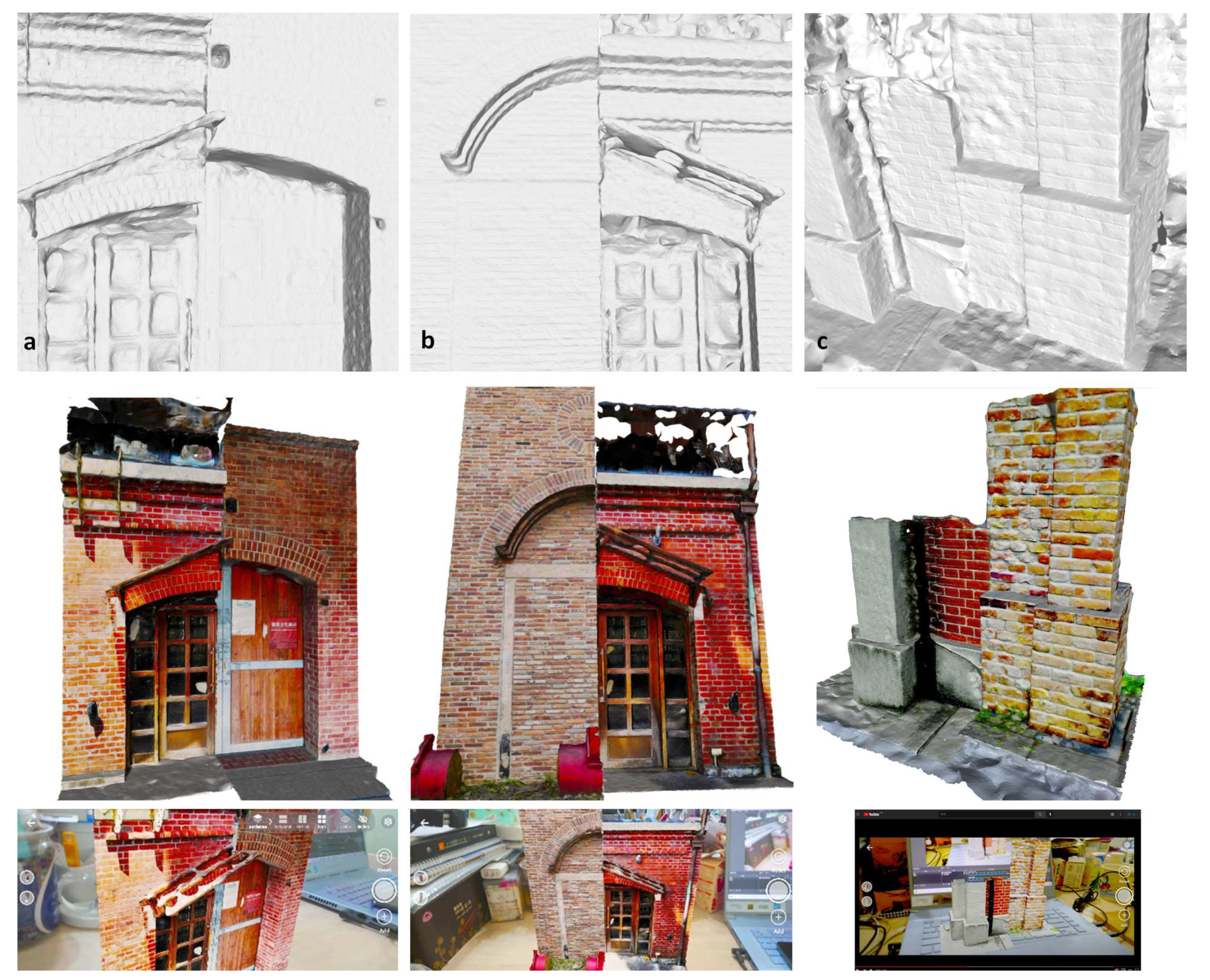

3.2. First 3D Reconstruction of Structural and Visual Details

Feature abstraction and comparison constituted the basic architectural study process to articulate and inspect professional knowledge. In place of the scan-to-AR method [12], the photogrammetry-to-AR method proved to be an efficient modeling process, especially when the same ubiquitous smartphone device was used to take pictures and simultaneously interact with a real environment when examining on-site variations. Three-dimensional AR models (Figure 3, top) have attracted attention and supported photogrammetric reconstruction modeling of mutual relationships. The simulation was also immediate and user-friendly, relating the two first reconstructed components in different spatio-temporal backgrounds (Figure 3, bottom).

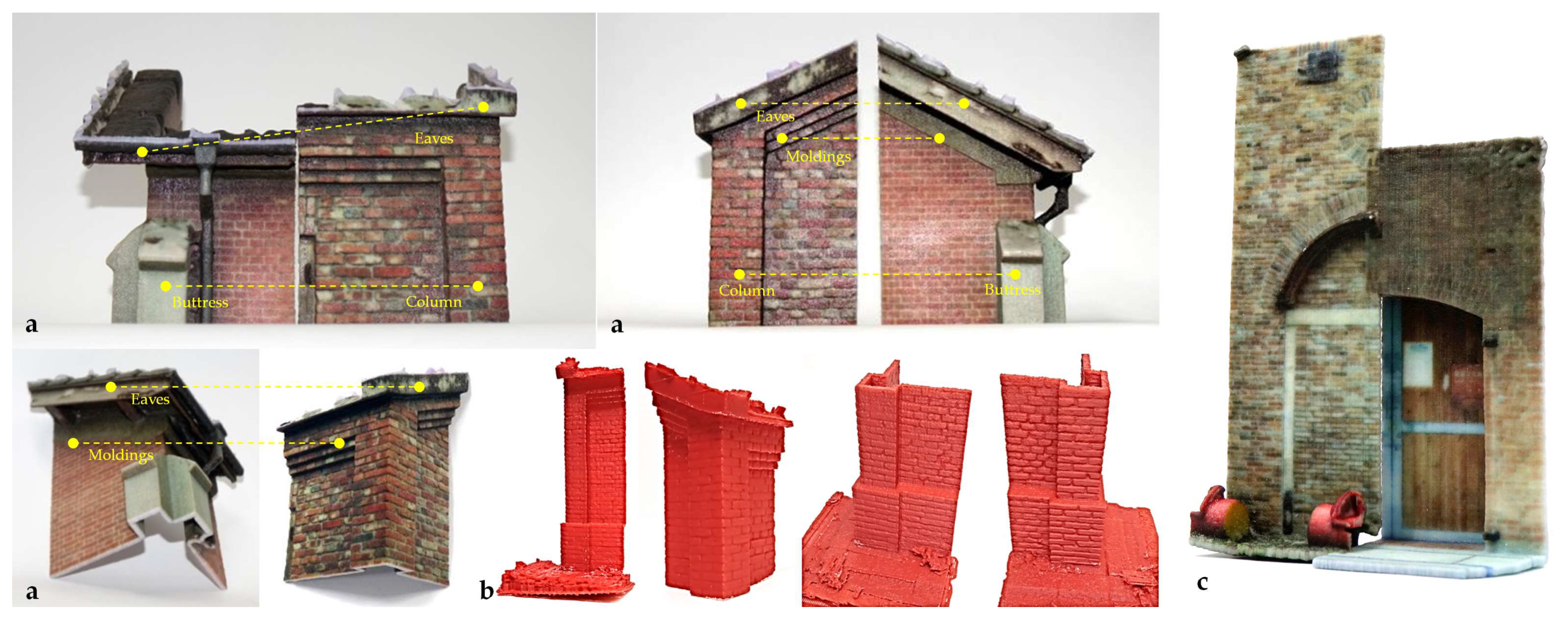

3.3. 3D-Printed Model Verification for the Base Models

Verifications of the original first reconstructed models, the base models, were obtained through a 3D printing process (Figure 4). The links and names in Figure 4 indicate the details used for comparison with those from different warehouses. The eaves, moldings, columns, and buttresses presented different construction methods and structural supports. In addition to different brick types, structural members had different finishes and illustrated a contrast of traditional styles.

The 3D models enabled a close inspection of each component and a side-by-side comparison to additionally verify the field work from the first reconstructed digital model at a different location. The model to the right (Figure 4c) presents a section-based layout of two halves, made by dividing the original model in half along the central line. This layout highlighted brick types, curvatures, and layer numbers between the two arches above the exterior doors.

3.4. Connection between Paired Details in the Second Reconstruction

Additional sets of paired comparisons were performed, and 3D models were created to record the results of the interactions (Figure 5). The studies were initially carried out for corresponding building parts placed side by side, such as wainscots (dado), moldings, wall finishes, and ground treatments. A more direct reference was made to the construction system via the molding section measured from the same ground level.

The second reconstruction of the buildings shared a series of tools in order to deliver the data for documentation and further applications. Verification of remote collaboration was confirmed by quantity and quality, checked in terms of tolerance and structural detail, and visual detail initially perceived on site. The reconstruction constituted a consistent operation interface that was directly applied to the same types of objects by the same process.

3.5. Reconstruction from Video Conferencing and Broadcasted Videos

Skype® and YouTube® video-based reconstructions were conducted for remote confirmation (Figure 6). The remote involvement consisted of two objects with details and screenshot videos between a smartphone and a desktop computer. The source of data, i.e., the images of screenshots, was the same as that for the screen of the AR interface. A screen video was streamed over the internet for remote communication and confirmation between field work or home office and the laboratory. The remote conferencing platform simultaneously supported the data for simulation, communication, process documentation, image documentation, and reconstruction.
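A video-based reconstruction needs still frames drawn from the recorded stream. The sketch below shows one simple way to choose evenly spaced frame indices for the photogrammetry input. The frame rate, clip duration, and frame count are illustrative assumptions, and `sample_frame_indices` is a hypothetical helper, not a step reported in this study; extracting the frames themselves would be done with whatever video tool is at hand.

```python
def sample_frame_indices(duration_s: float, fps: float, frames_wanted: int) -> list[int]:
    """Return evenly spaced frame indices covering the whole clip.

    duration_s, fps, and frames_wanted are assumed inputs; the source
    does not state how many frames were used per reconstruction.
    """
    total = int(duration_s * fps)  # number of frames in the clip
    if total <= 0 or frames_wanted <= 0:
        return []
    if frames_wanted >= total:
        return list(range(total))  # clip is short: keep every frame
    step = total / frames_wanted   # spacing between sampled frames
    return [int(i * step) for i in range(frames_wanted)]


if __name__ == "__main__":
    # Hypothetical 60 s screen recording at 30 frames per second.
    print(sample_frame_indices(60, 30, 6))
```

Even spacing keeps consecutive views overlapping while avoiding near-duplicate frames, which is the usual motivation for subsampling video before SfM processing.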

The early sets of tests from Skype® were not satisfactory due to the improper arrangement of the background color and model variables. The second tests presented sufficient detail using smartphone video screenshots.

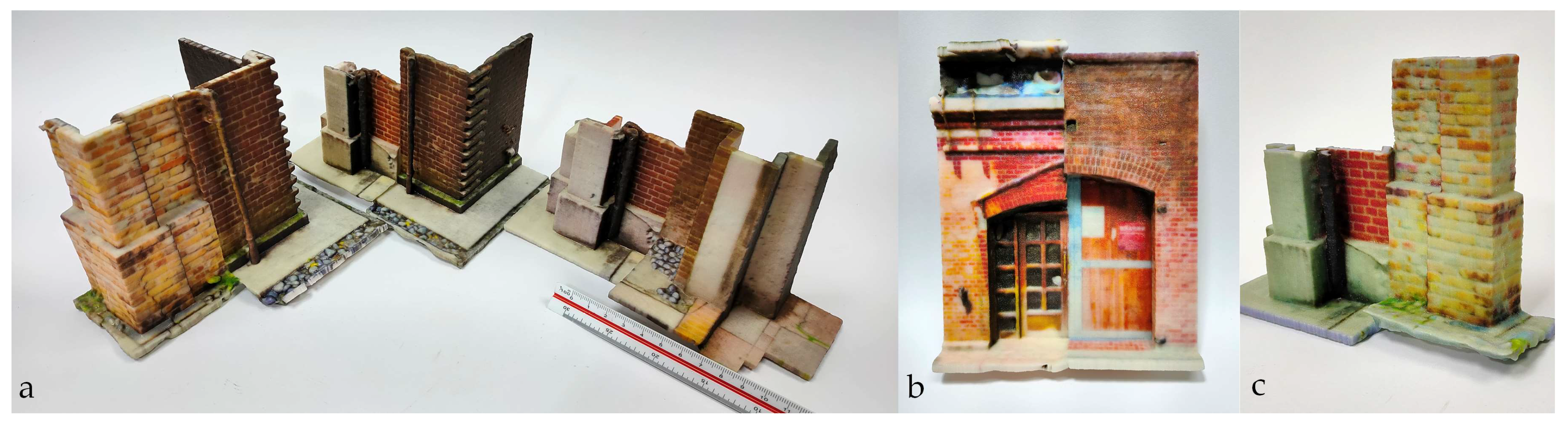

3.6. 3D-Printed Model Verification for the Second 3D Reconstruction

The second reconstruction was more oriented toward assessing the volumetric difference and topological allocation of parts. The solution for improving the detail quality was implemented by uploading the video broadcast to the YouTube® platform for review, and then downloading the video at 4K resolution (Figure 7). The broadcast used the Sony® Xperia 1 II/III smartphone with a 3840 × 1644 resolution. The video was streamed through a capture device with color and contrast adjusted by Photoshop® or OBS Studio®. The details indicated a better quality of brick joints; however, this was not a real-time interactive process, with only a one-way response available through texting.

Two additional sets of pair-comparison models are included in Figure 7a,b to represent the new improvements made to the first reconstruction. The two comparisons illustrate different designs of building entrances and façades, with greatly improved visual details. The models were created in a high detail mode and at a smaller scale, so that the movement of the smartphone camera could enhance AR details in a closer range while simultaneously covering the entire 3D model in as many frames as possible.

Our 3D-printed model comprised a feasible type of data form as an effective physical representation for detail documentation and verification (Figure 8). We found that the video made after AR interaction contributed to warehouse comparisons. The screen video of AR models was even better when using the latest version of the smartphone, and enabled the most acceptable quality of structural and visual details.

4. Discussion

New interpretations of the relationship between heterogeneous entities of data were found through the exemplification of architectural details. An interdependent relationship was established between architecture construction knowledge, structural and visual details, and AR interactions. The fine details, which were created with photogrammetric modeling, were also used to reconstruct the dynamic interaction and transformations in AR scenes to the spatio-temporal relationship with location and context. Documentation in physical models was made possible on both ends of the video conference.

4.1. Verification of Virtual Reconstruction by Deviation

AR is feasible for varied cases [37,38,39,40,41] and scales [42,43], with awareness of location and context [44]. AR instruction requires location aids with more than one discrete view from a smartphone. In this study, a video recorded or streamed in AR from different angles provided sufficient data to create a 3D model of an object and its background environment. The context was then extended and related to similar examples in different warehouses, from the macro scale of a building to the micro scale of the details.

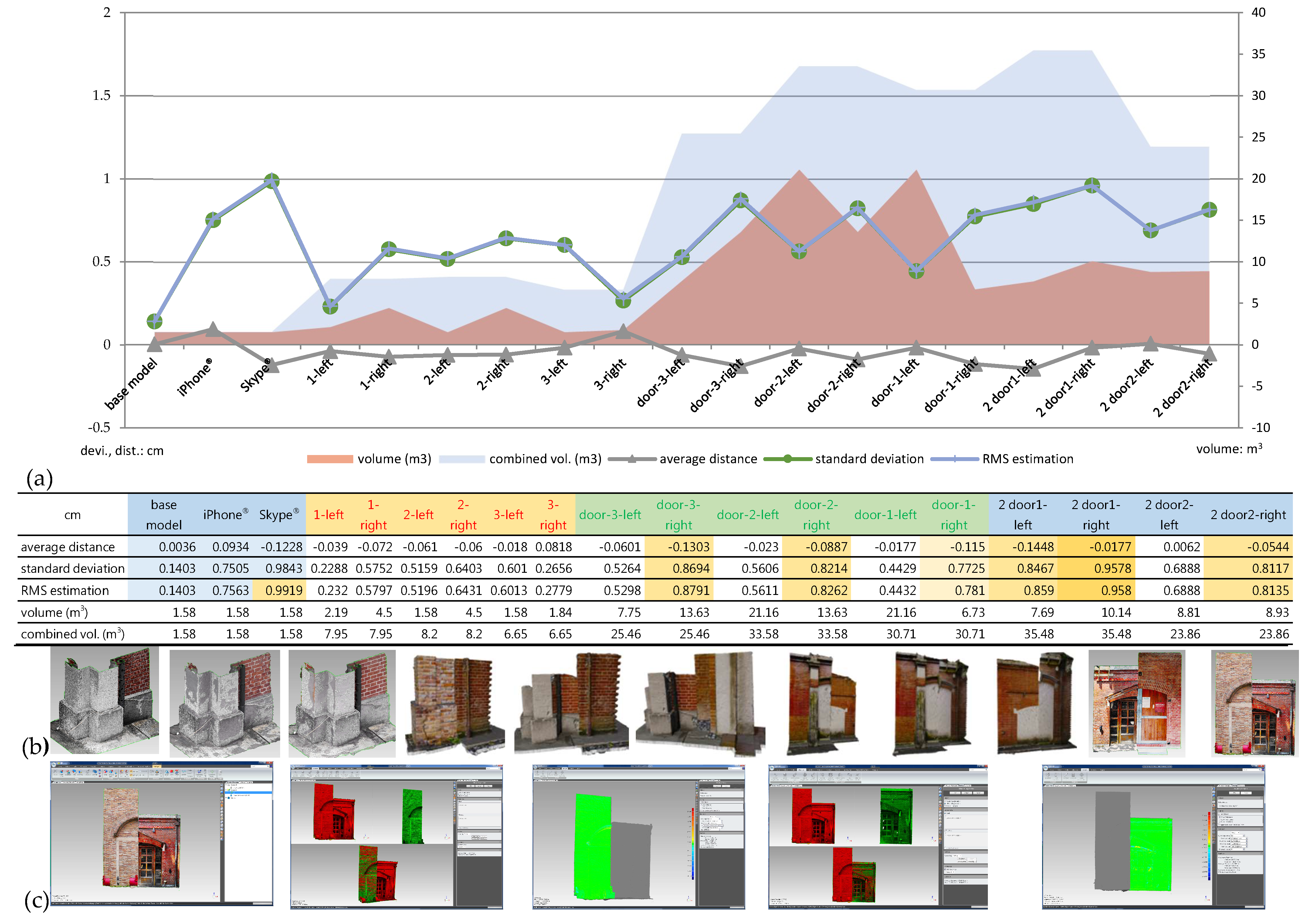

Deviation was used to represent the configuration similarity of two 3D models: the first and the secondary reconstructed model (Figure 9). The process was performed using the analytic value supported by Geomagic Studio®, including average distance, standard deviation, and root mean square (RMS) estimation.
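The three deviation measures named above can be sketched with their textbook definitions. The example assumes that the input is a list of signed distances between corresponding points of the two models and that the population form of the standard deviation is used. The exact definitions applied inside Geomagic Studio® are not given here, so `deviation_summary` is a hypothetical illustration rather than the software's procedure.

```python
import math


def deviation_summary(distances):
    """Average distance, standard deviation, and RMS of signed deviations.

    distances: signed point-to-model distances (assumed input format).
    Uses the population standard deviation (divide by n), an assumption.
    """
    n = len(distances)
    if n == 0:
        raise ValueError("at least one distance is required")
    mean = sum(distances) / n
    variance = sum((d - mean) ** 2 for d in distances) / n
    rms = math.sqrt(sum(d * d for d in distances) / n)
    return {
        "average_distance": mean,
        "std_dev": math.sqrt(variance),
        "rms": rms,
    }


if __name__ == "__main__":
    # Hypothetical deviations, in the same length unit as the models.
    print(deviation_summary([-1.0, 1.0, -1.0, 1.0]))
```

With these definitions the three values are linked by RMS² = mean² + standard deviation², so the RMS equals the standard deviation only when the average distance is zero.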

The tests consisted of three parts: base sets (the left three models), small geometry sets (the middle three models in six comparisons), and larger building part sets (the right five models in 10 comparisons), based on the sizes of the 146 AR models in small or larger building details. The base sets were compared three times for a first reconstructed detail with the secondary reconstructed one, the 3D model created by a smartphone screen-recorded video, and the one created by Skype® conferencing video. The third one, with a 0.9843 standard deviation, out of 1.58 m³ of volume, set the worst reference in comparison.

Each model of the following comparisons, which was made by the left and right parts, was compared and calculated separately. The 16 models were made from eight paired comparisons, in which the individual left or right part of the volume was larger than that of the base sets, with a standard deviation less than that of the Skype®-assisted modeling. The standard deviation increased from 0.2288 to 0.9578. Compared to the iPhone® model, with a 0.7505 standard deviation in 1.58 m³, the pair-combined volume was much larger when adding both parts of the details to 35.48 m³.

The tests concluded that virtual reconstruction could obtain a deviation between the final model and the first reconstructed model of less than 1 cm. The first reconstructed models can be used to support the study of the secondary reconstruction, with certain limited values for the standard deviation in model quality. This approach was more suitable for building detail up to the size of a façade, using broadcasting video with a 4K smartphone display and imagery captures. Since the tests used a smartphone specifically to catch imagery from the ground level, the results presented a level restriction relative to the operation height of the researcher, and the clearance between the researcher and the detail.

4.2. Collaboration of Tools and the Environment

When working with a 3D model, there is a need to confirm the final results to check if an element is perfectly aligned or placed in the right location. This may be required by the operator or inspector over the internet and social media. Various tools and setups apply (Table 3), in terms of app-independence, cross-app data sharing, background export, provision of a common 3D format, and the availability of AR platform-extended simple reconstruction.

The purpose of independent 3D reconstruction in a virtual space can be fulfilled by virtual reconstruction, i.e., SfM photogrammetry in the virtual space. The “independence” is related to the nature of the object, entry/internet circulation, geographic distribution, interface, context, social behavior, roles, and scenarios. Considering the solutions provided by existing tools, collaborative results can be different from remote assistance, remote control, and first-person AR object interaction. The SfM, which worked simply in virtual reconstruction, presented a solution for creating a virtual AR context.

4.3. An Open Domain of Application

Based on the results, the reconstruction process was itself reconstructable, and AR was not a closed environment for creating new 3D data. The AR interacted result did not have to be documented separately. The reconstruction took advantage of video conferencing and broadcasting for open 3D documentation, without being separated from the AR platform. Although the model was created after the conference, the data were determined during the interaction. The open construction contributed to AR as an open documentation environment, which can connect to conferencing and provide remote verification.

Based on the optimized modeling process, the same process enabled a heritage study in a better quality of detail, and an as-needed allocation pattern of adjacent components was made by referring to the brick scale or the gap of joints. The reconstruction presented quantitative assistance based on the reference of brick size, and qualitative assistance for the side-by-side comparison of construction complexity.
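One way a brick of known size can serve as a quantitative reference is to derive a uniform scale factor for a model whose units are arbitrary. The sketch below assumes that approach. The brick length and coordinates are hypothetical values chosen for illustration, and `scale_factor` and `rescale` are illustrative helpers rather than steps reported in this study.

```python
def scale_factor(known_length_mm: float, measured_model_length: float) -> float:
    """Factor that maps model units to millimetres, from one known brick."""
    if known_length_mm <= 0 or measured_model_length <= 0:
        raise ValueError("lengths must be positive")
    return known_length_mm / measured_model_length


def rescale(vertices, factor: float):
    """Apply a uniform scale to a list of (x, y, z) vertex tuples."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


if __name__ == "__main__":
    # Hypothetical: a brick known to be 200 mm long spans 0.5 model units.
    f = scale_factor(200.0, 0.5)
    print(f)
    print(rescale([(1.0, 2.0, 3.0)], f))
```

Applying the same factor to both models of a pair keeps a side-by-side comparison at a consistent scale, provided the reference brick is measured reliably in each model.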

4.4. Connection between Architectural Details and the System

With a proper preparation of the base model and intervention model, the AR platform provided an intuitive and user-friendly interface for multiple adjustment interactions. As seen in the online shopping experience of 3D models, end-to-end scalable AR and 3D platforms for visualization and communication [49,50,51] can be easily extended to architecture research. The adjustments were performed according to a systematic view of the selected component, and the relationships found between adjacent components through the AR interface (Figure 10). The findings demonstrated that a former reconstructed result was remodeled to verify types, evolving stages, arrangements, and levels of remodeling established to meet today’s needs for building codes or interfaces between buildings, or indoors and outdoors.

The reconstructed results presented an evolved process for the former construction method and current demand of restoration, in terms of new interrelationships and management of warehouse details. The development of industrial history and historical context was related to elucidating the relationship between the construction background and current situation of brick warehouses. The color and dimensions of two types of brick components were usually found to be dissimilar and occurred in different batches, and with different materials. The reconstruction process was able to identify differences between paired details in the initial test, such as articulated management of level height between indoors and outdoors at the entrance, using wood deck and concrete pavement, the arrangement of building elements between the distance of eaves and openings, and elevated characteristics of details. The brick layout of renovated arches was adjusted by different numbers of repetitive layers based on the clearance available to adjacent components, and the best arrangement for newly installed lighting and monitoring devices.

5. Conclusions

Brick construction constitutes a systematic application of materials and of the interfaces that connect adjacent parts. The complexity of such a system demands real-time communication, which is supported by the interactive manipulation of inspected parts with photo-realistic details from a remote heritage site. Value can be added to the AR process by means of interaction, composition, communication, confirmation, and recursion in the development of the warehouse and its preservation attempts. Compared to AR remote assistance methods such as “see what I see” (performed by incorporating multiple sources [56,57,58]), we evolved the paradigm of “reconstruct what I interact with” to confirm and share remote spatial interrelationships during AR-enabled interaction. The novel use of video conferences redefined the connection between processes and configurations through a looped interaction between AR and 3D models for morphology, conservation, and situated comparisons. We found that a former reconstructed result could be remodeled as a reference for the follow-up reconstruction process. The first and second reconstructions presented architectural details synchronously in video conferences or asynchronously in broadcasting. A cross-comparison illustrated the differences between warehouses through the side-by-side allocation of details from both halves of each site. The additional visual quality of the obtained models was presented in color RP models (first and second reconstruction) and digital models (second reconstruction).

This study exemplified heritage exploration, in which both the quality and the quantity of the outcome still need to be optimized. Future research should extend inter-comparisons between warehouse clusters by means of a third or further reconstruction, in order to identify trends. A lossless reconstruction should also be developed, so that reconstructions can be made recursively without any loss of fidelity.
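One way to make "loss of fidelity" across recursive reconstructions measurable is a point-to-point deviation between a reference model and each later reconstruction. The sketch below is a minimal, brute-force stand-in written for illustration; it is not the deviation analysis implemented in Geomagic Studio®, and the function names and toy coordinates are assumptions rather than data from the study.

```python
import math


def nearest_distance(point, cloud):
    """Distance from one 3D point to its nearest neighbor in a point cloud."""
    return min(math.dist(point, q) for q in cloud)


def deviation_stats(reference, reconstructed):
    """One-sided deviation of a reconstructed cloud from a reference cloud.

    For every reconstructed point, the distance to the nearest reference
    point is taken; the mean, RMS, and maximum of those distances are returned.
    """
    d = [nearest_distance(p, reference) for p in reconstructed]
    return {
        "mean": sum(d) / len(d),
        "rms": math.sqrt(sum(x * x for x in d) / len(d)),
        "max": max(d),
    }


# Hypothetical toy data: four corners of a unit square as the reference,
# and two later "reconstructions" that drift further away along z.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
first_pass = [(x, y, z + 0.01) for x, y, z in reference]
second_pass = [(x, y, z + 0.03) for x, y, z in reference]

if __name__ == "__main__":
    print("first pass :", deviation_stats(reference, first_pass))
    print("second pass:", deviation_stats(reference, second_pass))
```

Under this measure, a lossless recursive process would keep the deviation statistics from growing between successive reconstructions. Real models would first need to be registered to a common coordinate frame, and a spatial index would replace the brute-force search for large clouds.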

Author Contributions

Conceptualization, methodology, software, validation, investigation, writing—original draft preparation, visualization, and data curation, N.-J.S. and Y.-C.W.; formal analysis, resources, writing—review and editing, supervision, project administration, and funding acquisition, N.-J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology of Taiwan, MOST 110-2221-E-011-051-MY3. The authors express their sincere appreciation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Abdullah, F.; Kassim, M.H.B.; Sanusi, A.N.Z. Go virtual: Exploring augmented reality application in representation of steel architectural construction for the enhancement of architecture education. Adv. Sci. Lett. 2017, 23, 804–808.

2. Ayer, S.K.; Messner, J.I.; Anumba, C.J. Augmented reality gaming in sustainable design education. J. Archit. Eng. 2016, 22, 04015012.

3. Behzadan, A.H.; Kamat, V.R. Enabling discovery-based learning in construction using telepresent augmented reality. Autom. Constr. 2013, 33, 3–10.

4. Jetter, J.; Eimecke, J.; Rese, A. Augmented reality tools for industrial applications: What are potential key performance indicators and who benefits? Comput. Hum. Behav. 2018, 87, 18–33.

5. Fritz, F.; Susperregui, A.; Linaza, M.T. Enhancing cultural tourism experiences with augmented reality technologies. In Proceedings of the 6th International Symposium on Virtual Reality, Archaeology and Cultural Heritage 2005, VAST: 1–6, Pisa, Italy, 8–11 November 2005.

6. Kounavis, C.D.; Kasimati, A.E.; Zamani, E.D. Enhancing the tourism experience through mobile augmented reality: Challenges and prospects. Int. J. Eng. Bus. Manag. 2012, 4, 10.

7. Diao, P.H.; Shih, N.J. Trends and research issues of augmented reality studies in architectural and civil engineering education—A review of academic journal publications. Appl. Sci. 2019, 9, 1840.

8. Wang, X.; Truijens, M.; Hou, L.; Wang, Y.; Zhou, Y. Integrating augmented reality with building information modeling: Onsite construction process controlling for liquefied natural gas industry. Autom. Constr. 2014, 40, 96–105.

9. Wang, S.; Parsons, M.; Stone-McLean, J.; Rogers, P.; Boyd, S.; Hoover, K.; Meruvia-Pastor, O.; Gong, M.; Smith, A. Augmented reality as a telemedicine platform for remote procedural training. Sensors 2017, 17, 2294.

10. Zhou, Y.; Luo, H.; Yang, Y. Implementation of augmented reality for segment displacement inspection during tunneling construction. Autom. Constr. 2017, 82, 112–121.

11. Kerr, J.; Lawson, G. Augmented reality in design education: Landscape architecture studies as AR experience. Int. J. Art Des. Educ. 2019, 39, 6–21.

12. Banfi, F.; Brumana, R.; Stanga, C. Extended reality and informative models for the architectural heritage: From scan-to-BIM process to virtual and augmented reality. Virtual Archaeol. Rev. 2019, 10, 14–30.

13. Brumana, R.; Della Torre, S.; Previtali, M.; Barazzetti, L.; Cantini, L.; Oreni, D.; Banfi, F. Generative HBIM modelling to embody complexity (LOD, LOG, LOA, LOI): Surveying, preservation, site intervention—The Basilica di Collemaggio (L’Aquila). Appl. Geomat. 2018, 10, 545–567.

14. Li, X.; Yi, W.; Chi, H.-L.; Wang, X.; Chan, A.P.C. A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom. Constr. 2018, 86, 150–162.

15. Shanbari, H.; Blinn, N.; Issa, R.R.A. Using augmented reality video in enhancing masonry and roof component comprehension for construction management students. Eng. Constr. Archit. Manag. 2016, 23, 765–781.

16. Hartless, J.F.; Ayer, S.K.; London, J.S.; Wu, W. Comparison of building design assessment behaviors of novices in augmented and virtual-reality environments. J. Archit. Eng. 2020, 26, 1–11.

17. Birt, J.; Cowling, M. Toward future “mixed reality” learning spaces for STEAM education. Int. J. Innov. Sci. Math. Educ. 2017, 25, 1–16.

18. Joo-Nagata, J.; Martínez-Abad, F.; García-Bermejo Giner, J.; García-Peñalvo, F.J. Augmented reality and pedestrian navigation through its implementation in m-learning and e-learning: Evaluation of an educational program in Chile. Comput. Educ. 2017, 111, 1–17.

19. González, N.A.A. Development of spatial skills with virtual reality and augmented reality. Int. J. Interact. Des. Manuf. 2018, 12, 133–144.

20. Chang, Y.S.; Hu, Y.-J.R.; Chen, H.-W. Learning performance assessment for culture environment learning and custom experience with an AR navigation system. Sustainability 2019, 11, 4759.

21. Frank, J.A.; Kapila, V. Mixed-reality learning environments: Integrating mobile interfaces with laboratory test-beds. Comput. Educ. 2017, 110, 88–104.

22. Huang, T. Seeing creativity in an augmented experiential learning environment. Univers. Access Inf. Soc. 2019, 18, 301–313.

23. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

24. Smith, M.W.; Carrivick, J.; Quincey, D. Structure from motion photogrammetry in physical geography. Prog. Phys. Geogr. 2016, 40, 247–275.

25. Spreitzer, G.; Tunnicliffe, J.; Friedrich, H. Using structure from motion photogrammetry to assess large wood (LW) accumulations in the field. Geomorphology 2019, 346, 106851.

26. Fernandez-Guisuraga, J.M.; Calvo, L.; Suarez-Seoane, S. Monitoring post-fire neighborhood competition effects on pine saplings under different environmental conditions by means of UAV multispectral data and structure-from-motion photogrammetry. J. Environ. Manag. 2022, 305, 114373.

27. Papadopoulos, C.; Paliou, E.; Chrysanthi, A.; Kotoula, E.; Sarris, A. Archaeological research in the digital age. In Proceedings of the 1st Conference on Computer Applications and Quantitative Methods in Archaeology Greek Chapter (CAA-GR), Rethymno, Greece, 6–8 March 2015; pp. 46–54.

28. Yilmaz, H.M.; Yakar, M.; Gulec, S.A.; Dulgerler, O.N. Importance of digital close-range photogrammetry in documentation of cultural heritage. J. Cult. Herit. 2007, 8, 428–433.

29. Carnevali, L.; Ippoliti, E.; Lanfranchi, F.; Menconero, S.; Russo, M.; Russo, V. Close-range mini-UAVs photogrammetry for architecture survey. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, ISPRS TC II Mid-Term Symposium “Towards Photogrammetry 2020”, Riva del Garda, Italy, 4–7 June 2018; Volume XLII-2, pp. 217–224.

30. Asadi, K.; Suresh, A.K.; Ender, A.; Gotad, S.; Maniyar, S.; Anand, S.; Noghabaei, M.; Han, K.; Lobaton, E.; Wu, T. An integrated UGV-UAV system for construction site data collection. Autom. Constr. 2020, 112, 103068.

31. Kim, S.; Kim, S.; Lee, D.E. Sustainable application of hybrid point cloud and BIM method for tracking construction progress. Sustainability 2020, 12, 4106.

32. Bernal, A.; Muñoz, C.; Sáez, A.; Serrano-López, R. Suitability of the Spanish open public cartographic resources for BIM site modeling. J. Photogramm. Remote Sens. Geoinf. Sci. 2021, 89, 505–517.

33. Bogdanowitsch, M.; Sousa, L.; Siegesmund, S. Building stones quarries: Resource evaluation by block modelling and unmanned aerial photogrammetric survey. Environ. Earth Sci. 2022, 81, 1–55.

34. Videras Rodríguez, M.; Melgar, S.G.; Cordero, A.S.; Márquez, J.M.A. A critical review of unmanned aerial vehicles (UAVs) Use in architecture and urbanism: Scientometric and bibliometric analysis. Appl. Sci. 2021, 11, 9966.

35. Li, C.; Guan, T.; Yang, M.; Zhang, C. Combining data-and-model-driven 3D modelling (CDMD3DM) for small indoor scenes using RGB-D data. ISPRS J. Photogramm. Remote Sens. 2021, 180, 1–13.

36. Micheletti, N.; Chandler, J.H.; Lane, S.N. Structure from motion (SfM) photogrammetry. In Geomorphological Techniques; British Society for Geomorphology: London, UK, 2015; Chapter 2, Section 2.2.

37. Tamborrino, R.; Wendrich, W. Cultural heritage in context: The temples of Nubia, digital technologies and the future of conservation. J. Inst. Conserv. 2017, 40, 168–182.

38. Younes, G.; Kahil, R.; Jallad, M.; Asmar, D.; Elhajj, I. Virtual and augmented reality for rich interaction with cultural heritage sites: A case study from the Roman theater at Byblos. Digit. Appl. Archaeol. Cult. Herit. 2017, 5, 1–9.

39. Jung, T.; Chung, N.; Leue, M.C. The determinants of recommendations to use augmented reality technologies: The case of a Korean theme park. Tour. Manag. 2015, 49, 75–86.

40. Fernández-Palacios, B.J.; Morabito, D.; Remondino, F. Access to complex reality-based 3D models using virtual reality solutions. J. Cult. Herit. 2017, 23, 40–48.

41. Kasapakis, V.; Gavalas, D.; Galatis, P. Augmented reality in cultural heritage: Field of view awareness in an archaeological site mobile guide. J. Ambient Intell. Smart Environ. 2016, 8, 501–514.

42. Schöps, T.; Sattler, T.; Häne, C.; Pollefeys, M. Large-scale outdoor 3D reconstruction on a mobile device. Comput. Vis. Image Underst. 2017, 157, 151–166.

43. Golodetz, S.; Cavallari, T.; Lord, N.; Prisacariu, V.; Murray, D.; Torr, P. Collaborative large-scale dense 3D reconstruction with online inter-agent pose optimisation. IEEE Trans. Vis. Comput. Graph. 2018, 24, 2895–2905.

44. Rubino, I.; Barberis, C.; Xhembulla, J.; Malnati, G. Integrating a location-based mobile game in the museum visit: Evaluating visitors’ behaviour and learning. J. Comput. Cult. Herit. 2015, 8, 1–18.

45. Soler, F.; Melero, F.J.; Luzón, M.V. A complete 3D information system for cultural heritage documentation. J. Cult. Herit. 2017, 23, 49–57.

46. Xue, K.; Li, Y.; Meng, X. An evaluation model to assess the communication effects of intangible cultural heritage. J. Cult. Herit. 2019, 40, 124–132.

47. Vosinakis, S.; Avradinis, N.; Koutsabasis, P. Dissemination of intangible cultural heritage using a multi-agent virtual world. In Advances in Digital Cultural Heritage; Springer-Verlag: Berlin/Heidelberg, Germany, 2018; pp. 197–207.

48. Galani, A.; Kidd, J. Evaluating digital cultural heritage “in the wild”: The case for reflexivity. J. Comput. Cult. Herit. 2019, 12, 1–15.

49. Smink, A.R.; van Reijmersdal, E.A.; van Noort, G.; Neijens, P.C. Shopping in augmented reality: The effects of spatial presence, personalization and intrusiveness on app and brand responses. J. Bus. Res. 2020, 118, 474–485.

50. Daassi, M.; Debbabi, S. Intention to reuse AR-based apps: The combined role of the sense of immersion, product presence and perceived realism. Inf. Manag. 2021, 58, 103453.

51. Meißner, M.; Pfeiffer, J.; Peukert, C.; Dietrich, H.; Pfeiffer, T. How virtual reality affects consumer choice. J. Bus. Res. 2020, 117, 219–231.

52. Picallo, I.; Vidal-Balea, A.; Blanco-Novoa, O.; Lopez-Iturri, P.; Fraga-Lamas, P.; Klaina, H.; Fernández-Caramés, T.M.; Azpilicueta, L.; Falcone, F. Design and experimental validation of an augmented reality system with wireless integration for context aware enhanced show experience in auditoriums. IEEE Access 2021, 9, 5466–5484.

53. Alves, J.B.; Marques, B.; Dias, P.; Santos, B.S. Using augmented reality for industrial quality assurance: A shop floor user study. Int. J. Adv. Manuf. Technol. 2021, 115, 105–116.

54. Bottani, E.; Longo, F.; Nicoletti, L.; Padovano, A.; Tancredi, G.P.C.; Tebaldi, L.; Vetrano, M.; Vignali, G. Wearable and interactive mixed reality solutions for fault diagnosis and assistance in manufacturing systems: Implementation and testing in an aseptic bottling line. Comput. Ind. 2021, 128, 103429.

55. Huang, B.C.; Hsu, J.; Chu, E.T.H.; Wu, H.M. ARBIN: Augmented reality based indoor navigation system. Sensors 2020, 20, 5890.

56. Jeong, H.J.; Jeong, I.; Moon, S.M. Dynamic offloading of web application execution using snapshot. ACM Trans. Web 2020, 14, 1–24.

57. Marino, E.; Barbieri, L.; Colacino, B.; Fleri, A.K.; Bruno, F. An augmented reality inspection tool to support workers in industry 4.0 environments. Comput. Ind. 2021, 127, 103412.

58. Deng, X.; Zhang, Y.; Shi, J.; Zhu, Y.; Cheng, D.; Zhu, D.; Chi, Z.; Tan, P.; Chang, L.; Wang, H. Hand pose understanding with large-scale photo-realistic rendering dataset. IEEE Trans. Image Processing 2021, 30, 4275–4290.

59. Reljić, V.; Milenković, I.; Dudić, S.; Šulc, J.; Bajči, B. Augmented reality applications in industry 4.0 environment. Appl. Sci. 2021, 11, 5592.

60. Mura, M.D.; Dini, G. An augmented reality approach for supporting panel alignment in car body assembly. J. Manuf. Syst. 2021, 59, 251–260.

61. Diao, P.H.; Shih, N.J. BIM-based AR maintenance system (BARMS) as an intelligent instruction platform for complex plumbing facilities. Appl. Sci. 2019, 9, 1592.

62. Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; He, W.; Han, D.; Wang, Y.; Min, H.; Lan, W.; Han, S. Using a head pointer or eye gaze: The effect of gaze on spatial AR remote collaboration for physical tasks. Interact. Comput. 2020, 32, 153–169.

63. Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; Wei, S.; Xu, G.; He, W.; Zhang, X.; Zhang, J. 3DGAM: Using 3D gesture and CAD models for training on mixed reality remote collaboration. Multimed. Tools Appl. 2020, 80, 31059–31084.

64. Kim, S.; Billinghurst, M.; Kim, K. Multimodal interfaces and communication cues for remote collaboration. J. Multimodal User Interfaces 2020, 14, 313–319. Available online: https://doi-org.ezproxy.lib.ntust.edu.tw/10.1007/s12193-020-00346-8 (accessed on 18 July 2021).

65. Choi, S.H.; Kim, M.; Lee, J.Y. Situation-dependent remote AR collaborations: Image-based collaboration using a 3D perspective map and live video-based collaboration with a synchronized VR mode. Comput. Ind. 2018, 101, 51–66.

66. Del Amo, I.F.; Erkoyuncu, J.; Vrabič, R.; Frayssinet, R.; Reynel, C.V.; Roy, R. Structured authoring for AR-based communication to enhance efficiency in remote diagnosis for complex equipment. Adv. Eng. Inform. 2020, 45, 101096. Available online: https://www.sciencedirect.com/science/article/pii/S1474034620300653 (accessed on 18 July 2021).

67. Rhee, T.; Thompson, S.; Medeiros, D.; dos Anjos, R.; Chalmers, A. Augmented virtual teleportation for high-fidelity telecollaboration. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1923–1933.

68. Lee, Y.; Yoo, B. XR collaboration beyond virtual reality: Work in the real world. J. Comput. Des. Eng. 2021, 8, 756–772.

69. Anton, D.; Kurillo, G.; Bajcsy, R. User experience and interaction performance in 2D/3D telecollaboration. Future Gener. Comput. Syst. 2018, 82, 77–88. Available online: https://www.sciencedirect.com/science/article/pii/S0167739X17323385 (accessed on 18 July 2021).

70. Khairadeen Ali, A.; Lee, O.J.; Lee, D.; Park, C. Remote indoor construction progress monitoring using extended reality. Sustainability 2021, 13, 2290.

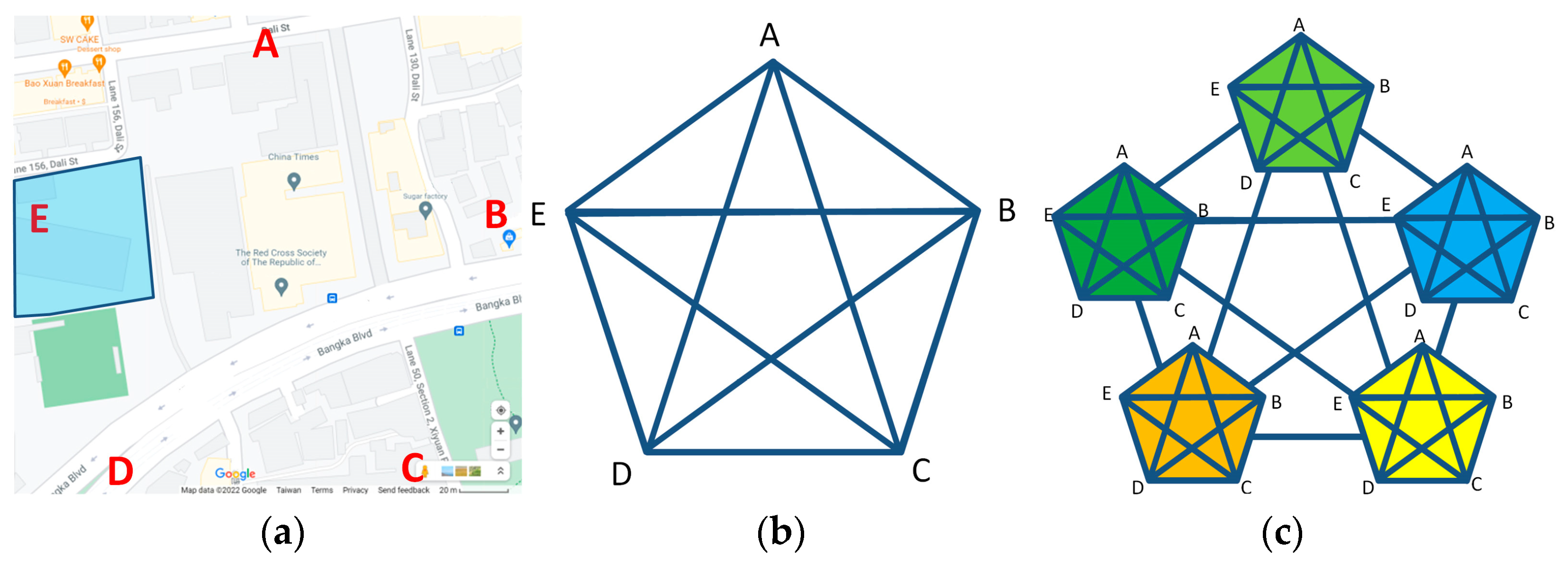

Figure 1. (a) Eight heritage brick warehouses; (b) three-dimensional model of a brick warehouse; (c) instances of three-dimensional details.

Figure 2. Structure of the working process.

Figure 3. (a) Examples of the first reconstructed components; (b) two arches of different spatio-temporal backgrounds presenting different curvatures, endings, and numbers of brick layers above openings.

Figure 4. 3D color-printed models created from original first reconstructions to (a) relate brick texture and context with the eaves, moldings, columns, and buttresses of two warehouses from different orientations; (b) illustrate brick joints in a plain-colored FDM model; and (c) reveal differences between two arches above the exterior door.

Figure 5. Two paired comparisons in the second reconstruction: (a) entrances and (b) gables.

Figure 6. (a) Original model; (b) Skype® video conference with smartphones.

Figure 7. Enhanced broadcast digital model with visible joints and acceptable visual detail, reconstructed from (a,b) video or frame shots and (c) YouTube® broadcast video or screenshots, using a Sony® Xperia 1 II.

Figure 8. 3D-printed digital models made from (a) Sony® Xperia 1 III broadcast video and (b) Sony® Xperia 1 II broadcast video, compared to (c) the model made from a former Skype® screenshot video.

Figure 9. (a) Deviation analysis; (b) base sets (the left three models), small geometry sets (the middle three models in six comparisons), and larger building part sets (the right five models in 10 comparisons); (c) screenshots of deviation analysis for the secondary reconstructed model and 2 door2-left & 2 door2-right in Geomagic Studio®.

Figure 10. (a) Individual warehouses (A–E) modeled by the first reconstruction; (b) warehouse inter-comparisons performed by the second reconstruction; (c) cross-warehouse cluster inter-comparisons performed by the third or further reconstruction.

Table 1. Matrix of compared details and the components around the details.

| Site / Building | Detail | Ground: Material | Ground: Deck | Column: Exposed | Column: Hidden | Column: Buttress | Column: Material | Column: Finish | Beam: Exposed | Beam: Hidden | Beam: Truss | Beam: Material | Beam: Layout | Opening: Lintel | Opening: Sill | Opening: Frame | Opening: Cover | Opening: Door Stop | Wall/Wainscot: Material | Molding: Material |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Tamsui, Royal Dutch Shell Plc. | lower wall buttress | concrete | wood | v | | v | brick | v | | | | | | | | | | | brick | |
| A building | | | | | | | | | | | | | | | | | | | | |
| | upper corner eaves—A building | | | | v | | brick | | | | | | | | | | | | brick | brick |
| | lower corner—A building | tile | | v | | | brick | | | | | | | | | | | | brick | |
| B building | | | | | | | | | | | | | | | | | | | | |
| | sealed door—B building | gravel | stone | | | | | | | | | | | brick | | | brick | | brick | |
| | small steel window—B building | | | | | | | | | | | | | brick | concrete | concrete | steel | | brick | |
| | lower corner—B building | gravel | stone | | v | | brick | | | | | | | | | | | | brick | |
| C building | | | | | | | | | | | | | | | | | | | | |
| | small steel plate window—C building | | | | | | | | | | | | | brick | concrete | concrete | steel | | brick | |
| | vent window—C building | | | | | | | | | | | | | brick | brick | brick | steel | | brick | |
| | upper corner eaves—C building | | | | v | | brick | | | v | | | | | | | | | brick | wood |
| | upper corner eaves & fence—C building | | | | v | | brick | | | v | | | | | | | | | brick | wood |
| D building | | | | | | | | | | | | | | | | | | | | |
| | front façade—D building | concrete | wood | v | v | | brick | | | | | | | | | | steel | | brick | brick |
| | Steel plate window—D building | concrete | wood | | | | | | | | | | | concrete | brick | steel | steel | | brick | |
| | Steel plate window 2—D building | | | | | | | | | | | | | concrete | brick | steel | steel | | brick | |
| | Steel plate door—D building | concrete | wood | | | | | | | | | | | concrete | | steel | steel | | brick | |
| | lower wall & column—D building | concrete | wood | v | v | | brick | | | | | | | | | | | | brick | |
| | lower corner—D building | concrete | | v | v | | brick | | | | | | | | | | | | brick | |
| E building | | | | | | | | | | | | | | | | | | | | |
| | wooden door—E building | concrete | brick | v | v | | | | v | | | brick | horizontal | wood | | wood | wood | concrete | brick | brick |
| | wooden window—E building | | | v | v | | brick | | | | | | | wood | brick | wood | glass | | brick | brick |
| | lower corner 1—E building | concrete | brick | v | v | | brick | | | | | | | | | | | | brick | |
| | lower corner 2—E building | concrete | brick | v | v | | brick | | | | | | | | | | | | brick | |
| | upper corner eaves—E building | | | v | v | | brick | | v | | | brick | horizontal | | | | | | brick | brick |
| | lower wall & column—E building | concrete | brick | v | v | | brick | | | | | | | | | | | | brick | |
| | upper wall & column—E building | | | v | v | | brick | | v | | | brick | horizontal | | | | | | brick | brick |
| G building | | | | | | | | | | | | | | | | | | | | |
| | corner façade—G building | soil | | | | | | | | | | | | wood | | wood | wood | wood | brick | brick |
| | wooden door—G building | tile | | | | | | | | | | | | wood | | wood | wood | wood | brick | |

Table 2. Collaboration of tools and the environment.

| No. | Tasks | App and Software | Platforms | Notes |
|---|---|---|---|---|
| 1 | Images | | Handheld and mobile devices | For first reconstruction |
| 2 | Photogrammetry modeling | Zephyr®, Meshroom®, and Autodesk ReCap Photo® | Desktop | First and second reconstruction |
| 3 | 3D modeling—editing and format transfer | Geomagic Studio® and MeshLab® | Desktop | First and second reconstructed models |
| 4 | 3D model—tolerance analysis in global registration | Geomagic Studio® | Desktop | |
| 5 | AR platform—cloud access, QR code generation, and interaction | Augment® | Smartphone and desktop | |
| 6 | Video conferencing and recording (both ends) | Skype® | Smartphone and desktop | |
| 7 | Screen-recording—images or videos recorded at field and host end | Skype® | Smartphone (iPhone® and Realme X50®) and desktop | |
| 8 | Picture-taking—capture from video conference | Zephyr® | Smartphone (Sony® Xperia 1 II/III) and desktop | |
| 9 | YouTube broadcasting | YouTube® and OBS Studio® | Smartphone and desktop | 4K HDMI to USB-C (Pengo®) |
| 10 | Rapid prototyping | ComeTrue® (color prints) and UP Plus 2® (ABS) | Desktop (ComeTrue® T10 in 1200 × 556 dpi and UP Plus 2®) | |

Table 3. Collaboration of tools and the environment.

| AR Types | Remote Control | Remote Assistance | First-Person AR Object Interaction | APP-Independent |
|---|---|---|---|---|
| Apps | AirMirror® | AR Remote Assistance, XRmeet® | Augment®, Sketchfab®, Aero®, Vuforia View® | |
| Original 3D process | n/a | 2D screen annotation only | 3D object transformation | 3D object transformation |
| Confirm result | Screen captures | Screen captures | Export app-specific format: Place, USDZ | Virtual reconstruction: screen captures + photogrammetry |
| Follow-up 3D operation | n/a | No | Yes | Yes |
| Dimensions | n/a | 2D | App-specific 3D | General 3D format |
| Exported media | n/a | Images | APP scene descriptive file | Sequential images to 3D |
| 3D background export | n/a | In 2D image only | No | Yes, after 3D photogrammetric modeling |
| 3D scene save | n/a | No | Yes | Yes |
| Media openness | n/a | No | To the same app only | Yes, through general 3D formats such as OBJ and VRML |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).