Active Fire Mapping on Brazilian Pantanal Based on Deep Learning and CBERS 04A Imagery

Abstract

1. Introduction

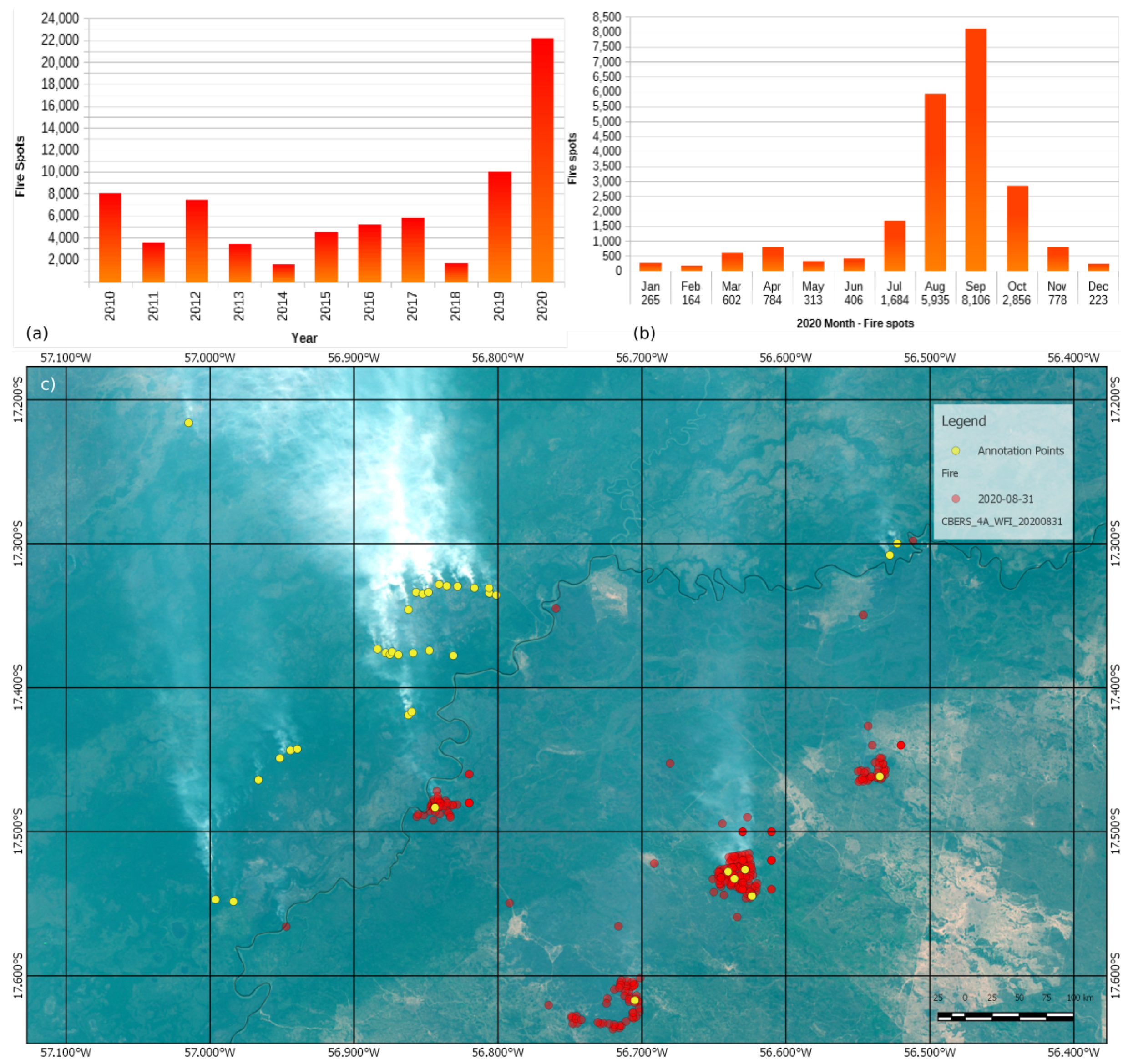

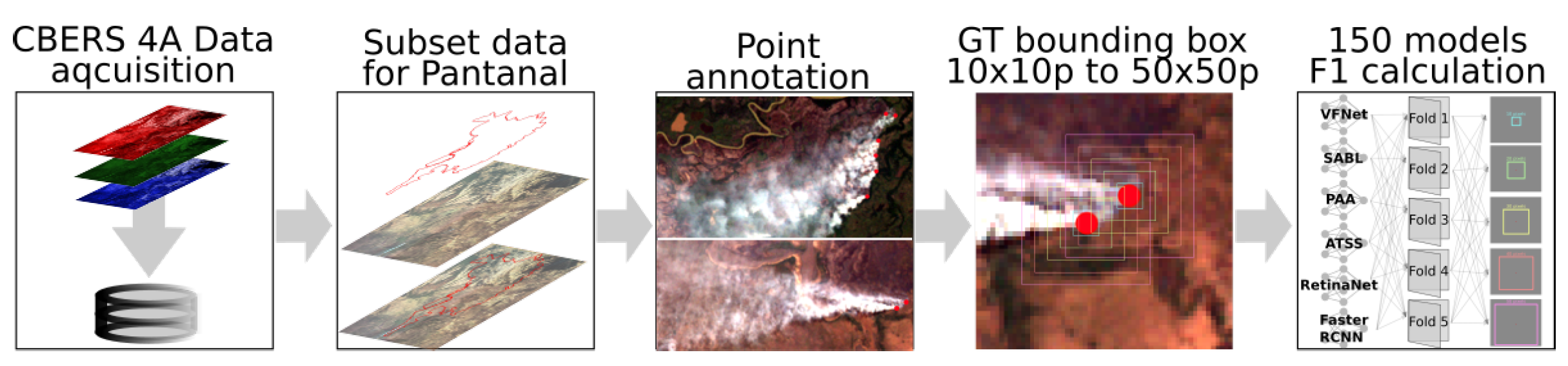

2. Materials and Methods

2.1. Study Area and Imagery

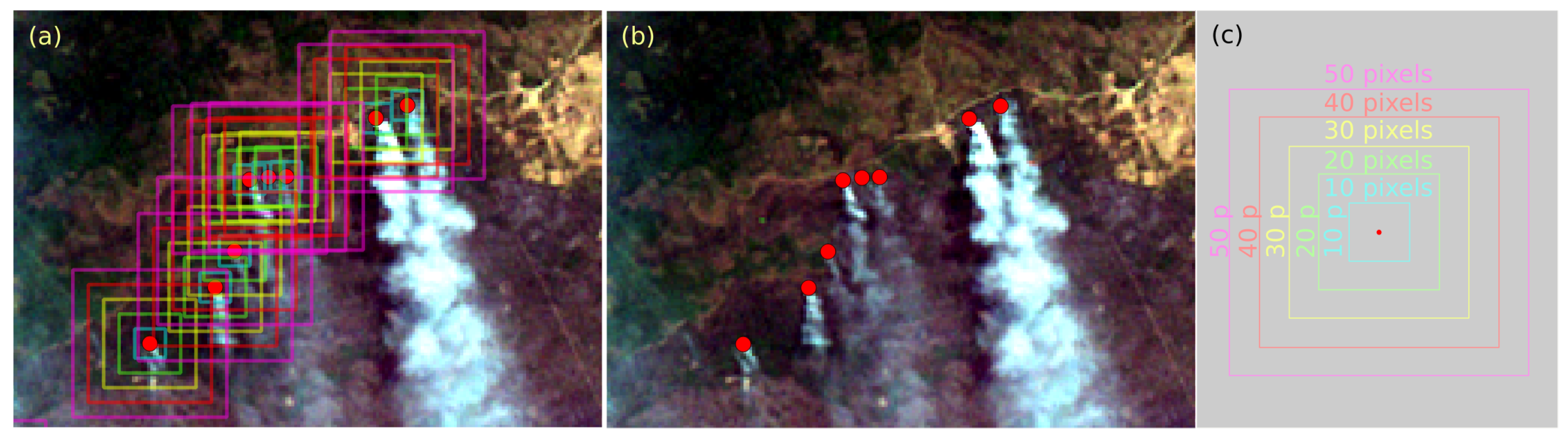

2.2. Active Fire Detection Approach

2.3. Object Detection Methods

2.4. Experimental Setup

2.5. Method Assessment

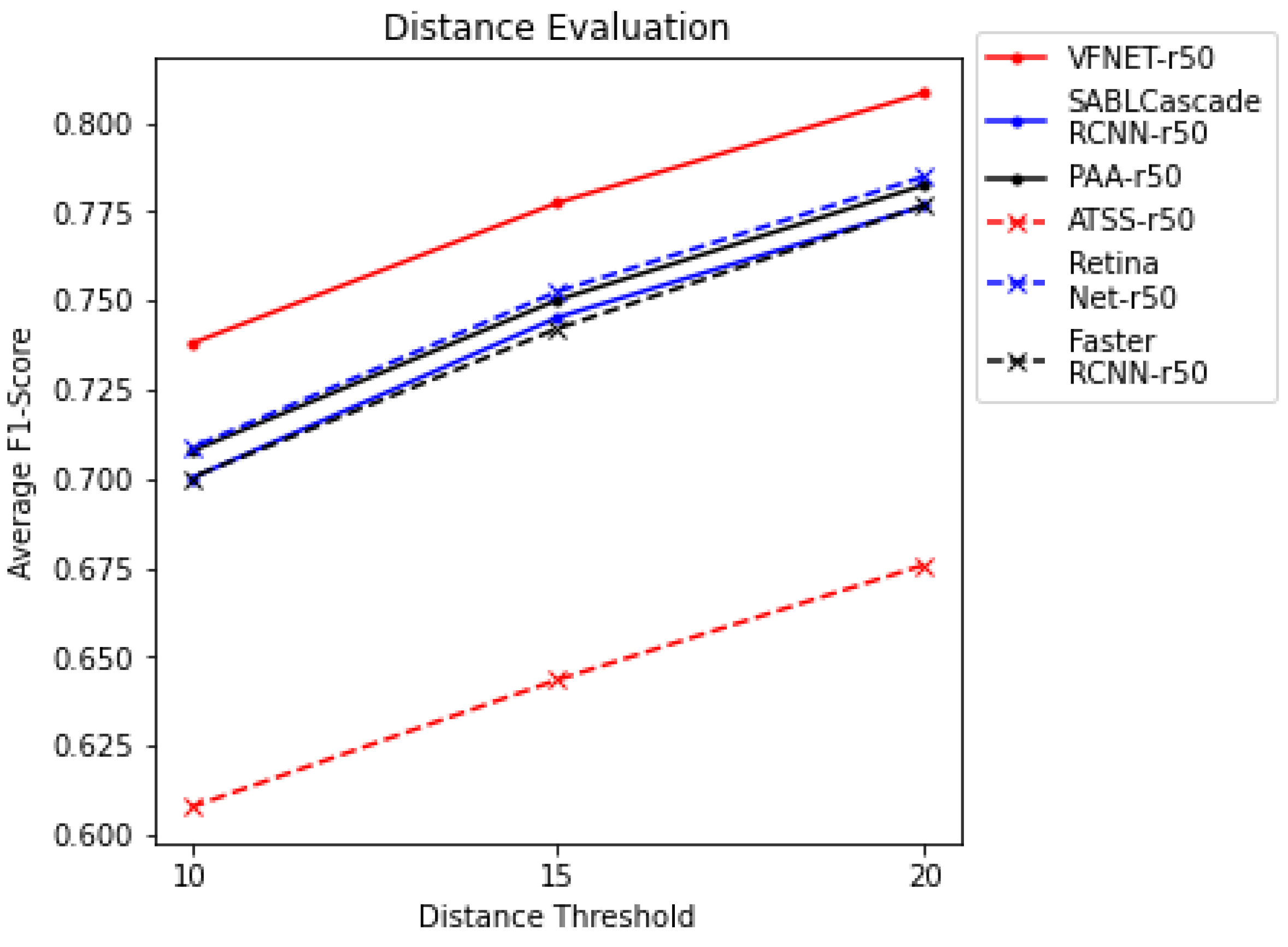

3. Results and Discussion

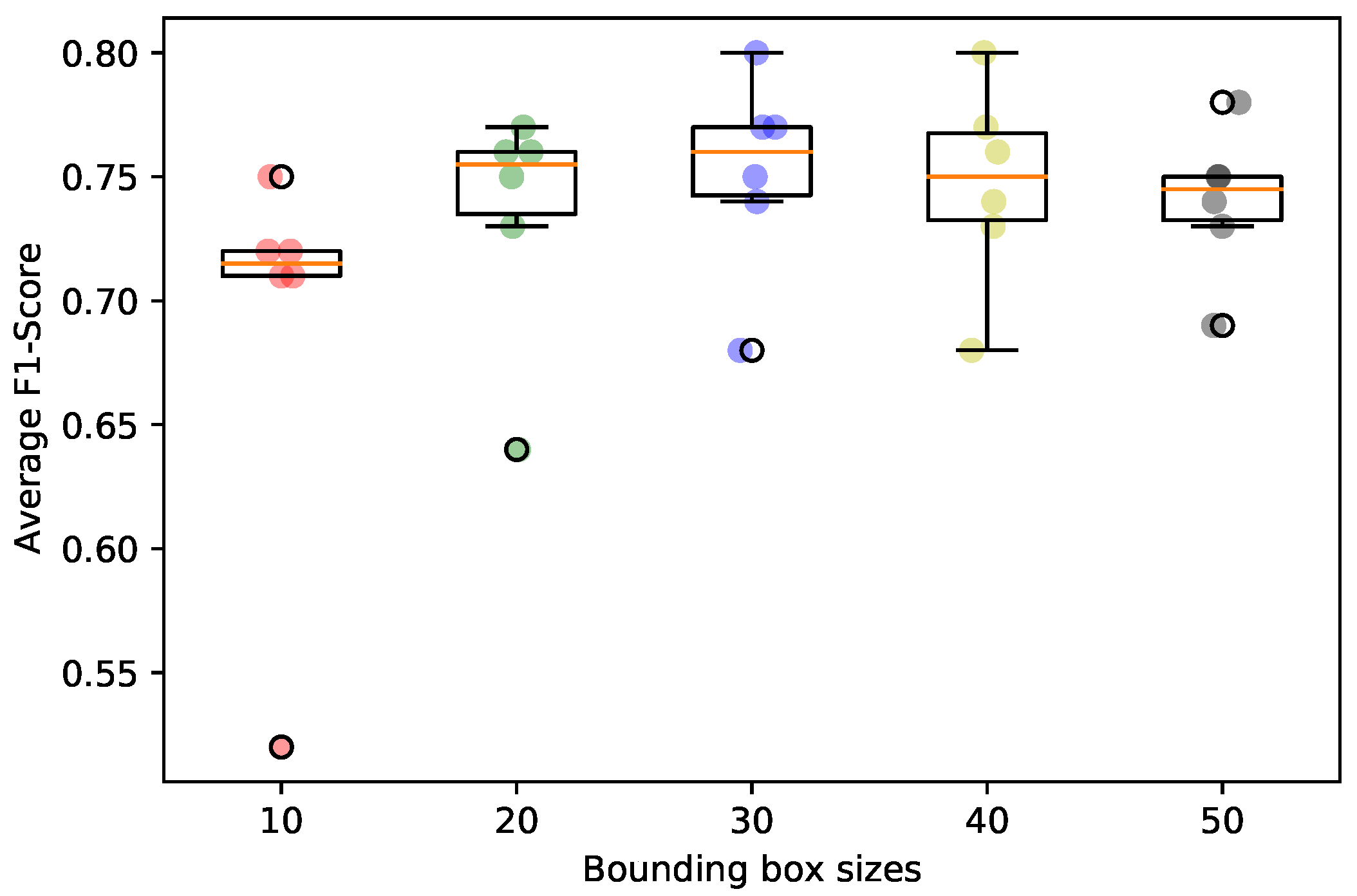

3.1. Quantitative Analysis

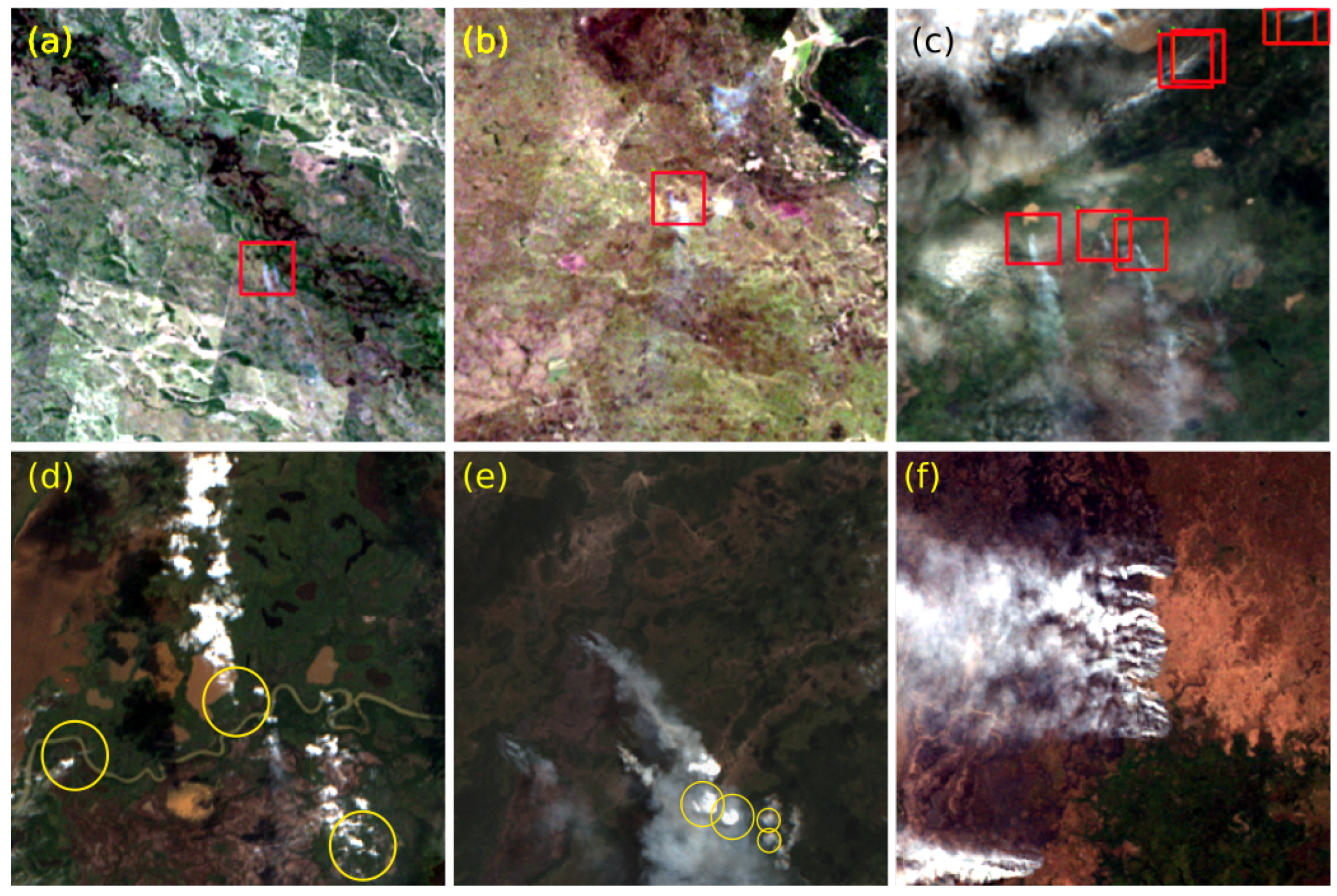

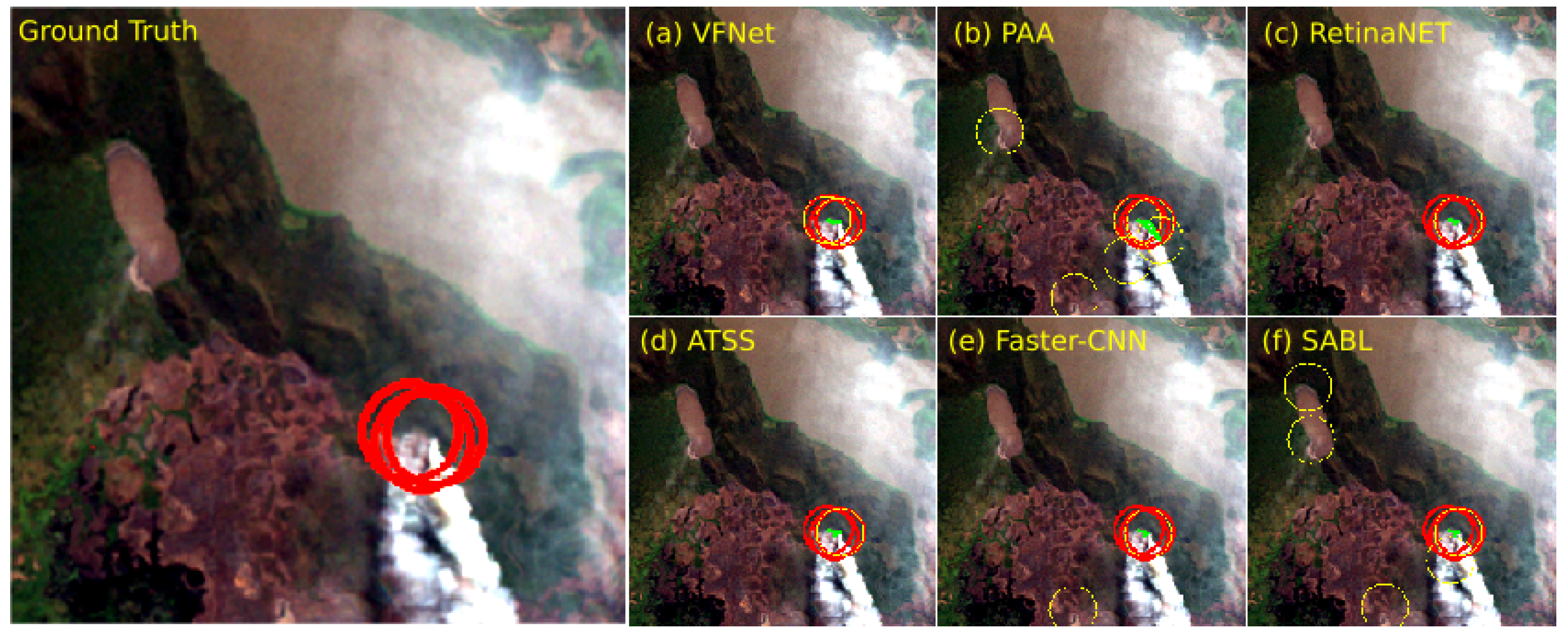

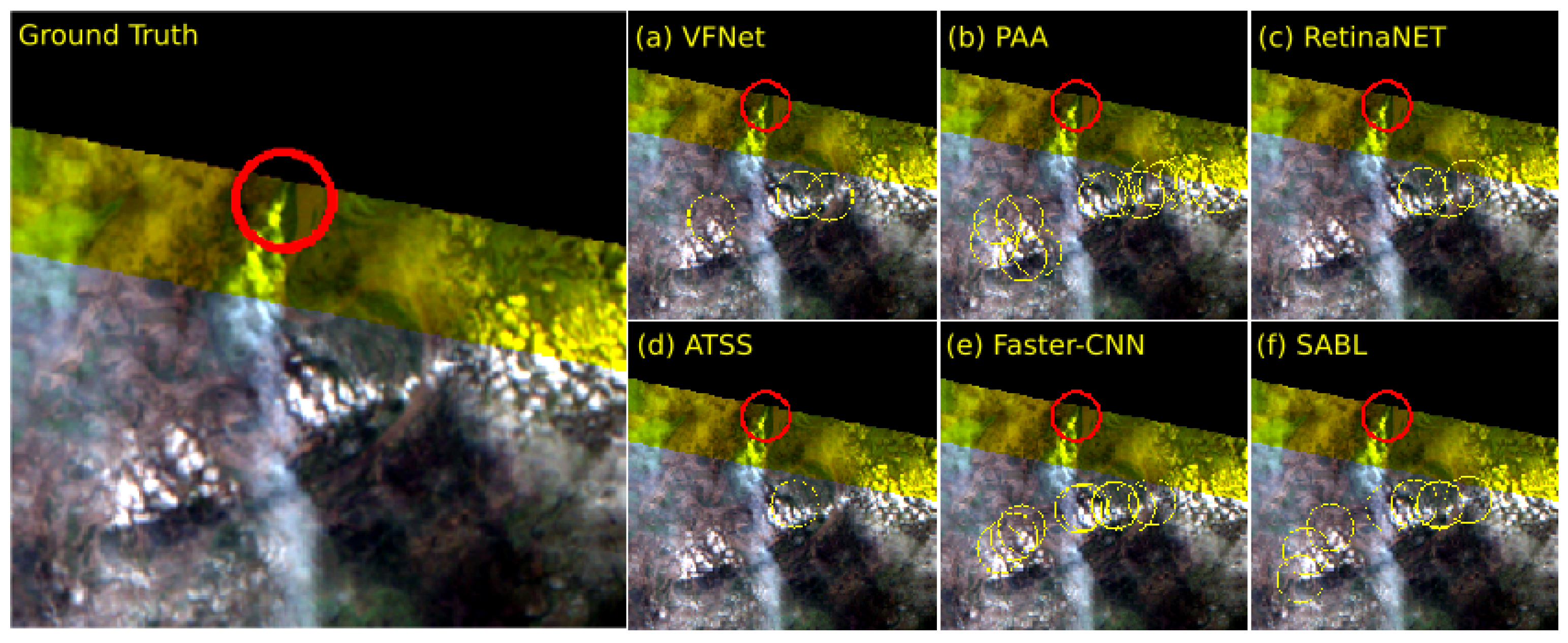

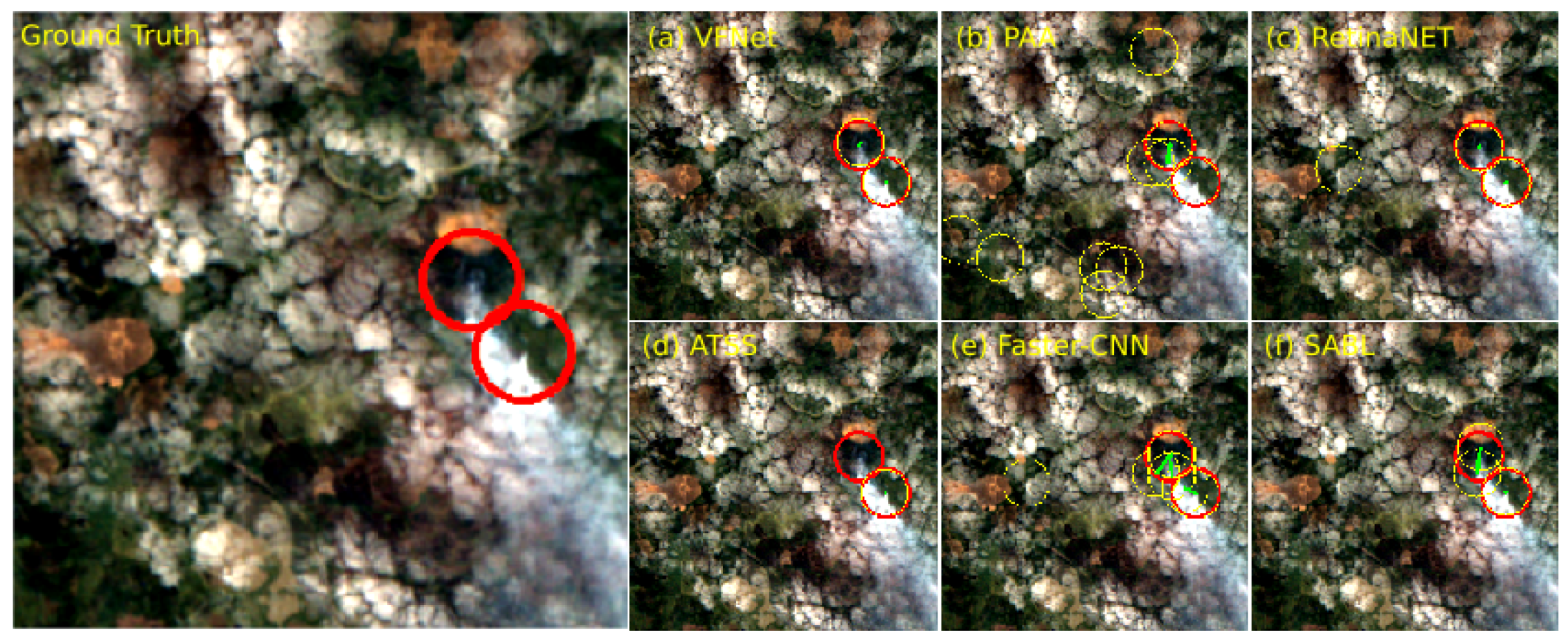

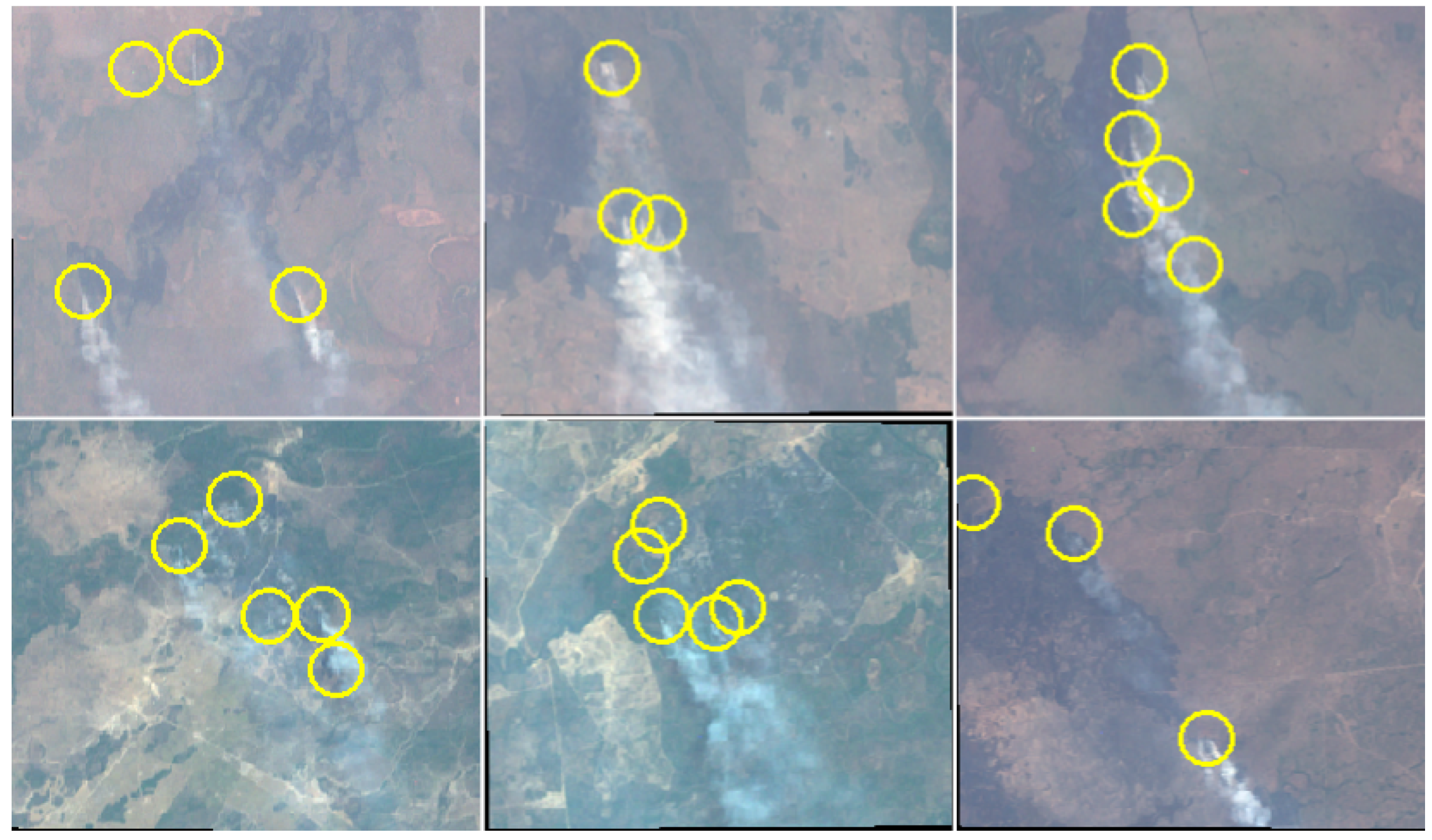

3.2. Qualitative Analysis and Discussion

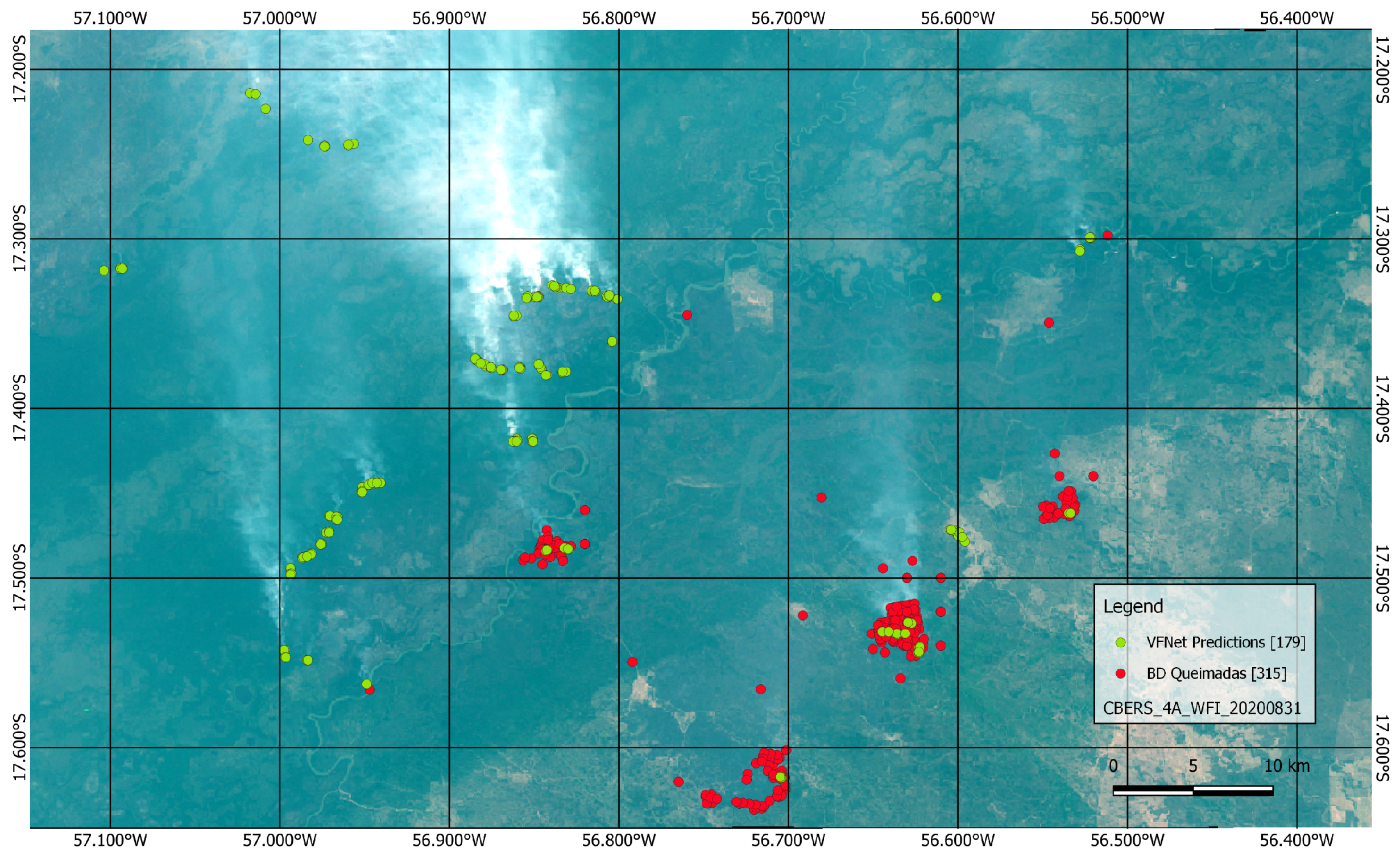

3.3. Comparison with BD Queimadas Database

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Date | Path | Row | Level | Date | Path | Row | Level |
|---|---|---|---|---|---|---|---|
| 4 May 2020 | 214 | 140 | L4 | 21 July 2020 | 217 | 132 | L4 |
| 5 May 2020 | 220 | 132 | L2 | 21 July 2020 | 217 | 140 | L4 |
| 5 May 2020 | 220 | 140 | L2 | 22 July 2020 | 223 | 132 | L4 |
| 10 May 2020 | 219 | 140 | L4 | 26 July 2020 | 216 | 132 | L4 |
| 15 May 2020 | 218 | 140 | L4 | 26 July 2020 | 216 | 140 | L4 |
| 20 May 2020 | 217 | 132 | L4 | 27 July 2020 | 222 | 132 | L4 |
| 20 May 2020 | 217 | 140 | L4 | 27 July 2020 | 222 | 140 | L4 |
| 31 May 2020 | 221 | 140 | L4 | 31 July 2020 | 215 | 132 | L4 |
| 10 June 2020 | 219 | 132 | L4 | 1 August 2020 | 221 | 132 | L4 |
| 10 June 2020 | 219 | 140 | L4 | 1 August 2020 | 221 | 140 | L4 |
| 15 June 2020 | 218 | 132 | L4 | 5 August 2020 | 214 | 140 | L4 |
| 15 June 2020 | 218 | 140 | L4 | 6 August 2020 | 220 | 132 | L4 |
| 20 June 2020 | 217 | 132 | L4 | 6 August 2020 | 220 | 140 | L4 |
| 20 June 2020 | 217 | 140 | L4 | 10 August 2020 | 213 | 132 | L4 |
| 25 June 2020 | 216 | 132 | L4 | 10 August 2020 | 213 | 140 | L4 |
| 30 June 2020 | 215 | 132 | L4 | 11 August 2020 | 219 | 132 | L4 |
| 30 June 2020 | 215 | 140 | L4 | 11 August 2020 | 219 | 140 | L2 |
| 1 July 2020 | 221 | 132 | L4 | 15 August 2020 | 212 | 132 | L4 |
| 1 July 2020 | 221 | 140 | L4 | 16 August 2020 | 218 | 132 | L4 |
| 5 July 2020 | 214 | 140 | L4 | 26 August 2020 | 216 | 132 | L4 |
| 6 July 2020 | 220 | 132 | L4 | 26 August 2020 | 216 | 140 | L4 |
| 11 July 2020 | 219 | 132 | L4 | 27 August 2020 | 222 | 132 | L4 |
| 15 July 2020 | 212 | 140 | L4 | 31 August 2020 | 215 | 132 | L4 |
| 16 July 2020 | 218 | 132 | L4 | 31 August 2020 | 215 | 140 | L4 |
| 16 July 2020 | 218 | 140 | L4 | | | | |

| Fold | Test patches | Test images | Train patches | Train images | Validation patches | Validation images |
|---|---|---|---|---|---|---|
| F1 | 79 (10%) | 10 | 497 (64%) | 28 | 199 (26%) | 10 |
| F2 | 82 (11%) | 10 | 493 (64%) | 28 | 200 (26%) | 10 |
| F3 | 187 (24%) | 10 | 437 (56%) | 28 | 151 (19%) | 10 |
| F4 | 182 (23%) | 9 | 360 (46%) | 29 | 233 (30%) | 10 |
| F5 | 245 (32%) | 9 | 371 (48%) | 29 | 159 (21%) | 10 |

| Method | Average Minimum Distance (±SD) |
|---|---|
| VFNET | 8.39 (±17.76) |
| SABL Cascade RCNN | 9.58 (±18.41) |
| PAA | 15.02 (±22.97) |
| ATSS | 5.04 (±14.02) |
| RetinaNet | 7.82 (±16.28) |
| Faster RCNN | 9.80 (±19.41) |
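
The table above reports an average minimum distance per method, but this extract does not define the metric or state its unit. As a minimal sketch, assuming the metric is the mean distance from each predicted fire location to its nearest reference location, it could be computed as follows. The function name, the direction of the comparison (predicted to reference), and the sample coordinates are all hypothetical and are not taken from the article.

```python
import math


def average_minimum_distance(predicted, reference):
    """Return (mean, SD) of the distance from each predicted point
    to its nearest reference point.

    Assumption: points are (x, y) tuples in one shared coordinate
    system; the unit (pixels or metres) is whatever the inputs use.
    """
    if not predicted or not reference:
        raise ValueError("both point lists must be non-empty")
    # Nearest-reference distance for every predicted point.
    nearest = [min(math.dist(p, r) for r in reference) for p in predicted]
    mean = sum(nearest) / len(nearest)
    # Population standard deviation of the nearest distances.
    variance = sum((d - mean) ** 2 for d in nearest) / len(nearest)
    return mean, math.sqrt(variance)


# Hypothetical coordinates, for illustration only.
predicted_points = [(0.0, 0.0), (10.0, 0.0)]
reference_points = [(3.0, 4.0), (10.0, 0.0)]
amd_mean, amd_sd = average_minimum_distance(predicted_points, reference_points)
print(f"{amd_mean:.2f} (±{amd_sd:.2f})")
```

Under this reading a lower value means predictions fall closer to the reference fires, which matches the way the table lists one value per detection method.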

| Metric | Value (±SD) |
|---|---|
| Precision | 0.83 (±0.29) |
| Recall | 0.91 (±0.23) |
| F1-Score | 0.84 (±0.25) |
| Average Minimum Distance | 11.12 (±16.25) |
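
Precision, recall, and F1-Score in the table above follow the standard detection definitions. The sketch below assumes that true positives, false positives, and false negatives are counted per evaluation unit (a scene here, which is an assumption) and then averaged, as the ±SD values suggest. The function names and the counts are hypothetical.

```python
import math


def detection_scores(tp, fp, fn):
    """Precision, recall and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_sd(values):
    """Mean and population standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    return mean, math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


# Hypothetical (tp, fp, fn) counts for two scenes, for illustration only.
scene_counts = [(8, 2, 0), (5, 0, 5)]
per_scene = [detection_scores(*counts) for counts in scene_counts]

precision_mean, precision_sd = mean_sd([s[0] for s in per_scene])
recall_mean, recall_sd = mean_sd([s[1] for s in per_scene])
f1_mean, f1_sd = mean_sd([s[2] for s in per_scene])
print(f"P={precision_mean:.2f} R={recall_mean:.2f} F1={f1_mean:.2f}")
```

If the reported values are averages of this kind, the averaged F1-Score need not equal the harmonic mean of the averaged precision and recall. That would be consistent with the table, where the harmonic mean of 0.83 and 0.91 is about 0.87 while the reported F1-Score is 0.84.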


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Higa, L.; Marcato Junior, J.; Rodrigues, T.; Zamboni, P.; Silva, R.; Almeida, L.; Liesenberg, V.; Roque, F.; Libonati, R.; Gonçalves, W.N.; et al. Active Fire Mapping on Brazilian Pantanal Based on Deep Learning and CBERS 04A Imagery. Remote Sens. 2022, 14, 688. https://doi.org/10.3390/rs14030688
