Occluded Apple Fruit Detection and Localization with a Frustum-Based Point-Cloud-Processing Approach for Robotic Harvesting

Abstract

1. Introduction

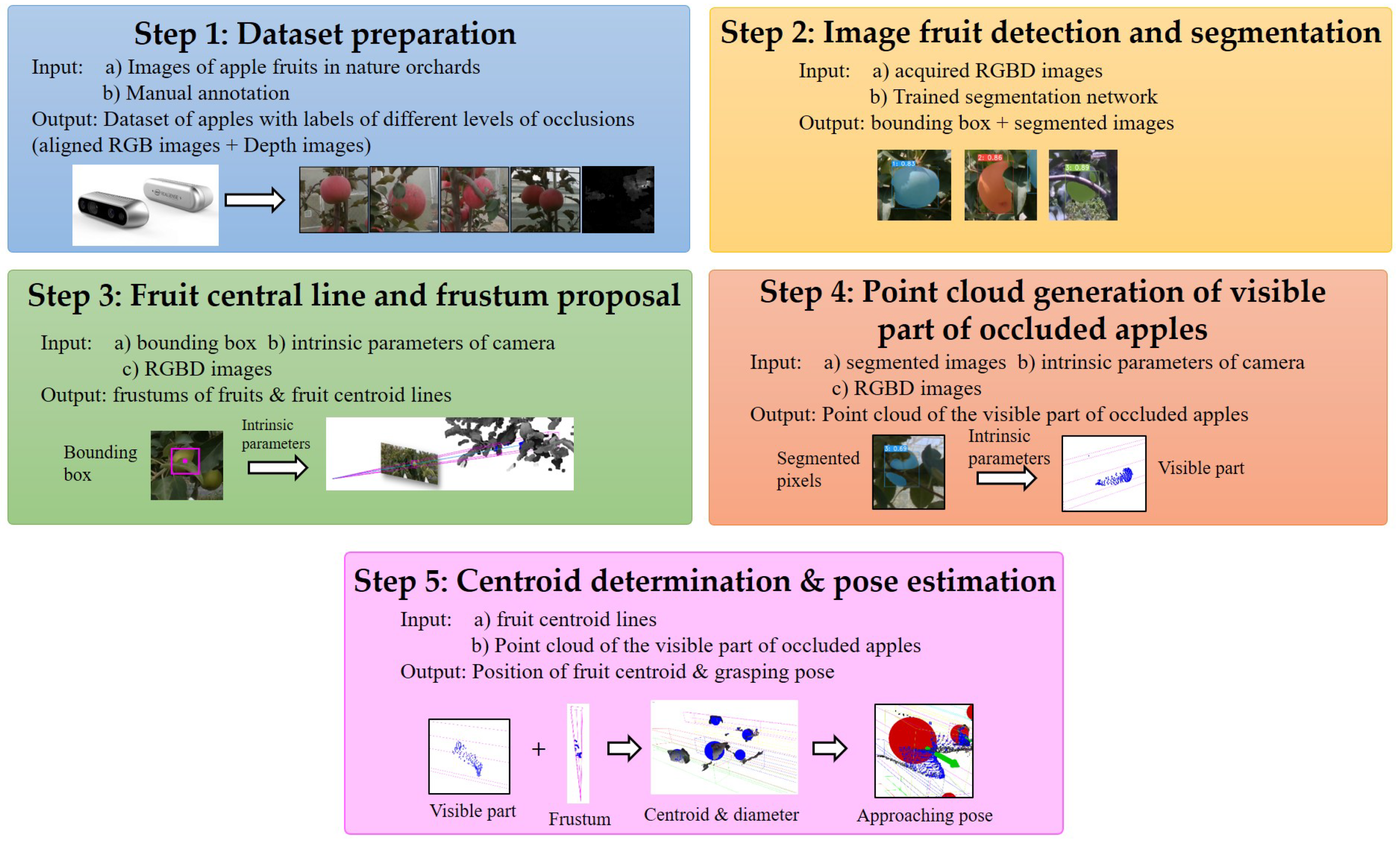

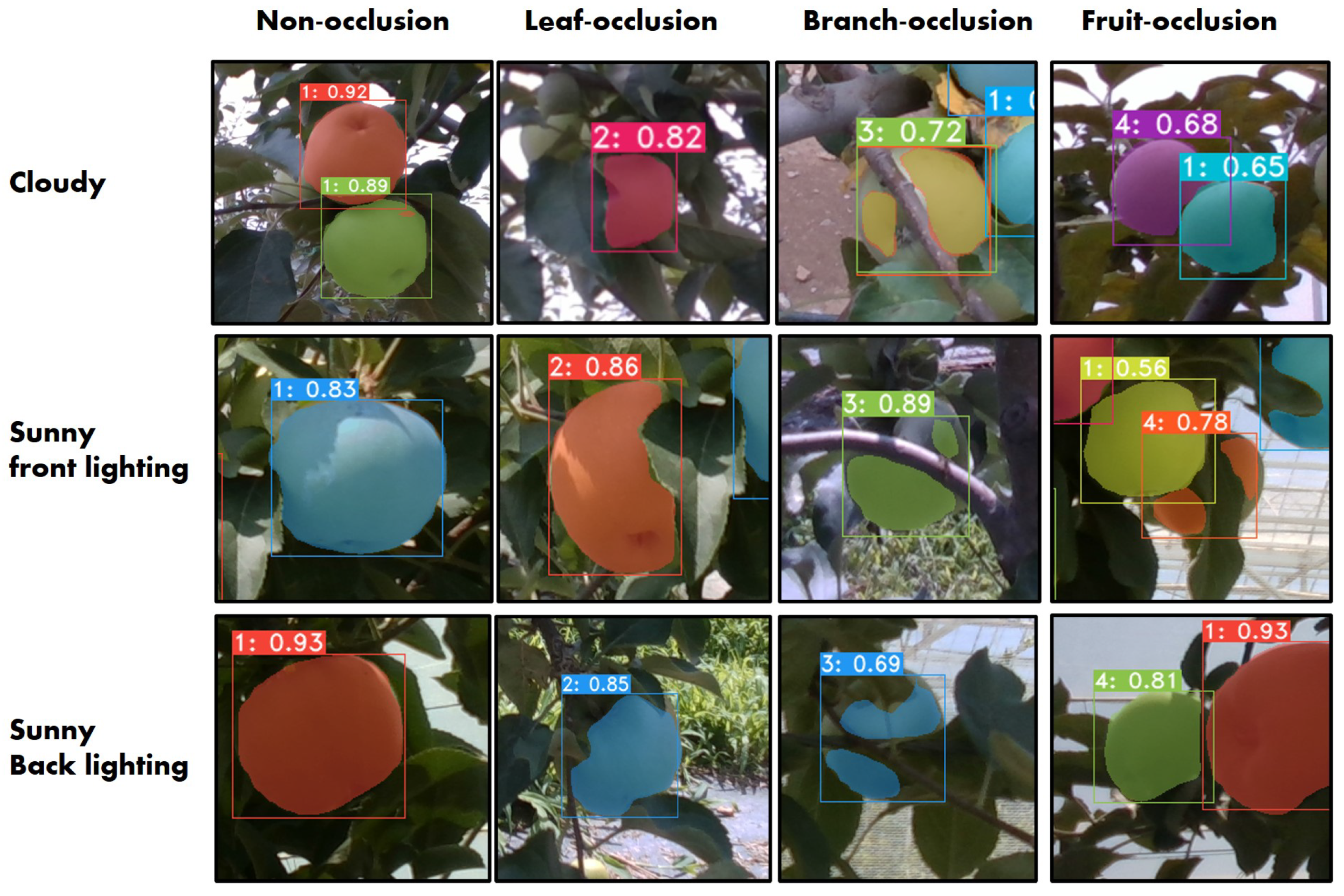

- An instance segmentation network is employed to provide the location and geometry of fruits under four occlusion conditions: leaf-occluded, branch-occluded, fruit-occluded, and non-occluded;

- A point-cloud-processing pipeline is presented to refine the estimated 3D position and pose of partially occluded fruits and to provide 3D approaching vectors for the robotic gripper.

2. Related Work

2.1. Fruit Recognition

2.2. 3D Fruit Localization and Approaching Direction Estimation

3. Methods and Materials

3.1. Hardware and Software Platform

- Computing module: Intel NUC11 Enthusiast with CPU: Intel Core™ i7-1165G7; GPU: NVIDIA GeForce RTX 2060;

- RGBD camera: Intel RealSense D435i with a global shutter; ideal range: 0.3 m to 3 m; depth accuracy: <2% at 2 m; depth field of view (FOV): 87° × 58°;

- Tracked mobile platform with autonomous navigation system: Global Navigation Satellite System (GNSS) + a laser scanner; electric drive: DC 48 V, two 650 W motors; working speed: 0–5 km/h; carrying capacity: 80 kg; size: 1150 × 750 × 650 mm (length × width × height);

- Robotic arm: Franka Emika 7-DoF robotic arm;

- LiDAR: Velodyne HDL-64E, used to acquire the ground-truth 3D position coordinates of the fruits.

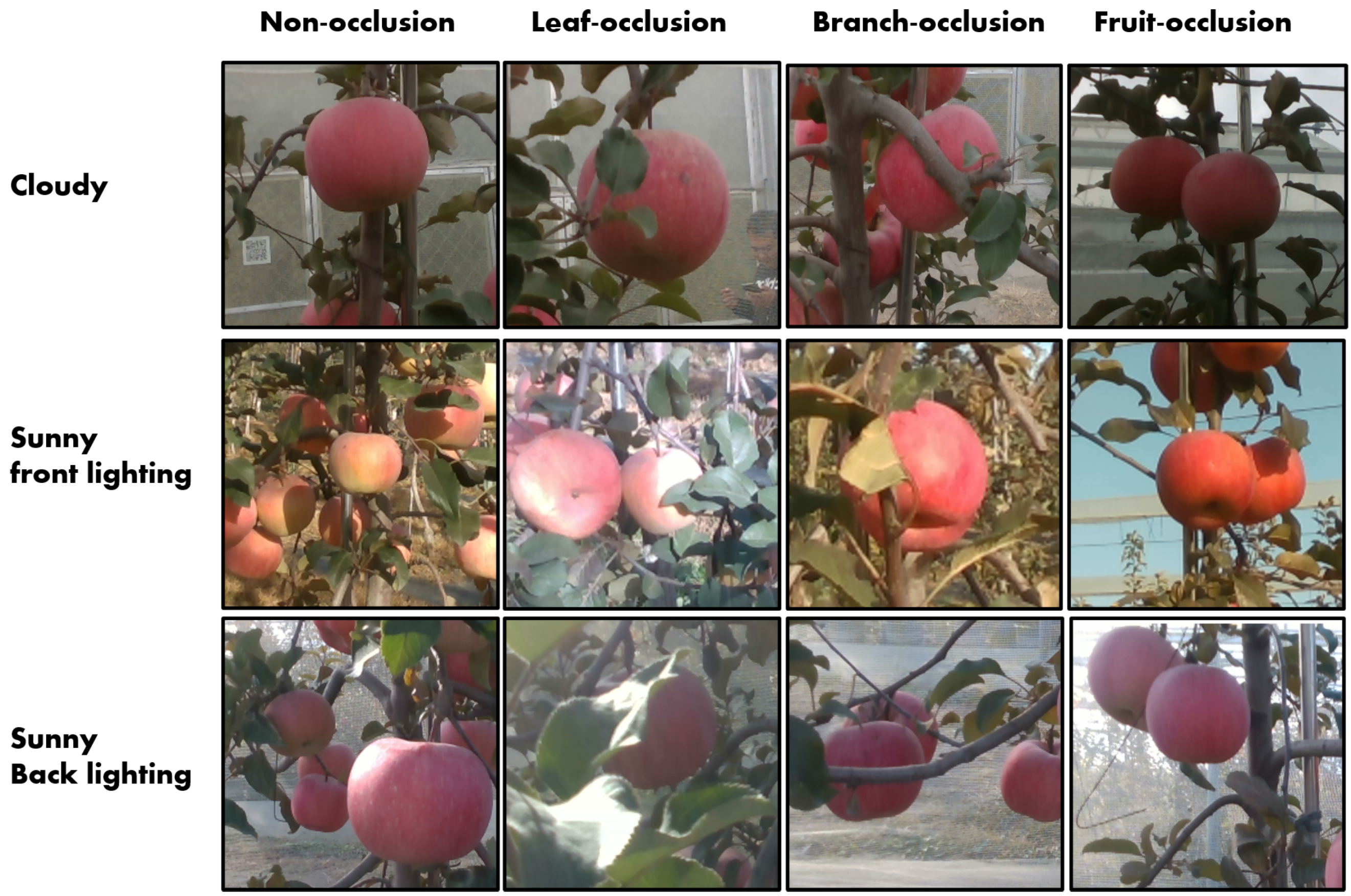

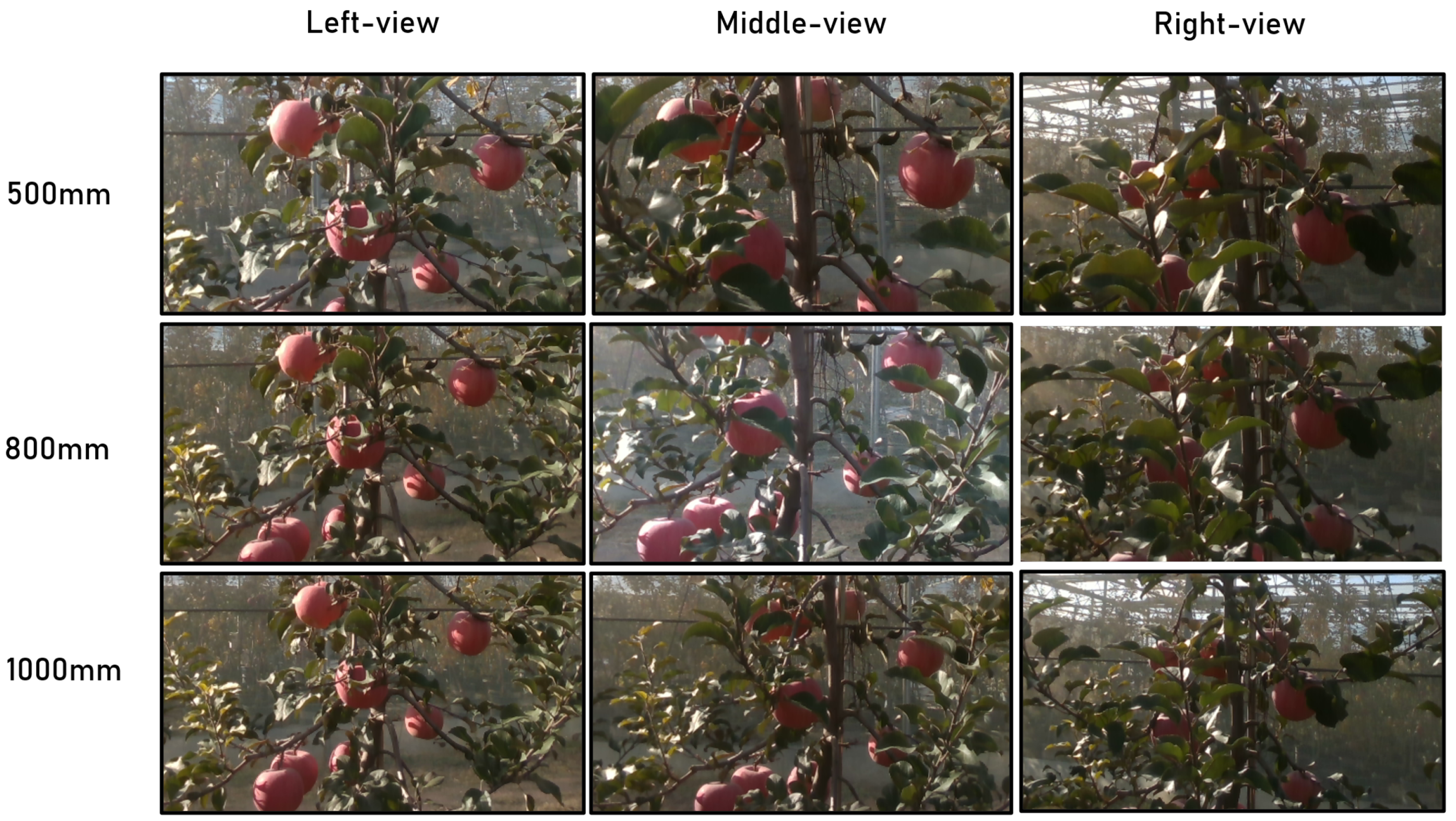

3.2. Data Preparation

- Different illumination: front lighting, side lighting, back lighting, cloudy;

- Different occlusions: non-occluded, leaf-occluded, branch/wire-occluded, fruit-occluded;

- Different periods of the day: morning (08:00–11:00), noon (11:00–14:00), afternoon (14:00–18:00).

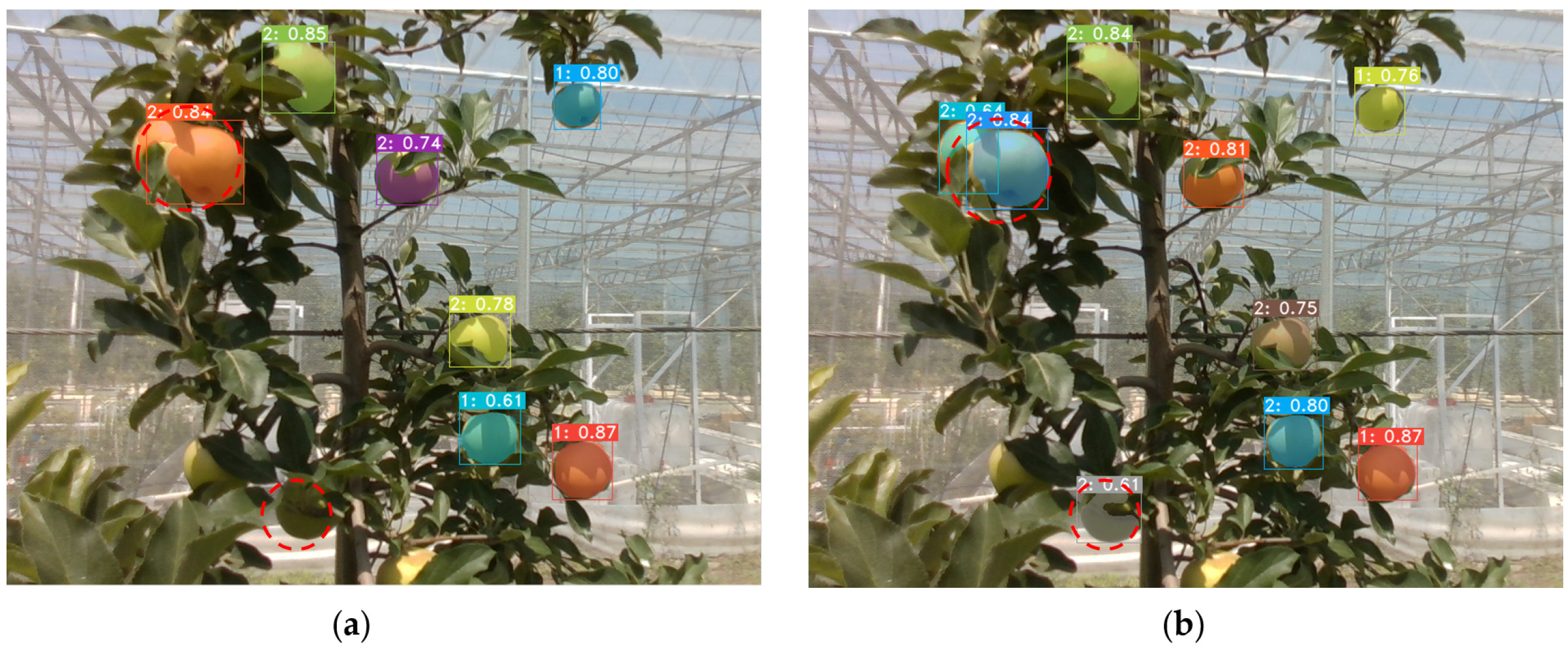

3.3. Image Fruit Detection and Instance Segmentation

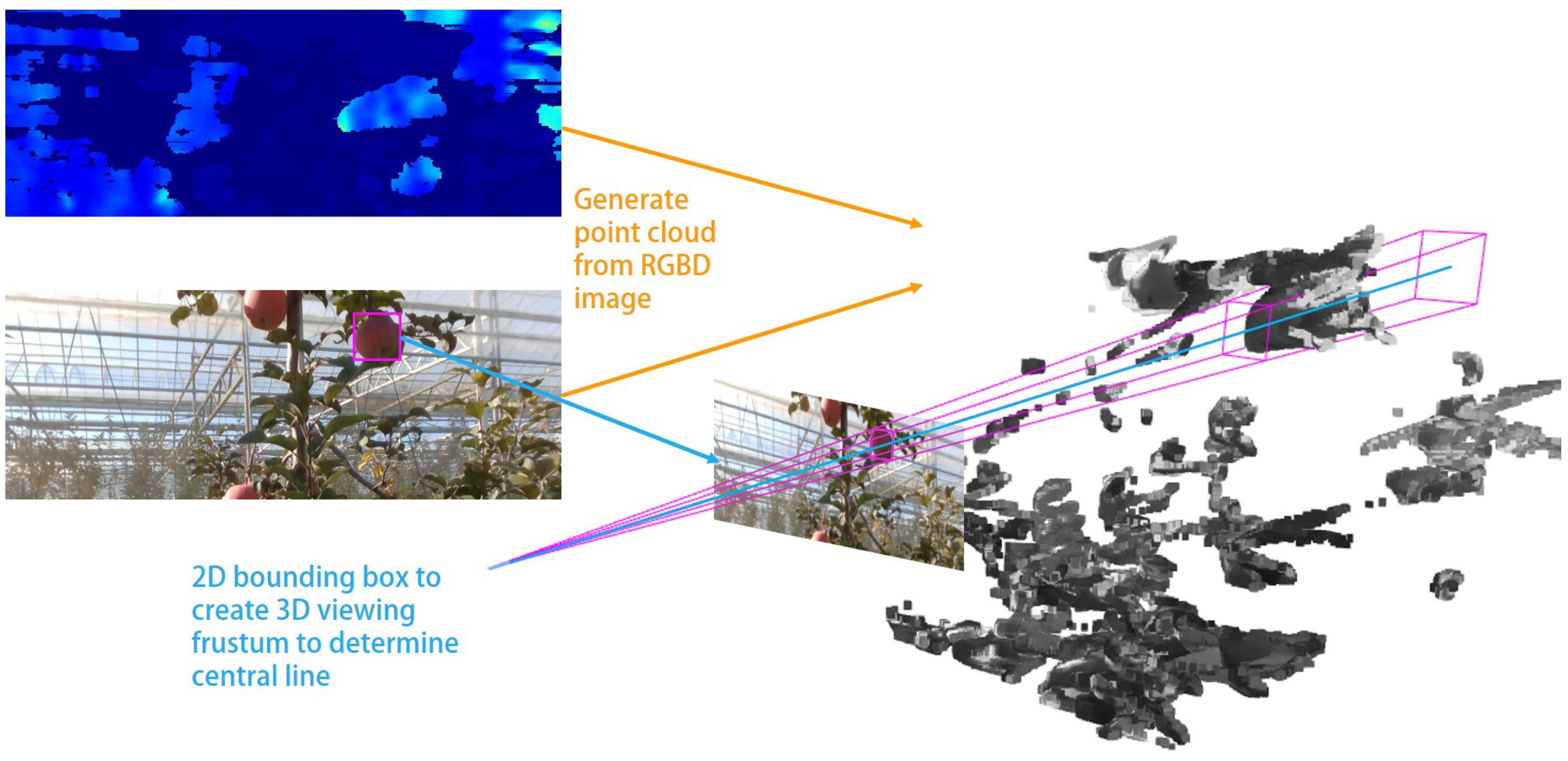

3.4. Fruit Central Line and Frustum Proposal

3.5. Point Cloud Generation of Visible Parts of Occluded Apples

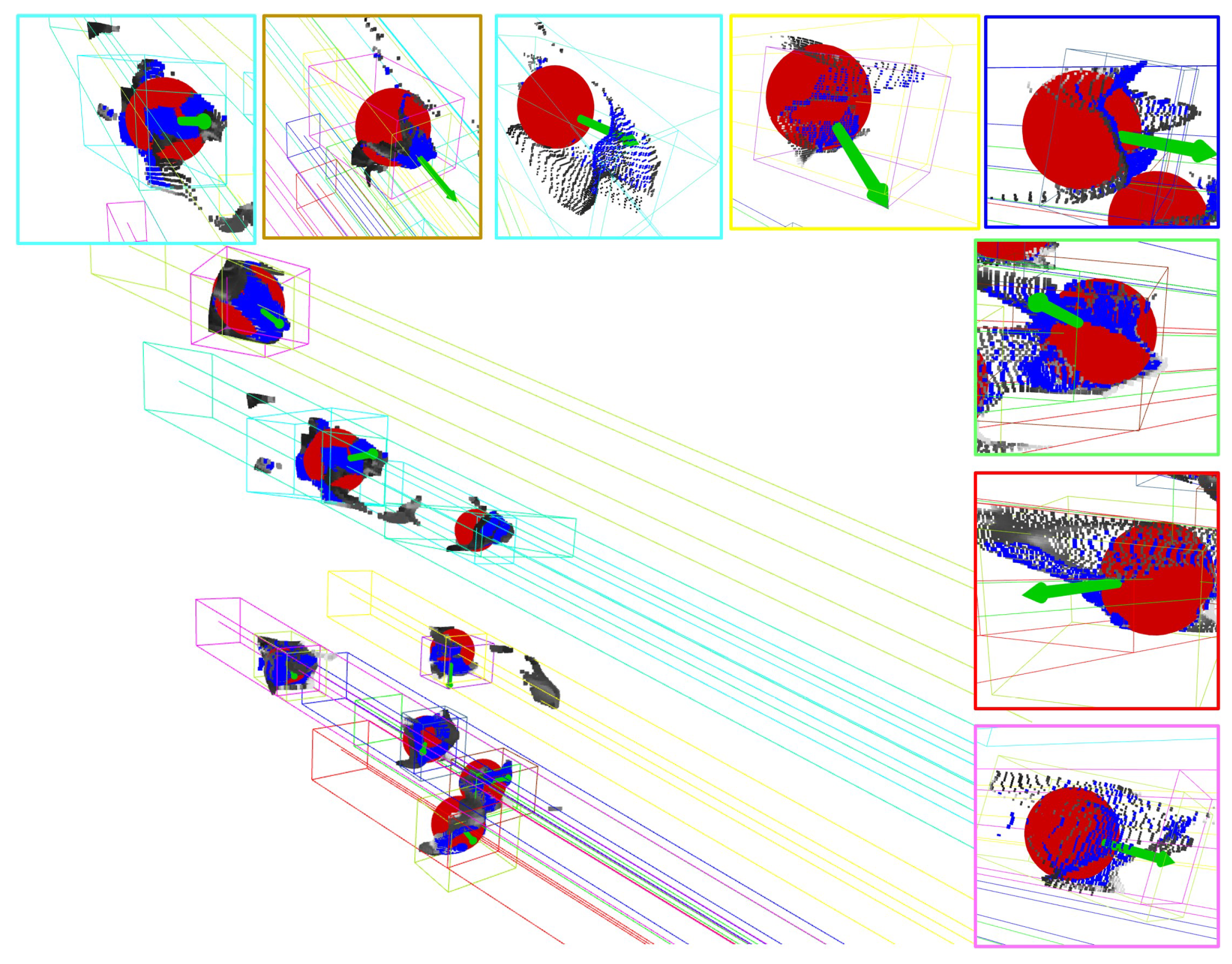

- 1.

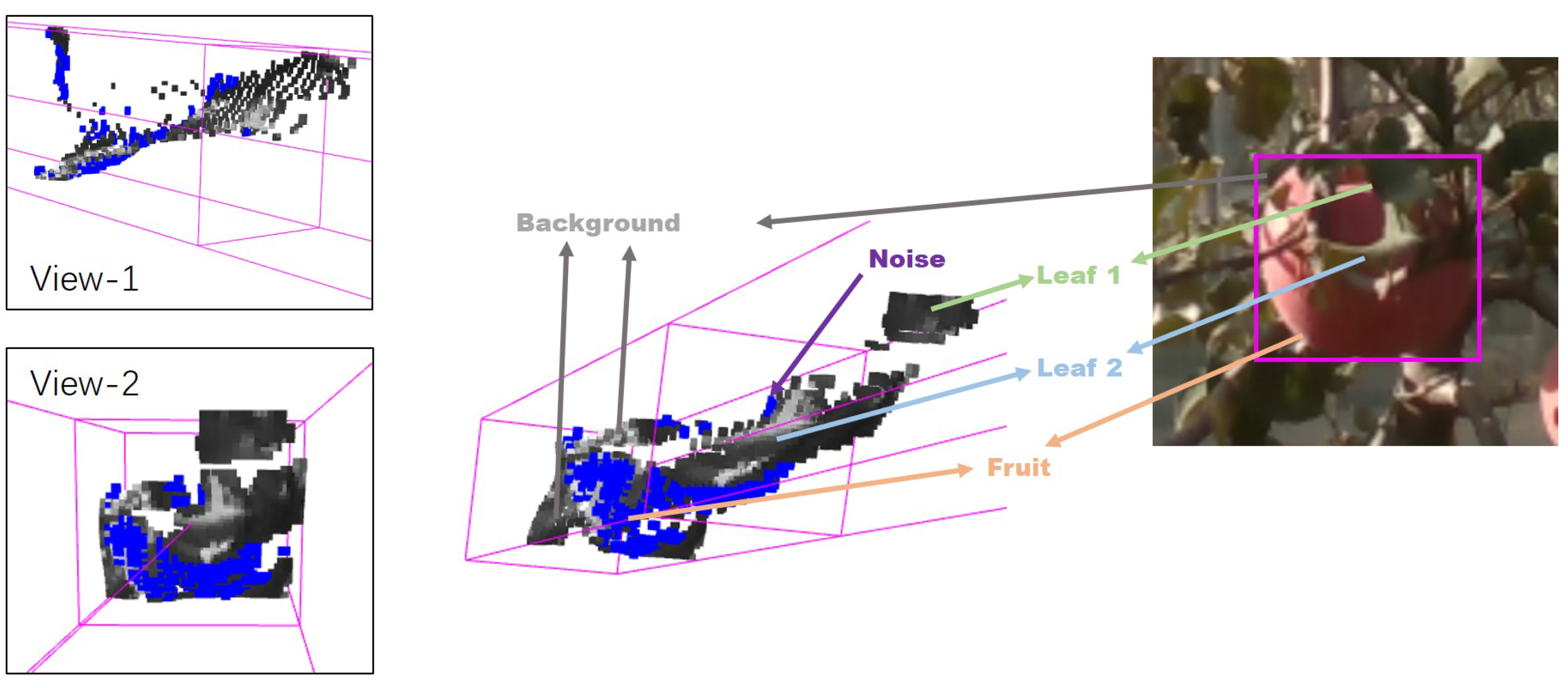

- Generation of point clouds under the fruits’ masks. According to the fruits’ masks detected by the RCNN network and combining their corresponding depth map, the point clouds inside the masks can be generated, as shown in Figure 12. Ideally, the point cloud(s) generated from the fruit’s mask is (are) supposed to be distributed on the surface of the fruit sphere. In practice, however, due to the nonideal characteristics of sensors and the inaccuracy of mask segmentation, there are deficiencies and outliers in the point clouds, resulting in the acquired point clouds being fragmentary and distorted; see Figure 9. Therefore, the following step is necessary;

- 2.

- Selection of the most likely point cloud. To sort out the cluster on the target’s surface, we used Density-Based Spatial Clustering of Applications with Noise (DBSCAN) to cluster the target point cloud. By DBSCAN, the point clouds generated from the masks can be clustered. Then, through counting how many points each cluster holds, the point cloud holding more points is selected as the best cluster. Taking the cluster as the most likely point cloud belonging to the target’s surface, we may remove the other point clouds in the frustum.
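The two steps above can be sketched as follows. This is a minimal, dependency-free Python illustration under stated assumptions, not the authors' implementation. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a depth map already converted to metres (0 marking missing data), and a boolean mask of the same size. All function names are hypothetical. DBSCAN is written out in plain O(n²) form rather than taken from a library, and `eps` and `min_pts` are placeholders that would need tuning to the sensor noise.

```python
import math
from collections import Counter
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def mask_to_points(depth: Sequence[Sequence[float]],
                   mask: Sequence[Sequence[bool]],
                   fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project every masked pixel with valid depth into camera coordinates.

    Assumes a pinhole camera and depth in metres (0 = no data).
    """
    points: List[Point] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, inside) in enumerate(zip(depth_row, mask_row)):
            if inside and z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Plain O(n^2) DBSCAN. Returns a cluster id per point, -1 for noise."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None = not yet visited

    def region(i: int) -> List[int]:
        # eps-neighbourhood of point i (includes i itself)
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = region(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        k = 0
        while k < len(queue):
            j = queue[k]
            k += 1
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            neighbours = region(j)
            if len(neighbours) >= min_pts:  # j is a core point: keep expanding
                queue.extend(neighbours)
    return [label if label is not None else -1 for label in labels]


def largest_cluster(points: Sequence[Point], eps: float, min_pts: int) -> List[Point]:
    """Keep only the DBSCAN cluster that holds the most points."""
    labels = dbscan(points, eps, min_pts)
    counts = Counter(label for label in labels if label >= 0)
    if not counts:
        return []
    best, _ = counts.most_common(1)[0]
    return [p for p, label in zip(points, labels) if label == best]
```

For masks containing thousands of points, a library implementation with a spatial index would be preferable, such as scikit-learn's `DBSCAN` or Open3D's `PointCloud.cluster_dbscan`. The brute-force neighbour search here only keeps the example self-contained.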

3.6. Centroid Determination and Pose Estimation

1. Obtain the centroid $P_c = (x_c, y_c, z_c)$ of the filtered point cloud.

2. Calculate the radius from the 2D bounding box of the target and the camera intrinsic parameters:

   $$w = u_r - u_l, \qquad r = \frac{w \, z_c}{2 f_u}$$

   where $w$ is the width of the bounding box; $u_l$ and $u_r$ are the pixel positions of the left and right sides of the bounding box on the U-axis, respectively; $z_c$ is the z-axis coordinate of $P_c$; $r$ denotes the radius of the sphere; and $f_u$ is the scale factor on the U-axis of the camera. This follows from the pinhole model, under which a sphere of diameter $2r$ at depth $z_c$ spans approximately $2 r f_u / z_c$ pixels horizontally.

3. Construct a sphere of radius $r$ centered at $P_c$ and obtain its two points of intersection with the central line of the frustum.

4. Take the farther of these two points from the camera as the fruit's centroid $P_o$.

5. Take the direction vector from $P_c$ to $P_o$ as the approaching vector.
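The five steps above can be sketched in Python as follows. This is a minimal illustration under several assumptions, not the authors' code:

- The frustum central line is the ray from the camera's optical center through the bounding-box center pixel.
- The U-axis scale factor is the focal length `fx` in pixels.
- The approaching vector points from the filtered-cloud centroid P_c to the estimated fruit center P_o.
- The bounding box is given as `(u_left, v_top, u_right, v_bottom)`.

All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def centroid(points: Sequence[Vec3]) -> Vec3:
    """Step 1: mean of the filtered (largest-cluster) points, P_c."""
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def radius_from_bbox(u_left: float, u_right: float, z_c: float, f_u: float) -> float:
    """Step 2: r = (u_right - u_left) * z_c / (2 * f_u), from the pinhole model."""
    return (u_right - u_left) * z_c / (2.0 * f_u)


def central_line_direction(u: float, v: float, fx: float, fy: float,
                           cx: float, cy: float) -> Vec3:
    """Unit direction of the ray from the optical center through pixel (u, v).

    Assumption: the frustum central line passes through the bounding-box center.
    """
    d = ((u - cx) / fx, (v - cy) / fy, 1.0)
    norm = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
    return (d[0] / norm, d[1] / norm, d[2] / norm)


def far_intersection(d: Vec3, p_c: Vec3, r: float) -> Vec3:
    """Steps 3-4: farther intersection of the ray t*d with the sphere (p_c, r).

    Solves |t*d - p_c|^2 = r^2, i.e. t^2 - 2*b*t + (|p_c|^2 - r^2) = 0, b = d . p_c.
    """
    b = d[0] * p_c[0] + d[1] * p_c[1] + d[2] * p_c[2]
    c = p_c[0] ** 2 + p_c[1] ** 2 + p_c[2] ** 2 - r * r
    disc = b * b - c
    # If noise makes the line miss the sphere, fall back to the closest point (assumption).
    t = b + math.sqrt(max(disc, 0.0))
    return (t * d[0], t * d[1], t * d[2])


def locate_fruit(points: Sequence[Vec3], bbox: Tuple[float, float, float, float],
                 fx: float, fy: float, cx: float, cy: float) -> Tuple[Vec3, float, Vec3]:
    """Return (P_o, r, unit approaching vector from P_c to P_o)."""
    u_l, v_t, u_r, v_b = bbox
    p_c = centroid(points)
    r = radius_from_bbox(u_l, u_r, p_c[2], fx)
    d = central_line_direction((u_l + u_r) / 2, (v_t + v_b) / 2, fx, fy, cx, cy)
    p_o = far_intersection(d, p_c, r)
    a = (p_o[0] - p_c[0], p_o[1] - p_c[1], p_o[2] - p_c[2])
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    approach = (a[0] / n, a[1] / n, a[2] / n) if n > 0 else d
    return p_o, r, approach
```

Clamping the discriminant to zero is an added fallback for noisy inputs and is not described in the text. Without it, a line that misses the sphere would make `math.sqrt` raise an error.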

4. Experimental Results and Discussion

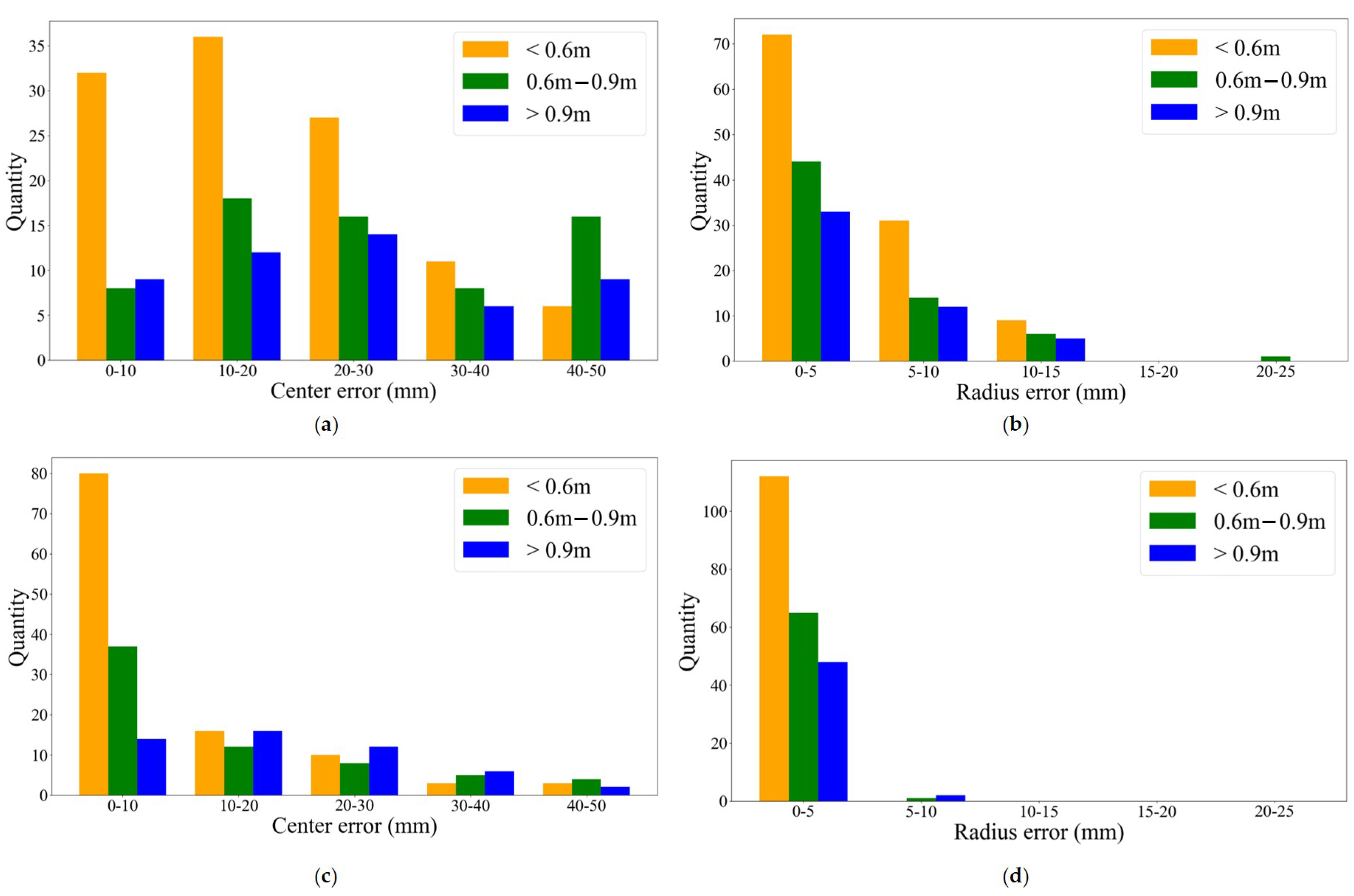

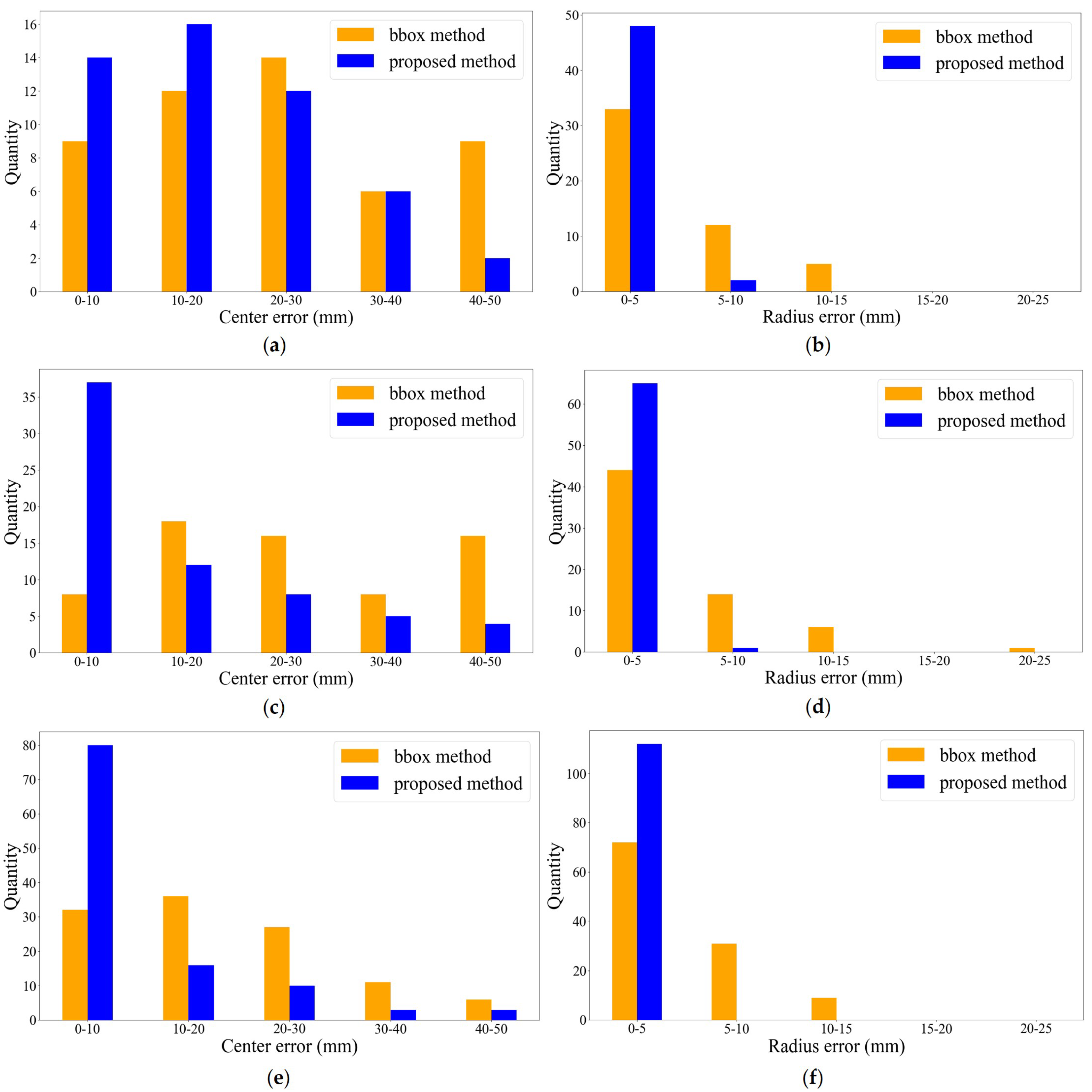

4.1. Results of Localization and Approaching Vector Estimation

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Zhang, Z.; Heinemann, P.H. Economic analysis of a low-cost apple harvest-assist unit. HortTechnology 2017, 27, 240–247.

2. Zhuang, J.; Hou, C.; Tang, Y.; He, Y.; Luo, S. Computer vision-based localisation of picking points for automatic litchi harvesting applications towards natural scenarios. Biosyst. Eng. 2019, 187, 1–20.

3. Ji, W.; Zhao, D.; Cheng, F.; Xu, B.; Zhang, Y.; Wang, J. Automatic recognition vision system guided for apple harvesting robot. Comput. Electr. Eng. 2012, 38, 1186–1195.

4. Zhao, D.; Wu, R.; Liu, X.; Zhao, Y. Apple positioning based on YOLO deep convolutional neural network for picking robot in complex background. Trans. Chin. Soc. Agric. Eng. 2019, 35, 164–173.

5. Kang, H.; Chen, C. Fast implementation of real-time fruit detection in apple orchards using deep learning. Comput. Electron. Agric. 2020, 168, 105108.

6. Gené-Mola, J.; Vilaplana, V.; Rosell-Polo, J.R.; Morros, J.R.; Ruiz-Hidalgo, J.; Gregorio, E. Multi-modal deep learning for Fuji apple detection using RGBD cameras and their radiometric capabilities. Comput. Electron. Agric. 2019, 162, 689–698.

7. Fu, L.; Majeed, Y.; Zhang, X.; Karkee, M.; Zhang, Q. Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 2020, 197, 245–256.

8. Gené-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Morros, J.R.; Ruiz-Hidalgo, J.; Vilaplana, V.; Gregorio, E. Fruit detection and 3D location using instance segmentation neural networks and structure-from-motion photogrammetry. Comput. Electron. Agric. 2020, 169, 105165.

9. Quan, L.; Wu, B.; Mao, S.; Yang, C.; Li, H. An Instance Segmentation-Based Method to Obtain the Leaf Age and Plant Centre of Weeds in Complex Field Environments. Sensors 2021, 21, 3389.

10. Liu, H.; Soto, R.A.R.; Xiao, F.; Lee, Y.J. YolactEdge: Real-time Instance Segmentation on the Edge. arXiv 2021, arXiv:cs.CV/2012.12259.

11. Dandan, W.; Dongjian, H. Recognition of apple targets before fruits thinning by robot based on R-FCN deep convolution neural network. Trans. Chin. Soc. Agric. Eng. 2019, 35, 156–163.

12. Kang, H.; Chen, C. Fruit detection, segmentation and 3D visualisation of environments in apple orchards. Comput. Electron. Agric. 2020, 171, 105302.

13. Zhang, J.; Karkee, M.; Zhang, Q.; Zhang, X.; Yaqoob, M.; Fu, L.; Wang, S. Multi-class object detection using faster R-CNN and estimation of shaking locations for automated shake-and-catch apple harvesting. Comput. Electron. Agric. 2020, 173, 105384.

14. Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619.

15. Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. A review of key techniques of vision-based control for harvesting robot. Comput. Electron. Agric. 2016, 127, 311–323.

16. Buemi, F.; Massa, M.; Sandini, G.; Costi, G. The agrobot project. Adv. Space Res. 1996, 18, 185–189.

17. Kitamura, S.; Oka, K. Recognition and cutting system of sweet pepper for picking robot in greenhouse horticulture. In Proceedings of the IEEE International Conference Mechatronics and Automation, Niagara Falls, ON, Canada, 29 July–1 August 2005; Volume 4, pp. 1807–1812.

18. Xiang, R.; Jiang, H.; Ying, Y. Recognition of clustered tomatoes based on binocular stereo vision. Comput. Electron. Agric. 2014, 106, 75–90.

19. Plebe, A.; Grasso, G. Localization of spherical fruits for robotic harvesting. Mach. Vis. Appl. 2001, 13, 70–79.

20. Gongal, A.; Silwal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Apple crop-load estimation with over-the-row machine vision system. Comput. Electron. Agric. 2016, 120, 26–35.

21. Grosso, E.; Tistarelli, M. Active/dynamic stereo vision. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 868–879.

22. Liu, Z.; Wu, J.; Fu, L.; Majeed, Y.; Feng, Y.; Li, R.; Cui, Y. Improved kiwifruit detection using pre-trained VGG16 with RGB and NIR information fusion. IEEE Access 2019, 8, 2327–2336.

23. Tu, S.; Pang, J.; Liu, H.; Zhuang, N.; Chen, Y.; Zheng, C.; Wan, H.; Xue, Y. Passion fruit detection and counting based on multiple scale faster R-CNN using RGBD images. Precis. Agric. 2020, 21, 1072–1091.

24. Zhang, Z.; Pothula, A.K.; Lu, R. A review of bin filling technologies for apple harvest and postharvest handling. Appl. Eng. Agric. 2018, 34, 687–703.

25. Milella, A.; Marani, R.; Petitti, A.; Reina, G. In-field high throughput grapevine phenotyping with a consumer-grade depth camera. Comput. Electron. Agric. 2019, 156, 293–306.

26. Arad, B.; Balendonck, J.; Barth, R.; Ben-Shahar, O.; Edan, Y.; Hellström, T.; Hemming, J.; Kurtser, P.; Ringdahl, O.; Tielen, T.; et al. Development of a sweet pepper harvesting robot. J. Field Robot. 2020, 37, 1027–1039.

27. Zhang, Y.; Tian, Y.; Zheng, C.; Zhao, D.; Gao, P.; Duan, K. Segmentation of apple point clouds based on ROI in RGB images. Inmateh Agric. Eng. 2019, 59, 209–218.

28. Lehnert, C.; Sa, I.; McCool, C.; Upcroft, B.; Perez, T. Sweet pepper pose detection and grasping for automated crop harvesting. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2428–2434.

29. Lehnert, C.; English, A.; McCool, C.; Tow, A.W.; Perez, T. Autonomous Sweet Pepper Harvesting for Protected Cropping Systems. IEEE Robot. Autom. Lett. 2017, 2, 872–879.

30. Yaguchi, H.; Nagahama, K.; Hasegawa, T.; Inaba, M. Development of an autonomous tomato harvesting robot with rotational plucking gripper. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 652–657.

31. Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Li, J. Guava Detection and Pose Estimation Using a Low-Cost RGBD Sensor in the Field. Sensors 2019, 19, 428.

32. Tao, Y.; Zhou, J. Automatic apple recognition based on the fusion of color and 3D feature for robotic fruit picking. Comput. Electron. Agric. 2017, 142, 388–396.

33. Kang, H.; Zhou, H.; Chen, C. Visual Perception and Modeling for Autonomous Apple Harvesting. IEEE Access 2020, 8, 62151–62163.

34. Häni, N.; Roy, P.; Isler, V. MinneApple: A benchmark dataset for apple detection and segmentation. IEEE Robot. Autom. Lett. 2020, 5, 852–858.

35. Keskar, N.S.; Socher, R. Improving generalization performance by switching from Adam to SGD. arXiv 2017, arXiv:1712.07628.

36. Sahin, C.; Garcia-Hernando, G.; Sock, J.; Kim, T.K. A review on object pose recovery: From 3D bounding box detectors to full 6D pose estimators. Image Vis. Comput. 2020, 96, 103898.

37. Magistri, F.; Chebrolu, N.; Behley, J.; Stachniss, C. Towards In-Field Phenotyping Exploiting Differentiable Rendering with Self-Consistency Loss. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi'an, China, 30 May–5 June 2021; pp. 13960–13966.

38. Bellocchio, E.; Costante, G.; Cascianelli, S.; Fravolini, M.L.; Valigi, P. Combining domain adaptation and spatial consistency for unseen fruits counting: A quasi-unsupervised approach. IEEE Robot. Autom. Lett. 2020, 5, 1079–1086.

39. Ge, Y.; Xiong, Y.; From, P.J. Symmetry-based 3D shape completion for fruit localisation for harvesting robots. Biosyst. Eng. 2020, 197, 188–202.

| Network Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) | Reference |
|---|---|---|---|---|---|
| Improved YOLOv3 | 97 | 90 | 87.71 | — | [4] |
| LedNet | 85.3 | 82.1 | 82.6 | 83.4 | [5] |
| Improved R-FCN | 95.1 | 85.7 | — | 90.2 | [11] |
| Mask RCNN | 85.7 | 90.6 | — | 88.1 | [8] |
| DaSNet-v2 | 87.3 | 86.8 | 88 | 87.3 | [12] |
| Faster RCNN | — | — | 82.4 | 86 | [13] |
| Improved YOLOv5s | 83.83 | 91.48 | 86.75 | 87.49 | [14] |
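The F1-Score column in the table above is the harmonic mean of precision and recall, $F_1 = 2PR/(P + R)$. As a worked example, the short Python sketch below recomputes it for three rows. The dictionary copies values from the table, and `f1_score` is a hypothetical helper name.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from three rows of the table above
ROWS = {
    "Improved R-FCN": (95.1, 85.7, 90.2),
    "Mask RCNN": (85.7, 90.6, 88.1),
    "Improved YOLOv5s": (83.83, 91.48, 87.49),
}

for name, (p, r, reported) in ROWS.items():
    print(f"{name}: computed F1 = {f1_score(p, r):.2f}%, reported {reported}%")
```

Because the harmonic mean is pulled toward the smaller of the two values, a detector with high precision but low recall scores a lower F1 than one with balanced precision and recall.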

| Network Model | Backbone | Non-Occlusion AP (Bbox) | Non-Occlusion AP (Mask) | Leaf-Occlusion AP (Bbox) | Leaf-Occlusion AP (Mask) | Branch-Occlusion AP (Bbox) | Branch-Occlusion AP (Mask) | Fruit-Occlusion AP (Bbox) | Fruit-Occlusion AP (Mask) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| Mask RCNN | ResNet-50 | 38.14 | 40.12 | 29.56 | 28.14 | 9.1 | 6.14 | 13.2 | 8.85 | 17.3 |
| Mask RCNN | ResNet-101 | 38.39 | 39.62 | 25.13 | 25.96 | 7.61 | 5.23 | 9.96 | 7.88 | 14.5 |
| MS RCNN | ResNet-50 | 38 | 38.12 | 27.04 | 25.27 | 4.88 | 6.36 | 9.03 | 8.29 | 17.1 |
| MS RCNN | ResNet-101 | 39.09 | 40.66 | 27.98 | 24.97 | 7.52 | 7.05 | 10.99 | 10.36 | 13.6 |
| YOLACT | ResNet-50 | 43.53 | 44.27 | 26.29 | 26.08 | 16.67 | 13.35 | 15.33 | 14.61 | 35.2 |
| YOLACT | ResNet-101 | 39.85 | 41.48 | 28.07 | 27.56 | 11.61 | 11.31 | 21.22 | 21.88 | 34.3 |
| YOLACT++ | ResNet-50 | 42.62 | 44.03 | 32.06 | 35.49 | 18.17 | 13.8 | 21.48 | 20.28 | 31.1 |
| YOLACT++ | ResNet-101 | 43.98 | 45.06 | 34.23 | 36.17 | 15.46 | 13.49 | 11.52 | 13.33 | 29.6 |

| Target | Statistic (mm) | Group 1, Bbox Method | Group 1, Our Method | Group 2, Bbox Method | Group 2, Our Method | Group 3, Bbox Method | Group 3, Our Method | Total, Bbox Method | Total, Our Method |
|---|---|---|---|---|---|---|---|---|---|
| Center | Max. error | 49.65 | 47.24 | 49.01 | 49.07 | 48.46 | 42.80 | 49.65 | 49.07 |
| Center | Min. error | 2.24 | 0.05 | 4.79 | 0.19 | 0.67 | 1.65 | 0.67 | 0.05 |
| Center | Median error | 17.16 | 5.69 | 24.43 | 8.25 | 21.44 | 14.94 | 19.77 | 8.03 |
| Center | Mean error | 18.36 | 9.15 | 24.43 | 13.73 | 22.70 | 17.74 | 21.51 | 12.36 |
| Center | Std. of error | 11.09 | 9.99 | 13.55 | 13.10 | 13.15 | 10.38 | 12.74 | 11.59 |
| Radius | Max. error | 14.47 | 1.21 | 27.11 | 6.72 | 14.84 | 5.44 | 27.11 | 6.72 |
| Radius | Min. error | 0.01 | 0.54 | 0.08 | 1.18 | 0.14 | 0.26 | 0.01 | 0.26 |
| Radius | Median error | 4.01 | 0.98 | 3.40 | 1.37 | 4.26 | 2.22 | 3.97 | 1.18 |
| Radius | Mean error | 4.78 | 0.96 | 4.77 | 1.48 | 4.61 | 2.41 | 4.74 | 1.43 |
| Radius | Std. of error | 3.20 | 0.14 | 4.89 | 0.69 | 3.83 | 1.04 | 3.90 | 0.84 |
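The error statistics in the table above could be computed from per-fruit errors roughly as follows. This Python sketch rests on three assumptions that the text does not state:

- The center error is the Euclidean distance between the estimated and reference centers (for example, LiDAR-measured ones).
- The radius error is the absolute difference from a reference radius.
- "Std." is the population standard deviation.

The function names are hypothetical.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def center_errors(estimated: Sequence[Vec3], reference: Sequence[Vec3]) -> List[float]:
    """Euclidean distance between each estimated and reference fruit center."""
    return [math.dist(e, g) for e, g in zip(estimated, reference)]


def radius_errors(estimated: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Absolute difference between estimated and reference radii."""
    return [abs(e - g) for e, g in zip(estimated, reference)]


def summarize(errors: Sequence[float]) -> Dict[str, float]:
    """Max / min / median / mean / standard deviation, matching the table rows."""
    return {
        "max": max(errors),
        "min": min(errors),
        "median": statistics.median(errors),
        "mean": statistics.fmean(errors),
        # Population std is an assumption; the text does not name the estimator.
        "std": statistics.pstdev(errors),
    }
```

Reporting the median alongside the mean is useful here because a few large failures (the max. errors near 50 mm) inflate the mean more than the median.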

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, T.; Feng, Q.; Qiu, Q.; Xie, F.; Zhao, C. Occluded Apple Fruit Detection and Localization with a Frustum-Based Point-Cloud-Processing Approach for Robotic Harvesting. Remote Sens. 2022, 14, 482. https://doi.org/10.3390/rs14030482
