Validating the Crop Identification Capability of the Spectral Variance at Key Stages (SVKS) Computed via an Object Self-Reference Combined Algorithm

Abstract

1. Introduction

2. Materials and Methods

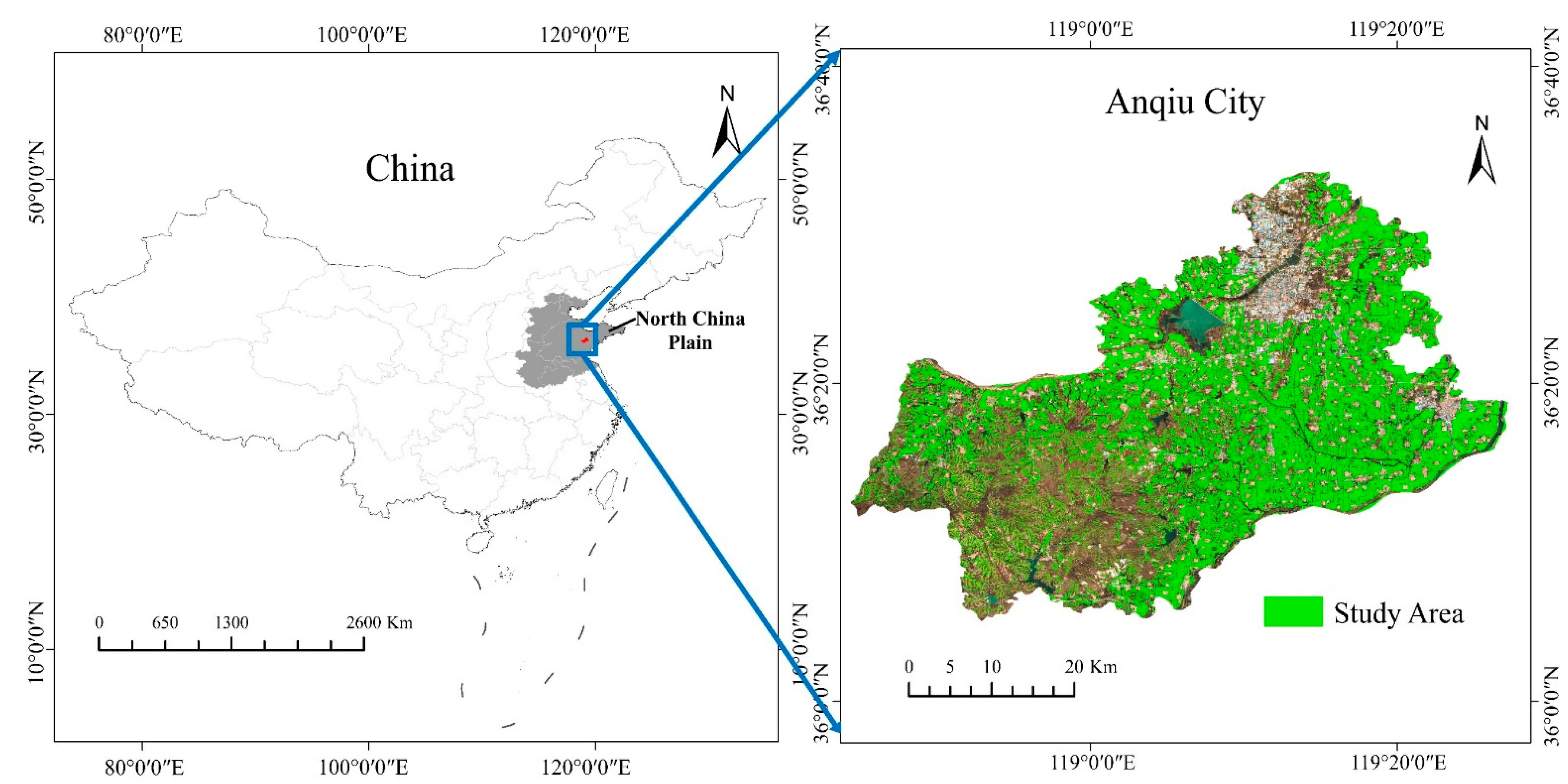

2.1. Study Area

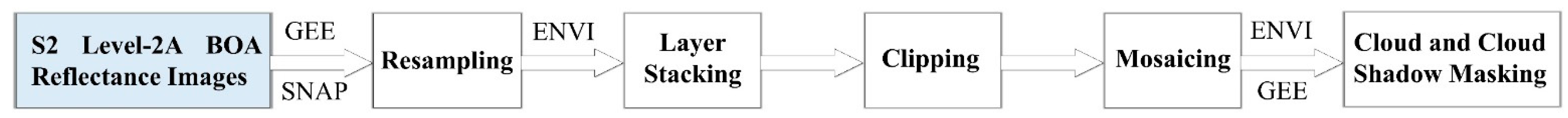

2.2. Sentinel-2 Data and Preprocessing

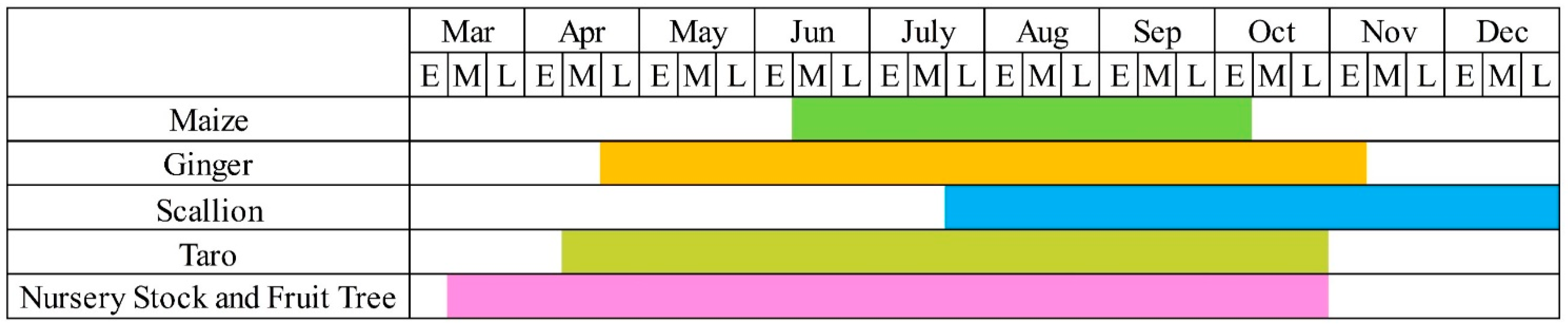

2.3. Field Observation

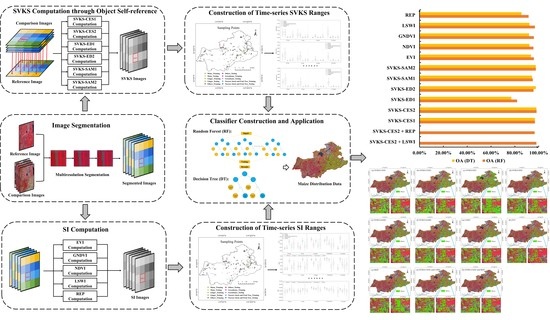

2.4. Experimental Design

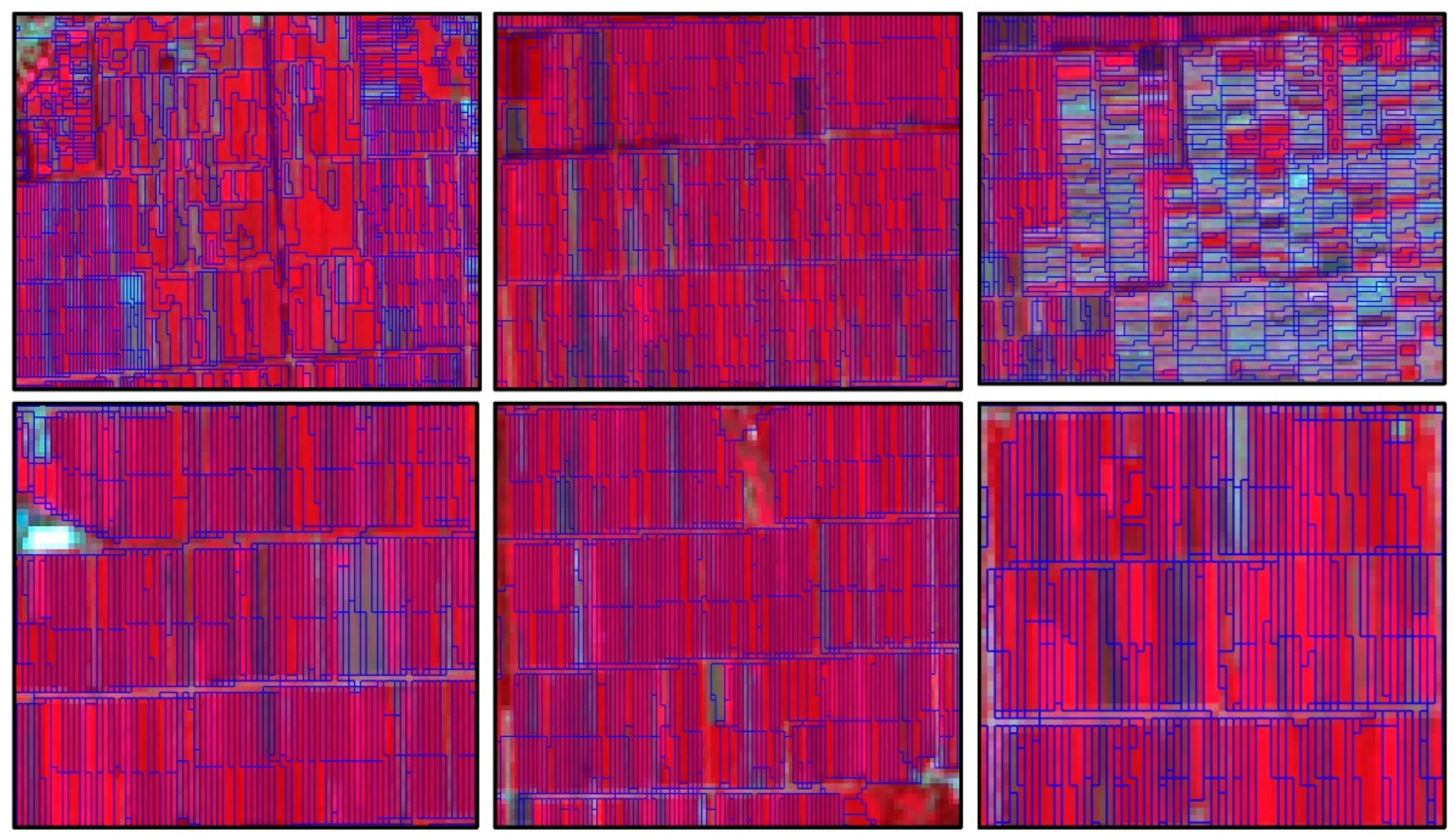

2.4.1. Image Segmentation

2.4.2. Computation of the Identification Indexes

2.4.3. Random Forest and Decision Tree-Based Classification

2.4.4. Accuracy Computation

3. Results

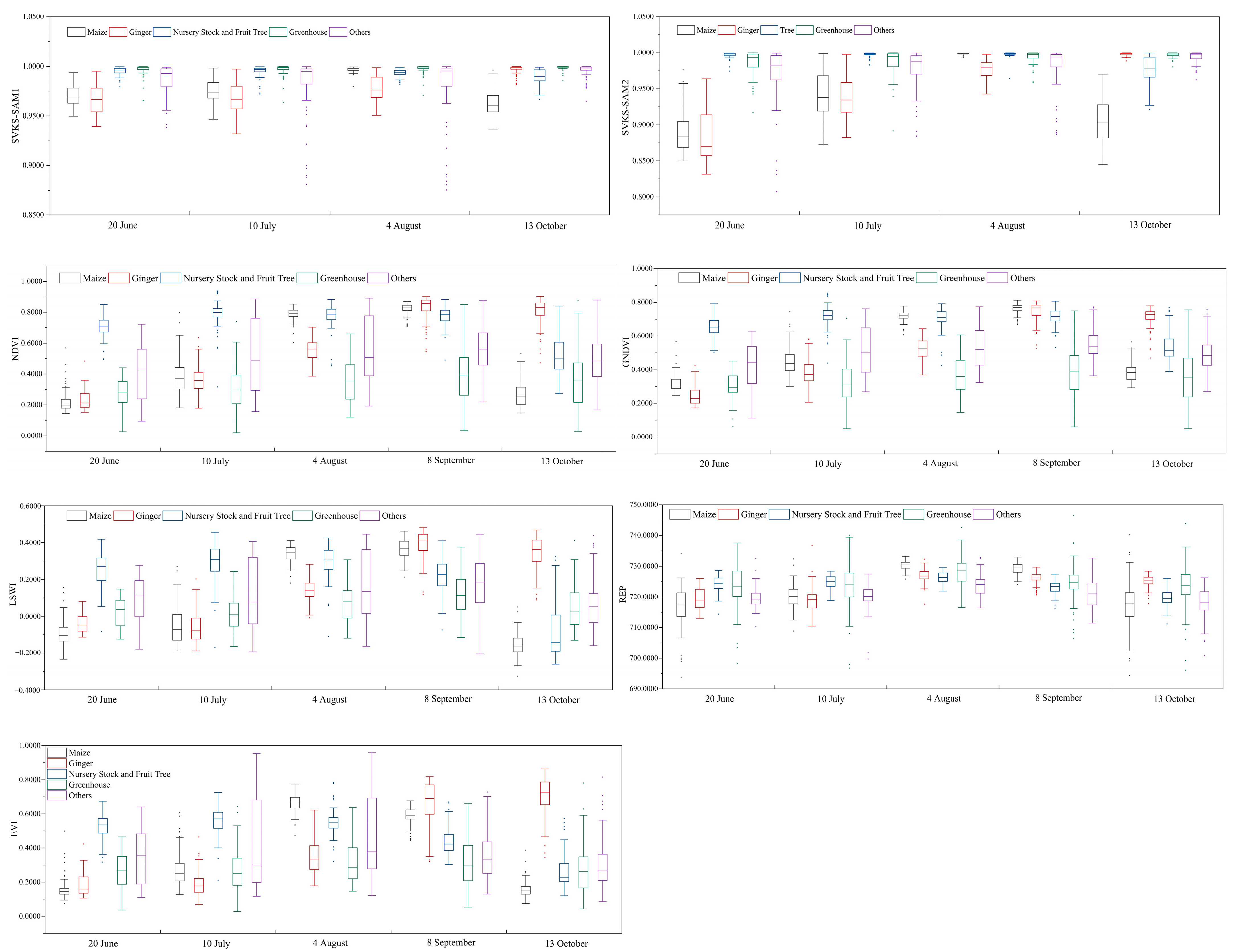

3.1. Feature Analysis of the Identification Index Time-Series Ranges

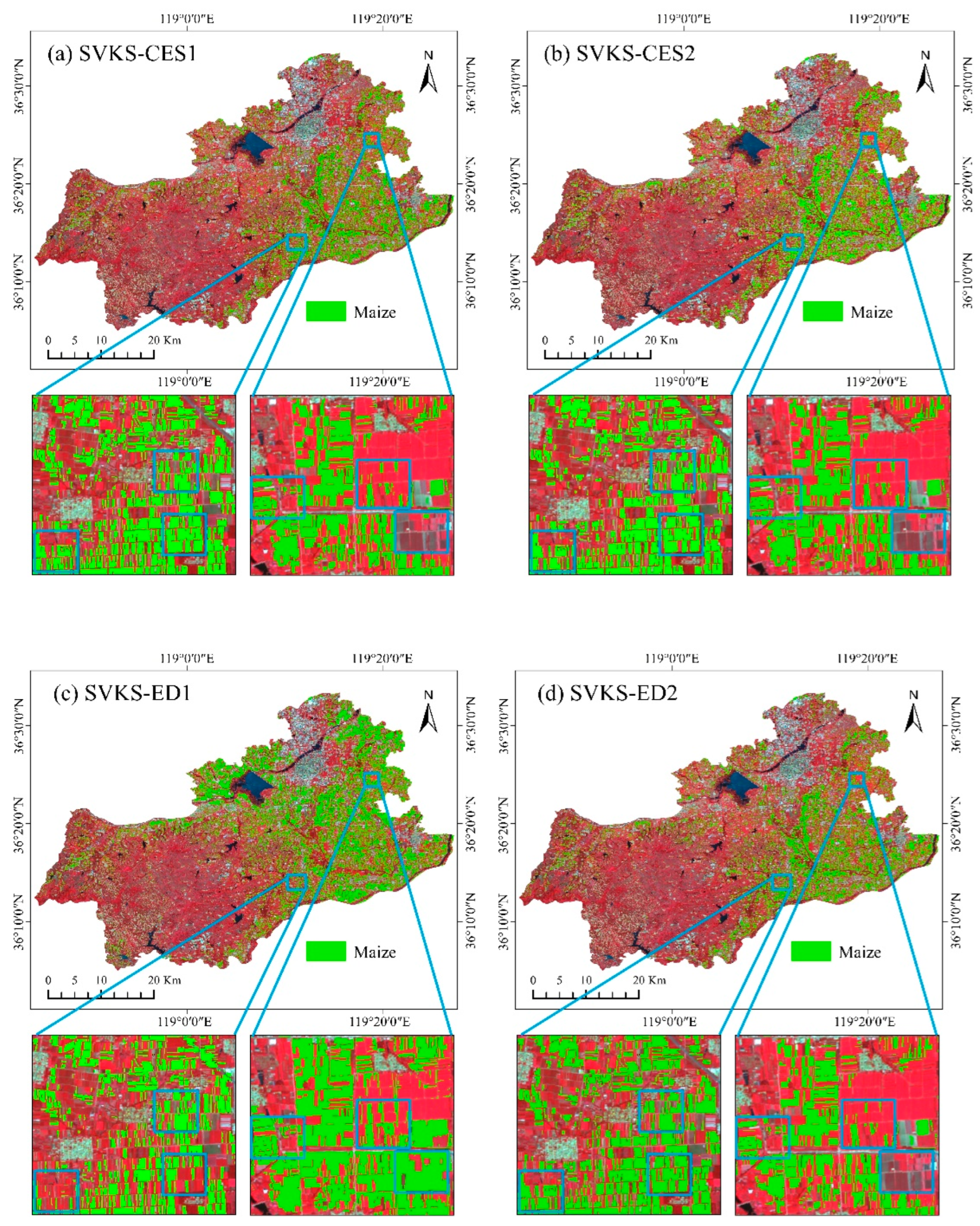

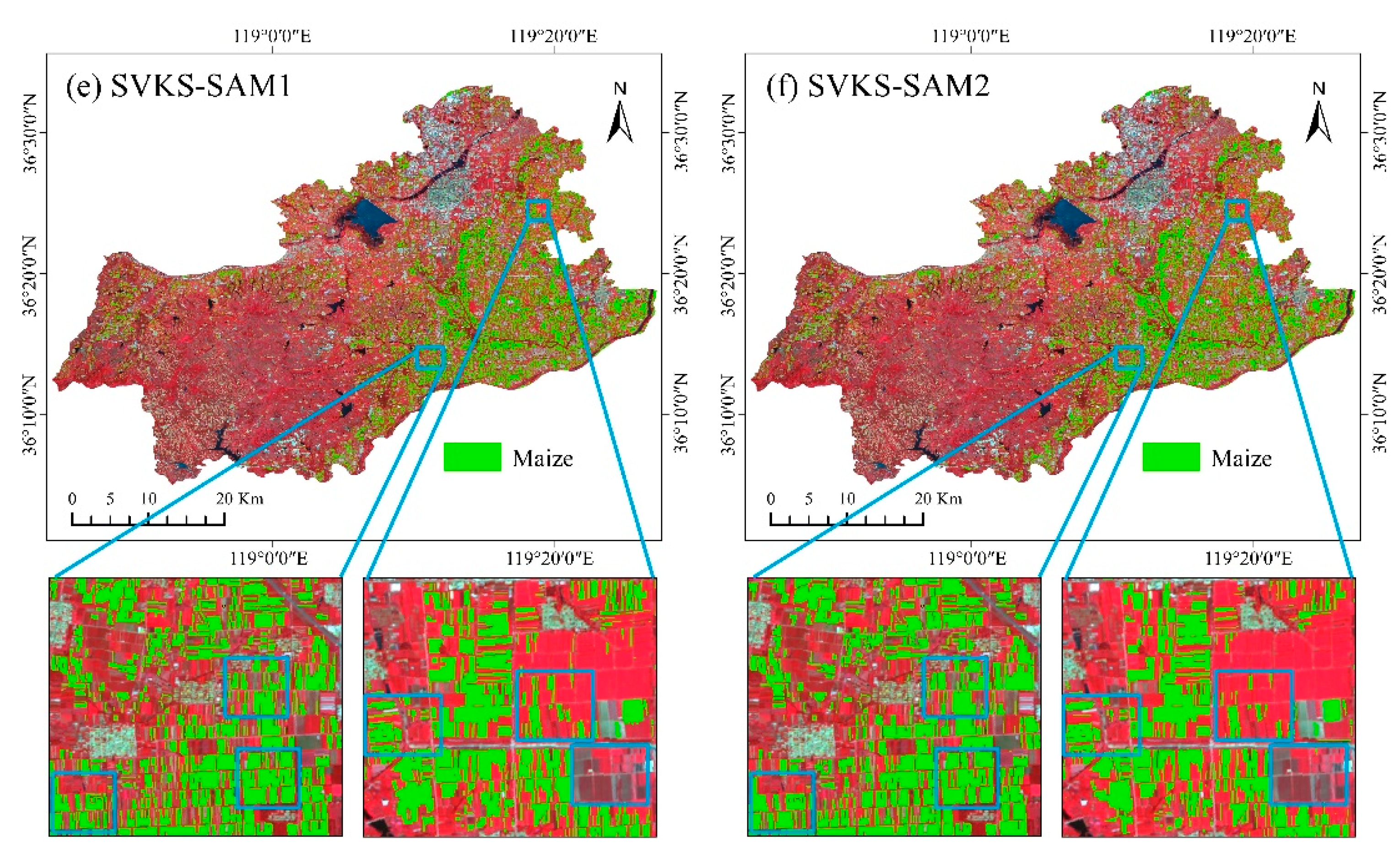

3.2. Maize Identification Based on a Single SVKS Type

3.3. Maize Identification Based on a Single SI Type

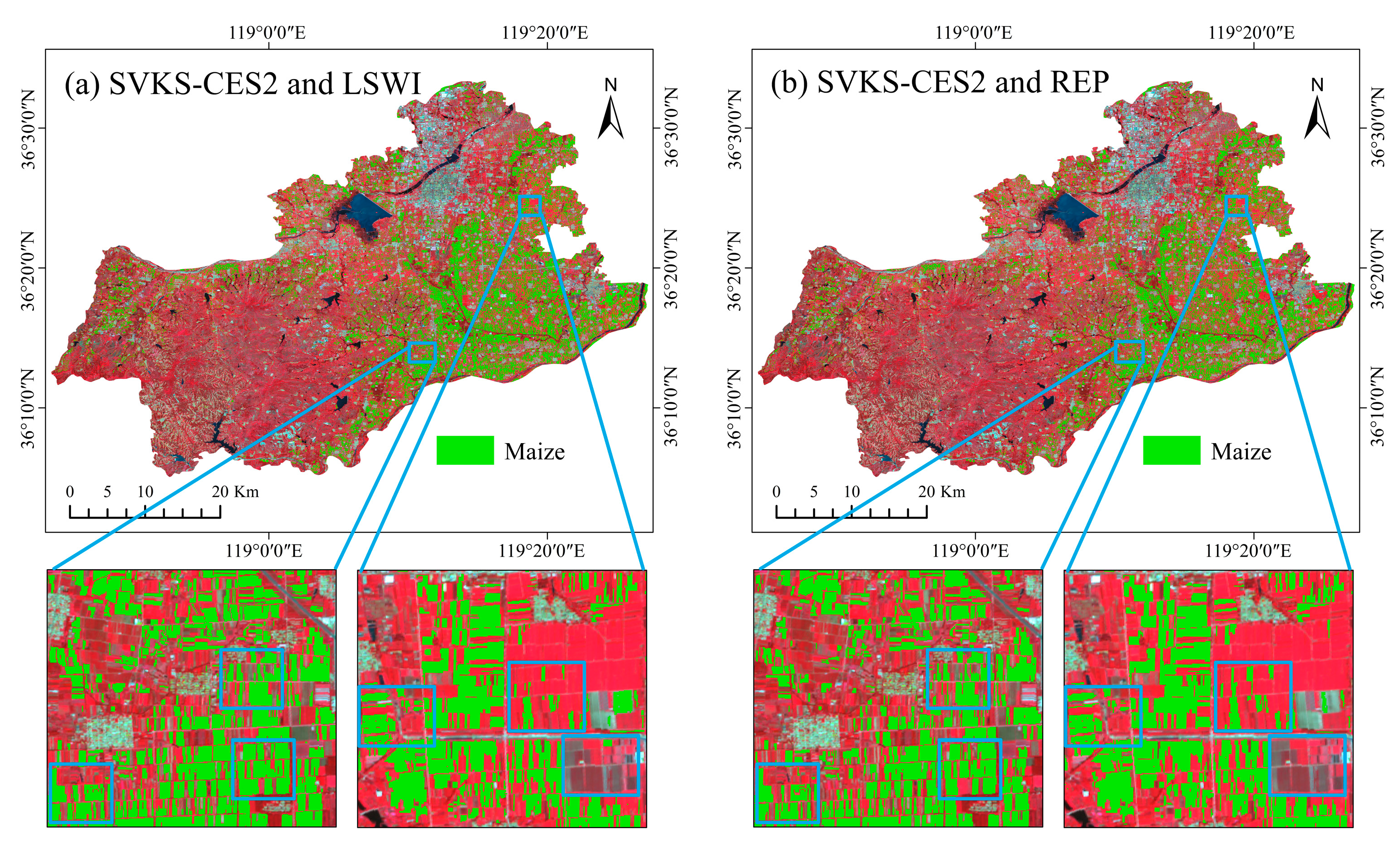

3.4. Crop Identification Capability Analysis and Assessment

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| First Fixed Parameter | Second Fixed Parameter | Variable Parameter |
|---|---|---|
| shape = 0.1 | compactness = 0.5 | scale parameter = 10 |
| | | scale parameter = 30 |
| | | scale parameter = 50 |
| | | scale parameter = 70 |
| | | scale parameter = 90 |
| scale parameter = 50 | shape = 0.1 | compactness = 0.1 |
| | | compactness = 0.3 |
| | | compactness = 0.5 |
| | | compactness = 0.7 |
| | | compactness = 0.9 |
| scale parameter = 50 | compactness = 0.5 | shape = 0.1 |
| | | shape = 0.3 |
| | | shape = 0.5 |
| | | shape = 0.7 |
| | | shape = 0.9 |
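
The table can be read as a one-at-a-time design. Each group varies a single segmentation parameter and holds the other two at scale parameter = 50, shape = 0.1 and compactness = 0.5, the values fixed in the first group. The short Python sketch below assumes that reading and enumerates the runs. The class and function names are hypothetical, and no segmentation software is called.

```python
from typing import NamedTuple


class SegParams(NamedTuple):
    """One segmentation setting (hypothetical container)."""
    scale: int
    shape: float
    compactness: float


# Assumption: the baseline is the set of values held fixed in the table.
BASE = SegParams(scale=50, shape=0.1, compactness=0.5)

# Values swept for each parameter, copied from the table.
SWEEPS = {
    "scale": [10, 30, 50, 70, 90],
    "compactness": [0.1, 0.3, 0.5, 0.7, 0.9],
    "shape": [0.1, 0.3, 0.5, 0.7, 0.9],
}


def one_at_a_time(base, sweeps):
    """Yield (varied parameter, settings), changing one parameter from the baseline at a time."""
    for name, values in sweeps.items():
        for value in values:
            yield name, base._replace(**{name: value})


runs = list(one_at_a_time(BASE, SWEEPS))
unique = sorted(set(params for _, params in runs))
print(len(runs), len(unique))  # 15 13
```

Under this reading, the baseline combination (scale parameter = 50, shape = 0.1, compactness = 0.5) appears once in each group. The 15 rows therefore describe 13 distinct segmentations.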

| SVKS Type | Bands in the Computation Process |
|---|---|
| SVKS-CES1 | B2, B3, B4 |
| SVKS-CES2 | B2, B3, B4, B5, B6, B7, B8 |
| SVKS-ED1 | B2, B3, B4 |
| SVKS-ED2 | B2, B3, B4, B5, B6, B7, B8 |
| SVKS-SAM1 | B2, B3, B4 |
| SVKS-SAM2 | B2, B3, B4, B5, B6, B7, B8 |
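
The table lists the Sentinel-2 bands that enter each SVKS type but does not define the measures. The minimal Python sketch below computes two of them on the type 1 and type 2 band subsets. It rests on three assumptions: ED stands for the Euclidean distance, SAM stands for the spectral angle mapper of Kruse et al. (1993), and the object self-reference compares an object's mean spectrum at one key stage with the same object's spectrum at another. CES is left out because its definition is not given here. The reflectance values and helper names are hypothetical.

```python
import math

# Band subsets from the table: type 1 uses the visible bands,
# type 2 adds the red-edge bands (B5 to B7) and near-infrared B8.
BANDS_TYPE1 = ["B2", "B3", "B4"]
BANDS_TYPE2 = ["B2", "B3", "B4", "B5", "B6", "B7", "B8"]


def select(spectrum, bands):
    """Return the reflectances of the listed bands, in order."""
    return [spectrum[b] for b in bands]


def euclidean_distance(x, y):
    """Straight-line distance between two spectra (assumed meaning of ED)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def spectral_angle(x, y):
    """Angle in radians between two spectra (assumed meaning of SAM).

    Assumes neither spectrum is all zeros.
    """
    dot = sum(a * b for a, b in zip(x, y))
    norms = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    # Clamp against rounding error before taking the arccosine.
    return math.acos(max(-1.0, min(1.0, dot / norms)))


# Hypothetical mean reflectances of one object at two key stages
# (assumed self-reference: the object is compared with itself).
stage_a = {"B2": 0.06, "B3": 0.09, "B4": 0.08, "B5": 0.13,
           "B6": 0.22, "B7": 0.26, "B8": 0.28}
stage_b = {"B2": 0.04, "B3": 0.08, "B4": 0.04, "B5": 0.12,
           "B6": 0.33, "B7": 0.41, "B8": 0.45}

for label, bands in [("type 1", BANDS_TYPE1), ("type 2", BANDS_TYPE2)]:
    x, y = select(stage_a, bands), select(stage_b, bands)
    print(label, round(euclidean_distance(x, y), 4), round(spectral_angle(x, y), 4))
```

The two measures respond to brightness differently. The spectral angle does not change when a spectrum is scaled by a constant factor, whereas the Euclidean distance grows with overall brightness differences between the two stages.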

| Classifier Type | Parameter Name | Value |
|---|---|---|
| Random Forest | depth | 0 |
| | min sample count | 0 |
| | max categories | 16 |
| | active variables | 0 |
| | max tree number | 50 |
| | forest accuracy | 0.01 |
| Decision Tree | depth | 0 |
| | min sample count | 0 |
| | max categories | 16 |
| | cross validation folds | 3 |

| Classifier | Identification Index | User Class | Test Points: Maize | Test Points: Non-maize | Total | UA |
|---|---|---|---|---|---|---|
| RF | SVKS-CES1 | Maize | 99 | 4 | 103 | 96.12% |
| | | Non-maize | 11 | 356 | 367 | 97.00% |
| | | Total | 110 | 360 | | |
| | | PA | 90.00% | 98.89% | OA: 96.81% | |
| | SVKS-CES2 | Maize | 102 | 0 | 102 | 100.00% |
| | | Non-maize | 8 | 360 | 368 | 97.83% |
| | | Total | 110 | 360 | | |
| | | PA | 92.73% | 100.00% | OA: 98.30% | |
| | SVKS-ED1 | Maize | 97 | 72 | 169 | 57.40% |
| | | Non-maize | 13 | 288 | 301 | 95.68% |
| | | Total | 110 | 360 | | |
| | | PA | 88.18% | 80.00% | OA: 81.91% | |
| | SVKS-ED2 | Maize | 102 | 12 | 114 | 89.47% |
| | | Non-maize | 8 | 348 | 356 | 97.75% |
| | | Total | 110 | 360 | | |
| | | PA | 92.73% | 96.67% | OA: 95.74% | |
| | SVKS-SAM1 | Maize | 95 | 8 | 103 | 92.23% |
| | | Non-maize | 15 | 352 | 367 | 95.91% |
| | | Total | 110 | 360 | | |
| | | PA | 86.36% | 97.78% | OA: 95.11% | |
| | SVKS-SAM2 | Maize | 101 | 2 | 103 | 98.06% |
| | | Non-maize | 9 | 358 | 367 | 97.55% |
| | | Total | 110 | 360 | | |
| | | PA | 91.82% | 99.44% | OA: 97.66% | |
| DT | SVKS-CES1 | Maize | 99 | 4 | 103 | 96.12% |
| | | Non-maize | 11 | 356 | 367 | 97.00% |
| | | Total | 110 | 360 | | |
| | | PA | 90.00% | 98.89% | OA: 96.81% | |
| | SVKS-CES2 | Maize | 101 | 0 | 101 | 100.00% |
| | | Non-maize | 9 | 360 | 369 | 97.56% |
| | | Total | 110 | 360 | | |
| | | PA | 91.82% | 100.00% | OA: 98.09% | |
| | SVKS-ED1 | Maize | 89 | 87 | 176 | 50.57% |
| | | Non-maize | 21 | 273 | 294 | 92.86% |
| | | Total | 110 | 360 | | |
| | | PA | 80.91% | 75.83% | OA: 77.02% | |
| | SVKS-ED2 | Maize | 101 | 2 | 103 | 98.06% |
| | | Non-maize | 9 | 358 | 367 | 97.55% |
| | | Total | 110 | 360 | | |
| | | PA | 91.82% | 99.44% | OA: 97.66% | |
| | SVKS-SAM1 | Maize | 93 | 9 | 102 | 91.18% |
| | | Non-maize | 17 | 351 | 368 | 95.38% |
| | | Total | 110 | 360 | | |
| | | PA | 84.55% | 97.50% | OA: 94.47% | |
| | SVKS-SAM2 | Maize | 101 | 2 | 103 | 98.06% |
| | | Non-maize | 9 | 358 | 367 | 97.55% |
| | | Total | 110 | 360 | | |
| | | PA | 91.82% | 99.44% | OA: 97.66% | |
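
The row and column totals in the table show that rows hold the classified (user) classes and columns hold the reference classes of the test points. UA therefore divides each diagonal count by its row total, PA divides it by its column total, and OA divides the diagonal sum by all 470 test points. The Python sketch below reproduces the RF/SVKS-CES1 entries from the counts. The function name is hypothetical.

```python
def accuracy_metrics(matrix, labels):
    """Return (UA, PA, OA) for a square confusion matrix.

    Rows are classified (user) classes and columns are reference classes,
    matching the layout of the table.
    """
    n = len(labels)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    # User's accuracy: correct / row total for each classified class.
    ua = {labels[i]: matrix[i][i] / sum(matrix[i]) for i in range(n)}
    # Producer's accuracy: correct / column total for each reference class.
    pa = {labels[j]: matrix[j][j] / sum(matrix[i][j] for i in range(n)) for j in range(n)}
    return ua, pa, correct / total


# RF with SVKS-CES1, counts copied from the table.
rf_ces1 = [[99, 4], [11, 356]]
ua, pa, oa = accuracy_metrics(rf_ces1, ["maize", "non-maize"])
print({k: round(100 * v, 2) for k, v in ua.items()})  # {'maize': 96.12, 'non-maize': 97.0}
print({k: round(100 * v, 2) for k, v in pa.items()})  # {'maize': 90.0, 'non-maize': 98.89}
print(round(100 * oa, 2))  # 96.81
```

The same function applies unchanged to every block in the accuracy tables. For example, the DT/SVKS-ED1 counts `[[89, 87], [21, 273]]` give an OA of 77.02%.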

| Classifier | Identification Index | User Class | Test Points: Maize | Test Points: Non-maize | Total | UA |
|---|---|---|---|---|---|---|
| RF | EVI | Maize | 92 | 1 | 93 | 98.92% |
| | | Non-maize | 18 | 359 | 377 | 95.23% |
| | | Total | 110 | 360 | | |
| | | PA | 83.64% | 99.72% | OA: 95.96% | |
| | NDVI | Maize | 92 | 3 | 95 | 96.84% |
| | | Non-maize | 18 | 357 | 375 | 95.20% |
| | | Total | 110 | 360 | | |
| | | PA | 83.64% | 99.17% | OA: 95.53% | |
| | GNDVI | Maize | 94 | 3 | 97 | 96.91% |
| | | Non-maize | 16 | 357 | 373 | 95.71% |
| | | Total | 110 | 360 | | |
| | | PA | 85.45% | 99.17% | OA: 95.96% | |
| | LSWI | Maize | 97 | 2 | 99 | 97.98% |
| | | Non-maize | 13 | 358 | 371 | 96.50% |
| | | Total | 110 | 360 | | |
| | | PA | 88.18% | 99.44% | OA: 96.81% | |
| | REP | Maize | 86 | 16 | 102 | 84.31% |
| | | Non-maize | 24 | 344 | 368 | 93.48% |
| | | Total | 110 | 360 | | |
| | | PA | 78.18% | 95.56% | OA: 91.49% | |
| DT | EVI | Maize | 81 | 1 | 82 | 98.78% |
| | | Non-maize | 29 | 359 | 388 | 92.53% |
| | | Total | 110 | 360 | | |
| | | PA | 73.64% | 99.72% | OA: 93.62% | |
| | NDVI | Maize | 77 | 3 | 80 | 96.25% |
| | | Non-maize | 33 | 357 | 390 | 91.54% |
| | | Total | 110 | 360 | | |
| | | PA | 70.00% | 99.17% | OA: 92.34% | |
| | GNDVI | Maize | 77 | 5 | 82 | 93.90% |
| | | Non-maize | 33 | 355 | 388 | 91.49% |
| | | Total | 110 | 360 | | |
| | | PA | 70.00% | 98.61% | OA: 91.91% | |
| | LSWI | Maize | 94 | 20 | 114 | 82.46% |
| | | Non-maize | 16 | 340 | 356 | 95.51% |
| | | Total | 110 | 360 | | |
| | | PA | 85.45% | 94.44% | OA: 92.34% | |
| | REP | Maize | 87 | 45 | 132 | 65.91% |
| | | Non-maize | 23 | 315 | 338 | 93.20% |
| | | Total | 110 | 360 | | |
| | | PA | 79.09% | 87.50% | OA: 85.53% | |
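
This section does not give the formulas used for the five single indexes (SIs). The Python sketch below therefore uses common textbook definitions and assumed Sentinel-2 band assignments: B2 blue, B3 green, B4 red, B5 to B7 red edge, B8 near-infrared and B11 shortwave infrared. It illustrates what each SI measures and is not the paper's implementation. All function names are hypothetical.

```python
def ndvi(b4, b8):
    """Normalized difference vegetation index from red (B4) and NIR (B8)."""
    return (b8 - b4) / (b8 + b4)


def gndvi(b3, b8):
    """Green NDVI: green (B3) replaces red."""
    return (b8 - b3) / (b8 + b3)


def evi(b2, b4, b8):
    """Enhanced vegetation index with the widely used coefficients
    G = 2.5, C1 = 6, C2 = 7.5, L = 1 (assumed here)."""
    return 2.5 * (b8 - b4) / (b8 + 6.0 * b4 - 7.5 * b2 + 1.0)


def lswi(b8, b11):
    """Land surface water index; assumes the 1610 nm SWIR band B11."""
    return (b8 - b11) / (b8 + b11)


def rep(b4, b5, b6, b7):
    """Red-edge position in nm by linear interpolation, in the
    Sentinel-2 form 705 + 35 * ((B4 + B7) / 2 - B5) / (B6 - B5) (assumed)."""
    return 705.0 + 35.0 * ((b4 + b7) / 2.0 - b5) / (b6 - b5)


# Hypothetical reflectances of one maize object at a single date.
r = {"B2": 0.04, "B3": 0.08, "B4": 0.04, "B5": 0.12,
     "B6": 0.33, "B7": 0.41, "B8": 0.45, "B11": 0.20}
print(round(ndvi(r["B4"], r["B8"]), 3), round(gndvi(r["B3"], r["B8"]), 3))
print(round(evi(r["B2"], r["B4"], r["B8"]), 3), round(lswi(r["B8"], r["B11"]), 3))
print(round(rep(r["B4"], r["B5"], r["B6"], r["B7"]), 1))
```

NDVI, GNDVI, EVI and LSWI are dimensionless ratios. REP is a wavelength in nanometres, so its time series has a different range and scale from the other four indexes.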

| Combined Utilization Type | User Class | Test Points: Maize | Test Points: Non-maize | Total | UA |
|---|---|---|---|---|---|
| SVKS-CES2, LSWI | Maize | 101 | 0 | 101 | 100.00% |
| | Non-maize | 9 | 360 | 369 | 97.56% |
| | Total | 110 | 360 | | |
| | PA | 91.82% | 100.00% | OA: 98.09% | |
| SVKS-CES2, REP | Maize | 94 | 0 | 94 | 100.00% |
| | Non-maize | 16 | 360 | 376 | 95.74% |
| | Total | 110 | 360 | | |
| | PA | 85.45% | 100.00% | OA: 96.60% | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, H.; Meng, J.; Shi, T.; Zhang, X.; Wang, Y.; Luo, X.; Lin, Z.; You, X. Validating the Crop Identification Capability of the Spectral Variance at Key Stages (SVKS) Computed via an Object Self-Reference Combined Algorithm. Remote Sens. 2022, 14, 6390. https://doi.org/10.3390/rs14246390
