Unsupervised Adversarial Domain Adaptation for Agricultural Land Extraction of Remote Sensing Images

Abstract

1. Introduction

- We applied an unsupervised adversarial domain adaptation method to agricultural land extraction, which can reduce the cost of labeling agricultural land in remote sensing images;

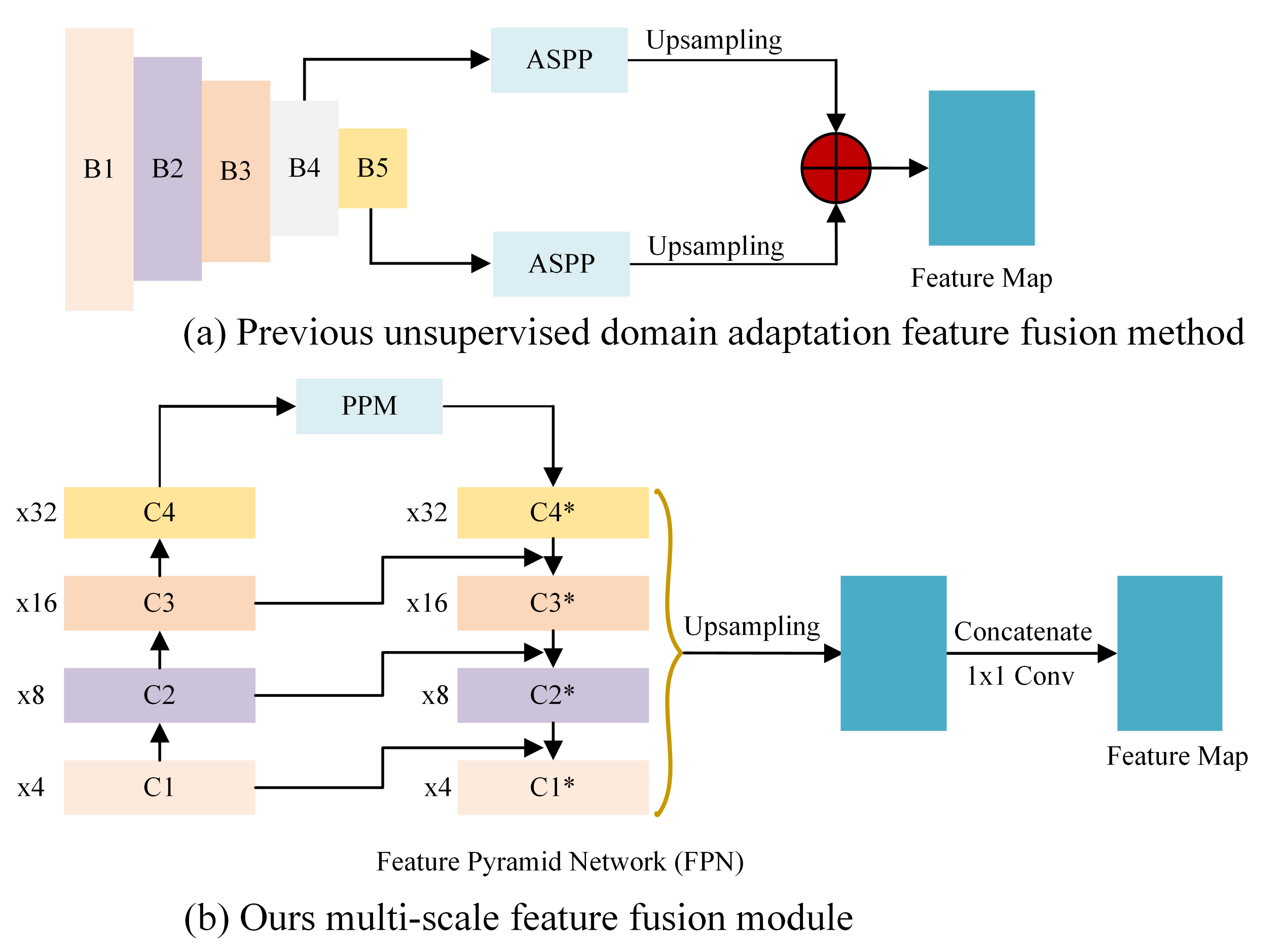

- We designed a multi-scale feature fusion (MSFF) module that adapts to agricultural land datasets with different spatial resolutions and learns more robust domain-invariant features;

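- Our approach achieved higher IoU and F1 than the compared UDA methods (AdaptSegNet [35], ADVENT [36], BDL [37], and IntraDA [38]) in all three adaptation settings for unsupervised agricultural land segmentation.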

2. Related Work

2.1. Unsupervised Domain Adaptation Semantic Segmentation

2.2. Multi-Scale Feature Fusion

3. Proposed Method

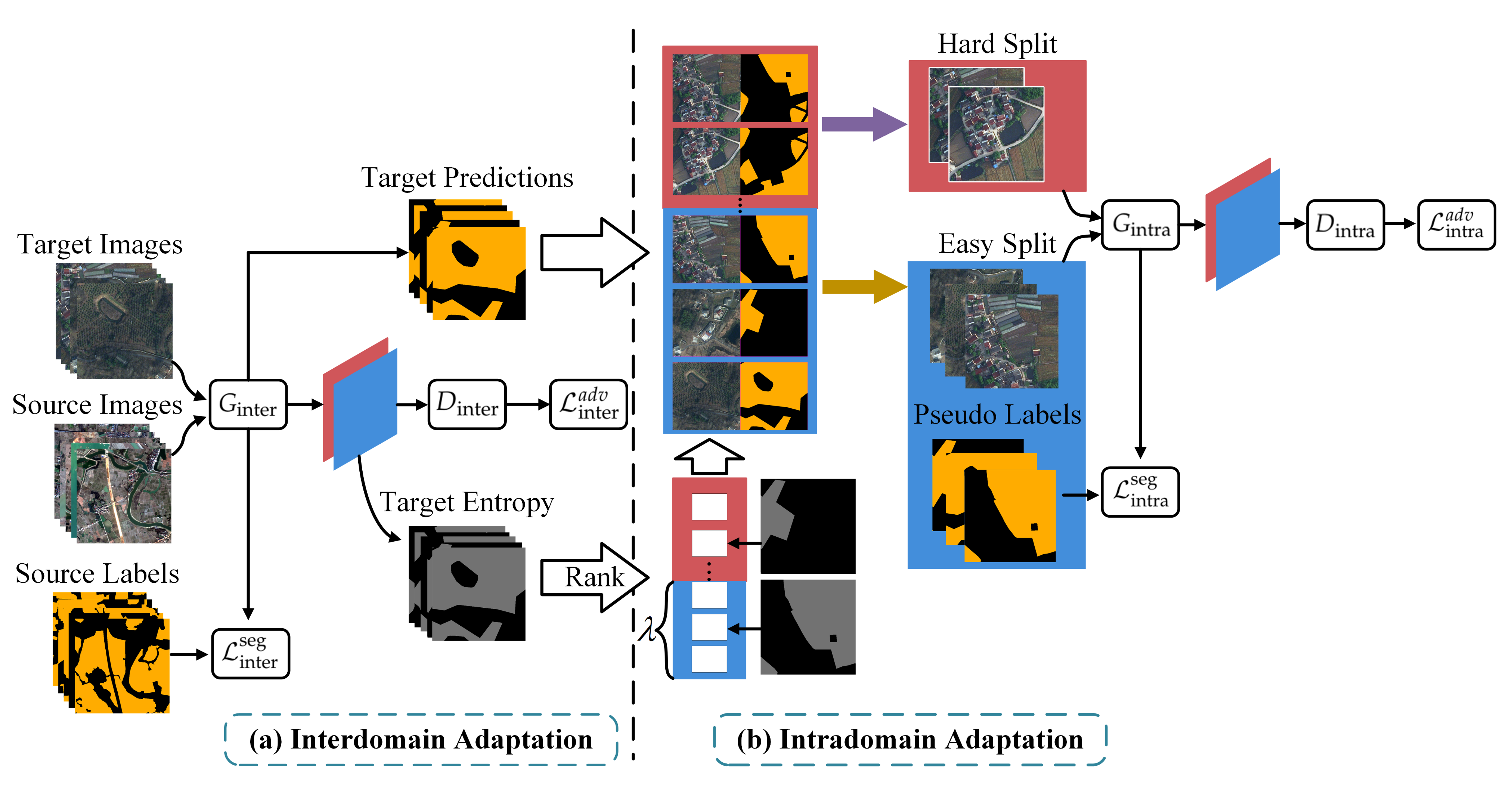

3.1. Overview

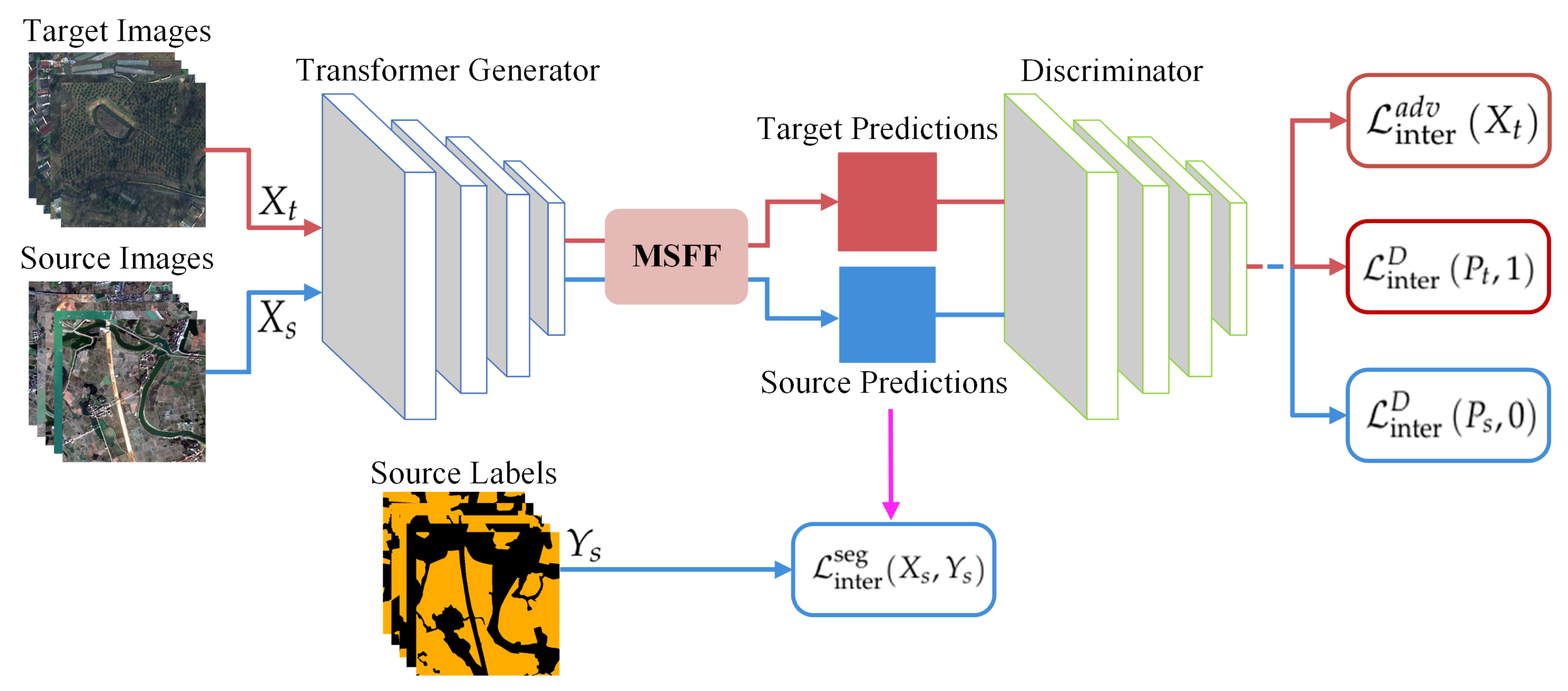

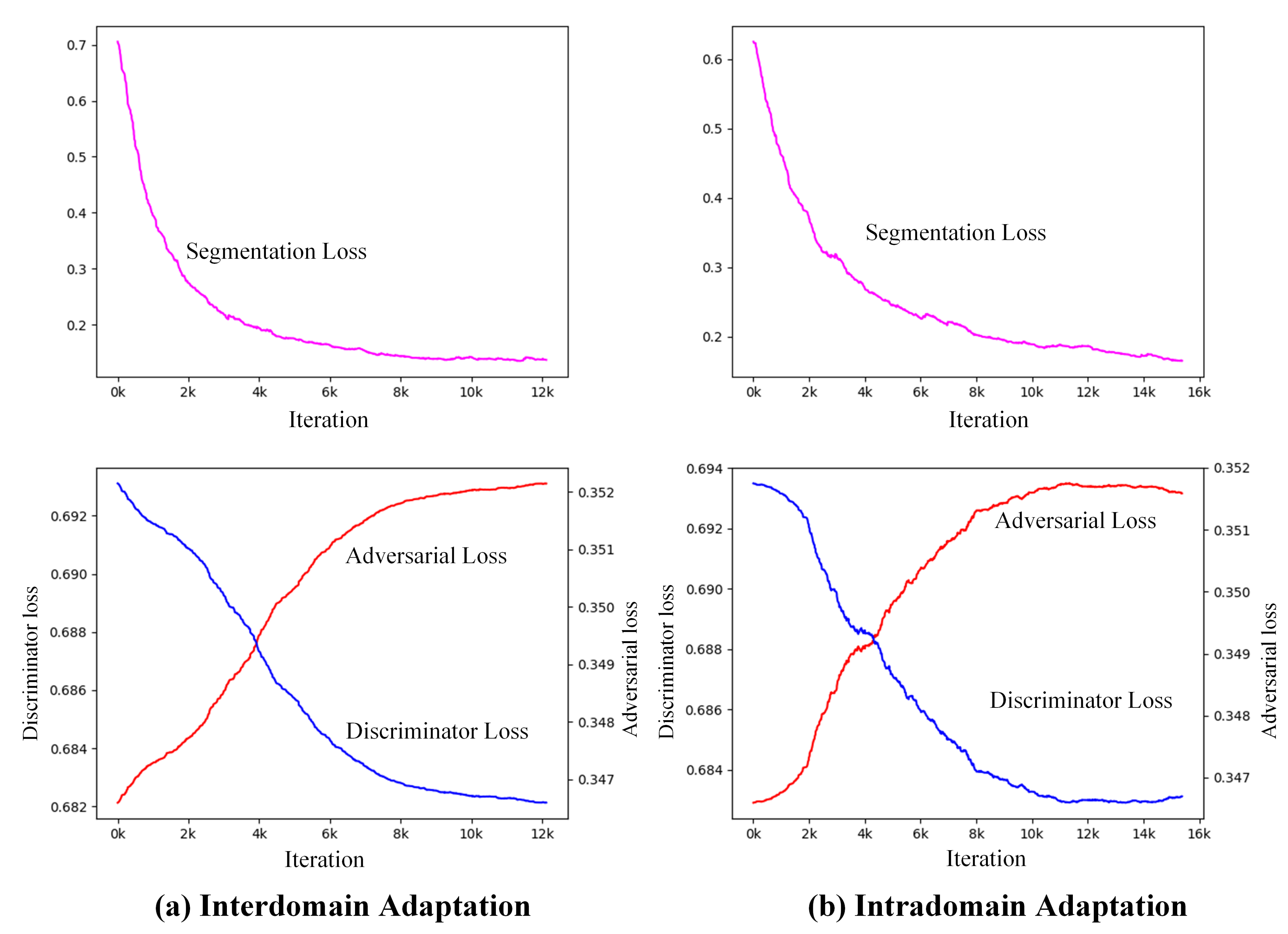

3.2. Interdomain Adaptation
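
A minimal sketch of an entropy-based interdomain adversarial objective, assuming the ADVENT-style formulation [36] on which the IntraDA baseline [38] builds. The segmentation network's softmax output is turned into a per-pixel entropy map, a discriminator learns to tell source maps from target maps, and the segmentation network is trained to fool it. ADVENT feeds the discriminator the full per-class self-information map; summing it over classes, as done here, gives the scalar entropy. The label convention (source = 1, target = 0) and all function names are hypothetical. Plain Python lists stand in for tensors, and the discriminator itself is not modelled, only its output probabilities.

```python
import math

# Hypothetical domain-label convention for the discriminator.
SOURCE_LABEL = 1.0
TARGET_LABEL = 0.0


def entropy_map(probs):
    """Per-pixel entropy of a softmax output, normalised to [0, 1].

    probs: H x W nested list; each pixel is a list of C >= 2 class
    probabilities, e.g. [p_background, p_agricultural_land].
    """
    norm = math.log(len(probs[0][0]))
    return [
        [sum(-p * math.log(p) for p in pixel if p > 0) / norm for pixel in row]
        for row in probs
    ]


def bce(pred, target, eps=1e-7):
    """Binary cross-entropy for one discriminator output in (0, 1)."""
    pred = min(max(pred, eps), 1.0 - eps)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


def discriminator_loss(d_source, d_target):
    """The discriminator should output SOURCE_LABEL on source maps and TARGET_LABEL on target maps."""
    loss_s = sum(bce(d, SOURCE_LABEL) for d in d_source) / len(d_source)
    loss_t = sum(bce(d, TARGET_LABEL) for d in d_target) / len(d_target)
    return 0.5 * (loss_s + loss_t)


def adversarial_loss(d_target):
    """The segmentation network is rewarded when target maps are classified as source."""
    return sum(bce(d, SOURCE_LABEL) for d in d_target) / len(d_target)


# One confident pixel and one maximally uncertain pixel (binary task).
ent = entropy_map([[[1.0, 0.0], [0.5, 0.5]]])
print(ent)
```

In training the two losses alternate: the discriminator minimises `discriminator_loss`, while the segmentation network adds a weighted `adversarial_loss` on target images to its supervised source loss.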

3.3. Intradomain Adaptation

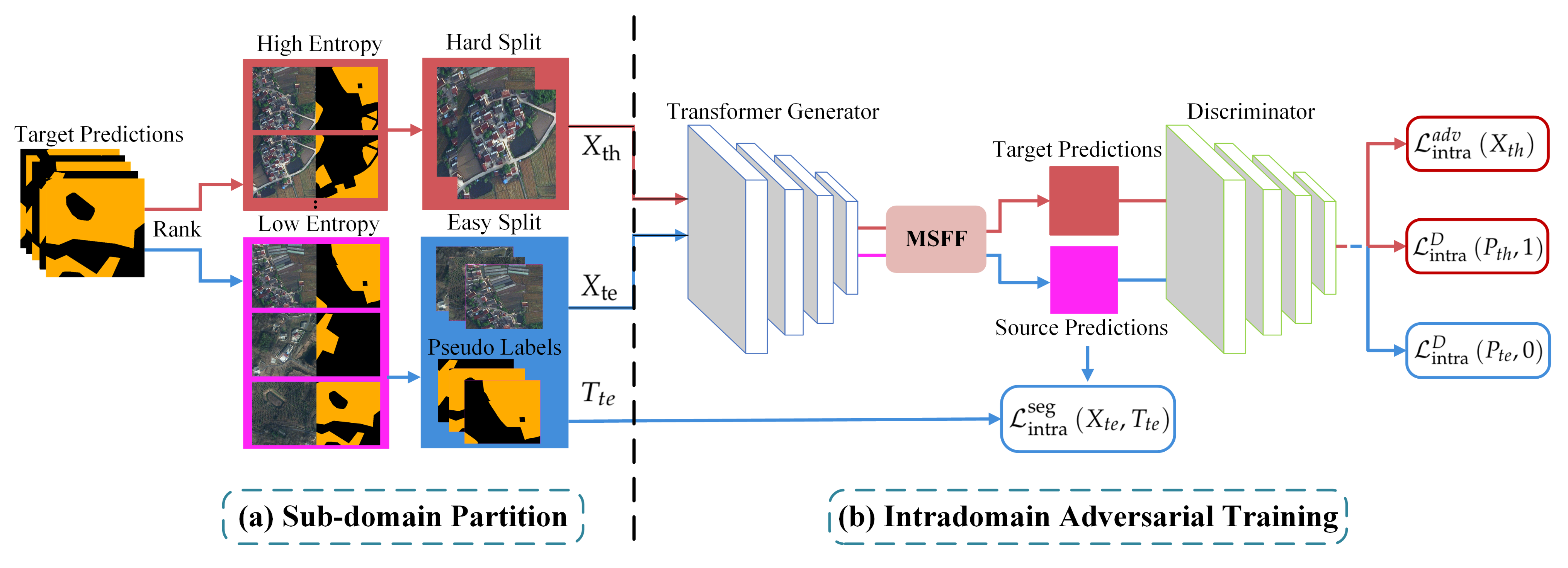

3.3.1. Sub-Domain Partition
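
One way to realise a sub-domain partition, sketched under the assumption that it follows the IntraDA scheme [38] (the baseline in the ablation results). Each target image is scored by the mean of its normalised entropy map, the images are sorted in ascending order, and the lowest-entropy fraction goes to an "easy" split while the rest form a "hard" split. The ratio `lam` is assumed to correspond to the subdomain division factor, which the discussion varies from 0.5 to 0.9. The function names and tile ids are hypothetical.

```python
def mean_entropy(ent_map):
    """Average of an H x W normalised entropy map (one score per image)."""
    values = [v for row in ent_map for v in row]
    return sum(values) / len(values)


def split_by_entropy(scores, lam):
    """Split target images into easy / hard sub-domains.

    scores: dict mapping image id -> mean normalised entropy.
    lam: fraction of images (lowest entropy first) assigned to the easy split.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    ranked = sorted(scores, key=scores.get)  # most confident images first
    n_easy = int(lam * len(ranked))
    return ranked[:n_easy], ranked[n_easy:]


# Toy entropy maps for four hypothetical target tiles.
scores = {
    "tile_a": mean_entropy([[0.9, 0.7], [0.8, 0.6]]),
    "tile_b": mean_entropy([[0.1, 0.0], [0.2, 0.1]]),
    "tile_c": mean_entropy([[0.5, 0.4], [0.6, 0.5]]),
    "tile_d": mean_entropy([[0.3, 0.2], [0.2, 0.3]]),
}
easy, hard = split_by_entropy(scores, lam=0.5)
print(easy, hard)  # ['tile_b', 'tile_d'] ['tile_c', 'tile_a']
```

A larger `lam` moves more, and increasingly uncertain, images into the easy split, so their pseudo-labels become noisier. This trade-off is what a sweep over the division factor probes.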

3.3.2. Intradomain Adversarial Training
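
Assuming the IntraDA-style procedure [38], intradomain training uses the easy split in two ways. Its arg-max predictions serve as pseudo-labels for a supervised segmentation loss. It also plays the role of the "source" when a second discriminator is trained to separate easy from hard entropy maps, using the same kind of adversarial losses as the interdomain step. A minimal pure-Python sketch of the pseudo-label part follows; the names are hypothetical.

```python
import math


def pseudo_labels(probs):
    """Hard pseudo-labels: arg-max class index for every pixel of an H x W x C prediction."""
    return [[max(range(len(pixel)), key=pixel.__getitem__) for pixel in row] for row in probs]


def pixel_cross_entropy(probs, labels, eps=1e-7):
    """Mean per-pixel cross-entropy of predictions against (pseudo-)labels."""
    total, count = 0.0, 0
    for prob_row, label_row in zip(probs, labels):
        for pixel, label in zip(prob_row, label_row):
            total -= math.log(max(pixel[label], eps))
            count += 1
    return total / count


# Easy-split prediction for a 1 x 2 tile: [background, agricultural land].
easy_pred = [[[0.9, 0.1], [0.2, 0.8]]]
labels = pseudo_labels(easy_pred)      # [[0, 1]]
loss = pixel_cross_entropy(easy_pred, labels)
```

In a real pipeline the pseudo-labels are usually computed once from a frozen model after interdomain training, so the loss pushes the network to agree with its own confident predictions rather than with a moving target.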

3.4. Multi-Scale Feature Fusion Module
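
The datasets differ strongly in ground sampling distance (0.3 m/pixel for LoVeDA versus 4 m/pixel for GID), which motivates combining features from several scales. The following toy sketch shows multi-scale fusion in general, not the MSFF architecture itself. It assumes nearest-neighbour upsampling of coarser maps to the finest resolution, followed by either element-wise summation or channel concatenation (two common choices). Maps are single-channel, square nested lists, and all names are hypothetical.

```python
def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of a 2-D map by an integer factor."""
    return [[v for v in row for _ in range(factor)] for row in fmap for _ in range(factor)]


def fuse_multiscale(features, mode="sum"):
    """Fuse single-channel feature maps from several scales.

    features: square 2-D maps ordered finest -> coarsest; each coarser side
    length must divide the finest one. mode: "sum" (element-wise) or
    "concat" (return the aligned maps stacked as channels).
    """
    size = len(features[0])
    aligned = []
    for fmap in features:
        if size % len(fmap):
            raise ValueError("coarse map size must divide the finest map size")
        aligned.append(upsample_nearest(fmap, size // len(fmap)))
    if mode == "sum":
        # zip(*aligned) pairs rows across scales; zip(*rows) pairs pixels.
        return [[sum(vals) for vals in zip(*rows)] for rows in zip(*aligned)]
    if mode == "concat":
        return aligned
    raise ValueError(f"unknown mode: {mode}")


fine = [[1, 2], [3, 4]]   # e.g. a shallow, high-resolution stage
coarse = [[10]]           # e.g. a deep, low-resolution stage
print(fuse_multiscale([fine, coarse], mode="sum"))
```

Summation requires all scales to have the same number of channels, whereas concatenation does not and leaves the weighting of scales to subsequent (typically convolutional) layers.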

4. Experiments

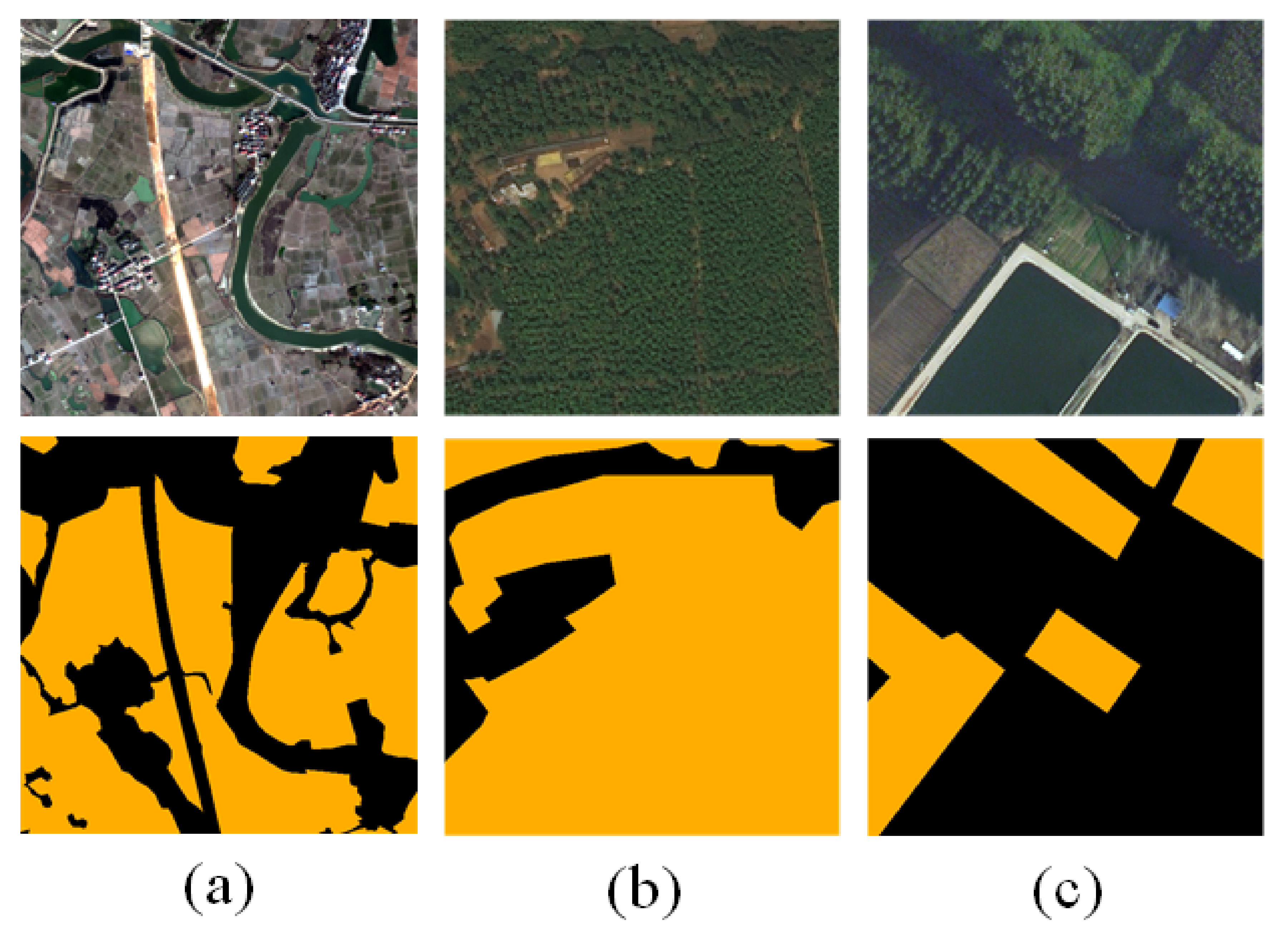

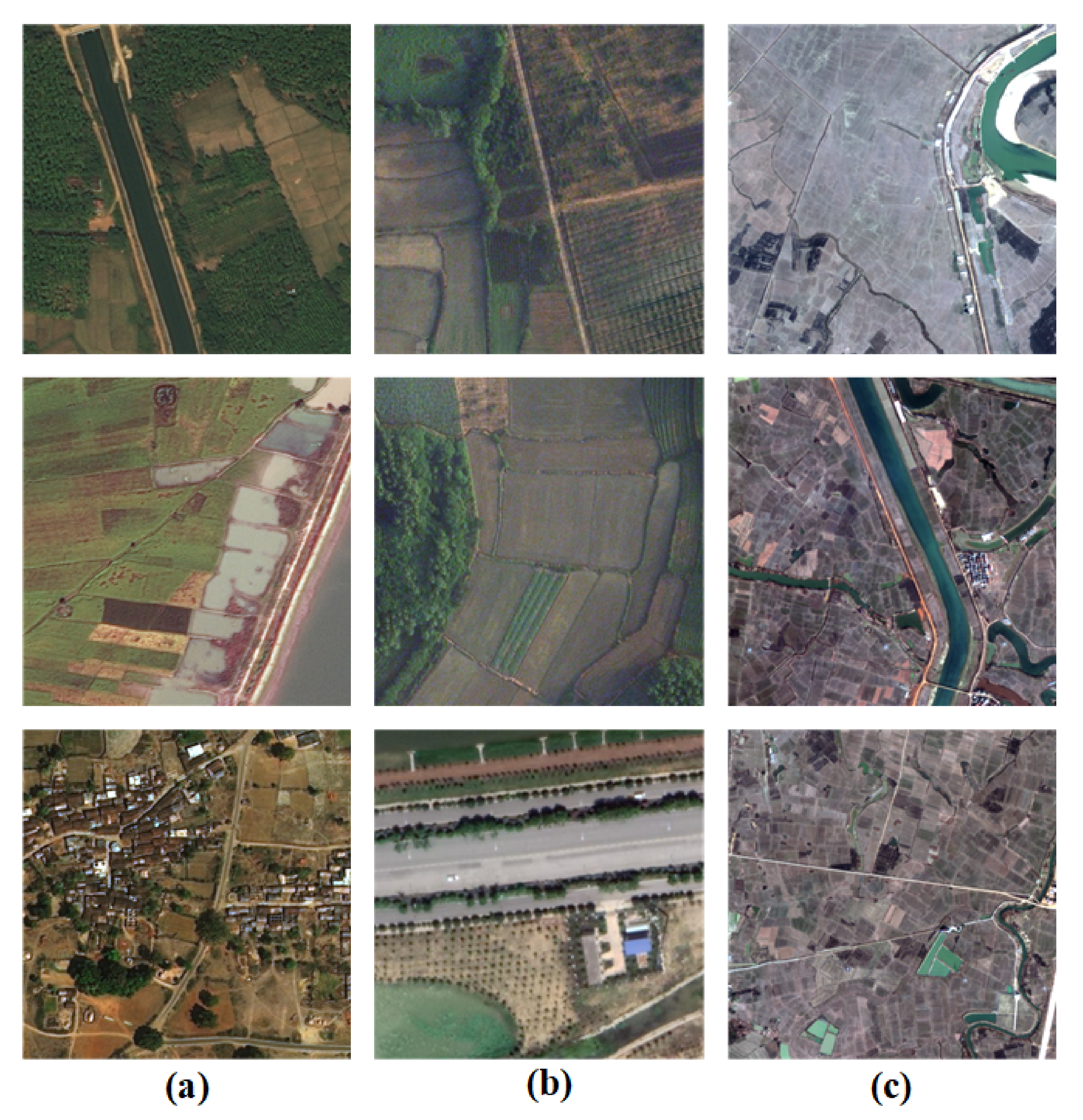

4.1. Dataset Description

4.2. Data Processing

4.3. Evaluation Metrics
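
The result tables report IoU, COM, COR, and F1. The sketch below assumes COM and COR denote completeness (TP / (TP + FN), i.e. recall) and correctness (TP / (TP + FP), i.e. precision), as is common in remote sensing extraction tasks, and that agricultural land is the positive class. The function name is hypothetical, and counts are pooled over all pixels passed in.

```python
def binary_metrics(pred, gt):
    """IoU, completeness (COM), correctness (COR) and F1, in percent.

    pred, gt: H x W nested lists of 0/1 (1 = agricultural land).
    """
    tp = fp = fn = 0
    for p_row, g_row in zip(pred, gt):
        for p, g in zip(p_row, g_row):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    com = tp / (tp + fn) if tp + fn else 0.0  # completeness = recall
    cor = tp / (tp + fp) if tp + fp else 0.0  # correctness = precision
    f1 = 2 * com * cor / (com + cor) if com + cor else 0.0
    return {name: 100.0 * value for name, value in
            {"IoU": iou, "COM": com, "COR": cor, "F1": f1}.items()}


m = binary_metrics([[1, 1, 0, 0]], [[1, 0, 1, 0]])  # TP = FP = FN = 1
```

Pooling counts is only one convention. In the result tables the reported F1 is not the harmonic mean of the reported COM and COR. For example, for "Ours" on DeepGlobe → LoveDA, 2 × 81.370 × 62.549 / (81.370 + 62.549) ≈ 70.7, versus the reported 67.750. This suggests the scores were averaged per image or per batch, although that is an inference.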

4.4. Implementation Details

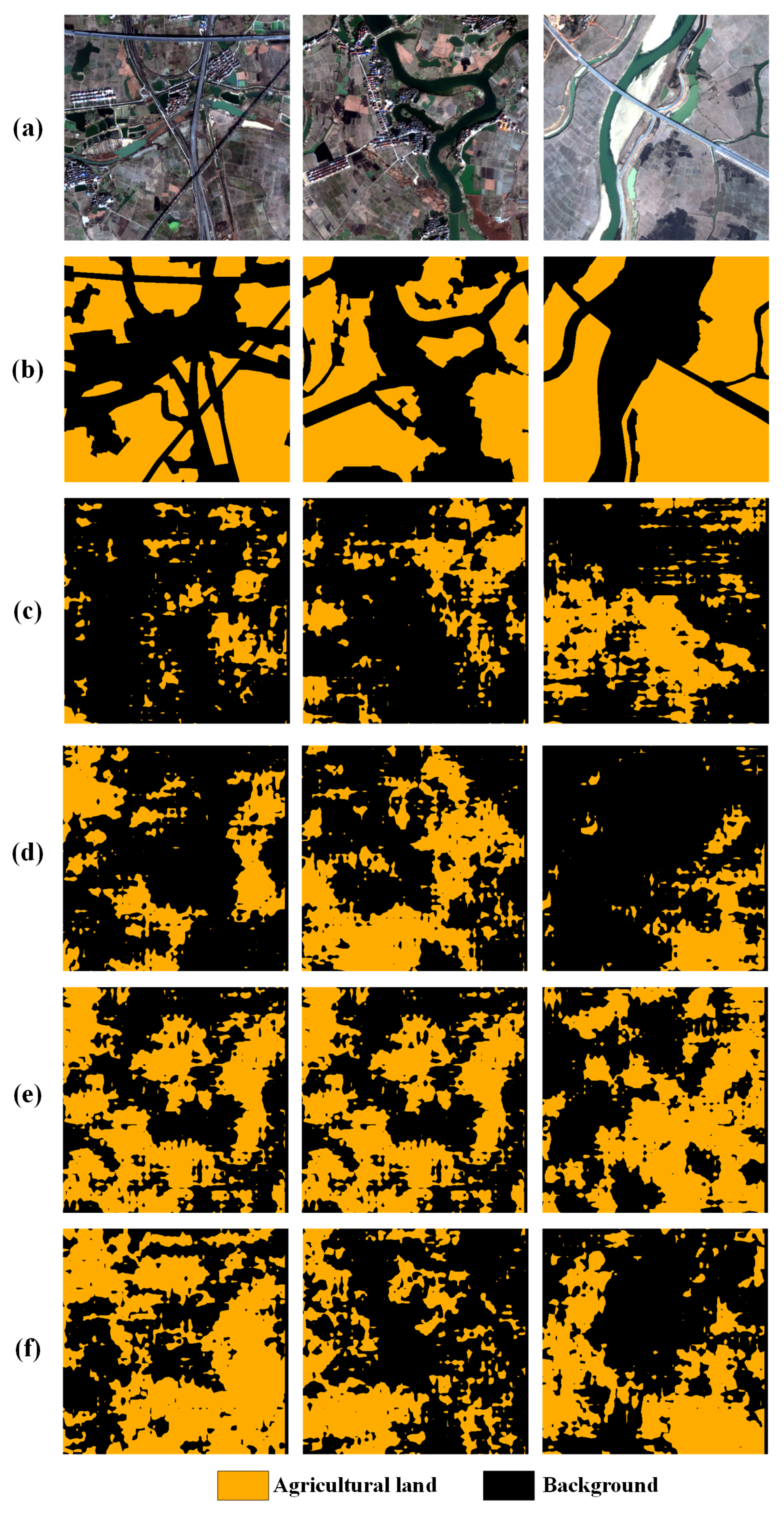

4.5. UDA Results on Different Adaptation Settings

5. Discussion

5.1. Comparative Methods

5.2. Ablation Study on Feature Fusion Method

5.3. Subdomain Division Factor

5.4. Model Training

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Alcantara, C.; Kuemmerle, T.; Prishchepov, A.V.; Radeloff, V.C. Mapping abandoned agriculture with multi-temporal MODIS satellite data. Remote Sens. Environ. 2012, 124, 334–347.

- Matton, N.; Canto, G.S.; Waldner, F.; Valero, S.; Morin, D.; Inglada, J.; Arias, M.; Bontemps, S.; Koetz, B.; Defourny, P. An automated method for annual cropland mapping along the season for various globally-distributed agrosystems using high spatial and temporal resolution time series. Remote Sens. 2015, 7, 13208–13232.

- Gebbers, R.; Adamchuk, V.I. Precision agriculture and food security. Science 2010, 327, 828–831.

- Atzberger, C. Advances in remote sensing of agriculture: Context description, existing operational monitoring systems and major information needs. Remote Sens. 2013, 5, 949–981.

- Boryan, C.; Yang, Z.; Mueller, R.; Craig, M. Monitoring US agriculture: The US Department of Agriculture, National Agricultural Statistics Service, Cropland Data Layer Program. Geocarto Int. 2011, 26, 341–358.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Lu, R.; Wang, N.; Zhang, Y.; Lin, Y.; Wu, W.; Shi, Z. Extraction of agricultural fields via DASFNet with dual attention mechanism and multi-scale feature fusion in South Xinjiang, China. Remote Sens. 2022, 14, 2253.

- Li, Z.; Chen, S.; Meng, X.; Zhu, R.; Lu, J.; Cao, L.; Lu, P. Full convolution neural network combined with contextual feature representation for cropland extraction from high-resolution remote sensing images. Remote Sens. 2022, 14, 2157.

- Shang, R.; Zhang, J.; Jiao, L.; Li, Y.; Marturi, N.; Stolkin, R. Multi-scale adaptive feature fusion network for semantic segmentation in remote sensing images. Remote Sens. 2020, 12, 872.

- Zhang, X.; Cheng, B.; Chen, J.; Liang, C. High-resolution boundary refined convolutional neural network for automatic agricultural greenhouses extraction from GaoFen-2 satellite imageries. Remote Sens. 2021, 13, 4237.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Li, S.; Peng, L.; Hu, Y.; Chi, T. FD-RCF-based boundary delineation of agricultural fields in high resolution remote sensing images. J. Univ. Chin. Acad. Sci. 2020, 37, 483.

- Su, T.; Li, H.; Zhang, S.; Li, Y. Image segmentation using mean shift for extracting croplands from high-resolution remote sensing imagery. Remote Sens. Lett. 2015, 6, 952–961.

- Graesser, J.; Ramankutty, N. Detection of cropland field parcels from Landsat imagery. Remote Sens. Environ. 2017, 201, 165–180.

- Hong, R.; Park, J.; Jang, S.; Shin, H.; Kim, H.; Song, I. Development of a parcel-level land boundary extraction algorithm for aerial imagery of regularly arranged agricultural areas. Remote Sens. 2021, 13, 1167.

- Xue, Y.; Zhao, J.; Zhang, M. A watershed-segmentation-based improved algorithm for extracting cultivated land boundaries. Remote Sens. 2021, 13, 939.

- Tong, X.-Y.; Xia, G.-S.; Lu, Q.; Shen, H.; Li, S.; You, S.; Zhang, L. Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ. 2020, 237, 111322.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. DeepGlobe 2018: A challenge to parse the earth through satellite images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–181.

- Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A remote sensing land-cover dataset for domain adaptive semantic segmentation. arXiv 2021, arXiv:2110.08733.

- Peng, D.; Guan, H.; Zang, Y.; Bruzzone, L. Full-level domain adaptation for building extraction in very-high-resolution optical remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–17.

- Ma, C.; Sha, D.; Mu, X. Unsupervised adversarial domain adaptation with error-correcting boundaries and feature adaption metric for remote-sensing scene classification. Remote Sens. 2021, 13, 1270.

- Kwak, G.; Park, N. Unsupervised domain adaptation with adversarial self-training for crop classification using remote sensing images. Remote Sens. 2022, 14, 4639.

- Zhang, L.; Lan, M.; Zhang, J.; Tao, D. Stagewise unsupervised domain adaptation with adversarial self-training for road segmentation of remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

- Shamsolmoali, P.; Zareapoor, M.; Zhou, H.; Wang, R.; Yang, J. Road segmentation for remote sensing images using adversarial spatial pyramid networks. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4673–4688.

- Guo, J.; Yang, J.; Yue, H.; Liu, X.; Li, K. Unsupervised domain-invariant feature learning for cloud detection of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

- Lu, X.; Gong, T.; Zheng, X. Multisource compensation network for remote sensing cross-domain scene classification. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2504–2515.

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210.

- Baktashmotlagh, M.; Harandi, M.T.; Lovell, B.C.; Salzmann, M. Unsupervised domain adaptation by domain invariant projection. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 769–776.

- Shen, J.; Qu, Y.; Zhang, W.; Yu, Y. Wasserstein distance guided representation learning for domain adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Hoffman, J.; Wang, D.; Yu, F.; Darrell, T. FCNs in the wild: Pixel-level adversarial and constraint-based adaptation. arXiv 2016, arXiv:1612.02649.

- Tsai, Y.-H.; Hung, W.-C.; Schulter, S.; Sohn, K.; Yang, M.; Chandraker, M. Learning to adapt structured output space for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7472–7481.

- Vu, T.-H.; Jain, H.; Bucher, M.; Cord, M.; Pérez, P. ADVENT: Adversarial entropy minimization for domain adaptation in semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2517–2526.

- Li, Y.; Yuan, L.; Vasconcelos, N. Bidirectional learning for domain adaptation of semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6936–6945.

- Pan, F.; Shin, I.; Rameau, F.; Lee, S.; Kweon, I.S. Unsupervised intra-domain adaptation for semantic segmentation through self-supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3764–3773.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Xu, Z.; Zhang, W.; Zhang, T.; Li, J. HRCNet: High-resolution context extraction network for semantic segmentation of remote sensing images. Remote Sens. 2020, 13, 71.

- Lin, T.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Zhang, D.; Pan, Y.; Zhang, J.; Hu, T.; Zhao, J.; Li, N.; Chen, Q. A generalized approach based on convolutional neural networks for large area cropland mapping at very high resolution. Remote Sens. Environ. 2020, 247, 111912.

- Dang, B.; Li, Y. MSResNet: Multiscale residual network via self-supervised learning for water-body detection in remote sensing imagery. Remote Sens. 2021, 13, 3122.

- He, C.; Li, S.; Xiong, D.; Fang, P.; Liao, M. Remote sensing image semantic segmentation based on edge information guidance. Remote Sens. 2020, 12, 1501.

- Li, J.; Xiu, J.; Yang, Z.; Liu, C. Dual path attention net for remote sensing semantic image segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 571.

| Dataset | Resolution | Sensor | Original Size | Training Data | Test Data |
|---|---|---|---|---|---|
| DeepGlobe | 0.5 m/pixel | WorldView-2 | 30,470 | - | |
| GID | 4 m/pixel | GF-2 | 73,490 | 734 | |
| LoveDA | 0.3 m/pixel | Spaceborne | 15,829 | 324 | |

DeepGlobe → LoveDA

| Methods | IoU | COM | COR | F1 |
|---|---|---|---|---|
| Source-only | 36.327 | 45.895 | 62.861 | 49.108 |
| AdaptSegNet [35] | 46.921 | 74.478 | 55.190 | 60.404 |
| ADVENT [36] | 49.392 | 74.621 | 58.085 | 61.169 |
| BDL [37] | 52.234 | 79.747 | 59.011 | 65.334 |
| IntraDA [38] | 51.710 | 82.855 | 57.341 | 64.589 |
| Ours | 55.763 | 81.370 | 62.549 | 67.750 |

GID → LoveDA

| Methods | IoU | COM | COR | F1 |
|---|---|---|---|---|
| Source-only | 36.229 | 40.139 | 83.018 | 46.026 |
| AdaptSegNet [35] | 42.931 | 54.221 | 71.295 | 55.454 |
| ADVENT [36] | 45.035 | 60.762 | 65.021 | 58.250 |
| BDL [37] | 44.592 | 58.231 | 67.447 | 57.732 |
| IntraDA [38] | 48.254 | 60.365 | 72.898 | 60.799 |
| Ours | 53.470 | 92.109 | 56.891 | 66.042 |

DeepGlobe → GID

| Methods | IoU | COM | COR | F1 |
|---|---|---|---|---|
| Source-only | 26.986 | 39.204 | 62.884 | 36.599 |
| AdaptSegNet [35] | 43.098 | 68.614 | 59.414 | 56.067 |
| ADVENT [36] | 45.995 | 77.435 | 57.403 | 58.940 |
| BDL [37] | 46.348 | 75.789 | 58.373 | 59.508 |
| IntraDA [38] | 47.631 | 68.488 | 60.293 | 61.465 |
| Ours | 49.553 | 88.545 | 54.535 | 62.439 |

| UDA Setting | Method | IoU | COM | COR | F1 |
|---|---|---|---|---|---|
| DeepGlobe → LoveDA | baseline | 51.710 | 82.855 | 57.341 | 64.589 |
| | ours | 55.763 | 81.370 | 62.549 | 67.750 |
| GID → LoveDA | baseline | 48.254 | 60.365 | 72.898 | 60.799 |
| | ours | 53.470 | 92.109 | 56.891 | 66.042 |
| DeepGlobe → GID | baseline | 47.631 | 68.488 | 60.293 | 61.465 |
| | ours | 49.553 | 88.545 | 54.535 | 62.439 |
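
The improvement over the baseline, whose scores coincide with the IntraDA [38] rows of the comparison tables, can be read off the table above. A short script with the IoU and F1 values copied from it:

```python
# IoU and F1 (as reported) copied from the baseline-vs-ours table.
results = {
    "DeepGlobe → LoveDA": {"baseline": (51.710, 64.589), "ours": (55.763, 67.750)},
    "GID → LoveDA": {"baseline": (48.254, 60.799), "ours": (53.470, 66.042)},
    "DeepGlobe → GID": {"baseline": (47.631, 61.465), "ours": (49.553, 62.439)},
}

gains = {}
for setting, rows in results.items():
    (iou_b, f1_b), (iou_o, f1_o) = rows["baseline"], rows["ours"]
    gains[setting] = (round(iou_o - iou_b, 3), round(f1_o - f1_b, 3))
    print(f"{setting}: IoU +{gains[setting][0]:.3f}, F1 +{gains[setting][1]:.3f}")
```

On GID → LoveDA and DeepGlobe → GID, the gains come with much higher completeness but lower correctness (e.g., COR drops from 72.898 to 56.891 on GID → LoveDA). On DeepGlobe → LoveDA, correctness rises while completeness dips slightly.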

IoU for different subdomain division factors

| UDA Setting | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|
| DeepGlobe → LoveDA | 54.160 | 54.446 | 54.953 | 55.763 | 54.471 |
| GID → LoveDA | 52.913 | 53.470 | 53.231 | 52.557 | 51.797 |
| DeepGlobe → GID | 48.685 | 49.553 | 48.012 | 48.197 | 44.745 |
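
The division factor that maximises IoU differs between settings. The values in the table above are assumed to be IoU, because the best entries match the IoU of the proposed method in the comparison tables. A short script that picks the best factor per setting and measures how sensitive each setting is:

```python
factors = [0.5, 0.6, 0.7, 0.8, 0.9]
iou_by_setting = {
    "DeepGlobe → LoveDA": [54.160, 54.446, 54.953, 55.763, 54.471],
    "GID → LoveDA": [52.913, 53.470, 53.231, 52.557, 51.797],
    "DeepGlobe → GID": [48.685, 49.553, 48.012, 48.197, 44.745],
}

best = {}
for setting, ious in iou_by_setting.items():
    i = max(range(len(ious)), key=ious.__getitem__)  # index of the highest IoU
    best[setting] = (factors[i], ious[i])
    spread = max(ious) - min(ious)
    print(f"{setting}: best factor {factors[i]} (IoU {ious[i]:.3f}), spread {spread:.3f}")
```

The optimum is 0.8 for DeepGlobe → LoveDA and 0.6 for the other two settings. DeepGlobe → GID is the most sensitive, with IoU falling to 44.745 at 0.9.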

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Xu, S.; Sun, J.; Ou, D.; Wu, X.; Wang, M. Unsupervised Adversarial Domain Adaptation for Agricultural Land Extraction of Remote Sensing Images. Remote Sens. 2022, 14, 6298. https://doi.org/10.3390/rs14246298
