LRFFNet: Large Receptive Field Feature Fusion Network for Semantic Segmentation of SAR Images in Building Areas

Abstract

1. Introduction

- We design LRFFNet, a network that outperforms many state-of-the-art (SOTA) methods on the SAR semantic segmentation task.

- The proposed CFP module fuses multi-level features and improves the network's ability to capture contextual information.

- The proposed LFCA module reassigns channel weights: channels carrying more information receive higher attention and uninformative channels are suppressed, which improves the ability to locate the channels that contain key information.

- The proposed auxiliary branch restricts segmentation to the building area, which reduces the color blocks generated outside the building area and improves the segmentation results.
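The channel reweighting attributed to the LFCA module can be pictured with a generic squeeze-and-excitation style gate. The sketch below is not the LFCA module itself: the function name `channel_reweight`, the two weight matrices `w1` and `w2`, and the bottleneck width are hypothetical, and the large-receptive-field part of LFCA is not modeled. It only illustrates how per-channel weights in the range 0 to 1 can emphasize some channels and suppress others.

```python
import numpy as np

def channel_reweight(features, w1, w2):
    """Generic squeeze-and-excitation style gate (an assumption, not LFCA).

    features: array of shape (C, H, W)
    w1: (C_hidden, C) bottleneck weights (hypothetical)
    w2: (C, C_hidden) expansion weights (hypothetical)
    """
    # Squeeze: one descriptor per channel via global average pooling.
    squeezed = features.mean(axis=(1, 2))            # shape (C,)
    # Excitation: two small linear layers, ReLU then sigmoid.
    hidden = np.maximum(w1 @ squeezed, 0.0)          # shape (C_hidden,)
    weights = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # shape (C,)
    # Scale: each channel is multiplied by its own weight.
    return features * weights[:, None, None], weights

rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 4, 4))   # 8 channels, 4x4 feature map
w1 = rng.standard_normal((2, 8))         # bottleneck of width 2
w2 = rng.standard_normal((8, 2))
out, weights = channel_reweight(feats, w1, w2)
```

In a trained network the two matrices would be learned, so the weights would reflect how informative each channel is for the task.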

2. Related Work

2.1. Traditional Approaches

2.2. Deep Learning-Based Methods

3. Proposed Method

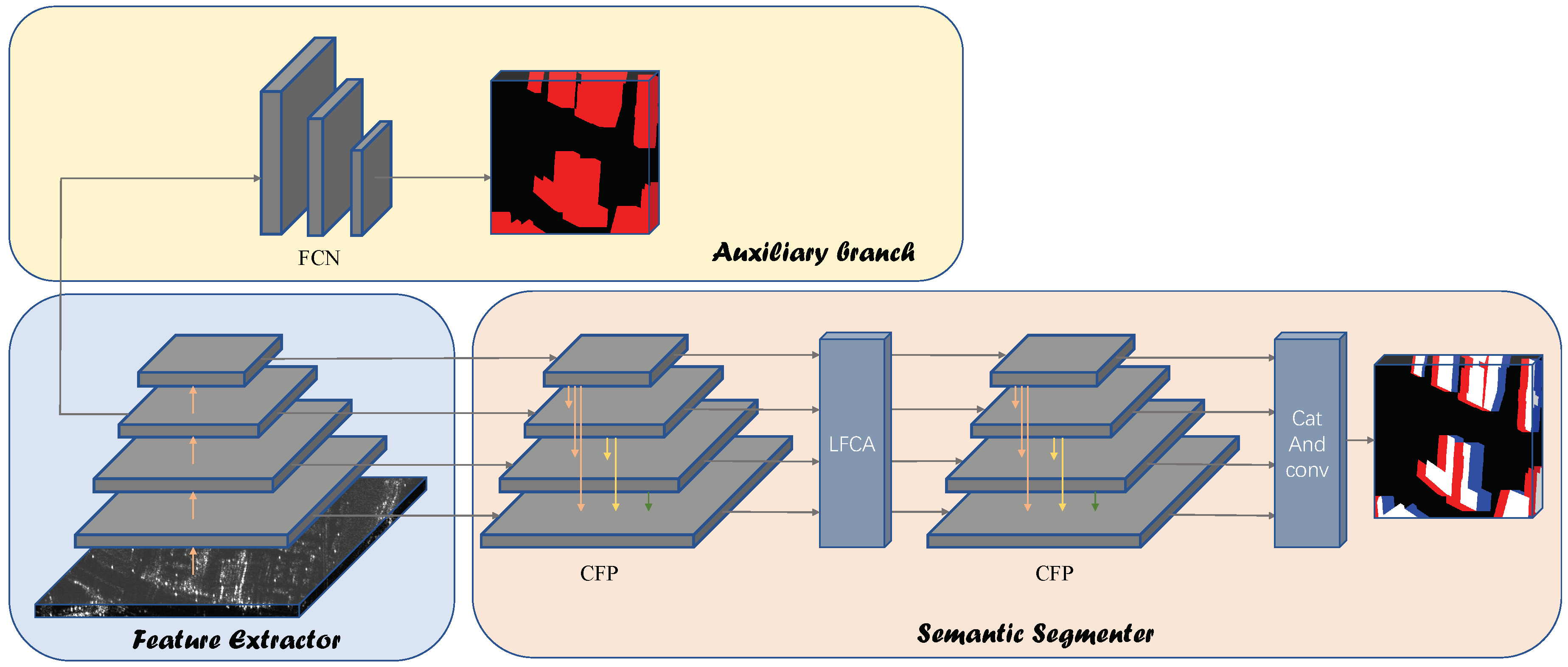

3.1. Overall Architecture

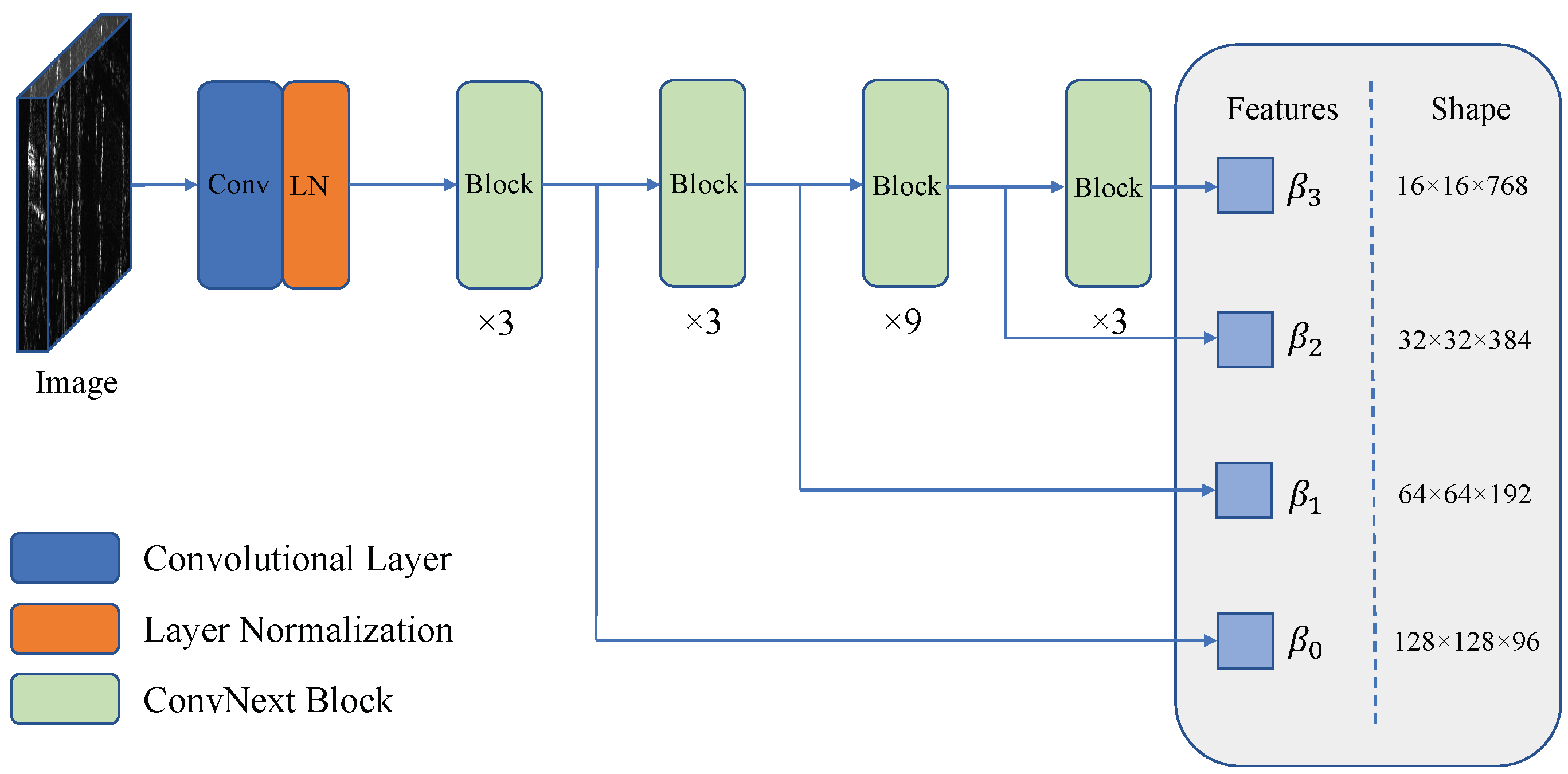

3.2. Feature Extractor

3.3. Semantic Segmenter

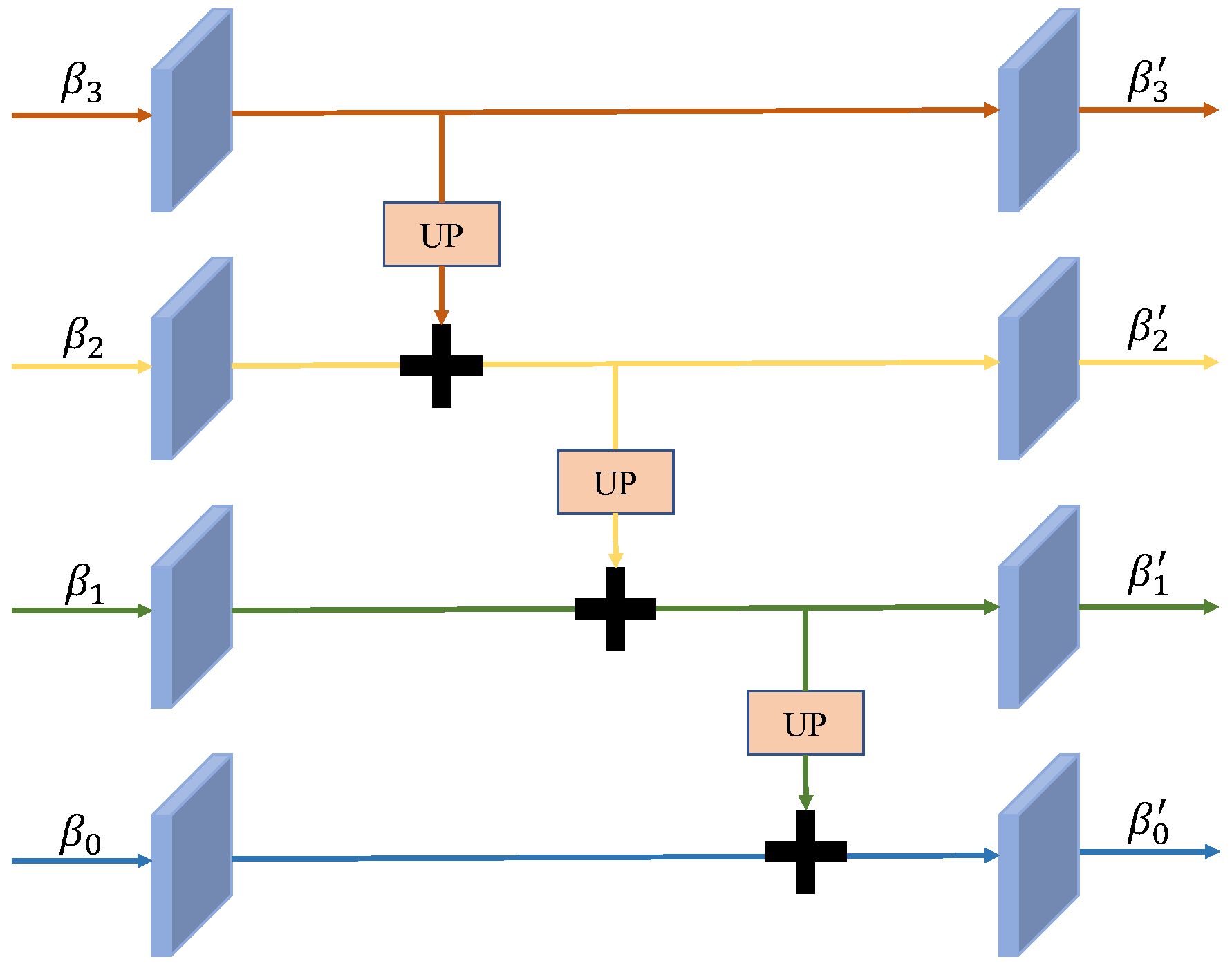

3.3.1. Cascade Feature Pyramid Module

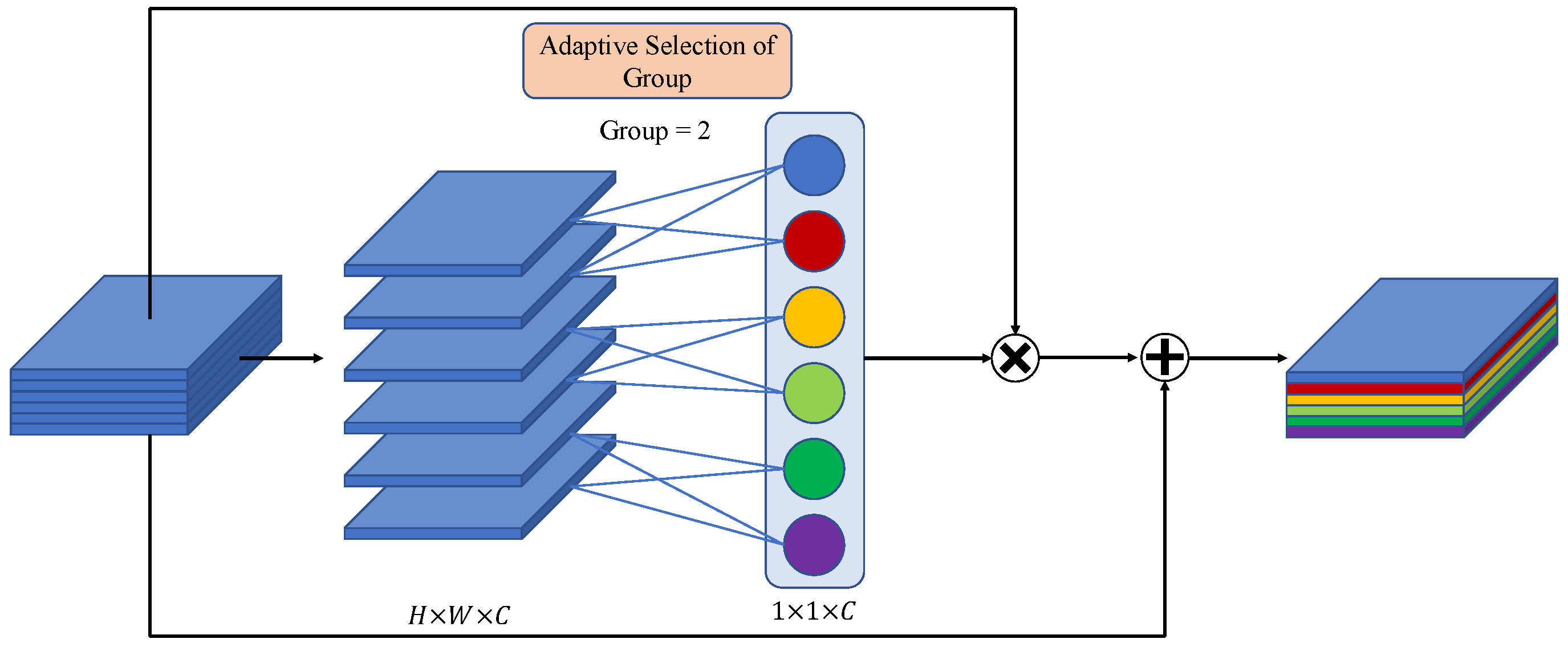

3.3.2. Large Receptive Field Channel Attention Module

3.4. Auxiliary Branch
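The introduction states that the auxiliary branch restricts segmentation to the building area. One simple way to picture that effect is to mask the class map with a building-area prediction, as sketched below. This is an illustration under assumptions, not the authors' method: the function name `restrict_to_building_area`, the 0.5 threshold, and the use of index 0 for the background class are all hypothetical, and the actual branch may act only as a training constraint rather than as a mask at inference time.

```python
import numpy as np

def restrict_to_building_area(class_scores, area_prob, threshold=0.5, background=0):
    """Force pixels outside the predicted building area to the background class.

    class_scores: (K, H, W) per-class scores from the main branch
    area_prob:    (H, W) building-area probability (hypothetical auxiliary output)
    """
    labels = class_scores.argmax(axis=0)   # per-pixel class index
    inside = area_prob >= threshold        # boolean building-area mask
    return np.where(inside, labels, background)

scores = np.array([
    [[0.1, 0.2], [0.7, 0.1]],   # class 0 (assumed background)
    [[0.8, 0.1], [0.2, 0.3]],   # class 1
    [[0.1, 0.7], [0.1, 0.6]],   # class 2
])
area = np.array([[0.9, 0.2],
                 [0.8, 0.6]])
restricted = restrict_to_building_area(scores, area)
```

In this toy input the top-right pixel would be labeled class 2 by the main branch alone, but it falls outside the predicted area and is reset to the background index.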

3.5. Loss Function

4. Experiments and Discussion

4.1. Experimental Settings

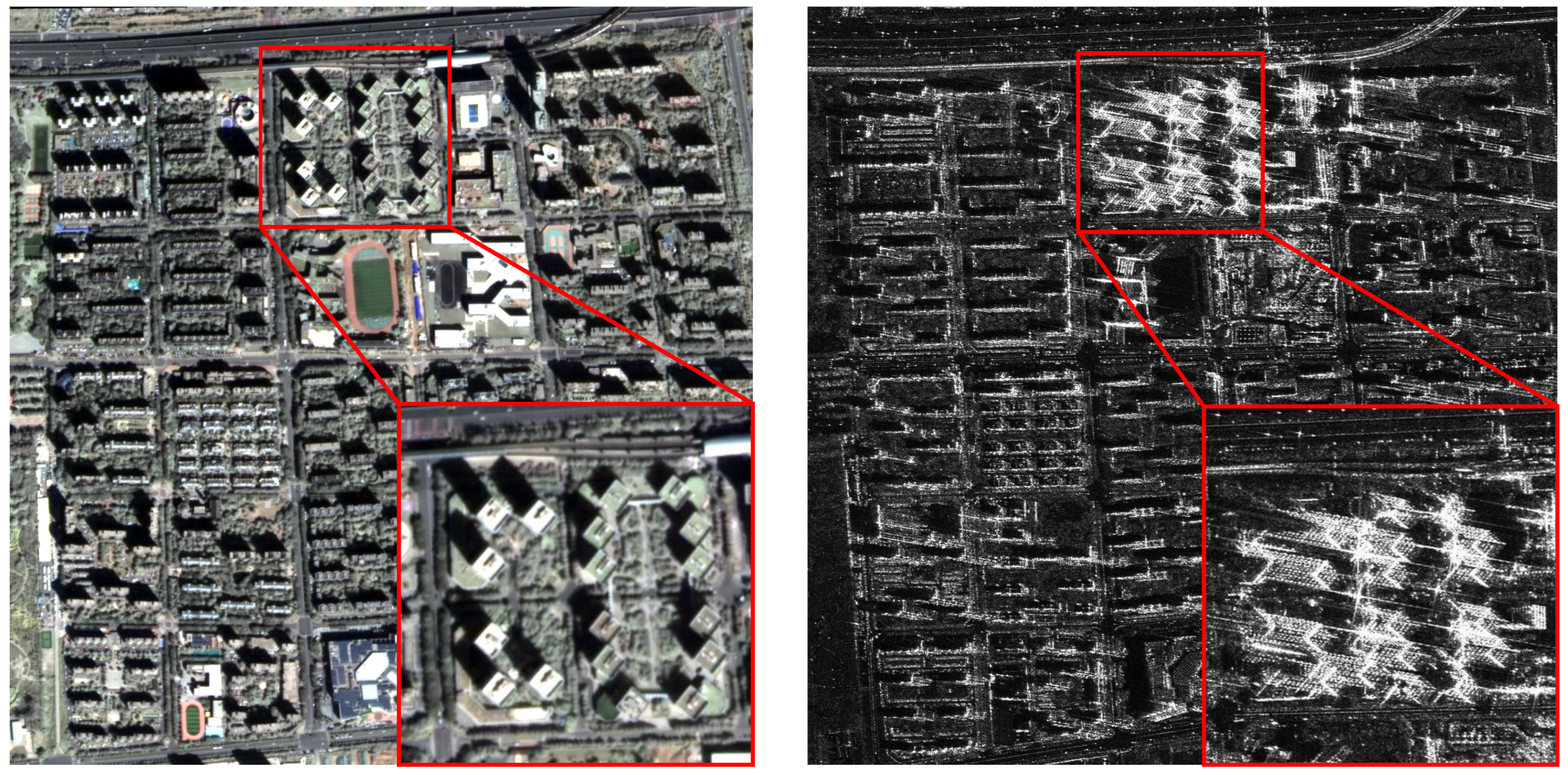

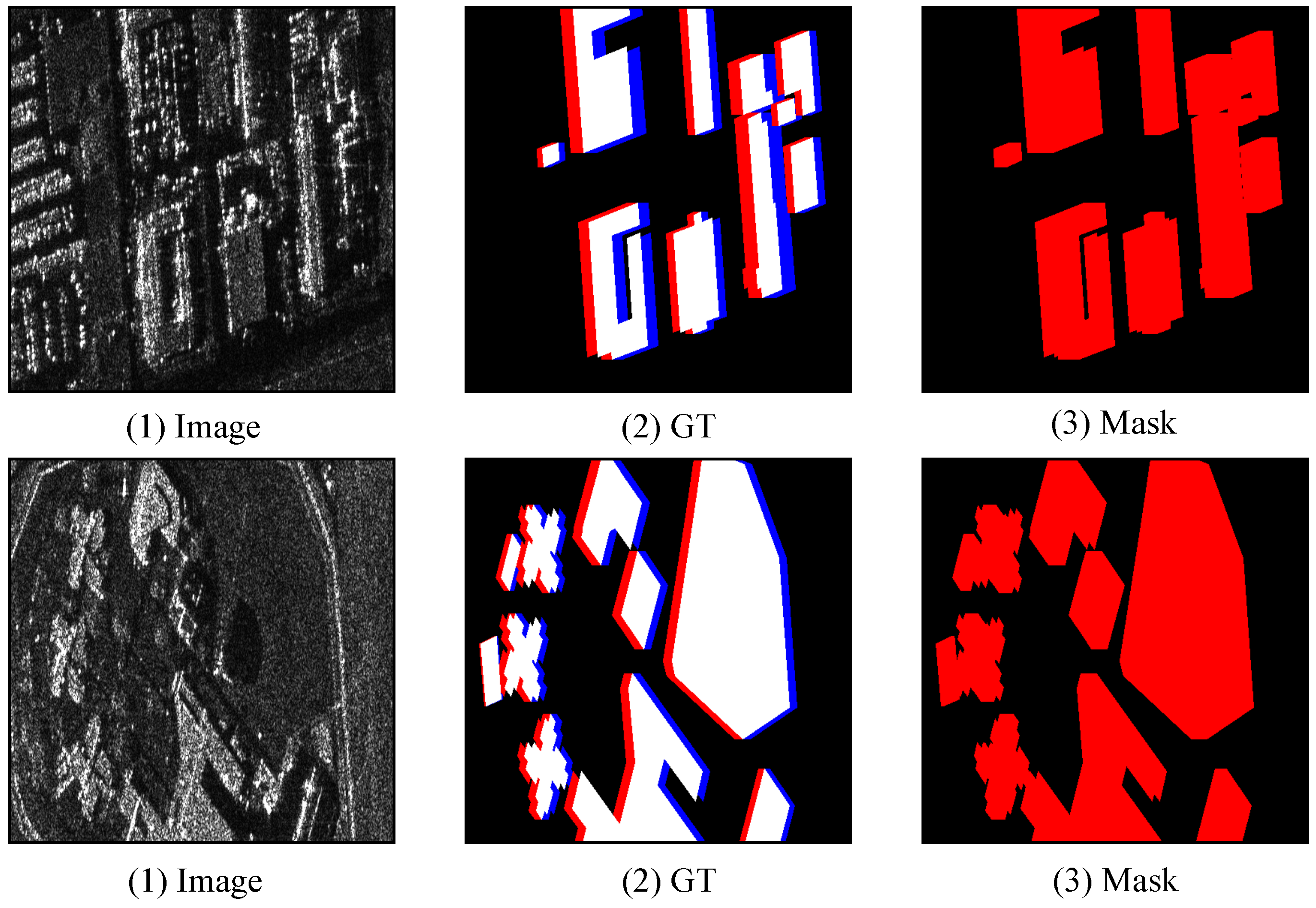

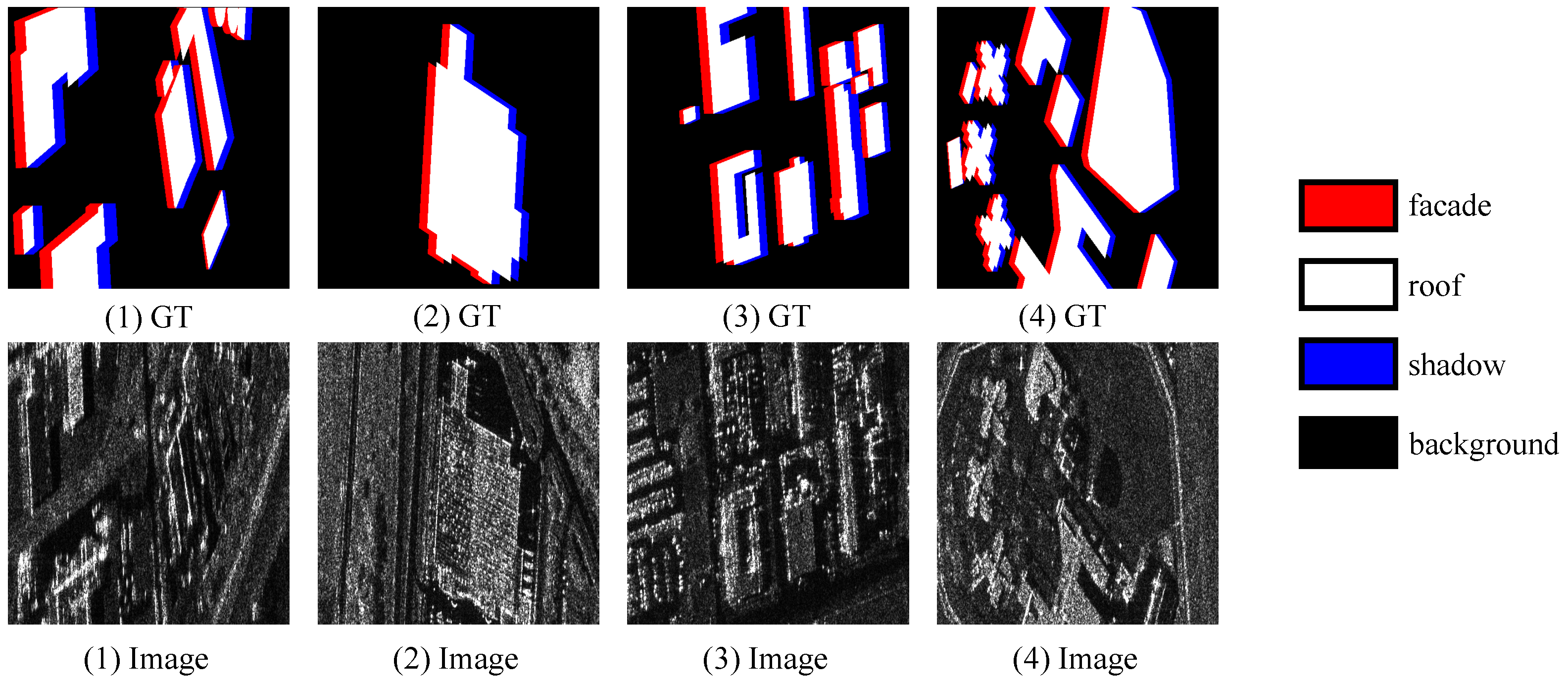

4.1.1. Dataset Description

4.1.2. Comparison Methods and Evaluation Metrics
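The result tables report per-class IoU and accuracy together with mIoU, mAcc, and aAcc. The sketch below computes these quantities from a confusion matrix, assuming the common definitions: IoU as intersection over union per class, Acc as per-class recall, and aAcc as overall pixel accuracy. The function name `segmentation_metrics` and the row/column convention are assumptions made for illustration; the paper's exact evaluation code is not given here.

```python
import numpy as np

def segmentation_metrics(conf):
    """Compute segmentation metrics from a confusion matrix.

    conf[i, j] = number of pixels with ground-truth class i predicted as class j
    (this row/column convention is an assumption).
    """
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)                 # correctly classified pixels per class
    gt_total = conf.sum(axis=1)        # ground-truth pixels per class
    pred_total = conf.sum(axis=0)      # predicted pixels per class
    # Note: a class absent from both ground truth and prediction gives 0/0 here.
    iou = tp / (gt_total + pred_total - tp)
    acc = tp / gt_total
    return {
        "IoU": iou,
        "mIoU": iou.mean(),
        "Acc": acc,
        "mAcc": acc.mean(),
        "aAcc": tp.sum() / conf.sum(),
    }

# Toy two-class confusion matrix with 100 pixels in total.
m = segmentation_metrics([[50, 10],
                          [5, 35]])
```

For this toy matrix the per-class IoU values are 50/65 and 35/50, and aAcc is 85/100.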

4.1.3. Implementation Details

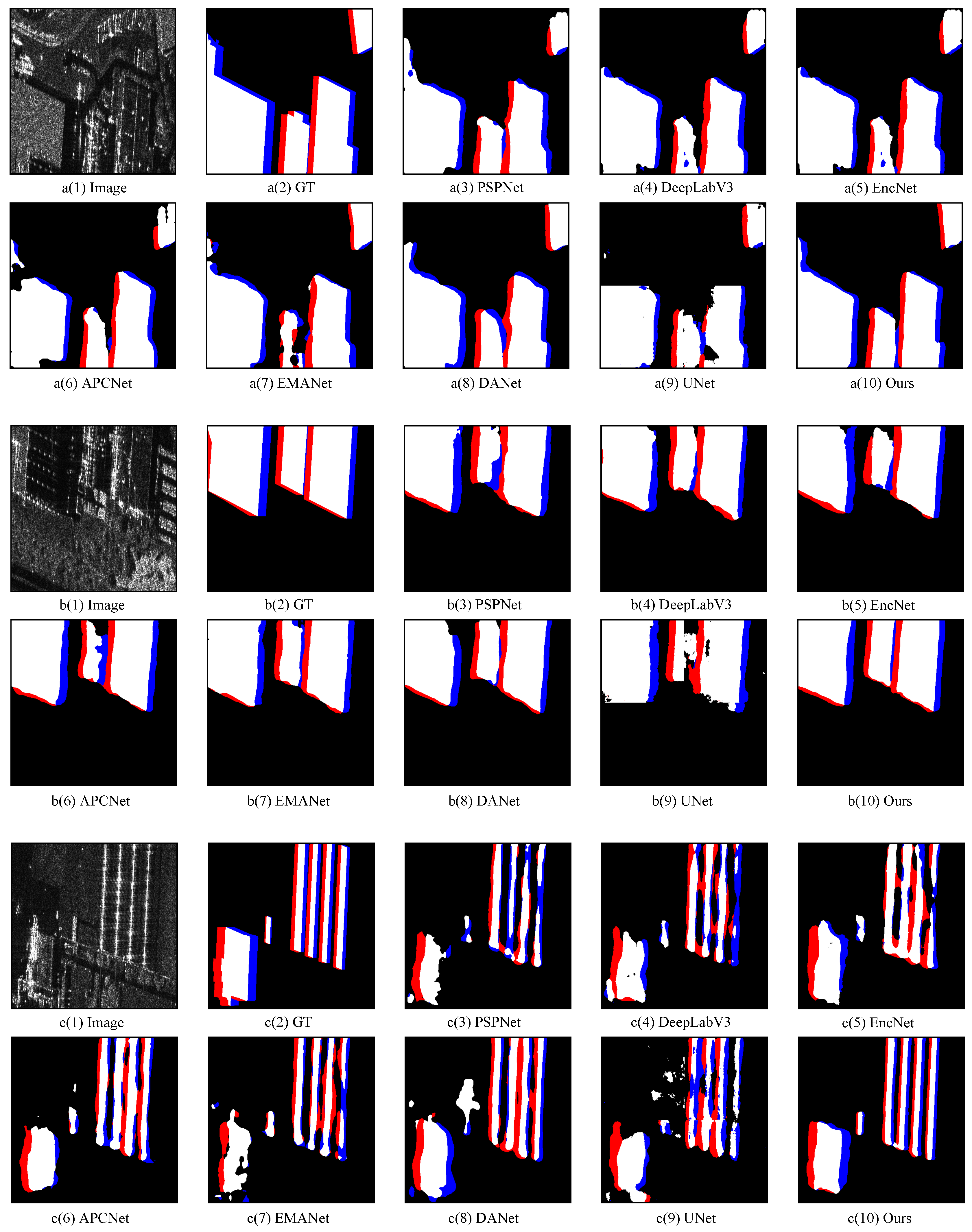

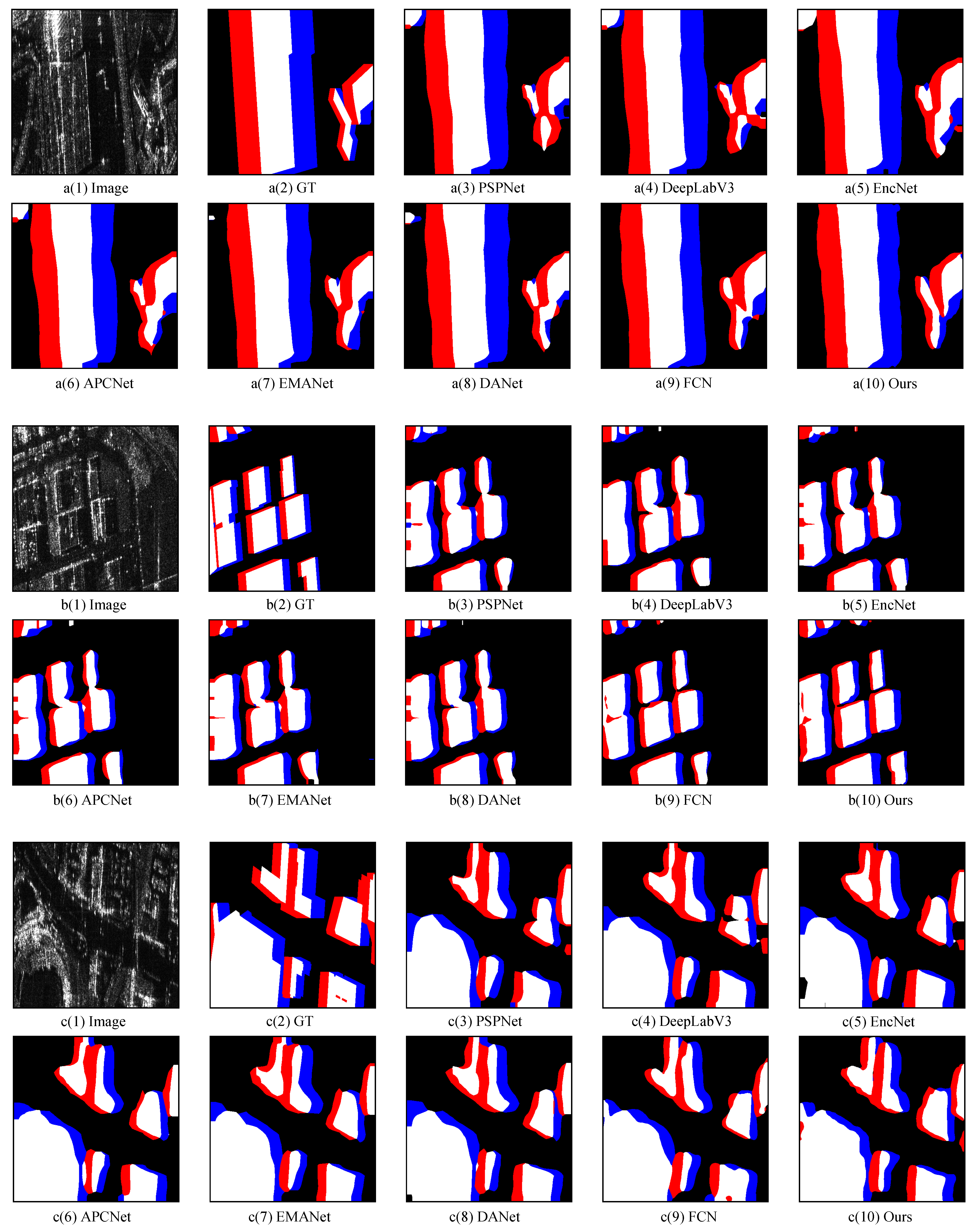

4.2. Comparative Experiments and Analysis

4.3. Ablation Experiments

4.3.1. Effect of Cascade Feature Pyramid Module

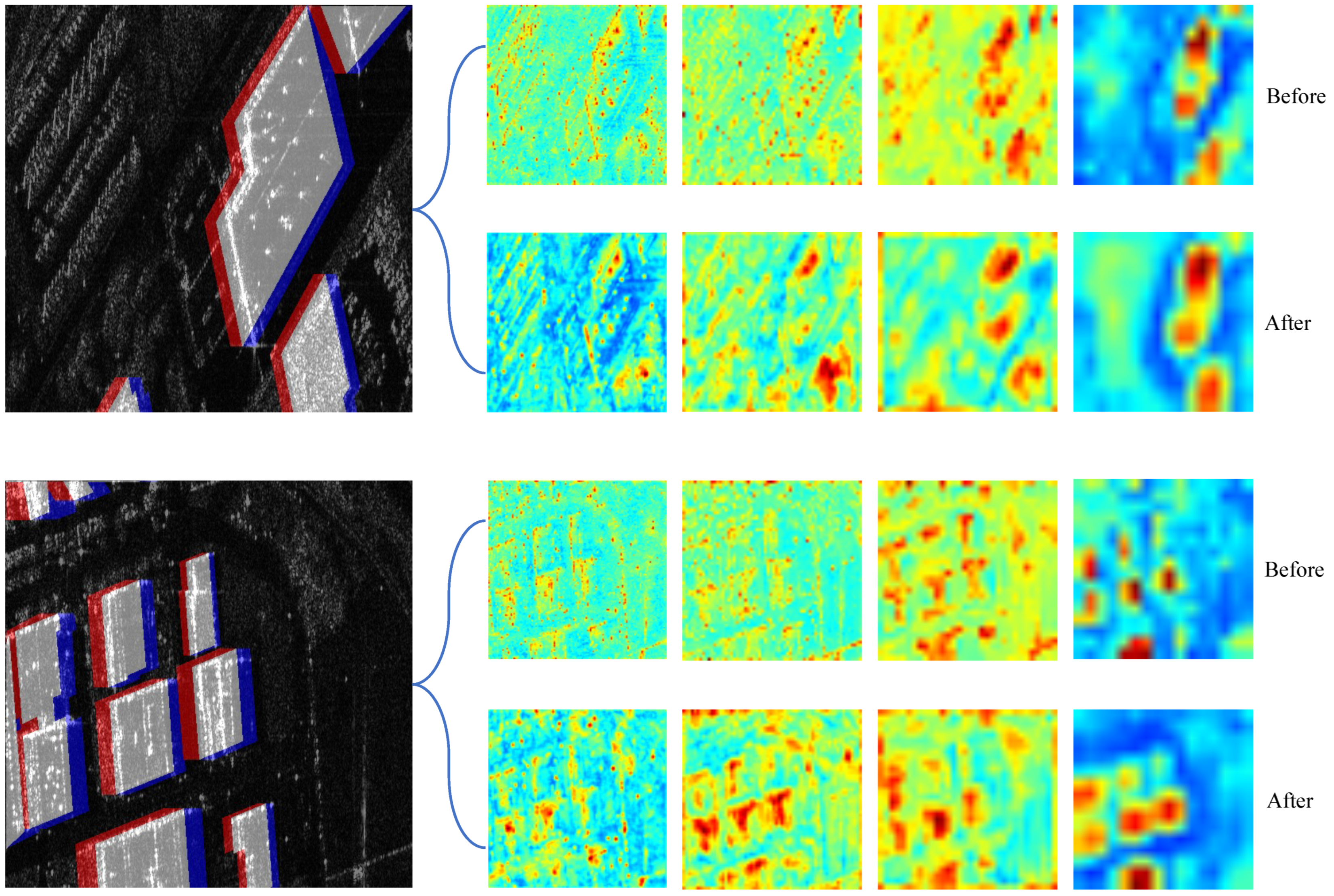

4.3.2. Effect of the Large Receptive Field Channel Attention Module

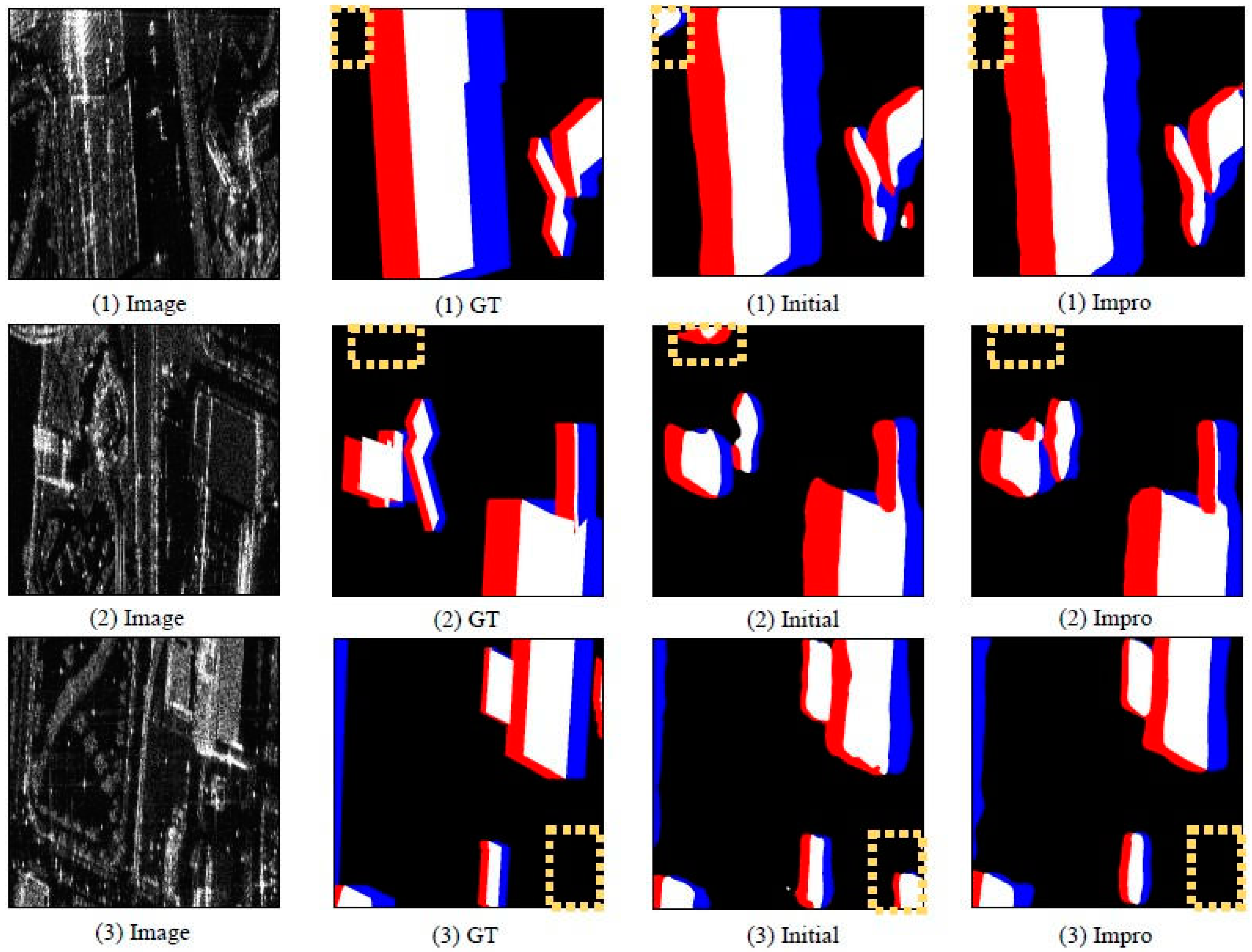

4.3.3. Effect of the Auxiliary Branch

4.4. Analysis of Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | IoU BG (%) | IoU FD (%) | IoU RF (%) | IoU SD (%) | mIoU (%) | Acc BG (%) | Acc FD (%) | Acc RF (%) | Acc SD (%) | mAcc (%) | aAcc (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Unet | 85.80 | 27.32 | 49.67 | 21.70 | 46.13 | 94.05 | 35.95 | 67.48 | 29.02 | 56.62 | 84.98 |
| Res+PSPNet | 89.76 | 34.80 | 60.48 | 27.79 | 53.21 | 96.62 | 43.77 | 74.54 | 35.98 | 62.73 | 88.56 |
| Res+DeepLabV3 | 90.12 | 34.98 | 60.76 | 30.38 | 54.06 | 95.89 | 45.78 | 76.89 | 41.11 | 64.92 | 88.61 |
| Res+EncNet | 89.79 | 33.94 | 59.55 | 28.93 | 53.06 | 96.46 | 43.95 | 73.31 | 38.48 | 63.05 | 88.40 |
| Res+ApcNet | 89.93 | 32.91 | 60.40 | 29.78 | 53.26 | 96.41 | 42.23 | 74.78 | 39.83 | 63.31 | 88.52 |
| Res+EmaNet | 90.03 | 33.81 | 60.49 | 28.64 | 53.24 | 97.00 | 43.09 | 72.95 | 37.34 | 62.60 | 88.68 |
| Res+DaNet | 90.15 | 36.48 | 60.52 | 31.78 | 54.73 | 96.81 | 46.11 | 73.00 | 42.15 | 64.52 | 88.91 |
| ours | 93.01 | 47.63 | 70.57 | 42.14 | 63.34 | 97.16 | 61.81 | 81.44 | 56.25 | 74.17 | 91.64 |

| Method | IoU BG (%) | IoU FD (%) | IoU RF (%) | IoU SD (%) | mIoU (%) | Acc BG (%) | Acc FD (%) | Acc RF (%) | Acc SD (%) | mAcc (%) | aAcc (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ConvN+PSPNet | 92.29 | 40.98 | 66.60 | 37.75 | 59.40 | 96.76 | 54.15 | 80.01 | 51.24 | 70.54 | 90.54 |
| ConvN+DeepLabV3 | 92.50 | 42.69 | 66.35 | 39.46 | 60.25 | 96.88 | 56.28 | 79.38 | 53.11 | 71.41 | 90.74 |
| ConvN+EncNet | 92.39 | 40.98 | 66.14 | 36.08 | 58.90 | 96.81 | 54.40 | 79.80 | 49.01 | 70.01 | 90.47 |
| ConvN+ApcNet | 92.63 | 40.87 | 66.77 | 37.82 | 59.52 | 97.15 | 52.68 | 80.09 | 50.81 | 70.18 | 90.76 |
| ConvN+EmaNet | 92.62 | 41.50 | 67.31 | 37.72 | 59.78 | 97.08 | 53.30 | 80.69 | 50.86 | 70.48 | 90.81 |
| ConvN+DaNet | 92.33 | 40.15 | 66.11 | 37.14 | 58.93 | 96.82 | 52.95 | 80.00 | 49.96 | 69.93 | 90.47 |
| ConvN+FPN | 92.45 | 42.16 | 67.75 | 35.99 | 59.59 | 96.96 | 55.23 | 80.64 | 48.69 | 70.38 | 90.72 |
| ours | 93.01 | 47.63 | 70.57 | 42.14 | 63.34 | 97.16 | 61.81 | 81.44 | 56.25 | 74.17 | 91.64 |

| Method | IoU BG (%) | IoU FD (%) | IoU RF (%) | IoU SD (%) | mIoU (%) | Acc BG (%) | Acc FD (%) | Acc RF (%) | Acc SD (%) | mAcc (%) | aAcc (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MP-ResNet | 87.23 | 32.82 | 59.48 | 28.86 | 52.10 | 96.07 | 43.82 | 72.18 | 37.66 | 62.43 | 88.48 |
| HR-SARNet | 81.63 | 20.96 | 44.61 | 19.67 | 41.72 | 89.96 | 31.41 | 63.32 | 28.44 | 53.28 | 80.80 |
| MS-FCN | 85.83 | 30.12 | 55.77 | 25.92 | 49.41 | 95.07 | 41.07 | 73.23 | 36.73 | 61.53 | 84.69 |
| ours | 93.01 | 47.63 | 70.57 | 42.14 | 63.34 | 97.16 | 61.81 | 81.44 | 56.25 | 74.17 | 91.64 |

| ConvN | FPN | CFP | C-CFP | mIoU (%) | mAcc (%) | aAcc (%) |
|---|---|---|---|---|---|---|
| ✓ | ✓ | - | - | 59.59 | 70.38 | 90.72 |
| ✓ | - | ✓ | - | 61.02 | 71.59 | 91.10 |
| ✓ | - | - | ✓ | 61.68 | 72.20 | 91.22 |

| ConvN | C-CFP | LFCA | IoU BG (%) | IoU FD (%) | IoU RF (%) | IoU SD (%) | mIoU (%) | Acc BG (%) | Acc FD (%) | Acc RF (%) | Acc SD (%) | mAcc (%) | aAcc (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | ✓ | - | 92.66 | 45.08 | 68.85 | 40.13 | 61.68 | 97.21 | 57.65 | 80.43 | 53.52 | 72.20 | 91.22 |
| ✓ | ✓ | ✓ | 93.02 | 47.27 | 70.70 | 41.16 | 63.04 | 97.21 | 60.19 | 82.50 | 54.22 | 73.53 | 91.64 |

| Ratio | mIoU (%) | mAcc (%) | aAcc (%) |
|---|---|---|---|
| 2 | 62.43 | 73.41 | 91.41 |
| 1 | 63.14 | 73.77 | 91.65 |
| 0.2 | 63.34 | 74.17 | 91.64 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Peng, B.; Zhang, W.; Hu, Y.; Chu, Q.; Li, Q. LRFFNet: Large Receptive Field Feature Fusion Network for Semantic Segmentation of SAR Images in Building Areas. Remote Sens. 2022, 14, 6291. https://doi.org/10.3390/rs14246291
