Abstract

The Surface Water and Ocean Topography (SWOT) mission will be affected by various sources of systematic errors, which are correlated in space and in time. Their amplitude before calibration might be as large as tens of centimeters, i.e., able to dominate the mission error budget. To reduce their magnitude, we developed so-called data-driven (or empirical) calibration algorithms. This paper provided a summary of the overall problem, and then presented the calibration framework used for SWOT, as well as the pre-launch performance simulations. We presented two complete algorithm sequences that use ocean measurements to calibrate KaRIn globally. The simple and robust Level-2 algorithm was implemented in the ground segment to control the main source of error of SWOT’s hydrology products. In contrast, the more sophisticated Level-3 (multi-mission) algorithm was developed to improve the accuracy of ocean products, as well as the one-day orbit of the SWOT mission. The Level-2 algorithm yielded a mean inland error of 3–6 cm, i.e., a margin of 25–80% (of the signal variance) with respect to the error budget requirements. The Level-3 algorithm yielded ocean residuals of 1 cm, i.e., a variance reduction of 60–80% with respect to the Level-2 algorithm.

Keywords:

SWOT; KaRIn; altimetry; interferometry; calibration; data-driven; cross-calibration; cross-over; error budget

1. Introduction and Context

1.1. SWOT Error Budget and Systematic Errors

The Surface Water and Ocean Topography (SWOT) mission from NASA (National Aeronautics and Space Administration), CNES (Centre National d’Etudes Spatiales), CSA (Canadian Space Agency) and UKSA (United Kingdom Space Agency) will provide two-dimensional topography information over the oceans and inland freshwater bodies. Morrow et al. [1] and Fu and Rodriguez [2] give an updated description of the mission objectives, the instrument principle, and the scientific requirements.

SWOT has two main objectives: to observe mesoscale to sub-mesoscale over the oceans and to observe the water cycle over land. To achieve these goals, SWOT’s main instrument is the Ka-band radar interferometer (KaRIn), a synthetic aperture radar interferometer with two thin ribbon-shaped swaths of 50 km each. The other instruments onboard include a Jason-class nadir-looking altimeter (for cross-comparisons with KaRIn, calibration, and nadir coverage), a two-beam microwave radiometer (to correct for the wet troposphere path delay), and a precise orbit determination payload (required for the radial accuracy of topography measurements).

The SWOT mission will fly on two different orbits. The first has a one-day revisit time with very sparse coverage. The one-day orbit will be used for approximately six months during the commissioning phase of the mission. For this reason, it is sometimes called the Calibration/Validation (or Cal/Val) orbit. The second orbit, also called “science orbit”, has a 21-day revisit time with global coverage for latitudes below 78°.

The mission and performance error budget from Esteban-Fernandez et al. [3] highlights the stringent requirements in terms of error control:

- SWOT’s error budget is required to be one order of magnitude below the ocean signal for wavelengths ranging from 15 to 1000 km (expressed as a power spectrum);

- KaRIn’s white noise must be less than 1.4 cm for 2 km pixels on ocean (or 2.7 cm for 1 km pixels);

- KaRIn measurement must have a 10 cm height accuracy and a 1.7 cm/1 km slope accuracy over 10 km of river flow distance (for river widths greater than 100 m);

- The topography requirements for the nadir altimeter are derived from Jason-class missions.
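
As a consistency check on the two white-noise figures quoted above, assume (this is our simplification, not a statement from [3]) that the noise is uncorrelated between pixels and that one 2 km pixel is the average of four 1 km pixels. Averaging $N$ independent samples divides the noise standard deviation by $\sqrt{N}$, so that

$$\sigma_{2\,\mathrm{km}} \approx \frac{\sigma_{1\,\mathrm{km}}}{\sqrt{4}} = \frac{2.7\ \mathrm{cm}}{2} \approx 1.4\ \mathrm{cm}.$$

Under this assumption, the two values express the same requirement at two different pixel sizes.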

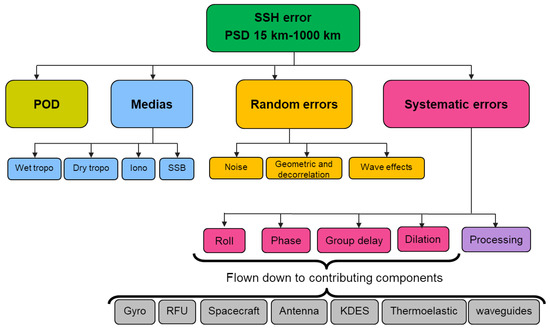

Among the many error sources described in [3], this paper focused on the so-called systematic errors. These errors have multiple sources, as shown in Figure 1: an error in the attitude knowledge (e.g., imperfect roll angle used in the interferogram-to-topography reconstruction); an error in the interferometric phase or group delay (e.g., from hardware or electronics); an imperfect knowledge of the true KaRIn mast length; and others. The details of each error source are largely beyond the scope of this manuscript and properly addressed by Esteban-Fernandez et al. [3]. Similarly, the breakdown of each error type into sub-system allocations (grey boxes of Figure 1) is not relevant in the context of this paper. However, Figure 1 remains useful because it conveys the complexity of the so-called systematic errors.

Figure 1.

Schematics of the error breakdown for SWOT. The total error (green box) is the sum of many components. The systematic errors discussed in this paper are in the pink box. They have different origins (smaller pink boxes) and each SWOT subsystem (grey box) has an allocation, which is verified by the Project through simulation or hardware testing.

For practical purposes, the systematic errors in pink can be divided into four signatures or components (more details in Section 2.2): one bias for each swath, a linear component in the cross-track direction and for each swath, a quadratic component common to both swaths, and a residual that is almost constant in time. These four components are independent and additive with other sources of error.

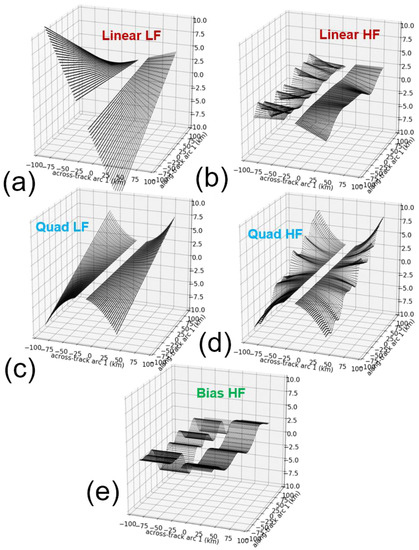

The first three components are shown in Figure 2. While their general form is known analytically, they have a time-varying amplitude, and the amplitude may change at different time scales (e.g., qualitative difference between panel (a) and panel (b)). Moreover, even the so-called ‘bias’ term of panel (e) is only constant in the cross-track direction for a given time step and a given swath (left or right). The value of the cross-track bias may evolve in time, at different time scales, and independently for each swath. The amplitude and the time scales involved are discussed in Section 2.2. We will see in Section 2 that a dedicated calibration algorithm is needed to mitigate these three components, and to keep the overall SWOT error within its requirements.

Figure 2.

Qualitative examples of SWOT’s systematic errors. Panel (a) shows an example of low-frequency linear component. Panel (b) is the same for the high-frequency linear component. Panels (c) and (d) show the quadratic component for low-frequencies and high-frequencies respectively. Panel (e) shows an example high-frequency bias component.

The last component (or residual) cannot be modeled with a simple analytical model as it contains the sum of complex effects. On the bright side, the residual is mostly time-invariant (see [3]), and it is not ambiguous with the other components (e.g., zero-mean, no linear or quadratic signature). The residual is absorbed by a specific ground correction named the phase screen. To that extent, we will not discuss it here. This paper focuses on the error signatures of Figure 2.
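
To make the three analytical signatures concrete, the following minimal sketch evaluates their sum for one time step. It is an illustration only: the function name, the argument names, the units, and the sign convention (negative cross-track distance for the left swath) are our assumptions, and the time-invariant residual handled by the phase screen is deliberately left out.

```python
import numpy as np

def systematic_error(x_km, bias_left, bias_right, slope_left, slope_right, quad):
    """Sum of the bias, linear and quadratic cross-track signatures (metres).

    x_km        : signed cross-track distance in km (negative = left swath).
    bias_*      : one bias per swath (m).
    slope_*     : one linear coefficient per swath (m per km).
    quad        : quadratic coefficient common to both swaths (m per km^2).
    All names and units are hypothetical choices made for this sketch.
    """
    x = np.asarray(x_km, dtype=float)
    left = x < 0
    bias = np.where(left, bias_left, bias_right)
    slope = np.where(left, slope_left, slope_right)
    return bias + slope * x + quad * x**2

# One time step: the coefficients would vary with time in a real simulation.
x = np.array([-60.0, -10.0, 10.0, 60.0])
err = systematic_error(x, bias_left=0.01, bias_right=-0.02,
                       slope_left=1e-3, slope_right=1e-3, quad=0.0)
```

In a full simulation each coefficient would be a time series, which is what makes the calibration problem time-dependent.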

1.2. Data-Driven Calibration

Past papers have explored various mechanisms to mitigate the systematic errors. Enjolras et al. [4] presented the first and simplest implementation of the crossover algorithm used for SWOT’s roll error. Dibarboure et al. [5] extended this strategy to resolve other components, as well as more challenging time variations. Furthermore, Dibarboure et Ubelmann [6] explored the strengths and weaknesses of four different types of calibration algorithms, as well as the impact of the satellite orbit and revisit time. Using a similar algorithm on their hydrologic target of interest (Lake Baikal), Du et al. [7] have demonstrated an ability to complement the ocean-based correction presented in this paper with their regional calibration. More recently, Febvre et al. [8] explored the possibility of tackling the same problem with a framework based on physics-informed artificial intelligence.

All these papers use a common approach: they take the KaRIn topography measurement, and they adjust empirical models to isolate the systematic errors. In other words, they are data-driven calibration algorithms. The other common trait of these papers is that they explored the strengths and weaknesses of their algorithm in a vacuum: realistic SWOT errors were not known at the time, let alone simulated by the Project. To that extent, their analyses were useful to understand some concepts and generic implications, but they did not test an end-to-end calibration scheme, nor did they discuss the nature of residual systematic errors after their data-driven calibration.

1.3. Objective of This Paper

In that context, the objective of this paper was to give the first end-to-end overview of the SWOT data-driven calibration problem: why a calibration is helpful or needed, what will be the end-to-end algorithm sequence, and what is the current performance assessment based on pre-launch simulations. This paper briefly summarized the main findings from previous work, but did not duplicate their detailed description of each algorithm (e.g., equations, strengths and limits). Note that the final implementation by the Project will also be documented in the so-called Algorithm and Theoretical Basis Documents (or ATBD) which will be made public soon (no reference at the time of this writing).

This paper is organized as follows. Section 2 details the input data (e.g., ocean models, SWOT orbits, KaRIn noise) and prelaunch scenarios for SWOT (e.g., description of the uncalibrated errors). Section 3 presents the data-driven calibration methodology and the complete algorithm sequence. Section 4 gives the results with the pre-launch error performance (residual error after the calibration is applied), and Section 5 discusses various implications and extensions of this paper, such as sensitivity tests, limitations, and tentative algorithm improvements, as well as a post-launch outlook.

2. Input Data and Prelaunch Scenarios for SWOT

2.1. Input Data

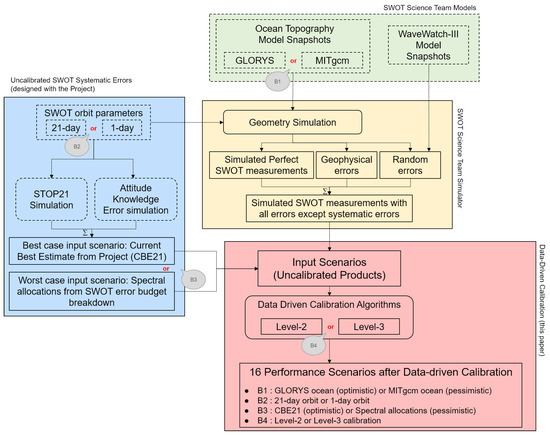

All the simulations presented hereafter started from two simulated datasets (see schematics from Figure 3). Firstly, we generated a simulated SWOT ocean product (nadir altimeter and KaRIn) without the systematic errors (yellow box in Figure 3). Secondly, we generated a simulation of the uncalibrated systematic errors only (blue box in Figure 3) by combining some inputs and data from the SWOT Project. The sum of these contributions yielded a simulated SWOT product before the data-driven algorithm. This input was then injected into the algorithms of Section 3 (red box in Figure 3) to produce a simulation of the calibrated product. By comparing the uncalibrated error simulation (input) with the calibration parameters (output), we can infer the performance of the calibration algorithm.
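
The comparison between the injected error and the retrieved correction can be sketched as follows. This is a minimal illustration under our own conventions (function name, return values, and the definition of variance reduction as one minus the ratio of residual to initial mean square are assumptions), not the metric code used for the results of this paper.

```python
import numpy as np

def calibration_performance(err_injected, err_estimated):
    """Compare the simulated uncalibrated error with the calibration output.

    Returns (rms_before, rms_after, variance_reduction), where the residual
    is the part of the injected error that the calibration did not capture.
    """
    err_injected = np.asarray(err_injected, dtype=float)
    err_estimated = np.asarray(err_estimated, dtype=float)
    residual = err_injected - err_estimated
    rms_before = np.sqrt(np.mean(err_injected**2))
    rms_after = np.sqrt(np.mean(residual**2))
    variance_reduction = 1.0 - (rms_after / rms_before) ** 2
    return rms_before, rms_after, variance_reduction

# Toy values in cm: a 3 cm error of which 2 cm is retrieved by the calibration.
before, after, reduction = calibration_performance([3, -3, 3, -3], [2, -2, 2, -2])
```

Because the ground truth is known in a simulation, the residual can be evaluated everywhere, including over land where no direct estimate is available.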

Figure 3.

Overview of our end-to-end simulation scheme. The data-driven algorithm and simulations presented in this paper are in the red box. They use two inputs: a simulation of SWOT products without systematic errors (output of the yellow box) and a simulation of the uncalibrated systematic errors (output of the blue box). The four simulation branches B1 to B4 combine into 16 possible scenarios. Dashed boxes were provided by the SWOT Project (blue) or other members of the SWOT Science team (green).

To simulate SWOT measurements (available in [9]), we used the open-source SWOT Ocean Science Simulator, initially developed by Gaultier et al. [10] and updated by Gaultier et Ubelmann [11]. This simulator first interpolates the surface topography snapshots of an ocean model to emulate a “perfect” SWOT-like measurement of the ocean topography (hereafter considered as our simulated ground truth): nadir altimeter and KaRIn interferometer.

In our study, we used two global ocean models (B1 in the green box from Figure 3):

- The GLORYS 1/12° model from Lellouche et al. [12], as it is the state-of-art operational system operated by Mercator Ocean International in the frame of the operational Copernicus Marine Service. This global model has a sufficient resolution to resolve large- and medium-mesoscale and is quite realistic when it comes to the global ocean circulation, including in polar regions. However, its relatively coarse resolution makes it unable to resolve small- to sub-mesoscale. Moreover, it does not have any forcing from tides, so the GLORYS topography does not contain any signature from internal tides.

For these reasons, our GLORYS-based simulated SWOT products were considered here as a “best-case” simulation. To illustrate, for actual flight data from SWOT, tides were removed from the topography measurement using a good-but-not-perfect tides model. In the GLORYS simulation, the lack of tidal signature is equivalent to assuming that the tides correction of flight data from SWOT will be perfect (i.e., absolutely zero residual from barotropic and baroclinic tides). Similarly, the lack of small- to submesoscale in our calibration is admittedly optimistic as we underestimate how they might affect our calibration (discussed in Section 5.2.1);

- The Massachusetts Institute of Technology general circulation model (MITgcm) on a 1/48-degree nominal Latitude/Longitude-Cap horizontal grid (LLC4320). This is one of the latest iterations of the model initially presented by Marshall et al. [13] and discussed in Rocha et al. [14] among others. The strength of this model is the unprecedented resolution (horizontal and vertical) for a global simulation, which makes it possible to resolve small to sub-mesoscale features in the global surface topography snapshots. It is also forced with tides, thus adding important ocean features of interest for SWOT scientific objectives, as well as more challenges for the data-driven calibration of KaRIn products (see Section 3.3).

However, the LLC4320 simulation suffers from forcing errors on tides (Arbic et al. [15]), resulting in tidal features that might be stronger than reality, as well as forcing errors on the atmosphere (6-h lag resulting in desynchronization of some diurnal and semi-diurnal barotropic signatures). There are also unrealistic behaviors in polar sea-ice and rare spurious data in coastal regions (because of the sea-ice and bathymetry/shoreline masks). Despite these issues, the LLC4320 MITgcm simulation remains one of the best global models we could use in this work. Because of the errors, the LLC4320-based simulated SWOT products are considered as a “worst case” ocean for our calibration study. The forcing errors leave higher residuals, which would be equivalent to very imperfect tides corrections in flight data from SWOT, especially on internal tides;

- We also used the NEMO-eNATL60 regional model simulation of the North Atlantic at 1/60° from the Institut des Geosciences et de l’Environnement (IGE). Brodeau et al. [16] describe the configuration and the validation performed on this model. Despite its relatively limited geographical coverage (North Atlantic), this model complements our simulations because the topography snapshots compare extremely well with the observations at all scales (i.e., arguably more realistic than the global models above). The model also exists with and without tides, which is very useful to understand the impact of barotropic and baroclinic tides in our local inversions. For the sake of concision, we did not detail the analyses performed with this model as they were generally used to confirm or to modulate some findings from the global models.

Regarding the non-systematic error simulations, we used the default configuration of the SWOT simulator (detailed in [10]). The uncorrelated random noise is generated consistently with the description from the SWOT Project [3], using snapshots of the WaveWatch-III wave model to modulate the noise variance as a function of the significant wave height. The wet troposphere error was generated using the methodology of Ubelmann et al. [17]. The “systematic errors” (namely roll, phase, baseline, timing) were processed separately (Section 2.2).

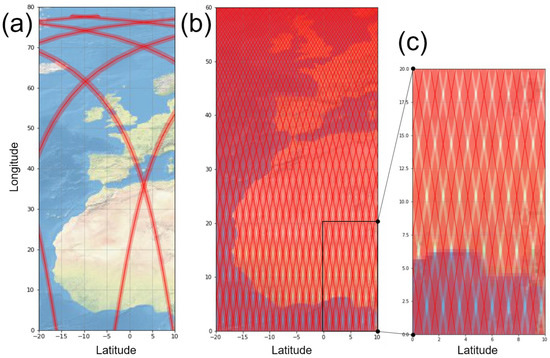

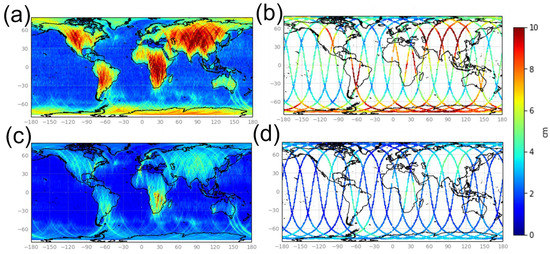

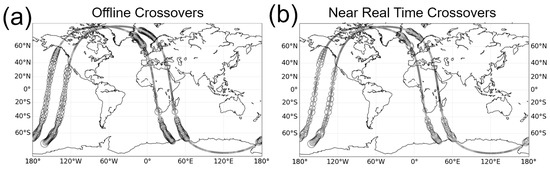

We also simulated two sets of SWOT products for each ocean model (B2 in the blue box from Figure 3): one set for the 21-day science orbit (the orbit parameters were provided by the SWOT Project, and the geometry simulation was performed with the SWOT Scientific Simulator), and one set for the one-day Cal/Val orbit. Having this parallel setup was shown (Dibarboure et Ubelmann [6]) to be very important because the orbit controls the space/time distribution of the SWOT measurements as well as the location and time difference of image-to-image overlaps which are used in our algorithm. This property is shown by Figure 4, where panel (a) shows the very sparse coverage of the one-day Cal/Val orbit. For this orbit, the entire cycle yields only 10 crossover diamonds per pass (half-orbit) and only two crossovers from 63°S to 63°N. In other words, for this orbit, there are ocean segments up to 6500 km without any crossover diamond. This property will be discussed in the following sections. In contrast, the map of panel (b) and the zoom in panel (c) show that the 21-day orbit has a very dense coverage, where crossover diamonds between ascending and descending passes are nearly ubiquitous (approximately 60,000 crossovers per 21-day cycle).

Figure 4.

Coverage of KaRIn products (red area, semi-transparent) and the nadir altimeter (red line). Panel (a) is the one-day or Cal/Val orbit. Panel (b) is the 21-day or science orbit. Panel (c) is a zoom of panel (b) at low latitudes: it emphasizes the rare regions that are not observed by KaRIn and the near ubiquity of crossover diamonds (darker red).

The consequences of this sampling difference between the two orbits are discussed by Dibarboure et Ubelmann [6] as it defines the types of calibration algorithms that can be used. We will see in the next section that it is the reason why we developed two algorithms: the SWOT-only Level-2 algorithm based on crossover diamonds for the 21-day orbit and for hydrology products, and the multi-mission Level-3 algorithm for the ocean and the one-day orbit.

Note that for both orbits, multi-mission crossovers (e.g., when the Sentinel-3 or Sentinel-6 altimeters are in the KaRIn swath) yield millions of kilometers of 1D crossover segments at all latitudes. Dibarboure et Ubelmann [6] showed that even if we limit these 1D crossovers to short time differences (1–3 h), and two external altimeters, multi-mission crossovers already provide a very good coverage. Adding all other altimeters in operation (e.g., CryoSat-2, SARAL, HaiYang-2B/2C, Jason-3 or CFOSAT) yields a massive amount of additional reference points, which can be used as independent measurements of the “ground truth” for data-driven calibration. However, the nadir constellation is limited by the intrinsic noise level of conventional altimeters (i.e., wavelengths larger than 40 km for recent sensors to 70 km for older altimeters).

2.2. Uncalibrated Error Scenarios

To simulate the uncalibrated systematic errors, we developed two reference scenarios with the SWOT Project (B3 in the blue box from Figure 3). The first scenario was based on the spectral allocations of Esteban-Fernandez [3]: for each component, we considered that 100% of the spectral allocation is an actual error (i.e., no margin with respect to allocations). We used an inverse FFT on the reference spectra provided by the SWOT Project, and we generated a one-year realization in the temporal domain (i.e., a time-evolving signal with the right along-track correlation). We repeated the process for each component of Figure 1.

In practice, the following additive cross-track components are generated:

- A bias in each swath originating in the group delay of either antenna (timing error);

- A linear signature in each swath originating in the interferometric phase error;

- A linear signature in both swaths originating in imperfect roll angle knowledge (e.g., gyrometer error);

- A quadratic signature originating in the imperfect knowledge of the interferometric baseline length.

Each of these spectra follows a $k^{-2}$ power law: the power spectrum $S$ decreases as the wavenumber $k$ increases, with the relationship $S(k) \propto k^{-2}$. The PSD is therefore linear with a slope of −2 in a log/log plot. The resulting error contains a continuous mix of large amplitude and low-frequency errors, and smaller amplitude and higher-frequency errors (e.g., a mix of Figure 2a,b). This “allocation” scenario is now the default configuration of the SWOT simulator [11]. In the context of data-driven calibration, we consider this allocation scenario as a worst-case (or pessimistic) because each component is at the acceptable limit given in the breakdown of the SWOT error budget.
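
The inverse-FFT step can be illustrated with a generic power-law spectrum. The sketch below is not the Project's generator: the reference spectra are not reproduced here, the function name and parameters are hypothetical, and the unit-variance normalization is arbitrary. It only shows how random phases combined with a prescribed amplitude spectrum yield a time series with the desired spectral slope.

```python
import numpy as np

def power_law_series(n, dt, exponent=-2.0, seed=0):
    """Random real series of n samples whose PSD follows f**exponent.

    n        : number of samples (an even value is assumed in this sketch).
    dt       : sampling step (e.g., seconds along track).
    exponent : spectral slope of the PSD (-2 for the case discussed above).
    """
    rng = np.random.default_rng(seed)
    freqs = np.fft.rfftfreq(n, d=dt)
    amplitude = np.zeros_like(freqs)
    # Amplitude is the square root of the PSD; the zero frequency is left
    # at zero so that the series has no mean.
    amplitude[1:] = freqs[1:] ** (exponent / 2.0)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=freqs.size)
    spectrum = amplitude * np.exp(1j * phases)
    series = np.fft.irfft(spectrum, n=n)
    return series / series.std()  # arbitrary normalization to unit variance

# A unit-variance realization, to be rescaled to the amplitude of a component.
realization = power_law_series(n=4096, dt=1.0)
```

A different seed gives an independent realization with the same spectrum, which is how one realization per error component can be obtained.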

In contrast, the second scenario used the most recent simulations from the Project, in order to get closer to the expected performance at launch (i.e., lower error because the Project has margins for each component). The second scenario is the “current best estimate” (or CBE) pre-launch error assessment from the SWOT Project. It was first setup in 2017, then revised in 2021 (hence the label CBE21) using more faithful simulations and hardware test outputs. In comparison with the allocations of the first pessimistic scenario, the uncalibrated errors can be smaller by a factor of 2 to 10 for some components and wavelengths.

However, these simulations might arguably underestimate some error sources, or simply not replicate unknown effects that will be discovered on flight data. To that extent, this CBE21 scenario should be considered as optimistic in the context of our data-driven calibration assessment. To use a consistent wording with the first scenario, we will hereafter talk about CBE21 as our best case scenario even though it is not strictly optimal for each error component. To summarize, one might expect the reality of flight-data calibration errors to be higher than our CBE21 scenario and lower than the allocation scenario.

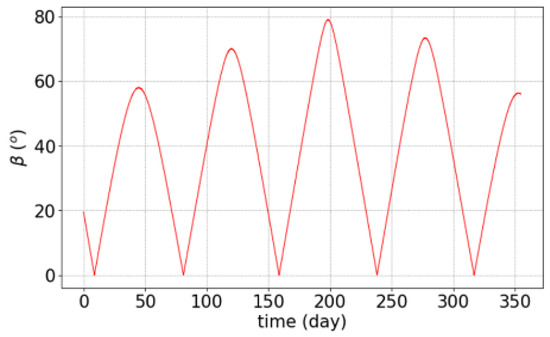

Furthermore, for the CBE21, we use the sum of two simulations provided by the SWOT Project: one simulation for the attitude knowledge error (essentially errors from the star-trackers and platform, attitude reconstruction algorithm), and one simulation for the KaRIn instrument (thermo-elastical distortion of the antennas or mast, thermal snaps, electronics…). Both simulations use a common value for the beta angle that is shown in Figure 5. This angle, between the sun and the SWOT orbit plane, is important as it controls the thermal conditions along the orbit circle (e.g., eclipses) for the platform, attitude sensors, instrument structure, electronics, etc.

Figure 5.

Angle between the sun and the SWOT orbit plane (also known as beta angle) as a function of time over one year.

In addition to the two simulations from the SWOT Project, the random error originating in the precise gyrometer was simulated as it was in the allocation scenario: inverse FFT of the roll spectrum to replicate the random nature of this error. The spectrum itself is robust and based on flight-proven performance from previous missions: the $k^{-2}$ spectrum results from the integration in time of the gyrometer white noise on angular velocities.
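
The link between white noise on angular velocity and a $k^{-2}$ spectrum follows from the standard Fourier property of time integration. In a simplified notation of our own (not taken from [3]), let $\omega(t)$ be the angular velocity error with a flat spectrum $S_\omega(f) = S_0$, and $\theta(t)$ the resulting angle error:

$$\theta(t) = \int_0^t \omega(t')\,\mathrm{d}t' \quad\Rightarrow\quad \hat{\theta}(f) = \frac{\hat{\omega}(f)}{2\pi i f} \quad\Rightarrow\quad S_\theta(f) = \frac{S_0}{(2\pi f)^2} \propto f^{-2}.$$

Since the satellite moves along its track at a nearly constant ground speed $v$, the temporal frequency maps to an along-track wavenumber $k = f/v$, and the same $-2$ slope is obtained in wavenumber space.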

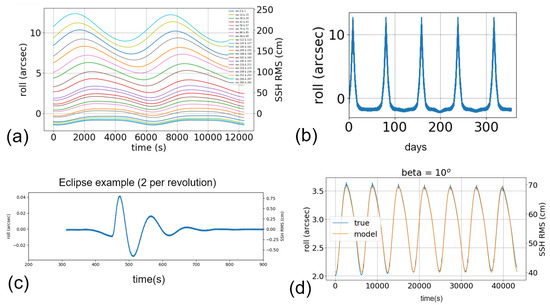

Figure 6 gives an overview of the simulated attitude knowledge error. Each curve in panel (a) shows the roll knowledge error (in arcsec) made along two subsequent revolutions, and the induced topography error (in cm RMS). The colors correspond to the first revolution of each day over a 21-day cycle (and all beta angle configurations). The general shape of these curves shows that the error is dominated by a non-zero mean value up to 10 arcsec (i.e., 2 m RMS on KaRIn topography) and a smooth signal that can be approximated by a handful of harmonics and sub-harmonics of the orbital period (repeating patterns). The amplitude along the orbit circle ranges from 5 cm to tens of centimeters. The mean value and the harmonics also change continuously each day: this change is controlled by the beta angle from Figure 5. This modulation is more visible in Figure 6b, which shows the roll error as a function of time throughout one year. The peaks in this panel correspond to the phases when the beta angle goes to zero (the sun is in the orbit plane). On top of this slowly evolving signal, each transition in and out of eclipse is simulated by the SWOT Project with a transient signature of a few minutes and a small amplitude (e.g., Figure 6c) to approximate the response to thermal snaps. The final output of this first simulation is shown in Figure 6d.
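
The order of magnitude relating a roll error to a topography error can be checked with a first-order sketch. We assume here that a roll knowledge error $\delta\theta$ produces a height error $\delta h \approx x\,\delta\theta$ at cross-track distance $x$, and that each swath spans 10 to 60 km from nadir (consistent with the 50 km swath and 10 km nadir gap of KaRIn). The function name and sampling are hypothetical.

```python
import numpy as np

ARCSEC_TO_RAD = np.pi / (180.0 * 3600.0)

def roll_to_height_rms(roll_arcsec, x_min_km=10.0, x_max_km=60.0, n=1001):
    """RMS height error (m) over one swath for a constant roll error.

    First-order approximation: height error = cross-track distance * angle.
    """
    x_m = np.linspace(x_min_km, x_max_km, n) * 1e3   # cross-track distance (m)
    dh = x_m * roll_arcsec * ARCSEC_TO_RAD           # height error (m)
    return np.sqrt(np.mean(dh**2))

rms_10_arcsec = roll_to_height_rms(10.0)
```

With these assumptions, a 10 arcsec roll error gives about 1.8 m RMS, which is consistent with the 2 m order of magnitude quoted above.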

Figure 6.

Simulation of the systematic attitude knowledge error (excluding the random component from the gyrometer).

The second simulation used as an input for our study is for the KaRIn instrument. Our input data was generated by the structural thermal optical (STOP) simulator, which is used by the Project to perform end-to-end analyses of in-flight temperatures and deformations of external structures. This simulator reproduces many complex effects and error sources that are beyond the scope of this manuscript.

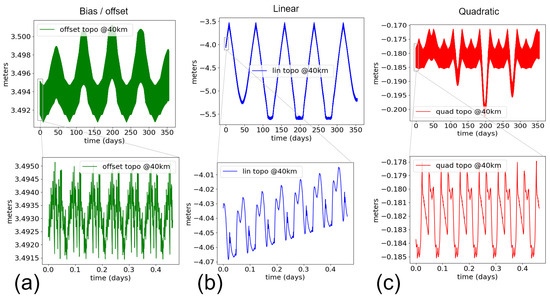

As far as data-driven calibration is concerned, Figure 7 gives an overview of the latest iteration of the STOP simulation (updated in 2021). In practice, the total simulated STOP21 error is essentially the sum of three components: a bias for each swath, a linear cross-track component for each swath, and a quadratic cross-track component. There is also a residual with a centimeter-level mean value (to be tackled by the Phase Screen algorithm) and a sub-millimeter evolution around the mean. By construction, this residual is not corrected by our algorithm, so it will be a small contributor to the error after our calibration.

Figure 7.

Breakdown of the systematic topography errors from the KaRIn instrument STOP21 simulations (unit: meter RMS). Panel (a) is the bias per swath (timing/group delay error). Panel (b) is for the linear component (instrument roll knowledge plus phase errors). Panel (c) is for the quadratic component (interferometric baseline length error). For each panel, the top figure shows the uncalibrated error as a function of time over one year, and the bottom figure is a zoom over a period of 11 h (13 passes).

Panel (a) of Figure 7 shows the temporal evolution of the bias root mean square error (RMSE) over one year (top figure) and a zoom over the first 11 h. Panels (b) and (c) are the same for the linear and quadratic components. These figures illustrate very well the order of magnitude of the topography signature, as well as the four time-scales involved:

- There is a large non-zero mean for this one-year simulation. In practice, this yearly average might exhibit small inter-annual variations. To illustrate, the Sun controls the illumination and thermal conditions of the satellite. The Sun also has an 11-year cycle. This solar cycle might show up in SWOT as very slowly evolving conditions. In other words, our “non-zero mean” could actually be found to be a very slow signal from the natural variability of the Sun. This yearly time-scale indicates that any calibration performed on a temporal window of one 21-day cycle or less should yield a non-zero mean;

- Slow variations with time-scales of the order of a few weeks to a few months. These modulations are caused by changes in the beta angle of Figure 5. Note that the relationship with the beta-angle can be quite complex, hence the need for a sophisticated simulation such as STOP21. This time-scale indicates that any calibration performed on a temporal window of a few hours or less will observe a linear evolution of the uncalibrated errors;

- Repeating patterns with a time-scale of the order of 15 min to 2 h. These signatures are clearly harmonics of the orbital revolution period (e.g., thermal conditions changing along the orbit circle in a cyclic pattern). This time-scale indicates that the calibration algorithm can exploit the repeating nature of some error signatures using orbital harmonic interpolators rather than basic 1D interpolators;

- High-frequency components with a time-scale of a few minutes or less (essentially high-frequency noise or sharp discontinuities in the curves of Figure 7). These high-frequency components are also broadband because they are affecting many along-track wavelengths (as opposed to the harmonics of the repeating patterns). This time scale is important because it might affect the ocean error budget (requirement from 15 to 1000 km, i.e., 2.5–150 s), either globally if the error is ubiquitous, or locally at certain latitudes if the high-frequency error is limited to specific positions along the orbit circle.
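
The idea of exploiting repeating patterns with orbital harmonics, mentioned in the third item above, can be sketched as a least-squares fit of a mean plus a few sine and cosine terms at multiples of the orbital period. This is a generic illustration rather than the interpolator of the operational algorithm; the function name, the number of harmonics, and the 6000 s period used in the example are assumptions.

```python
import numpy as np

def fit_orbital_harmonics(t, y, period, n_harmonics=3):
    """Least-squares fit of y(t) with a mean and harmonics of `period`.

    Returns (coeffs, fitted) with coeffs ordered as
    [mean, cos1, sin1, cos2, sin2, ...].
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    columns = [np.ones_like(t)]
    for k in range(1, n_harmonics + 1):
        w = 2.0 * np.pi * k / period
        columns += [np.cos(w * t), np.sin(w * t)]
    design = np.column_stack(columns)
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coeffs, design @ coeffs

# Synthetic example: three revolutions of an illustrative 6000 s period.
period = 6000.0
t = np.linspace(0.0, 3 * period, 600, endpoint=False)
w = 2.0 * np.pi / period
y = 0.10 + 0.05 * np.cos(w * t) + 0.02 * np.sin(2 * w * t)
coeffs, fitted = fit_orbital_harmonics(t, y, period)
```

Compared with a basic 1D interpolator, such a model can fill gaps between calibration points along the orbit circle, provided that the signal is indeed dominated by a few harmonics.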

Furthermore, Table 1 gives an overview of the error RMS for each component and for each time scale. These values should be compared to the SWOT hydrology requirement and error budget. The total requirement is 10 cm RMS, and the primary contributor is the allocation for systematic errors (7.5 cm RMS). Table 1 shows that many components are much larger than this requirement. That is the reason why a data-driven calibration is required for SWOT to meet its hydrology requirement. The rationale is clearly explained in the SWOT error description document from Esteban-Fernandez [3].

Table 1.

Synthesis of the uncalibrated error RMS from STOP21 for different time scales (columns), and for all the components (rows). Unit: cm.

For oceanography, the requirements are articulated as a 1D (along-track) power spectral density (PSD) from 15 to 1000 km. The last column of Table 1 shows that, for these scales, SWOT does not need a calibration algorithm to meet its ocean requirements: the content of STOP21 is below its spectral allocation for all wavelengths from 15 to 1000 km (not shown), and even significantly below for most components. To that extent, the STOP21 simulation confirmed the statements from Esteban-Fernandez [3].

Nevertheless, because the presence of low frequency bias/linear/quadratic signals would leave decimetric to metric residual errors in the cross-track direction (i.e., alter 2D derivatives such as geostrophic velocities, vorticity, etc.), it would be quite beneficial to apply a data-driven calibration of ocean products as well. Such an ocean calibration should be focused only on wavelengths larger than 1000 km, in order not to alter the SWOT products in the critical wavelength range of 15 to 1000 km where requirements apply.

To summarize, in the sections below, we use two “uncalibrated error” scenarios (B3 in Figure 3). The spectral “allocation” scenario is our pessimistic/worst case, which assumes that each systematic error source is set to 100% of its theoretical allocation. The “current best estimate 2021” scenario is a more realistic (arguably optimistic/best case) simulation input that was built with the SWOT Project. The uncalibrated errors can be as large as a few meters (two orders of magnitude larger than the SWOT requirements) and they have four different time-scales: inter-annual, beta angle variations, orbital harmonics, and periods shorter than 3 min (approximately 1000 km).
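
The correspondence between along-track wavelengths and time scales used in this section is a simple division by the ground-track speed. The sketch below assumes a speed of roughly 6.5 km/s, which is our approximation and is not stated in this paper; the function name is hypothetical.

```python
GROUND_SPEED_KM_S = 6.5  # assumed approximate ground-track speed (km/s)

def wavelength_to_period_s(wavelength_km, speed_km_s=GROUND_SPEED_KM_S):
    """Time (s) needed by the satellite to fly over one along-track wavelength."""
    return wavelength_km / speed_km_s

short_scale_s = wavelength_to_period_s(15.0)    # about 2.3 s
long_scale_s = wavelength_to_period_s(1000.0)   # about 154 s, i.e., under 3 min
```

With this assumed speed, the 15 to 1000 km range corresponds to roughly 2.3 to 154 s, in line with the rounded values of 2.5–150 s and 3 min used in the text.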

The data-driven algorithm aims to reduce the three systematic error components (bias, linear, quadratic) in each swath, for time scales ranging from 3 min to slower components. This is required to meet the hydrology requirements of 7.5 cm RMS (the calibrated error must be smaller than this threshold). Over the ocean, no calibration is required to meet the 1D along-track spectral requirements from 15 to 1000 km, but a data-driven calibration would be beneficial to reduce the metric-level cross-track errors and biases, especially for time-scales longer than 3 min where the amplitude is large.

3. Method: Data-Driven Calibration and Practical Implementation

This section gives an overview of the data-driven calibration algorithms. They are used on the input data of Section 2 to generate the results of Section 4. In Section 3.1, we first make a brief synthesis of the calibration principles detailed in previous papers [4,5,6], and we recall the main pitfall of such empirical algorithms, then we present two end-to-end calibration schemes (B4 in the red box from Figure 3). The first algorithm (Section 3.2) is a part of the operational SWOT ground segment (Level-2): its goal is to ensure that hydrology requirements are met in a robust and self-sufficient way (SWOT data only). The second algorithm (Section 3.3) is a research counterpart that is operated in a multi-mission context (Level-3): its goal is to further improve the performance over the ocean, and in the very specific case of the one-day orbit.

3.1. Data-Driven Calibration: Basic Principles

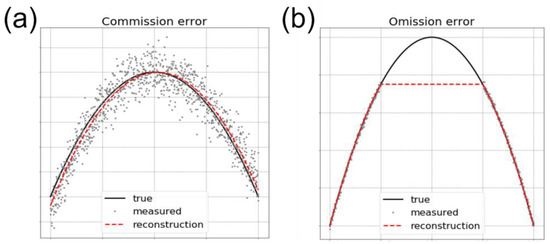

All data-driven calibration algorithms share the same basic principle. The systematic error sources have a signature on the measurements of water surface topography that is known analytically. Therefore, it is possible to adjust an analytical model on the measured topography, and to remove it, using just the SWOT topography product. This is why the algorithm is often called empirical, or data-driven.

To illustrate, the roll error creates a linear signature in the cross-track direction. The simplest way to remove the error would be to adjust a linear model in the cross-track direction for a given time step, or for a given time range. By repeating the process for each time step, and by removing the adjusted value, any signature from roll would be effectively removed. This basic strategy is called the “direct” retrieval method in [6]. Note that it can be used wherever the KaRIn image is almost complete (few missing/invalid pixels). In other words, it can be used almost everywhere over the ocean and large lakes. However, it cannot be used over most inland segments: the KaRIn topography will be usable only in the presence of inland water (rivers, reservoirs, floodplains…), which results in extremely sparse inland coverage: not enough to use a data-driven calibration.
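As an illustration of the direct retrieval described above, the following minimal sketch adjusts a bias and a linear cross-track model to each along-track line of a KaRIn-like image, then removes it. It is a simplified example under stated assumptions, not the operational implementation: the function name `direct_retrieval`, the array layout (lines by cross-track pixels, NaN for invalid pixels), and the `min_valid` threshold are hypothetical choices made for this sketch.

```python
import numpy as np

def direct_retrieval(ssha, xtrack_km, min_valid=10):
    """Fit bias + linear cross-track model to each along-track line.

    ssha      : (n_lines, n_pixels) topography anomaly, NaN where invalid
    xtrack_km : (n_pixels,) signed cross-track distance of each pixel
    min_valid : hypothetical minimum number of valid pixels per line

    Returns the (n_lines, 2) coefficients [bias, slope] and the image
    with the adjusted model removed. Lines with too few valid pixels
    are left as NaN (this is the sparse inland case).
    """
    design = np.column_stack([np.ones_like(xtrack_km), xtrack_km])
    coeffs = np.full((ssha.shape[0], 2), np.nan)
    for i, line in enumerate(ssha):
        valid = np.isfinite(line)
        if valid.sum() < min_valid:
            continue  # image too incomplete for a direct adjustment
        coeffs[i], *_ = np.linalg.lstsq(design[valid], line[valid], rcond=None)
    corrected = ssha - coeffs @ design.T
    return coeffs, corrected
```

Note that this plain least-squares adjustment absorbs any true geophysical slope across the swath into the retrieved coefficients, which is exactly the leakage problem discussed next.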

The pitfall of this data-driven approach is that KaRIn has a very narrow field of view (2 × 50 km with a near nadir gap of 2 × 10 km). At these scales, the actual geophysical slope (ocean and inland waters) is not zero. There is an ambiguity between the systematic errors and the true topography of interest: the two signatures are by no means numerically orthogonal. As a result, a poorly implemented algorithm would alter, or even destroy entirely some signals of interest. The same effect would happen in the presence of geophysical errors on KaRIn data: wet troposphere path-delay residuals left by an imperfect radiometer correction, sea-state bias with a cross-track signature… Dibarboure and Ubelmann [6] extensively discuss this so-called leakage of geophysical signatures in the calibration algorithms.

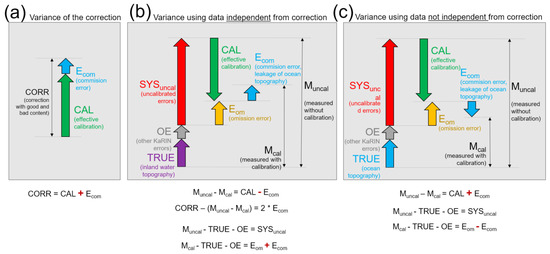

The actual challenge of the data-driven calibration is therefore not to remove the errors, but to isolate them from the signal of interest. To achieve this goal, it is necessary to orthogonalize the numerical problem, i.e., to leverage some properties of the signal and errors so that they become less ambiguous. In practice, Dibarboure and Ubelmann [6] describe and discuss three different methods to achieve a better separation:

- M1: One can use a first guess or prior for the true ocean topography (or sea surface height, SSH). The rationale is to remove as much ocean variability as possible with external data, in order to reduce the ambiguity with the systematic errors.

The first step is to not use the SSH, but to remove a mean sea surface (MSS) to cancel out the geoid and the mean dynamic topography (MDT). Similarly, instead of the raw sea surface height SSH, it is very important to apply all geophysical corrections and to use a corrected SSH anomaly (SSHA). To illustrate, by removing a model for the barotropic tides, we remove most of the ambiguity between this geophysical content of the SSH, and the systematic errors, which mitigates the leakage of tides into the calibration.

The second step is to reduce the influence of the ocean circulation and mesoscale. To do this, mono-mission algorithms can build a first-guess (or prior) from the SWOT nadir altimeter (M1a). Similarly, multi-mission algorithms can leverage higher-resolution topography maps derived from the nadir altimeter constellation (M1b), or even topography forecasts from operational ocean models;

- M2: One can use image-to-image differences instead of a single KaRIn product. When the time lag between two images is shorter than correlation time scales of the ocean, using a difference between two pixels will cancel out a fraction of the ocean variability (the slow components). The closer in time the two images are, the more variability is removed with this process.

Furthermore, the residual ocean variability that remains in the image-to-image difference is not only smaller in amplitude, but also shorter in spatial scales. Because the field of view of SWOT is only 120 km from swath edge to swath edge, having smaller geophysical features means we improve the orthogonality between the errors and ocean.

In practice, Dibarboure and Ubelmann [6] describe three algorithms based on this differential strategy. The first method (M2a) is based on crossover differences: when an ascending pass and a descending pass meet, there is a diamond-shaped region where they overlap. This algorithm can be used for all orbits, and when the orbit revisit time is 21 days or more, most of the SWOT coverage is actually within a crossover diamond (see Figure 4c). In contrast, for the one-day orbit, there are only 10 crossovers per pass, and they can be thousands of kilometers away from one another (see Figure 4a). However, for the one-day orbit, the SWOT mission will revisit exactly the same pixels every day, so it becomes possible to perform an image-to-image difference everywhere. Leveraging this property of the one-day orbit is the basis of the collinear retrieval algorithm (M2b) from [6].

The third differential strategy is the sub-cycle algorithm (M2c): it was developed for a backup orbit option of SWOT where adjoining swaths are only one day apart from one another (i.e., one-day orbit sub-cycle). Yet, at the time of this writing, SWOT will not use this orbit, so we will not discuss the sub-cycle algorithm here. It is still noteworthy because it might be interesting for future swath-altimeter missions with different orbit properties (see Section 5.5);

- M3: One can use a statistical knowledge of the problem to reduce the ambiguity. Qualitatively, most ocean features look very different from a 1000+ km bias between the left/right swaths of KaRIn, or a quadratic shape aligned with the satellite tracks, or the thin stripes of high-frequency systematic errors. From a numerical point of view, the 3D ocean decorrelation scales in space and time are very different from the covariance of swath-aligned systematic errors. Similarly, the SWOT errors have an along-track/temporal spectrum which is known from theory and hardware testing, and which can also be measured from uncalibrated data (see Section 5.4). It is therefore possible to replace simple least-squares inversions by Gauss-Markov inversions or Kalman filters that exploit this statistical information. This was shown by [6] to reduce significantly the leakage of the ocean variability into the calibration parameters. The process can be used either during the local retrieval (M3a) such as in a crossover region, or it can be used to better interpolate between subsequent calibration zones (M3b).
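To make the difference between a plain least-squares adjustment and a Gauss-Markov inversion (M3) more tangible, the sketch below estimates calibration parameters from one cross-track line while describing the residual ocean signal by a covariance matrix. This is a generic textbook Gauss-Markov estimator, not the configuration of [6]: the helper names `gaussian_cov` and `gauss_markov`, the Gaussian shape of the ocean covariance, and all numerical values in the usage comments are assumptions made for illustration.

```python
import numpy as np

def gaussian_cov(x_km, variance, length_km):
    """Hypothetical ocean covariance: Gaussian decorrelation in distance."""
    d = x_km[:, None] - x_km[None, :]
    return variance * np.exp(-0.5 * (d / length_km) ** 2)

def gauss_markov(y, H, prior_cov, obs_cov):
    """Gauss-Markov estimate of theta for y = H @ theta + ocean + noise.

    prior_cov : covariance of the systematic-error parameters (theta)
    obs_cov   : covariance of everything that is NOT the systematic error
                (residual ocean variability plus random noise)
    Returns the estimate and its posterior error covariance.
    """
    S = H @ prior_cov @ H.T + obs_cov              # covariance of y
    gain = np.linalg.solve(S, H @ prior_cov).T     # prior_cov @ H.T @ inv(S)
    theta = gain @ y
    post_cov = prior_cov - gain @ H @ prior_cov
    return theta, post_cov

# Usage idea (all values illustrative):
#   H        = np.column_stack([np.ones_like(x), x])      # bias, linear
#   prior    = np.diag([1.0, 1e-4])                       # m^2, (m/km)^2
#   obs_cov  = gaussian_cov(x, 1e-4, 100.0) + 1e-4 * np.eye(x.size)
#   theta, post_cov = gauss_markov(ssha_line, H, prior, obs_cov)
```

The posterior covariance returned here plays the role of the uncertainty attached to each local calibration estimate. The estimator only performs as well as the covariance models it is given, which is why such variants are described as more sophisticated but also more fragile.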

The calibration model adjustment is performed in so-called “calibration zones”. For various reasons, these zones are not ubiquitous. There can be gaps between them (e.g., between subsequent crossovers in Figure 4c). Conversely, when multiple algorithms are used, the calibration zones of two methods can overlap. To that extent, one needs to interpolate/blend the local calibration estimates into a unique and global calibration dataset.

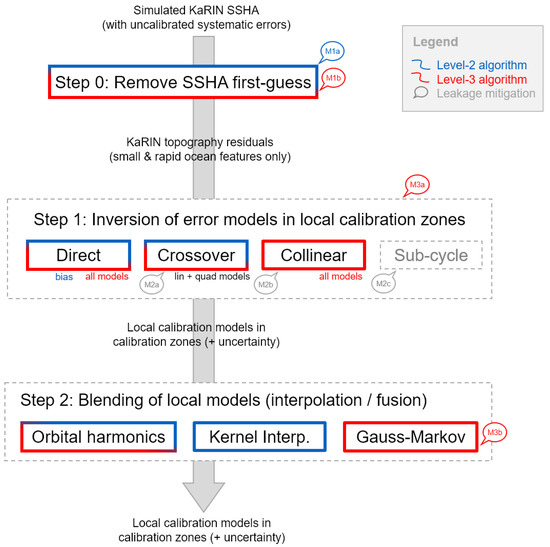

Consequently, the overall calibration is organized as in the schematics of Figure 8. There is a three-step process. Step 0 removes as much geophysical content as possible from KaRIn measurements (e.g., large mesoscale) using an ocean prior or first-guess. At the end of step 0, only the high-frequency, high-wavenumber ocean features remain in the calibration input. Step 1 activates one or two local calibration retrieval methods on KaRIn images, or on image-to-image differences. At the end of step 1, we collect a series of calibration models per error component (bias, linear, quadratic) in so-called calibration zones (e.g., crossover diamond) with an uncertainty (e.g., error covariance). Then step 2 performs the final fusion of the local calibration models into a global calibration that can be applied to reduce the systematic errors over any region, surface type, and target. This correction is also provided with an uncertainty (or quality flag).

Figure 8.

Schematics of the end-to-end calibration scheme. Each rectangle is a processing step. The blue items are for the Level-2 algorithm sequence, and the red items are for the Level-3 algorithm sequence.

As discussed above, the leakage mitigation methods M1 to M3 exist in different flavors. This results in two very different algorithm sequences. The blue color in Figure 8 is for the Level-2 algorithm. This sequence is designed for the SWOT ground segment: it secures the hydrology error requirements; it must be based on SWOT data only (KaRIn + nadir) to ensure that the mission is self-sufficient; and it must be resilient to inaccurate pre-launch assumptions (e.g., it cannot be affected by incorrect spectra or decorrelation functions). In contrast, the red color is for the Level-3 algorithm. This sequence is operated in a multi-mission and non-operational context so it can leverage as many external datasets as needed. It is also designed as a research processor so it can use more sophisticated but fragile variants with 3D covariance functions and in-flight measured spectra. The Level-2 sequence is detailed in Section 3.2; and the Level-3 algorithm is detailed in Section 3.3. Their output performances are described in Section 4.1 and Section 4.2, respectively.

3.2. Ground Segment Calibration Algorithm Sequence (Level-2)

This algorithm sequence uses the blue boxes of Figure 8. In processing step 0, we use the nadir altimeter content as a prior of the sea surface height anomaly (SSHA). In processing step 1, we use the direct method to calibrate the bias in each swath, and the crossover method for the other components (linear and quadratic). The image-to-image difference is computed for crossover regions, and the linear and quadratic models are adjusted on the difference. This results in a series of local calibration models for each component. Then in processing step 2, we inject these local models in an orbital harmonics interpolator to retrieve the repeating patterns along the orbit circle. Lastly, we interpolate the residual with a simple kernel interpolator (least squares, weighted with each error bar, Gaussian kernel, 1000 km cut-off). Because the interpolator sometimes encounters extremely long segments (10,000 to 20,000 km), it switches to linear interpolation when the kernel is smaller than the distance between subsequent crossovers.

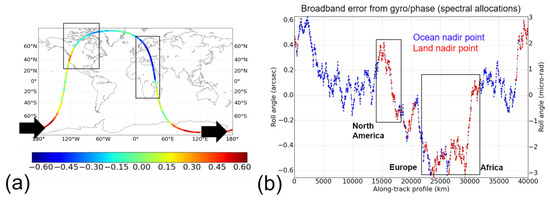

Figure 9 shows an example of the uncalibrated error (linear component) for one arbitrary revolution. Panel (a) shows its overall geometry: the revolution starts in the South Pacific, crosses the ocean up to North America, then it goes through Greenland, Europe and Africa. Then it crosses the Indian Ocean and Southern Ocean, and it ends over Antarctica. Panel (b) shows, as a function of time, the evolution of the uncalibrated error (linear component): the blue dots are for the ocean, and the red ones for the inland segments. As expected from the spectral allocation scenario (K−2 power law), the error contains a mix of large-scale and large-amplitude features, and rapid changes with a smaller amplitude. In addition to the curve of panel (b), this revolution is also affected by a very large signal from the attitude knowledge error (e.g., Figure 6a) that is a nearly perfect sine function at the orbital revolution period.

Figure 9.

Overview of the uncalibrated roll (linear component of the error) for one arbitrary revolution. Panel (a) shows the geographical location and the roll error in arcsec. Panel (b) shows the uncalibrated error (linear component) for the “spectral allocation” scenario.

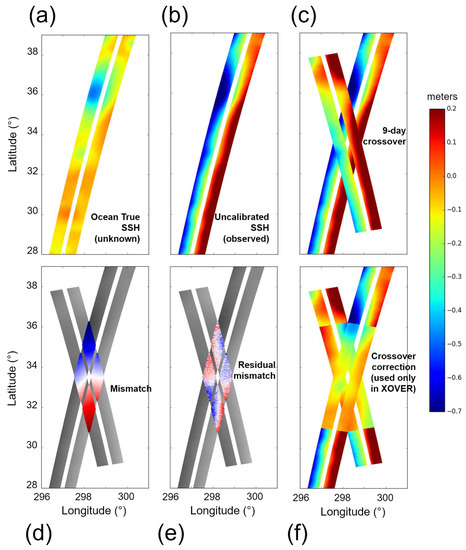

Figure 10 shows the step-by-step inversion for an arbitrary crossover region. Panel (a) is the starting point and final objective (to retrieve this simulated ground truth). Adding the systematic errors in panel (b) completely skews the KaRIn images by tens of centimeters. By forming the crossover difference on the overlapping diamond (panels c and d), we can then use the difference as the input for a least-squares inversion. In the inversion, we adjust the bias, linear and quadratic analytical models for each swath and each pass. By construction, the least squares minimize the residual variance in panel (e). If we apply the local calibration model, we observe in panel (f) that we correct most of the input errors: near the crossover, the retrieved SSH matches the simulated ground truth of panel (a). The algorithm properly isolated the systematic error signatures from the signal of interest.

Figure 10.

Step-by-step inversion of the crossover retrieval method. Panel (a) shows a segment of KaRIn image without any error (simulated ground truth from the GLORYS model). Panel (b) is the same segment when we add the random and systematic errors. Panel (c) is when we add an overlapping KaRIn image from 9 days before. Panel (d) is the image-to-image difference for the overlapping diamond between the two images from panel (c). Panel (e) is the residual mismatch after we adjust the linear and quadratic models for each image. Panel (f) is when the adjusted model is applied in each swath as a local calibration.
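The crossover inversion illustrated in Figure 10 can be sketched as a single least-squares problem on the image-to-image difference. The example below is a deliberately simplified version: it adjusts one linear and one quadratic term per pass over the full swath width (the text above adjusts them per swath), it ignores the nadir gap, and it only solves for the difference of the two biases because a difference of images cannot separate them. The function name `crossover_inversion` and the returned dictionary keys are hypothetical.

```python
import numpy as np

def crossover_inversion(diff, x_asc, x_desc):
    """Least-squares adjustment on a crossover difference.

    diff   : ascending minus descending topography on the overlap pixels
    x_asc  : cross-track distance of each pixel in the ascending image
    x_desc : cross-track distance of the same pixel in the descending image

    Model (per pixel):
      diff = bias_diff + lin_asc*x_asc + quad_asc*x_asc**2
                       - lin_desc*x_desc - quad_desc*x_desc**2
    """
    design = np.column_stack([
        np.ones_like(x_asc),      # bias difference (only this is observable)
        x_asc, x_asc ** 2,        # ascending pass: linear, quadratic
        -x_desc, -x_desc ** 2,    # descending pass: linear, quadratic
    ])
    params, *_ = np.linalg.lstsq(design, diff, rcond=None)
    names = ["bias_diff", "lin_asc", "quad_asc", "lin_desc", "quad_desc"]
    return dict(zip(names, params))
```

The two passes can be separated because each pixel of the diamond has a different cross-track coordinate in each image, so the ascending and descending columns of the design matrix are not collinear. Any ocean variability that does not cancel in the difference still leaks into the adjusted parameters.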

Furthermore, the crossover diamonds are nearly ubiquitous for the 21-day orbit. This is shown by the darker regions of Figure 4c, and quantified in ([6], Figure 17). In practice for the example revolution of Figure 9, we obtain the dense crossover coverage of Figure 11a. For each crossover region, we get an estimate of each component of the systematic error. For the Level-2 algorithm, each crossover yields only a scalar value and a scalar uncertainty for each component. For the Level-3 algorithm below, the same inversion can yield a 1D segment instead of a scalar, and a covariance error instead of a scalar uncertainty.

Figure 11.

Interpolation of local crossover calibrations into a global correction. Panel (a) shows the location of each crossover diamond (circles). Panel (b) is the orbital harmonic interpolator (adjustment of sine functions with a frequency that is a multiple of the orbital revolution frequency) used on the local crossover estimates (pink dots + vertical error bar) to retrieve the uncalibrated error (black for inland segments, blue for ocean segments). Panel (c) shows the final kernel-based interpolation for the broadband (non-harmonic) signals. The local crossover estimates are the green dots (with vertical error bars). The interpolated value is the black line. The thin grey line is the residual error (difference between the red/blue dots and the black line).

The local crossover model is then injected in the interpolation processing step (Figure 11). For the Level-2 algorithm, we use two subsequent interpolations. Firstly, we correct for the massive (up to 5 m) orbital harmonics and constant signals using an interpolator with sine functions whose frequencies are set on multiples of the orbital revolution frequency (hereafter orbital harmonic interpolator). Because the latitude coverage of ocean regions is very different from pass to pass, we compute the harmonics interpolation over a window of 4 revolutions (eight passes) in order to ensure that all latitudes are observed for ascending and descending crossover segments. This parameter is a trade-off between the performance (a larger time window yields better results) and the practical constraints of operating the algorithm in the ground segment (see Section 5.2.3). The result of this sub-step is shown in panel (b): the input crossover points are the pink dots with a vertical error bar, the estimated harmonics is the red curve, which approximates the true error (black/blue curve) very well. Once the harmonic interpolation has been performed, only the broadband residual remains. It is mitigated by the second interpolator (Figure 11c).
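A minimal sketch of such an orbital harmonic interpolator is given below. It adjusts a constant plus sine and cosine terms at integer multiples of the orbital revolution frequency, using a least-squares fit weighted by the error bar of each crossover estimate. The function names, the default number of harmonics, and the orbital period used in the usage comment are assumptions made for this example; the text above does not specify how many harmonics are adjusted.

```python
import numpy as np

def harmonic_design(t, period, n_harmonics):
    """Design matrix: constant, then cos/sin pairs at k / period."""
    cols = [np.ones_like(t)]
    for k in range(1, n_harmonics + 1):
        omega = 2.0 * np.pi * k / period
        cols += [np.cos(omega * t), np.sin(omega * t)]
    return np.column_stack(cols)

def fit_orbital_harmonics(t_obs, values, sigma, period, n_harmonics=2):
    """Weighted least-squares fit on local crossover estimates.

    t_obs, values, sigma : time, estimate and error bar of each crossover
    period               : orbital revolution period (same unit as t_obs)
    n_harmonics          : hypothetical choice, not taken from the paper
    """
    design = harmonic_design(t_obs, period, n_harmonics)
    w = 1.0 / sigma                       # weight each row by its error bar
    coeffs, *_ = np.linalg.lstsq(design * w[:, None], values * w, rcond=None)
    return coeffs

def evaluate_harmonics(t, coeffs, period):
    """Evaluate the adjusted harmonics anywhere (ocean or inland)."""
    n_harmonics = (coeffs.size - 1) // 2
    return harmonic_design(t, period, n_harmonics) @ coeffs

# Usage idea: fit over a window of 4 revolutions, then subtract
#   coeffs = fit_orbital_harmonics(t_xover, roll_xover, err_xover, period)
#   residual = roll_xover - evaluate_harmonics(t_xover, coeffs, period)
```

Because the harmonic model is global over the window, it can be evaluated over land even though every input estimate comes from an ocean crossover.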

To mitigate the broadband signal, we use the Gaussian interpolator (also known as Gaussian smoother) that both interpolates and low-pass filters a global correction (black line) from the crossovers (green dots with their vertical error bar). The resulting residual (thin grey line) is very small in comparison with the uncalibrated broadband error (blue/red dots). Over the ocean (blue dots in panel c), the interpolator can retrieve even rapid changes in the uncalibrated error, but it is sometimes misled by imperfect crossovers (e.g., leakage of ocean variability, coastal crossovers, sea-ice region…). In contrast, the performance over land is dominated by the lack of local calibration zones (only ocean crossovers can be inverted). As a result, the error increases when KaRIn gets further away from the ocean.
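The broadband interpolation step can be sketched as a Gaussian-weighted average of the crossover residuals, with a fallback to linear interpolation across long gaps. In this example the kernel width `kernel_km` stands in for the 1000 km cut-off, and the exact correspondence between the two is an assumption. The rule used to trigger the linear fallback (gap between the two surrounding crossovers larger than the kernel width) is our reading of the description above, and the function name is hypothetical.

```python
import numpy as np

def gaussian_interpolator(s_target, s_obs, values, sigma, kernel_km=1000.0):
    """Interpolate and low-pass filter local calibration residuals.

    s_target : along-track positions (km) where the correction is needed
    s_obs    : sorted along-track positions (km) of the crossover estimates
    values   : residual estimates (orbital harmonics already removed)
    sigma    : error bar of each estimate
    """
    s_target = np.asarray(s_target, dtype=float)

    # Fallback: plain linear interpolation between surrounding crossovers.
    linear = np.interp(s_target, s_obs, values)

    # Gaussian kernel, each estimate also weighted by 1 / sigma^2.
    d = s_target[:, None] - s_obs[None, :]
    w = np.exp(-0.5 * (d / kernel_km) ** 2) / sigma[None, :] ** 2
    wsum = np.maximum(w.sum(axis=1), np.finfo(float).tiny)
    smooth = (w @ values) / wsum

    # Gap between the two crossovers that bracket each target position.
    idx = np.clip(np.searchsorted(s_obs, s_target), 1, s_obs.size - 1)
    gap = s_obs[idx] - s_obs[idx - 1]
    return np.where(gap > kernel_km, linear, smooth)
```

Over a long inland segment, the output is simply a straight line between the last ocean crossover before the coast and the first one after it, which is consistent with an error that grows with the distance to the ocean.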

3.3. Research Calibration Algorithm Sequence (Level-3)

This algorithm sequence uses the red boxes of Figure 8. This sequence was initially designed for the one-day orbit, and more specifically because of the very sparse crossover coverage discussed in Section 3.1. Indeed, we will see in the next section that the Level-2 algorithm might not be able to meet the hydrology requirements for this orbit in worst-case scenarios.

The main strength of the Level-3 algorithm sequence is that it is operated in a multi-mission and non-operational context so it can leverage as many external datasets as needed (e.g., mitigation method M1b). It is also designed as a research processor, so it can use more sophisticated but fragile variants that exploit covariance functions and in-flight measured spectra, and it can combine crossovers with other retrieval methods (e.g., to merge M2a and M2b) in a “statistically optimal” interpolator (mitigation methods M3a and M3b). Because of this, the Level-3 sequence is well suited to improve the calibration over the ocean, as opposed to the Level-2 algorithm, which only focuses on inland hydrology requirements. Furthermore, the Level-2 algorithm is designed not to affect the small ocean scales (as discussed in Section 2.2, ocean requirements from 15 to 1000 km are met without data-driven calibration). In contrast, the Level-3 is not bound by the limits of the ground segment, thus it can try to reduce the error even for wavelengths smaller than 1000 km.

Like in the Level-2 sequence, processing step 0 uses a prior to reduce the amount of ocean variability (barotropic signals or mesoscale) before the calibration itself. However, in the Level-3, our prior combines the nadir altimeter of SWOT, with a similar content from all other altimeters in operations. The process used to merge the altimeter datasets was developed by Le Traon et al. and Bretherton [18,19]. In their analysis of multi-altimeter maps, Ballarotta et al. [20] illustrate that such a prior captures most of the large mesoscale variance (e.g., wavelengths of 130 km in the Mediterranean Sea or 200 km at mid-latitudes). In practice, our mapping algorithm is a variant of the operational algorithm described by Dibarboure et al. [21]. The two specificities of our prior are that:

1/The coverage is limited to the location and time of KaRIn data (not a global map of SSHA). This choice makes it possible to retrieve smaller and faster features that are often smoothed out in global maps;

2/The local mean is defined by the nadir altimeter from SWOT in an effort to isolate the data-driven calibration of KaRIn’s systematic errors (which by definition affect KaRIn only) from all other sources of errors and ocean variability (which also affect SWOT’s nadir altimeter).

Processing step 1 is then to operate three of the four inversion schemes described by Dibarboure and Ubelmann [6]: for the 21-day orbit, we combine the direct retrieval method and the crossover retrieval method, and for the one-day orbit we combine the direct method and the collinear method.

As discussed in Section 3.1, the advantage of the direct method is its simplicity and universality (it requires a single KaRIn image). So, this method can be used everywhere, on all orbits. The downside is that it is prone to leakage from ocean variability in the correction (the M1 prior is never perfect). In the Level-3 sequence, the leakage is mitigated because we use covariance functions (mitigation method M3a) to reduce the ambiguity between the topographic signatures of the systematic errors and the ocean. This approach is extensively discussed in [6].

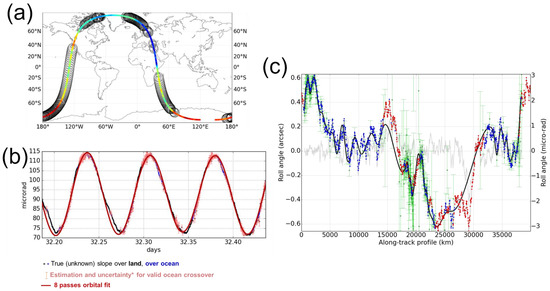

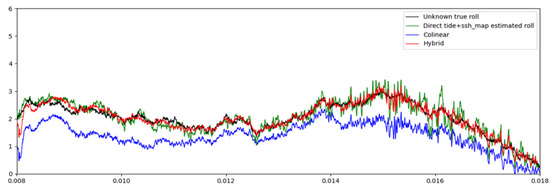

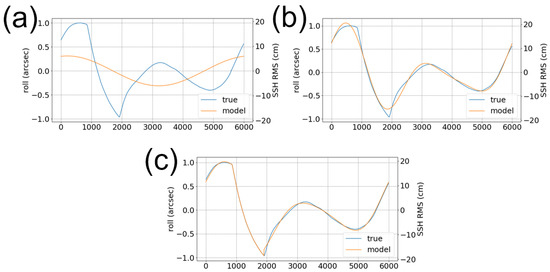

Nevertheless, Figure 12 shows that a small residual leakage remains. The black line in panel (a) is the uncalibrated roll error that the direct method is trying to retrieve. This KaRIn segment is located in the North Atlantic (panel b). This particular segment has a mean value of 2.5 arcsec (i.e., the KaRIn image is tilted by tens of cm) with slow variations (1000–5000 km) of about 1 arcsec, and rapid changes approximately an order of magnitude below (i.e., a few centimeters). The colored lines are the calibration outputs of the direct method when using different ocean priors: a flat surface (yellow), a barotropic tides model (purple), tides plus a static mean dynamic topography or MDT (green). These priors were tested because they are compatible with Level-2 limitations. A common feature to all these simulations is that the direct method is well centered on the mean value, and that the large-scale roll errors are properly retrieved. However, these examples illustrate quite well the limit of using such static priors: when the KaRIn image is in the Gulf Stream, the retrieved roll value in yellow/purple/green clearly deviates from the true uncalibrated value. This is because the scale and magnitude of the ocean eddy slopes are so large that they are misinterpreted as roll signatures in the KaRIn image. In other words, ocean variability leaks into the calibration, as described by [6].

Figure 12.

Example of direct method in the Level-3 algorithm based on the MITgcm model. Panel (a) shows the along-track roll values (in arcsec) as a function of time (in days) for the arbitrary pass of panel (b). Panels (c) and (d) are the same as (a) and (b) for a zoom located in the Tropical Atlantic. The black line of panels (a) and (c) is the true error (unknown) to calibrate. The colored lines are the calibration outputs for the Direct method when using different priors: yellow is for a flat SSH-MSS (no tides correction), purple is for a static barotropic tides model, green is for a tides model plus static mean dynamic topography model, and red is for a multi-mission dynamic SSHA map.

Conversely, the effect is strongly attenuated when we use a Level-3 implementation where the ocean prior is built with a multi-nadir map (red line). The red line (retrieved roll in Level-3) is very close to the black one (uncalibrated true roll error) even in the presence of the largest eddies. This example illustrates the benefits of using mitigation method M1b: with a better prior, we mitigate the leakage of ocean variability into the calibration. The data-driven calibration is now accurate even in the presence of large ocean eddies (i.e., a few hundreds of kilometers).

Yet at smaller scales, the right-hand side part of Figure 12a still exhibits high-frequency deviations from the black line for all colored lines. This calibration error also originates in the leakage from ocean features into the calibration, but not from mesoscale variability. Indeed, the zooms in Figure 12c,d show that the high-frequency calibration artifact actually originates in the presence of very large internal tide (IT) stripes in the KaRIn image. In the MITgcm snapshots, the internal tides have an amplitude of 5 cm or more in the Tropical region, and because of their orientation, there is a non-zero cross-track slope component. Furthermore, these signatures are different from mesoscale eddies so even using mesoscale covariance functions in the Direct retrieval method does not help to lift the ambiguity between this signature and actual roll. As a result, the IT signature leaks into the calibration and the output deviates from the black line with the repeating pattern of IT (here almost a plane wave). Figure 12 uses roll as an example for the sake of clarity, but the same phenomenon occurs on other calibration models (bias and quadratic).

One may argue that the MITgcm ocean reality used in the simulation is known to have some errors in the tides forcing [15], and therefore that this example is much worse than what will happen in reality. Nevertheless, this example still clearly illustrates the existence of an undesirable phenomenon: internal tides might be a significant source of calibration error in many regions. It also illustrates that the direct method has intrinsic limitations because we use a single thin ribbon-shaped image. Generally, the direct method works quite well for wavelengths longer than 300 to 500 km. Below this value, the KaRIn field of view of 2 × 50 km makes it very hard to isolate systematic errors from ocean signals.

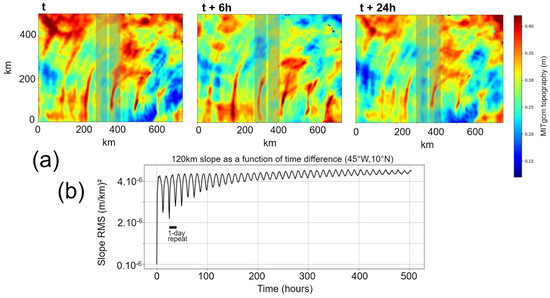

Indeed, Figure 13 shows that in a KaRIn swath, the presence of MITgcm internal tides often creates apparent slopes over the 120 km swath. These slopes have a temporal repeating pattern of 12 and 24 h, as expected. Because of the interactions between internal tides and ocean mesoscale, the topography signature is modulated with time scales of the order of 7 to 15 days (e.g., Ponte and Klein [22]). In turn, this property can be leveraged by the crossover and collinear algorithms: by using an image-to-image difference with a time difference of 1 to 10 days, a fraction of the topography signature is cancelled out when the two images are in phase with tides. Because the one-day orbit has a revisit time of almost 24 h, the internal tide signature is almost the same in subsequent KaRIn images, like in the left and right panel of Figure 13a. Other ocean features might change a little, and interact with the internal tides, but the bulk of the internal tide topography signature gets removed in a difference of subsequent daily revisits.

Figure 13.

Illustration of the influence of internal tides in KaRIn images. Panel (a) shows MITgcm SSH snapshots for three arbitrary time steps (namely T0, T0 + 6 h, and T0 + 24 h) over a 700 × 500 km area in the Western Tropical Atlantic. The shaded region shows the geometry of a KaRIn image for scale. Panel (b) shows the local ocean slope over 120 km as a function of time over a 21-day SWOT cycle.

Furthermore, at these scales, the dominating systematic errors have random sources. In other words, they change with each sample. There is no repeating pattern at these short wavelengths, so the uncalibrated errors do not cancel out in the image-to-image difference. This property makes it possible to leverage the collinear Method or Crossover Method to mitigate the ocean leakage (IT and medium mesoscale) during the inversion.

In contrast, for large-scale systematic errors (e.g., more than a few thousands of kilometers), there is a repeating pattern clearly visible in the samples of Figure 7. Because they are repeating, these large-scale errors get cancelled out in a day-to-day difference, so they will not be mitigated by the collinear Method. In other words, this retrieval method is less efficient for scales larger than a few thousands of kilometers. It is necessary to use both the collinear and Direct method concurrently: the former is used to reduce the influence of internal tides and large mesoscale, and the latter is used to retrieve large-scale repeating patterns.

That is where the processing step 2 of Figure 8 comes in. Firstly, we blend the larger scales of the Direct retrieval and the smaller scales of the collinear retrieval. This can be done by summing the outputs of a robust and simple low/high-pass filter, or by using a ‘statistically optimal’ interpolator (Gauss-Markov or Kalman) where we set up the error covariance models of each input. The blended solution, also known as ‘hybrid’ retrieval, combines the best properties of each algorithm. The rest of the interpolation is the same as for the Level-2: first, we use a harmonic interpolator with the orbital revolution sub-harmonics like in Figure 11b, then we interpolate the residual to provide a ubiquitous correction like in Figure 11c.
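The simpler of the two blending options (summing a low-pass and a high-pass filter output) can be sketched as follows. The example keeps the large scales of the Direct retrieval and the small scales of the collinear retrieval. The Gaussian filter, its truncation at three standard deviations, the edge padding, and the names `lowpass` and `hybrid_blend` are choices made for this illustration; the filter actually used and its cut-off are not specified in the text above.

```python
import numpy as np

def lowpass(series, width):
    """Gaussian low-pass filter; `width` is the kernel std in samples."""
    half = int(3 * width)
    kernel = np.exp(-0.5 * (np.arange(-half, half + 1) / width) ** 2)
    kernel /= kernel.sum()
    padded = np.pad(series, half, mode="edge")   # limit edge artifacts
    return np.convolve(padded, kernel, mode="valid")

def hybrid_blend(direct, collinear, width):
    """Large scales from the direct retrieval, small scales from collinear.

    direct, collinear : along-track series of the same calibration
                        parameter (e.g., roll), on the same time steps
    width             : hypothetical scale separation, in samples
    """
    large_scales = lowpass(direct, width)
    small_scales = collinear - lowpass(collinear, width)
    return large_scales + small_scales
```

With this construction, a constant bias in the collinear retrieval is removed by the high-pass branch, and high-frequency leakage in the Direct retrieval is removed by the low-pass branch. The Gauss-Markov or Kalman alternative replaces the fixed filter by weights derived from the error covariance of each input.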

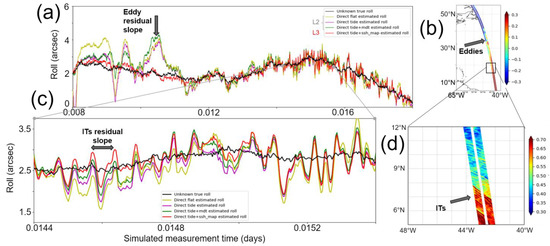

The result is shown in Figure 14. In contrast with the accuracy of the direct method (green line), the collinear Method (blue line) is clearly biased, and generally bad for larger scales. However, using the day-to-day difference strongly reduces the high-frequency signatures of internal tides in the right-hand side part of the plot: the collinear retrieval yields higher precision and lower accuracy. Combining both retrievals into a Hybrid solution (red line) gives the best results: a good retrieval of the large scales and mean, and a strong reduction of the high-frequency errors from the green to the red line.

Figure 14.

Same example as Figure 12a, with the collinear Method. The black line is the uncalibrated roll error to be retrieved. The green line is the roll retrieved with the Level-3 direct method (red curve of Figure 12a). The blue line is the roll retrieved with the collinear Method. The red line is when we combine the Direct and collinear Methods into a so-called Hybrid solution.

For the 21-day orbit, we cannot use the collinear retrieval method because the revisit time is too long when compared with the ocean decorrelation scales or the internal tide demodulation scales of Figure 13b. However, we can use a similar approach with the Crossover Method. The difference is that the local/solar time of the ascending and descending images is not always aligned with the tidal frequencies, so we cancel out a smaller fraction of the internal tide signal.

Note that for Level-2 algorithms, the crossover inversion yields a single scalar for each model (linear, quadratic, and bias per swath) using least squares: the algorithm is more robust and still sufficient to meet hydrology requirements. For the Level-3 algorithm, where we try to provide a better ocean calibration, we can also retrieve intra-crossover variations of the error and we can leverage the 2D covariance models like in [5].

To summarize, the Level-3 algorithm sequence is a three-step process (red boxes of Figure 8). Step 0 is to use the nadir altimeter constellation (SWOT nadir + Sentinel-6 + Sentinel-3A/3B) as a prior for the large mesoscale. Step 1 is to activate two data-driven retrieval methods for each orbit (Direct + collinear for the one-day orbit, Direct + Crossover for the 21-day orbit). Step 2 is to blend the two retrievals using a Gauss-Markov interpolator, then to interpolate orbital harmonics, and lastly to interpolate the residual in order to have a global correction for the ocean and for hydrology.

The impact of using inaccurate covariance models in Level-3 algorithms was discussed in [6]: the retrieval is not very sensitive to an incorrect level of variance (e.g., regional variations), but the correlation scales are more impactful. In other words, this Level-3 algorithm sequence requires a good statistical knowledge of the correlation models (i.e., the slope of the power spectra). It is relatively easy to set up in simulations as we can perfectly characterize the input fields (ocean and uncalibrated errors). However, in real life, it requires an accurate estimation of the uncalibrated errors of flight data (discussed in Section 5.4) as well as a good statistical description of the true ocean (hence our sensitivity tests with different ocean models, see Section 5.2).

4. Results: Prelaunch Performance Assessment

Section 3 gave a general description of the data-driven calibration algorithms, and it described the two algorithm sequences we implemented (mono-mission Level-2 and multi-mission Level-3). In this section, we present the simulation results and the data-driven calibration performance. Section 4.1 focuses on the Level-2 algorithm sequence, i.e., the calibration sequence implemented in the pre-launch ground segment of SWOT. Section 4.2 then tackles the Level-3 performance, i.e., the expected performance of a research-grade offline correction.

4.1. Performance of Level-2 Operational Calibration

4.1.1. SWOT’s 21-Day Orbit

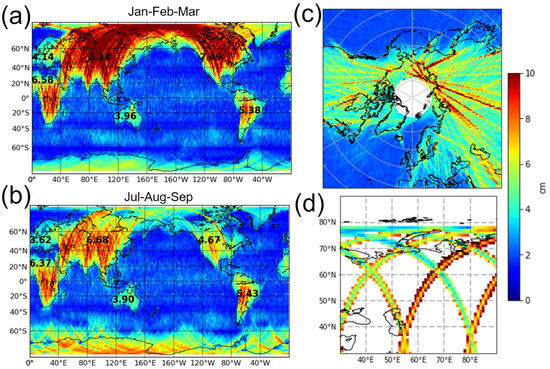

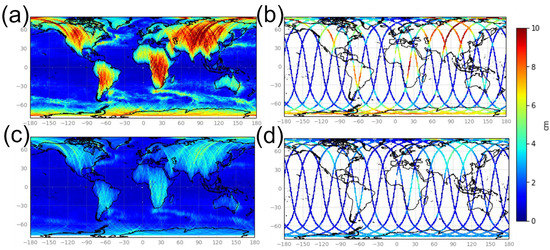

The maps from Figure 15 show the residual error when the Level-2 data-driven calibration is applied over a one-year period. The left panels are for the 21-day orbit: panel (a) is for the spectral allocation scenario, and panel (c) is for the CBE21 scenario (see Section 2.2). The former is arguably a pessimistic or worst case, and the latter might be the most faithful pre-launch simulation.

Figure 15.

Residual systematic errors after the Level-2 data-driven calibration is applied over a one-year simulation (unit: cm RMS). The left panels are for SWOT’s 21-day orbit. The right panels are for the one-day orbit. The upper panels are for the “Spectral Allocation” uncalibrated scenario and the lower panels are for the “Current Best Estimate 2021” scenario.

The maps clearly show two different regimes: ocean and land. Over the ocean, the error after calibration is low with 2 to 3.5 cm for the ‘spectral allocations’ in panel (a) and less than 2 cm for CBE21 in panel (c). Higher errors are observed in sea-ice regions because sea-ice crossovers cannot be used in the data-driven calibration (sea-ice covered regions are actually processed like inland segments). The ocean error is also higher in western boundary currents and the Antarctic Circumpolar Current (ACC). The higher residual is created by the leakage of ocean variability into the correction. Note that the extra error variance in these regions (of the order of a few cm²) is very small in comparison with the regional SSHA variability, which can be one or two orders of magnitude larger. In other words, only 1% to 5% of the ocean signals leak into the calibration.

For hydrology, the error is much larger. For panel (a), it ranges from 4 cm RMS in coastal regions or small continents (Greenland or Australia) to more than 10 cm RMS at the heart of larger continents (North and South America, Africa and Eurasia). For the CBE21 scenario in panel (c), the error ranges from 3 to 7 cm RMS with a very similar geographical distribution. The error naturally increases as SWOT moves away from the ocean because the calibration is made using ocean data, and then interpolated over land. The farther away from the last ocean crossover, the larger the interpolation error RMS. This is important because, depending on where the hydrology target is located, the error level of the Level-2 product could almost triple.

Moreover, in addition to the continent-scale geographical variability, there is also a smaller scale variability associated with the swath width. Indeed, because most of the errors are linear or quadratic-shaped in the cross-track direction, the RMSE is higher on the outer edges of the KaRIn coverage. An example is given in Figure 16d where the center of the swath has a 5 to 7 cm RMSE whereas the error on the outer edges can be as large as 8 to 10 cm if not more. To that extent, it is quite important to know where a hydrology target is located within the swath, especially for the one-day orbit where a given point is seen by only a single KaRIn pass.

Figure 16.

Seasonal variations of the systematic errors after the Level-2 data-driven calibration is applied (unit: cm RMS). Panel (a) is the same as Figure 15 for January to March (wintertime in the Northern hemisphere) and panel (b) is for July to September (wintertime in the Southern hemisphere). Panel (c) is a snapshot for an arbitrary cycle during the transition from panel (a) to panel (b). Panel (d) shows a zoom over Eurasia for an arbitrary cycle of the one-day orbit.

In addition to the geographical variability, there is also a significant amount of temporal variability in many regions. Indeed, Figure 16 (panels a and b) shows the same maps as Figure 15a, with data from opposite seasons. In North America or Eurasia, the error can be three times larger during the wintertime (Figure 16a) than during the summertime (Figure 16b). The opposite is true over Antarctica and the Southern Ocean. In contrast, other regions have a relatively stable error level (e.g., Australia, South America, Africa, most of the ocean). The reason for this temporal variability is given by Figure 16c: most SWOT passes that go through the northern continents also go through the Arctic Ocean where the seasonal sea-ice coverage strongly affects the crossover coverage. During the wintertime, extremely few crossovers can be used. This results in extremely long interpolations starting from the Indian Ocean coast, Eurasia, the Eastern Siberian Sea or Beaufort Sea, then North America and up to the North Atlantic coast. That is almost an entire hemisphere or 20,000 km without any ocean crossover.

In contrast, during the summertime, the Arctic Ocean gets free of sea-ice, and it becomes possible to have at least a handful of crossovers between Eurasia and North America, which essentially cuts the long interpolation into two smaller ones. Furthermore, as expected from the uncalibrated error power spectra (K⁻² power laws), the error rapidly increases with the interpolated segment length. The process is also shown analytically by Esteban-Fernandez in [3]. The longer interpolations created by sea ice induce larger error residuals.
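The growth of the interpolation error with segment length can be reproduced with a toy experiment. The sketch below is an assumption-laden stand-in, not the simulation used in this paper: it represents an error with a K⁻² power spectrum by a discrete random walk, assumes the error is perfectly known at both ends of a gap (the last and next ocean crossovers), and interpolates linearly in between. The function name `gap_interpolation_rms` and all parameter values are hypothetical.

```python
import numpy as np

def gap_interpolation_rms(gap, n_trials=2000, step_std=1.0, seed=0):
    """RMS error of a linear interpolation across `gap` samples of a random walk."""
    rng = np.random.default_rng(seed)
    steps = rng.normal(0.0, step_std, size=(n_trials, gap))
    # Random walk starting at zero: its power spectrum follows a K^-2 law.
    walk = np.concatenate([np.zeros((n_trials, 1)), np.cumsum(steps, axis=1)], axis=1)
    # Linear interpolation between the two known end points of each realization.
    fraction = np.linspace(0.0, 1.0, gap + 1)
    interpolated = walk[:, :1] + (walk[:, -1:] - walk[:, :1]) * fraction
    return float(np.sqrt(np.mean((walk - interpolated) ** 2)))

short_gap = gap_interpolation_rms(100)
long_gap = gap_interpolation_rms(400)
print(short_gap, long_gap, long_gap / short_gap)
```

In this idealized setting the residual variance grows linearly with the gap length, so a gap four times longer roughly doubles the RMS error.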

For Antarctica, the same process occurs with the sea-ice coverage of the Southern Ocean. During the southern wintertime, the Antarctica segments are longer because of the presence of sea-ice around the continent, and the interpolator error increases as well. While the process over Antarctica has a smaller magnitude than in the Northern hemisphere, the error increase over the Southern Ocean is very large (local error multiplied by 2.5 or 3). This is why even 1-year simulations exhibit significantly higher errors in the regions where sea-ice may appear during the wintertime.

Furthermore, Table 2 (row 1 and row 3) gives an overview of the global root mean square error (RMSE) for both surfaces and for each scenario. Over the ocean, the error ranges from 1.5 cm RMS for CBE21 to 2.2 cm RMS for the spectral allocations. For inland segments, the error is 3.1 cm RMS for CBE21 and 6.5 cm RMS for spectral allocations. In both cases, the residual error after calibration is lower than the SWOT hydrology requirements, and the right-hand side column gives the margins with respect to these requirements (between 25% and 83%). In other words, in our simulations, the Level-2 algorithm is able to reduce the systematic errors by one or two orders of magnitude, and to meet the hydrology requirements at global scale.
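Because the margins are expressed as a percentage of variance, they follow from the squared ratio of the residual RMSE to the requirement. The short sketch below illustrates this arithmetic. The requirement value of 7.5 cm RMS is an assumption: it is not stated in this section and was chosen only because it reproduces the margins quoted above. The function name `variance_margin` is hypothetical.

```python
def variance_margin(rmse_cm, requirement_cm):
    """Margin with respect to a requirement, as a fraction of the allowed variance."""
    return 1.0 - (rmse_cm / requirement_cm) ** 2

ASSUMED_REQUIREMENT_CM = 7.5  # assumption, back-computed from the quoted margins

margin_cbe21 = variance_margin(3.1, ASSUMED_REQUIREMENT_CM)       # CBE21, inland
margin_allocation = variance_margin(6.5, ASSUMED_REQUIREMENT_CM)  # spectral allocations, inland
print(f"CBE21: {100 * margin_cbe21:.0f}%  allocations: {100 * margin_allocation:.0f}%")
```

A negative margin in this convention means that the residual variance exceeds the requirement.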

Table 2.

Overview of the global Level-2 performance after data-driven calibration for different scenarios. The left columns are for the global ocean (sea-ice regions are excluded) with the Root Mean Square Error (RMSE) in cm and the wavelength λ where the calibration stops being beneficial (measured from the power spectra below). The right columns are for the inland regions with the RMSE in cm and the margins with respect to the hydrology requirements (expressed in % of variance).

Nonetheless, there is a significant amount of geographical and seasonal variability. For the CBE21 scenario, the requirements are met in all regions and all seasons. For the spectral allocation scenario, however, there is much more variance left, in particular in the 5000 to 15,000 km range where sea-ice affects the interpolation process. As a result, the error after calibration can be locally higher than the requirements, in particular during the wintertime in the Northern hemisphere.

4.1.2. SWOT’s One-Day Orbit

The right-hand side panels from Figure 15 show the residual error when the Level-2 data-driven calibration is applied for the one-day orbit. Panel (b) is for the spectral allocation scenario and panel (d) is for the CBE21 scenario. Over the continents, these maps are somewhat similar to panels (a) and (c): the farther away from the ocean, the higher the residual error after calibration. The error is also larger in many regions such as South America or Antarctica.

In contrast, the geographical distribution over the oceans is very different from panels (a) and (c). The residual error was quite homogeneous for the 21-day orbit, increasing only because of ocean variability. For the one-day orbit, the error is still 2 to 3 cm near the ocean crossovers, but these crossovers are so sparse, and so far away from one another, that very long interpolations must be performed between them. As a result, the ocean error strongly increases in all ocean regions that are far from ocean crossovers: to illustrate, near the Equator the error can be as large as 7 cm RMS, i.e., more than one order of magnitude higher in variance (or power spectrum). This major change in crossover distribution is also the reason why the inland error is higher for the one-day orbit. For a given river or lake, the closest ocean calibration zone is sometimes much farther away with sparse one-day crossovers than with the denser crossover coverage of the 21-day orbit. As a result, the inland interpolated segments are usually longer, sometimes by more than 5000 km. As in the presence of sea-ice discussed above, longer inland interpolations yield larger calibration errors.

The values of Table 2 for the one-day orbit (row 2 and row 4) show that the ocean global RMSE increases a lot for the allocation scenario, which in turn increases the inland RMSE. The result is that the hydrology requirements are no longer met for the one-day orbit (the margin becomes minus 8%). In contrast, for the CBE21 scenario, the error increases much less over the ocean (from 1.5 to 2 cm RMS) which, in turn, barely affects the inland RMS and margins. For this CBE21 scenario, there is significantly less uncalibrated variance in the STOP21 simulations than in the allocations for wavelengths above 5000 km, which in turn reduces not only the total error, but also the necessity to have crossovers at all latitudes and in all regions.

To summarize, the one-day orbit is a more challenging problem for the simple and robust Level-2 algorithm. On the one hand, the official CBE21 scenario from the SWOT Project indicates that the algorithm is sufficient to meet the requirements. On the other hand, the pessimistic/worst case spectral allocations indicate that if flight data suffer from a larger uncalibrated error, the Level-2 algorithm might be insufficient to meet the hydrology requirement during this phase. This finding was one important driver for the definition of the Level-3 algorithm.

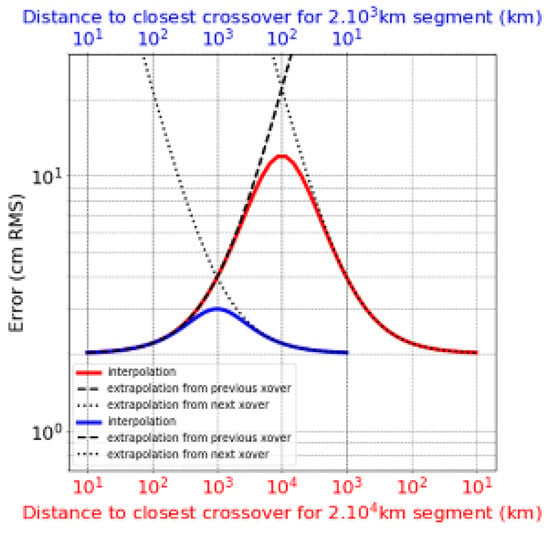

4.1.3. Spectral Metrics

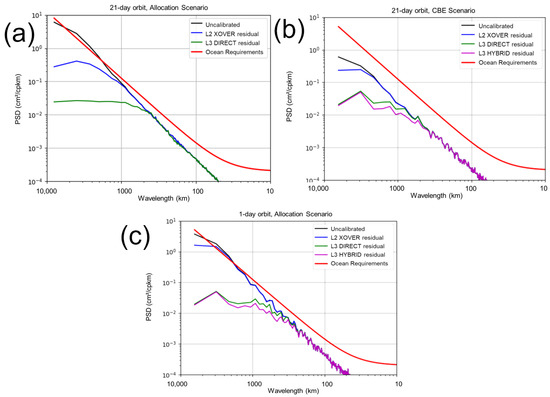

As explained in previous sections, the ocean requirements are expressed as power spectral density (PSD) thresholds from 15 to 1000 km. In essence, the total SWOT error budget must always be below a small fraction of the ocean SSHA spectrum (SNR = 10 dB) for these wavelengths. This will ensure that KaRIn correctly captures at least 90% of the variance of large to small mesoscale, or even submesoscale features. It also ensures that the spectral slopes of interest for oceanographic research are not significantly affected by measurement errors.
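The link between the 10 dB signal-to-noise ratio and the 90% figure is a simple decibel conversion, sketched below. The function name `error_to_signal_ratio` is hypothetical, and reading the "captured" variance as one minus the error-to-signal ratio follows the interpretation given in the text.

```python
def error_to_signal_ratio(snr_db):
    """Convert a signal-to-noise ratio in dB into an error/signal ratio in variance (PSD)."""
    return 10.0 ** (-snr_db / 10.0)

ratio = error_to_signal_ratio(10.0)  # error PSD relative to the SSHA PSD
captured = 1.0 - ratio               # fraction of signal variance left unaffected
print(ratio, captured)
```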

Figure 17a shows the PSD of the ocean requirements in red (extended up to 10,000 km) and the uncalibrated error in black. From 15 to 1000 km, the uncalibrated error allocation is significantly below the requirements. As discussed in previous sections, data-driven calibration is not needed for SWOT to meet its ocean requirements.

Figure 17.

Power spectral density (PSD) of the requirements (red), uncalibrated (black) and calibrated (blue for Level-2, and green/purple for the Level-3) errors over the ocean. Panel (a) is for the 21-day orbit and the ‘spectral allocation scenarios’. Panel (b) is for the 21-day orbit and the CBE21 scenario. Panel (c) is for the one-day orbit and the ‘spectral allocation scenarios’.

The blue line in Figure 17a (error PSD after Level-2 calibration) shows that the crossover algorithm starts to kick in at 1000 km: the blue line departs from the black line, i.e., the calibration is effectively mitigating the errors. This is by construction, as we estimate only one scalar value per crossover in processing step 1 and the Gaussian interpolator of processing step 2 is set up to smooth scales smaller than 1000 km. For scales smaller than 1000 km, the data-driven Level-2 calibration leaves the data untouched since the requirements are met anyway. Above 1000 km, the calibration reduces the error by a factor of 2 to 50: the longer the wavelength, the better the error reduction. There is also (not shown) a huge gain at the orbital revolution period (40,000 km) because a lot of uncalibrated error variance is concentrated near this specific frequency (see Section 2.2).

However, in the case of the CBE21 scenario in the 21-day orbit (Figure 17b), the uncalibrated error (black) is almost an order of magnitude below the allocation for all scales, especially above 1000 km. Because the magnitude of the uncalibrated error is smaller, the crossover algorithm kicks in at 2500 km, where it removes as much as 30% of variance for the larger scales. In the CBE21 scenario, the data-driven calibration essentially removes only the variance of the orbital harmonics and of very large scales. This is the reason why it is less affected by the sparse crossover coverage in Figure 15d. In contrast, Figure 17c shows that for the allocation scenario of the one-day orbit, and despite the large amount of uncalibrated variance from 1000 to 10,000 km, the Level-2 crossover method is barely efficient below 5000 km. This is a spectral view of the higher errors in Figure 15b when KaRIn is away from crossovers.

Note that we defined the 1000 km limit with SWOT’s Project Team. The rationale is to not alter the data if the raw measurement already meets the requirement. However, the practical consequence is that the residual error after calibration could be as large as 5 cm RMS or more for the one-day orbit. This choice is the second driver for the definition of the Level-3 algorithm: to provide the best possible ocean calibration for scales above and below 1000 km for both orbits.

4.2. Performance of Level-3 Research Calibration