Weighted Local Ratio-Difference Contrast Method for Detecting an Infrared Small Target against Ground–Sky Background

Abstract

1. Introduction

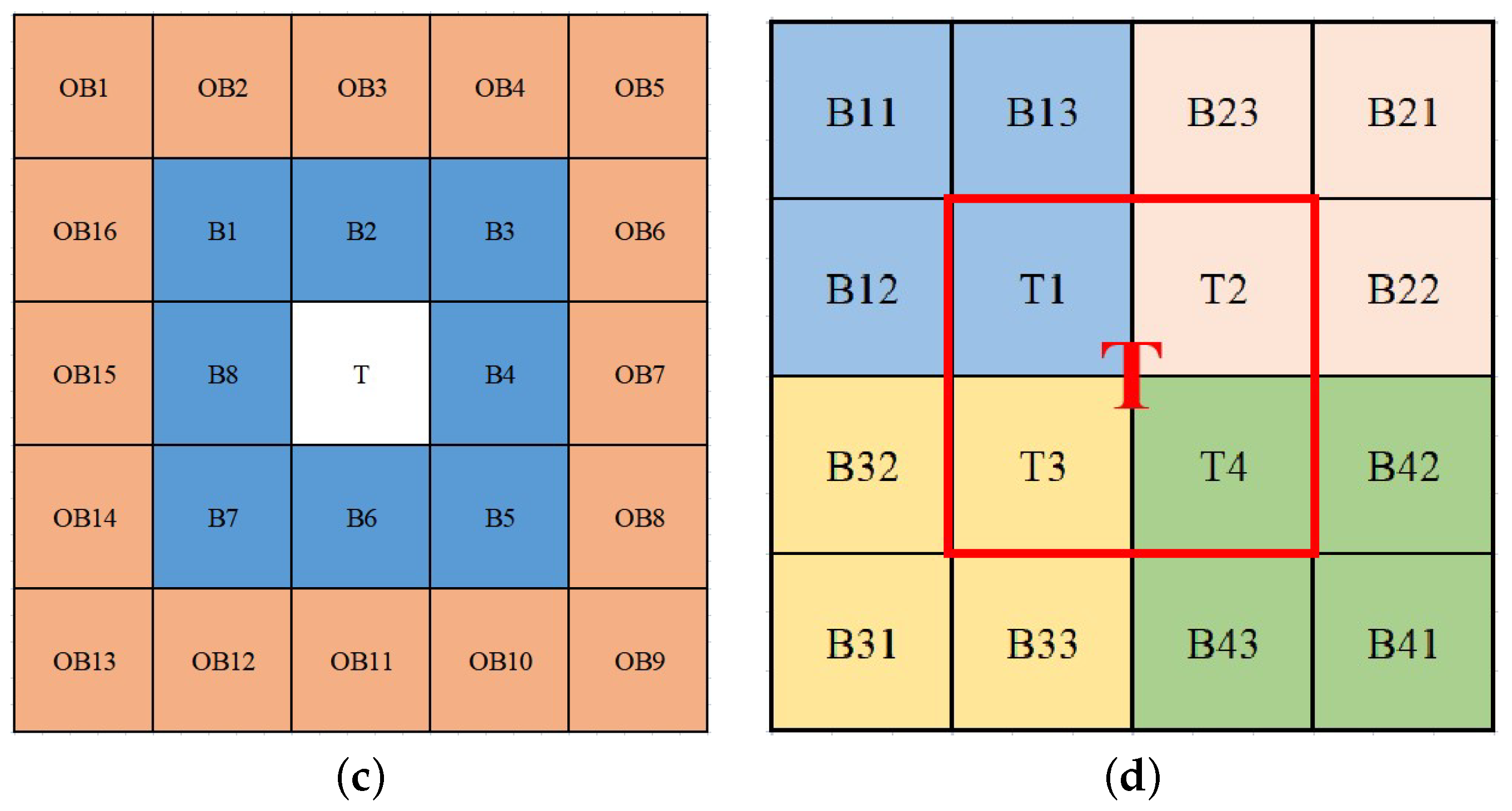

- A local ratio-difference contrast (LRDC) method that combines local ratio information and difference information is proposed; it simultaneously enhances the target and suppresses complex background clutter and noise. LRDC uses the mean of the Z largest pixel gray values in the center block, which addresses the weak target enhancement that traditional LCM-based methods achieve when target contrast is low.

- A simple and effective block difference product weighted (BDPW) mapping strategy is designed, based on the spatial dissimilarity of the target, to improve the robustness of the WLRDC method. Because BDPW is computed from the same center-block and adjacent-block gray values as LRDC, it further suppresses residual background clutter without increasing the computational complexity.

2. Related Work

3. Materials and Methods

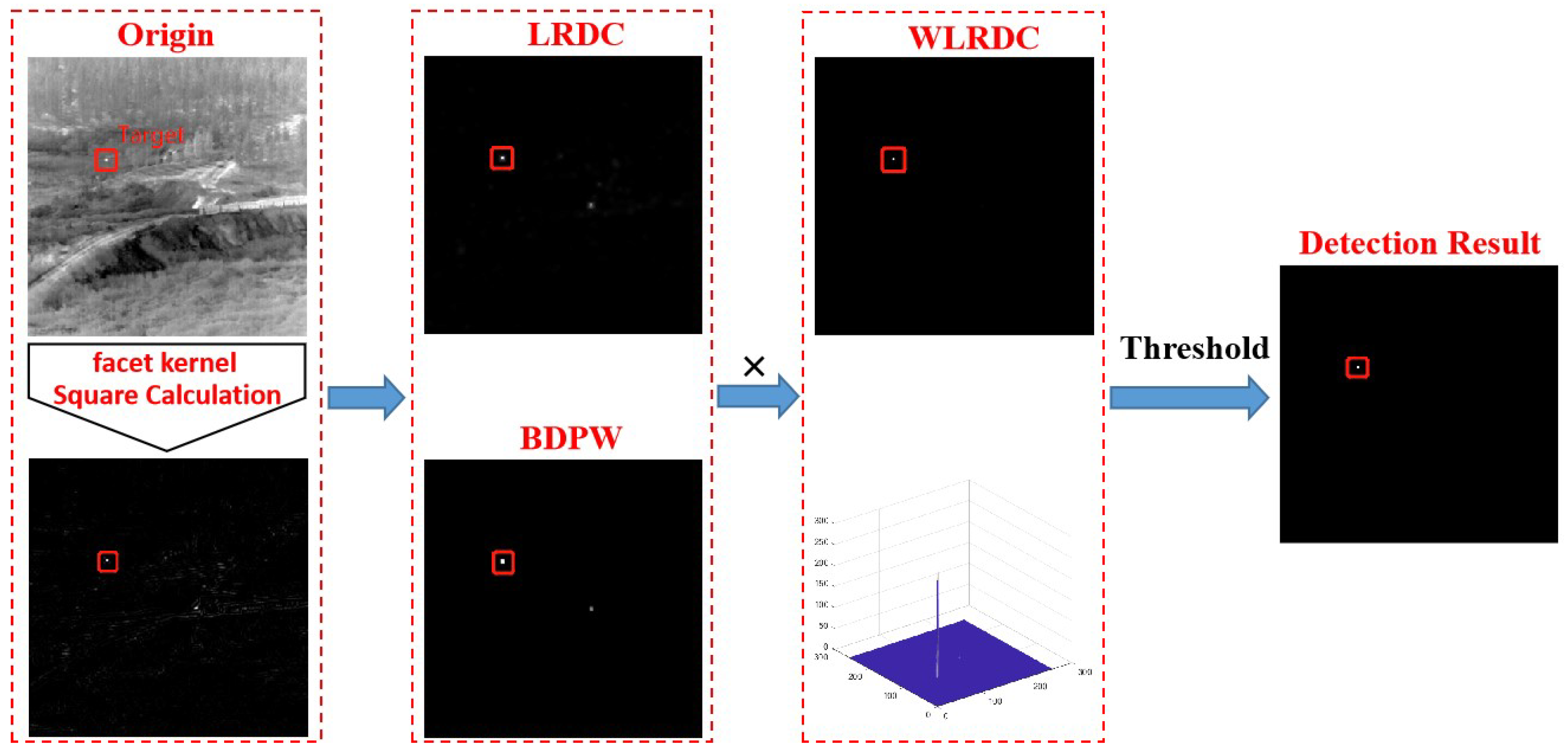

3.1. Preprocessing: Target Enhancement

3.1.1. Facet Kernel Filtering

3.1.2. Square Calculation

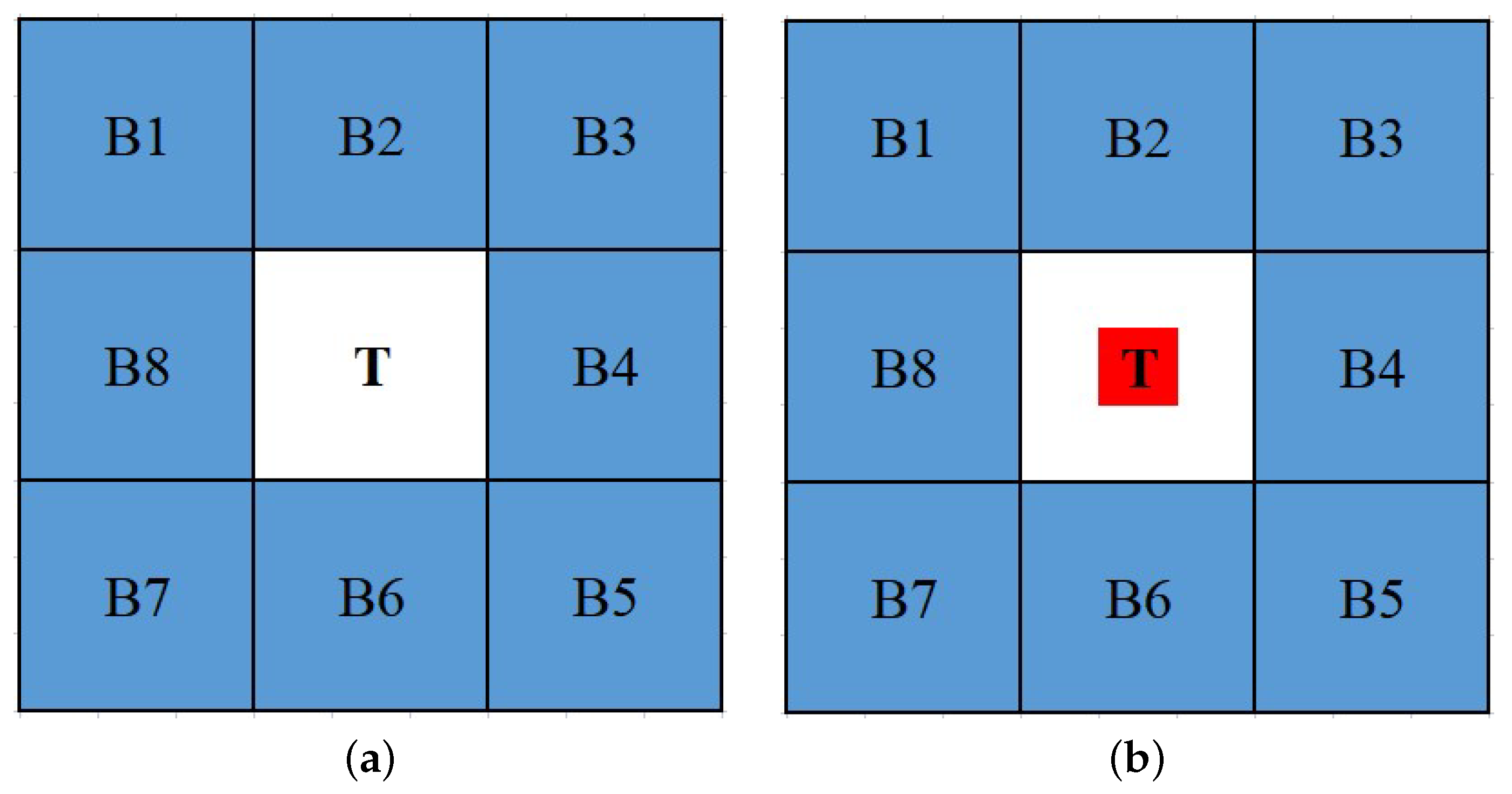

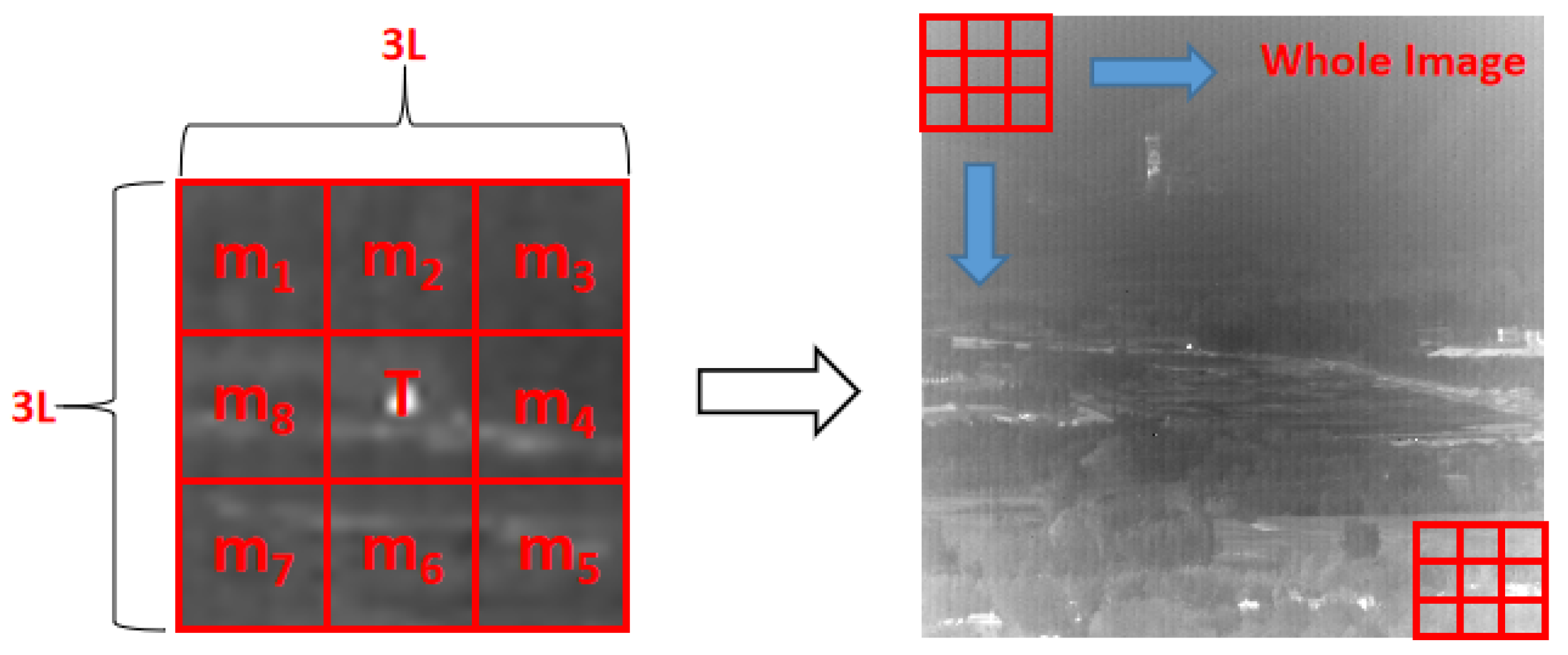
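The two preprocessing steps named in Section 3.1 (facet kernel filtering, then square calculation) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the 5 × 5 facet kernel below is the one commonly used in earlier facet-kernel small-target detectors and is assumed here, and clipping negative responses to zero before squaring is also an assumption (without it, the negative outer ring of the kernel would be squared into spurious bright responses around the target). The helper name `facet_square_preprocess` is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# ASSUMPTION: 5x5 facet kernel from earlier facet-kernel detectors; the exact
# kernel used by WLRDC is not reproduced in this text. It sums to zero, so a
# flat background produces no response.
FACET_KERNEL = np.array([
    [-4, -1, 0, -1, -4],
    [-1,  2, 3,  2, -1],
    [ 0,  3, 4,  3,  0],
    [-1,  2, 3,  2, -1],
    [-4, -1, 0, -1, -4],
], dtype=float)


def facet_square_preprocess(img: np.ndarray) -> np.ndarray:
    """Hypothetical helper: facet-kernel filtering followed by squaring."""
    img = np.asarray(img, dtype=float)
    # Replicate border pixels so the output has the same size as the input.
    padded = np.pad(img, 2, mode="edge")
    # All 5x5 neighbourhoods, shape (H, W, 5, 5).
    windows = sliding_window_view(padded, (5, 5))
    # Weighted sum per pixel; the kernel is symmetric, so correlation equals convolution.
    filtered = np.einsum("ijkl,kl->ij", windows, FACET_KERNEL)
    # ASSUMPTION: keep only positive (brighter-than-surroundings) responses.
    filtered = np.maximum(filtered, 0.0)
    # Squaring increases the relative gap between strong and weak responses.
    return filtered ** 2
```

For a single bright pixel on a dark background, the output peaks at that pixel, while a constant image maps to zero everywhere because the kernel coefficients sum to zero.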

3.2. Calculation of LRDC

- False alarms easily occur when pixel-sized noise with high brightness (PNHB) appears in the background: if the maximum gray value of the center block is used in the ratio calculation, PNHB is easily mistaken for the target. Using the mean of the Z maximum gray values instead represents the gray features of the central block more accurately and keeps PNHB from being over-weighted in the calculation of LRDC.

- Compared with methods that use only the gray mean of the central block, our method uses the mean of the Z maximum gray values in the center block, which further widens the contrast between the target and the background and thus enhances the target.
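The effect of the Z-maximum mean on a single block can be illustrated numerically. The sketch below compares three center-block statistics (maximum, plain mean, and mean of the Z largest gray values) on two hypothetical 3 × 3 blocks: a background at gray level 10 containing one PNHB pixel of 100, and a block containing a 2 × 2 target of gray level 80. The function name `mean_of_z_max`, the block values, and Z = 4 are illustrative assumptions; the full LRDC formula, which combines this statistic with the neighboring blocks, is not reproduced here.

```python
import numpy as np


def mean_of_z_max(block: np.ndarray, z: int) -> float:
    """Mean of the z largest gray values in a block (hypothetical helper)."""
    values = np.sort(np.asarray(block, dtype=float).ravel())
    if not 1 <= z <= values.size:
        raise ValueError("z must be between 1 and the number of pixels in the block")
    return float(values[-z:].mean())


# Background block containing one pixel-sized high-brightness noise (PNHB).
noise_block = np.full((3, 3), 10.0)
noise_block[1, 1] = 100.0

# Block containing a 2x2 target on the same background.
target_block = np.full((3, 3), 10.0)
target_block[:2, :2] = 80.0

Z = 4  # illustrative choice only
for name, blk in [("PNHB", noise_block), ("target", target_block)]:
    print(name, blk.max(), round(blk.mean(), 2), mean_of_z_max(blk, Z))
```

With these values the maximum ranks the noise pixel (100) above the target (80), whereas the Z-maximum mean ranks the target block (80) well above the noise block (32.5) and retains more target contrast than the plain mean of the target block (about 41.1).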

3.3. Calculation of BDPW

- If the central block is the target, the following holds, because the target is the most salient region in its local neighborhood:

- If the central block is the background, the following holds, because the background is a locally uniform area containing some noise:
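The intuition behind these two cases can be checked with a small sketch. Because the BDPW equations are not reproduced in this text, the weight below is a hypothetical stand-in: it splits a local patch into a 3 × 3 grid of blocks, takes the differences between the center-block mean and its eight neighbors, multiplies differences in opposite directions (the product form used by MPCM [20]), and keeps the smallest product, clipped at zero. The names `block_means` and `difference_product_weight` are illustrative and are not the paper's definitions.

```python
import numpy as np

# Offsets of the 8 neighbouring blocks, ordered so that index i and i + 4 are opposite.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def block_means(patch: np.ndarray, cell: int) -> np.ndarray:
    """Split a (3*cell x 3*cell) patch into a 3x3 grid of blocks and return their means."""
    p = np.asarray(patch, dtype=float)
    return p.reshape(3, cell, 3, cell).mean(axis=(1, 3))


def difference_product_weight(patch: np.ndarray, cell: int) -> float:
    """HYPOTHETICAL BDPW-like weight built from opposite-direction difference products."""
    means = block_means(patch, cell)
    center = means[1, 1]
    # Center-minus-neighbour differences, in the order of NEIGHBOURS.
    d = np.array([center - means[1 + dy, 1 + dx] for dy, dx in NEIGHBOURS])
    # Positive only if the center is brighter (or darker) on both opposite sides.
    products = d[:4] * d[4:]
    # Edges and ramps give a zero or negative product, which is clipped to zero.
    return float(max(products.min(), 0.0))
```

A bright center block surrounded by uniform background receives a large positive weight, whereas a flat patch, a bright line passing through the center, or a gray-level ramp receives zero, which is the qualitative behavior described in the two cases above.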

3.4. Multi-Scale Calculation of WLRDC

3.5. Target Extraction

Algorithm 1. Detection steps of the proposed WLRDC method.
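The bodies of Sections 3.4 and 3.5 and the steps of Algorithm 1 are not reproduced in this text. As a hedged illustration only, the sketch below assumes two conventions that are common in multi-scale local contrast methods: per-scale maps (for example, for the local window sizes L = 3, 5, 7, 9 listed in the parameter table) are fused by a pixel-wise maximum, and targets are then segmented with an adaptive threshold T = μ + k·σ, where μ and σ are the mean and standard deviation of the fused map and k is a user-chosen constant. Neither rule, nor any value of k, is confirmed by this text; `fuse_scales` and `extract_targets` are hypothetical names.

```python
import numpy as np


def fuse_scales(maps):
    """ASSUMPTION: combine per-scale saliency maps by pixel-wise maximum."""
    return np.max(np.stack([np.asarray(m, dtype=float) for m in maps]), axis=0)


def extract_targets(saliency, k):
    """ASSUMPTION: adaptive threshold T = mean + k * std over the whole map."""
    threshold = saliency.mean() + k * saliency.std()
    return saliency > threshold, threshold


# Two toy per-scale maps (hypothetical values).
small_scale = np.zeros((8, 8))
small_scale[2, 2] = 1.0
large_scale = np.zeros((8, 8))
large_scale[5, 5] = 0.5
large_scale[2, 2] = 0.2

fused = fuse_scales([small_scale, large_scale])
mask, T = extract_targets(fused, k=3.0)
print(np.argwhere(mask), round(float(T), 3))
```

The maximum keeps the strongest response at each pixel regardless of which scale produced it, so targets of different sizes survive fusion; the threshold then adapts to the overall level and spread of the fused map.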

3.6. Complexity Analysis

4. Experimental Results and Analysis

4.1. Experimental Setup

4.1.1. Datasets

4.1.2. Evaluation Criteria

4.1.3. Baseline Methods

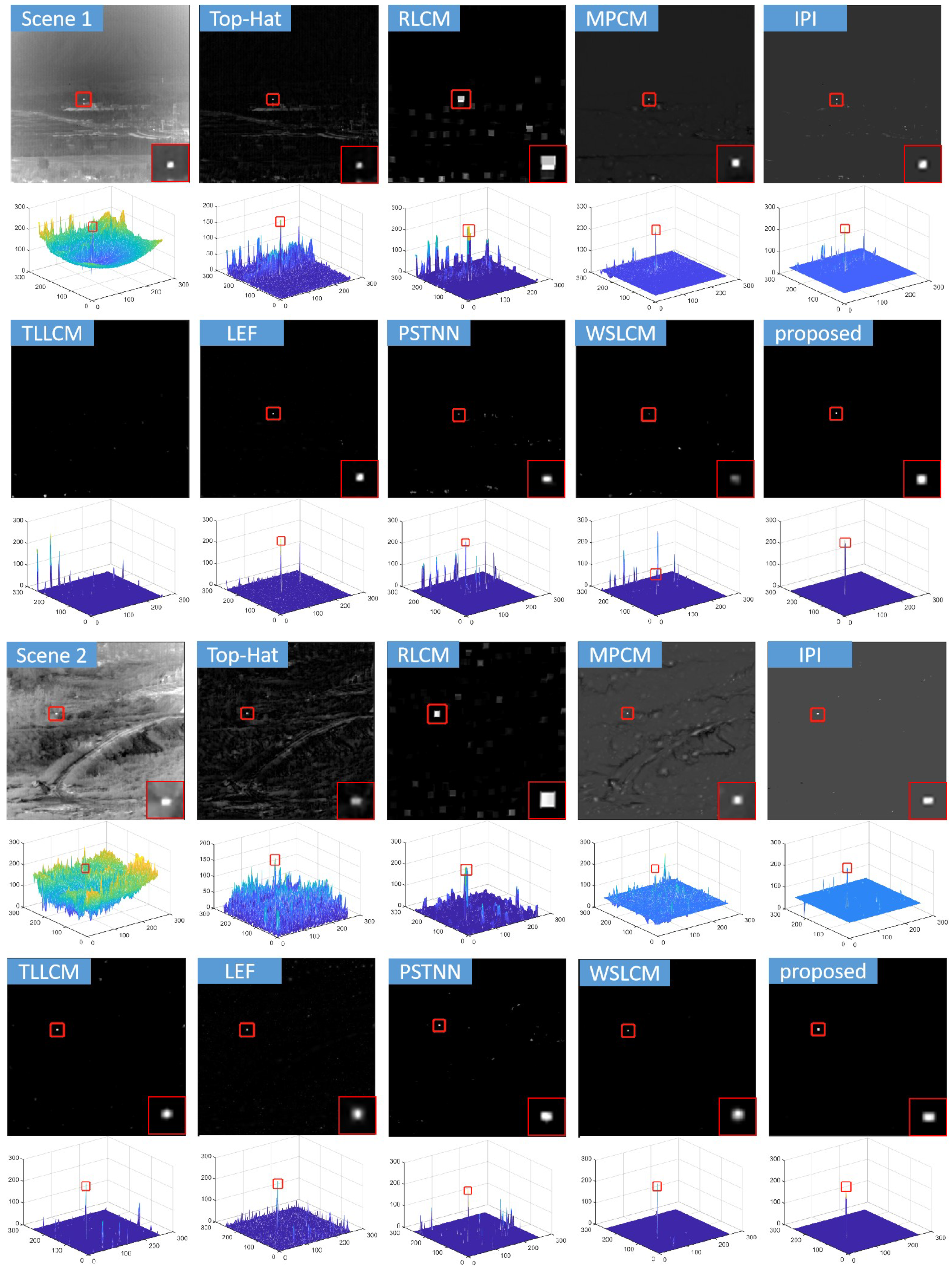

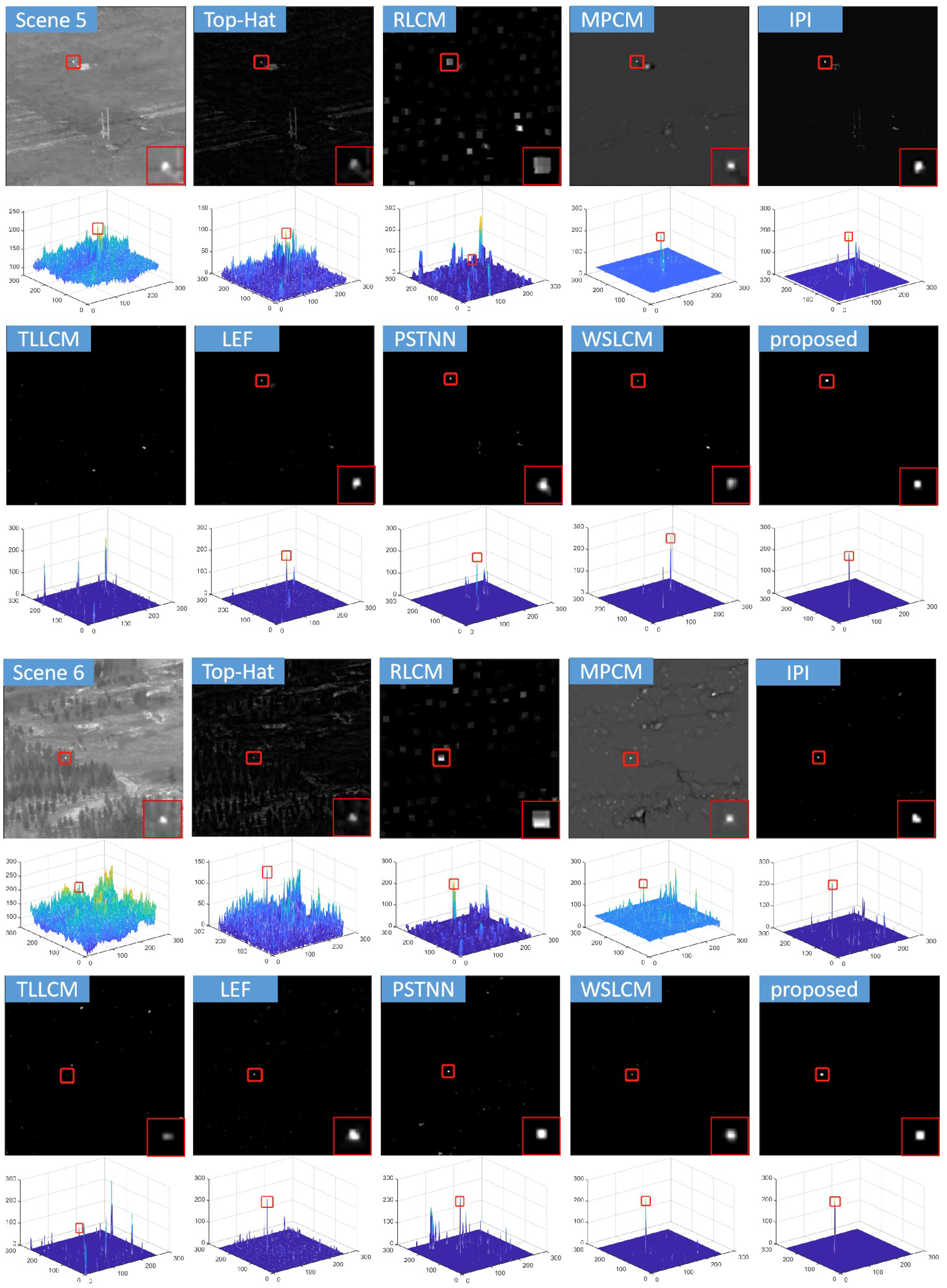

4.2. Comparison with State-of-the-Art Methods

5. Discussion

5.1. Discussion of Detection Performance

5.2. Discussion of the Key Parameter Z

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, T.; Wu, H.; Liu, Y.; Peng, L.; Yang, C.; Peng, Z. Infrared Small Target Detection Based on Non-Convex Optimization with Lp-Norm Constraint. Remote Sens. 2019, 11, 559.

- Chen, Y.; Xin, Y. An Efficient Infrared Small Target Detection Method Based on Visual Contrast Mechanism. IEEE Geosci. Remote Sens. Lett. 2016, 13, 962–966.

- Han, J.; Yong, M.; Huang, J.; Mei, X.; Ma, J. An Infrared Small Target Detecting Algorithm Based on Human Visual System. IEEE Geosci. Remote Sens. Lett. 2015, 13, 452–456.

- Pang, D.D.; Shan, T.; Li, W.; Ma, P.G.; Tao, R.; Ma, Y.R. Facet Derivative-Based Multidirectional Edge Awareness and Spatial–Temporal Tensor Model for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Li, Z.Z.; Chen, J.; Hou, Q.; Fu, H.X.; Dai, Z.; Jin, G.; Li, R.Z.; Liu, C.J. Sparse representation for infrared dim target detection via a discriminative over-complete dictionary learned online. Sensors 2014, 14, 9451–9470.

- Deng, H.; Wei, Y.; Tong, M. Small target detection based on weighted self-information map. Infrared Phys. Technol. 2013, 60, 197–206.

- Zhou, F.; Wu, Y.; Dai, Y.; Wang, P. Detection of Small Target Using Schatten 1/2 Quasi-Norm Regularization with Reweighted Sparse Enhancement in Complex Infrared Scenes. Remote Sens. 2019, 11, 2058.

- Lu, Y.; Dong, L.; Zhang, T.; Xu, W. A Robust Detection Algorithm for Infrared Maritime Small and Dim Targets. Sensors 2020, 20, 1237.

- Zhou, J.; Lv, H.; Zhou, F. Infrared small target enhancement by using sequential top-hat filters. Proc. Int. Symp. Optoelectron. Technol. Appl. 2014, 9301, 417–421.

- Zeng, M.; Li, J.; Peng, Z. The design of top-hat morphological filter and application to infrared target detection. Infrared Phys. Technol. 2006, 48, 67–76.

- Deshpande, S.D.; Meng, H.E.; Ronda, V.; Chan, P. Max-mean and Max-median filters for detection of small-targets. Proc. SPIE Int. Soc. Opt. Eng. 1999, 3809, 74–83.

- Fan, H.; Wen, C. Two-Dimensional Adaptive Filtering Based on Projection Algorithm. IEEE Trans. Signal Process. 2004, 52, 832–838.

- Zhao, Y.; Pan, H.; Du, C.; Peng, Y.; Zheng, Y. Bilateral two dimensional least mean square filter for infrared small target detection. Infrared Phys. Technol. 2014, 65, 17–23.

- Peng, L.B.; Zhang, T.F.; Liu, Y.H.; Li, M.H.; Peng, Z.M. Infrared dim target detection using shearlet’s kurtosis maximization under non-uniform background. Symmetry 2019, 11, 723.

- Nie, J.Y.; Qu, S.C.; Wei, Y.T.; Zhang, L.M.; Deng, L.Z. An Infrared Small Target Detection Method Based on Multiscale Local Homogeneity Measure. Infrared Phys. Technol. 2018, 90, 186–194.

- Chen, C.L.; Li, H.; Wei, Y.T.; Xia, T.; Tang, Y.Y. A local contrast method for small infrared target detection. IEEE Trans. Geosci. Remote Sens. 2013, 52, 574–581.

- Han, J.H.; Ma, Y.; Zhou, B.; Fan, F.; Liang, K.; Fang, Y. A robust infrared small target detection algorithm based on human visual system. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2168–2172.

- Qin, Y.; Li, B. Effective infrared small target detection utilizing a novel local contrast method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1890–1894.

- Han, J.H.; Liang, K.; Zhou, B.; Zhu, X.Y.; Zhao, J.; Zhao, L.L. Infrared small target detection utilizing the multi-scale relative local contrast measure. IEEE Geosci. Remote Sens. Lett. 2018, 15, 612–616.

- Wei, Y.T.; You, X.G.; Li, H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recogn. 2016, 58, 216–226.

- Cui, Z.; Yang, J.; Jiang, S.; Li, J. An infrared small target detection algorithm based on high-speed local contrast method. Infrared Phys. Technol. 2016, 76, 474–481.

- Xia, C.Q.; Li, X.R.; Zhao, L.Y.; Shu, R. Infrared Small Target Detection Based on Multiscale Local Contrast Measure Using Local Energy Factor. IEEE Geosci. Remote Sens. Lett. 2020, 17, 157–161.

- Han, J.H.; Moradi, S.; Faramarzi, I.; Zhang, H.H.; Zhao, Q.; Zhang, X.J.; Li, N. Infrared Small Target Detection Based on the Weighted Strengthened Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1670–1674.

- Han, J.; Moradi, S.; Faramarzi, I.; Liu, C.; Zhang, H.; Zhao, Q. A local contrast method for infrared small-target detection utilizing a tri-layer window. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1822–1826.

- Wu, L.; Ma, Y.; Fan, F.; Wu, M.H.; Huang, J. A Double-Neighborhood Gradient Method for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1476–1480.

- Lu, X.F.; Bai, X.F.; Li, S.X.; Hei, X.H. Infrared Small Target Detection Based on the Weighted Double Local Contrast Measure Utilizing a Novel Window. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Gao, C.Q.; Meng, D.Y.; Yang, Y.; Wang, Y.T.; Zhou, X.F.; Hauptmann, A.G. Infrared patch-image model for small target detection in a single image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

- Wang, X.Y.; Peng, Z.M.; Kong, D.H.; He, Y.M. Infrared dim and small target detection based on stable multi-subspace learning in heterogeneous scenes. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5481–5493.

- Dai, Y.M.; Wu, Y. Reweighted Infrared Patch-Tensor Model With Both Nonlocal and Local Priors for Single-Frame Small Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2017, 10, 3752–3767.

- Zhang, L.; Peng, Z. Infrared small target detection based on partial sum of the tensor nuclear norm. Remote Sens. 2019, 11, 382.

- Deng, L.Z.; Zhu, H.; Tao, C.; Wei, Y.T. Infrared moving point target detection based on spatial–temporal local contrast filter. Infrared Phys. Technol. 2016, 76, 168–173.

- Zhao, B.; Xiao, S.; Lu, H.; Wu, D. Spatial-temporal local contrast for moving point target detection in space-based infrared imaging system. Infrared Phys. Technol. 2018, 95, 53–60.

- Du, P.; Askar, H. Infrared Moving Small-Target Detection Using Spatial-Temporal Local Difference Measure. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1817–1821.

- Liu, H.K.; Zhang, L.; Huang, H. Small Target Detection in Infrared Videos Based on Spatio-Temporal Tensor Model. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8689–8700.

- Pang, D.D.; Shan, T.; Ma, P.G.; Li, W.; Liu, S.H.; Tao, R. A Novel Spatiotemporal Saliency Method for Low-Altitude Slow Small Infrared Target Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Wang, K.D.; Li, S.Y.; Niu, S.S.; Zhang, K. Detection of Infrared Small Targets Using Feature Fusion Convolutional Network. IEEE Access 2019, 7, 146081–146092.

- Wang, H.; Zhou, L.; Wang, L. Miss Detection vs. False Alarm: Adversarial Learning for Small Object Segmentation in Infrared Images. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 8508–8517.

- Dai, Y.M.; Wu, Y.Q.; Zhou, F.; Barnard, K. Attentional Local Contrast Networks for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9813–9824.

- Kim, J.H.; Hwang, Y. GAN-Based Synthetic Data Augmentation for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Zuo, Z.; Tong, X.; Wei, J.; Su, S.; Wu, P.; Guo, R.; Sun, B. AFFPN: Attention Fusion Feature Pyramid Network for Small Infrared Target Detection. Remote Sens. 2022, 14, 3412.

- Guan, X.W.; Peng, Z.M.; Huang, S.Q.; Chen, Y.P. Gaussian Scale-Space Enhanced Local Contrast Measure for Small Infrared Target Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 327–331.

- Han, J.H.; Liu, S.B.; Qin, G.; Zhao, Q.; Zhang, H.H.; Li, N.N. A Local Contrast Method Combined With Adaptive Background Estimation for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1442–1446.

- Han, J.H.; Xu, Q.Y.; Saed, M.; Fang, H.Z.; Yuan, X.Y.; Qi, Z.M.; Wan, J.Y. A Ratio-Difference Local Feature Contrast Method for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Du, P.; Askar, H. Infrared Small Target Detection Based on Facet-Kernel Filtering Local Contrast Measure; Springer: Singapore, 2019.

- Qi, S.; Xu, G.; Mou, Z.; Huang, D.; Zheng, X. A fast-saliency method for real-time infrared small target detection. Infrared Phys. Technol. 2016, 77, 440–450.

- Yang, P.; Dong, L.L.; Xu, W.H. Infrared Small Maritime Target Detection Based on Integrated Target Saliency Measure. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2369–2386.

- Hui, B.W.; Song, Z.Y.; Fan, H.Q.; Zhong, P.; Hu, W.D.; Zhang, X.F.; Lin, J.G.; Su, H.Y.; Jin, W.; Zhang, Y.J.; et al. A dataset for infrared image dim-small aircraft target detection and tracking under ground/air background. China Sci. Data 2020, 5, 291–302.

| Data | Number of Frames | Image Resolution | Background Description | Target Type |
|---|---|---|---|---|
| Scene 1 | 259 | 256 × 256 | Ground–sky background, high voltage towers, and strong radiation buildings | UAV |
| Scene 2 | 151 | 256 × 256 | Ground–sky background, high-brightness roads, and forests | UAV |
| Scene 3 | 131 | 256 × 256 | Ground–sky background, grasslands, and strong radiation ground | UAV |
| Scene 4 | 75 | 256 × 256 | Ground–sky background, trees, and high-brightness ground | UAV |
| Scene 5 | 100 | 256 × 256 | Ground–sky background, telegraph poles, and high-brightness ground | UAV |
| Scene 6 | 150 | 256 × 256 | Ground–sky background, forests, and strong ground disturbance clutter | UAV |

| Methods | Parameter Settings |
|---|---|
| Top-Hat [9] | Structure size: square, local window size: 3 × 3 |
| RLCM [19] | = (2,4), (5,9) and (9,16) |
| MPCM [20] | Local window size: N = 3,5,7,9; mean filter size: 3 × 3 |
| IPI [27] | Patch size: 50 × 50, sliding step: 10, = 1/, = 10⁻⁷ |
| TLLCM [24] | Window size: 3 × 3, s = 5,7,9 |
| LEF [22] | P = 1,3,5,7,9, = 0.5, and h = 0.2 |
| PSTNN [30] | Patch size: 40 × 40, sliding step: 40, = , = 10⁻⁷ |
| WSLCM [23] | K = 9, = 0.6∼0.9 |
| Proposed | Local window size: L = 3,5,7,9, K = 4,9,11 |

| Methods | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 |
|---|---|---|---|---|---|---|
| Top-Hat [9] | 30.321 | 23.568 | 20.404 | 10.105 | 6.059 | 15.975 |
| RLCM [19] | 26.046 | 30.587 | 28.373 | 21.395 | 8.476 | 18.487 |
| MPCM [20] | 30.796 | 38.533 | 37.942 | 24.485 | 18.996 | 22.243 |
| IPI [27] | 35.465 | 38.994 | 34.064 | 23.766 | 16.049 | 31.107 |
| TLLCM [24] | 33.302 | 41.840 | 39.910 | 30.099 | 17.976 | 29.228 |
| LEF [22] | 38.445 | 40.831 | 37.878 | 28.637 | 18.818 | 30.620 |
| PSTNN [30] | 35.573 | 38.407 | 29.373 | 26.285 | 17.017 | 24.576 |
| WSLCM [23] | 36.353 | 42.122 | 40.456 | 31.293 | 19.414 | 30.478 |
| Proposed | 38.023 | 42.847 | 42.550 | 33.966 | 20.636 | 30.793 |

| Methods | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 |
|---|---|---|---|---|---|---|
| Top-Hat [9] | 24.167 | 7.264 | 5.468 | 2.920 | 2.009 | 5.958 |
| RLCM [19] | 15.735 | 15.615 | 13.402 | 10.747 | 2.719 | 7.868 |
| MPCM [20] | 28.897 | 45.049 | 43.421 | 15.294 | 9.957 | 12.717 |
| IPI [27] | 43.060 | 41.818 | 25.871 | 13.589 | 6.414 | 33.793 |
| TLLCM [24] | 33.967 | 57.377 | 50.029 | 27.964 | 8.159 | 28.127 |
| LEF [22] | 60.306 | 51.201 | 39.703 | 23.685 | 8.875 | 32.058 |
| PSTNN [30] | 43.872 | 39.179 | 16.295 | 18.037 | 7.146 | 16.031 |
| WSLCM [23] | 50.165 | 59.105 | 53.180 | 32.643 | 9.443 | 32.262 |
| Proposed | 57.183 | 64.301 | 67.949 | 43.330 | 10.753 | 32.355 |

| Methods | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 | Scene 6 |
|---|---|---|---|---|---|---|
| Top-Hat [9] | 0.594 | 0.564 | 0.544 | 0.544 | 0.542 | 0.547 |
| RLCM [19] | 4.297 | 4.423 | 4.478 | 4.387 | 4.536 | 4.371 |
| MPCM [20] | 0.162 | 0.138 | 0.133 | 0.126 | 0.131 | 0.122 |
| IPI [27] | 9.266 | 8.826 | 8.939 | 9.327 | 9.863 | 8.922 |
| TLLCM [24] | 1.535 | 1.277 | 1.432 | 1.476 | 1.285 | 1.233 |
| LEF [22] | 19.252 | 19.612 | 20.744 | 19.430 | 20.156 | 19.443 |
| PSTNN [30] | 0.299 | 0.257 | 0.302 | 0.315 | 0.267 | 0.324 |
| WSLCM [23] | 5.269 | 4.807 | 5.478 | 4.626 | 5.444 | 5.294 |
| Proposed | 0.216 | 0.217 | 0.217 | 0.219 | 0.221 | 0.220 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wei, H.; Ma, P.; Pang, D.; Li, W.; Qian, J.; Guo, X. Weighted Local Ratio-Difference Contrast Method for Detecting an Infrared Small Target against Ground–Sky Background. Remote Sens. 2022, 14, 5636. https://doi.org/10.3390/rs14225636
