Multi-Sensor Fusion Based Estimation of Tire-Road Peak Adhesion Coefficient Considering Model Uncertainty

Abstract

1. Introduction

1.1. Motivation

1.2. Related Work

1.3. Contribution

1.4. Paper Organization

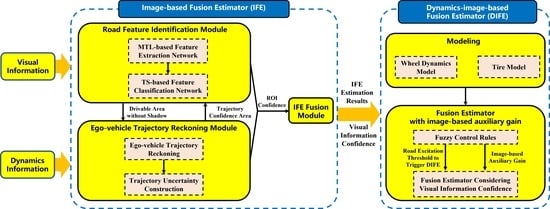

2. Estimation Framework

3. Design of Image-Based Fusion Estimator Considering Model Uncertainty

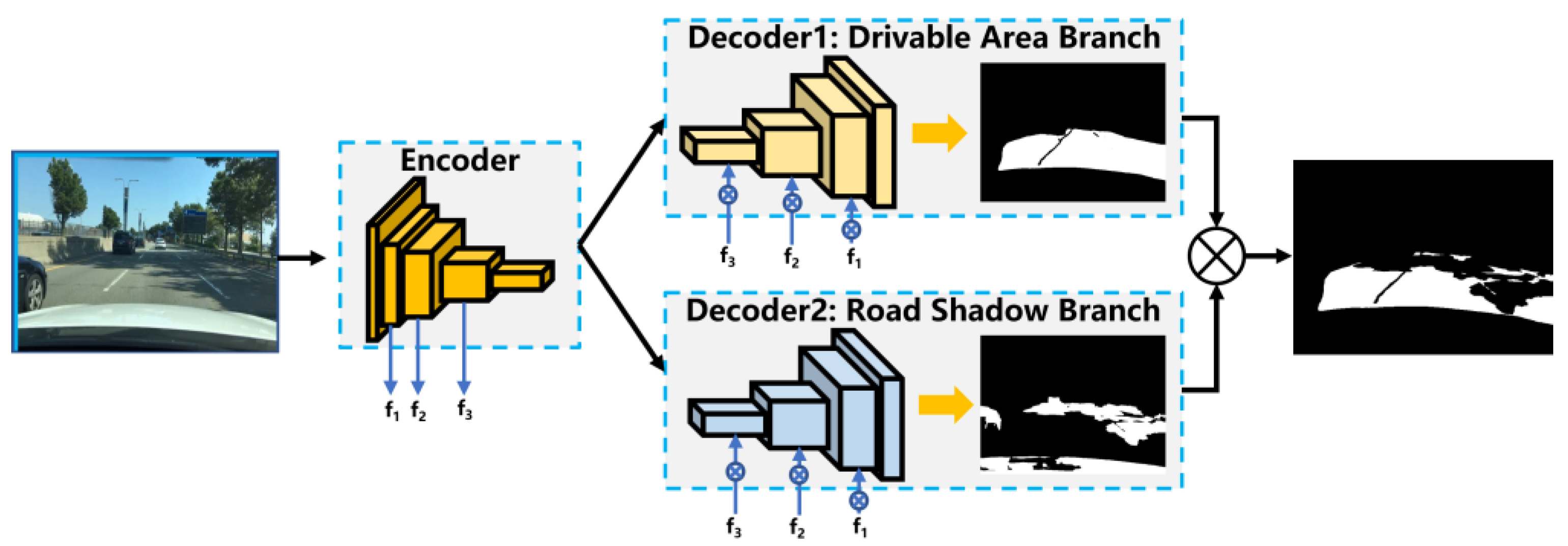

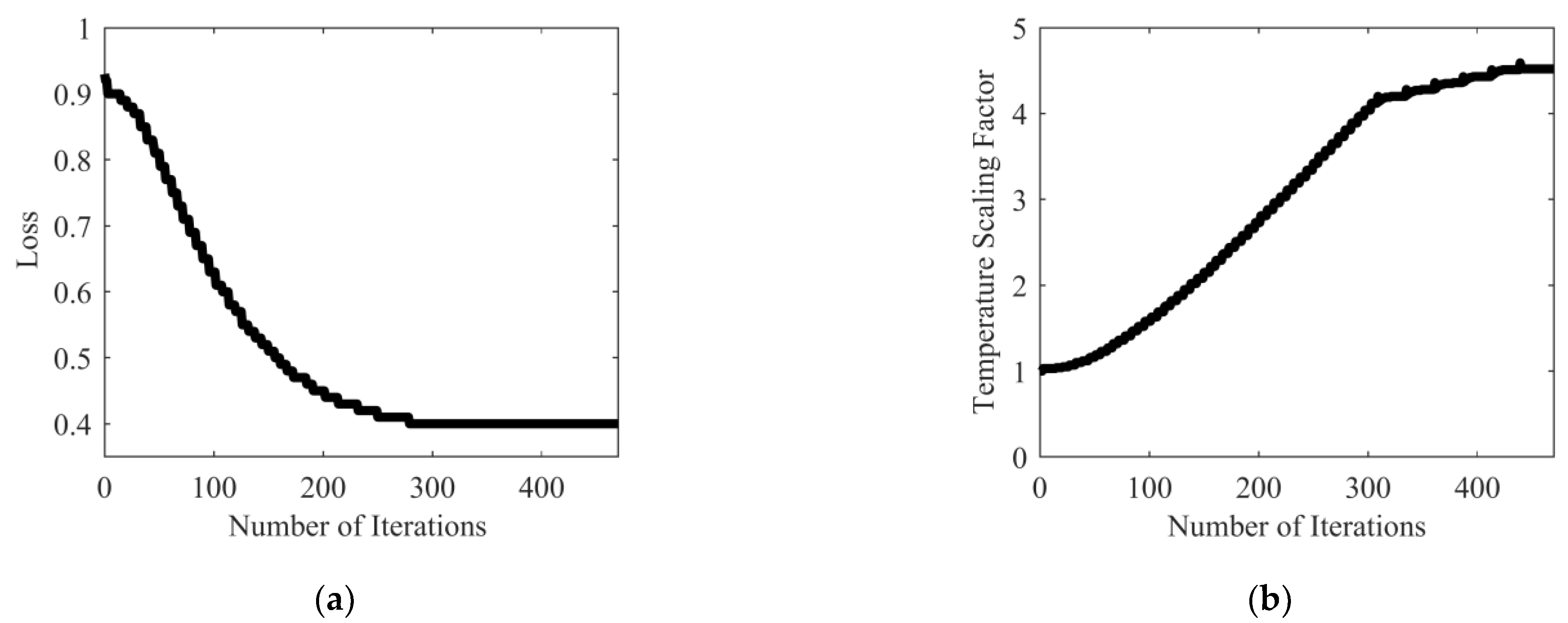

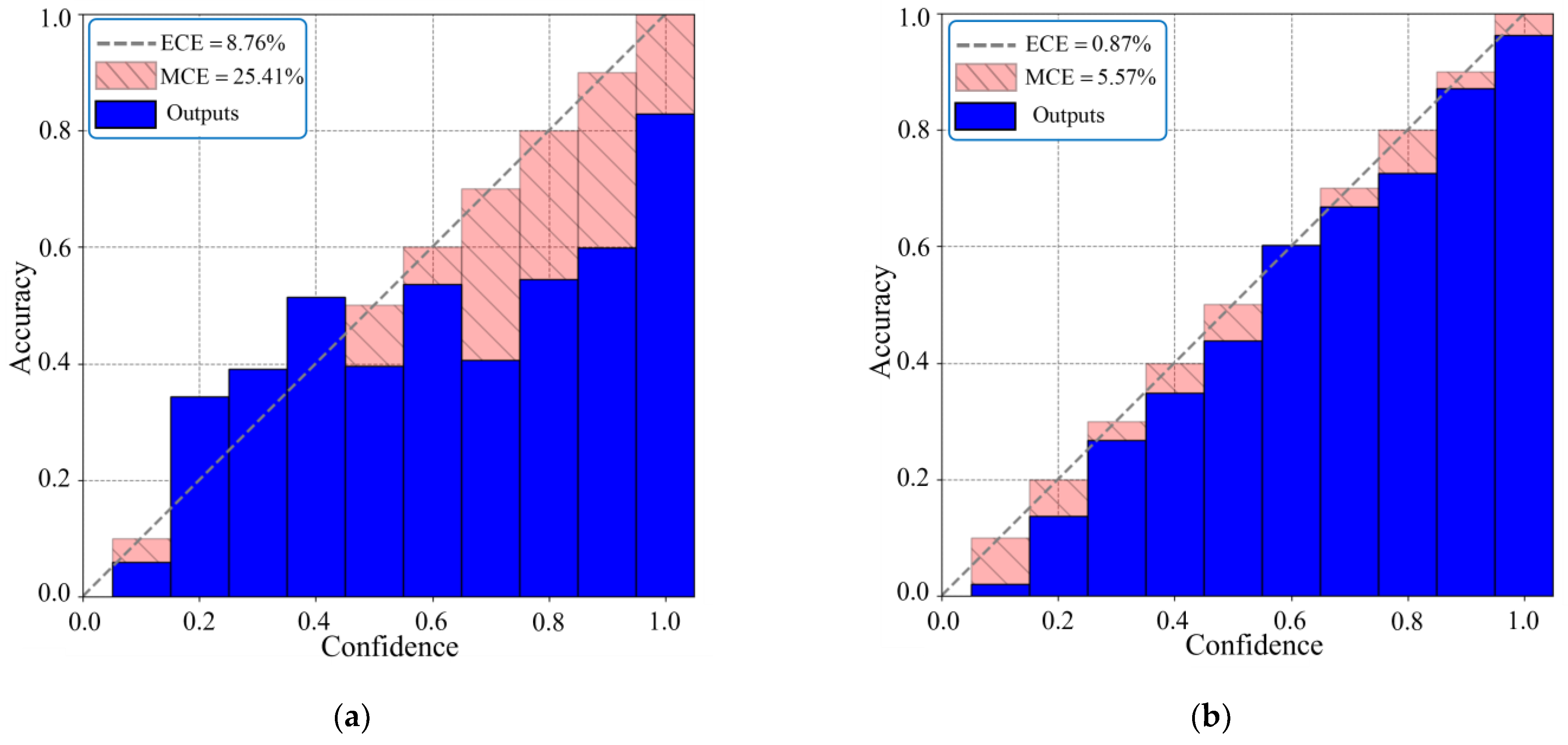

3.1. Road Feature Identification Module Considering Deep-Learning Model Uncertainty

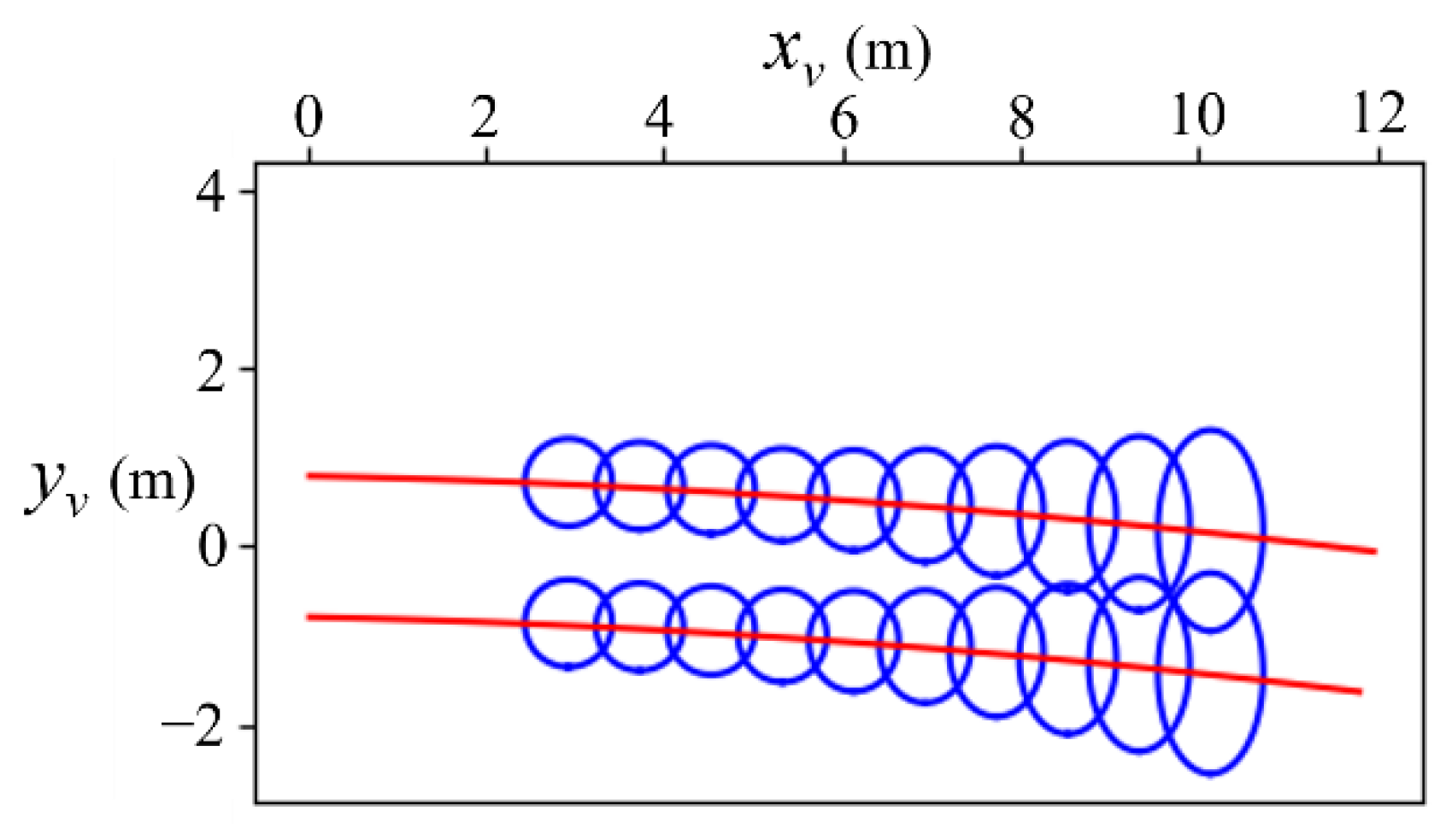

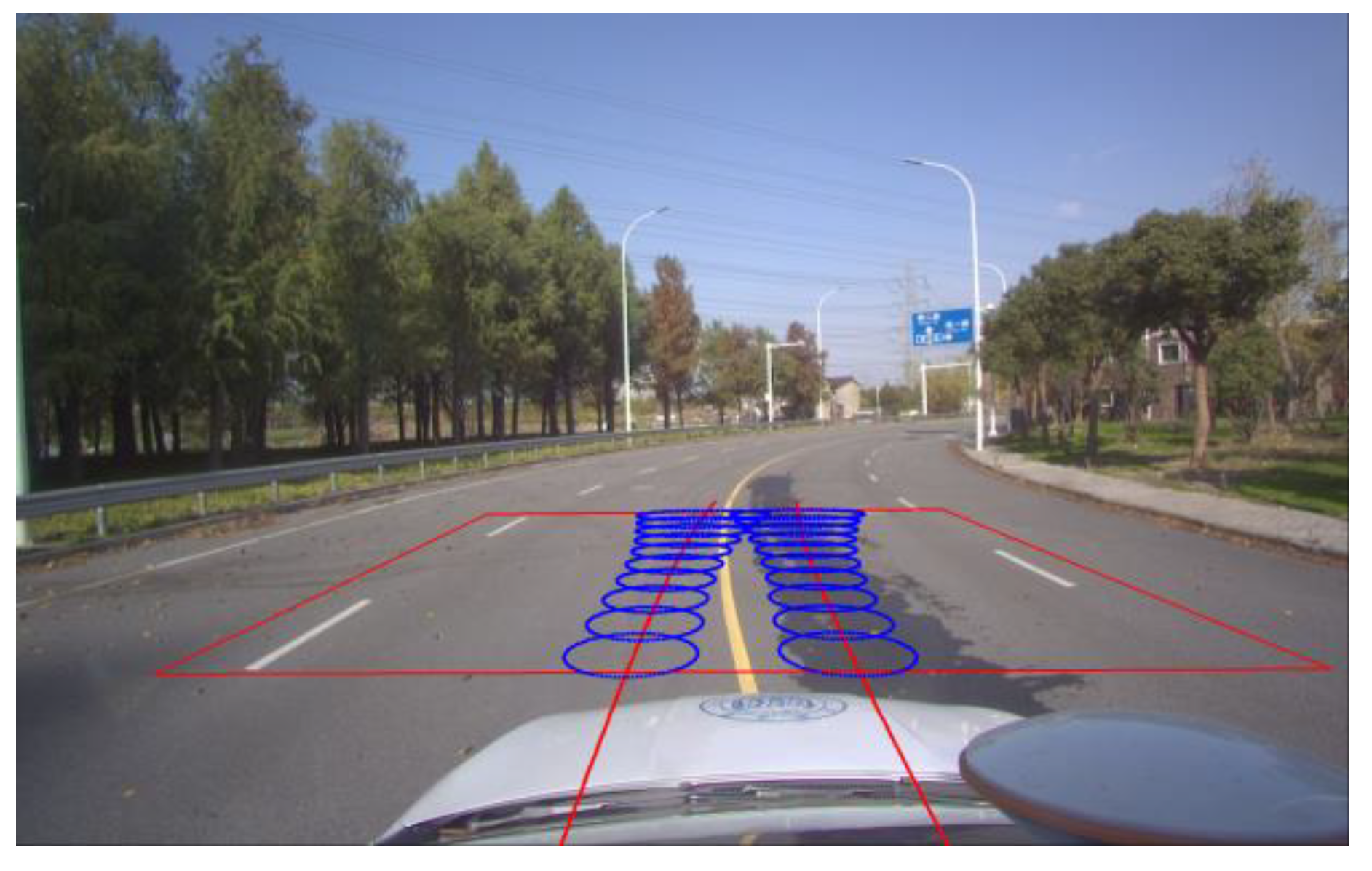

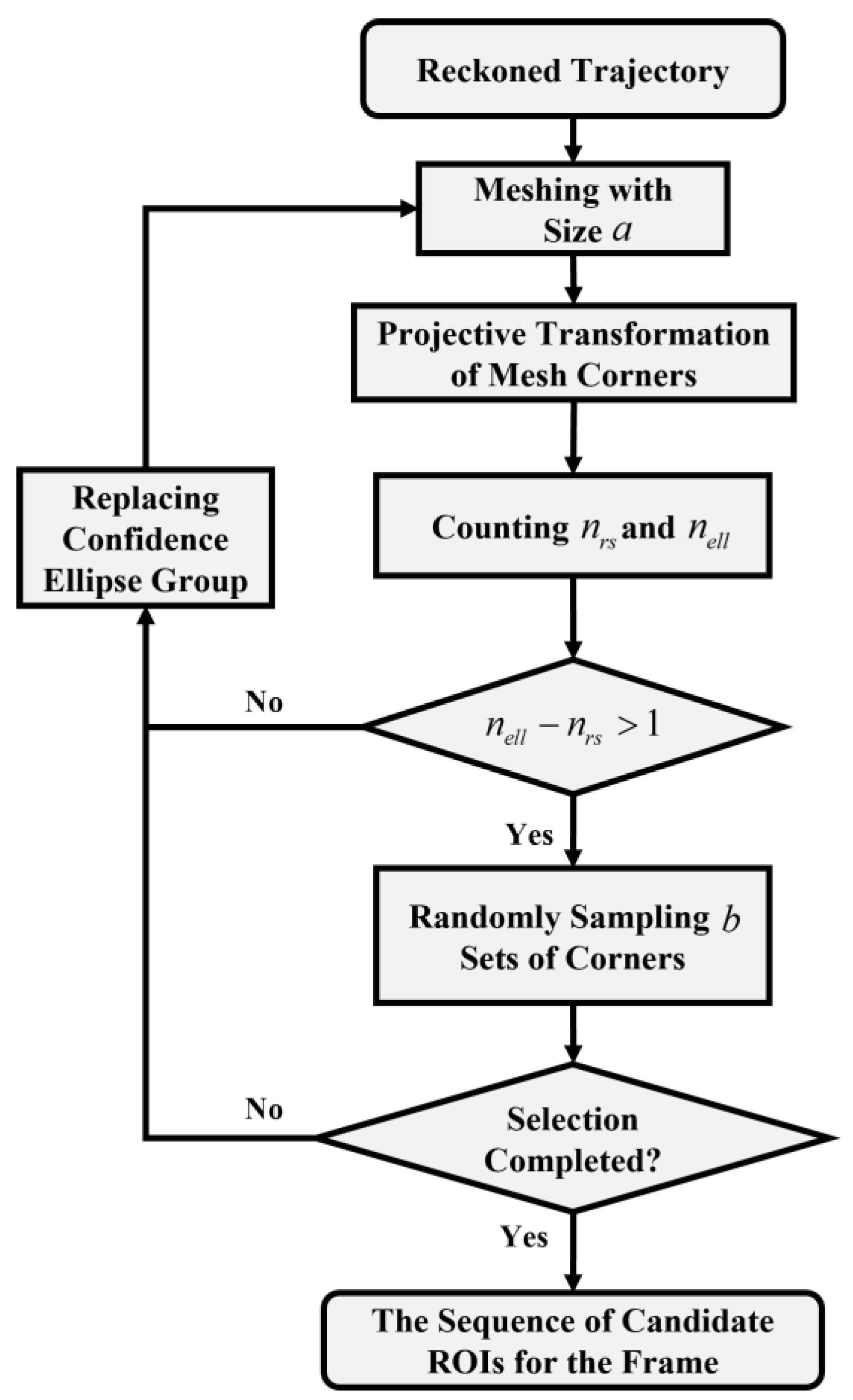

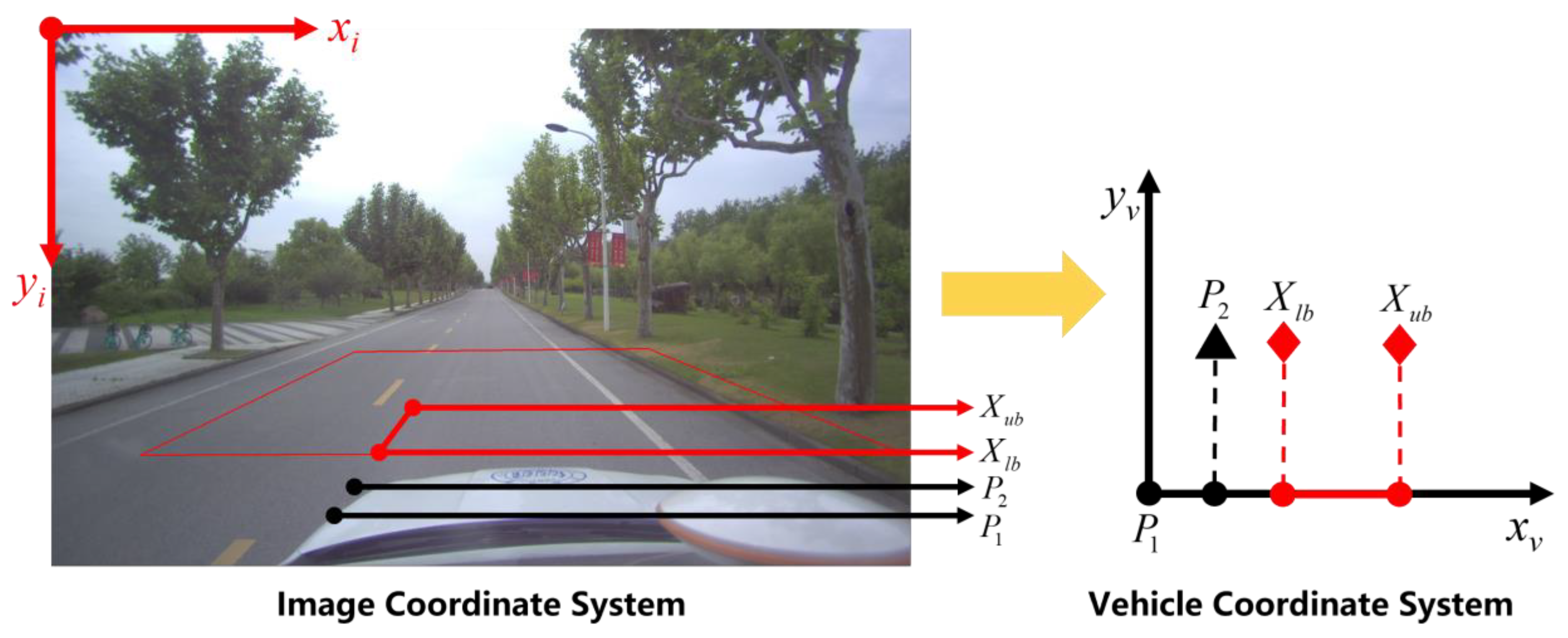

3.2. Ego-Vehicle Trajectory Reckoning Module Considering Kinematic Model Uncertainty

3.3. Fusion Module Based on Improved DSET

3.4. Spatiotemporal Transformation Strategy for Identification Results

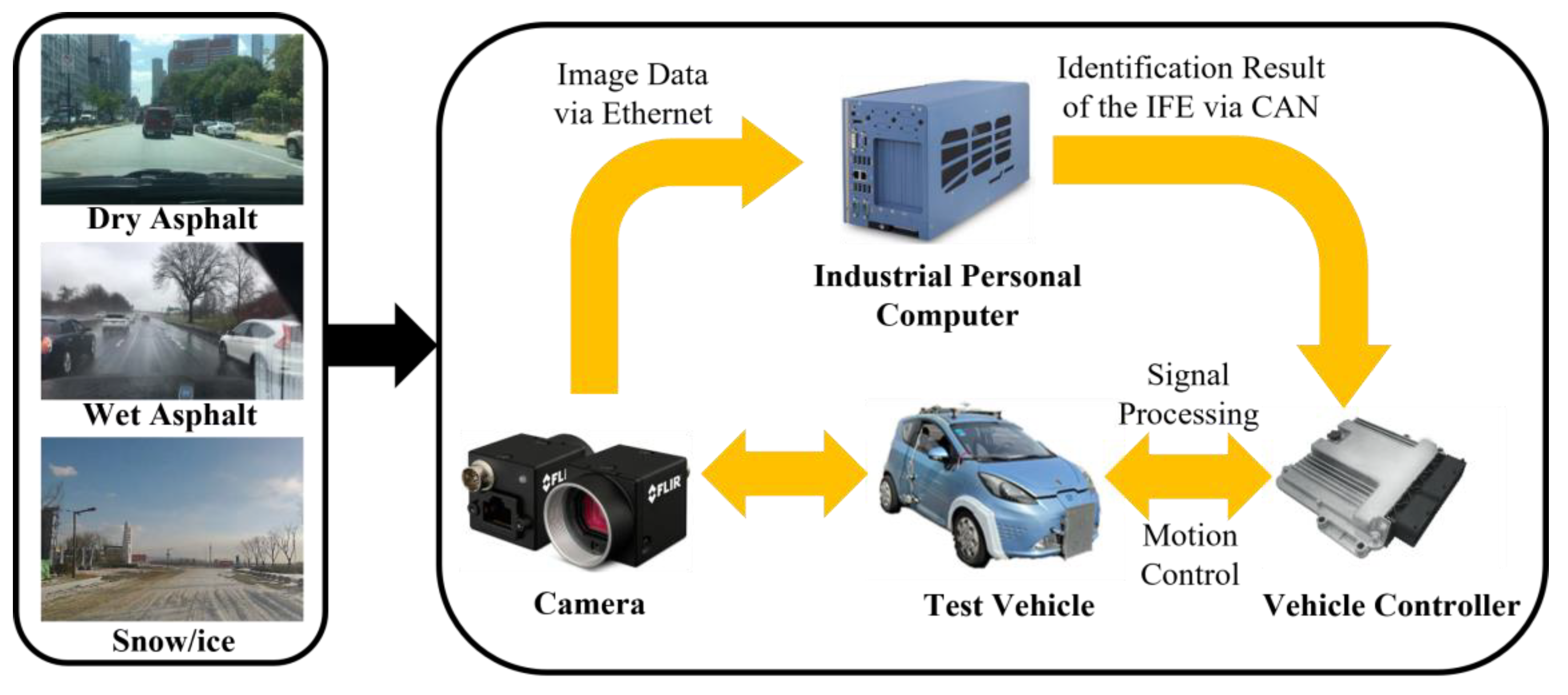

4. Design of Dynamics-Image-Based Fusion Estimator

4.1. Modeling

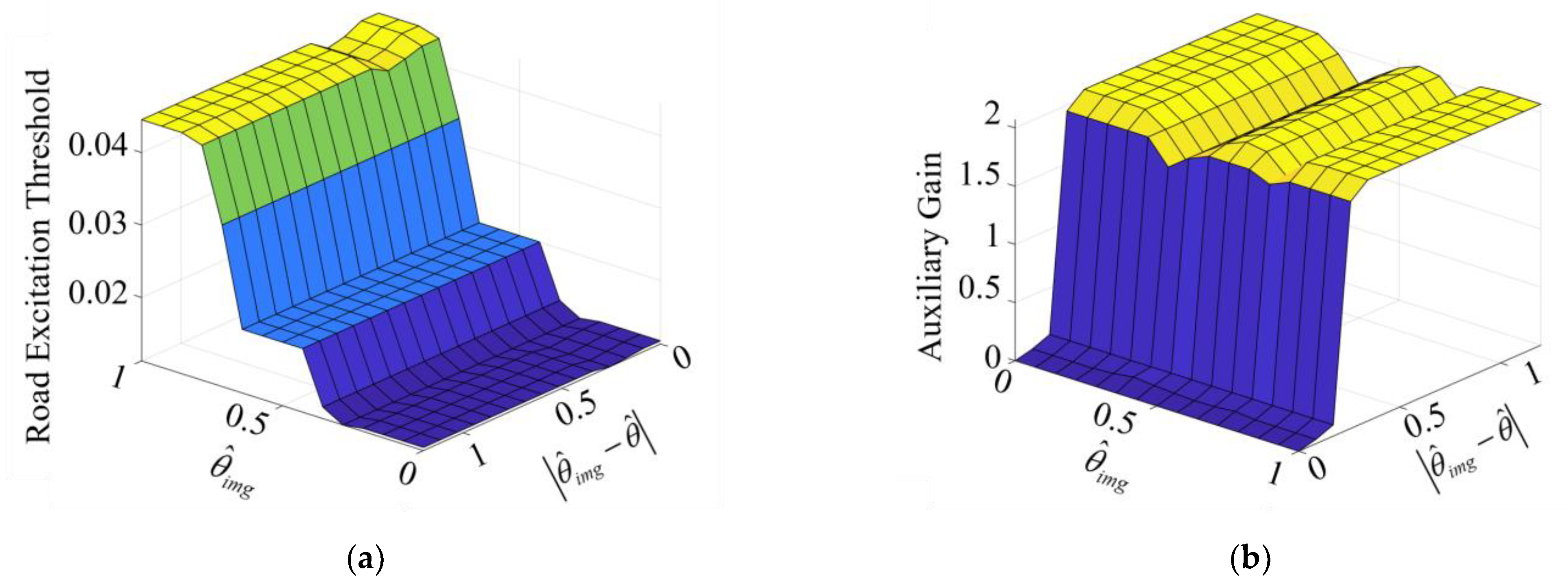

4.2. Fusion Estimator Design

5. Simulation and Experimental Validation

5.1. Performance Validation for IFE

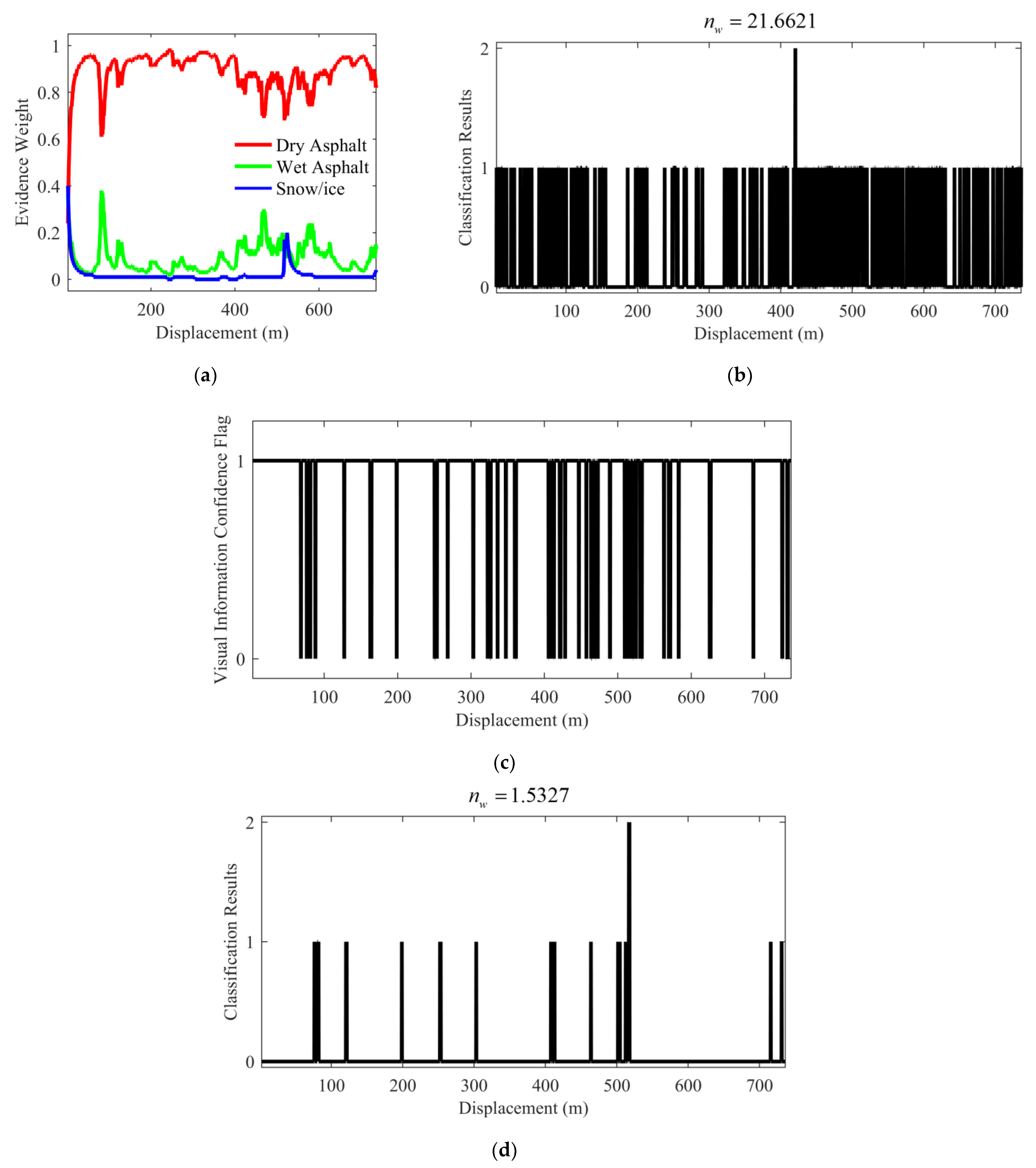

5.1.1. Test under the Normal Scenario

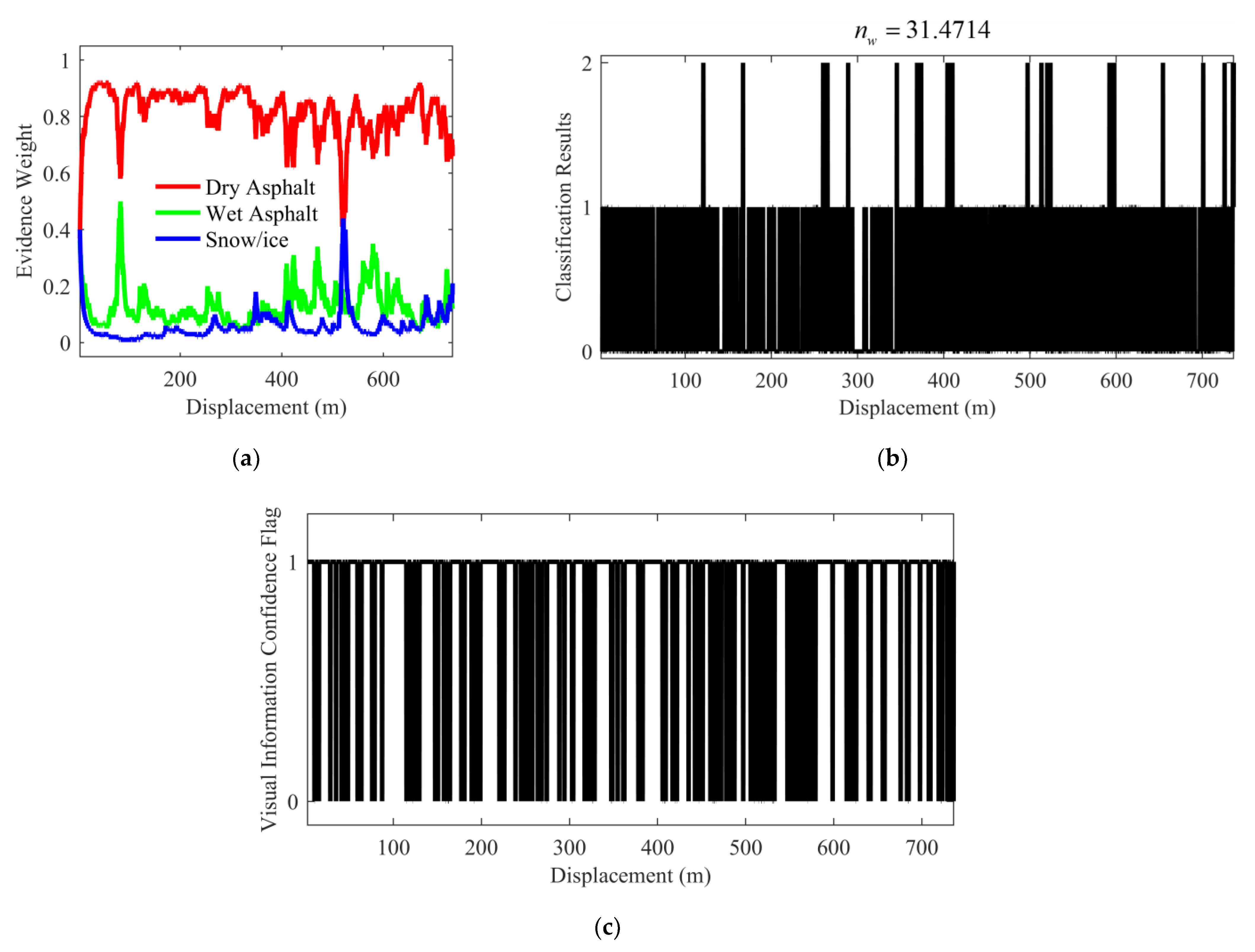

5.1.2. Test under the Scenario with Environmental Interference

5.1.3. Specific Test Results of IFE

5.2. Performance Validation for DIFE

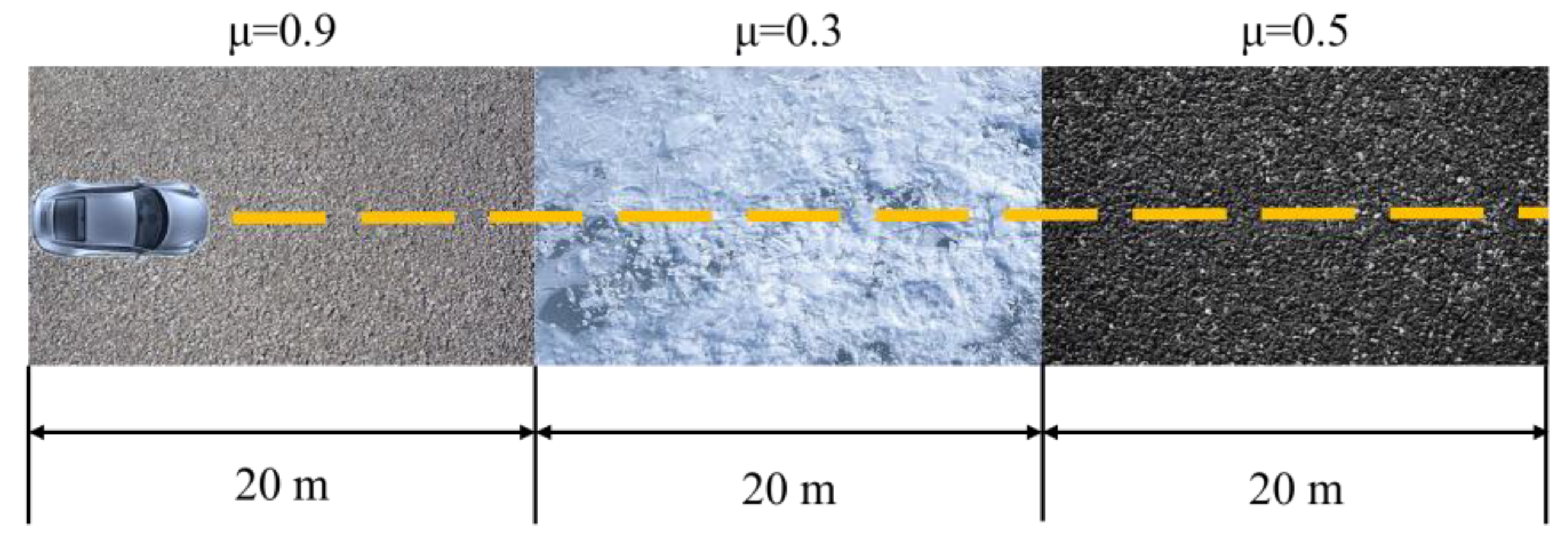

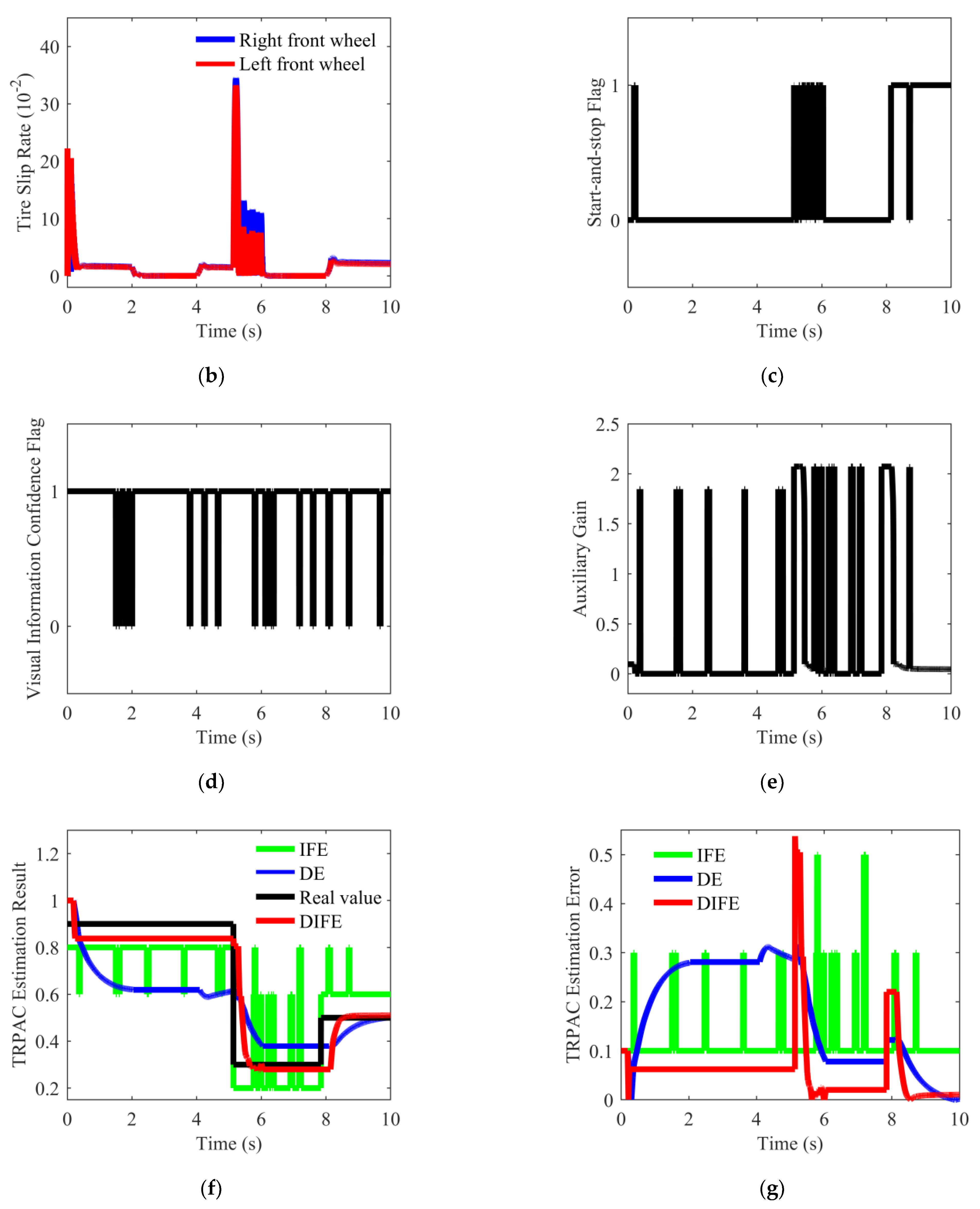

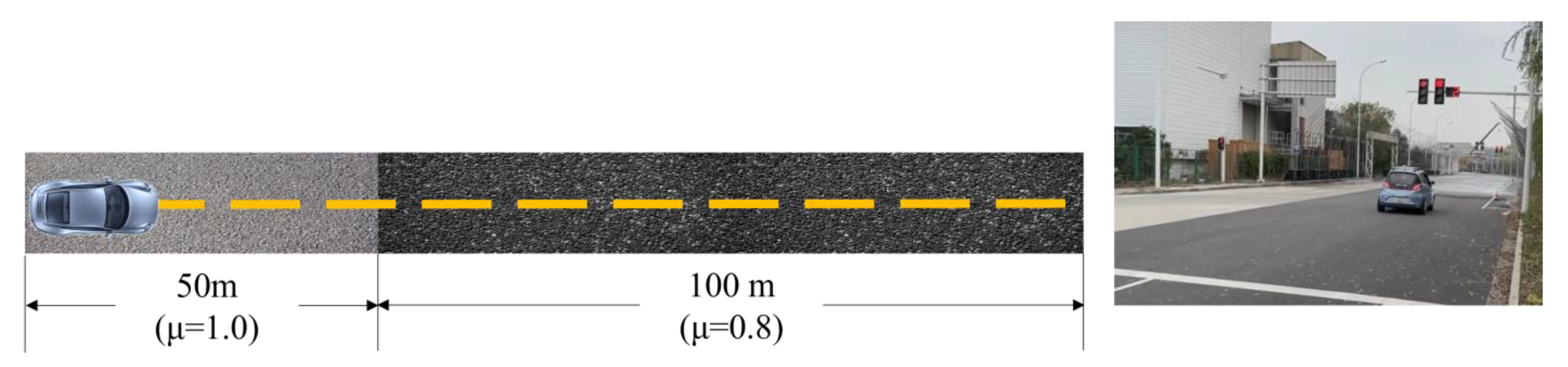

5.2.1. Simulation on Variable Friction Road

5.2.2. Vehicle Test on Variable Friction Road

5.2.3. Specific Test Results of DIFE

6. Conclusions

- (1)

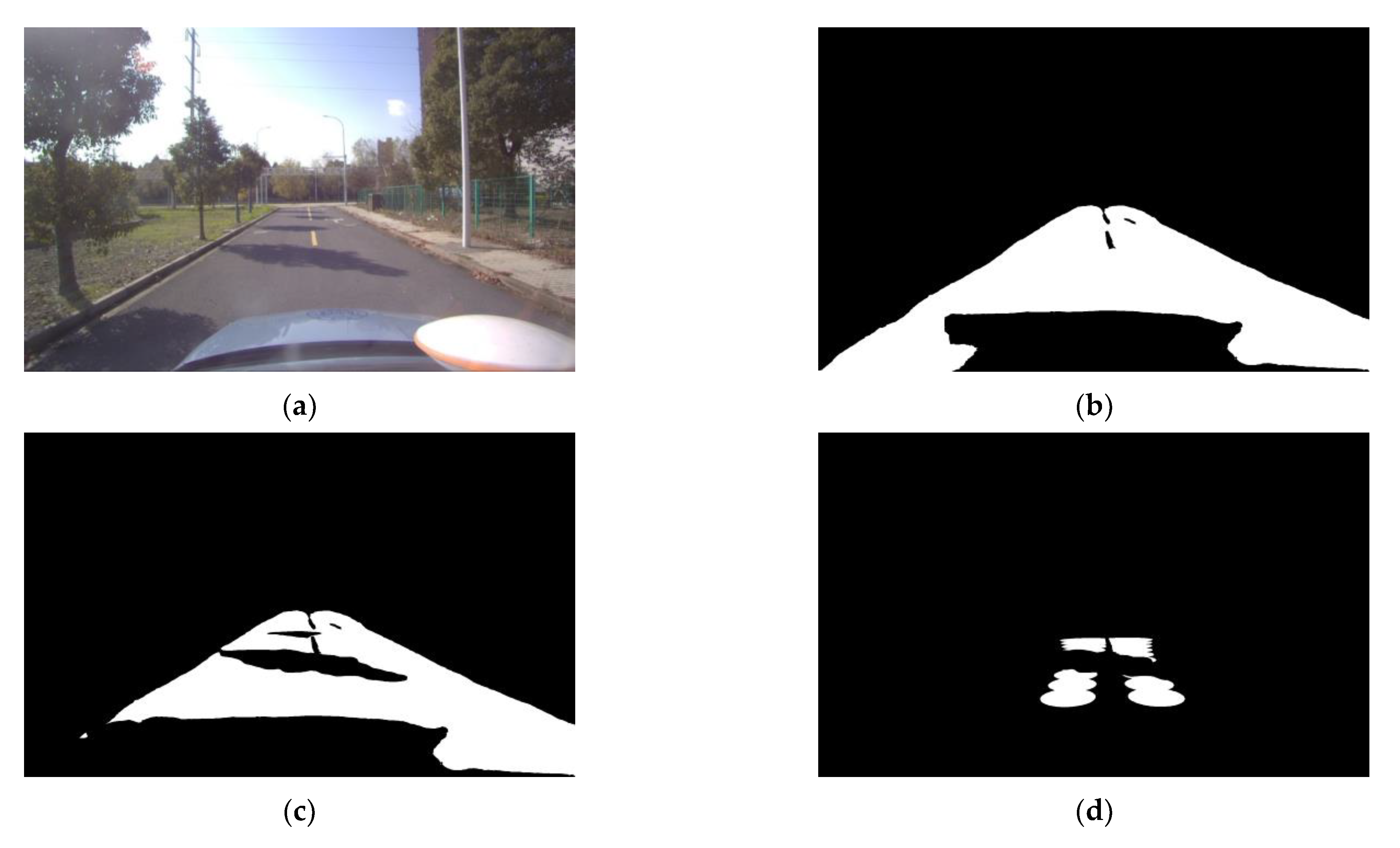

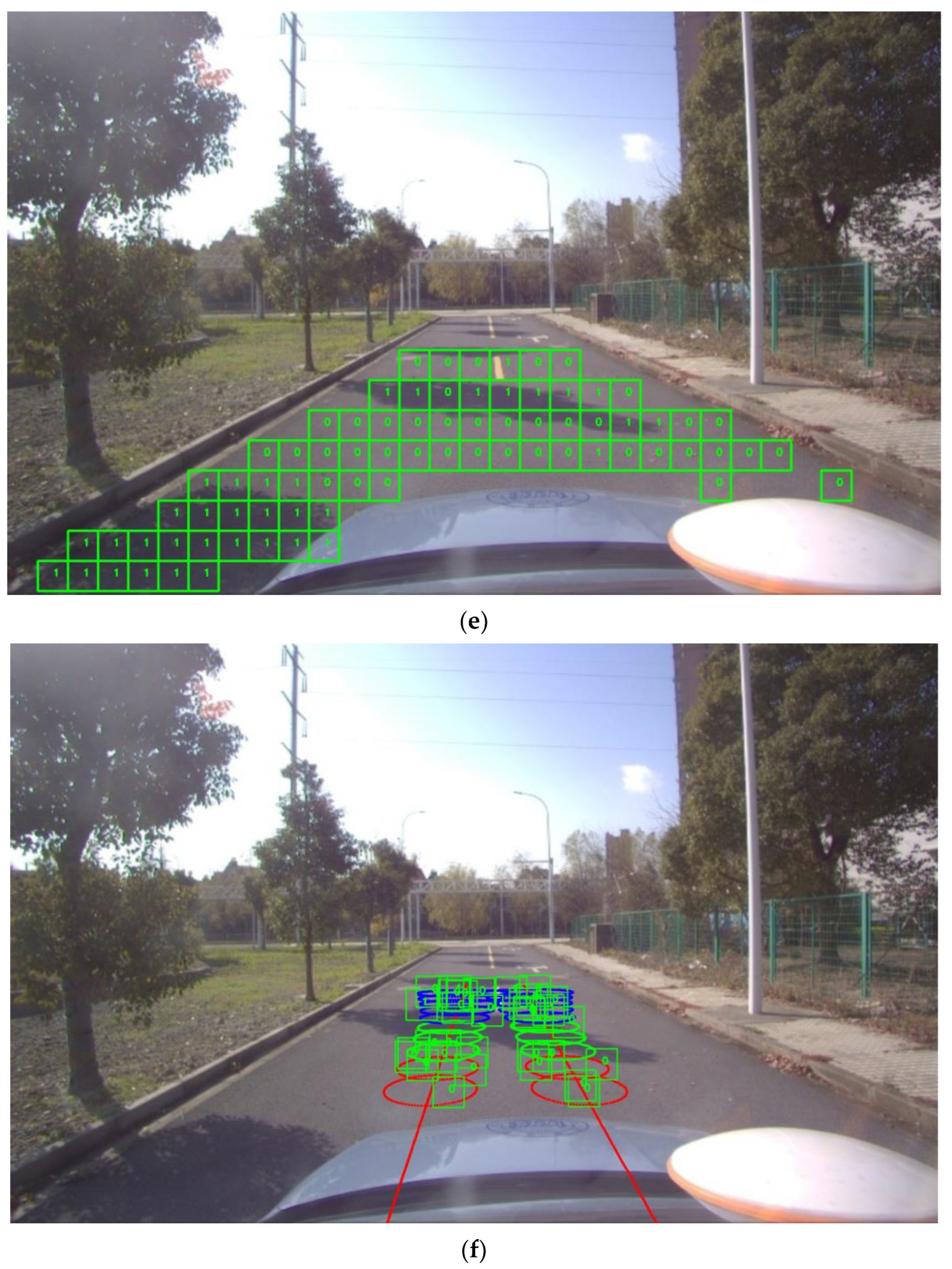

- Considering common visual degradation factors, an IFE is proposed to achieve accurate classification of the road surface condition on which the vehicle will travel. The road image is segmented and classified in the road feature identification module to obtain road features without shadow coverage. Then, the ego-vehicle trajectory reckoning module is applied to extract the effective road area. Based on the fusion module, the candidate ROIs on the effective road area are fused to output the defined visual information confidence and classification results at the same time.

- (2)

- Based on gain scheduling theory, a DIFE considering the visual information confidence is designed to accelerate the convergence of DE while considering the uncertainty of visual information. In this way, the influence of incorrect IFE estimation results can be avoided as much as possible, and the accuracy of TRPAC estimation results can be ensured.

- (3)

- The performance of the IFE is verified in the vehicle tests under undisturbed and disturbed scenarios. The effectiveness of DIFE is verified in simulation and real vehicle experiments. The results show that the proposed DIFE has better estimation robustness and estimation accuracy than other single-source-based estimators. Moreover, the proposed DIFE can quickly respond to dangerous conditions such as sudden changes in the road surface, which is beneficial to the vehicle active safety system and intelligent transportation system.
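
The fusion of candidate ROIs summarized in conclusion (1) builds on Dempster-Shafer evidence theory (DSET). As a rough illustration only, the sketch below implements the classical Dempster rule of combination, not the improved variant used in the paper, whose details are not reproduced here. The class labels, the two mass assignments `roi_a` and `roi_b`, and the function name `combine` are all hypothetical.

```python
from itertools import product

# Frame of discernment: hypothetical road-surface classes.
DRY = frozenset({"dry"})
WET = frozenset({"wet"})
SNOW = frozenset({"snow"})
THETA = DRY | WET | SNOW  # full set, i.e. "unknown"


def combine(m1, m2):
    """Classical Dempster rule for two mass functions.

    Each mass function maps a frozenset of classes to a mass; masses sum to 1.
    Returns the normalized combined masses and the conflict coefficient K.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Mass assigned to incompatible hypotheses counts as conflict.
            conflict += mass_a * mass_b
    norm = 1.0 - conflict
    if norm <= 1e-12:
        raise ValueError("sources are in total conflict; rule is undefined")
    return {k: v / norm for k, v in combined.items()}, conflict


# Hypothetical evidence from two candidate ROIs (assumed values).
roi_a = {DRY: 0.7, WET: 0.2, THETA: 0.1}
roi_b = {DRY: 0.6, SNOW: 0.1, THETA: 0.3}

fused, conflict = combine(roi_a, roi_b)
best_class = max(fused, key=fused.get)
```

With these assumed inputs the two ROIs agree on dry asphalt, so the fused mass concentrates on `DRY`, while the conflict coefficient measures how strongly the two sources disagree. A confidence measure could be derived from quantities like these, but how the paper defines its visual information confidence is not shown in this sketch.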

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DE | Dynamics-based Estimator |
| DFE | Dynamics-based Fusion Estimator |
| DIFE | Dynamics-image-based Fusion Estimator |
| DSET | Dempster-Shafer Evidence Theory |
| IE | Image-based Estimator |
| IFE | Image-based Fusion Estimator |
| TRPAC | Tire-Road Peak Adhesion Coefficient |

References

1. Blundell, M.; Harty, D. The Multibody Systems Approach to Vehicle Dynamics, 2nd ed.; Elsevier: Amsterdam, The Netherlands, 2015; pp. 335–450.
2. Xue, W.; Zheng, L. Active Collision Avoidance System Design Based on Model Predictive Control with Varying Sampling Time. Automot. Innov. 2020, 3, 62–72.
3. Cabrera, J.A.; Castillo, J.J.; Perez, J.; Velasco, J.M.; Guerra, A.J.; Hernandez, P. A procedure for determining tire-road friction characteristics using a modification of the magic formula based on experimental results. Sensors 2018, 18, 896.
4. Zhang, X.; Xu, Y.; Pan, M.; Ren, F. A vehicle ABS adaptive sliding-mode control algorithm based on the vehicle velocity estimation and tyre/road friction coefficient estimations. Veh. Syst. Dyn. 2014, 52, 475–503.
5. Faraji, M.; Majd, V.J.; Saghafi, B.; Sojoodi, M. An optimal pole-matching observer design for estimating tyre-road friction force. Veh. Syst. Dyn. 2010, 48, 1155–1166.
6. Beal, C.E. Rapid Road Friction Estimation using Independent Left/Right Steering Torque Measurements. Veh. Syst. Dyn. 2020, 58, 377–403.
7. Hu, J.; Rakheja, S.; Zhang, Y. Real-time estimation of tire-road friction coefficient based on lateral vehicle dynamics. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2020, 234, 2444–2457.
8. Shao, L.; Jin, C.; Lex, C.; Eichberger, A. Robust road friction estimation during vehicle steering. Veh. Syst. Dyn. 2019, 57, 493–519.
9. Shao, L.; Jin, C.; Eichberger, A.; Lex, C. Grid Search Based Tire-Road Friction Estimation. IEEE Access 2020, 8, 81506–81525.
10. Liu, W.; Xiong, L.; Xia, X.; Lu, Y.; Gao, L.; Song, S. Vision-aided intelligent vehicle sideslip angle estimation based on a dynamic model. IET Intell. Transp. Syst. 2020, 14, 1183–1189.
11. Almazan, E.J.; Qian, Y.; Elder, J.H. Road Segmentation for Classification of Road Weather Conditions. In Proceedings of the Computer Vision – ECCV 2016. 14th European Conference: Workshops, Cham, Switzerland, 8–16 October 2016.
12. Leng, B.; Jin, D.; Xiong, L.; Yu, Z. Estimation of tire-road peak adhesion coefficient for intelligent electric vehicles based on camera and tire dynamics information fusion. Mech. Syst. Signal Process. 2021, 150, 1–15.
13. Wang, S.; Kodagoda, S.; Shi, L.; Wang, H. Road-Terrain Classification for Land Vehicles: Employing an Acceleration-Based Approach. IEEE Veh. Technol. Mag. 2017, 12, 34–41.
14. Roychowdhury, S.; Zhao, M.; Wallin, A.; Ohlsson, N.; Jonasson, M. Machine Learning Models for Road Surface and Friction Estimation using Front-Camera Images. In Proceedings of the 2018 International Joint Conference on Neural Networks, Rio de Janeiro, Brazil, 8–13 July 2018.
15. Cheng, L.; Zhang, X.; Shen, J. Road surface condition classification using deep learning. J. Visual Commun. Image Represent. 2019, 64, 102638.
16. Du, Y.; Liu, C.; Song, Y.; Li, Y.; Shen, Y. Rapid Estimation of Road Friction for Anti-Skid Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2020, 21, 2461–2470.
17. Peng, L.; Wang, H.; Li, J. Uncertainty Evaluation of Object Detection Algorithms for Autonomous Vehicles. Automot. Innov. 2021, 4, 241–252.
18. Chen, L.; Luo, Y.; Bian, M.; Qin, Z.; Luo, J.; Li, K. Estimation of tire-road friction coefficient based on frequency domain data fusion. Mech. Syst. Signal Process. 2017, 85, 177–192.
19. Svensson, L.; Torngren, M. Fusion of Heterogeneous Friction Estimates for Traction Adaptive Motion Planning and Control. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference, Indianapolis, IN, USA, 19–22 September 2021.
20. Jin, D.; Leng, B.; Yang, X.; Xiong, L.; Yu, Z. Road Friction Estimation Method Based on Fusion of Machine Vision and Vehicle Dynamics. In Proceedings of the 31st IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 19 October–13 November 2020.
21. Tian, C.; Leng, B.; Hou, X.; Huang, Y.; Zhao, W.; Jin, D.; Xiong, L.; Zhao, J. Robust Identification of Road Surface Condition Based on Ego-Vehicle Trajectory Reckoning. Automot. Innov. 2022, 1–12.
22. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 31st Meeting of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.
23. Hullermeier, E.; Waegeman, W. Aleatoric and epistemic uncertainty in machine learning: An introduction to concepts and methods. Mach. Learn. 2021, 110, 457–506.
24. Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017.
25. Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. Autoaugment: Learning augmentation strategies from data. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.
26. Chen, H.; Wang, X.; Wang, J. A Trajectory Planning Method Considering Intention-aware Uncertainty for Autonomous Vehicles. In Proceedings of the 2018 Chinese Automation Congress, Xi’an, China, 30 November–2 December 2018.
27. Peniak, P.; Bubenikova, E.; Kanalikova, A. The redundant virtual sensors via edge computing. In Proceedings of the 26th International Conference on Applied Electronics, Pilsen, Czech Republic, 7–8 September 2021.
28. Dempster, A.P. Upper and lower probabilities induced by a multivalued mapping. In Classic Works of the Dempster-Shafer Theory of Belief Functions; Yager, R.R., Liu, L., Eds.; Springer: Berlin, Germany, 2008; pp. 57–72.
29. Bian, M.; Chen, L.; Luo, Y.; Li, K. A dynamic model for tire/road friction estimation under combined longitudinal/lateral slip situation. In Proceedings of the SAE 2014 World Congress and Exhibition, Detroit, MI, USA, 8–10 April 2014.
30. Yu, F.; Lin, Y. Automotive System Dynamics, 2nd ed.; Mechanical Industry Press: Beijing, China, 2017; pp. 30–67.
31. Xia, X.; Xiong, L.; Sun, K.; Yu, Z.P. Estimation of maximum road friction coefficient based on Lyapunov method. Int. J. Automot. Technol. 2016, 17, 991–1002.

| Dataset | Dry Asphalt | Wet Asphalt | Snow/Ice |
|---|---|---|---|
| Training set (normal) | 4258 | 2442 | 1214 |
| Validation set (normal) | 1075 | 617 | 299 |
| Validation set (noised) | 750 | 450 | 221 |

| | S | M | B |
|---|---|---|---|
| S | SS | SM | SB |
| M | MS | MM | MB |
| B | BS | BM | BB |

| Parameter | Value |
|---|---|
| Mass (kg) | 1343.8 |
| Wheelbase (m) | 2.305 |
| Wheel track (m) | 1.356 |
| Height of COG (m) | 0.54 |
| Tire rolling radius (m) | 0.29 |
| Distance between COG and rear axle (m) | 1.193 |
| Motor peak torque (Nm) | 320 |

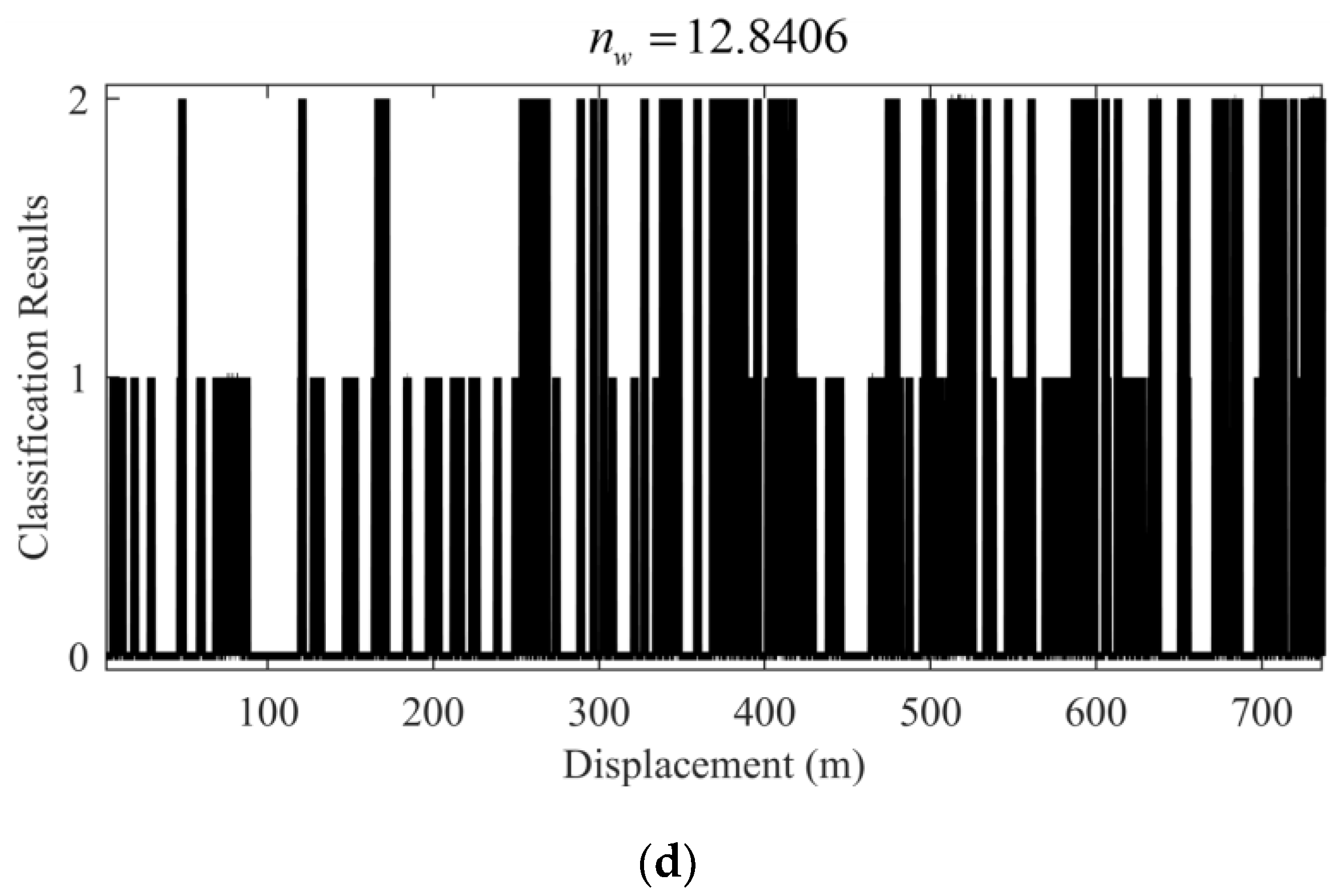

| Scenario | Method | Average Incorrect Classification Results per 100 Consecutive Frames |
|---|---|---|
| Normal | IE based on voting strategy [20] | 21.6621 |
| Normal | IFE | 1.5327 |
| With interference | IE based on voting strategy [20] | 31.4714 |
| With interference | IFE | 12.8406 |
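
The improvement implied by the table above can be made explicit as a relative reduction in misclassified frames. The short sketch below only re-computes that ratio from the four reported averages; the dictionary layout and the helper name `relative_reduction` are illustrative assumptions, not part of the paper.

```python
# Average incorrect classifications per 100 consecutive frames, as reported above.
reported = {
    "normal": {"ie_voting": 21.6621, "ife": 1.5327},
    "interference": {"ie_voting": 31.4714, "ife": 12.8406},
}


def relative_reduction(baseline, improved):
    """Fraction by which `improved` lowers the error count relative to `baseline`."""
    return (baseline - improved) / baseline


reductions = {
    scenario: relative_reduction(values["ie_voting"], values["ife"])
    for scenario, values in reported.items()
}

for scenario, reduction in reductions.items():
    print(f"{scenario}: {reduction:.1%} fewer incorrect frames with IFE")
```

On these figures the IFE cuts misclassifications by roughly 93% in the normal scenario and by roughly 59% under environmental interference, relative to the voting-based IE.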

| Condition | Method | Steady-State Estimation Error | Convergence Time |
|---|---|---|---|
| Simulation | IFE | 0.1–0.5 | 0.03 s |
| Simulation | DE | 0.08 | 1.45–1.6 s |
| Simulation | DIFE | 0.02 | 0.5 s |
| Vehicle test | IFE | 0.2–0.4 | 0.03 s |
| Vehicle test | DE | >0.09 | >4 s |
| Vehicle test | DIFE | 0.03 | 0.4 s |
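
The table above shows the DIFE converging much faster than the DE while keeping a small steady-state error. One way to picture how a confidence-scheduled gain can produce this behavior is the toy first-order estimator below. It is a minimal sketch under strong assumptions and not the paper's observer: the pseudo-measurement `mu_dyn`, the scheduling law in `scheduled_gain`, and every numeric parameter (`k_base`, `k_boost`, `e_ref`, `dt`, `tol`) are hypothetical.

```python
def scheduled_gain(confidence, visual_error, k_base=1.0, k_boost=9.0, e_ref=0.1):
    """Hypothetical scheduling law: raise the gain when the image-based result
    is trusted (high confidence) and disagrees with the current estimate."""
    c = min(max(confidence, 0.0), 1.0)
    disagreement = min(abs(visual_error) / e_ref, 1.0)
    return k_base + k_boost * c * disagreement


def steps_to_converge(mu0, mu_dyn, mu_img, confidence,
                      dt=0.01, tol=0.02, max_steps=10_000):
    """Euler-integrate d(mu_hat)/dt = k * (mu_dyn - mu_hat) and count the steps
    until the estimate is within `tol` of the dynamics-based target."""
    mu_hat = mu0
    for step in range(max_steps):
        if abs(mu_dyn - mu_hat) < tol:
            return step
        k = scheduled_gain(confidence, mu_img - mu_hat)
        mu_hat += dt * k * (mu_dyn - mu_hat)
    return max_steps


# Assumed scenario: the road changes from high to low adhesion (0.8 -> 0.3).
steps_without_vision = steps_to_converge(0.8, 0.3, 0.3, confidence=0.0)
steps_with_vision = steps_to_converge(0.8, 0.3, 0.3, confidence=0.9)
steps_wrong_vision = steps_to_converge(0.8, 0.3, 0.8, confidence=0.9)
```

In this toy model the correction term is driven only by the dynamics-based target, so the visual input changes how fast the estimate moves, not where it settles. With zero confidence the estimator reduces to the fixed-gain case, and a confident but wrong image result still converges to the same value.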

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tian, C.; Leng, B.; Hou, X.; Xiong, L.; Huang, C. Multi-Sensor Fusion Based Estimation of Tire-Road Peak Adhesion Coefficient Considering Model Uncertainty. Remote Sens. 2022, 14, 5583. https://doi.org/10.3390/rs14215583
