Estimating Crop Seed Composition Using Machine Learning from Multisensory UAV Data

Abstract

1. Introduction

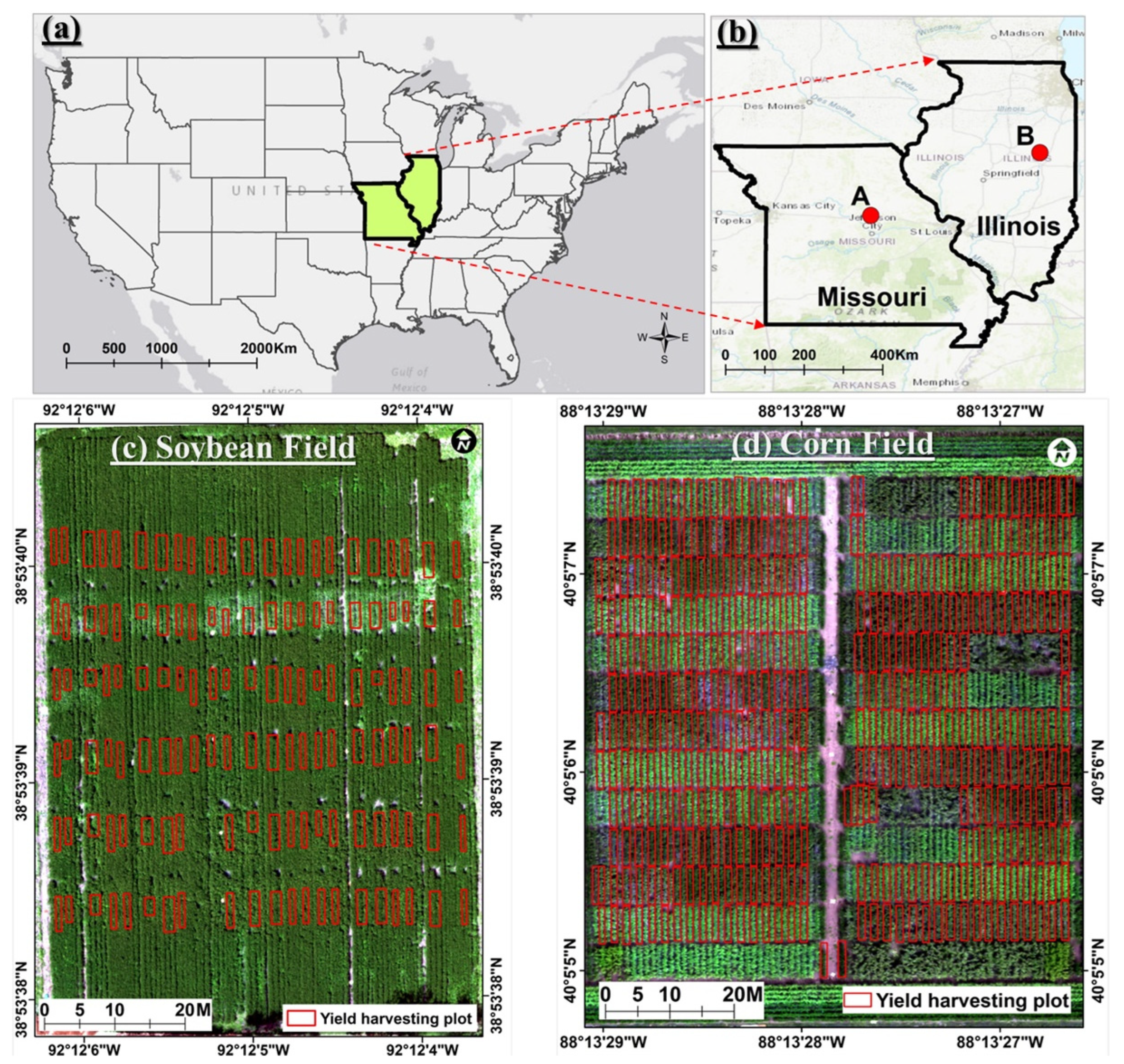

2. Test Site and Data

2.1. Test Sites

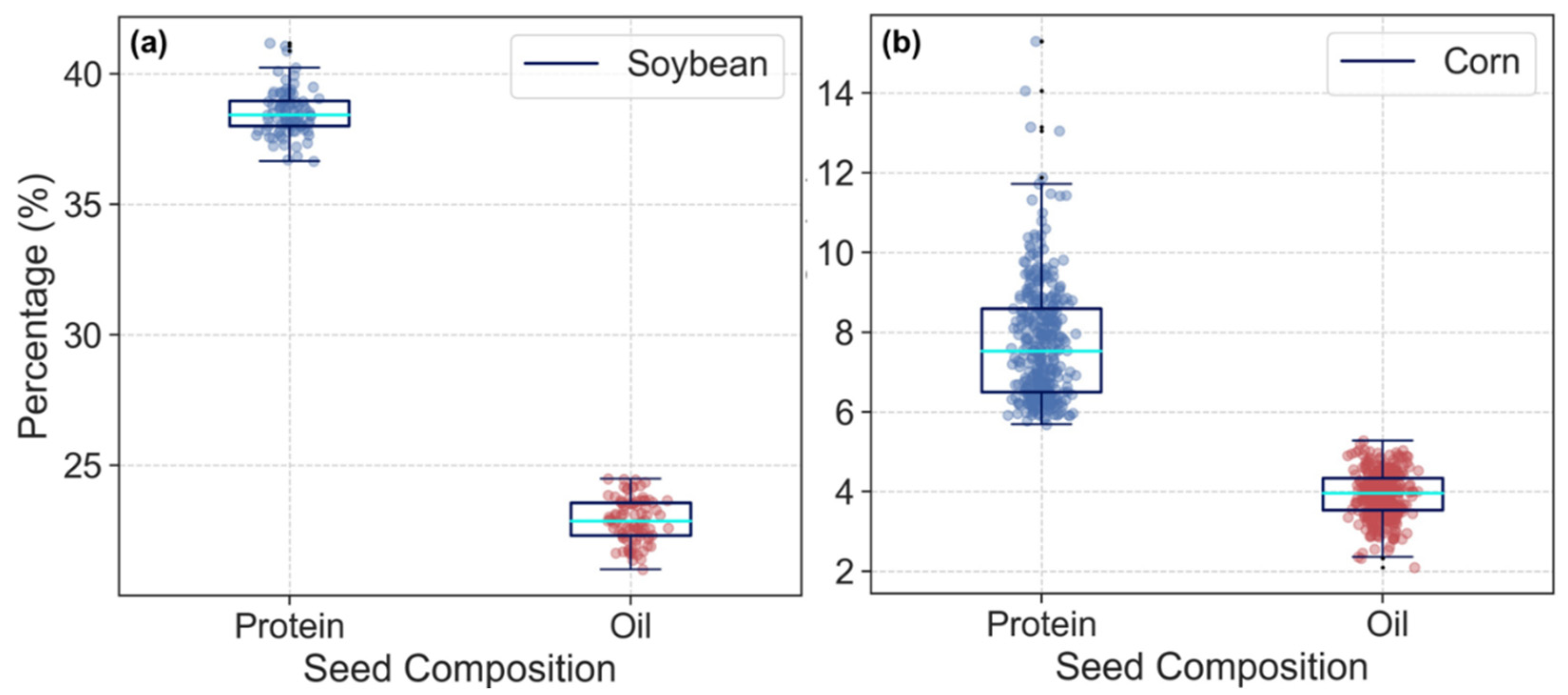

2.1.1. Soybean Field and Experiment Setup

2.1.2. Cornfield and Experiment Setup

2.2. Data Acquisition

2.2.1. Soybean Grain Yield Sampling and Seed Composition Measurement

2.2.2. Corn Grain Yield Sampling and Seed Composition Measurement

2.2.3. UAV Data Collection

2.2.4. UAV Data Preprocessing

Hyperspectral Image Processing

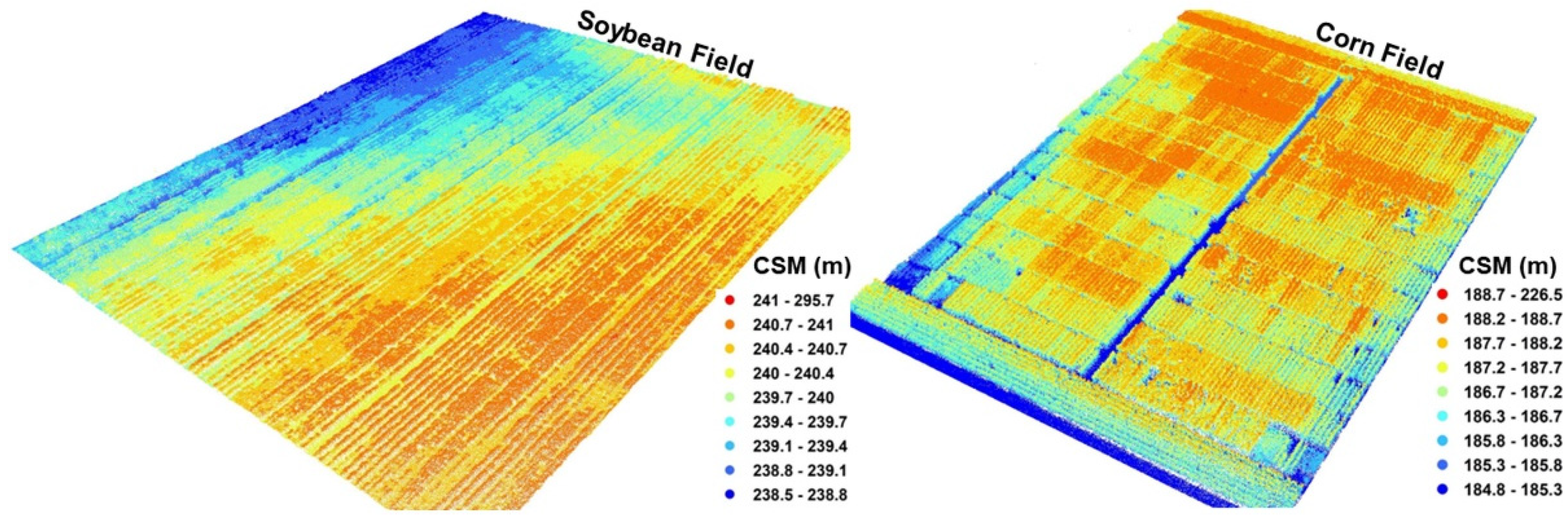

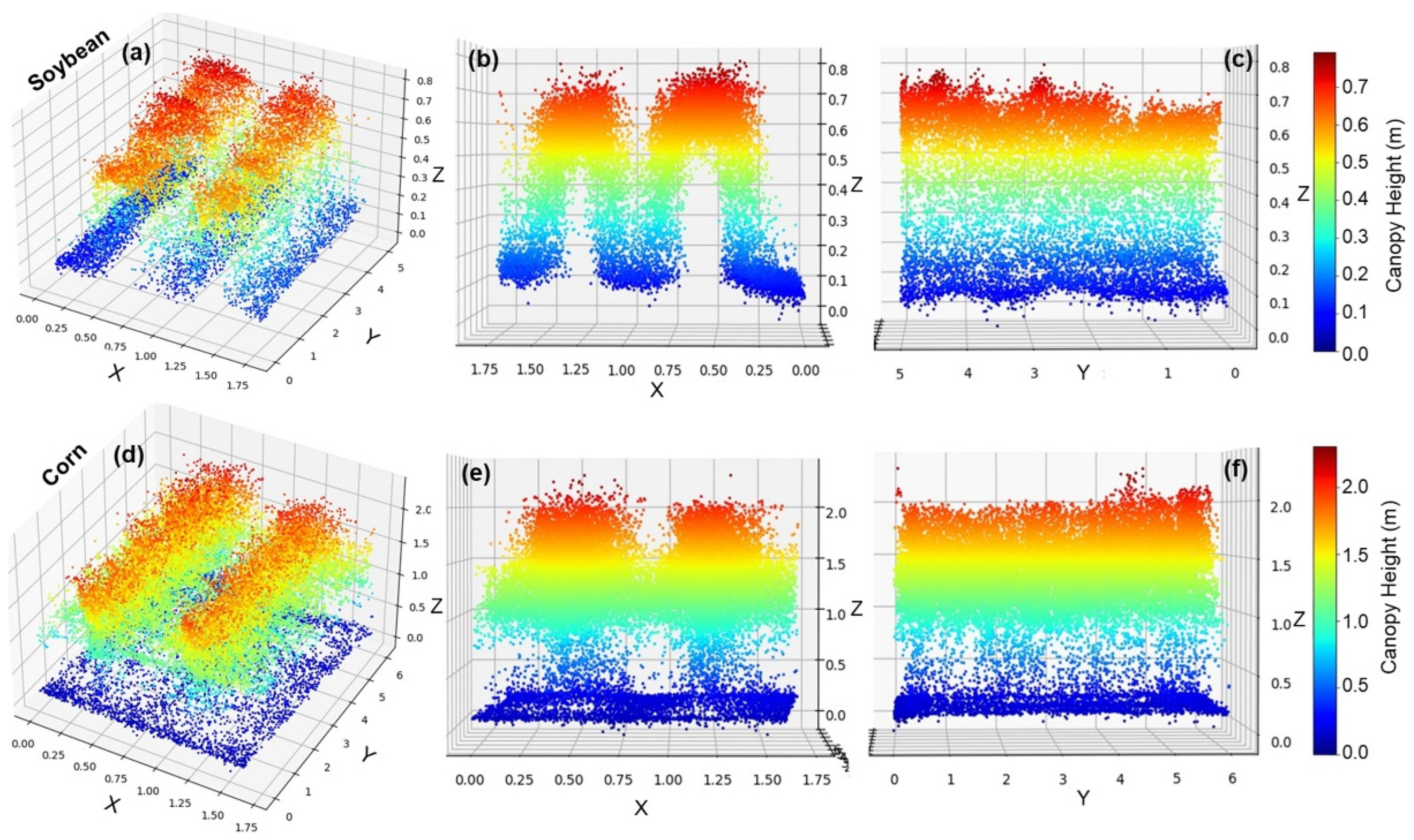

LiDAR Data Processing

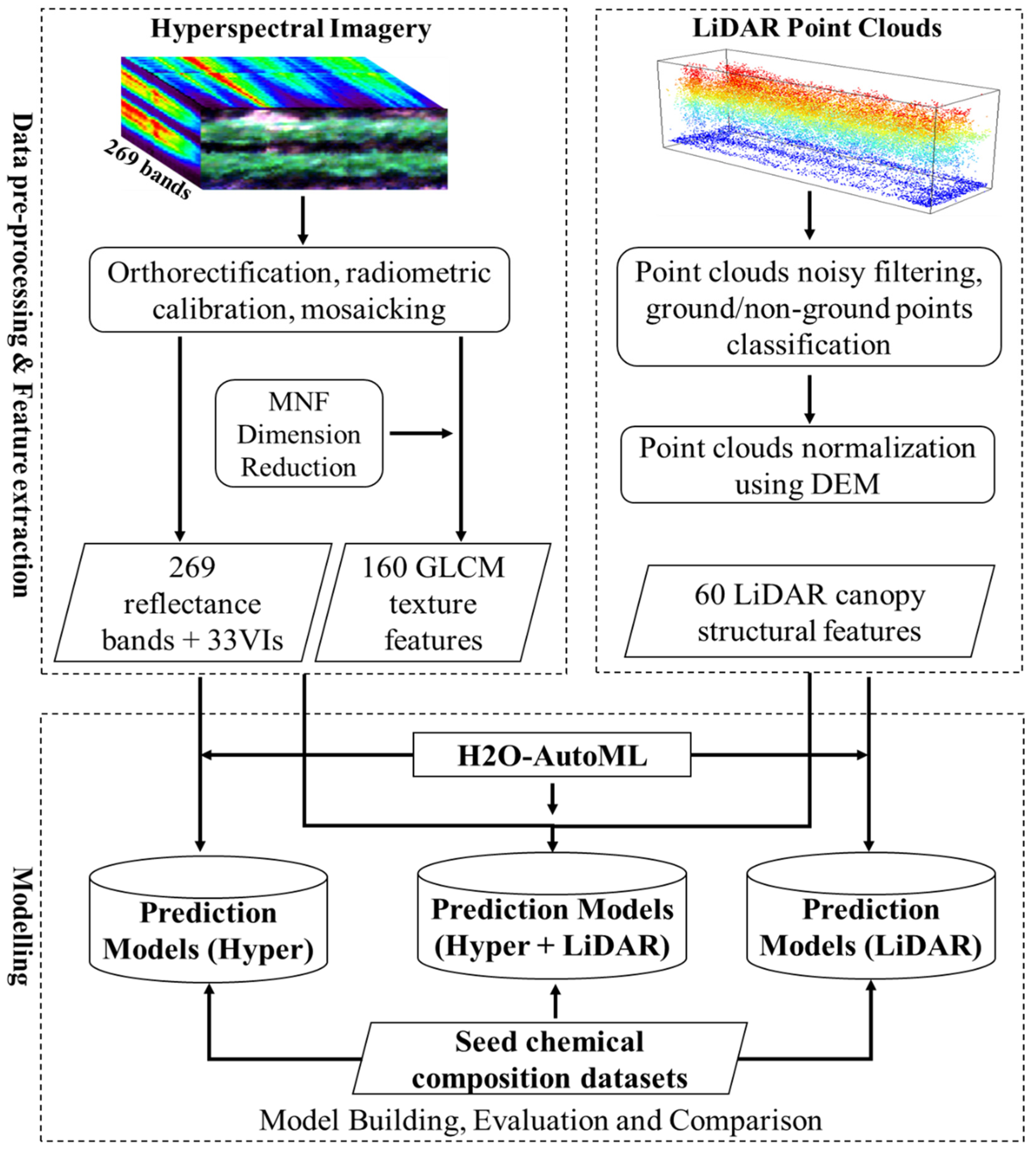

3. Methodology

3.1. Feature Extraction

3.1.1. Hyperspectral-Imagery-Based Feature Extraction

3.1.2. LiDAR Data-Based Canopy Structure Feature Extraction

3.2. Modeling Methods

3.2.1. Automated Machine Learning

3.2.2. Feature Selection

3.2.3. Model Evaluation

4. Results and Discussion

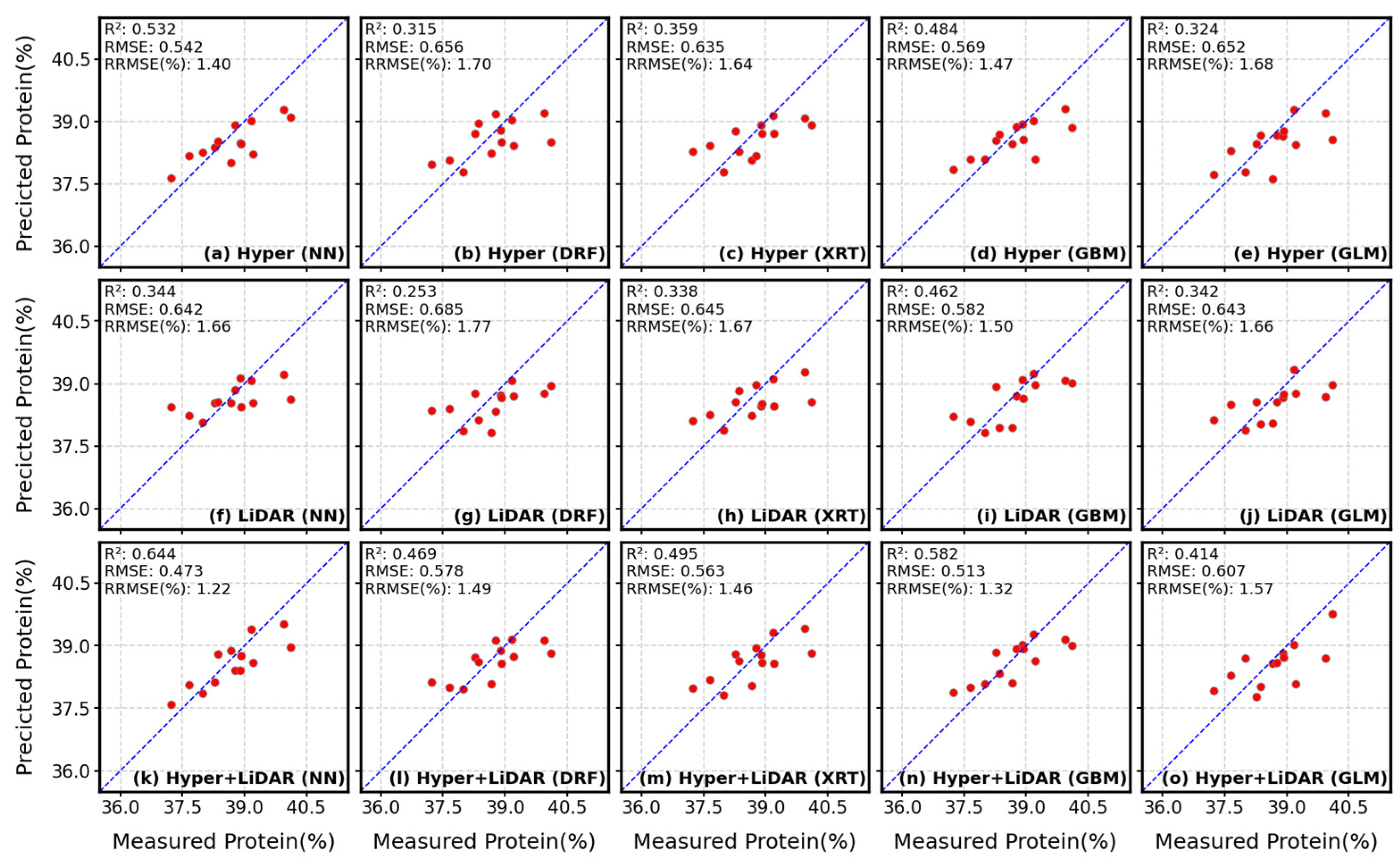

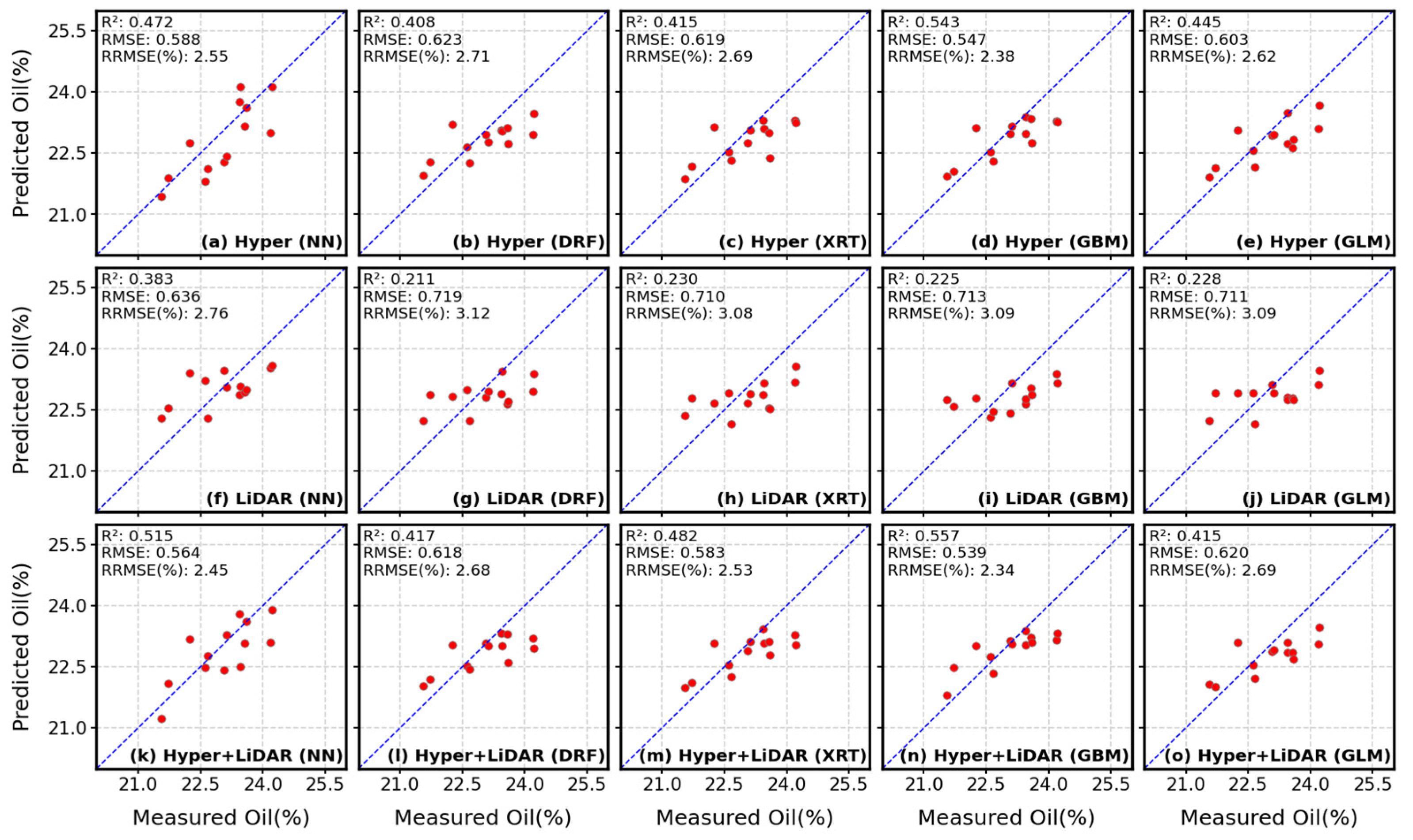

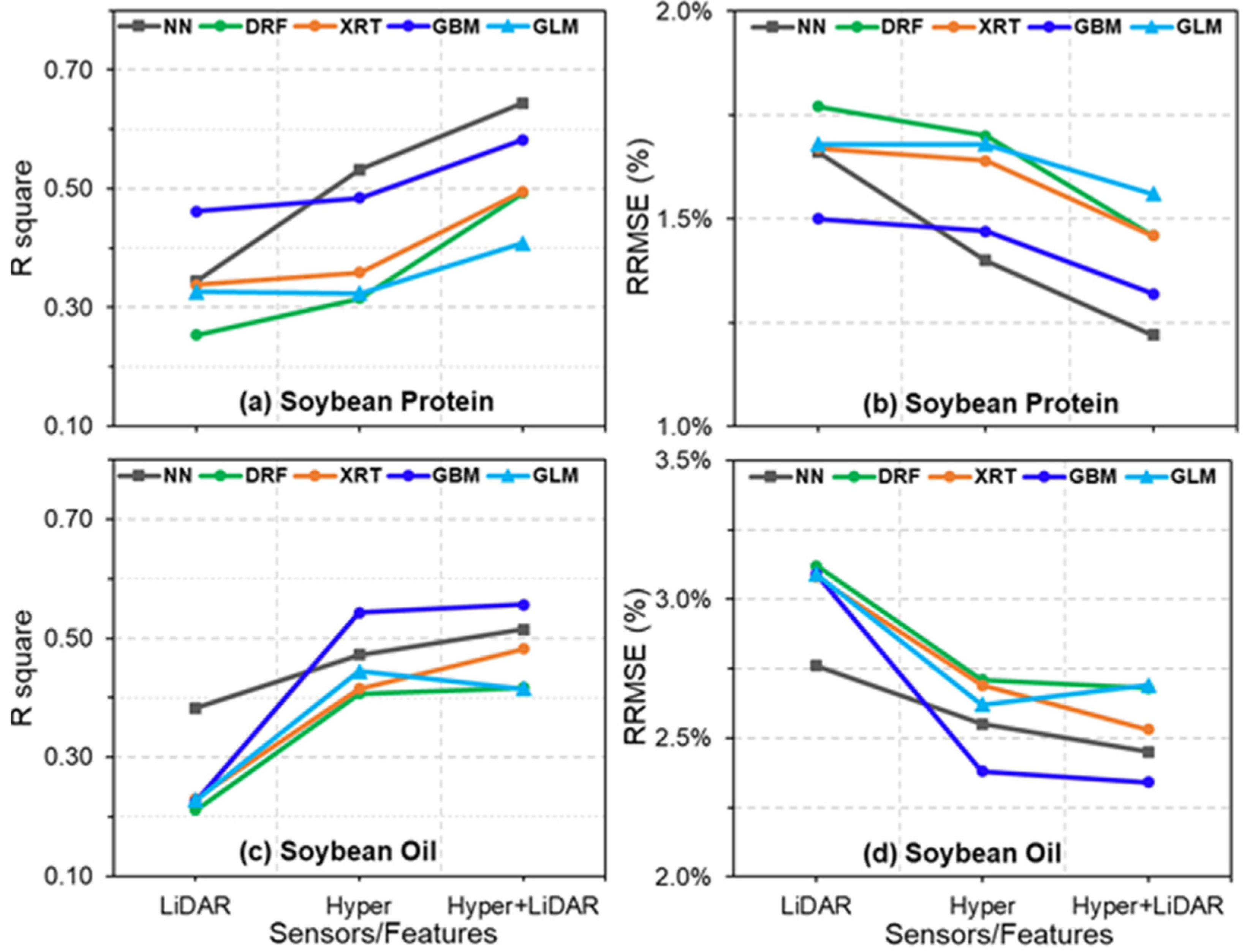

4.1. Estimation of Soybean Seed Protein and Oil Concentrations

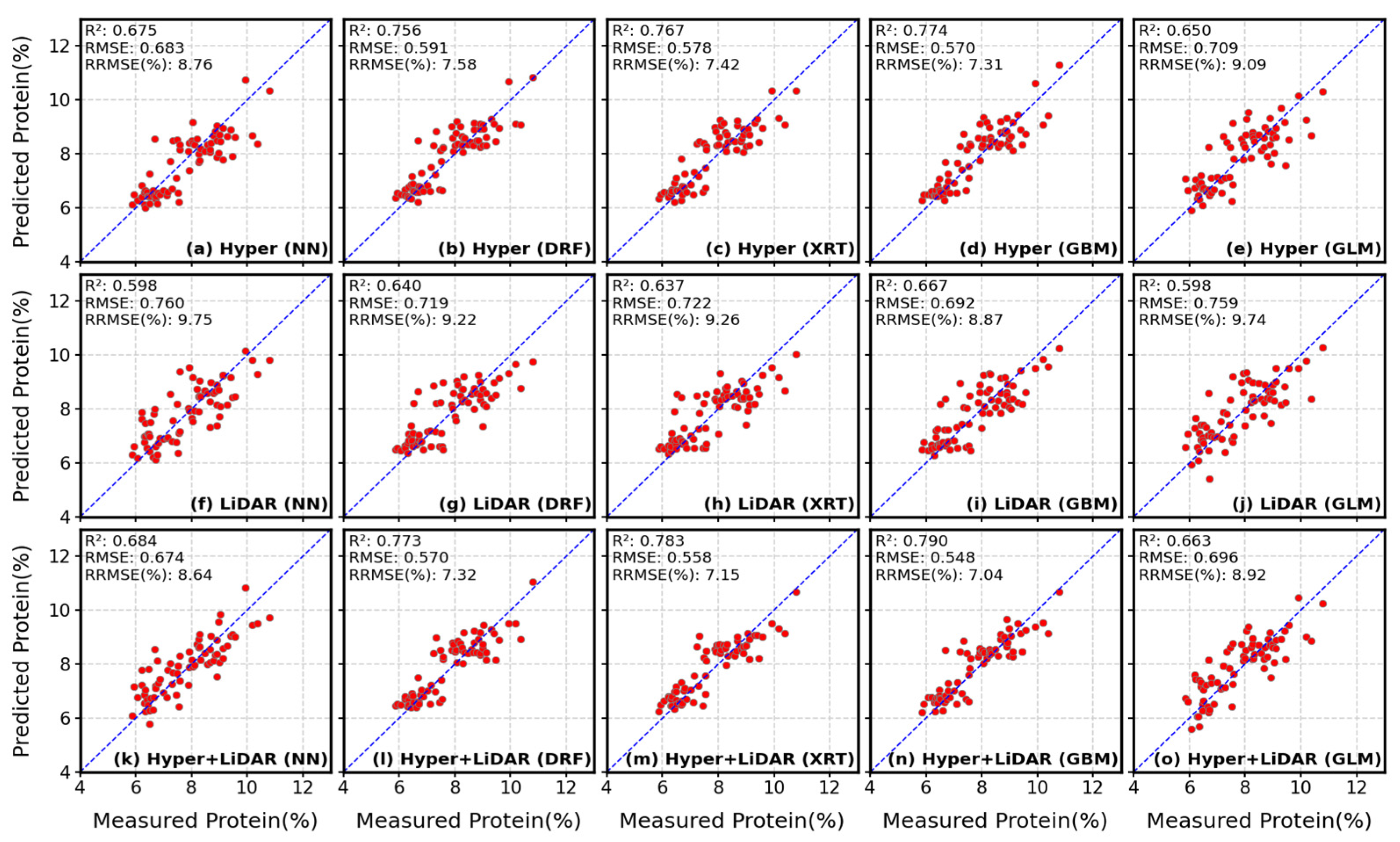

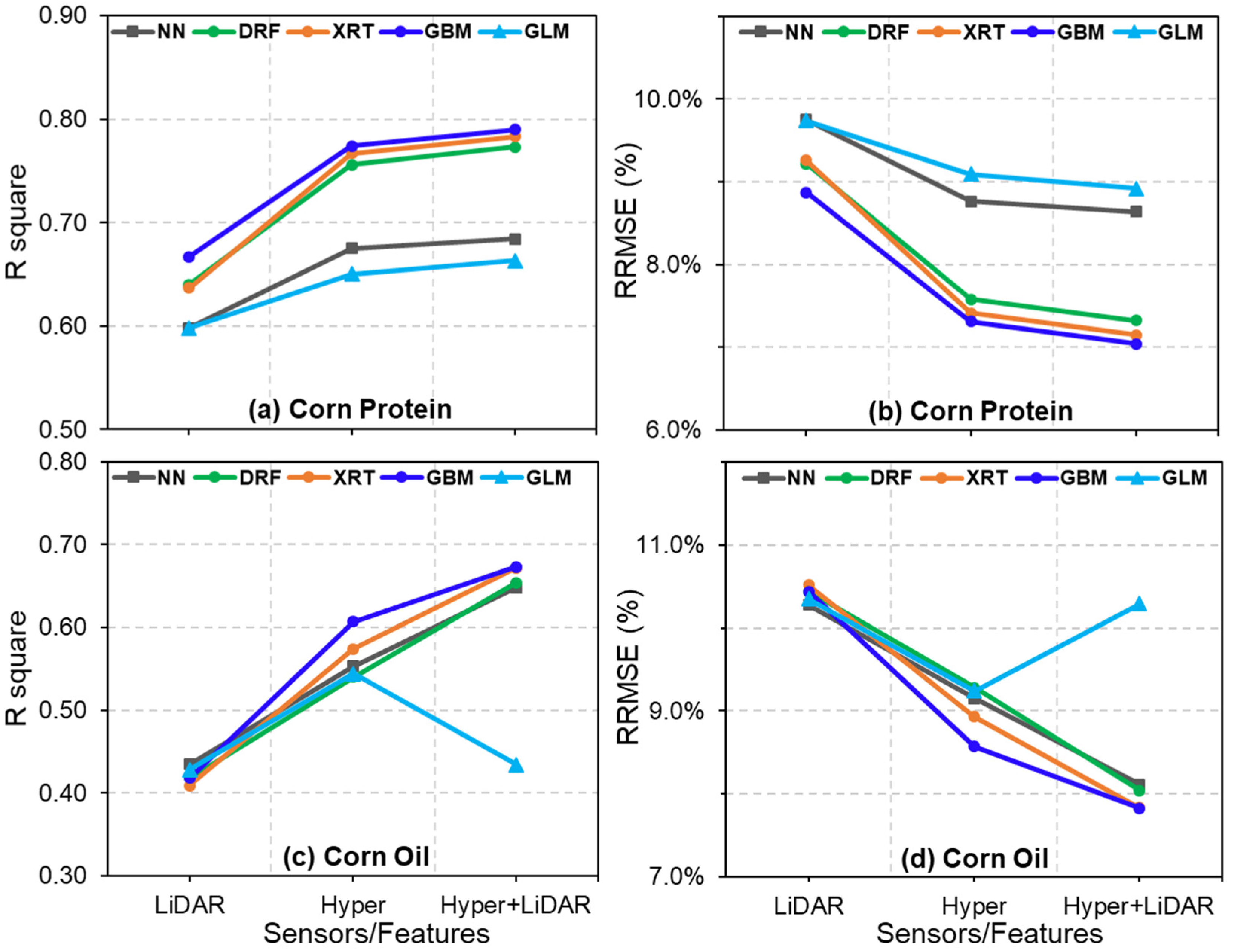

4.2. Estimation of Corn Seed Protein and Oil Concentrations

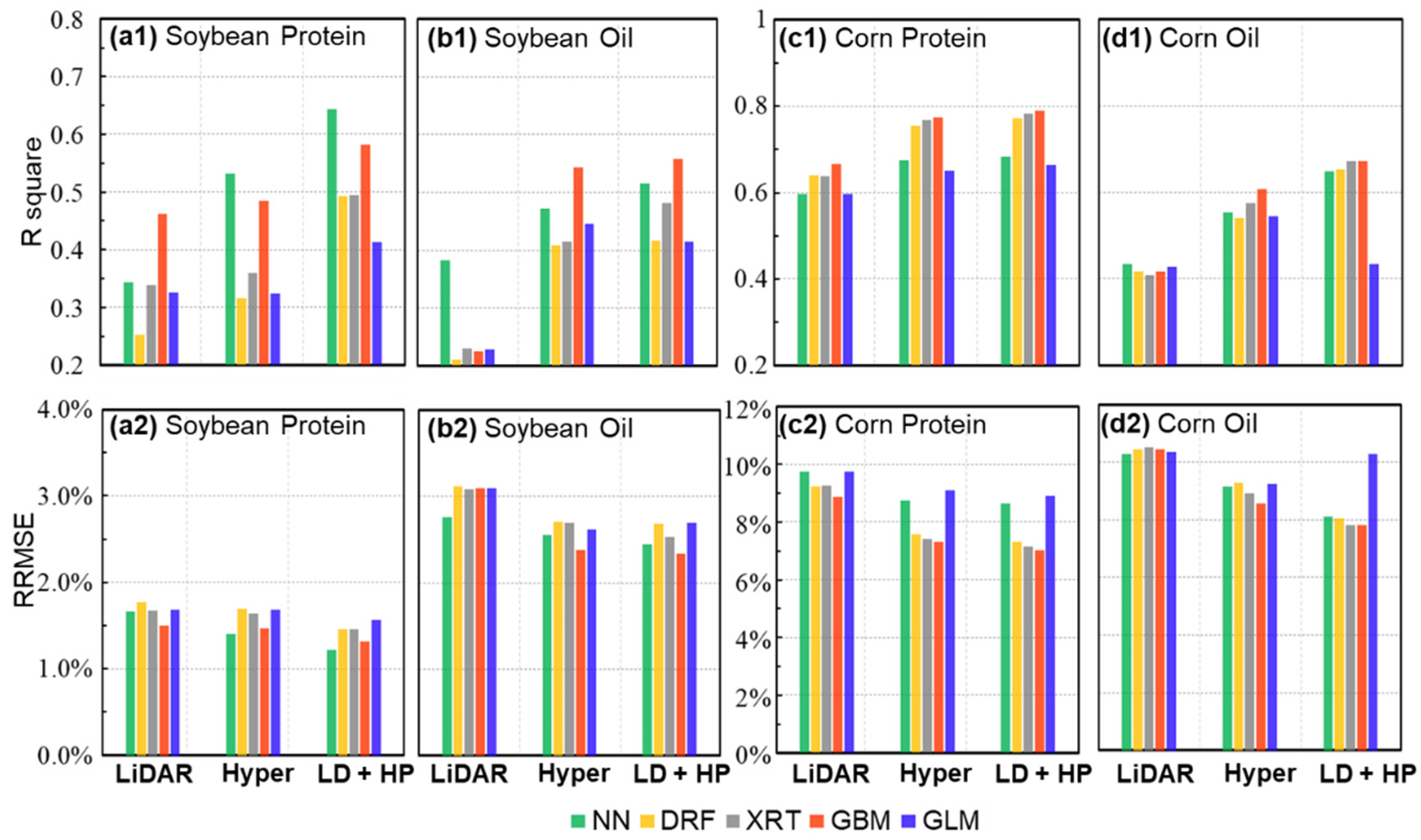

4.3. Comparisons of Hyperspectral- and LiDAR-Based Seed Composition Estimations

4.4. Contribution of Multisensory Data Fusion for Seed Protein and Oil Concentration Estimations

4.5. Performance of Different Models for the Prediction of Protein and Oil Concentrations

5. Conclusions

- UAV platforms integrated with multiple sensors can provide multi-domain information on the crop canopy, including spectral, textural, and structural (2D and 3D) characteristics. R² values of 0.79 and 0.64 for corn and soybean protein estimation, and of 0.67 and 0.56 for corn and soybean oil estimation, indicate that the multimodal UAV platform is a promising tool for crop-seed-composition estimation.

- Reasonable predictions of soybean and corn seed protein and oil concentrations can be achieved using canopy spectral and texture features derived from hyperspectral imagery. Canopy structure features derived from LiDAR point clouds yielded slightly lower prediction accuracies than the hyperspectral features, but they also proved to be significant indicators for crop-seed-composition estimation.

- Combining hyperspectral and LiDAR data improved the estimation of soybean and corn seed protein and oil concentrations relative to models based on either hyperspectral or LiDAR data alone. The inclusion of LiDAR-based canopy structure information likely alleviates the saturation issues associated with hyperspectral-based features, which may explain the slightly better performance of the Hyper + LiDAR models compared with the hyperspectral-only models.

- The automated machine-learning approach employed in this work, H2O-AutoML, provided an efficient framework that facilitated model building and evaluation. Among the H2O-AutoML algorithms tested, the gradient boosting machine (GBM) outperformed the other methods in most cases, followed by the neural network (NN), while the generalized linear model (GLM) was the least suitable algorithm.
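
The accuracies quoted in the conclusions are coefficients of determination. As a minimal sketch, assuming the conventional definition R² = 1 − SS_res/SS_tot and a companion RMSE, the two metrics can be computed as follows. The function names and the sample values are hypothetical and are not taken from the study.

```python
import math


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1.0 - ss_res / ss_tot


def rmse(observed, predicted):
    """Root mean squared error, in the units of the target variable."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(squared / len(observed))


# Hypothetical seed protein concentrations (%), for illustration only.
observed = [7.0, 8.0, 9.0, 10.0]
predicted = [7.5, 7.5, 9.5, 9.5]

print(f"R2   = {r_squared(observed, predicted):.2f}")
print(f"RMSE = {rmse(observed, predicted):.2f} %")
```

Because R² is normalized by the variance of the observed values, it should be read together with an error measure such as RMSE when targets with very different variability are compared.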

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gerland, P.; Raftery, A.E.; Ševčíková, H.; Li, N.; Gu, D.; Spoorenberg, T.; Alkema, L.; Fosdick, B.K.; Chunn, J.; Lalic, N. World population stabilization unlikely this century. Science 2014, 346, 234–237. [Google Scholar] [CrossRef]

- Nonhebel, S. Global food supply and the impacts of increased use of biofuels. Energy 2012, 37, 115–121. [Google Scholar] [CrossRef]

- Alexandratos, N.; Bruinsma, J. World Agriculture towards 2030/2050: The 2012 Revision; FAO: Rome, Italy, 2012. [Google Scholar]

- Hunter, M.C.; Smith, R.G.; Schipanski, M.E.; Atwood, L.W.; Mortensen, D.A. Agriculture in 2050: Recalibrating targets for sustainable intensification. Bioscience 2017, 67, 386–391. [Google Scholar] [CrossRef]

- Koc, A.B.; Abdullah, M.; Fereidouni, M. Soybeans processing for biodiesel production. Soybean-Appl. Technol. 2011, 19, 32. [Google Scholar]

- Shea, Z.; Singer, W.M.; Zhang, B. Soybean Production, Versatility, and Improvement. In Legume Crops-Prospects, Production and Uses; IntechOpen: London, UK, 2020. [Google Scholar]

- Venton, D. Core Concept: Can bioenergy with carbon capture and storage make an impact? Proc. Natl. Acad. Sci. USA 2016, 113, 13260–13262. [Google Scholar] [CrossRef]

- Pagano, M.C.; Miransari, M. The importance of soybean production worldwide. In Abiotic and Biotic Stresses in Soybean Production; Elsevier: Amsterdam, The Netherlands, 2016; pp. 1–26. [Google Scholar]

- Medic, J.; Atkinson, C.; Hurburgh, C.R. Current knowledge in soybean composition. J. Am. Oil Chem. Soc. 2014, 91, 363–384. [Google Scholar] [CrossRef]

- Rouphael, Y.; Spíchal, L.; Panzarová, K.; Casa, R.; Colla, G. High-throughput plant phenotyping for developing novel biostimulants: From lab to field or from field to lab? Front. Plant Sci. 2018, 9, 1197. [Google Scholar] [CrossRef]

- Huang, M.; Wang, Q.; Zhu, Q.; Qin, J.; Huang, G. Review of seed quality and safety tests using optical sensing technologies. Seed Sci. Technol. 2015, 43, 337–366. [Google Scholar] [CrossRef]

- Ferreira, D.; Galão, O.; Pallone, J.; Poppi, R. Comparison and application of near-infrared (NIR) and mid-infrared (MIR) spectroscopy for determination of quality parameters in soybean samples. Food Control 2014, 35, 227–232. [Google Scholar] [CrossRef]

- Seo, Y.-W.; Ahn, C.K.; Lee, H.; Park, E.; Mo, C.; Cho, B.-K. Non-destructive sorting techniques for viable pepper (Capsicum annuum L.) seeds using Fourier transform near-infrared and Raman spectroscopy. J. Biosyst. Eng. 2016, 41, 51–59. [Google Scholar] [CrossRef]

- Yadav, P.; Murthy, I. Calibration of NMR spectroscopy for accurate estimation of oil content in sunflower, safflower and castor seeds. Curr. Sci. 2016, 110, 73–76. [Google Scholar] [CrossRef]

- Zhang, H.; Song, T.; Wang, K.; Wang, G.; Hu, H.; Zeng, F. Prediction of crude protein content in rice grain with canopy spectral reflectance. Plant Soil Environ. 2012, 58, 514–520. [Google Scholar] [CrossRef]

- Li, Z.; Taylor, J.; Yang, H.; Casa, R.; Jin, X.; Li, Z.; Song, X.; Yang, G. A hierarchical interannual wheat yield and grain protein prediction model using spectral vegetative indices and meteorological data. Field Crops Res. 2020, 248, 107711. [Google Scholar] [CrossRef]

- Li-Hong, X.; Wei-Xing, C.; Lin-Zhang, Y. Predicting grain yield and protein content in winter wheat at different N supply levels using canopy reflectance spectra. Pedosphere 2007, 17, 646–653. [Google Scholar]

- Pettersson, C.; Eckersten, H. Prediction of grain protein in spring malting barley grown in northern Europe. Eur. J. Agron. 2007, 27, 205–214. [Google Scholar] [CrossRef]

- Pettersson, C.-G.; Söderström, M.; Eckersten, H. Canopy reflectance, thermal stress, and apparent soil electrical conductivity as predictors of within-field variability in grain yield and grain protein of malting barley. Precis. Agric. 2006, 7, 343–359. [Google Scholar] [CrossRef]

- Aykas, D.P.; Ball, C.; Sia, A.; Zhu, K.; Shotts, M.-L.; Schmenk, A.; Rodriguez-Saona, L. In-Situ Screening of Soybean Quality with a Novel Handheld Near-Infrared Sensor. Sensors 2020, 20, 6283. [Google Scholar] [CrossRef]

- Chiozza, M.V.; Parmley, K.A.; Higgins, R.H.; Singh, A.K.; Miguez, F.E. Comparative prediction accuracy of hyperspectral bands for different soybean crop variables: From leaf area to seed composition. Field Crops Res. 2021, 271, 108260. [Google Scholar] [CrossRef]

- Rodrigues, F.A.; Blasch, G.; Defourny, P.; Ortiz-Monasterio, J.I.; Schulthess, U.; Zarco-Tejada, P.J.; Taylor, J.A.; Gérard, B. Multi-temporal and spectral analysis of high-resolution hyperspectral airborne imagery for precision agriculture: Assessment of wheat grain yield and grain protein content. Remote Sens. 2018, 10, 930. [Google Scholar] [CrossRef]

- Martin, N.F.; Bollero, A.; Bullock, D.G. Relationship between secondary variables and soybean oil and protein concentration. Trans. ASABE 2007, 50, 1271–1278. [Google Scholar] [CrossRef]

- Zhao, H.; Song, X.; Yang, G.; Li, Z.; Zhang, D.; Feng, H. Monitoring of nitrogen and grain protein content in winter wheat based on Sentinel-2A data. Remote Sens. 2019, 11, 1724. [Google Scholar] [CrossRef]

- Tan, C.; Zhou, X.; Zhang, P.; Wang, Z.; Wang, D.; Guo, W.; Yun, F. Predicting grain protein content of field-grown winter wheat with satellite images and partial least square algorithm. PLoS ONE 2020, 15, e0228500. [Google Scholar] [CrossRef]

- Li, C.-J.; Wang, J.-H.; Qian, W.; Wang, D.-C.; Song, X.-Y.; Yan, W.; Huang, W.-J. Estimating wheat grain protein content using multi-temporal remote sensing data based on partial least squares regression. J. Integr. Agric. 2012, 11, 1445–1452. [Google Scholar] [CrossRef]

- Sagan, V.; Maimaitijiang, M.; Sidike, P.; Eblimit, K.; Peterson, K.T.; Hartling, S.; Esposito, F.; Khanal, K.; Newcomb, M.; Pauli, D. UAV-based high resolution thermal imaging for vegetation monitoring, and plant phenotyping using ICI 8640 P, FLIR Vue Pro R 640, and thermomap cameras. Remote Sens. 2019, 11, 330. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58. [Google Scholar] [CrossRef]

- Sarkar, T.K.; Ryu, C.-S.; Kang, Y.-S.; Kim, S.-H.; Jeon, S.-R.; Jang, S.-H.; Park, J.-W.; Kim, S.-G.; Kim, H.-J. Integrating UAV remote sensing with GIS for predicting rice grain protein. J. Biosyst. Eng. 2018, 43, 148–159. [Google Scholar]

- Hama, A.; Tanaka, K.; Mochizuki, A.; Tsuruoka, Y.; Kondoh, A. Estimating the protein concentration in rice grain using UAV imagery together with agroclimatic data. Agronomy 2020, 10, 431. [Google Scholar] [CrossRef]

- Zhou, X.; Kono, Y.; Win, A.; Matsui, T.; Tanaka, T.S. Predicting within-field variability in grain yield and protein content of winter wheat using UAV-based multispectral imagery and machine learning approaches. Plant Prod. Sci. 2021, 24, 137–151. [Google Scholar] [CrossRef]

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87. [Google Scholar] [CrossRef]

- Tilly, N.; Aasen, H.; Bareth, G. Fusion of plant height and vegetation indices for the estimation of barley biomass. Remote Sens. 2015, 7, 11449–11480. [Google Scholar] [CrossRef]

- Colombo, R.; Bellingeri, D.; Fasolini, D.; Marino, C.M. Retrieval of leaf area index in different vegetation types using high resolution satellite data. Remote Sens. Environ. 2003, 86, 120–131. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599. [Google Scholar] [CrossRef]

- Duan, B.; Liu, Y.; Gong, Y.; Peng, Y.; Wu, X.; Zhu, R.; Fang, S. Remote estimation of rice LAI based on Fourier spectrum texture from UAV image. Plant Methods 2019, 15, 124. [Google Scholar] [CrossRef] [PubMed]

- Sibanda, M.; Mutanga, O.; Rouget, M.; Kumar, L. Estimating biomass of native grass grown under complex management treatments using WorldView-3 spectral derivatives. Remote Sens. 2017, 9, 55. [Google Scholar] [CrossRef]

- Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014. [Google Scholar] [CrossRef]

- Pacifici, F.; Chini, M.; Emery, W.J. A neural network approach using multi-scale textural metrics from very high-resolution panchromatic imagery for urban land-use classification. Remote Sens. Environ. 2009, 113, 1276–1292. [Google Scholar] [CrossRef]

- Feng, W.; Wu, Y.; He, L.; Ren, X.; Wang, Y.; Hou, G.; Wang, Y.; Liu, W.; Guo, T. An optimized non-linear vegetation index for estimating leaf area index in winter wheat. Precis. Agric. 2019, 20, 1157–1176. [Google Scholar] [CrossRef]

- Walter, J.D.; Edwards, J.; McDonald, G.; Kuchel, H. Estimating biomass and canopy height with LiDAR for field crop breeding. Front. Plant Sci. 2019, 10, 1145. [Google Scholar] [CrossRef]

- Luo, S.; Wang, C.; Xi, X.; Nie, S.; Fan, X.; Chen, H.; Yang, X.; Peng, D.; Lin, Y.; Zhou, G. Combining hyperspectral imagery and LiDAR pseudo-waveform for predicting crop LAI, canopy height and above-ground biomass. Ecol. Indic. 2019, 102, 801–812. [Google Scholar] [CrossRef]

- Dilmurat, K.; Sagan, V.; Moose, S. AI-Driven Maize Yield Forecasting Using Unmanned Aerial Vehicle-Based Hyperspectral and LiDAR Data Fusion. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 3, 193–199. [Google Scholar] [CrossRef]

- Burgess, A.J.; Retkute, R.; Herman, T.; Murchie, E.H. Exploring relationships between canopy architecture, light distribution, and photosynthesis in contrasting rice genotypes using 3D canopy reconstruction. Front. Plant Sci. 2017, 8, 734. [Google Scholar] [CrossRef]

- Wang, C.; Hai, J.; Yang, J.; Tian, J.; Chen, W.; Chen, T.; Luo, H.; Wang, H. Influence of leaf and silique photosynthesis on seeds yield and seeds oil quality of oilseed rape (Brassica napus L.). Eur. J. Agron. 2016, 74, 112–118. [Google Scholar] [CrossRef]

- Wang, C.; Nie, S.; Xi, X.; Luo, S.; Sun, X. Estimating the biomass of maize with hyperspectral and LiDAR data. Remote Sens. 2017, 9, 11. [Google Scholar] [CrossRef]

- Comba, L.; Biglia, A.; Aimonino, D.R.; Barge, P.; Tortia, C.; Gay, P. 2D and 3D data fusion for crop monitoring in precision agriculture. In Proceedings of the 2019 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Portici, Italy, 24–26 October 2019; pp. 62–67. [Google Scholar]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357. [Google Scholar] [CrossRef]

- Bhadra, S.; Sagan, V.; Maimaitijiang, M.; Maimaitiyiming, M.; Newcomb, M.; Shakoor, N.; Mockler, T.C. Quantifying leaf chlorophyll concentration of sorghum from hyperspectral data using derivative calculus and machine learning. Remote Sens. 2020, 12, 2082. [Google Scholar] [CrossRef]

- Sagan, V.; Maimaitijiang, M.; Bhadra, S.; Maimaitiyiming, M.; Brown, D.R.; Sidike, P.; Fritschi, F.B. Field-scale crop yield prediction using multi-temporal WorldView-3 and PlanetScope satellite data and deep learning. ISPRS J. Photogramm. Remote Sens. 2021, 174, 265–281. [Google Scholar] [CrossRef]

- Sagan, V.; Maimaitijiang, M.; Paheding, S.; Bhadra, S.; Gosselin, N.; Burnette, M.; Demieville, J.; Hartling, S.; LeBauer, D.; Newcomb, M. Data-Driven Artificial Intelligence for Calibration of Hyperspectral Big Data. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5510320. [Google Scholar] [CrossRef]

- Babaeian, E.; Paheding, S.; Siddique, N.; Devabhaktuni, V.K.; Tuller, M. Estimation of root zone soil moisture from ground and remotely sensed soil information with multisensor data fusion and automated machine learning. Remote Sens. Environ. 2021, 260, 112434. [Google Scholar] [CrossRef]

- LeDell, E.; Poirier, S. H2O AutoML: Scalable automatic machine learning. In Proceedings of the AutoML Workshop at ICML, Online, 17–18 July 2020. [Google Scholar]

- Jin, H.; Song, Q.; Hu, X. Auto-keras: An efficient neural architecture search system. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1946–1956. [Google Scholar]

- Li, K.-Y.; Burnside, N.G.; de Lima, R.S.; Peciña, M.V.; Sepp, K.; Cabral Pinheiro, V.H.; de Lima, B.R.C.A.; Yang, M.-D.; Vain, A.; Sepp, K. An Automated Machine Learning Framework in Unmanned Aircraft Systems: New Insights into Agricultural Management Practices Recognition Approaches. Remote Sens. 2021, 13, 3190. [Google Scholar] [CrossRef]

- Sagan, V.; Maimaitijiang, M.; Sidike, P.; Maimaitiyiming, M.; Erkbol, H.; Hartling, S.; Peterson, K.; Peterson, J.; Burken, J.; Fritschi, F. UAV/satellite multiscale data fusion for crop monitoring and early stress detection. In Proceedings of the ISPRS International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Enschede, The Netherlands, 10–14 June 2019. [Google Scholar]

- Hartling, S.; Sagan, V.; Maimaitijiang, M. Urban tree species classification using UAV-based multi-sensor data fusion and machine learning. GISci. Remote Sens. 2021, 58, 1250–1275. [Google Scholar] [CrossRef]

- Maimaitiyiming, M.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Miller, A.J.; Kwasniewski, M. Leveraging very-high spatial resolution hyperspectral and thermal UAV imageries for characterizing diurnal indicators of grapevine physiology. Remote Sens. 2020, 12, 3216. [Google Scholar] [CrossRef]

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Erkbol, H.; Adrian, J.; Newcomb, M.; LeBauer, D.; Pauli, D.; Shakoor, N.; Mockler, T. UAV-Based Sorghum Growth Monitoring: A Comparative Analysis of LiDAR and Photogrammetry. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 5, 489–496. [Google Scholar] [CrossRef]

- Rouse Jr, J.W.; Haas, R.; Schell, J.; Deering, D. Monitoring Vegetation Systems in the Great Plains with ERTS; NASA: Washington, DC, USA, 1974.

- Gitelson, A.; Merzlyak, M.N. Spectral reflectance changes associated with autumn senescence of Aesculus hippocastanum L. and Acer platanoides L. leaves. Spectral features and relation to chlorophyll estimation. J. Plant Physiol. 1994, 143, 286–292. [Google Scholar] [CrossRef]

- Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354. [Google Scholar] [CrossRef]

- Chen, J.M.; Cihlar, J. Retrieving leaf area index of boreal conifer forests using Landsat TM images. Remote Sens. Environ. 1996, 55, 153–162. [Google Scholar] [CrossRef]

- Perry Jr, C.R.; Lautenschlager, L.F. Functional equivalence of spectral vegetation indices. Remote Sens. Environ. 1984, 14, 169–182. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Vina, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413. [Google Scholar] [CrossRef]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845. [Google Scholar] [CrossRef]

- Qi, J.; Chehbouni, A.; Huete, A.; Kerr, Y.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Wu, C.; Niu, Z.; Tang, Q.; Huang, W. Estimating chlorophyll content from hyperspectral vegetation indices: Modeling and validation. Agric. For. Meteorol. 2008, 148, 1230–1241. [Google Scholar] [CrossRef]

- Daughtry, C.; Walthall, C.; Kim, M.; De Colstoun, E.B.; McMurtrey III, J. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426. [Google Scholar] [CrossRef]

- Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173. [Google Scholar] [CrossRef] [PubMed]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef]

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352. [Google Scholar] [CrossRef]

- Vincini, M.; Frazzi, E.; D’Alessio, P. Angular dependence of maize and sugar beet VIs from directional CHRIS/Proba data. In Proceedings of the 4th ESA CHRIS PROBA Workshop, Frascati, Italy, 19 September 2006; pp. 19–21. [Google Scholar]

- Gamon, J.; Penuelas, J.; Field, C. A narrow-waveband spectral index that tracks diurnal changes in photosynthetic efficiency. Remote Sens. Environ. 1992, 41, 35–44. [Google Scholar] [CrossRef]

- Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384. [Google Scholar] [CrossRef]

- Vogelmann, J.; Rock, B.; Moss, D. Red edge spectral measurements from sugar maple leaves. Int. J. Remote Sens. 1993, 14, 1563–1575. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Miller, J.R.; Noland, T.L.; Mohammed, G.H.; Sampson, P.H. Scaling-up and model inversion methods with narrowband optical indices for chlorophyll content estimation in closed forest canopies with hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1491–1507. [Google Scholar] [CrossRef]

- Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and FPAR: A computer simulation. Remote Sens. Rev. 1994, 10, 309–347. [Google Scholar] [CrossRef]

- Gong, P.; Pu, R.; Biging, G.S.; Larrieu, M.R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362. [Google Scholar] [CrossRef]

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621. [Google Scholar] [CrossRef]

- Green, A.A.; Berman, M.; Switzer, P.; Craig, M.D. A transformation for ordering multispectral data in terms of image quality with implications for noise removal. IEEE Trans. Geosci. Remote Sens. 1988, 26, 65–74. [Google Scholar] [CrossRef]

- Park, J.-I.; Park, J.; Kim, K.-S. Fast and Accurate Desnowing Algorithm for LiDAR Point Clouds. IEEE Access 2020, 8, 160202–160212. [Google Scholar] [CrossRef]

- Niu, Y.; Zhang, L.; Zhang, H.; Han, W.; Peng, X. Estimating above-ground biomass of maize using features derived from UAV-based RGB imagery. Remote Sens. 2019, 11, 1261. [Google Scholar] [CrossRef]

- Gijsbers, P.; LeDell, E.; Thomas, J.; Poirier, S.; Bischl, B.; Vanschoren, J. An open source AutoML benchmark. arXiv 2019, arXiv:1907.00909. [Google Scholar]

- Friedman, J.H. Stochastic gradient boosting. Comput. Stat. Data Anal. 2002, 38, 367–378. [Google Scholar] [CrossRef]

- Miller, P.J.; McArtor, D.B.; Lubke, G.H. A gradient boosting machine for hierarchically clustered data. Multivar. Behav. Res. 2017, 52, 117. [Google Scholar] [CrossRef] [PubMed]

- Houborg, R.; McCabe, M.F. A hybrid training approach for leaf area index estimation via Cubist and random forests machine-learning. ISPRS J. Photogramm. Remote Sens. 2018, 135, 173–188. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Nelder, J.A.; Wedderburn, R.W. Generalized linear models. J. R. Stat. Soc. Ser. A Gen. 1972, 135, 370–384. [Google Scholar] [CrossRef]

- Zebari, R.; Abdulazeez, A.; Zeebaree, D.; Zebari, D.; Saeed, J. A comprehensive review of dimensionality reduction techniques for feature selection and feature extraction. J. Appl. Sci. Technol. Trends 2020, 1, 56–70. [Google Scholar] [CrossRef]

- Song, F.; Guo, Z.; Mei, D. Feature selection using principal component analysis. In Proceedings of the 2010 International Conference on System Science, Engineering Design and Manufacturing Informatization, Washington, DC, USA, 12–14 November 2010; pp. 27–30. [Google Scholar]

- Altmann, A.; Toloşi, L.; Sander, O.; Lengauer, T. Permutation importance: A corrected feature importance measure. Bioinformatics 2010, 26, 1340–1347. [Google Scholar] [CrossRef]

- Strobl, C.; Malley, J.; Tutz, G. An introduction to recursive partitioning: Rationale, application, and characteristics of classification and regression trees, bagging, and random forests. Psychol. Methods 2009, 14, 323. [Google Scholar] [CrossRef] [PubMed]

- Chu, C.; Hsu, A.-L.; Chou, K.-H.; Bandettini, P.; Lin, C.; Alzheimer's Disease Neuroimaging Initiative. Does feature selection improve classification accuracy? Impact of sample size and feature selection on classification using anatomical magnetic resonance images. Neuroimage 2012, 60, 59–70. [Google Scholar] [CrossRef]

- Wang, L.; Tian, Y.; Yao, X.; Zhu, Y.; Cao, W. Predicting grain yield and protein content in wheat by fusing multi-sensor and multi-temporal remote-sensing images. Field Crops Res. 2014, 164, 178–188. [Google Scholar] [CrossRef]

- Xu, X.; Teng, C.; Zhao, Y.; Du, Y.; Zhao, C.; Yang, G.; Jin, X.; Song, X.; Gu, X.; Casa, R. Prediction of wheat grain protein by coupling multisource remote sensing imagery and ECMWF data. Remote Sens. 2020, 12, 1349. [Google Scholar] [CrossRef]

- Onoyama, H.; Ryu, C.; Suguri, M.; Iida, M. Estimation of rice protein content before harvest using ground-based hyperspectral imaging and region of interest analysis. Precis. Agric. 2018, 19, 721–734. [Google Scholar] [CrossRef]

- Xiu-liang, J.; Xin-gang, X.; Feng, H.-k.; Xiao-yu, S.; Wang, Q.; Ji-hua, W.; Wen-shan, G. Estimation of grain protein content in winter wheat by using three methods with hyperspectral data. Int. J. Agric. Biol. 2014, 16, 498–504. [Google Scholar]

- Wang, Z.; Chen, J.; Zhang, J.; Fan, Y.; Cheng, Y.; Wang, B.; Wu, X.; Tan, X.; Tan, T.; Li, S. Predicting grain yield and protein content using canopy reflectance in maize grown under different water and nitrogen levels. Field Crops Res. 2021, 260, 107988. [Google Scholar] [CrossRef]

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412. [Google Scholar] [CrossRef]

- Xu, J.-X.; Ma, J.; Tang, Y.-N.; Wu, W.-X.; Shao, J.-H.; Wu, W.-B.; Wei, S.-Y.; Liu, Y.-F.; Wang, Y.-C.; Guo, H.-Q. Estimation of Sugarcane Yield Using a Machine Learning Approach Based on UAV-LiDAR Data. Remote Sens. 2020, 12, 2823. [Google Scholar] [CrossRef]

- Eitel, J.U.; Magney, T.S.; Vierling, L.A.; Brown, T.T.; Huggins, D.R. LiDAR based biomass and crop nitrogen estimates for rapid, non-destructive assessment of wheat nitrogen status. Field Crops Res. 2014, 159, 21–32. [Google Scholar] [CrossRef]

- Herrero-Huerta, M.; Rodriguez-Gonzalvez, P.; Rainey, K.M. Yield prediction by machine learning from UAS-based multi-sensor data fusion in soybean. Plant Methods 2020, 16, 78. [Google Scholar] [CrossRef] [PubMed]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation index weighted canopy volume model (CVMVI) for soybean biomass estimation from unmanned aerial system-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41. [Google Scholar] [CrossRef]

- Walter, J.; Edwards, J.; McDonald, G.; Kuchel, H. Photogrammetry for the estimation of wheat biomass and harvest index. Field Crops Res. 2018, 216, 165–174. [Google Scholar] [CrossRef]

- Verma, N.K.; Lamb, D.W.; Reid, N.; Wilson, B. Comparison of Canopy Volume Measurements of Scattered Eucalypt Farm Trees Derived from High Spatial Resolution Imagery and LiDAR. Remote Sens. 2016, 8, 388. [Google Scholar] [CrossRef]

- Jayathunga, S.; Owari, T.; Tsuyuki, S. Evaluating the performance of photogrammetric products using fixed-wing UAV imagery over a mixed conifer–broadleaf forest: Comparison with airborne laser scanning. Remote Sens. 2018, 10, 187. [Google Scholar] [CrossRef]

- Banerjee, B.P.; Spangenberg, G.; Kant, S. Fusion of spectral and structural information from aerial images for improved biomass estimation. Remote Sens. 2020, 12, 3164. [Google Scholar] [CrossRef]

- Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629.

- Lu, J.; Cheng, D.; Geng, C.; Zhang, Z.; Xiang, Y.; Hu, T. Combining plant height, canopy coverage and vegetation index from UAV-based RGB images to estimate leaf nitrogen concentration of summer maize. Biosyst. Eng. 2021, 202, 42–54.

- Chianucci, F.; Disperati, L.; Guzzi, D.; Bianchini, D.; Nardino, V.; Lastri, C.; Rindinella, A.; Corona, P. Estimation of canopy attributes in beech forests using true colour digital images from a small fixed-wing UAV. Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 60–68.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Cota, G.; Sagan, V.; Maimaitijiang, M.; Freeman, K. Forest Conservation with Deep Learning: A Deeper Understanding of Human Geography around the Betampona Nature Reserve, Madagascar. Remote Sens. 2021, 13, 3495.

- Cai, Y.; Guan, K.; Peng, J.; Wang, S.; Seifert, C.; Wardlow, B.; Li, Z. A high-performance and in-season classification system of field-level crop types using time-series Landsat data and a machine learning approach. Remote Sens. Environ. 2018, 210, 35–47.

- Golden, C.E.; Rothrock Jr, M.J.; Mishra, A. Comparison between random forest and gradient boosting machine methods for predicting Listeria spp. prevalence in the environment of pastured poultry farms. Food Res. Int. 2019, 122, 47–55.

- Srivastava, A.K.; Safaei, N.; Khaki, S.; Lopez, G.; Zeng, W.; Ewert, F.; Gaiser, T.; Rahimi, J. Comparison of Machine Learning Methods for Predicting Winter Wheat Yield in Germany. arXiv 2021, arXiv:2105.01282.

- Robinson, C.; Schumacker, R.E. Interaction effects: Centering, variance inflation factor, and interpretation issues. Mult. Linear Regres. Viewp. 2009, 35, 6–11.

- Vilar, L.; Gómez, I.; Martínez-Vega, J.; Echavarría, P.; Riaño, D.; Martín, M.P. Multitemporal modelling of socio-economic wildfire drivers in central Spain between the 1980s and the 2000s: Comparing generalized linear models to machine learning algorithms. PLoS ONE 2016, 11, e0161344.

| Seed Composition | *No. | Mean | Max. | Min. | SD | CV (%) |
|---|---|---|---|---|---|---|
| Soybean protein (%) | 91 | 38.5 | 41.2 | 36.7 | 0.87 | 2.3 |
| Soybean oil (%) | 91 | 22.9 | 24.5 | 21.0 | 0.83 | 3.6 |
| Corn protein (%) | 369 | 7.7 | 15.3 | 5.7 | 1.45 | 18.9 |
| Corn oil (%) | 369 | 3.9 | 5.3 | 2.1 | 0.57 | 14.5 |
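
The CV column in the table above follows from the SD and mean columns, assuming the usual definition CV = SD / mean × 100. The short Python sketch below recomputes it from the tabulated values; the function and variable names are illustrative only.

```python
def coefficient_of_variation(sd, mean):
    """Coefficient of variation in percent: CV = SD / mean * 100."""
    return sd / mean * 100.0


# (mean, SD) pairs copied from the table above.
stats = {
    "Soybean protein": (38.5, 0.87),
    "Soybean oil": (22.9, 0.83),
    "Corn protein": (7.7, 1.45),
    "Corn oil": (3.9, 0.57),
}

cv = {name: coefficient_of_variation(sd, mean) for name, (mean, sd) in stats.items()}
for name, value in cv.items():
    print(f"{name}: CV = {value:.1f}%")
```

Because the tabulated mean and SD are themselves rounded, the recomputed CV can differ from the printed value in the last digit.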

| Sensor | Vendor/Brand | Recorded Info. | Spectral Properties | *GSD/Point Density |
|---|---|---|---|---|
| Hyperspectral | Headwall Hyperspec Nano | 269 VNIR bands | 400–1000 nm with FWHM of 6 nm | 3 cm |
| LiDAR | Velodyne HDL-32 | LAS point clouds | / | 900 pts/m² |

| Spectral Features | Formulation | Ref. |
|---|---|---|
| 269 raw bands | The reflectance value of each band | / |
| Ratio vegetation index | $\mathrm{RVI} = R_{800}/R_{680}$ | [61] |
| Simple Ratio Index 750 | $\mathrm{SR}_{705} = R_{750}/R_{705}$ | [62] |
| Modified Red Edge Simple Ratio Index | $\mathrm{mSR}_{705} = (R_{750} - R_{445})/(R_{705} - R_{445})$ | [63] |
| Normalized Difference Vegetation Index 750 | $\mathrm{ND}_{705} = (R_{750} - R_{705})/(R_{750} + R_{705})$ | [63] |
| Modified Normalized Difference Vegetation Index | $\mathrm{mND}_{705} = (R_{750} - R_{705})/(R_{750} + R_{705} - 2R_{445})$ | [63] |
| Modified simple ratio | $\mathrm{MSR} = (R_{800}/R_{700} - 1)/(R_{800}/R_{700} + 1)^{0.5}$ | [64] |
| Difference vegetation index | $\mathrm{DVI} = R_{800} - R_{680}$ | [65] |
| Red-edge Chlorophyll Index | $\mathrm{CI}_{\text{red-edge}} = R_{790}/R_{720} - 1$ | [66] |
| Green Chlorophyll Index | $\mathrm{CI}_{\text{green}} = R_{840\text{–}870}/R_{550} - 1$ | [66] |
| Normalized difference vegetation index | $\mathrm{NDVI} = (R_{800} - R_{670})/(R_{800} + R_{670})$ | [61] |
| Green normalized difference vegetation index | $\mathrm{GNDVI} = (R_{750} - R_{550})/(R_{750} + R_{550})$ | [67] |
| Normalized difference red-edge | $\mathrm{NDRE} = (R_{790} - R_{720})/(R_{790} + R_{720})$ | [68] |
| MERIS terrestrial Chlorophyll index | $\mathrm{MTCI} = (R_{754} - R_{709})/(R_{709} - R_{681})$ | [69] |
| The enhanced vegetation index | $\mathrm{EVI} = 2.5\,(R_{800} - R_{670})/(R_{800} + 6R_{670} - 7.5R_{475} + 1)$ | [70] |
| Enhanced vegetation Index (2-band) | $\mathrm{EVI2} = 2.5\,(R_{800} - R_{670})/(R_{800} + 2.4R_{670} + 1)$ | [71] |
| Improved soil adjusted vegetation index | $\mathrm{MSAVI} = 0.5\,[2R_{800} + 1 - ((2R_{800} + 1)^{2} - 8(R_{800} - R_{670}))^{0.5}]$ | [72] |
| Optimized soil adjusted vegetation index | $\mathrm{OSAVI} = 1.16\,(R_{800} - R_{670})/(R_{800} + R_{670} + 0.16)$ | [73] |
| Optimized soil adjusted vegetation index2 | $\mathrm{OSAVI2} = 1.16\,(R_{750} - R_{705})/(R_{750} + R_{705} + 0.16)$ | [74] |
| Modified chlorophyll absorption in reflectance index | $\mathrm{MCARI} = [(R_{700} - R_{670}) - 0.2(R_{700} - R_{550})](R_{700}/R_{670})$ | [75] |
| Transformed chlorophyll absorption in reflectance index | $\mathrm{TCARI} = 3[(R_{700} - R_{670}) - 0.2(R_{700} - R_{550})(R_{700}/R_{670})]$ | [76] |
| MCARI/OSAVI | MCARI/OSAVI | [75] |
| TCARI/OSAVI | TCARI/OSAVI | [76] |
| Wide dynamic range vegetation index | $\mathrm{WDRVI} = (aR_{810} - R_{680})/(aR_{810} + R_{680})$, with $a = 0.12$ | [77] |
| Visible atmospherically resistant index | $\mathrm{VARI} = (R_{550} - R_{670})/(R_{550} + R_{670} - R_{475})$ | [78] |
| Triangular Vegetation Index | $\mathrm{TVI} = 0.5[120(R_{750} - R_{550}) - 200(R_{670} - R_{550})]$ | [79] |
| Modified Triangular Vegetation Index 1 | $\mathrm{MTVI1} = 1.2[1.2(R_{800} - R_{550}) - 2.5(R_{670} - R_{550})]$ | [80] |
| Modified Triangular Vegetation Index 2 | $\mathrm{MTVI2} = 1.5[1.2(R_{800} - R_{550}) - 2.5(R_{670} - R_{550})]/[(2R_{800} + 1)^{2} - (6R_{800} - 5R_{670}^{0.5}) - 0.5]^{0.5}$ | [80] |
| Spectral Polygon Vegetation Index | $\mathrm{SPVI} = 0.4[3.7(R_{800} - R_{670}) - 1.2\lvert R_{530} - R_{670}\rvert]$ | [81] |
| Photochemical Reflectance Index | $\mathrm{PRI} = (R_{531} - R_{570})/(R_{531} + R_{570})$ | [82] |
| Renormalized difference vegetation index | $\mathrm{RDVI} = (R_{800} - R_{670})/(R_{800} + R_{670})^{0.5}$ | [83] |
| Vogelmann Red Edge Index 1 | $\mathrm{VOG1} = R_{740}/R_{720}$ | [84] |
| Vogelmann Red Edge Index 2 | $\mathrm{VOG2} = (R_{734} - R_{747})/(R_{715} + R_{726})$ | [85] |
| Vogelmann Red Edge Index 3 | $\mathrm{VOG3} = (R_{734} - R_{747})/(R_{715} + R_{720})$ | [85] |
| Nonlinear Vegetation Index | $\mathrm{NLI} = (R_{810}^{2} - R_{680})/(R_{810}^{2} + R_{680})$ | [86] |
| Modified Nonlinear Vegetation Index | $\mathrm{MNLI} = (1 + 0.5)(R_{810}^{2} - R_{680})/(R_{810}^{2} + R_{680} + 0.5)$ | [87] |
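
As an illustration of how the indices in the table above can be derived from a per-plot reflectance spectrum, the following Python sketch looks up the band nearest to each nominal wavelength and evaluates a few of the listed formulas. It is a minimal sketch under stated assumptions: the evenly spaced band centres, the synthetic spectrum, and the helper names (`nearest_band`, `vegetation_indices`, `toy_reflectance`) are hypothetical and are not taken from the authors' processing chain.

```python
def nearest_band(wavelengths, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return min(range(len(wavelengths)), key=lambda i: abs(wavelengths[i] - target_nm))


def vegetation_indices(spectrum, wavelengths):
    """Evaluate a few indices from the table for one reflectance spectrum."""
    def r(nm):
        return spectrum[nearest_band(wavelengths, nm)]

    nir, red, green = r(800), r(670), r(550)
    return {
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (r(750) - green) / (r(750) + green),
        "NDRE": (r(790) - r(720)) / (r(790) + r(720)),
        "OSAVI": 1.16 * (nir - red) / (nir + red + 0.16),
        "RDVI": (nir - red) / (nir + red) ** 0.5,
    }


# Assumption: 269 band centres spread evenly over 400-1000 nm.
wavelengths = [400 + i * 600 / 268 for i in range(269)]


def toy_reflectance(nm):
    """Synthetic vegetation-like spectrum, for illustration only."""
    if nm < 500:
        return 0.04
    if nm < 600:
        return 0.10
    if nm < 700:
        return 0.05
    if nm < 750:
        return 0.05 + 0.40 * (nm - 700) / 50  # red-edge ramp
    return 0.45


spectrum = [toy_reflectance(nm) for nm in wavelengths]
indices = vegetation_indices(spectrum, wavelengths)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

With real data, `spectrum` would hold the mean reflectance of the pixels inside one plot, and `wavelengths` would come from the sensor's band-centre metadata rather than from an even spacing.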

| No. | Texture Measures | Formula |
|---|---|---|
| 1 | Mean (M.E.) | |
| 2 | Variance (V.A.) | |
| 3 | Homogeneity (H.O.) | |
| 4 | Contrast (C.O.) | |
| 5 | Dissimilarity (DI) | |
| 6 | Entropy (EN) | |
| 7 | Second Moment (S.M.) | |
| 8 | Correlation (CC) | |
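
The eight texture measures listed above are commonly computed from a grey-level co-occurrence matrix (GLCM). The Python sketch below assumes the standard Haralick-style definitions on a symmetric, normalised GLCM for a single pixel offset; the exact formulas, window size, and quantisation used by the authors are not reproduced here, so the functions `glcm` and `texture_measures` and the toy image are illustrative assumptions only.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised grey-level co-occurrence matrix for one offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                i, j = image[y][x], image[ny][nx]
                counts[i][j] += 1.0
                counts[j][i] += 1.0  # count both directions: symmetric matrix
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def texture_measures(p):
    """Haralick-style measures (assumed definitions) from a normalised GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, j, v in cells)
    variance = sum((i - mean) ** 2 * v for i, j, v in cells)
    # For a symmetric GLCM the row and column means and variances coincide.
    covariance = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "mean": mean,
        "variance": variance,
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for i, j, v in cells if v > 0),
        "second_moment": sum(v * v for i, j, v in cells),
        "correlation": covariance / variance if variance > 0 else float("nan"),
    }


# Toy 4 x 4 image quantised to four grey levels (illustrative data).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(image, levels=4)
tex = texture_measures(p)
for name, value in tex.items():
    print(f"{name}: {value:.4f}")
```

In an image-processing workflow the same computation would typically be repeated inside a moving window for each selected band, yielding one texture layer per measure.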

| Metrics | Descriptions |
|---|---|
| Hmax | Maximum of canopy height (intensity) |
| Hmin | Minimum of canopy height (intensity) |
| Hmean | Mean of canopy height (intensity) |
| Hmedian | Median of canopy height (intensity) |
| Hmode | Mode of canopy height (intensity) |
| Hsd | Standard deviation of canopy height (intensity) |
| Hcv | Coefficient of variation of canopy height (intensity) |
| Hmad | Hmad = 1.4826 × median (\|height (intensity) − Hmedian (Imedian)\|) |
| Haad | Haad = mean (\|height (intensity) − Hmean (Imean)\|) |
| Hper | Percentile of canopy height/intensity: H10 (I10), H20 (I20), H30 (I30), H40 (I40), H50 (I50), H60 (I60), H70 (I70), H80 (I80), H90 (I90), H95 (I95), H98 (I98), H99 (I99) |
| Hiqr | The interquartile range (iqr) of canopy height (intensity), Hiqr (Iiqr) = H75 (I75) − H25 (I25) |
| Hskn | Skewness of canopy height (intensity) |
| Hkurt | Kurtosis of canopy height (intensity) |
| Hcrd | Canopy return (intensity) density is the proportion of points (intensity) above the height quantiles (10th, 30th, 50th, 70th and 90th) to the total number of points (or sum of intensity): Hd10 (Id10), Hd30 (Id30), Hd50 (Id50), Hd70 (Id70) and Hd90 (Id90) |
| Hcrr | Canopy relief ratio of height (intensity): (Hmean (Imean) − Hmin (Imin))/(Hmax (Imax) − Hmin (Imin)) |
| Hlii | Laser intercept index (canopy returns/total returns), a description of fractional canopy cover. |
| Hcg | The ratio of canopy returns (intensity) and ground returns (intensity) |
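
Several of the height metrics in the table above can be computed directly from the list of normalised point heights that fall inside one plot. The Python sketch below is a minimal illustration using only the standard library; the choice of population standard deviation, the linear-interpolation percentile, the function names, and the sample heights are assumptions, not the authors' implementation.

```python
import statistics


def percentile(sorted_values, q):
    """Linear-interpolation percentile (q in 0..100) of pre-sorted values."""
    pos = (len(sorted_values) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (sorted_values[hi] - sorted_values[lo]) * (pos - lo)


def height_metrics(heights):
    """Subset of the tabulated metrics for one plot's point heights (metres)."""
    h = sorted(heights)
    h_mean = statistics.fmean(h)
    h_median = statistics.median(h)
    h_sd = statistics.pstdev(h)  # assumption: population SD; sample SD is also plausible
    return {
        "Hmax": h[-1],
        "Hmin": h[0],
        "Hmean": h_mean,
        "Hmedian": h_median,
        "Hsd": h_sd,
        "Hcv": h_sd / h_mean,
        "Hmad": 1.4826 * statistics.median(abs(v - h_median) for v in h),
        "Haad": statistics.fmean(abs(v - h_mean) for v in h),
        "Hiqr": percentile(h, 75) - percentile(h, 25),
        "Hcrr": (h_mean - h[0]) / (h[-1] - h[0]),
        "Hper": {q: percentile(h, q) for q in (10, 20, 30, 40, 50, 60, 70, 80, 90, 95, 98, 99)},
    }


# Illustrative heights above ground (metres) for a single plot.
heights = [0.5, 1.0, 1.5, 2.0, 2.5]
m = height_metrics(heights)
for name in ("Hmean", "Hmedian", "Hmad", "Haad", "Hiqr", "Hcrr"):
    print(f"{name}: {m[name]:.4f}")
```

The intensity-based counterparts (Imax, Imean, and so on) would follow by passing return intensities instead of heights to the same function.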

| Input | FN * | Metrics | NN | DRF | XRT | GBM | GLM |
|---|---|---|---|---|---|---|---|
| Hyper | 462 | R² | 0.532 | 0.315 | 0.359 | 0.484 | 0.324 |
| | | RMSE | 0.542 | 0.656 | 0.634 | 0.569 | 0.652 |
| | | RRMSE | 1.40% | 1.70% | 1.64% | 1.47% | 1.68% |
| LiDAR | 60 | R² | 0.344 | 0.253 | 0.338 | 0.462 | 0.326 |
| | | RMSE | 0.642 | 0.685 | 0.645 | 0.582 | 0.651 |
| | | RRMSE | 1.66% | 1.77% | 1.67% | 1.50% | 1.68% |
| Hyper + LiDAR | 522 | R² | 0.644 | 0.493 | 0.495 | 0.582 | 0.414 |
| | | RMSE | 0.473 | 0.565 | 0.563 | 0.513 | 0.607 |
| | | RRMSE | 1.22% | 1.46% | 1.46% | 1.32% | 1.57% |

| Input | FN * | Metrics | NN | DRF | XRT | GBM | GLM |
|---|---|---|---|---|---|---|---|
| Hyper | 462 | R² | 0.472 | 0.408 | 0.415 | 0.543 | 0.445 |
| | | RMSE | 0.588 | 0.623 | 0.619 | 0.547 | 0.603 |
| | | RRMSE | 2.55% | 2.71% | 2.69% | 2.38% | 2.62% |
| LiDAR | 60 | R² | 0.383 | 0.211 | 0.230 | 0.225 | 0.228 |
| | | RMSE | 0.636 | 0.719 | 0.710 | 0.713 | 0.711 |
| | | RRMSE | 2.76% | 3.12% | 3.08% | 3.09% | 3.09% |
| Hyper + LiDAR | 522 | R² | 0.515 | 0.417 | 0.482 | 0.557 | 0.415 |
| | | RMSE | 0.564 | 0.618 | 0.583 | 0.539 | 0.620 |
| | | RRMSE | 2.45% | 2.68% | 2.53% | 2.34% | 2.69% |

| Input | FN * | Metrics | NN | DRF | XRT | GBM | GLM |
|---|---|---|---|---|---|---|---|
| Hyper | 462 | R² | 0.675 | 0.756 | 0.767 | 0.774 | 0.650 |
| | | RMSE | 0.683 | 0.591 | 0.578 | 0.570 | 0.709 |
| | | RRMSE | 8.76% | 7.58% | 7.41% | 7.31% | 9.09% |
| LiDAR | 60 | R² | 0.598 | 0.640 | 0.637 | 0.667 | 0.598 |
| | | RMSE | 0.760 | 0.719 | 0.722 | 0.692 | 0.759 |
| | | RRMSE | 9.74% | 9.22% | 9.26% | 8.87% | 9.75% |
| Hyper + LiDAR | 522 | R² | 0.684 | 0.773 | 0.783 | 0.790 | 0.663 |
| | | RMSE | 0.674 | 0.570 | 0.558 | 0.548 | 0.696 |
| | | RRMSE | 8.64% | 7.32% | 7.15% | 7.04% | 8.92% |

| Input | FN * | Metrics | NN | DRF | XRT | GBM | GLM |
|---|---|---|---|---|---|---|---|
| Hyper | 462 | R² | 0.553 | 0.540 | 0.574 | 0.607 | 0.544 |
| | | RMSE | 0.341 | 0.346 | 0.333 | 0.320 | 0.345 |
| | | RRMSE | 9.15% | 9.28% | 8.93% | 8.57% | 9.24% |
| LiDAR | 60 | R² | 0.435 | 0.417 | 0.409 | 0.418 | 0.428 |
| | | RMSE | 0.384 | 0.390 | 0.392 | 0.389 | 0.386 |
| | | RRMSE | 10.28% | 10.44% | 10.52% | 10.44% | 10.35% |
| Hyper + LiDAR | 522 | R² | 0.648 | 0.654 | 0.672 | 0.673 | 0.434 |
| | | RMSE | 0.303 | 0.300 | 0.292 | 0.292 | 0.384 |
| | | RRMSE | 8.11% | 8.04% | 7.83% | 7.82% | 10.29% |
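
The three metrics reported in the tables above can be reproduced from paired observed and predicted concentrations. The Python sketch below assumes the usual definitions: R² as the coefficient of determination (1 − SS_res/SS_tot), RMSE as the root of the mean squared error, and RRMSE as RMSE divided by the mean observed value, expressed in percent. The function name and the sample values are hypothetical.

```python
import math


def regression_metrics(observed, predicted):
    """R2, RMSE and RRMSE (%) under the definitions assumed in the lead-in."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    rmse = math.sqrt(ss_res / n)
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": rmse,
        "RRMSE": 100.0 * rmse / mean_obs,
    }


# Illustrative protein concentrations (%) for four test plots.
observed = [38.0, 39.0, 37.0, 40.0]
predicted = [38.5, 38.5, 37.5, 39.5]
metrics = regression_metrics(observed, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Dividing by the observed mean makes RRMSE comparable across traits whose concentrations differ in magnitude, which RMSE alone does not allow.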

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dilmurat, K.; Sagan, V.; Maimaitijiang, M.; Moose, S.; Fritschi, F.B. Estimating Crop Seed Composition Using Machine Learning from Multisensory UAV Data. Remote Sens. 2022, 14, 4786. https://doi.org/10.3390/rs14194786
