Complex Mountain Road Extraction in High-Resolution Remote Sensing Images via a Light Roadformer and a New Benchmark

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

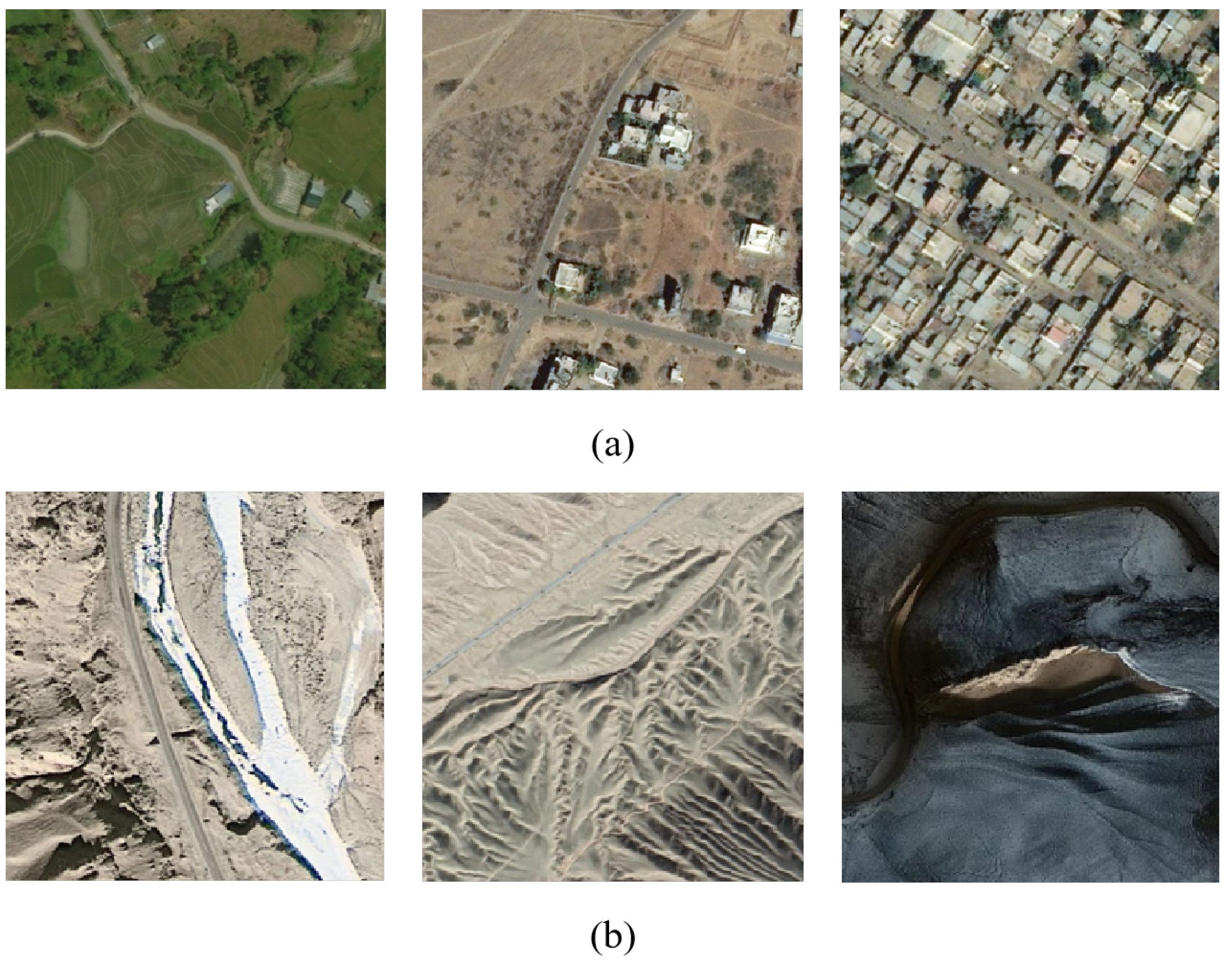

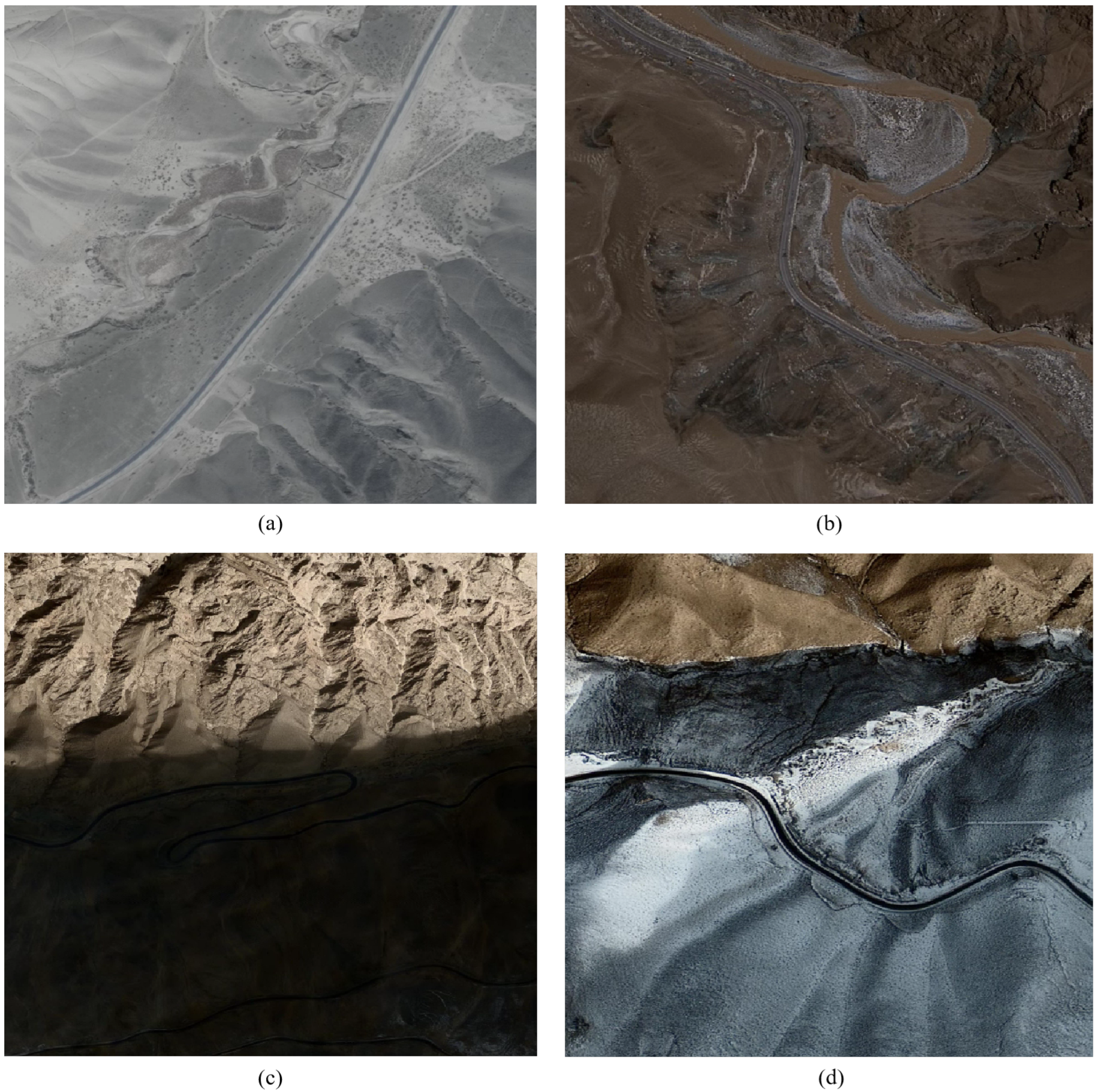

2.2. Datasets

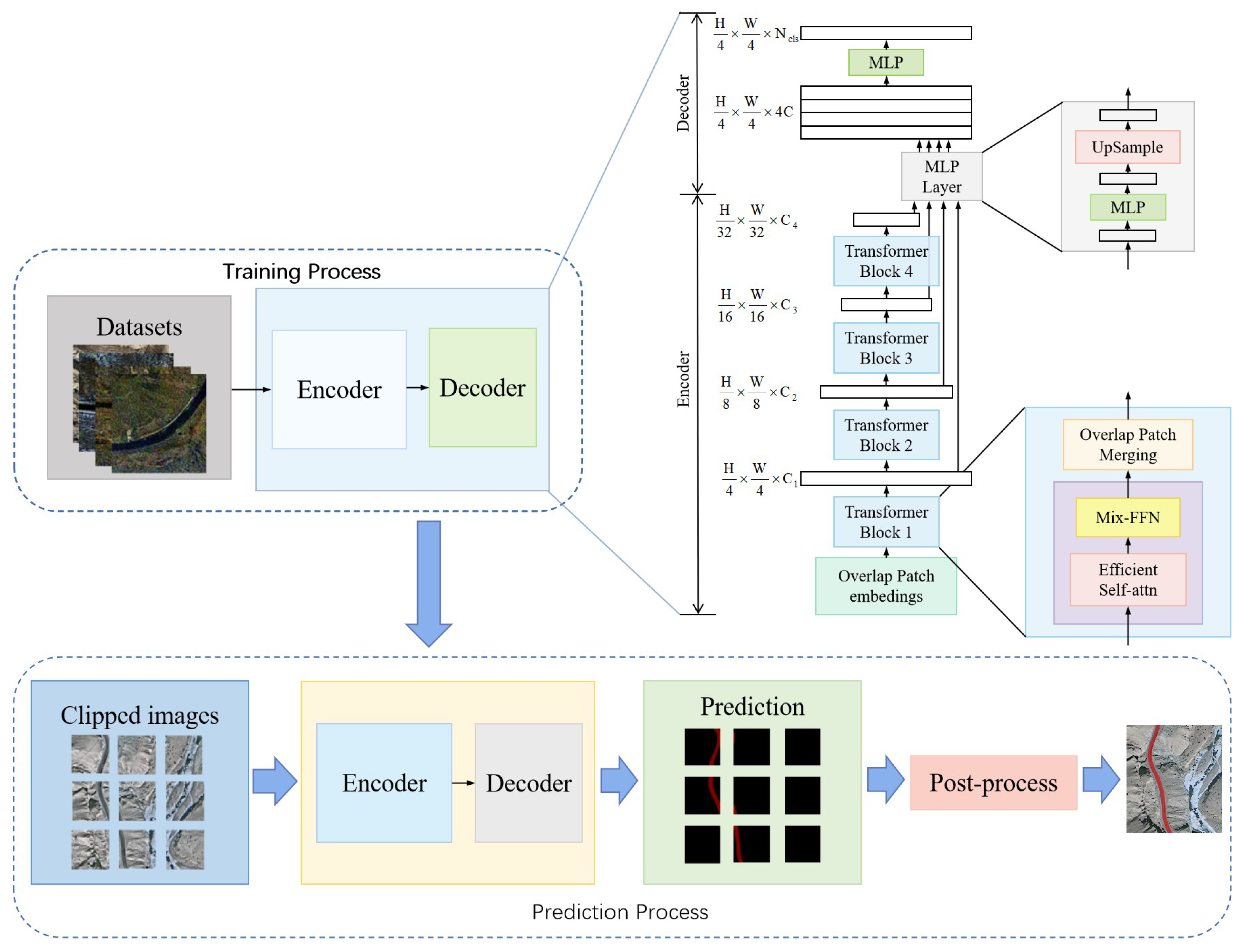

2.3. Method

2.3.1. Image Pre-Processing

2.3.2. Light Roadformer Model

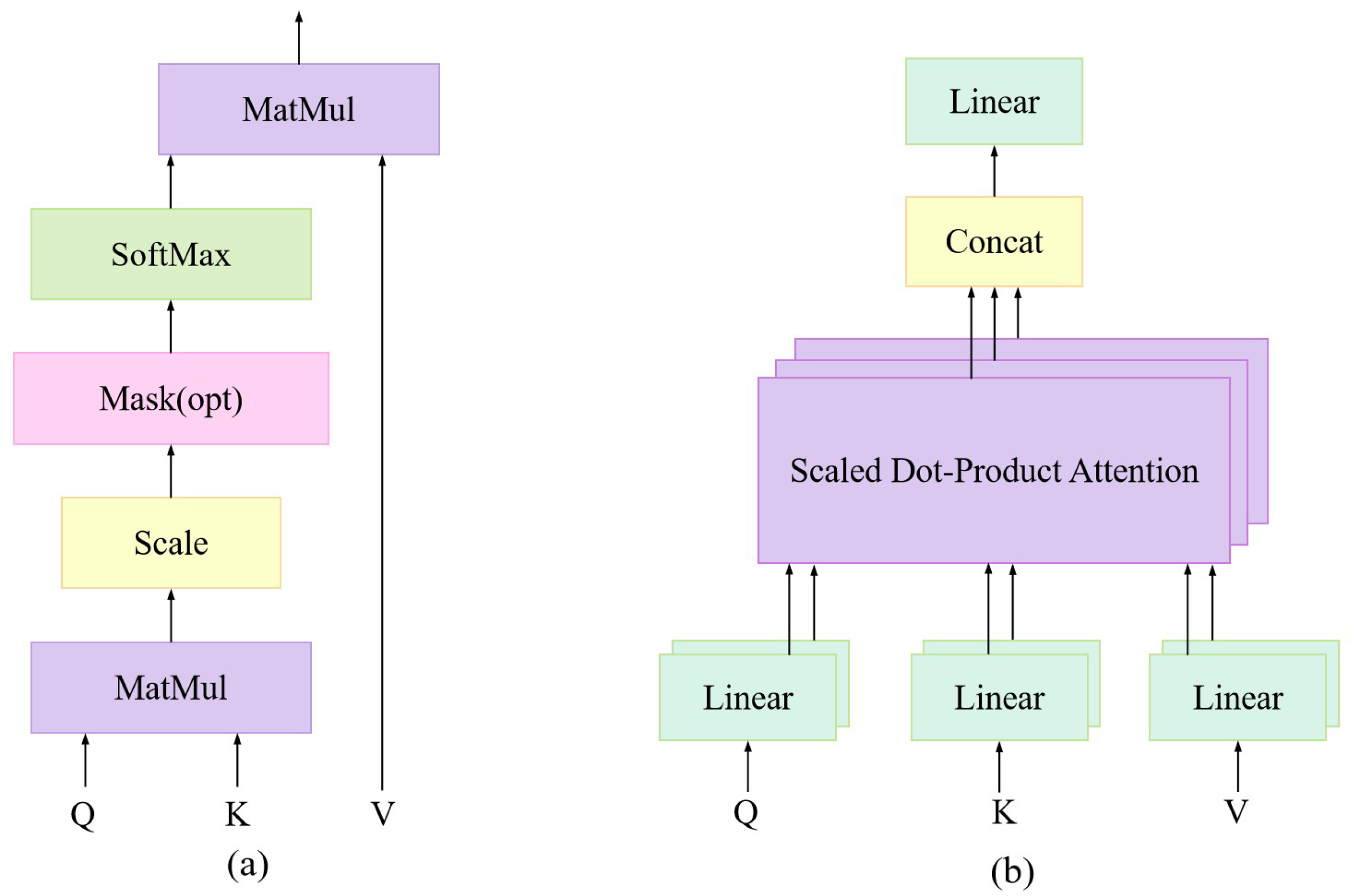

- Encoder: The encoder has a pyramid structure that extracts high-resolution coarse features and low-resolution fine-grained features, which helps improve segmentation performance. Each transformer block contains a self-attention layer, a feed-forward network, and an overlap patch merging layer.

  The attention module maps a query and a set of key-value pairs to an output. First, a compatibility function computes a weight from the query and the corresponding key of dimension $d_k$. Then, each weight is assigned to its value, and the weighted sum of the values is computed as the output. The attention module is described by Equation (1) and shown in (a) of Figure 6:

  $$\mathrm{Attention}(O, P, Q) = \mathrm{Softmax}\left(\frac{O P^{T}}{\sqrt{d_k}}\right) Q \quad (1)$$

  where O represents the query, P represents the key, and Q represents the value.

  The complexity of the attention module is $O(N^2)$. The complexity of the self-attention mechanism is reduced using a reduction ratio R, which is expressed as:

  $$\hat{P} = \mathrm{Reshape}\left(\frac{N}{R}, C \cdot R\right)(P) \quad (2)$$

  $$P = \mathrm{Linear}(C \cdot R, C)(\hat{P}) \quad (3)$$

  where the shape of P is $N \times C$; Equation (2) reshapes P to $\hat{P}$, whose shape is $\frac{N}{R} \times (C \cdot R)$; Equation (3) projects $\hat{P}$ back to the original channel dimension C, giving a sequence of shape $\frac{N}{R} \times C$. The complexity is thereby reduced from $O(N^2)$ to $O(N^2 / R)$.

  Instead of single attention, multihead attention was applied in the model. Multihead attention projects the queries, keys, and values multiple times and then performs the attention function on each projection in parallel. The resulting values are concatenated to form the final output, as shown in (b) of Figure 6. The role of the multihead attention structure is to jointly learn information from different representation subspaces.

  The mix-feed-forward network (Mix-FFN) was used after the self-attention module to learn the location information. In the vision transformer (ViT) [55], positional encoding (PE) is used to introduce the location information. However, the resolution of the PE is fixed, so the PE must be interpolated when the input resolution changes, which leads to a drop in accuracy. In [72], a 3 × 3 convolution was applied to alleviate this problem. Xie et al. [73] showed that a 3 × 3 convolution could provide positional information and replaced the PE with a 3 × 3 convolution inside the FFN.

  The Mix-FFN is formulated as follows:

  $$x_{out} = \mathrm{MLP}(\mathrm{GELU}(\mathrm{Conv}_{3 \times 3}(\mathrm{MLP}(x_{in})))) + x_{in} \quad (4)$$

  where $x_{in}$ is the feature from the self-attention module. The Mix-FFN mixes a 3 × 3 convolution and an MLP into each FFN.

- Decoder: The decoder gathers the features extracted by the encoder and produces the road extraction map in four steps, given by Equations (5)–(8):

  1. Unify the channel dimension of each feature with an MLP layer:

     $$\hat{F}_i = \mathrm{Linear}(C_i, C)(F_i), \quad \forall i \quad (5)$$

     where $F_i$ represents the feature extracted from the i-th transformer block, $C_i$ represents the channel number of $F_i$, C represents the channel number of the unified feature, and $\hat{F}_i$ is the unified feature.

  2. Upsample all the features of different sizes to the same size:

     $$\hat{F}_i = \mathrm{Upsample}\left(\frac{H}{4} \times \frac{W}{4}\right)(\hat{F}_i), \quad \forall i \quad (6)$$

     where W is the image width and H is the image height.

  3. Concatenate the features and fuse them with an MLP layer:

     $$F = \mathrm{Linear}(4C, C)(\mathrm{Concat}(\hat{F}_i)), \quad \forall i \quad (7)$$

  4. Predict the mask M from the fused feature with an MLP layer to produce the extracted road segments:

     $$M = \mathrm{Linear}(C, N_{cls})(F) \quad (8)$$

     where $N_{cls}$ represents the number of object classes and M is the final predicted mask.
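The encoder attention of Equations (1)–(3) and the four decoder steps described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names, the nested-list layout, and all weight matrices are hypothetical, the learned query/key/value projections and multihead split are omitted, the reduced sequence is assumed to serve as both key and value, and nearest-neighbour upsampling stands in for whatever interpolation the model actually uses.

```python
import math


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix given as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, key, value):
    """Equation (1): Softmax(O P^T / sqrt(d_k)) Q with O=query, P=key, Q=value."""
    scale = math.sqrt(len(key[0]))
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / scale for k_row in key]
              for q_row in query]
    return matmul([softmax(row) for row in scores], value)


def reduce_sequence(seq, ratio, weight):
    """Equations (2)-(3): reshape N x C to N/R x (C*R), then project to N/R x C."""
    assert len(seq) % ratio == 0, "sequence length must be divisible by the ratio"
    merged = [sum((seq[i + j] for j in range(ratio)), [])
              for i in range(0, len(seq), ratio)]
    return matmul(merged, weight)  # weight: hypothetical (C*R) x C projection


def efficient_attention(tokens, ratio, weight):
    """Attend from all N tokens to the N/R reduced tokens (N * N/R scores)."""
    reduced = reduce_sequence(tokens, ratio, weight)
    return attention(tokens, reduced, reduced)  # assumption: reduced keys and values


def per_pixel_linear(feature, weight):
    """MLP layer applied to every pixel of an H x W x C_in feature map."""
    return [matmul(row, weight) for row in feature]


def upsample_nearest(feature, height, width):
    """Nearest-neighbour upsampling (a simplifying assumption of this sketch)."""
    src_h, src_w = len(feature), len(feature[0])
    return [[feature[y * src_h // height][x * src_w // width] for x in range(width)]
            for y in range(height)]


def decode(stage_features, unify_weights, fuse_weight, cls_weight, height, width):
    """Four decoder steps: unify channels, upsample, concatenate and fuse, classify."""
    unified = [per_pixel_linear(f, w) for f, w in zip(stage_features, unify_weights)]
    resized = [upsample_nearest(f, height, width) for f in unified]
    concat = [[sum((f[y][x] for f in resized), []) for x in range(width)]
              for y in range(height)]
    logits = per_pixel_linear(per_pixel_linear(concat, fuse_weight), cls_weight)
    # The predicted class of each pixel is the index of its largest logit.
    return [[max(range(len(p)), key=p.__getitem__) for p in row] for row in logits]
```

With `ratio=2`, a sequence of 4 tokens is attended against 2 reduced tokens, so 8 attention scores are computed instead of 16, which mirrors the reduction from $N^2$ to $N^2/R$.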

2.4. Post-Process

1. Traverse the whole result image to find the pixels predicted as road, ignoring the non-road pixels.

2. For each road pixel, group it with every connected pixel that is also predicted as road into the same road segment; then, search the connected pixels to calculate the size of each road segment.

3. Remove the non-road segments according to the ratio of the extracted road segment area to the entire image area and whether the road segment is connected to the edge of the image.
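The three post-processing steps above can be sketched as a connected-component filter. This is a minimal illustration under stated assumptions, not the authors' code: the function name, the `min_area_ratio` threshold and its default, the 8-connectivity, and the exact removal rule (a segment is dropped only if it is both small and not touching the image edge) are all hypothetical choices, since the text does not specify them.

```python
from collections import deque


def filter_road_segments(mask, min_area_ratio=0.01):
    """Remove small, isolated segments from a binary road mask (list of 0/1 rows)."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    # Assumption: pixels are connected through their 8 neighbours.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    visited = [[False] * width for _ in range(height)]
    result = [[0] * width for _ in range(height)]

    for row in range(height):
        for col in range(width):
            # Step 1: only predicted road pixels start a segment.
            if mask[row][col] != 1 or visited[row][col]:
                continue

            # Step 2: breadth-first search collects the connected road segment.
            queue = deque([(row, col)])
            visited[row][col] = True
            segment = []
            touches_edge = False
            while queue:
                y, x = queue.popleft()
                segment.append((y, x))
                if y in (0, height - 1) or x in (0, width - 1):
                    touches_edge = True
                for dy, dx in offsets:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] == 1 and not visited[ny][nx]):
                        visited[ny][nx] = True
                        queue.append((ny, nx))

            # Step 3: keep the segment if it is large enough or reaches the edge.
            area_ratio = len(segment) / (height * width)
            if area_ratio >= min_area_ratio or touches_edge:
                for y, x in segment:
                    result[y][x] = 1
    return result
```

Keeping edge-connected segments regardless of size reflects the idea that a road cut off by the tile boundary may continue in the neighbouring tile; the threshold would need tuning per dataset.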

3. Results

3.1. Experimental Setting and Evaluation Metrics

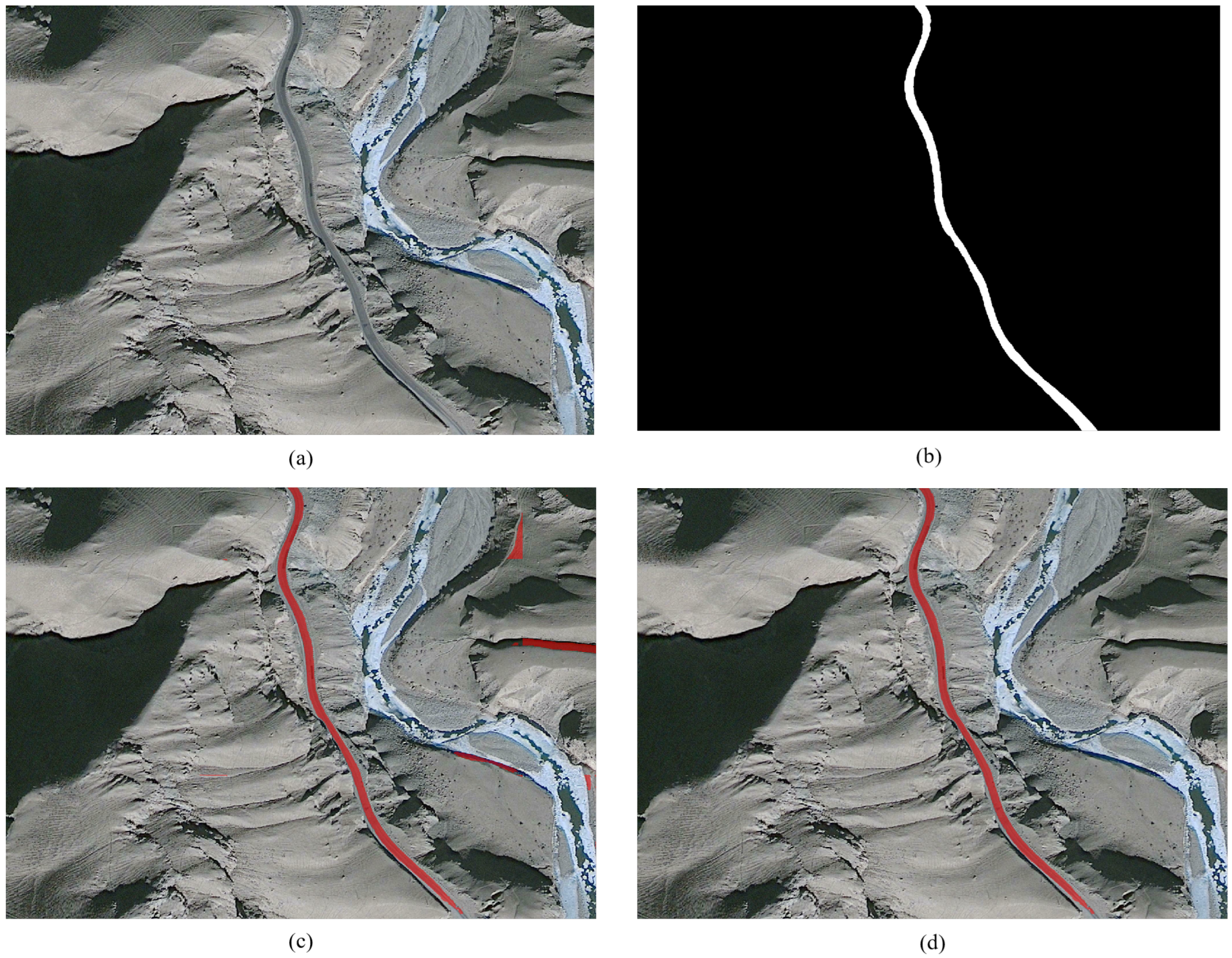

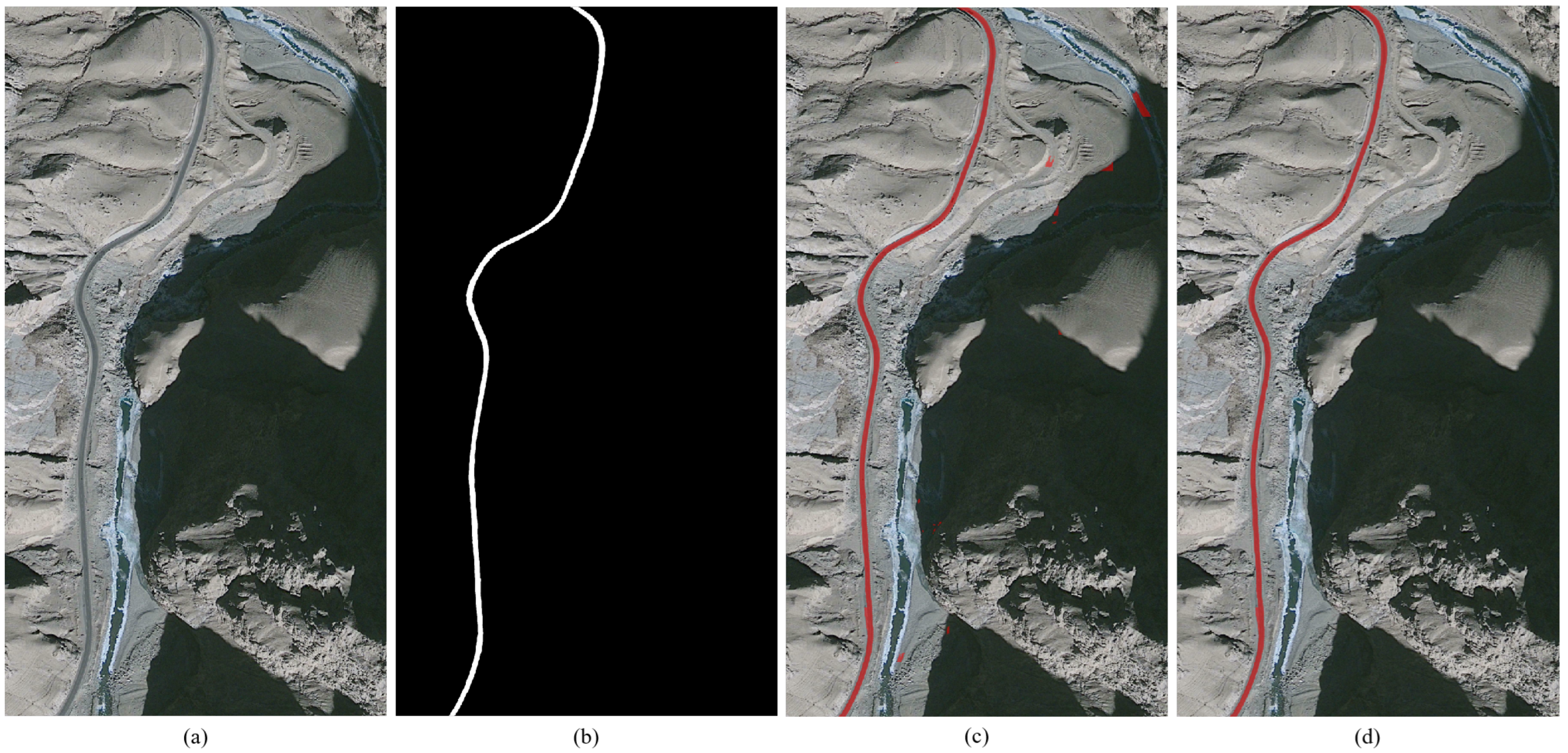

3.2. Prediction Experiment

4. Discussion

4.1. Comparison with the Existing Datasets

4.2. Experimental Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhou, M.; Sui, H.; Chen, S.; Wang, J.; Chen, X. BT-RoadNet: A boundary and topologically-aware neural network for road extraction from high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2020, 168, 288–306.

- Panteras, G.; Cervone, G. Enhancing the temporal resolution of satellite-based flood extent generation using crowdsourced data for disaster monitoring. Int. J. Remote Sens. 2018, 39, 1459–1474.

- Han, W.; Feng, R.; Wang, L.; Cheng, Y. A semi-supervised generative framework with deep learning features for high-resolution remote sensing image scene classification. ISPRS J. Photogramm. Remote Sens. 2018, 145, 23–43.

- Han, W.; Chen, J.; Wang, L.; Feng, R.; Li, F.; Wu, L.; Tian, T.; Yan, J. A survey on methods of small weak object detection in optical high-resolution remote sensing images. IEEE Geosci. Remote. Sens. Mag. 2021, 9, 8–34.

- Shi, W.; Miao, Z.; Debayle, J. An integrated method for urban main-road centerline extraction from optical remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 3359–3372.

- Han, W.; Li, J.; Wang, S.; Zhang, X.; Dong, Y.; Fan, R.; Zhang, X.; Wang, L. Geological Remote Sensing Interpretation Using Deep Learning Feature and an Adaptive Multisource Data Fusion Network. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 1–14.

- Han, W.; Li, J.; Wang, S.; Wang, Y.; Yan, J.; Fan, R.; Zhang, X.; Wang, L. A context-scale-aware detector and a new benchmark for remote sensing small weak object detection in unmanned aerial vehicle images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102966.

- Mathibela, B.; Newman, P.; Posner, I. Reading the road: Road marking classification and interpretation. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2072–2081.

- Mnih, V.; Hinton, G.E. Learning to detect roads in high-resolution aerial images. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2010; pp. 210–223.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–186.

- He, H.; Yang, D.; Wang, S.; Wang, S.; Li, Y. Road extraction by using atrous spatial pyramid pooling integrated encoder-decoder network and structural similarity loss. Remote Sens. 2019, 11, 1015.

- Gao, L.; Song, W.; Dai, J.; Chen, Y. Road extraction from high-resolution remote sensing imagery using refined deep residual convolutional neural network. Remote Sens. 2019, 11, 552.

- Wulamu, A.; Shi, Z.; Zhang, D.; He, Z. Multiscale road extraction in remote sensing images. Comput. Intell. Neurosci. 2019, 2019, 2373798.

- Courtial, A.; El Ayedi, A.; Touya, G.; Zhang, X. Exploring the potential of deep learning segmentation for mountain roads generalisation. ISPRS Int. J. Geo-Inf. 2020, 9, 338.

- Kolhe, A.; Bhise, A. Modified PLVP with Optimised Deep Learning for Morphological based Road Extraction. Int. J. Image Data Fusion 2022, 13, 155–179.

- Chen, Z.; Deng, L.; Luo, Y.; Li, D.; Junior, J.M.; Gonçalves, W.N.; Nurunnabi, A.A.M.; Li, J.; Wang, C.; Li, D. Road extraction in remote sensing data: A survey. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102833.

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

- Gruen, A.; Li, H. Road extraction from aerial and satellite images by dynamic programming. ISPRS J. Photogramm. Remote Sens. 1995, 50, 11–20.

- Dal Poz, A.; Do Vale, G. Dynamic programming approach for semi-automated road extraction from medium-and high-resolution images. ISPRS Arch. 2003, 34, W8.

- Dal Poz, A.P.; Gallis, R.A.; Da Silva, J.F. Three-dimensional semiautomatic road extraction from a high-resolution aerial image by dynamic-programming optimization in the object space. IEEE Geosci. Remote Sens. Lett. 2010, 7, 796–800.

- Park, S.R. Semi-automatic road extraction algorithm from IKONOS images using template matching. In Proceedings of the 22nd Asian Conference on Remote Sensing, Singapore, 5–9 November 2001.

- Lin, X.; Shen, J.; Liang, Y. Semi-automatic road tracking using parallel angular texture signature. Intell. Autom. Soft Comput. 2012, 18, 1009–1021.

- Fu, G.; Zhao, H.; Li, C.; Shi, L. Road detection from optical remote sensing imagery using circular projection matching and tracking strategy. J. Indian Soc. Remote Sens. 2013, 41, 819–831.

- Lin, X.; Zhang, J.; Liu, Z.; Shen, J. Semi-automatic extraction of ribbon roads from high resolution remotely sensed imagery by T-shaped template matching. In Proceedings of the Geoinformatics 2008 and Joint Conference on GIS and Built Environment: Classification of Remote Sensing Images, Guangzhou, China, 28–29 June 2008; SPIE: Bellingham, WA, USA, 2008; Volume 7147, pp. 168–175.

- Kirthika, A.; Mookambiga, A. Automated road network extraction using artificial neural network. In Proceedings of the 2011 International Conference on Recent Trends in Information Technology (ICRTIT), Chennai, India, 3–5 June 2011; pp. 1061–1065.

- Miao, Z.; Wang, B.; Shi, W.; Wu, H.; Wan, Y. Use of GMM and SCMS for accurate road centerline extraction from the classified image. J. Sens. 2015, 2015, 784504.

- Li, L.; Zhang, X. A quickly automatic road extraction method for high-resolution remote sensing images. Geomat. Sci. Technol. 2015, 3, 27–33.

- Abdollahi, A.; Bakhtiari, H.R.R.; Nejad, M.P. Investigation of SVM and level set interactive methods for road extraction from google earth images. J. Indian Soc. Remote Sens. 2018, 46, 423–430.

- Li, R.; Cao, F. Road network extraction from high-resolution remote sensing image using homogenous property and shape feature. J. Indian Soc. Remote Sens. 2018, 46, 51–58.

- Zhang, J.; Chen, L.; Zhuo, L.; Geng, W.; Wang, C. Multiple Saliency Features Based Automatic Road Extraction from High-Resolution Multispectral Satellite Images. Chin. J. Electron. 2018, 27, 133–139.

- Baumgartner, A.; Steger, C.; Mayer, H.; Eckstein, W. Multi-resolution, semantic objects, and context for road extraction. In Semantic Modeling for the Acquisition of Topographic Information from Images and Maps; Birkhäuser Verlag: Basel, Switzerland, 1997; pp. 140–156.

- Karaman, E.; Çinar, U.; Gedik, E.; Yardımcı, Y.; Halıcı, U. Automatic road network extraction from multispectral satellite images. In Proceedings of the 2012 20th Signal Processing and Communications Applications Conference (SIU), Mugla, Turkey, 18–20 April 2012; pp. 1–4.

- Ding, L.; Yang, Q.; Lu, J.; Xu, J.; Yu, J. Road extraction based on direction consistency segmentation. In Chinese Conference on Pattern Recognition; Springer: Singapore, 2016; pp. 131–144.

- Huang, X.; Zhang, L. Road centreline extraction from high-resolution imagery based on multiscale structural features and support vector machines. Int. J. Remote Sens. 2009, 30, 1977–1987.

- Mitra, P.; Shankar, B.U.; Pal, S.K. Segmentation of multispectral remote sensing images using active support vector machines. Pattern Recognit. Lett. 2004, 25, 1067–1074.

- Maboudi, M.; Amini, J.; Hahn, M.; Saati, M. Object-based road extraction from satellite images using ant colony optimization. Int. J. Remote Sens. 2017, 38, 179–198.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Mnih, V. Machine Learning for Aerial Image Labeling; University of Toronto (Canada): Toronto, ON, Canada, 2013.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Zhong, Z.; Li, J.; Cui, W.; Jiang, H. Fully convolutional networks for building and road extraction: Preliminary results. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1591–1594.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv 2014, arXiv:1412.7062.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; Volume 27.

- Shi, Q.; Liu, X.; Li, X. Road detection from remote sensing images by generative adversarial networks. IEEE Access 2017, 6, 25486–25494.

- Costea, D.; Marcu, A.; Slusanschi, E.; Leordeanu, M. Creating roadmaps in aerial images with generative adversarial networks and smoothing-based optimization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2100–2109.

- Batra, A.; Singh, S.; Pang, G.; Basu, S.; Jawahar, C.; Paluri, M. Improved road connectivity by joint learning of orientation and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10385–10393.

- Lian, R.; Huang, L. DeepWindow: Sliding window based on deep learning for road extraction from remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1905–1916.

- Li, Z.; Wegner, J.D.; Lucchi, A. Topological map extraction from overhead images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1715–1724.

- Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent models of visual attention. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; Volume 27.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Yin, W.; Schütze, H.; Xiang, B.; Zhou, B. Abcnn: Attention-based convolutional neural network for modeling sentence pairs. Trans. Assoc. Comput. Linguist. 2016, 4, 259–272.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Yuan, L.; Chen, Y.; Wang, T.; Yu, W.; Shi, Y.; Jiang, Z.H.; Tay, F.E.; Feng, J.; Yan, S. Tokens-to-token vit: Training vision transformers from scratch on imagenet. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 558–567.

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12299–12310.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 568–578.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 10012–10022.

- Lin, Y.; Xu, D.; Wang, N.; Shi, Z.; Chen, Q. Road extraction from very-high-resolution remote sensing images via a nested SE-Deeplab model. Remote Sens. 2020, 12, 2985.

- Shao, Z.; Zhou, Z.; Huang, X.; Zhang, Y. MRENet: Simultaneous extraction of road surface and road centerline in complex urban scenes from very high-resolution images. Remote Sens. 2021, 13, 239.

- Ding, L.; Bruzzone, L. DiResNet: Direction-aware residual network for road extraction in VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 10243–10254.

- Wang, S.; Bai, M.; Mattyus, G.; Chu, H.; Luo, W.; Yang, B.; Liang, J.; Cheverie, J.; Fidler, S.; Urtasun, R. Torontocity: Seeing the world with a million eyes. arXiv 2016, arXiv:1612.00423.

- Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; DeWitt, D. Roadtracer: Automatic extraction of road networks from aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4720–4728.

- Belli, D.; Kipf, T. Image-conditioned graph generation for road network extraction. arXiv 2019, arXiv:1910.14388.

- Xu, Z.; Sun, Y.; Liu, M. Topo-boundary: A benchmark dataset on topological road-boundary detection using aerial images for autonomous driving. IEEE Robot. Autom. Lett. 2021, 6, 7248–7255.

- Zhou, G.; Chen, W.; Gui, Q.; Li, X.; Wang, L. Split depth-wise separable graph-convolution network for road extraction in complex environments from high-resolution remote-sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

- Xu, Z.; Shen, Z.; Li, Y.; Xia, L.; Wang, H.; Li, S.; Jiao, S.; Lei, Y. Road extraction in mountainous regions from high-resolution images based on DSDNet and terrain optimization. Remote Sens. 2020, 13, 90.

- Chen, W.; Zhou, G.; Liu, Z.; Li, X.; Zheng, X.; Wang, L. NIGAN: A Framework for Mountain Road Extraction Integrating Remote Sensing Road-Scene Neighborhood Probability Enhancements and Improved Conditional Generative Adversarial Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vision, Graph. Image Process. 1987, 39, 355–368.

- Ketcham, D.J.; Lowe, R.W.; Weber, J.W. Image Enhancement Techniques for Cockpit Displays; Technical Report; Hughes Aircraft Co Culver City Ca Display Systems Lab: Culver City, CA, USA, 1974.

- Chu, X.; Tian, Z.; Zhang, B.; Wang, X.; Wei, X.; Xia, H.; Shen, C. Conditional positional encodings for vision transformers. arXiv 2021, arXiv:2102.10882.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- He, S.; Bastani, F.; Jagwani, S.; Park, E.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; Sadeghi, M.A. Roadtagger: Robust road attribute inference with graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10965–10972.

- Contributors, M. MMSegmentation: Openmmlab Semantic Segmentation Toolbox and Benchmark. 2020. Available online: https://github.com/open-mmlab/mmsegmentation (accessed on 18 May 2022).

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. Deepglobe 2018: A challenge to parse the earth through satellite images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–181.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. Ccnet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 603–612.

- Zhang, H.; Dana, K.; Shi, J.; Zhang, Z.; Wang, X.; Tyagi, A.; Agrawal, A. Context encoding for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7151–7160.

- Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9716–9725.

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified perceptual parsing for scene understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 418–434.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. Gcnet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. Bisenet v2: Bilateral network with guided aggregation for real-time semantic segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

| Dataset Name | Number of Images | Resolution | Image Width (pixels) |
|---|---|---|---|
| DeepGlobe | 6226 | 1.2 m | 1024 |
| Massachusetts | 1171 | 0.5 m | 1500 |
| RDCME | 775 | 0.6 m | 256 |

| Model Name | Training IoU (%) | Testing IoU (%) | Parameter Count |
|---|---|---|---|
| DANet | 88.26 | 87.35 | 68,808,170 |
| Light Roadformer | 89.50 | 88.80 | 68,719,810 |
| CCNet | 87.82 | 86.96 | 49,812,965 |
| EncNet | 87.33 | 86.63 | 54,862,278 |
| STDC2 | 87.73 | 86.59 | 12,596,904 |
| UPerNet | 88.42 | 85.93 | 85,394,276 |
| BiSeNetv2 | 85.75 | 85.75 | 14,804,255 |
| HRNet | 88.48 | 86.68 | 65,846,402 |
| GCNet | 88.52 | 87.12 | 68,609,253 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Jiang, Y.; Wang, L.; Han, W.; Feng, R.; Fan, R.; Wang, S. Complex Mountain Road Extraction in High-Resolution Remote Sensing Images via a Light Roadformer and a New Benchmark. Remote Sens. 2022, 14, 4729. https://doi.org/10.3390/rs14194729
