1. Introduction

Crop supply is a global issue, particularly in the context of global climate change, rising population, and urbanization. With food demand increasing worldwide, agricultural production and food security should be guaranteed while ensuring biodiversity and limiting environmental impacts [1]. This makes reliable information about crop spatial distribution and growing patterns crucial for studying regional agricultural production and supply, making political decisions, and facilitating crop management [2,3].

The classification of crop spatial distributions is valuable for agricultural monitoring and for the implementation and evaluation of crop management strategies [4,5]. Hence, crop type mapping is in high demand. Field research and remote sensing have always been the most important sources of agricultural information [6], and since the launch of the first Earth observation satellites in 1972, Earth Observation (EO) data have made continuous agricultural mapping and monitoring over large areas possible. Moreover, the new generation of EO sensors offers higher resolution for agricultural uses; as a result, the science of agricultural mapping and monitoring has developed quickly over the last few decades, drawing on diverse types of EO data with high spatial and temporal resolution. For example, Sun et al. in 2019 [4] studied the crop types located in the lower reaches of the Yangzi River in China. They classified crop-type dynamics during the growing season by using three advanced machine learning algorithms (Support Vector Machine (SVM), Artificial Neural Network (ANN), and Random Forest (RF)) with a combination of three advanced sensors (Sentinel-1 backscatter, optical Sentinel-2, and Landsat-8). Arvor et al. in 2010 [7] provided a methodology for mapping the main crops and agricultural practices in the state of Mato Grosso in Brazil; the study applied two successive supervised classifications to Enhanced Vegetation Index (EVI) time series from the MODIS sensor to create an agricultural mask and a classification of the three main crops in the state. Forkuor et al. in 2014 [8] found that integrating multi-temporal optical RapidEye and dual-polarized Synthetic Aperture Radar (SAR) TerraSAR-X data can efficiently improve the classification accuracy of crops and crop group mapping in West Africa, in spite of excessive cloud cover, small fields, and a heterogeneous landscape. Furthermore, in the Finistère department, Xie and Niculescu in 2021 [9] evaluated the multiannual change detections of different Land Cover Land Use (LCLU) regions, including agricultural land, with accuracy indices between 70% and 90%, by using high-resolution satellite imagery (SPOT 5 and Sentinel-2) and three algorithms: RF, SVM, and the Convolutional Neural Network (CNN).

More importantly, many crop mapping studies focus on winter crops. Dong et al. in 2020 [10] proposed a method called phenology-time-weighted dynamic time warping (PT-DTW) for mapping winter wheat using Sentinel-2 time series data. This method can exploit phenological features in two periods and relies on the Normalized Difference Phenology Index (NDPI), which provides more robust vegetation information and reduces the adverse impacts of soil and snow cover during the overwintering period. Zhou et al. in 2017 [11] studied the feasibility of winter wheat mapping in an urban agricultural region with a complex planting structure using three machine learning classification methods (SVM, RF, and neural network (NN)), as well as the possibility of improving classification accuracy by combining SAR and optical data.

Besides the contributions of the new generation of EO data, the diversity of classification approaches and methods has provided more resources for agricultural mapping and monitoring. The classical direct extraction approach is the traditional and most widely used one; it extracts single or multiple crop types directly from satellite images [12,13,14]. In this study, we also propose a hierarchical classification approach for crop mapping. Hierarchical classification is well known for its capacity to solve a complex classification problem by separating it into a set of smaller, progressive classifications; it produces a series of thematic maps that progressively classify the image into detailed classes. Wardlow and Egbert [12] investigated the applicability of time-series Moderate Resolution Imaging Spectroradiometer (MODIS) 250 m normalized difference vegetation index (NDVI) data for large-scale crop mapping in the Central Great Plains of the U.S. Their study applied a hierarchical classification scheme with high classification accuracy: instead of directly solving a complex irrigated crop mapping problem, a four-level hierarchical classification framework was implemented to produce a series of crop-related thematic maps that progressively classified cropland areas into detailed classes. Ibrahim et al. in 2021 [15] also employed a hierarchical classification scheme to map crop types and cropping systems in Nigeria, using the RF classifier and Sentinel-2 imagery. They first produced a land cover map with five classes in order to eliminate other land cover types; the next classification was then performed only on cropland, where the specific crop types and cropping systems were mapped. The results indicated that the crop types were classified with high accuracy, despite the study area being heterogeneous and smallholder-dominated.
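The cascade logic behind hierarchical classification can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in any of the cited studies: the function `hierarchical_classify`, the three rule-based stand-in classifiers, the feature names (`ndvi`, `texture`, `spring_ndvi`), and all thresholds are hypothetical. In practice each level would be a trained classifier such as RF.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Dict[str, float]
# One level = (classifier, label that is passed on to the next level).
Level = Tuple[Callable[[Sample], str], str]


def hierarchical_classify(samples: Sequence[Sample], levels: List[Level]) -> List[str]:
    """Label samples with a cascade of classifiers.

    Only samples that receive the 'pass-on' label of a level are
    re-examined by the next, more detailed level.
    """
    labels = ["unclassified"] * len(samples)
    active = list(range(len(samples)))  # indices still inside the cascade
    for depth, (classifier, pass_on) in enumerate(levels):
        is_last = depth == len(levels) - 1
        still_active = []
        for idx in active:
            labels[idx] = classifier(samples[idx])
            if labels[idx] == pass_on and not is_last:
                still_active.append(idx)
        active = still_active
    return labels


# Hypothetical stand-in rules; real levels would be trained models.
def level1(s: Sample) -> str:
    return "vegetation" if s["ndvi"] > 0.3 else "non-vegetation"


def level2(s: Sample) -> str:
    return "cropland" if s["texture"] < 0.5 else "other vegetation"


def level3(s: Sample) -> str:
    return "winter crop" if s["spring_ndvi"] > 0.6 else "other crop"


levels = [(level1, "vegetation"), (level2, "cropland"), (level3, "winter crop")]
samples = [
    {"ndvi": 0.1, "texture": 0.2, "spring_ndvi": 0.1},
    {"ndvi": 0.7, "texture": 0.8, "spring_ndvi": 0.7},
    {"ndvi": 0.6, "texture": 0.2, "spring_ndvi": 0.3},
    {"ndvi": 0.8, "texture": 0.1, "spring_ndvi": 0.8},
]
result = hierarchical_classify(samples, levels)
print(result)
```

Each level only sees the samples that survived the previous one, which is what allows a later classifier to concentrate on separating similar classes without interference from land cover types that were already excluded.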

In recent years, most studies in the agricultural field have explored the performance of different classification algorithms. Random Forest (RF) is one of the best-known and most widely used algorithms in the field because of its high classification accuracy, its effectiveness on large databases, and its capability of estimating the importance of the variables in the classification [8,16,17,18,19]. The RF classification algorithm is traditionally run as a Pixel-Based Classification (PBC), which many studies have shown to be efficient and accurate in agricultural applications [16,20,21,22]. On the other hand, the advantage of Object-Based Classification (OBC) is well documented, and many recent studies conclude that OBC usually outperforms PBC owing to its higher classification accuracy, its better potential for extracting land cover information in heterogeneous areas with small fields, and its capacity to produce more homogeneous classes [23,24]. However, even though OBC is better developed and considered more accurate than PBC, both classification methods are able to achieve a high degree of accuracy.

Aside from mapping and analyzing the crop spatial distribution, understanding agricultural growing patterns is also a key element of crop management. Crop phenology monitoring and the identification of the main phenological stages are essential for predicting agricultural production and for efficient interventions by farmers and decision-makers during the phenological phases, such as fertilization, pesticide application, and irrigation [25]. In particular, germination is the most critical phase to understand, as it is the starting point of the growing season. Based on the germination information, farmers and decision-makers are able to make a projection of the season, estimate the whole seasonal phenology of crop growth, and predict production [25]. Furthermore, phenology is closely related to the seasonal dynamics of the growth environment; therefore, in the context of global warming, the phenology of many plants, especially crops, may have changed [6].

Crop phenology is usually monitored with optical satellite images using vegetation indices. For example, Pan et al. in 2015 [26] analyzed the phenology of winter wheat and summer corn in the Guanzhong Plain in Shaanxi Province, China, by using Normalized Difference Vegetation Index (NDVI) time series data, from which they extracted seasonality information for measuring phenology parameters. The potential of another, less-known index, the Normalized Difference Phenology Index (NDPI), was exploited by Gan et al. in 2020 [27] in order to detect winter wheat green-up dates. In an evaluation against three other indices (NDVI, EVI, and EVI2), the results indicated that NDPI outperformed the other indices, showing the highest consistency with the ground truth.
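As a brief illustration of how such indices are derived from band reflectances, the sketch below computes NDVI and NDPI for a single pixel. The NDVI formula is standard; the NDPI form, with the red band replaced by a weighted red/SWIR mix and a weight of 0.74, follows the definition commonly quoted in the literature and should be read as an assumption here, as should the reflectance values, which are made up for the example.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def ndpi(nir: float, red: float, swir: float, alpha: float = 0.74) -> float:
    """Normalized Difference Phenology Index.

    The red band is replaced by a weighted red/SWIR mix; alpha = 0.74 is the
    commonly quoted weight and is treated as an assumption in this sketch.
    """
    mix = alpha * red + (1.0 - alpha) * swir
    return (nir - mix) / (nir + mix)


# Hypothetical surface reflectances (NIR, red, SWIR) for one pixel over time.
observations = [
    ("2020-02-01", 0.25, 0.10, 0.20),
    ("2020-03-15", 0.35, 0.08, 0.18),
    ("2020-05-01", 0.50, 0.05, 0.15),
]

for date, nir, red, swir in observations:
    print(date, round(ndvi(nir, red), 3), round(ndpi(nir, red, swir), 3))
```

Applying either function to every acquisition date yields the index time series from which seasonality parameters such as green-up dates are then extracted.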

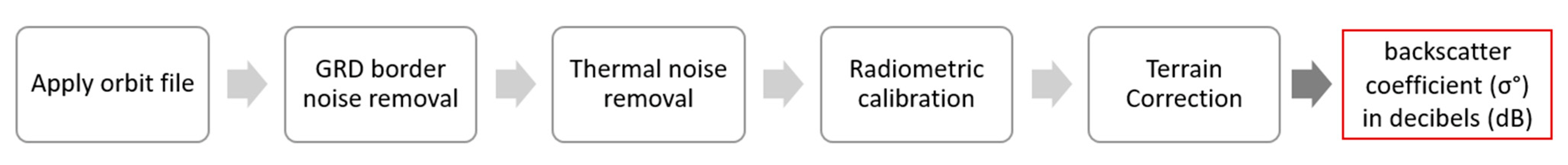

Compared to optical data, SAR data is less used in agricultural areas. Nevertheless, with the recent emergence of a new generation of high-resolution SAR data, in particular since the Copernicus program's Sentinel-1 C-band images with high spatial–temporal resolution became available, SAR data has begun to draw interest, especially for its advantage of having its own source of energy, which makes it nearly independent of weather conditions [8]. Thus, SAR backscattering coefficient time series data is now more frequently used for crop phenology monitoring. While optical data strongly depends on the chlorophyll content of the plants, the SAR signal received after interacting with the plant canopy can reveal the main changes in the canopy structure, identify significant phenological stages, and determine the main growing period. Therefore, studies of crop phenology monitoring using SAR data have increased considerably in recent years. Meroni et al. in 2020 [28] retrieved the crop-specific land surface phenology (LSP) of eight major European crops from Sentinel-1 SAR and Sentinel-2 optical data, detecting crop phenology on the temporal profiles of the backscattering coefficient ratio VH/VV from Sentinel-1 and of NDVI from Sentinel-2. They revealed that the crop phenology detected by Sentinel-1 and Sentinel-2 can be complementary. Wali et al. in 2020 [29] introduced rice phenology monitoring in the Miyazaki prefecture of Japan by using Sentinel-1 dual polarization (VV and VH) time series data, and attempted to clarify the relationship between rice growth parameters and the backscattering coefficient using a combination of two linear-regression lines. Canisius et al. in 2018 [30] exploited SAR polarimetric parameters derived from fully polarimetric RADARSAT-2 SAR time series data to predict the growth pattern and phenological stages of canola and spring wheat in the Nipissing agricultural district of Northern Ontario, Canada. Mandal et al. in 2020 [31] proposed a dual-pol radar vegetation index (DpRVI) from Sentinel-1 difference data (VV-VH) to characterize the vegetation growth of three crop types (canola, soybean, and wheat) from sowing to full canopy development, with the accumulation of the Plant Area Index (PAI) and biomass.
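For readers less familiar with backscatter ratios such as the VH/VV profiles mentioned above, the following sketch shows how a ratio is usually expressed in decibels. It relies only on the standard conversion between linear power and dB; the function names and the backscatter values are hypothetical and are not taken from any of the cited studies.

```python
import math


def to_db(linear: float) -> float:
    """Convert a linear-power backscattering coefficient to decibels."""
    return 10.0 * math.log10(linear)


def vh_vv_ratio_db(vh_linear: float, vv_linear: float) -> float:
    """VH/VV ratio in dB, computed from linear-power backscatter values."""
    return to_db(vh_linear / vv_linear)


# Hypothetical backscatter values in linear power units for one field.
vh, vv = 0.01, 0.1

ratio_db = vh_vv_ratio_db(vh, vv)
# A ratio in linear units corresponds to a difference in dB.
difference_db = to_db(vh) - to_db(vv)
print(round(ratio_db, 3), round(difference_db, 3))
```

Because a ratio of linear values equals a difference of dB values, a time series already delivered in dB can be turned into a ratio profile by simple subtraction of the two polarizations.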

The feasibility and effectiveness of winter crop type mapping and phenology monitoring with optical or SAR satellite data have been demonstrated by many studies in the agricultural field; however, some limitations remain. For example, the potential of vegetation indices other than NDVI and EVI has rarely been explored, and such studies have never been performed in a coastal area with fragmented, small-scale fields. More importantly, almost all studies perform and evaluate a single classification approach or method, instead of comparing different approaches and methods for crop type mapping.

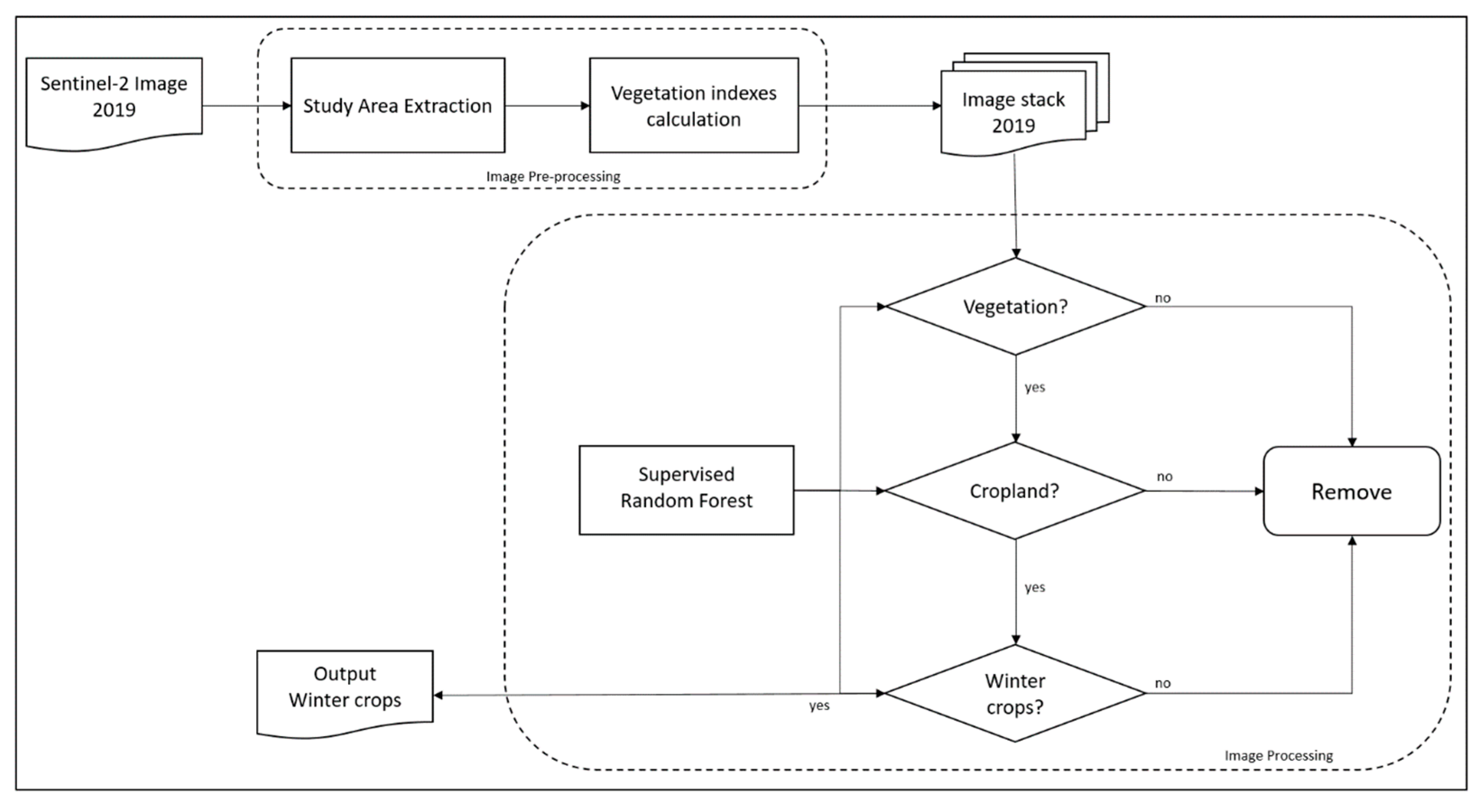

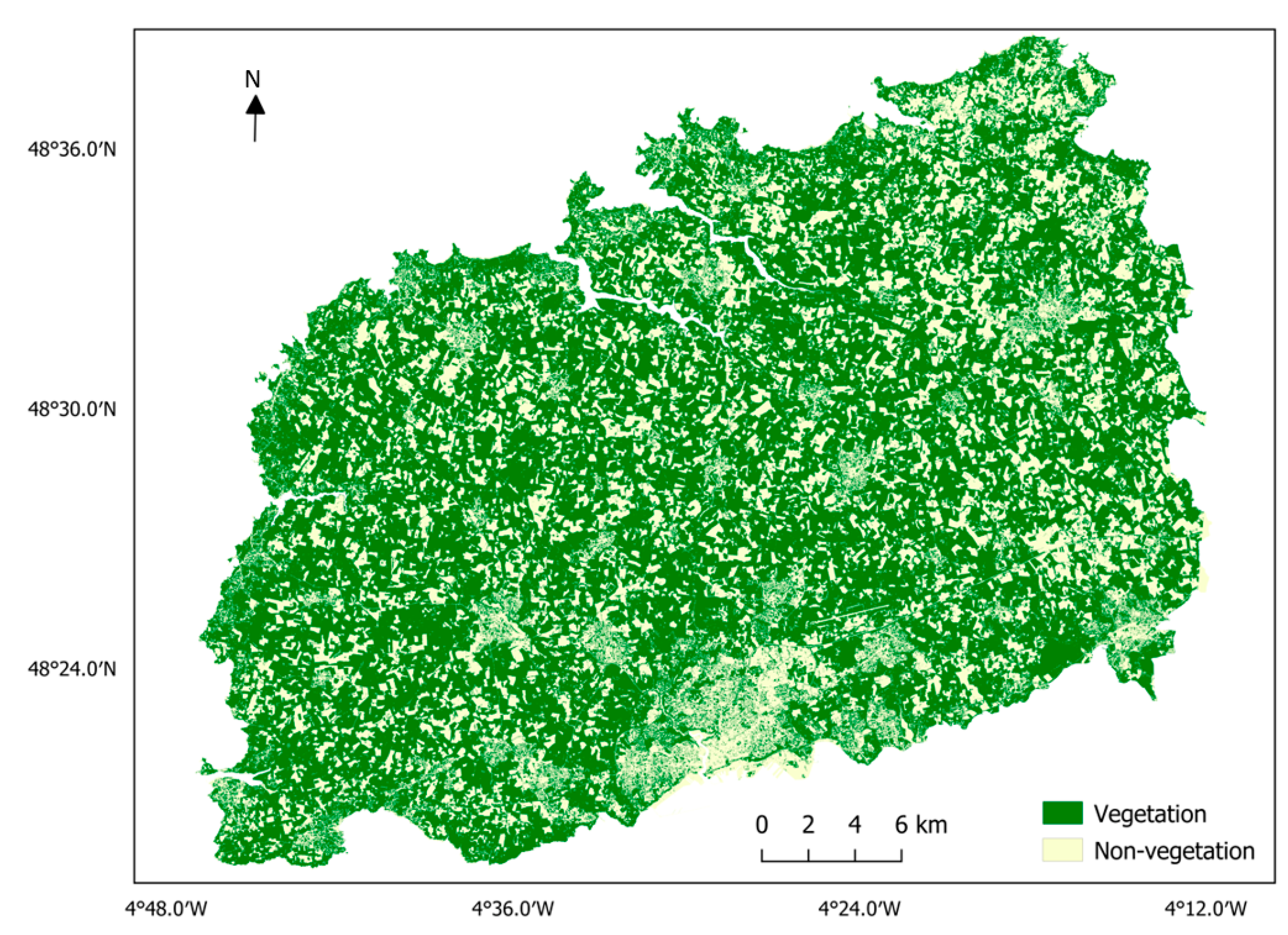

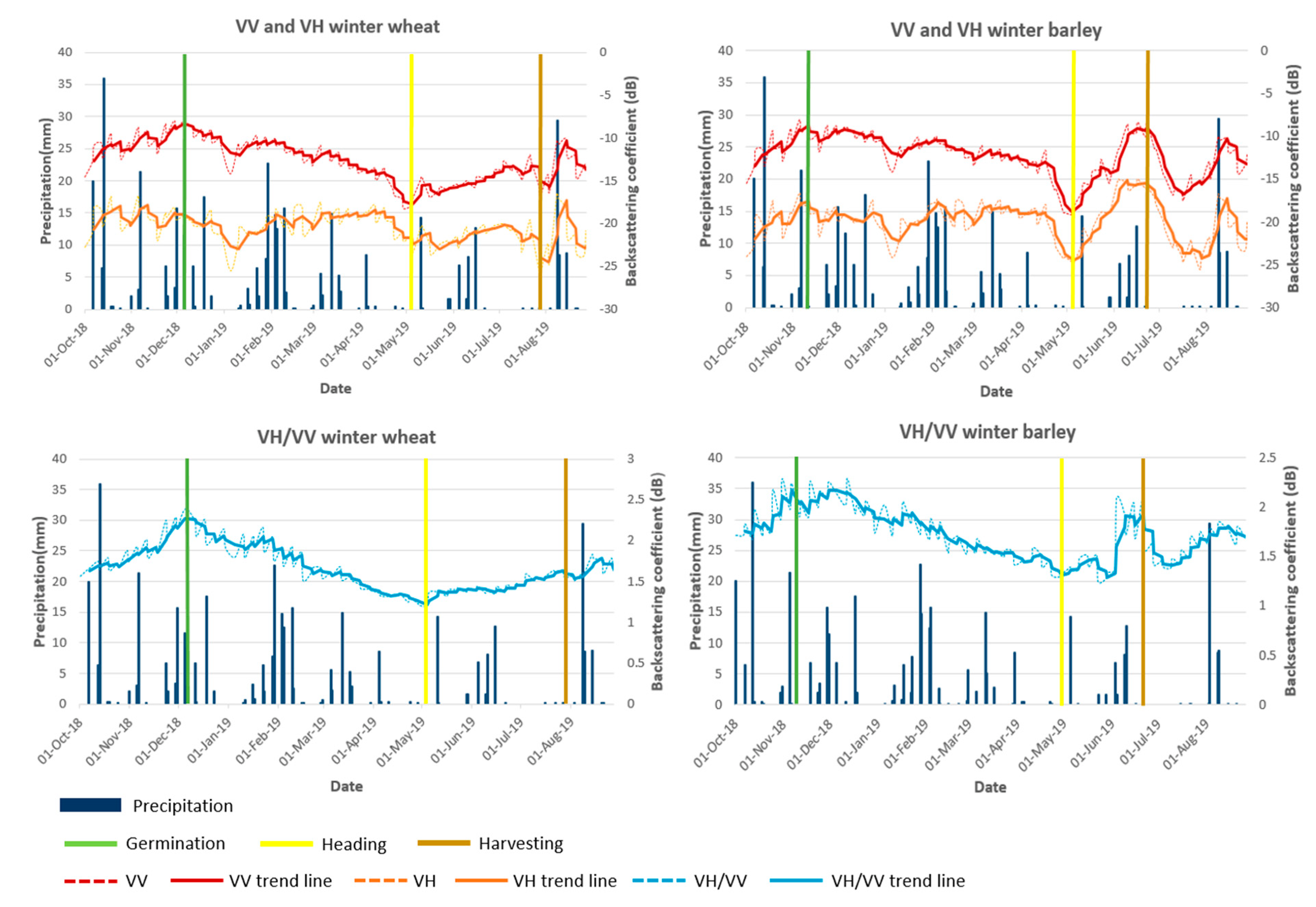

In this study, we introduce a methodology to map two winter crop types (winter wheat and winter barley) with Sentinel-2 optical data acquired during the growing season of the winter crops. Two different classification approaches (hierarchical classification and classical direct extraction) were performed using the supervised RF classification algorithm, and two classification methods (PBC and OBC) were applied and evaluated within the hierarchical classification framework. With the classification results for the winter crops, we are able to monitor their phenology with Sentinel-1 C-band SAR backscatter time series and precipitation data in order to understand their temporal behavior from sowing to harvesting, identify the three main phenological stages (germination, heading, and ripening, including harvesting), and study how crop phenology responds to weather conditions.

The main objectives of this study are listed as follows:

Study the feasibility of mapping winter crops with Sentinel-2 10 m spatial resolution data in a fragmented area that is dominated by small-size fields;

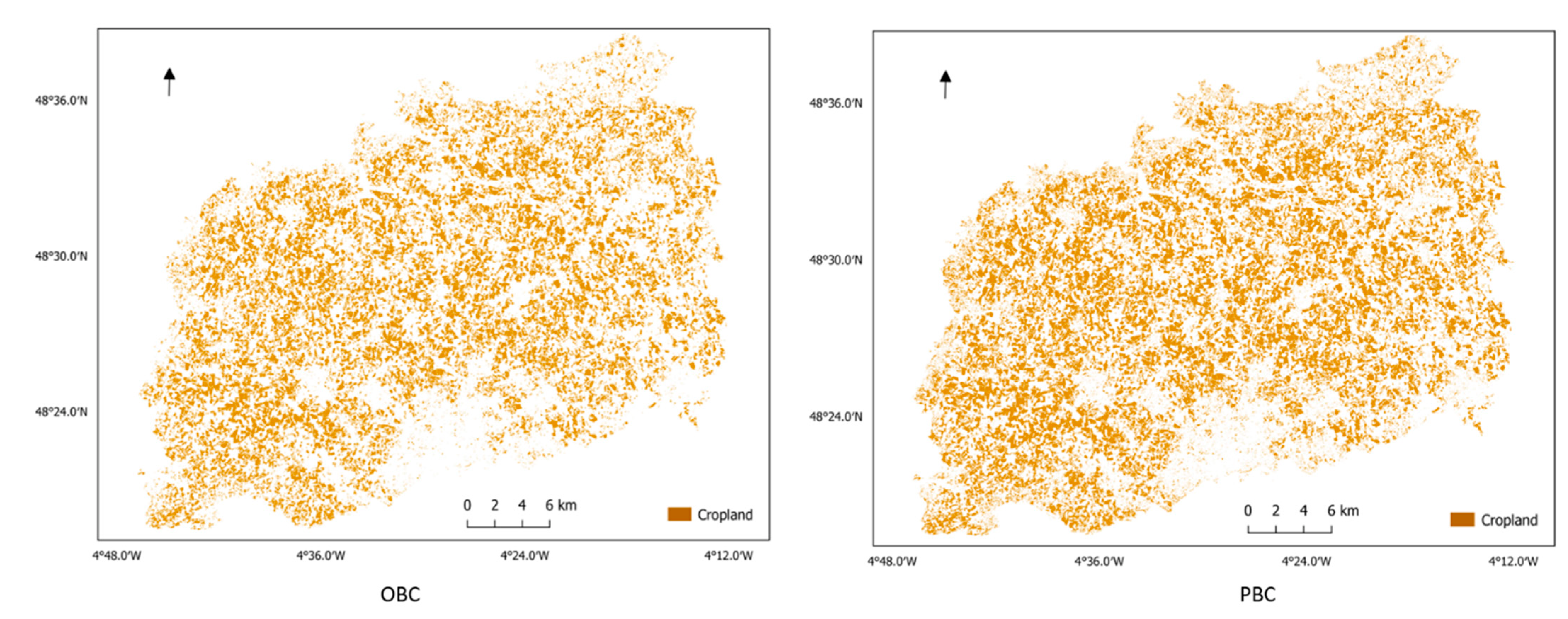

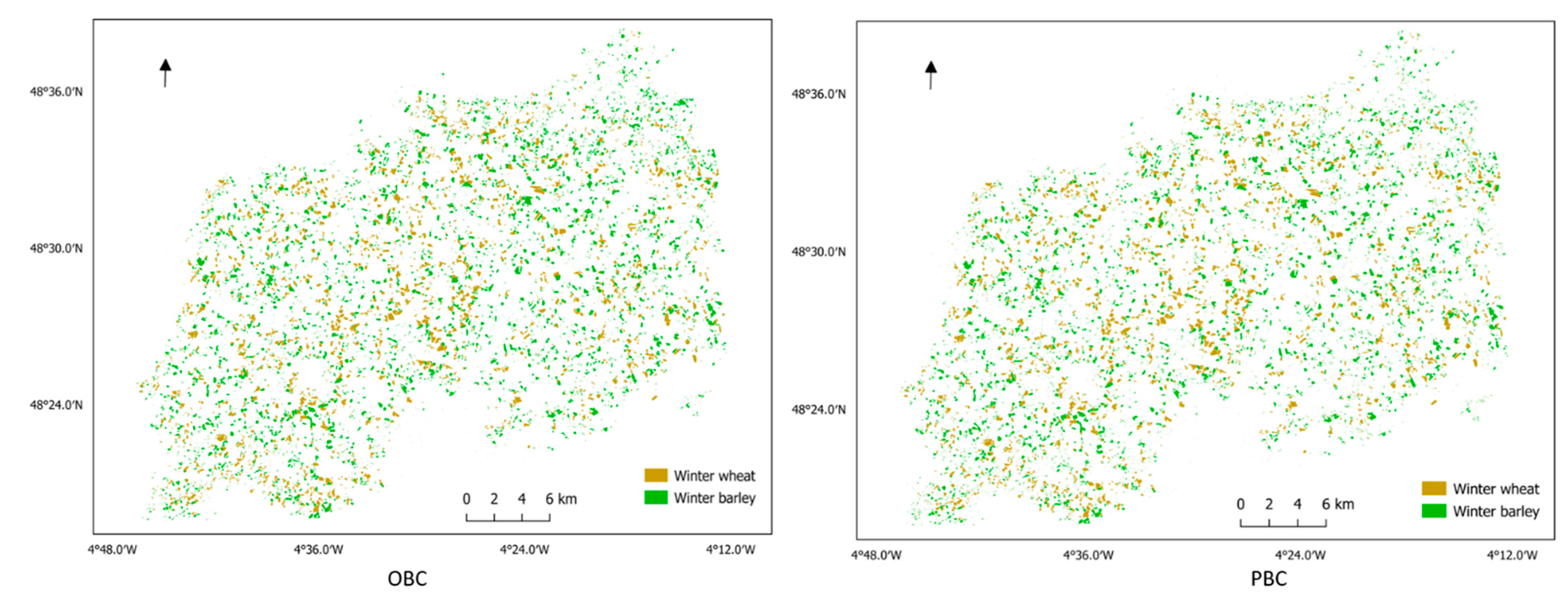

Perform hierarchical classification and classical direct extraction and evaluate the performance of both classification approaches;

Perform PBC and OBC and compare the performance in each level of the hierarchical classification structure;

Study the correlation between crop phenology and Sentinel-1 C-band SAR backscatter time series data and identify three phenological stages and the main growth period of the winter crops.

2. Study Area

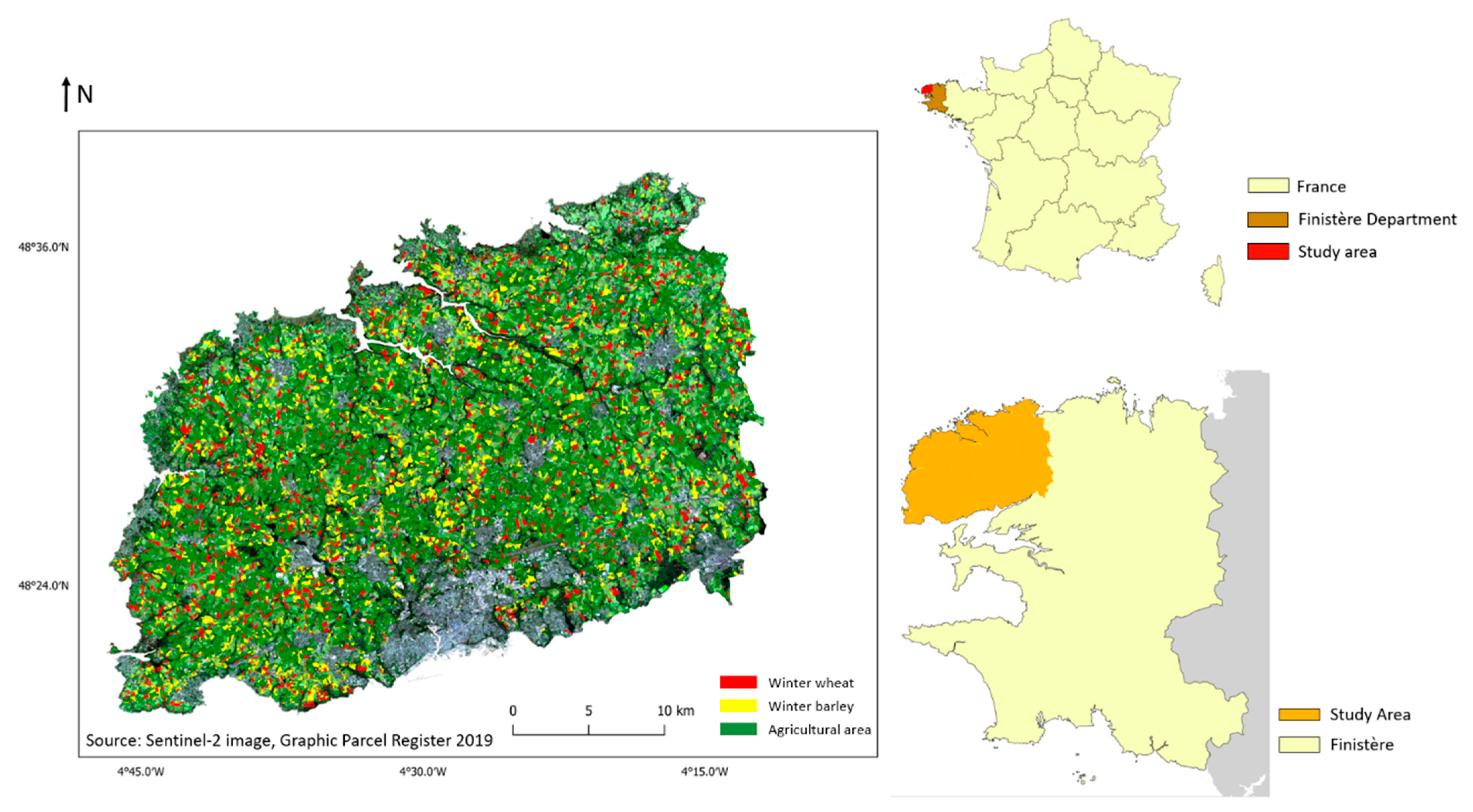

The study area is located on the west coast of France, in the north of the Finistère department in the region of Brittany (Figure 1). It covers a land surface of 1034.41 km² and extends between the latitudes of 48°19′39″N and 48°40′41″N, and the longitudes of 4°12′50″W and 4°47′13″W. According to the French National Institute of Geographic and Forest Information (IGN), the northern part of Finistère is mostly dominated by plains, and the elevation of the area ranges between 0 m and 100 m [32]. The study area is mostly occupied by cropland and temporary or permanent grasslands, with small areas of forests and shrubs, an urban agglomeration in the south, and a wetland area in the north [33]. Climatically, the north of Finistère is classified as type Cfb (temperate oceanic climate) according to the Köppen climate classification, and it is characterized by warm winters and cool summers. On average, the northern region of Finistère receives 941 mm of total precipitation per year, with an annual average temperature of 12.1 °C (7.7 °C and 16.8 °C are the monthly average temperatures for the coldest and warmest months, respectively). This warm temperate climate with frequent rainfall provides very favorable conditions for agricultural activities.

With such climate and topography conditions, agriculture is an important economic sector in the study area, and a considerable number of locals work in agriculture or a related sector in the department. There are 384,408 hectares of utilized agricultural area in the department; that is, 57% of the department's surface is devoted to agricultural use [34]. The main agricultural products include crops, such as corn, winter wheat, and winter barley, as well as vegetables [35].

Hence, it is important to develop a methodology to map one or several specific crop types and monitor their growth stages by using free-access, high-quality satellite images for crop production management. The north of the Finistère department was chosen as our first study area because of its favorable natural conditions, its highly active agricultural sector, and its proximity, which facilitates field research and interaction with farmers.

7. Conclusions

Three issues surrounding winter crops have been studied and discussed in this paper. Firstly, two types of winter crops (winter wheat and winter barley) were mapped by using a Sentinel-2 high-resolution image, and two different classification approaches were performed. Both the hierarchical classification, which turns a complex classification problem into a series of smaller classifications, and the classical direct extraction, which extracts the winter crops directly from the original satellite image, were carried out. The hierarchical classification was composed of three smaller classifications: vegetation extraction from the original image, cropland extraction from the vegetation, and finally winter crop extraction from the other crops. Additionally, PBC and OBC were both performed in the last two steps and evaluated in order to keep the most accurate classification for further processing and analysis. Subsequently, based on the results of the previous step, crop phenology monitoring was performed by using Sentinel-1 C-band SAR time series data, and the three important phenological stages (germination, heading, and ripening (harvesting)) and the main growing periods were identified.

In response to the objectives of the study, and as the contribution of this paper, our results showed that winter crops in a fragmented landscape with heterogeneous land cover were successfully detected with high accuracy by using a Sentinel-2 image and the proposed classification approaches. In particular, the hierarchical classification framework significantly improved the classification accuracy (0.1 and 0.06 increase in the kappa and OA, respectively, against classical direct extraction). Moreover, the hierarchical classification framework also enhanced the classification of winter barley by reducing the confusion between winter barley and grassland (0.094 increase in the F-score). Within the hierarchical classification, each classification method has its advantage: OBC slightly outperformed PBC in cropland extraction, yet PBC achieved higher accuracy in winter crop mapping. Although some small differences can be noticed, there is no statistically significant difference between the two classification methods.
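For reference, the three accuracy measures quoted above (overall accuracy (OA), the kappa coefficient, and the F-score) can all be derived from a confusion matrix. The sketch below uses their standard definitions; the 2 × 2 matrix is hypothetical and does not reproduce any result of this study, and the convention that rows hold reference labels and columns hold predicted labels is an assumption of the example.

```python
from typing import List

Matrix = List[List[int]]  # rows = reference classes, columns = predicted classes


def overall_accuracy(cm: Matrix) -> float:
    """Share of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def cohen_kappa(cm: Matrix) -> float:
    """Agreement corrected for the agreement expected by chance."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(n)) / total
    expected = sum(
        sum(cm[i]) * sum(row[i] for row in cm) for i in range(n)
    ) / total ** 2
    return (observed - expected) / (1.0 - expected)


def f_score(cm: Matrix, k: int) -> float:
    """Harmonic mean of precision and recall for class k."""
    true_positive = cm[k][k]
    recall = true_positive / sum(cm[k])                    # reference total
    precision = true_positive / sum(row[k] for row in cm)  # predicted total
    return 2.0 * precision * recall / (precision + recall)


# Hypothetical confusion matrix for two classes (e.g., winter crop vs. other).
cm = [
    [40, 10],
    [5, 45],
]

print(round(overall_accuracy(cm), 3), round(cohen_kappa(cm), 3), round(f_score(cm, 0), 3))
```

OA and kappa summarize the whole map, whereas the F-score is computed per class, which is why it is the measure used to report the improvement for winter barley specifically.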

The results also lead to the conclusion that Sentinel-1 C-band polarized SAR backscatter time series have great potential for monitoring winter crop phenology in a coastal area with frequent rainfall. Three phenological stages and the main growing periods could be easily identified from the time series in a single polarization or from the ratio. Furthermore, the timing of the stages and the growing periods of the crops observed in the results conform closely to field knowledge.

Although very satisfactory results were obtained in this study, some recommendations can be made for further studies, such as applying Sentinel-2 time series or SAR data to crop mapping in order to increase the classification accuracy, and in particular to reduce the confusion between winter barley and grasslands or other crop types. We also recommend exploring the potential of combining SAR and optical data to identify more phenological stages and growth periods from the time series.