Abstract

Early detection of wildfires using sun-synchronous orbit satellites has been limited by their low temporal resolution and by the fast spread of wildfires in the early stage. The Advanced Baseline Imager (ABI) on NOAA's GOES-R geostationary weather satellites can acquire images every 15 min at 2 km spatial resolution and has been used for early fire detection. However, advanced processing algorithms are needed to provide timely and reliable detection of wildfires. In this research, a deep learning framework based on Gated Recurrent Units (GRU) is proposed to detect wildfires at an early stage using dense GOES-R time series. The GRU model performs well at temporal modelling while keeping a simple architecture, which makes it suitable for efficiently processing time-series data. In total, 36 different wildfires in North and South America under the coverage of the GOES-R satellites are selected to assess the effectiveness of the GRU method. The detection times based on GOES-R are compared with the 375 m VIIRS active fire products in NASA's Fire Information for Resource Management System (FIRMS). The results show that the GRU-based GOES-R detections are earlier than those of the VIIRS active fire products in most of the study areas. In addition, the proposed method locates active fires more precisely at the early stage than the GOES-R Active Fire Product in mid-latitude and low-latitude regions.

1. Introduction

In recent years, climate change and human activities have caused increasing numbers of wildfires, and both the frequency and the severity of wildfires are expected to increase further due to climate change [1]. In 2021, California had 8835 incidents that burned a total of 1,039,616 ha, while British Columbia had 1610 wildfires that burned 868,203 ha. Some of the most hazardous wildfires spread extremely fast. For example, the Dixie Fire in 2021 spread very quickly in its first several days because of strong winds. Early detection of active fires has therefore become increasingly important to support early response and suppression efforts, and thereby to reduce the damage caused by fast-spreading wildfires.

Several early fire detection systems have been developed using remote sensing technologies, including satellite-based, aerial-based and terrestrial-based systems. Such systems are expected to mitigate the impact of wildfires in their early stages [2]. A typical wildfire produces peak radiance between 3 μm and 5 μm, which lies in the middle-infrared (MIR) region [3]. For wildfires with higher burning temperatures, however, the peak radiance shifts towards the short-wave infrared (SWIR) at around 2.2 μm. Thus, SWIR bands can also detect active fires when the MIR band saturates. Depending on the spatial and spectral resolution of the satellite sensors, active fire detection methods can be divided into three categories. First, among medium-resolution sun-synchronous orbit satellites, the Sentinel-2A/-2B MultiSpectral Instrument (MSI) and the Landsat-8/-9 Operational Land Imager (OLI) offer SWIR bands that can effectively detect active fires at 20 m to 30 m spatial resolution. In terms of temporal resolution, however, Sentinel-2 has a 5-day revisit time and Landsat-8/-9 an 8-day revisit time, which are too infrequent for detecting active fires (Hu et al. [4]). Second, coarse-resolution sun-synchronous orbit satellites provide MIR bands with twice-daily revisits, which facilitate the detection of wildfires in the early stage [7,8]. Third, sensors on board geostationary satellites such as GOES-R also provide MIR bands, but with lower spatial resolution and limited coverage of the Earth. In exchange, their temporal resolution is much higher. For example, the GOES-R ABI instrument provides images over the same area every 15 min. This high temporal resolution enables early detection of wildfires and captures their progression in the early stage.
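The link between fire temperature and the MIR/SWIR bands follows from Wien's displacement law, λ_max = b/T with b ≈ 2898 μm·K. The Python sketch below is illustrative only: it treats a fire as an ideal blackbody, whereas real fire pixels mix hot flaming, smouldering and cool background components, and the function names are our own.

```python
# Wien's displacement law: peak blackbody emission wavelength vs temperature.
# ASSUMPTION: idealized blackbody; real fire pixels mix hot and cool components.
WIEN_B_UM_K = 2897.77  # Wien's displacement constant in micrometre-kelvin


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (um) at which a blackbody at temperature_k (K) emits most strongly."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temperature_k


def temperature_for_peak_k(wavelength_um: float) -> float:
    """Inverse of Wien's law: temperature (K) whose emission peaks at wavelength_um."""
    if wavelength_um <= 0:
        raise ValueError("wavelength must be positive (micrometres)")
    return WIEN_B_UM_K / wavelength_um


if __name__ == "__main__":
    # Ambient land surface (~300 K) peaks in the TIR; hotter sources shift to MIR/SWIR.
    for t in (300, 600, 800, 1000, 1300):
        print(f"{t:5d} K -> peak at {peak_wavelength_um(t):.2f} um")
    print(f"peak at 2.2 um -> {temperature_for_peak_k(2.2):.0f} K")
```

Under this idealization, temperatures of roughly 580 K to 970 K put the emission peak between 5 μm and 3 μm, a peak near 2.2 μm corresponds to about 1300 K, and the ~300 K background peaks near 10 μm in the thermal infrared.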

Past studies have explored the use of satellite images for active fire detection. Hu et al. [4] evaluated Sentinel-2 images for active fire detection, and in [5,6], Landsat-8 images were exploited to detect active fires. However, the low temporal resolution of Sentinel-2 and Landsat-8 data offers very limited capability for early active fire detection. Using coarse-resolution data from sun-synchronous orbit satellites, a number of active fire products have been developed to provide the location and time of active fires on a daily basis [7,8,9]. The Visible Infrared Imaging Radiometer Suite (VIIRS) [7] on board the Suomi National Polar-orbiting Partnership (NPP) satellite, the Sea and Land Surface Temperature Radiometer (SLSTR) on board the Sentinel-3 satellite and the Moderate Resolution Imaging Spectroradiometer (MODIS) [8] on board Terra and Aqua are among the most commonly used instruments for detecting active fire points. The MODIS active fire product (MCD14DL) and the VIIRS active fire product (VNP14IMG) both offer day and night observations of active fire pixels, owing to a revisit time of roughly half a day. In terms of spatial resolution, the VIIRS active fire product offers 375 m while the MODIS active fire product offers 1 km [10,11]. The higher spatial resolution of VIIRS helps it detect more active fire pixels, because finer pixels make low-intensity fires easier to detect [12]. Sentinel-3 SLSTR has a similar overpass time and spatial resolution to MODIS, but [9] reported that SLSTR can detect fires with lower fire radiative power (FRP) than MODIS, where FRP is used to quantify fire intensity and fire severity [13]. However, a revisit time of half a day is still not sufficient for early fire detection, given that some wildfires spread swiftly in the early stage.

Hence, the need for higher temporal resolution has motivated the development of active fire products based on geostationary satellites such as the Geostationary Operational Environmental Satellites R Series (GOES-R). The GOES-R series, consisting of two geostationary satellites, GOES-16 and GOES-17, provides full coverage of North America and South America. Owing to the high temporal resolution of the GOES-R satellites, a new image is acquired every 15 min.

In [14,15], the GOES Early Fire Detection (GOES-EFD) system used a threshold-based method applied to images from GOES satellites and demonstrated the potential of geostationary satellites to swiftly detect active fires. Similarly, in [16], an early wildfire detection system was developed to monitor wildfires in the Eastern Mediterranean using the METEOSAT Second Generation (MSG) Spinning Enhanced Visible and Infrared Imager (SEVIRI), which provides coverage over Africa and Europe. The GOES-R Active Fire product is based on the WildFire Automated Biomass Burning Algorithm (WF_ABBA) proposed by [17]. It is a threshold-based method that preserves the original spatial and temporal resolution of GOES-R. The GOES-R Active Fire product was evaluated in [18,19], where the authors argue that it is not sufficiently reliable, with false alarm rates of around 60% to 80% for medium- and low-confidence fire pixels. How to swiftly detect active fires with few false alarms therefore remains a challenge in early fire detection. Deep learning models such as Convolutional Neural Networks and Recurrent Neural Networks, as state-of-the-art statistical tools, are widely used in the classification of remote sensing images [20,21,22]. By learning representations from low-level input data, deep learning models are capable of robust classification in active fire detection. In [23], a convolutional neural network was applied to GOES-16 images to detect wildfires. That method uses spatial and spectral information to classify the center pixel of image patches and showed good results in both detection time and accuracy for active fire detection. However, the study covered a limited number of regions and lacked a comprehensive comparison of detection time between the method and other active fire products.
In addition, the authors used GOES-R images at the original 2 km spatial resolution. At this resolution, the texture information within a patch is too limited for robust classification, because early-stage active fires are smaller than 4 km², the area of a single 2 km pixel.

Because of the high temporal resolution of GOES-R data, dense image time series are readily available. However, research on early fire detection using the temporal information in GOES-R images is limited. It is therefore desirable to evaluate deep learning-based models that use GOES-R time series for early active fire detection, in terms of both detection time and false alarm rate. The objective of this research is to evaluate a deep learning model that uses GOES-R time series to achieve early detection of wildfires. In particular, a Recurrent Neural Network-based model utilizing Gated Recurrent Units (GRU) [24] is proposed, owing to its ability to model temporal information and its high computational efficiency. The model exploits the temporal information of low spatial resolution GOES-R time-series imagery and aims to detect wildfires earlier than the VIIRS active fire product from FIRMS and to locate early-stage active fires more precisely than the GOES-R active fire product.

2. Materials

2.1. Study Area

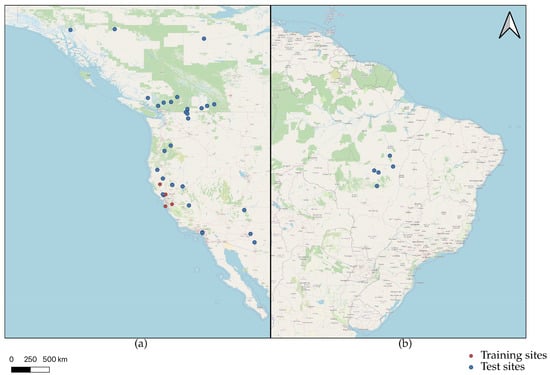

Four large complex wildfires in California in 2020 are used as the training set, and thirty-eight different test areas are selected across North America and South America, as shown in Figure 1. Detailed information on the training sites is given in Table 1 and on the test sites in Table 2. For test regions in the Tropical Climate Zone, the land cover is mainly broadleaf forest on the flat plains of the Amazon. For test areas in the Subtropical Zone, the land cover is primarily savanna in mountainous terrain. Test sites in the Temperate Zone generally have needleleaf forests in mountains. Only wildfires after 2018 are tested because of the availability of GOES-R images. Because of the limited coverage of the satellites, all study areas are located in North America and South America.

Figure 1.

Study areas in North America (a) and South America (b). All wildfire regions are generated using QGIS and overlaid on an OpenStreetMap base map layer.

Table 1.

Information on the training sites. Duration is the number of days used in the training dataset. Wildfires are named after the fire names used by The Department of Forestry and Fire Protection of California.

Table 2.

Information on the test sites. The duration is not provided, as we only focus on the first several days of each wildfire. Wildfires are named after fire names from The Department of Forestry and Fire Protection of California, fire numbers from the British Columbia Wildfire Service, fire numbers from the 2020 Major Amazon Fires Tracker developed by InfoAmazonia, or the location where the wildfire occurred.

2.2. GOES-R ABI Imagery

The Geostationary Operational Environmental Satellite-R series (GOES-R) are geostationary weather satellites launched jointly by the National Aeronautics and Space Administration (NASA) and the National Oceanic and Atmospheric Administration (NOAA) of the United States. The GOES-R series consists of two satellites at two different operational longitudes. GOES-16 entered service on 18 December 2017 and operates in the east position at longitude 75.2° W. It provides full coverage of South America and the eastern part of North America. GOES-17 became operational on 19 February 2019 and operates in the west position at longitude 137.2° W. It provides full coverage of the western part of North America, including British Columbia, Canada, and Alaska, United States. Although the nominal spatial resolution of GOES-R Advanced Baseline Imager (ABI) images is 2 km, pixels grow larger as the latitude of the region increases because of the larger viewing zenith angle [19].
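The growth of pixel size with latitude can be illustrated with simple viewing geometry. The sketch below is a first-order approximation under stated assumptions: a spherical Earth, a nominal 2 km pixel at the sub-satellite point, and standard values for the Earth and geostationary-orbit radii (not taken from this paper). It computes the viewing zenith angle (VZA) and how the footprint stretches with slant range and obliquity; the function name is hypothetical.

```python
import math

# ASSUMPTION: spherical Earth; standard constants, not values from the paper.
R_EARTH_KM = 6378.137   # Earth's equatorial radius
R_GEO_KM = 42164.0      # radius of the geostationary orbit from Earth's centre


def geo_view_geometry(lat_deg, lon_deg, sat_lon_deg, nadir_pixel_km=2.0):
    """Approximate view zenith angle (deg) and pixel footprint (km) for a GEO imager.

    Returns (vza_deg, along_km, across_km): 'along' is the footprint in the
    direction towards the sub-satellite point, 'across' is perpendicular to it.
    """
    lat = math.radians(lat_deg)
    dlon = math.radians(lon_deg - sat_lon_deg)
    # Great-circle (central) angle between the ground point and the sub-satellite point.
    cos_gamma = math.cos(lat) * math.cos(dlon)
    if cos_gamma <= R_EARTH_KM / R_GEO_KM:
        raise ValueError("point is not visible from the satellite")
    gamma = math.acos(cos_gamma)
    # Slant range by the law of cosines in the centre/point/satellite triangle.
    slant = math.sqrt(R_EARTH_KM**2 + R_GEO_KM**2
                      - 2 * R_EARTH_KM * R_GEO_KM * cos_gamma)
    # View zenith angle by the law of sines in the same triangle.
    vza = math.asin(R_GEO_KM * math.sin(gamma) / slant)
    # Footprint grows with slant range, and is further stretched by 1/cos(VZA)
    # in the direction towards the sub-satellite point.
    across = nadir_pixel_km * slant / (R_GEO_KM - R_EARTH_KM)
    along = across / math.cos(vza)
    return math.degrees(vza), along, across


if __name__ == "__main__":
    # GOES-17 (137.2 W) viewing points at increasing latitude along its meridian.
    for lat in (0, 30, 50, 60):
        vza, along, across = geo_view_geometry(lat, -137.2, -137.2)
        print(f"lat {lat:2d}: VZA {vza:5.1f} deg, pixel ~ {along:.1f} x {across:.1f} km")
```

For GOES-17 at 137.2° W, this crude model gives a viewing zenith angle of roughly 57° and a footprint of about 4 km by 2 km at 50° N, in line with the coarser effective resolution over high-latitude regions such as British Columbia.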

With the Advanced Baseline Imager on board as one of their primary instruments, GOES-R series satellites capture images with four times the spatial resolution of previous generations of GOES satellites [25]. GOES-R offers spectral bands including a blue band (0.45–0.49 μm), a red band (0.59–0.69 μm), near-infrared bands (0.86–1.38 μm), a SWIR band (2.23–2.27 μm), MIR bands (3.80–7.44 μm) and long-wave infrared bands (8.3–13.6 μm). Among all the spectral bands, MIR Band 7 at 3.80–4.00 μm is widely used in active fire products, mainly because the spectral radiance of burning biomass generally peaks in the MIR [26]. In addition, the thermal infrared (TIR) Band 14 at 10.8–11.6 μm and Band 15 at 11.8–12.8 μm provide good contrast between burning biomass and non-burning areas. TIR bands are also effective at distinguishing clouds from active fires, which makes them good candidates for a cloud/smoke mask. The preprocessing of the dataset and the selection of the cloud mask threshold are discussed in Section 3.1.

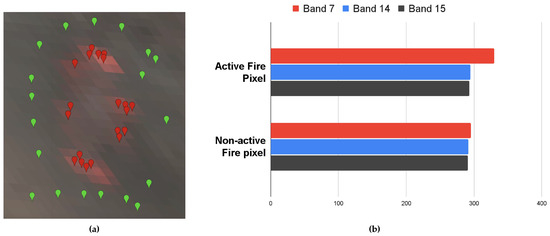

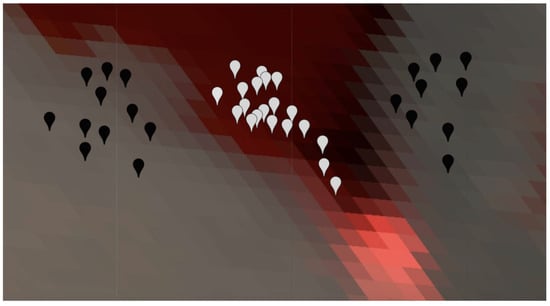

As shown in Figure 2, 20 points are sampled from active fire areas and 20 from non-active fire areas. Red pins represent samples from active fire regions, and green pins represent samples from non-active fire regions. The average values of Band 7, Band 14 and Band 15 are then calculated over the 20 samples of each group. Band 7 in the MIR has significantly higher values in active fire areas, while all three bands stay at similar levels in non-active fire areas. The higher Band 7 values of active fire pixels make them appear red in the composite image.

Figure 2.

Mean band values for burning and non-burning areas. (a) GOES-R ABI image captured at 6 p.m. on 8 September 2020, shown as a composite with R: Band 7, G: Band 14, B: Band 15; red pins represent active fire pixels and green pins represent non-active fire pixels. (b) Mean values of randomly sampled pixels within burning and non-burning areas.

2.3. VIIRS Active Fire Product

Because of the high temporal resolution of GOES-R ABI imagery, it is difficult to label every GOES-R ABI image over the full progression of an active fire. Hence, an active fire product with moderate temporal resolution but good spatial resolution is needed to serve as the training label. The VIIRS active fire product provides twice-daily active fire mapping at 375 m spatial resolution, which makes it well suited as the training label. The VIIRS active fire product is distributed as a tabular file containing the coordinates, detection time, and Band I4 and Band I5 values of active fire points. VIIRS Band I4 at 3.55–3.90 μm, which is used to detect active fires, has a wavelength similar to GOES-R Band 7, and VIIRS Band I5 at 10.5–12.4 μm, which serves as the main contrast band to I4, has a wavelength similar to GOES-R Band 14. This similarity in wavelength also helps ensure that the active fire pixels detected by the two sensors coincide.

From the tabular data, a raster is constructed using the geolocation of the active fire points and the spatial resolution at nadir. Each pixel is assigned a binary value indicating whether it contains an active fire. Because the actual spatial resolution of each pixel differs from the spatial resolution at nadir, the generated raster is sparse, as shown in Figure 3. Hence, the VIIRS active fire product serves only as a coarse label for the network.
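A minimal NumPy sketch of this rasterization step, assuming the VIIRS point coordinates have already been projected to metres (for example, a UTM zone) and a north-up grid with 375 m cells; the function name and grid parameters are illustrative, not from the paper.

```python
import numpy as np


def rasterize_points(xs, ys, x_min, y_max, n_rows, n_cols, res_m=375.0):
    """Burn point detections into a binary grid (1 = active fire, 0 = no detection).

    ASSUMPTION: xs, ys are projected point coordinates in metres.
    (x_min, y_max) is the upper-left corner of the grid; rows increase southwards.
    Points falling outside the grid are ignored.
    """
    grid = np.zeros((n_rows, n_cols), dtype=np.uint8)
    cols = np.floor((np.asarray(xs, dtype=float) - x_min) / res_m).astype(int)
    rows = np.floor((y_max - np.asarray(ys, dtype=float)) / res_m).astype(int)
    inside = (rows >= 0) & (rows < n_rows) & (cols >= 0) & (cols < n_cols)
    grid[rows[inside], cols[inside]] = 1
    return grid


if __name__ == "__main__":
    xs = [100.0, 400.0, 5000.0]   # the third point lies outside a 4 x 4 grid
    ys = [900.0, 600.0, 900.0]
    print(rasterize_points(xs, ys, x_min=0.0, y_max=1000.0, n_rows=4, n_cols=4))
```

Each point sets exactly one 375 m cell, so a detection whose true footprint is larger than that leaves neighbouring cells empty, which is one way the sparsity described above arises.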

Figure 3.

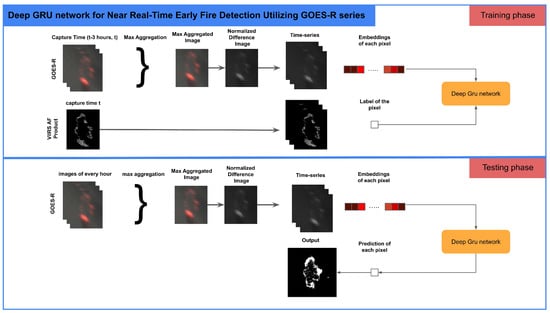

Deep GRU Network for Early Fire Detection.

3. Methods

The overall methodology of GRU-based early fire detection using GOES-R data is shown in Figure 3. The framework consists of two parts: preprocessing for embedding generation, and a Deep GRU network for active fire detection.

3.1. Dataset Generation and Preprocessing

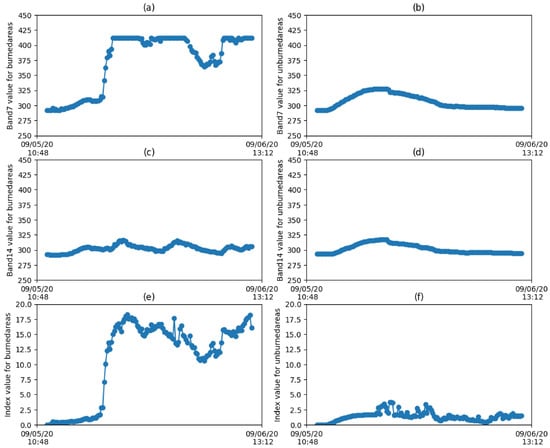

The GOES-R dataset is preprocessed using Google Earth Engine [27]. As discussed in Section 2, the MIR band is sensitive to burning biomass on the ground, and the TIR band provides good contrast to the MIR band. Thus, for each GOES-R image, the normalized difference between the MIR band (Band 7) and the TIR band (Band 14) is computed according to Equation (1) to provide a better indication of active fire. The normalized difference ranges between 0 and 1, but because Band 7 and Band 14 have similar values, as shown in Figure 4, it is close to 0. Hence, we scale the normalized difference up by a factor of 100.
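Equation (1) is not legible in this version of the text. Assuming it takes the standard normalized-difference form (B7 − B14)/(B7 + B14), with Band 7 as the MIR band and Band 14 as the TIR band, the computation plus the ×100 scaling can be sketched in NumPy as follows; the function name is hypothetical.

```python
import numpy as np


def scaled_normalized_difference(b7, b14, scale=100.0):
    """Scaled normalized difference between GOES-R ABI Band 7 (MIR) and Band 14 (TIR).

    ASSUMED form of Equation (1): scale * (b7 - b14) / (b7 + b14).
    Pixels where the denominator is zero are returned as NaN.
    """
    b7 = np.asarray(b7, dtype=float)
    b14 = np.asarray(b14, dtype=float)
    denom = b7 + b14
    with np.errstate(divide="ignore", invalid="ignore"):
        nd = np.where(denom != 0, (b7 - b14) / denom, np.nan)
    return scale * nd


if __name__ == "__main__":
    # Illustrative values only: a hot (fire-like) pixel and a background pixel.
    b7 = np.array([330.0, 295.0])
    b14 = np.array([290.0, 290.0])
    print(scaled_normalized_difference(b7, b14))
```

Without the scaling factor, these two example values would be about 0.065 and 0.009, which illustrates why the raw index sits close to 0.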

Figure 4.

Time series of Band 7 (a), Band 14 (c) and the normalized difference (e) for a burned area, and of Band 7 (b), Band 14 (d) and the normalized difference (f) for an unburned area. Study area: Creek Fire, California, from 5 September 2020 12:00 to 6 September 2020 12:00.

To provide insight into the effect of the normalized difference, points from active fire and non-active fire areas are randomly sampled separately to generate time series over one day. As shown in Figure 4, the time series of Band 7, Band 14 and the proposed normalized difference for burned and unburned areas clearly show that the normalized difference helps distinguish active fire pixels from the background.

Furthermore, Band 15 (λ15 = 11.8–12.8 μm) serves as the cloud and smoke mask. The threshold is obtained by sampling cloud/smoke and non-cloud/smoke regions, as shown in Figure 5, with 20 samples taken from each type of region. The minimum Band 15 value of the non-cloud/smoke regions is 280, and the maximum Band 15 value of the cloud/smoke regions is 250. In our experiments, a threshold of 280 gave the best masking result visually. Hence, as shown in Equation (2), all pixels with a Band 15 value larger than 280 are preserved in the image.
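Equation (2) is not legible in this version of the text. A minimal NumPy sketch of the masking rule as described (keep pixels whose Band 15 value is strictly larger than 280) is shown below; representing masked pixels as NaN is our assumption, not something the text specifies, and the function name is hypothetical.

```python
import numpy as np

CLOUD_SMOKE_THRESHOLD = 280.0  # Band 15 threshold selected from the sampled regions


def apply_cloud_smoke_mask(image, b15, threshold=CLOUD_SMOKE_THRESHOLD):
    """Keep pixels whose Band 15 value is strictly greater than `threshold`.

    ASSUMPTION: masked pixels are set to NaN so that later NaN-aware steps
    can ignore them. Returns (masked_image, keep_mask).
    """
    image = np.asarray(image, dtype=float)
    keep = np.asarray(b15, dtype=float) > threshold
    return np.where(keep, image, np.nan), keep


if __name__ == "__main__":
    b15 = np.array([[290.0, 250.0], [281.0, 280.0]])
    image = np.array([[5.0, 9.0], [3.0, 7.0]])
    masked, keep = apply_cloud_smoke_mask(image, b15)
    print(keep)
    print(masked)
```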

Figure 5.

Sampling of Smoke/Cloud and Non-Smoke/Cloud Regions, Black Pins represent Non-Smoke/Cloud Regions and White Pins represent Smoke and Cloud.

Even though GOES-R has high temporal resolution, it is difficult to find GOES-R and VIIRS image pairs with exactly matching timestamps. Because clouds and smoke are highly dynamic, a difference of several minutes can lead to a very different image. Applying max aggregation to all images within 3 h before the capture time of the VIIRS image effectively reduces the interference of clouds and smoke, so that the active fire pixels in the two images match. The aggregated images therefore serve as the input data source.
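The 3 h max aggregation can be sketched as a pixel-wise maximum over a time window. Assumptions (also labelled in the code): acquisition times are given in minutes, the window is half-open (an image exactly 3 h before the VIIRS time is excluded), and masked pixels are stored as NaN so that `np.nanmax` skips them; the function name is hypothetical.

```python
import numpy as np


def max_aggregate_before(stack, times_min, ref_time_min, window_min=180.0):
    """Pixel-wise maximum of all images acquired in (ref_time - window, ref_time].

    stack: (T, H, W) array of GOES-R images; times_min: acquisition times in minutes.
    ASSUMPTION: masked (cloud/smoke) pixels are NaN and are ignored by np.nanmax.
    """
    stack = np.asarray(stack, dtype=float)
    times = np.asarray(times_min, dtype=float)
    selected = (times <= ref_time_min) & (times > ref_time_min - window_min)
    if not selected.any():
        raise ValueError("no images fall inside the aggregation window")
    return np.nanmax(stack[selected], axis=0)


if __name__ == "__main__":
    # Three tiny 1 x 2 images; the first is older than 3 h before the reference time.
    stack = np.array([[[9.0, 9.0]], [[1.0, 5.0]], [[3.0, np.nan]]])
    print(max_aggregate_before(stack, times_min=[0, 60, 200], ref_time_min=200))
```

Taking the maximum keeps any time step at which a pixel looked hot, so a fire briefly hidden by passing cloud or smoke in one image is still retained from another.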

However, the main challenge is the coarse resolution of GOES-R images. Because the training label has 375 m spatial resolution, GOES-R images are resampled bilinearly from 2 km to 375 m spatial resolution. Because the upsampled GOES-R images still contain little texture information, we propose pixel-level classification instead of object-level classification.
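Bilinear resampling on a regular grid is separable: interpolate linearly along one axis, then along the other. The NumPy sketch below illustrates the 2 km to 375 m upsampling (a factor of about 5.33) under the assumptions of pixel-centre alignment and edge clamping. In practice, Earth Engine images can be resampled with `resample('bilinear')`; the helper name here is hypothetical.

```python
import numpy as np


def bilinear_upsample(img, src_res=2000.0, dst_res=375.0):
    """Bilinearly resample a 2-D grid from src_res to dst_res (same units, e.g. metres).

    ASSUMPTIONS: pixel centres are aligned with half-pixel offsets; values beyond
    the outermost source pixel centres are clamped to the edge values.
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    factor = src_res / dst_res
    out_h, out_w = int(round(h * factor)), int(round(w * factor))
    # Output pixel centres expressed in source pixel-index coordinates.
    ys = (np.arange(out_h) + 0.5) / factor - 0.5
    xs = (np.arange(out_w) + 0.5) / factor - 0.5
    src_y, src_x = np.arange(h), np.arange(w)
    # Separable linear interpolation: along x for every source row, then along y.
    tmp = np.array([np.interp(xs, src_x, row) for row in img])        # (h, out_w)
    out = np.array([np.interp(ys, src_y, col) for col in tmp.T]).T    # (out_h, out_w)
    return out


if __name__ == "__main__":
    coarse = np.array([[290.0, 300.0], [295.0, 320.0]])
    fine = bilinear_upsample(coarse)
    print(coarse.shape, "->", fine.shape)
```

Interpolation only redistributes the coarse values, so the upsampled grid gains sampling density but no new texture, which is the limitation noted above.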

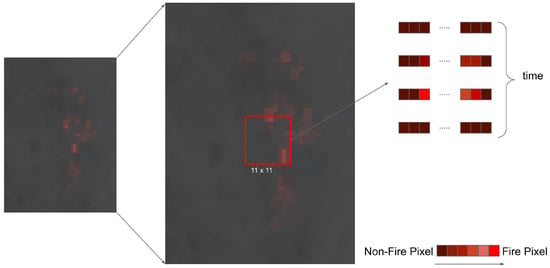

To classify each pixel better, spatial information must be encoded into the embedding of each pixel. Generating the embedding of each pixel involves two steps, as shown in Figure 6. (1) First, an 11 by 11 image patch is taken and flattened into a vector. We set the patch size to 11 according to the number of pixels of the upsampled image that lie within the distance between the centers of two pixels of the original low-resolution image. This vector serves as the embedding of the center pixel of the patch. (2) Second, the vectors for the same center pixel at different timestamps are stacked to form a time series. The resulting time series of vectors is used as the input to the model.
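A NumPy sketch of the two embedding steps for one center pixel, assuming a single-band (T, H, W) stack of upsampled images and edge padding at image borders (the text does not say how borders are handled); the function name is hypothetical. Row-major flattening places the center pixel at index 60 of the 121-element vector, matching the column layout described for Figure 7.

```python
import numpy as np


def patch_time_series(stack, row, col, patch=11):
    """Time series of flattened patches centred on (row, col).

    stack: (T, H, W) array of upsampled single-band images, one per timestamp.
    Returns a (T, patch * patch) array; with row-major flattening the centre
    pixel sits at index patch * patch // 2 (60 for an 11 x 11 patch).
    """
    stack = np.asarray(stack, dtype=float)
    if patch % 2 != 1:
        raise ValueError("patch size must be odd so that it has a centre pixel")
    r = patch // 2
    # ASSUMPTION: replicate edge values so that patches near the border exist.
    padded = np.pad(stack, ((0, 0), (r, r), (r, r)), mode="edge")
    window = padded[:, row:row + patch, col:col + patch]  # (T, patch, patch)
    # Step 1: flatten each patch; step 2: keep the vectors stacked along time.
    return window.reshape(stack.shape[0], -1)


if __name__ == "__main__":
    stack = np.random.default_rng(0).random((20, 64, 64))  # 20 timestamps
    emb = patch_time_series(stack, row=30, col=12)
    print(emb.shape)  # one 121-element vector per timestamp
```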

Figure 6.

Preprocessing of the GOES-R imagery to generate time-series embeddings.

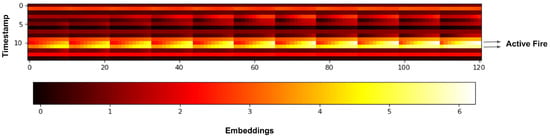

As shown in Figure 7, the time series of embeddings shows the progression of the wildfire. Each row represents a flattened 11 by 11 image patch. The neighboring pixels above the center pixel are located in the left columns, the neighboring pixels below the center pixel are in the right columns, and the center pixel is located at column 60. Each column represents the fluctuation of one pixel's value over time. When the wildfire progresses from the bottom right to the top left of the image patch, the highest value in the neighborhood moves from right to left in the embedding: within each group of 11 pixels in the embedding map, the bright spots shift from right to left. In addition, the right columns tend to contain more high-value pixels than the left columns, which indicates that the wildfire is in the bottom part of the image patch. In particular, when the highest value is located at the center pixel, the center pixel is more likely to be classified as a fire pixel. A time-series model can therefore leverage the fluctuation of the values of the center pixel and its neighboring pixels at all previous timestamps to classify the center pixel.

Figure 7.

Time series of embeddings. The x-axis represents the embedded vector of each pixel and its neighborhood pixels, with the corresponding label in the last column; the y-axis represents the timestamps. The figure shows the embedding for the first 20 timestamps of one image patch.

3.2. Deep GRU Network

3.2.1. Gated Recurrent Unit

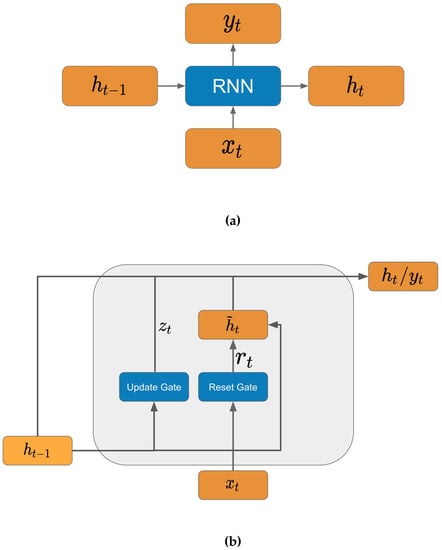

To process the time series of embeddings, we propose to use a recurrent neural network (RNN). As shown in Figure 8, at timestamp $t$, the basic architecture of a recurrent neural network consists of the input $x_t$, the output $y_t$ and the hidden state $h_t$. As in a feed-forward network, the input at each timestamp is fed into the network through the weights and biases of the hidden neurons. A recurrent neural network, however, also uses the hidden state from the previous timestamp and combines it with the input to generate the output. Owing to this sequential architecture, recurrent neural networks have become the standard for processing time-series data, because the output at each timestamp depends on the hidden states of the previous timestamps.

Figure 8.

Basic Architecture of Recurrent neural network (a) and Architecture of Gated Recurrent Unit (b).

Among RNN variants, the Gated Recurrent Unit (GRU) [24] and Long Short-Term Memory (LSTM) [28] are the best known; both mitigate problems of the classical recurrent neural network such as vanishing gradients and can retain long-term memory. As shown in Figure 8, a GRU has two major components: the reset gate and the update gate. The reset gate determines how much information from the previous hidden state is forgotten. The outputs of the reset gate $r_t$ and the update gate $z_t$ are calculated in Equations (3) and (4), in which $W_r$ and $W_z$ are the weight matrices for the input and $U_r$ and $U_z$ are the weight matrices for the hidden state.

The output of the GRU is calculated as in Equations (5) and (6), where $\phi$ is the activation function of the GRU, and $W$ and $U$ are the weights used to calculate the intermediate (candidate) state $\tilde{h}_t$. The output of the reset gate $r_t$ is multiplied element-wise with the hidden state of the previous timestamp $h_{t-1}$. When $r_t$ is close to 0, the previous hidden state is effectively dropped in calculating the intermediate output. The output of the update gate $z_t$ then defines the weights given to the previous output and to the intermediate output in the output of the GRU at the current timestamp.
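Equations (3) to (6) did not survive extraction. The sketch below writes out the standard GRU update of [24] in NumPy with randomly initialised weights, purely for illustration; it is not the authors' code. Conventions differ between references: some swap the roles of $z_t$ and $1 - z_t$, and TensorFlow's `tf.keras.layers.GRU` by default (`reset_after=True`) applies the reset gate after the recurrent matrix product.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x_t, h_prev, p):
    """One GRU step in the convention of Cho et al. (2014), with phi = tanh.

    r_t  = sigmoid(W_r x_t + U_r h_prev + b_r)       reset gate
    z_t  = sigmoid(W_z x_t + U_z h_prev + b_z)       update gate
    h~_t = tanh(W x_t + U (r_t * h_prev) + b)        candidate (intermediate) state
    h_t  = z_t * h_prev + (1 - z_t) * h~_t           new hidden state = output
    """
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])
    h_cand = np.tanh(p["W"] @ x_t + p["U"] @ (r * h_prev) + p["b"])
    return z * h_prev + (1.0 - z) * h_cand


def init_params(input_dim, hidden_dim, seed=0):
    """Random illustrative weights (not trained values)."""
    rng = np.random.default_rng(seed)
    p = {}
    for suffix in ("_r", "_z", ""):
        p["W" + suffix] = rng.normal(0.0, 0.1, (hidden_dim, input_dim))
        p["U" + suffix] = rng.normal(0.0, 0.1, (hidden_dim, hidden_dim))
        p["b" + suffix] = np.zeros(hidden_dim)
    return p


if __name__ == "__main__":
    params = init_params(input_dim=121, hidden_dim=8)
    h = np.zeros(8)
    for x in np.random.default_rng(1).normal(size=(20, 121)):  # 20 timestamps
        h = gru_step(x, h, params)
    print(h)
```

Setting the update-gate bias very high drives $z_t$ towards 1, in which case the unit simply carries $h_{t-1}$ forward unchanged; this is the mechanism that lets a GRU hold information across many timestamps.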

LSTM is a more general gated unit than GRU, with two gates that GRU lacks: an output gate and a forget gate. The output gate defines the fraction of the cell output that is passed to the hidden state for the next timestamp, whereas in a GRU the output is simply the new hidden state. The forget gate defines whether to erase the previous memory, whereas in a GRU this quantity is calculated directly as 1 minus the output of the update gate. Because fewer gates are involved in the calculation, a GRU has roughly three quarters of the parameters of an LSTM with the same input and hidden sizes. GRU is therefore computationally cheaper than LSTM and can provide similar performance [29]. Because early fire detection is a time-critical application, we choose GRU as the basic unit of the network to reduce computation time in the training and prediction phases.
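A parameter count makes the size difference concrete. In the classical formulation, a GRU layer has three weight blocks (reset gate, update gate, candidate state) and an LSTM layer has four (input, forget and output gates plus the candidate cell), each with input weights, recurrent weights and a bias. The sketch assumes an input vector of 121 values (one flattened 11 × 11 patch) and 256 hidden units; the helper names are our own.

```python
def rnn_params(input_dim: int, hidden_dim: int, n_blocks: int) -> int:
    """Parameters of a gated RNN layer with n_blocks weight blocks.

    Each block has input weights (hidden x input), recurrent weights
    (hidden x hidden) and one bias vector (hidden).
    """
    return n_blocks * (hidden_dim * (input_dim + hidden_dim) + hidden_dim)


def gru_params(input_dim: int, hidden_dim: int) -> int:
    return rnn_params(input_dim, hidden_dim, 3)  # reset gate, update gate, candidate


def lstm_params(input_dim: int, hidden_dim: int) -> int:
    return rnn_params(input_dim, hidden_dim, 4)  # input, forget, output gates + candidate


if __name__ == "__main__":
    d, h = 121, 256  # ASSUMED: 11 x 11 patch vector, 256 hidden units
    g, l = gru_params(d, h), lstm_params(d, h)
    print(g, l, g / l)
```

This gives 290,304 parameters for the GRU layer and 387,072 for the LSTM layer, a ratio of exactly 0.75. Framework implementations can differ slightly; for example, `tf.keras.layers.GRU` with its default `reset_after=True` keeps two bias vectors per block, giving 3 × (h(d + h) + 2h) parameters.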

3.2.2. Deep GRU Network

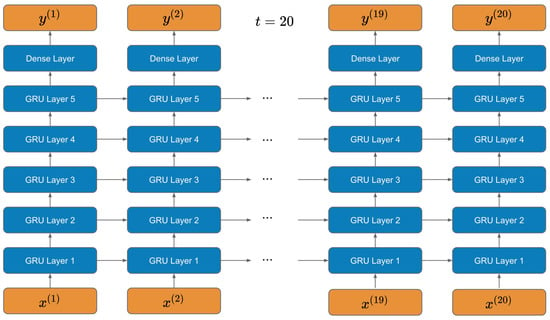

In this section, we propose a Deep GRU network to make the best use of the time-series information in all the images. As shown in Figure 9, the network is implemented as a six-layer architecture. We trained a set of models with different hyperparameters, and the parameters shown in Table 3 gave the best result. The network consists of five GRU layers in a many-to-many architecture followed by one dense layer that generates the output.

Figure 9.

Deep GRU network architecture.

Table 3.

Detail Structure of Deep GRU Network.

As shown in Table 3, the network takes a sequence of 20 pixel vectors as input, one for each of 20 different timestamps. Each vector corresponds to one image patch, consisting of one center pixel and all its neighboring pixels. The proposed deep GRU network consists of five GRU layers with 256 hidden units each. At the output, a single dense layer with a sigmoid activation function is applied across all timestamps to generate the classification of the center pixel.
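To make the data flow concrete, the NumPy sketch below runs an untrained five-layer, many-to-many GRU stack over a (20, 121) input and applies one shared sigmoid dense layer at every timestamp. Assumptions: random weights, two sigmoid outputs to match the one-hot fire/non-fire label, and the classical GRU update; Table 3 may specify details not reproduced here, and all names are hypothetical. In TensorFlow/Keras, a comparable structure would stack `tf.keras.layers.GRU(256, return_sequences=True)` layers and wrap a `tf.keras.layers.Dense` layer in `tf.keras.layers.TimeDistributed`.

```python
import numpy as np


def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def _init_layer(rng, input_dim, hidden_dim):
    # Random weights for the [reset, update, candidate] blocks, stacked on axis 0.
    return {"W": rng.normal(0.0, 0.05, (3, hidden_dim, input_dim)),
            "U": rng.normal(0.0, 0.05, (3, hidden_dim, hidden_dim)),
            "b": np.zeros((3, hidden_dim))}


def gru_layer(seq, p):
    """Run one GRU layer over a (T, d) sequence and return all T hidden states."""
    h = np.zeros(p["U"].shape[1])
    states = []
    for x in seq:
        r = _sigmoid(p["W"][0] @ x + p["U"][0] @ h + p["b"][0])
        z = _sigmoid(p["W"][1] @ x + p["U"][1] @ h + p["b"][1])
        cand = np.tanh(p["W"][2] @ x + p["U"][2] @ (r * h) + p["b"][2])
        h = z * h + (1.0 - z) * cand
        states.append(h)
    return np.stack(states)  # (T, hidden): many-to-many output


def deep_gru_forward(seq, n_layers=5, hidden=256, n_out=2, seed=0):
    """Untrained forward pass: n_layers stacked GRU layers + time-distributed dense."""
    rng = np.random.default_rng(seed)
    x = np.asarray(seq, dtype=float)
    for _ in range(n_layers):
        x = gru_layer(x, _init_layer(rng, x.shape[1], hidden))
    # One dense layer whose weights are shared across all timestamps.
    w_out = rng.normal(0.0, 0.05, (n_out, hidden))
    b_out = np.zeros(n_out)
    return _sigmoid(x @ w_out.T + b_out)  # (T, n_out) per-timestamp probabilities


if __name__ == "__main__":
    seq = np.random.default_rng(1).normal(size=(20, 121))  # 20 timestamps x 121 values
    probs = deep_gru_forward(seq)
    print(probs.shape)
```

Because every layer returns its full sequence, the classifier produces a prediction at each of the 20 timestamps, and each prediction can only depend on the current and earlier inputs.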

3.2.3. Loss Function

The training label is in one-hot format, with two classes (fire and non-fire) for each center pixel. Because non-fire pixels far outnumber fire pixels, Focal loss [30] is used as the loss function. Focal loss, shown in Equation (7), is widely used for classification on highly imbalanced datasets.

Here, $p_t$ in Equation (8) is the probability of a pixel being classified as a fire or non-fire pixel, and $\gamma$ is the focusing parameter in the modulating factor $(1 - p_t)^{\gamma}$. The modulating factor decreases to nearly zero for well-classified samples, which in this imbalanced dataset are mostly samples from the dominant class, i.e., the non-fire pixels. This behavior down-weights the well-classified samples. For fire pixels, whose predicted probabilities are low, the modulating factor is close to 1 and the loss is almost unchanged. $\alpha$ is the weighting factor, which gives different weights to the two classes. Both the modulating factor and the weighting factor reduce the contribution to the loss that would otherwise accumulate from the large number of easy samples.
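Equations (7) and (8) are not legible in this version of the text. The sketch below implements the binary focal loss in the form given by Lin et al. [30], FL(p_t) = −α_t (1 − p_t)^γ log(p_t). The defaults α = 0.25 and γ = 2 are the values recommended in [30], not necessarily those used in this study, and the function name is hypothetical.

```python
import numpy as np


def binary_focal_loss(y_true, p_fire, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean focal loss for binary fire (1) / non-fire (0) labels.

    p_t     = p      if y == 1 else 1 - p
    alpha_t = alpha  if y == 1 else 1 - alpha
    FL      = -alpha_t * (1 - p_t) ** gamma * log(p_t)
    ASSUMPTION: alpha and gamma defaults follow Lin et al., not this paper.
    """
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_fire, dtype=float), eps, 1.0 - eps)
    p_t = np.where(y == 1, p, 1.0 - p)
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


if __name__ == "__main__":
    # An easy non-fire pixel, a hard non-fire pixel and a missed fire pixel.
    print(binary_focal_loss([0, 0, 1], [0.01, 0.9, 0.1]))
```

With γ = 0 and α = 0.5 the loss reduces to half the ordinary cross-entropy, and for an easy non-fire pixel predicted at p = 0.01 the γ = 2 loss is 10⁻⁴ times its γ = 0 value, which is the down-weighting described above.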

3.3. Testing Stage

Preprocessing and Inferencing

To meet near-real-time requirements, the input data for inference are slightly modified: instead of the 3 h aggregation used for training, GOES-R images are max-aggregated over each hour. As a result, the interference of smoke and clouds can become stronger because fewer images are aggregated together. To reduce misclassification caused by smoke and clouds, a threshold is applied to each pixel. Based on our experiments, the best performance for mid-latitude and high-latitude regions is obtained with a threshold of 4. For low-latitude regions such as the Amazon forest in Brazil, the threshold is set to 1.
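A sketch of the inference-time input preparation: hourly max aggregation followed by a region-dependent threshold. The text does not state which quantity the threshold is applied to, whether the comparison is strict, or where the latitude classes are divided, so this sketch assumes the threshold applies to the aggregated (scaled normalized-difference) value with `>=`, sets sub-threshold pixels to 0, and lets the caller choose the region class; all names are hypothetical.

```python
import numpy as np

# Thresholds reported in the text. ASSUMPTIONS: they apply to the aggregated
# input value, pixels below them are set to 0, and the caller picks the class.
REGION_THRESHOLDS = {"low": 1.0, "mid": 4.0, "high": 4.0}


def hourly_inputs(stack, times_min, region):
    """Max-aggregate a (T, H, W) stack into hourly bins and apply the threshold.

    Returns (hours, images): hours[i] is the hour index (time // 60) of images[i].
    """
    stack = np.asarray(stack, dtype=float)
    hours = np.floor(np.asarray(times_min, dtype=float) / 60.0).astype(int)
    threshold = REGION_THRESHOLDS[region]
    out_hours, out_images = [], []
    for hour in np.unique(hours):
        aggregated = np.nanmax(stack[hours == hour], axis=0)
        # Zero out pixels below the threshold (NaN compares False, so it becomes 0).
        out_images.append(np.where(aggregated >= threshold, aggregated, 0.0))
        out_hours.append(int(hour))
    return out_hours, np.stack(out_images)


if __name__ == "__main__":
    # Four 15-minute acquisitions spread over two hours, each a 1 x 2 image.
    stack = np.array([[[3.0, 5.0]], [[1.0, 6.0]], [[4.0, 2.0]], [[0.0, 1.0]]])
    print(hourly_inputs(stack, times_min=[0, 15, 60, 75], region="mid"))
```

A lower threshold for low-latitude regions keeps weaker signals, which is consistent with the smaller pixels there; the same code covers both cases by switching the region class.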

3.4. Setup

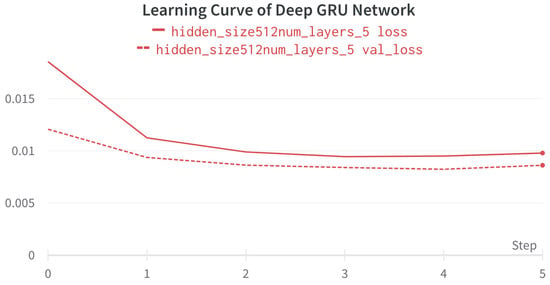

All satellite images are collected from Google Earth Engine [27], and the deep learning model is developed using TensorFlow [31]. The training dataset is composed of 4 large-scale wildfires in California in 2020. The test dataset consists of max-aggregated images from 36 study areas across North America and South America, as shown in Figure 1. For the detection time evaluation, we record only the earliest time at which fire pixels appear in the output images. For the accuracy assessment, the errors of commission and omission on the burned area for three study areas in North America are discussed in Section 4. After preprocessing, a total of 60,000 samples, each a time series of pixel vectors, are used in training. We trained the network on an NVIDIA Tesla K80 GPU from Google Colab with a minibatch size of 128. Using the Adam optimizer with learning rate , the network converges within 10 epochs. The training loss curve is shown in Figure 10.

Figure 10.

Learning Curve of proposed Deep GRU model with training loss and validation loss.

3.5. Accuracy Assessment

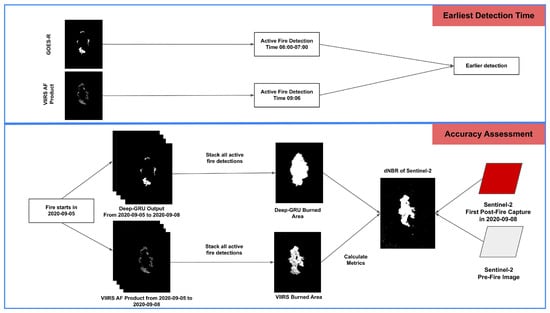

The assessment of the network is based on two factors: (1) the earliest detection time and (2) the accuracy of the detected burned areas, as shown in Figure 11.

Figure 11.

Evaluation Diagrams for Earliest Detection Time and Burned Area Accuracy Assessment.

For the earliest detection time, the proposed method is compared with the FIRMS VIIRS fire product, which provides active fire detections twice a day. The earliest detection time of the proposed method is the earliest time at which fire pixels appear in the output images.

For the accuracy assessment on burned areas, the differenced Normalized Burn Ratio (dNBR) of Sentinel-2 images serves as the reference. The dNBR is calculated as the difference in Normalized Burn Ratio between the pre-fire image and the post-fire image. To evaluate the accuracy for early-stage wildfires, the post-fire image is the earliest Sentinel-2 image after the wildfire started, and the pre-fire image is the average of Sentinel-2 images over the 3 months before the wildfire. The burned area is then obtained by thresholding the dNBR. To match the spatial resolution of the output of the proposed method, the Sentinel-2 images are downsampled to 375 m. In this experiment, we use threshold .
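A NumPy sketch of the dNBR reference, using the standard definitions NBR = (NIR − SWIR)/(NIR + SWIR) and dNBR = NBR_pre − NBR_post. Assumptions: Sentinel-2 NIR (B8 or B8A) and SWIR (B12) reflectance arrays are already co-registered, the pre-fire reference is the pixel-wise mean of the pre-fire scenes, and the burned-area threshold is passed in because its value is not given in this version of the text. The 0.1 used in the example is a placeholder, the 375 m downsampling step is omitted, and the function names are hypothetical.

```python
import numpy as np


def nbr(nir, swir):
    """Normalized Burn Ratio: (NIR - SWIR) / (NIR + SWIR)."""
    nir = np.asarray(nir, dtype=float)
    swir = np.asarray(swir, dtype=float)
    return (nir - swir) / (nir + swir)


def burned_mask(pre_nir_stack, pre_swir_stack, post_nir, post_swir, threshold):
    """Burned-area mask from dNBR = NBR_pre - NBR_post.

    ASSUMPTIONS: Sentinel-2 NIR (B8 or B8A) and SWIR (B12) reflectances,
    co-registered; the pre-fire reference is the mean of the pre-fire scenes;
    `threshold` must be supplied by the caller.
    """
    pre = nbr(np.mean(pre_nir_stack, axis=0), np.mean(pre_swir_stack, axis=0))
    post = nbr(post_nir, post_swir)
    dnbr = pre - post  # burning lowers NIR and raises SWIR, so dNBR rises
    return dnbr > threshold, dnbr


if __name__ == "__main__":
    pre_nir = np.full((3, 1, 2), 0.30)    # three pre-fire scenes, 1 x 2 pixels
    pre_swir = np.full((3, 1, 2), 0.15)
    post_nir = np.array([[0.15, 0.30]])   # left pixel burned, right pixel unchanged
    post_swir = np.array([[0.25, 0.15]])
    mask, dnbr = burned_mask(pre_nir, pre_swir, post_nir, post_swir, threshold=0.1)
    print(mask, dnbr)
```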

Besides the proposed method, the FIRMS VIIRS fire product and the GOES-R fire product are tested on the same study areas for comparison. The GOES-R active fire product serves as the baseline of the accuracy assessment because it is based on the same data source. Because all these products have lower spatial resolution than Sentinel-2 images, the dNBR of Sentinel-2 images is used as the ground truth. Four evaluation metrics are used. Following the evaluation in [7], the independent error of commission and the independent error of omission are evaluated for direct burned area mapping. An error of commission is a false alarm: the Sentinel-2 image patch corresponding to a fire pixel in the low-resolution image contains no burned area. Similarly, an error of omission occurs when a Sentinel-2 image patch contains burned area but the low-resolution image fails to detect it. In addition, the classical image segmentation metrics F1 score and Intersection over Union (IoU) are provided, as defined in Equations (11) and (12), where TP denotes true positive detections, FP false positive detections and FN false negative detections.
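Equations (11) and (12) are not legible in this version of the text. The sketch below uses the standard definitions F1 = 2TP/(2TP + FP + FN) and IoU = TP/(TP + FP + FN), together with the common ratio forms of commission error, FP/(TP + FP), and omission error, FN/(TP + FN). The independent-error formulation of [7] may differ in detail, so treat the latter two as an assumption; the function name is hypothetical.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Commission/omission errors, F1 score and IoU from TP, FP and FN counts.

    ASSUMPTION: commission = FP / (TP + FP) and omission = FN / (TP + FN).
    """
    commission = fp / (tp + fp) if tp + fp else 0.0   # share of detections that are false alarms
    omission = fn / (tp + fn) if tp + fn else 0.0     # share of reference burned area missed
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"commission": commission, "omission": omission, "f1": f1, "iou": iou}


if __name__ == "__main__":
    print(detection_metrics(tp=8, fp=2, fn=2))
```

F1 and IoU are monotonically related (F1 = 2·IoU/(1 + IoU)), so they always rank methods in the same order; reporting both mainly aids comparison with other studies.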

4. Results

4.1. Earliest Detection of Active Fires

In this section, the detection time of the proposed method is compared with that of the FIRMS VIIRS active fire product. Because the proposed method uses the max-aggregated image over each hour as its input, its detection time is reported as the time period covered by the input image.

As shown in Table 4, the proposed method using GOES-R time series detects 24 of the 38 wildfires earlier than VIIRS, 6 of 38 at a similar time to VIIRS and 8 of 38 later than VIIRS. In total, 78.95% of the wildfires (30 of 38) are detected earlier than or at a similar time to VIIRS with the proposed method. Regionally, most of the wildfires at all locations show earlier or similar detections, and the gain in detection time is particularly pronounced in the United States and Brazil.
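The shares in Table 4 can be checked with a few lines of arithmetic; the counts are those reported above and the helper name is our own.

```python
def detection_shares(earlier: int, similar: int, later: int) -> dict:
    """Percentages (rounded to 2 decimals) of wildfires in each timing category."""
    total = earlier + similar + later

    def pct(n: int) -> float:
        return round(100.0 * n / total, 2)

    return {"earlier": pct(earlier), "similar": pct(similar), "later": pct(later),
            "earlier_or_similar": pct(earlier + similar)}


if __name__ == "__main__":
    print(detection_shares(earlier=24, similar=6, later=8))
```

This gives 63.16% earlier, 15.79% similar and 21.05% later detections; 78.95% is the share of wildfires detected earlier than or at a similar time to VIIRS.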

Table 4.

Comparison of FIRMS detection time and Deep GRU network detection time over all study areas; ‘+1’ means that the active fire is detected on the day after the start date. Wildfires are named after the fire names used by the California Department of Forestry and Fire Protection, the fire numbers of the British Columbia Wildfire Service, or the fire numbers of the 2020 Major Amazon Fires Tracker developed by InfoAmazonia; otherwise, they are named after the location where the wildfire occurred.

However, some wildfires are still detected later than by VIIRS. Of these 8 late detections, 6 are located in Canada and 2 in the United States, which indicates that late detections occur mostly in high-latitude regions such as Canada.

4.2. Accuracy Assessment on Burned Areas

In this section, the accuracy of the proposed GRU method is assessed by comparing the detected burned areas with burned areas derived from Sentinel-2 MSI data, which serve as the ground truth. Since the proposed method detects active fires rather than burned areas, the burned area is obtained by accumulating all active fire pixels detected before the acquisition time of the Sentinel-2 image.
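Both sides of this comparison can be sketched in a few lines. The ground truth uses the standard Normalized Burn Ratio, NBR = (NIR − SWIR2)/(NIR + SWIR2), and dNBR = NBR_pre − NBR_post; the band choice (for example Sentinel-2 B8A and B12) and the burned threshold of 0.1 are illustrative assumptions, not the values used in this work. The detection side is the union of all active-fire masks acquired before the Sentinel-2 capture time. All function names are hypothetical.

```python
from datetime import datetime

import numpy as np


def nbr(nir, swir2) -> np.ndarray:
    """Normalized Burn Ratio: (NIR - SWIR2) / (NIR + SWIR2)."""
    nir = np.asarray(nir, dtype=float)
    swir2 = np.asarray(swir2, dtype=float)
    total = nir + swir2
    # Pixels with zero total reflectance (e.g. no-data) get NBR = 0.
    return np.divide(nir - swir2, total, out=np.zeros_like(total), where=total != 0)


def burned_mask(pre_nir, pre_swir2, post_nir, post_swir2, threshold=0.1):
    """Ground-truth burned mask from dNBR = NBR_pre - NBR_post (illustrative threshold)."""
    dnbr = nbr(pre_nir, pre_swir2) - nbr(post_nir, post_swir2)
    return dnbr > threshold


def accumulate(masks, times, cutoff: datetime) -> np.ndarray:
    """Union of all active-fire masks acquired before the Sentinel-2 cutoff."""
    acc = np.zeros(np.shape(masks[0]), dtype=bool)
    for mask, t in zip(masks, times):
        if t < cutoff:
            acc |= np.asarray(mask, dtype=bool)
    return acc


# Two pixels: the first burns (NBR drops from 0.6 to -0.2), the second is unchanged.
gt = burned_mask([0.4, 0.4], [0.1, 0.1], [0.2, 0.4], [0.3, 0.1])
# Three hypothetical hourly detections; only the first two precede the cutoff.
fires = [[True, False, False], [False, True, False], [False, False, True]]
hours = [datetime(2020, 9, 8, h) for h in (10, 11, 13)]
pred = accumulate(fires, hours, cutoff=datetime(2020, 9, 8, 12))
print(gt, pred)
```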

4.2.1. The Creek Fire, California, US

The Creek Fire, currently the fourth largest fire in California’s history, started on 4 September 2020. This tremendous wildfire burned 153,738 ha, mostly within the Sierra National Forest. Notably, from 4 to 9 September 2020, it expanded by 20,000 to 50,000 acres (roughly 8100 to 20,200 ha) each day. This rate of expansion underscores that early detection and rapid progression mapping of wildfires are time-critical.

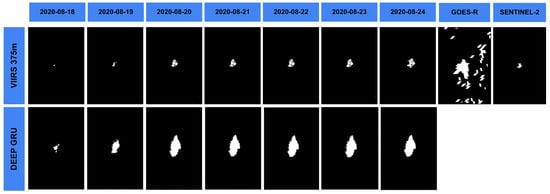

As shown in Figure 12, the burned area progression maps generated from the VIIRS 375 m active fire product and from the proposed method are compared for each day after the wildfire emerged. For evaluation, the earliest available Sentinel-2 image was acquired at 17:30 GMT on 8 September 2020. Each VIIRS burned area map is generated by accumulating the available daytime and nighttime acquisitions (around 08:30 GMT and 20:30 GMT) and overlaying them on the burned areas of previous days. For the proposed Deep GRU network, the hourly outputs are accumulated and overlaid on all previous binary output maps. For the baseline, the GOES-R active fire product points detected before the Sentinel-2 acquisition time are accumulated over the same area of interest. Quantitatively, the proposed method yields the lowest error of omission and an error of commission comparable to VIIRS for the Creek Fire, as shown in Table 5. At the same time, the proposed method outperforms the baseline in F1 score and mIoU and achieves scores comparable to VIIRS, whose active fire points serve as the training labels. This indicates that the proposed method locates active fires more precisely than the GOES-R Active Fire Product in this study region.

Figure 12.

Burned area progression maps of the VIIRS 375 m active fire product and the proposed method from 5 to 8 September 2020, until the Sentinel-2 image became available. The accumulated GOES-R active fire product from 5 to 8 September 2020 serves as the baseline.

Table 5.

Accuracy evaluation of burned area maps from the VIIRS active fire product, the GOES-R active fire product, the proposed Deep-GRU network based on GOES-R images, and the Support Vector Machine (SVM) and Random Forest classifiers using GOES-R images.

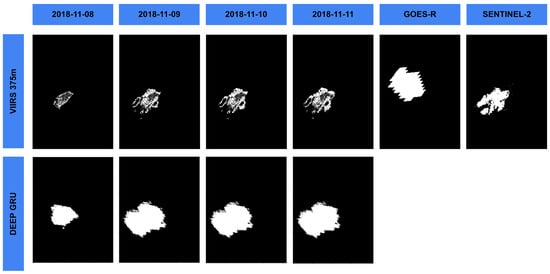

4.2.2. The Camp Fire, California, US

The Camp Fire is the deadliest and most destructive wildfire in California’s history. It occurred in Butte County, Northern California, burned 62,052.8 ha of land and lasted from 8 to 25 November 2018.

Figure 13 shows that the wildfire progressed extremely fast on the first day. The earliest Sentinel-2 image was acquired on 11 November 2018. Compared with the Sentinel-2 dNBR image, the VIIRS active fire points underestimate the burned area because of the reconstruction error introduced when generating the VIIRS active fire detection raster, as described in Section 2.3. This leads to a high error of omission, as shown in Table 5. In contrast, the proposed method overestimates the burned area, which results in a relatively high error of commission compared with VIIRS. However, the proposed method outperforms both the VIIRS 375 m active fire product and the GOES-R active fire product in F1 score and mIoU. The baseline GOES-R active fire product has both a high error of commission and a high error of omission, so its F1 score and mIoU are much lower than those of the other two methods.

Figure 13.

Burned area progression maps of the VIIRS 375 m active fire product and the proposed method from 8 to 11 November 2018, until the Sentinel-2 image became available. The accumulated GOES-R active fire product from 8 to 11 November 2018 serves as the baseline.

4.2.3. The Doctor Creek Fire, British Columbia, Canada

The Doctor Creek Fire, which started in August 2020, was located 25 km southwest of Canal Flats, British Columbia, Canada. At 7645 ha, it was the largest wildfire in British Columbia in 2020.

In Figure 14, the results are evaluated using the dNBR of Sentinel-2 images acquired on 24 August 2020. Unlike the two California wildfires evaluated above, the Doctor Creek Fire has a much smaller burned area and is located in a high-latitude region. In this case, both the proposed method and the GOES-R active fire product have an extremely high error of commission compared with VIIRS, again because of the distortion in high-latitude regions discussed in Section 5.1. The GOES-R Active Fire Product produces significantly more false alarms than the proposed method, which appear as salt-and-pepper noise in the image; its error of commission is therefore higher than that of the proposed method. For the error of omission, VIIRS scores worse because its active fire points are sparse, whereas both the proposed method and the GOES-R Active Fire Product have an error of omission of 0.

Figure 14.

Burned area progression maps of the VIIRS 375 m active fire product and the proposed method from 18 to 24 August 2020, until the Sentinel-2 image became available.

For comparison, two classical machine learning algorithms, Support Vector Machine (SVM) and Random Forest (RF), are trained on the same training dataset and tested over the three test regions. Unlike the Deep-GRU Network, the SVM and RF classifiers are trained on a CPU, which results in significantly longer training times and higher memory consumption. The hyperparameters of the two models are shown in Table 6. For RF, randomized search is used to find the best-performing parameters based on the mean cross-validated score of the estimators, and the split criterion is Gini impurity. In this work, the parameters were selected from the following grid:

Table 6.

Hyperparameters of Machine Learning models.

Other parameters, which limit the maximum number of leaf nodes and the number of samples used to train each estimator, are set to the default value “None” provided by the scikit-learn Python package; this means that the number of leaf nodes is unlimited and each estimator draws as many samples as the training dataset contains. Finally, the parameters shown in Table 6 are selected for the model used in the comparison.
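A scikit-learn sketch of the randomized search described above. The features and labels are synthetic placeholders, the search space is hypothetical because the grid used in this work is not reproduced here, and mapping the two “None” parameters to scikit-learn's `max_leaf_nodes` and `max_samples` is an assumption. The default scoring (accuracy) is kept because the scoring metric is not stated.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

# Synthetic placeholders for per-pixel features and binary fire labels.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(int)

# Hypothetical search space (not the grid used in this work).
param_distributions = {
    "n_estimators": [20, 50],
    "max_depth": [None, 8],
    "min_samples_split": [2, 5],
}

rf = RandomForestClassifier(
    criterion="gini",     # split quality measured by Gini impurity
    max_leaf_nodes=None,  # assumed mapping: no limit on the number of leaf nodes
    max_samples=None,     # assumed mapping: bootstrap samples as large as X
    random_state=0,
)

# Samples n_iter candidate settings and keeps the one with the best mean CV score.
search = RandomizedSearchCV(rf, param_distributions, n_iter=4, cv=3, random_state=0)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Randomized search evaluates only `n_iter` of the candidate combinations, which keeps CPU training time bounded when the full grid is large.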

For SVM, the regularization parameter is selected from (0.0001, 0.001, 0.01, 0.1, 1, 10, 100, 1000) by grid search based on the mean cross-validated score. The regularization parameter defines the tolerance to misclassifications: it scales the penalty assigned to misclassified samples, so the candidate values are spaced by orders of magnitude. The larger the parameter, the stricter the classifier; however, large values also lengthen training time. In the evaluation, we used the parameters in Table 6, which gave the best mean cross-validated score. As shown in Table 5, both SVM and RF achieve better F1 and IoU scores than the GOES-R Active Fire Product, but the proposed Deep-GRU Network outperforms SVM in all study regions. Compared with RF, the Deep-GRU Network performs better for the Creek Fire and the Doctor Creek Fire, but has a slightly lower F1 score than RF for the Camp Fire.
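The SVM selection can be sketched with scikit-learn's `GridSearchCV` over the regularization parameter `C`, using the candidate values listed above. The data are synthetic placeholders, and the default RBF kernel of `SVC` is an assumption because the kernel is not stated here.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic placeholders for per-pixel features and binary fire labels.
rng = np.random.default_rng(1)
X = rng.normal(size=(150, 6))
y = (X[:, 0] - X[:, 2] > 0).astype(int)

# Candidate regularization values from the text, spaced by orders of magnitude.
c_grid = [0.0001, 0.001, 0.01, 0.1, 1, 10, 100, 1000]

# Exhaustive search: every C is scored by its mean cross-validated score.
search = GridSearchCV(SVC(), {"C": c_grid}, cv=3)
search.fit(X, y)
print(search.best_params_["C"], round(search.best_score_, 3))
```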

5. Discussion

5.1. Earliest Detection of Active Fires

As shown in Section 4.1, most wildfires are detected earlier than or at a similar time to the VIIRS Active Fire Product. These results demonstrate the strong capability of the proposed method for early fire detection.

For the earlier detections, the proposed method can detect fire hot spots within the half-day gap between two VIIRS acquisitions. Its high temporal resolution allows GOES-R to acquire images much more frequently than VIIRS, so GOES-R has a better chance of observing the initial stage of a wildfire. In practice, the earlier a fire is detected, the more time decision makers have to deliver emergency services to the affected regions.
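The effect of revisit frequency on latency can be made concrete with a simple schedule model. This sketch assumes fixed daily VIIRS overpasses at about 08:30 and 20:30 GMT (the times quoted for the Creek Fire) and counts a GOES-R detection at the end of the hourly composite window; it ignores clouds, smoke and detection sensitivity, and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def next_daily_pass(ignition: datetime, pass_times_utc: list) -> datetime:
    """First overpass at or after ignition, given fixed daily (hour, minute) times."""
    day = ignition.replace(hour=0, minute=0, second=0, microsecond=0)
    candidates = []
    for offset in (0, 1):  # today and tomorrow are enough for daily passes
        for h, m in pass_times_utc:
            t = day + timedelta(days=offset, hours=h, minutes=m)
            if t >= ignition:
                candidates.append(t)
    return min(candidates)


def next_hourly_window_end(ignition: datetime) -> datetime:
    """End of the hourly GOES-R composite window that contains the ignition time."""
    return ignition.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)


ignition = datetime(2020, 9, 4, 10, 0)
viirs = next_daily_pass(ignition, [(8, 30), (20, 30)])
goes = next_hourly_window_end(ignition)
print(viirs - ignition, goes - ignition)  # 10:30:00 1:00:00
```

Under this model, the worst-case wait is about 12 h for two daily VIIRS passes but at most 1 h for hourly GOES-R composites, which is the gap the proposed method exploits.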

For the wildfires with similar detection times, there are two scenarios. In the first, the onset of the wildfire is obscured by heavy cloud; by the time the fire hot spot becomes visible to both instruments, it is large enough to be detected by both methods. In the second, the VIIRS acquisition time coincides with a GOES-R acquisition, and the fire radiative power of the wildfire is high enough for the coarse-resolution GOES-R to detect it.

The later detections are caused by the different orbits of Suomi-NPP and GOES-R. The GOES-R satellites are in geostationary orbit above the equator, so high-latitude regions are viewed at a slant angle, which distorts their contours. In contrast, Suomi-NPP is a polar-orbiting satellite that passes directly over high-latitude regions and does not suffer from this distortion. Hence, the GOES-R footprint is larger in high-latitude regions than in low- and mid-latitude regions, which significantly degrades the effective spatial resolution of the GOES-R images there. As a result, the early stages of wildfires in those regions are more difficult to detect. The other late detections, in the United States, are mainly due to the low fire radiative power (FRP) of the tested fires in their early stages. For example, when a wildfire emerges around a VIIRS acquisition time and the active fire is still small, the high spatial resolution of VIIRS helps it detect a fire with low FRP. In such cases, it is difficult for the Deep GRU network to extract fire pixels from the GOES-R image, since one GOES-R pixel covers an area nearly 28 times larger than one VIIRS pixel ((2 km / 375 m)² ≈ 28.4).
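The latitude effect can be estimated from viewing geometry. This sketch assumes a spherical Earth, a geostationary orbit radius of 42,164 km, and a pixel of fixed angular size whose footprint scales with slant range and is further stretched by 1/cos(VZA) along the viewing direction; it is a first-order approximation, not the ABI fixed-grid navigation. The sub-satellite longitude of 75.2° W (GOES-East) is only an example, and the function names are hypothetical.

```python
import math

R_EARTH_KM = 6378.137   # equatorial radius, spherical-Earth approximation
R_GEO_KM = 42164.0      # geostationary orbit radius from Earth's centre


def _geometry(lat_deg: float, lon_deg: float, sub_lon_deg: float):
    """Slant range (km) and cosine of the view zenith angle for a ground point."""
    lat = math.radians(lat_deg)
    dlon = math.radians(lon_deg - sub_lon_deg)
    cos_gamma = math.cos(lat) * math.cos(dlon)  # Earth-central angle to sub-satellite point
    d = math.sqrt(R_GEO_KM**2 + R_EARTH_KM**2 - 2 * R_GEO_KM * R_EARTH_KM * cos_gamma)
    cos_vza = (R_GEO_KM * cos_gamma - R_EARTH_KM) / d
    if cos_vza <= 0:
        raise ValueError("point is not visible from the satellite")
    return d, min(1.0, cos_vza)


def view_zenith_deg(lat_deg: float, lon_deg: float, sub_lon_deg: float) -> float:
    _, cos_vza = _geometry(lat_deg, lon_deg, sub_lon_deg)
    return math.degrees(math.acos(cos_vza))


def relative_pixel_area(lat_deg: float, lon_deg: float, sub_lon_deg: float) -> float:
    """Approximate pixel-area growth relative to the sub-satellite point."""
    d, cos_vza = _geometry(lat_deg, lon_deg, sub_lon_deg)
    d_nadir = R_GEO_KM - R_EARTH_KM
    return (d / d_nadir) ** 2 / cos_vza


# Nominal pixel-area ratio between a 2 km ABI pixel and a 375 m VIIRS pixel.
print(round((2000 / 375) ** 2, 2))  # 28.44
for lat in (0, 30, 50, 60):
    print(lat, round(view_zenith_deg(lat, -75.2, -75.2), 1),
          round(relative_pixel_area(lat, -75.2, -75.2), 2))
```

Multiplying the nominal ratio by the area growth factor gives a rough sense of how much coarser a GOES-R pixel is than a VIIRS pixel over a high-latitude fire.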

5.2. Accuracy Assessment on Burned Areas

Both the quantitative and qualitative results show that the proposed method achieves higher detection accuracy than the GOES-R Active Fire Product. Among the machine learning models, the proposed method also generally achieves better detection accuracy than SVM and RF. This is because the Deep-GRU Network uses time-series information to classify each pixel, whereas the SVM and RF models classify each pixel from its feature vector (embedding) alone.
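To illustrate how a GRU carries information across time, here is a minimal NumPy forward pass of a single GRU layer following Cho et al. [24], where the new state is an update-gate interpolation between the previous state and a candidate state. This is not the authors' Deep-GRU Network (which is implemented in TensorFlow [31]); the weights are random and untrained, and the input and hidden sizes are hypothetical.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class MinimalGRU:
    """Single-layer GRU cell (Cho et al., 2014) applied to one pixel's time series."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Untrained random weights; one set per gate: z (update), r (reset), h (candidate).
        self.W = {g: rng.normal(0.0, 0.5, (n_hidden, n_in)) for g in "zrh"}
        self.U = {g: rng.normal(0.0, 0.5, (n_hidden, n_hidden)) for g in "zrh"}
        self.b = {g: np.zeros(n_hidden) for g in "zrh"}

    def step(self, x: np.ndarray, h: np.ndarray) -> np.ndarray:
        z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])  # update gate
        r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])  # reset gate
        h_cand = np.tanh(self.W["h"] @ x + self.U["h"] @ (r * h) + self.b["h"])
        # Interpolate between the previous state and the candidate state.
        return z * h + (1.0 - z) * h_cand

    def run(self, seq: np.ndarray) -> np.ndarray:
        """Return hidden states for every time step of a (T, n_in) sequence."""
        h = np.zeros(self.b["z"].shape[0])
        states = []
        for x in seq:
            h = self.step(x, h)
            states.append(h)
        return np.stack(states)


# Hypothetical input: 5 hourly composites with 3 band values for one pixel.
seq = np.random.default_rng(1).normal(size=(5, 3))
gru = MinimalGRU(n_in=3, n_hidden=4)
states = gru.run(seq)
print(states.shape)  # (5, 4)
```

Unlike a per-pixel SVM or RF, which sees one feature vector, the final state here depends on the order of the observations, so reversing the sequence changes the output.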

The quantitative results show that the proposed method has higher F1 and IoU scores than the VIIRS Active Fire Product for the Camp Fire. Visual comparison shows that the VIIRS burned area maps are sparse, which is the main reason for the high error of omission of the VIIRS AF product. This sparsity is caused partly by missing acquisitions for some active fire points and partly by the error introduced when converting the VIIRS raster to active fire points in the production of the active fire product.

Compared with VIIRS, the proposed method overestimates burned areas because of its low spatial resolution. The baseline of the accuracy assessment, the GOES-R active fire product, barely covers the correct location of the burned area and thus has both a high error of commission and a high error of omission. For the Doctor Creek Fire study region, both the proposed method and the GOES-R AF product have a high error of commission compared with the VIIRS AF product. This is because both overestimate the burned area: the overestimated region covers all of the ground truth, but also large unburned areas. The overestimation is caused by the distortion in high-latitude regions discussed in Section 5.1. Finally, the proposed method has a higher F1 score and mIoU than the GOES-R active fire points, but lower than the VIIRS 375 m active fire product, because of the distortion effect in high-latitude regions.

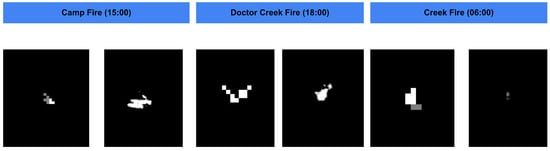

At the initial stage of a wildfire, the proposed method can only be compared with the GOES-R active fire product, since it is the only data available. As shown in Figure 15, in the following study areas the proposed method detects the wildfire at about the same time as the GOES-R active fire product. However, because the proposed method upsamples the GOES-R ABI images to 375 m spatial resolution, it can identify smaller regions at the early stage of the wildfire. This effect is less pronounced for the Camp Fire; we argue that the emerging stage of this wildfire was not captured because of interference from cloud and smoke, and by the time the fire was detected, it had already grown to a large scale. Overall, the proposed method helps identify burning biomass at a finer scale at the initial stage of a wildfire.
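A minimal sketch of resampling a 2 km grid to 375 m. Nearest-neighbour sampling at output pixel centres is an assumption, since the resampling method used in this work is not specified here, and the function name `nearest_upsample` is hypothetical. Resampling by itself adds no information; the finer delineation comes from the network, trained on 375 m VIIRS labels, predicting on the finer grid.

```python
import numpy as np


def nearest_upsample(img: np.ndarray, src_res: float, dst_res: float) -> np.ndarray:
    """Nearest-neighbour resampling of a north-up grid to a finer pixel size."""
    h, w = img.shape
    out_h = int(round(h * src_res / dst_res))
    out_w = int(round(w * src_res / dst_res))
    # Centre of each output pixel, expressed in source-pixel units.
    rows = ((np.arange(out_h) + 0.5) * dst_res / src_res).astype(int)
    cols = ((np.arange(out_w) + 0.5) * dst_res / src_res).astype(int)
    rows = np.clip(rows, 0, h - 1)
    cols = np.clip(cols, 0, w - 1)
    return img[np.ix_(rows, cols)]


# A 3 x 3 patch at 2 km becomes 16 x 16 at 375 m (factor 2000 / 375 = 5.33).
img = np.arange(9).reshape(3, 3)
out = nearest_upsample(img, src_res=2000, dst_res=375)
print(out.shape)  # (16, 16)
```

Because the factor is not an integer, source pixels map to blocks of either five or six output pixels, which sampling at pixel centres handles without gaps.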

Figure 15.

Emerging stage of the wildfires in the three study areas and the corresponding capture times.

In summary, for burned area mapping, the proposed Deep GRU network using GOES-R images can accurately monitor wildfires in mid-latitude regions such as California. In high-latitude regions, the proposed method still performs better than the GOES-R active fire product, but because of the strong distortion effect it is not yet optimal for locating burned areas precisely.

6. Conclusions

In this research, we proposed a Deep GRU network to detect active fires from GOES-R ABI images at the early stage of a wildfire. In the experiments over the study areas, the proposed network detected the majority of wildfires earlier than the VIIRS Active Fire Product. At the same time, it identifies burned areas more accurately than the GOES-R Active Fire Product at the early stage and significantly reduces false alarms. By resampling the images and using 375 m VIIRS active fire points as training labels, the network also provides decent burned area mapping in mid-latitude regions. The major contributions of this paper can be summarized as follows:

- The proposed network leverages the GOES-R time series to detect the majority of the wildfires in the study areas earlier than the widely used VIIRS Active Fire Product in NASA’s Fire Information for Resource Management System. The study areas span low-, mid- and high-latitude regions, which demonstrates good generalizability in detecting wildfires at an early stage.

- Compared with the GOES-R Active Fire Product, the proposed method provides a good indication of the areas affected by wildfires at an early stage in low- and mid-latitude regions, and in particular it significantly reduces the false alarms of the GOES-R Active Fire Product. For high-latitude regions, such as the Doctor Creek Fire in British Columbia, the detections show a high error of commission because of the distortion caused by the viewing geometry of geostationary satellites. In addition, at the first detection of an active fire, when the VIIRS active fire product is not yet available, the proposed method locates the wildfire more accurately than the GOES-R Active Fire Product.

Author Contributions

Conceptualization, methodology, implementation, validation, formal analysis, investigation, original draft preparation, Y.Z.; Conceptualization, methodology, validation, resources, writing—review and editing, supervision, project administration, funding acquisition, Y.B. All authors have read and agreed to the published version of the manuscript.

Funding

The research is part of the project ‘Sentinel4Wildfire’ funded by Formas, the Swedish Research Council for Sustainable Development, and the project ‘EO-AI4Global Change’ funded by Digital Futures.

Data Availability Statement

The datasets used in this study are available on request from the authors.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Sample Availability

Samples of the code are available from the authors.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| EO | Earth Observation |
| DL | Deep Learning |
| GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| GOES-R | Geostationary Operational Environmental Satellites R Series |
| SLSTR | Sea and Land Surface Temperature Radiometer |
| VIIRS | Visible Infrared Imaging Radiometer Suite |
| MODIS | Moderate Resolution Imaging Spectroradiometer |

References

- Canadell, J.G.; Monteiro, P.M.; Costa, M.H.; Da Cunha, L.C.; Cox, P.M.; Alexey, V.; Henson, S.; Ishii, M.; Jaccard, S.; Koven, C.; et al. Global carbon and other biogeochemical cycles and feedbacks. In Proceedings of the AGU Fall Meeting, Online, 13–17 December 2021.

- Pradhan, B.; Suliman, M.; Awang, M.A.B. Forest fire susceptibility and risk mapping using remote sensing and geographical information systems (GIS). Disaster Prev. Manag. 2007, 16, 344–352.

- San-Miguel-Ayanz, J.; Ravail, N. Active Fire Detection for Fire Emergency Management: Potential and Limitations for the Operational Use of Remote Sensing. Nat. Hazards 2005, 35, 361–376.

- Hu, X.; Ban, Y.; Nascetti, A. Sentinel-2 MSI data for active fire detection in major fire-prone biomes: A multi-criteria approach. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102347.

- Schroeder, W.; Oliva, P.; Giglio, L.; Quayle, B.; Lorenz, E.; Morelli, F. Active fire detection using Landsat-8/OLI data. Remote Sens. Environ. 2016, 185, 210–220.

- Kumar, S.S.; Roy, D.P. Global operational land imager Landsat-8 reflectance-based active fire detection algorithm. Int. J. Digit. Earth 2018, 11, 154–178.

- Schroeder, W.; Oliva, P.; Giglio, L.; Csiszar, I. The New VIIRS 375 m active fire detection data product: Algorithm description and initial assessment. Remote Sens. Environ. 2014, 143, 85–96.

- Giglio, L.; Schroeder, W.; Justice, C. The collection 6 MODIS active fire detection algorithm and fire products. Remote Sens. Environ. 2016, 178, 31–41.

- Xu, W.; Wooster, M.; He, J.; Zhang, T. First study of Sentinel-3 SLSTR active fire detection and FRP retrieval: Night-time algorithm enhancements and global intercomparison to MODIS and VIIRS AF products. Remote Sens. Environ. 2020, 248, 111947.

- Oliva, P.; Schroeder, W. Assessment of VIIRS 375 m active fire detection product for direct burned area mapping. Remote Sens. Environ. 2015, 160, 144–155.

- Schroeder, W.; Prins, E.; Giglio, L.; Csiszar, I.; Schmidt, C.; Morisette, J.; Morton, D. Validation of GOES and MODIS active fire detection products using ASTER and ETM+ data. Remote Sens. Environ. 2008, 112, 2711–2726.

- Li, F.; Zhang, X.; Kondragunta, S. Biomass Burning in Africa: An Investigation of Fire Radiative Power Missed by MODIS Using the 375 m VIIRS Active Fire Product. Remote Sens. 2020, 12, 1561.

- Fu, Y.; Li, R.; Wang, X.; Bergeron, Y.; Valeria, O.; Chavardès, R.D.; Wang, Y.; Hu, J. Fire Detection and Fire Radiative Power in Forests and Low-Biomass Lands in Northeast Asia: MODIS versus VIIRS Fire Products. Remote Sens. 2020, 12, 2870.

- Koltunov, A.; Ustin, S.; Quayle, B.; Schwind, B. Early Fire Detection (GOES-EFD) System Prototype. In Proceedings of the ASPRS Annual Conference, Sacramento, CA, USA, 19–23 March 2012.

- Koltunov, A.; Ustin, S.L.; Quayle, B.; Schwind, B.; Ambrosia, V.; Li, W. The development and first validation of the GOES Early Fire Detection (GOES-EFD) algorithm. Remote Sens. Environ. 2016, 184, 436–453.

- Kotroni, V.; Cartalis, C.; Michaelides, S.; Stoyanova, J.; Tymvios, F.; Bezes, A.; Christoudias, T.; Dafis, S.; Giannakopoulos, C.; Giannaros, T.M.; et al. DISARM Early Warning System for Wildfires in the Eastern Mediterranean. Sustainability 2020, 12, 6670.

- Schmidt, C.; Hoffman, J.; Prins, E.; Lindstrom, S. GOES-R Advanced Baseline Imager (ABI) Algorithm Theoretical Basis Document for Fire/Hot Spot Characterization; Version 2.0; NOAA: Silver Spring, MD, USA, 2010.

- Li, F.; Zhang, X.; Kondragunta, S.; Schmidt, C.C.; Holmes, C.D. A preliminary evaluation of GOES-16 active fire product using Landsat-8 and VIIRS active fire data, and ground-based prescribed fire records. Remote Sens. Environ. 2020, 237, 111600.

- Hall, J.V.; Zhang, R.; Schroeder, W.; Huang, C.; Giglio, L. Validation of GOES-16 ABI and MSG SEVIRI active fire products. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101928.

- Zhu, X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A review. arXiv 2017, arXiv:1710.03959.

- Ban, Y.; Zhang, P.; Nascetti, A.; Bevington, A.R.; Wulder, M. Near Real-Time Wildfire Progression Monitoring with Sentinel-1 SAR Time Series and Deep Learning. Sci. Rep. 2020, 10, 1322.

- Mou, L.; Ghamisi, P.; Zhu, X.X. Deep Recurrent Neural Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3639–3655.

- Toan, N.T.; Phan, T.C.; Hung, N.; Jo, J. A deep learning approach for early wildfire detection from hyperspectral satellite images. In Proceedings of the 7th International Conference on Robot Intelligence Technology and Applications (RiTA), Daejeon, Korea, 1–3 November 2019; pp. 38–45.

- Cho, K.; Merrienboer, B.V.; Çaglar, G.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078.

- Mission Overview|GOES-R Series. Available online: https://www.goes-r.gov/mission/mission.html (accessed on 4 July 2021).

- Barducci, A.; Guzzi, D.; Marcoionni, P.; Pippi, I. Comparison of fire temperature retrieved from SWIR and TIR hyperspectral data. Infrared Phys. Technol. 2004, 46, 1–9.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Wang, W.; Yang, N.; Wei, F.; Chang, B.; Zhou, M. Gated Self-Matching Networks for Reading Comprehension and Question Answering. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017.

- Lin, T.Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 15th USENIX Symposium on Operating Systems Design and Implementation, Savannah, GA, USA, 2–4 November 2016.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).