1. Introduction

The Paraguayan Chaco is a complex ecosystem rich in rare species, and its dry forest is part of the largest dry forest in the world [

1]. At the same time, Paraguay is one of the fastest deforested countries in Latin America, and more than 40% of the Paraguayan Chaco’s forest cover has been lost since 1987 [

2]. This is a major threat to the conservation of existing flora, fauna and soil conditions. Paraguay’s forestry and environmental authorities try to ensure the protection of the environment through the implementation of environmental laws and resolutions, the basis of which was created in 1986 with Decree 18831/86 [

3]. Among other aspects, it prohibits the deforestation of contiguous areas larger than 100 ha and requires that the logged areas be separated by forested stripes at least 100 m wide. These forested stripes primarily serve to preserve forest and protect the soil from wind erosion, which is why they are also referred to as

windbreaks in the following. Further regulations concerning a redefinition of the width of windbreaks [

4,

5] and the protection of the endangered tree species

palo santo (Balnessia sarmientoi) [

6], were defined in the following years. This resulted in the formation of central forest patches in agricultural fields—a second important landscape feature in the Paraguayan Chaco that shall protect the rare palo santo tree.

Similar regulations also exist in Argentina and define the realization of windbreaks in the Argentinian Chaco [

7]. The established rules are important to limit the negative consequences that deforestation has on nature. Windbreaks connect micro-ecosystems by allowing flora and fauna to migrate, and they have a positive influence on the microclimate. They alter the magnitude and direction of wind, which regulates the temperature and moisture of the soils and reduces soil erosion [

8,

9]. In the lee of windbreaks, the evaporation of surface humidity is reduced, and the surface heats up more than in exposed areas [

10]. The warmer and more humid soils, in turn, facilitate chemical and biological mechanisms promoting an increased biological diversity [

10]. Moreover, windbreaks can protect soil ecosystems from permanent deterioration as a consequence of droughts and floods [

10]. All of the mentioned points reveal the importance of intact forested stripes.

Ginzburg et al. [

7] found that less than 40% of the mandatory windbreaks were present in their study area in the Argentinian Chaco in 2012. In Paraguay, too, the environmental regulations are often disregarded, and many of the windbreaks are narrower than they should be, or the farmers cut wide aisles to connect neighbouring fields. Comprehensive monitoring of the windbreaks is necessary. However, there are hardly any paved roads in the Paraguayan Chaco, making it difficult to reach remote areas. In situ monitoring of the environmental resolutions requires days of travel and is only possible selectively, whereas satellite remote sensing can provide exhaustive, repeated, and detailed imagery of remote areas.

Even though the importance of windbreaks is well known, only few publications address the monitoring of windbreaks through remote sensing imagery. Liknes et al. [

11] presented a semi-automated, morphology-based approach using binary forest maps to differentiate between different types of windbreaks (i.e., north-south or east-west oriented, or L-shaped). Although their method distinguishes between windbreaks and riparian corridors, it is not applicable if the windbreaks are connected to other forest patches. Different from Liknes et al. [

11], most other studies on windbreaks do not classify the windbreaks in an automated manner. To map windbreaks, Burke et al. [

12] and Ghimire et al. [

13] used the commercial software “eCognition” and the “ENVI Zoom 4.5 Feature Extractor”, respectively, to generate object polygons which they manually optimized in a second step. Piwowar et al. [

14] even waived commercial software, concluding that manual labelling is faster than correcting all errors from the software results. Furthermore, Deng et al. work on manual annotations for their analysis on the width [

15] and continuity [

16] of windbreaks. For the detection of discontinuities, their approach requires vector lines of windbreaks that are continuous over gaps in order to identify the gaps along the line [

16]. A more recent publication by Ahlswede et al. [

17] reviews the potential of deep learning models to recognize linear woody features such as hedges, which have a similar structure as windbreaks, in satellite imagery and shows promising results reaching an F1 score of 75%. To train the convolutional neural networks (CNNs), they had manually annotated polygons for the individual hedges at their disposal. What all these approaches have in common and what differentiates them from the situation in the Paraguayan Chaco is the fact that they all address isolated windbreaks, well-separated from each other, while grids of connected windbreaks are characteristic for the Paraguayan Chaco. Moreover, most of these publications address much smaller study areas of less than 4000 km

(study area Paraguayan Chaco: 240,000 km

).

In contrast with [

7,

14,

15,

16], who manually digitised windbreaks based on forest masks, we aim at an automatic recognition of gaps in windbreaks and of central forest patches in agricultural fields. In the last decade, deep learning has become an important tool in Earth observation [

18,

19,

20,

21]. This sub-field of machine learning uses neural networks to analyse large amounts of data by learning suitable feature extractors. The basic structure of neural networks consists of successive layers of adjustable parameters. Here, the increasing depth of the models reflects the possibility of being able to map increasingly complex feature representations. Due to this model layout, with a sufficient amount and variability of training data, deep learning models have the potential to learn complex feature representations from the training data [

22]. In particular, CNNs from the computer vision domain for the analysis of image data [

22,

23] and their further development have led to the consideration of multiscale spatial features [

20,

24,

25,

26], which make them the most widely used deep learning model in Earth observation [

19,

20,

21]. As stated above, large and variable training data sets, e.g., sets of images with their corresponding pixel-wise class label, are necessary to optimise a deep learning model sufficiently. Particularly in Earth observation, the acquisition of training data is very time intensive and often requires experts who are skilled in interpreting remote sensing imagery [

27,

28]. Furthermore, the Paraguayan Chaco is a rather small application area in the deep learning domain. To train a neural network on annotated images from the Paraguayan Chaco, probably the entire region would be needed for training. Additionally, in case of mapping gaps in windbreaks, such labelling is an extremely time-intensive task because each gap needs to be annotated as an individual polygon.

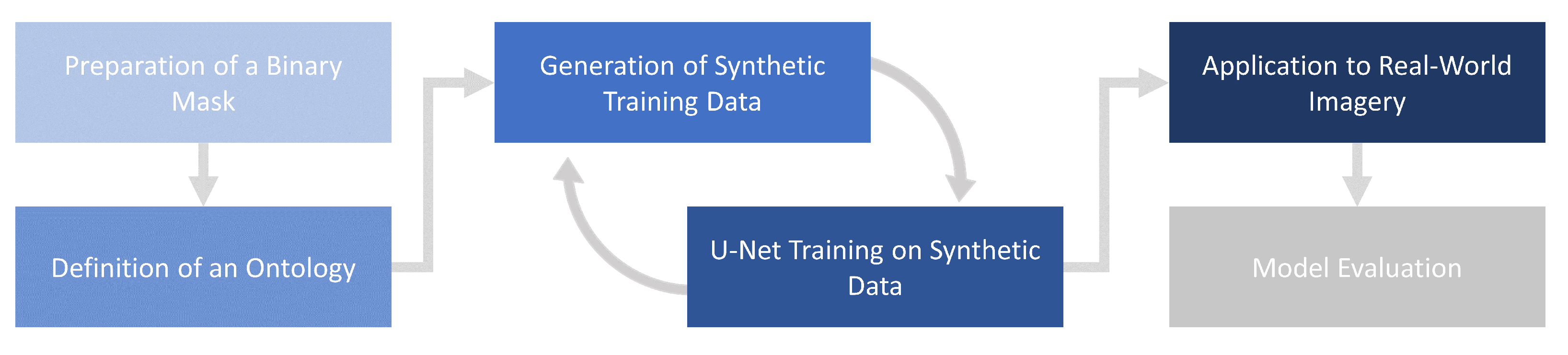

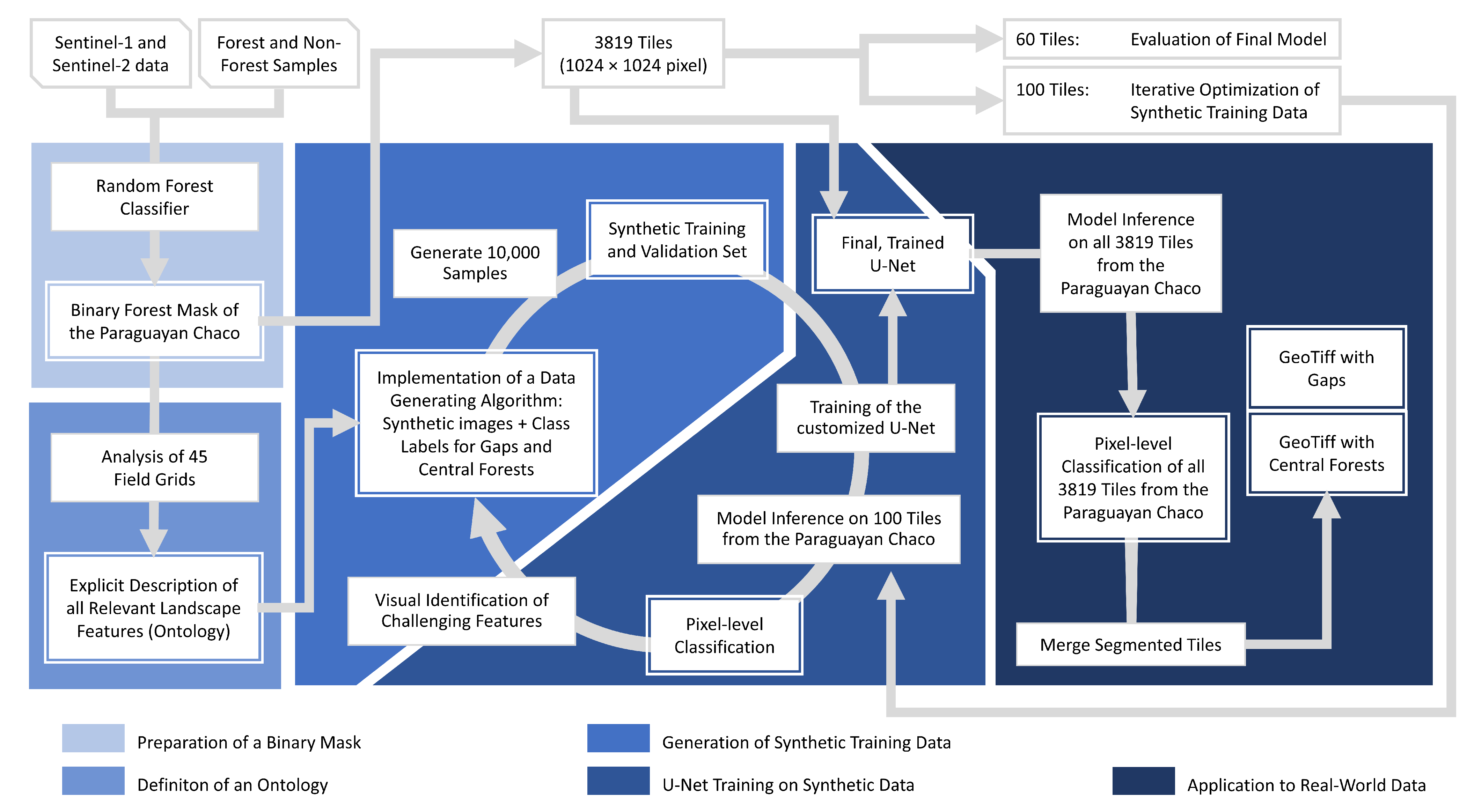

To overcome these limitations, this study uses a deep learning approach and synthetic training data to detect windbreak gaps and central forest patches. Similar to Liknes et al. [

11], we conduct the windbreak mapping based on binary forest maps. The forest maps were derived from Sentinel-1 and -2 imagery using an random forest (RF) classification to distinguish between forest and non-forest areas. Consequently, this simplifies the following training of the CNN because it only learns from binary images. Thus, it is widely independent of a specific sensor radiometry, acquisition geometry, and light conditions, and only needs to focus on the spatial features. Additionally, instead of manually labelling heaps of image tiles, we train our CNN on completely synthetically generated training data. This is a novel approach for landscape feature recognition and has, to our knowledge, yet only been applied in one study on natural landscape elements, i.e., [

29], and in a few other studies on human-made objects, e.g., [

28,

30,

31]. By using synthetic data, we can not only avoid resource-intensive manual work, but we can also make sure that sufficiently variable and numerous training data are available. For a deep learning model to solve the given task by learning a highly generalised but still accurate representation of the underlying features, we need thousands of different examples. Furthermore, the synthetically generated training data are adjustable, and therewith, it provides the opportunity to control the deep learning model behaviour. In order to systematically embed expert knowledge about the agro-environmental system, spatial features, and the Earth observation sensor, the recently proposed SyntEO framework for synthetic data generation in Earth observation by Hoeser and Kuenzer [

28] was used as a strategic guide.

Based on the binary forest map and the synthetic training data set, we aim at mapping deficiencies in windbreaks and central forest patches in an efficient and automated manner in the entire Paraguayan Chaco for the year 2020. At the same time, we would like to demonstrate in this study how the concept of a synthetic training data set can be implemented in the remote sensing domain and reveal this method’s opportunities and limitations.

2. Study Area

The study region of this work is the Paraguayan Chaco, which is located in the western part of Paraguay. It constitutes 25% of the Great American Chaco—a forest region with a subtropical climate spreading over several South American countries [

1]. The landscape of the Paraguayan Chaco is dominated by regularly flooded savannahs in the East and the South. In contrast, the central and north-western parts are characterized by dry forests which are, especially in the centre, permeated by agricultural fields. Even though the region comprises more than 60% of the country, having an area of approximately 240,000 km

, only 3% of the population lives there [

1]. At the same time, the Paraguayan Chaco has been identified as one of the regions with the highest rates of deforestation in South America [

2,

32,

33]. Numbers for 2008–2020 show that every year, 3200 km

of the forest is logged [

2], whereby the land is subsequently used as pasture in most cases [

32,

33].

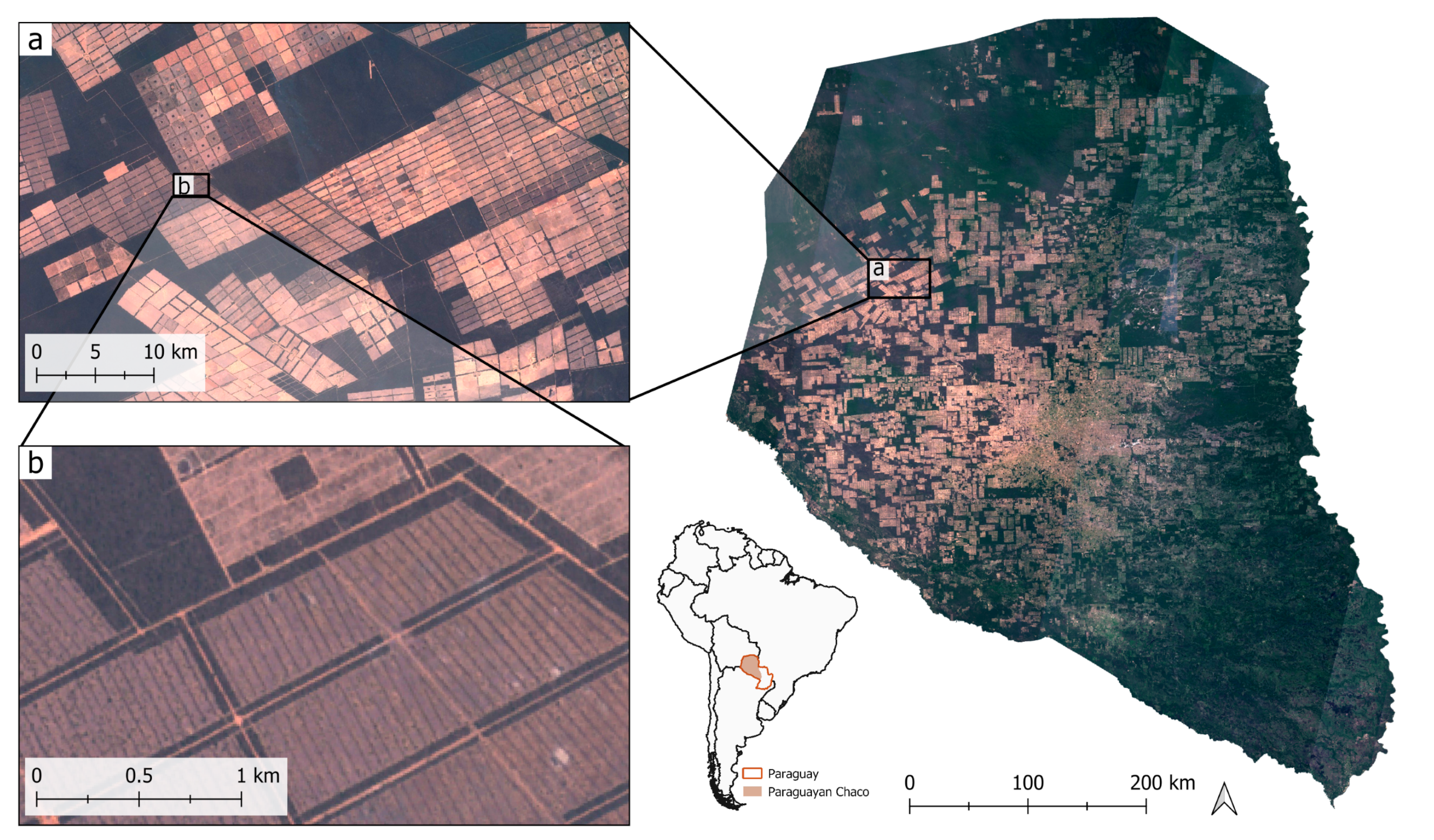

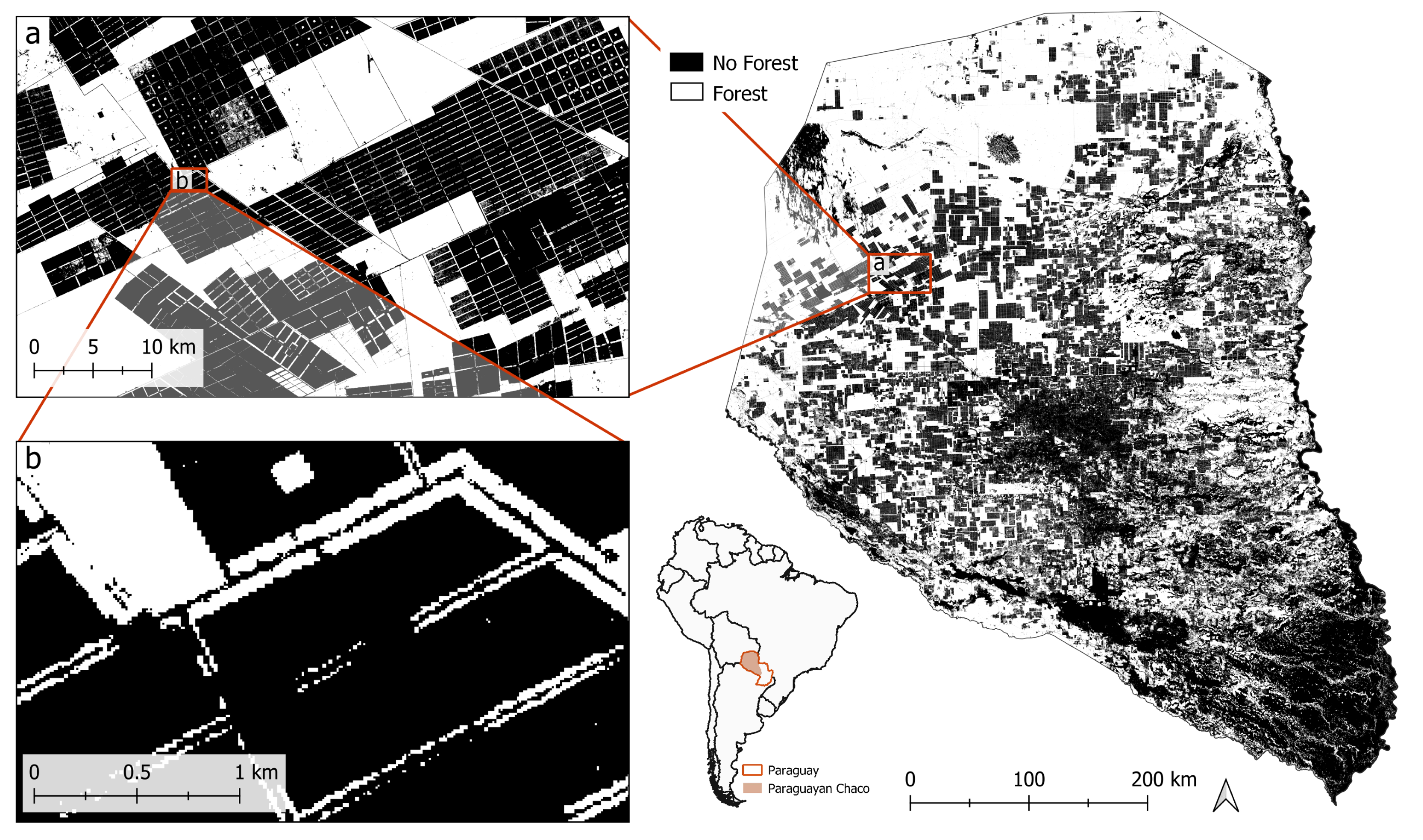

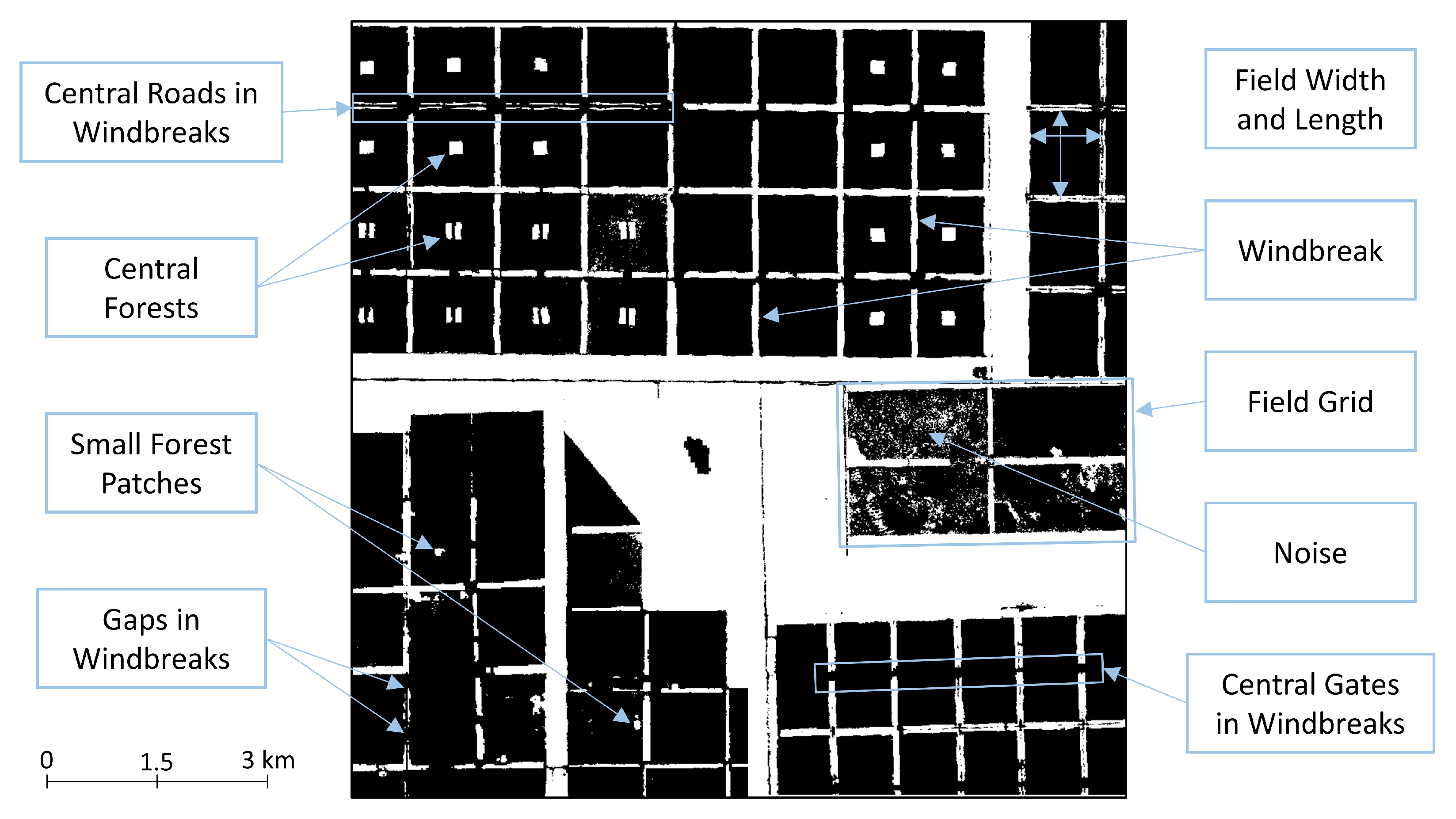

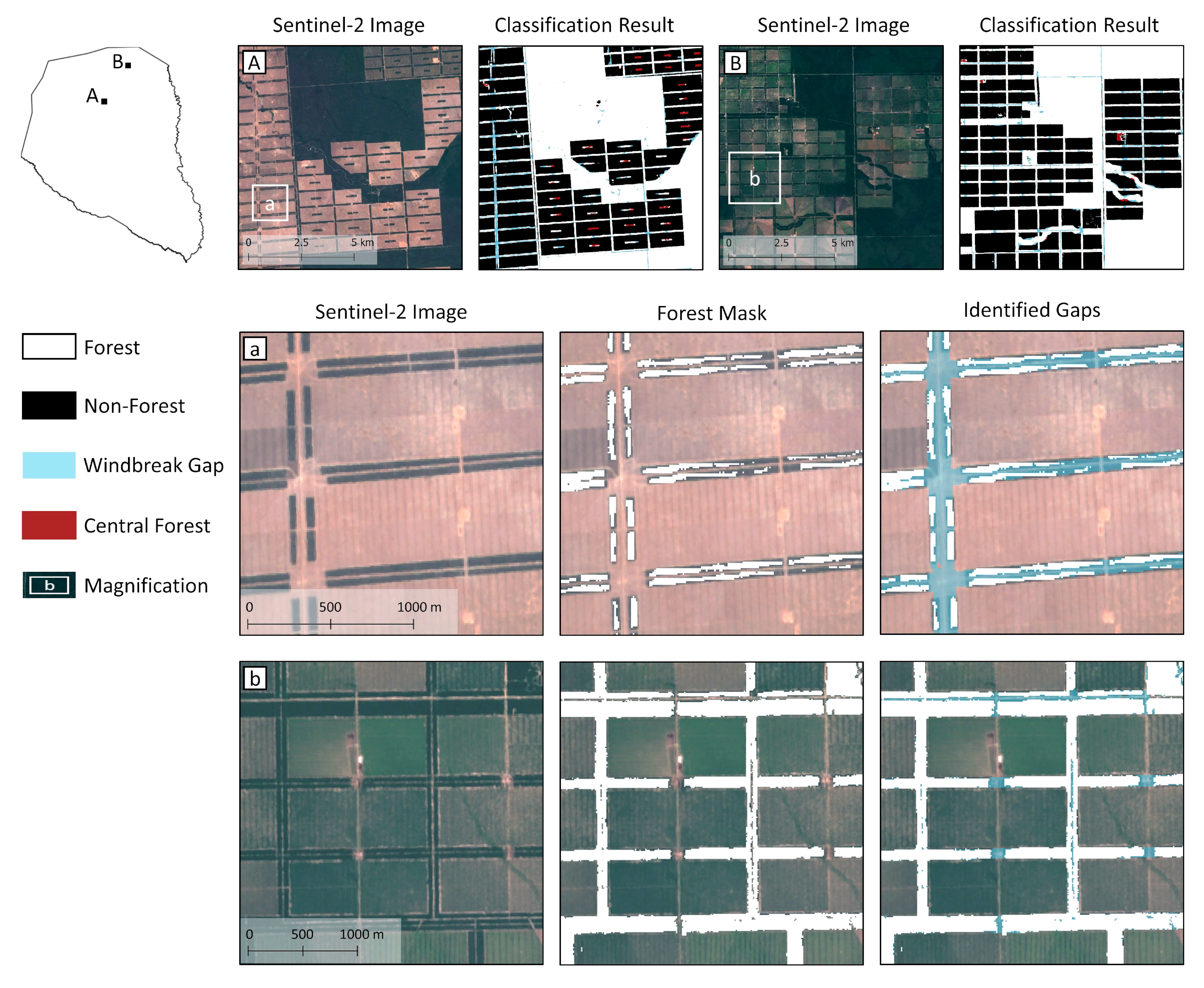

Figure 1 shows an overview map of the Paraguayan Chaco with two magnifications of different scales which reveal the typical shape and arrangement of the agricultural areas: rectangular and grid-like. This structure is the result of a decree passed by the Paraguayan government in 1986. It specifies that continuously logged areas must not be larger than 100 ha and that they must be separated from each other by forested stripes with a minimum width of 100 m [

3]. Intact forested stripes, also called windbreaks, are essential landscape elements that protect the soil from wind erosion, which is important, since the Paraguayan Chaco is a flat plane with average wind velocities of 16.2 to 24.5 km/h [

1]. However, many of these windbreaks are eroded or interrupted, which is depicted in

Figure 1b.

4. Results

Our application aimed to identify gaps in windbreaks and central forest patches in agricultural fields of the Paraguayan Chaco in a two-step approach. First, an RF classifier was used to create a binary forest map of the study area. The resulting map has an overall accuracy of 92.9% and a Kappa coefficient of 0.82. As this forest cover map was the data basis for the design of the synthetic data set and also input for the CNN inference, it was already presented in

Section 3.1 in

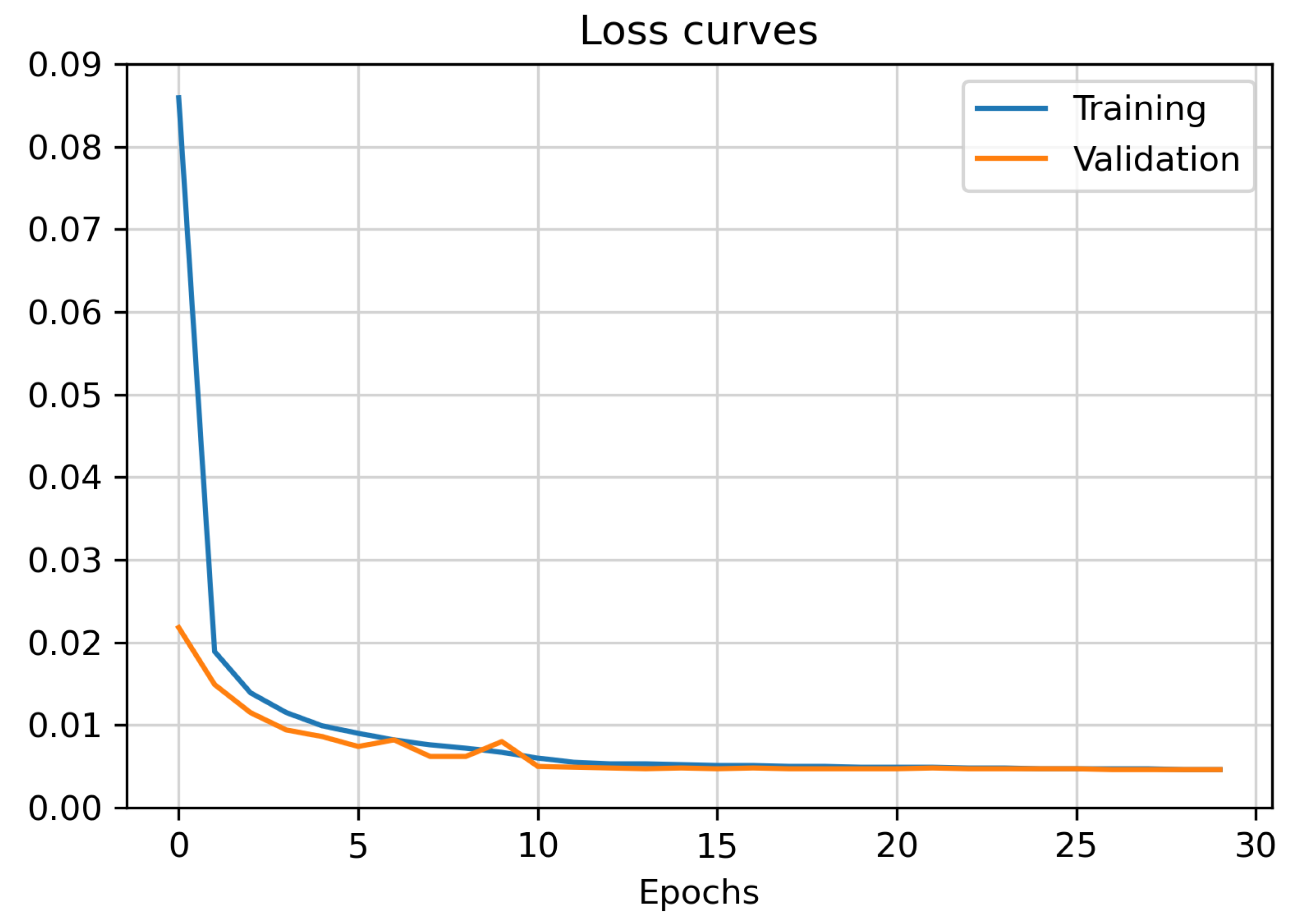

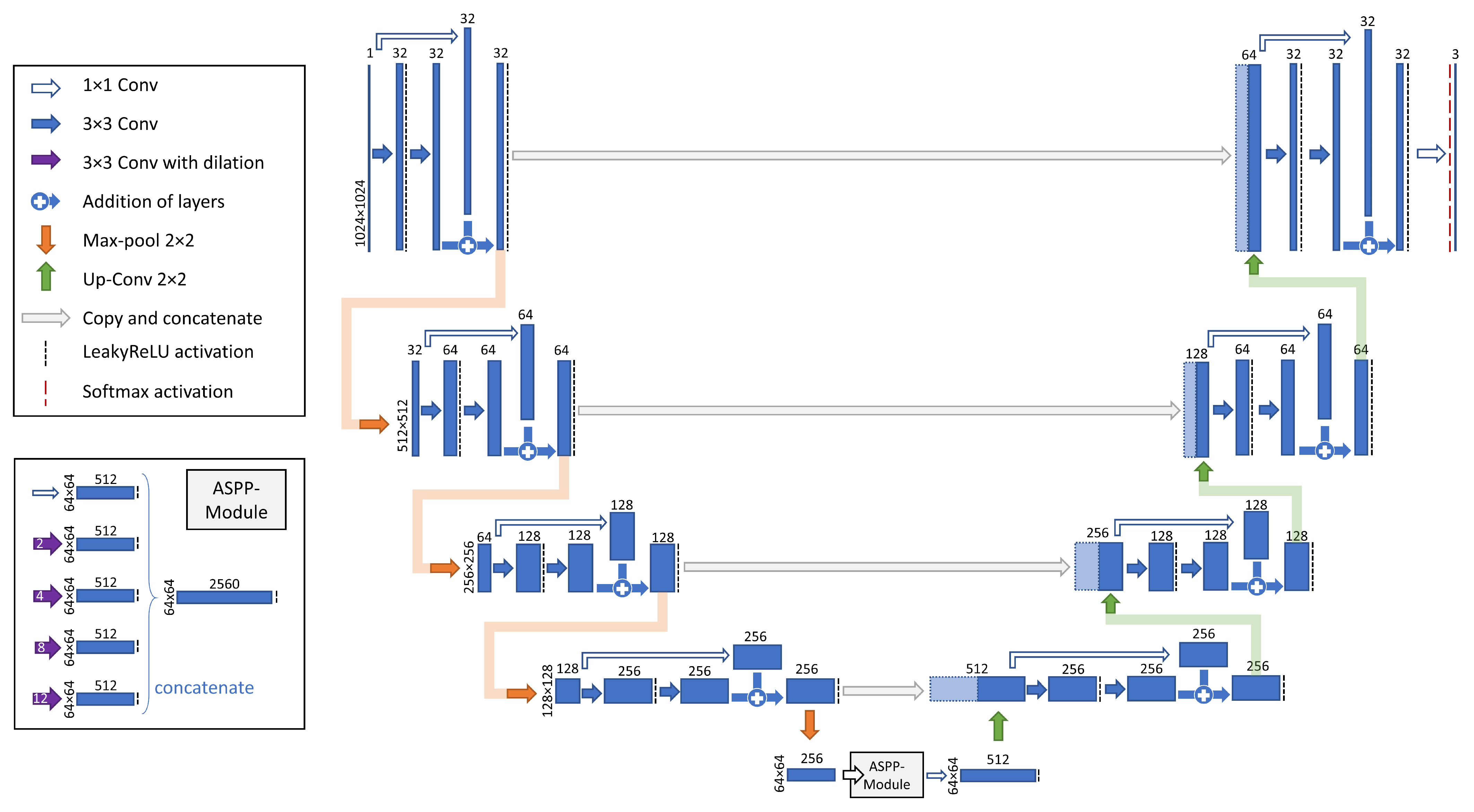

Figure 4. In the second step, a CNN was trained to classify central forests and gaps in windbreaks.

Figure 9 shows the classification results of two tiles along with two magnifications that reveal more details. The top row of the illustration displays for two tiles both the Sentinel-2 RGB composite and the binary RF classification of the forest areas with the gaps and the central forests that were identified by the trained CNN. In the corresponding magnifications below, the non-forest areas are not displayed and for a ground-truth reference, the results are placed upon a Sentinel-2 RGB image, in which the windbreaks are clearly visible. In both magnifications, paths separate the windbreaks into two parallel forest strips, and there are passages between the fields, either in the field corner magnification (a) or centrally positioned magnification (b). Even though the windbreaks in magnification (a) look very consistent in the satellite image, we can see that the derived forest mask does not cover them completely. From a visual analysis of the Sentinel-2 image, it is very likely that some windbreak pixels were falsely classified as non-forest areas, which might be the result of mixed pixels in the satellite image, which has a 10 m resolution. The windbreaks in magnification (b) are wider than in magnification (a) and were detected completely by the RF classifier. Unlike in the first example, most of the paths within the windbreaks were not classified as non-forest areas. Only one road in the upper part of the magnification seems to be wide enough to provide the required spectral information. The right column in

Figure 9 shows the areas which the U-Net has classified as gaps. For magnification (a), the classified gaps include all pixels that belong to the windbreak but were previously classified as non-forest by the RF model, as well as the interruptions and passages that we could already identify in the satellite image. Hence, the identified gaps comprise both actual windbreak gaps and parts of the windbreaks that were missing in the forest mask. In magnification (b), the existing windbreaks were captured completely by the forest mask. Therefore, all pixels that were subsequently classified as gaps are actual gaps. The CNN correctly identified both the paths along as well as the interruptions across the windbreaks as gaps.

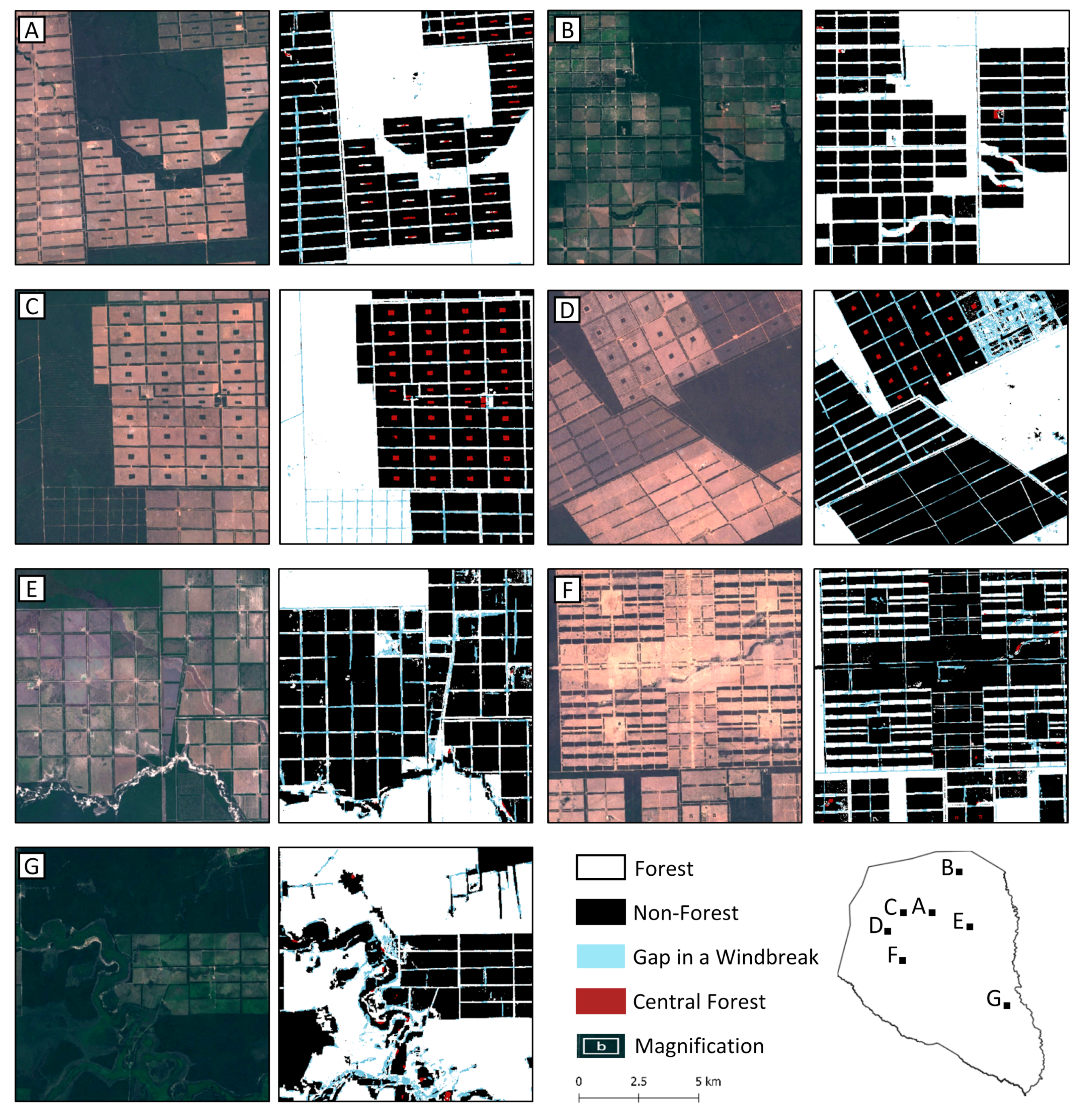

Figure 10 shows again the classification results for tiles A and B as well as five other tiles of the study area (C–G) to better visualise the variety of landscape patterns in the Paraguayan Chaco. For each tile, the Sentinel-2 RGB image is shown to the left, and the binary forest maps with the U-Net classification are shown to the right. In most cases, all central forests in one field grid were localized correctly (e.g., tiles C, D). Thereby, the model did not only recognize that there is a central forest, but also all pixels of a central forest were classified correctly. However, in some cases where the shape of the central forest patches differed strongly from what is proposed in the synthetic data set, this landscape class was not recognized correctly (e.g., tile A). During the real-world observation of the 45 field grids, none of the central forests exceeded 400 m, so longer central forests were not included in the synthetic training data. Accordingly, the model had difficulties identifying the exceptionally long central forests in tile A (approximately 100 m × 480 m). A second reason why central forests might not be detected are “salt and pepper”-like areas (e.g., tile D) which simultaneously trigger an over-classification of gaps. Apart from regions near rivers, which were not included in the training data, there are hardly any areas in which central forests are classified as false positives. In tile G, we can also see that rivers provoke an increased false positive rate for gaps as well. The inclusion of more natural structures such as rivers could reduce the overestimation of central forests and gaps in these regions. One might also expect that smaller groves of trees or shrubs (e.g., tiles E, F) might also be misinterpreted as part of a windbreak or as a central forest. However, this is not the case. The trained CNN is able to disregard such forest patches for classification purposes.

Overall, the classification of windbreak gaps shows robust results for both small central gates (e.g., tile B), as well as for very eroded forest stripes (e.g., tiles D, E), and forested stripes which contain a road (e.g., tiles A, E). Moreover, in very narrow (e.g., tile D) and very wide (e.g., tile F) windbreaks, the model is able to recognize gaps. Tile F additionally poses the challenge that the fields are not structured in regular grids as they usually are in the Paraguayan Chaco, but rather in four blocks of horizontal stripes of forest and non-forest. The segmentation of gaps that need to be filled to separate the individual fields from each other is reasonable.

For a quantitative evaluation of the CNN performance (

Table 1), we considered the model performance on the test set from the Paraguayan Chaco. The model reached an F1 score of 73.6% classifying the central forests and a score of 70.4% for the windbreak gaps. Additionally, the agricultural fields, which were derived by considering all non-forest areas excluding the classified gaps, sufficiently match the manually annotated fields, even reaching an F1 score of 95.7% and an IoU of 91.7%. Considering the IoU of all three classes, the model reached a mean IoU (mIoU) of 68.1%. During the model training, we did not distinguish between gaps in windbreaks and other gaps in the forest cover, which is why we also did not consider any other gaps except for windbreak gaps in the evaluation. The test data set included gaps in the direct neighbourhood of agricultural fields or field grids, and all other detected gaps which were further away than 150 m from a field were masked. For the central forests, the precision value (77.3%) is higher than the recall (70.2%), which entails that central forests are rather missed out than there being areas falsely classified as central forests. In the case of the gaps, the recall (75.7%) is higher than the precision (65.9%). For this class, the trained CNN showed a tendency to classify more pixels as a gap than missing gap pixels.

5. Discussion

With their high spatial and temporal resolution, the Sentinel-1 and Sentinel-2 satellites meet the requirements to map land use and land cover of large areas. However, a good map of forest and non-forest areas alone is not sufficient for monitoring the various functions that the forests provide in the Paraguayan Chaco: Large and continuous forest stands are required to provide shelter for numerous species, forested stripes between pastures are needed to protect the soil from wind erosion, and through central forest patches in agricultural areas, the rare tree species palo santo must be protected [

3,

6,

10]. In combination with synthetic data generation to train deep learning models, satellite remote sensing offers the possibility of large-scale monitoring of environmentally valuable landscape elements in the Paraguayan Chaco. So far, this potential has not been exploited. With an RF classifier, we have derived a forest cover map of the entire Paraguayan Chaco from Sentinel-1 and Sentinel-2 data. On this basis, we have developed a synthetic data set which can be generated automatically, depicting structures of the derived forest mask. Our results have shown that the customized U-Net, which we trained on the synthetic data set, is capable of identifying gaps in windbreaks and central forests with F1-scores of 70% and 73%, respectively, in the entire Paraguayan Chaco without need for additional input data or information.

For a holistic discussion of the results, we first discuss the binary forest map of the Paraguayan Chaco because the entire classification of forest landscape elements is based on this map. In the visual analysis, we have seen that the forest mask mainly shows problems in the windbreaks, even though the RF classifier reached an overall accuracy of more than 92%. In particular, the thin windbreaks of 20 to 40 m pose a problem for the classifier, most likely due to the only slightly higher spatial resolution of the satellite input data (10 m). Therefore, major parts of the thin windbreaks are represented by mixed pixels, containing both forest and non-forest spectral information. A second possible reason for the incomplete detection of existing windbreak pixels is that the windbreak is degraded and does not consist of an intact forest, but only of shrubbery. In this case, the classification as non-forest would be correct. We see that based on a Sentinel derived forest mask with a 10 m resolution, it is not possible to differentiate between obviously existing gaps in the form of passages, gaps due to degradation (e.g., shrubbery), and gaps due to misclassified pixels (e.g., mixed pixels). Particularly, the misclassified pixels in the forest mask affect the subsequent classification of gaps.

Figure 9 shows that the occurrence of many gaps in the forest mask does not pose a problem to the U-Net when detecting the gaps in the windbreaks.

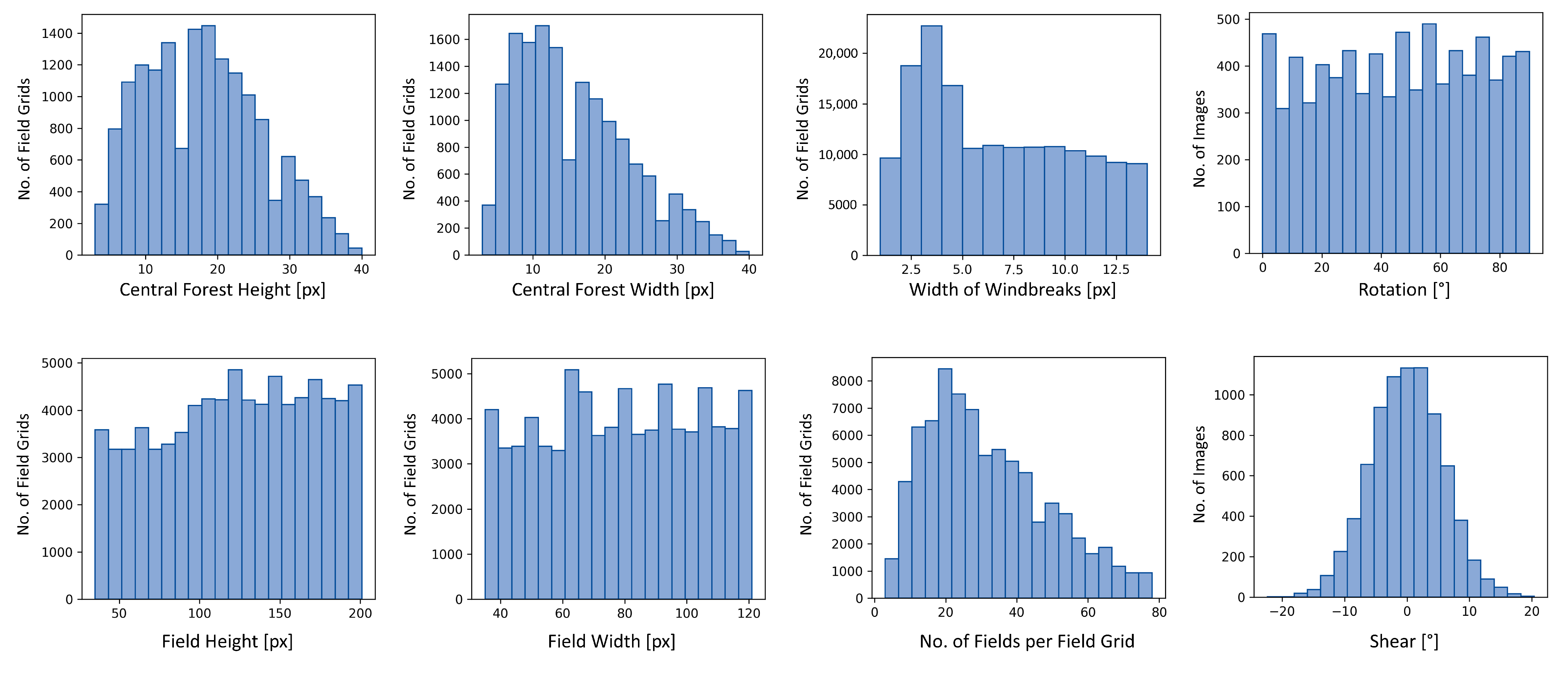

Nevertheless, there are some limitations in detecting windbreak gaps because the synthetic training data do not include all details that appear in the forest mask. The trained U-Net employs the features it learns from the synthetic data to classify all features it sees in the real data. For instance, we did not include roads through the compact forest in the synthetic data. Thus, the model classified these as gaps. To the trained CNN, the roads are small non-forest zones between coherent woodlands, which is indeed very similar to the appearance of the gaps on which the model was trained. We saw that the elongated central forests could not be detected in tile A in

Figure 10. These central forests had a length of approximately 480 m, which exceeds the maximum length of central forests of 400 m in the artificial data, as we can also observe in the statistical distributions of landscape features (

Figure 7). We see in this example that it might happen that the synthetically generated data do not cover the entire range of attribute values as they occur in real-world data. However, for manually labelled data, too, it is probable that the training data do not contain the exact same features and distributions over attribute values as there are in the area of application. Having the possibility to immediately compare the statistical distributions (

Figure 7) of the artificial training set to the problematic features in the real-world data is one big advantage of using synthetically generated training data. Using manually labelled training data would require an additional step to derive statistics. A second benefit is that one can directly modify the features in the training data and, for instance, increase the maximum length of central forests. This would be almost impossible to realize with manually labelled data.

Figure 6 shows the scene composition on which the synthetic images were based. Hence, the information where there are windbreaks, agricultural areas, and compact forests already exist, the extension of the prediction by these classes can easily be implemented by adding them to the class labels. Manual labelling of one additional class, e.g., to additionally classify pixels that belong to agricultural areas, would probably take weeks compared to the generation of a new synthetic data set which is easily feasible.

The visual analysis of the classification results also revealed that natural structures, for instance, water bodies, provoked falsely classified central forests and gaps. To prevent such misclassifications, we would have had to include more natural structures in the synthetic training data. Although we have shown through the integration of the small forest patches that it is possible to model naturally structured features, the synthetic design of such structures is more complex and time-intensive than the synthetic design of geometric features. In addition, this involves increased use of random variables and noise functions, which makes it difficult to numerically control the size or shape of the resulting landscape elements. Hence, there is always a trade-off between modelling the images as realistically as necessary while keeping the data generation process as simple and traceable as possible to make sure that the synthetic data design does not become more time-intensive than manual labelling. Additionally, we have also noticed that simply adding new features might harm the classification performance of existing features because statistical distributions that were suitable before may need to be adjusted as well.

In summary, the areas within the windbreak fractions were well identified as gaps such that the union of forest mask and detected gaps give a good approximation of where there should be forest to protect the fields from wind erosion. However, it must be considered that the detected gaps are spaces in the derived forest mask and not necessarily spaces in the actual windbreaks. Although the windbreak gaps are partly overestimated, the result can present a good overview of where there are hot spots of defective windbreaks and where the windbreaks are in good condition. Such maps can be particularly beneficial to Paraguayan forestry and environmental authorities such as the Instituto Forestal Nacional (INFONA), which is concerned with monitoring regulations such as the presence of windbreaks and central forests. It would, therefore, be interesting to investigate how the presented approach performs on higher resolved imagery from satellites, such as Planet (0.5–3 m) or WorldView (0.3–3.7 m) satellites. However, it is unclear whether these purely optical data can produce forest maps of comparable quality as the combination of the multispectral optical Sentinel-2 images with the radar images from Sentinel-1. In addition, Planet and WorldView data are less easily accessible. Another strength of synthetically generating data is that once a framework is set up, it can easily be adapted to related tasks or new application regions with a similar landscape. For instance, if the fields in a new application area have different shapes, are smaller, larger, or even round, the concerned features can be adapted in the data set generator, which is much faster than a setup from scratch. Additionally, the adaptation to new spatial image resolutions is possible, provided that the landscape features can still be adequately displayed on the specified image size (here,

pixels). The Argentinian Chaco, extending across the country’s North, strongly resembles the Paraguayan Chaco and would therefore be another possible area where the presented method can be applied. Presumably, only small modifications of field sizes or barrier widths would be required. Bolivia, bordering Paraguay’s Northwest, also has large, agriculturally dominated regions. However, the fields there have thinner, but more frequent, windbreaks arranged in a striped rather than a grid pattern. Thus, more extensive adjustments to the data generator would probably be necessary. Another region where windbreaks between logged areas were left in a grid-like structure, almost identical to the structures in the Paraguayan Chaco, is the island Hokkaido in Japan [

50]. Here, the forest strips are about 180 m wide and occur every 3 km, approximately.

It can be concluded that the presented approach works well for classifying features in binary images, which in return makes the gap and forest patch detection dependent on the quality of the binary mask. Therefore, it would be interesting to apply this method directly to RGB or even multispectral images in future research. However, the generation of artificial scenes with realistic surface textures can become a complex task. The fact that more complex tasks require a much larger number of training samples is not a problem since, theoretically, an infinite number of artificial training images can be generated, which is probably the greatest advantage compared with manual data generation. Most other studies using synthetic training data for remote sensing tasks address the detection of human-made objects such as aircraft [

51,

52,

53], vehicles [

54,

55], or wind farms [

28] and wind turbines [

56]. Berkson et al., Liu et al., Weber et al., and Han et al. [

51,

53,

54,

55] use real satellite images and images generated with existing software and position the object of interest onto the satellite image background. Such an approach would not have been feasible for detecting central forests and windbreaks because these are not explicit objects such as aircraft. Similar to this study, Hoeser and Kuenzer [

28], Hoeser et al. [

56] and Isikdogan et al. [

29] break away from the division into foreground and background and model the landscape scenes as a whole. Hoeser and Kuenzer [

28] and Hoeser et al. [

56] aim at a global detection of wind turbines in offshore wind farms and generate detailed and complex synthetic SAR-scenes of coastal areas to use these for training. Since complete landscape modelling is resource-expensive, we chose to pursue a more straightforward approach by focussing on a binary representation of the landscape. This proceeding is similar to that of Isikdogan et al. [

29] who generated a synthetic data set to extract the centre lines of river networks in MNDWI images. Although river networks often have natural twists and turns, the authors successfully used straight geometries to model the river landscape. Thereby, they proved that it is not always necessary to generate synthetic images that perfectly imitate the real world, but that it might be sufficient to represent basic spatial contexts and features.

With our approach, we show that it is possible to model more complex landscapes, allowing analysis beyond a land cover classification. It is the first approach to synthetically model the spatial arrangement of different land use classes and subdivide the classes into further subclasses based on their geometry and arrangement. The methodology can be applied and adapted to new tasks and is straightforward to implement. Additionally, once a synthetic data set is created, and a model is set up and trained according to our approach, it is suitable to monitor the future developments of the considered landscape features on a regular basis. In Paraguay, there are no signs of the intensive use of land for cattle ranching and the accompanying deforestation diminishing in the coming years. Therefore, it is all the more important to ensure that at least the regulations implemented for the protection of soil, flora, and fauna are adhered to. Such supervision can be enabled through the presented method. The next step towards an operational system is to find the most suitable architecture for this task. A comprehensive comparison with different state-of-the-art architectures such as DeepLabV3+ will be an important challenge in subsequent research in order to obtain the best possible classification results.