Improving Mountain Snow and Land Cover Mapping Using Very-High-Resolution (VHR) Optical Satellite Images and Random Forest Machine Learning Models

Abstract

1. Introduction

1.1. Satellite Snow Mapping and Mixed Pixels

1.2. Very-High-Resolution Snow Mapping and Machine Learning

- Can random forest models and minimally processed VHR stereo multispectral images be used to accurately classify snow cover at the meter scale without SWIR bands or more complex atmospheric, topographic, and BRDF corrections?
  - (a) What combination(s) of input layers provide the best model performance?
  - (b) Can a single model trained for one region be used to accurately classify snow when applied to out-of-region images?

- How do coarser-resolution operational fSCA products from the spectral unmixing of Landsat (30 m) and MODIS (500 m) images compare with the VHR snow cover products?
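The comparison raised in the last question requires bringing a meter-scale binary snow map onto the grid of a coarser fSCA product. The following is a minimal sketch of one common way to do this, block averaging, and is not necessarily the procedure used in this study. The function name `aggregate_fsca`, the toy mask, and the aggregation factor are illustrative assumptions; a real workflow would also need to handle reprojection, grid alignment, and no-data pixels.

```python
import numpy as np


def aggregate_fsca(snow_mask: np.ndarray, factor: int) -> np.ndarray:
    """Block-average a binary snow mask (1 = snow, 0 = snow-free) into fSCA.

    `factor` is the number of fine pixels per coarse pixel along each axis
    (hypothetical parameter; e.g., roughly 15 for 2 m pixels on a 30 m grid).
    """
    rows, cols = snow_mask.shape
    # Trim trailing rows/columns so the array divides evenly into blocks.
    rows_trim = rows - rows % factor
    cols_trim = cols - cols % factor
    trimmed = snow_mask[:rows_trim, :cols_trim].astype(float)
    # Reshape so each coarse cell owns a (factor x factor) block, then average.
    blocks = trimmed.reshape(rows_trim // factor, factor,
                             cols_trim // factor, factor)
    return blocks.mean(axis=(1, 3))


# Toy 4 x 4 binary snow mask aggregated to a 2 x 2 fSCA grid.
mask = np.array([
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
    [1, 1, 0, 1],
])
fsca = aggregate_fsca(mask, factor=2)
print(fsca)
```

Each output value is the fraction of fine pixels classified as snow within the corresponding coarse cell, which can then be differenced against a Landsat or MODIS fSCA value for the same cell.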

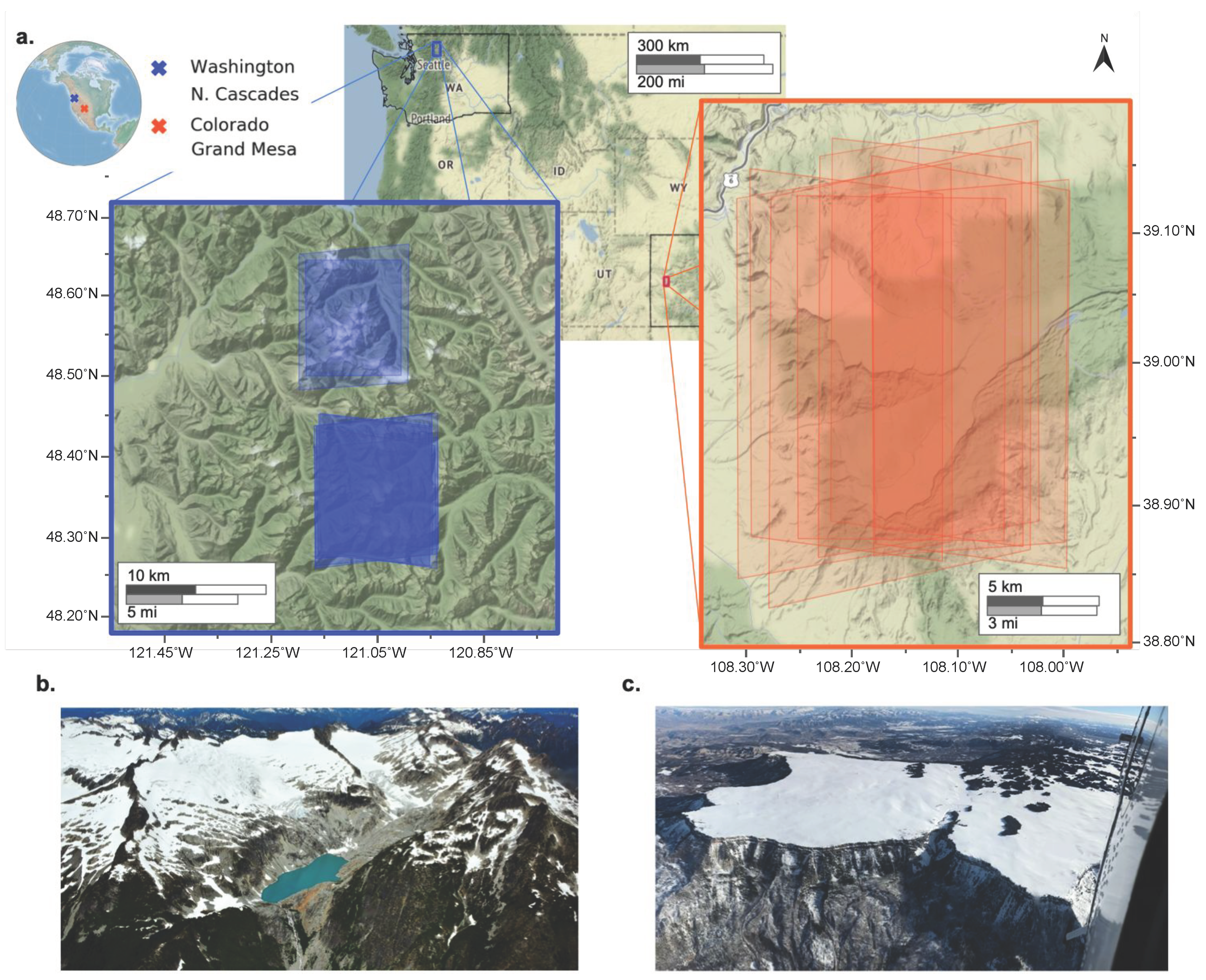

2. Study Sites and Data

2.1. Study Sites

2.2. Data

3. Materials and Methods

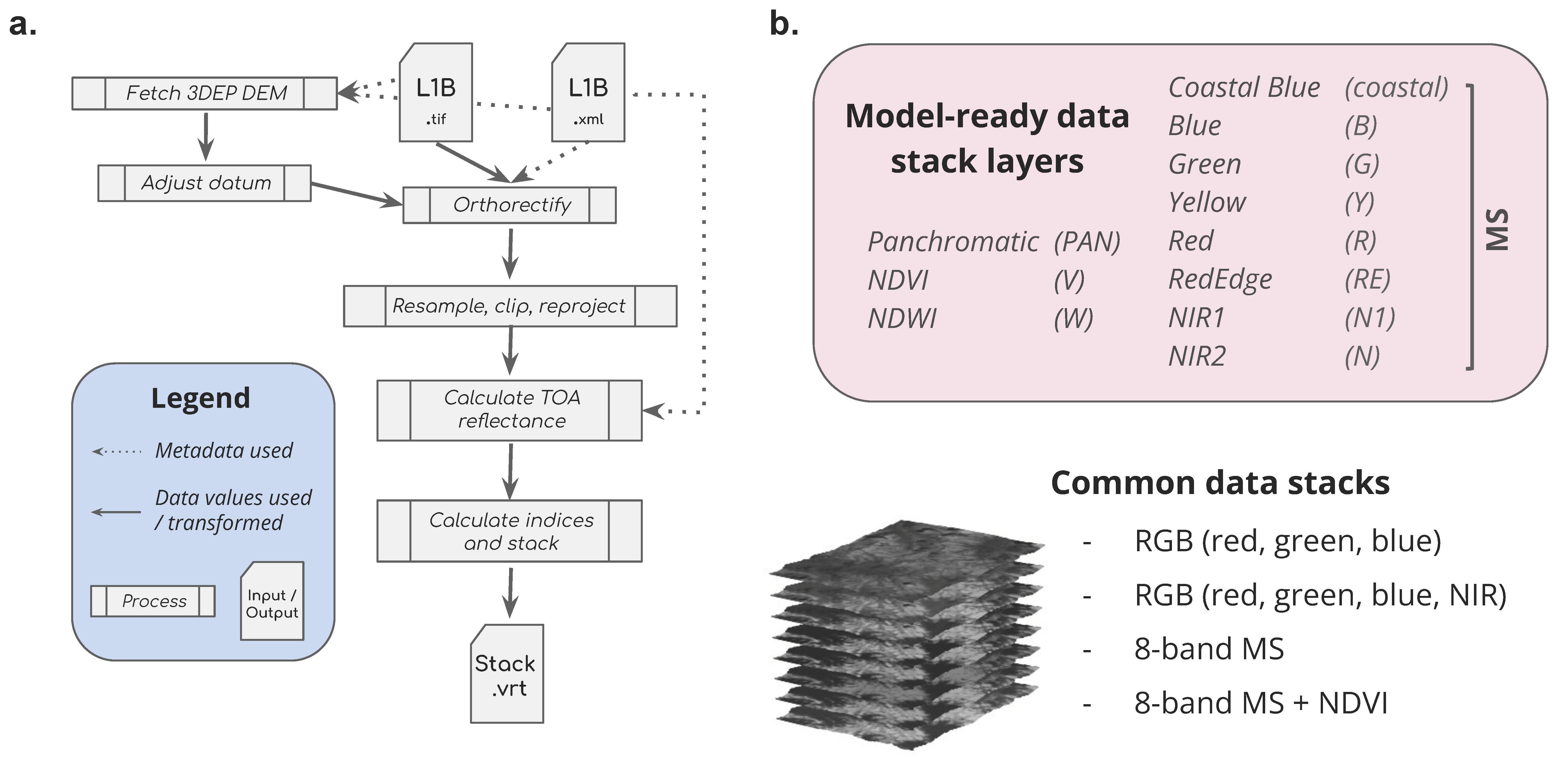

3.1. Pre-Processing

3.2. Classification

3.2.1. Machine Learning Algorithm Selection and Model Implementation

3.2.2. Model Input Configurations

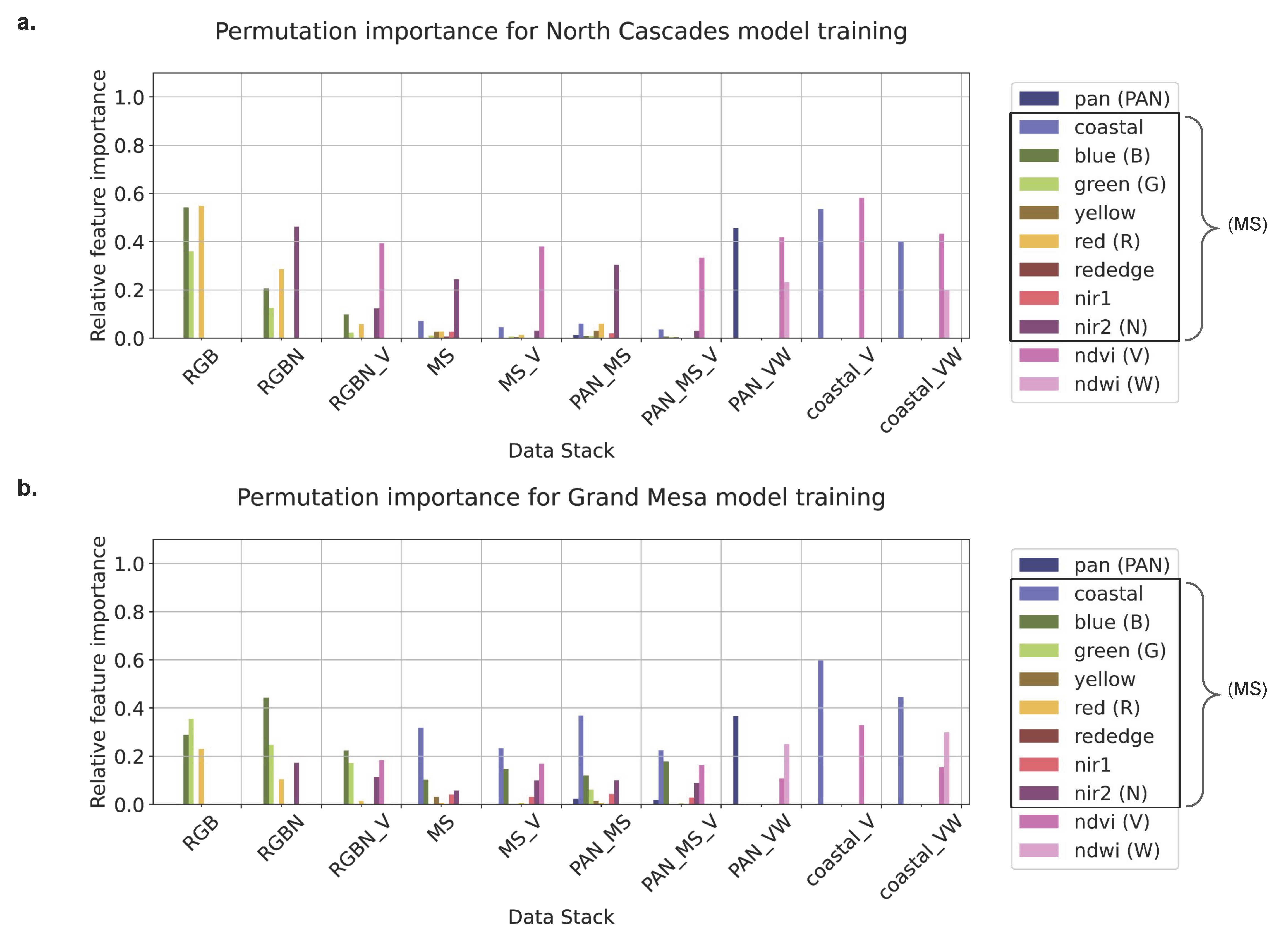

3.2.3. Feature Importance

3.2.4. Training Data

3.2.5. Accuracy Assessment

3.2.6. Model Transfer Tests and Generalization

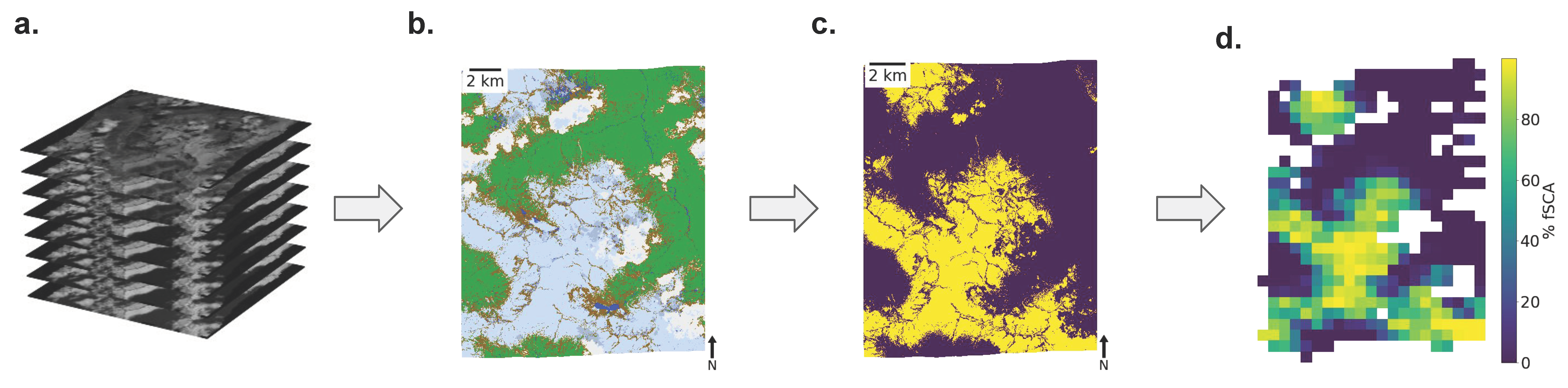

3.2.7. Snow Cover Products and Comparison with Other Snow Cover Datasets

4. Results

4.1. Single-Scene Model Performance

4.2. Single-Scene Feature Importance

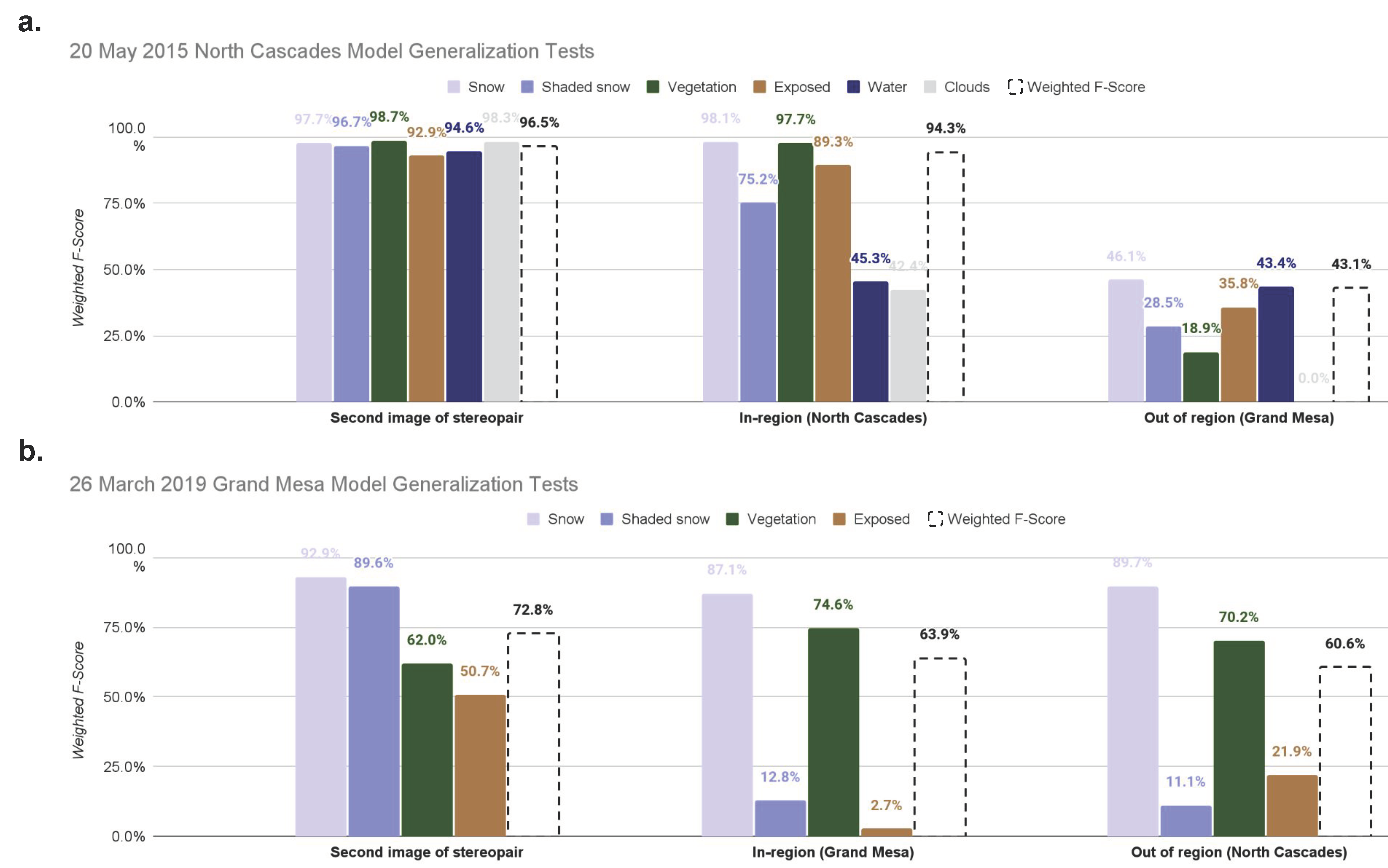

4.3. Model Transfer and Generalization

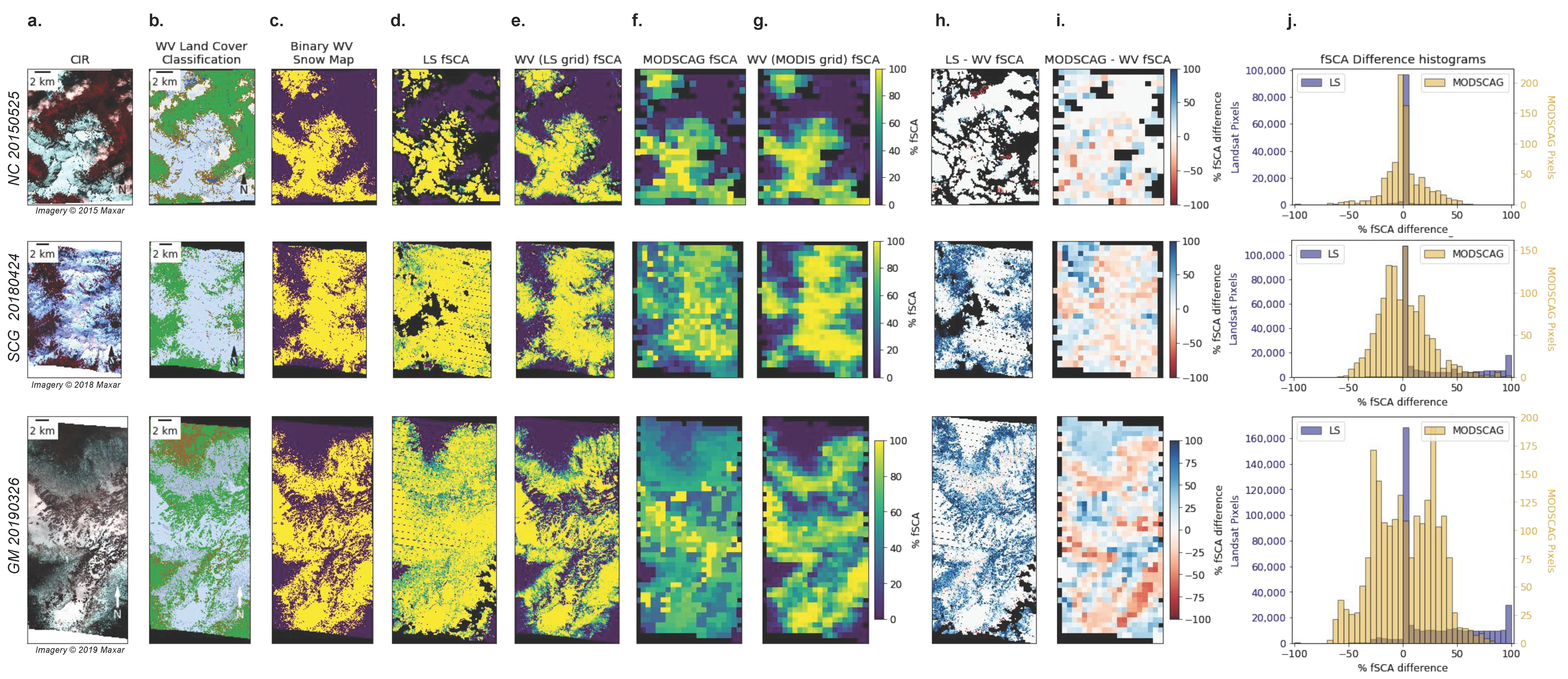

4.4. Snow Classification Comparisons

4.4.1. Qualitative Assessment of fSCA Difference

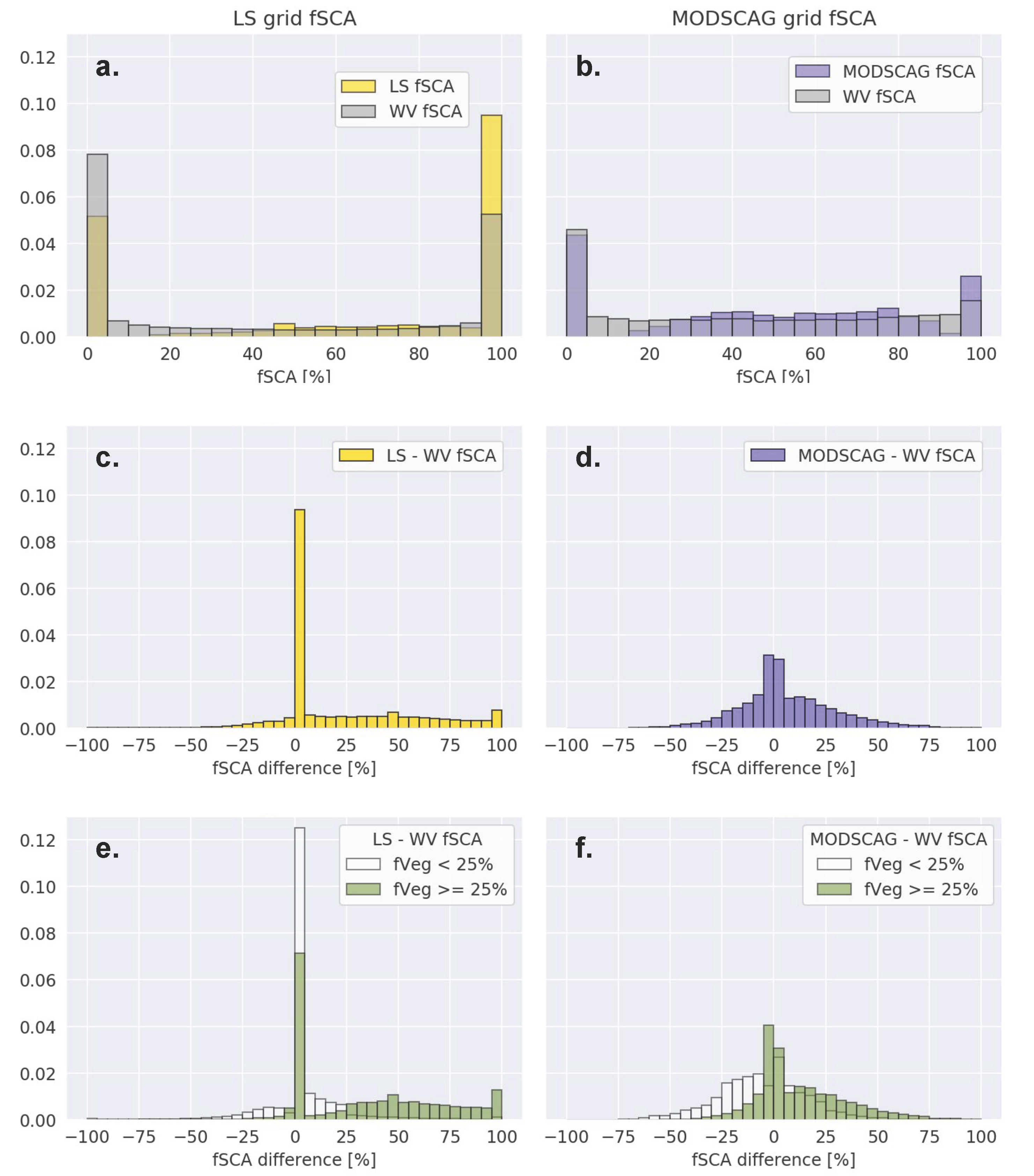

4.4.2. Quantitative Assessment of Aggregated fSCA Difference

5. Discussion

5.1. Single-Scene Models

5.2. Model Transfer and Generalization

5.3. Snow Classification Comparisons

5.4. The Need for Fine-Scale Snow Cover

5.5. Limitations and Considerations

- Improved preprocessing routines to obtain reliable absolute-surface-reflectance values for inter-image comparison;

- A larger library of training data (i.e., more labeled images to cover the range of reflectance values within each class);

- Simplified classification via hierarchical binary models;

- Regionally specific models over global models.

5.6. Operational Potential of WorldView-2 and WorldView-3 Snow Cover Products

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Location | Date | Sensor | Catalog ID | Mean Sun Elevation | Mean Sun Azimuth | Mean Satellite Elevation | Mean Satellite Azimuth | Mean Off-Nadir Viewing Angle | PAN GSD [m] | MS GSD [m] |
|---|---|---|---|---|---|---|---|---|---|---|
| WA N Cascades | 20 May 2015 | WV-3 | 104001000C1BB800 | 60.0° | 157.2° | 58.5° | 196.4° | 28.3° | 0.39 | 1.55 |
| | | | 104001000CB3D400 | 60.0° | 156.7° | 82.7° | 6.6° | 6.9° | 0.31 | 1.25 |
| WA N Cascades: South Cascade Glacier | 24 April 2018 | WV-3 | 104001003B034600 | 53.8° | 162.7° | 63.5° | 145.3° | 23.8° | 0.36 | 1.45 |
| | | | 104001003B7AC300 | 53.8° | 162.3° | 61.4° | 54.2° | 26.0° | 0.37 | 1.50 |
| | 27 May 2018 | WV-3 | 104001003D88B900 | 63.0° | 171.7° | 61.7° | 318.2° | 25.7° | 0.37 | 1.49 |
| | | | 104001003DD34200 | 63.0° | 172.2° | 58.9° | 246.9° | 28.0° | 0.39 | 1.54 |
| | 5 May 2019 | WV-3 | 104001004C8CF300 | 56.6° | 158.2° | 58.1° | 132.6° | 28.7° | 0.39 | 1.57 |
| | | | 104001004CBC0600 | 56.6° | 157.8° | 58.8° | 72.2° | 28.3° | 0.39 | 1.55 |
| CO: Grand Mesa | 1 February 2017 | WV-3 | 1040010026C28A00 | 31.9° | 160.5° | 62.3° | 137.0° | 24.9° | 0.37 | 1.47 |
| | | | 10400100276B9500 | 31.9° | 160.2° | 57.1° | 52.7° | 29.9° | 0.40 | 1.59 |
| | | | 1040010028192C00 | 32.0° | 160.7° | 56.9° | 152.4° | 29.6° | 0.40 | 1.59 |
| | | | 10400100286A3900 | 31.9° | 160.4° | 63.3° | 65.7° | 24.2° | 0.36 | 1.45 |
| | 3 April 2018 | WV-2 | 103001007A0DBD00 | 53.7° | 290.0° | 78.9° | 109.4° | 10.0° | 0.48 | 1.91 |
| | | | 103001007B395800 | 53.7° | 210.2° | 52.7° | 28.1° | 33.5° | 0.65 | 2.59 |
| | 26 March 2019 | WV-3 | 1040010048434C00 | 52.3° | 163.4° | 62.7° | 315.7° | 24.9° | 0.37 | 1.47 |
| | | | 104001004918EB00 | 52.3° | 163.8° | 55.8° | 236.1° | 30.7° | 0.41 | 1.62 |

| Data Stack | Input Data Stack Layers |
|---|---|
| coastal_V | coastal, NDVI |
| coastal_VW | coastal, NDVI, NDWI |
| PAN_VW | panchromatic, NDVI, NDWI |
| RGB | red, green, blue |
| RGBN | red, green, blue, near infrared 2 |
| RGBN_V | red, green, blue, near infrared 2, NDVI |
| MS | coastal, blue, green, yellow, red, red edge, near infrared 1, near infrared 2 |
| MS_V | coastal, blue, green, yellow, red, red edge, near infrared 1, near infrared 2, NDVI |
| PAN_MS | panchromatic, coastal, blue, green, yellow, red, red edge, near infrared 1, near infrared 2 |
| PAN_MS_V | panchromatic, coastal, blue, green, yellow, red, red edge, near infrared 1, near infrared 2, NDVI |
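As an illustration of how one of the input data stacks listed above might be assembled and passed to a random forest, the sketch below builds an RGBN_V-style stack (red, green, blue, near infrared 2, NDVI) from synthetic arrays and fits scikit-learn's `RandomForestClassifier`. It is a hedged example, not the authors' implementation: the band arrays and class labels are random placeholders, the choice of near infrared 2 in the NDVI and NDWI formulas is an assumption, and the hyperparameters are arbitrary. NDVI and NDWI follow the standard normalized-difference definitions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def normalized_difference(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Return (a - b) / (a + b), with 0 where the denominator is 0."""
    a = a.astype(float)
    b = b.astype(float)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


# Synthetic reflectance-like bands (placeholders, not real image data).
rng = np.random.default_rng(0)
rows, cols = 20, 20
bands = {name: rng.uniform(0.01, 1.0, size=(rows, cols))
         for name in ("red", "green", "blue", "nir2")}

# Standard index definitions; using nir2 here is an assumption.
ndvi = normalized_difference(bands["nir2"], bands["red"])    # (NIR - red) / (NIR + red)
ndwi = normalized_difference(bands["green"], bands["nir2"])  # (green - NIR) / (green + NIR)

# RGBN_V-style stack; NDWI would be appended for the *_VW configurations.
stack = np.stack([bands["red"], bands["green"], bands["blue"],
                  bands["nir2"], ndvi], axis=-1)

# Flatten to (n_pixels, n_features) for scikit-learn.
X = stack.reshape(-1, stack.shape[-1])
# Hypothetical integer class labels (random placeholders for labeled pixels).
y = rng.integers(0, 3, size=X.shape[0])

model = RandomForestClassifier(n_estimators=50, random_state=0)
model.fit(X, y)

# Predict a class for every pixel and restore the image shape.
class_map = model.predict(X).reshape(rows, cols)
```

Swapping the list passed to `np.stack` is enough to emulate the other configurations in the table, which makes it straightforward to compare model performance across input stacks.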

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, J.M.; Shean, D. Improving Mountain Snow and Land Cover Mapping Using Very-High-Resolution (VHR) Optical Satellite Images and Random Forest Machine Learning Models. Remote Sens. 2022, 14, 4227. https://doi.org/10.3390/rs14174227
