1. Introduction

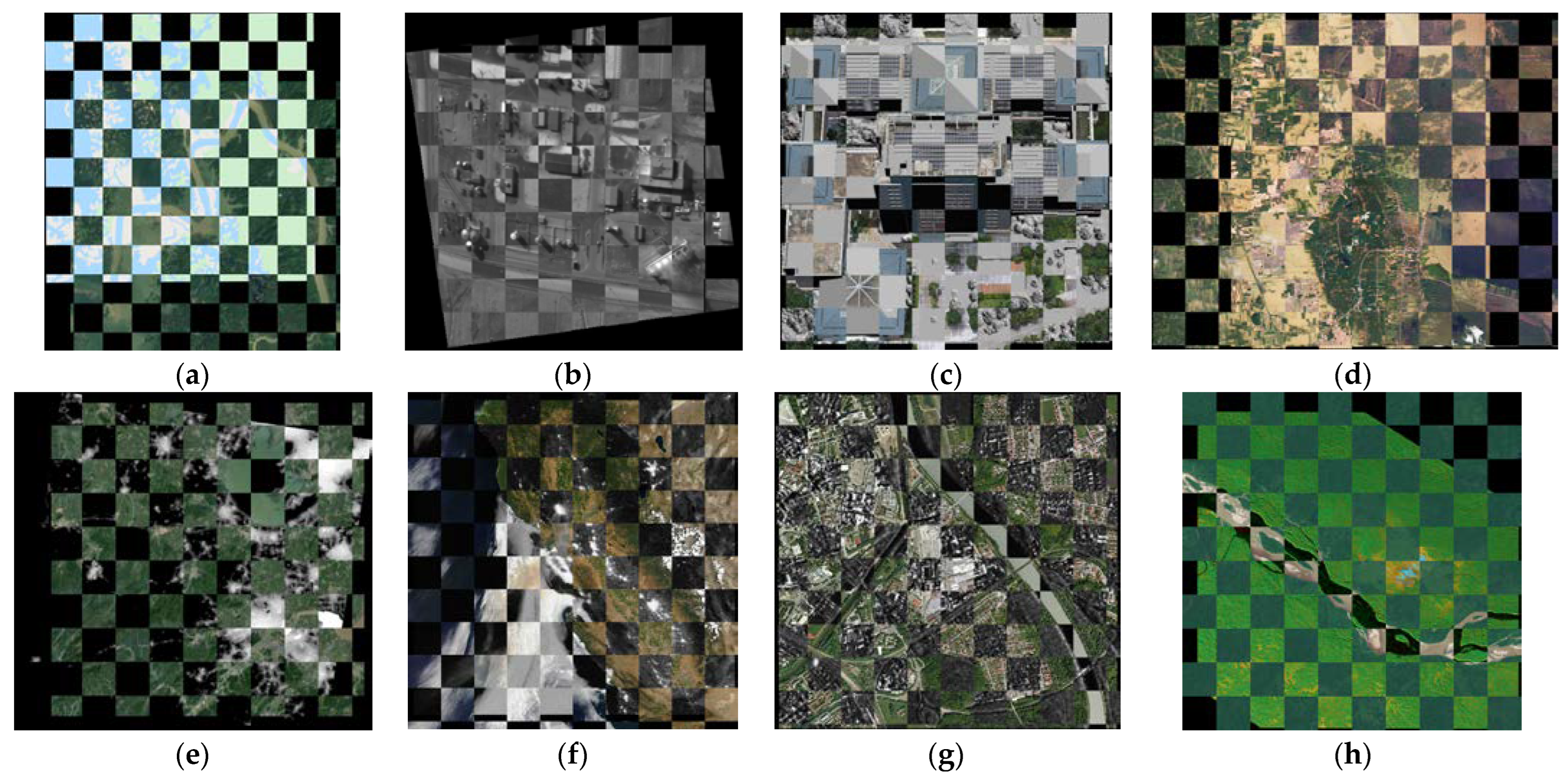

With the continuous improvement of remote sensing systems in recent years, the joint application of multi-modal remote sensing images (MRSIs) has received increasing attention. As a fundamental step in MRSI processing, image matching is the process of aligning two or more images with overlapping areas acquired under different imaging conditions [1], and its accuracy strongly affects subsequent applications. However, owing to their different physical imaging mechanisms, MRSIs may exhibit significant nonlinear radiation distortions (NRD) and geometric distortions, which pose a considerable challenge to image matching [2].

Current multi-modal image matching methods fall into three main categories: area-based, feature-based, and learning-based methods [3]. With continuous technological advances, learning-based matching algorithms have gradually matured and achieved promising results [4,5]. However, these algorithms require large training datasets, generalize weakly, and demand substantial computational resources, which limits their general applicability.

The key to area-based matching methods lies in establishing reasonable and effective similarity measures. Traditional spatial domain measures include the sum of squared differences (SSD) [6], normalized cross-correlation (NCC), mutual information (MI) [7], and matching by tone mapping (MTM) [8], but such methods have high time complexity. Kuglin et al. developed a phase correlation algorithm that exploits frequency domain information by transforming SAR and optical satellite images into the frequency domain [9]. The OS-PC algorithm proposed by Xiang et al. combined robust feature representation with 3D phase correlation, making the algorithm more robust to radiometric and geometric differences [10]. To match images with significant NRD, Ye et al. proposed the HOPC descriptor [11], which uses phase congruency [12] features instead of the original gradient features to represent the geometric structure or shape features of images, achieving better matching results. To overcome the sparse sampling of HOPC, Ye et al. developed the channel features of orientated gradients (CFOG) algorithm, which constructs descriptors pixel by pixel [13]. Fan et al. further proposed the angle-weighted orientation gradients (AWOG) descriptor, which assigns gradient values only to the two most relevant orientations and uses 3D phase correlation as the similarity metric, significantly improving performance [14]. However, area-based methods require good initial correspondences and can handle only simple translational transformations, so they have not been widely used.
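To make the similarity measures above concrete, the following is a minimal NumPy sketch of SSD, NCC, and classic 2D phase correlation on raw intensity patches. It is illustrative only: the function names are hypothetical, it handles integer cyclic translations only, and it is not the 3D phase correlation over feature cubes used by OS-PC, CFOG, or AWOG.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences: lower values mean more similar patches."""
    d = a.astype(float) - b.astype(float)
    return float(np.sum(d * d))

def ncc(a, b):
    """Normalized cross-correlation in [-1, 1]: higher values mean more similar.
    Invariant to linear (gain and offset) intensity changes, but not to NRD."""
    a = a.astype(float) - a.mean()
    b = b.astype(float) - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

def phase_correlation(ref, mov):
    """Estimate the integer shift (dy, dx) such that mov ~ np.roll(ref, (dy, dx))."""
    cross = np.fft.fft2(mov) * np.conj(np.fft.fft2(ref))
    cross /= np.abs(cross) + 1e-12           # keep only the phase difference
    corr = np.real(np.fft.ifft2(cross))      # ideally a delta at the shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = corr.shape
    # Peaks past the midpoint correspond to negative (wrapped) shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)
```

For example, rolling a random 32 × 32 patch by (3, -5) and passing both patches to `phase_correlation` recovers (3, -5). The frequency domain route finds the best translation with two forward FFTs and one inverse FFT instead of evaluating SSD or NCC at every candidate offset, which is why translation is the natural (and, as noted above, limiting) case for area-based methods.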

Feature-based matching methods accomplish the final match by determining reliable feature correspondences between images. The scale-invariant feature transform (SIFT) is one of the most representative algorithms that has been widely used in optical image registration [

15]. Since then, many improved versions of the SIFT algorithm have been developed. PCA-SIFT effectively simplifies the computation of the original algorithm by principal component analysis [

16]. Speeded-up robust feature (SURF) is based on the Hessian matrix for feature detection and accelerates the operation through the integral graph technique [

17]. Affine-SIFT makes SIFT affine-invariant by simulating two camera axis orientation parameters [

18]. SAR-SIFT enhances robustness to speckle noise by introducing a new gradient definition [

19]. Adaptive binning scale-invariant feature transform (AB-SIFT) adopts an adaptive binning strategy to compute the local feature descriptor, which greatly increases the distinguishability of the descriptor [

20]. PSO-SIFT overcomes the intensity differences between remote sensing images by optimizing the gradient definition and improves the feature matching strategy by integrating the location, scale, and orientation information of each keypoint [

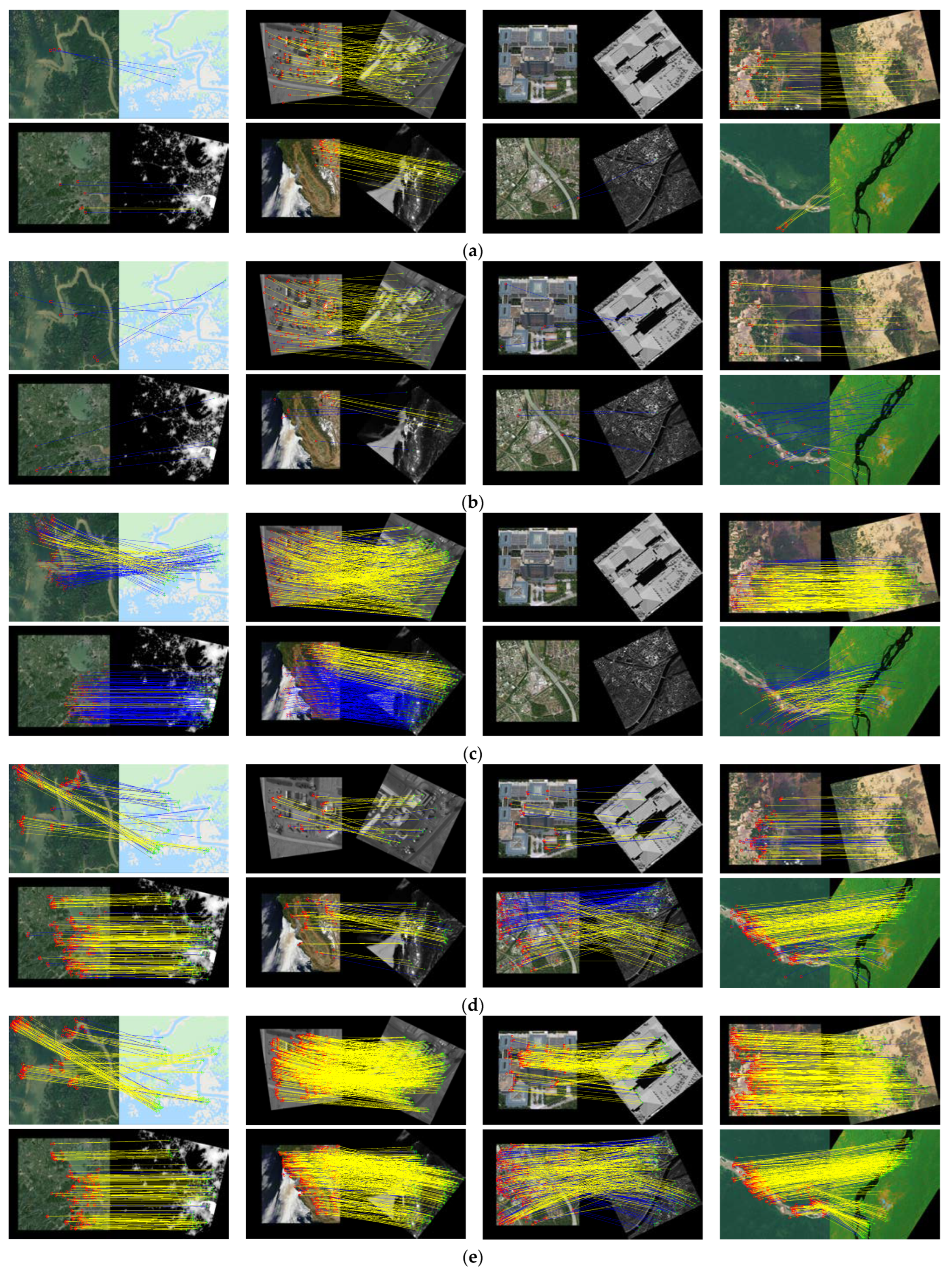

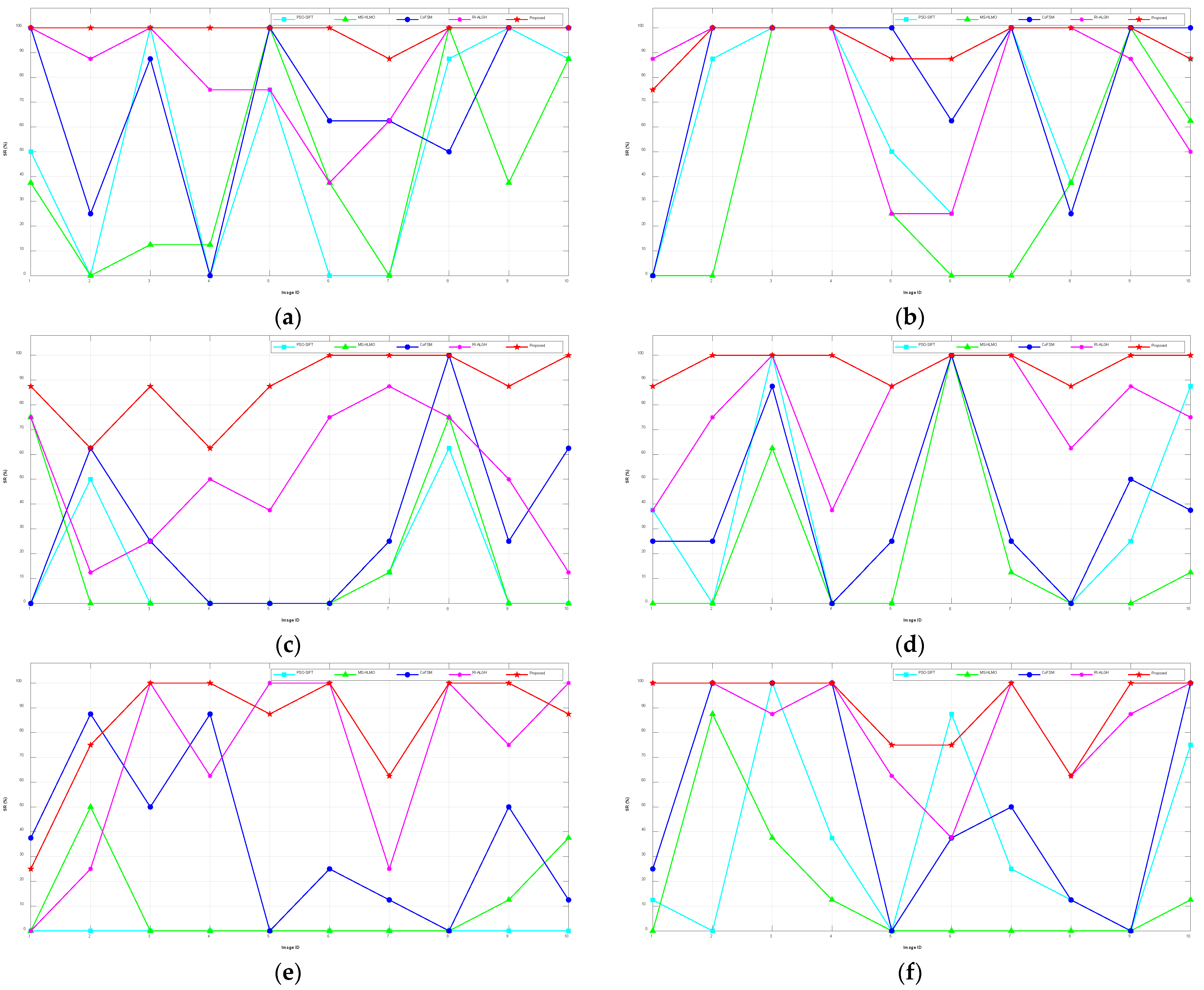

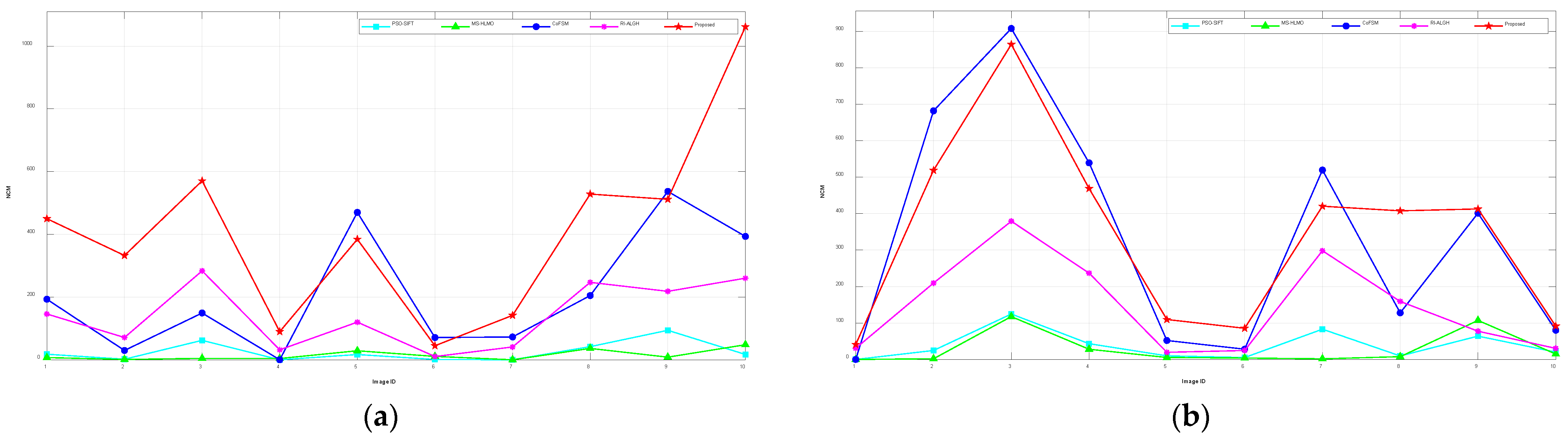

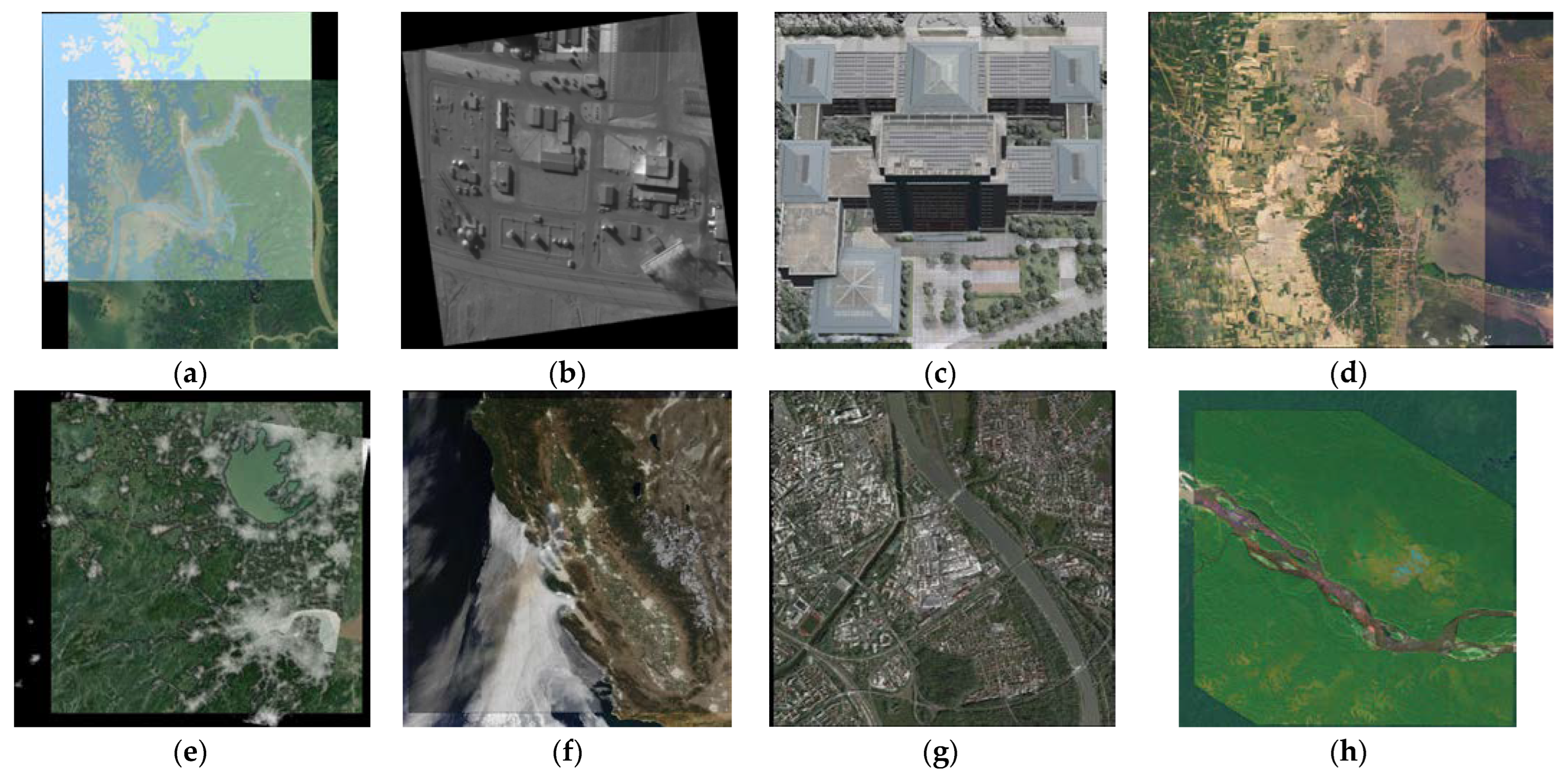

21]. OS-SIFT utilizes the multiscale ratio of exponentially weighted averages and multiscale Sobel operators for gradient calculation. Then, keypoints are determined by performing a local maximum search in the scale space and considering the spatial relationship between the keypoints, which significantly enhances the robustness of the algorithm [

22]. To further enhance robustness to NRD, Gao et al. proposed a feature-based multiscale histogram of local main orientation (MS-HLMO) registration algorithm for feature extraction on a partial main orientation map (PMOM) with a generalized gradient location, which is characterized by high intensity, rotation, and scale invariance [

23]. Yao et al. constructed a new co-occurrence scale space based on the co-occurrence filter (CoF), optimized the image gradient, and established a position-optimized Euclidean distance function. The performance of the algorithm was found to be significantly improved [

1].
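SURF's acceleration rests on the integral image (summed-area table): once it is built, the sum over any axis-aligned box costs four lookups regardless of the box size, which is what makes box-filter approximations of Hessian responses cheap. The NumPy sketch below shows only this data structure; the function names are hypothetical, and SURF's detector and descriptor are not reproduced.

```python
import numpy as np

def integral_image(img):
    """Summed-area table with a leading row and column of zeros,
    so ii[r, c] == img[:r, :c].sum()."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii

def box_sum(ii, r0, c0, r1, c1):
    """Sum of img[r0:r1, c0:c1] from four table lookups (O(1) per box)."""
    return ii[r1, c1] - ii[r0, c1] - ii[r1, c0] + ii[r0, c0]
```

For `img = np.arange(20).reshape(4, 5)`, `box_sum(integral_image(img), 1, 1, 3, 4)` equals `img[1:3, 1:4].sum()`, that is, 57. Padding with a zero row and column removes the boundary special cases when a box touches the image edge.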

The above algorithms all describe features with gradients and therefore mainly exploit the spatial domain information of the image. Frequency domain information, however, also plays an important role in feature-based matching algorithms. Fan et al. proposed a structural descriptor (PCSD) that combines nonlinear diffusion and phase congruency and is built on phase congruency structural images in a grouping manner, effectively increasing the distinctiveness of the descriptors [24]. Ye et al. proposed using MMPC-Lap for feature detection and a local histogram of orientated phase congruency (LHOPC) for feature description, which resists variations in illumination and contrast [25]. The radiation-invariant feature transform (RIFT) first introduced the concept of the maximum index map (MIM), which can resist a certain degree of NRD [26]. Yao et al. constructed an anisotropic weighted moment equation and extended the phase congruency model to design the histogram of absolute phase consistency gradients (HAPCG) algorithm [27]. Yu et al. proposed a novel descriptor called the rotation-invariant amplitudes of log-Gabor orientation histograms (RI-ALGH) by combining spatial feature detection with local frequency domain description [28]. Fan et al. proposed an effective coarse-to-fine matching method for multi-modal remote sensing images (3MRS), which performs coarse matching using MIM and then refines it through template matching with a 3D phase correlation strategy, further improving matching accuracy [29]. Yang et al. constructed improved local phase sharpness and phase orientation features to replace the original gradient magnitude and orientation features, and established a local phase sharpness orientation (LPSO) descriptor in the log-polar coordinate system [30]. Although the above feature-based matching algorithms are robust to radiometric and geometric distortions to varying degrees, their performance degrades significantly when both are present, mainly because of two difficulties [2]: (1) during feature description, estimating the principal orientation from local image features is often time-consuming and error-prone, which affects the final matching results; and (2) during feature matching, significant NRD produces a large number of outliers.
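The MIM is commonly described as follows: log-Gabor filter amplitudes are summed over scales for each orientation, and each pixel stores the index of the orientation with the largest sum. The minimal NumPy sketch below assumes the amplitude stack has already been computed; the log-Gabor filter bank itself is not implemented, and `maximum_index_map` is a hypothetical name rather than RIFT's own code.

```python
import numpy as np

def maximum_index_map(amplitudes):
    """Build an MIM from a precomputed amplitude stack.

    amplitudes: array of shape (n_scales, n_orients, H, W) holding filter
    response magnitudes (assumed input; filtering is done elsewhere).
    Returns an (H, W) array of 1-based orientation indices."""
    per_orient = amplitudes.sum(axis=0)        # sum over scales -> (n_orients, H, W)
    return np.argmax(per_orient, axis=0) + 1   # strongest orientation per pixel
```

Because each pixel keeps only which orientation responds most strongly, not how strongly, multiplying all responses at a pixel by any positive factor leaves the map unchanged. This is one intuition for why the MIM tolerates a certain degree of NRD, while it remains sensitive to rotation, since rotating the image changes which orientation index dominates.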

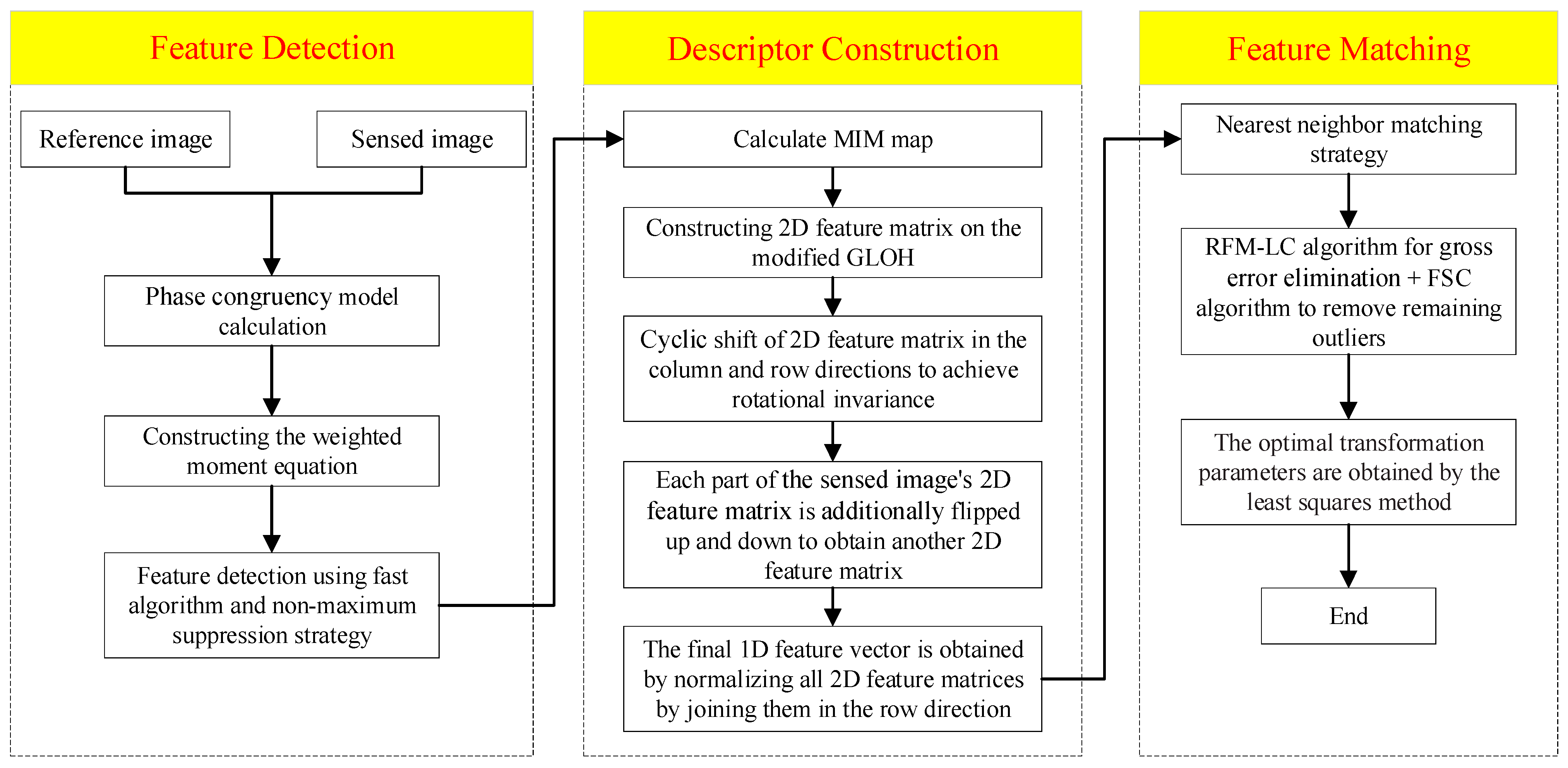

Based on the above analysis, we propose a rotationally robust feature matching method based on the MIM and a 2D feature matrix, called the rotation-invariant local phase orientation histogram (RI-LPOH), which better handles severe rotational and translational deformations in the presence of significant NRD. RI-LPOH makes three main contributions:

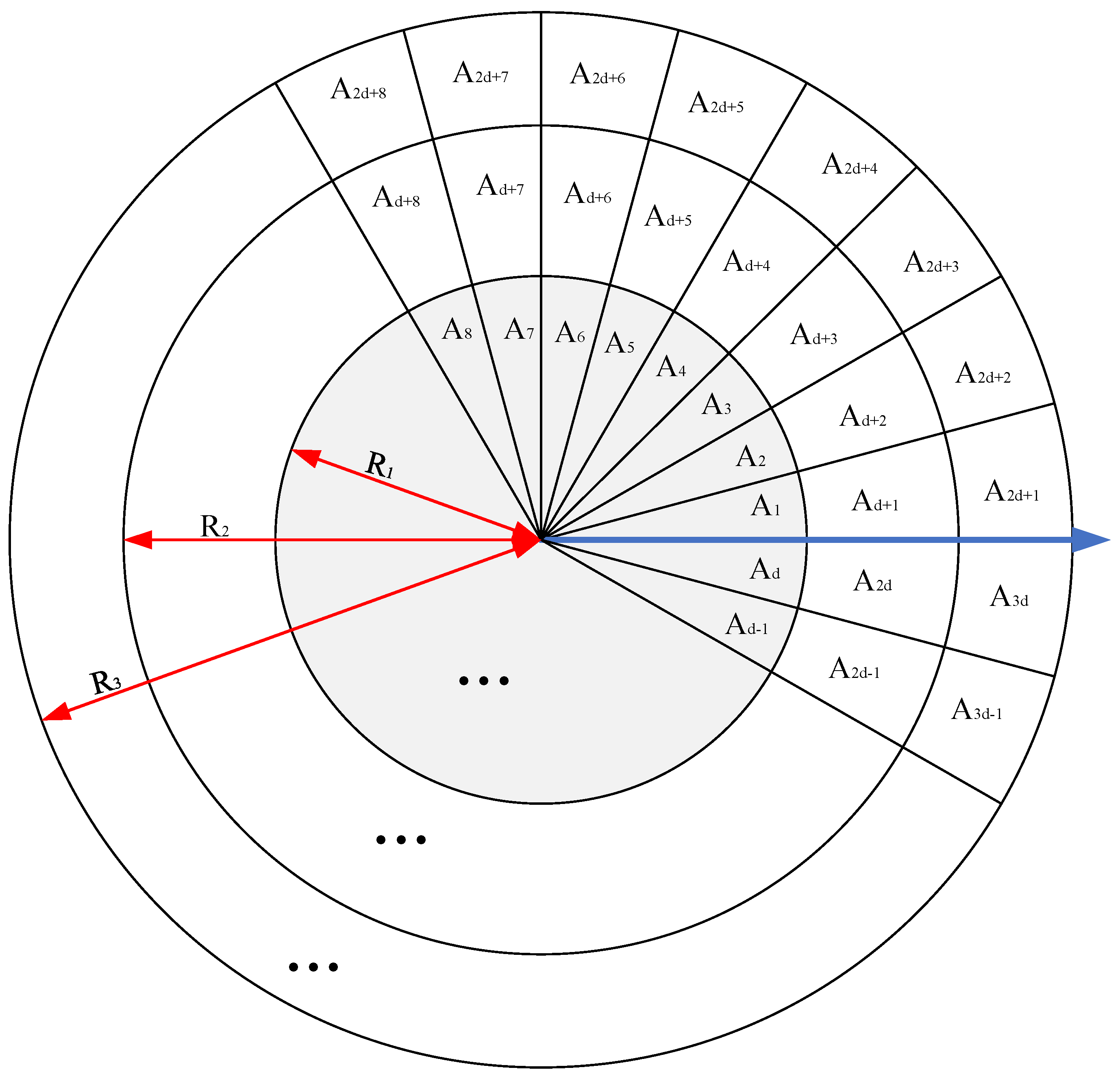

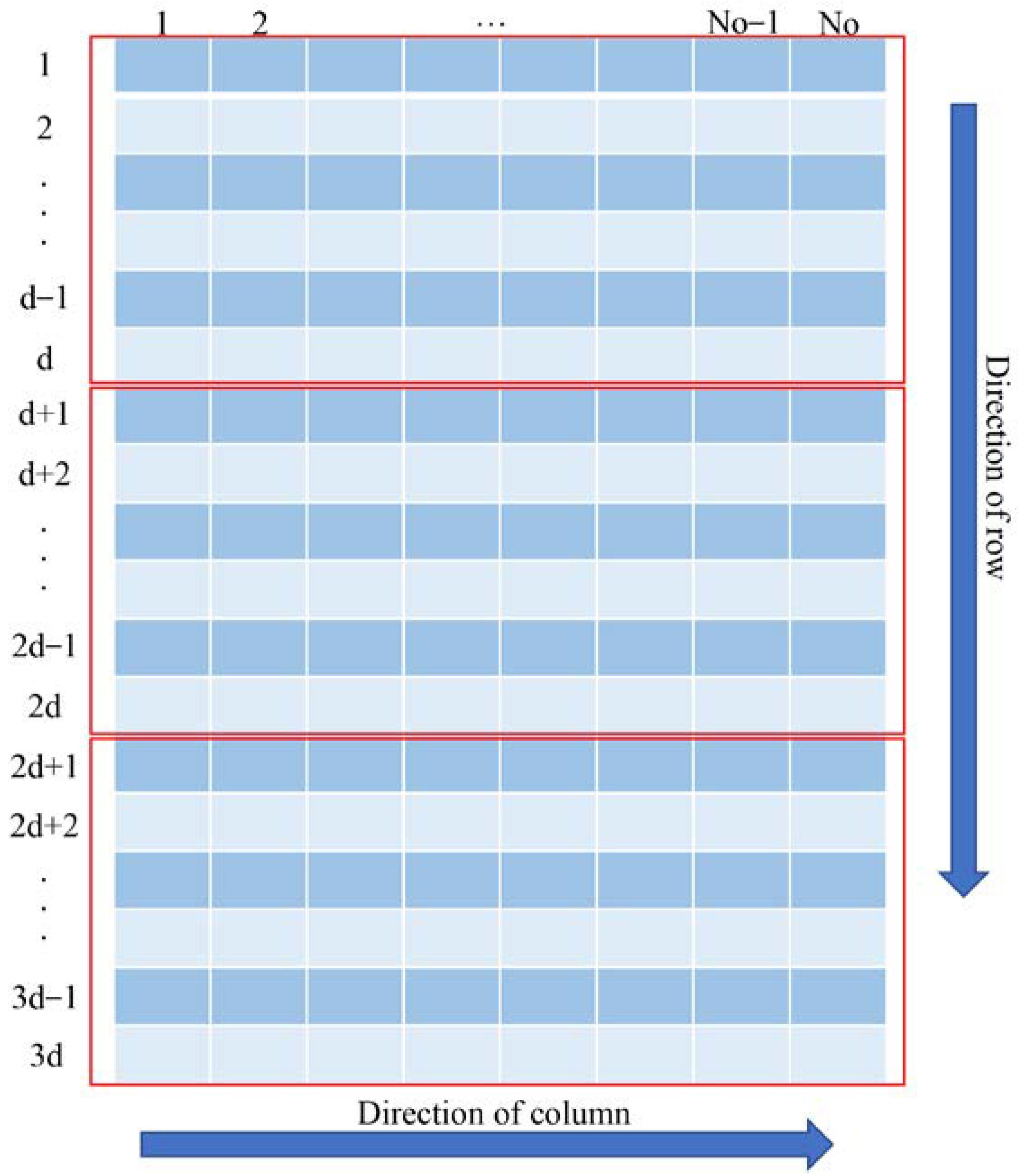

We improved the gradient location and orientation histogram (GLOH) structure and achieved rotation robustness by cyclically shifting the 2D feature matrix in both the column and row directions, without separately estimating the principal orientation, which greatly improved computational efficiency.

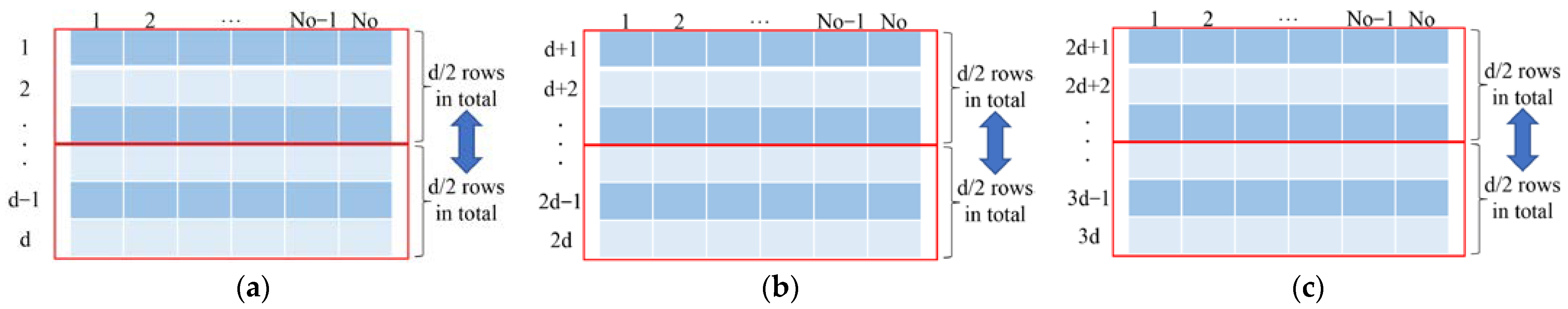

After the cyclic shift, we additionally flipped each part of the sensed image’s 2D feature matrix upside down to cope with intensity inversion and further improve the matching success rate.

We introduced the RFM-LC [31] algorithm to filter the initial matches, alleviating the adverse effects of a high proportion of outliers.
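The cyclic-shift idea in the first contribution can be illustrated with a small sketch that replaces principal orientation estimation by a search over shifts. It rests on assumptions not stated in this section: the descriptor is an n_rows × n_cols NumPy matrix (for instance, rows indexing angular sectors of a GLOH-like grid and columns indexing orientation bins), and the distance is Euclidean. `shift_invariant_distance` is a hypothetical name; the upside-down flipping step and RFM-LC filtering are not modeled, and this is not the authors' implementation.

```python
import numpy as np

def shift_invariant_distance(desc_ref, desc_sen):
    """Smallest Euclidean distance between desc_ref and any cyclic
    row/column shift of desc_sen.

    Returns (distance, row_shift, col_shift) for the best alignment.
    Assumes both descriptors are 2D arrays of the same shape."""
    best = (np.inf, 0, 0)
    n_rows, n_cols = desc_sen.shape
    for dr in range(n_rows):              # cyclic shift along rows
        for dc in range(n_cols):          # cyclic shift along columns
            cand = np.roll(desc_sen, shift=(dr, dc), axis=(0, 1))
            d = float(np.linalg.norm(desc_ref - cand))
            if d < best[0]:
                best = (d, dr, dc)
    return best
```

If a rotation by a multiple of the angular bin width permutes sectors and orientation bins cyclically, some shift pair realigns the two matrices, so no explicit principal orientation is needed. This sketch searches all n_rows × n_cols pairs for simplicity; when rotation couples the two shifts, the search could be restricted to the coupled pairs to save time.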

The rest of this paper is organized as follows:

Section 2 describes the implementation of the proposed method in detail.

Section 3 presents the experiments and results for RI-LPOH.

Section 4 discusses several important aspects related to RI-LPOH.

Section 5 summarizes the whole paper.