Abstract

Deep-learning (DL)-based object detection algorithms can greatly benefit the community at large in fighting fires, advancing climate intelligence, and reducing health complications caused by hazardous smoke particles. Existing DL-based techniques, which are mostly based on convolutional networks, have proven to be effective in wildfire detection. However, there is still room for improvement. First, existing methods tend to have some commercial aspects, with limited publicly available data and models. In addition, studies aiming at the detection of wildfires at the incipient stage are rare. Smoke columns at this stage tend to be small, shallow, and often far from view, with low visibility. This makes finding and labeling enough data to train an efficient deep learning model very challenging. Finally, the inherent locality of convolution operators limits their ability to model long-range correlations between objects in an image. Recently, encoder–decoder transformers have emerged as interesting solutions beyond natural language processing to help capture global dependencies via self- and inter-attention mechanisms. We propose Nemo: a set of evolving, free, and open-source datasets, processed in standard COCO format, and wildfire smoke and fine-grained smoke density detectors, for use by the research community. We adapt Facebook’s DEtection TRansformer (DETR) to wildfire detection, which results in a much simpler technique, where the detection does not rely on convolution filters and anchors. Nemo is the first open-source benchmark for wildfire smoke density detection and Transformer-based wildfire smoke detection tailored to the early incipient stage. Two popular object detection algorithms (Faster R-CNN and RetinaNet) are used as alternatives and baselines for extensive evaluation. Our results confirm the superior performance of the transformer-based method in wildfire smoke detection across different object sizes. Moreover, we tested our model with 95 video sequences of wildfire starts from the public HPWREN database. Our model detected 97.9% of the fires in the incipient stage and 80% within 5 min from the start. On average, our model detected wildfire smoke within 3.6 min from the start, outperforming the baselines.

1. Introduction

1.1. Motivation

There were more than 58,000 wildfires recorded in the United States in 2021, which have burned more than seven million acres [1,2]. The condition is particularly worse for the Western United States, where ninety percent of the land is impacted by severe drought. In California, fire seasons have been starting earlier and lasting longer. The five-year average cost of fire fighting in the U.S. is USD 2.35 billion [3]. Furthermore, researchers [4] have found evidence to associate fine particles found in wildfire smoke to repository morbidity and complications in general. The shear damage and suppression costs of wildfires have motivated many researchers to develop systems to detect fire at the early stages.

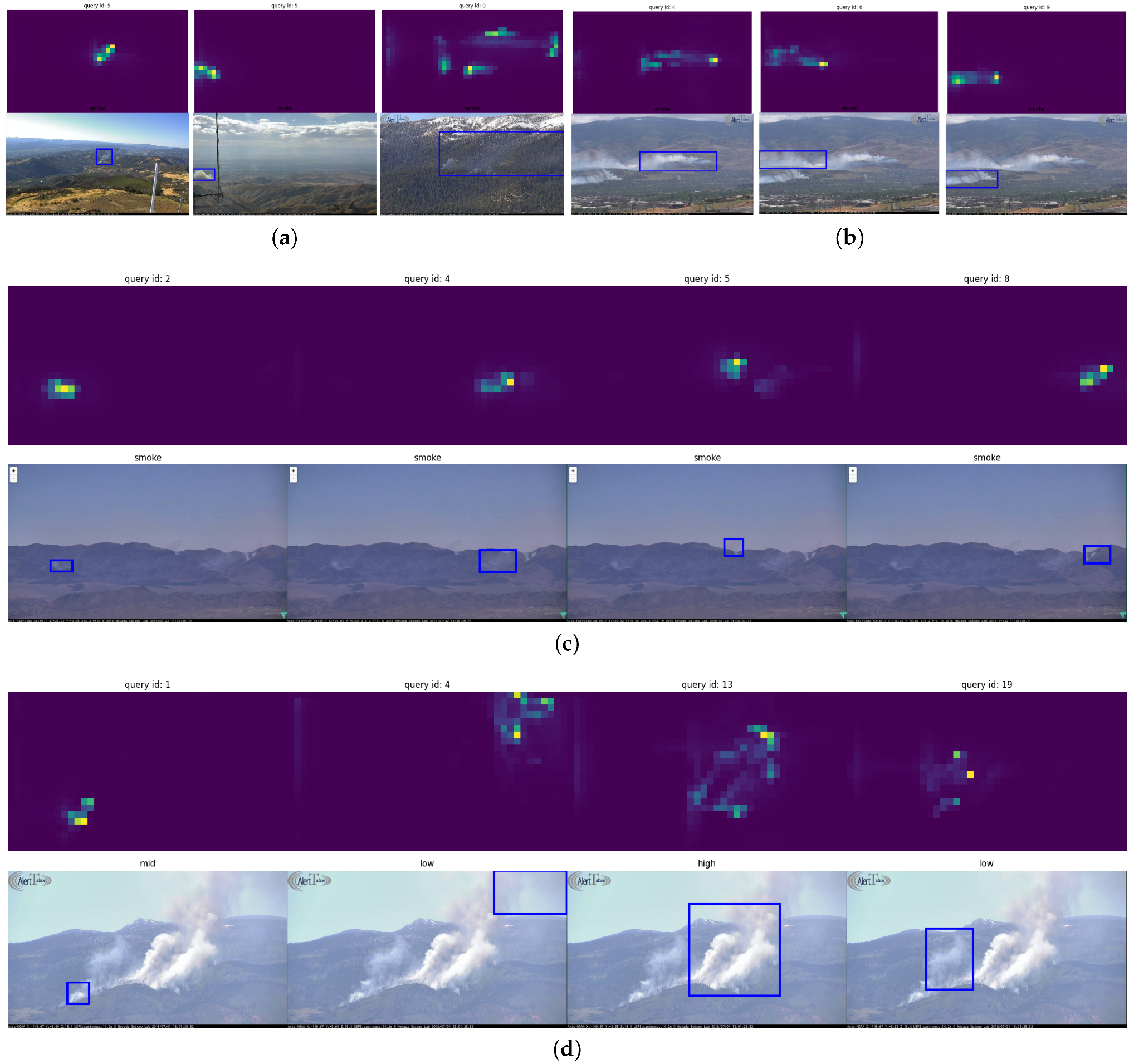

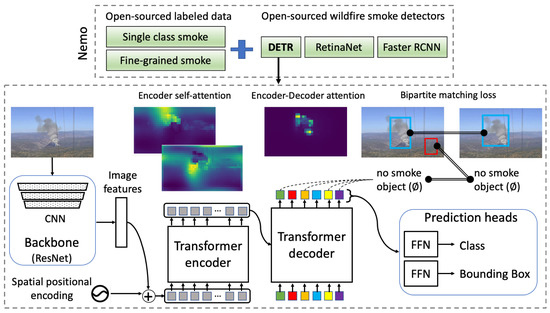

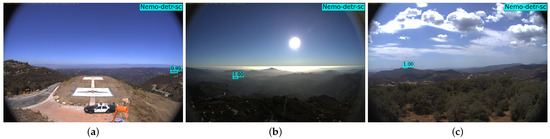

Figure 1.

Encoder self-attention for a set of reference points. The example shows that the encoder is able to separate instances and regions, even at the early stages of training.

Figure 1.

Encoder self-attention for a set of reference points. The example shows that the encoder is able to separate instances and regions, even at the early stages of training.

The first detection systems were based on typical fire-sensing technologies such as gas, heat, and smoke detectors. Conventional sensors have a limited range and coverage, while suffering from a slow response time, as smoke particles need to reach the sensors to activate them [5,6]. Later works were based on optical remote sensing at three different acquisition levels: satellite, aerial, and terrestrial. References [7,8,9] leveraged satellite remote sensing to detect fire. Satellites offer extensive views of the Earth’s surface; however, they suffer from a relatively coarse spatial and temporal resolution and, thus, are only effective in the detection and monitoring of large-scale forest fires [10]. Moreover, satellites operating in Low Earth Orbit (LEO) offer finer resolutions, making them more suitable for detecting fire in the early phases, but take a long time to re-position and thus have limited coverage [11]. Ideally, a constellation of low-orbit satellites with reliable and fast network capabilities can provide the required coverage [11]. Aerial remote sensing approaches, such as deploying sensors on high-altitude aircraft and balloons, have also been tried, but they are costly or have limited coverage [11].

Finally, a terrestrial option to sense fire would be optical cameras installed at good vantage points. Two large-scale networks of such cameras are already deployed in the Western United States. AlertWildfire [12] provides access to 900 cameras across eight states, while HPWREN [13] provides a comprehensive coverage of the wildfire-prone Southern California. The placement of these cameras enables a range view of up to 50 miles [14]. Some are also capable of panning, tilting, and zooming (PTZ), offering additional options to monitor wildfires at different resolutions. In this paper, we use the videos from these two networks as our raw input data for training and evaluating our deep-learning-based wildfire smoke detectors, an example of which is shown in Figure 1. The frames extracted from these raw videos are mostly 2 Mega Pixels (MP), with 3 MP and 6 MP the next-most-common dimensions in the dataset.

Wildfires typically ignite in rural and remote areas, such as forests, using abundant wildland vegetation as fuel [15]. They spread quickly and uncontrollably, making them difficult to control in a short time. Thus, the most important objective of any wildfire detection system is to detect wildfires early and before they grow [15]. This is called the incipient stage of fire development. Wildfires in the incipient stage comprise a non-flaming smoldering with relatively low heat. Recognizing fire at this stage offers the best chance of suppression. Moreover, flame is not typically visible at this stage; thus, any wildfire detection system that aims at early detection must focus on detecting smoke and not flame. Additionally, the smoke plume at this stage tends to be very small and, in some cases, not visible [11,16] by human observation of wildfire videos, especially in the first few minutes after the start of the fire. Thus, any wildfire detection system tailored to early detection should be able to detect small smoke plumes, typically far from view and on the horizon.

1.2. Challenges

After an extensive review of the literature, we found that the majority of works have a holistic approach towards fire stages and do not focus on a particular stage (e.g., incipient). The fastest wildfire smoke detection method that we know of [16] reported a 6.3 min detection latency based on 24 challenging wildfire sequences (i.e., a 9 min detection latency after converting their reference times to the official start times reported in the HPWREN database [17]). Moreover, the majority of existing works [10,18,19,20,21,22,23,24,25,26,27,28,29,30,31] do not distinguish between flame and smoke or simply focus on flame, which has more pronounced features, is easier to detect, and is mostly visible only in the more advanced stages. Furthermore, a significant number of works detect smoke or flame at relatively close range [18,19,20,21,22,23,32,33,34,35], such as using CCTV cameras in indoor or urban environments [24,25,26,27]. We only know of one study [16] that explicitly focuses on detecting smoke plumes on the horizon.

We believe the main reason why the literature is lacking in long-range and early incipient smoke detection is the shear difficulty of finding, processing, and annotating smoke bounding boxes at this stage. Smoke at this stage tends to be extremely small, shallow, and in some cases, only visible through careful and repetitive observation of video footage and zooming. Interestingly, there is no lack of databases of raw videos and images of wildlands and wildfire scenes, thanks to the prevalence of terrestrial remote sensing networks such as [12,13]. However, there is a lack of processed, open-source, and labeled data for early bounding box detection of wildfire smoke. Most existing works have some commercial or proprietary aspect [16,36,37] or share only a subset of their labeled data [11].

We noticed a common trend in the existing deep-learning-based studies. They are all based on convolutional neural networks (ConvNets or CNNs). Some of the works attempt to indirectly localize smoke regions through a moving window approach combined with secondary image classification [11,21,38], while others are based on more sophisticated object detection methods such as Faster R-CNN with feature pyramid networks (FPNs) [39], EfficientDet [40], RetinaNet [41], and Yolo [42,43], to predict and localize flame and smoke bounding boxes. However, what all ConvNets have in common is the inherent locality of convolutional filters, which limits the model in exploring all the possible long-range relationships between elements of an image. Moreover, such systems are typically anchor-based, and their performance and generalizability are limited to the design of the anchors. In addition, the inference speed and accuracy is heavily impacted by image size [11].

1.3. Nemo: An Open-Source Transformer-Supercharged Benchmark for Fine-Grained Wildfire Smoke Detection

We hypothesize that, to improve upon the CNN-based wildfire detection methods, especially in terms of detection rate in the early incipient stage, size of smoke, and detection range, we need a method that can tap into the long-range dependencies between image pixels. We found encoder–decoder Transformers to be a promising solution, as the global computation and perfect memory of attention-based mechanisms have already made them the superior model for long sequences, particularly in problems such as natural language processing (NLP) and speech recognition [44]. Very recently, visual Transformers have shown outstanding results in the prediction of natural hazards, such as landslides [45] and wildfire flame [46]. We hypothesize that the global attention of the encoder layer can help capture long-range dependencies in the large images of our dataset, in a similar fashion to how attention mechanisms capture latent relationships in long sentences in NLP. We also found a general-purpose object detection tool based on Transformers, namely DEtection TRansformer (DETR) [47].

To fill the gaps, we propose a novel benchmark, namely the Nevada Smoke detection benchmark (Nemo), for wildfire smoke and fine-grained smoke density detection. Nemo is an evolving collection of labeled and processed datasets and trained DL-based wildfire smoke (and smoke density) detectors, freely available to the research community [48]. To the best of our knowledge, this is the first open-source benchmark for wildfire smoke density detection and wildfire smoke detection tailored to the early incipient stage. To create our dataset, we collect, process, and label thousands of images from raw videos for the purpose of bounding box detection of wildfire smoke. We mainly focus our preprocessing efforts on the earliest minutes of smoke visibility (i.e., early incipient stage). Furthermore, we create a finer-grained version of our labeled annotations to further divide the smoke region into three subcategories based on perceived smoke density. The overarching goal of the fine-grained smoke detection is to help differentiate particles of smoke based on their thickness, opacity, and density, which typically associates with the severity of smoke (i.e., thicker smoke being more severe). This can have potential applications in climate intelligence such as predicting pollutants and hazards.

Our main wildfire smoke detectors are based on encoder–decoder Transformers, and in particular on Facebook’s Detection Transformer (DETR) [47]. DETR is an end-to-end, one-stage object detector, meaning it has removed the need for anchor generation and second-stage refinements. DETR is trained on the COCO dataset of 80 everyday objects, such as person, car, TV, etc. We transfer weights from the DETR COCO object detector, but remove the classification head (i.e., class weights and biases), and then load the state dictionary from the transferred model. To adapt it to smoke detection, we also change the input dimension of the decoder by removing the query embeddings and replace it with a different set of queries, learned for the smoke detection use-case. All the other intermediary weights and biases were kept from the transferred model to help reduce the training schedule. To the best of our knowledge, this is the first study on the Transformer architecture for early wildfire detection. There exists another work [46] that uses visual Transformers, but for wildfire flame segmentation, which is both a separate task (i.e., panoptic segmentation) and different target object (i.e., flame). The main contributions of this study are summarized as follows:

- We show that the self- and inter-attention mechanisms of encoder–decoder Transformers utilize long-range relationships and global reasoning to achieve state-of-the-art performance for wildfire smoke and smoke density detection. An example visualization of the encoder self-attention weights from a model we trained on our data is shown in Figure 1. This shows that even at an early stage of training (i.e., encoding), the model already attends to some form of instance separation.

- Additionally, our benchmark offers trained smoke detectors based on well-established and highly optimized object detection algorithms, namely Faster R-CNN with FPN [39] and RetinaNet [41]. The reasons for choosing these alternative object detection algorithms were their impressive results in incipient stage smoke detection, reported in a recent work [16], and to provide a comparison and context for our Transformer-based smoke detector. Our results based on numerous visual inferences and popular object detection metrics (i.e., mAP, PASCAL VOC, etc.) show that the encoder–decoder Transformer architecture performs better than the state-of-the-art ConvNets for wildfire detection.

- We also create additional dataset configurations, motivated by our initial results, which returned a relatively high number of false detections in a challenging set of empty images. We add collage images of smoke and non-smoke scenes. Our results show a significant reduction of false alarms for all models. We also created an alternative dataset, tailored to situations where the underlying object detection codebase does not support explicit addition of negative samples for training.

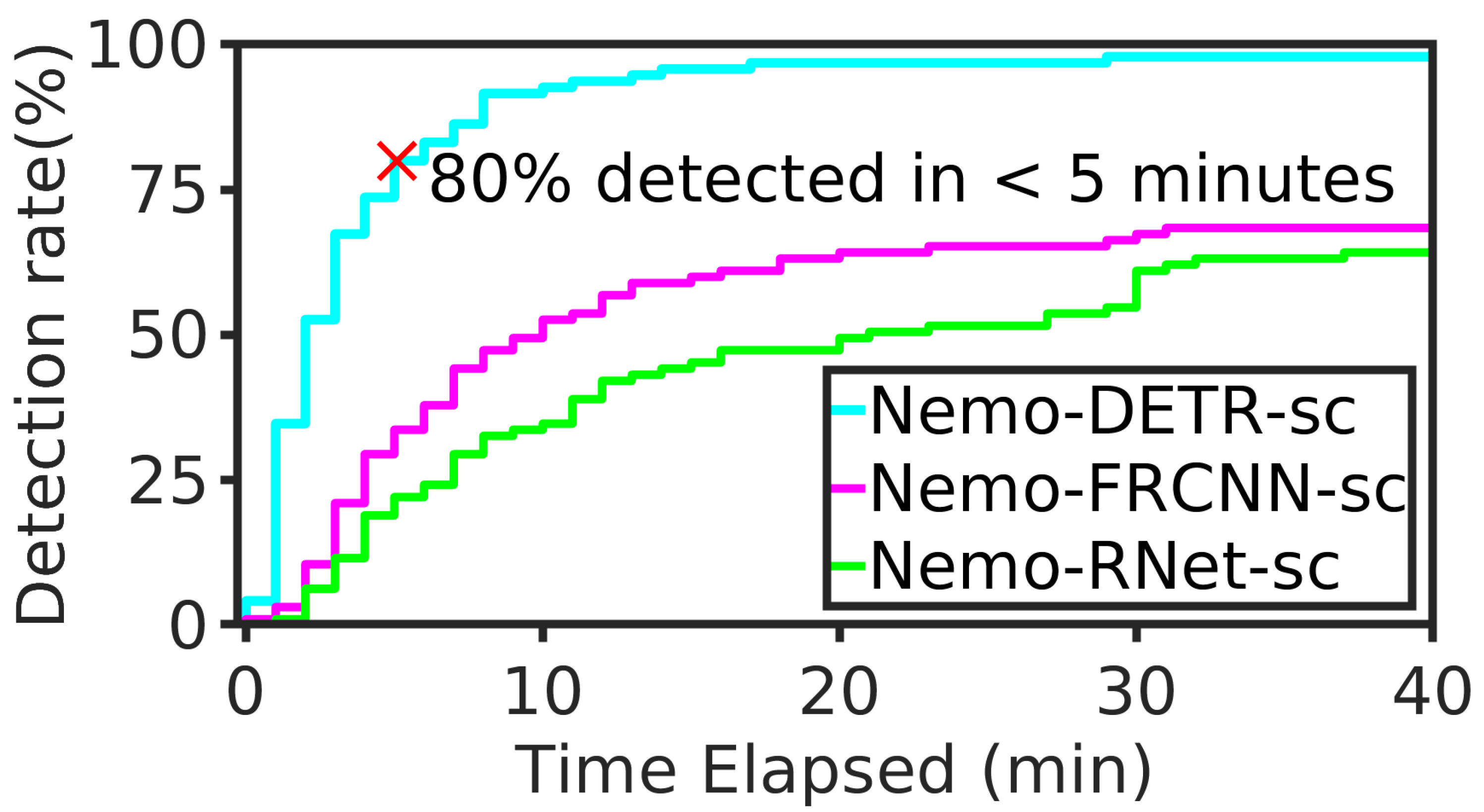

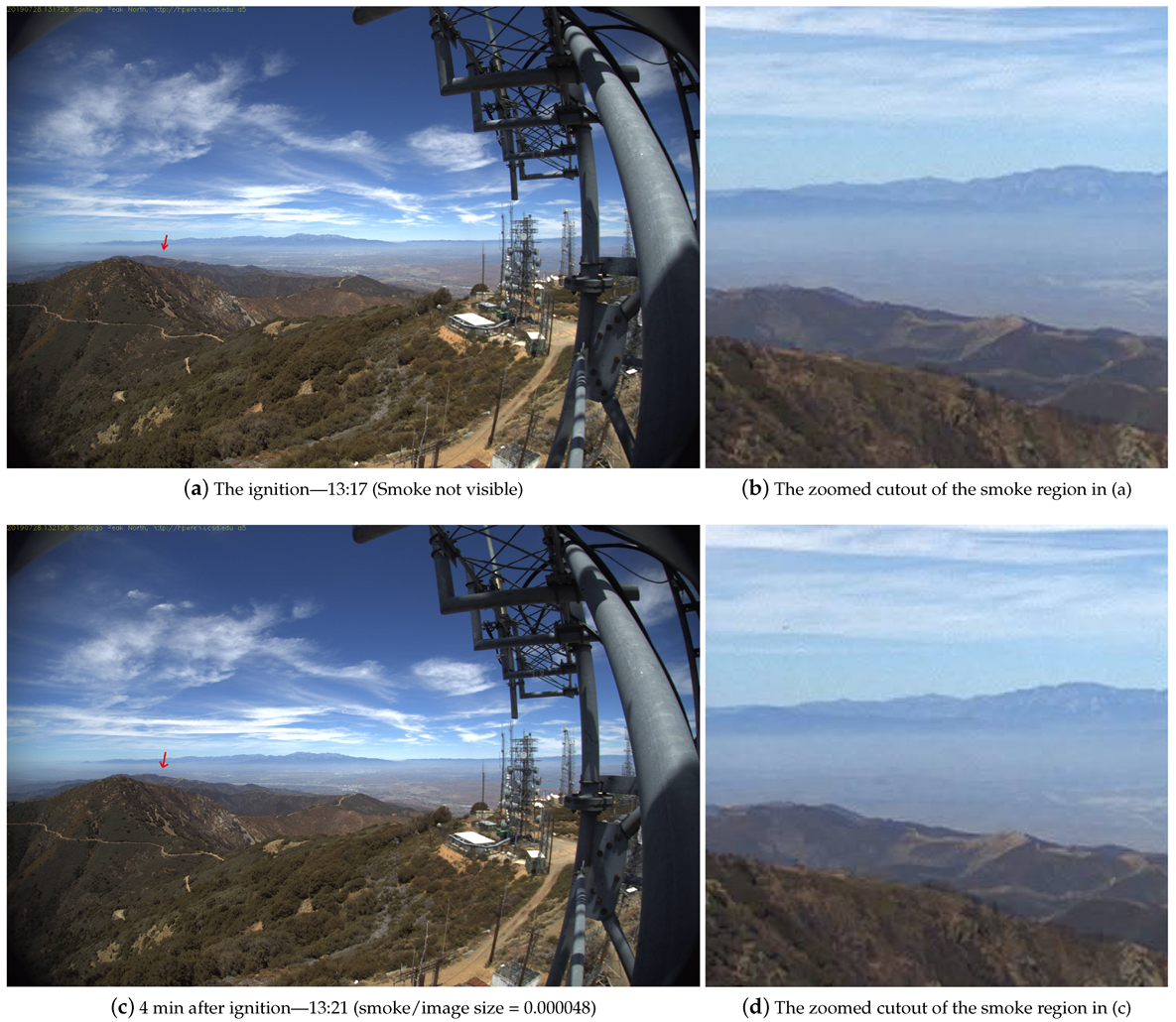

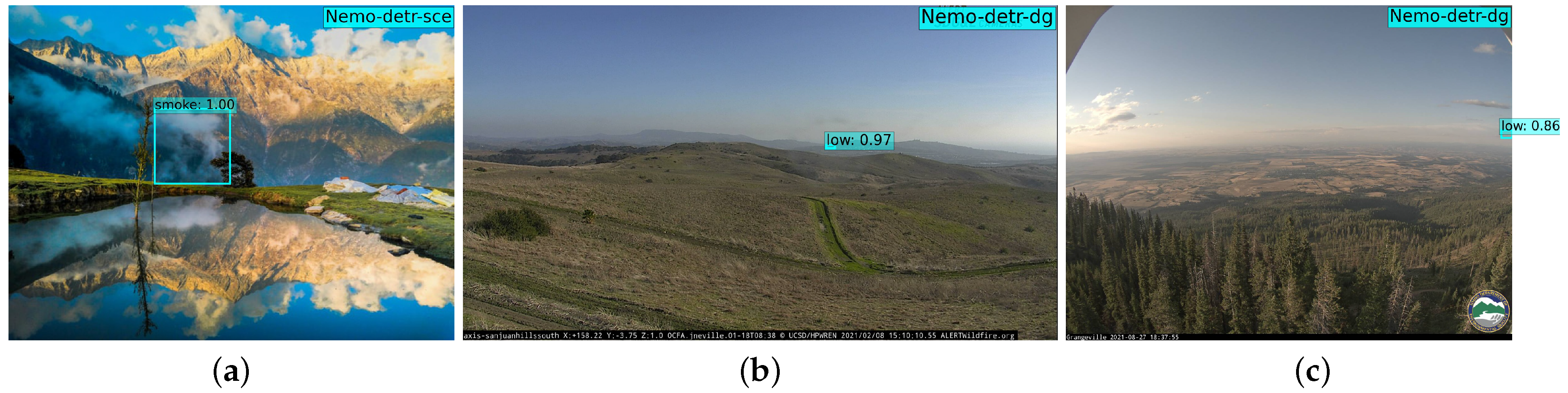

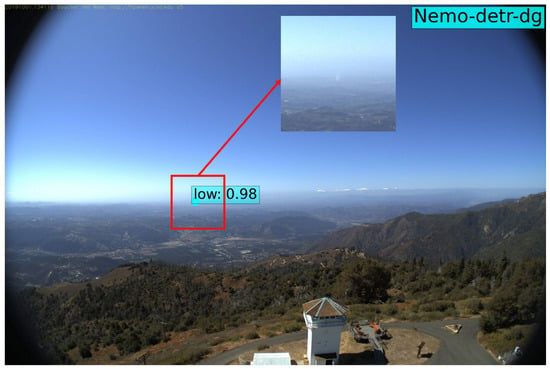

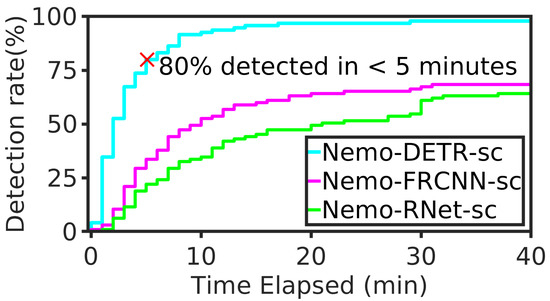

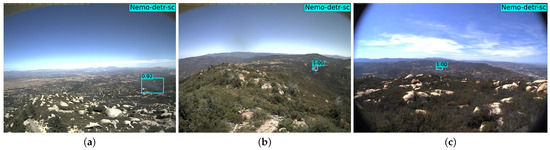

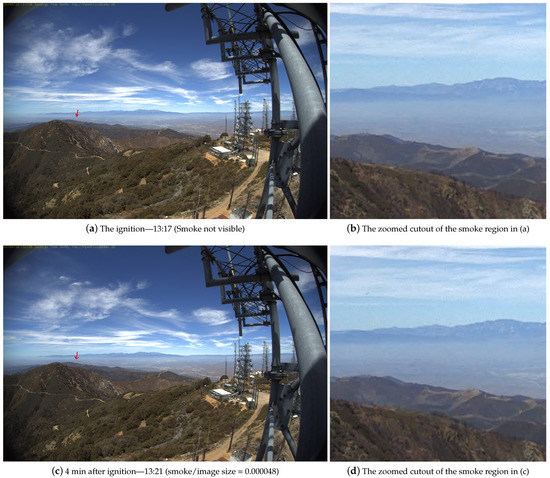

- Furthermore, we perform an extensive time-series analysis on a large test set, collected exclusively from the incipient stage of 95 wildfires [13,17]. From time-stamps recorded on the 95 video sequences and our detection results, we determined the mean time of detection after the start of the fire (i.e., mean detection delay, or latency). To the best of our knowledge, this is the largest analysis of its kind. Our Transformer-supercharged detector can predict wildfire smoke within 3.6 min from the start of the fire, on average. In context, we compared our results to 16 video sequences used in a similar analysis from [16]. We show that our model detects wildfire smoke more than 7 min faster than the best-performing model reported in the literature [16]. Our model was able to detect 97.9% of the wildfires within the incipient stage and more than two-thirds of the fires within 3 min. Since the majority of the smoke columns in the first few minutes of a fire are extremely small, far, and shallow, then by extension, we confirm that the proposed models are effective at detecting small fires. For instance, our model was able to detect objects as small as 24 by 26 pixels in an image of 3072 by 2048 pixels (6 MP). In relative terms, the correctly detected smoke object is 0.0099% of the input image, as shown in Figure 2.

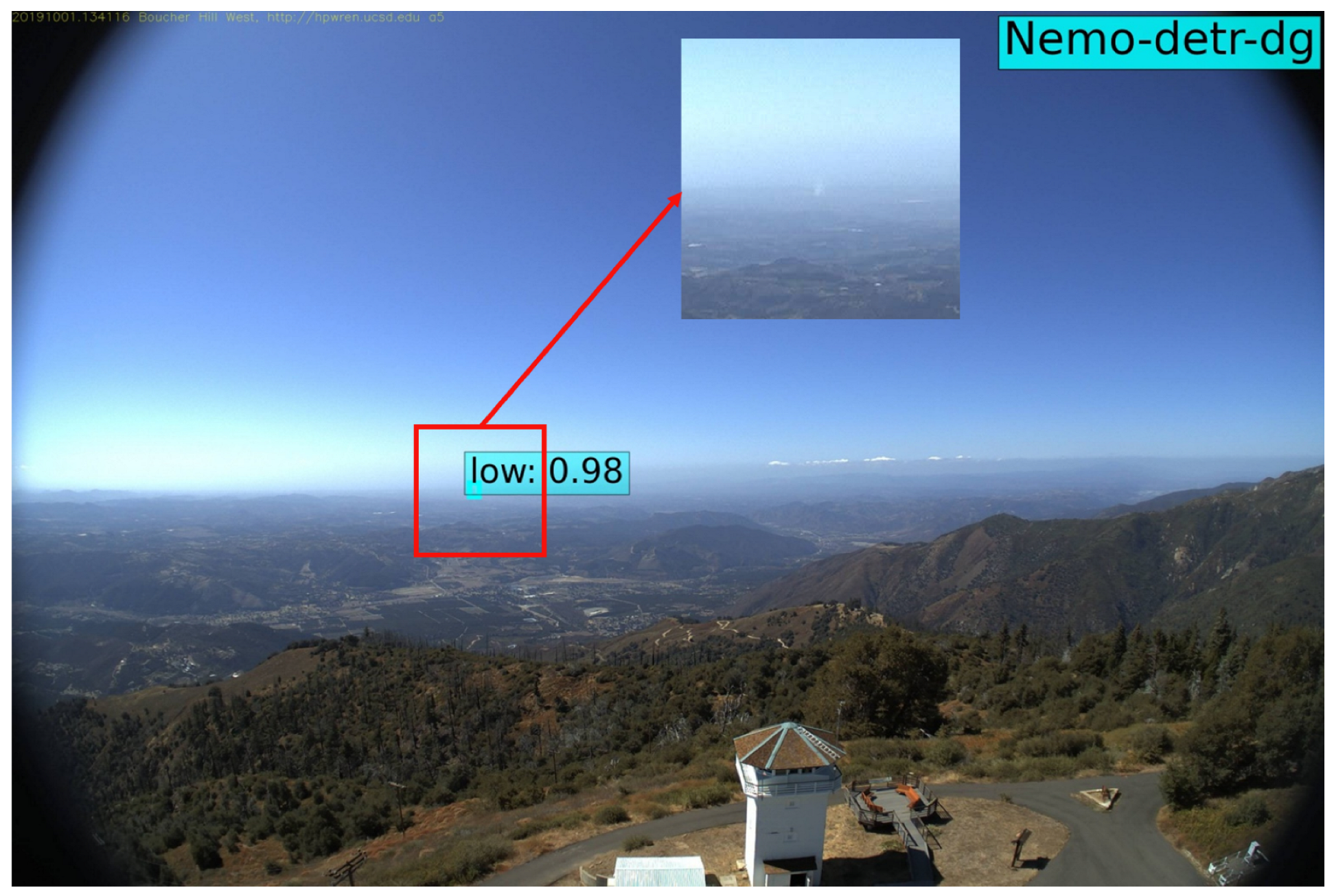

Figure 2. A tiny column of smoke near the horizon is correctly detected as low-density smoke, two minutes after the start of the fire. Viewed from a west-facing fixed camera on top of Palomar Mountain, California, on 1 October 2019. The smoke object is 24 × 26 pixels wide, and the image is 3072 × 2048 pixels, which makes the detected object less than 0.01% of the image. A focused 300 by 300 cutout of the smoke area is shown, with the smoke column centered.

Figure 2. A tiny column of smoke near the horizon is correctly detected as low-density smoke, two minutes after the start of the fire. Viewed from a west-facing fixed camera on top of Palomar Mountain, California, on 1 October 2019. The smoke object is 24 × 26 pixels wide, and the image is 3072 × 2048 pixels, which makes the detected object less than 0.01% of the image. A focused 300 by 300 cutout of the smoke area is shown, with the smoke column centered. - In addition, our Transformer-based detectors obtain more than 50% and 80% average precision (AP) for small and large objects, respectively, outperforming the baselines by more than 20% and 6%, respectively. This shows that our models are also effective at detecting larger fires in an advanced stage, which is an easier task, albeit still important, since it demonstrates the accuracy and applicability of our model in the continuous monitoring of developing wildfires.

The overarching objective of this study is to provide a free, open-source repository with labeled wildfire data and state-of-the-art smoke detectors with the core principal of repeatability and ease of use for the research community. We made our annotations, images, and trained models freely available at [48].

2. Background

2.1. State-of-the-Art

In this section, we discuss related work including state-of-the-art wildfire detection methods as summarized in Table 1. To the best of our knowledge, in terms of the approach, all existing works fall within the following main categories: (1) image processing and feature-based machine learning; (2) data-driven/deep-learning-based methods that also include the newer Transformer architecture.

Table 1.

State of fire detection. Main differences between existing studies are summarized, using 3 categories for brevity.

2.1.1. Image Processing and Feature-Based Wildfire Detection Methods

The earliest fire detection systems were based on infrared and ultraviolet sensors and were heavily impacted by false alarms [53]. They merely produced a signal determining fire without any indication of the location and size. Later works mostly used color features to identify fire from the background, using different color schemes and channels, such as red, green, blue (RGB) [54] and hue, saturation, intensity (HSI) [55]. However, these methods fail to detect fire reliably as they are sensitive to changes in illumination and choosing the exact fire pixel range (i.e., 0–255) can be very difficult. The results showed that color features alone are not sufficient to reliably detect fire.

Later detection methods incorporated a combination of static (e.g., colors, shapes, texture unique to fire) and dynamic (e.g., motion, flickering, and growth) characteristics of fire. Other features such as fire location or 3D modeling of the landscape have also been used along with static and dynamic features. Models often switch between individual pixel analysis and overall shape analysis depending on the parameters being analyzed. A probabilistic pattern recognition approach proposed by [18], uses color, surface coarseness, and boundary roughness of estimated fire regions combined with dynamic features such as randomness of area size and growth of the estimated pixel area due to flickering [19] combined color and motion features to find fires in videos. They compared colors to a histogram of Gaussian mixture model of a normal fire’s RGB profile, then the motion features were tested based on whether the area size is changing. The model suffered from high false alarm rates. Another work [20] used RGB, and wavelet analysis was used to estimate the possibility of fire based on the oscillation of luminosity values. The model suffered from generalizability, as very limited test-cases were examined. Another work [56] added texture features to pixel color classification in a method called “best of both worlds fire detection” (BoWFire) to detect fire. Ko et al. [57] proposed a mutli-stage approach by feeding the regions, proposed by pixel color classification, to a luminance map to remove noise. Then, a temporal fire model with wavelet coefficients is passed to a two-class support vector machine (SVM) for final verification. Foggia et al. [58] proposed a multi-expert system that combines color, shape variation, and motion features to detect fire in surveillance videos. They used a large dataset of real fire videos to prove the reliability of this method in terms of accuracy and false alarms. Moreover, a flame detection model proposed in Emmy et al. [59] integrates color, motion, and both static and dynamic textures. The results showed the effectiveness of the model in differentiating fire from fire-like moving objects. Ajith and Ramon [32] proposed an unsupervised segmentation that extracts motion, spatial, and temporal features from different regions to identify fire and smoke in infrared videos. Several commercial companies have also implemented sophisticated solutions to detect fires using state-of-the-art sensors and image processing algorithms, such as FireWatch [36] and ForestWatch [37].

While feature-based methods demonstrate their merits in fire detection, they still come short in dealing with false alarms [11]. They typically require more domain knowledge and expertise to handcraft custom features, which makes these approaches less applicable across disciplines and use-cases.

An interesting category of studies help wildfire detection through forecasting wildfire-susceptible regions. Gholamnia et al. [60] provided a comparative review of ML methods for wildfire susceptibility mapping.

2.1.2. Deep-Learning-Based Wildfire Detection Methods

Over the last decade, advancements in computer hardware and the proliferation of Big Data have created a successful trend of using deep learning methods for computer vision tasks such as road monitoring [61], agriculture [62], medical imaging [63], landslide detection [64,65], image segmentation [66], and object detection [67]. The most obvious advantage of deep learning methods is the ability of the convolutional layers to extract rich feature maps from the data itself, without reliance on handcrafted features. For the task of object detection, DL methods can efficiently detect object regions, determine their boundaries, and outperform classical machine learning models [67].

These studies typically fall into two main categories: single-stage and two-stage object detection. There are also works that detect wildfire objects using image classification coupled with a sliding-window block-based inference system [11,21]. Works in single-stage object detection typically use models such as You Only Look Once (YOLO) [42,68,69], Single Shot Multibox Detector (SSD) [70], and, more recently, methods based on Transformers [47,71]. The most popular two-stage models are based on R-CNN [72], in particular Faster R-CNN [73] and its numerous extensions [74,75]. The main advantage of two-stage detectors is their relative robustness against false alarms, which heavily contributes to their superior accuracy. Two-stage detectors go through a selective search process [76], in which a sparse set of candidate regions is proposed. Most negative regions are filtered out at this stage before passing through the second stage, in which a CNN-based classifier further refines the proposed boxes into the desired foreground classes and dismisses the background. One major drawback of two-stage detectors is their speed, which has been gradually improved through the years. On the other hand, one-stage detectors trade accuracy for speed [41] by using the same single feed-forward fully convolutional network for both bounding box detection and classification tasks.

In recent years, deep learning techniques have become very popular for classification and detection of wildfires [77]. Deep learning models require a huge amount of data to train properly; thus transfer learning (i.e., pre-trained weights from general object detection) is very common, instead of training from scratch. The existing literature typically use CNN-based models for fire detection. Several research works have attempted CNN-based image classification to identify fire or smoke in images [7,21,24,25,26,27,28,33]. In one of the earliest works, Zhang et al. [21] used Vanilla CNN and cropped image patches to train a binary classifier for fire detection. A custom CNN model (DNCNN) is presented in [52] for classification of smoke images. In [24,25], Khan et al. fine-tuned GoogleNet [78] and AlexNet [79], respectively to classify fires in images captured from CCTV cameras. They further proposed a fire localization algorithm that works on top of a trained classifier in [26]. Khan et al. [27] improved their previous results by proposing a lightweight fire and smoke classification model based on MobileNet [80] tailored to uncertain surveillance environments. Pan et al. [38] also conducted transfer learning based on MobileNet-v2 [80], but with a block-based strategy to detect the fire boundaries. Similar to [81], Govil et al. [11] proposed a block-based smoke detection model, but with a focus on smaller, distant objects. Their model is trained using Inception-V3 [82], and their detection output is enhanced with an inference system that utilizes historical classification scores to reduce false alarms. Another interesting block-based fire detection approach [30] fine-tunes DenseNet [83] for classification. They used an augmentation technique based on generative adversarial networks (GANs) [84], and in particular, cycle-consistent adversarial networks (CycleGANs) [85] to address the data imbalance between wildfire and forest background data. A recent fire image classification model [28] uses multi-scale feature maps to enhance robustness in images with varying fire characteristics. All aforementioned studies showed incremental improvements in terms of accuracy and false alarms in fire detection.

Furthermore, advanced one-stage and two-stage object detectors were used in [10,16,22,23,29,31,34,51,81]. Zhang et al. [81] combined synthetic smoke images, captured indoors in front of a green screen with a wildland background, to extend positive samples and train a Faster R-CNN model. A comparative study [22] used Faster-RCNN [73], YOLO [68], and SSD [70] to detect fire. They concluded that SSD achieved better overall performance. Furthermore, Barmpoutis et al. [51] combined Faster R-CNN [73] for region proposals and vector of indigenous aggregated descriptors (VLAD) to refine the candidates and improve detection accuracy. In [23], spatial features are learned by Faster-RCNN to detect fire boundaries, which are then passed to a long short-term memory (LSTM) algorithm to verify the classification, thus reducing false alarms and improving detection rate. Li et al. [31] compared four novel CNN-based models, Faster R-CNN [73], R-FCN [86], SSD [70], and Yolo-v3 [42]. They concluded that Yolov3 achieves the highest accuracy with the fastest detection time of 28 FPS. Recently, an interesting work by Xu et al. [10] integrated two one-stage object detectors (i.e., YOLOv5 [43] and EfficientDet [40]) and a classifier (i.e., EfficientNet [87]) in one multi-stage wildfire detection pipeline. They outperformed state-of-the-art models such as Yolov3, Yolov5, EfficientDet, and SSD. Their result showed a dramatic decrease of false alarms in different forest fire scenarios [10]. Another work [16] used Faster R-CNN with feature pyramid networks [39] and RetinaNet [41] to detect small distant wildfire smoke in an average of 6.3 min from the first observation of the fire. They divided the smoke class into three sub-classes based on their position in the image. Their initial results showed a significant number of false alarms, mainly due to clouds. However, they retrained their models using labeled cloud objects and negative samples and significantly reduced the false alarms [16].

2.2. Trends and Motivation

After an extensive review of recent studies on wildfire classification and detection and an inspection of their reported results, discussions, and qualitative evaluations, we noticed certain trends, similarities, and differences, which partly motivated our work.

2.2.1. What the Methods Have in Common

What most deep-learning-based fire detection methods have in common is being a form of ConvNet, most commonly Faster R-CNN, except, to the best of our knowledge, only one recent study [46], which used vision Transformers [88] for wildfire flame segmentation, which is basically a separate task (i.e., panoptic segmentation) and a different target object (i.e., flame vs. smoke). ConvNets are limited at modeling the global context and have limitations in terms of the computational cost. On the other hand, vision Transformers can capture long-range relations between input patches using self-attention mechanisms. One caveat of Transformers is their reliance on transfer learning, which is a fair limitation as transfer learning is very common among existing models. Using pretrained weights, vision Transformers have shown promising results, outperforming state-of-the-art ConvNets [89].

Our main wildfire smoke detection model is based on Facebook’s (now Meta) End-to-End Object detection (DETR) [47]. Similar to Faster R-CNN and its many variants (e.g., feature pyramid networks [39], Mask R-CNN [74], RetinaNet [41], etc.), since DETR first came out in 2020, several DETR variations have been proposed (e.g., Deformable DETR [71], UP-DETR [90], Dynamic DETR [91], etc.). However, in this paper, we mainly explore DETR, that has proven its efficiency in general object detection on the challenging COCO dataset. It is fully possible that other variations of DETR, and visual Transformers in general, would perform better for our use-case, and we plan to investigate those in future work.

2.2.2. Differences

What makes the existing fire detection studies different from one another is their focus on, or definitions of, the following concepts, as summarized in Table 1:

- Fire is a generic term, and a popular trend in existing literature is to use fire to refer to flame. Flame is the visible (i.e., light-emitting) gaseous part of a fire, and a significant number of studies actually focus on flame detection.

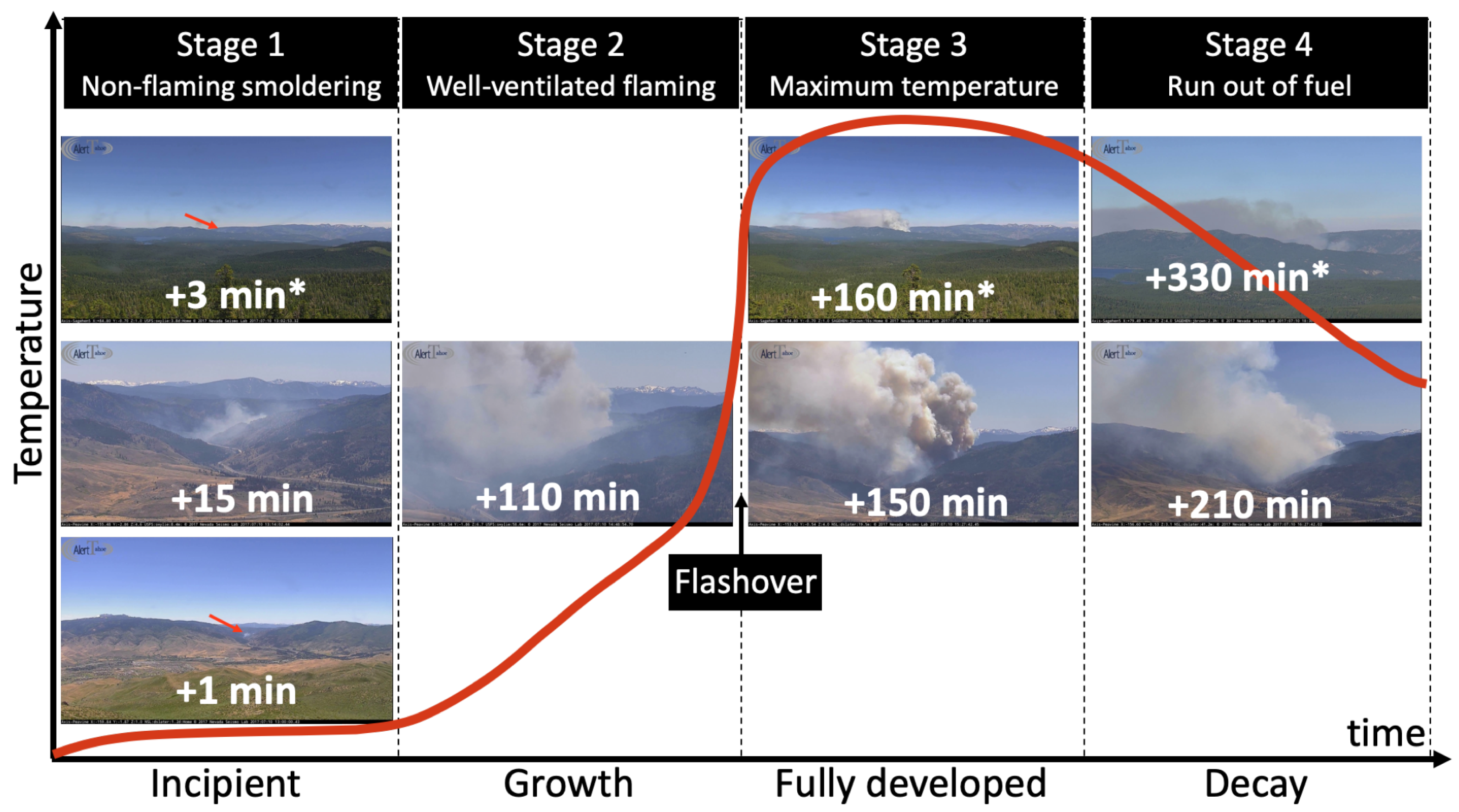

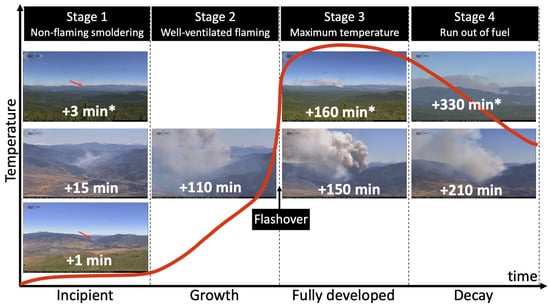

- The stages of fire typically include: incipient, growth, fully developed, and decay, as shown in Figure 3. The example shown is the Farad Fire, west of Reno, Nevada, and it lasted for multiple days. It is possible that a fire in general goes through these stages in a matter of minutes, hours, days, or even weeks. The length and severity of wildfires and the duration of each stage vary and depend on different factors. For instance, weather conditions and other wildfire-susceptibility factors, such as elevation, temperature, wind speed/direction, fuel, distance to roads and rivers, detection time, and fire fighting efforts can all affect the duration of a fire [92,93].

Figure 3. The 4 stages of fire. The example shown is the Farad Fire of July 2017 observed from two different cameras (Peavine fire camera and * Sage Hen Fire Camera).The definition of early detection is relative. In this paper, we define the early half of the incipient stage as early detection. Most studies consider the incipient and early growth stages as early detection [6]. However, through an inspection of numerous wildfire videos, we observed that wildfires at the growth stage commonly transition to fully developed very rapidly. For example, the Farad Fire in Figure 3 was confirmed around Minute 15, using fire cameras (i.e., the image at Minute 15 is zoomed in), and by the time the first suppression efforts were made, it was already at the brink of flashover (i.e., Minute 110). In densely populated California, the median detection latency is 15 min [11], typically reported by people calling 9-1-1. In the literature, only one study has explicitly focused on the incipient stage [11] and, in particular, earlier than 15 min. Unfortunately, their efforts have moved to the commercial side. Initially, we replicated their sliding window block-based detection model as the main baseline, but the accuracy of localizing smoke regions highly depends on the size and number of tiles (i.e., blocks), which made the inferences very slow. Thus, we opted for more advanced object detectors as alternative models, in particular Faster R-CNN [39] and RetinaNet [41], which have been successfully employed by recent work [16] for early wildfire detection.

Figure 3. The 4 stages of fire. The example shown is the Farad Fire of July 2017 observed from two different cameras (Peavine fire camera and * Sage Hen Fire Camera).The definition of early detection is relative. In this paper, we define the early half of the incipient stage as early detection. Most studies consider the incipient and early growth stages as early detection [6]. However, through an inspection of numerous wildfire videos, we observed that wildfires at the growth stage commonly transition to fully developed very rapidly. For example, the Farad Fire in Figure 3 was confirmed around Minute 15, using fire cameras (i.e., the image at Minute 15 is zoomed in), and by the time the first suppression efforts were made, it was already at the brink of flashover (i.e., Minute 110). In densely populated California, the median detection latency is 15 min [11], typically reported by people calling 9-1-1. In the literature, only one study has explicitly focused on the incipient stage [11] and, in particular, earlier than 15 min. Unfortunately, their efforts have moved to the commercial side. Initially, we replicated their sliding window block-based detection model as the main baseline, but the accuracy of localizing smoke regions highly depends on the size and number of tiles (i.e., blocks), which made the inferences very slow. Thus, we opted for more advanced object detectors as alternative models, in particular Faster R-CNN [39] and RetinaNet [41], which have been successfully employed by recent work [16] for early wildfire detection. - Target objects: We noticed a trend that flame is correctly labeled as flame only when smoke is also considered as a separate target object.

- Object size and detection range are relative, based on the proportion of the object to the image size. We noticed that a majority of related works focus on close- and medium-range detection (i.e., middle or large relative object size), as listed in Table 1. In Figure 3, close range would be similar to the snapshot shown at +110 min and medium range to +15 and +330 min. Fewer studies have focused on far-range detection (e.g., +1 and +160 min). An example at Minute 3 shows the Farad Fire on the horizon.

3. Data and Methods

In this section, we present our open-source Transformer-supercharged benchmark, namely the Nevada Smoke detection benchmark (Nemo). Nemo is an evolving collection of labeled datasets and state-of-the-art deep learning models for wildfire smoke detection and localization. We extracted frames containing smoke from 1073 videos from AlertWildfire [12] and, then, labeled them with the class and bounding box of the smoke regions. We created a single-class smoke dataset and a separate multi-class dataset for fine-grained smoke detection based on the perceived pixel density of the smoke regions. We adapted two popular two-stage object detectors (i.e., Faster R-CNN and RetinaNet) and a Transformer-based one-stage object detector (i.e., DETR) for wildfire smoke detection. Our preliminary results confirmed the inherent problem of false alarms in object detection. To overcome this, we created additional dataset configurations to explicitly add negative samples and reduce false alarms. Finally, a separate public database (HPWREN [13]) was used to test our employed model’s ability to detect fire within the early incipient stage. Our benchmark (dataset and wildfire smoke detectors) is available for public use [48].

3.1. Dataset

3.1.1. Data Source

In recent years, multi-institutional research projects such as Alert Wildfire [12] and the High Performance Wireless Research & Education Network (HPWREN) [13] have become the leading source of live and archived video footage and image snapshots of rural and remote wildland areas on the west coast of the United States. While the initial objectives of these university-led projects have been research areas such as seismology and geophysics, they have morphed into invaluable resources for cross-disciplinary research in wildfire management, as well as for fire fighters and public safety agencies in multiple states. The network-connected cameras particularly help in: (1) Discover/locate/verify fire ignition through continuous manual observation of camera feeds, (2) scaling allocated resources according to spread dynamics and severity, (3) persistent monitoring of fire behavior through containment and until its demise, and (4) help develop an evacuation strategy and support decision-making in case of firestorms. While the cameras, sensors, and available resources are effective for the latter three, the initial step, which is discovery, can be greatly improved with sophisticated deep-learning-based systems [11]. In this paper, we collaborated with The University of Nevada’s Seismological Laboratory (NSL) [94], which is one of the leading groups in the AlertWildfire consortium, along with the University of California San Diego (UCSD) and the University of Oregon (UO) [12].

3.1.2. Domain-Specific Challenges in Camera-Based Wildfire Data

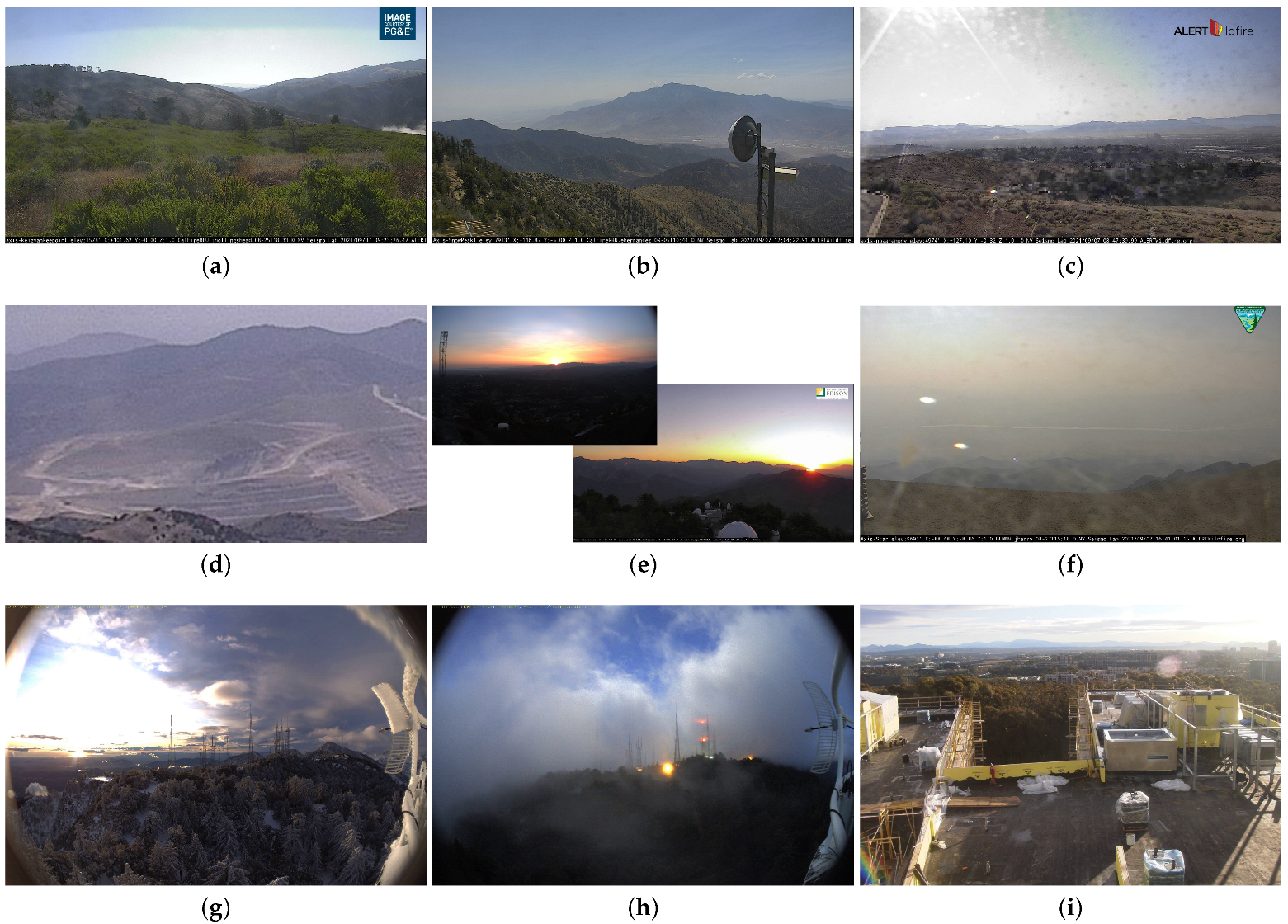

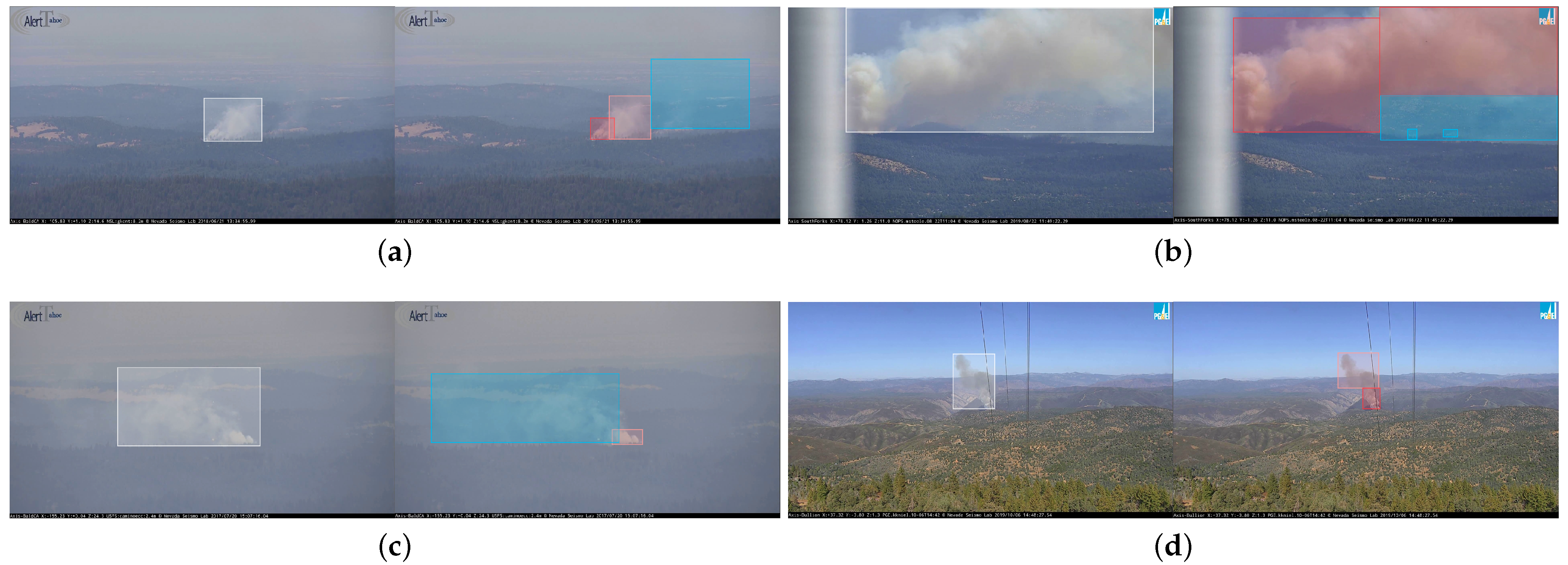

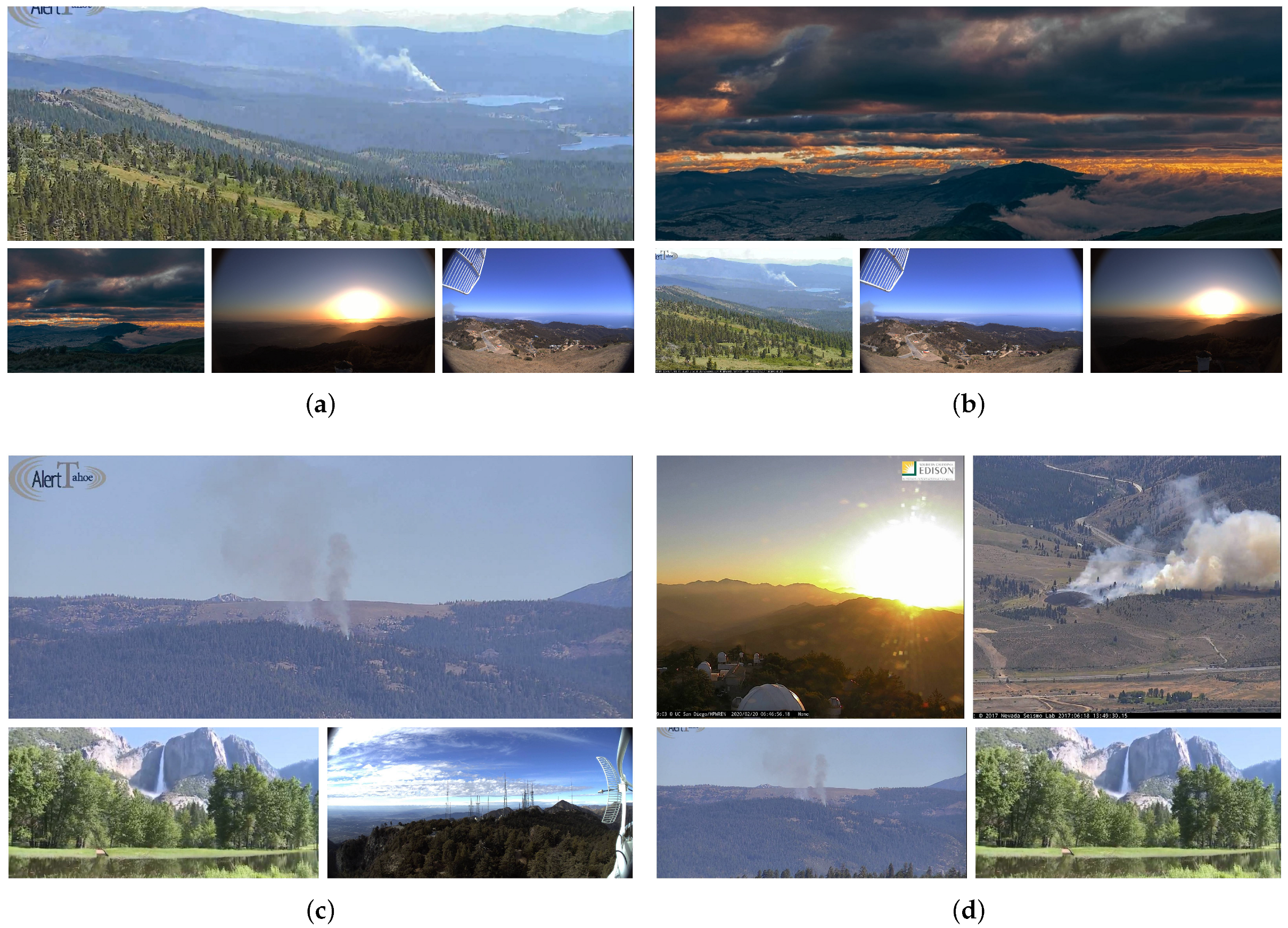

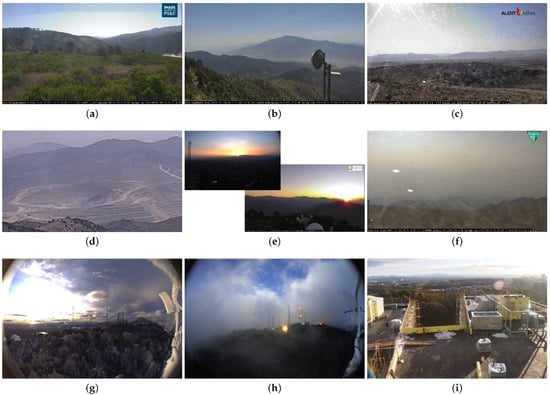

In this paper, our main wildfire object of interest is smoke, in particular smoke at the early incipient stage. To design an effective wildfire detection model, domain-specific challenges considering camera feeds, similar wildfire objects, and other factors related to non-object (non-smoke) classes need to be considered. Figure 4 shows an overview representative example of these objects.

Figure 4.

Examples of smoke-like objects. Most images are from Nevada and California wildlands and the Sierra Mountains. (a) Small cloud on the right. (b) Cloud on a mountain top with hazy background. (c) Dirty lens, reflection, and smog. (d) Small pile of dust resembles smoke at an early stage. (e) Sunset and sunrise. (f) Heavy dust, glare, and smudge. (g) Tiny, low-altitude cloud, and snow. (h) Heavy fog and yellow flood light. (i) Miscellaneous objects and glare.

AlertWildFire [12] provides access to 900 cameras across eight states (i.e., Nevada, California, Oregon, Washington, Utah, Idaho, Colorado, and recently Montana). Such an expansion has been made possible due to the usage of existing communications platforms such as third-party microwave towers, which are typically placed on mountaintops. The camera placements offer an extensive vantage point with range views of up to 50 miles, which makes it possible to detect wildfires across counties. The extensive views from our source cameras pose unique challenges for object detection, as there will be huge backgrounds and the target object can be an excessively small portion of the frame, which causes an extreme foreground–background class imbalance. For instance, some of the images from our test set are from fixed wide-angle cameras that generate 3072 by 2048-pixel frames every minute. Consider the example shown in Figure 2: even with spatial clues such as our correct prediction, it is still difficult to see the smoke without zooming due to the extremely small relative size of the object compared to the background, which is less than 0.01% of the image in this case.

The background can include objects that are very similar to smoke or similar to objects that typically occur close or next to smoke (e.g., flame), which can also trigger false alarms. Furthermore, it is important to distinguish wildfire at advanced stages, which is larger and more distinctive and thus less similar to background objects and easier to detect. Most of these challenges only apply to smoke at the early incipient stage. According to our observations of numerous images, similar objects to incipient wildfire smoke include, but are not limited to: cloud, fog, smog, dust, lens smudge, snow, glare, and miscellaneous distant landscape objects such as winding roads, while similar objects to flame include the Sun and various shades and reflections of the sunrise and sunset.

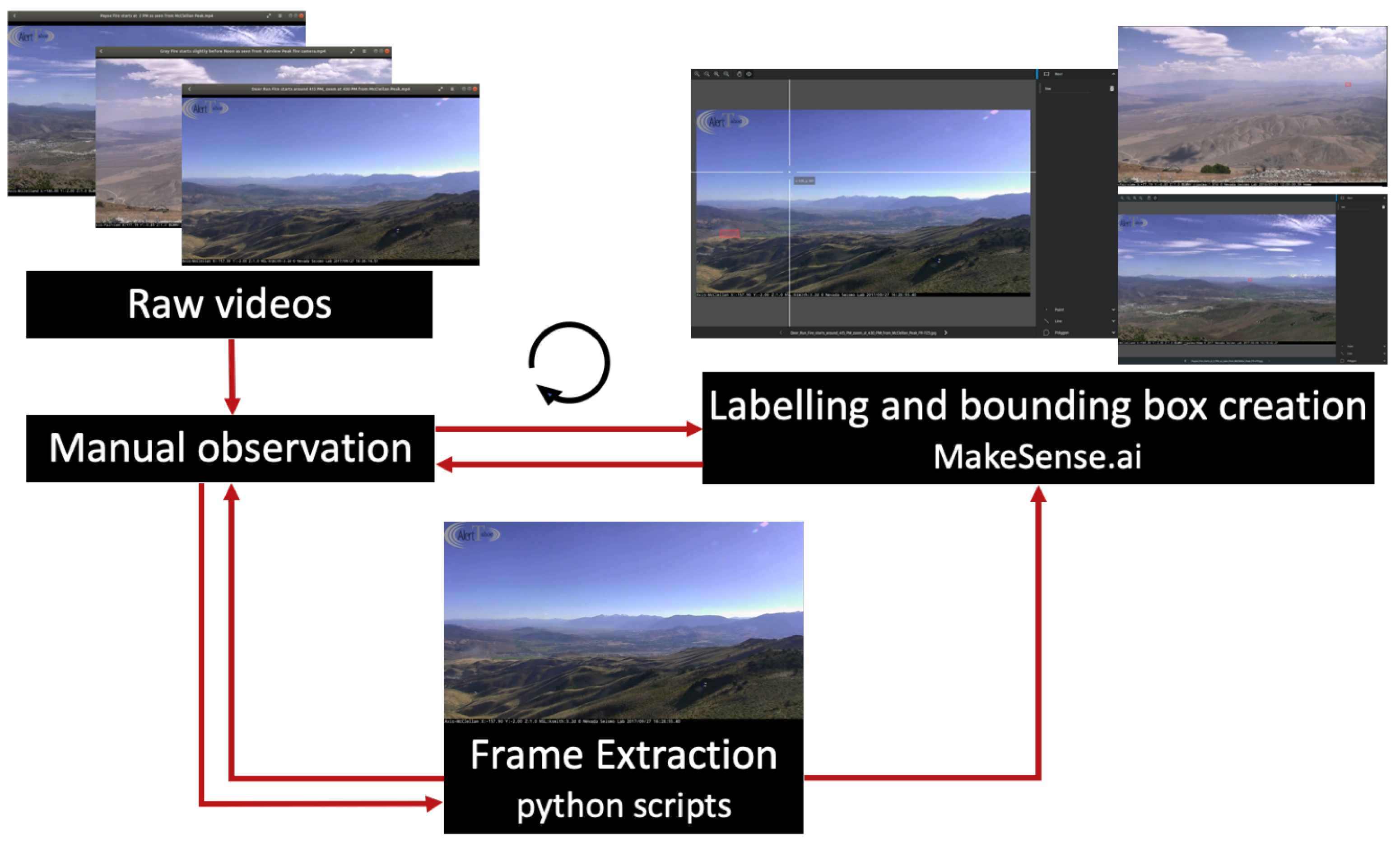

3.1.3. Data Collection and Annotation

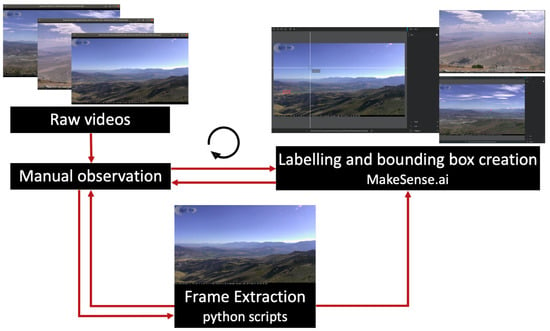

Collecting and labeling wildfire smoke from our raw videos came with certain challenges: small object size, the shallow and fuzzy nature of smoke, huge backgrounds and variety of smoke-like objects, unstructured and variable-length source videos, moving background scenes, obstruction caused by sunlight, glare, illumination, fog, haze, and other random environmental and technical factors that hamper visibility. We mainly overcame these challenges by careful and repetitive manual observation of the source videos with attention to spatial and temporal clues within the file names. Moreover, finding wildfire starts and labeling smoke objects at the early incipient stage is uniquely challenging and in stark contrast to the effort required for the later stages. Fine-grained relabeling of smoke objects based on their density also added to these challenges. To summarize, Figure 5 shows the preprocessing workflow for the early incipient stage. A group of individuals helped with this process, which took a substantial amount of time and effort in the form of revisions and re-annotations.

Figure 5.

Early incipient data preprocessing workflow. Raw videos are manually observed to determine the appropriate period for data extraction, using automated scripts. This is particularly necessary for long videos. The extracted images are then labeled with bounding boxes using the makesense.ai tool [95]. Since smoke at this stage tends to be small and typically not or hardly visible, a continuous feedback loop is crucial to locate smoke and draw the bounding boxes correctly. Finding wildfire starts is particularly difficult, since they are shallow and typically on or near the horizon.

We collected and annotated our data in different phases:

- Phase 1: initial frame extraction;

- Phase 2: single-class annotation;

- Phase 3: smoke sub-class density re-annotation;

- Phase 4: collage images and dummy annotations.

In the first phase, we closely inspected raw input videos and extracted the frames containing smoke objects. We then annotated the smoke objects with bounding boxes in Phase 2. In Phase 3, smoke instances were divided into multiple bounding boxes and relabeled based on the density of smoke pixels. We randomly divided our dataset into two non-overlapping sets (i.e., training and validation). We also handpicked a small test set of negative samples, consisting of challenging smoke-like objects. We then trained our deep learning models using the single-class and multi-class datasets. The initial results showed high accuracy with 1.2–2.4% of false alarms for the validation set. However, the models detected a relatively high number of false alarms in the challenging negative set. This motivated Phase 4, where we add new dataset configurations to increase the diversity of background objects. Table 2 provides an overview of our dataset configurations. The details of each phase are provided in the following.

Table 2.

Dataset configurations’ overview.

Phase 1—initial frame extraction: We use 1073 raw videos containing fires, acquired by our collaborators at AlertWildfire [12]. The acquisition system is based on pan–tilt–zoom (PTZ) cameras. The majority of fires happen in wildlands, forests, remote areas, or near highways. There are also residential fires, prescribed burns, car and plane accidents, and wildfires at night time. The video file names are unstructured and have no consistent or standard meta data and naming convention. Thus, reliably grouping them based on location, date, or other contextual information such as stage of development was not feasible.

Our extracted frames arefrom different stages of fire, as illustrated in Figure 3. We used spatial and temporal clues in the video file names to help extract more images from videos containing wildfire starts and the early incipient stage (e.g., Small Fire near Highway 40 (Mogul Verdi) put out quickly and Evans Fire starts at 744 AM and camera points at Peavine 833 AM) and less from videos containing advanced stages. The clues within the file names are crucial, as some of the fires were excessively small and very difficult to spot, even after zooming into high-definition snapshots, and sometimes even if the exact start time was known. The observation difficulty is usually due to smoke size, opacity, distance, weather, or lighting conditions, among other things. Moreover, the PTZ cameras are regularly zoomed and moved around after fire discovery. Thus, we tried to collect more frames before discovery. Due to the challenges above, we went through several iterations and re-annotations to make sure smoke regions are captured correctly. There are also videos that only show fully developed wildfires (e.g., Fire fighters turn the corner on the Slide fire, as seen from Snow Valley Peak at 4 PM and 5th hour of the Truckee Fire near Nixon, NV from the Virginia Peak fire camera 9–10 AM). Such footage are of no interest to us as there are no useful frames in the entire video. In total, we extracted 7023 frames and annotated 4347 images. However, many of these annotations were later removed from our dataset for reasons mentioned next.

Phase 2—single-class annotation: We initially annotated 4347 images using MakeSense AI [95], resulting in 4801 instances (i.e., smoke objects annotated with bounding boxes). At first, our annotations contained four classes: smoke, fire, flame, and nightSmoke, but we decided to only keep the smoke class. Determining the boundaries of smoke objects at night is a very difficult task with unreliable annotations. Flame is typically visible in the zoomed frames and more advanced stages, which is not our objective. The fire class, which we used to label bigger (typically more advanced) fires, was mostly removed or relabeled as smoke. What remained is our initial single-class smoke dataset containing 2587 images. It is randomly divided into 2349 training images containing 2450 instances and 238 validation images containing 246 instances.

A long-term goal and aspiration of our project is to design a system that aids in climate prediction and determining the severity of wildfire smoke particles and other hazardous pollutants and emissions. We noticed that in any stage of a wildfire, smoke columns have varying opacity and density. Smoke at the earliest stages tends to be shallow and less severe than smoke at later stages. In some cases, different sub-regions of the same smoke column tend to have varying opacity. Thus, to aid in our goal of detecting smoke at the earliest stage and to design a proof-of-concept model for detecting the severity of pollutants, we defined three additional sub-classes of smoke based on their density: low, mid, and high.

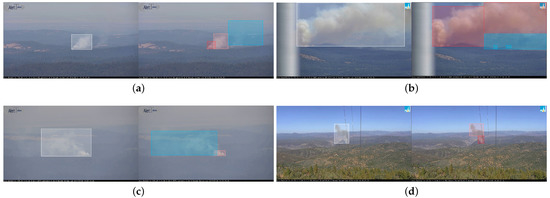

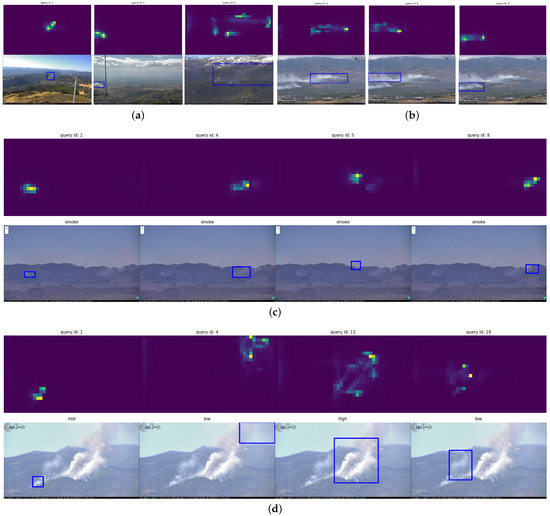

Phase 3—smoke density re-annotation: We re-annotated the single-class smoke objects into three sub-classes based on perceived pixel density: low, mid, and high. Figure 6 shows four typical examples of redrawing the bounding boxes to reflect various density levels. For better visualization, smoke that was relatively bigger or zoomed in was chosen. This sub-categorization helps account for variations in visual appearance and fine-grained information of smoke columns. There were, however, several unique challenges.

Figure 6.

Examples of smoke density re-annotation. The left side shows our initial annotation with the single category “smoke” (bounding box denoted with white color). On the right side, the same images were re-annotated with smoke as the super-category, and “low” (blue), “mid” (pink), and “high” (red) as the categories based on the relative opacity of the smoke. (a) Axis-Bald-Mtn-CA 2018-06-21 13:34. (b) Axis-SouthForks 2019-08-22 11:49. (c) Axis-Bald-Mtn-CA Placerville 2017-07-20 15:07. (d) Axis-Bullion Briceburg fire 2019-10-06 14:48.

One of the hardest challenges in re-annotation is finding low-density parts of the smoke, as they tend to blend into the background or are extremely small. We noticed that the only way to properly annotate the low-density areas is to revisit the video sources and use the movement of the shallow parts to distinguish the bounding areas in the respective captured frames. An interesting example of such a case is shown in Figure 6b, where only a large coarse bounding box was created at first and labeled as smoke. However, as shown on the right side, we re-annotated the image into a combination of five low- and high-density smoke areas. Interestingly, in our initial coarse annotation, we overlooked two new fires that had just started slightly in front of the large column of smoke. These two new fires were caused by embers falling from the bigger fire. Embers can be very dangerous and travel far and unpredictably based on the weather and wind conditions. With re-annotation, the two new fires would receive their own boxes with the appropriate density label.

Another challenge is to decide what constitutes different levels of density. The sub-categorization was performed subjectively, based on the relative opacity of different parts of the smoke column in the context of the background. We first attempted to automate the sub-categorization process, using relative pixel values and clustering algorithms to determine clusters of similarly colored grey-scales in different areas of the image. However, the camera feeds were captured at varying locations and varying times of the day with dynamic tilting and zooming, causing the scene and illumination to change drastically. Moreover, the relative position of the Sun and pre-existing weather conditions create more uncertainty, which makes the clustering approach highly unreliable. Thus, we manually annotated the smoke density with attention to existing background conditions and clues from the file names.

It is also important to note that the bounding boxes for density could be very granular within each image. For example, an entire column of smoke that was simply labeled as smoke could now be annotated using several bounding boxes (e.g., up to 10 in some cases). As a result, we ended up with considerably more instances. In this phase, we extracted another 200 frames from the source videos, all from the incipient stage. Our re-annotated dataset had three classes of smoke, with 2564 images containing 3832 training instances and 250 images containing 420 validation instances.

Challenging negative test set: We handpicked a set of 100 images containing no smoke from various sources (i.e., AlertWildfire, HPWREN, Internet). Importantly, this is not a set of random empty images, but 100 images, carefully and deliberately selected, to challenge the predictions based on the resemblance to smoke objects in our training set and other environmental conditions that make smoke prediction unreliable (e.g., haze, dust, fog, glare).

Phase 4—collage images and dummy annotations: We created additional dataset configurations to improve the diversity of background objects in our training set. We incorporated empty images by adding collages of smoke and non-smoke images or adding empty images without annotations. We also created a version of our dataset that added empty images with dummy annotations. This hack is suitable for situations where the underlying object detection codebasedoes not support negative samples for training. The motivation for Phase 4 was our initial results, which showed a relatively high number of false alarms in our challenging negative test set, as discussed in more detail in Section 3.3. Additional details about the collages and dummy annotations are provided in Section 3.4.

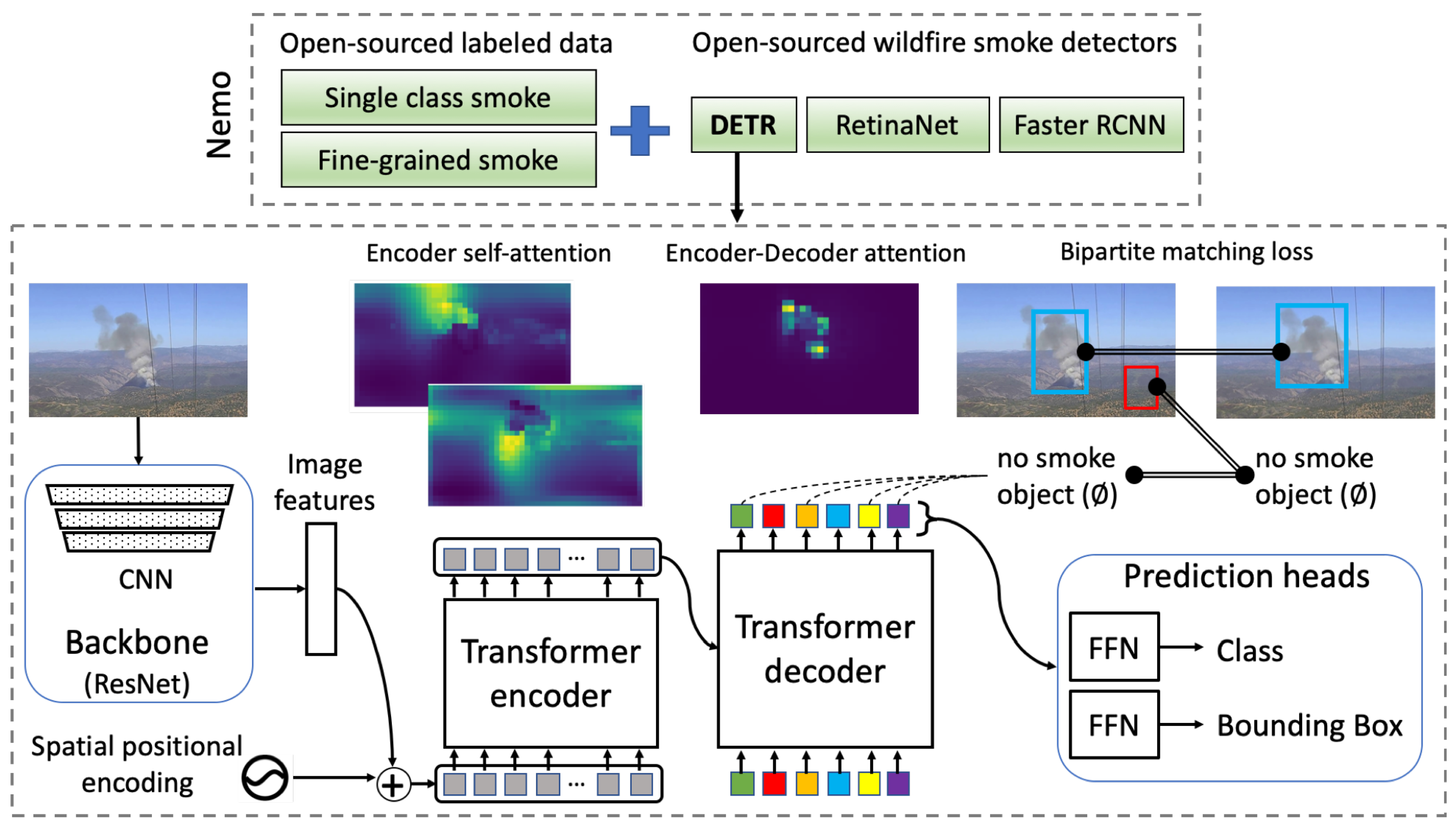

3.2. Nemo: An Open-Source Transformer-Supercharged Benchmark for Fine-Grained Wildfire Smoke Detection

Nemo is an evolving collection of wildfire smoke and smoke density dataset configurations and object detection algorithms fine-tuned for wildfire smoke and fine-grained smoke density detection. In this paper, our main wildfire smoke detectors are based on Facebook’s DETR, while also providing models based on the powerful Faster R-CNN with feature pyramid networks and RetinaNet as alternative smoke detectors.

3.2.1. DETR Architecture

The architecture of DETR is shown in Figure 7. There are three reasons why we chose DETR as the main model. First, it is based on encoder–decoder Transformers where the self-attention mechanism of the encoder layer performs the global processing of information, which is superior to the inherently local convolutions of other modern detectors. This helps the model attend to the global context of the image and improve the detection accuracy. Second, DETR employs a decoder layer that supports parallel decoding of objects, whereas the original attention mechanism [96] uses autoregressive decoding with RNNs and thuscan only predict one element at a time. Last, but not least, DETR approaches the task of predicting a set of bounding boxes directly in an end-to-end fashion. This effectively removes the need for complicated handcrafted process of designing anchors and region proposals associated with modern single-stage (e.g., Yolo family) and two-stage (e.g., FRCNN family) object detectors.

Figure 7.

Nemo overview.

The three main components of DETR are: a convolutional backbone, an encoder–decoder Transformer, and a feed-forward network (FFN) to predict the final detection output, as depicted in Figure 7. First, a CNN backbone (i.e., mainly Resnet50) extracts 2D feature representations of the input smoke image. The feature maps are then passed to the encoder in a flattened sequence, as expected by the encoder. A set of fixed sine spatial encodings is also passed as the input to each attention layer due to the permutation invariance of Transformers. The decoder, in turn, receives a fixed number of learned object queries, N, as input while also attending to the encoder outputs. Object queries are learned positional embeddings, and the model can only make as many detections as the number of object queries. Thus, N is set to be significantly larger than the typically expected number of objects in an image. In Nemo, we default to 10 and 20 object queries for single-class smoke and smoke density detection, respectively. Similar to the encoder, these object queries are added to the input of each attention layer, where the decoder transforms them into output embeddings. The output embeddings are then passed to the FFN prediction heads, where a shared three-layer perceptron either predicts a detection (a class and bounding box) or a special “no-object” class.

3.2.2. DETR Prediction Loss

The main learning brain of DETR is the bipartite matching loss. Consider a simple example with a ground truth image containing two smoke objects. Let denote the set of ground truth objects (i.e., class and bounding box pairs) and object queries; thus, is a set of predictions. We also consider ground truth y to be of size N. In this example, since there are only two ground truth objects in the image, we pad the rest (i.e., eight) with ⌀ (no smoke object). For each element i of the ground truth , we have as the target class label (e.g., smoke; low, mid, high, or ⌀) and in cxcywh format denoting the center coordinates, width, and height of the ground truth box normalized over the image size. Consequently, for each predicted box , we have an array of class probabilities equal to the number of categories plus one for the no-object class and predicted bounding box . Now, the two sets are ready for a bipartite matching search for a permutation of N pairs with the lowest pairwise matching cost as follows:

For all N pairs matched in the previous step, the overall loss is efficiently computed using the Hungarian algorithm [47]. Similar to common object detectors, the loss is defined as a linear combination of class loss and box loss. The class loss is the negative log-likelihood, while the box loss itself is a combination of the commonly used loss and generalized IoU loss [97].

3.3. Inherent False Alarm and Class Imbalance

Our preliminary results on single-class and multi-class smoke density detection showed promising performance, as in Table 3. Especially for the single-class model, our results are comparable to official DETR evaluations on the COCO dataset based on mAP. This is interesting given that the official models have seen upwards of 11,000 images per target class on average, compared to 2500 smoke images in our case. This confirms that transferring weights from a model trained on different everyday objects and retraining the classification head for smoke detection is promising.

Table 3.

Preliminary results (in percentage (%)). False positive rates are marked in bold. FPR is the false positive rate for the validation set consisting of images containing smoke. FPR is the false positive rate for the challenging negative test set.

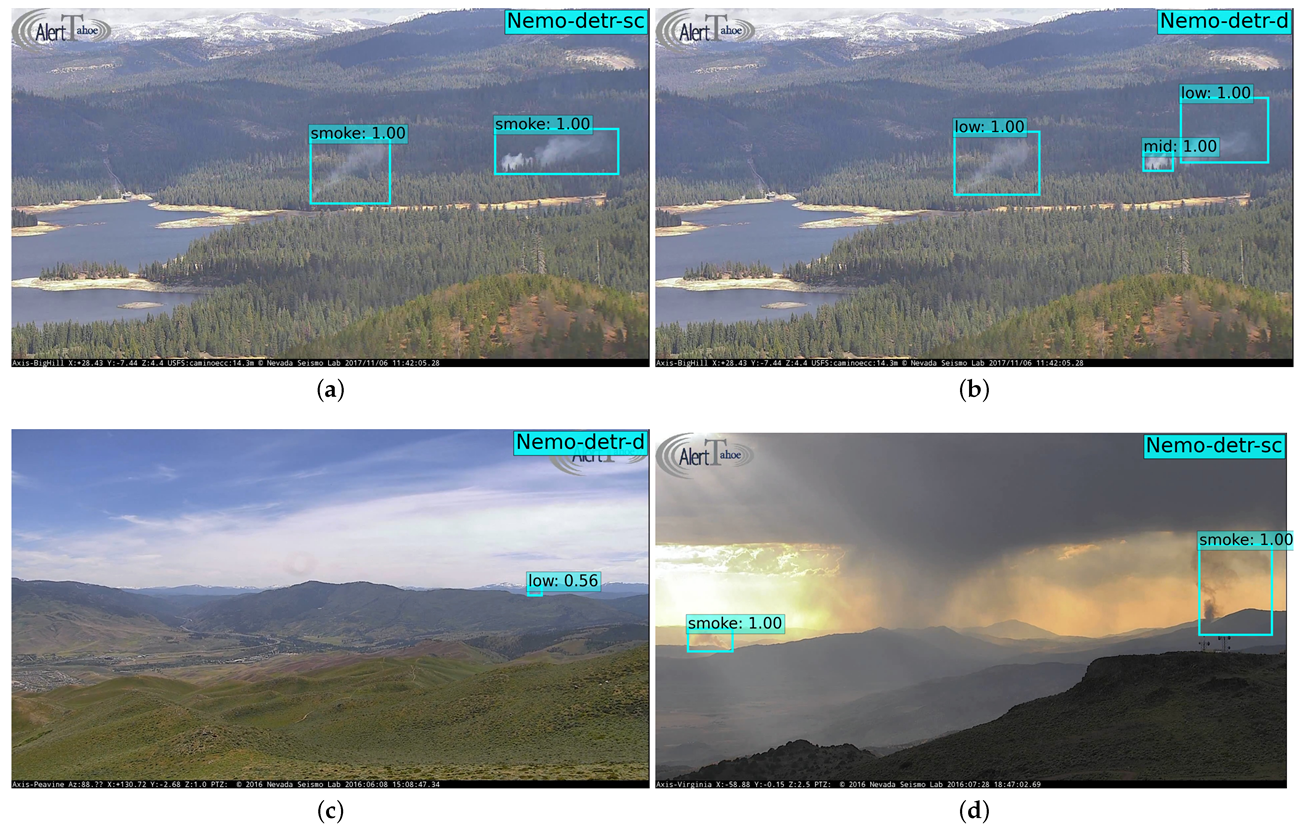

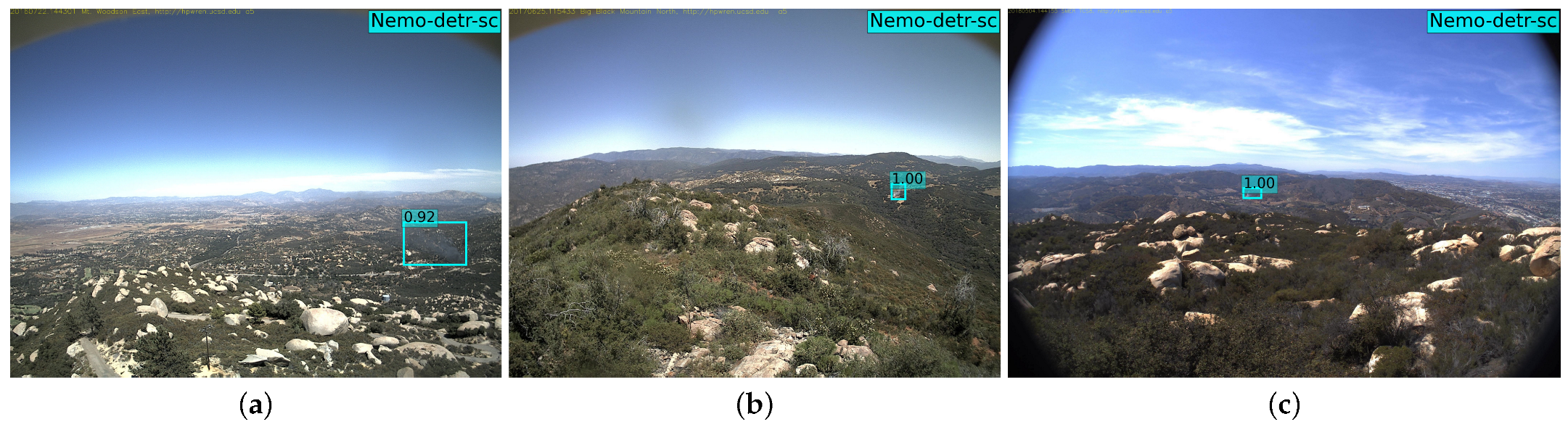

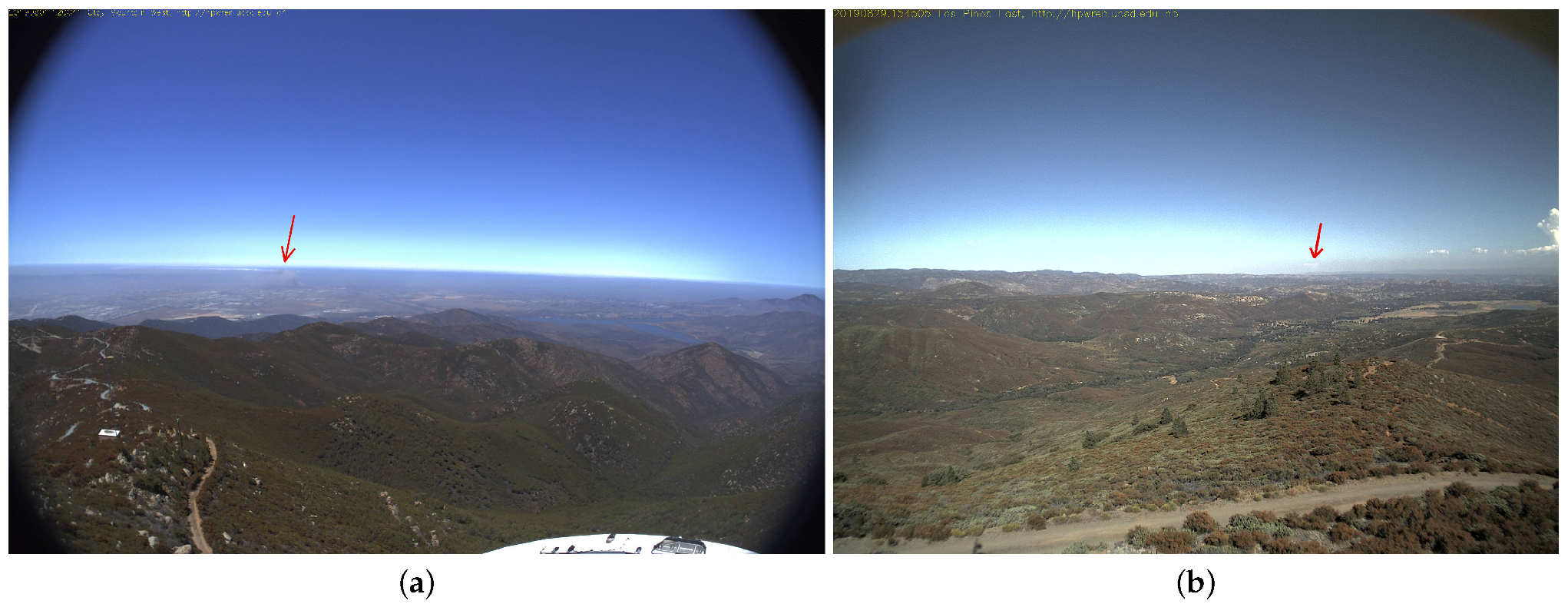

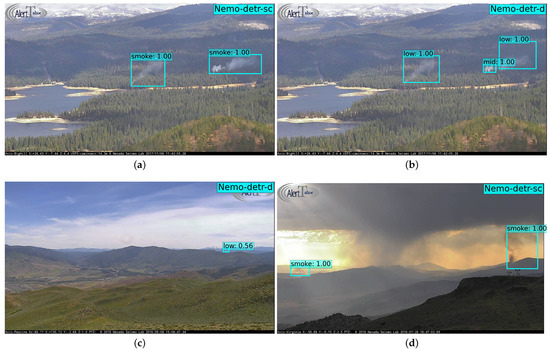

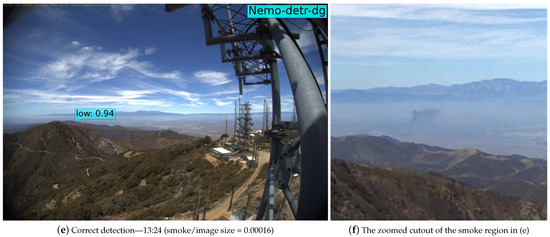

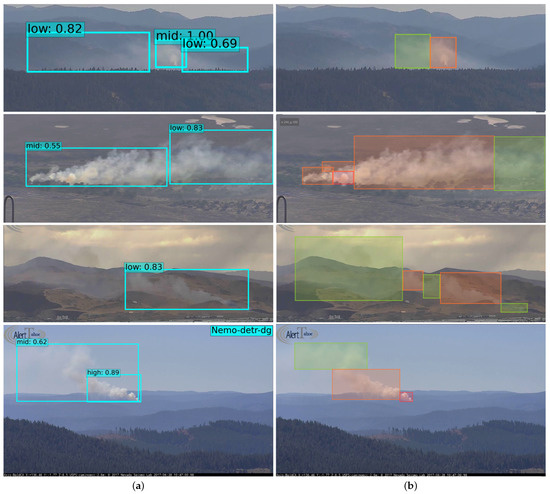

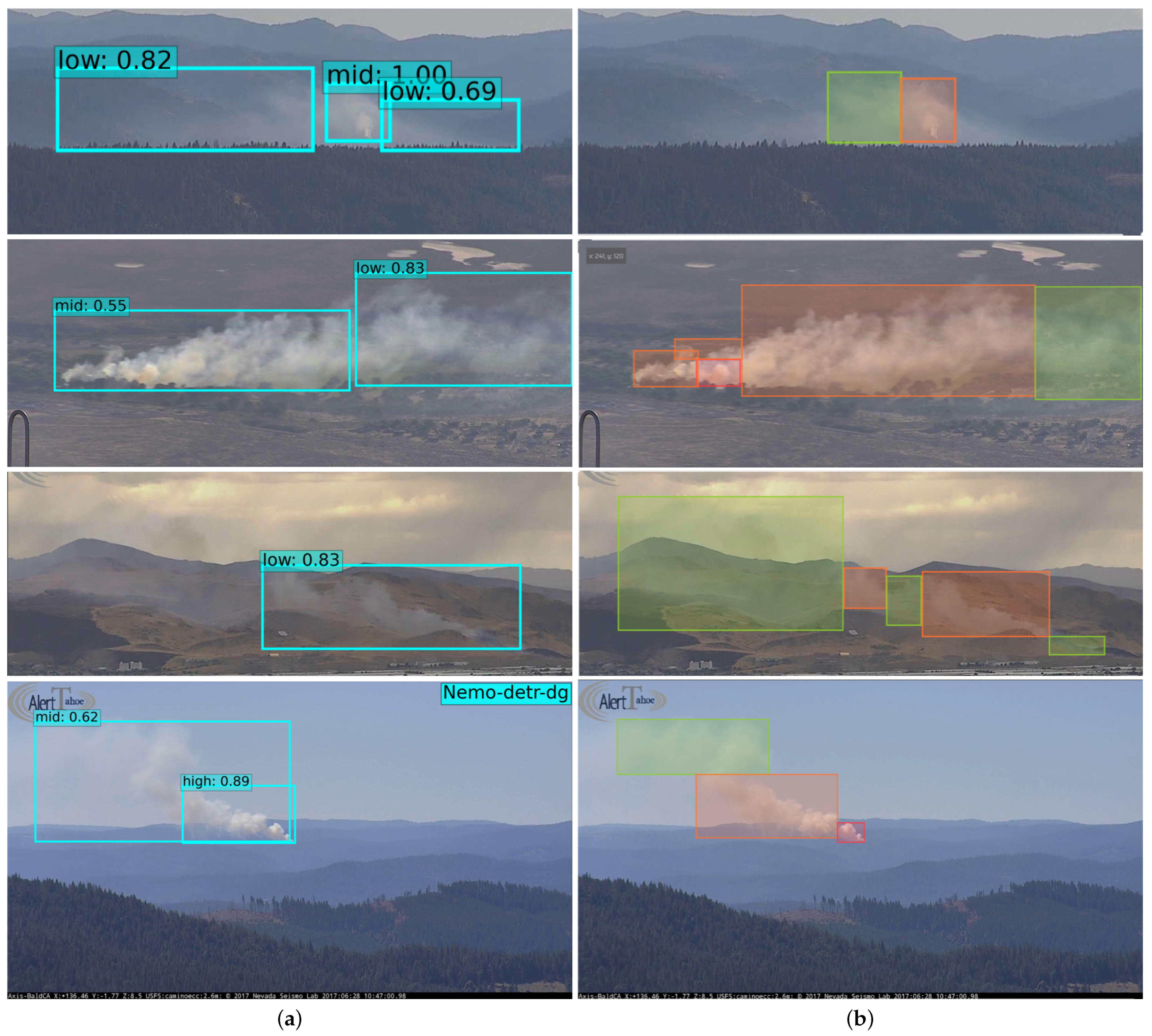

Figure 8 illustrates examples of smoke detection from the models presented in Table 3. Through extensive visual inferences and numerical evaluations, we confirmed the high accuracy of our wildfire smoke detectors. In particular, smoke was detected and localized in positive images with great precision and rare false positives, as noted by the 1.2% false positive rate (i.e., FPR). However, testing against our challenging set of empty images resulted in a false detection, as highlighted in Table 3 under FPR. This motivated us to investigate whether the false alarm issue is due to our training or the inherent performance of the base model (i.e., DETR trained on COCO) that we transferred the weights from. To confirm this, we conducted the following two evaluation experiments.

Figure 8.

Representative examples of smoke detection from the models presented in Table 3. (a,b) show single-class and density inferences, respectively, of the same image: prescribed burns near Union Valley Reservoir seen from Big Hill Mtn, CA. (c) Sage Hen incident north of Truckee, CA. (d) Tule and Rock fire take off northwest of Virginia Peak, CA.

First, we qualitatively evaluated the base model against challenging images containing the desired COCO objects, similar to the example shown in Figure A1. The full list of COCO classes is available online [98]. We verified that the base model has exceptional detection performance, which was expected and in line with the results reported in the paper [47].

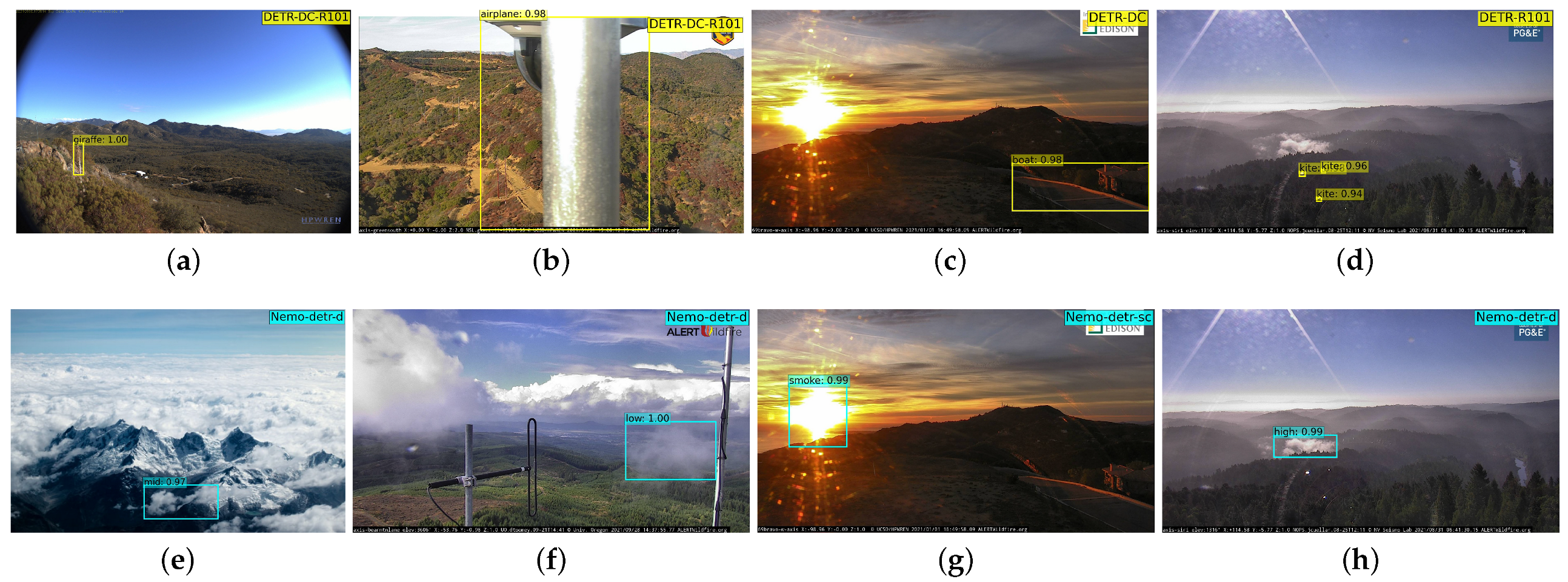

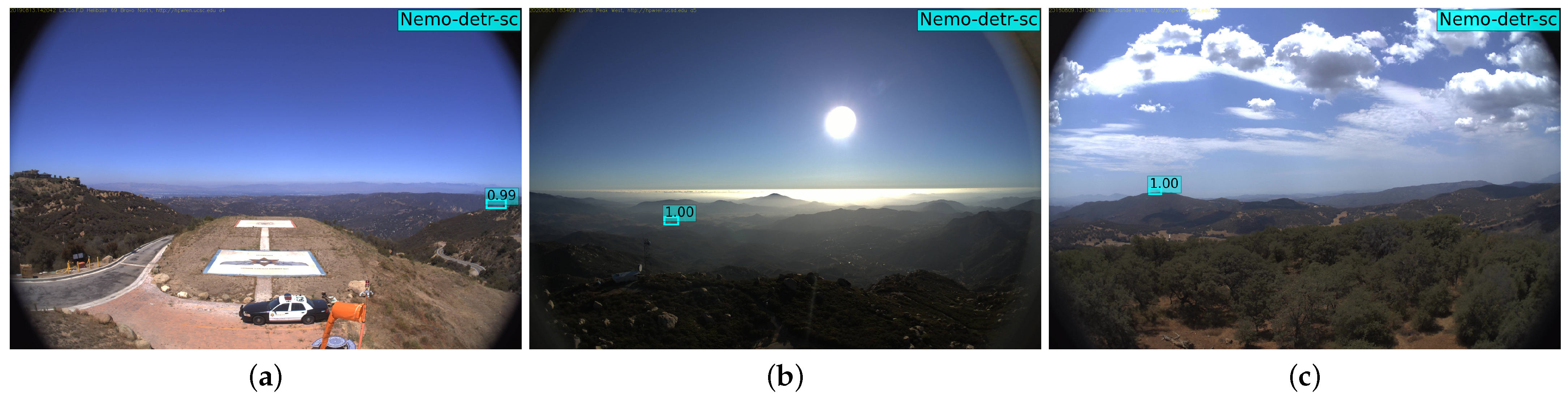

Second, we tested COCO DETR against our handpicked negative test set, which includes smoke-like objects. Besides a few exceptions (e.g., person, airplane, and truck in the background), this set does not include any of the 80 COCO objects. Our tests, shown in Table 4 under FPR, returned false detections, meaning DETR classified background objects incorrectly in 20 different images out of 100. Note that we did not handpick this test set for similarity to COCO objects (e.g., cauliflower to resemble broccoli, etc.), so our test set is relatively easier for a COCO detector, compared with a smoke detector. Furthermore, the original DETR is trained on COCO 2017 dataset, which has a higher number of objects and variety for each target class. This exploratory test confirmed that the false alarm performance of our wildfire smoke detection is inherent from the base transferred model. Through numerous visual tests, we confirmed that in the presence of desired objects, the detector is accurate and precise, even in the presence of challenging target-like objects (Figure 8). However, in the absolute absence of target objects, the model tends to predict false alarms on target-like objects in the background. This could be intuitively understood as the attention mechanism of the Transformer model is designed to focus on the target object, making the model robust against the target-like objects in the background when there exists a target object. Figure 9 shows examples of false alarms created by our single-class and density smoke detectors alongside inferences from DETR’s COCO object detector. This offers a visualization of our previous discussion.

Table 4.

Evaluation of DETR models on the COCO validation set (denoted by the mAP) and false alarm performance against images with no target objects (denoted by the FPR). We mainly transferred weights from DETR with 42% AP on the COCO benchmark. This shows that even the highest-performing object detector with a state-of-the-art 44.9% mAP still creates 15 false alarms when used against images with no COCO object. This shows that our smoke detector with 21 false alarms against a dataset with smoke-like objects is already performing well and as expected.

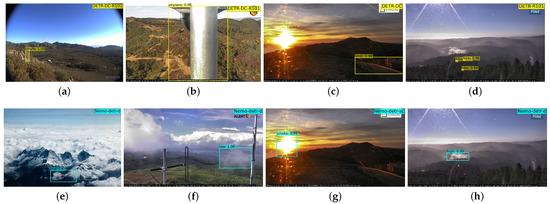

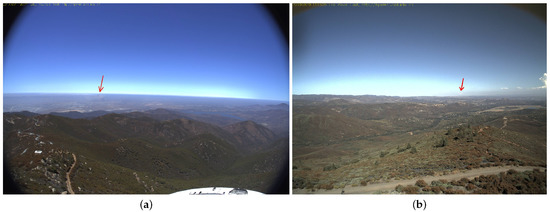

Figure 9.

Images on the top show false alarm examples of the DETR COCO detector on our challenging non-object test set. Images at the bottom show the same, but for the Nemo-DETR models presented in Table 3. (a) A tall tree is detected as a giraffe. (b) A camera attached to a pole is detected as an airplane with 99% confidence. (c) A driveway is detected as a boat. (d) Glares are detected as kites with high confidence. (e) Cloud in an unrealistic background detected as medium density smoke. (f) Rainy cloud detected as smoke. (g) Sun is detected as smoke, based on the similarity to flame, which is an object that exists near smoke in some training images. (h) Fog is detected as high-density smoke.

Image classification focuses solely on correct classification of images, whereas object detection involves both classification and localization of objects within images. Unlike image classifiers that explicitly feed negative samples to the model, object detectors are typically trained with positive images and their respective bounding box annotations, and the negative samples exist implicitly, meaning all regions of images that do not correspond to a bounding box are “negative samples”, which by itself is the clear majority of the data. In fact, a well-known problem in object detection is extreme foreground background class imbalance, where easily classified negatives can degenerate training by dominating the loss. To counter the class imbalance, an effective approach is to down-weight easy negatives. In our adopted models, Faster R-CNN uses subsampling to balance positive/negative region proposals. For RetinaNet, focal loss (FL) [41] is employed as in Equation (3) to down-weight the easily classified samples.

where is a modulating factor with a tunable focusing parameter , which controls the rate of down-weighting easily classified samples. Consequently, if is 0, then FL would be the same as cross-entropy. Empirically, has been found to work best, but given the different nature of smoke object detection, we experimented with different values and found no significant differences, so we opted for the default.

For our main adopted model, DETR [47], we use a coefficient to down-weight the log probability term when the class is predicted as non-object (i.e., background) in the bipartite matching loss, as specified in Equation (2). By default, we down-weight easy negatives by a factor of 10, but other values were extensively tested. Despite using these methods for tuning the down-weighting parameters, the false alarms reported in Table 3 and Table 4 still need to be improved.

3.4. Collage Images and Dummy Annotations

To improve our preliminary models in terms of false alarms, we incorporated empty images (i.e., images with no smoke, all background) using different strategies: simply adding empty images with no corresponding annotations, creating and adding collages of smoke and empty images with their respective bounding box annotations, and finally, adding empty images with dummy category annotations.

Given the inherent problem of the extreme foreground background class imbalance discussed earlier, adding more background in the form of empty images seems counterintuitive, as it increases these problematic easy negatives in our dataset, making the extreme imbalance even more extreme. We argue several reasons and discussion points on why it may be necessary to add empty images explicitly.

Single-stage object detectors, such as DETR, do not refine the proposed bounding boxes, using a complementary classifier, or nonmax suppression. The fact that DETR outperforms two-stage detectors on the challenging COCO benchmark simply attests to the superiority of Transformers. However, to further improve its robustness against challenging backgrounds in the wildfire domain, it is important to improve the diversity of the non-smoke background.

One obvious and the most effective way would be to collect enough positive data to cover a reasonable variety of backgrounds, which is a very challenging task, especially for wildfire smoke in the early stages. Target objects do not always happen with all possible backgrounds. For example, in our initial training set (sc and d), we do not have smoke happening in the same image as different shades of sunrise and sunset. Consequently, our initial model tends to incorrectly detect Sun as a smoke object, as shown in Figure 9g. The same situation applies to snow as wildfires amid snow are rare, but possible [99]. Thus, it is important to diversify the background, and one way of doing so is by adding empty images to the dataset with no bounding box annotations.

Object detection algorithms were not originally designed with the expectation of adding explicit negative samples. In fact, support for negative samples was only added recently. For instance, Faster R-CNN and RetinaNet did not support negative samples until April 2020 (i.e., PyTorch v0.6.0) and March 2021 (i.e., v0.9.0) updates, respectively [100]. Thus, the code base for many existing inference systems may not support negative samples. Therefore, to cover such scenarios, we tried two workarounds: one harder, but robust approach (collages) and another easy, scalable, but hacky approach (dummy annotations).

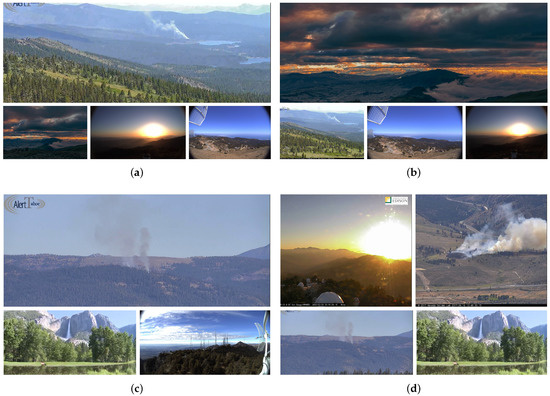

3.4.1. Collages

The first approach is using combined images (i.e., collages) of smoke and non-smoke scenes, similar to Figure 10. However, creating such collages and annotating smoke objects requires significant effort. First, collages need to be created in a way that avoids the introduction of spatial bias to the model. For example, the non-object and object images should not dominantly be in the same position within the collage (i.e., top-right, top-left, etc.), but shifted around in different ways to add variety. For instance, Figure 10a,b are permutations of the same set of images, some containing smoke and some only smoke-like background. Once collages are created, bounding boxes must be manually drawn and labeled, which can be laborious, given that the smoke sub-images may contain incipient smoke that is much smaller compared to the full images. To annotate the bounding boxes in some of the collage images containing small smoke objects, we employed our existing detectors to help us find smoke regions, albeit not always reliable.

Figure 10.

Four representative examples of collage images used for training. Different configurations were used (e.g., 1 by 1, 1 by 2, 2 by 1, 2 by 2, 1 by 3, etc.). The images within the collages were often reused, but shifted to create variability and avoid learning bias. (a,b) are permutations of the same set of images. In (a), the bottom-right and top images have smoke, and the other images are used as new background samples. (c) Collage of three images, with a larger image containing smoke and two smaller images containing non-smoke background. (d) The top-right and bottom-left are images containing smoke, and the top-left is a shade of sunrise that our initial training had never seen due to a lack of incipient-stage smoke happening naturally in such a background.

The multi-step and time-consuming task of creating and annotating collages meant that we settled for 116 of these collages to add to our training set, as also specified in Table 2. The number of added collage images may not be enough, but in practice, the collages would add background variety and positive samples at the same time, thus affecting class imbalance less than simply adding empty images. The difficulties of creating collages prompted us to think of another work-around, a simpler one.

3.4.2. Annotations with Dummy Category

The second approach is to add empty images directly (no collage) and add a dummy class. Then, for each empty image added to the dataset, create a minimal bounding box (1 by 1 pixel) with a random location within the image dimension. The advantage of this approach over creating collages is that it can be easily automated and scaled up in seconds, as it does not need any manual annotation of smoke objects. We created a simple pipeline to obtain empty images, extract their dimensions, and then, generate a random bbox with COCO format as follows:

where rand(0, Width) and rand(0, Height) create random top-left x and y positions, respectively, within the image dimension. The bounding boxes have the minimal width and height of 1 pixel. Random positioning of the dummy object is crucial, as the model would learn unimportant features, if for instance, a fixed position of top-left (i.e., 0, 0) was chosen based on the assumption that no target objects are at those extreme locations. Consequently, during inference, any detection of the dummy category would be discarded. This work-around would support older object detection inference systems that do no support negative samples.

To cover all configurations, we consider the option of adding empty images without the corresponding entry under the annotations in the COCO format. In conclusion, we tried all configurations to cover any scenario where the object detection algorithm did or did not support images with no annotation entry. Table 2 lists our dataset configurations. For single-class annotation, we do not attempt dummy or collage annotations and leave other configurations for future exploration. In the next section, we present the full evaluation results, including our baselines, RetinaNet, and Faster R-CNN, which we trained with our dataset for comparison.

4. Experimental Results and Discussion

In this section, the full comparative evaluations are presented based on the metrics presented in the following section.

4.1. Performance Evaluation

To evaluate the performance of our models, we used various metrics. For consistency, we mainly focused on the Microsoft COCO criteria and Pascal VOC metric, as in Table 5, all of which are based on the intersection over union (IoU), while using other metrics to further evaluate our density detectors. The IoU is commonly used to evaluate object detection algorithms. It measures the overlap between the predicted bounding boxes and the associated ground truth boxes via the following Equation (4).

Table 5.

COCO evaluation criteria.

A perfectly overlapping prediction would get an IoU score of 1, while a false alarm (i.e., predicted bounding box with no overlap) gets a zero. Consequently, the IoU can be used to determine whether the predicted bounding box is true or false by setting a fixed threshold for the IoU. If the IoU is bigger than the threshold, the detection is considered to be correct; otherwise, the detection is considered false and discarded.

However, a fixed threshold can potentially cause bias in the evaluation metric. Thus, it is common to use the Microsoft COCO mean average precision (i.e., mAP, or simply AP) as the primary metric to evaluate object detection algorithms. The AP interpolates 10 points associated with 10 different thresholds in the 0.5–0.95 range with a step size of 0.05, as specified in Equation (5).

Based on the above, the COCO evaluator (i.e., pycocotools [101]) automatically measures the AP at different levels and scales, as mentioned in Table 5. The IoU score of 0.33 was also considered, inspired by a recent study [16], which specifies smoke objects to have a fuzzy appearance, and lowering the overlap threshold would help keep valid smoke detections that otherwise would be discarded.

Besides the standard MS COCO and PASCAL VOC, we define separate metrics to better evaluate our models, especially for models trained on our smoke density annotations. Smoke is a special object, with diverse shapes and patterns [10]. As mentioned in smoke density re-annotation (see Section 3.1.3), breaking a single columnof smoke into fine-grained sub-regions based on density can result in drawing and labeling inconsistencies, and noise in data (Figure A2). Thus, bounding boxes generated by smoke detectors may be slightly misplaced from the ground truth and impact the AP results, but the detectors do identify the regions successfully, as shown in Figure A2. In addition, the inherent similarity between our neighboring density classes (i.e., low vs. mid and mid vs. high) can also impact the AP results. Importantly, a case where the prediction is two density levels off is rare in our results, especially for Nemo-DETR. Moreover, with the extremely small smoke objects (i.e., ∼ pixels), the smallest shift in the predicted bounding box relative to the ground truth can drastically affect the overlap and the corresponding IoU. Inspired by [10] and to evaluate the models more comprehensively, we use two additional metrics: frame accuracy (FA) and false positive rate (FPR). For a positive image, if the detector misses any smoke object, we count it as a frame miss (FM), otherwise as a frame hit (FH). Consequently, if the detector predicts any smoke-like object in our challenging negative set as smoke, we count it as a false positive (FP), otherwise as a true negative (TN). We then calculate the as specified in Equation (6):

4.2. Results

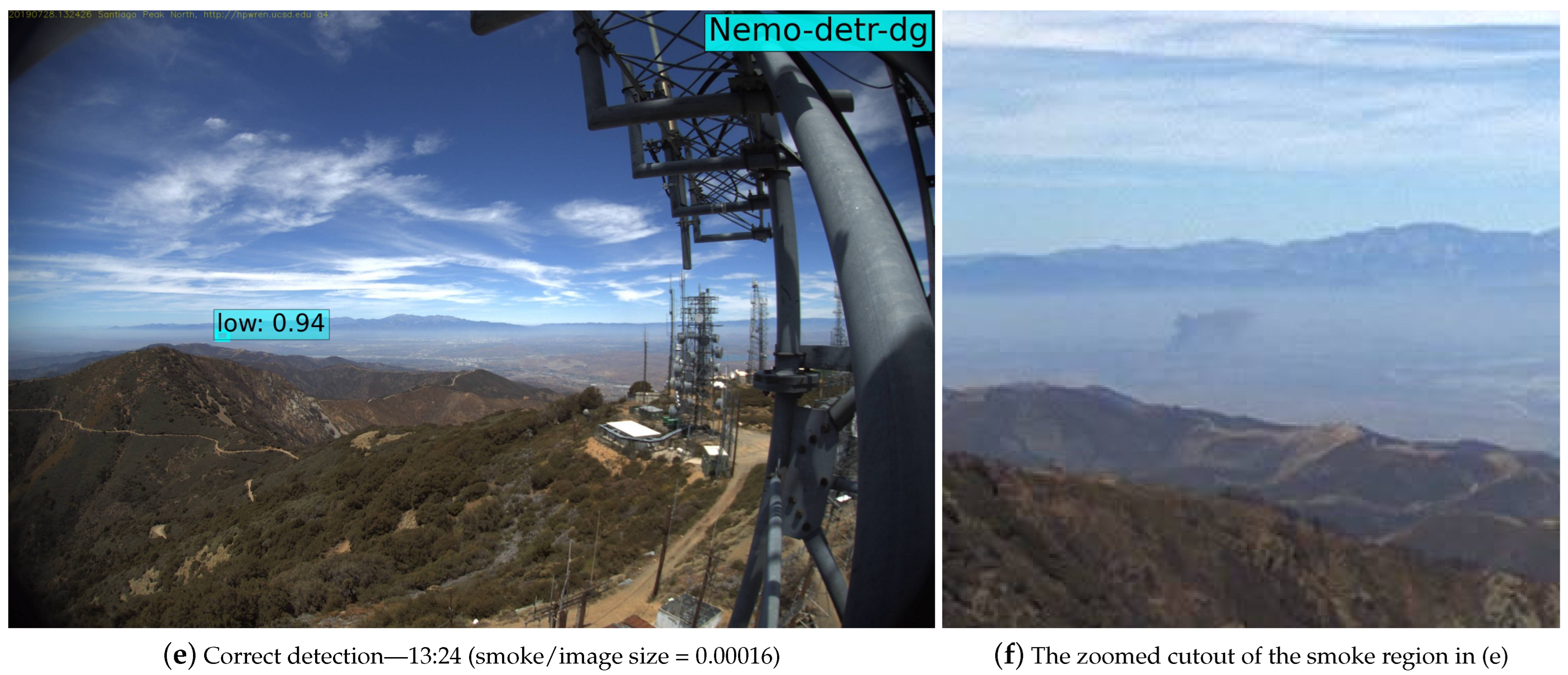

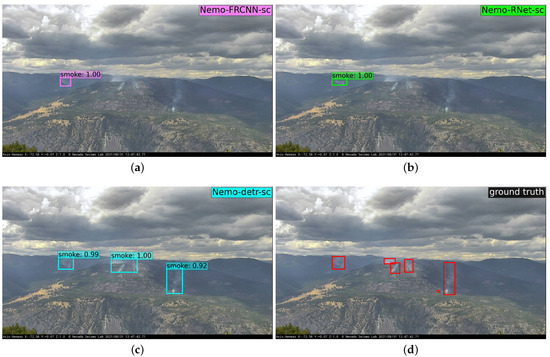

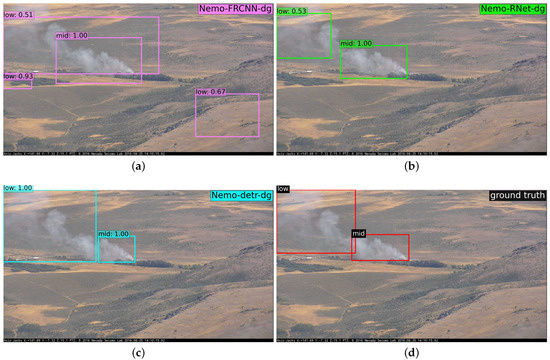

In this section, we present the complete comparative evaluation of our smoke object detectors (i.e., FRCNN, RetinaNet, and DETR). It is important to note that FRCNN and RetinaNet have been used before for wildfire detection and, very recently, fine-tuned by [16] to achieve impressive results. Their dataset is similar in terms of the number of images. Their training data were collected from national parks in Portugal, while ours from Nevada and California, which are relatively similar. However, due to some commercial aspects of the existing work [16] and the fact that we use different datasets, we do not compare them directly for evaluation. Instead, we trained Faster R-CNN and RetinaNet with our datasets as the reference for comparison. Table 6 and Table 7 list the detailed comparative evaluation of the Nemo smoke and smoke density detectors, respectively. Figure 11 and Figure 12 show an example of smoke bounding box detection for the single-class and density detectors, respectively. The employed models were trained using different Nemo dataset configurations, as noted in the name according to Table 2. Despite performing extensive experiments with different hyperparameters, we only include results from select model checkpoints with the highest mAP for consistency and brevity. The models and datasets are available at project Nemo’s GitHub [48] along with more details about the experiments.

Table 6.

Wildfire smoke detection results. Three different models, DETR, FRCNN, and RetinaNet (RNet) were trained with our dataset (Nemo) using the two data configurations from Table 2 (sc and sce).

Table 7.

Wildfire smoke density detection results. Wildfire smoke detection results. Three different models, DETR, FRCNN, and RetinaNet (RNet) were trained with our dataset (Nemo) using the data configurations from Table 2 (d, dg, de, dge, and dda).

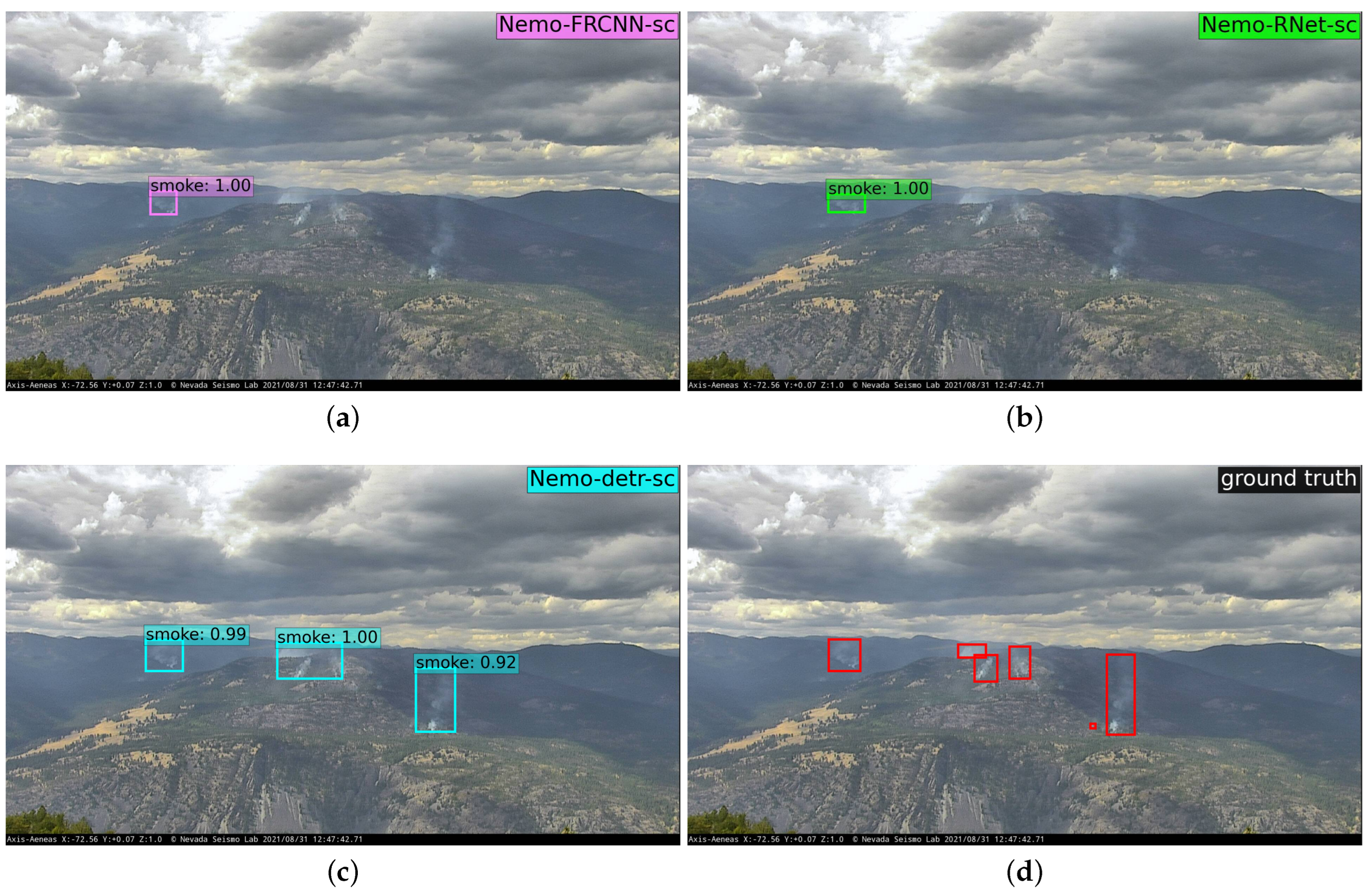

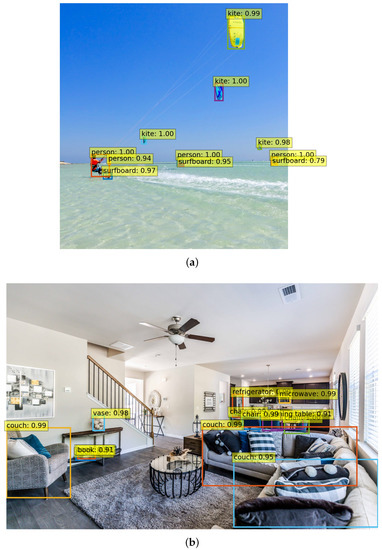

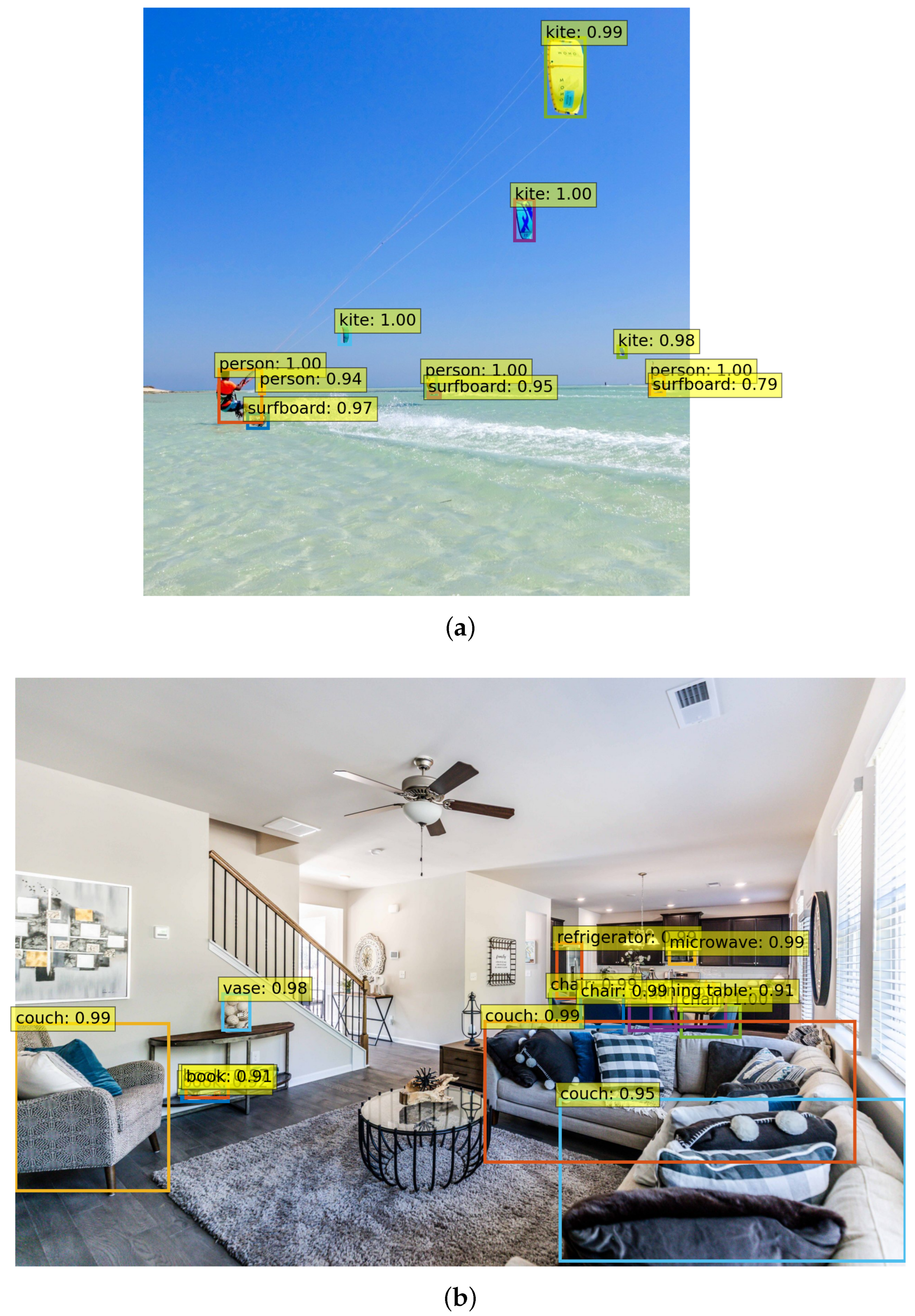

Figure 11.

Wildfire single-class smoke bounding box predictions by (a) Faster R-CNN (Nemo-FRCNN-sc), (b) RetinaNet (Nemo-RNet-sc), and (c) DETR (Nemo-DETR-sc). (d) shows the ground truth annotation. The image shown is from a camera at Aeneas Lookout, Washington, 31 August 2021.

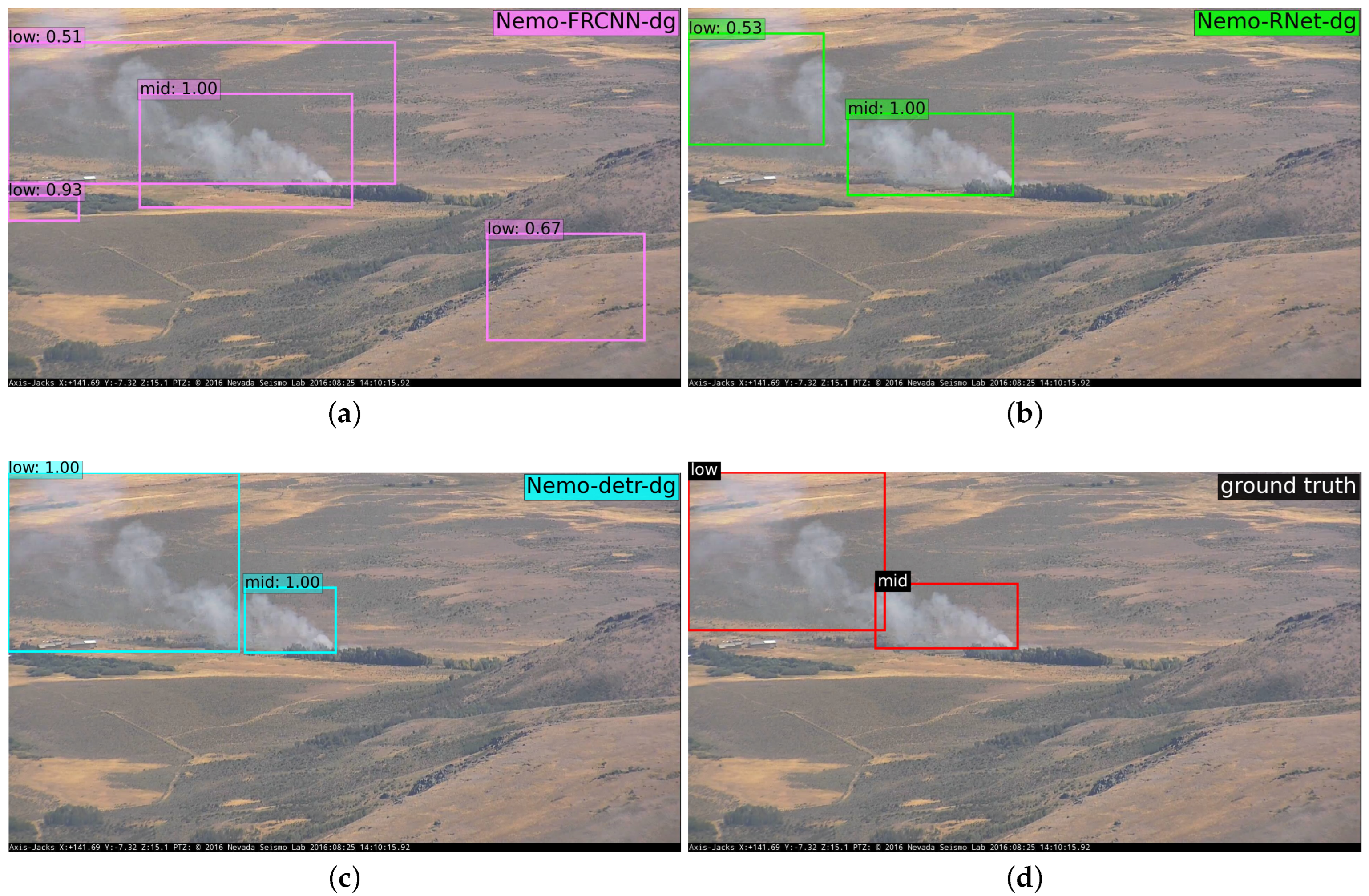

Figure 12.

Wildfire smoke density bounding box predictions by (a) Faster R-CNN (Nemo-FRCNN-dg), (b) RetinaNet (Nemo-RNet-dg), and (c) DETR (Nemo-DETR-dg). (d) shows the ground truth annotation. The image shown was recorded August 2016 from the Axis-Jacks camera located at Jacks Peak, Northern Nevada.

The results confirm that improving the variety of background using any of the strategies (i.e., collage, empty images with and without dummy annotations) can significantly reduce the number of false positives in our challenging negative set, as denoted by FPR. Importantly, however, the overall performance and precision of smoke bounding box detection is not significantly improved for positive images, as specified by mAP. For example, the best initial single-class model in terms of mAP returned 26 false alarms in our challenging non-smoke dataset, while after adding empty images, the number of false alarms significantly dropped to three. However, the mAP and PASCAL VOC metric (AP50) did not significantly improve. For instance, the mAP is only improved by up to 1.7% and 0.4% for smoke and smoke density detection, respectively. The same observations apply to RetinaNet and Faster R-CNN. We hypothesize this to be due to the extra class imbalance introduced by adding empty images, further diluting the loss. We also notice that the models trained with collage images (dg) returned the highest average precision for DETR and RetinaNet and the second-highest for FRCNN, which is interesting because collage is the only data configuration besides original (i.e., sc and d) that does not add significant class imbalance to the dataset.