On the Efficient Implementation of Sparse Bayesian Learning-Based STAP Algorithms

Abstract

1. Introduction

2. Signal Model

3. A Brief Review of the Traditional MSBL-STAP Algorithms

4. Proposed Algorithms

- (1) Calculate the initial values

- (2) Repeat:

- (3) Output: and .

- Step 1: Give the initial values ,

- Step 2: Given the and , use (19)–(24) to obtain the first columns of the covariance matrix by applying the 2-D FFT, with flops.

- Step 3: Given the first columns of , compute the through the 2-D Levinson-Durbin (L-D) algorithm, with flops.

- Step 4: Using (60)–(86), calculate the vector and the mean matrix by applying the 2-D FFT and IFFT, with flops.

- Step 5: Update and using (10) and (11).

- Step 6: Repeat Steps 2 to 5 until the predefined convergence criterion is satisfied.

- Step 7: Obtain the estimated angle-Doppler profile using (13).

- Step 8: Compute the clutter-plus-noise covariance matrix (CNCM) using (14).

- Step 9: Compute the optimal STAP weight vector using (15).
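To illustrate why Step 2 can rely on a 2-D FFT, the following is a minimal sketch, not the authors' implementation. It assumes a dictionary of space-time steering vectors on a uniform Doppler/angle grid covering one full period, so that the covariance matrix is Toeplitz-block-Toeplitz and every entry is a 2-D lag of the power profile. The function names, the grid sizes, and the use of `ifft2` (chosen to match the sign convention of the assumed steering vectors) are all illustrative assumptions.

```python
import numpy as np

def steering_dictionary(M, N, Kd, Ks):
    """Space-time dictionary on an assumed uniform Kd x Ks Doppler/angle grid."""
    m = np.arange(M)[:, None]
    n = np.arange(N)[:, None]
    Ad = np.exp(2j * np.pi * m * np.arange(Kd)[None, :] / Kd)  # M x Kd temporal part
    As = np.exp(2j * np.pi * n * np.arange(Ks)[None, :] / Ks)  # N x Ks spatial part
    return np.kron(Ad, As)  # (M*N) x (Kd*Ks), column index kd*Ks + ks

def covariance_lags(gamma, M, N):
    """All 2-D lags r[p, q], p in -(M-1)..M-1, q in -(N-1)..N-1, from one inverse 2-D FFT."""
    Kd, Ks = gamma.shape
    r_full = np.fft.ifft2(gamma) * (Kd * Ks)  # undo the 1/(Kd*Ks) scaling of ifft2
    p = np.arange(-(M - 1), M) % Kd           # negative lags wrap around
    q = np.arange(-(N - 1), N) % Ks
    return r_full[np.ix_(p, q)]               # shape (2M-1, 2N-1)

def covariance_from_lags(r, M, N, sigma2):
    """Assemble the full matrix R[(m,n),(m',n')] = r[m-m', n-n'] plus the noise term."""
    m = np.arange(M)
    n = np.arange(N)
    dp = m[:, None] - m[None, :] + (M - 1)    # lag offsets into r
    dq = n[:, None] - n[None, :] + (N - 1)
    R = r[dp[:, None, :, None], dq[None, :, None, :]].reshape(M * N, M * N)
    return R + sigma2 * np.eye(M * N)

# Compare against the direct O(MN * MN * KdKs) product on a small random example.
rng = np.random.default_rng(0)
M, N, Kd, Ks = 4, 3, 16, 12
gamma = rng.random((Kd, Ks))                  # hypothetical power profile
sigma2 = 0.5                                  # hypothetical noise power
Phi = steering_dictionary(M, N, Kd, Ks)
R_direct = (Phi * gamma.ravel()) @ Phi.conj().T + sigma2 * np.eye(M * N)
R_fft = covariance_from_lags(covariance_lags(gamma, M, N), M, N, sigma2)
print(np.allclose(R_direct, R_fft))
```

In a fast implementation only the lags (equivalently, the first block column) would be kept; the full matrix is assembled here purely to check the result against the direct product.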

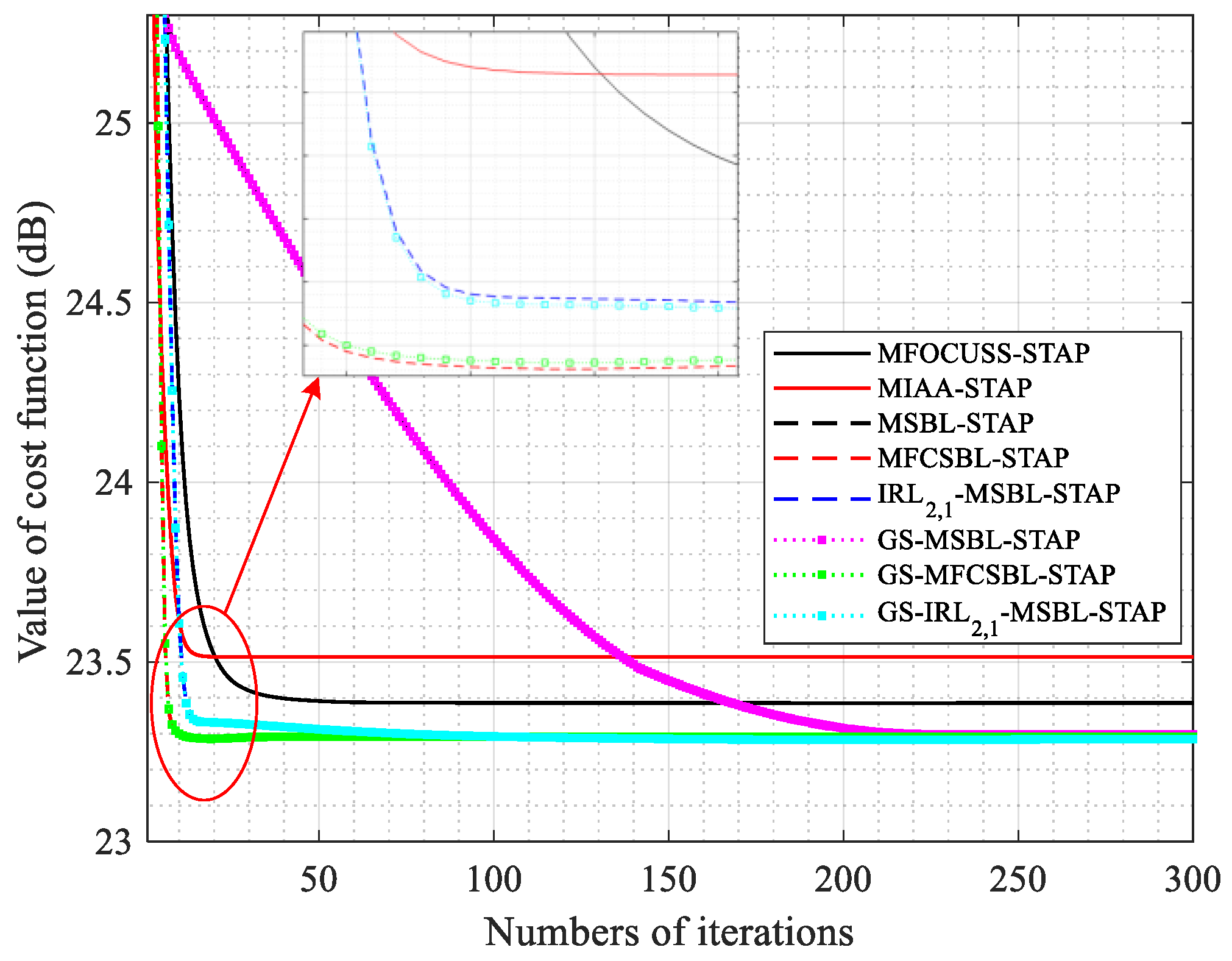

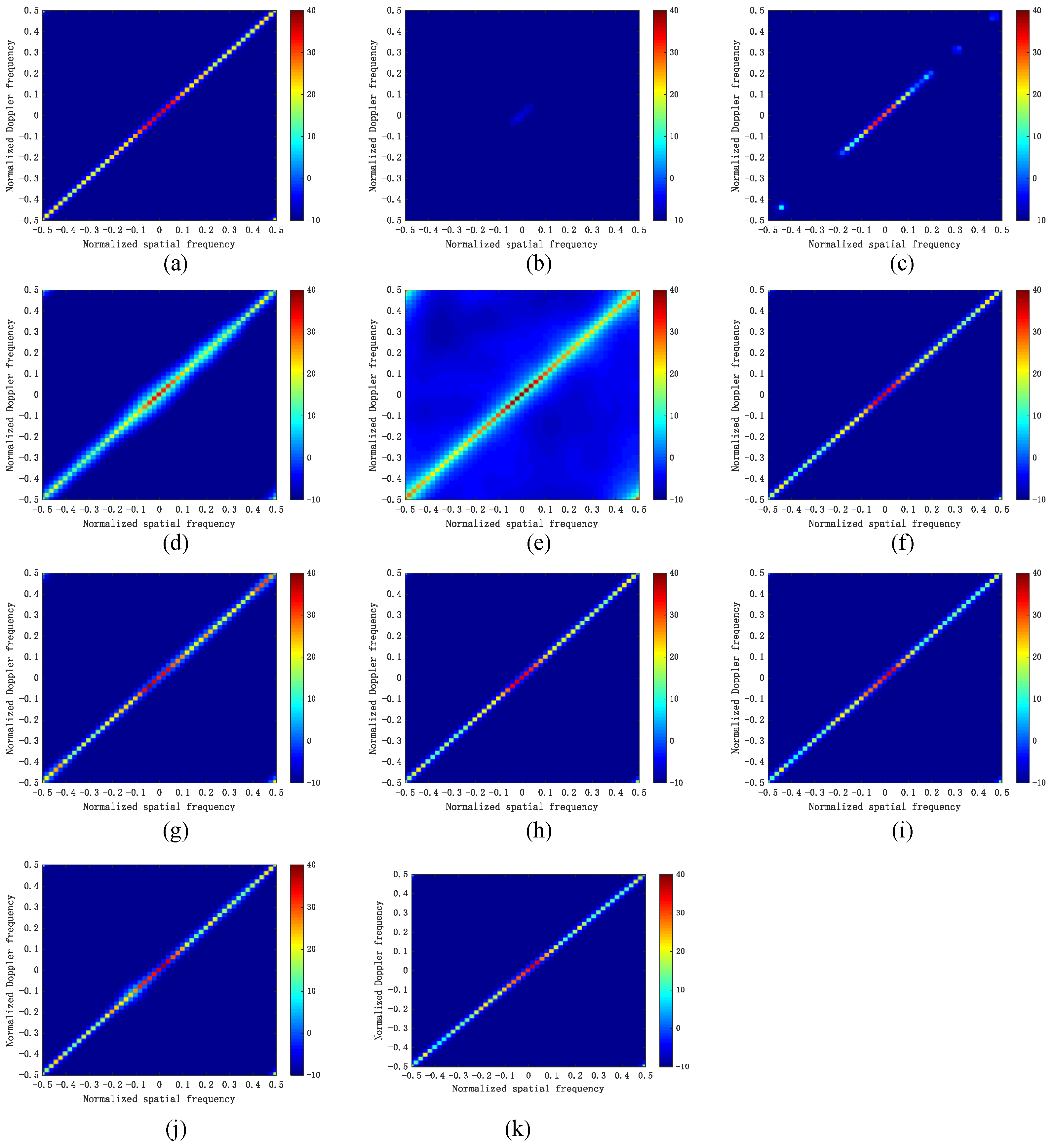

5. Numerical Simulation

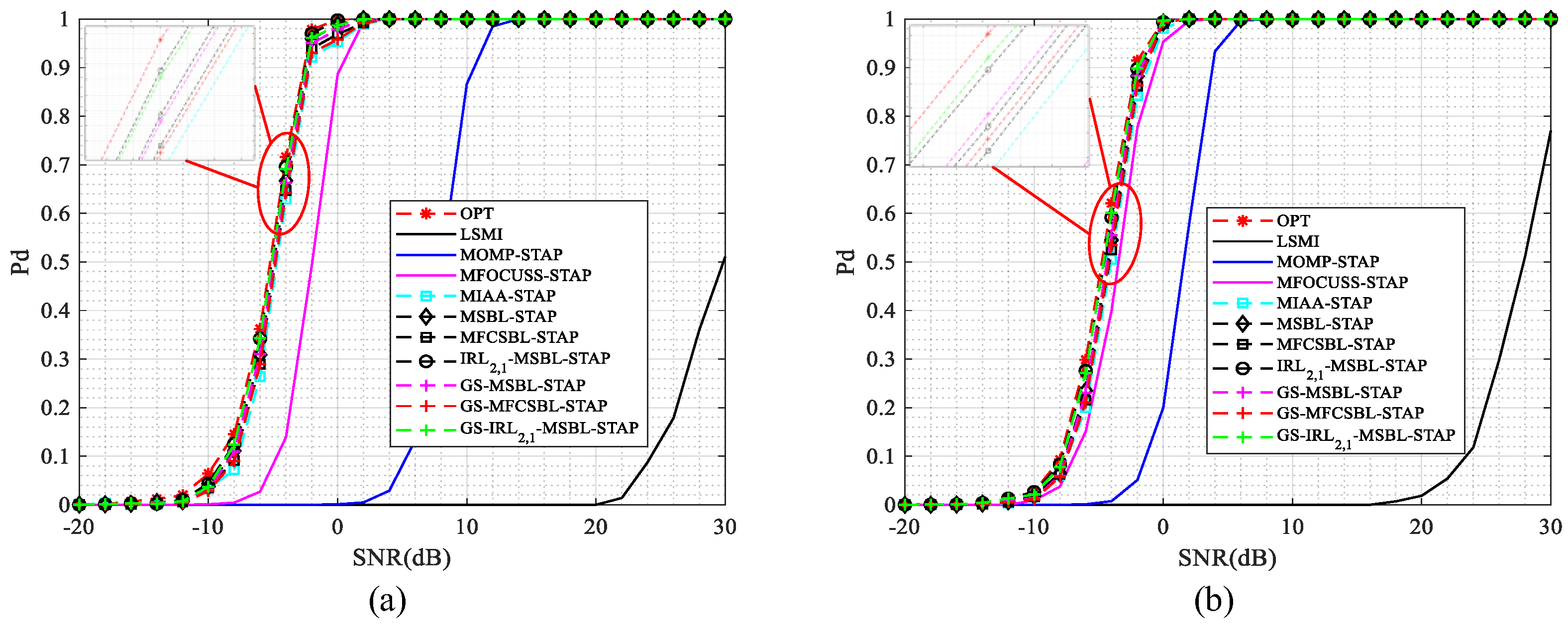

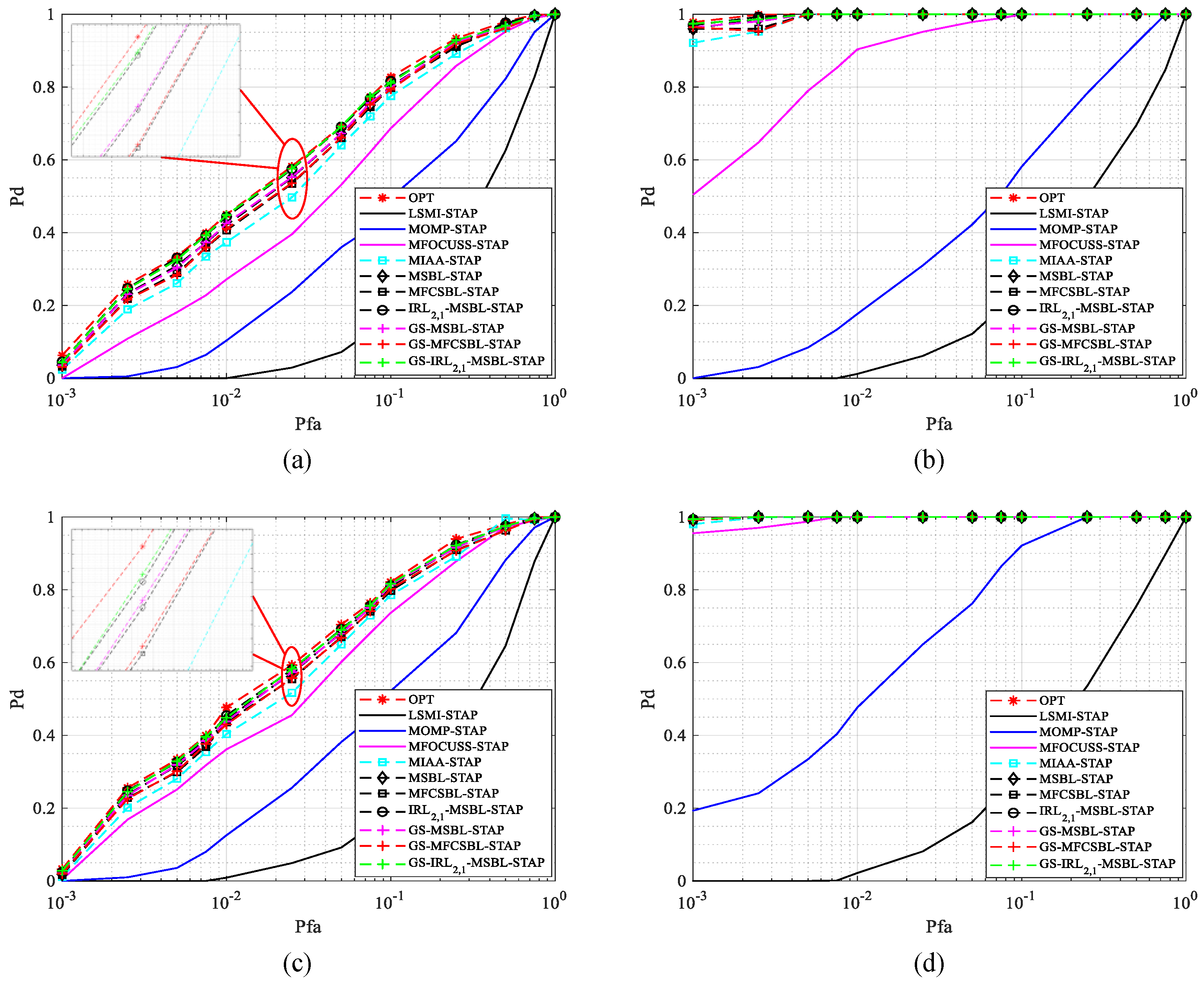

5.1. Simulated Data

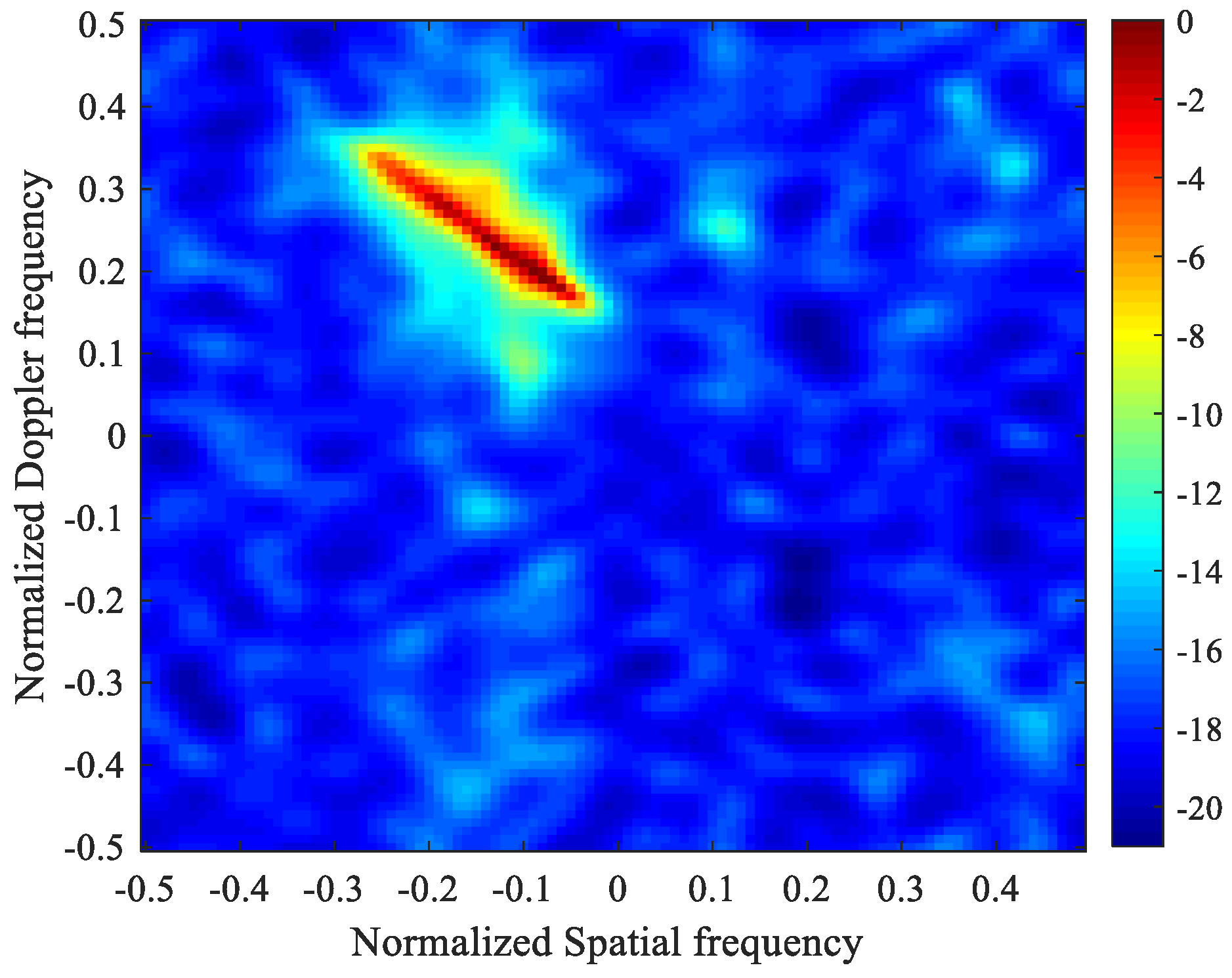

5.2. Measured Data

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B


| Input: training samples , dictionary matrix . |

| Initialize: , , , , . |

| Repeat: |

| The iterative procedure terminates when the iteration termination condition in (12) is satisfied. |

| Get the estimated angle-Doppler profile using (13). |

| Reconstruct the CNCM using (14) and compute the optimal STAP weight vector using (15). |

| Input: training samples , dictionary matrix . |

| Initialize: , , , . |

| Repeat: |

| The iterative procedure terminates when the iteration termination condition in (12) is satisfied. |

| Get the estimated angle-Doppler profile using (13). |

| Reconstruct the CNCM using (14) and compute the optimal STAP weight vector using (15). |
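The loop structure of the algorithm tables above (initialize, iterate until convergence, read off the profile, rebuild the CNCM, form the weight vector) can be sketched as follows. Equations (10)–(15) are not reproduced in this section, so the updates below are the commonly cited EM rules for multiple-measurement-vector SBL from the general literature, and the relative-change stopping rule, the noise floor `sigma2_floor`, and all function and variable names are assumptions made for illustration. This is a direct, unaccelerated sketch, not the authors' method.

```python
import numpy as np

def msbl_stap(X, Phi, s, n_iter=100, tol=1e-4, sigma2_floor=1e-6):
    """Assumed M-SBL EM iteration followed by CNCM reconstruction and a STAP weight.

    X   : (NM, L) training samples
    Phi : (NM, K) dictionary of space-time steering vectors
    s   : (NM,)   target steering vector
    """
    NM, L = X.shape
    gamma = np.ones(Phi.shape[1])   # hyperparameters (power per grid point)
    sigma2 = 1.0                    # noise power
    for _ in range(n_iter):
        Sigma_y = (Phi * gamma) @ Phi.conj().T + sigma2 * np.eye(NM)
        Sy_inv = np.linalg.inv(Sigma_y)
        SyiPhi = Sy_inv @ Phi
        # q_k = phi_k^H Sigma_y^{-1} phi_k, used for the posterior variances
        q = np.real(np.sum(Phi.conj() * SyiPhi, axis=0))
        Mu = gamma[:, None] * (SyiPhi.conj().T @ X)           # posterior mean matrix
        Sigma_diag = np.maximum(gamma - gamma**2 * q, 0.0)    # diag of posterior covariance
        gamma_new = np.mean(np.abs(Mu) ** 2, axis=1) + Sigma_diag
        resid = np.linalg.norm(X - Phi @ Mu) ** 2 / L
        denom = sigma2 * np.real(np.trace(Sy_inv))            # equals NM - sum(gamma * q)
        sigma2 = max(resid / denom, sigma2_floor)             # floor is a numerical safeguard
        change = np.linalg.norm(gamma_new - gamma) / np.linalg.norm(gamma)
        gamma = gamma_new
        if change < tol:                                      # assumed stopping rule
            break
    # Reconstruct the CNCM from the estimated profile and form the weight vector.
    R = (Phi * gamma) @ Phi.conj().T + sigma2 * np.eye(NM)
    Rinv_s = np.linalg.solve(R, s)
    w = Rinv_s / np.vdot(s, Rinv_s)                           # distortionless: w^H s = 1
    return gamma, sigma2, R, w

# Small synthetic example with three active dictionary atoms (all values hypothetical).
rng = np.random.default_rng(1)
NM, K, L = 16, 64, 10
Phi = np.exp(2j * np.pi * rng.random((NM, K)))
A = np.zeros((K, L), dtype=complex)
A[[3, 20, 41]] = 10 * (rng.standard_normal((3, L)) + 1j * rng.standard_normal((3, L)))
noise = (rng.standard_normal((NM, L)) + 1j * rng.standard_normal((NM, L))) / np.sqrt(2)
X = Phi @ A + noise
gamma, sigma2, R, w = msbl_stap(X, Phi, Phi[:, 0].copy())
print(np.argsort(gamma)[-3:])   # indices of the three largest estimated powers
```

Each pass forms and inverts an NM × NM matrix and multiplies by the full dictionary, which is the per-iteration cost that the FFT-based steps are meant to avoid.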

| Parameter | Value |
|---|---|
| Bandwidth | 2.5 MHz |
| Wavelength | 0.3 m |
| Pulse repetition frequency | 2000 Hz |
| Platform velocity | 150 m/s |
| Platform height | 9 km |
| Element number | 8 |
| Pulse number | 8 |
| CNR | 40 dB |
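A few derived quantities follow directly from the parameters in the table above. The short calculation below is a sketch under two assumptions that the table does not state: a side-looking uniform linear array and half-wavelength element spacing. The clutter-ridge slope formula is the standard one from the STAP literature; the variable names are illustrative.

```python
import math

# Values taken from the parameter table.
wavelength_m = 0.3
prf_hz = 2000.0
velocity_mps = 150.0
n_elements = 8
n_pulses = 8
cnr_db = 40.0

# ASSUMPTION: half-wavelength element spacing (not given in the table).
spacing_m = wavelength_m / 2.0

system_dofs = n_elements * n_pulses                       # space-time degrees of freedom
max_clutter_doppler_hz = 2.0 * velocity_mps / wavelength_m
beta = 2.0 * velocity_mps / (spacing_m * prf_hz)          # clutter-ridge slope (side-looking)
cnr_linear = math.pow(10.0, cnr_db / 10.0)

print(f"system DOFs            : {system_dofs}")
print(f"max clutter Doppler    : {max_clutter_doppler_hz:.1f} Hz")
print(f"clutter-ridge slope    : {beta:.3f}")
print(f"CNR (linear power)     : {cnr_linear:.0f}")
```

Under these assumptions the slope is approximately 1, so the clutter ridge spans the unambiguous Doppler interval without folding.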

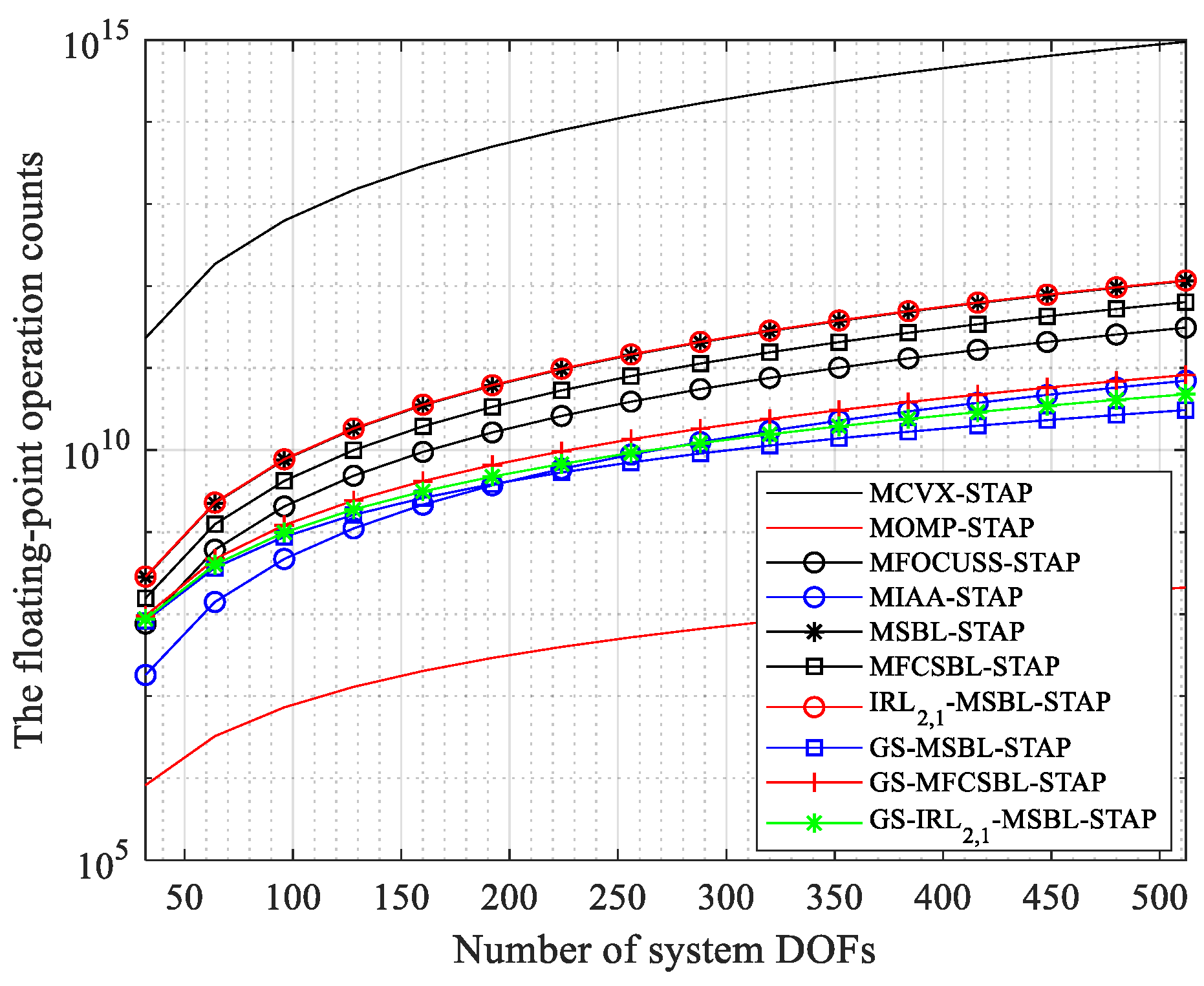

| Algorithm | The Number of Floating-Point Operations for a Single Iteration |
|---|---|
| MCVX-STAP | |
| MOMP-STAP | |
| MFOCUSS-STAP | |
| MIAA-STAP | |
| MSBL-STAP | |
| MFCSBL-STAP | |
| GS-MSBL-STAP | |
| GS-MFCSBL-STAP | |

Number of floating-point operations for a single iteration, by system DOFs:

| Algorithm | System DOFs = 128 | System DOFs = 256 | System DOFs = 512 |
|---|---|---|---|
| MCVX-STAP | $1.484 \times 10^{13}$ | $1.187 \times 10^{14}$ | $9.499 \times 10^{14}$ |
| MOMP-STAP | $1.296 \times 10^{7}$ | $5.206 \times 10^{7}$ | $2.111 \times 10^{8}$ |
| MFOCUSS-STAP | $4.861 \times 10^{9}$ | $3.884 \times 10^{10}$ | $3.105 \times 10^{11}$ |
| MIAA-STAP | $1.107 \times 10^{9}$ | $8.791 \times 10^{9}$ | $7.006 \times 10^{10}$ |
| MSBL-STAP | $1.802 \times 10^{10}$ | $1.441 \times 10^{11}$ | $1.152 \times 10^{12}$ |
| MFCSBL-STAP | $9.962 \times 10^{9}$ | $7.964 \times 10^{10}$ | $6.369 \times 10^{11}$ |
| | $1.828 \times 10^{10}$ | $1.462 \times 10^{11}$ | $1.169 \times 10^{12}$ |
| GS-MSBL-STAP | $1.619 \times 10^{9}$ | $7.072 \times 10^{9}$ | $3.070 \times 10^{10}$ |
| GS-MFCSBL-STAP | $2.424 \times 10^{9}$ | $1.351 \times 10^{10}$ | $8.223 \times 10^{10}$ |
| | $1.888 \times 10^{9}$ | $9.220 \times 10^{9}$ | $4.788 \times 10^{10}$ |
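The per-iteration counts in the table above can be turned into speed-up factors and empirical growth rates with a few lines of arithmetic. The sketch below uses only the labeled MSBL and MFCSBL rows of the table; the two rows whose labels did not survive are left out, and the helper names are illustrative.

```python
import math

dofs = [128, 256, 512]

# Flop counts copied from the labeled rows of the table.
flops = {
    "MSBL-STAP":      [1.802e10, 1.441e11, 1.152e12],
    "GS-MSBL-STAP":   [1.619e9, 7.072e9, 3.070e10],
    "MFCSBL-STAP":    [9.962e9, 7.964e10, 6.369e11],
    "GS-MFCSBL-STAP": [2.424e9, 1.351e10, 8.223e10],
}

def speedup(baseline, fast):
    """Ratio of baseline flops to fast flops at each system-DOF setting."""
    return [b / f for b, f in zip(flops[baseline], flops[fast])]

def scaling_exponents(name):
    """Empirical exponent a in flops ~ DOFs**a between consecutive DOF settings."""
    v = flops[name]
    return [
        math.log(v[i + 1] / v[i]) / math.log(dofs[i + 1] / dofs[i])
        for i in range(len(v) - 1)
    ]

for base, fast in [("MSBL-STAP", "GS-MSBL-STAP"), ("MFCSBL-STAP", "GS-MFCSBL-STAP")]:
    ratios = ", ".join(f"{r:.2f}x" for r in speedup(base, fast))
    print(f"{base} / {fast}: {ratios}")

for name in flops:
    exps = ", ".join(f"{e:.2f}" for e in scaling_exponents(name))
    print(f"{name} growth exponents: {exps}")
```

On these numbers the MSBL-STAP count grows roughly with the cube of the system DOFs, while the GS-MSBL-STAP count grows with an exponent slightly above 2, so the speed-up factor widens as the DOFs increase.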

| Algorithm | Running Time |
|---|---|
| MCVX-STAP | 900.4931 s |
| MOMP-STAP | 0.0254 s |
| MFOCUSS-STAP | 3.8556 s |
| MIAA-STAP | 0.7614 s |
| MSBL-STAP | 15.1402 s |
| MFCSBL-STAP | 1.4835 s |
| | 1.8533 s |
| GS-MSBL-STAP | 1.3409 s |
| GS-MFCSBL-STAP | 0.3610 s |
| | 0.1914 s |

| Algorithm | Running Time |
|---|---|
| MFOCUSS-STAP | 41.9740 s |
| MIAA-STAP | 2.3135 s |
| MSBL-STAP | 43.8430 s |
| MFCSBL-STAP | 4.7761 s |
| | 6.7693 s |
| GS-MSBL-STAP | 2.3971 s |
| GS-MFCSBL-STAP | 0.8380 s |
| | 0.4277 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, K.; Wang, T.; Wu, J.; Liu, C.; Cui, W. On the Efficient Implementation of Sparse Bayesian Learning-Based STAP Algorithms. Remote Sens. 2022, 14, 3931. https://doi.org/10.3390/rs14163931
