1. Introduction

Thunder and lightning are violent atmospheric events that must have impressed humans since prehistoric times. Lightning itself, as well as the strong winds, precipitation, hail, or even downbursts and tornadoes that may accompany thunderstorms, are not only impressive appearances in satellite images but can also pose significant risks to life and property. Even in modern times, fatalities and severe damage from thunderstorms still occur at unfortunate rates, and many commercial operations, such as airports or public outdoor events (sports, music, gatherings), rely on risk assessments and lightning predictions in order to operate safely. In this context, accurate forecasts for the next few hours are particularly important. Thunderstorms are usually associated with lightning, which can easily be detected by triangulation using multiple radio antennas.

Unfortunately, the prediction of thunderstorms and lightning (Cb) is a very difficult problem: The development of the atmosphere’s electric field, with locally strong charges of different signs, depends crucially on cloud processes. Charge generation is based on the collision of ice particles (ice crystals, graupel, or hail particles) in the presence of supercooled water droplets [1]; other charge-generation processes of this kind have also been proposed (see, e.g., [2]). In either case, the collisions are enhanced in strong, localized atmospheric updrafts, which are typically formed by frontal movements or by spontaneous convective events induced by heating in situations of unstable atmospheric layering. The latter in particular are highly sensitive to small-scale perturbations and are thus hard to predict.

Although significant improvements in Cb forecasting have been achieved with numerical weather prediction (NWP) [3,4], accurate forecasts of Cb location and strength are still a major challenge. Hence, nowcasting methods are typically used to issue warnings with short lead times (up to a few hours) [5]. An early overview of nowcasting of (severe) weather phenomena is given in [6]. In general, nowcasting can be viewed as a special type of video prediction problem, that is, classification or regression based on a temporal sequence of images. Thus, methods proposed for, e.g., frame prediction in natural videos are related and applicable to nowcasting; see [7].

Radar-based methods are often used for regional nowcasting, in particular (Doppler) radar-based remote sensing of (heavy) precipitation events and their movement, which, depending on the method, is combined with lightning data [8]. The forecast is typically based on two subsequent images and the extrapolation of the movement of the observed storm cells, e.g., by optical flow methods [9] or tracking methods [10]. This limits the ability to predict newly forming or decaying cells, as well as the precision at longer lead times. For example, operational systems such as NowCastMIX [10] struggle with predictions for lead times beyond two hours, which is why NWP models are used for longer lead times.

An alternative source of information is the imagery recorded by geostationary satellites, which covers larger regions than weather radar. For example, the SEVIRI instrument on board the Meteosat Second Generation (MSG) platform provides data in twelve spectral bands with a temporal resolution of 15 min and a spatial resolution of 3 km at the sub-satellite point, which corresponds to a coarser grid in Central Europe. Like radar data, these data can be used to determine atmospheric motion vectors (AMVs) using optical flow, and they provide information about the brightness temperature (BT) of clouds, which can be used to indicate thunderstorms. Examples are the physically motivated “sandwich” method, which is based on the BT difference between a water vapor (WV) channel and the IR window channel [11], or alternatively the use of another WV channel [12]. Yet, Cbs are defined by lightning. Hence, in order to improve the accuracy of Cb detection, which is the basis for nowcasting, it is recommended to use lightning data in addition. For example, [13] use the Advanced Baseline Imager (ABI) and the flash-extent density (FED) from the Geostationary Lightning Mapper (GLM) on board GOES-16, as well as satellite and solar zenith angles and geo-coordinates, to predict “intense convection”. In [14], satellite-based information is combined with lightning data from the Vaisala Global Lightning Detection Network (GLD360) and information from NWP. Moreover, combinations of satellite and radar information have been applied; for example, [15] use five temporal images from two ABI channels to predict convective regions derived from Multi-Radar Multi-Sensor (MRMS) precipitation types.

However, it remains unclear whether physical nowcasting methods make the best possible use of the information available in satellite images and lightning data. As discussed above, current approaches, e.g., [14,16,17], do not take into account the decay or development of (new) cells. Corresponding information may be hidden in the input data and remain unused; the visual analysis of thunderstorms based on lightning data and satellite imagery suggests that more information could be extracted from the data. Thus, an obvious alternative is to resort to machine learning [18,19], and in particular to deep representation learning techniques [13,20], which in recent years have become able to automatically build highly predictive statistical models even from data with extremely complex statistical dependency structures, such as distinguishing between breeds of dogs in general photography [21,22,23].

Our study follows this approach: We predict lightning events from image sequences containing satellite imagery and a map of recent lightning events through an image-to-image translation performed by (a variant of) a convolutional U-Net [24], which can be considered a “canonical” network architecture for image segmentation and translation tasks. Our study is designed in particular to address the question of what insight can be gained from satellite images alone for short-term Cb forecasting, and which parts of the data are most important to this end. The use of machine learning methods, and specifically deep neural networks, has been studied previously in the literature [25,26,27,28,29]. Specifically, U-Nets have been used in related studies for precipitation forecasting [30,31]. Regarding convection, [32] published a study that uses radar images (2 min, 1 km grid) to predict precipitation with a lead time of up to 6 h using a (residual) U-Net. Similarly, Shi et al. [33] train a Trajectory GRU model to predict precipitation based on radar images (6 min, ca. 1 km grid). In [34], a random forest classifier trained on hand-crafted features was proposed to detect convective initiation (CI) from geostationary satellite data, with CI derived from radar data. A similar approach, which trains a decision tree on four commonly available surface weather variables (air pressure at station level (QFE), air temperature, relative humidity, and wind speed) to predict lightning events for lead times of up to 30 min, was developed in [35].

However, lightning prediction comes with the problem of heavy class imbalance, which is a general challenge for classification with statistical machine learning: In many relevant scenarios, lightning events are rather rare; thus, a classifier that simply predicts “no (nearby) lightning” for all outputs can easily reach accuracies close to 100%. Previous work has mitigated this issue by resampling to evenly balanced examples [18] or by considering conditions with high prevalence [20], which then also assumes balanced base rates when making predictions. In this paper, we develop a method that automatically balances class weights to optimize a deep learning classifier for high predictive power (such as a high critical success index, CSI). This permits simple “end-to-end” training with operationally meaningful predictions (i.e., using recent lightning and satellite observations, the classifier predicts future events with above-chance accuracy). To obtain an indicator of the quality achieved, we compare our results with those of an optical flow-based nowcasting method of the German Weather Service (DWD).

A second, and possibly even more interesting, question is which information actually contributes to making better predictions. Having a strong classifier that outperforms hand-engineered operational models by simple, automatic statistical learning opens up the opportunity to study this question: By withholding data at the learning stage and tracking the reduction in performance, we can attribute how much information the classifier was able to draw from each source (formally, as a lower bound on how much statistical information about the event is contained in the respective portion of the data). We conduct an experiment in which we measure the variability of the prediction performance over different times of the day and retrain classifiers using only the visible bands (including the 1.6 µm near-infrared channel), only infrared bands, or only the two water vapor bands.

Overall, our study makes two key contributions: (i) a simple method for end-to-end training of a lightning-event predictor from image data that is both practical (applicable to data with real-world, skewed prior class frequencies) and accurate (outperforming state-of-the-art optical flow-based systems), and (ii) novel insights into which sources of information are useful for making predictions, obtained by examining the performance characteristics of the learned classifier.

2. Materials and Methods

Our method uses statistical learning to obtain a mapping that takes satellite images and measurements of recent lightning activity as input and predicts future lightning activity, with a specific lead time, as output.

Satellite images: Formally, we define the geostationary satellite images as functions $S: \mathbb{R} \times \mathbb{R}^2 \times \mathcal{C} \to \mathbb{R}$, denoted as $S(t, x, y, c)$, where $t$ is the time at which the image capturing has begun, $x$ and $y$ are the longitude and latitude, respectively, and $c \in \mathcal{C}$ refers to the wavelength band measured. The specific satellite imagery used is provided by the Spinning Enhanced Visible and InfraRed Imager (SEVIRI) of the Meteosat Second Generation (MSG) system and was obtained from EUMETSAT [36]. Images are available in 12 discrete frequency bands with a temporal resolution of 15 min. Eight bands represent the thermal infrared (IR) range, providing radiance resulting from the emission of the atmosphere and the Earth’s surface; they can be used to estimate the brightness temperature of the atmosphere and the surface. Three channels in the visible (VIS) spectrum measure the reflection of solar light by clouds or the Earth’s surface; this information can be used to retrieve the albedo of clouds and the surface. Lastly, the High-Resolution Visible (HRV) channel contains multiple broadband detection elements that scan the Earth with a smaller sampling distance. In Central Europe, the native spatial resolution of the satellite images (except for the HRV band, which we do not consider) is coarser than at the sub-satellite point. All satellite data are projected to an equirectangular projection with an equal spatial resolution of 0.05° in latitude and longitude. We represent the satellite data as collections of 2D images $S(t, \cdot, \cdot, c)$, with values quantized to 16-bit integers.

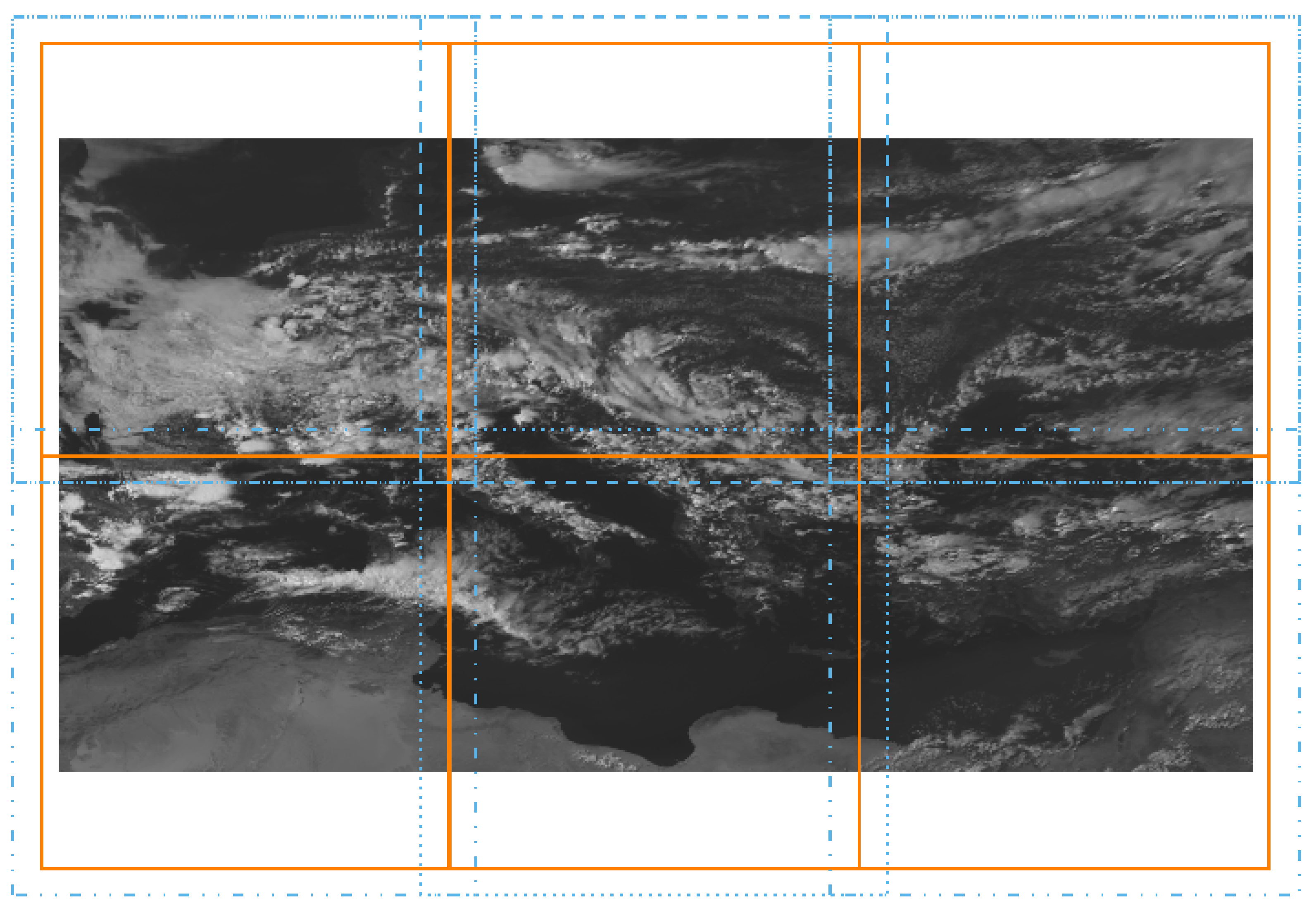

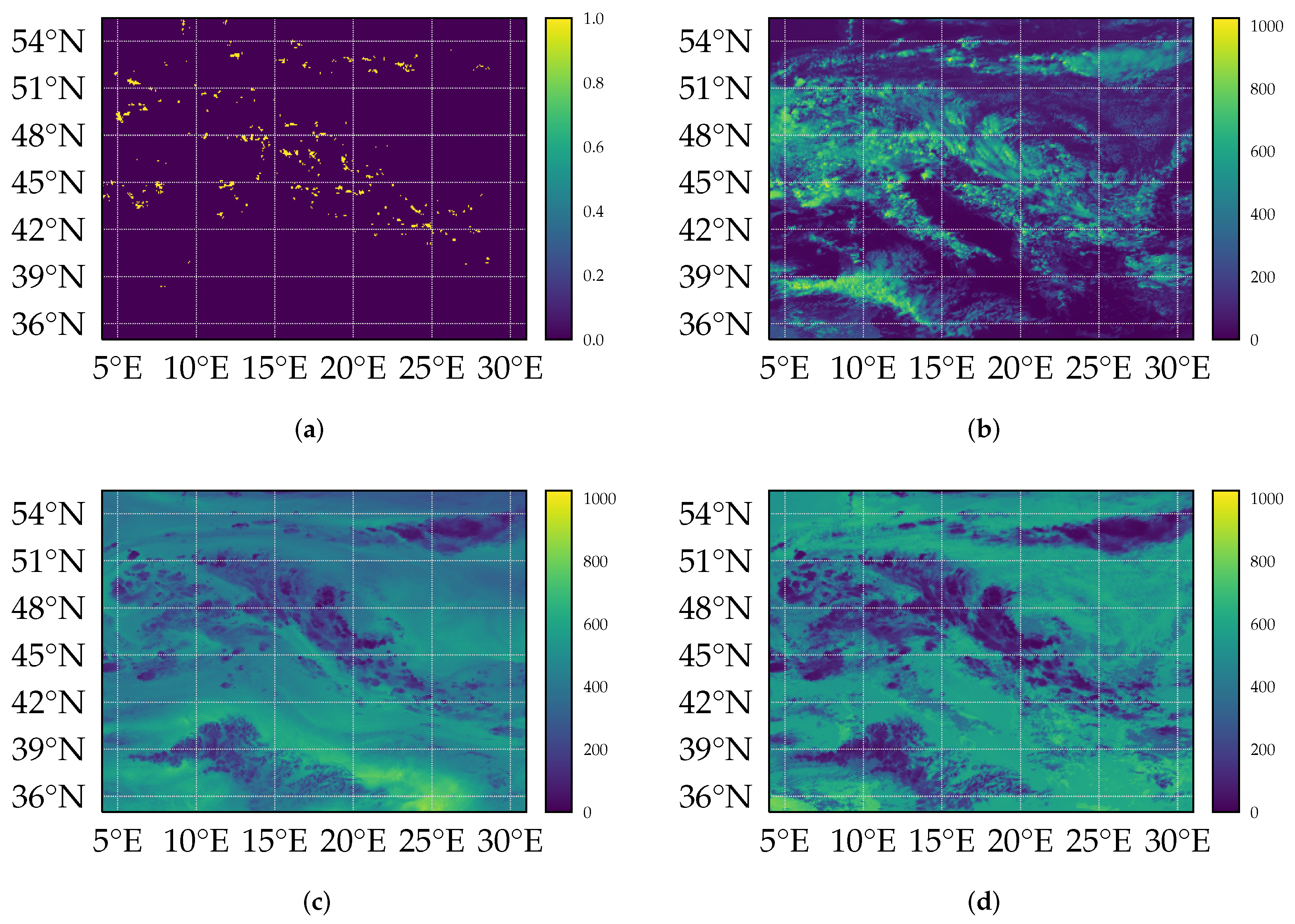

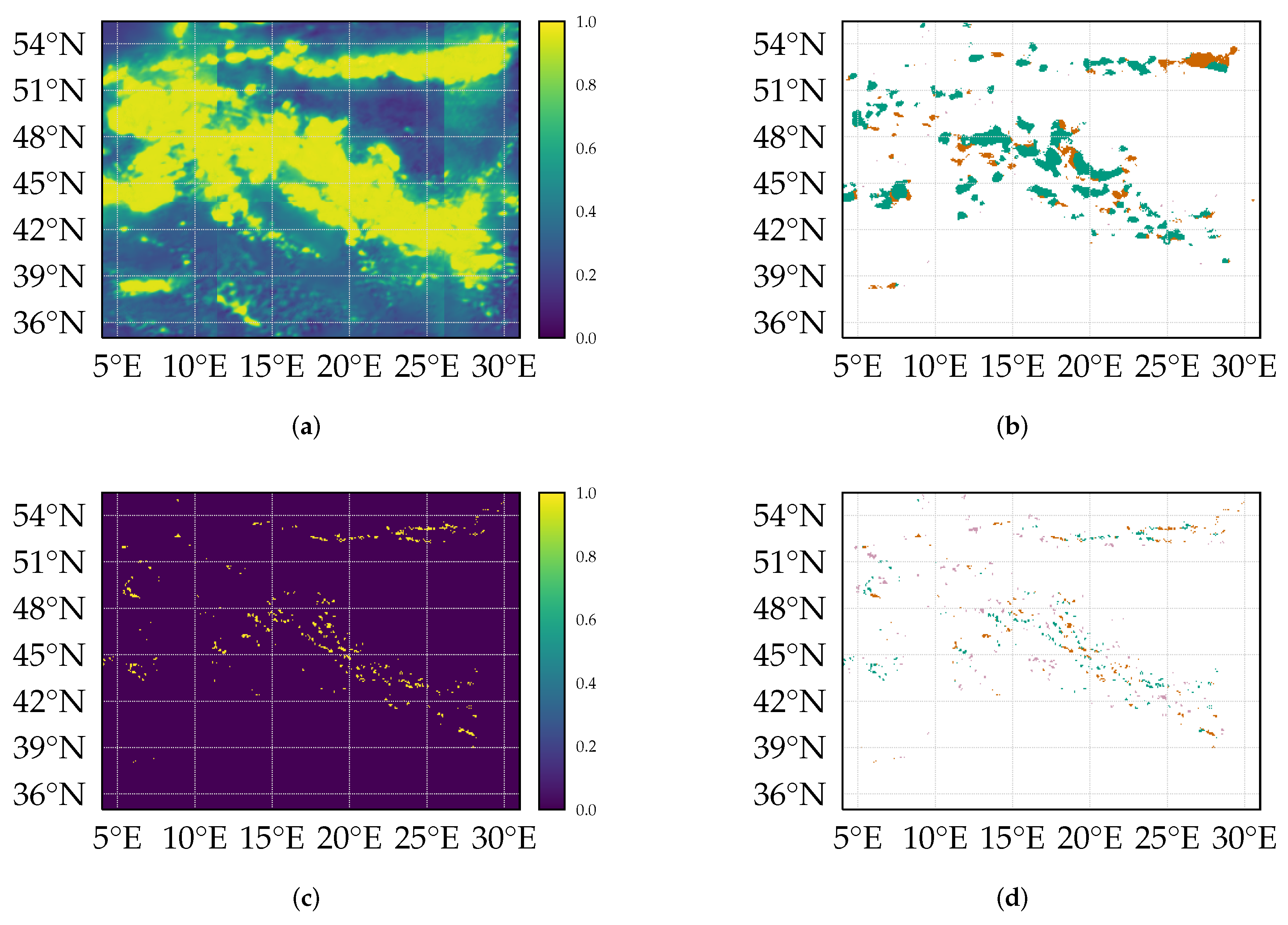

Figure 1b–d show examples of processed satellite imagery of the VIS006 (0.6 µm), WV062 (6.2 µm), and IR120 (12.0 µm) channels on 4 June 2016 at 12:00 UTC, when extensive lightning occurred across Central Europe. The images already indicate that the visible band might be more informative, as the infrared channels only see the cloud tops. However, the patterns in the visible spectrum are complex and not easy to capture with a hand-designed classifier.

Lightning images: Measurements of lightning activity are given as point sets $\mathcal{L} = \{(x_i, y_i, t_i, I_i)\}_{i=1}^{M}$, with two spatial geo-coordinates $x_i, y_i$, a time coordinate $t_i$, and the electrical current $I_i$. The lightning data used in this study were obtained from the LINET lightning detection network [8]. LINET is a very-low/low-frequency (VLF/LF) long-range lightning detection network that uses the time-of-arrival (TOA) method and consists of several ground-based lightning sensors. For further processing, we convert the point set into images with the same spatial and temporal resolution as the satellite images. To this end, we bin all lightning events within an aggregation time span $\Delta t_a$ during which the satellite data have been measured (or, in experiments with varying lead time $\Delta t_l$, offset by the corresponding $\Delta t_l$), and binarize them by setting the closest pixel to 1 (with the background set to 0). To filter out potential noise, only lightning events with an electric current of at least 1 kA were considered. We denote the resulting lightning images by $L_t$.
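A minimal sketch of this binning step, assuming NumPy, a regular 0.05° grid whose upper-left (north-west) pixel centre lies at `(lon0, lat0)`, times in seconds, and a hypothetical 15 min window. Treating the 1 kA threshold as a bound on the absolute value of the current is an assumption, as are the helper name `rasterize_lightning` and all of its parameter names.

```python
import numpy as np


def rasterize_lightning(lon, lat, t, current_ka, *, lon0, lat0, res_deg, shape,
                        t_start, t_span, min_current_ka=1.0):
    """Bin lightning point events into a binary image (hypothetical helper).

    lon0, lat0: centre of the upper-left (north-west) pixel, in degrees.
    res_deg: grid spacing in degrees; shape: (H, W) of the output image.
    Only events with t_start <= t < t_start + t_span and |current| >= min_current_ka
    are kept.
    """
    lon, lat, t, current_ka = [np.asarray(a, dtype=float) for a in (lon, lat, t, current_ka)]
    keep = (t >= t_start) & (t < t_start + t_span) & (np.abs(current_ka) >= min_current_ka)
    # Nearest pixel: row indices grow southwards, column indices grow eastwards.
    rows = np.rint((lat0 - lat[keep]) / res_deg).astype(int)
    cols = np.rint((lon[keep] - lon0) / res_deg).astype(int)
    height, width = shape
    inside = (rows >= 0) & (rows < height) & (cols >= 0) & (cols < width)
    image = np.zeros(shape, dtype=np.uint8)  # background = 0
    image[rows[inside], cols[inside]] = 1     # closest pixel of each kept event = 1
    return image


# Example with a hypothetical 900 s window; the third event is dropped (0.5 kA < 1 kA).
img = rasterize_lightning(
    lon=[10.02, 10.10, 10.50], lat=[50.0, 49.9, 49.0],
    t=[0.0, 300.0, 60.0], current_ka=[5.0, -12.0, 0.5],
    lon0=10.0, lat0=50.0, res_deg=0.05, shape=(4, 4),
    t_start=0.0, t_span=900.0,
)
```

Setting a single pixel per event keeps the image binary regardless of how many strokes fall into the same cell, which matches the binarization described above.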

Figure 1a shows an example of a processed lightning image for the same example date (4 June 2016, 12 h UTC).

The region of interest (ROI) in our work is a latitude/longitude box in the mid-latitudes of Central Europe. The data are cropped to the defined ROI and split into smaller patches according to Appendix A.3. We focus our work on the months May to August of the years 2016 and 2017. As an additional test data set, we use data from August and September 2021. Detailed information about the data and their use is given in Table A1 and Table A2 for 2016/2017 and 2021, respectively. The data are processed using multiple tools, such as pyPublicDecompWT [37], SatPy [38], Pyresample [39], and Cartopy [40].

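The learning task can now be posed as a probabilistic prediction of a future lightning image: Let $t_0$ denote the current time, $\Delta t_l$ the lead time for the prediction, $\Delta t_s$ the temporal sample spacing, $\Delta t_a$ the aggregation time span, and $k$ the number of past satellite images used for the prediction. With this information, we want to determine a probabilistic classifier $f$ that computes a probability map specifying, for each pixel, the probability of a lightning event being marked in the future lightning image:

$$\big[f\big(S_{t_0-(k-1)\Delta t_s}, \ldots, S_{t_0},\, L_{t_0}\big)\big]_{ij} \approx P\big([L_{t_0+\Delta t_l}]_{ij} = 1\big),$$

where $S_t$ and $L_t$ denote the satellite images (all channels) and the lightning image at time $t$, and $[\cdot]_{ij}$ refers to the pixel at position $(i, j)$.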
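To make the indexing concrete, the following sketch lists which images enter one training sample. It assumes that the $k$ satellite images are spaced by $\Delta t_s$ and end at $t_0$, that the lightning map at $t_0$ is used as input, and that the target window starts at $t_0 + \Delta t_l$ and spans $\Delta t_a$. The helper name `sample_times` and the 15 min values are illustrative assumptions.

```python
from datetime import datetime, timedelta


def sample_times(t0, lead, spacing, aggregation, k):
    """Return input image times (oldest first) and the target lightning window.

    Hypothetical helper: t0 = current time, lead = Δt_l, spacing = Δt_s,
    aggregation = Δt_a, k = number of past satellite images.
    """
    input_times = [t0 - m * spacing for m in range(k - 1, -1, -1)]
    target_start = t0 + lead
    return input_times, (target_start, target_start + aggregation)


inputs, target = sample_times(
    t0=datetime(2016, 6, 4, 12, 0),
    lead=timedelta(minutes=120),
    spacing=timedelta(minutes=15),
    aggregation=timedelta(minutes=15),
    k=4,
)
# inputs: 11:15, 11:30, 11:45, 12:00; target window: [14:00, 14:15)
```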

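This problem is solved by representing the function $f = f_\theta$ by a deep neural network with parameters $\theta$. Learning is performed using maximum likelihood: We assume that we are given a set of $N$ training samples, each consisting of an input $X^{(n)}$ and a target lightning image $l^{(n)}$ with pixel dimensions $H \times W$, and determine a good-fitting $\hat{\theta}$ as a (local) maximum of the likelihood function with L1 regularization, written here as the minimization of the regularized, weighted negative log-likelihood:

$$\hat{\theta} = \arg\min_{\theta} \; \sum_{n=1}^{N} \sum_{i=1}^{H} \sum_{j=1}^{W} \big[w^{(n)}\big]_{ij}\, \ell^{(n)}_{ij}(\theta) \; + \; \lambda \lVert \theta \rVert_1, \tag{3}$$

with

$$\ell^{(n)}_{ij}(\theta) = -\Big(\big[l^{(n)}\big]_{ij} \log \big[f_\theta(X^{(n)})\big]_{ij} + \big(1 - \big[l^{(n)}\big]_{ij}\big) \log\big(1 - \big[f_\theta(X^{(n)})\big]_{ij}\big)\Big). \tag{4}$$

Here, the operator $[\cdot]_{ij}$ refers to the pixel at position $(i, j)$ in the respective images; $w^{(n)}$ denotes a per-pixel class weight based on the prediction skill of the sample; and the L1 regularization is scaled by the factor $\lambda$.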
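A minimal NumPy sketch of this objective in its summed form (per-pixel weighted binary cross entropy plus an L1 penalty on the parameters). The function name, the clipping constant `eps`, and passing the parameters as a list of arrays are assumptions; the text states that during training the loss values are averaged over all pixels and samples in a batch, which only rescales the data term.

```python
import numpy as np


def regularized_weighted_ce(probs, labels, weights, params, l1_factor, eps=1e-7):
    """Sum of per-pixel weighted binary cross entropy plus lambda * ||theta||_1.

    probs, labels, weights: arrays of shape (N, H, W) holding predicted
    probabilities, binary targets, and per-pixel weights.
    params: list of parameter arrays (theta); l1_factor: lambda.
    """
    p = np.clip(probs, eps, 1.0 - eps)  # guard against log(0)
    ce = -(labels * np.log(p) + (1.0 - labels) * np.log(1.0 - p))
    data_term = float(np.sum(weights * ce))
    l1_term = l1_factor * sum(float(np.abs(theta).sum()) for theta in params)
    return data_term + l1_term


loss = regularized_weighted_ce(
    probs=np.array([[[0.5, 0.9]]]), labels=np.array([[[1.0, 0.0]]]),
    weights=np.array([[[2.0, 1.0]]]), params=[np.array([1.0, -2.0])], l1_factor=0.1,
)
# 2 * ln 2 (weighted hit) + ln 10 (confident false alarm) + 0.1 * 3 (L1 penalty)
```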

The main issue with the cross entropy (CE)-based loss function shown in Equation (4) is the naive (unweighted) summation over all pixels, regardless of their class. Even when including all pixels within a search radius $r$, the number of pixels “with lightning” is extremely small. Therefore, we add the event-based, per-pixel weight $w$ in Equation (3), which addresses the issue of heavy class imbalance within a sample. This differs from per-sample weighting strategies based on class balance, such as [41]. Per-sample weights work well for known class imbalances, e.g., when data from one class are underrepresented in the data set, by scaling the importance of individual samples. This is only partially useful in our case: Thunderstorms are comparatively rare, but when they occur, lightning activity is reasonably high, spanning multiple pixels in a sample.

In the following, we construct a per-pixel weight map $w$ based on the sample’s class labels $l$, the predictions $p$, and a (fixed) search radius $r$. The construction uses the set $F_r(p, l)$ of pixels classified as false positives given the search radius $r$, the predictions $p$, and the ground-truth labels $l$ of the sample, as well as the modified class weight $\tilde{c}_{\mathrm{l}}$ of the “lightning” class in the data set and its counterpart $\tilde{c}_{\mathrm{nl}}$ for the “no lightning” class. The class weight $c_{\mathrm{cw}}$ of a class “cw” is computed from $n_{\mathrm{cw}}$, the number of instances of that class; a scaling factor keeps the overall loss at a similar magnitude, so that the sum of the weights of all examples stays roughly constant. The values of $\tilde{c}_{\mathrm{l}}$ and $\tilde{c}_{\mathrm{nl}}$ are pre-computed for the whole training split of the data set and are shown in Table 1. Because false positives are taken into account, $w$ adapts to the performance of the classifier $f$. The training split of the data set is detailed in Table A1.
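As an illustration of such a class weight, the sketch below uses the common “balanced” heuristic $c_{\mathrm{cw}} = n / (K \cdot n_{\mathrm{cw}})$, with $n$ the total number of pixels and $K$ the number of classes (the rule scikit-learn applies for `class_weight="balanced"`); the factor $n/K$ makes the weights of all examples sum to exactly $n$. Whether this matches the exact definition and scaling behind Table 1 is an assumption, and the helper name and label counts are hypothetical.

```python
import numpy as np


def balanced_class_weights(labels):
    """Map each class to n / (K * n_cw) (illustrative 'balanced' weighting)."""
    labels = np.asarray(labels).ravel()
    classes, counts = np.unique(labels, return_counts=True)
    weights = labels.size / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))


# Hypothetical label set: 50 lightning pixels among one million.
labels = np.zeros(1_000_000, dtype=np.uint8)
labels[:50] = 1
cw = balanced_class_weights(labels)
# cw[1] == 10000.0 (lightning), cw[0] ≈ 0.500025 (no lightning)
```

The rare class receives a weight roughly 20,000 times larger than the frequent one, which is the imbalance the weighting is meant to counteract.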

Table 1. Computed pixel-weights of the training split for the 2016/2017 data set, based on class-weights shown in Table 2 and Equation (7).


| Class | Pixel-Weight |
|---|---|
| Lightning ($\tilde{c}_{\mathrm{l}}$) | |
| No lightning ($\tilde{c}_{\mathrm{nl}}$) | 5 × 10⁻⁵ |

The search radius $r$ is used to model the label uncertainty introduced by various factors, such as the displacement between the lightning and the notional center of the Cb, the geolocation error of the satellite, and the movement of the cloud [12]. During the computation of $w$, it is used to determine false-positive predictions. The weight map $w$ can be computed efficiently, in parallel, at every training step. During training, the loss values are averaged over all pixels and samples in a batch.
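The sketch below illustrates one plausible reading of this construction under stated assumptions: a predicted pixel counts as a false positive if no lightning occurs within a square window of radius $r$ (Chebyshev distance), lightning pixels and false positives receive the lightning weight $\tilde{c}_{\mathrm{l}}$, and all other pixels receive $\tilde{c}_{\mathrm{nl}}$. The helper names `dilate` and `weight_map`, this assignment rule, and the example weights are hypothetical.

```python
import numpy as np


def dilate(mask, r):
    """Binary dilation of a 2D boolean mask with a (2r+1) x (2r+1) square window."""
    height, width = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.zeros((height, width), dtype=bool)
    for di in range(2 * r + 1):
        for dj in range(2 * r + 1):
            out |= padded[di:di + height, dj:dj + width]
    return out


def weight_map(pred, label, r, c_light, c_nolight):
    """Hypothetical per-pixel weights: lightning and false positives get c_light."""
    pred = np.asarray(pred, dtype=bool)
    label = np.asarray(label, dtype=bool)
    false_pos = pred & ~dilate(label, r)  # predicted, but no lightning within r
    w = np.full(label.shape, c_nolight, dtype=float)
    w[label | false_pos] = c_light
    return w


label = np.zeros((5, 5), dtype=bool)
label[2, 2] = True
pred = np.zeros((5, 5), dtype=bool)
pred[2, 3] = True  # within r = 1 of the event: not a false positive
pred[0, 0] = True  # two pixels away: false positive
w = weight_map(pred, label, r=1, c_light=1.0, c_nolight=5e-5)
```

Because the dilation is a fixed sliding-window maximum, the same computation maps directly onto a max-pooling operation on the GPU, which is consistent with the map being recomputed at every training step.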

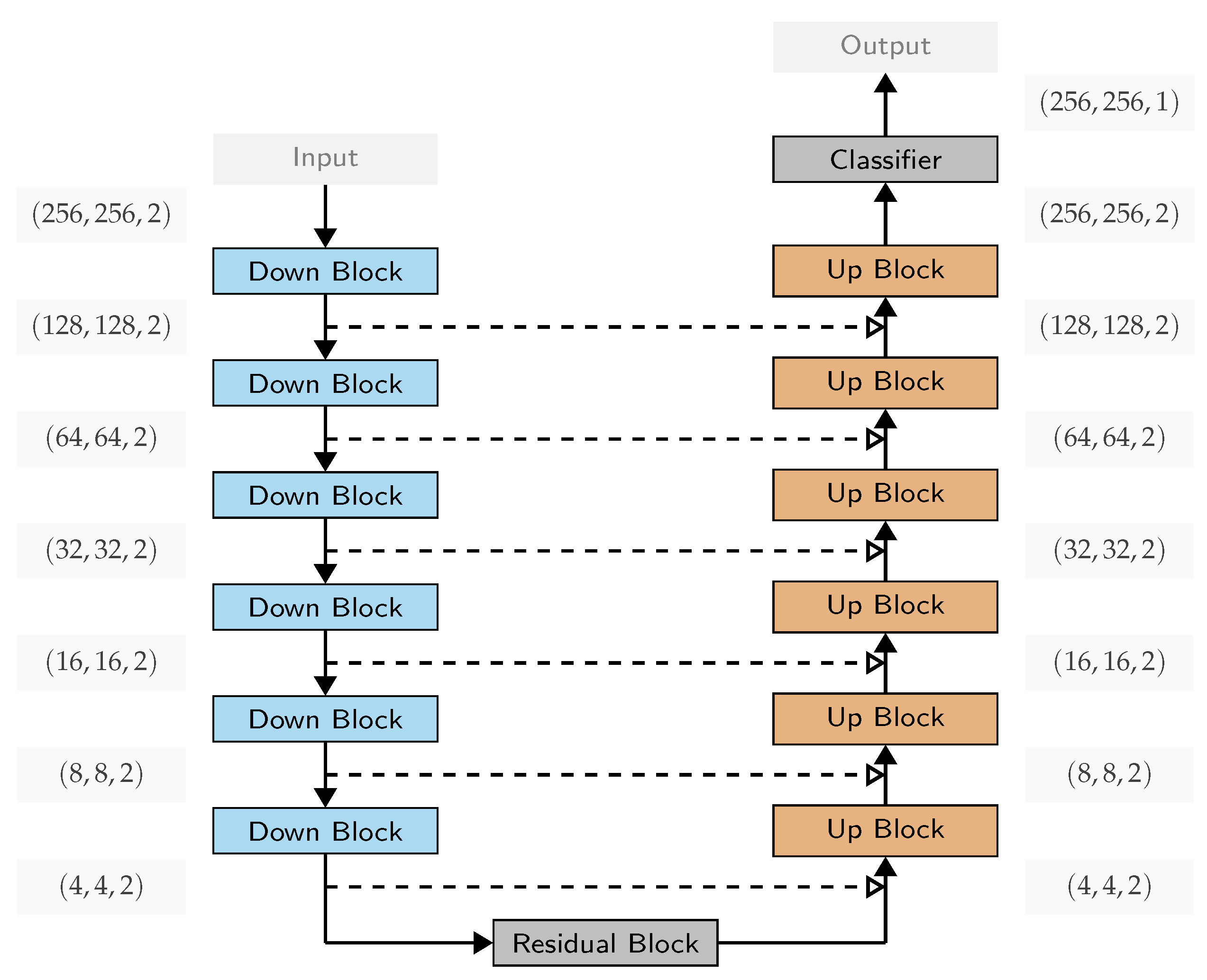

The network $f$ used in our study is based on the U-Net [24] architecture, combined with ResNet-v2 [42] residual blocks and adapted to work with three-dimensional input.

The input to our model is of the form $X \in \mathbb{R}^{B \times H \times W \times T \times C}$, where $B$ is the batch size, $H$ and $W$ are the height and width of an image, $T$ is the number of time frames, and $C$ is the number of channels. We fixed the height and width of our model input to 256 px × 256 px, which equals 12.8° × 12.8°, corresponding to roughly 1425 km in the north–south direction and, at 50°N, roughly 900 km in the east–west direction. Larger input areas are split according to the domain decomposition scheme described in Appendix A.3. Further, each input possesses a boundary region that overlaps with neighboring inputs; it allows for a more precise prediction of the non-overlapping region but is excluded from the optimization process and evaluation.
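The actual decomposition scheme is described in Appendix A.3; as a rough illustration of tiling with an overlapping boundary region, the sketch below cuts a 2D field into 256 × 256 tiles whose outer margin (an assumed 32 px) overlaps neighboring tiles, and stitches only the tile interiors back together. Zero padding at the domain edges and the helper names are assumptions.

```python
import numpy as np


def tile_with_halo(field, tile=256, margin=32):
    """Cut a 2D field into overlapping tiles; each tile's interior is unique."""
    if not 0 <= 2 * margin < tile:
        raise ValueError("margin must satisfy 0 <= 2 * margin < tile")
    core = tile - 2 * margin                                # non-overlapping interior
    height, width = field.shape
    n_rows, n_cols = -(-height // core), -(-width // core)  # ceiling division
    pad = ((margin, n_rows * core - height + margin),
           (margin, n_cols * core - width + margin))
    padded = np.pad(field, pad, mode="constant")            # zeros outside the domain
    tiles = [padded[i * core:i * core + tile, j * core:j * core + tile]
             for i in range(n_rows) for j in range(n_cols)]
    return np.stack(tiles), (n_rows, n_cols)


def stitch(tiles, grid, shape, margin=32):
    """Reassemble a field from tile interiors, discarding the boundary regions."""
    n_rows, n_cols = grid
    tile = tiles.shape[1]
    inner = tiles[:, margin:tile - margin, margin:tile - margin]
    rows = [np.concatenate(list(inner[i * n_cols:(i + 1) * n_cols]), axis=1)
            for i in range(n_rows)]
    return np.concatenate(rows, axis=0)[:shape[0], :shape[1]]


field = np.arange(600 * 500, dtype=float).reshape(600, 500)
tiles, grid = tile_with_halo(field)
restored = stitch(tiles, grid, field.shape)
```

In a prediction setting, each tile would be passed through the network and only the interiors of the predicted tiles stitched, so the boundary region contributes context but never appears in the output.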

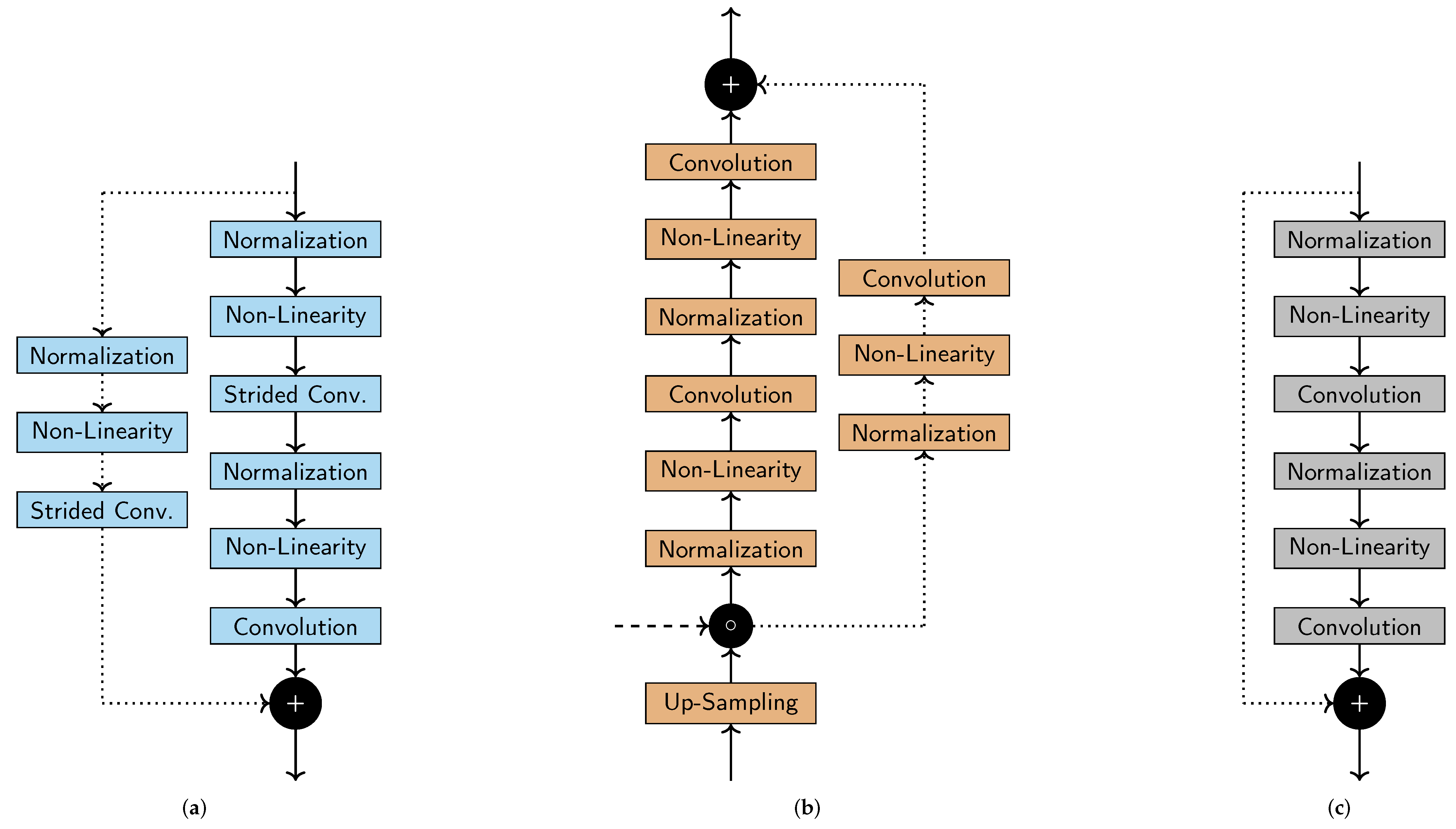

Figure 2 shows an overview of the network architecture used, including the U-Net structure with its skip connections. Deviating from the original architecture, we replace the stacked convolution layers and the pooling layer at each down-/up-sampling step with residual blocks (with stride where necessary). Instead of cropping the feature map of the contracting path, we use the feature map after the down-sampling operator. We use convolutions for down-sampling, but deterministic trilinear up-sampling operations. Instead of batch normalization, we use instance normalization [43], which normalizes each element of the batch independently, i.e., per channel and only across the spatial and time dimensions.
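For illustration, the following NumPy sketch computes instance normalization for the channels-last layout $B \times H \times W \times T \times C$ used above: each sample and channel is normalized over its spatial and temporal axes, so statistics never mix across the batch. It omits the optional learnable scale and shift; PyTorch’s `torch.nn.InstanceNorm3d` computes the same statistics for channels-first `(N, C, D, H, W)` tensors with its defaults `affine=False` and `eps=1e-5`.

```python
import numpy as np


def instance_norm(x, eps=1e-5):
    """Normalize x of shape (B, H, W, T, C) per sample and channel over H, W, T."""
    mean = x.mean(axis=(1, 2, 3), keepdims=True)
    var = x.var(axis=(1, 2, 3), keepdims=True)  # biased variance
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(2, 8, 8, 4, 3))
y = instance_norm(x)
```

Rescaling one sample leaves the normalized output of every other sample unchanged, which is the batch independence that distinguishes this from batch normalization.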

The residual blocks consist of full pre-activation units, optionally paired with pre-activated convolution shortcuts, as described in [23].

Figure 3 illustrates the down-sampling (Figure 3a), up-sampling (Figure 3b), and residual blocks (Figure 3c) used in the network. The convolution layers for up- and down-sampling use a different filter size than the remaining convolution layers. The non-linearities are ReLU [44] activation functions.

As usual, we increase the number of channels per block at coarser spatial scales. Empirically, we found good validation results for a stronger increase, but only for larger lead times; details are shown in Table 3. When growing, the number of channels follows an exponential curve that counters the spatial down- and up-sampling per block in the U-Net, which restrains the memory use for weights and activations. The resulting network can be trained on consumer graphics cards with reasonable batch sizes. Together with the training settings, the expressivity of the neural network is adapted to “match” the complexity of the modeled problem.
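A back-of-the-envelope sketch of why exponential channel growth keeps memory in check. It assumes a hypothetical base width of 32 channels, a growth factor of 2 per level, and down-sampling that halves only the two spatial axes per level (the time axis is kept fixed in this sketch); the widths actually used are listed in Table 3.

```python
def channels_per_level(base, growth, levels):
    """Channel count per U-Net level, growing exponentially with depth."""
    return [int(round(base * growth ** level)) for level in range(levels)]


def activation_elements(height, width, frames, channels):
    """Activation size per level if only H and W are halved at each level."""
    return [(height >> level) * (width >> level) * frames * c
            for level, c in enumerate(channels)]


widths = channels_per_level(base=32, growth=2, levels=5)
sizes = activation_elements(256, 256, frames=4, channels=widths)
# widths: [32, 64, 128, 256, 512]; each level holds half the activations of the previous one
```

With a growth factor of 4, the per-level activation size would instead stay constant, which illustrates the memory cost of a stronger increase in channels.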

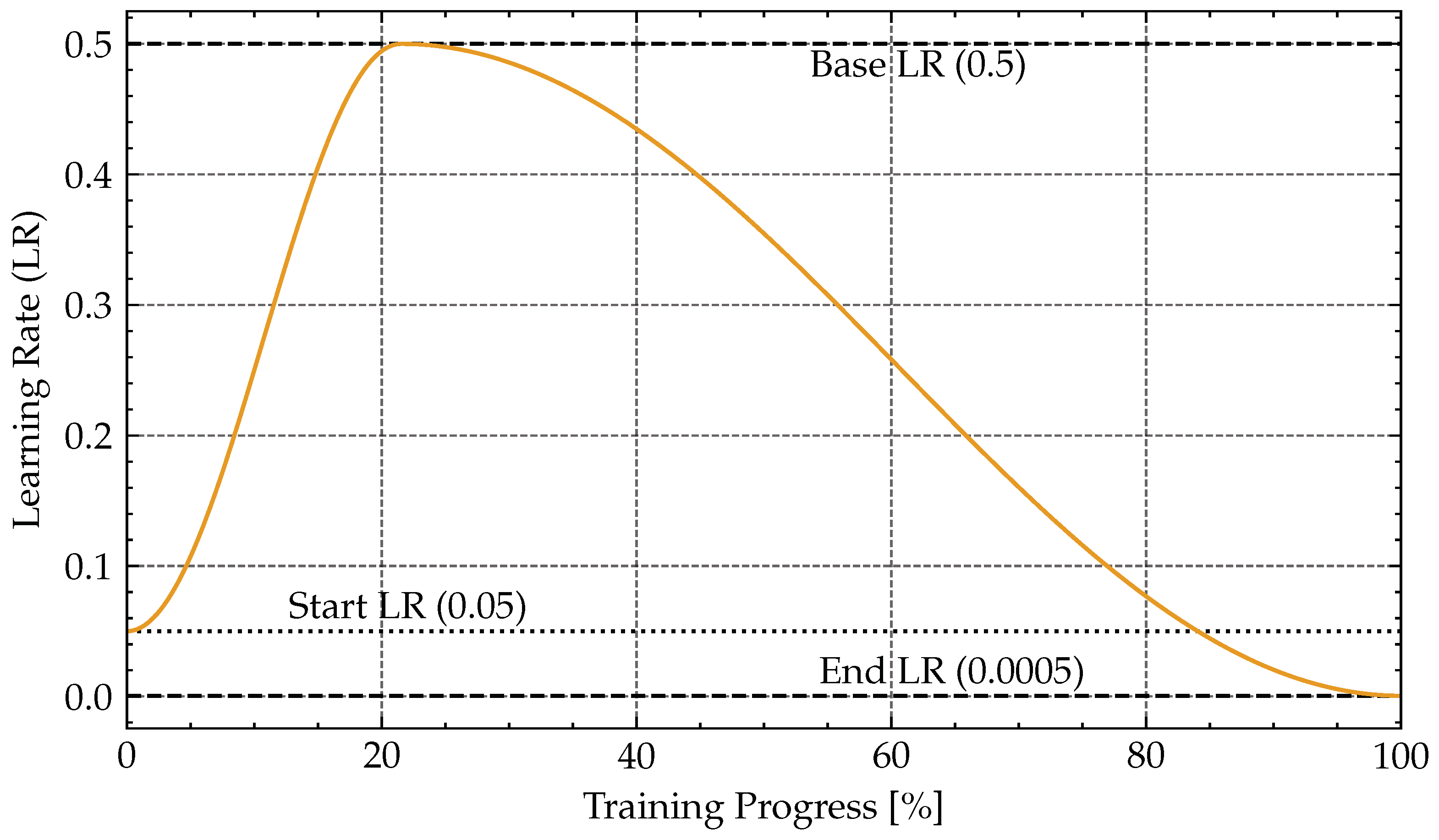

Our model is trained using stochastic gradient descent (SGD) with momentum [45] and decoupled weight decay (SGDW) [46] to optimize the loss function described in Equation (3). The base learning rate (LR) is fixed, whereas the weight decay (WD) and the L1 regularization are set based on the number of channels of the network, as shown in Table 4. We use a batch size of 64 and train the network for 14 epochs. In each epoch, the network trains on a random permutation of the complete training data set. At the end of each epoch, we validate the network’s performance on the validation data set and save its parameters to disk. Every 8th batch, we adapt the decision threshold based on the current best performance. During training, the LR is scheduled with a 1-cycle LR scheduler [47] (two-phase, cosine decay). The learning rate schedule is discussed in more detail in Appendix A.5 and depicted in Figure A2. In addition to using our custom weighted loss function, we discard samples without lightning activity during training.
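The exact schedule is given in Appendix A.5. As a sketch of a two-phase 1-cycle schedule with cosine annealing, the function below warms up from `max_lr / div_factor` to `max_lr` over the first `pct_start` fraction of the steps and then anneals to a much smaller final rate. It is modeled on the two-phase variant of PyTorch’s `torch.optim.lr_scheduler.OneCycleLR` (whose defaults are `pct_start=0.3`, `div_factor=25.0`, `final_div_factor=1e4`), but the peak rate and the 1000 batches per epoch are assumptions, not the paper’s settings.

```python
import math


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3,
                 div_factor=25.0, final_div_factor=1e4):
    """Learning rate at a given step of a two-phase cosine 1-cycle schedule."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    peak_step = pct_start * total_steps - 1  # last step of the warm-up phase
    if peak_step <= 0:
        raise ValueError("pct_start * total_steps must exceed 1")

    def cos_anneal(start, end, frac):
        # Cosine interpolation from start (frac = 0) to end (frac = 1).
        return end + (start - end) / 2.0 * (math.cos(math.pi * frac) + 1.0)

    if step <= peak_step:
        return cos_anneal(initial_lr, max_lr, step / peak_step)
    return cos_anneal(max_lr, min_lr, (step - peak_step) / (total_steps - 1 - peak_step))


# Hypothetical setting: 14 epochs with 1000 batches each and a peak LR of 0.1.
total = 14 * 1000
lrs = [one_cycle_lr(s, total, max_lr=0.1) for s in range(total)]
```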

All hyperparameters (of all experiments) were tuned based on the data given in Table A1. Our method uses the following input features: the SEVIRI channels VIS 0.6, VIS 0.8, nIR 1.6, IR 3.9, WV 6.2, WV 7.3, IR 8.7, IR 9.7, IR 10.8, IR 12.0, and IR 13.4, and the most recent lightning events from LINET.

We use PyTorch [48] for all of our experiments and train on a single machine equipped with an Intel Core i7-8700 processor and a single NVIDIA TITAN RTX GPU. A reference implementation is provided under a free license.

3. Results

We evaluate our method on the test data set aside from the original 2016/17 data set (Table A1 in Appendix A.1), as well as on test data from August and September 2021, for which comparison data from a nowcasting method [14] at the DWD were available. In line with good experimental practice, all hyperparameter tuning was concluded on validation data before any inference on test data was performed (with frozen, final hyperparameter settings). In our case in particular, where substantial hyperparameter tuning was required, this protocol minimizes the risk of reporting a coincidental success. Further, because independent random initialization and training of the network always lead to some spread in the results, training and testing were performed five times, and we report the mean and standard deviation.

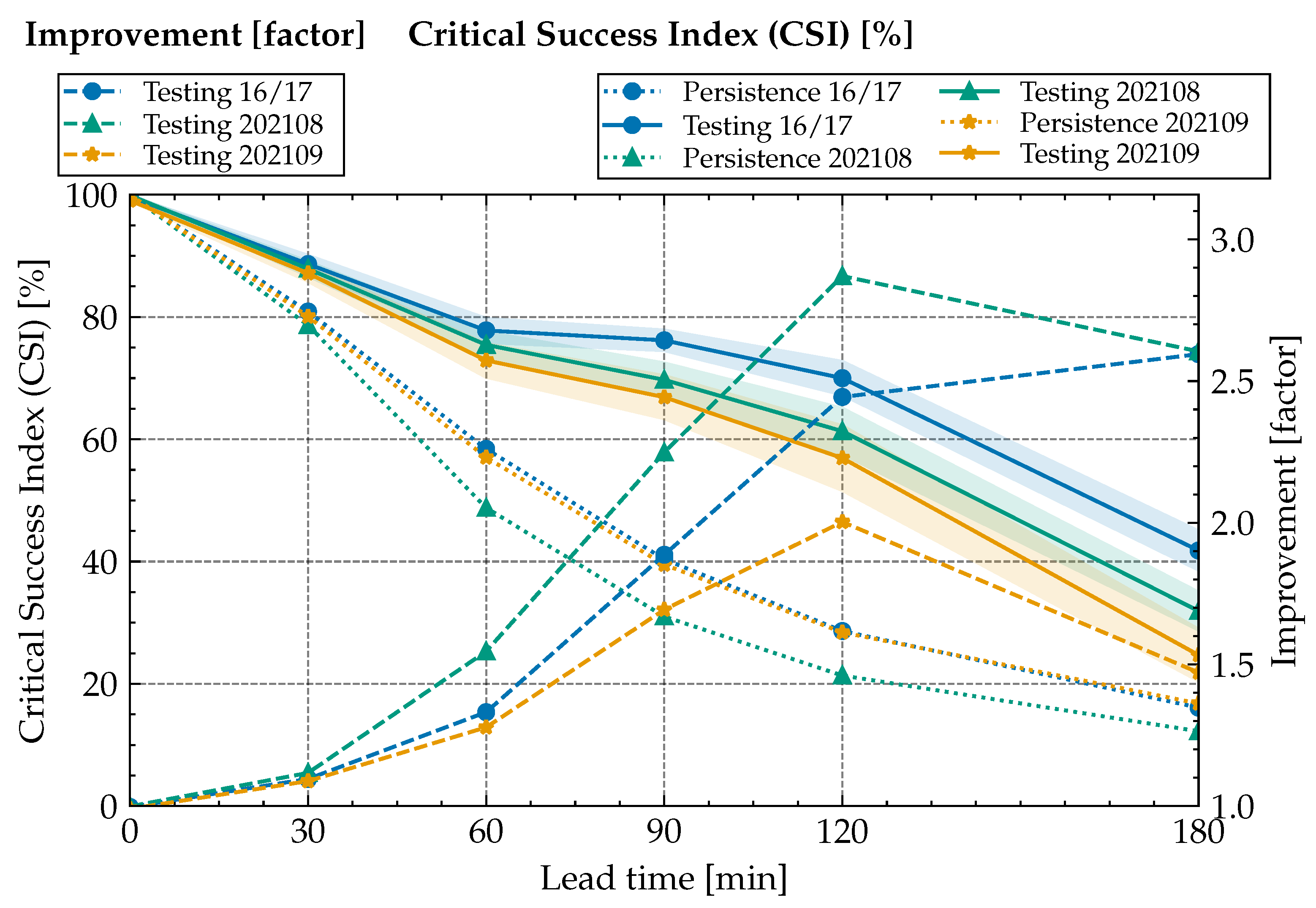

We report the critical success index (CSI) (see Appendix A.2 for details) of the deep network and of a base-line “persistence” method, which simply assumes that the most recent lightning events at the start of the prediction period persist at the same location indefinitely. We also compute an “improvement factor” that measures the factor by which the deep network outperforms the base-line persistence model in terms of CSI. As discussed in more detail in Appendix A.4, this makes it easier to compare against other methods while avoiding misjudgements due to small deviations in the evaluation protocols for the corresponding CSIs.
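For reference, the standard CSI is hits / (hits + misses + false alarms). The sketch below computes it pixel-wise on binary maps and derives the improvement factor as the ratio of the model’s CSI to the persistence CSI. The search-radius handling of Appendix A.2 is not modeled, and the tiny arrays are made up for illustration.

```python
import numpy as np


def csi(pred, truth):
    """Critical success index: hits / (hits + misses + false alarms)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    hits = int(np.sum(pred & truth))
    misses = int(np.sum(~pred & truth))
    false_alarms = int(np.sum(pred & ~truth))
    denom = hits + misses + false_alarms
    return hits / denom if denom else float("nan")  # undefined without any events


truth = np.array([[1, 1, 0], [0, 0, 0], [0, 0, 1]])        # future lightning
model = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1]])        # network prediction
persistence = np.array([[1, 0, 0], [0, 0, 0], [0, 0, 0]])  # last observed events

improvement = csi(model, truth) / csi(persistence, truth)
# csi(model, truth) == 0.5, csi(persistence, truth) ≈ 1/3, improvement ≈ 1.5
```

Since the correct rejections never enter the formula, the CSI is not inflated by the large number of lightning-free pixels, unlike plain accuracy.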

3.1. Classifier Performance and Base-Line Comparison

As a first sanity check, for zero lead time, the network comes very close to the performance of the base-line, i.e., it essentially matches persistence when tasked with just predicting the last known events, which means that the network has successfully learned to base its decisions solely on the lightning data in this setting.

With growing lead time, the results diverge from the base-line, with substantial advantages for the learning-based model. We obtain a CSI of about 57–80% at 120 min and 25–42% at 180 min lead time (depending on the data set), corresponding to an improvement factor of ≥2.0 at 2 h and ≥1.5 at 3 h lead time.

This can be interpreted as a good outcome: Usually, a fixed CSI threshold is used to decide whether a forecast provides a useful prediction, and the CSI values obtained here are well above this threshold for all testing periods up to a lead time of 120 min, demonstrating the quality and potential practical relevance of the classifier obtained from statistical machine learning.

Notably, our model transferred to unseen data from September 2021, although neither that year nor that month was part of the training or validation data set. As expected, the test results for this period show a decline in performance, but they are still above the CSI usefulness threshold for a 120 min lead time. In general, results on the 2021 data are slightly worse than on the test data from the corresponding months of 2016/17. We believe that the close temporal proximity of the 2016/17 test data to the training data is likely responsible for this: While we reduce direct data leakage (capturing the same convective cell) by maintaining a 12 h gap between adjacent weeks, the statistical similarity of the overall meteorological conditions appears to be a plausible explanation. The larger drop for September 2021 is consistent with this hypothesis, as the atmospheric conditions in September generally differ from those of May to August, which are used for training. As shown in Table 5, the performance on validation data is not systematically better than the test performance, indicating that the hyperparameter choice has most likely not been overly specific to the training and validation data used for method development.

3.2. Prediction Structure

To provide an impression of the outputs produced by the network, Figure 5 shows the model logits (inputs to the softmax layer that yields normalized probabilities) and skill (true/false positives and negatives per pixel) for the previously used example of a severe weather event on 4 June 2016 at 12:00 UTC [49]. It shows how the predicted lightning map substantially reduces false negatives and false positives compared with base-line persistence. Visually, one can see that the network automatically enlarges the predictions to match the search radius, but our formulation of the adaptively weighted loss (Equation (6)) does not lead to “overblown” predictions, thereby reducing false positives (a naive dilation operation on all input and output data to model the search radius would lead to smudged, unsharp predictions in the border regions and could seriously harm false-positive rates).

Further details of the results are presented and discussed in the following subsections.

3.3. Comparison against Physical Nowcasting Method

The DWD is currently working on improving the nowcasting applied within its 24/7 nowcasting method, discussed in detail in [14]. In order to obtain a first hint of the possible improvements from a machine-learning-based method, the improvement factor over persistence was calculated for the 120 min prediction for both approaches. Due to a different lead time reference point, a 120 min lead time in our work corresponds to a different nominal lead time in the physical nowcasting method. The validation method has been adapted as far as possible for this comparison. Nevertheless, it is not a direct comparison and hence only provides an indicator for possible improvements using the method presented in this manuscript. The authors of [14] state a CSI for the persistence algorithm that is consistent with the values reported in our work (see Table A3). The CSI they state for the physical nowcasting method yields an improvement factor that is clearly below the values achieved by our learned classifier. This is not proof, but a strong indicator that the developed method is able to exploit hidden information and to improve the prediction of Cbs in the time frame of 0–3 h.

3.4. Feature Attribution

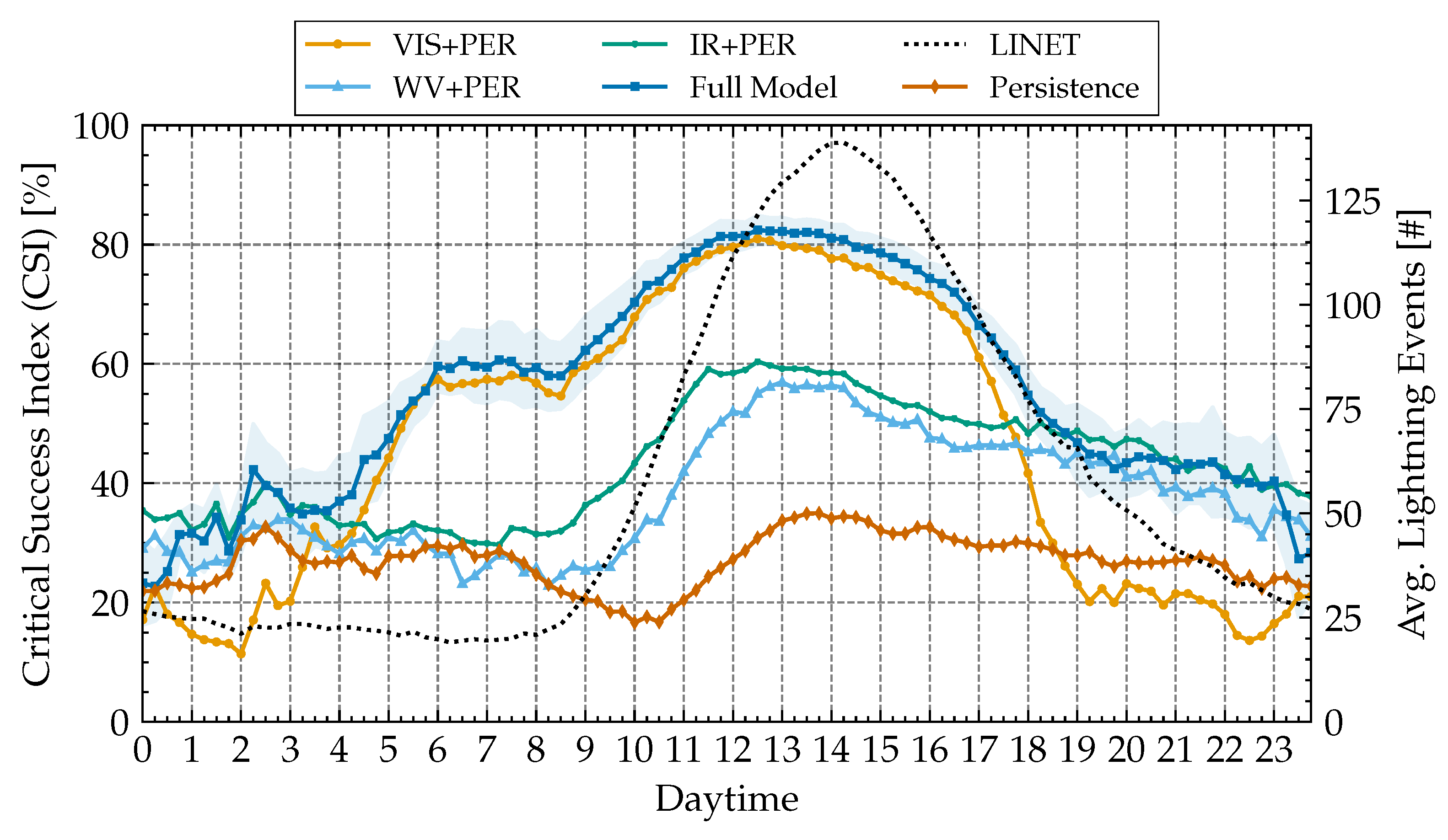

Having observed that our classifier is able to make statistically strong predictions (in comparison to alternative methods), we ask which “features” (input channels) are most informative for the decision.

Figure 6 shows the result of training the classifier again, but with some inputs omitted. The graph shows the CSI over the course of the day, with an overlay of the average number of lightning events recorded by LINET (dotted black curve) at these times of day (clearly peaking in the afternoon UTC, which is late afternoon local time in the observed region). The time on the $x$-axis denotes the prediction time and corresponds to the number of lightning events shown (the actual data used for the prediction are taken 2 h prior).
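The ablation amounts to retraining with a subset of the channel axis of the $B \times H \times W \times T \times C$ input. The sketch below shows one way to select such subsets. The channel order, the names (styled after VIS006/WV062/IR120), the grouping of IR 3.9 to IR 13.4 without the WV bands into the IR subset, and keeping the lightning map in every subset are all assumptions.

```python
import numpy as np

# Hypothetical channel order of the input tensor (last axis).
CHANNELS = ["VIS006", "VIS008", "IR016", "IR039", "WV062", "WV073", "IR087",
            "IR097", "IR108", "IR120", "IR134", "LIGHTNING"]

SUBSETS = {
    "full": CHANNELS,
    "vis": ["VIS006", "VIS008", "IR016", "LIGHTNING"],
    "ir": ["IR039", "IR087", "IR097", "IR108", "IR120", "IR134", "LIGHTNING"],
    "wv": ["WV062", "WV073", "LIGHTNING"],
}


def select_channels(x, names, keep):
    """Return x restricted to the named channels along the last (C) axis."""
    idx = [names.index(name) for name in keep]
    return x[..., idx]


# Dummy input whose channel values equal their index, to make the selection visible.
x = np.broadcast_to(np.arange(len(CHANNELS)), (1, 4, 4, 2, len(CHANNELS)))
x_vis = select_channels(x, CHANNELS, SUBSETS["vis"])
```

Each restricted model would then be trained from scratch on its subset, so differences in CSI reflect what the network can learn from those channels rather than a post hoc attribution on the full model.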

The full classifier model (dark blue curve) shows a markedly lower CSI at night than during the day: Performance improves with sunrise and drops again in the evening; the increase in performance during 4–6 am UTC coincides with the beginning of dawn in Central Europe (local daylight-saving time being offset by +2 h) in the relevant time frame of May to August. As more daylight becomes available closer to the start of summer, visible information becomes available earlier in the day, which could explain the gradual transition in accuracy. Similarly, accuracy declines towards the end of the day.

A second phenomenon, apparently superimposed on the hypothesized daylight effect, is a further increase in CSI between 9 and 16 h UTC, peaking around noon (notably, at about 80% CSI). This corresponds to the time when most LINET events are recorded. Apparently, prediction becomes easier when events are more common, which is statistically plausible and would be expected.

The hypothesis that the network bases its decision mostly on information in the visible spectrum is supported by the CSI curves obtained when using only images from the visible spectrum (VIS/light orange) versus only various infrared channels (light blue/green): During daylight, the model using only visible features drops only slightly in performance compared to the full model (often within the margin of error of the full model), while the infrared models perform substantially worse. Only at night does the prediction benefit from IR information (with visible-only falling behind), although at an overall poorer level of performance. Surprisingly, the water vapor bands alone yield slightly worse performance than the other IR channels.

The comparison against base-line persistence shows occasional drops below the base-line for the restricted models, which might be explained by training noise (with variance increasing at night, when event counts are low); this also suggests not over-interpreting smaller differences (WV vs. IR), but the gap to visible light appears very large and is correspondingly very unlikely to be coincidental.

In summary, we observe that visible light has by far the largest contribution to classification performance, indicating that image recognition in the visible spectrum plays a major role. When visible light is available, the model performs very well, at CSI levels of 60–80%, which might have some operational relevance for daytime activities. Infrared imagery appears to be much less informative to our classifier: With it, the classifier sustains only a generally lower prediction performance, albeit consistently during day and night. The water vapor channels alone, which are a target of physically motivated, “hand-crafted” models [12], lead to overall worse prediction results than the visible or other infrared bands.