Dense Oil Tank Detection and Classification via YOLOX-TR Network in Large-Scale SAR Images

Abstract

1. Introduction

- (1) To enhance the representation of feature maps and focus on the regions of interest around oil tanks, a Transformer encoder is integrated into YOLOX-TR, which improves the localization accuracy of oil tanks in high-density areas.

- (2) To strengthen the extraction of discriminative features between the two types of multiscale oil tanks, YOLOX-TR uses structurally reparameterized VGG-like (RepVGG) blocks to reparameterize the backbone with multi-branch topologies without increasing computation at inference time, which helps distinguish the two tank types and improves classification accuracy.

- (3) To realize automatic end-to-end detection in large-scale SAR images, a slicing detection module based on sliding-window detection and non-maximum suppression (NMS) is applied to the detection layer of YOLOX-TR, which facilitates deployment of the model in practical applications.
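The slicing detection idea in contribution (3) can be illustrated with a minimal sketch: the large scene is cut into overlapping windows, each window is passed to the detector, the boxes are shifted back into scene coordinates, and duplicates from overlapping windows are removed with greedy per-class NMS. The window size, stride, IoU threshold, and the `detect_tile` callback are assumptions made for illustration; they are not values or interfaces taken from the paper.

```python
from typing import Callable, List, Tuple

# (x1, y1, x2, y2, score, class_id); this layout is an assumption of the sketch.
Box = Tuple[float, float, float, float, float, int]


def window_origins(size: int, window: int, stride: int) -> List[int]:
    """Top-left offsets of windows that together cover [0, size)."""
    if size <= window:
        return [0]
    origins = list(range(0, size - window + 1, stride))
    if origins[-1] != size - window:
        origins.append(size - window)  # last window sits flush with the border
    return origins


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], iou_thr: float = 0.5) -> List[Box]:
    """Greedy NMS: keep the highest-scoring box, drop same-class overlaps."""
    kept: List[Box] = []
    for cand in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(cand[5] != k[5] or iou(cand, k) <= iou_thr for k in kept):
            kept.append(cand)
    return kept


def detect_large_image(
    width: int,
    height: int,
    detect_tile: Callable[[int, int, int], List[Box]],  # hypothetical detector hook
    window: int = 640,   # assumed tile size
    stride: int = 512,   # assumed stride (128-pixel overlap)
    iou_thr: float = 0.5,
) -> List[Box]:
    """Run the detector on every window and merge results in scene coordinates."""
    merged: List[Box] = []
    for oy in window_origins(height, window, stride):
        for ox in window_origins(width, window, stride):
            for x1, y1, x2, y2, score, cls in detect_tile(ox, oy, window):
                merged.append((x1 + ox, y1 + oy, x2 + ox, y2 + oy, score, cls))
    return nms(merged, iou_thr)
```

The overlap between neighbouring windows means a tank near a tile border is seen whole in at least one window, provided the overlap exceeds the tank's extent; the final NMS pass then collapses the repeated detections into one box.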

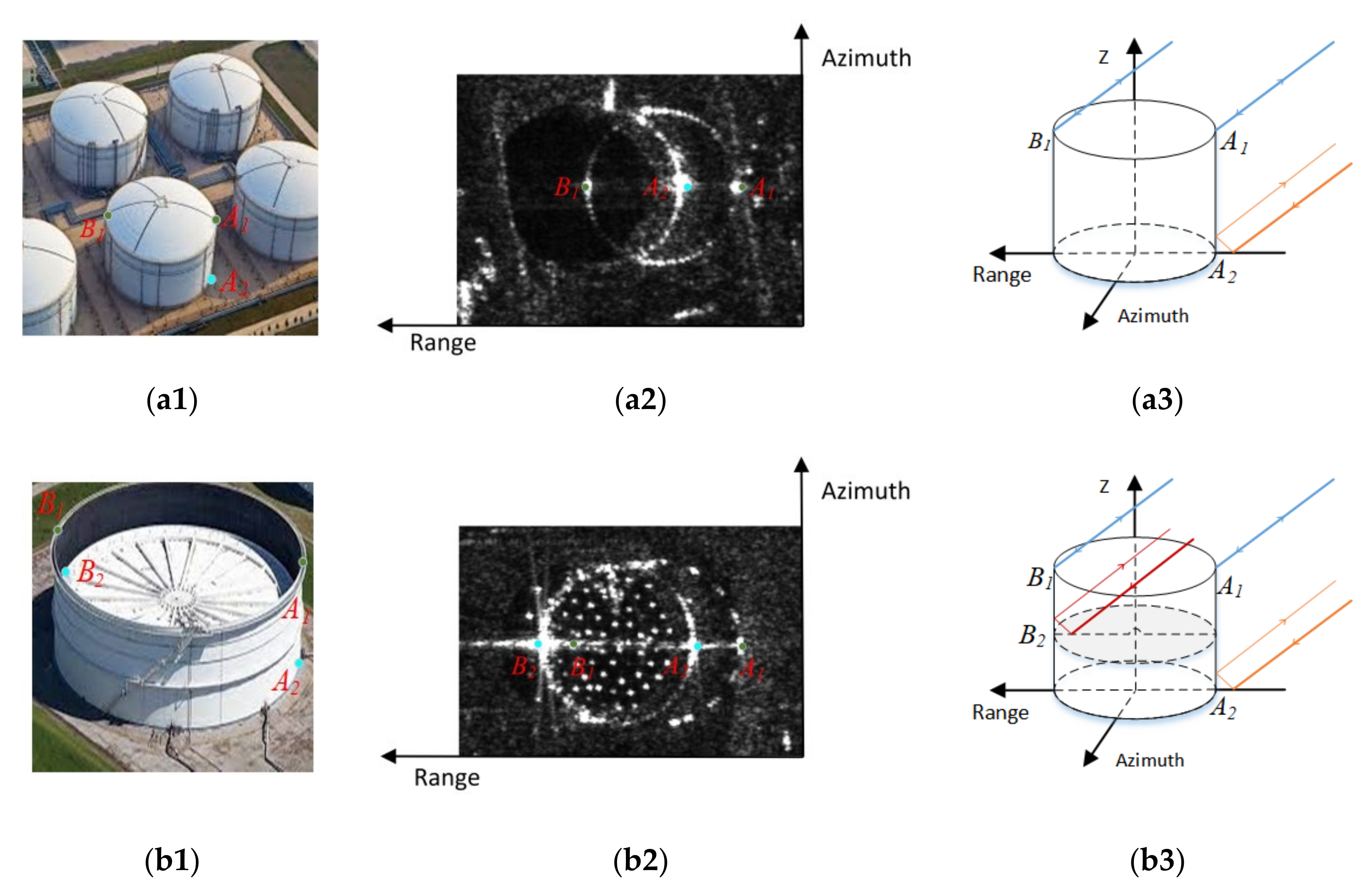

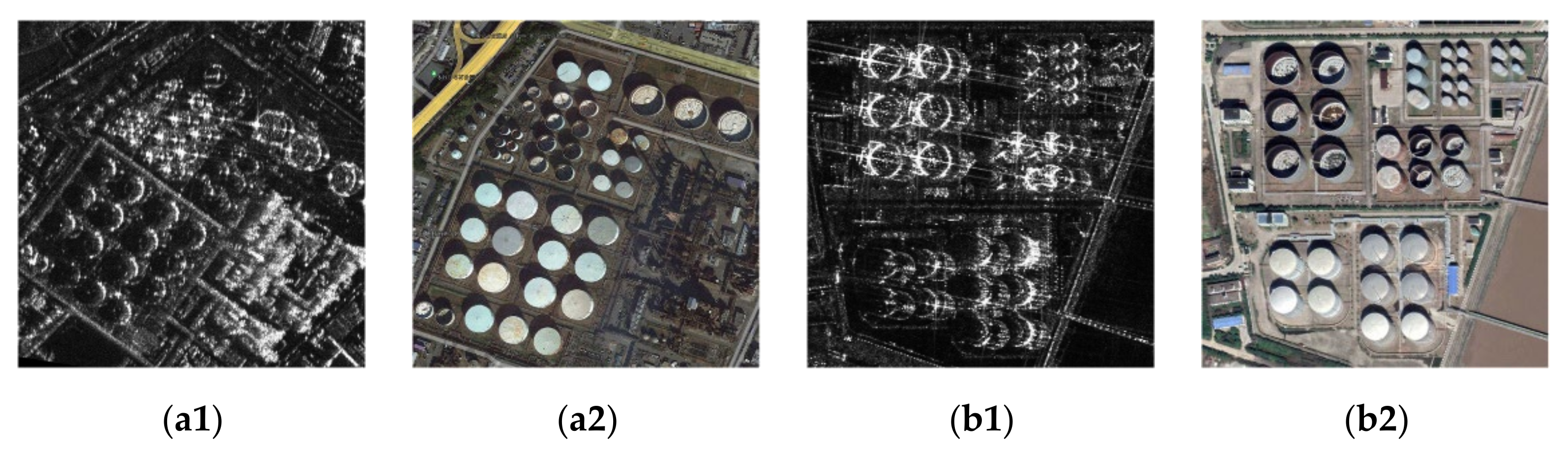

2. Materials and Methods

2.1. Dataset Construction

2.2. Construction of the YOLOX-TR Model

2.2.1. Overview of YOLOX-TR

2.2.2. Transformer Encoder
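As a rough illustration of how a Transformer encoder can operate on a convolutional feature map, the sketch below flattens a `C x H x W` map into `H*W` tokens and applies single-head scaled dot-product self-attention, so that every spatial position can attend to every other. This is only the attention core: a standard encoder layer additionally uses multiple heads, residual connections, layer normalization, and a feed-forward sub-layer. The projection matrices and helper names are assumptions of this sketch, and the configuration used in YOLOX-TR is not reproduced here.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def flatten_feature_map(fmap: List[Matrix]) -> Matrix:
    """Turn fmap[c][y][x] into a token sequence of shape (H*W, C)."""
    channels, height, width = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [
        [fmap[c][y][x] for c in range(channels)]
        for y in range(height)
        for x in range(width)
    ]


def self_attention(
    tokens: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention.

    Returns the attended tokens and the (H*W x H*W) attention weights.
    """
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights
```

Each row of the returned weight matrix sums to one and describes how strongly a given spatial position draws on every other position, which is the mechanism that lets the network relate densely packed tanks to their wider context.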

2.2.3. Reparameterized Backbone RepCSP
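The reason structural reparameterization adds no inference cost can be shown with a small single-channel sketch: a training-time block with a 3x3 branch, a 1x1 branch, and an identity shortcut is linear, so the three branches can be summed into one 3x3 kernel. The sketch deliberately omits the batch normalization that RepVGG folds into each branch before fusion, uses one channel only, and all function names are hypothetical; it is not the RepCSP implementation from the paper.

```python
from typing import List

Grid = List[List[float]]


def conv3x3(image: Grid, kernel: Grid) -> Grid:
    """Single-channel 3x3 cross-correlation with zero padding ('same' size)."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy + 1][dx + 1] * image[yy][xx]
            out[y][x] = acc
    return out


def multi_branch(image: Grid, k3: Grid, k1: float) -> Grid:
    """Training-time form: 3x3 branch + 1x1 branch + identity shortcut."""
    h, w = len(image), len(image[0])
    y3 = conv3x3(image, k3)
    return [
        [y3[y][x] + k1 * image[y][x] + image[y][x] for x in range(w)]
        for y in range(h)
    ]


def fuse_branches(k3: Grid, k1: float) -> Grid:
    """Inference-time form: fold the 1x1 and identity branches into the 3x3 kernel.

    A 1x1 kernel equals a 3x3 kernel that is zero except at its centre,
    and the identity equals a 3x3 kernel with 1 at the centre.
    """
    fused = [row[:] for row in k3]
    fused[1][1] += k1 + 1.0
    return fused
```

Applying `conv3x3(image, fuse_branches(k3, k1))` gives the same output as `multi_branch(image, k3, k1)` up to floating-point rounding, while executing a single convolution instead of three branches.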

3. Experiments and Results

3.1. Dataset and Setting

3.2. Implementation Details

3.3. Evaluation Metric

3.4. Ablation Experiments

3.5. Comparison with Other Detectors

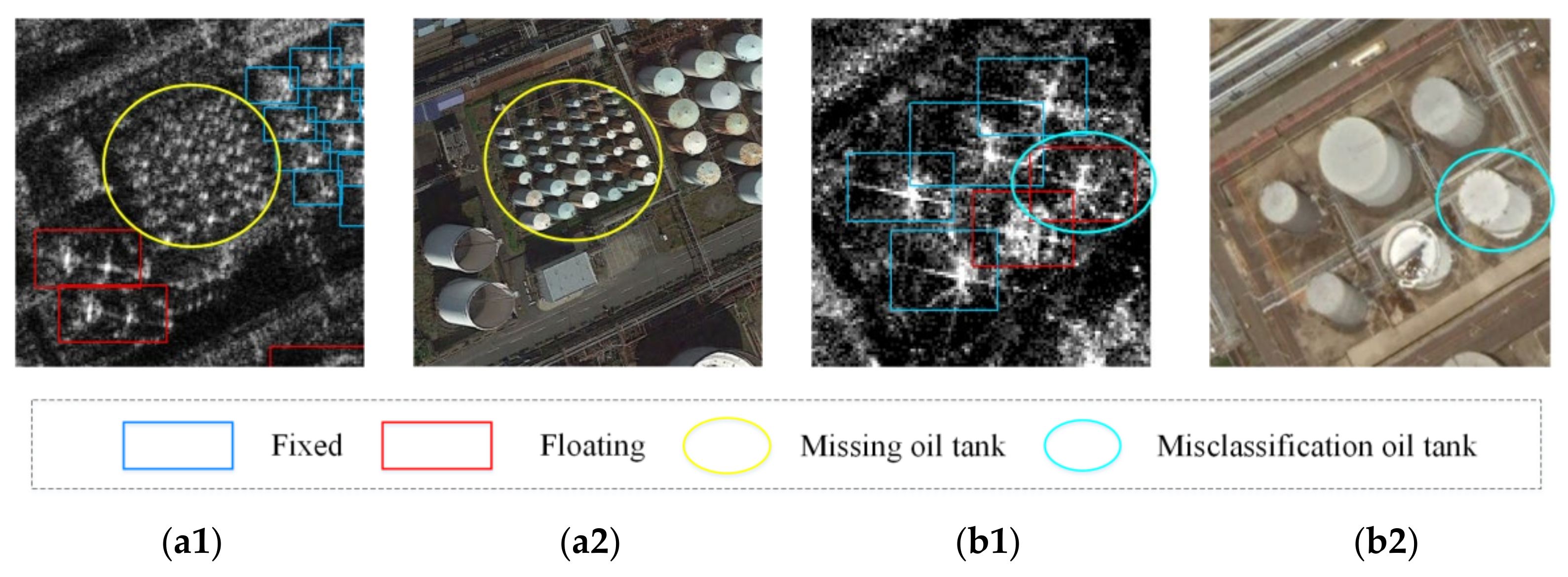

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| RepCSP | Transformer | mAP (%) | mAP0.5 (%) | Precision (%) | Recall (%) | F1 (%) | GFLOPS |
|---|---|---|---|---|---|---|---|
| × | × | 58.35 | 92.12 | 92.18 | 89.22 | 90.68 | 26.6 |
| √ | × | 59.53 | 93.78 | 93.85 | 90.61 | 92.20 | 25.3 |
| × | √ | 59.96 | 94.13 | 94.97 | 90.89 | 92.89 | 27.3 |
| √ | √ | 60.80 | 94.82 | 95.64 | 91.91 | 93.74 | 26.1 |
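The F1 column in the ablation table above follows from the precision and recall columns through the usual harmonic mean, F1 = 2PR / (P + R). The short check below recomputes it for each row; the tuple layout and function name are choices made for this sketch, and the numbers are copied from the table.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (RepCSP, Transformer, precision %, recall %, reported F1 %)
ablation_rows = [
    (False, False, 92.18, 89.22, 90.68),
    (True, False, 93.85, 90.61, 92.20),
    (False, True, 94.97, 90.89, 92.89),
    (True, True, 95.64, 91.91, 93.74),
]

for repcsp, transformer, p, r, reported in ablation_rows:
    computed = f1_score(p, r)
    # Each recomputed value agrees with the reported one to within rounding.
    assert abs(computed - reported) < 0.01
    print(f"RepCSP={repcsp!s:5} Transformer={transformer!s:5} "
          f"F1={computed:.2f} (reported {reported:.2f})")
```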

| RepCSP | Transformer | AP0.5 (%), Floating | AP0.5 (%), Fixed | AP0.5:0.95 (%), Floating | AP0.5:0.95 (%), Fixed |
|---|---|---|---|---|---|
| × | × | 96.4 | 87.8 | 68.3 | 48.4 |
| √ | × | 97.2 | 90.4 | 68.7 | 50.4 |
| × | √ | 97.4 | 90.9 | 68.6 | 51.1 |
| √ | √ | 97.7 | 91.9 | 69.1 | 52.5 |

| Backbone | Neck | mAP (%) | mAP0.5 (%) | Parameters (M) | GFLOPS |
|---|---|---|---|---|---|
| × | × | 58.35 | 92.12 | 8.94 | 26.6 |
| √ | × | 59.20 | 93.81 | 10.12 | 27.0 |
| √ | √ | 59.96 | 94.13 | 11.30 | 27.3 |

| Method | AP0.5 (%), Floating Roof | AP0.5 (%), Fixed Roof | mAP0.5 (%) | GFLOPS | Parameters (M) |
|---|---|---|---|---|---|
| RetinaNet | 89.3 | 72.1 | 80.7 | 81.87 | 36.13 |
| Faster R-CNN | 90.3 | 78.5 | 84.4 | 91.01 | 41.13 |
| SSD (300) | 90.0 | 79.9 | 85.0 | 137.31 | 23.88 |
| YOLOv5-s | 95.2 | 88.4 | 91.8 | 15.9 | 7.3 |
| YOLOX-TR | 97.7 | 91.9 | 94.8 | 26.1 | 8.48 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Q.; Zhang, B.; Xu, C.; Zhang, H.; Wang, C. Dense Oil Tank Detection and Classification via YOLOX-TR Network in Large-Scale SAR Images. Remote Sens. 2022, 14, 3246. https://doi.org/10.3390/rs14143246
