UAV Thermal Images for Water Presence Detection in a Mediterranean Headwater Catchment

Abstract

1. Introduction

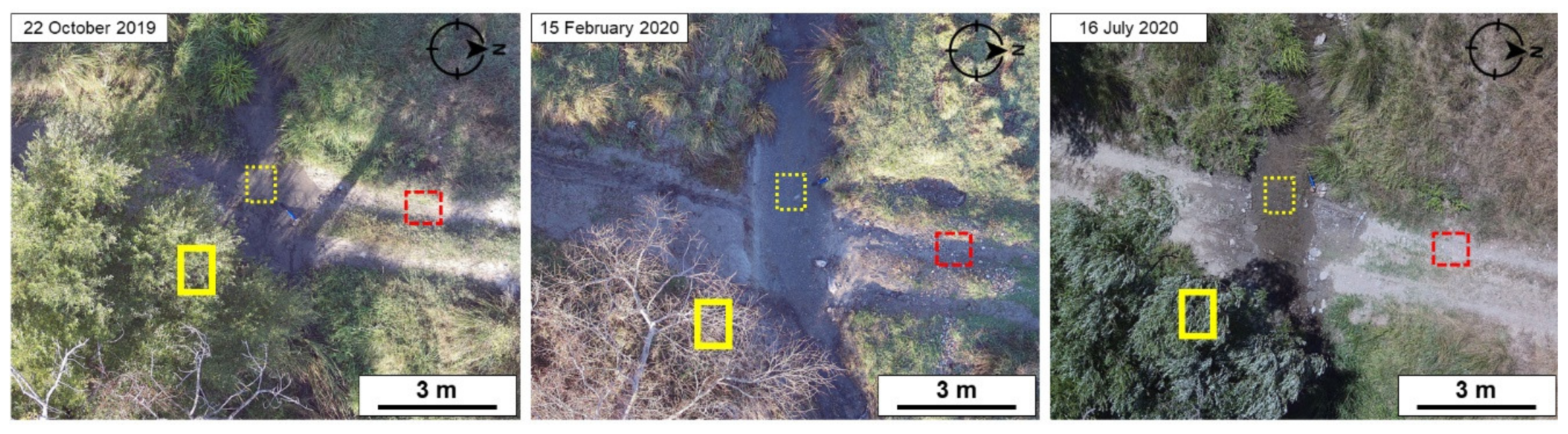

2. Materials and Methods

2.1. Study Site

2.2. Instruments

2.3. Field Data Collection

2.4. Data Processing

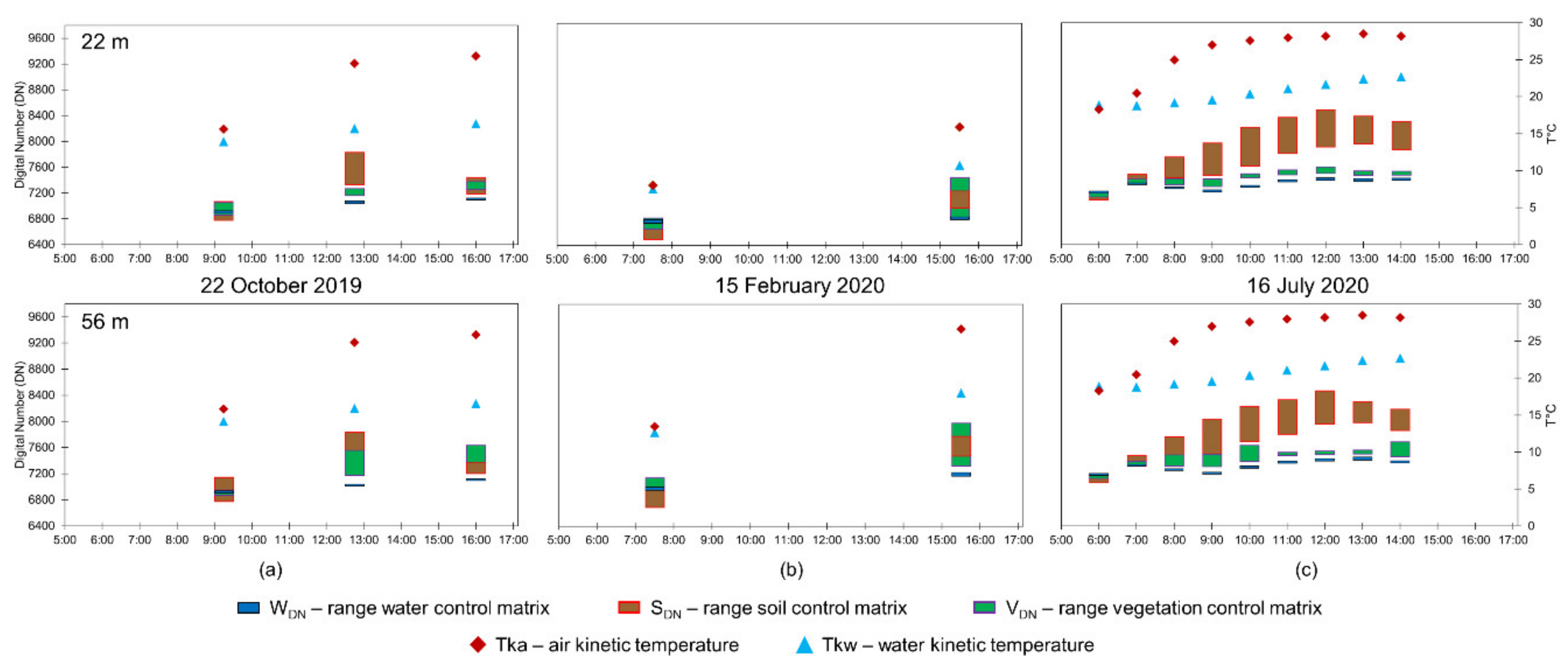

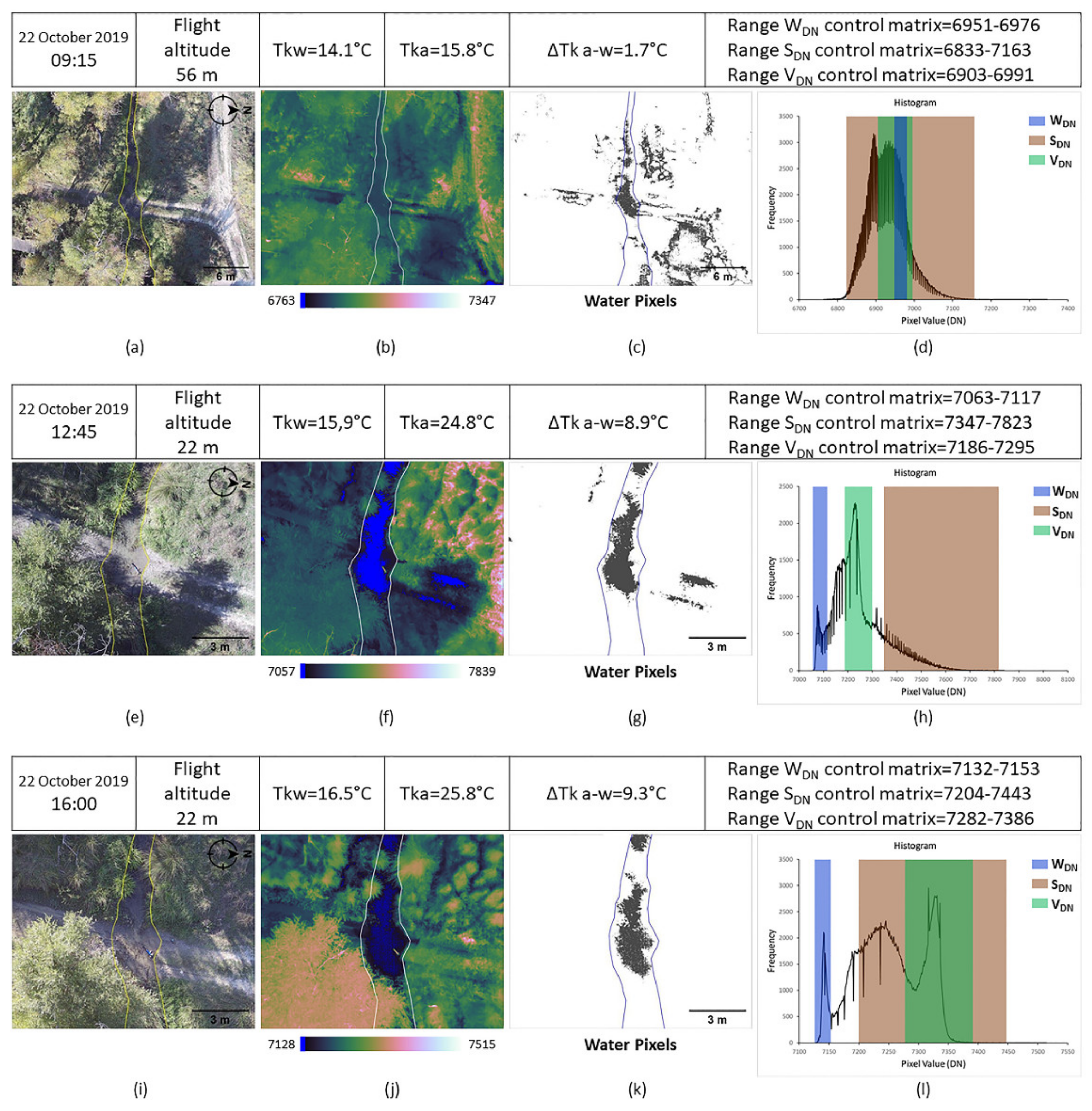

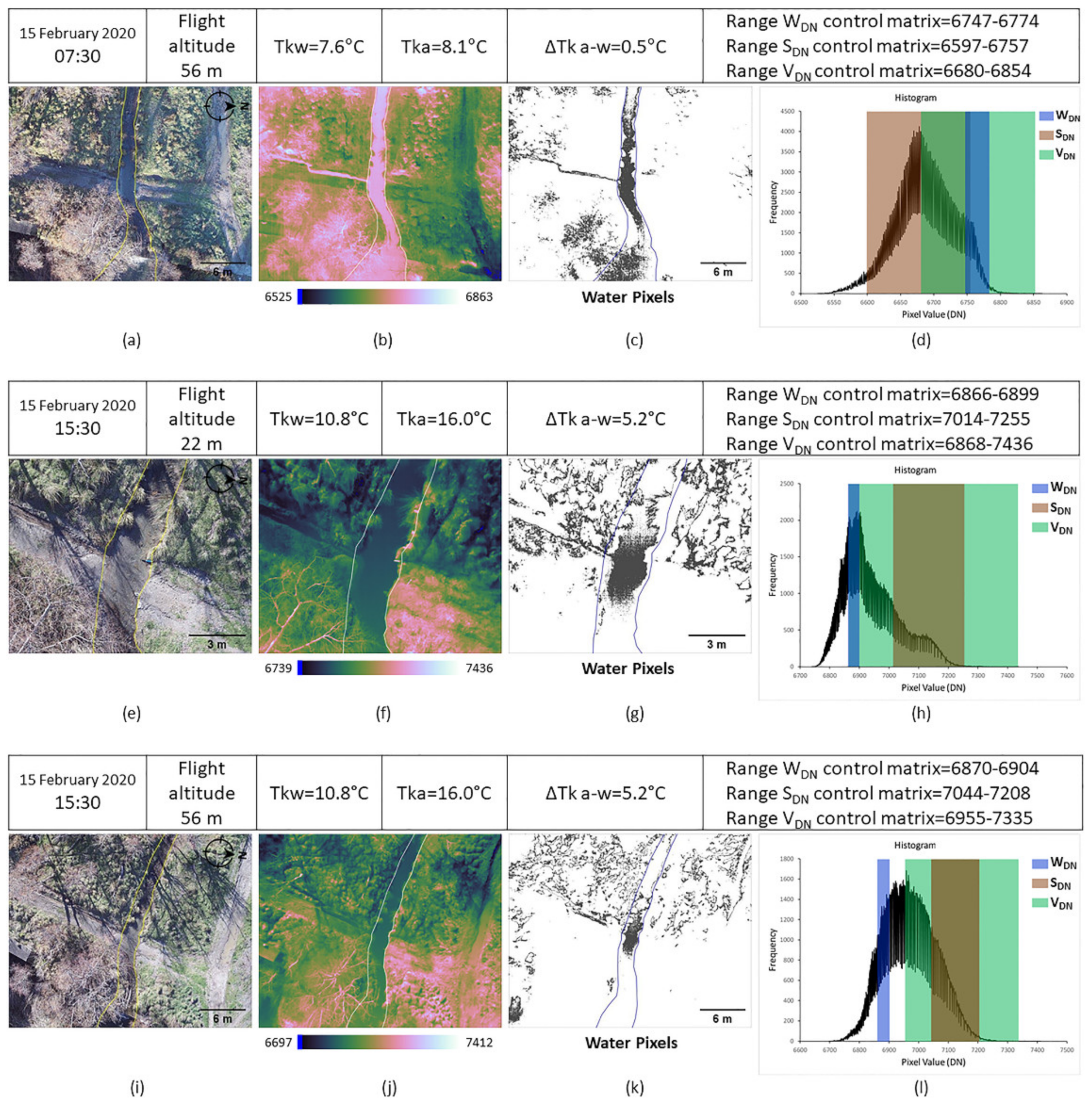

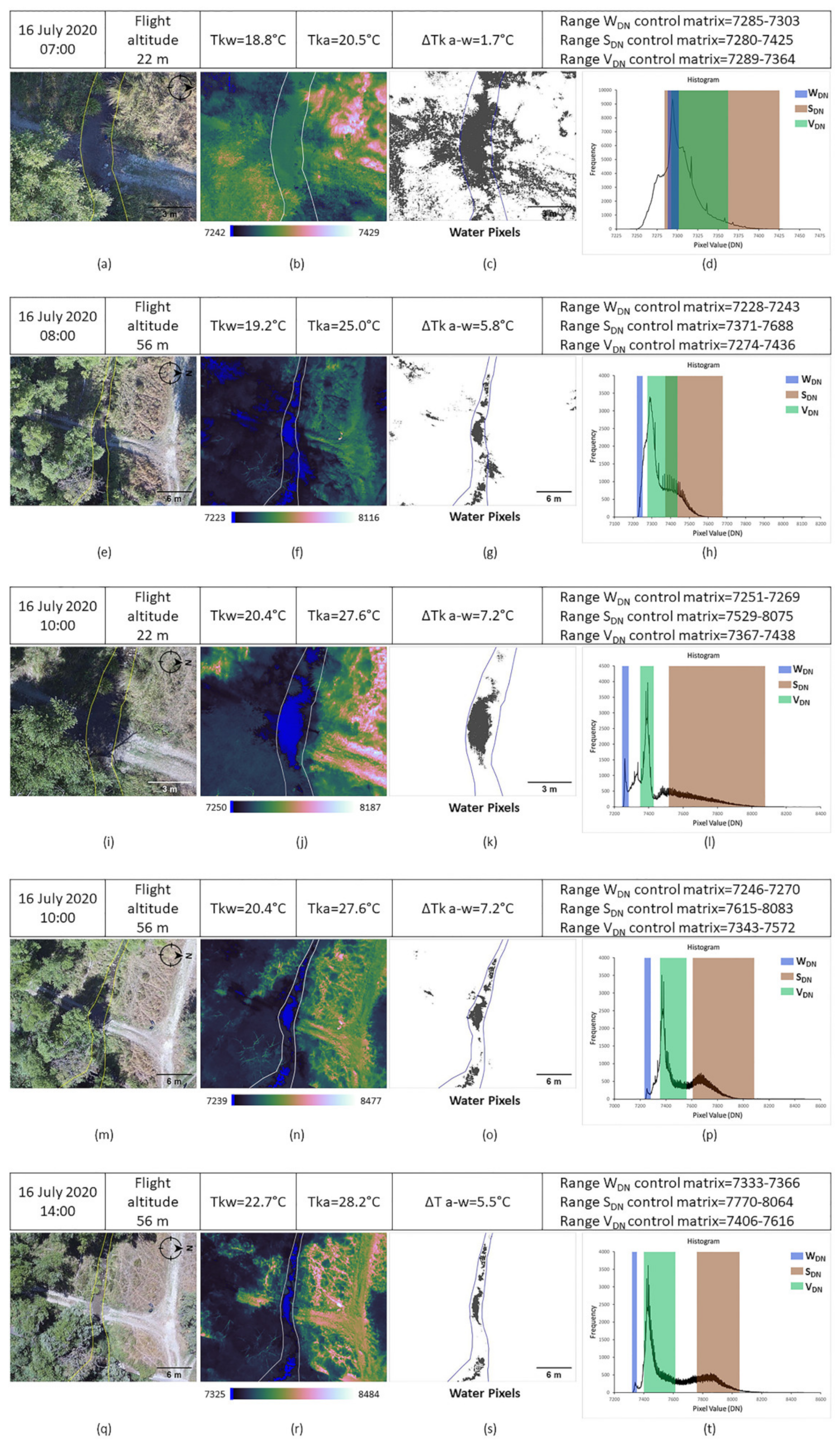

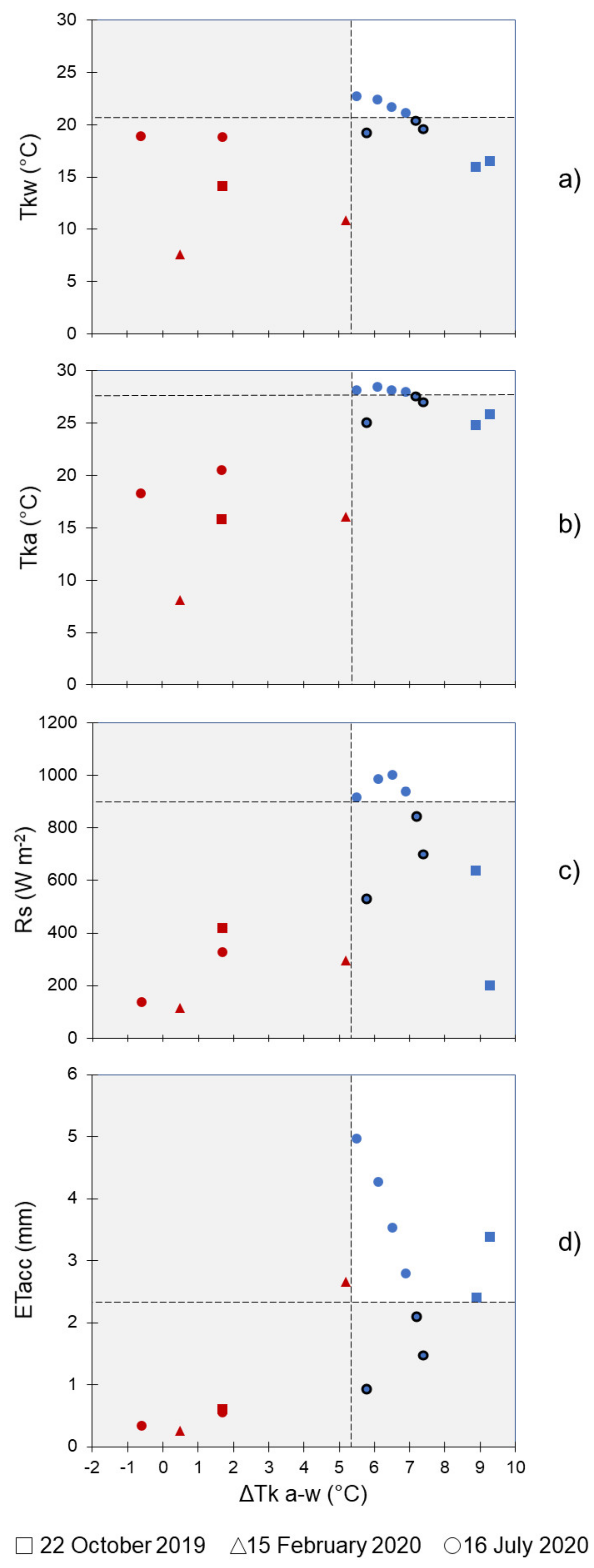

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Zenmuse XT2 specification | Value |
|---|---|
| Thermal sensor type | Uncooled VOx Microbolometer |
| Thermal sensor resolution | 640 × 512 pixels |
| Pixel pitch | 17 µm |
| Spectral band | 7.5–13.5 µm |
| Thermal lens | 19 mm, FOV 32° × 26° |
| Camera visual sensor | CMOS, 1/1.7″, 4000 × 3000 pixels |
| Visual lens | 8 mm, FOV 57.12° × 42.44° |
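As a plausibility check on the thermal lens figures in the table above, the field of view can be recomputed from the sensor resolution, pixel pitch, and focal length. The sketch below assumes an ideal pinhole model with no lens distortion; the function name `field_of_view_deg` is illustrative and does not belong to any vendor tool.

```python
import math


def field_of_view_deg(n_pixels: int, pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Full angular field of view (degrees) along one sensor axis.

    Assumes an ideal pinhole camera: FOV = 2 * atan(sensor_size / (2 * f)).
    """
    sensor_size_mm = n_pixels * pixel_pitch_um / 1000.0  # µm -> mm
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


# Thermal sensor values taken from the specification table.
hfov = field_of_view_deg(640, 17.0, 19.0)  # horizontal axis, 640 pixels
vfov = field_of_view_deg(512, 17.0, 19.0)  # vertical axis, 512 pixels

print(f"Thermal FOV: {hfov:.1f} deg x {vfov:.1f} deg")
```

Rounded to whole degrees, the result matches the 32° × 26° quoted for the 19 mm thermal lens, which suggests the tabulated pixel pitch, resolution, and focal length are mutually consistent.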

| Date | Number of Flights | CET/CEST Time | Flight Height (m) | GSD (cm) | RGB–IR Image Sets |
|---|---|---|---|---|---|
| 22 October 2019 ¹ | 3 | 09:15; 12:45; 16:00 | 22; 56 | 2; 5 | 6 |
| 15 February 2020 | 2 | 07:30; 15:30 | 22; 56 | 2; 5 | 4 |
| 16 July 2020 ¹ | 9 | 06:00–14:00 ² | 22; 56 | 2; 5 | 18 |
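The ground sampling distances in the table above can be reproduced from the thermal sensor's pixel pitch (17 µm) and focal length (19 mm). The following is a minimal sketch, assuming a nadir-looking camera over flat terrain, so that GSD = flight height × pixel pitch / focal length; the function and constant names are illustrative.

```python
def ground_sampling_distance_cm(flight_height_m: float,
                                pixel_pitch_um: float,
                                focal_length_mm: float) -> float:
    """GSD in centimetres for a nadir-looking camera over flat ground."""
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return flight_height_m * pixel_pitch_m / focal_length_m * 100.0  # m -> cm


# Thermal sensor parameters from the Zenmuse XT2 specification table.
PIXEL_PITCH_UM = 17.0
FOCAL_LENGTH_MM = 19.0

# The two flight heights listed in the flight table.
gsd_by_height = {
    height_m: ground_sampling_distance_cm(height_m, PIXEL_PITCH_UM, FOCAL_LENGTH_MM)
    for height_m in (22, 56)
}

for height_m, gsd_cm in gsd_by_height.items():
    print(f"{height_m} m flight height: GSD = {gsd_cm:.2f} cm")
```

The computed values (about 1.97 cm and 5.01 cm) round to the 2 cm and 5 cm reported in the table, which indicates that the tabulated GSD refers to the thermal sensor rather than the visual one.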

| Date | CET/CEST Time | Tkw (°C) | Tka (°C) | ΔTk a–w (°C) | ΔTkw ¹ (°C) | ΔTka ¹ (°C) |
|---|---|---|---|---|---|---|
| 22 October 2019 | 09:15 | 14.1 | 15.8 | 1.7 | 2.4 | 10.0 |
| | 12:45 | 15.9 | 24.8 | 8.9 | | |
| | 16:00 | 16.5 | 25.8 | 9.3 | | |
| 15 February 2020 | 07:30 | 7.6 | 8.1 | 0.5 | 3.2 | 7.9 |
| | 15:30 | 10.8 | 16.0 | 5.2 | | |
| 16 July 2020 | 06:00 | 18.9 | 18.3 | −0.6 | 3.9 | 10.2 |
| | 07:00 | 18.8 | 20.5 | 1.7 | | |
| | 08:00 | 19.2 | 25.0 | 5.8 | | |
| | 09:00 | 19.6 | 27.0 | 7.4 | | |
| | 10:00 | 20.4 | 27.6 | 7.2 | | |
| | 11:00 | 21.1 | 28.0 | 6.9 | | |
| | 12:00 | 21.7 | 28.2 | 6.5 | | |
| | 13:00 | 22.4 | 28.5 | 6.1 | | |
| | 14:00 | 22.7 | 28.2 | 5.5 | | |
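The derived columns of the temperature table can be recomputed from the water and air temperatures alone. The sketch below makes two assumptions that are not stated in the text available here: that ΔTk a–w is air temperature minus water temperature, and that the two footnoted columns are daily ranges (maximum minus minimum over the flights of each date). Both readings reproduce the tabulated values; the variable names are illustrative.

```python
from collections import defaultdict

# (date, time, T_kw, T_ka) in °C, copied from the table above.
readings = [
    ("22 October 2019", "09:15", 14.1, 15.8),
    ("22 October 2019", "12:45", 15.9, 24.8),
    ("22 October 2019", "16:00", 16.5, 25.8),
    ("15 February 2020", "07:30", 7.6, 8.1),
    ("15 February 2020", "15:30", 10.8, 16.0),
    ("16 July 2020", "06:00", 18.9, 18.3),
    ("16 July 2020", "07:00", 18.8, 20.5),
    ("16 July 2020", "08:00", 19.2, 25.0),
    ("16 July 2020", "09:00", 19.6, 27.0),
    ("16 July 2020", "10:00", 20.4, 27.6),
    ("16 July 2020", "11:00", 21.1, 28.0),
    ("16 July 2020", "12:00", 21.7, 28.2),
    ("16 July 2020", "13:00", 22.4, 28.5),
    ("16 July 2020", "14:00", 22.7, 28.2),
]

# Assumed definition: air-water contrast = T_ka - T_kw for each flight.
air_minus_water = {
    (date, time): round(t_air - t_water, 1)
    for date, time, t_water, t_air in readings
}

# Group the readings by survey date.
by_date = defaultdict(list)
for date, _time, t_water, t_air in readings:
    by_date[date].append((t_water, t_air))

# Assumed definition: daily range = max - min over that date's flights.
daily_ranges = {}
for date, pairs in by_date.items():
    water = [t_water for t_water, _ in pairs]
    air = [t_air for _, t_air in pairs]
    daily_ranges[date] = (
        round(max(water) - min(water), 1),  # water temperature range
        round(max(air) - min(air), 1),      # air temperature range
    )

for date, (water_range, air_range) in daily_ranges.items():
    print(f"{date}: water range {water_range} °C, air range {air_range} °C")
```

Under these assumptions, the air temperature range exceeds the water temperature range on every survey date, and the air–water contrast is smallest for the earliest flight of each day.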

| Date | CET/CEST Time | WDN Overlapped with VDN and SDN (22 m Flight Height) | WDN Overlapped with VDN and SDN (56 m Flight Height) |
|---|---|---|---|
| 22 October 2019 | 09:15 | OTD–FD | OTD–FD |
| | 12:45 | NOTD | NOTD |
| | 16:00 | NO | NO |
| 15 February 2020 | 07:30 | OTD–FD | OTD–FD |
| | 15:30 | OTD–FD | NOTD–FD |
| 16 July 2020 | 06:00 | OTD–FD | OTD–FD |
| | 07:00 | OTD–FD | OTD–FD |
| | 08:00 | NOTD–FD | NOTD–FD |
| | 09:00 | NOTD–FD | NOTD–FD |
| | 10:00 | NO | NOTD–FD |
| | 11:00 | NO | NO |
| | 12:00 | NO | NO |
| | 13:00 | NO | NO |
| | 14:00 | NO | NO |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Micieli, M.; Botter, G.; Mendicino, G.; Senatore, A. UAV Thermal Images for Water Presence Detection in a Mediterranean Headwater Catchment. Remote Sens. 2022, 14, 108. https://doi.org/10.3390/rs14010108
