Development of Improved Semi-Automated Processing Algorithms for the Creation of Rockfall Databases

Abstract

1. Introduction

2. Materials and Methods

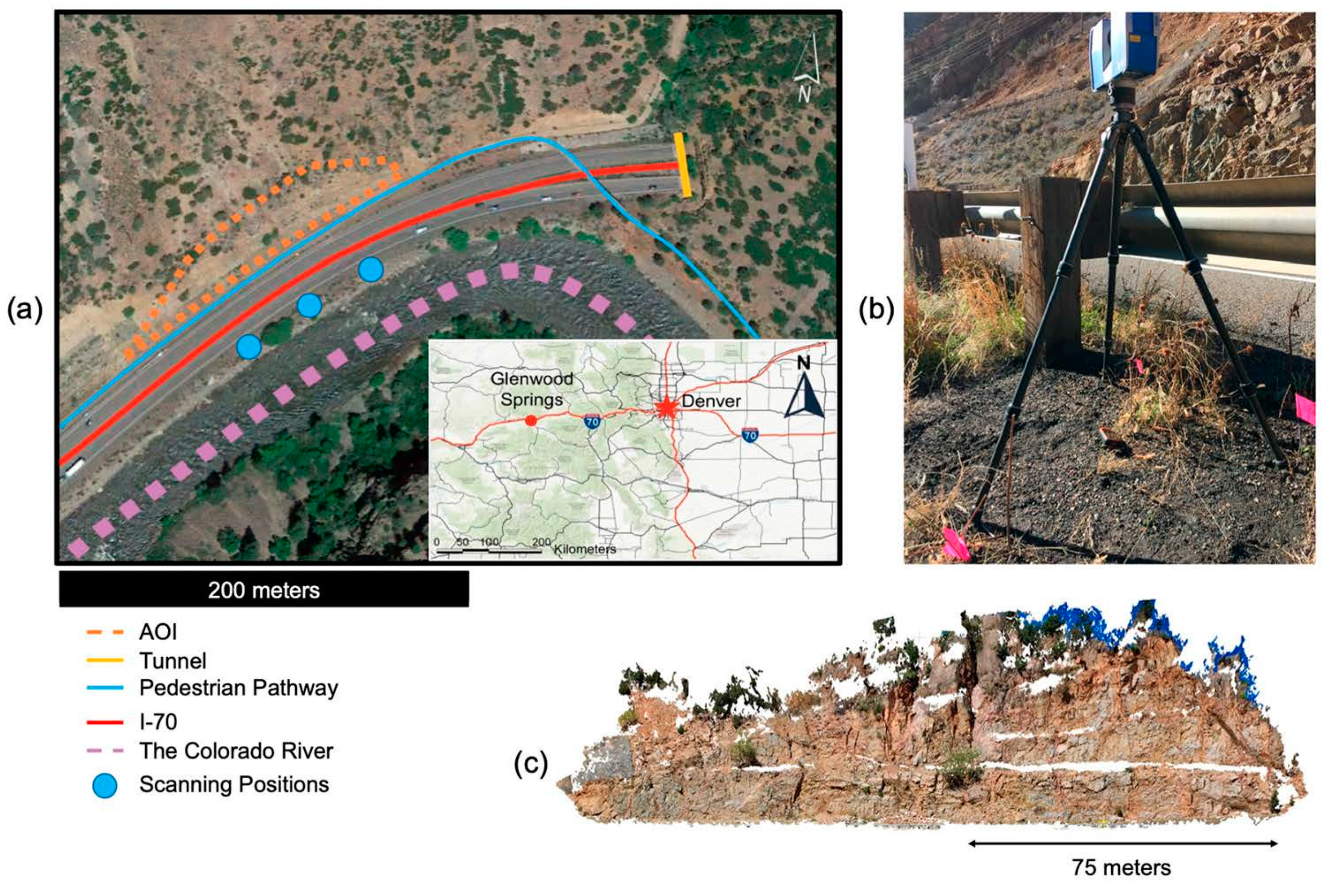

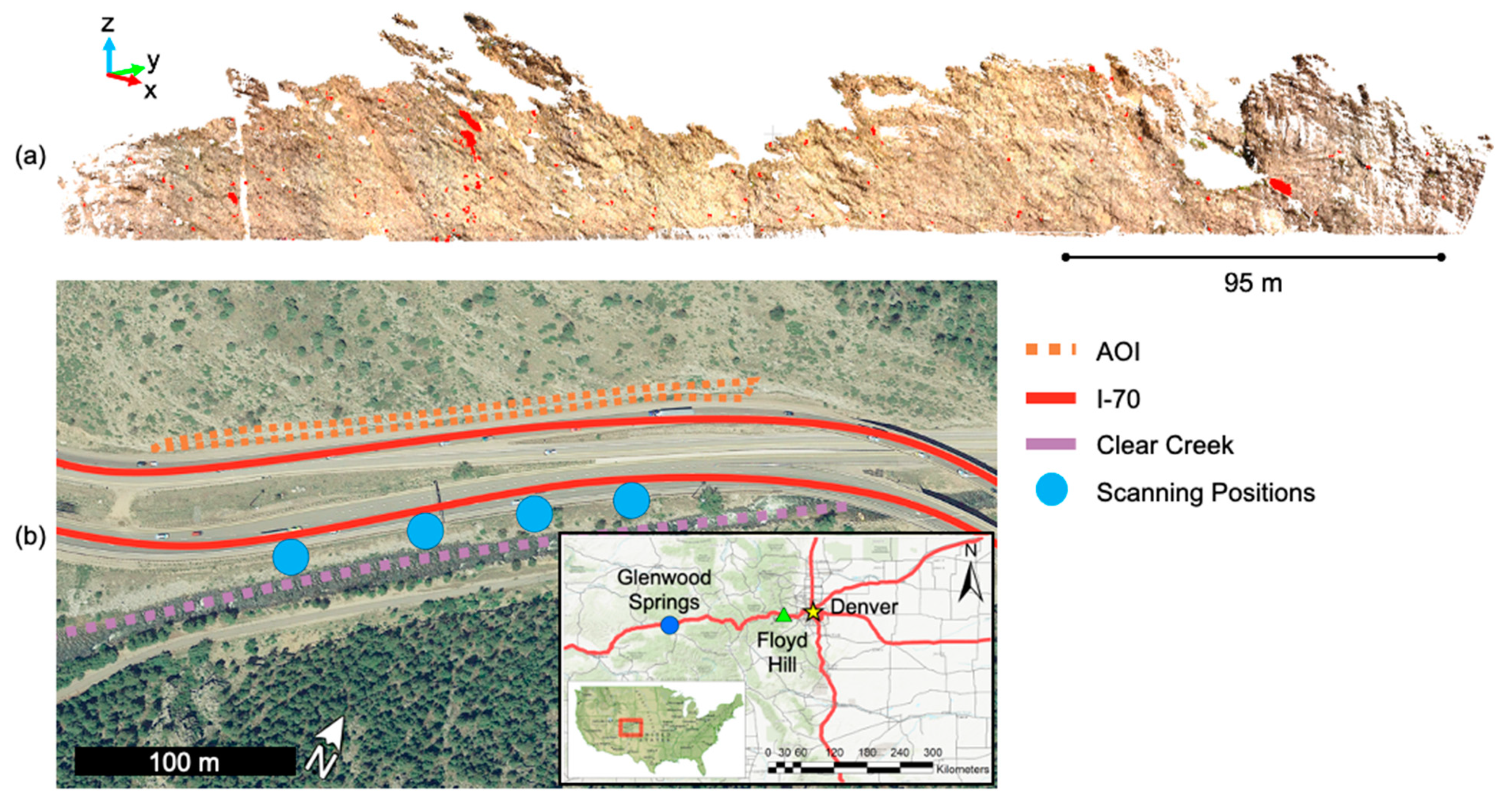

2.1. Study Site

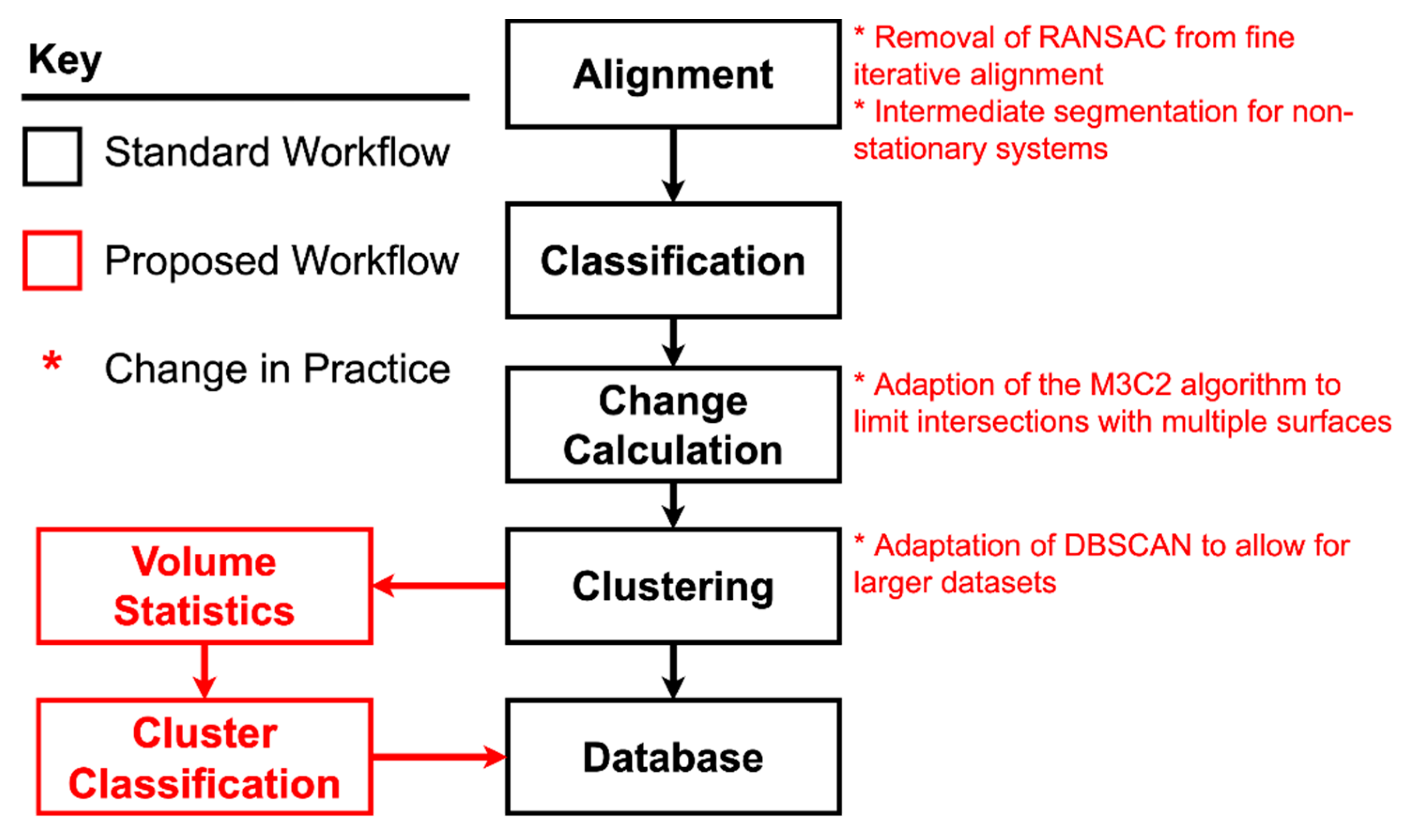

2.2. Workflow Overview

- Alignment: The process of putting one or more point cloud(s) in a common coordinate system.

- Classification: Categorizing points within the point cloud.

- Change calculation: Comparing the points between a reference cloud and a secondary (data) cloud to determine changes in point locations.

- Clustering: Labelling points by some characteristic of the points (e.g., point density).

2.3. Preprocessing

- Converting the scanner’s proprietary file format to ASCII.

- Selecting points that define the boundary of the area of interest in 3D space.

- Determining suitable ranges of parameters for the algorithms (e.g., subsampling size, normal calculation radius).
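The subsampling parameter can be illustrated with a minimal voxel-grid sketch in Python/NumPy. It assumes the cloud is an (N, 3) array of coordinates and replaces all points in each occupied cubic voxel with their centroid. The function name `voxel_subsample` is hypothetical, and this is an illustration rather than the PCL filter used in the workflow.

```python
import numpy as np


def voxel_subsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall in the same cubic voxel by their centroid.

    points: (N, 3) array of x, y, z coordinates.
    voxel_size: voxel edge length, in the same units as the points (e.g. 0.45 m).
    """
    # Integer voxel index of every point along each axis
    idx = np.floor(points / voxel_size).astype(np.int64)
    # Group points that share a voxel; `inverse` maps each point to its voxel
    _, inverse, counts = np.unique(idx, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.ravel()  # some NumPy versions return a 2-D inverse when axis is set
    # Sum the coordinates per voxel, then divide by the number of points in it
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)
    return sums / counts[:, None]
```

Because each occupied voxel keeps only one point, the voxel size has to exceed the average point spacing. Otherwise most voxels hold a single point and little is removed.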

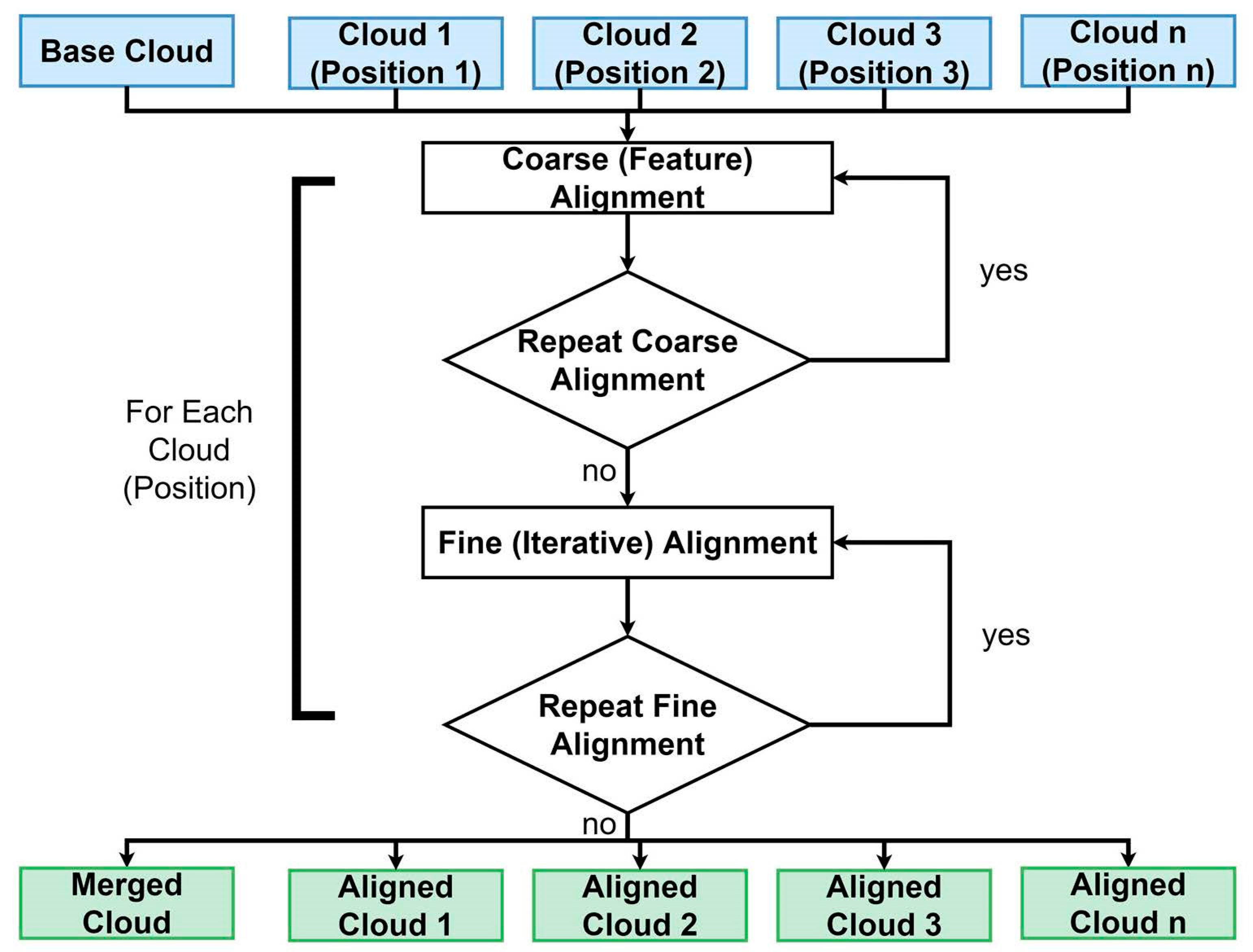

2.4. Alignment

- Align scans from different viewpoints into a common coordinate system.

- Align scans from different points in time into a common coordinate system.

- Reference scans to a known coordinate system.

2.4.1. Coarse Alignment

2.4.2. Fine (Iterative) Alignment

- Selection of correspondence pairs (points that can be used to relate the two point clouds).

- Rejection of correspondence pairs that do not meet given criteria.

- Estimation of the transformation that best aligns the retained pairs, and determination of the error between the paired points following that transformation.

- Repetition until the error is minimized below a threshold or until another stopping condition is satisfied.
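The four steps above can be sketched as a basic point-to-point ICP in Python/NumPy. This is an illustration under stated assumptions, not the PCL pipeline used in the workflow: correspondences are brute-force nearest neighbours, a pair is rejected when its distance exceeds the mean plus `sd_multiple` standard deviations of all pair distances (an assumed reading of the "Multiple of S.D." parameter), the transformation is the least-squares SVD (Kabsch) solution, and iteration stops once the RMS error changes by less than `tol`. All function names are hypothetical.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src_i + t ~= dst_i (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(src, dst, sd_multiple=1.3, max_iter=50, tol=1e-8):
    """Point-to-point ICP with a standard-deviation rejection step.

    Returns the transformed copy of src and the final RMS error of kept pairs.
    """
    current = src.copy()
    prev_err = np.inf
    err = np.inf
    for _ in range(max_iter):
        # 1. Correspondences: nearest dst point for every current point
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        dist = np.sqrt(d2[np.arange(len(current)), nn])
        # 2. Rejection: drop pairs farther than mean + sd_multiple * std
        keep = dist <= dist.mean() + sd_multiple * dist.std()
        # 3. Transformation, then error of the kept pairs after transforming
        R, t = best_rigid_transform(current[keep], dst[nn[keep]])
        current = current @ R.T + t
        err = np.sqrt(((current[keep] - dst[nn[keep]]) ** 2).sum(axis=1).mean())
        # 4. Stop once the error changes by less than tol
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return current, err
```

A production implementation would replace the brute-force distance matrix with a k-d tree and could add further rejectors, such as the normal-angle test described by the threshold degree parameter.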

2.5. Classification

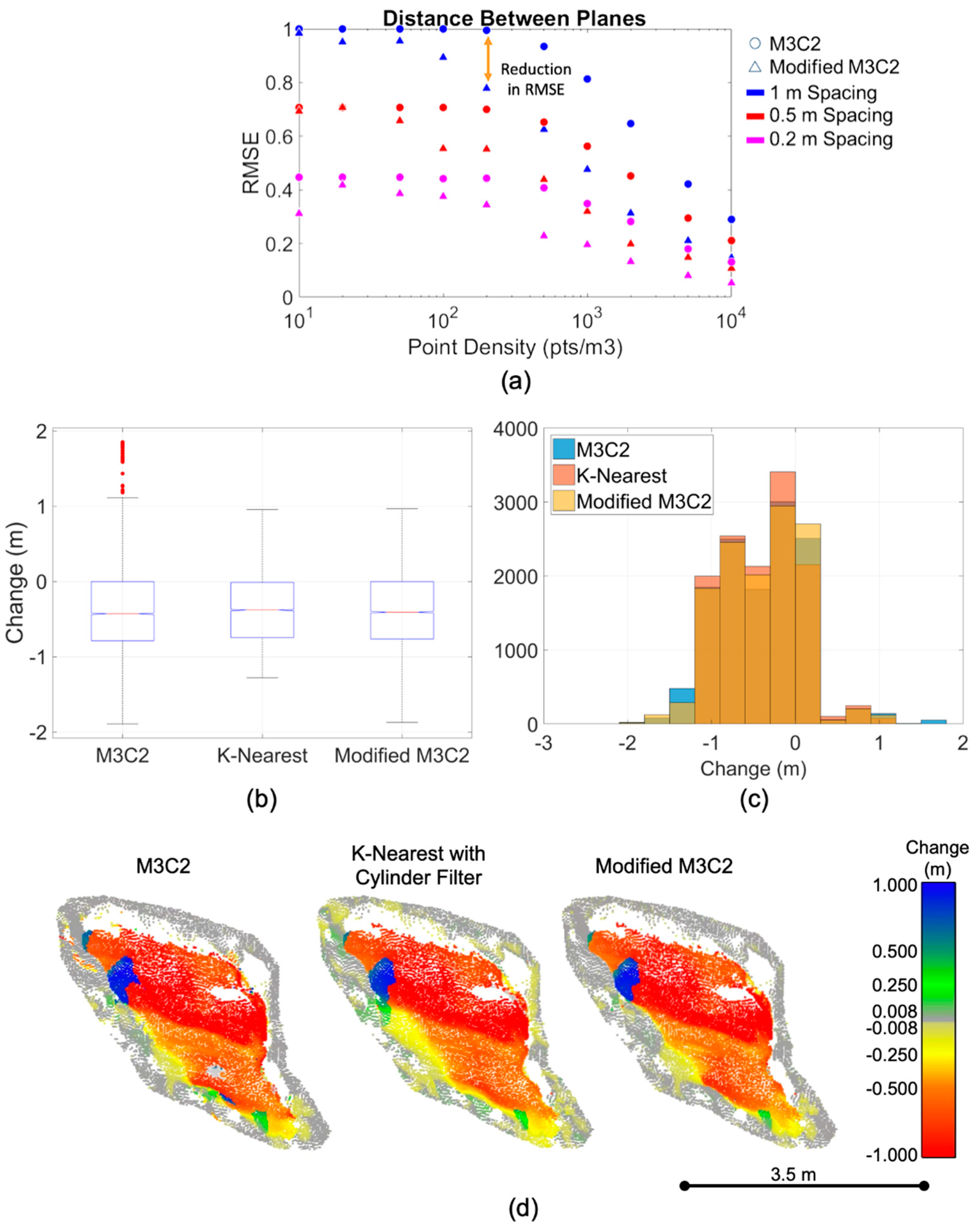

2.6. Change Detection

- Determination of the change direction (positive or negative along the normal) using M3C2.

- Projection of the cylinder in the determined direction until K points have been found.
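A minimal Python/NumPy sketch of the one-sided cylinder search follows. It assumes the direction (+1 or -1) has already been taken from the sign of a standard M3C2 distance, and that the reported change is the mean offset of the first K points met along the cylinder axis. The function name and this averaging choice are assumptions for illustration, not the authors' implementation.

```python
import numpy as np


def one_sided_cylinder_distance(core, normal, cloud, radius, k, direction):
    """Signed distance from a core point to the first k points of `cloud`
    inside a cylinder projected along +normal (direction=+1) or -normal (-1).

    Only the chosen side is searched, and only the k points closest to the
    core point along the axis are used, so surfaces further along the
    cylinder are not averaged in. Returns NaN if fewer than k points qualify.
    """
    n = normal / np.linalg.norm(normal)
    rel = cloud - core
    along = rel @ n                                    # signed offset along the normal
    radial = np.linalg.norm(rel - np.outer(along, n), axis=1)
    # Points inside the cylinder and on the requested side of the core point
    inside = (radial <= radius) & (direction * along >= 0)
    candidates = np.sort(direction * along[inside])
    if candidates.size < k:
        return np.nan
    # Mean offset of the k closest points, signed by the search direction
    return direction * candidates[:k].mean()
```

With K = 3 and a 0.1 m radius, the sketch returns 0.5 m for a surface 0.5 m above the core point even when a second surface lies 2 m above it. Letting K grow to 6 pulls the estimate to 1.25 m, which is the secondary-surface error that limiting the projection is meant to avoid.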

2.7. Clustering

2.8. Volume Statistics

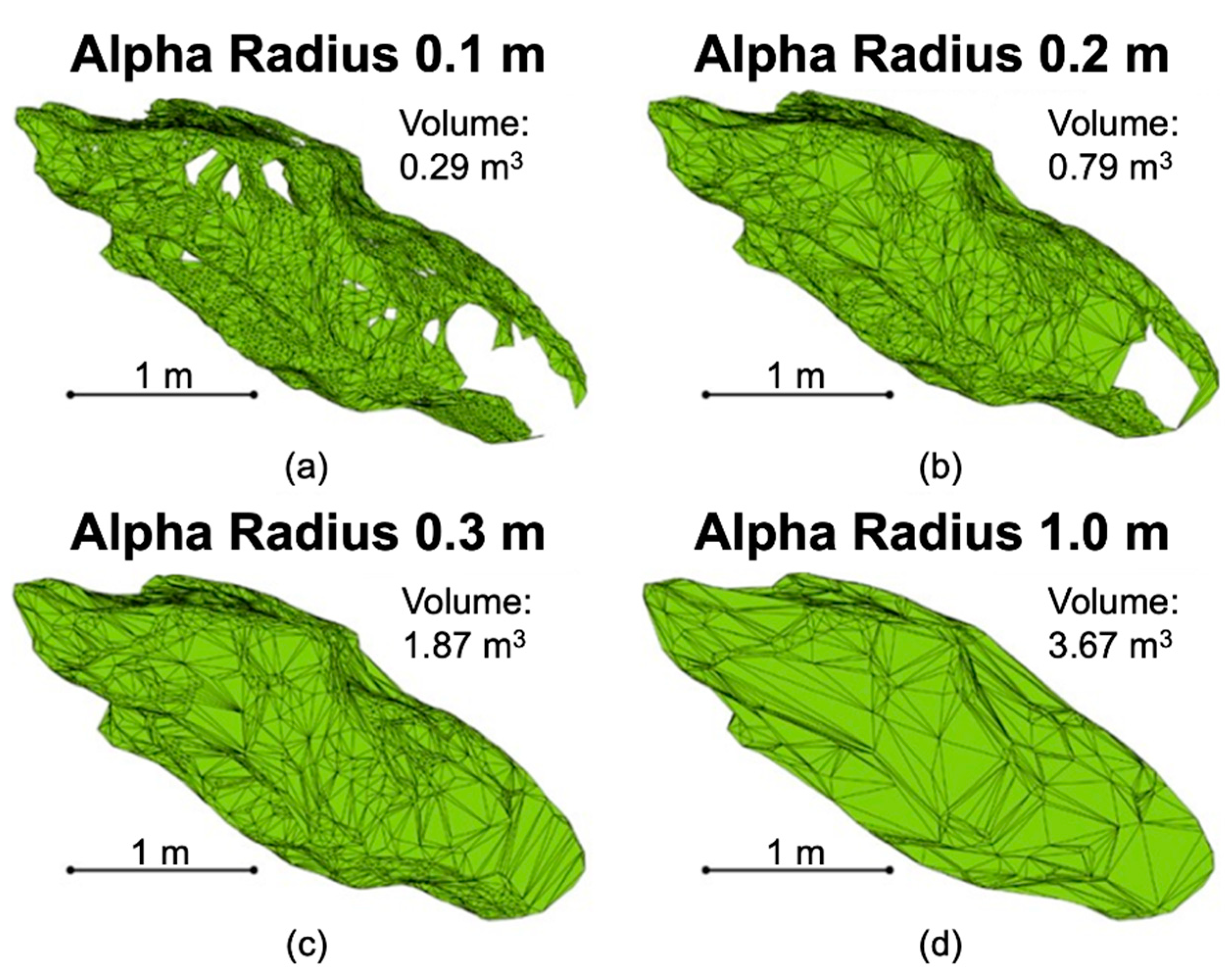

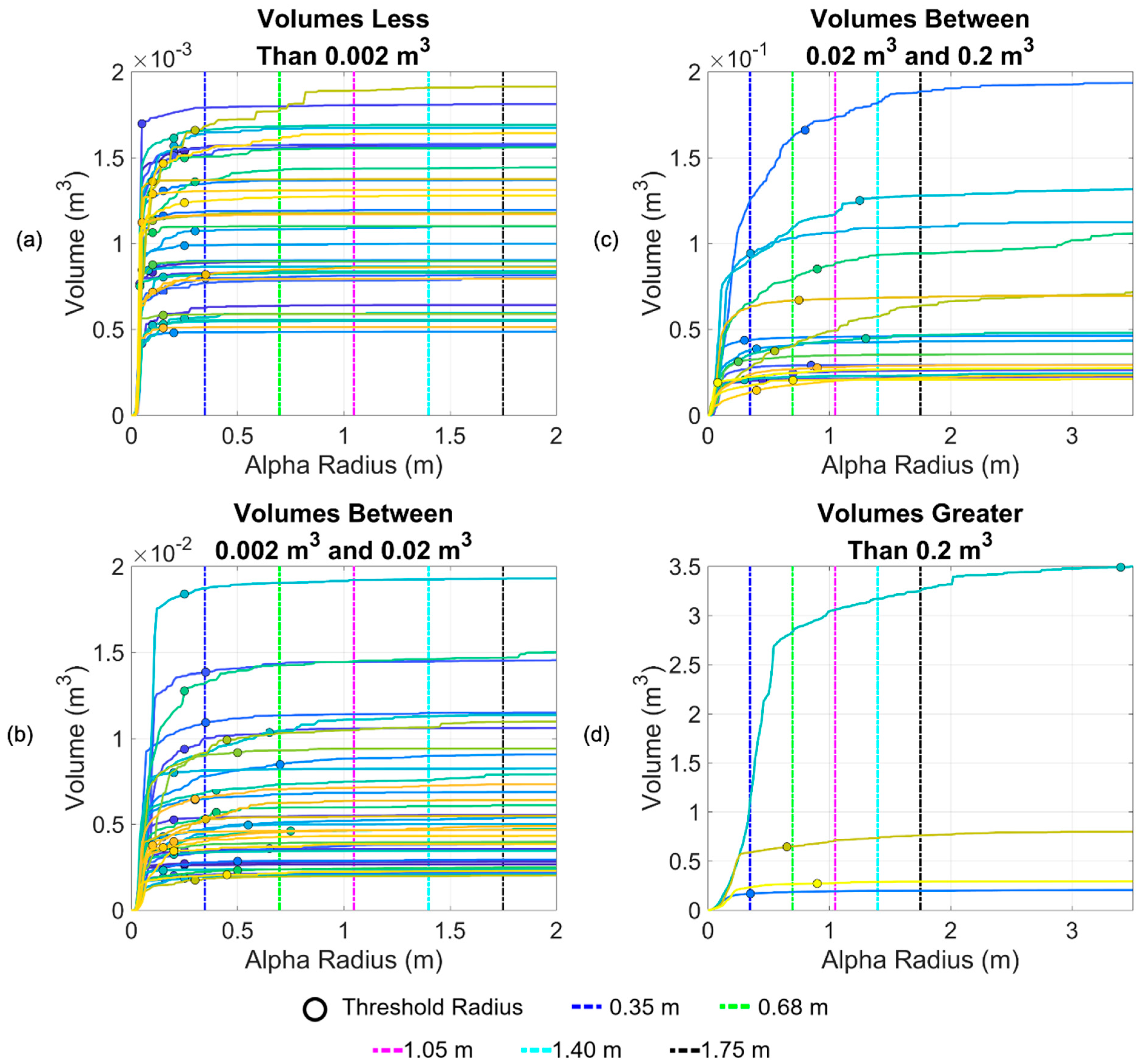

2.8.1. Shape Reconstruction

- Panel A: When the alpha radius is small, many holes appear resulting in an underestimation of the volume.

- Panel B: Increasing the radius decreases the number of holes and increases the volume estimate.

- Panel C: Holes have been eliminated with this radius, but some of the detail in the shape has been lost. This implies that a threshold radius between 0.2 m and 0.3 m was suitable.

- Panel D: Too large a radius results in complete deterioration of the shape.
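The effect of the alpha radius can be reproduced with a short Python sketch. It assumes a common construction in which the alpha-shape volume is the summed volume of the Delaunay tetrahedra whose circumradius does not exceed the alpha radius; an infinite radius keeps every tetrahedron and therefore returns the convex-hull volume. It relies on `scipy.spatial.Delaunay`; the helper names are hypothetical.

```python
import numpy as np
from scipy.spatial import Delaunay


def circumradius(tet):
    """Circumradius of a tetrahedron given as a (4, 3) array of vertices."""
    p0 = tet[0]
    # The circumcentre c satisfies 2 (p_i - p0) . c = |p_i|^2 - |p0|^2
    A = 2.0 * (tet[1:] - p0)
    b = (tet[1:] ** 2).sum(axis=1) - (p0 ** 2).sum()
    try:
        centre = np.linalg.solve(A, b)
    except np.linalg.LinAlgError:
        return np.inf                     # flat (degenerate) tetrahedron
    return np.linalg.norm(centre - p0)


def alpha_shape_volume(points, alpha):
    """Volume of the Delaunay tetrahedra with circumradius <= alpha.

    alpha = np.inf keeps every tetrahedron, i.e. the convex-hull volume.
    """
    tri = Delaunay(points)
    volume = 0.0
    for simplex in tri.simplices:
        tet = points[simplex]
        if circumradius(tet) <= alpha:
            a, b, c = tet[1] - tet[0], tet[2] - tet[0], tet[3] - tet[0]
            volume += abs(np.dot(a, np.cross(b, c))) / 6.0
    return volume
```

Sweeping `alpha` upward reproduces the trend in panels A to D: the volume can only grow as the radius increases, and it reaches the convex-hull value at an infinite radius.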

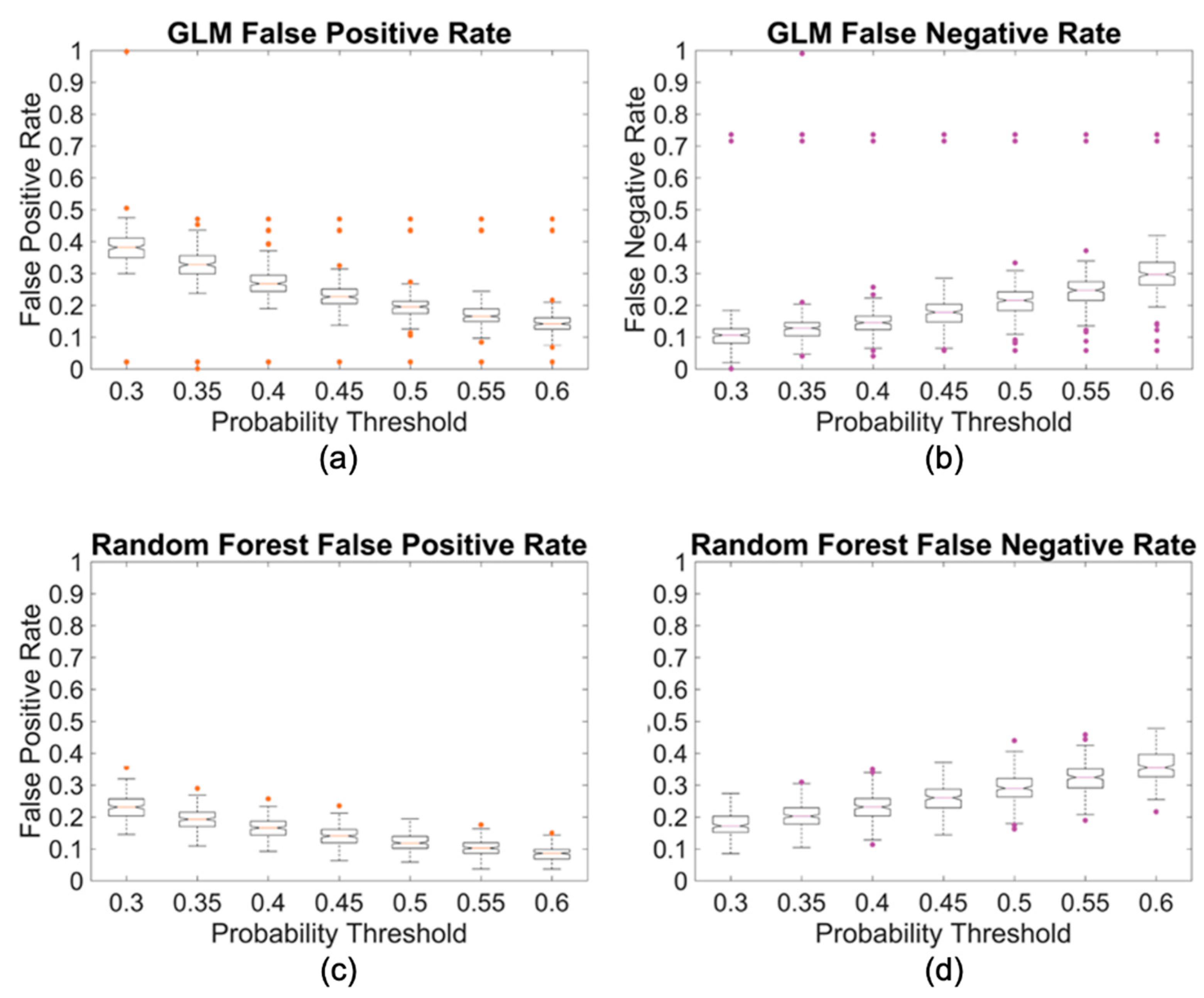

2.9. Cluster Classification

3. Results

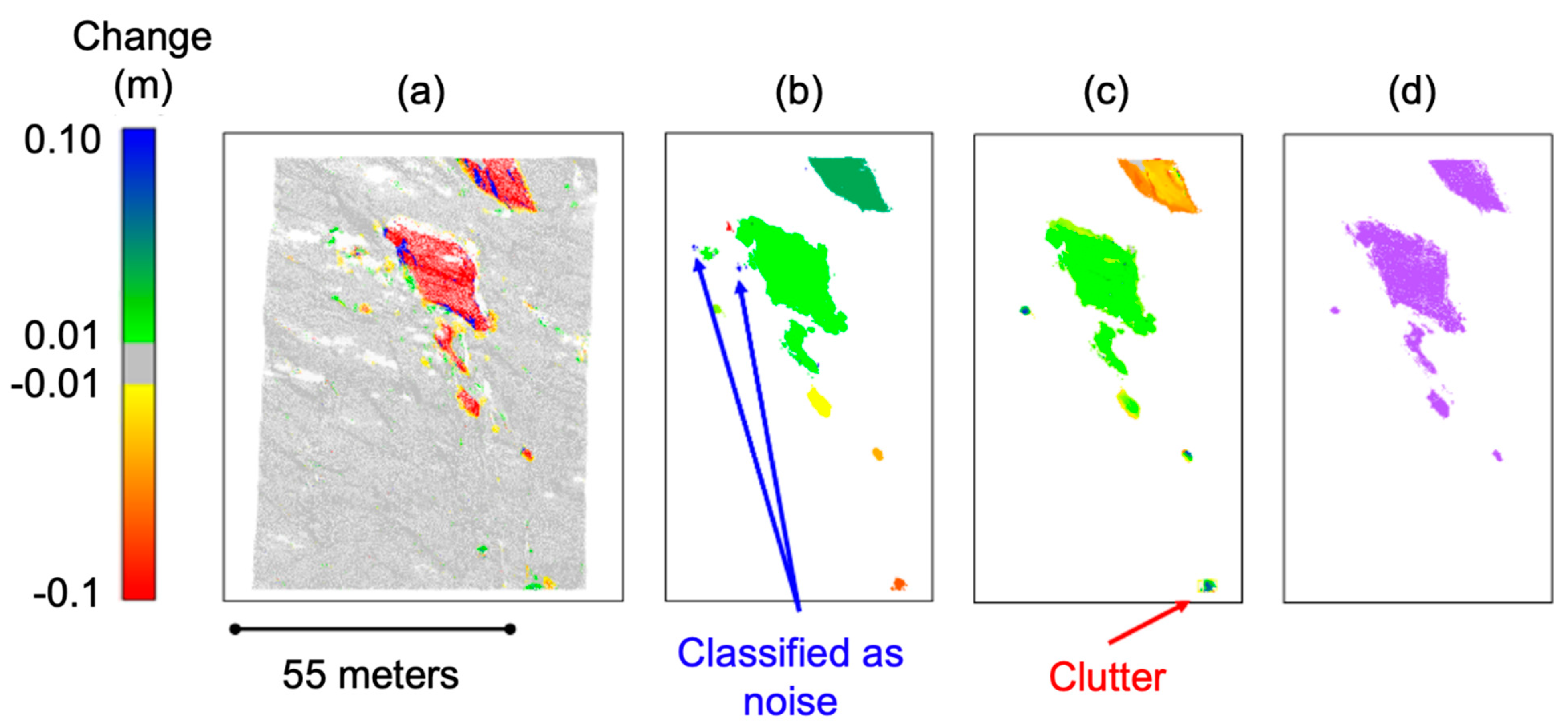

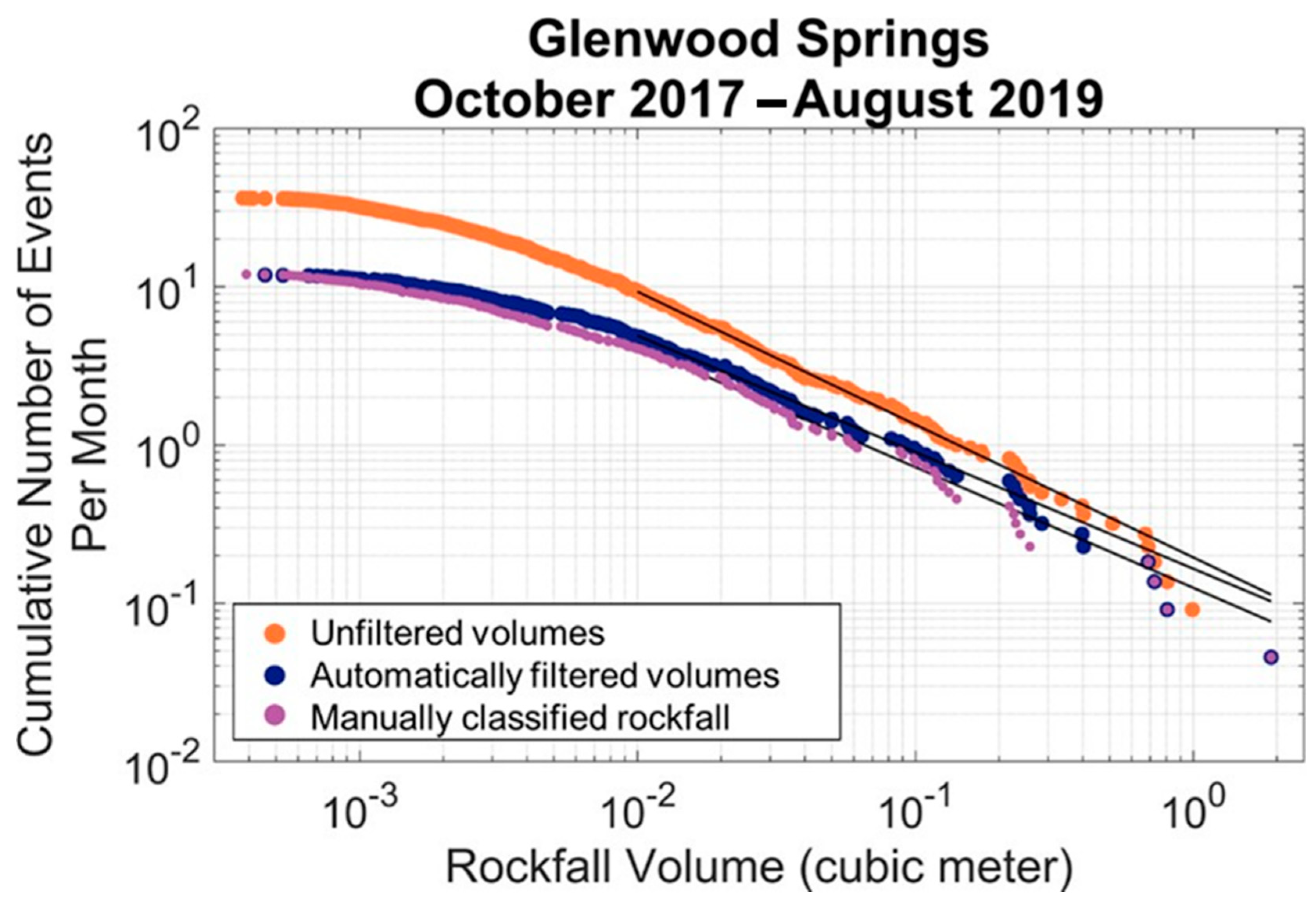

3.1. Results of the Workflow Applied to the Glenwood Springs Slope

- The maximum cluster volume (corresponding to the infinite alpha radius) was greater than 0.2 m³.

- The centroid of the cluster was within a threshold distance from the centroid of another cluster.

- Calculate the distance between cluster centroids.

- Select the larger of the primary axis lengths of the two clusters.

- If the distance between the centroids is smaller than the larger primary axis length, flag the clusters for manual validation.
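The proximity check in the last three steps can be written as a short Python/NumPy sketch. The text does not define how the primary axis length is measured, so this sketch assumes it is the extent of a cluster's points along their first principal component; the function names are hypothetical.

```python
import numpy as np
from itertools import combinations


def primary_axis_length(points):
    """Extent of a cluster along its first principal axis (assumed definition)."""
    centred = points - points.mean(axis=0)
    # The first right singular vector of the centred points is the principal axis
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    proj = centred @ vt[0]
    return proj.max() - proj.min()


def flag_adjacent_clusters(clusters):
    """Index pairs (i, j) whose centroid distance is smaller than the larger
    of the two clusters' primary axis lengths."""
    centroids = [c.mean(axis=0) for c in clusters]
    lengths = [primary_axis_length(c) for c in clusters]
    flagged = []
    for i, j in combinations(range(len(clusters)), 2):
        dist = np.linalg.norm(centroids[i] - centroids[j])
        if dist < max(lengths[i], lengths[j]):
            flagged.append((i, j))
    return flagged
```

For two 1 m long clusters whose centroids are 0.8 m apart, the pair is flagged; a third cluster 5 m away is not.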

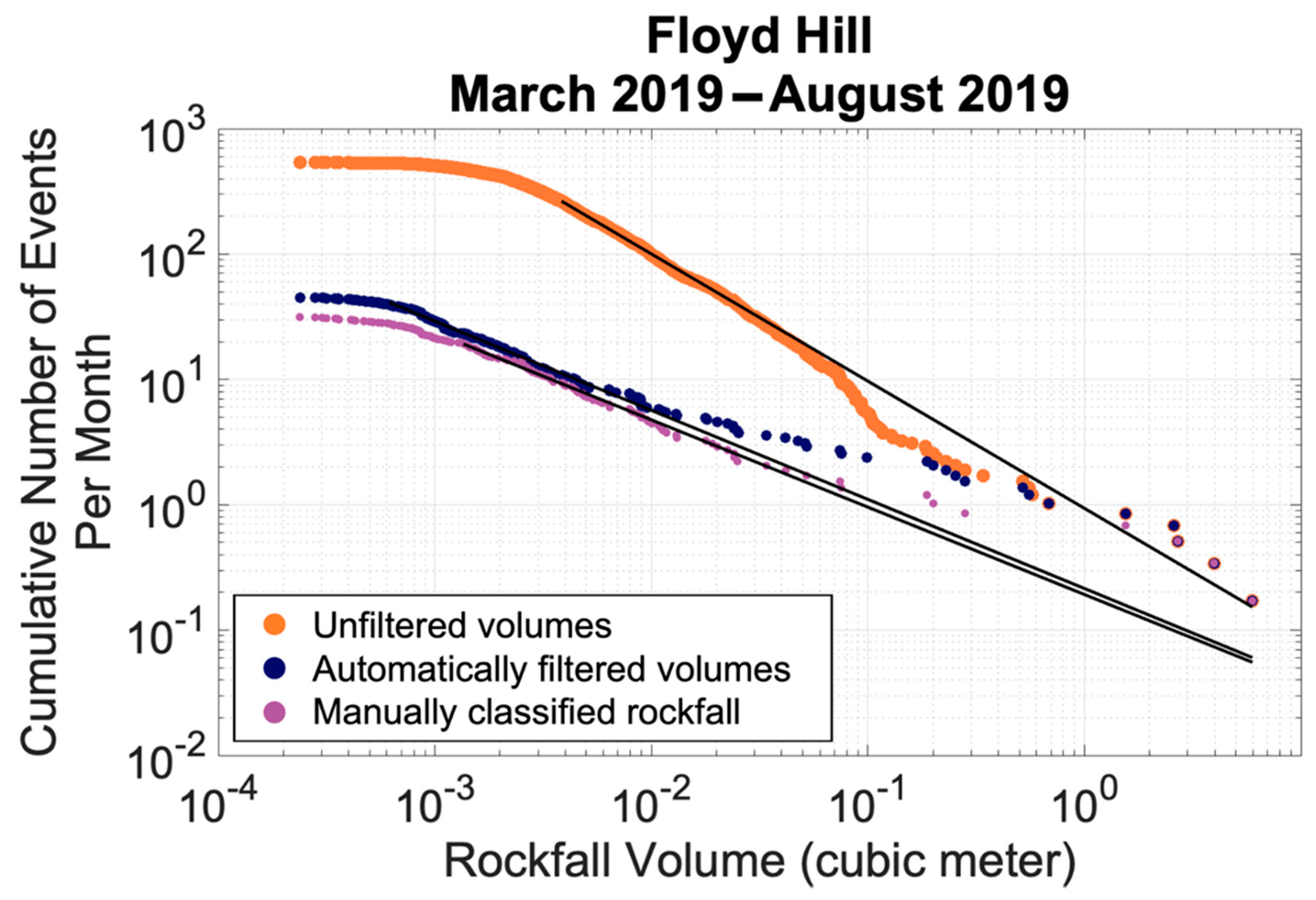

3.2. Results of the Workflow Applied to a Secondary Slope

4. Discussion

5. Conclusions

- Testing the modified M3C2 change detection algorithm showed that limiting the extent of the cylinder projection can reduce errors caused by capturing secondary surfaces.

- Application of the proposed workflow to a secondary slope shows that this workflow can easily be adapted and used to process point cloud data for rock slopes with different characteristics (e.g., point spacing and rock type).

- The proposed machine learning algorithm can be used to provide a classification of volumes, but it is limited by the number and quality of training samples provided. The proposed classification method resulted in false negative rate (FNR) and false positive rate (FPR) values of less than 20% for the initially tested Glenwood Springs site. This proposed method for labelling clusters can save hours of manual volume validation.

- When applied to the secondary Floyd Hill site (without updating the training data), the proposed machine learning algorithm reduced the error (expressed as the percentage of automatic labels that disagreed with the manual labels) to less than 10%. When training data specific to the Floyd Hill site were used to classify clusters, the error was further reduced to less than 6.5%.
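The error measures quoted above can be computed from paired automatic and manual labels as in the Python sketch below. It assumes that `True` marks a cluster labelled as a valid rockfall (the positive class) and that the manual labels are ground truth; the function name is hypothetical.

```python
def classification_rates(auto_labels, manual_labels):
    """FNR, FPR and overall disagreement, all in percent, for binary labels.

    True = cluster labelled as a valid rockfall (assumed positive class);
    manual labels are treated as ground truth.
    """
    pairs = list(zip(auto_labels, manual_labels))
    tp = sum(a and m for a, m in pairs)
    fn = sum((not a) and m for a, m in pairs)
    fp = sum(a and (not m) for a, m in pairs)
    tn = sum((not a) and (not m) for a, m in pairs)
    # FNR = FN / (FN + TP); FPR = FP / (FP + TN)
    fnr = 100.0 * fn / (fn + tp) if fn + tp else float("nan")
    fpr = 100.0 * fp / (fp + tn) if fp + tn else float("nan")
    # Disagreement = share of automatic labels that differ from the manual label
    disagreement = 100.0 * (fn + fp) / len(pairs)
    return fnr, fpr, disagreement
```

For example, with 5 manually confirmed rockfalls of which the classifier misses 1, and 5 manually rejected clusters of which it accepts 2, the sketch returns an FNR of 20%, an FPR of 40% and an overall disagreement of 30%.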

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Telling, J.; Lyda, A.; Hartzell, P.; Glennie, C. Review of Earth science research using terrestrial laser scanning. Earth Sci. Rev. 2017, 169, 35–68.

- Prokop, A. Assessing the applicability of terrestrial laser scanning for spatial snow depth measurements. Cold Reg. Sci. Technol. 2008, 54, 155–163.

- Corripio, J.G.; Durand, Y.; Guyomarc’h, G.; Mérindol, L.; Lecorps, D.; Pugliése, P. Land-based remote sensing of snow for the validation of a snow transport model. Cold Reg. Sci. Technol. 2004, 39, 93–104.

- Deems, J.S.; Painter, T.H.; Finnegan, D.C. Lidar measurement of snow depth: A review. J. Glaciol. 2013, 59, 467–479.

- Rosser, N.J.; Petley, D.; Lim, M.; Dunning, S.; Allison, R.J. Terrestrial laser scanning for monitoring the process of hard rock coastal cliff erosion. Q. J. Eng. Geol. Hydrogeol. 2005, 38.

- Grayson, R.; Holden, J.; Jones, R.R.; Carle, J.A.; Lloyd, A.R. Improving particulate carbon loss estimates in eroding peatlands through the use of terrestrial laser scanning. Geomorphology 2012, 179, 240–248.

- Nagihara, S.; Mulligan, K.R.; Xiong, W. Use of a three-dimensional laser scanner to digitally capture the topography of sand dunes in high spatial resolution. Earth Surf. Process. Landf. 2004, 29, 391–398.

- Wang, W.; Zhao, W.; Huang, L.; Vimarlund, V.; Wang, Z. Applications of terrestrial laser scanning for tunnels: A review. J. Traffic Transp. Eng. (Engl. Ed.) 2014, 1, 325–337.

- Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in landslide investigations: A review. Nat. Hazards 2012, 61, 5–28.

- Abellán, A.; Vilaplana, J.M.; Calvet, J.; Garcia-Sellés, D.; Asensio, E. Rockfall monitoring by Terrestrial Laser Scanning—Case study of the basaltic rock face at Castellfollit de la Roca (Catalonia, Spain). Nat. Hazards Earth Syst. Sci. 2011, 11, 829–841.

- Kromer, R.; Walton, G.; Gray, B.; Lato, M.; Group, R. Development and optimization of an automated fixed-location time lapse photogrammetric rock slope monitoring system. Remote Sens. 2019, 11, 1890.

- Oppikofer, T.; Jaboyedoff, M.; Blikra, L.; Derron, M.H.; Metzger, R. Characterization and monitoring of the Åknes rockslide using terrestrial laser scanning. Nat. Hazards Earth Syst. Sci. 2009, 9, 1003–1019.

- Olsen, M.J.; Wartman, J.; McAlister, M.; Mahmoudabadi, H.; O’Banion, M.S.; Dunham, L.; Cunningham, K. To fill or not to fill: Sensitivity analysis of the influence of resolution and hole filling on point cloud surface modeling and individual rockfall event detection. Remote Sens. 2015, 7, 12103–12134.

- Lim, M.; Petley, D.N.; Rosser, N.J.; Allison, R.J.; Long, A.J.; Pybus, D. Combined digital photogrammetry and time-of-flight laser scanning for monitoring cliff evolution. Photogramm. Rec. 2005, 20, 109–129.

- Abellán, A.; Calvet, J.; Vilaplana, J.; Blanchard, J. Detection and spatial prediction of rockfalls by means of terrestrial laser scanner monitoring. Geomorphology 2010, 119, 162–171.

- Hungr, O.; Evans, S.G.; Hazzard, J. Magnitude and frequency of rock falls and rock slides along the main transportation corridors of southwestern British Columbia. Can. Geotech. J. 1999, 36, 224–238.

- Hungr, O.; McDougall, S.; Wise, M.; Cullen, M. Magnitude–frequency relationships of debris flows and debris avalanches in relation to slope relief. Geomorphology 2008, 96, 355–365.

- van Veen, M.; Hutchinson, D.; Kromer, R.; Lato, M.; Edwards, T. Effects of sampling interval on the frequency—Magnitude relationship of rockfalls detected from terrestrial laser scanning using semi-automated methods. Landslides 2017, 14, 1579–1592.

- Santana, D.; Corominas, J.; Mavrouli, O.; Garcia-Sellés, D. Magnitude–frequency relation for rockfall scars using a Terrestrial Laser Scanner. Eng. Geol. 2012, 145–146, 50–64.

- Benjamin, J.; Rosser, N.J.; Brain, M.J. Emergent characteristics of rockfall inventories captured at a regional scale. Earth Surf. Process. Landf. 2020, 45, 2773–2787.

- Bonneau, D.; Hutchinson, D.; Difrancesco, P.-M.; Coombs, M.; Sala, Z. 3-Dimensional Rockfall Shape Back-Analysis: Methods and Implications. Nat. Hazards Earth Syst. Sci. 2019, 19, 2745–2765.

- De Vilder, S.J.; Rosser, N.J.; Brain, M. Forensic analysis of rockfall scars. Geomorphology 2017, 295, 202–214.

- Kromer, R.A.; Abellán, A.; Hutchinson, D.J.; Lato, M.; Chanut, M.A.; Dubois, L.; Jaboyedoff, M. Automated Terrestrial Laser Scanning with Near Real-Time Change Detection-Monitoring of the Séchilienne Landslide. Earth Surf. Dyn. 2017, 5, 293–310.

- Williams, J.; Rosser, N.J.; Hardy, R.; Brain, M.; Afana, A. Optimising 4-D surface change detection: An approach for capturing rockfall magnitude–frequency. Earth Surf. Dyn. 2018, 6, 101–119.

- Eltner, A.; Kaiser, A.; Abellan, A.; Schindewolf, M. Time lapse structure-from-motion photogrammetry for continuous geomorphic monitoring. Earth Surf. Process. Landf. 2017, 42, 2240–2253.

- Kromer, R.; Hutchinson, D.; Lato, M.; Gauthier, D.; Edwards, T. Identifying rock slope failure precursors using LiDAR for transportation corridor hazard management. Eng. Geol. 2015, 195, 93–103.

- Rosser, N.J.; Lim, M.; Petley, D.; Dunning, S.; Allison, R. Patterns of precursory rockfall prior to slope failure. J. Geophys. Res. 2007, 112, F04014.

- Kromer, R.; Lato, M.; Hutchinson, D.J.; Gauthier, D.; Edwards, T. Managing rockfall risk through baseline monitoring of precursors using a terrestrial laser scanner. Can. Geotech. J. 2017, 54, 953–967.

- Stock, G.M.; Martel, S.J.; Collins, B.D.; Harp, E.L. Progressive failure of sheeted rock slopes: The 2009-2010 Rhombus Wall rock falls in Yosemite Valley, California, USA. Earth Surf. Process. Landf. 2012, 37, 546–561.

- Royán, M.J.; Abellán, A.; Jaboyedoff, M.; Vilaplana, J.M.; Calvet, J. Spatio-temporal analysis of rockfall pre-failure deformation using Terrestrial LiDAR. Landslides 2014, 11, 697–709.

- Delonca, A.; Gunzburger, Y.; Verdel, T. Statistical correlation between meteorological and rockfall databases. Nat. Hazards Earth Syst. Sci. 2014, 14, 1953–1964.

- D’amato, J.; Hantz, D.; Guerin, A.; Jaboyedoff, M.; Baillet, L.; Mariscal, A. Influence of meteorological factors on rockfall occurrence in a middle mountain limestone cliff. Nat. Hazards Earth Syst. Sci. 2016, 16, 719–735.

- Matsuoka, N.; Sakai, H. Rockfall activity from an alpine cliff during thawing periods. Geomorphology 1999, 28, 309–328.

- Luckman, B.H. Rockfalls and rockfall inventory data: Some observations from surprise valley, Jasper National Park, Canada. Earth Surf. Process. 1976, 1, 287–298.

- Pierson, L.A.; Vickle, R.V. Rockfall Hazard Rating System: Participants’ Manual. August 1993. Available online: https://trid.trb.org/view/459774 (accessed on 1 February 2020).

- Pierson, L.A.; Davis, S.A.; van Vickle, R. Rockfall Hazard Rating System: Implementation Manual. August 1990. Available online: https://trid.trb.org/view/361735 (accessed on 1 February 2020).

- Budetta, P.; Nappi, M. Comparison between qualitative rockfall risk rating systems for a road affected by high traffic intensity. Nat. Hazards Earth Syst. Sci. 2013, 13, 1643–1653.

- Santi, P.; Russell, C.P.; Higgins, J.D.; Spriet, J.I. Modification and statistical analysis of the Colorado Rockfall Hazard Rating System. Eng. Geol. 2009, 104, 55–65.

- Chau, K.T.; Wong, R.H.C.; Liu, J.; Lee, C.F. Rockfall Hazard Analysis for Hong Kong Based on Rockfall Inventory. Rock Mech. Rock Engng 2003, 36, 383–408.

- Carrea, D.; Abellán, A.; Derron, M.-H.; Jaboyedoff, M. Automatic Rockfalls Volume Estimation Based on Terrestrial Laser Scanning Data. In Engineering Geology for Society and Territory—Volume 2; Springer International Publishing: Cham, Switzerland, 2015; pp. 425–428.

- Williams, J. Insights into Rockfall from Constant 4D Monitoring; Durham University: Durham, UK, 2017.

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (N-Z). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26.

- DiFrancesco, P.-M.; Bonneau, D.; Hutchinson, D.J. The Implications of M3C2 Projection Diameter on 3D Semi-Automated Rockfall Extraction from Sequential Terrestrial Laser Scanning Point Clouds. Remote Sens. 2020, 12, 1885.

- Bonneau, D.; Difrancesco, P.-M.; Hutchinson, D. Surface Reconstruction for Three-Dimensional Rockfall Volumetric Analysis. ISPRS Int. J. Geo-Inf. 2019, 8, 548.

- Kromer, R.; Abellán, A.; Hutchinson, D.; Lato, M.; Edwards, T.; Jaboyedoff, M. A 4D Filtering and Calibration Technique for Small-Scale Point Cloud Change Detection with a Terrestrial Laser Scanner. Remote Sens. 2015, 7, 13029–13052.

- Edelsbrunner, H.; Mücke, E.P. Three-dimensional alpha shapes. In Proceedings of the 1992 Workshop on Volume Visualization, VVS 1992, New York, NY, USA, 19–20 October 1992; pp. 75–82.

- Amenta, N.; Choi, S.; Kolluri, R.K. The power crust. In Proceedings of the Symposium on Solid Modeling and Applications, Ann Arbor, MI, USA, 4–8 June 2001; pp. 249–260.

- Mejía-Navarro, M.; Wohl, E.; Oaks, S. Geological hazards, vulnerability, and risk assessment using GIS: Model for Glenwood Springs, Colorado. Geomorphology 1994, 10, 331–354.

- Kirkham, R.M.; Streufert, R.K.; Cappa, J.A.; Shaw, C.A.; Allen, J.L.; Schroeder, T.J.I. Geologic Map of the Glenwood Springs Quadrangle, Garfield County, Colorado. 2009. Available online: https://ngmdb.usgs.gov/Prodesc/proddesc_94610.htm (accessed on 4 March 2020).

- Abellán, A.; Oppikofer, T.; Jaboyedoff, M.; Rosser, N.; Lim, M.; Lato, M. Terrestrial laser scanning of rock slope instabilities. Earth Surf. Process. Landf. 2014, 39, 80–97.

- Rusu, R.; Marton, Z.C.; Blodow, N.; Dolha, M.; Beetz, M. Towards 3D Point cloud based object maps for household environments. Rob. Auton. Syst. 2008, 56, 927–941.

- Gomez, C.; Purdie, H. UAV- based Photogrammetry and Geocomputing for Hazards and Disaster Risk Monitoring—A Review. Geoenviron. Disasters 2016, 3, 1–11.

- Cheng, L.; Chen, S.; Liu, X.; Xu, H.; Wu, Y.; Li, M.; Chen, Y. Registration of Laser Scanning Point Clouds: A Review. Sensors 2018, 18, 1641.

- Zhong, Y. Intrinsic shape signatures: A shape descriptor for 3D object recognition. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 689–696.

- Rusu, R.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

- Holz, D.; Ichim, A.; Tombari, F.; Rusu, R.; Behnke, S. Registration with the Point Cloud Library: A Modular Framework for Aligning in 3-D. IEEE Robot. Autom. Mag. 2015, 22, 110–124.

- Chen, Y.; Medioni, G. Object modeling by registration of multiple range images. In Proceedings of the 1991 IEEE International Conference on Robotics and Automation, Sacramento, CA, USA, 9–11 April 1991; pp. 2724–2729.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

- He, Y.; Liang, B.; Yang, J.; Li, S.; He, J. An Iterative Closest Points Algorithm for Registration of 3D Laser Scanner Point Clouds with Geometric Features. Sensors 2017, 17, 1862.

- Pulli, K. Multiview registration for large data sets. In Proceedings of the 2nd International Conference on 3-D Digital Imaging and Modeling, Ottawa, ON, Canada, 4–8 October 1999; pp. 160–168.

- Pomerleau, F.; Colas, F.; Ferland, F.; Michaud, F. Relative Motion Threshold for Rejection in ICP Registration. Springer Tracts Adv. Robot. 2010, 62, 229–238.

- Weidner, L.; Walton, G.; Kromer, R. Classification methods for point clouds in rock slope monitoring: A novel machine learning approach and comparative analysis. Eng. Geol. 2019, 263.

- Girardeau-Montaut, D.; Roux, M.; Marc, R.; Thibault, G. Change detection on points cloud data acquired with a ground laser scanner. In Proceedings of the ISPRS WG III/3, III/4, V/3 Workshop “Laser scanning 2005”, Enschede, The Netherlands, 12–14 September 2005; pp. 30–35.

- Guerin, A.; Rosetti, J.-P.; Hantz, D.; Jaboyedoff, M. Estimating rock fall frequency in a limestone cliff using LIDAR measurements. In Proceedings of the International Conference on Landslide Risk, Tabarka, Tunisia, 14–16 March 2013; Available online: https://www.researchgate.net/publication/259873223_Estimating_rock_fall_frequency_in_a_limestone_cliff_using_LIDAR_measurements (accessed on 6 April 2020).

- Tonini, M.; Abellán, A. Rockfall detection from terrestrial lidar point clouds: A clustering approach using R. J. Spat. Inf. Sci. 2013, 8, 95–110.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD’96), Portland, OR, USA, 2–4 August 1996; Volume 3, pp. 226–231. Available online: http://citeseer.ist.psu.edu/viewdoc/summary?doi=10.1.1.121.9220 (accessed on 21 September 2019).

- Collett, D. Modelling Binary Data, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2002.

- The Mathworks Inc. FitGLM. 2020. Available online: https://www.mathworks.com/help/stats/fitglm.html (accessed on 26 February 2020).

- Dietterich, T. Overfitting and Undercomputing in Machine Learning. ACM Comput. Surv. 1995, 27, 326–327.

- The MathWorks Inc. TreeBagger. 2020. Available online: https://www.mathworks.com/help/stats/treebagger.html (accessed on 26 February 2020).

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Sheridan, D.M.; Reed, J.C.; Bryant, B. Geologic Map of the Evergreen Quadrangle, Jefferson County, Colorado. U.S. Geological Survey 1972. Available online: https://ngmdb.usgs.gov/Prodesc/proddesc_9606.htm (accessed on 27 February 2021).

- Sheridan, D.M.; Marsh, S.P. Geologic Map of the Squaw Pass quadrangle, Clear Creek, Jefferson, and Gilpin Counties, Colorado. 1976. Available online: https://ngmdb.usgs.gov/ngm-bin/pdp/zui_viewer.pl?id=15298 (accessed on 6 January 2020).

| Parameter | Use(s) | Value(s) | Guidance |
|---|---|---|---|
| SOR (Statistical Outlier Removal) Filter | Reducing inconsistencies in surface point density. | 50 nearest neighbors | The SOR filter should be tuned to ensure that regions of bedrock covered by the scanner are not removed. |
| Voxel Filter | Temporarily subsample to increase efficiency of rough alignment. | 0.45 m | Subsampling should be greater than the average point spacing of the point cloud. |
| Uniform Sampling Filter | (a) Temporarily subsample to increase efficiency of rough alignment; (b) Subsample to a desired point spacing. | (a) 0.3 m; (b) 0.02 m | Subsampling should be greater than the average point spacing of the point cloud. |
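The SOR filter in the first row can be sketched in Python/NumPy, assuming the common definition (the one used by PCL's StatisticalOutlierRemoval filter): a point is removed when its mean distance to its k nearest neighbors exceeds the global mean of that statistic plus a multiple of its standard deviation. The multiplier is not given in the table, so `std_mult=1.0` is an assumption, the function name is hypothetical, and the brute-force distance matrix is only suitable for small clouds.

```python
import numpy as np


def statistical_outlier_removal(points, k=50, std_mult=1.0):
    """Keep points whose mean distance to their k nearest neighbors is at most
    (global mean + std_mult * global std) of that statistic."""
    # Brute-force pairwise distances; use a k-d tree for real scans
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    d.sort(axis=1)
    mean_knn = d[:, 1:k + 1].mean(axis=1)   # column 0 is each point's distance to itself
    threshold = mean_knn.mean() + std_mult * mean_knn.std()
    return points[mean_knn <= threshold]
```

Lowering `std_mult` removes more points; as the guidance notes, the filter must be tuned so that sparsely covered bedrock is not discarded along with true outliers.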

| Parameter | Use(s) | Value(s) | Guidance |
|---|---|---|---|
| Normal Radius | Radius used to construct normals for (a) the coarse alignment and (b) the fine alignment. Must always be larger than the subsampling used to increase efficiency. | (a) 3 m; (b) 1.2 m | Normal radius should be greater than the subsampling. |
| Feature Radius | Search radius used by the PCL algorithm to generate feature histograms and find keypoints. Should be larger than the normal radius. | 4.5 m | Feature radius should be greater than the normal radius. |
| Inlier Distance | A metric used to define the threshold between inlier and outlier points for keypoint rejection in the coarse alignment. | 0.25 m | Inlier distance should be approximately 10× the average point spacing. |
| Threshold Degree | A threshold defining how much the normals in a correspondence pair can deviate from one another. | 40 degrees | The threshold degree should be tuned based on the geometry of the slope's jointing. Testing showed an optimal threshold degree between 38 and 42 degrees. |
| Multiple of S.D. | The distance, as a multiple of the standard deviation of nearest-neighbor distances, within which valid correspondence pairs should lie with respect to one another. | 1.3 | The multiple of the standard deviation should be reduced to remove more outliers; however, a multiplier less than 1 was shown to reject all correspondences in most cases. |
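The Normal Radius and Threshold Degree parameters can be illustrated with a Python/NumPy sketch that estimates a normal by principal component analysis of the neighbors within the radius (the eigenvector of the neighborhood covariance with the smallest eigenvalue), and then tests whether the normals of a correspondence pair deviate by more than the threshold. This mirrors common practice but is an assumption about how the workflow applies these parameters; the function names are hypothetical.

```python
import numpy as np


def estimate_normal(points, query, radius):
    """Unit normal at `query` from PCA of the neighbors within `radius`.

    The sign of a PCA normal is arbitrary.
    """
    nbrs = points[np.linalg.norm(points - query, axis=1) <= radius]
    if len(nbrs) < 3:
        raise ValueError("need at least 3 neighbors to fit a plane")
    cov = np.cov(nbrs.T)                     # 3 x 3 covariance of the neighborhood
    _, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    return eigvecs[:, 0]                     # direction of least variance


def normals_compatible(n1, n2, threshold_deg=40.0):
    """True if two normals deviate by no more than threshold_deg.

    abs() makes the test insensitive to the arbitrary sign of PCA normals.
    """
    cos_angle = abs(np.dot(n1, n2)) / (np.linalg.norm(n1) * np.linalg.norm(n2))
    angle = np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0)))
    return angle <= threshold_deg
```

With the 40 degree threshold, a pair whose normals differ by 30 degrees is kept and a pair differing by 50 degrees is rejected.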

| Parameter | Glenwood Springs | Floyd Hill | Comment |
|---|---|---|---|
| Voxel Subsampling Radius (coarse alignment) | 0.45 m | 0.5 m | The higher voxel subsampling at the Floyd Hill site was used to accommodate the lower point density (greater point spacing) and greater roughness (due to geology) in the raw scans. |
| Normal Radius (coarse alignment) | 3 m | 3 m | |
| Feature Radius (coarse alignment) | 4.5 m | 4.5 m | |
| Inlier Distance (coarse alignment) | 1.3 | 1.3 | |
| Uniform Subsampling Radius (fine alignment) | 0.3 m | 0.3 m | |
| Normal Radius (fine alignment) | 1.2 m | 1.2 m | |
| Threshold Degree (fine alignment) | 40 degrees | 40 degrees | |
| Threshold Radius (point classification) | 0.9 m | 0.9 m | |
| Normal Radius (change calculation) | 0.25 m | 0.25 m | |
| Projection Radius (change calculation) | 0.15 m | 0.08 m | The cylinder radius was decreased due to updated recommendations provided by DiFrancesco et al. [44]. |
| Epsilon (DBSCAN) | 0.1 m | 0.1 m | |
| Minimum Points (DBSCAN) | 35 | 16 | Differences were due to lower point density at Floyd Hill. |
| Probability Threshold | 0.325 | 0.104 | |
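The Epsilon and Minimum Points rows are the two DBSCAN parameters (Ester et al.). A from-scratch Python/NumPy sketch shows how they act: a point is a core point when at least `min_pts` points (itself included) lie within `eps`, and clusters grow outward from core points, while points reachable from no core point are labelled noise. The function name is hypothetical, and the brute-force distance matrix limits this sketch to small examples; real point clouds would need a spatial index.

```python
import numpy as np
from collections import deque


def dbscan(points, eps, min_pts):
    """Label points with cluster ids 0, 1, ...; noise points get -1."""
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    neighbours = [np.flatnonzero(row <= eps) for row in dist]   # includes the point itself
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster = 0
    for i in range(n):
        if visited[i] or len(neighbours[i]) < min_pts:
            continue
        # i is an unvisited core point: start a new cluster and expand it
        visited[i] = True
        labels[i] = cluster
        queue = deque(neighbours[i])
        while queue:
            j = queue.popleft()
            if labels[j] == -1:
                labels[j] = cluster               # border or core point joins the cluster
            if visited[j]:
                continue
            visited[j] = True
            if len(neighbours[j]) >= min_pts:
                queue.extend(neighbours[j])       # only core points expand further
        cluster += 1
    return labels
```

With `eps` fixed, a lower `min_pts` lets sparser groups of points form clusters, which is consistent with the smaller Minimum Points value used for the lower-density Floyd Hill scans.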

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Schovanec, H.; Walton, G.; Kromer, R.; Malsam, A. Development of Improved Semi-Automated Processing Algorithms for the Creation of Rockfall Databases. Remote Sens. 2021, 13, 1479. https://doi.org/10.3390/rs13081479