Hyperspectral Image Super-Resolution with Self-Supervised Spectral-Spatial Residual Network

Abstract

1. Introduction

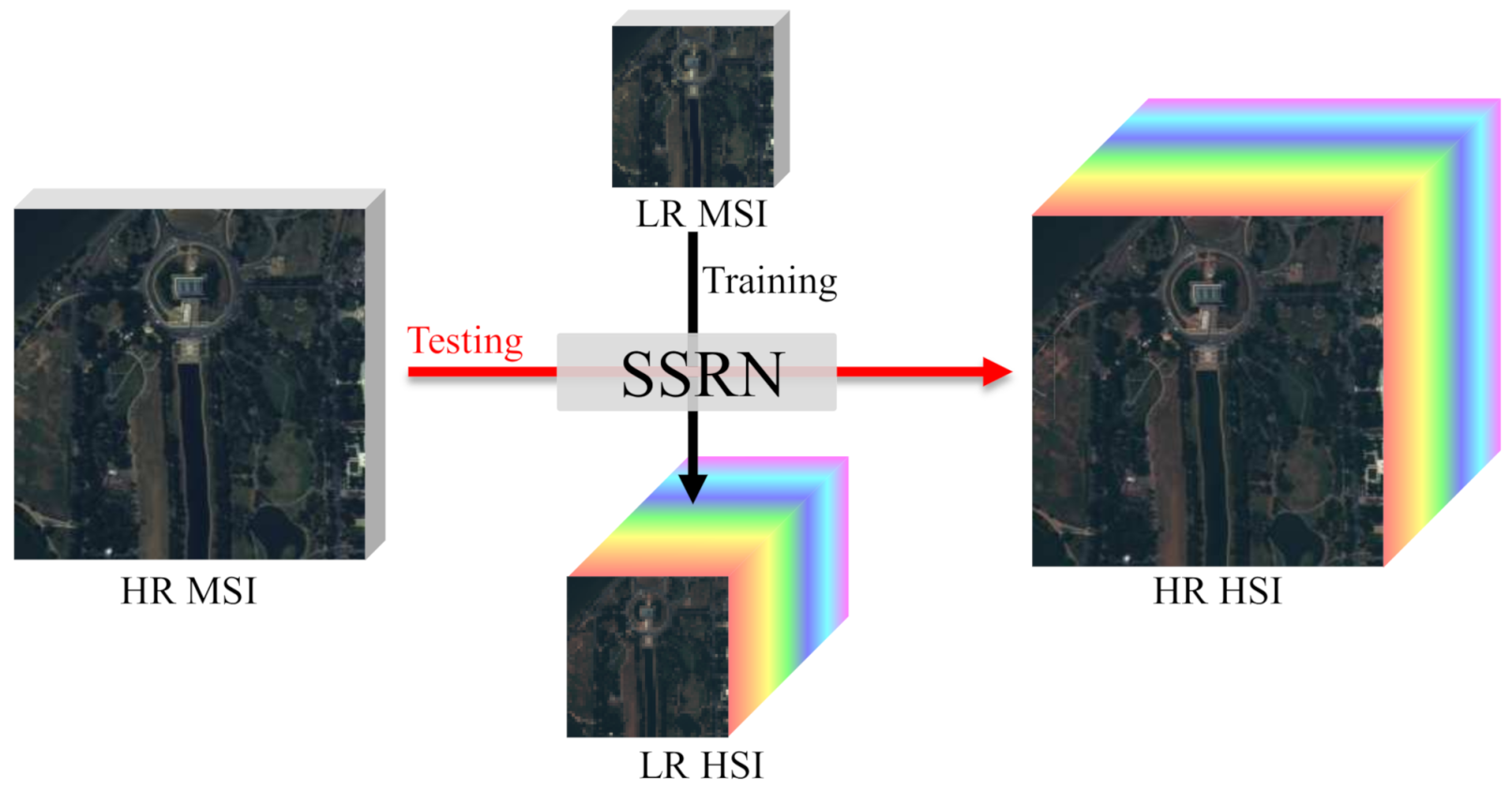

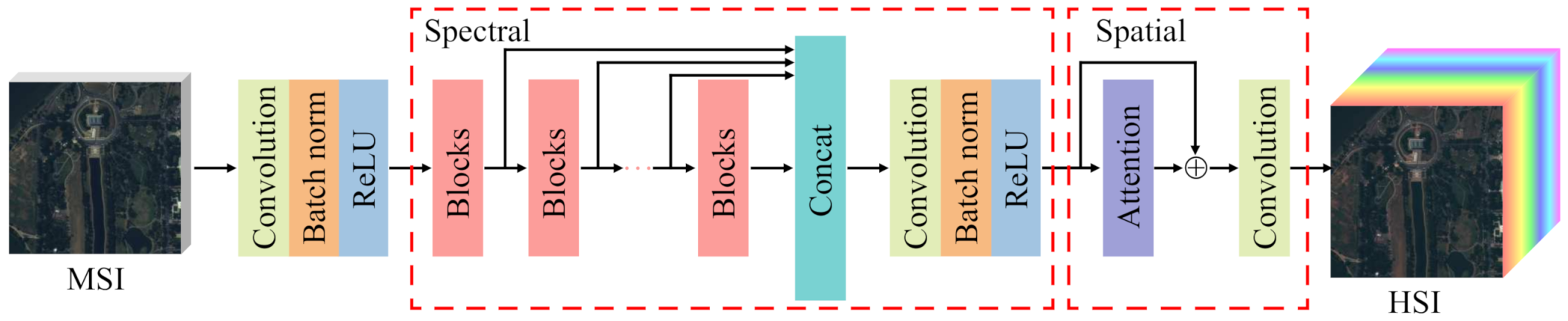

- A spectral-spatial residual network (SSRN) is proposed that treats the fusion of high-resolution multispectral images (HR MSIs) and low-resolution hyperspectral images (LR HSIs) as a pixel-wise spectral mapping problem. In SSRN, the HR HSI is estimated from the HR MSI at the desired spatial resolution, which effectively preserves the spatial structures of the HR HSI.

- A self-supervised fine-tuning strategy is proposed to help SSRN learn the optimal spectral mapping. The fine-tuning does not require HR HSIs as supervision.

- A spatial module equipped with an attention mechanism is proposed to exploit the complementarity of adjacent pixels. The attention mechanism extracts spectral-spatial features from homogeneous adjacent pixels, which benefits the learning of the pixel-wise spectral mapping.
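The first two contributions can be illustrated with a toy example. The sketch below is not the authors' implementation. A linear per-pixel map stands in for the network, block averaging stands in for the spatial degradation (which this text does not specify), and the idea that the self-supervised signal compares the degraded estimate with the observed LR HSI is an assumption. All function names are hypothetical.

```python
# Toy sketch under stated assumptions: a linear per-pixel map replaces SSRN,
# and block averaging replaces the (unspecified) spatial degradation.

def map_pixel(msi_pixel, weights):
    """Map one MSI spectrum (b bands) to an HSI spectrum (B bands).
    weights is a B x b matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, msi_pixel)) for row in weights]

def estimate_hr_hsi(hr_msi, weights):
    """Apply the spectral mapping independently at every pixel, so the
    estimate keeps the spatial grid of the HR MSI."""
    return [[map_pixel(pixel, weights) for pixel in row] for row in hr_msi]

def downsample(hsi, scale):
    """Average non-overlapping scale x scale blocks (assumed degradation).
    Height and width are assumed to be multiples of scale."""
    rows, cols, bands = len(hsi), len(hsi[0]), len(hsi[0][0])
    out = []
    for r in range(0, rows, scale):
        out_row = []
        for c in range(0, cols, scale):
            block = [hsi[r + i][c + j] for i in range(scale) for j in range(scale)]
            out_row.append([sum(p[k] for p in block) / len(block) for k in range(bands)])
        out.append(out_row)
    return out

def self_supervised_loss(hr_msi, lr_hsi, weights, scale):
    """Mean squared error between the degraded estimate and the observed
    LR HSI. No HR HSI is needed to evaluate this loss."""
    degraded = downsample(estimate_hr_hsi(hr_msi, weights), scale)
    squared_error, count = 0.0, 0
    for est_row, obs_row in zip(degraded, lr_hsi):
        for est, obs in zip(est_row, obs_row):
            for e, o in zip(est, obs):
                squared_error += (e - o) ** 2
                count += 1
    return squared_error / count

# Usage: a 2 x 2 HR MSI with 2 bands and a 1 x 1 LR HSI with 3 bands.
hr_msi = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
true_weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
lr_hsi = downsample(estimate_hr_hsi(hr_msi, true_weights), 2)
zero_weights = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
print(self_supervised_loss(hr_msi, lr_hsi, true_weights, 2))
print(self_supervised_loss(hr_msi, lr_hsi, zero_weights, 2))
```

Because the mapping acts on each pixel separately, the estimate inherits the spatial layout of the HR MSI, and the loss only touches data that are actually observed.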

2. Related Work

2.1. Traditional Methods

2.2. Deep Learning-Based Methods

3. Materials and Methods

3.1. Proposed Method

3.1.1. Problem Formulation

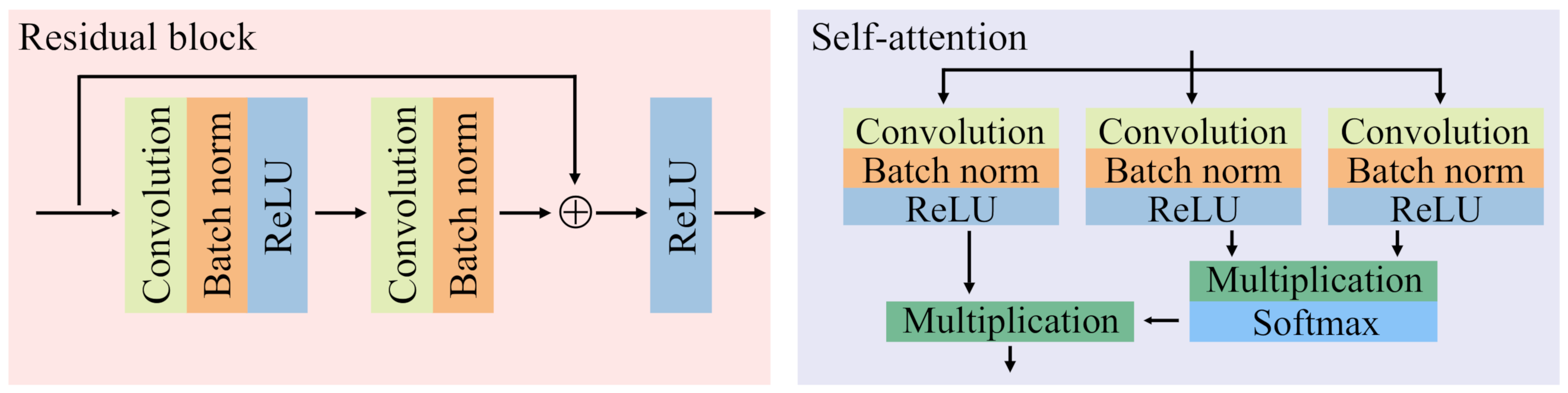

3.1.2. Architecture of SSRN

3.1.3. Loss Function

3.1.4. Self-Supervised Fine-Tuning

3.2. Software and Package

3.3. Databases

3.4. Evaluation Metrics
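The tables in this article report PSNR and SAM, among other metrics. As a reminder of what these two measure, the sketch below uses their standard textbook definitions. The peak value, the averaging convention, and the angle unit used by the authors are not given in this text, so `peak=255.0` and degrees are assumptions, and the function names are hypothetical.

```python
import math

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB over two flat lists of values.
    The peak value is an assumption; higher PSNR means lower error."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)

def spectral_angle(ref_spectrum, est_spectrum):
    """Angle in degrees between two spectra (both assumed non-zero)."""
    dot = sum(r * e for r, e in zip(ref_spectrum, est_spectrum))
    norm = math.sqrt(sum(r * r for r in ref_spectrum)) * math.sqrt(
        sum(e * e for e in est_spectrum)
    )
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))

def sam(reference_pixels, estimated_pixels):
    """Spectral angle mapper: mean spectral angle over all pixels.
    Lower SAM means less spectral distortion."""
    angles = [spectral_angle(r, e) for r, e in zip(reference_pixels, estimated_pixels)]
    return sum(angles) / len(angles)
```

Note that the spectral angle ignores a per-pixel scaling of the spectrum, which is why SAM is usually reported alongside an intensity-sensitive metric such as PSNR or RMSE.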

4. Results

4.1. Parameter Settings of SSRN

4.1.1. Number of Convolutional Kernels

4.1.2. Number of Residual Blocks

4.1.3. Balancing Parameter

4.2. Ablation Study

4.3. Comparisons with Other Methods on Simulated Databases

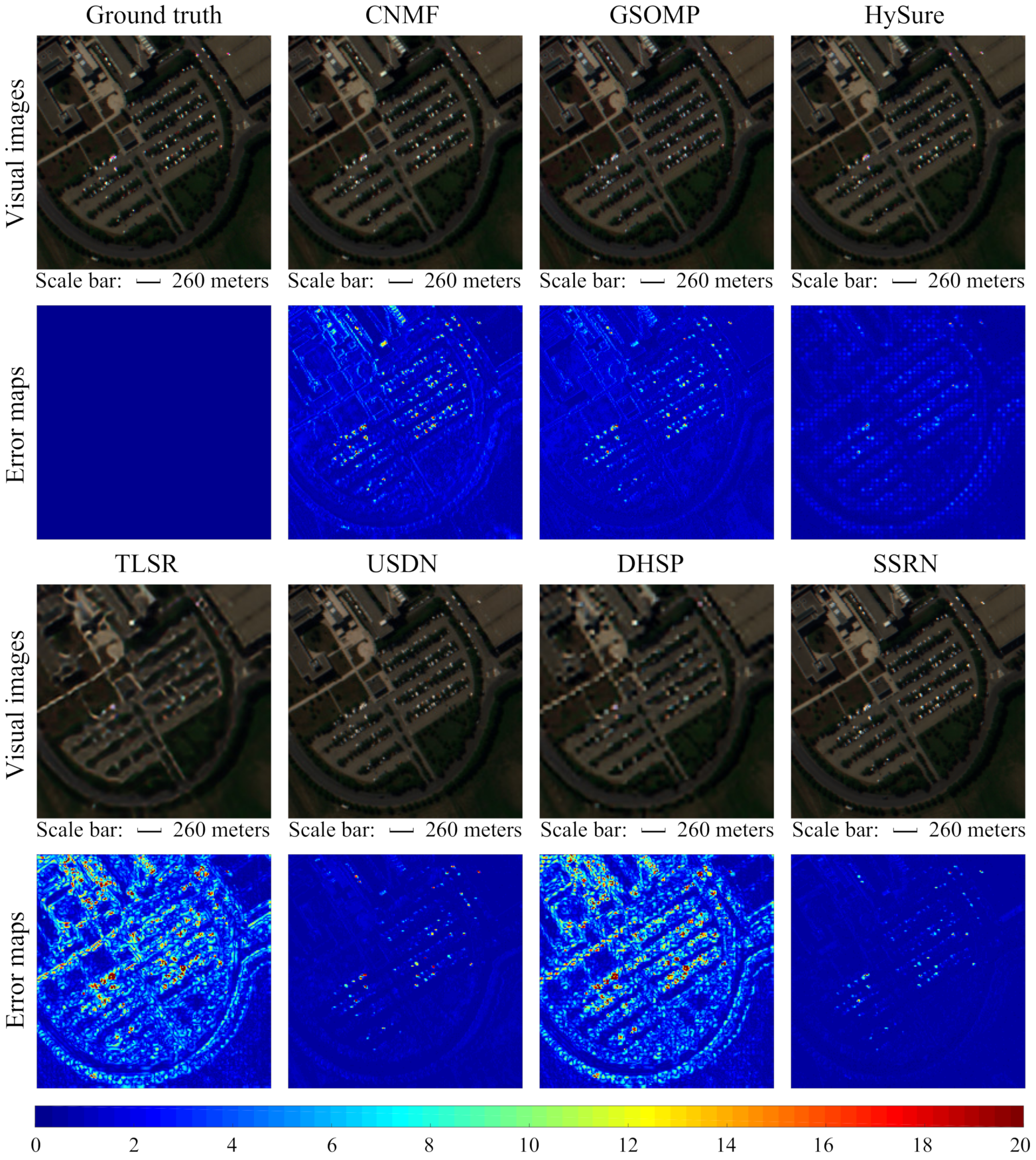

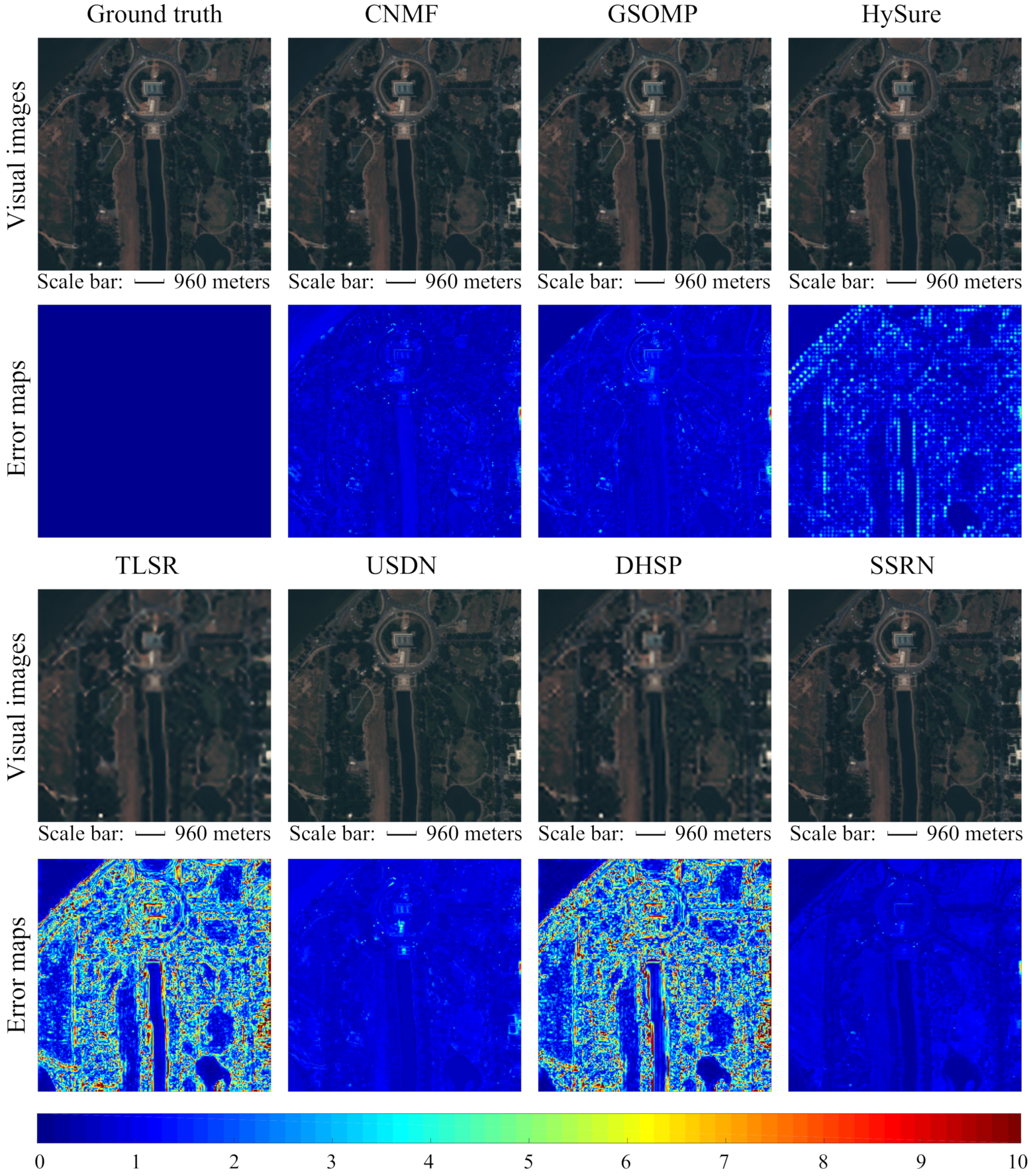

4.3.1. PU Database

4.3.2. WDCM Database

4.4. Comparisons with Other Methods on Real Databases

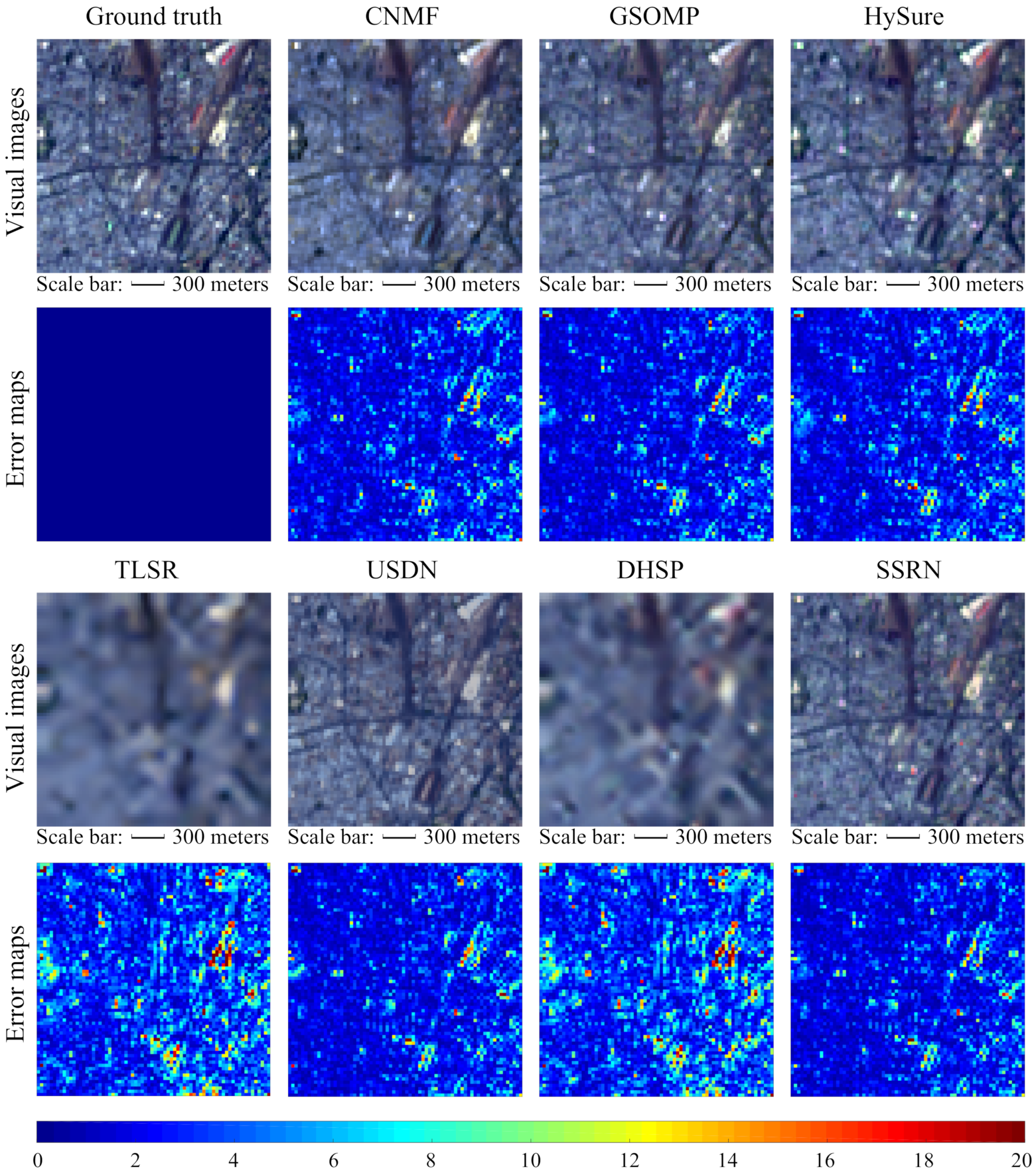

4.4.1. Paris Database

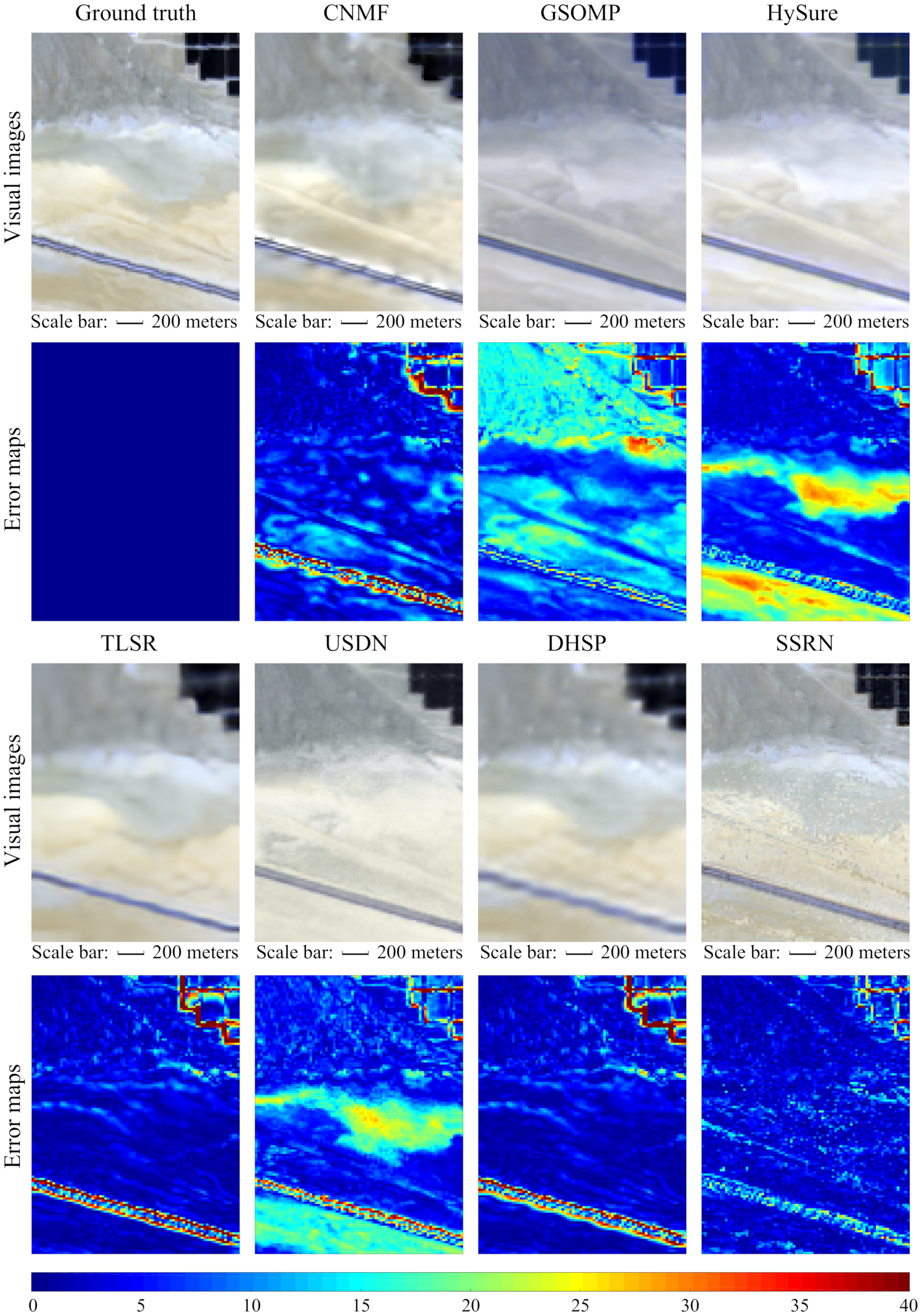

4.4.2. Ivanpah Playa Database

4.5. Time Cost

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Borsoi, R.A.; Imbiriba, T.; Bermudez, J.C.M. Super-Resolution for Hyperspectral and Multispectral Image Fusion Accounting for Seasonal Spectral Variability. IEEE Trans. Image Process. 2020, 29, 116–127.

2. Sun, H.; Zheng, X.; Lu, X.; Wu, S. Spectral-Spatial Attention Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3232–3245.

3. Zhao, X.; Li, W.; Zhang, M.; Tao, R.; Ma, P. Adaptive Iterated Shrinkage Thresholding-Based Lp-Norm Sparse Representation for Hyperspectral Imagery Target Detection. Remote Sens. 2020, 12, 3991.

4. Ren, X.; Lu, L.; Chanussot, J. Toward Super-Resolution Image Construction Based on Joint Tensor Decomposition. Remote Sens. 2020, 12, 2535.

5. Zhang, K.; Wang, M.; Yang, S. Multispectral and Hyperspectral Image Fusion Based on Group Spectral Embedding and Low-Rank Factorization. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1363–1371.

6. Chen, W.; Lu, X. Unregistered Hyperspectral and Multispectral Image Fusion with Synchronous Nonnegative Matrix Factorization. In Chinese Conference on Pattern Recognition and Computer Vision, Proceedings of the Third Chinese Conference, PRCV 2020, Nanjing, China, 16–18 October 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 602–614.

7. Liu, W.; Lee, J. An Efficient Residual Learning Neural Network for Hyperspectral Image Superresolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1240–1253.

8. Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simões, M.; et al. Hyperspectral Pansharpening: A Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

9. Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and Multispectral Data Fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

10. Han, X.; Shi, B.; Zheng, Y. Self-Similarity Constrained Sparse Representation for Hyperspectral Image Super-Resolution. IEEE Trans. Image Process. 2018, 27, 5625–5637.

11. Feng, X.; He, L.; Cheng, Q.; Long, X.; Yuan, Y. Hyperspectral and Multispectral Remote Sensing Image Fusion Based on Endmember Spatial Information. Remote Sens. 2020, 12, 1009.

12. Simões, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A Convex Formulation for Hyperspectral Image Superresolution via Subspace-Based Regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3373–3388.

13. Wei, Q.; Dobigeon, N.; Tourneret, J. Fast Fusion of Multi-Band Images Based on Solving a Sylvester Equation. IEEE Trans. Image Process. 2015, 24, 4109–4121.

14. Zhou, Y.; Feng, L.; Hou, C.; Kung, S. Hyperspectral and Multispectral Image Fusion Based on Local Low Rank and Coupled Spectral Unmixing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5997–6009.

15. Zhang, K.; Wang, M.; Yang, S.; Jiao, L. Spatial-Spectral-Graph-Regularized Low-Rank Tensor Decomposition for Multispectral and Hyperspectral Image Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1030–1040.

16. Dian, R.; Li, S.; Fang, L.; Lu, T.; Bioucas-Dias, J.M. Nonlocal Sparse Tensor Factorization for Semiblind Hyperspectral and Multispectral Image Fusion. IEEE Trans. Cybern. 2020, 50, 4469–4480.

17. Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.

18. Zhang, L.; Nie, J.; Wei, W.; Zhang, Y.; Liao, S.; Shao, L. Unsupervised Adaptation Learning for Hyperspectral Imagery Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 3070–3079.

19. Zhang, X.; Huang, W.; Wang, Q.; Li, X. SSR-NET: Spatial-Spectral Reconstruction Network for Hyperspectral and Multispectral Image Fusion. IEEE Trans. Geosci. Remote Sens. 2020, 1–13.

20. Xie, Q.; Zhou, M.; Zhao, Q.; Meng, D.; Zuo, W.; Xu, Z. Multispectral and Hyperspectral Image Fusion by MS/HS Fusion Net. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1585–1594.

21. Dian, R.; Li, S.; Guo, A.; Fang, L. Deep Hyperspectral Image Sharpening. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 5345–5355.

22. Xie, W.; Jia, X.; Li, Y.; Lei, J. Hyperspectral Image Super-Resolution Using Deep Feature Matrix Factorization. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6055–6067.

23. Wei, Y.; Yuan, Q.; Shen, H.; Zhang, L. Boosting the Accuracy of Multispectral Image Pansharpening by Learning a Deep Residual Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1795–1799.

24. Wang, W.; Zeng, W.; Huang, Y.; Ding, X.; Paisley, J. Deep Blind Hyperspectral Image Fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Long Beach, CA, USA, 16–20 June 2019; pp. 4149–4158.

25. Li, K.; Xie, W.; Du, Q.; Li, Y. DDLPS: Detail-Based Deep Laplacian Pansharpening for Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8011–8025.

26. Yuan, Y.; Zheng, X.; Lu, X. Hyperspectral Image Superresolution by Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1963–1974.

27. Sidorov, O.; Hardeberg, J.Y. Deep Hyperspectral Prior: Single-Image Denoising, Inpainting, Super-Resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Long Beach, CA, USA, 16–20 June 2019; pp. 3844–3851.

28. Qu, Y.; Qi, H.; Kwan, C. Unsupervised Sparse Dirichlet-Net for Hyperspectral Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2511–2520.

29. Fang, L.; Zhuo, H.; Li, S. Super-resolution of hyperspectral image via superpixel-based sparse representation. Neurocomputing 2018, 273, 171–177.

30. Akhtar, N.; Shafait, F.; Mian, A. Sparse Spatio-spectral Representation for Hyperspectral Image Super-resolution. In European Conference on Computer Vision, Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 63–78.

31. Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J. Hyperspectral and Multispectral Image Fusion Based on a Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

32. Wei, Q.; Dobigeon, N.; Tourneret, J. Bayesian fusion of hyperspectral and multispectral images. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 3176–3180.

33. Eismann, M.T.; Hardie, R.C. Hyperspectral resolution enhancement using high-resolution multispectral imagery with arbitrary response functions. IEEE Trans. Geosci. Remote Sens. 2005, 43, 455–465.

34. Wei, Q.; Dobigeon, N.; Tourneret, J. Bayesian Fusion of Multi-Band Images. IEEE J. Sel. Top. Signal Process. 2015, 9, 1117–1127.

35. Irmak, H.; Akar, G.B.; Yuksel, S.E. A MAP-Based Approach for Hyperspectral Imagery Super-Resolution. IEEE Trans. Image Process. 2018, 27, 2942–2951.

36. Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled Nonnegative Matrix Factorization Unmixing for Hyperspectral and Multispectral Data Fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

37. Lin, C.; Ma, F.; Chi, C.; Hsieh, C. A Convex Optimization-Based Coupled Nonnegative Matrix Factorization Algorithm for Hyperspectral and Multispectral Data Fusion. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1652–1667.

38. Li, S.; Dian, R.; Fang, L.; Bioucas-Dias, J.M. Fusing Hyperspectral and Multispectral Images via Coupled Sparse Tensor Factorization. IEEE Trans. Image Process. 2018, 27, 4118–4130.

39. Li, J.; Cui, R.; Li, B.; Song, R.; Li, Y.; Du, Q. Hyperspectral Image Super-Resolution with 1D-2D Attentional Convolutional Neural Network. Remote Sens. 2019, 11, 2859.

40. Fu, Y.; Zhang, T.; Zheng, Y.; Zhang, D.; Huang, H. Hyperspectral Image Super-Resolution with Optimized RGB Guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 11653–11662.

41. Wang, Z.; Chen, B.; Lu, R.; Zhang, H.; Liu, H.; Varshney, P.K. FusionNet: An Unsupervised Convolutional Variational Network for Hyperspectral and Multispectral Image Fusion. IEEE Trans. Image Process. 2020, 29, 7565–7577.

42. Huang, W.; Xiao, L.; Wei, Z.; Liu, H.; Tang, S. A New Pan-Sharpening Method with Deep Neural Networks. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1037–1041.

43. Li, J.; Wu, C.; Song, R.; Xie, W.; Ge, C.; Li, B.; Li, Y. Hybrid 2-D-3-D Deep Residual Attentional Network with Structure Tensor Constraints for Spectral Super-Resolution of RGB Images. IEEE Trans. Geosci. Remote Sens. 2020, 1–15.

44. Fu, Y.; Zhang, T.; Zheng, Y.; Zhang, D.; Huang, H. Joint Camera Spectral Response Selection and Hyperspectral Image Recovery. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

45. Akhtar, N.; Mian, A. Hyperspectral Recovery from RGB Images using Gaussian Processes. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 100–113.

46. Yan, L.; Wang, X.; Zhao, M.; Kaloorazi, M.; Chen, J.; Rahardja, S. Reconstruction of Hyperspectral Data from RGB Images with Prior Category Information. IEEE Trans. Comput. Imaging 2020, 6, 1070–1081.

47. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

48. Song, W.; Li, S.; Fang, L.; Lu, T. Hyperspectral Image Classification with Deep Feature Fusion Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3173–3184.

49. Sun, H.; Li, S.; Zheng, X.; Lu, X. Remote Sensing Scene Classification by Gated Bidirectional Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 82–96.

50. Sun, H.; Zheng, X.; Lu, X. A Supervised Segmentation Network for Hyperspectral Image Classification. IEEE Trans. Image Process. 2021, 30, 2810–2825.

51. Lu, X.; Dong, L.; Yuan, Y. Subspace Clustering Constrained Sparse NMF for Hyperspectral Unmixing. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3007–3019.

52. Du, X.; Zheng, X.; Lu, X.; Doudkin, A.A. Multisource Remote Sensing Data Classification with Graph Fusion Network. IEEE Trans. Geosci. Remote Sens. 2021, 1–11.

53. Dian, R.; Li, S. Hyperspectral Image Super-Resolution via Subspace-Based Low Tensor Multi-Rank Regularization. IEEE Trans. Image Process. 2019, 28, 5135–5146.

54. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

55. Yasuma, F.; Mitsunaga, T.; Iso, D.; Nayar, S.K. Generalized Assorted Pixel Camera: Postcapture Control of Resolution, Dynamic Range, and Spectrum. IEEE Trans. Image Process. 2010, 19, 2241–2253.

56. Akhtar, N.; Shafait, F.; Mian, A. Bayesian sparse representation for hyperspectral image super resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3631–3640.

| Number of convolutional kernels | 16 | 32 | 64 | 128 | 256 | 512 |
|---|---|---|---|---|---|---|
| PSNR | 31.772 | 32.477 | 32.926 | 33.044 | 33.123 | 33.048 |
| SAM | 1.485 | 1.385 | 1.287 | 1.245 | 1.228 | 1.245 |

| Number of residual blocks | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| PSNR | 32.850 | 33.149 | 33.123 | 33.167 | 32.978 | 32.941 |
| SAM | 1.232 | 1.215 | 1.228 | 1.213 | 1.219 | 1.240 |

| Balancing parameter | 0.001 | 0.01 | 0.1 | 1 | 5 | 10 |
|---|---|---|---|---|---|---|
| PSNR | 31.959 | 32.473 | 33.167 | 33.060 | 31.884 | 29.940 |
| SAM | 1.436 | 1.411 | 1.213 | 1.244 | 1.254 | 1.267 |

| Ablation Study | | | | | |
|---|---|---|---|---|---|
| MLFA | × | √ | √ | √ | √ |
| Spatial module | × | × | √ | √ | √ |
| Cosine similarity loss | × | × | × | √ | √ |
| Fine-tuning | × | × | × | × | √ |
| PSNR | 31.902 | 32.061 | 32.150 | 33.167 | 33.232 |
| SAM | 1.514 | 1.482 | 1.494 | 1.213 | 1.211 |

| Methods | PSNR | UIQI | RMSE | ERGAS | SAM |
|---|---|---|---|---|---|
| CNMF [36] | 33.072 | 0.963 | 5.828 | 3.654 | 3.710 |
| GSOMP [30] | 35.117 | 0.971 | 4.819 | 3.230 | 4.050 |
| HySure [12] | 38.710 | 0.983 | 3.226 | 2.037 | 3.453 |
| TLSR [26] | 25.349 | 0.783 | 14.093 | 8.625 | 6.815 |
| USDN [28] | 36.944 | 0.977 | 3.835 | 2.620 | 3.340 |
| DHSP [27] | 25.702 | 0.799 | 13.504 | 8.282 | 6.606 |
| SSRN | 39.741 | 0.985 | 2.886 | 1.980 | 2.781 |

| Methods | PSNR | UIQI | RMSE | ERGAS | SAM |
|---|---|---|---|---|---|
| CNMF [36] | 32.217 | 0.948 | 1.520 | 74.197 | 1.944 |
| GSOMP [30] | 31.979 | 0.956 | 1.729 | 57.587 | 1.877 |
| HySure [12] | 30.484 | 0.940 | 2.316 | 59.799 | 2.518 |
| TLSR [26] | 21.663 | 0.712 | 8.595 | 61.663 | 6.095 |
| USDN [28] | 31.355 | 0.935 | 1.805 | 122.336 | 2.264 |
| DHSP [27] | 21.917 | 0.749 | 8.566 | 122.069 | 5.967 |
| SSRN | 33.232 | 0.954 | 1.448 | 61.216 | 1.211 |

| Methods | PSNR | UIQI | RMSE | ERGAS | SAM |
|---|---|---|---|---|---|
| CNMF [36] | 27.879 | 0.819 | 7.564 | 3.601 | 3.534 |
| GSOMP [30] | 28.235 | 0.817 | 7.299 | 3.517 | 3.381 |
| HySure [12] | 27.621 | 0.824 | 7.886 | 3.763 | 3.759 |
| TLSR [26] | 24.671 | 0.520 | 10.985 | 5.130 | 4.806 |
| USDN [28] | 27.975 | 0.803 | 7.509 | 3.622 | 3.435 |
| DHSP [27] | 24.569 | 0.516 | 11.106 | 5.185 | 4.935 |
| SSRN | 28.350 | 0.829 | 7.185 | 3.434 | 3.334 |

| Methods | PSNR | UIQI | RMSE | ERGAS | SAM |
|---|---|---|---|---|---|
| CNMF [36] | 23.399 | 0.721 | 15.600 | 2.395 | 1.456 |
| GSOMP [30] | 20.855 | 0.481 | 21.703 | 3.295 | 3.575 |
| HySure [12] | 21.658 | 0.531 | 19.126 | 2.939 | 2.221 |
| TLSR [26] | 23.702 | 0.786 | 15.149 | 2.330 | 1.440 |
| USDN [28] | 22.143 | 0.487 | 18.048 | 2.769 | 2.169 |
| DHSP [27] | 23.963 | 0.792 | 14.672 | 2.257 | 1.418 |
| SSRN | 27.770 | 0.807 | 9.447 | 1.451 | 1.451 |

| Methods | CPU/GPU | PU | WDCM | Paris | Playa |
|---|---|---|---|---|---|
| CNMF [36] | CPU | 12.37 | 14.26 | 1.56 | 3.64 |
| GSOMP [30] | CPU | 77.60 | 160.70 | 10.59 | 20.52 |
| HySure [12] | CPU | 40.96 | 58.73 | 6.85 | 26.21 |
| TLSR [26] | CPU | 1130.83 | 541.05 | 251.68 | 492.56 |
| USDN [28] | GPU | 782.65 | 198.68 | 151.53 | 134.43 |
| DHSP [27] | GPU | 796.92 | 1885.74 | 259.89 | 470.56 |
| SSRN | GPU | 74.09 | 117.21 | 82.88 | 181.46 |

| Methods | PSNR | UIQI | RMSE | ERGAS | SAM |
|---|---|---|---|---|---|
| CNMF [36] | 42.403 | 0.845 | 2.273 | 2.337 | 6.629 |
| GSOMP [30] | 37.204 | 0.824 | 5.122 | 5.439 | 12.556 |
| HySure [12] | 41.331 | 0.814 | 2.130 | 2.530 | 6.645 |
| TLSR [26] | 34.148 | 0.744 | 5.206 | 5.879 | 6.221 |
| USDN [28] | 37.711 | 0.825 | 3.769 | 3.847 | 11.493 |
| DHSP [27] | 34.205 | 0.703 | 5.190 | 5.840 | 7.096 |
| SSRN | 40.558 | 0.816 | 2.520 | 3.031 | 5.523 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, W.; Zheng, X.; Lu, X. Hyperspectral Image Super-Resolution with Self-Supervised Spectral-Spatial Residual Network. Remote Sens. 2021, 13, 1260. https://doi.org/10.3390/rs13071260
