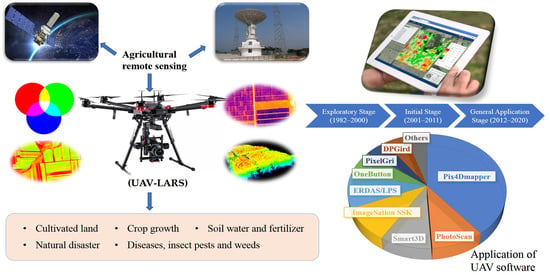

A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China

Abstract

1. Introduction

2. Methodology

- Identification of the need for a review

- Inclusion criterion

- Identification of keywords

- Literature collection

- Literature management

- Information extraction and analysis

- Summary and future prospects

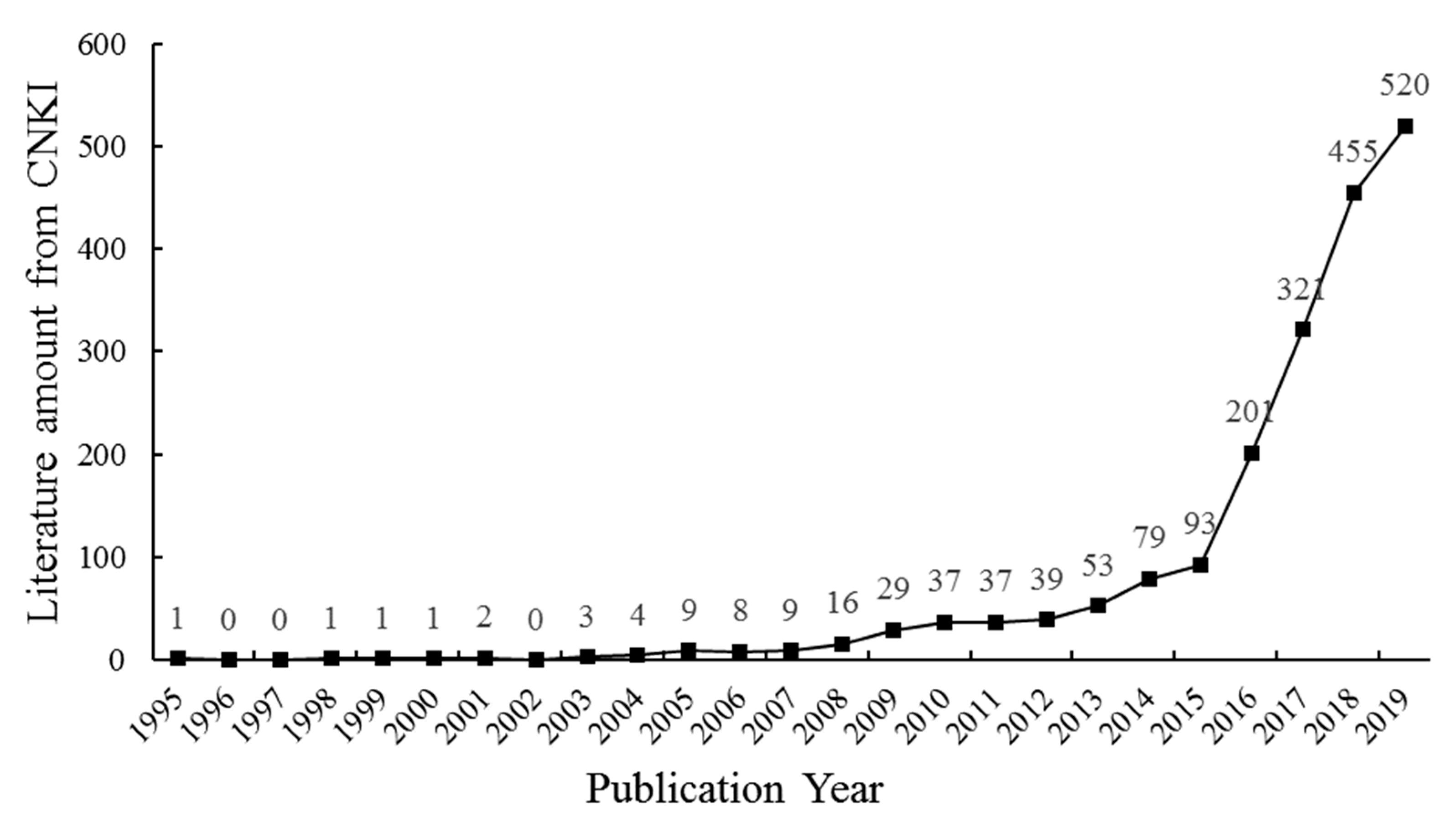

3. History of UAV-LARS Development in China

3.1. Exploratory Stage (1982–2000)

3.2. Initial Stage (2001–2011)

3.3. General Application Stage (2012–2020)

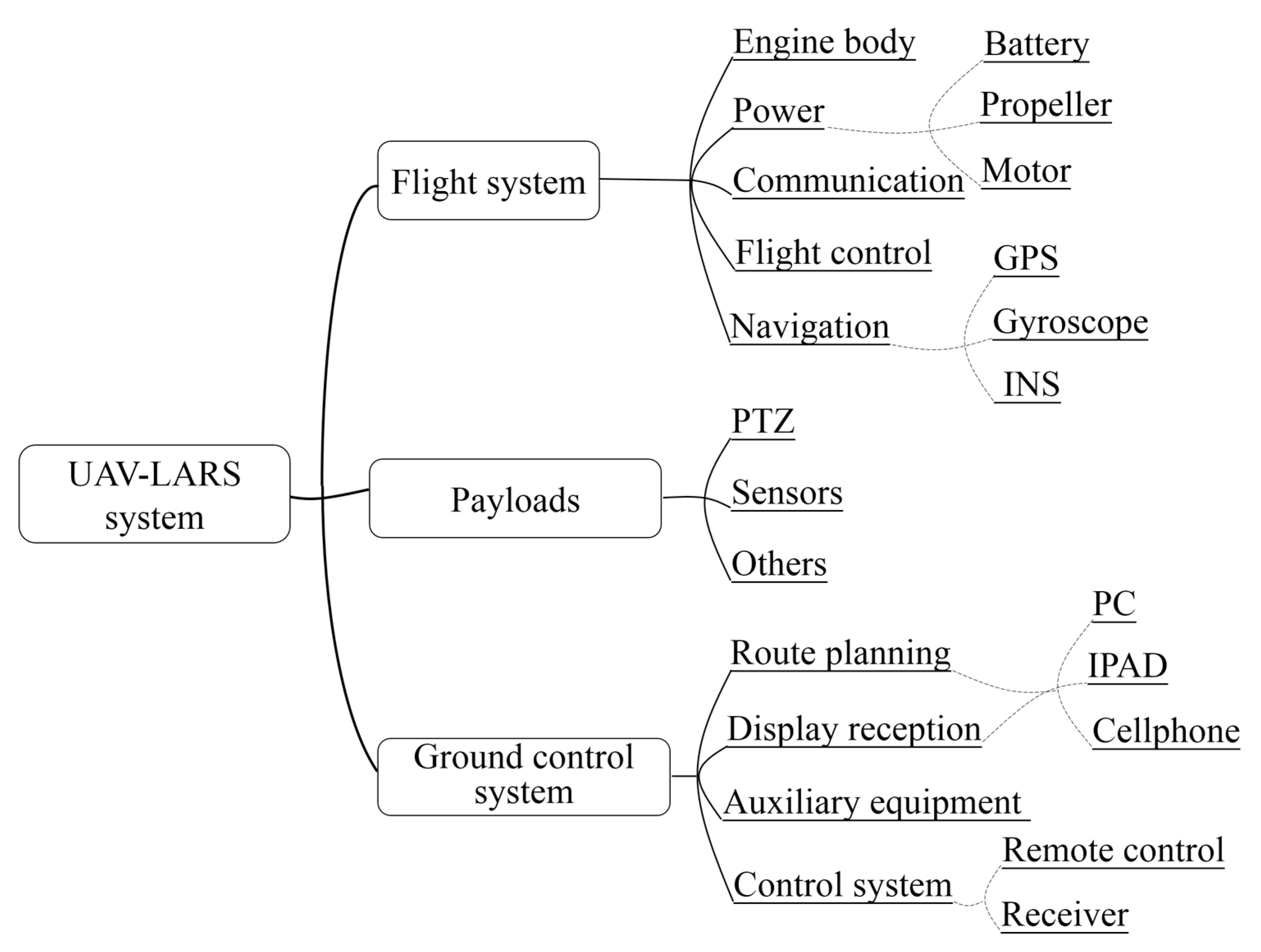

4. Technical Details of the UAV-LARS Platform

4.1. System Architecture

4.2. Types of UAVs

4.3. Payload

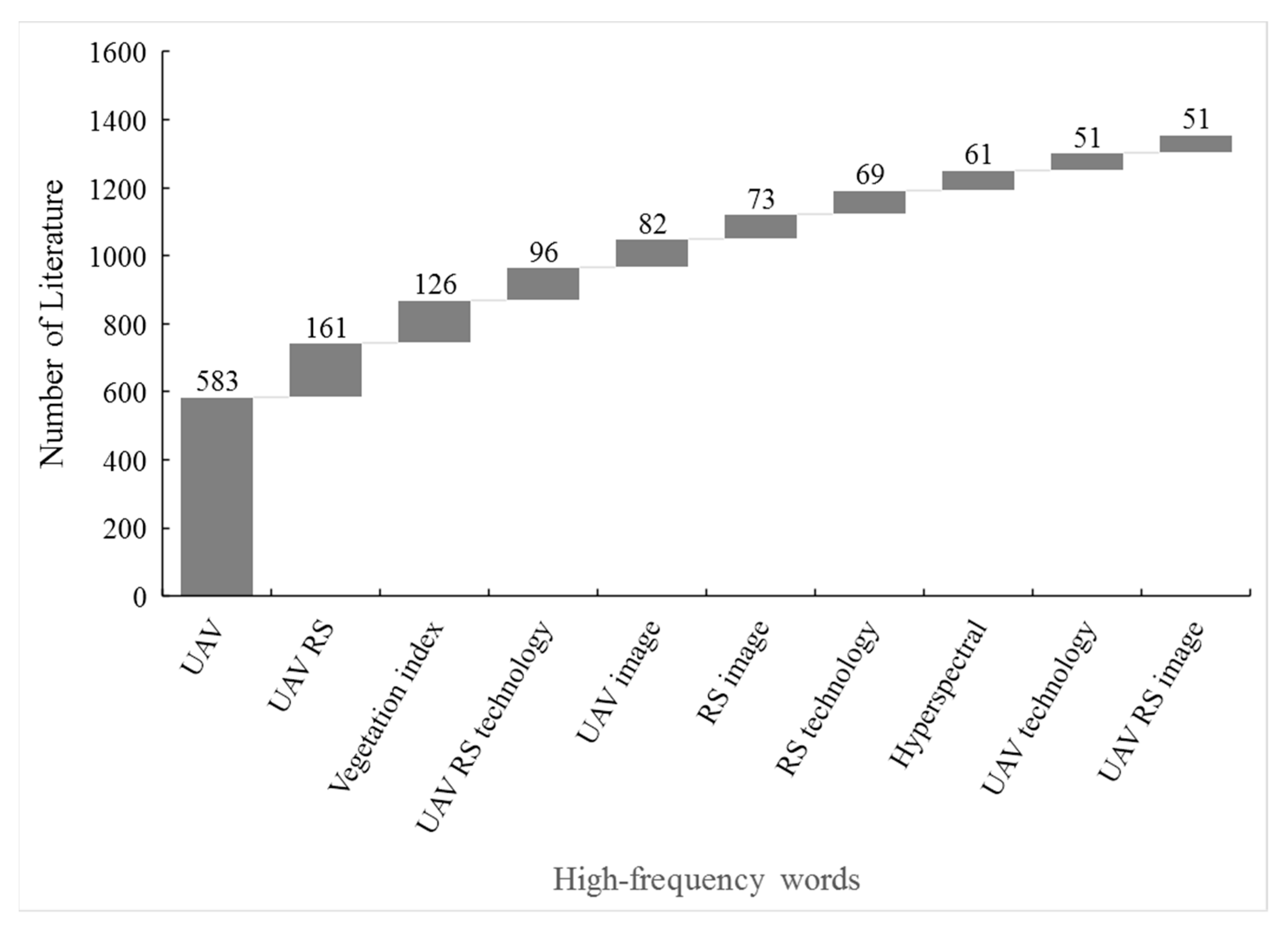

5. Agricultural UAV-LARS Literature Statistics

6. UAV Image Processing

7. UAV-LARS Application in Agriculture

7.1. Dynamic Monitoring of Cultivated Land

7.2. Crop Growth Monitoring

7.3. Monitoring Soil Water and Fertilizer in Cultivated Land

7.4. Identification of Diseases, Insect Pests and Weeds

7.5. Natural Disaster Assessment

8. Existing Problems

8.1. Endurance Capability

8.2. Safety of UAV Flight Operations

8.3. Monitoring Effectiveness

9. Conclusions and Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, D.; Shao, Q.; Yue, H. Surveying Wild Animals from Satellites, Manned Aircraft and Unmanned Aerial Systems (UASs): A Review. Remote Sens. 2019, 11, 1308.

- Tang, H.J. Progress and Prospect of Agricultural Remote Sensing Research. J. Agric. 2018, 8, 167–171.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A Compilation of UAV Applications for Precision Agriculture. Comput. Netw. 2020, 172, 107148.

- Araus, J.L.; Cairns, J.E. Field high-throughput phenotyping: The new crop breeding frontier. Trends Plant Sci. 2014, 19, 52–61.

- Zappa, C.J.; Brown, S.M.; Laxague, N.J.M.; Dhakal, T.; Subramaniam, A. Using Ship-Deployed High-Endurance Unmanned Aerial Vehicles for the Study of Ocean Surface and Atmospheric Boundary Layer Processes. Front. Mar. Sci. 2020, 6, 777.

- Bagaram, M.B.; Giuliarelli, D.; Chirici, G.; Giannetti, F.; Barbati, A. UAV Remote Sensing for Biodiversity Monitoring: Are Forest Canopy Gaps Good Covariates? Remote Sens. 2018, 10, 1397.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, Aircraft and Satellite Remote Sensing Platforms for Precision Viticulture. Remote Sens. 2015, 7, 2971–2990.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Xiang, T.Z.; Xia, G.S.; Zhang, L. Mini-Unmanned Aerial Vehicle-Based Remote Sensing: Techniques, applications, and prospects. IEEE Geosc. Rem. Sen. M. 2019, 7, 29–63.

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Anweiler, S.; Piwowarski, D.; Ulbrich, R. Unmanned aerial vehicles for environmental monitoring with special reference to heat loss. E3S Web Conf. 2017, 19, 02005.

- Yan, L.; Liao, X.H.; Zhou, C.H.; Fan, B.K.; Gong, J.Y.; Cui, P.; Zheng, Y.Q.; Tan, X. The impact of UAV remote sensing technology on the industrial development of China: A review. J. Geo Inf. Sci. 2019, 21, 476–495.

- Yu, C.W. Development of civil UAV in China. Robot. Ind. 2017, 1, 52–58.

- Bai, Y.L.; Jin, J.Y.; Yang, L.P.; Zhang, N.; Wang, L. Low altitude remote sensing technology and its application in precision agriculture. Soil Fert. Sci. Chin. 2004, 1, 3–6, 52.

- Ma, R.S.; Sun, H.; Ma, L.J.; Lin, Z.G.; Wu, C.H. Land use Survey Based on Image from Miniature Unmanned Aerial Vehicle. Remote Sens. Inf. 2006, 1, 43–45.

- Xie, C.X.; Chen, S.L.; Lin, Z.J.; Zhou, Y.Q.; Li, Y. Application of UAV remote sensing technology in investigation of medicinal plant resources. Mod. Chin. Med. 2007, 9, 4–6.

- Li, J.Y.; Zhang, T.M.; Peng, X.D.; Yan, G.Q.; Chen, Y. The application of small UAV (SUAV) in Farmland information monitoring system. J. Agric. Mech. Res. 2010, 32, 183–186.

- Leng, W.F.; Wang, H.G.; Xu, Y.; Ma, Z.H. Preliminary study on monitoring wheat stripe rust with using UAV. Acta Phytopath. Sin. 2012, 42, 202–205.

- Mukherjee, A.; Misra, S.; Raghuwanshi, N.S. A survey of unmanned aerial sensing solutions in precision agriculture. J. Netw. Comput. Appl. 2019, 148, 102461.

- Civil Aviation Administration of China. Interim Regulations on Flight Management of Unmanned Aerial Vehicles. 2018. Available online: http://www.caac.gov.cn/HDJL/YJZJ/201801/t20180126_48853.html (accessed on 23 March 2021).

- Park, M.; Lee, S.G.; Lee, S. Dynamic Topology Reconstruction Protocol for UAV Swarm Networking. Symmetry 2020, 12, 1111.

- Anthony, D.; Elbaum, S.; Lorenz, A.; Detweiler, C. On crop height estimation with UAVs. IEEE RSJ Int. Conf. Intell. Robots Syst. 2014.

- Sun, G.; Huang, W.J.; Chen, P.F.; Gao, S.; Wang, X. Advances in UAV-based Multispectral Remote Sensing Applications. Trans. Chin. Soc. Agric. Mach. 2018, 49, 1–17.

- Li, B.; Liu, R.Y.; Liu, S.H.; Liu, Q.; Liu, F.; Zhou, G.Q. Monitoring vegetation coverage variation of winter wheat by low-altitude UAV remote sensing system. Trans. Chin. Soc. Agric. Eng. 2012, 28, 160–165.

- Mao, Z.H.; Deng, L.; Sun, J.; Zhang, A.W.; Chen, X.Y.; Zhao, Y. Research on the application of UAV multispectral remote sensing in the maize chlorophyll prediction. Spectrosc. Spect. Anal. 2018, 38, 2923–2931.

- Wei, Q.; Zhang, B.Z.; Wei, Z.; Han, X.; Duan, C.F. Estimation of Canopy Chlorophyll Content in Winter Wheat by UAV Multispectral Remote Sensing. J. Triticeae Crop. 2020, 3, 365–372.

- Qin, Z.F.; Chang, Q.R.; Xie, B.N.; Shen, J. Rice leaf nitrogen content estimation based on hyperspectral imagery of UAV in Yellow River diversion irrigation district. Trans. Chin. Soc. Agric. Eng. 2016, 32, 77–85.

- Wang, J.Z.; Ding, J.L.; Ma, X.K.; Ge, X.Y.; Liu, B.H.; Liang, J. Detection of Soil Moisture Content Based on UAV-derived hyperspectral imagery and spectral index in oasis cropland. Trans. Chin. Soc. Agric. Mach. 2018, 49, 164–172.

- Liang, H.; Liu, H.H.; He, J. Rice Photosynthetic performance monitoring system based on UAV hyperspectral. J. Agric. Mech. Res. 2020, 42, 214–218.

- Bian, J. Diagnostic Model for Crops Moisture Status Based on UAV Thermal Infrared. Master’s Thesis, Northwest A&F University, Yangling, China, 2019.

- Duan, C.F.; Hu, Z.H.; Wei, Z.; Zhang, B.Z.; Chen, H.; Li, R. Estimation of summer maize evapotranspiration and its influencing factors based on UAVs thermal infrared remote sensing. Water Saving Irrigation 2019, 12, 110–116.

- Zhang, Z.T.; Xu, C.H.; Tan, C.X.; Bian, J.; Han, W.T. Influence of coverage on soil moisture content of field corn inversed from thermal infrared remote sensing of UAV. Trans. Chin. Soc. Agric. Mach. 2019, 50, 213–225.

- Wang, K.L. Cotton Canopy Information Recognition Based on Visible Light and Thermal Infrared Image of UAV. Master’s Thesis, Chinese Academy of Agricultural Sciences, Beijing, China, 2019.

- Yang, F. Estimation of Winter Wheat Aboveground Biomass with UAV LiDAR and Hyperspectral Data; Xi’an University of Science and Technology: Xi’an, China, 2017.

- Chen, H. LAI Inversion Method for Crop Based on LiDAR and Multispectral Remote Sensing. Master’s Thesis, Shihezi University, Shihezi, China, 2018.

- Hruska, R.; Mitchell, J.; Anderson, M.; Glenn, N.F. Radiometric and Geometric Analysis of Hyperspectral Imagery Acquired from an Unmanned Aerial Vehicle. Remote Sens. 2012, 4, 2736–2752.

- Chen, P.F.; Xu, X.G. A comparison of photogrammetric software packages for mosaicking unmanned aerial vehicle (UAV) images in agricultural application. Acta Agron. Sin. 2020, 46, 1112–1119.

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Yang, J.B.; Jiang, Y.T.; Yang, X.B.; Guo, G.M. A fast mosaic algorithm of UAV images based on Dense SIFT feature matching. J. Geo Inf. Sci. 2019, 21, 588–599.

- Li, F.F.; Xiao, B.L.; Jia, Y.H.; Mao, X.L. Improved SIFT algorithm and its application in automatic registration of remotely-sensed imagery. Geomat. Inf. Sci. Wuhan Univ. 2009, 34, 1245–1249.

- Zhao, Z.; Ling, X.; Sun, C.K.; Li, Y.Z. UAV tilted images matching research based on POS. Remote Sens. Land Resour. 2016, 28, 87–92.

- Chen, X.L.; Chen, X.L.; Peng, Y.Y. The key technology research on image processing in unmanned aerial vehicle. Beijing Surv. Mapp. 2016, 3, 24–27.

- Jia, Y.J.; Xu, Z.A.; Su, Z.B.; Rizwan, A.M. Mosaic of crop remote sensing images from UAV based on improved SIFT algorithm. Trans. Chin. Soc. Agric. Eng. 2017, 33, 123–129.

- Ma, L.J.; Ma, R.S.; Lin, Z.G.; Wu, C.H.; Sun, H. Application of micro UAV Remote Sensing. J. Meteorol. Res. Appl. 2005, 26, 180–181.

- Wang, Y.H.; Zhang, Y.Y.; Men, L.J.; Liu, B. UAV survey in the third national land survey application of pilot project in Gansu. Geomat. Spat. Inf. Technol. 2019, 42, 219–221.

- Lei, Y.; Zhou, W.Z. Application prospect of UAV tilt photogrammetry technology in land survey. Resour. Habitant Environ. 2019, 7, 11–13.

- Yu, K.; Shan, J.; Wang, Z.M.; Lu, B.H.; Qiu, L.; Mao, L.J. Land use status monitoring in small scale by unmanned aerial vehicles (UAVs) observations. Jiangsu Agric. Sci. 2019, 35, 853–859.

- Xu, P.; Xu, W.C.; Luo, Y.F.; Han, Y.W.; Wang, J.Y. Precise classification of cultivated land based on visible remote sensing image of UAV. J. Agric. Sci. Tech. 2019, 21, 79–86.

- Liu, Z.; Wan, W.; Huang, J.Y.; Han, Y.W.; Wang, J.Y. Progress on key parameters inversion of crop growth based on unmanned aerial vehicle remote sensing. Trans. Chin. Soc. Agric. Eng. 2018, 34, 60–71.

- Gao, L.; Yang, G.J.; Wang, B.S.; Yu, H.Y.; Xu, B.; Feng, H.K. Soybean leaf area index retrieval with UAV (unmanned aerial vehicle) remote sensing imagery. Chin. J. Eco Agric. 2015, 23, 868–876.

- Pei, H.J.; Feng, H.K.; Li, C.C.; Jin, X.L.; Li, Z.H.; Yang, G.J. Remote sensing monitoring of winter wheat growth with UAV based on comprehensive index. Trans. Chin. Soc. Agric. Eng. 2017, 33, 74–82.

- Chu, H.L.; Xiao, Q.; Bai, J.H.; Cheng, J. The retrieval of leaf area index based on remote sensing by unmanned aerial vehicle. Remote Sens. Tech. Appl. 2017, 32, 140–148.

- Zhou, X.; Zheng, H.; Xu, X.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. 2017, 130, 246–255.

- Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. 2015, 108, 245–259.

- Lan, Y.; Deng, X.; Zeng, G. Advances in diagnosis of crop diseases, pests and weeds by UAV remote sensing. Smart Agric. 2019, 1, 1–19.

- Chen, Z.; Ma, C.Y.; Sun, H.; Cheng, Q.; Duan, F.Y. The inversion methods for water stress of irrigation crop based on unmanned aerial vehicle remote sensing. China Agric. Inf. 2019, 31, 23–35.

- Song, H.Y. Detection of Soil by Near Infrared Spectroscopy; Chemical Industry Press: Beijing, China, 2013.

- Wang, L. A Research About Remote Sensing Monitoring Method of Soil Organic Matter Based on Imaging Spectrum Technology. Master’s Thesis, Henan Polytechnic University, Zhengzhou, China, 2016.

- Guo, H.; Zhang, X.; Lu, Z.; Tian, T.; Xu, F.F.; Luo, M.; Wu, Z.G.; Sun, Z.J. Estimation of organic matter content in southern paddy soil based on airborne hyperspectral images. J. Agric. Sci. Tech. 2020, 22, 60–71.

- Deutsch, C.A.; Tewksbury, J.J.; Tigchelaar, M.; Battisti, D.S.; Merrill, S.C.; Huey, R.B.; Naylor, R.L. Increase in crop losses to insect pests in a warming climate. Science 2018, 361, 916–919.

- Huang, W.; Shi, Y.; Dong, Y.; Zhang, Y.J.; Liu, L.Y.; Wang, J.H. Progress and prospects of crop diseases and pests monitoring by remote sensing. Smart Agric. 2019, 1, 1–11.

- Zhao, H.; Yang, C.; Guo, W.; Zhang, L.; Zhang, D. Automatic Estimation of Crop Disease Severity Levels Based on Vegetation Index Normalization. Remote Sens. 2020, 12, 1930.

- Viera-Torres, M.; Sinde-González, I.; Gil-Docampo, M.; Bravo-Yandún, V.; Toulkeridis, T. Generating the baseline in the early detection of bud rot and red ring disease in oil palms by geospatial technologies. Remote Sens. 2020, 12, 3229.

- Ma, S.Y.; Guo, Z.Z.; Wang, S.T.; Zhang, K. Hyperspectral remote sensing monitoring of Chinese chestnut red mite diseases and insect pests in UAV. Trans. Chin. Soc. Agric. Mach. 2021, 1–12. Available online: https://kns.cnki.net/kcms/detail/11.1964.S.20210204.1844.004.html (accessed on 23 March 2021).

- Tian, M.L.; Ban, S.T.; Yuan, T.; Wang, Y.Y.; Ma, C.; Li, L.Y. Monitoring of rice damage by rice leaf roller using UAV-based remote sensing. Acta Agric. Shanghai 2020, 36, 137–142.

- Huang, W.; Lamb, D.W.; Niu, Z.; Zhang, Y.J.; Liu, L.Y.; Wang, J.H. Identification of yellow rust in wheat using in-situ spectral reflectance measurements and airborne hyperspectral imaging. Precis. Agric. 2007, 8, 187–197.

- Wang, Z.; Chu, G.K.; Zhang, H.J.; Liu, S.X.; Huang, X.C.; Gao, F.R.; Zhang, C.Q.; Wang, J.X. Identification of diseased empty rice panicles based on Haar-like feature of UAV optical image. Trans. Chin. Soc. Agric. Eng. 2018, 34, 73–82.

- Cui, M.N. Study on Dynamic Monitoring of Cotton Spider Mites Based on Remote Sensing of UAV. Master’s Thesis, Shihezi University, Shihezi, China, 2019.

- Wang, S.B.; Han, Y.; Chen, J.; Pan, Y.; Cao, Y.; Meng, H. Weed classification of remote sensing by UAV in ecological irrigation areas based on deep learning. J. Drain. Irrig. Mach. Eng. 2018, 36, 1137–1141.

- Xue, J.L.; Dai, J.G.; Zhao, Q.Z.; Zhang, G.S.; Cui, M.N.; Jiang, N. Cotton field weed detection based on low-altitude drone image and YOLOv3. J. Shihezi Univ. Nat. Sci. 2019, 37, 21–27.

- Dong, J.H.; Yang, X.D.; Gao, L.; Wang, B.S.; Wang, L. Information extraction of winter wheat lodging area based on UAV remote sensing image. Heilongjiang Agric. Sci. 2016, 147–152.

- Tian, M.L.; Ban, S.T.; Chang, Q.R.; You, M.M.; Luo, D.; Wang, L.; Wang, S. Use of hyperspectral images from UAV-based imaging spectroradiometer to estimate cotton leaf area index. Trans. Chin. Soc. Agric. Eng. 2016, 32, 102–108.

- Zheng, E.G.; Tian, Y.F.; Chen, T. Region extraction of corn lodging in UAV images based on deep learning. J. Henan Agric. Sci. 2018, 47, 155–160.

- Zhang, X.L.; Guan, H.X.; Liu, H.J.; Meng, X.T.; Yang, H.X.; Ye, Q.; Yu, W.; Zhang, H.S. Extraction of maize lodging area in mature period based on UAV multispectral image. Trans. Chin. Soc. Agric. Eng. 2019, 35, 98–106.

- Dai, J.G.; Zhang, G.S.; Guo, P.; Zeng, T.J.; Cui, M.N.; Xue, J.L. Information extraction of cotton lodging based on multi-spectral image from UAV remote sensing. Trans. Chin. Soc. Agric. Eng. 2019, 35, 63–70.

- Gan, P.; Dong, Y.S.; Sun, L.; Yang, G.J.; Li, Z.H.; Yang, F.; Wang, L.Z.; Wang, J.W. Evaluation of Maize Waterlogging Disaster Using UAV LiDAR Data. Sci. Agric. Sin. 2017, 50, 2983–2992.

- Zhou, H.; Yan, Z.X. Application of OneButton Software in Remote Sensing Image Processing. Bull. Surv. Mapp. 2017, S1, 59–61, 78.

- Su, R.D. Application of UAV in modern agriculture. Jiangsu Agric. Sci. 2019, 47, 75–79.

- Bajo, J.; Hallenborg, K.; Pawlewski, P.; Botti, V.; Sánchez-Pi, N.; Duque Méndez, N.D.; Lopes, F.; Julian, V. [Communications in Computer and Information Science] Highlights of Practical Applications of Agents, Multi-Agent Systems, and Sustainability—The PAAMS Collection. In Proceedings of the International Workshops of PAAMS 2015, Salamanca, Spain, 3–4 June 2015; Volume 524.

| Category | Benefits | Limitations |
|---|---|---|
| Fixed-wing | Long endurance; large load; fast flight speed; large operation range | Takeoff needs a run-up; landing needs a glide; no hovering capability |
| Multirotor | Horizontal and vertical flight; vertical takeoff and landing; hovering at a given location; autonomous navigation; simple structure | Short endurance time; small load; poor resistance to harsh environments |
| Unmanned helicopter | Vertical takeoff and landing; hovering at a given location; flight stability | Complex wing structure; high maintenance cost |

| Model | No. of Propellers | Fuselage Weight (kg) | Endurance Time (min) | Payload (kg) | Price Range (USD) |
|---|---|---|---|---|---|
| M210 | 4 | 4.8 | 38 | 2.3 | 5000–15,000 |
| M600Pro | 6 | 9.1 | 38 | 6.0 | 4999–15,000 |
| S800 | 6 | 3.7 | 16 | 2.5 | 1800 |
| S900 | 6 | 3.3 | 18 | 4.9 | 1300 |
| S1000 | 8 | 4.4 | 15 | 5.6 | 3400 |

| Sensor | Models | Characteristics | Memory (Mb/min) | Price (USD) | Software | Citations |
|---|---|---|---|---|---|---|
| Digital camera | DJI ZENMUSE Z3 (DJI Technology Co., Ltd., Shenzhen, China), Canon 5D Mark II (Canon Inc., Tokyo, Japan), Nikon D800E (Nikon Corp., Tokyo, Japan), SONY α7r (Sony Corp., Tokyo, Japan), PhaseOne IQ180 (PhaseOne Corp., Copenhagen, Denmark), PhaseOne iXM (PhaseOne Corp., Copenhagen, Denmark), Hasselblad H6D-100c (F. W. Hasselblad and Co., Gothenburg, Sweden) | Pixels: 10–100 million; Frame: small and medium size; Weight: 100–2500 g | 50–2000 | 900–35,000 | Pix4D Mapper (Pix4D SA, Lausanne, Switzerland), Photoscan (Agisoft LLC, St. Petersburg, Russia), OneButton (Research System Inc., Manassas, VA, USA) | [24] |
| Multispectral imager | Parrot Sequoia (Parrot Inc., Paris, France), Micasense RedEdge (MicaSense Inc., Seattle, WA, USA), Tetracam ADC (Tetracam Inc., Chatsworth, CA, USA), Cubert S128 (Cubert GmbH, Ulm, Germany), DJI multispectral camera (DJI Technology Co., Ltd., Shenzhen, China) | High automation; Staring imaging; Weight: 30–700 g; Spectral range: 400–1100 nm | 800–4000 | 5000–16,000 | Pix4D Mapper, Photoscan, OneButton, ICE (Microsoft Corp., Redmond, WA, USA) | [25,26,27] |
| Hyperspectral imager | Cubert UHD185 (Cubert GmbH, Ulm, Germany), Headwall Nano-Hyperspec (Headwall Photonics Inc., Fitchburg, WI, USA), SENOP-RIKOLA (Senop Oy, Kangasala, Finland), Gaiasky-mini (Sichuan Dualix Spectral Imaging Technology Co., Ltd., Chengdu, China) | Spectral range: 350–2500 nm; Spectral sampling interval: 0.4–4.5 nm; Spectral resolution: 4–10 nm; Number of spectral bands: 100–400; Weight: 400–2000 g | 600–3000 | 70,000–150,000 | Pix4D Mapper, Photoscan, OneButton, ICE | [28,29,30] |
| Thermal infrared imager | DJI XT TIR camera (DJI Technology Co., Ltd., Shenzhen, China), VuePro 640R (FLIR Systems Inc., Wilsonville, OR, USA), FLIR Camera Tau2 (FLIR Systems Inc., Wilsonville, OR, USA), FLIR Thermo CAM SC3000 (FLIR Systems Inc., Wilsonville, OR, USA), Optris PI450 (Optris GmbH, Berlin, Germany) | Spectral range: 3.5–13.5 μm; Image resolution: 640 × 512 pixels; Temperature resolution: 0.05 °C; Temperature range: −20 to 100 °C; Spatial resolution: <10 cm | 3–100 | 10,000–15,000 | Pix4D Mapper, Photoscan | [31,32,33,34] |
| LiDAR | Riegl VUX-1 (Riegl Laser Measurement Systems GmbH, Vienna, Austria) | Weight: 3600 g; Wavelength: 1550 nm; Spot diameter: 25 mm | 1000–60,000 | 150,000–200,000 | LiDAR360 (Beijing Digital Green Earth Technology Co., Ltd., Beijing, China), CloudStation (YellowScan company, Montpellier, France) | [35,36] |
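As a rough illustration of how the platform and sensor tables can be read together, the Python sketch below checks which multirotor platforms list enough payload for a given sensor and estimates the data volume of one full-endurance flight. The platform figures are copied from the multirotor table. The sensor figures are the upper bounds of the weight and memory ranges in the sensor table, so the results are worst-case values. The function names, the dictionary layout, and the use of upper bounds are assumptions made for illustration only. The thermal infrared imager is left out because the table gives no weight for it, and the estimate ignores any endurance lost when flying at full payload.

```python
# Figures copied from the multirotor table: (endurance in min, payload in kg).
PLATFORMS = {
    "M210": (38, 2.3),
    "M600Pro": (38, 6.0),
    "S800": (16, 2.5),
    "S900": (18, 4.9),
    "S1000": (15, 5.6),
}

# Upper bounds taken from the sensor table: (weight in kg, memory in Mb/min).
# Using the upper bound of each range is an assumption (worst case).
SENSORS = {
    "digital camera": (2.5, 2000),
    "multispectral imager": (0.7, 4000),
    "hyperspectral imager": (2.0, 3000),
    "LiDAR": (3.6, 60000),
}


def compatible_platforms(sensor):
    """Return the platforms whose listed payload covers the sensor weight."""
    weight_kg, _ = SENSORS[sensor]
    return [
        name
        for name, (_, payload_kg) in PLATFORMS.items()
        if payload_kg >= weight_kg
    ]


def data_per_flight(sensor, platform):
    """Upper-bound data volume (in the table's Mb unit) for one full flight."""
    _, rate_mb_per_min = SENSORS[sensor]
    endurance_min, _ = PLATFORMS[platform]
    return rate_mb_per_min * endurance_min


if __name__ == "__main__":
    for sensor in SENSORS:
        print(sensor, "->", compatible_platforms(sensor))
    print(data_per_flight("hyperspectral imager", "M600Pro"))
```

Under these assumptions, `compatible_platforms("LiDAR")` keeps only the M600Pro, S900 and S1000, because the other two platforms list a payload below the 3.6 kg sensor weight.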

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, H.; Wang, L.; Tian, T.; Yin, J. A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China. Remote Sens. 2021, 13, 1221. https://doi.org/10.3390/rs13061221
