1. Introduction

Object detection in remote sensing images is one of the basic tasks in satellite imagery processing. Its primary purpose is to extract the category and location of objects from a remote sensing image [

1]. This task involves a wide range of applications in various fields, such as remote sensing image road detection [

2], ship detection [

3], aircraft detection [

4], etc. It is also an advanced technique for remote sensing image analysis, image content understanding, and scene understanding. Because object detection is the foundation of many other tasks, it has attracted the attention of many scholars, and extensive and in-depth research has produced many research achievements.

However, object detection in remote sensing images is still a challenging task due to multiple reasons [

5,

6]. First, the remote sensing image is obtained from an overhead perspective, so objects can have any orientation. Second, the scale of different types of objects varies greatly. Third, remote sensing images are extremely large, which prevents existing algorithms from being applied to them directly. Besides, labeled remote sensing image samples are scarce [

5], and thus the available labeled samples are insufficient for training an onboard detector, which increases the difficulty of object detection.

Generally, remote sensing images are transmitted to the ground through a satellite data transmission system, and object detection is then performed there, which requires several procedures to complete. However, object detection on the satellite has unique advantages in military and civilian missions: it can respond to ground conditions and provide feedback in real time. Because on-satellite detection makes it possible to transmit only what the user is likely to be looking for, the bandwidth and cost of the data link used to send image data from the satellite camera to the ground station can be reduced greatly. It is a promising direction in this domain.

When remote sensing image object detection is performed on the ground, the focus is on performance. In the past ten years, a variety of algorithms have been proposed for object detection in remote sensing images. These methods can be roughly divided into traditional methods and deep learning (DL)-based methods. Traditional methods, such as template matching-based, knowledge-based, object-based image analysis (OBIA), and machine learning-based object detection, have made great progress in improving detection performance, but DL-based methods often achieve better performance.

The traditional methods mentioned above all use low-level features for object detection. The disadvantage of low-level features is that they must be extracted manually. Although such methods can achieve good results in specific application scenarios, they are highly dependent on prior knowledge, resulting in poor adaptability and generalization of the detection model.

However, with the continuous development of deep learning technology, detectors with excellent performance have emerged, such as faster regions with CNN features (Faster-RCNN) [

7], you only look once (YOLO) [

8,

9,

10], single-shot multi-box detector (SSD) [

11], and ThunderNet [

12]. Various methods have been proposed by applying deep learning technology to remote sensing image object detection, such as rotation-invariant convolutional neural networks (RICNN) [

13], newly trained CNN [

13], and context-based feature fusion single shot multi-box detector (CBFF-SSD) [

14], which deploys feature fusion methods to improve detection performance. Although deep learning methods greatly improve the performance of remote sensing image object detectors, their massive computational complexity and extremely large storage requirements hinder their deployment on satellites.

With the progress of remote sensing technology, the demand for object detection on satellites is becoming increasingly urgent. However, limited by the space environment, the computing power that can be provided on the satellite is far from that on the ground. In this situation, remote sensing image object detection on satellites not only has to deal with the ongoing challenges but also needs to solve the problem of the feasibility of the detector. The feasibility of the detector on the satellite is equally important as its performance. To improve the performance of the detector, some techniques used on the ground, such as feature fusion, and the use of a deeper network, may consume massive storage space and calculations that may make them impossible to implement on satellites.

To solve the problems mentioned above, this paper introduces the idea of a lightweight network into remote sensing image object detection and proposes a lightweight remote sensing image object detection framework called multi-scale feature fusion SNET (MSF-SNET). The backbone network of the proposed framework is partly modified based on the lightweight network SNET [

12] to reduce the on-board processing complexity and the number of parameters. In this framework, a recursive feature fusion strategy is used to balance performance and feasibility. The proposed framework performs object detection on multiple feature maps to solve the problem of large-scale changes between different classes of objects.

Because training samples are scarce and object appearance varies widely with imaging conditions, the training samples are augmented by rotation, cropping, symmetric flipping, zooming, and moderate occlusion. Note also that a full remote sensing image is too large to serve directly as input to the deep learning model, so the large image is split into slices with a certain overlap ratio during training and detection.

The main contributions of this paper are summarized as follows.

1. We introduce a novel lightweight remote sensing image object detection framework called MSF-SNET. The proposed framework is an end-to-end detection model that uses multi-scale features in the detection part and adopts a feature reuse and fusion strategy. It is applicable to object detection on satellites.

2. Through experiments on NWPU-VHR-10 [

15] and DIOR [

16] remote sensing image object detection datasets, we show that our framework achieves acceptable performance with fewer parameters and less computational cost compared with state-of-the-art methods [

12,

13,

14]. The feasibility of the framework is as important as its performance. We strike a balance between performance and feasibility by reducing network parameters and computing costs.

3. A feature fusion method is proposed to take advantage of low-level features and deep features to improve the performance of object detection with a negligible amount of parameters and computational cost increase.

4. Cost Density is proposed in this paper. It is a fair and effective metric for comparing the performance of algorithms that consume the same resources, and it provides a quantitative way to evaluate how effectively an algorithm uses those resources.

The rest of this paper is organized as follows.

Section 2 reviews the related work of the object detection framework for remote sensing images. In

Section 3, we introduce our proposed method and present the details of the proposed framework, namely Multi-scale fusion SNET, MSF-SNET. In

Section 4, experimental results on two different datasets are illustrated to demonstrate the performance of our method.

Section 5 contains a discussion of the implication of the results of

Section 4.

Section 6 involves our conclusions plus some ideas for further work.

2. Related Work

Remote sensing image object detection methods can be roughly divided into four categories: expert-based methods, machine learning (ML)-based methods, deep learning (DL)-based methods, and light DL methods.

Expert-based methods are further divided into three classes as template matching-based methods, knowledge-based methods, and object-based image analysis (OBIA) [

1].

Template matching-based methods regard the object detection task as a similarity matching problem between template and objects. For example, these methods are used to detect roads in remote sensing images because roads can be viewed as a simple template and shifted through the image [

17,

18,

19,

20,

21] to find the best matches. Although this method is simple and effective, it is easily affected by rotation, scale changes, and viewpoint change.

Knowledge-based methods regard the object detection problem as a hypothesis testing problem [

22], such as using the geometric knowledge of the object to detect buildings in the image [

23,

24,

25,

26]. In this type of method, the establishment of prior knowledge and rules is essential. The performance of these methods depends heavily on the prior knowledge of experts and detection rules. However, complete prior knowledge establishment is difficult and detection rules are subjective.

OBIA object detection converts the object detection task into a classification problem. The image is first broken up into objects representing land-based features, and then prior knowledge or established rules are applied to classify these objects [

27,

28]. This type of method can comprehensively use the object shape, texture, context knowledge, expert knowledge, and other information for object detection. For example, these methods are used for landslide mapping [

29,

30,

31,

32,

33], land cover, and land-use mapping [

34,

35]. However, the prior knowledge is incomplete, and the expert knowledge used in classification is still subjective.

ML-based methods play an important role in remote sensing image object detection. These methods first extract features from the training data, such as histogram of gradient (HOG) features [

36], bag-of-words (BoW) features [

37], local binary patterns (LBP) features [

38], and Haar-like features [

39]. Then supervised, semi-supervised, or weakly supervised methods are used to train a classifier, such as support vector machine (SVM) classifier [

40], AdaBoost [

41], and k-nearest-neighbor (kNN) [

42] to classify the extracted features. These methods are used in many applications, for example, aircraft detection [

43,

44], ship detection [

45,

46], vehicle detection [

47,

48], and airport runway detection [

49]. Although these methods have achieved promising performance in the applications mentioned above, they still rely on handcrafted feature descriptors. A generic feature descriptor is still not available, and designing a general discriminative feature for object detection remains a challenging issue.

DL-based methods, especially deep convolutional neural networks (CNN), have started to dominate the object detection task. One significant advantage of CNNs is that feature learning is fully automatic: discriminative features are learned directly from data rather than designed by hand.

These methods can be categorized into two main types: one-stage methods and two-stage methods. One-stage methods adopt a fully convolutional architecture that outputs a fixed number of predictions on the grid, while two-stage methods use a proposal network to find regions that have a high probability of containing an object. A second network then produces the classification score and spatial offsets for the proposals from the proposal network. One-stage methods prioritize inference speed, while two-stage methods prioritize detection accuracy.

The typical two-stage methods are R-CNN [

50], Fast R-CNN [

51], and Faster R-CNN [

7]. The typical single-stage methods are YOLO [

8], YOLO9000 [

9], YOLO V3 [

10], YOLO V4 [

52], SSD [

11], and DSSD [

53].

Although the CNN-based algorithms perform well in the object detection task, their excellent performance comes at the cost of many parameters and high computational cost.

Light CNN methods have been proposed, such as ThunderNet [

54], MobilenetV1 [

55], MobilenetV2 [

56], SqueezeNet [

57], ShuffleNet [

58], ShuffleNet V2 [

57], Xception [

59], Light Head R-CNN [

60], as well as model compression techniques, to reduce the time and space complexity of the network.

Some of these light CNN methods use a lightweight backbone, such as the SNET used in ThunderNet, while others use a light head, such as Light Head R-CNN. Alternatively, model compression is used to shrink trained neural networks. Compressed models usually perform similarly to the original model while using a fraction of the computational resources.

In the field of remote sensing, the excellent performance of CNNs in the object detection task has drawn many researchers to CNN-based remote sensing image object detection [

61,

62,

63,

64].

To solve the problem of object rotation variations in remote sensing images, some methods are proposed based on the RCNN or Faster-RCNN architecture. For example, a rotation-invariant layer [

13] is proposed and added to RCNN. In addition, a rotation-invariant regularizer and a Fisher discrimination regularizer [

65] are added to Faster-RCNN. Multi-angle anchors [

66] are also introduced into the region proposal network (RPN) [

7] to solve this problem.

To improve the object positioning accuracy, an unsupervised score-based bounding box regression (USB-BBR) method [

61] is proposed, and a position-sensitive balancing (PSB) method [

6] is used to enhance the quality of the generated region proposal.

For the sake of inference speed, some one-stage methods are proposed. For example, a regression-based vehicle detection method [

67] and a rotation-invariant detector [

68] with a rotatable bounding box are proposed based on SSD. A method [

69] that can detect ships in any direction is proposed based on YOLOv2. A detection framework [

70] with multi-scale feature fusion is proposed based on YOLO, and a novel effectively optimized one-stage network (NEOON) [

71] method based on YOLOV3 is proposed.

The abovementioned methods have achieved superior performance and facilitated the development of remote sensing image object detection greatly. However, there are still some challenges to be addressed when the detector is deployed on the satellite. Constrained by affordable resources in the space environment, a more lightweight model is desired to reduce calculations, parameter storage space, and power consumption.

In this paper, we tackle the above problem and propose a lightweight object detection framework for remote sensing images. Because ThunderNet is lightweight, we construct our framework based on its backbone network, SNET. Inspired by the idea of the deconvolutional single shot detector (DSSD), a lightweight feature fusion method is proposed, and we detect small objects on shallow features and large objects on deep features. The experimental results confirm that the proposed lightweight object detection framework can achieve excellent performance in remote sensing image object detection.

3. Method

Remote sensing datasets may involve a variety of objects at different scales. For example, among the ten classes of objects contained in NWPU VHR-10 dataset, vehicles and storage tanks are very different in scale from ships, baseball fields, and basketball courts. Therefore, the remote sensing image object detection network is required to be able to detect objects in large and small sizes in an onboard environment. Constrained by affordable computing power, it is still a very challenging problem to achieve the object detection of satellite imagery.

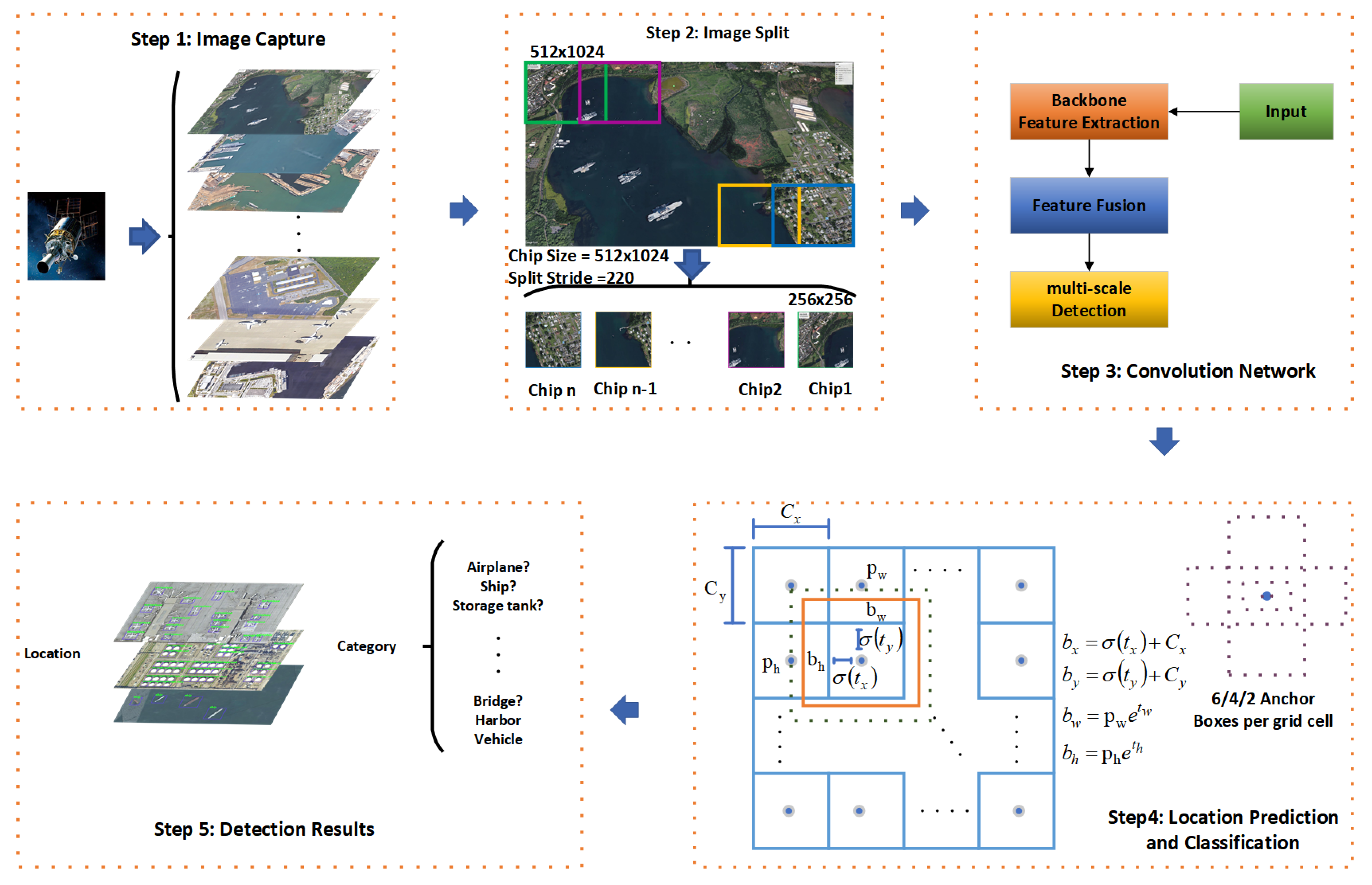

This paper proposes a lightweight onboard object detection framework based on SNET, called Multi-scale fusion SNET (MSF-SNET), which is optimized for the onboard application environment. Next, the characteristics of MSF-SNET will be analyzed. The working process of the MSF-SNET framework on the satellite is shown in

Figure 1.

The workflow of an onboard object detection system in which MSF-SNET is used is divided into five steps.

Step 1: image capture. The image obtained by the satellite camera has an ultra-large size, which far exceeds the input image size of the convolutional network.

Step 2: slice the image. Although the captured image can be rescaled, resizing causes information loss; in particular, small objects may be lost completely. Therefore, the captured image must be sliced so that each slice meets the input requirements of the convolutional network. Considering the hardware implementation in the onboard environment, each slice is fixed at 256 × 256 in this article and the step size is 220, so adjacent slices overlap by 36 pixels. That is, the input size of the convolutional network is 256 × 256.

Step 3: features extraction. The backbone network of MSF-SNET plays a vital role in the overall architecture which is responsible for extracting features. The performance of the backbone network determines the performance of the entire detector, and its computational cost also occupies most of the calculation of the entire detector. MSF-SNET uses a lightweight backbone network to reduce the amount of calculation while maintaining its performance.

Step 4: position regression and classification. Position regression and classification are performed on six feature maps of different scales to obtain the locations and classes of the objects residing in each slice. Both position regression and classification use logistic regression, as in YOLO V3.

Step 5: detection results. The final detection results are obtained according to indices of the slices and the detection result of each slice.
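Steps 2 and 5 can be illustrated with a minimal sketch. It assumes a 256-pixel slice and a 220-pixel step as stated above; the handling of the image border (adding one final slice flush with the edge) and the function names are assumptions made for illustration, not details given in the text.

```python
def slice_origins(length, tile=256, stride=220):
    """Start offsets of the slices covering one image axis."""
    if length <= tile:
        return [0]
    origins = list(range(0, length - tile + 1, stride))
    # Assumption: add a last slice flush with the border so no pixels are skipped.
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def to_global(box, origin_x, origin_y):
    """Shift a slice-local box (x1, y1, x2, y2) into full-image coordinates."""
    x1, y1, x2, y2 = box
    return (x1 + origin_x, y1 + origin_y, x2 + origin_x, y2 + origin_y)


# Hypothetical 1000-pixel-wide image: regular slices overlap by 256 - 220 = 36 pixels.
print(slice_origins(1000))                      # [0, 220, 440, 660, 744]
print(to_global((10, 20, 50, 60), 220, 440))    # (230, 460, 270, 500)
```

Because neighbouring slices overlap, the same object can be reported twice after the shift to global coordinates, so a duplicate-removal step over the merged detections would normally follow.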

3.1. Multi-Scale Fusion SNET

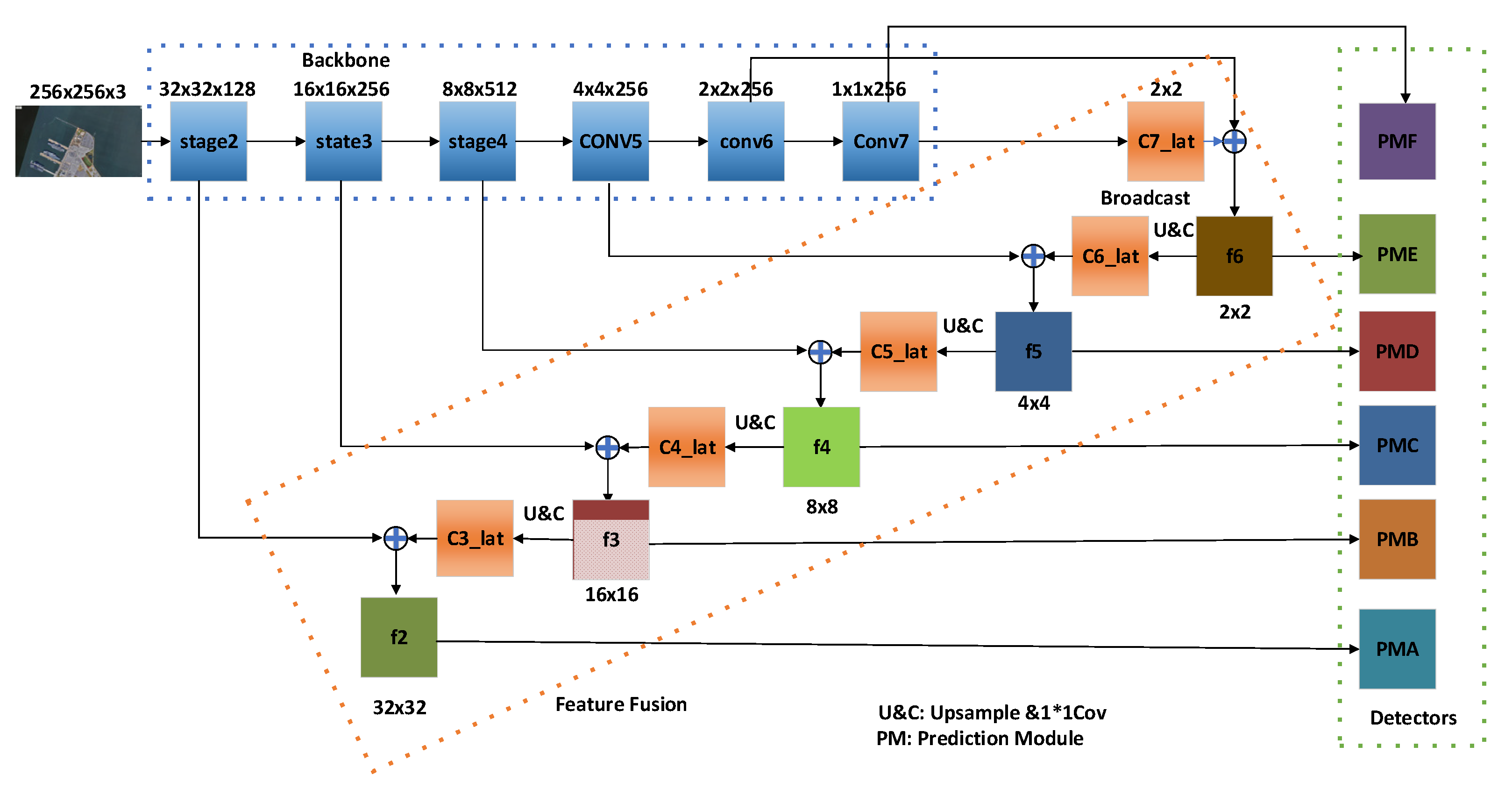

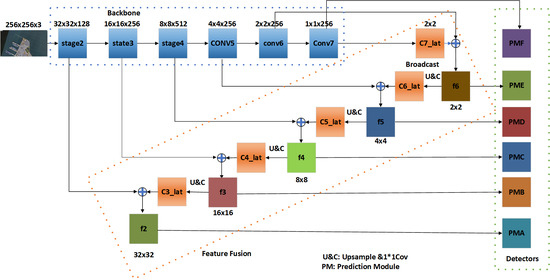

In this part, the details of MSF-SNET are described. Our model design emphasizes the lightweight feature and computational efficiency of the model without reduction of detection accuracy. The MSF-SNET network structure is illustrated in

Figure 2.

3.1.1. Input Image Size

The input image size in object detection networks is usually relatively large. For instance, the YOLO input image size is 416 × 416, and the FPN input image size exceeds 800 × 800. Although a large input image has certain advantages, it imposes a heavy computational burden. The results of ThunderNet indicate that the input image size of a CNN needs to be compatible with its backbone network. When a large backbone network is used to extract features from a small image, the resolution of the extracted features is low, and detailed features are lost. Conversely, when a small backbone network is used to extract features from a large image, its limited capacity means that information in the image is lost. In practical scenarios, satellite images are sent to the detection model after being sliced, and the model is implemented on dedicated hardware, which limits the input image size. The selected image size needs to be suitable and simple for hardware implementation. Taking these factors into account, the input image size is set to 256 × 256. The input to the model in this work is an RGB image.

3.1.2. Backbone Network

The basic function of the backbone network is to extract features from the image, which has a significant impact on the performance of object detection. The design goal of the MSF-SNET backbone network is to obtain as many object features as possible at a low computational cost.

Previous work [

60] has proved the importance of the receptive field to the object detection model. Large objects in remote sensing images, such as baseball fields, basketball courts, and ships, require a larger receptive field to extract contextual information and related feature information. Large receptive fields can effectively represent more features of large objects. A small receptive field perceives only local information about the object, which lowers the precision rate. Large object detection therefore requires deeper features with a large receptive field, while small object detection requires low-level features.

Besides, low-level features contain a large amount of spatial location information. The localization subtask is more sensitive to low-level features, whereas deep features are more discriminative and more important to the classification subtask. The lightweight backbone network needs to consider the impact of both kinds of features on the two subtasks. Therefore, our backbone uses multiple stages to extract both deep and low-level features, so that MSF-SNET can use the discriminability of deep features to improve classification accuracy and the spatial detail of low-level features to improve localization accuracy.

While previous lightweight object detection models can improve speed and reduce model parameters, they still have limitations: for example, ShuffleNetV1/V2 have a restricted receptive field, MobileNetV2 lacks low-level features, and Xception suffers from insufficient high-level features under small computational budgets [

54].

ThunderNet considers the above factors and makes improvements based on ShuffleNet V2, but it can still be improved.

Based on these insights, we built a more lightweight backbone network based on the backbone network (SNET) of ThunderNet.

First, we replace the 5 × 5 depthwise convolutions with 3 × 3 separable atrous convolutions (dilation rate = 2) [

72]. It effectively reduces the computational cost and the number of parameters while maintaining its receptive field.
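The claim that the receptive field is preserved follows from the standard formula for the span of a dilated kernel, k + (k - 1)(d - 1). The short sketch below checks this and compares depthwise weight counts; the channel count of 128 is a hypothetical value chosen only for illustration, and biases are ignored.

```python
def effective_kernel(kernel, dilation):
    """Span covered by a dilated kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)


def depthwise_weights(kernel, channels):
    """Weights in a depthwise convolution: one k x k filter per channel, no bias."""
    return kernel * kernel * channels


channels = 128  # hypothetical channel count, for illustration only
print(effective_kernel(3, 2))             # 5, the same span as a 5 x 5 kernel
print(depthwise_weights(5, channels))     # 3200
print(depthwise_weights(3, channels))     # 1152
```

Under these assumptions the depthwise part needs 9/25 of the original weights and multiplications, while the 1 × 1 pointwise part of the separable convolution is unaffected.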

Second, to adapt to the detection of super-large objects, three additional convolutional layers are added to extract deeper features. At the same time, to perform position regression and improve the detection ability of small objects, and balance the deep and low-level features, three feature layers from the backbone network together with the last three layers are used as detection feature layers.

The last convolutional layer of stage2 is denoted as C2, the last convolutional layer of stage3 is denoted as C3, and the last convolutional layer of Stage4 is denoted as C4. Three newly added convolutional layers are denoted as C5, C6, and C7 respectively. Object detection and position regression are performed on the output feature maps of these 6 convolutional layers.

Table 1 shows the overall architecture of the backbone.

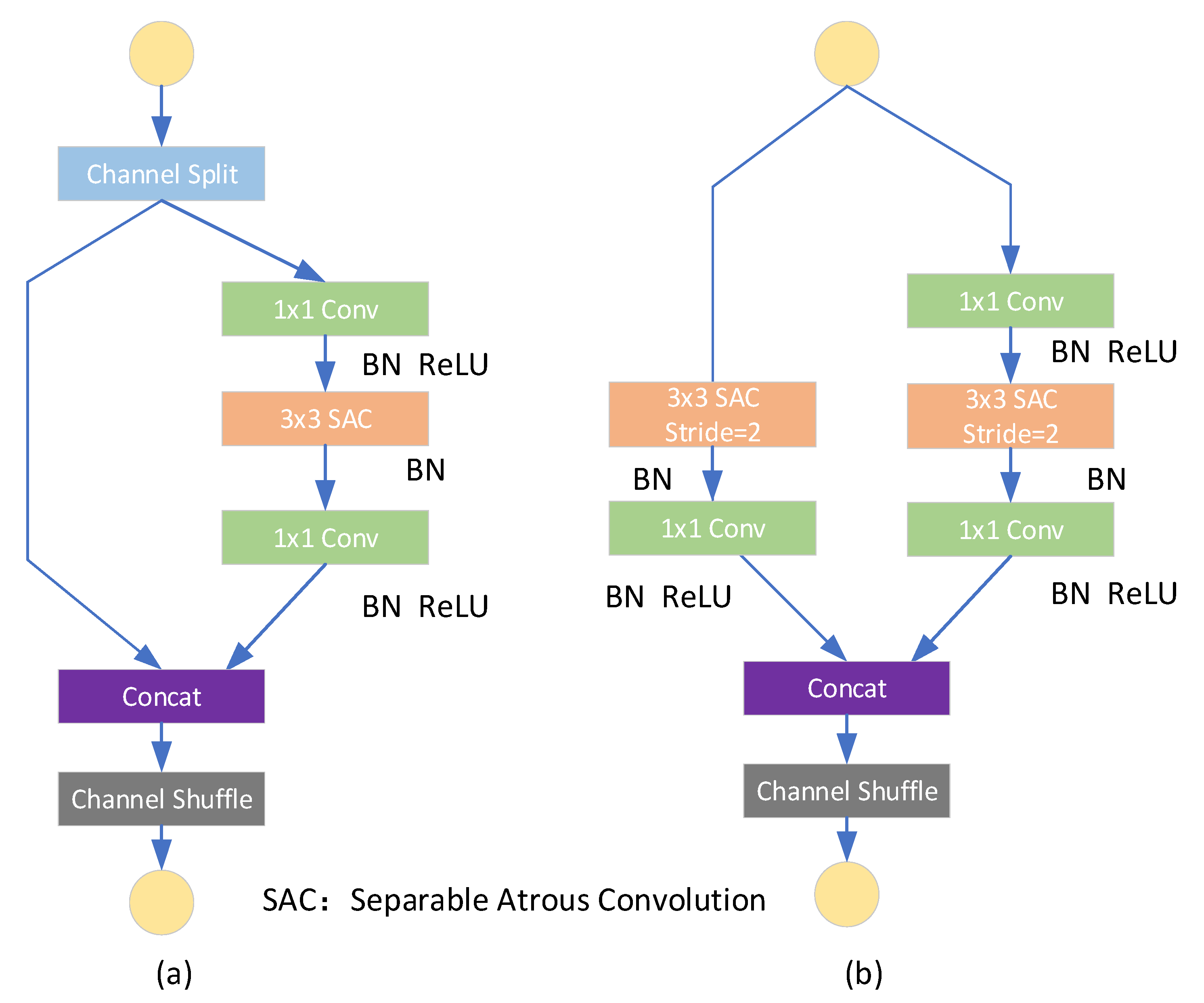

The basic building blocks of MSF-SNET are shown in

Figure 3. In

Table 1, the basic components of stage2, stage3, and stage4 use the same units as ShuffleNet V2. Stage2 is composed of one SDU and three BUs cascaded with each other. The SDU performs 2× downsampling, and the BU is the basic component of the backbone network.

As shown in

Table 1, the backbone is made up of three stages and four convolution layers. The three stages are built from two basic units, the spatial down-sampling unit (SDU) and the basic unit (BU), which are shown in

Figure 3. The first layer in the backbone is a 3 × 3 convolution layer with twenty-four filters followed by a max-pooling layer. The max-pooling layer uses a window of size 3 × 3 with a stride of 2. Stage 2, stage 3, and stage 4 are the main blocks of the backbone. One SDU and three BUs are stacked to form stage 2 and stage 4, and one SDU and seven BUs are stacked to form stage 3. The output size of each layer or block is shown in

Table 1. The output of the previous layer is the input of the next layer, so the input size and output size of each layer can be obtained according to

Table 1.

We compared the proposed framework with ThunderNet in terms of network parameters and computational cost (Flops, where one Flop stands for one multiplication and one addition operation). The comparison results are given in

Table 2. They show that the network parameters of the proposed framework are reduced by 4.2% and the computational cost is reduced by 5.0% when the input image size is 256 × 256. By reducing the number of parameters and the computational complexity, the proposed algorithm is more efficient.

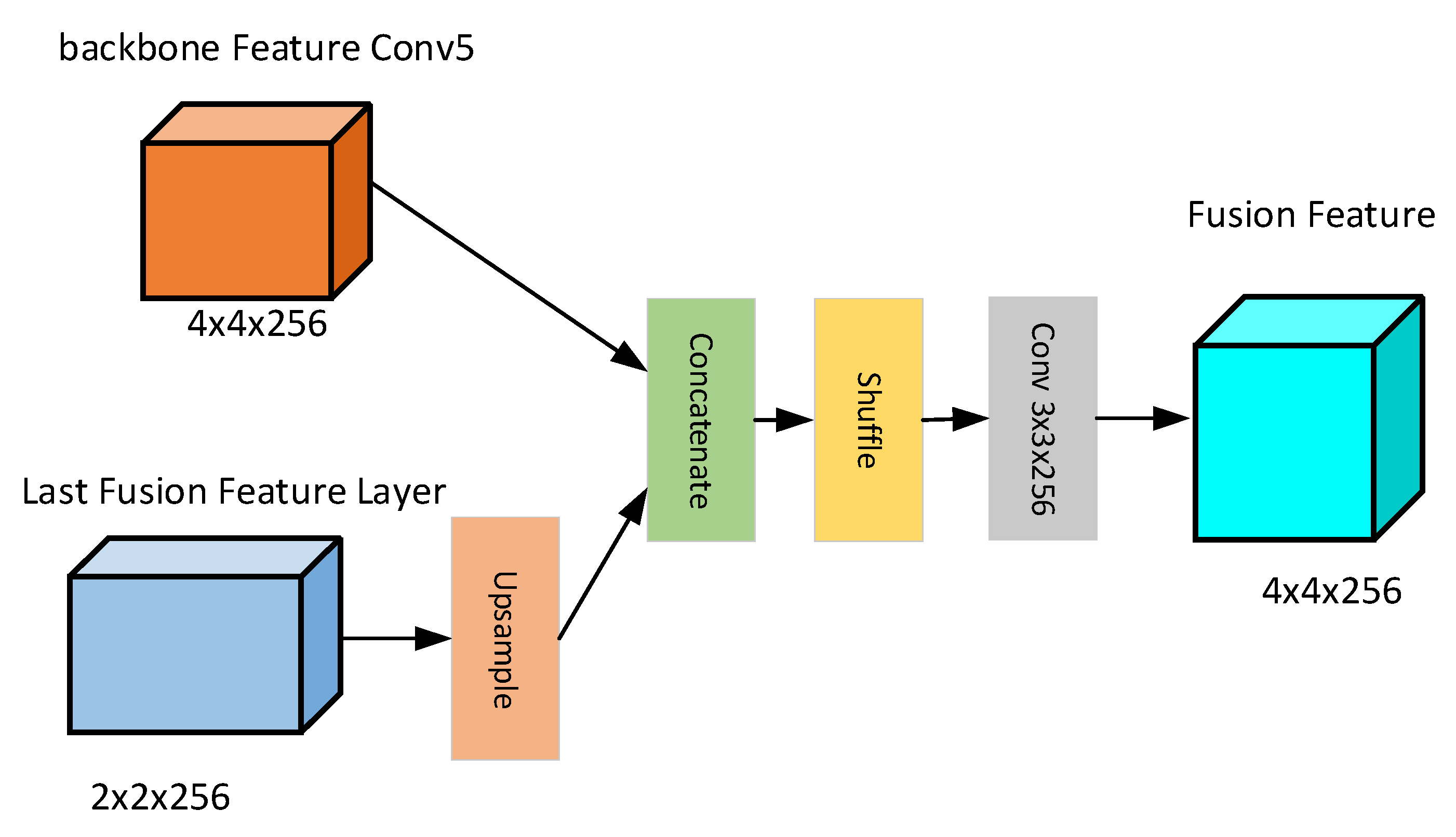

3.2. Feature Fusion

To improve the accuracy of classification and location, deep features and low-level features are combined so that the discriminability of deep features can be fully utilized. The most common feature fusion method is the feature pyramid network (FPN) [

73]. While improving the detection performance, FPN tends to obtain a limited receptive field and an increase of the number of blocks will lead to a large parameter burden and memory consumption. Thus, it is not suitable for application in lightweight networks. Inspired by previous work [

53,

74], a lightweight feature fusion method is proposed. The proposed method is illustrated in

Figure 4.

The output features of the last layer are up-sampled and then passed through a 3 × 3 convolution to obtain features of the same size as the previous node. The two features are then concatenated, and a feature shuffle is performed to form the final detection feature map. For example, the last output feature map C7 is up-sampled and convolved to obtain C7_lat, which has the same size as C6. C7_lat and C6 are concatenated and shuffled to form a feature map f6 for object detection. Then f6 is up-sampled and convolved to obtain C6_lat, and C6_lat and C5 are concatenated and shuffled to form the feature map f5 at the next scale. By analogy, all object detection feature maps are obtained. This method fuses deep features and low-level features at little computational cost.
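The data flow of one fusion step can be sketched on tiny nested lists laid out as [channel][row][column]. This is only an illustration under stated assumptions: nearest-neighbour up-sampling and a two-group shuffle are choices made here, the 3 × 3 convolution is omitted, and the function names are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x up-sampling of a [C][H][W] nested list."""
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [v for v in row for _ in range(2)]  # repeat each column
            rows.append(wide)
            rows.append(list(wide))                    # repeat each row
        out.append(rows)
    return out


def channel_shuffle(fmap, groups=2):
    """Interleave channels across groups (ShuffleNet-style shuffle)."""
    n = len(fmap)
    assert n % groups == 0
    per_group = n // groups
    return [fmap[g * per_group + i] for i in range(per_group) for g in range(groups)]


def fuse(deep, shallow):
    """Up-sample the deeper map, concatenate with the shallower one, shuffle."""
    lateral = upsample2x(deep)  # the 3 x 3 convolution is omitted in this sketch
    return channel_shuffle(shallow + lateral, groups=2)


c7 = [[[7]], [[70]]]                          # 2 channels, 1 x 1
c6 = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]     # 2 channels, 2 x 2
f6 = fuse(c7, c6)
print(len(f6), f6[1])                         # 4 [[7, 7], [7, 7]]
```

After the shuffle, channels from the shallow and deep maps alternate, so a following grouped or depthwise operation sees information from both sources.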

Many references have concluded that although the fusion of multi-layer features can improve the performance of the algorithm, it requires considerable computing resources. SSD experimental results suggest that feature fusion can improve the accuracy by about 1-1.5%. Although the performance improved slightly, the computational cost of feature fusion was 5.04G Flops (one Flop being one multiplication and one addition), an increase of 14.2%. This cost is too high relative to the performance improvement that feature fusion brings. Unlike DSSD, our method abandons the use of deconvolution. Instead, the fused features are directly up-sampled and concatenated with the features obtained from the backbone network. The features are then shuffled and convolved to obtain the fused features of the upper layer. The experimental results demonstrate that this method achieves performance similar to that of the DSSD feature fusion method at a lower computational cost.

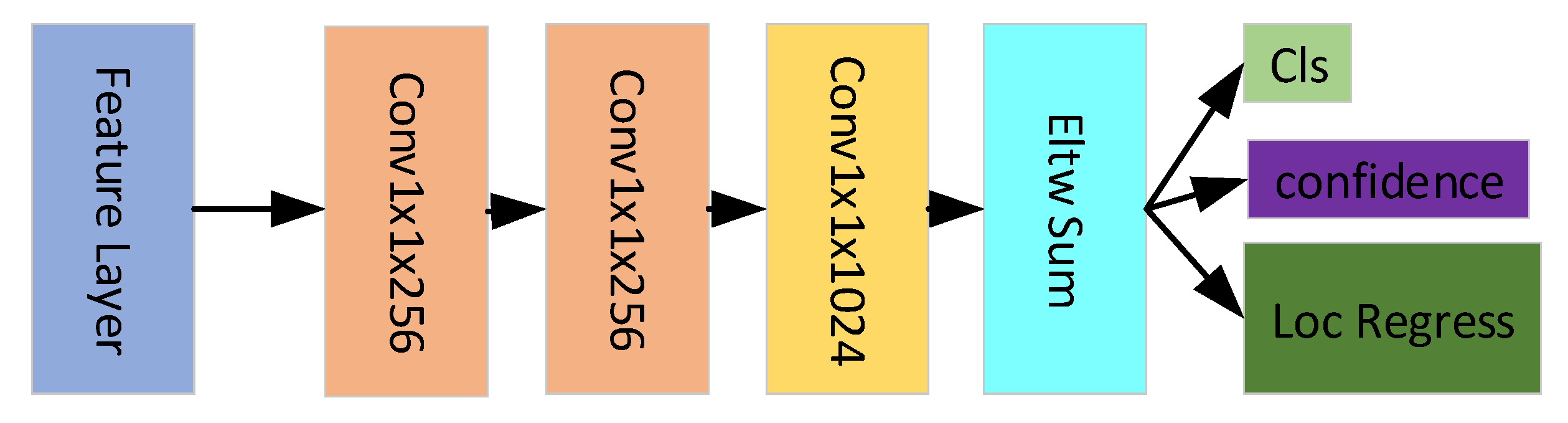

3.3. Predict Module

The RCNN series represents two-stage detection algorithms, while YOLO and SSD are typical one-stage detection algorithms. In two-stage detection algorithms, the proposed boxes are first obtained through the region proposal network (RPN), and then position regression and classification are performed on this basis. A one-stage object detection network treats each point on the feature map as a detection point, which corresponds to several anchors, and then performs position regression and classification based on these anchors. Compared with the one-stage algorithm, the two-stage algorithm requires an additional RPN to propose candidate boxes. It therefore takes up extra storage space for parameters and extra computing resources, while its accuracy is slightly higher than that of the one-stage object detection algorithm. For onboard object detection, a balance needs to be struck between accuracy and computational cost; high accuracy is not the only goal. Moreover, the detection part should be simple and efficient so that the computational complexity is reduced.

A one-stage detection network is more suitable for satellite applications due to its simplicity and rapidity. To this end, we adopt the one-stage network as the basic detection method. Inspired by SSD and DSSD algorithms, objects are detected on multiple feature scales. The second stage output, third stage output, and fourth stage output of SNET are employed as the detection feature maps. These features are low-level features that contain rich location information and are more beneficial to small object detection. Besides, to detect large-scale objects, another three convolution layers are added to the network, thus three more feature maps are generated respectively. These three feature maps are extracted from deep features that contain rich semantic information. Therefore, it is convenient for large object detection.

After comparing four kinds of detection modules, the DSSD authors report that the detection module shown in

Figure 5 achieves the highest efficiency. In this paper, this detection module is used as the object detection part.

3.4. Detection Architecture

As shown in

Figure 2, the output size of stage2, stage3, and stage4 are 32 × 32 × 128, 16 × 16 × 256, 8 × 8 × 512 respectively. The feature map size is reduced to half of the original size after each stage by convolution with a stride of 2. To build a rich feature representation of the original image, another three convolution layers named C5, C6, and C7 are added. The feature map size of each layer is also reduced to half of the original size after each layer by maxpooling with a stride of 2. So, the output size of C5, C6, and C7 are 4 × 4 × 256, 2 × 2 × 256, and 1 × 1 × 256 respectively. The strategy for feature fusion shown in

Figure 4 is adopted to generate the fused feature maps, i.e., f2, f3, f4, f5, and f6.
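The spatial sizes quoted above can be reproduced by halving the resolution at every down-sampling step. The sketch below assumes that the first 3 × 3 convolution also uses a stride of 2, which is not stated explicitly but is needed for stage2 to produce a 32 × 32 map from a 256 × 256 input.

```python
def trace_sizes(input_size=256):
    """Spatial size after each stride-2 step of the backbone."""
    # Assumption: conv1 has stride 2; the other steps halve the size as described.
    steps = ["conv1", "maxpool", "stage2", "stage3", "stage4", "C5", "C6", "C7"]
    sizes = {}
    size = input_size
    for name in steps:
        size //= 2
        sizes[name] = size
    return sizes


sizes = trace_sizes()
detection_maps = [sizes[k] for k in ("stage2", "stage3", "stage4", "C5", "C6", "C7")]
print(detection_maps)   # [32, 16, 8, 4, 2, 1]
```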

The prediction module shown in

Figure 5 is used as a detector to perform detection. Six detectors (PMA, PMB, PMC, PMD, PME, and PMF) are set up to perform detection on the different fused feature maps and the output of C7 simultaneously. In this way, the six detectors, with their diverse sizes and sensitivities, can take advantage of both low-level and high-level features to improve detection performance.

In the YOLO network, the input image is split into a grid of S × S cells. A cell is responsible for detecting an object if the center of the object falls into that cell. Similarly, these six detectors make detections on feature maps of six different sizes, with strides of 256, 128, 64, 32, 16, and 8 respectively. This means that, with an input image of size 256 × 256, we make detections on scales 1 × 1, 2 × 2, 4 × 4, 8 × 8, 16 × 16, and 32 × 32. The 1 × 1 and 2 × 2 layers are responsible for detecting large objects, the 4 × 4 and 8 × 8 layers detect medium objects, and the 16 × 16 and 32 × 32 layers detect smaller objects. Therefore, the six detectors differ in sensitivity and complement each other.
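The relation between grid size, stride, and the cell responsible for an object can be written down directly. In this minimal sketch the function name and the example center (100, 200) are hypothetical; the stride is simply the input size divided by the grid size.

```python
def responsible_cell(center_x, center_y, grid, image_size=256):
    """Column and row of the grid cell that contains an object center."""
    stride = image_size // grid
    return int(center_x // stride), int(center_y // stride)


grids = [1, 2, 4, 8, 16, 32]
strides = [256 // g for g in grids]
print(strides)                              # [256, 128, 64, 32, 16, 8]

# The same center falls into a different cell on each detection scale.
print(responsible_cell(100, 200, grid=8))   # (3, 6)
print(responsible_cell(100, 200, grid=32))  # (12, 25)
```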

3.5. Model Training

MSF-SNET uses multiple detectors to detect objects on feature maps of different scales. The feature map sizes are 32 × 32, 16 × 16, 8 × 8, 4 × 4, 2 × 2, and 1 × 1 respectively. The network adopts the same training strategy as YOLO V3. Several preselected boxes (anchors) are preset on each grid cell of each feature map. Each preselected box has a different scale and aspect ratio. During training, the preselected boxes are matched with the real boxes (GT boxes). The matching rule is that the intersection over union (IOU) between the preselected box and the real box is greater than a certain threshold (IOU > 0.5). To remove overlapping detections, non-maximum suppression (NMS) is used for post-processing, with the NMS threshold set to 0.5.
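Both the matching rule and the post-processing step rely on the IOU between two boxes. The following is a minimal sketch of IOU and of the common greedy form of NMS, assuming boxes in (x1, y1, x2, y2) format; the greedy variant and the example boxes are assumptions, since the text does not specify which NMS variant is used.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, threshold=0.5):
    """Greedy NMS: keep a box unless it overlaps a higher-scoring kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))   # [0, 2]; the second box overlaps the first with IOU 81/119
```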

After the above matching process, a small number of predicted bounding boxes can be matched with the real bounding boxes, while most of the predicted bounding boxes are filtered. This will result in an imbalance between positive and negative samples. To avoid this situation, we sort the probability of each predicted bounding box and discard the predicted bounding box with low probability to adjust the ratio between positive and negative samples to 1:3. This will make the training process converge more easily.
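The 1:3 balancing step can be sketched as a ranking over the unmatched boxes. This sketch assumes that the "probability" being sorted is a per-box score for the negatives and that the highest-scoring negatives are the ones kept; the function name and the example scores are hypothetical.

```python
def select_negatives(negative_scores, num_positives, ratio=3):
    """Indices of the negatives kept for training, highest score first."""
    limit = ratio * num_positives
    ranked = sorted(range(len(negative_scores)),
                    key=lambda i: negative_scores[i], reverse=True)
    return ranked[:limit]   # low-scoring negatives beyond the limit are discarded


scores = [0.1, 0.9, 0.3, 0.8, 0.05, 0.6, 0.2]
print(select_negatives(scores, num_positives=1))   # [1, 3, 5]
```

With one positive sample, only the three highest-scoring negatives contribute, which gives the 1:3 ratio described above.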

Normally, the number of large objects in an image is much smaller than the number of tiny objects. We take this factor into consideration when setting anchors: more anchors are set on each grid cell of the low-level feature maps than on each grid cell of the deep feature maps. Six anchors are set on each grid cell of the first two feature maps, four anchors on each grid cell of the middle two feature maps, and two anchors on each grid cell of the last two feature maps. The final setting is [6,6,4,4,2,2], which reduces the number of anchors on the deeper feature maps. The aspect ratios of the anchors are set to [1,2,3,1/2,1/3,1]. Each anchor corresponds to six parameters: four positional parameters, one confidence parameter, and one class parameter.
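The anchor budget implied by this setting can be worked out with a short sketch. It assumes that the "first two" feature maps are the 32 × 32 and 16 × 16 maps, and that an anchor of base size `s` and aspect ratio `r` has width `s·sqrt(r)` and height `s/sqrt(r)` (a common area-preserving convention, not confirmed by the text). The base size is a hypothetical parameter.

```python
import math

GRID_SIZES = [32, 16, 8, 4, 2, 1]        # feature map sizes, low-level to deep
ANCHORS_PER_CELL = [6, 6, 4, 4, 2, 2]    # anchors per grid cell on each map
PARAMS_PER_ANCHOR = 6                    # 4 position + 1 confidence + 1 class


def total_anchors(grids=GRID_SIZES, per_cell=ANCHORS_PER_CELL):
    """Total number of anchors over all feature maps."""
    return sum(g * g * n for g, n in zip(grids, per_cell))


def anchor_shape(base_size, aspect_ratio):
    """Width and height of an anchor with the given base size and
    width/height ratio, keeping the area equal to base_size ** 2."""
    root = math.sqrt(aspect_ratio)
    return base_size * root, base_size / root


if __name__ == "__main__":
    n = total_anchors()
    print(n, n * PARAMS_PER_ANCHOR)      # anchors and raw network outputs
```

Under these assumptions the six feature maps carry 8010 anchors in total, that is, 48,060 output values per image.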

As shown in Equation (1), the loss function of YOLO V3 is adopted as the loss function of MSF-SNET, which consists of three parts: position loss, class loss, and confidence loss.

For each predicted bounding box, the four parameters output by the network are the normalized coordinate offset values $t_x$ and $t_y$ of the object center point and the scaling factors $t_w$ and $t_h$ of the bounding box. The coordinates of the center point and the width and height of the predicted bounding box are represented by $b_x$, $b_y$, $b_w$, and $b_h$ respectively. $p_w$ and $p_h$ are the width and height of the anchor mapped to the feature map. The relationship between these parameters can be expressed by Equation (2).
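Since MSF-SNET adopts the YOLO V3 formulation, the decoding of a predicted box can be sketched with the standard YOLO V3 relations: the center is the sigmoid of the predicted offset plus the grid cell index, and the size is the anchor size scaled by the exponential of the predicted factor. The symbol names (`tx`, `ty`, `tw`, `th`, `pw`, `ph`) follow YOLO V3 convention, and the cell indices `cx`, `cy` are an assumption not stated in the text above.

```python
import math


def sigmoid(x):
    """Logistic function, mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(tx, ty, tw, th, cx, cy, pw, ph):
    """Decode raw network outputs into a box on the feature map.

    tx, ty : predicted center offsets (before the sigmoid)
    tw, th : predicted log-scale factors for width and height
    cx, cy : column and row index of the grid cell (assumed)
    pw, ph : anchor width and height on the feature map
    Returns (bx, by, bw, bh) in feature-map units.
    """
    bx = sigmoid(tx) + cx
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)
    bh = ph * math.exp(th)
    return bx, by, bw, bh
```

With all four raw outputs equal to zero, the decoded box sits at the center of its cell and has exactly the anchor's size.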

The position loss function uses squared error loss, which can be expressed by Equation (3).

where $\mathbb{1}_{ij}^{obj}$ is a binary factor whose value is 1 only when the IOU between the j-th predicted bounding box in the i-th grid cell and the ground truth box is the largest, and 0 otherwise. That is, only the predicted bounding boxes with the largest IOU with the ground truth boxes contribute to the loss function; all others are excluded.

To improve the detection rate of the model for small objects, a penalty factor is added to the loss function, which can be expressed as Equation (4).

SE stands for squared error and can be expressed as Equation (5).

The class loss function adopts the cross-entropy loss function, which can be expressed as Equation (6).

The confidence loss function also uses the cross-entropy loss function. Unlike the class loss function, which only calculates the loss for the predicted bounding box with the largest IOU, the confidence loss function is calculated over all predicted bounding boxes, which can be expressed as Equation (7).

where BCE represents the binary cross-entropy loss, which can be expressed as Equation (8).
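The two elementary terms used by these losses can be sketched in their standard textbook form. This is a generic illustration under the usual definitions of squared error and binary cross-entropy, not a reproduction of Equations (5) and (8); the clipping constant `eps` is an added assumption for numerical safety.

```python
import math


def squared_error(pred, target):
    """Squared error between a prediction and its target."""
    return (pred - target) ** 2


def bce(p, y, eps=1e-7):
    """Binary cross-entropy between a predicted probability p in [0, 1]
    and a binary label y in {0, 1}. p is clipped to avoid log(0)."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
```

A confident correct prediction gives a loss near zero, while a confident wrong prediction is penalized heavily, which is why cross-entropy suits the class and confidence terms.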

The parameter λ involved in the above loss function is the adjustment factor of the loss function, and the proportion of each part of the loss function can be adjusted according to the actual situation. Here λ is equal to 1.

To strengthen the robustness and generalization of the model, the image data are augmented. Since remote sensing images are obtained from overhead, the same object may appear in different directions and from multiple perspectives. Therefore, image rotation, object rotation, and image scaling are used to augment the data, with a scaling range of [0.5, 2]. In both the training and testing processes, the image size input to the model is 256 × 256. Therefore, the remote sensing images are cropped into 256 × 256 tiles with a 20% overlap rate before being sent to the model. Besides, we randomly add objects to the images to improve the detection performance on small objects.
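The cropping step can be sketched as a sliding window along each image axis. The sketch assumes that a 20% overlap corresponds to a stride of `int(256 × 0.8) = 204` pixels and that the last tile is shifted back to end exactly at the image border; neither detail is specified in the text, and `tile_origins` is a hypothetical helper.

```python
def tile_origins(length, tile=256, overlap=0.2):
    """Start offsets of tiles along one image axis.

    length  : image width or height in pixels
    tile    : tile size in pixels
    overlap : fraction of each tile shared with its neighbor
    """
    if length <= tile:
        return [0]                              # one tile (padding is left to the caller)
    stride = int(tile * (1 - overlap))          # 204 px for the defaults
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] != length - tile:
        origins.append(length - tile)           # final tile flush with the border
    return origins


def tile_boxes(width, height, tile=256, overlap=0.2):
    """All tile boxes (x1, y1, x2, y2) covering a width x height image."""
    return [(x, y, x + tile, y + tile)
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]
```

For an 800 × 800 DIOR image this yields four offsets per axis (0, 204, 408, 544) and therefore 16 tiles; detections from overlapping tiles would then need to be merged, for example with NMS.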

4. Experimental Results

To verify and evaluate the performance of the proposed MSF-SNET framework, the public dataset NWPU VHR-10 and the DIOR dataset are used in our work. The following is a detailed description of the experimental environment, test procedures, and dataset used in our work.

4.1. Datasets

The NWPU VHR-10 dataset contains ten types of objects. It consists of a total of 650 images, including 757 airplanes, 302 ships, 655 storage tanks, 390 baseball diamonds, 524 tennis courts, 159 basketball courts, 163 ground track fields, 224 harbors, 124 bridges, and 477 vehicles, all with manually annotated bounding boxes. The maximum size of images in the NWPU VHR-10 dataset is 1728 × 1028 pixels, and the minimum size is 533 × 597 pixels. The DIOR dataset contains 20 types of objects and a total of 23,463 images. The size of images in the DIOR dataset is 800 × 800 pixels.

The number of images in NWPU VHR-10 is relatively small compared with the DIOR dataset. Two datasets with different numbers of images are used to verify whether the model is effective on datasets of different sizes. Meanwhile, the DIOR dataset covers more object types, which makes it possible to verify the adaptability of the model.

4.2. Implementation

Because the number of images in the NWPU VHR-10 dataset is small, 80% of the images were randomly selected as the training set and 20% as the test set in our experiment. In contrast, the DIOR dataset is larger, so 90% of its images were randomly selected as the training set and 10% as the test set. Besides, to improve the generalization ability of the model, the data within these two datasets are augmented as described in Section 3.3.

The MSF-SNET model is an end-to-end model. We implemented the model using the open-source PyTorch 1.3 framework and trained it using graphics processing units (GPUs). Since MSF-SNET is modified from SNET, we use the pre-trained weights of SNET on the VOC2007 dataset as the initial weights of MSF-SNET and then fine-tune the model on the NWPU VHR-10 and DIOR datasets. In our experiment, we used the stochastic gradient descent (SGD) algorithm to update the parameters. The batch size is 16, and a total of 300 epochs were performed. The learning rate is 0.01 for the first 100 epochs, 0.001 for the middle 100 epochs, and 0.0001 for the last 100 epochs. The momentum and weight decay were set to 0.9 and 0.0005 respectively. The PC used in the experiment runs Ubuntu 18.04, with an Intel i7-7700 CPU, 16 GB of RAM, and an NVIDIA GeForce GTX1080 GPU.
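The training hyperparameters above can be collected in a small configuration sketch. It is written in plain Python so that it runs without a deep learning framework; the names `TRAIN_CONFIG`, `LR_STAGES`, and `learning_rate` are hypothetical, and epochs are assumed to be counted from zero.

```python
TRAIN_CONFIG = {
    "batch_size": 16,
    "epochs": 300,
    "momentum": 0.9,
    "weight_decay": 0.0005,
}

# (first epoch NOT covered by the stage, learning rate of the stage)
LR_STAGES = [(100, 0.01), (200, 0.001), (300, 0.0001)]


def learning_rate(epoch):
    """Piecewise-constant learning rate for a zero-based epoch index."""
    for end, lr in LR_STAGES:
        if epoch < end:
            return lr
    raise ValueError("epoch %d is beyond the 300-epoch schedule" % epoch)


if __name__ == "__main__":
    for e in (0, 99, 100, 199, 200, 299):
        print(e, learning_rate(e))
```

In PyTorch, an equivalent setup would typically combine `torch.optim.SGD` (with its `momentum` and `weight_decay` arguments) and `torch.optim.lr_scheduler.MultiStepLR` with milestones at epochs 100 and 200 and a decay factor of 0.1.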

4.3. Evaluation Metrics

The precision-recall curve, average precision (AP), and mean average precision (mAP) are widely applied as quantitative evaluation indicators for object detection. In this paper, these indicators are also adopted to evaluate the performance of the proposed algorithm. Besides, the time complexity and space complexity of the proposed framework are also evaluated using the cost density metric.

Precision and recall are defined as Equations (9) and (10).

Precision indicates how many of all predicted positive objects are correct, and recall indicates how many of all actual positive objects are correctly predicted. AP is the average of the precision values over recall rates between 0 and 1. AP is a commonly used index to measure the detection accuracy of a detector. For example, algorithms such as RCNN, YOLO, and SSD also use this evaluation index. In practical applications, the calculation method is as Equation (11)

where

.

For single-class objects, AP is used to measure algorithm performance, but in multi-class object detection tasks, mAP (see Equation (12)) is used to measure detector performance. It is defined as the average value of AP over all object classes.
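The metrics can be illustrated with a minimal sketch. Precision and recall follow their standard definitions. For AP, the sketch assumes the all-point interpolated area under the precision-recall curve, which is one common convention and may differ from the exact form of Equation (11). Each detection is assumed to be a `(score, is_true_positive)` pair that has already been matched against the ground truth.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(detections, num_gt):
    """All-point interpolated AP for one class.

    detections : list of (score, is_true_positive) pairs
    num_gt     : number of ground-truth objects of this class
    """
    if num_gt == 0 or not detections:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        tp, fp = (tp + 1, fp) if is_tp else (tp, fp + 1)
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision monotonically non-increasing (the PR envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP as the plain mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, three detections ranked as correct, wrong, correct against two ground-truth objects give an AP of 5/6 under this convention.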

The time complexity of a model is measured by the amount of calculation it consumes: the greater the amount of calculation, the higher the time complexity. In this paper, the time complexity of the model is measured by counting the number of Flops consumed in the testing phase, where one Flop is defined as one multiply-accumulate (MAC) operation. Besides, the number of parameters is also an important indicator of model performance. It reflects the space complexity of the algorithm, which determines whether the model can be deployed.
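To make the two quantities concrete, the sketch below counts the parameters and MACs of a single convolution layer using the standard formulas. The layer shapes in the usage example are hypothetical and do not describe the actual MSF-SNET layers; `conv2d_cost` is an illustrative helper.

```python
def conv2d_cost(c_in, c_out, kernel, out_h, out_w, groups=1, bias=True):
    """Parameter count and multiply-accumulate count of one 2D convolution.

    c_in, c_out  : input and output channels
    kernel       : side length of the square kernel
    out_h, out_w : spatial size of the output feature map
    groups       : groups=c_in (with c_out=c_in) gives a depthwise convolution
    Returns (params, macs).
    """
    weights = (c_in // groups) * c_out * kernel * kernel
    params = weights + (c_out if bias else 0)
    macs = weights * out_h * out_w      # one MAC per weight per output position
    return params, macs


if __name__ == "__main__":
    # Hypothetical layers: a standard 3x3 convolution and a depthwise 3x3 one.
    print(conv2d_cost(3, 16, 3, 128, 128))
    print(conv2d_cost(16, 16, 3, 64, 64, groups=16, bias=False))
```

Summing these per-layer values over a whole network gives the total number of parameters and the total Flops in the sense used above; the depthwise example shows why lightweight backbones built from such layers need far fewer MACs.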

Since the algorithms run on different platforms with different performance, their available computing power is not the same, and a comparison of the average running time per image does not reflect the pros and cons of each algorithm. To compare the algorithms fairly, a cost density evaluation metric is proposed, which is related to the number of parameters and the computational cost required by the algorithm. The calculation method is as Equation (13).

The smaller the cost density is, the better performance the algorithm can achieve while consuming the same resources.

4.4. Experimental Results and Analysis

4.4.1. Results for NWPU VHR-10 dataset

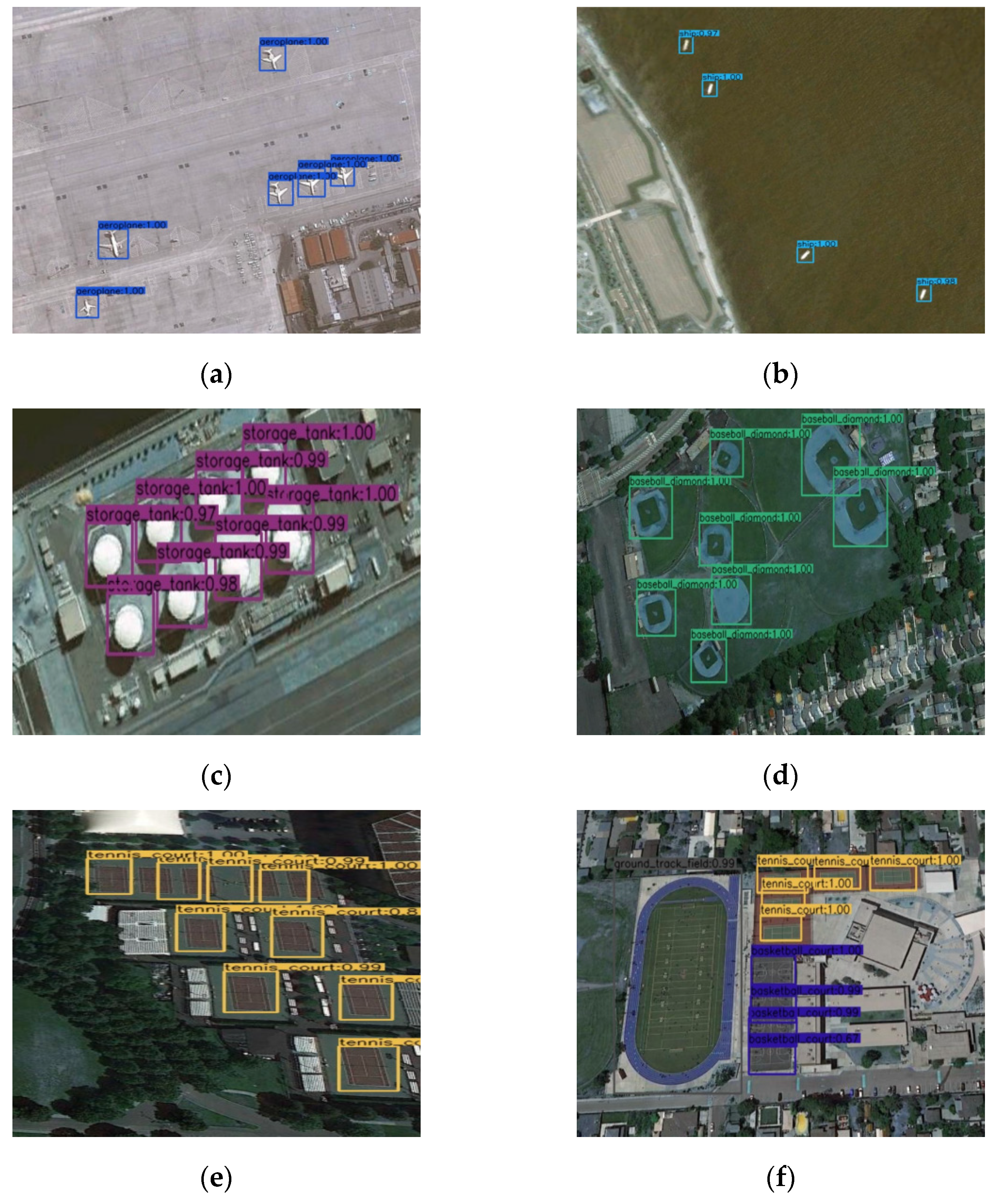

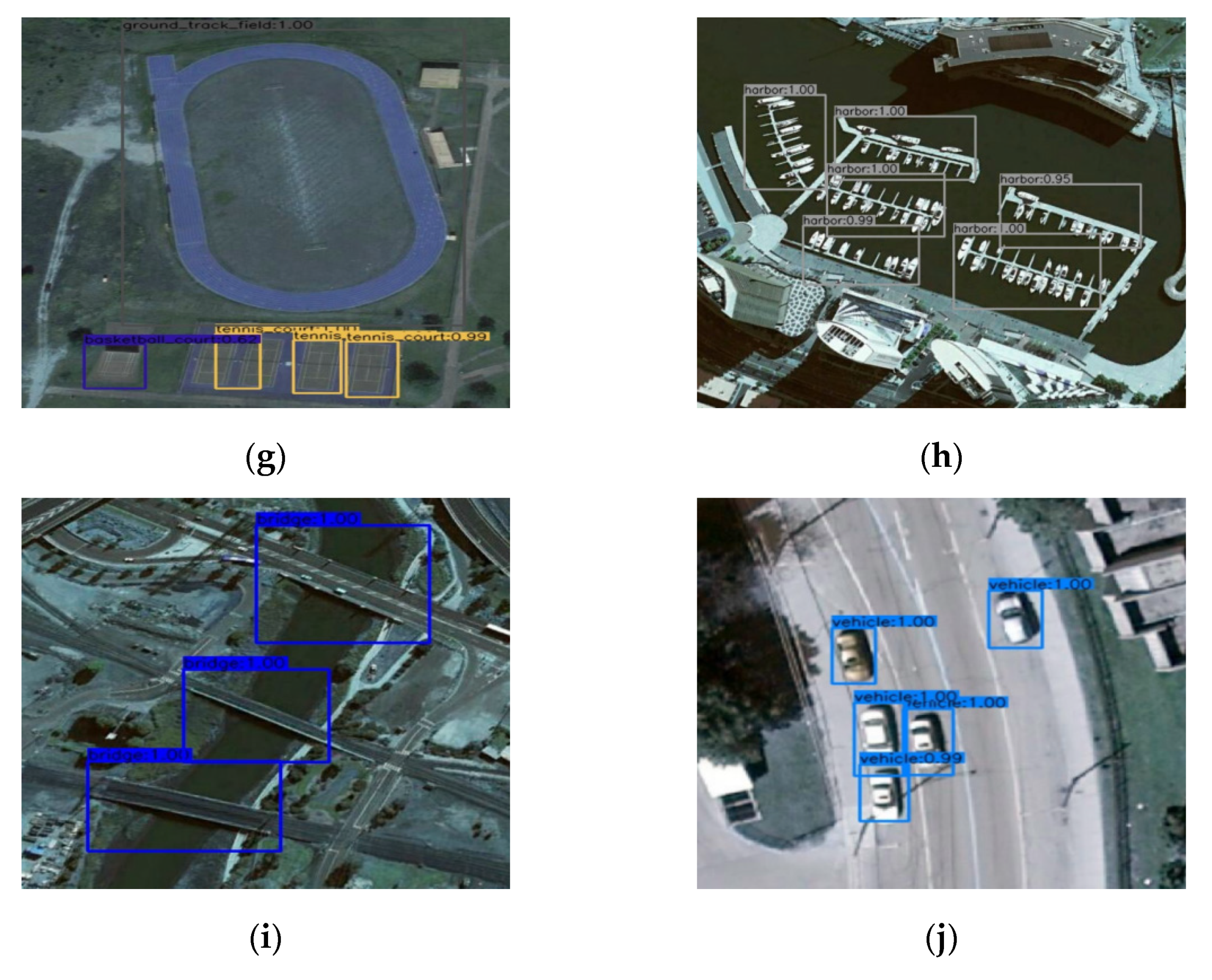

Some examples of the detection results of the proposed MSF-SNET framework on NWPU VHR-10 are illustrated in Figure 6. The results show that the proposed algorithm is effective in detecting all ten classes of objects. It is effective not only for large objects (for example, the ground track field in Figure 6f,g, the harbor in Figure 6h, and the bridge in Figure 6i) but also for small objects. For example, it can detect the small ship in Figure 6b and the small vehicle in Figure 6j.

To examine and evaluate the performance of the proposed MSF-SNET framework, we compared it with five other benchmark algorithms on the AP and mAP indicators. The results are presented in Table 3.

As shown in Table 3, the MSF-SNET algorithm outperforms the Thundernet algorithm, which is due to the multi-scale feature detection method adopted in our algorithm and the improvement brought by the fusion of deep and low-level features. Compared with Thundernet, the MSF-SNET algorithm has a higher average precision (AP) in all categories. In particular, the AP on the Storage tank, Tennis court, and Vehicle categories exceeds that of Thundernet by nearly 10%, and the mAP is 9.92% higher than that of Thundernet.

Compared with CBFF-SSD [14], MSF-SNET has a slightly higher AP only in the Bridge category; its AP in the other categories is slightly lower than that of CBFF-SSD, and its mAP is 9% lower. Although the MSF-SNET algorithm is inferior to the CBFF-SSD algorithm in accuracy, it has obvious advantages in terms of the number of parameters and computational complexity. MSF-SNET has 1.52M parameters, whereas CBFF-SSD has 14.74M, about 9.7 times as many. The computational cost of MSF-SNET is 0.327 GFlops, while that of CBFF-SSD is 5.51 GFlops, which is 16.85 times that of the algorithm proposed in this article.

As shown in Table 4, the Cost Density of MSF-SNET is the lowest among these algorithms, at only 0.39. This implies that the algorithm proposed in this paper achieves the best performance for the same computational resources.

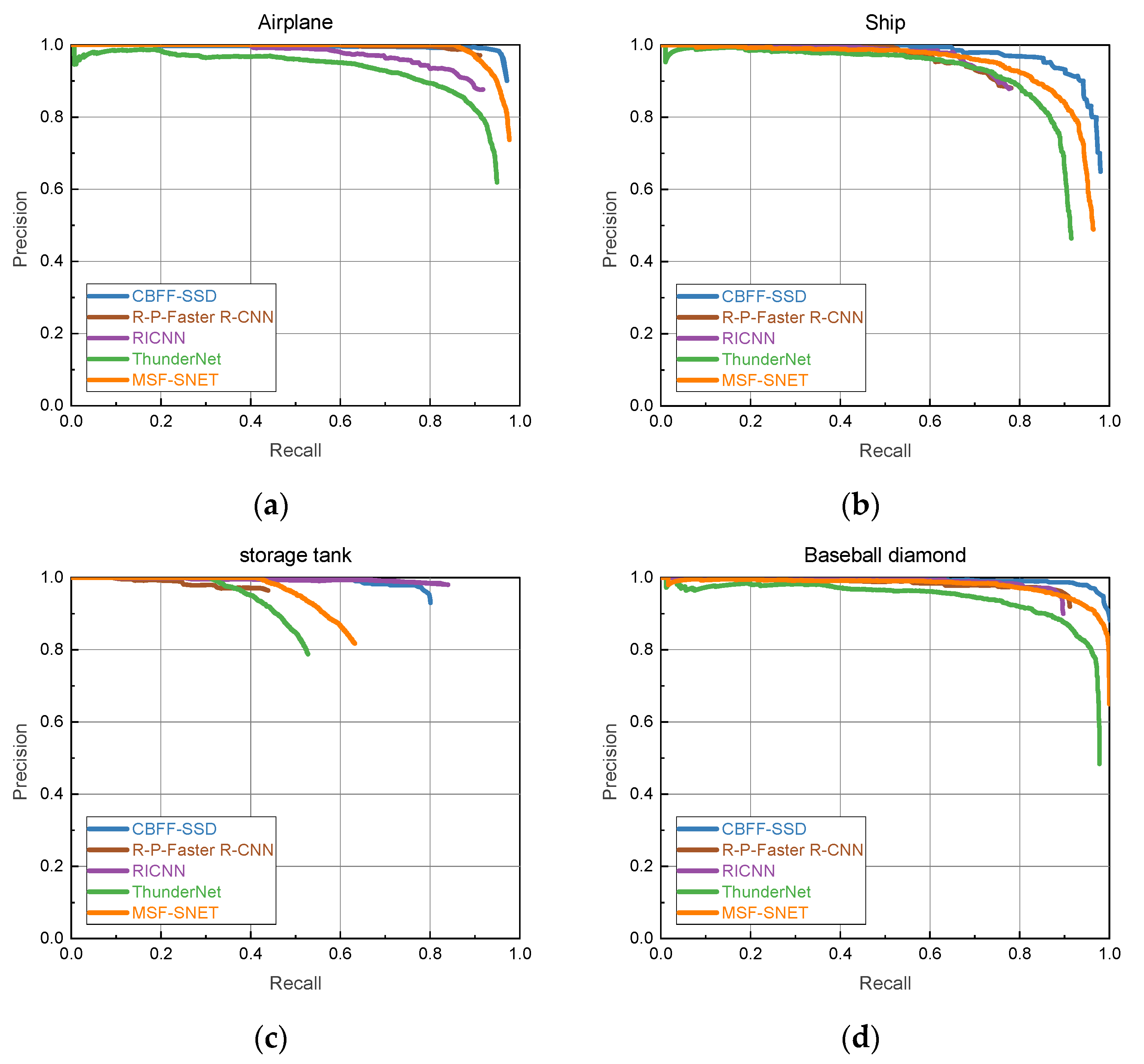

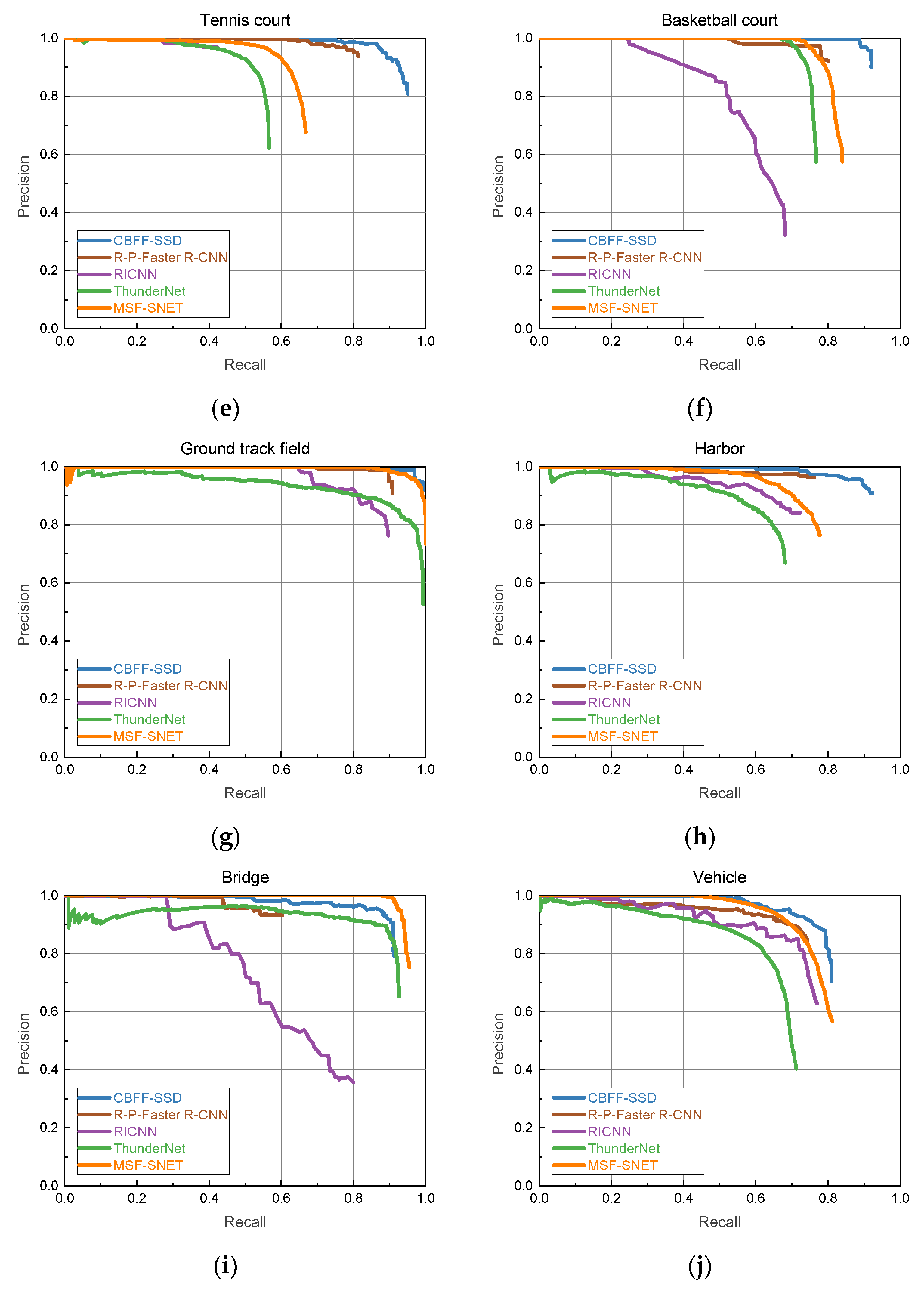

Figure 7 shows the PR curves of MSF-SNET in each category. It can be seen from the figure that although the algorithm’s mAP reaches 82.42%, the detection accuracy on the storage tank and tennis court categories is relatively low.

4.4.2. Results for DIOR Dataset

To further evaluate the performance of the proposed MSF-SNET framework, it was also trained on the DIOR dataset. The average precision (%) of the proposed framework and 13 other representative deep learning-based algorithms is shown in Table 5 for categories 1 to 10 and in Table 6 for categories 11 to 20. Each object category in DIOR is assigned an index. In Table 5 and Table 6, C1 to C20 correspond to Airplane, Airport, Baseball field, Basketball court, Bridge, Chimney, Dam, Expressway service area, Expressway toll station, Golf course, Ground track field, Harbor, Overpass, Ship, Stadium, Storage tank, Tennis court, Train station, Vehicle, and Wind mill respectively.

Nine region proposal-based algorithms and four regression-based algorithms are selected as the benchmark algorithms for tests [16]. The proposal-based algorithms include R-CNN [50], RICNN [13], RICAOD [66], Faster R-CNN (FRCNN) [7], RIFD-CNN [75], Faster R-CNN with FPN, Mask R-CNN (MRCNN) [76] with FPN, PANet [77], and Thundernet. The regression-based algorithms include YOLOv3 [10], SSD [11], RetinaNet [78], and CornerNet [79].

As shown in Table 5 and Table 6, MSF-SNET achieves the best mAP value of 66.5%. Compared with Thundernet, MSF-SNET obtains a 3% mAP gain and improves the AP values of all twenty object categories, which illustrates that our multi-scale feature detector works properly.

Besides, MSF-SNET outperforms other representative algorithms in terms of mAP and obtains the best AP value for Airplane, Baseball field, Chimney, Harbor, Stadium, and Storage tank. These results demonstrate that our lightweight object detection framework can get even better performance with much fewer parameters and fewer computing operations.

SSD gets the best mAP among the four regression-based algorithms, and RetinaNet gets the best mAP among the nine region proposal-based algorithms. In this experiment, we choose SSD and RetinaNet as two representative models and compare their Cost Density metrics for a fair evaluation, using three representative backbone networks.

Table 7 shows that our framework has the lowest Cost Density compared with VGG16, ResNet-50, and ResNet-101, which serve as the backbones of SSD and RetinaNet respectively. This illustrates that our proposed framework achieves the best mAP with only about half the Cost Density of the RetinaNet algorithm (ResNet-50 backbone). SSD has about 49 times the Cost Density of the proposed framework, while its accuracy is nearly 8% lower.

The experiment results show that MSF-SNET is a superior lightweight object detection framework. It not only surpasses other benchmark models on mAP performance but also significantly reduces the computational costs compared with other models.

5. Discussion

The experimental results show that the MSF-SNET proposed in this paper achieves competitive performance in remote sensing image object detection and is effective on both the NWPU VHR-10 dataset and the DIOR dataset. While its detection performance is comparable or only slightly inferior to that of other algorithms, the time complexity and space complexity of the model reach the state of the art (SOTA). At present, most object detection algorithms focus only on the evaluation indicators of the algorithm itself, such as accuracy, AP, and mAP, while few of them consider the time complexity of the algorithm.

There are generally two application scenarios for remote sensing image object detectors. One is to detect objects in remote sensing images on the ground. The other is to apply the detector in an onboard environment, which is the scenario that this article focuses on. In this scenario, a detector may have too many parameters to store, or its predictions may be too computationally expensive to run on the satellite. In fact, for onboard detection, the number of parameters and the computational cost are as important as the detection performance. The advantage of our proposed algorithm is that it not only enhances the performance of the algorithm itself but also carefully considers the key issues that urgently need to be solved in actual satellite application scenarios.

The number of parameters of our detector and its computational cost are compared with those of the current mainstream remote sensing image object detection algorithms in Table 4, and SOTA results are obtained. The test results show that the architecture proposed in this paper can be used in scenarios where storage and computing resources are limited and is especially suitable for satellite applications. The results will promote the deployment of object detection frameworks on satellites by easing the burden of computation.

Besides, the Cost Density metric proposed in this paper can be further applied to compare the performance of different algorithms that consume the same resources, and it can also be used to evaluate the performance of algorithms when resources are limited.

It should be noted that when designing the detector in this article, we did not consider the type of accelerator to be deployed on the satellite, such as a CPU, GPU, dedicated processor, or FPGA. Therefore, the detector is not optimized for any particular accelerator. For example, when using an accelerator based on a field programmable gate array (FPGA), it is necessary to pay attention to the influence of the network structure, the quantization method, whether pruning is required, the model parameters, image data loading, and so on. It is worthwhile to further study the detector in combination with the hardware of a specific accelerator.

6. Conclusions

In our research, we use a modified SNET to extract features. The difference is that MSF-SNET adopts a one-stage end-to-end model, which considers both accuracy and lightweight design and makes a compromise between them. The number of parameters and the amount of calculation consumed by the CNN are further significantly reduced without loss of performance.

The test results on the NWPU VHR-10 dataset and the DIOR dataset demonstrate that our proposed lightweight model is not inferior to other algorithms in accuracy and mAP, but has notable advantages in terms of parameters and computational cost reduction. Through training and testing of the network, we can draw the following conclusions:

The performance of the lightweight model MSF-SNET is not inferior to other deep network models.

MSF-SNET has been tested on both the NWPU VHR-10 dataset and the DIOR dataset, which indicates that the model can be widely applied to remote sensing object detection tasks.

Due to its lightweight characteristics, MSF-SNET can meet the strict requirements of onboard object detection with the constraints of time and space complexity.

The design goal of MSF-SNET is not the pursuit of the ultimate performance, but rather a balance between performance and complexity. This research is more inclined to use limited performance loss to reduce the implementation complexity.

However, in this study, no assumption is made about the accelerator used in the proposed algorithm. In future research, we will further explore the use of specific accelerators in remote sensing image object detection tasks, the further optimization of the lightweight model, and the impact on network design. As far as we know, there is no mature remote sensing image object detection model deployed on satellites. We are committed to advancing the application of the deep learning network model with our research.