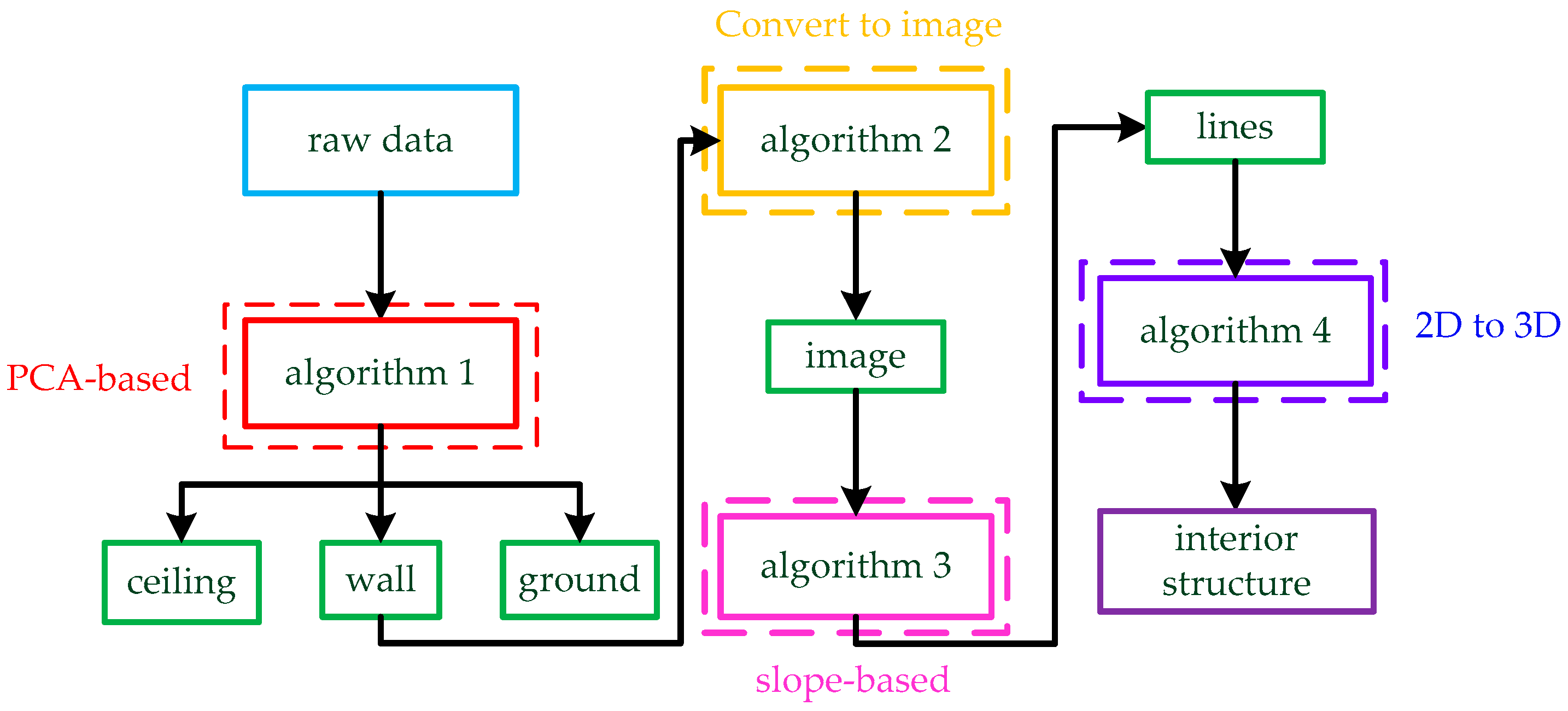

Figure 1.

The whole process of the method. The raw data are input to the PCA-based Algorithm 1, and the data are divided into three parts: ceiling, wall, and ground. Then, the three-dimensional wall is transformed into an image by Algorithm 2. Next, we use slope-based Algorithm 3 to find lines in the image. Finally, the three-dimensional interior structure is restored from lines by Algorithm 4.

Figure 2.

Wall extraction method based on PCA: PCA is used to fit the plane for the raw data, and the data are divided into two parts by the plane: ceiling data and ground data. The two parts of data are projected into two-dimensional data by PCA, and then the grid threshold method is used to find the feature points of the ground and ceiling. PCA is used to fit the feature points to obtain the level of the ground and ceiling. Finally, thresholds are set for the two planes and the point clouds of the floor and ceiling are removed.
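
The final step of this caption (thresholding against the two fitted planes) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the `(a, b, c, d)` plane representation for `ax + by + cz + d = 0`, and the single shared `threshold` are assumptions made for the example.

```python
import numpy as np

def plane_distance(points, plane):
    """Unsigned distance from each (x, y, z) point to the plane ax + by + cz + d = 0."""
    a, b, c, d = plane
    normal = np.array([a, b, c], dtype=float)
    points = np.asarray(points, dtype=float)
    return np.abs(points @ normal + d) / np.linalg.norm(normal)

def extract_walls(points, floor_plane, ceiling_plane, threshold):
    """Keep only points farther than `threshold` from both planes (hypothetical helper)."""
    points = np.asarray(points, dtype=float)
    far_from_floor = plane_distance(points, floor_plane) > threshold
    far_from_ceiling = plane_distance(points, ceiling_plane) > threshold
    return points[far_from_floor & far_from_ceiling]
```

In practice the threshold would likely be tuned per scene, since sensor noise and floor unevenness vary.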

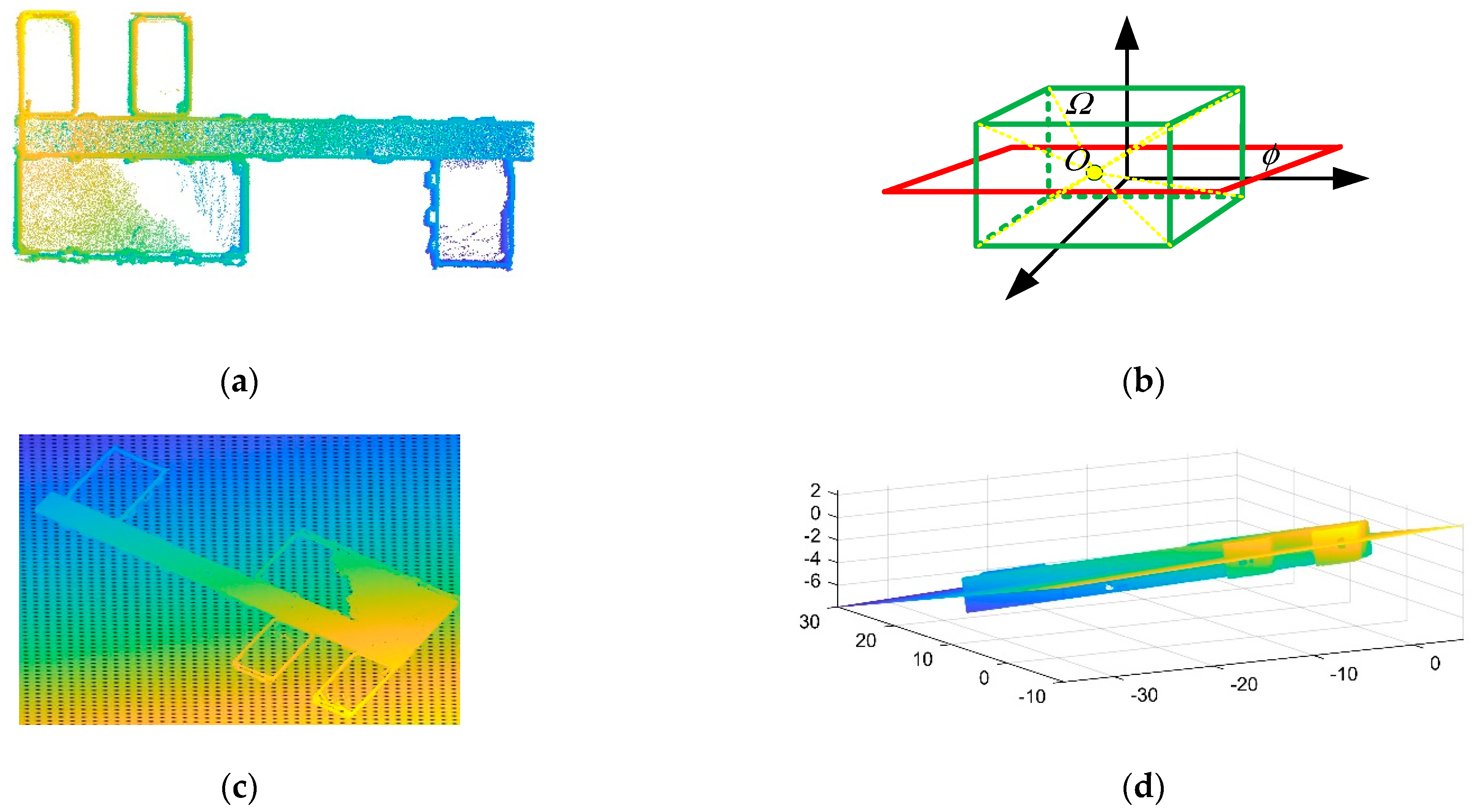

Figure 3.

PCA is used to find cross-sections of the data: (a) raw point cloud data; (b) the point cloud is idealized as a cuboid, and the cross-section is the plane that passes through the mean of the data with the normal vector obtained from PCA; (c,d) the plane fitted in the raw data by PCA.
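
A plane fit of this kind can be sketched with NumPy. The sketch assumes that the normal is the eigenvector of the covariance matrix with the smallest eigenvalue, which is the usual PCA plane fit; the caption does not state which component the authors use, and the function names are hypothetical.

```python
import numpy as np

def fit_plane_pca(points):
    """Fit a plane to an (N, 3) array; return (centroid, unit normal).

    Assumption: the normal is the direction of least variance.
    """
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    cov = np.cov((points - centroid).T)
    # eigh returns eigenvalues in ascending order, so column 0 is the
    # direction of least variance.
    _, eigvecs = np.linalg.eigh(cov)
    normal = eigvecs[:, 0]
    return centroid, normal

def signed_distance(points, centroid, normal):
    """Signed distance of each point to the plane through `centroid`."""
    return (np.asarray(points, dtype=float) - centroid) @ normal
```

The sign of `signed_distance` could then be used to split the cloud into the two halves on either side of the plane.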

Figure 4.

The grid threshold method. The original data are projected onto a two-dimensional plane and divided into a grid. Points that are the only point in their grid cell are taken as part of the ground data.
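
As a rough sketch of this selection rule, the snippet below bins projected points into square cells and flags the points whose cell holds exactly `count` points. The cell size, the square cells, and the function name are assumptions for illustration only.

```python
import numpy as np

def grid_threshold(points_2d, cell_size, count=1):
    """Return a boolean mask of points whose grid cell contains exactly `count` points."""
    pts = np.asarray(points_2d, dtype=float)
    # Integer cell index of every point, measured from the minimum corner.
    cells = np.floor((pts - pts.min(axis=0)) / cell_size).astype(np.int64)
    _, inverse, counts = np.unique(
        cells, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # shape differs slightly across NumPy versions
    return counts[inverse] == count
```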

Figure 5.

The process of data conversion. The wall data are projected on the ground plane, which was fitted in the previous section. The two-dimensional data are processed by a grid radius outlier removal (GROR) filter, and the coordinate values are enlarged by appropriate multiples. Finally, the data are normalized and transformed into a binary image.

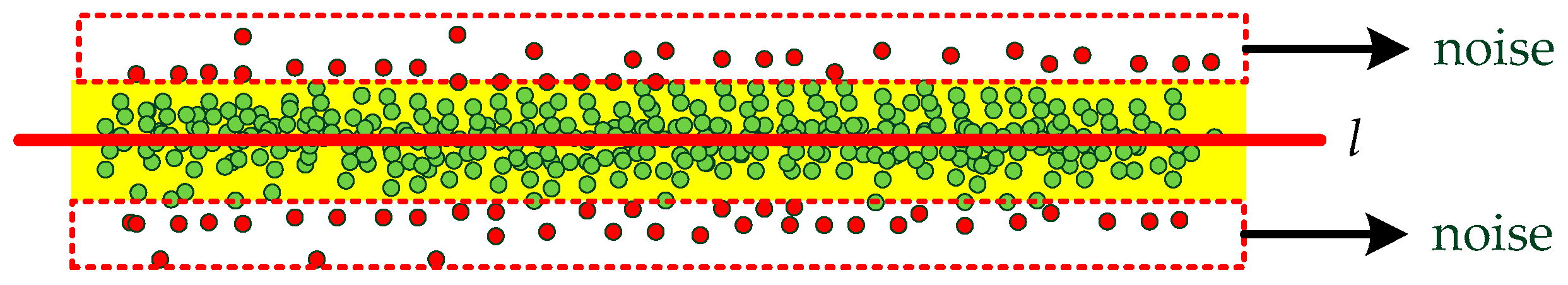

Figure 6.

The noise in the points. We need to extract the line and remove the noise.

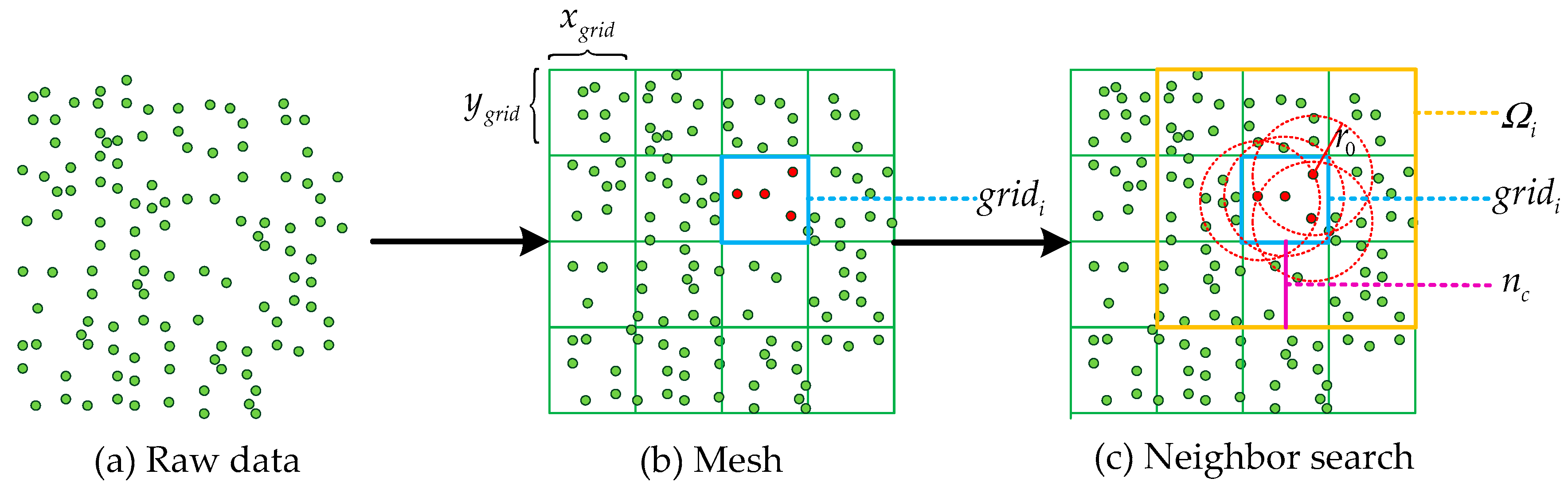

Figure 7.

Grid radius outlier removal (GROR) filter. The plane is divided into grid cells at a fixed resolution along each axis. For the cell being processed, the number of adjacent cells determines the search area, and a radius bounds the neighbor search.

Figure 8.

The grid subsampling method. Given the coordinates of integer points, each point in the raw data is updated to the nearest integer point. The shape of the data is retained and the amount of data is reduced in the subsampling result.
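
The snapping step can be illustrated in a few lines of NumPy. This is a sketch under the assumption that duplicates produced by snapping are merged; the `scale` parameter stands in for the coordinate enlargement described in the Table 2 caption, and the function name is hypothetical.

```python
import numpy as np

def grid_subsample(points_2d, scale=1.0):
    """Scale the points, snap each to the nearest integer point, drop duplicates."""
    pts = np.asarray(points_2d, dtype=float) * scale
    snapped = np.rint(pts).astype(np.int64)
    # Points that land on the same integer coordinate collapse into one.
    return np.unique(snapped, axis=0)
```

For example, `grid_subsample([[0.1, 0.2], [0.4, -0.3], [1.6, 2.2], [2.4, 1.8]])` reduces four points to the two integer points `(0, 0)` and `(2, 2)`.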

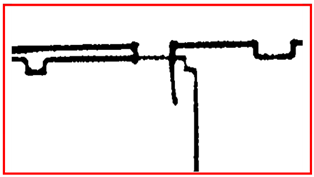

Figure 9.

The process of converting grid points to the image: the pixel at the position of each grid point is set as a foreground pixel. In the final step, the image is made up of the pixels inside the red box.
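
A minimal sketch of this conversion is given below. The foreground value (`1`), the mapping of x to columns and y to rows, and the cropping to the bounding box of the points are all assumptions; the function name is hypothetical.

```python
import numpy as np

def points_to_image(grid_points, value=1):
    """Rasterize integer (x, y) points into a binary image cropped to their bounding box."""
    pts = np.asarray(grid_points, dtype=np.int64)
    shifted = pts - pts.min(axis=0)          # move the bounding box to the origin
    cols, rows = shifted[:, 0], shifted[:, 1]  # assumed: x -> column, y -> row
    image = np.zeros((rows.max() + 1, cols.max() + 1), dtype=np.uint8)
    image[rows, cols] = value
    return image
```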

Figure 10.

The process of line segment extraction. First, filtering is used to remove the burrs from the image, and an existing skeleton extraction algorithm is used to extract the image skeleton. Then, we calculate the slopes of the points and optimize the slopes accordingly. Finally, we judge all lines according to the specified rules.

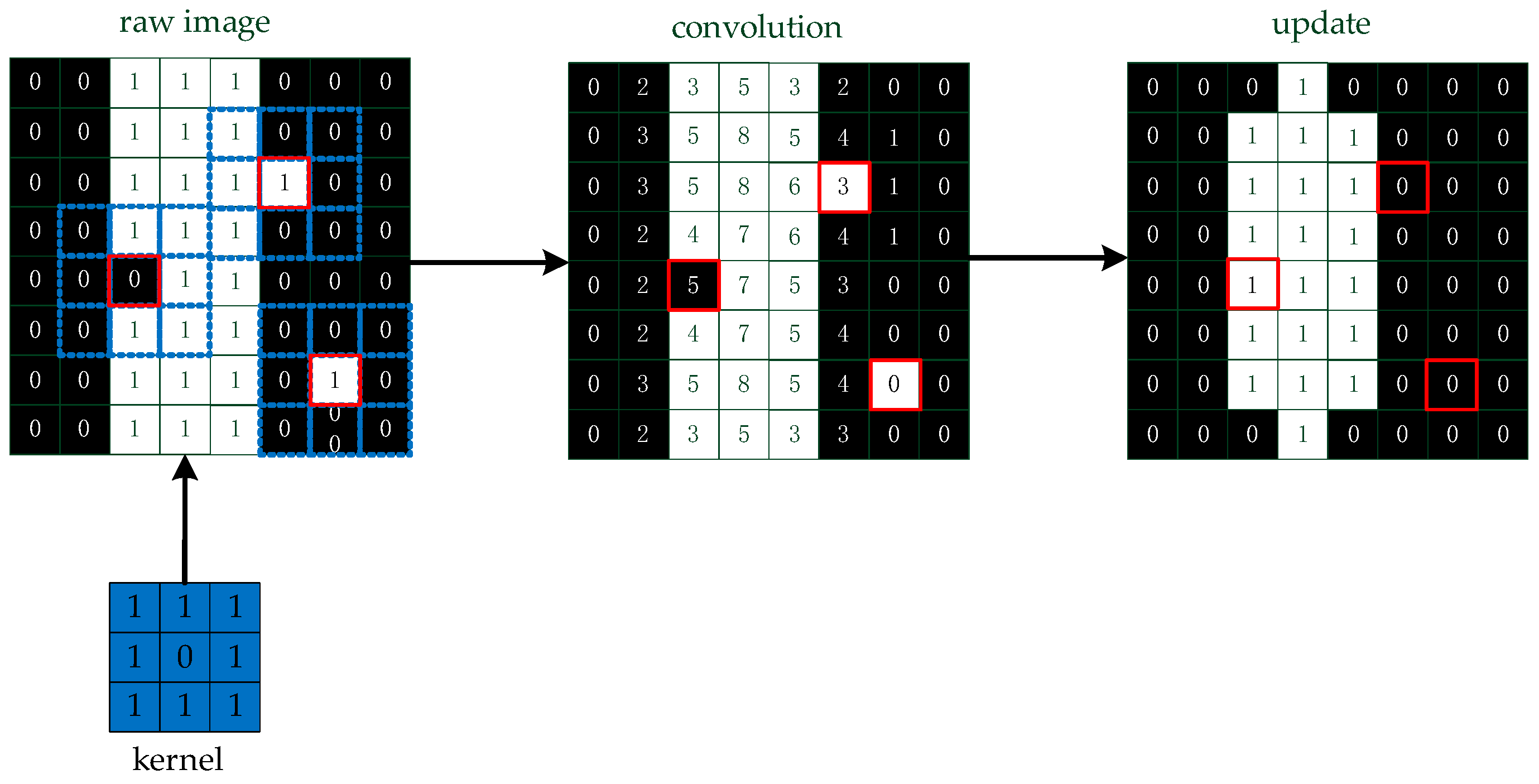

Figure 11.

A simple method to remove the burr. We convolve the image and update the pixels of the image based on the convolution values.

Figure 12.

The slope of the nearest neighbor. The slope takes one of four values: 0, 1, −1, or 2.
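
One way to compute such a code for a pair of adjacent pixels is sketched below. It reads 0 as horizontal and 2 as vertical, which matches how the Figure 17 caption treats those two values; the sign convention for ±1 (y increasing upward) and the function name are assumptions.

```python
def neighbor_slope(p, q):
    """Slope code between pixel p and one of its 8 nearest neighbors q.

    Returns 0 (horizontal), 1 or -1 (diagonal), or 2 (vertical).
    """
    dx = q[0] - p[0]
    dy = q[1] - p[1]
    if max(abs(dx), abs(dy)) != 1:
        raise ValueError("q is not one of the 8 nearest neighbors of p")
    if dx == 0:
        return 2  # vertical step: the true slope is undefined
    # dx is +1 or -1 here, so dy / dx equals dy * dx.
    return dy * dx
```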

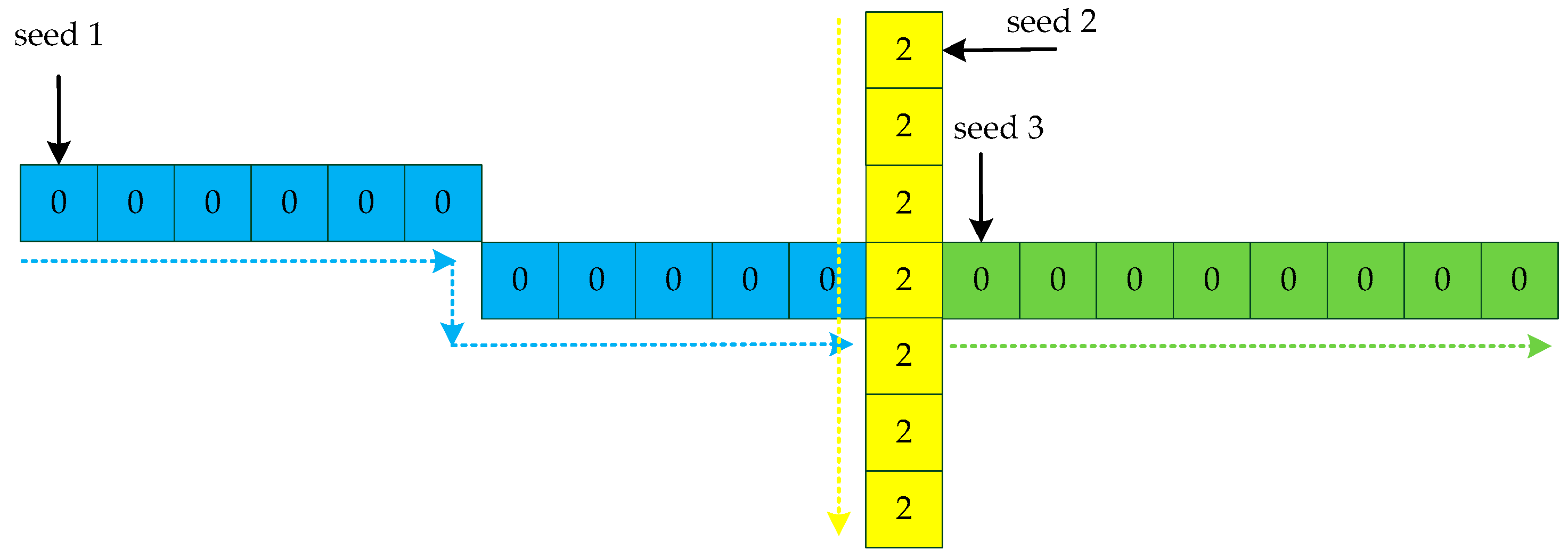

Figure 13.

The process of calculating the slope. First, seed 1 is randomly selected in the image. If seed 1 has several nearest neighbors, one is picked at random and the slope between the two points is calculated (0 in this example), so seed 1 and neighbor 2 are both assigned the value 0. Neighbor 2 then becomes the current seed. Points whose slope has already been calculated are not searched again. Growth from seed 1 stops when the current point has no remaining neighbors. Then, seed n is randomly selected and the same growth process is repeated until the slopes of all pixels in the image have been calculated.

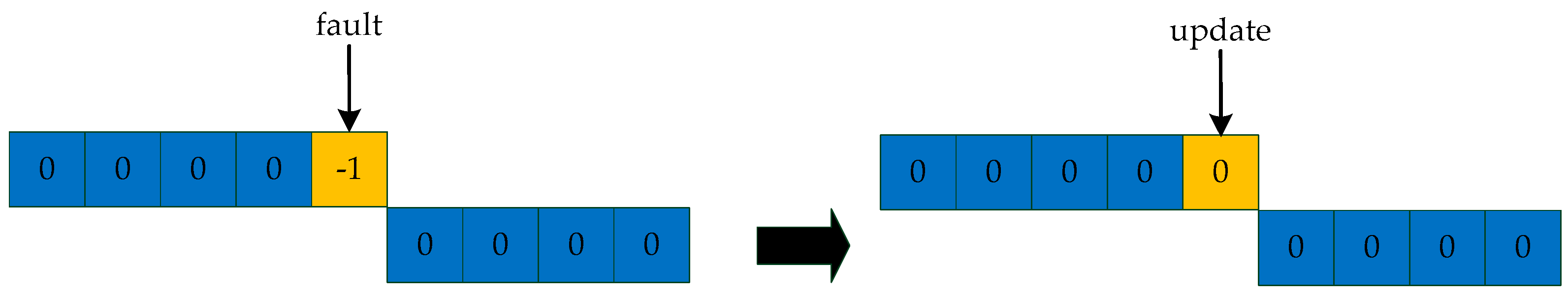

Figure 14.

Eliminate faults in lines. The fault is caused by noise and algorithm error, and we update the slope of the current point using the slope of the point’s nearest neighbor.

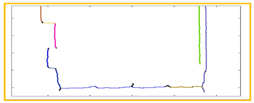

Figure 15.

Line searching method based on slopes. Determine the seed point according to the judgment condition, and then find the line according to the slope of the nearest neighbor point until traversing all the points.

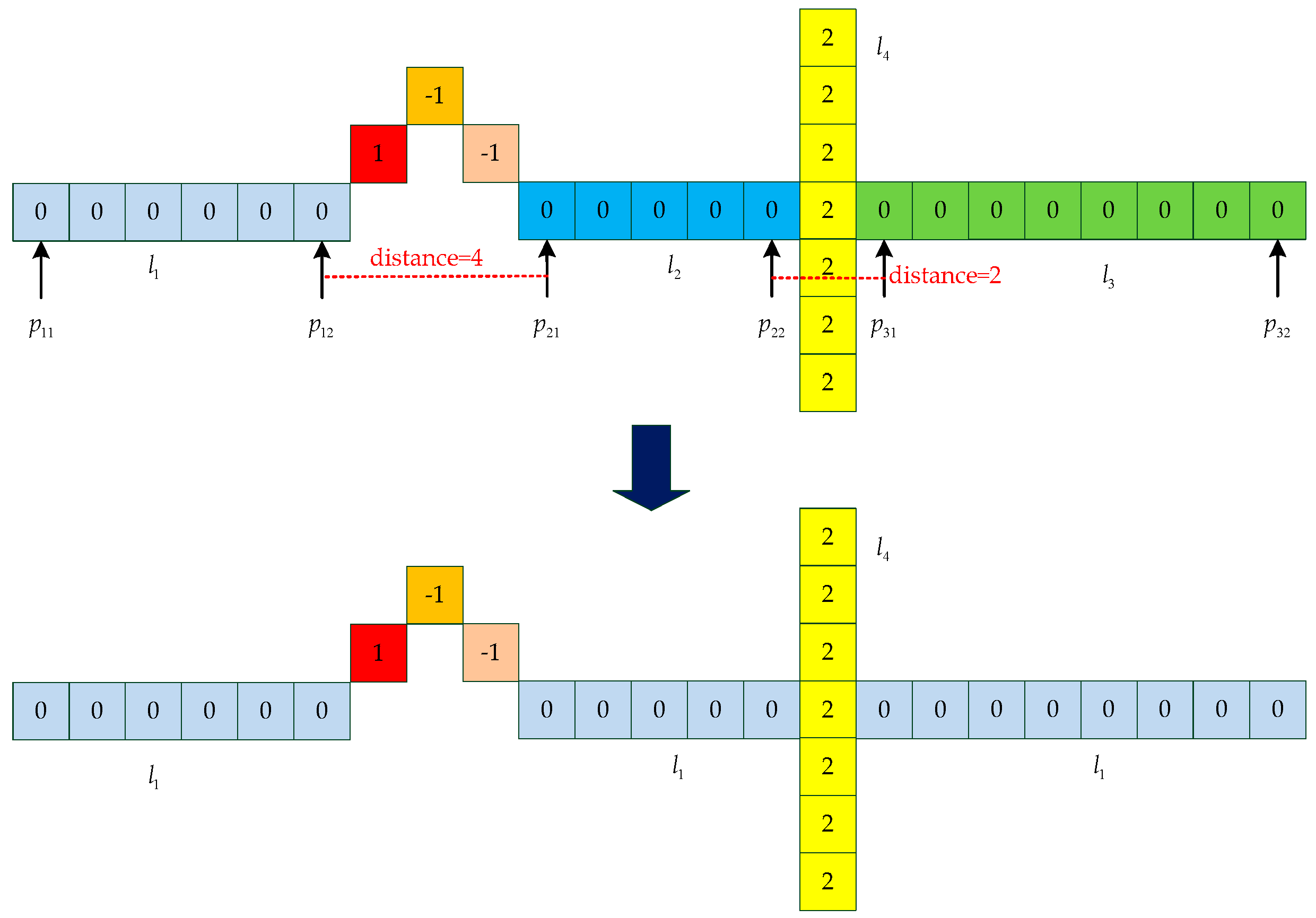

Figure 16.

An example of updating lines. We set the distance threshold to 5. When the distance between an endpoint of one line and an endpoint of another line is less than the threshold, the two lines are classified as the same line. The second pair of lines in the example is merged in the same way.
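
The grouping rule can be sketched with a small union-find. The line representation `(endpoint_a, endpoint_b, slope)` and the extra requirement that merged lines share the same slope code are assumptions (the Table 4 caption mentions optimizing by endpoint and slope, but the exact rule is not given here); the function name is hypothetical.

```python
import math

def group_lines(lines, threshold=5.0):
    """Label lines so that lines with close endpoints and equal slope share a label.

    lines: list of (endpoint_a, endpoint_b, slope), endpoints as (x, y) tuples.
    """
    parent = list(range(len(lines)))

    def find(i):
        # Find the group root, compressing the path along the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(lines)):
        for j in range(i + 1, len(lines)):
            a0, a1, slope_a = lines[i]
            b0, b1, slope_b = lines[j]
            if slope_a != slope_b:  # assumed extra condition
                continue
            gap = min(math.dist(p, q) for p in (a0, a1) for q in (b0, b1))
            if gap < threshold:
                parent[find(j)] = find(i)
    return [find(i) for i in range(len(lines))]
```

The pairwise loop is quadratic in the number of lines, which is acceptable for the tens of segments a single floor plan typically produces.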

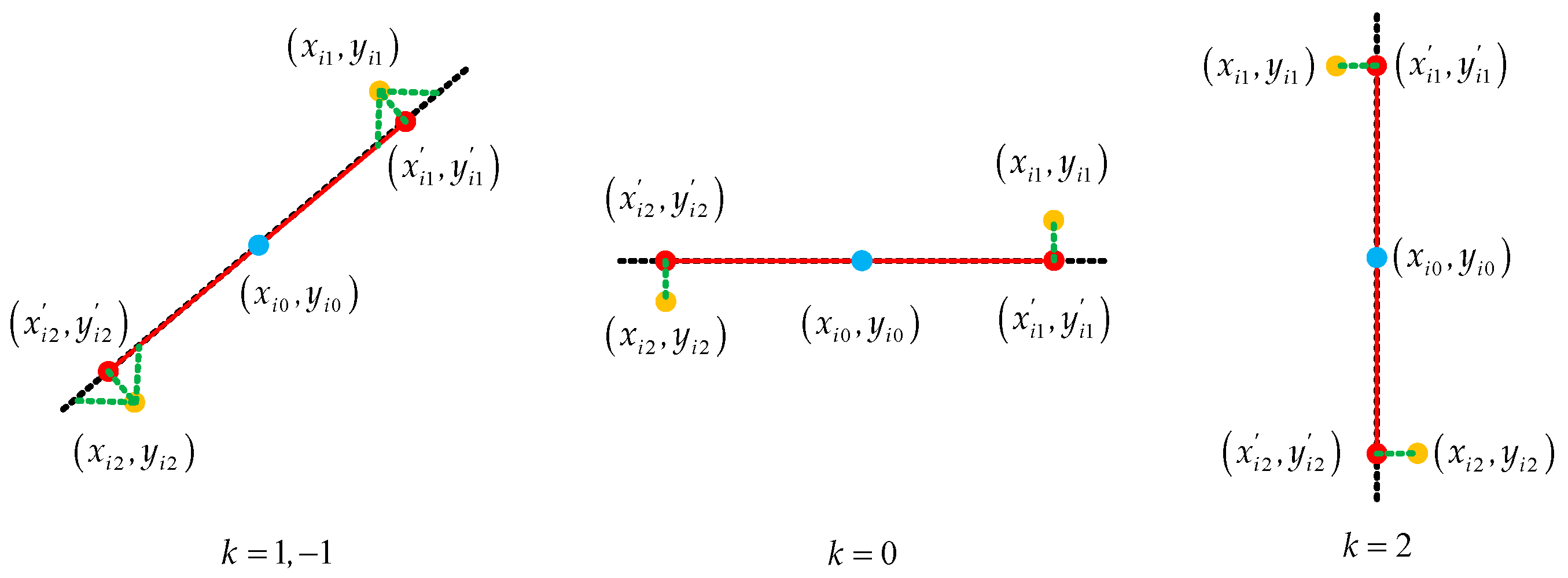

Figure 17.

The process of determining new endpoints. The dotted line is defined by the midpoint and the slope. If the slope is 1 or −1, the new endpoint is the foot of the perpendicular from the raw endpoint to the line: we calculate the two points at which the horizontal and vertical lines through the raw endpoint intersect the dotted line, and the midpoint of these two points is the new endpoint. If the slope is 0, the abscissa of the new endpoint is the same as that of the raw endpoint, and the ordinate is the same as that of the midpoint. If the slope is 2, the abscissa of the new endpoint is the same as that of the midpoint, and the ordinate is the same as that of the raw endpoint.
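
The three cases in this caption translate directly into code. The sketch below follows the caption's rules; the function name and the tuple representation of points are illustrative choices.

```python
def new_endpoint(raw, mid, slope):
    """Project a raw endpoint onto the line through `mid` with the given slope code."""
    rx, ry = raw
    mx, my = mid
    if slope == 0:   # horizontal line: keep x of the raw endpoint, y of the midpoint
        return (rx, my)
    if slope == 2:   # vertical line: keep x of the midpoint, y of the raw endpoint
        return (mx, ry)
    if slope in (1, -1):
        # Intersection of the line with the horizontal through the raw endpoint.
        hx, hy = mx + slope * (ry - my), ry
        # Intersection of the line with the vertical through the raw endpoint.
        vx, vy = rx, my + slope * (rx - mx)
        # For a 45-degree line the midpoint of the two is the perpendicular foot.
        return ((hx + vx) / 2, (hy + vy) / 2)
    raise ValueError("slope must be one of 0, 1, -1, 2")
```

For instance, with the line through `(0, 0)` at slope 1, the raw endpoint `(2, 0)` maps to `(1.0, 1.0)`, the foot of the perpendicular onto y = x.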

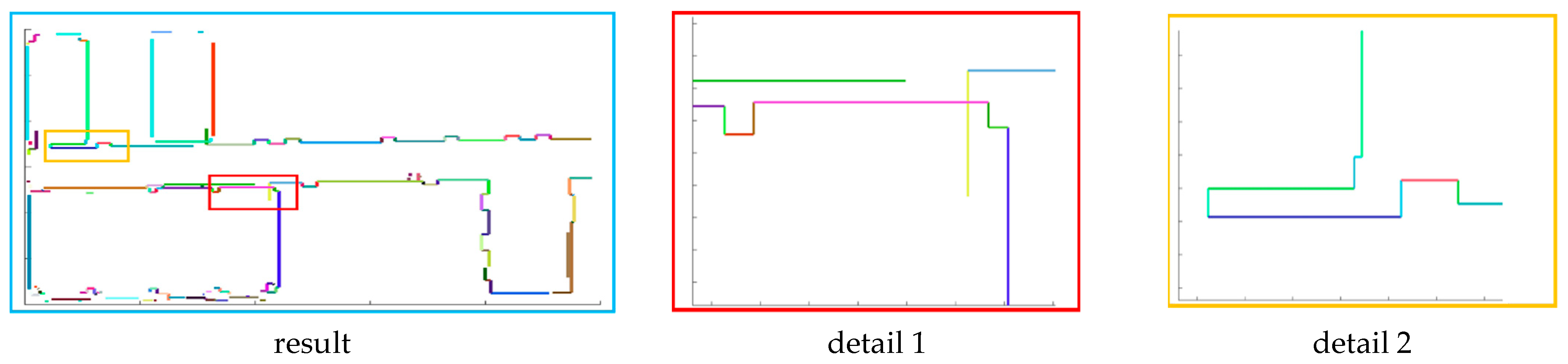

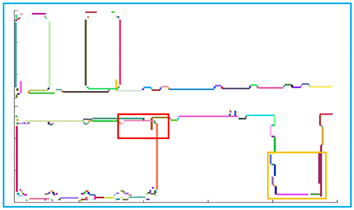

Figure 18.

The result of line correction. All the lines that satisfy the condition are connected and we show them in detail.

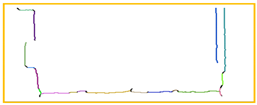

Figure 19.

Comparison of experimental results. The yellow line is the result that we obtain and the black points are the raw data. We obtain the basic outline of the data, and the reason why some parts are not considered lines is that the points are discontinuous or the slope changes are complicated.

Figure 20.

The result of three-dimensional reconstruction. To make the result clearer, we removed the ceiling and painted all the vertical planes on the ground.

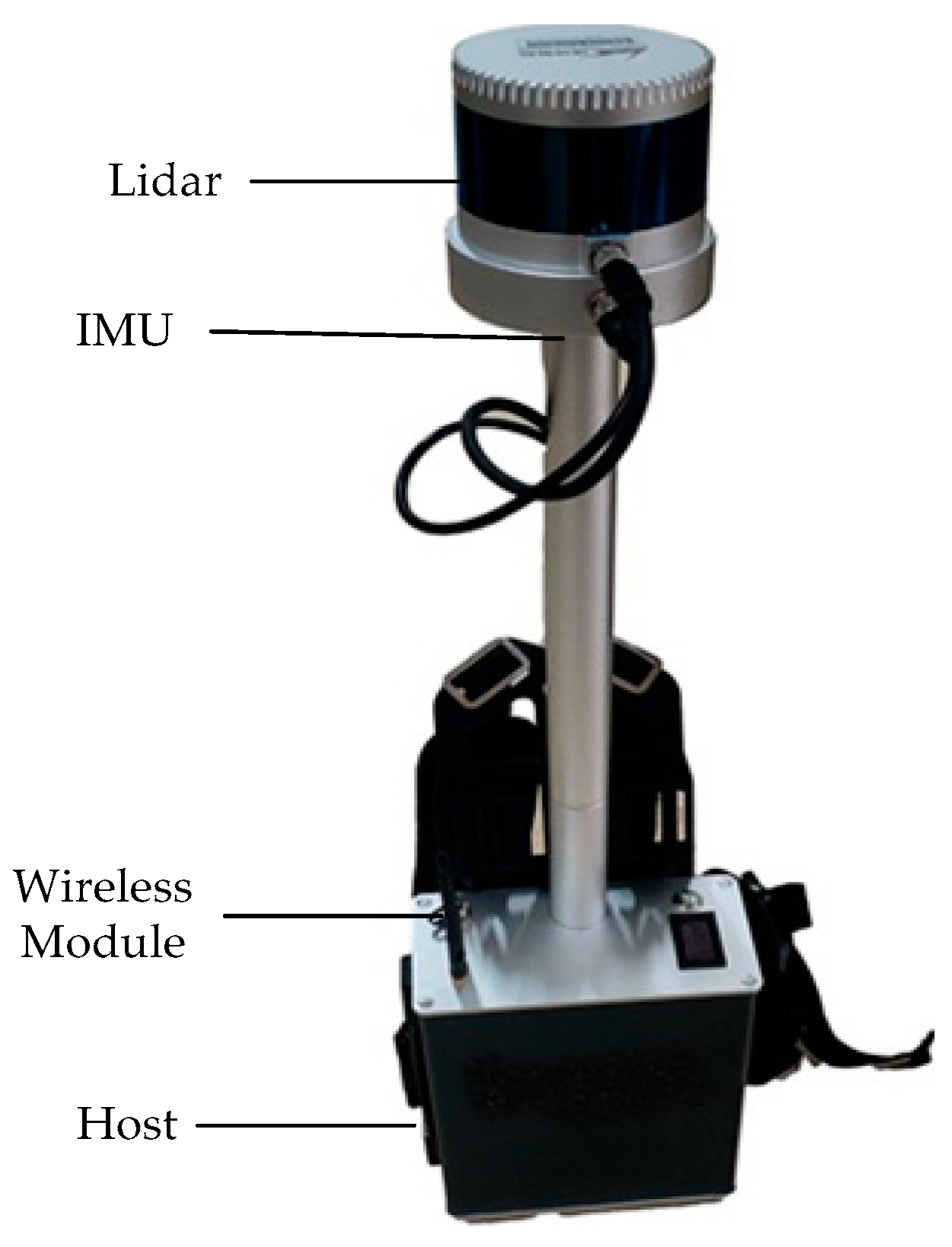

Figure 21.

The experimental equipment. It is a backpack system integrating LiDAR, an inertial measurement unit (IMU), a wireless module, and an upper computer.

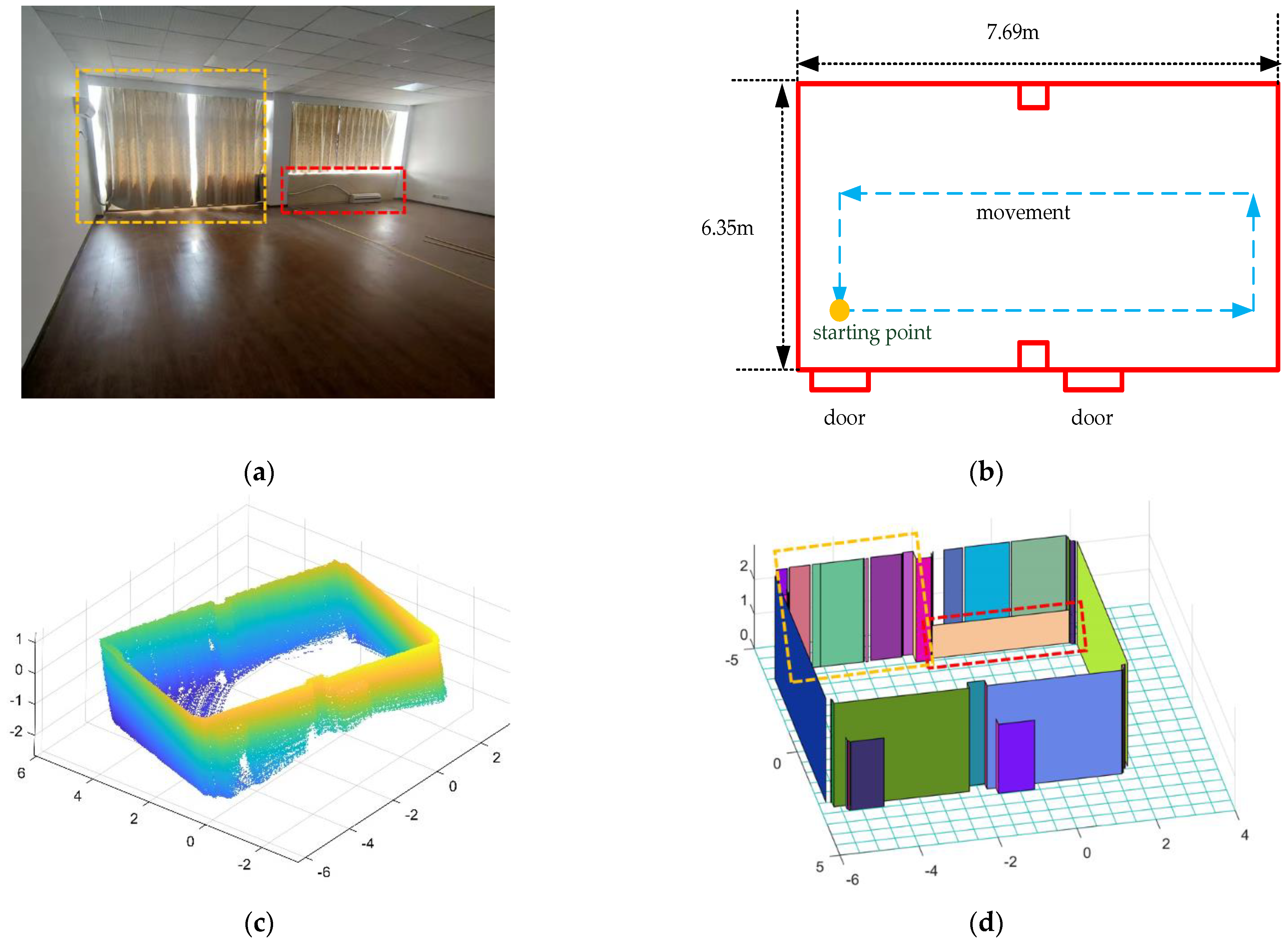

Figure 22.

The resulting presentation of scene 1: (a) photo of the real scene; (b) the two-dimensional plane of the scene; we measured the dimensions of the room and marked the starting point and trajectory; (c) point cloud data after removing the ceiling; (d) the result of the reconstruction of the interior structure, and each color represents a plane.

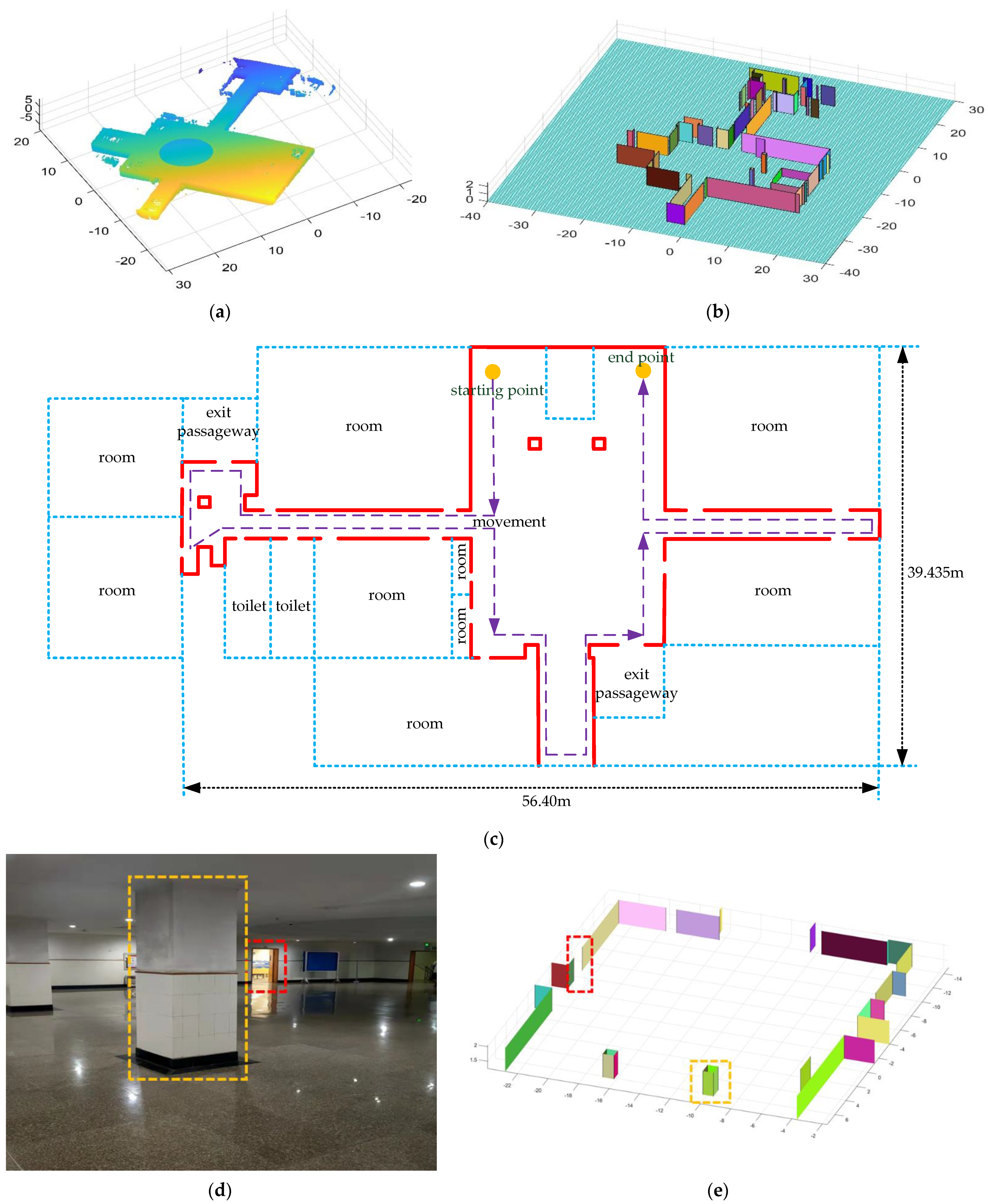

Figure 23.

The resulting presentation of scene 2: (a) point cloud data of scene 2; (b) the experimental results obtained by our algorithm; to better show the interior, we removed the ceiling; (c) we provide the two-dimensional plan of the floor and mark out the rooms, the toilets, the safe passage, and our movements; the gaps between the lines are the doors; (d,e) are the first part of the real scene and the comparison of experimental results; (f,g) are the second part of the real scene and the comparison of experimental results.

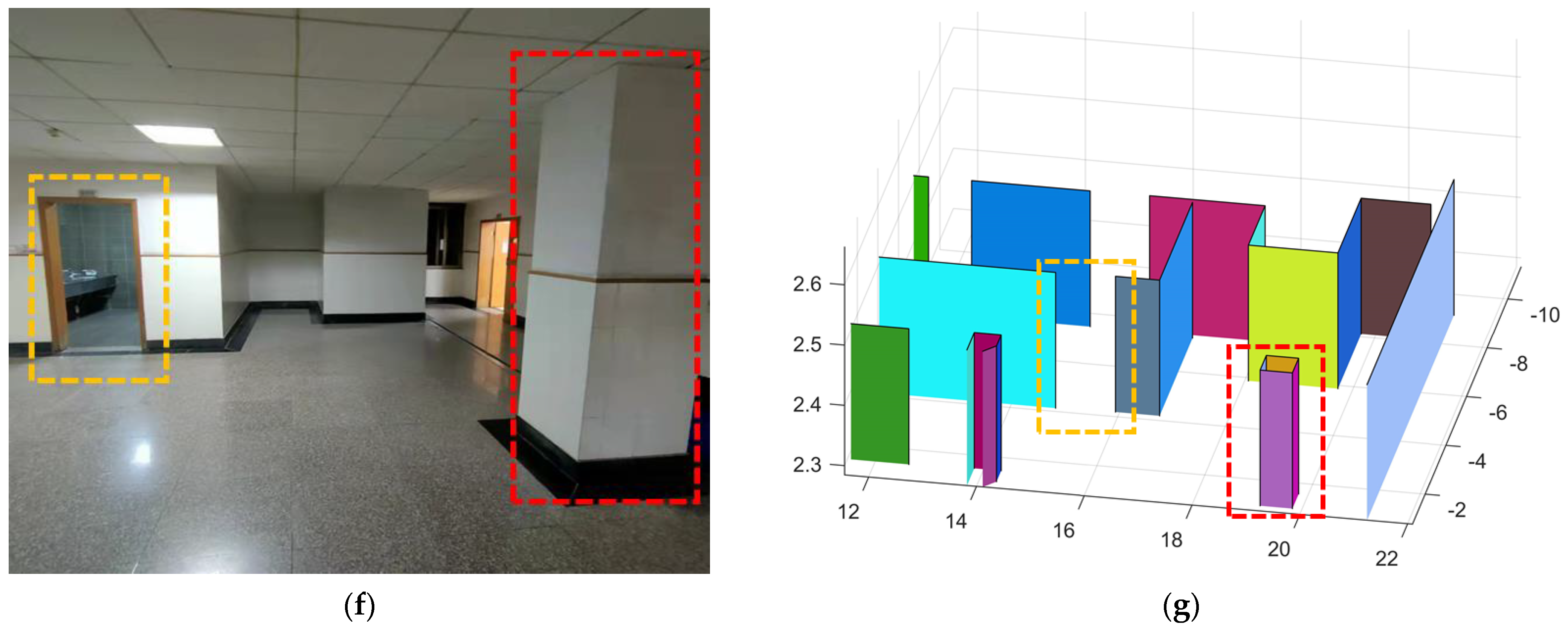

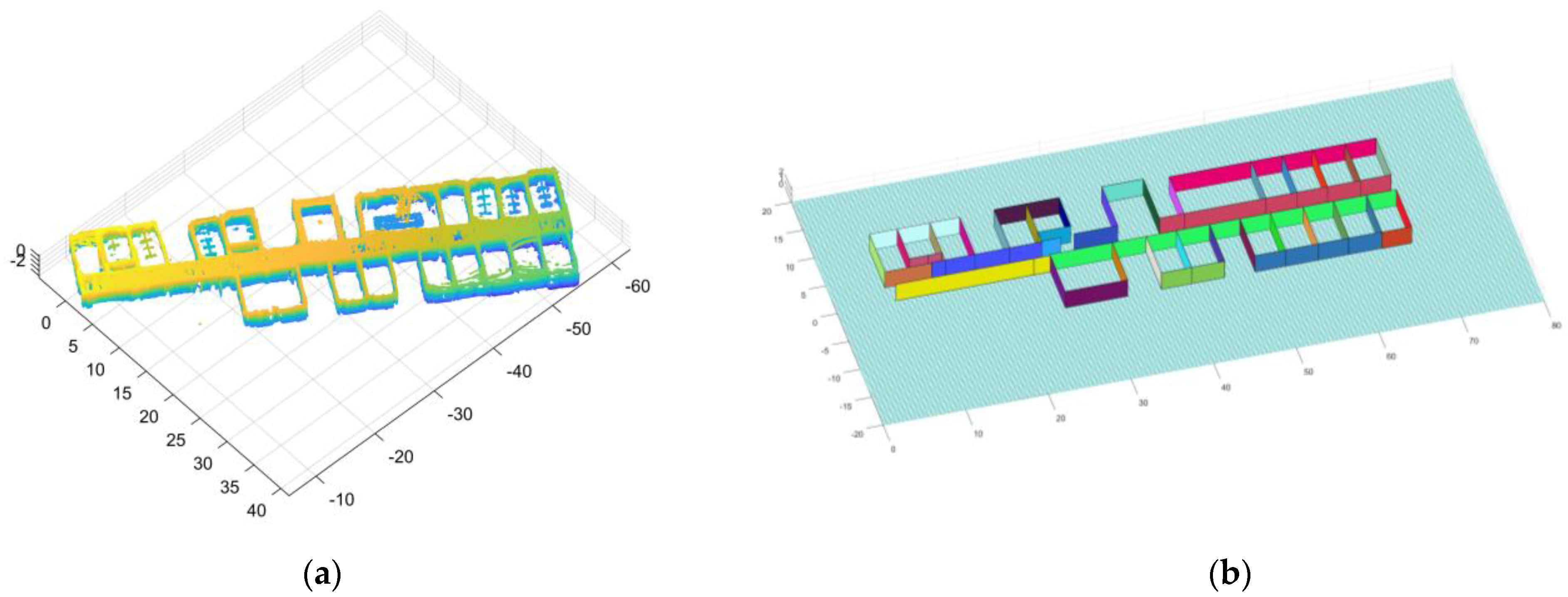

Figure 24.

The resulting presentation of scene 3: (a) point cloud data of scene 3; (b) the experimental results; (c) the two-dimensional plan of the data; we moved and scanned each room for a few seconds as we passed it; (d,e) we marked two locations in the simulation results that corresponded to the real world.

Table 1.

The process of extracting the wall: Project the initial data onto a plane, and then the ground data are screened by the grid threshold method. The ground is restored to a three-dimensional form and treated with the SOR filter. The ground and ceiling are fitted into a plane; then, the wall data are extracted by setting thresholds.

Table 2.

Projection and transformation of data. First, the raw data are projected onto the ground plane. Then, the noise is removed by the GROR filter. We enlarge the coordinates 70 times and the grid subsampling method is used to make the data regular. The regular data can be converted to an image, which is prepared for the next section.

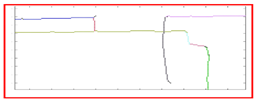

Table 3.

Image skeleton extraction. The contour of the image is extracted by the skeleton extraction algorithm and lines are refined into single pixels. Similarly, the skeleton made up of two-dimensional points is reduced by the image skeleton.

Table 4.

The process of finding lines. In these images, each line segment is shown in a separate color other than black. First, we use neighboring points to find the points that belong to the same line. In the detail views, points that originally belong to the same line are split into multiple lines because of noise and algorithm error. We solve this problem by optimizing the lines based on endpoints and slopes. Finally, we draw the lines from the slope, midpoint, and new endpoints.

Table 5.

Some important parameter settings for the scenarios.

| | | | | | | |
|---|---|---|---|---|---|---|
| Scene 1 | 200 | 0.01 | 10 | 100 | 10 | 40 |
| Scene 2 | 600 | 0.03 | 10 | 10 | 5 | 8 |
| Scene 3 | 300 | 0.03 | 10 | 10 | 15 | 5 |

Table 6.

The running time of several algorithms.

| | | | |
|---|---|---|---|
| Scene 1 | 4.064 s | 45.432 s | 1.995 s |
| Scene 2 | 7.735 s | 57.559 s | 2.037 s |
| Scene 3 | 5.644 s | 78.373 s | 2.034 s |

Table 7.

Data volume statistics at several steps of the algorithm.

| | | | | |
|---|---|---|---|---|
| Scene 1 | 720,224 × 3 | 537,721 × 3 | 26,349 × 2 | 701 × 776 |
| Scene 2 | 1,804,574 × 3 | 768,450 × 3 | 7858 × 2 | 406 × 571 |
| Scene 3 | 1,568,974 × 3 | 756,038 × 3 | 2143 × 2 | 187 × 52 |

Table 8.

Fitting the parameters of the ceiling and floor equations.

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Scene 1 | −0.015 | 0.1674 | 0.9858 | −1.3243 | −0.0181 | 0.1523 | 0.9882 | 1.6697 |
| Scene 2 | 0.2833 | −0.0893 | 0.9549 | −0.7801 | 0.2807 | −0.0877 | 0.9558 | 1.7295 |
| Scene 3 | −0.0039 | 0.0277 | 0.9996 | −1.1052 | −0.0125 | 0.0325 | 0.9994 | 1.4702 |

Table 9.

Parameter comparison between the actual scenario and the predicted scenario.

| | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Scene 1 | 53 | 7.652 m | 6.337 m | 2.994 m | 7.690 m | 6.350 m | 3.000 m | 0.038 m | 0.013 m | 0.006 m | 0.019 m |
| Scene 2 | 86 | 56.360 m | 39.390 m | 2.510 m | 56.400 m | 39.435 m | 2.500 m | 0.040 m | 0.045 m | 0.010 m | 0.030 m |
| Scene 3 | 46 | 61.444 m | 15.815 m | 2.575 m | 61.400 m | 15.800 m | 2.560 m | 0.044 m | 0.015 m | 0.015 m | 0.025 m |

Table 10.

Comparison of the characteristics of several different methods. Aspect 1: sources of data; Aspect 2: ground calculation; Aspect 3: filtering; Aspect 4: data optimization; Aspect 5: the manifestation of doors and windows; Aspect 6: accuracy of the number of planes.

| | Aspect 1 | Aspect 2 | Aspect 3 | Aspect 4 | Aspect 5 | Aspect 6 |
|---|---|---|---|---|---|---|
| Ochmann et al. | laser scanner | ✗ | ✓ | ✗ | ✗ | ✓ |
| Ambrus et al. | laser scanner | ✗ | ✗ | ✓ | ✓ | ✓ |
| Jung et al. | laser scanner | ✗ | ✓ | ✓ | ✓ | ✓ |
| Wang et al. | laser scanner | ✗ | ✓ | ✓ | ✓ | ✓ |
| Xiong et al. | laser scanner | ✗ | ✓ | ✗ | ✓ | ✓ |
| Sanchez et al. | laser scanner | ✗ | ✓ | ✗ | ✓ | ✗ |
| Oesau et al. | laser scanner | ✗ | ✗ | ✓ | ✓ | ✓ |
| Mura et al. | laser scanner | ✗ | ✓ | ✗ | ✓ | ✓ |
| Ours | LiDAR | ✓ | ✓ | ✓ | ✓ | ✓ |