Object-Based Wetland Vegetation Classification Using Multi-Feature Selection of Unoccupied Aerial Vehicle RGB Imagery

Abstract

1. Introduction

2. Material and Methods

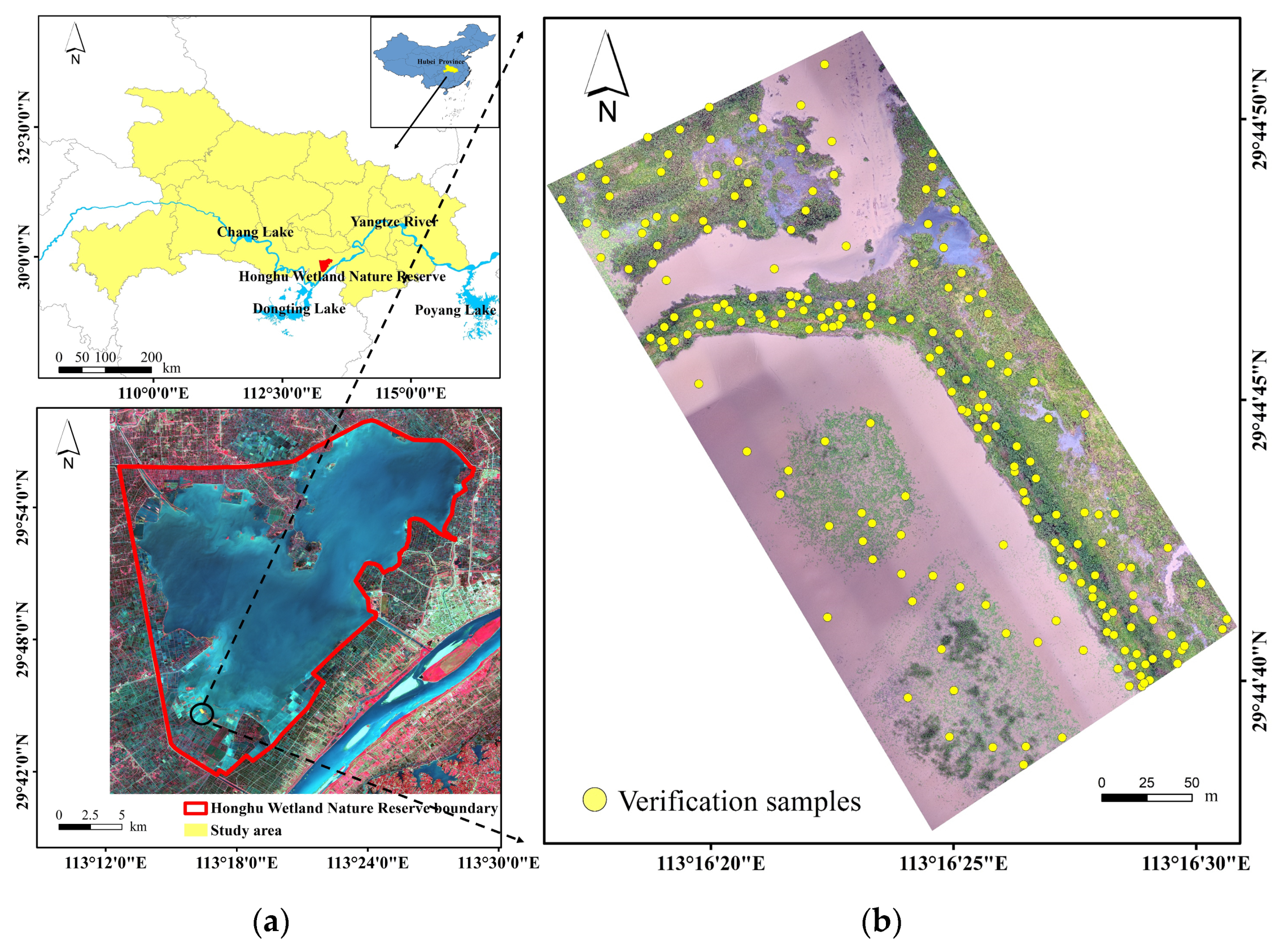

2.1. Study Area

2.2. Data Acquisition and Pre-Processing

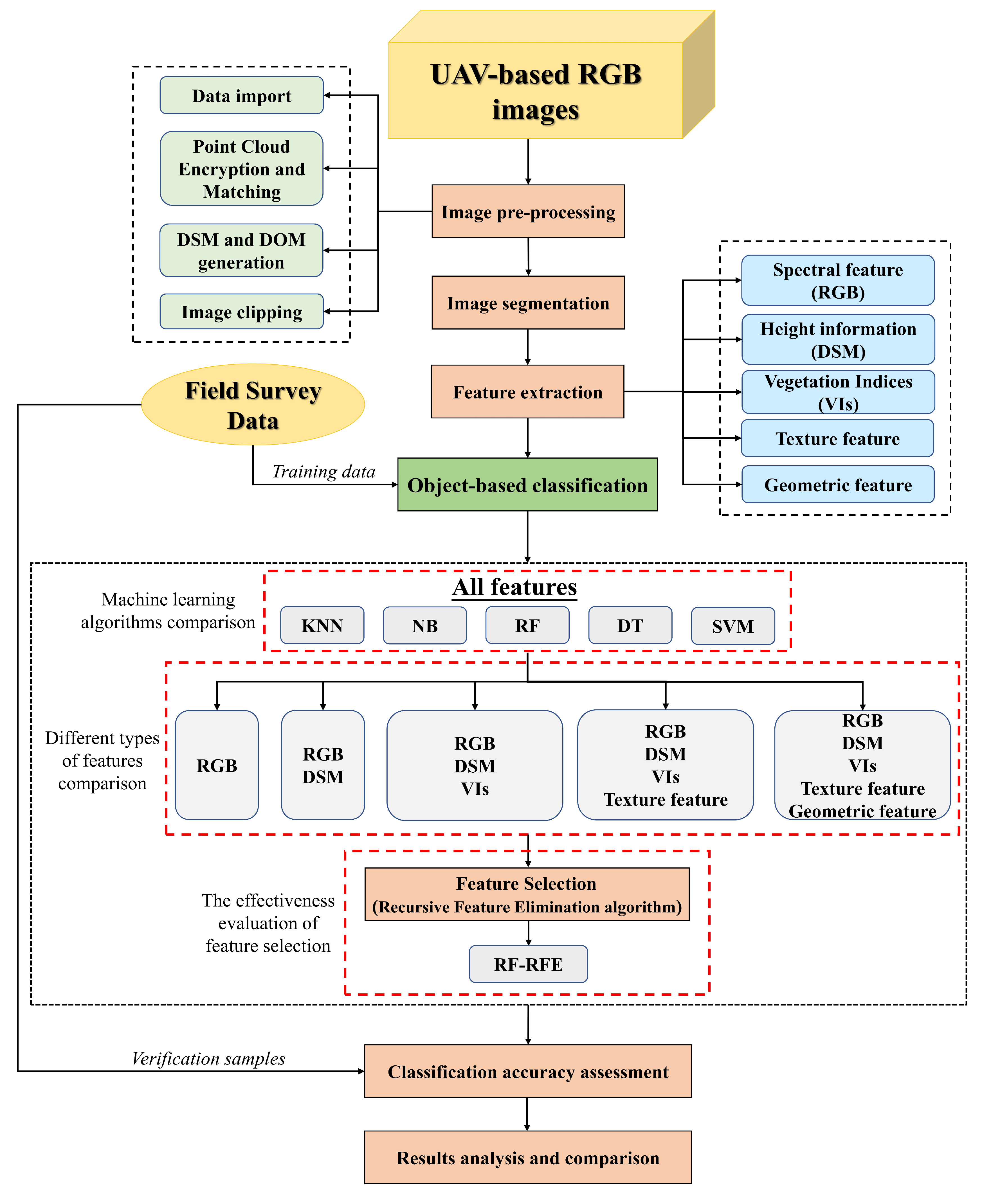

2.3. Study Workflow

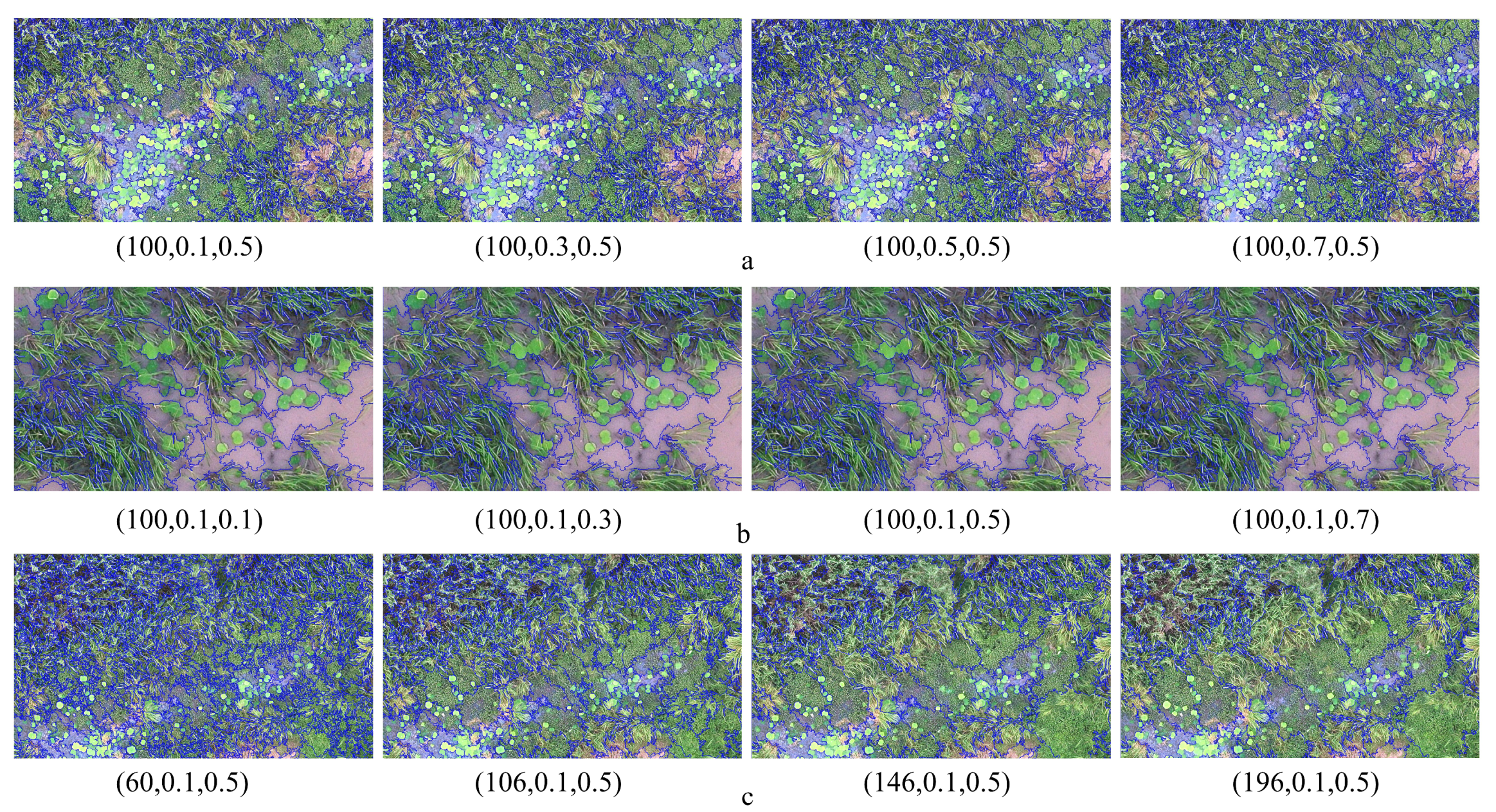

2.3.1. Image Segmentation

2.3.2. Features Derived from DOM and DSM

- (1) Spectral features are the basic features for wetland vegetation identification. The mean value and standard deviation of the red, green and blue bands, together with brightness, were extracted.

- (2) The DSM was used to represent the relative height of wetland vegetation. Height information can increase the separability between vegetation types, especially in areas with dense vegetation [58]. Therefore, the mean value and standard deviation of the DSM were also used for wetland vegetation classification.

- (3) Vegetation indices, which supplement the spectral information of vegetation, were constructed from the available red, green and blue bands [59]. They help distinguish vegetation from the surrounding terrain background and greatly improve the application potential of UAV-based RGB imagery. A total of 14 frequently used vegetation indices were calculated (Table 2).

- (4) Texture features have been frequently applied in terrain classification [57] and have improved the accuracy of image classification and information extraction. The gray-level co-occurrence matrix (GLCM) was used to extract 24 texture features: the mean, standard deviation (StdDev), entropy (Ent), angular second moment (ASM), correlation (Cor), dissimilarity (Dis), contrast (Con) and homogeneity (Hom) of the red, green and blue bands [60].

- (5) Geometric features were calculated from the geometry of each object: area, compactness, roundness, shape index, density and asymmetry (Table 1).

| Vegetation Indices | Full Name | Formulation | Reference |
|---|---|---|---|
| ExG | Excess green index | | [62] |
| ExGR | Excess green minus excess red index | | [35] |
| VEG | Vegetation index | | [35] |
| CIVE | Color index of vegetation | | [63] |
| COM | Combination index | | [64] |
| COM2 | Combination index 2 | | [63] |
| NGRDI | Normalized green–red difference index | | [35] |
| NGBDI | Normalized green–blue difference index | | [65] |
| VDVI | Visible-band difference vegetation index | | [65] |
| RGRI | Red–green ratio index | | [66] |
| BGRI | Blue–green ratio index | | [67] |
| WI | Woebbecke index | | [62] |
| RGBRI | Red–green–blue ratio index | | [68] |
| RGBVI | Red–green–blue vegetation index | | [22] |
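
The formulations of the vegetation indices did not survive in this copy of the table. As a minimal sketch only, the following Python function computes six of the listed indices from an object's mean band values using their commonly cited definitions; these definitions are an assumption and may differ in detail from the formulations used in the study. The function name `vegetation_indices` and the sample values are hypothetical.

```python
def vegetation_indices(red, green, blue):
    """Return a few visible-band indices for one object's mean R, G, B values.

    Assumes commonly cited definitions (not confirmed against the study)
    and non-zero band sums, so no division-by-zero handling is included.
    """
    total = red + green + blue
    # Chromatic coordinates remove the effect of overall brightness.
    r, g, b = red / total, green / total, blue / total
    return {
        "ExG": 2 * g - r - b,
        "NGRDI": (green - red) / (green + red),
        "NGBDI": (green - blue) / (green + blue),
        "VDVI": (2 * green - red - blue) / (2 * green + red + blue),
        "RGRI": red / green,
        "BGRI": blue / green,
    }


# Hypothetical mean DN values of a green, vegetated object.
indices = vegetation_indices(red=60.0, green=120.0, blue=60.0)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

Because every index is a ratio or a normalized difference, the values depend on the relative band levels rather than on absolute brightness, which is what makes them useful as a supplement to the raw spectral means.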

2.3.3. Machine Learning Algorithm Comparison

2.3.4. Contribution of Different Types of Features Evaluation

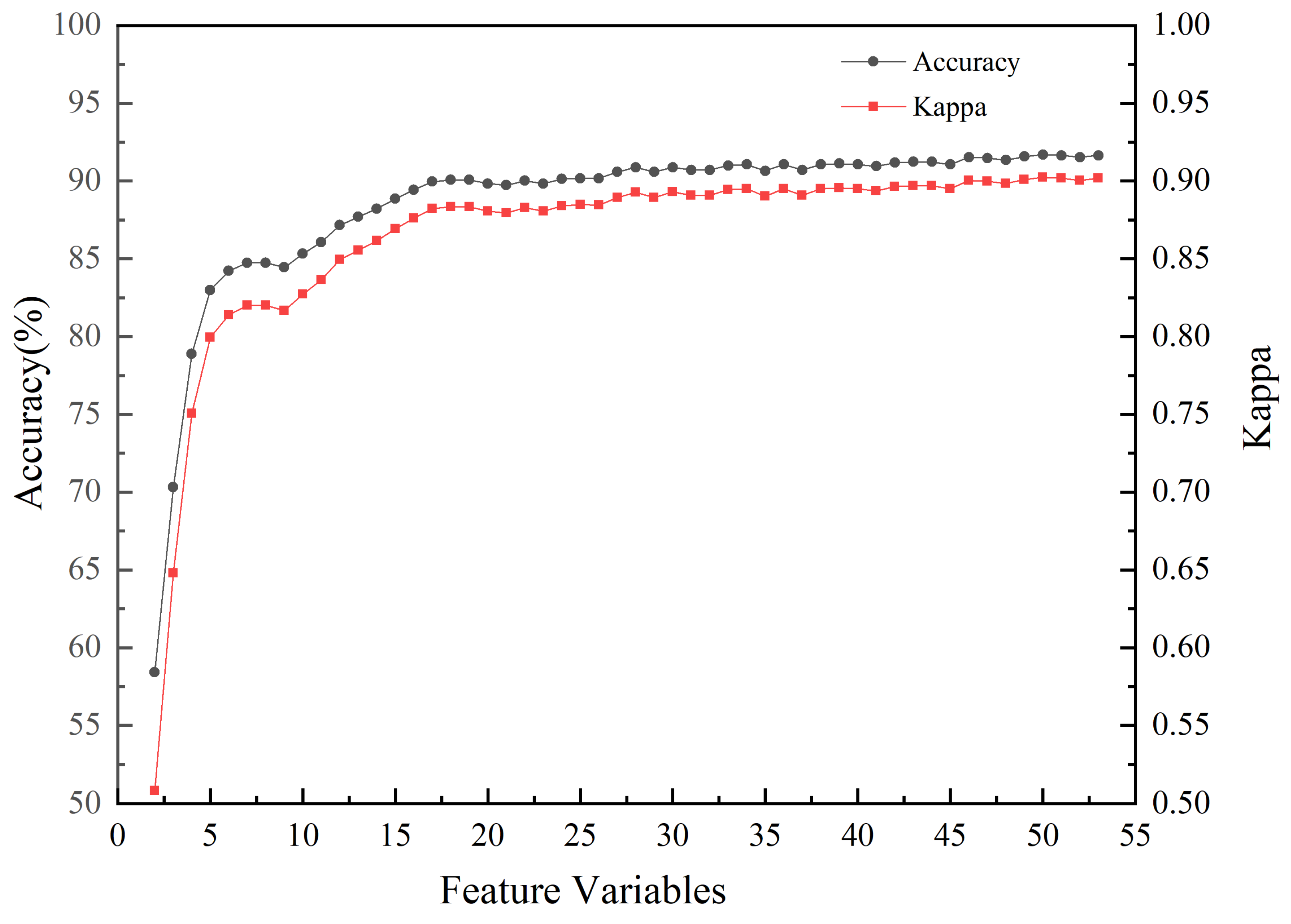

2.3.5. Effectiveness Evaluation of Feature Selection

2.3.6. Ten Object-Based Classification Scenarios Design and Accuracy Assessment

3. Results and Discussion

3.1. Analysis of Image Segmentation Results

3.2. Performance Comparison of Machine Learning Algorithms on Wetland Vegetation Classification

3.3. Contribution of Different Types of Features to Wetland Vegetation Classification

3.4. Analysis of Recursive Feature Elimination Results

3.5. Analysis of the Effectiveness of Feature Selection

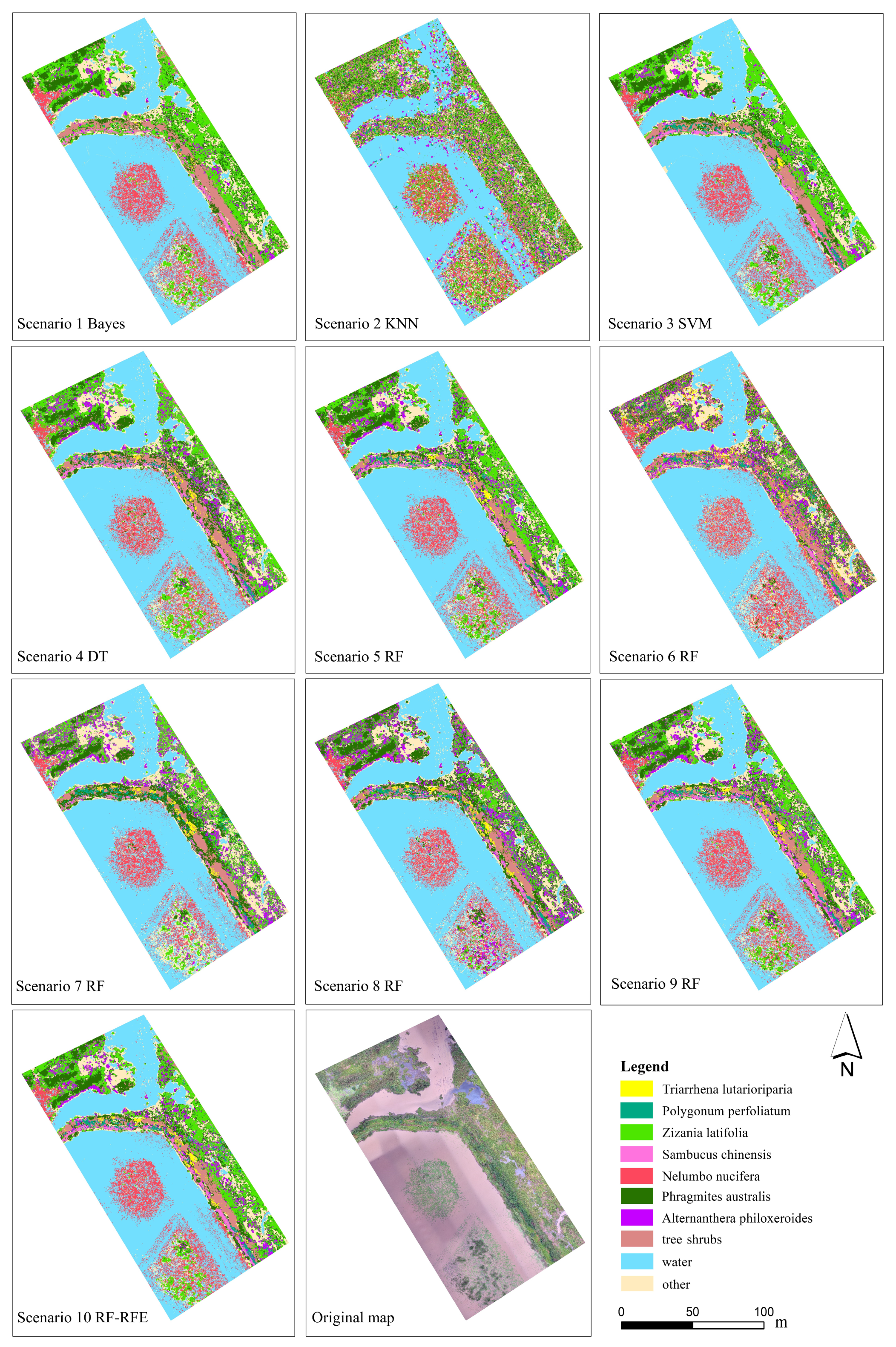

3.6. Comparison of Object-Based Classification Results

4. Conclusions

- (1) When object-based wetland vegetation classification was carried out with all the original features, RF (overall accuracy 89.76%, Kappa 0.88) performed better than Bayes, KNN, SVM and DT.

- (2) Multi-feature combination enables wetland vegetation classification from UAV-based RGB imagery. Different types of features contributed unequally to the classifier. Owing to the similarity of the spectral information of wetland vegetation, height information became the primary feature for discriminating wetland vegetation. The contribution of vegetation indices was irreplaceable, and texture features were less important than vegetation indices but still indispensable.

- (3) RF-RFE achieved the best classification result among the ten scenarios (overall accuracy 90.73%, Kappa 0.90). Feature selection is an effective way to improve the performance of the classifier: a large number of redundant or irrelevant features degrades the classification, and the RFE feature selection algorithm can select a feature subset that improves the classification accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zedler, J.B.; Kercher, S. Wetland resources: Status, trends, ecosystem services, and restorability. Annu. Rev. Environ. Resour. 2005, 30, 39–74.

- Zhang, Y.L.; Lu, D.S.; Yang, B.; Sun, C.H.; Sun, M. Coastal wetland vegetation classification with a landsat thematic mapper image. Int. J. Remote Sens. 2011, 32, 545–561.

- Taddeo, S.; Dronova, I. Indicators of vegetation development in restored wetlands. Ecol. Indic. 2018, 94, 454–467.

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and hyperspectral remote sensing for identification and mapping of wetland vegetation: A review. Wetl. Ecol. Manag. 2010, 18, 281–296.

- Adeli, S.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.J.; Brisco, B.; Tamiminia, H.; Shaw, S. Wetland monitoring using sar data: A meta-analysis and comprehensive review. Remote Sens. 2020, 12, 2190.

- Boon, M.A.; Greenfield, R.; Tesfamichael, S. Wetland assessment using unmanned aerial vehicle (uav) photogrammetry. In Proceedings of the 23rd Congress of the International-Society-for-Photogrammetry-and-Remote-Sensing (ISPRS), Prague, Czech Republic, 12–19 July 2016; pp. 781–788.

- Guo, M.; Li, J.; Sheng, C.L.; Xu, J.W.; Wu, L. A review of wetland remote sensing. Sensors 2017, 17, 777.

- Lane, C.R.; Liu, H.X.; Autrey, B.C.; Anenkhonov, O.A.; Chepinoga, V.V.; Wu, Q.S. Improved wetland classification using eight-band high resolution satellite imagery and a hybrid approach. Remote Sens. 2014, 6, 12187–12216.

- Martinez, J.M.; Le Toan, T. Mapping of flood dynamics and spatial distribution of vegetation in the amazon floodplain using multitemporal sar data. Remote Sens. Environ. 2007, 108, 209–223.

- Pengra, B.W.; Johnston, C.A.; Loveland, T.R. Mapping an invasive plant, phragmites australis, in coastal wetlands using the eo-1 hyperion hyperspectral sensor. Remote Sens. Environ. 2007, 108, 74–81.

- Wright, C.; Gallant, A. Improved wetland remote sensing in yellowstone national park using classification trees to combine tm imagery and ancillary environmental data. Remote Sens. Environ. 2007, 107, 582–605.

- Hess, L.L.; Melack, J.M.; Novo, E.; Barbosa, C.C.F.; Gastil, M. Dual-season mapping of wetland inundation and vegetation for the central amazon basin. Remote Sens. Environ. 2003, 87, 404–428.

- Belluco, E.; Camuffo, M.; Ferrari, S.; Modenese, L.; Silvestri, S.; Marani, A.; Marani, M. Mapping salt-marsh vegetation by multispectral and hyperspectral remote sensing. Remote Sens. Environ. 2006, 105, 54–67.

- Lu, B.; He, Y.H. Species classification using unmanned aerial vehicle (uav)-acquired high spatial resolution imagery in a heterogeneous grassland. ISPRS-J. Photogramm. Remote Sens. 2017, 128, 73–85.

- Ruwaimana, M.; Satyanarayana, B.; Otero, V.; Muslim, A.M.; Syafiq, A.M.; Ibrahim, S.; Raymaekers, D.; Koedam, N.; Dahdouh-Guebas, F. The advantages of using drones over space-borne imagery in the mapping of mangrove forests. PLoS ONE 2018, 13, e0200288.

- Zhang, S.M.; Zhao, G.X.; Lang, K.; Su, B.W.; Chen, X.N.; Xi, X.; Zhang, H.B. Integrated satellite, unmanned aerial vehicle (uav) and ground inversion of the spad of winter wheat in the reviving stage. Sensors 2019, 19, 1485.

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Berni, J.A.J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of uav, aircraft and satellite remote sensing platforms for precision viticulture. Remote Sens. 2015, 7, 2971–2990.

- Joyce, K.E.; Anderson, K.; Bartolo, R.E. Of course we fly unmanned-we’re women! Drones 2021, 5, 21.

- Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep learning in forestry using uav-acquired rgb data: A practical review. Remote Sens. 2021, 13, 2837.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining uav-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Jiang, X.P.; Gao, M.; Gao, Z.Q. A novel index to detect green-tide using uav-based rgb imagery. Estuar. Coast. Shelf Sci. 2020, 245, 106943.

- Sugiura, R.; Tsuda, S.; Tamiya, S.; Itoh, A.; Nishiwaki, K.; Murakami, N.; Shibuya, Y.; Hirafuji, M.; Nuske, S. Field phenotyping system for the assessment of potato late blight resistance using rgb imagery from an unmanned aerial vehicle. Biosyst. Eng. 2016, 148, 1–10.

- Dronova, I.; Kislik, C.; Dinh, Z.; Kelly, M. A review of unoccupied aerial vehicle use in wetland applications: Emerging opportunities in approach, technology, and data. Drones 2021, 5, 45.

- Bhatnagar, S.; Gill, L.; Ghosh, B. Drone image segmentation using machine and deep learning for mapping raised bog vegetation communities. Remote Sens. 2020, 12, 2602.

- Corti Meneses, N.; Brunner, F.; Baier, S.; Geist, J.; Schneider, T. Quantification of extent, density, and status of aquatic reed beds using point clouds derived from uav-rgb imagery. Remote Sens. 2018, 10, 1869.

- Fu, B.L.; Liu, M.; He, H.C.; Lan, F.W.; He, X.; Liu, L.L.; Huang, L.K.; Fan, D.L.; Zhao, M.; Jia, Z.L. Comparison of optimized object-based rf-dt algorithm and segnet algorithm for classifying karst wetland vegetation communities using ultra-high spatial resolution uav data. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 15.

- Dragut, L.; Tiede, D.; Levick, S.R. Esp: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- Zheng, Y.H.; Wu, J.P.; Wang, A.Q.; Chen, J. Object- and pixel-based classifications of macroalgae farming area with high spatial resolution imagery. Geocarto Int. 2018, 33, 1048–1063.

- Estoque, R.C.; Murayama, Y.; Akiyama, C.M. Pixel-based and object-based classifications using high- and medium-spatial-resolution imageries in the urban and suburban landscapes. Geocarto Int. 2015, 30, 1113–1129.

- Pande-Chhetri, R.; Abd-Elrahman, A.; Liu, T.; Morton, J.; Wilhelm, V.L. Object-based classification of wetland vegetation using very high-resolution unmanned air system imagery. Eur. J. Remote Sens. 2017, 50, 564–576.

- Abeysinghe, T.; Milas, A.S.; Arend, K.; Hohman, B.; Reil, P.; Gregory, A.; Vazquez-Ortega, A. Mapping invasive phragmites australis in the old woman creek estuary using uav remote sensing and machine learning classifiers. Remote Sens. 2019, 11, 1380.

- Feng, Q.L.; Liu, J.T.; Gong, J.H. Uav remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

- Torres-Sanchez, J.; Pena, J.M.; de Castro, A.I.; Lopez-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from uav. Comput. Electron. Agric. 2014, 103, 104–113.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS-J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Liu, T.; Abd-Elrahman, A.; Morton, J.; Wilhelm, V.L. Comparing fully convolutional networks, random forest, support vector machine, and patch-based deep convolutional neural networks for object-based wetland mapping using images from small unmanned aircraft system. GISci. Remote Sens. 2018, 55, 243–264.

- Geng, R.F.; Jin, S.G.; Fu, B.L.; Wang, B. Object-based wetland classification using multi-feature combination of ultra-high spatial resolution multispectral images. Can. J. Remote Sens. 2020, 46, 784–802.

- Cutler, D.R.; Edwards, T.C.; Beard, K.H.; Cutler, A.; Hess, K.T. Random forests for classification in ecology. Ecology 2007, 88, 2783–2792.

- Zhang, C.Y.; Xie, Z.X. Object-based vegetation mapping in the kissimmee river watershed using hymap data and machine learning techniques. Wetlands 2013, 33, 233–244.

- Zhou, X.Z.; Wen, H.J.; Zhang, Y.L.; Xu, J.H.; Zhang, W.G. Landslide susceptibility mapping using hybrid random forest with geodetector and rfe for factor optimization. Geosci. Front. 2021, 12, 101211.

- Balha, A.; Mallick, J.; Pandey, S.; Gupta, S.; Singh, C.K. A comparative analysis of different pixel and object-based classification algorithms using multi-source high spatial resolution satellite data for lulc mapping. Earth Sci. Inform. 2021, 14, 2231–2247.

- Gibril, M.B.A.; Shafri, H.Z.M.; Hamedianfar, A. New semi-automated mapping of asbestos cement roofs using rule-based object-based image analysis and taguchi optimization technique from worldview-2 images. Int. J. Remote Sens. 2017, 38, 467–491.

- Qian, Y.G.; Zhou, W.Q.; Yan, J.L.; Li, W.F.; Han, L.J. Comparing machine learning classifiers for object-based land cover classification using very high resolution imagery. Remote Sens. 2015, 7, 153–168.

- Laliberte, A.S.; Browning, D.M.; Rango, A. A comparison of three feature selection methods for object-based classification of sub-decimeter resolution ultracam-l imagery. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 70–78.

- Georganos, S.; Grippa, T.; Vanhuysse, S.; Lennert, M.; Shimoni, M.; Kalogirou, S.; Wolff, E. Less is more: Optimizing classification performance through feature selection in a very-high-resolution remote sensing object-based urban application. GISci. Remote Sens. 2018, 55, 221–242.

- Cao, J.J.; Leng, W.C.; Liu, K.; Liu, L.; He, Z.; Zhu, Y.H. Object-based mangrove species classification using unmanned aerial vehicle hyperspectral images and digital surface models. Remote Sens. 2018, 10, 89.

- Zuo, P.P.; Fu, B.L.; Lan, F.W.; Xie, S.Y.; He, H.C.; Fan, D.L.; Lou, P.Q. Classification method of swamp vegetation using uav multispectral data. China Environ. Sci. 2021, 41, 2399–2410.

- Boon, M.A.; Tesfamichael, S. Determination of the present vegetation state of a wetland with uav rgb imagery. In Proceedings of the 37th International Symposium on Remote Sensing of Environment, Tshwane, South Africa, 8–12 May 2017; Copernicus Gesellschaft Mbh: Tshwane, South Africa; pp. 37–41.

- Zhang, T.; Ban, X.; Wang, X.L.; Cai, X.B.; Li, E.H.; Wang, Z.; Yang, C.; Zhang, Q.; Lu, X.R. Analysis of nutrient transport and ecological response in honghu lake, china by using a mathematical model. Sci. Total Environ. 2017, 575, 418–428.

- Liu, Y.; Ren, W.B.; Shu, T.; Xie, C.F.; Jiang, J.H.; Yang, S. Current status and the long-term change of riparian vegetation in last fifty years of lake honghu. Resour. Environ. Yangtze Basin 2015, 24, 38–45.

- Flanders, D.; Hall-Beyer, M.; Pereverzoff, J. Preliminary evaluation of ecognition object-based software for cut block delineation and feature extraction. Can. J. Remote Sens. 2003, 29, 441–452.

- Pena-Barragan, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

- Gao, Y.; Mas, J.F.; Maathuis, B.H.P.; Zhang, X.M.; Van Dijk, P.M. Comparison of pixel-based and object-oriented image classification approaches - a case study in a coal fire area, wuda, inner mongolia, china. Int. J. Remote Sens. 2006, 27, 4039–4055.

- Lin, F.F.; Zhang, D.Y.; Huang, Y.B.; Wang, X.; Chen, X.F. Detection of corn and weed species by the combination of spectral, shape and textural features. Sustainability 2017, 9, 1335.

- Zhang, H.X.; Li, Q.Z.; Liu, J.G.; Du, X.; Dong, T.F.; McNairn, H.; Champagne, C.; Liu, M.X.; Shang, J.L. Object-based crop classification using multi-temporal spot-5 imagery and textural features with a random forest classifier. Geocarto Int. 2018, 33, 1017–1035.

- Zhang, L.; Liu, Z.; Ren, T.W.; Liu, D.Y.; Ma, Z.; Tong, L.; Zhang, C.; Zhou, T.Y.; Zhang, X.D.; Li, S.M. Identification of seed maize fields with high spatial resolution and multiple spectral remote sensing using random forest classifier. Remote Sens. 2020, 12, 362.

- Al-Najjar, H.A.H.; Kalantar, B.; Pradhan, B.; Saeidi, V.; Halin, A.A.; Ueda, N.; Mansor, S. Land cover classification from fused dsm and uav images using convolutional neural networks. Remote Sens. 2019, 11, 1461.

- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Agapiou, A. Vegetation extraction using visible-bands from openly licensed unmanned aerial vehicle imagery. Drones 2020, 4, 27.

- Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

- Woebbecke, D.M.; Meyer, G.E.; Vonbargen, K.; Mortensen, D.A. Color indexes for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Guerrero, J.M.; Pajares, G.; Montalvo, M.; Romeo, J.; Guijarro, M. Support vector machines for crop/weeds identification in maize fields. Expert Syst. Appl. 2012, 39, 11149–11155.

- Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.J.; Burgos-Artizzu, X.P.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

- Du, M.M.; Noguchi, N. Monitoring of wheat growth status and mapping of wheat yield’s within-field spatial variations using color images acquired from uav-camera system. Remote Sens. 2017, 9, 289.

- Wan, L.; Li, Y.J.; Cen, H.Y.; Zhu, J.P.; Yin, W.X.; Wu, W.K.; Zhu, H.Y.; Sun, D.W.; Zhou, W.J.; He, Y. Combining uav-based vegetation indices and image classification to estimate flower number in oilseed rape. Remote Sens. 2018, 10, 1484.

- Calderon, R.; Navas-Cortes, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

- Xie, B.; Yang, W.N.; Wang, F. A new estimate method for fractional vegetation cover based on uav visual light spectrum. Sci. Surv. Mapp. 2020, 45, 72–77.

- Shiraishi, T.; Motohka, T.; Thapa, R.B.; Watanabe, M.; Shimada, M. Comparative assessment of supervised classifiers for land use-land cover classification in a tropical region using time-series palsar mosaic data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 1186–1199.

- Wieland, M.; Pittore, M. Performance evaluation of machine learning algorithms for urban pattern recognition from multi-spectral satellite images. Remote Sens. 2014, 6, 2912–2939.

- Murthy, S.K. Automatic construction of decision trees from data: A multi-disciplinary survey. Data Min. Knowl. Discov. 1998, 2, 345–389.

- Friedl, M.A.; Brodley, C.E. Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 1997, 61, 399–409.

- Apte, C.; Weiss, S. Data mining with decision trees and decision rules. Futur. Gener. Comp. Syst. 1997, 13, 197–210.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Gxokwe, S.; Dube, T.; Mazvimavi, D. Leveraging google earth engine platform to characterize and map small seasonal wetlands in the semi-arid environments of south africa. Sci. Total Environ. 2022, 803, 12.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Prasad, A.M.; Iverson, L.R.; Liaw, A. Newer classification and regression tree techniques: Bagging and random forests for ecological prediction. Ecosystems 2006, 9, 181–199.

- Wang, X.F.; Wang, Y.; Zhou, C.W.; Yin, L.C.; Feng, X.M. Urban forest monitoring based on multiple features at the single tree scale by uav. Urban For. Urban Green. 2021, 58, 10.

- Zhou, X.C.; Zheng, L.; Huang, H.Y. Classification of forest stand based on multi-feature optimization of uav visible light remote sensing. Sci. Silvae Sin. 2021, 57, 24–36.

- Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Hsu, H.H.; Hsieh, C.W.; Lu, M.D. Hybrid feature selection by combining filters and wrappers. Expert Syst. Appl. 2011, 38, 8144–8150.

- Wang, A.G.; An, N.; Chen, G.L.; Li, L.; Alterovitz, G. Accelerating wrapper-based feature selection with k-nearest-neighbor. Knowledge-Based Syst. 2015, 83, 81–91.

- Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene selection for cancer classification using support vector machines. Mach. Learn. 2002, 46, 389–422.

- Mao, Y.; Pi, D.Y.; Liu, Y.M.; Sun, Y.X. Accelerated recursive feature elimination based on support vector machine for key variable identification. Chin. J. Chem. Eng. 2006, 14, 65–72.

- Griffith, D.C.; Hay, G.J. Integrating geobia, machine learning, and volunteered geographic information to map vegetation over rooftops. ISPRS Int. J. Geo-Inf. 2018, 7, 462.

- Randelovic, P.; Dordevic, V.; Milic, S.; Balesevic-Tubic, S.; Petrovic, K.; Miladinovic, J.; Dukic, V. Prediction of soybean plant density using a machine learning model and vegetation indices extracted from rgb images taken with a uav. Agronomy 2020, 10, 1108.

- Morgan, G.R.; Wang, C.Z.; Morris, J.T. Rgb indices and canopy height modelling for mapping tidal marsh biomass from a small unmanned aerial system. Remote Sens. 2021, 13, 3406.

- Tian, Y.C.; Huang, H.; Zhou, G.Q.; Zhang, Q.; Tao, J.; Zhang, Y.L.; Lin, J.L. Aboveground mangrove biomass estimation in beibu gulf using machine learning and uav remote sensing. Sci. Total Environ. 2021, 781.

- Dale, J.; Burnside, N.G.; Hill-Butler, C.; Berg, M.J.; Strong, C.J.; Burgess, H.M. The use of unmanned aerial vehicles to determine differences in vegetation cover: A tool for monitoring coastal wetland restoration schemes. Remote Sens. 2020, 12, 4022.

- Lu, J.S.; Eitel, J.U.H.; Engels, M.; Zhu, J.; Ma, Y.; Liao, F.; Zheng, H.B.; Wang, X.; Yao, X.; Cheng, T.; et al. Improving unmanned aerial vehicle (uav) remote sensing of rice plant potassium accumulation by fusing spectral and textural information. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 15.

- Jiang, Y.F.; Zhang, L.; Yan, M.; Qi, J.G.; Fu, T.M.; Fan, S.X.; Chen, B.W. High-resolution mangrove forests classification with machine learning using worldview and uav hyperspectral data. Remote Sens. 2021, 13, 1529.

- Liu, H.P.; Zhang, Y.X. Selection of landsat8 image classification bands based on mlc-rfe. J. Indian Soc. Remote Sens. 2019, 47, 439–446.

- Ma, L.; Fu, T.Y.; Blaschke, T.; Li, M.C.; Tiede, D.; Zhou, Z.J.; Ma, X.X.; Chen, D.L. Evaluation of feature selection methods for object-based land cover mapping of unmanned aerial vehicle imagery using random forest and support vector machine classifiers. ISPRS Int. J. Geo-Inf. 2017, 6, 51.

- Gibson, D.J.; Looney, P.B. Seasonal-variation in vegetation classification on perdido key, a barrier-island off the coast of the florida panhandle. J. Coast. Res. 1992, 8, 943–956.

- Zhang, Q. Research progress in wetland vegetation classification by remote sensing. World For. Res. 2019, 32, 49–54.

- Zhang, X.Y.; Feng, X.Z.; Wang, K. Integration of classifiers for improvement of vegetation category identification accuracy based on image objects. N. Z. J. Agric. Res. 2007, 50, 1125–1133.

- Hao, P.Y.; Wang, L.; Niu, Z. Comparison of hybrid classifiers for crop classification using normalized difference vegetation index time series: A case study for major crops in north xinjiang, china. PLoS ONE 2015, 10, e0137748.

| Feature Type | Feature Names | Feature Description | Feature Number |
|---|---|---|---|
| Spectral feature | Mean R, mean G, mean B, standard deviation R, standard deviation G, standard deviation B | R, G and B represent the DN values of the red, green and blue bands, respectively. The mean value and standard deviation of each object in the red, green and blue bands are calculated from the DN values of the object’s pixels. | 7 |
| | Brightness | Brightness is calculated from the combination of the DN values of the object’s pixels. | |
| Height information | Mean DSM, standard deviation DSM | Mean value and standard deviation of each object in the DSM. | 2 |
| Vegetation indices | Shown in Table 2 | | 14 |
| Texture feature | GLCM_Mean r, GLCM_Mean g, GLCM_Mean b, GLCM_StdDev r, GLCM_StdDev g, GLCM_StdDev b, GLCM_Ent r, GLCM_Ent g, GLCM_Ent b, GLCM_ASM r, GLCM_ASM g, GLCM_ASM b, GLCM_Cor r, GLCM_Cor g, GLCM_Cor b, GLCM_Dis r, GLCM_Dis g, GLCM_Dis b, GLCM_Con r, GLCM_Con g, GLCM_Con b, GLCM_Hom r, GLCM_Hom g, GLCM_Hom b | Texture features are derived from the red, green and blue bands using the gray-level co-occurrence matrix (GLCM). StdDev: standard deviation; Ent: entropy; ASM: angular second moment; Cor: correlation; Dis: dissimilarity; Con: contrast; Hom: homogeneity; r: red band; g: green band; b: blue band. | 24 |
| Geometric feature | Area, compactness, roundness, shape index, density, asymmetry | Geometric features are calculated from the geometry information of the object. | 6 |
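
To illustrate how GLCM texture measures such as those listed above are obtained, the following minimal Python sketch builds a co-occurrence matrix for a tiny quantized patch and derives five of the measures using the standard Haralick-style definitions. It is an illustration under stated assumptions rather than the procedure used in the study: only horizontal neighbours are counted, the gray levels are already quantized, and the helper names `glcm` and `texture_measures` are hypothetical.

```python
import math


def glcm(patch, levels):
    """Symmetric, normalised co-occurrence matrix for horizontal neighbours."""
    counts = [[0.0] * levels for _ in range(levels)]
    for row in patch:
        for left, right in zip(row, row[1:]):
            counts[left][right] += 1  # pair read left to right
            counts[right][left] += 1  # same pair read right to left
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def texture_measures(p):
    """Homogeneity, contrast, dissimilarity, ASM and entropy of a GLCM."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "Hom": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "Con": sum(v * (i - j) ** 2 for i, j, v in cells),
        "Dis": sum(v * abs(i - j) for i, j, v in cells),
        "ASM": sum(v * v for i, j, v in cells),
        "Ent": -sum(v * math.log(v) for i, j, v in cells if v > 0),
    }


# Two hypothetical 2-level patches: a uniform one and a striped one.
flat = [[1, 1, 1], [1, 1, 1]]
stripes = [[0, 1, 0], [1, 0, 1]]
flat_tex = texture_measures(glcm(flat, levels=2))
stripe_tex = texture_measures(glcm(stripes, levels=2))
print(flat_tex)
print(stripe_tex)
```

The uniform patch yields maximal homogeneity and zero contrast, whereas the striped patch yields lower homogeneity and higher contrast and entropy, which is the kind of difference that lets texture separate smooth canopies from rough ones.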

| Scenario | Classification Model | Classification Features | Feature Number |
|---|---|---|---|
| 1 | Bayes | RGB+DSM+VIs+Texture+Geometry | 53 |
| 2 | KNN | RGB+DSM+VIs+Texture+Geometry | 53 |
| 3 | SVM | RGB+DSM+VIs+Texture+Geometry | 53 |
| 4 | DT | RGB+DSM+VIs+Texture+Geometry | 53 |
| 5 | RF | RGB+DSM+VIs+Texture+Geometry | 53 |
| 6 | RF | RGB | 7 |
| 7 | RF | RGB+DSM | 9 |
| 8 | RF | RGB+DSM+VIs | 23 |
| 9 | RF | RGB+DSM+VIs+Texture | 47 |
| 10 | RF-RFE | Optimized feature subset | 36 |
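
The feature numbers of the scenarios follow from adding the feature groups one at a time. The short Python sketch below reproduces that arithmetic from the group sizes given in the feature table (7 spectral, 2 height, 14 vegetation indices, 24 texture, 6 geometric); the names `FEATURE_GROUPS` and `scenario_feature_count` are hypothetical and serve only this illustration.

```python
# Group sizes as listed in the feature table of this article.
FEATURE_GROUPS = {"RGB": 7, "DSM": 2, "VIs": 14, "Texture": 24, "Geometry": 6}


def scenario_feature_count(groups):
    """Total number of features when the given groups are combined."""
    return sum(FEATURE_GROUPS[name] for name in groups)


# Add one group at a time, in the order used by the scenarios.
order = list(FEATURE_GROUPS)
cumulative = {
    "+".join(order[:k]): scenario_feature_count(order[:k])
    for k in range(1, len(order) + 1)
}
for combination, count in cumulative.items():
    print(f"{combination}: {count}")
```

The five cumulative totals match the feature numbers of Scenarios 6 to 9 and of the full feature set used in Scenarios 1 to 5.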

| Class | Scenario 1 (Bayes) UA | Scenario 1 (Bayes) PA | Scenario 2 (KNN) UA | Scenario 2 (KNN) PA | Scenario 3 (SVM) UA | Scenario 3 (SVM) PA | Scenario 4 (DT) UA | Scenario 4 (DT) PA | Scenario 5 (RF) UA | Scenario 5 (RF) PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Triarrhena lutarioriparia | 100 | 50.00 | 36.36 | 50.00 | 100 | 62.50 | 87.50 | 87.50 | 87.50 | 87.50 |
| Polygonum perfoliatum | 100 | 65.00 | 86.67 | 65.00 | 100 | 85.00 | 94.12 | 80.00 | 100 | 80.00 |
| Zizania latifolia | 69.23 | 81.82 | 30.95 | 59.09 | 67.86 | 86.36 | 62.96 | 77.27 | 72.41 | 95.45 |
| Sambucus chinensis | 100 | 87.50 | 66.67 | 31.25 | 100 | 96.88 | 91.43 | 100 | 96.88 | 96.88 |
| Nelumbo nucifera | 100 | 97.14 | 100 | 71.43 | 100 | 97.14 | 100 | 91.43 | 100 | 97.14 |
| Phragmites australis | 63.33 | 82.61 | 36.11 | 56.52 | 83.33 | 86.96 | 67.86 | 82.61 | 81.82 | 78.26 |
| other | 93.33 | 100 | 66.67 | 71.43 | 100 | 92.86 | 100 | 100 | 100 | 100 |
| tree shrubs | 64.00 | 94.12 | 43.75 | 41.18 | 63.64 | 82.35 | 80.00 | 70.59 | 73.68 | 82.35 |
| Alternanthera philoxeroides | 88.24 | 71.43 | 73.33 | 52.38 | 88.89 | 76.19 | 93.75 | 71.43 | 88.89 | 76.19 |
| water | 100 | 100 | 86.67 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Overall accuracy (%) | 84.88 | | 58.05 | | 88.78 | | 86.34 | | 89.76 | |
| Kappa | 0.83 | | 0.53 | | 0.87 | | 0.85 | | 0.88 | |

| Class | Scenario 6 UA | Scenario 6 PA | Scenario 7 UA | Scenario 7 PA | Scenario 8 UA | Scenario 8 PA | Scenario 9 UA | Scenario 9 PA | Scenario 10 UA | Scenario 10 PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Triarrhena lutarioriparia | 55.56 | 62.50 | 77.78 | 87.50 | 77.78 | 87.50 | 87.50 | 87.50 | 87.50 | 87.50 |
| Polygonum perfoliatum | 88.89 | 80.00 | 94.12 | 80.00 | 88.89 | 80.00 | 100 | 80.00 | 100 | 80.00 |
| Zizania latifolia | 66.67 | 36.36 | 55.56 | 45.45 | 75.00 | 54.55 | 67.74 | 95.45 | 75.00 | 95.45 |
| Sambucus chinensis | 87.10 | 84.38 | 100 | 87.50 | 96.77 | 93.75 | 96.77 | 93.75 | 96.97 | 100 |
| Nelumbo nucifera | 83.78 | 88.57 | 100 | 91.43 | 91.89 | 97.14 | 100 | 94.29 | 100 | 97.14 |
| Phragmites australis | 70.59 | 52.17 | 61.29 | 82.61 | 90.00 | 78.26 | 85.71 | 78.26 | 85.71 | 78.26 |
| other | 100 | 92.86 | 70.00 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| tree shrubs | 50.00 | 70.59 | 100 | 82.35 | 65.22 | 88.24 | 71.43 | 88.24 | 71.43 | 88.24 |
| Alternanthera philoxeroides | 45.16 | 66.67 | 69.57 | 76.19 | 70.83 | 80.95 | 88.24 | 71.43 | 94.12 | 76.19 |
| water | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Overall accuracy (%) | 73.66 | | 82.44 | | 85.85 | | 88.78 | | 90.73 | |
| Kappa | 0.70 | | 0.80 | | 0.84 | | 0.87 | | 0.90 | |
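
The user's accuracy (UA), producer's accuracy (PA), overall accuracy and Kappa coefficient reported above are all derived from a confusion matrix. As a minimal sketch, the following Python function computes them with the standard definitions; the two-class matrix is a made-up example, not data from the study, and the function name `accuracy_report` is hypothetical.

```python
def accuracy_report(matrix):
    """Accuracy metrics from a square confusion matrix.

    matrix[i][j] = number of samples classified as class i whose
    reference (ground-truth) class is j.
    """
    n = len(matrix)
    total = sum(map(sum, matrix))
    row_sums = [sum(row) for row in matrix]  # samples per classified class
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[k][k] for k in range(n)]  # correctly classified
    overall = sum(diagonal) / total
    # Agreement expected by chance, from the row and column marginals.
    chance = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    return {
        "UA": [d / r for d, r in zip(diagonal, row_sums)],
        "PA": [d / c for d, c in zip(diagonal, col_sums)],
        "OA": overall,
        "Kappa": (overall - chance) / (1 - chance),
    }


# Hypothetical two-class example.
report = accuracy_report([[8, 2], [1, 9]])
print(report)
```

UA penalizes commission errors (objects wrongly assigned to a class), PA penalizes omission errors (objects of a class that were missed), and Kappa discounts the agreement that would be expected by chance.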

| Feature Ranking | Feature Name | Feature Ranking | Feature Name | Feature Ranking | Feature Name |
|---|---|---|---|---|---|
| 1 | Mean DSM | 13 | Standard deviation B | 25 | GLCM_Ent r |
| 2 | GLCM_Hom g | 14 | GLCM_StdDev g | 26 | GLCM_Ent g |
| 3 | GLCM_Hom r | 15 | NGRDI | 27 | Standard deviation DSM |
| 4 | COM2 | 16 | ExG | 28 | GLCM_StdDev r |
| 5 | NGBDI | 17 | RGRI | 29 | Area |
| 6 | GLCM_Hom b | 18 | Standard deviation G | 30 | GLCM_StdDev b |
| 7 | VEG | 19 | Standard deviation R | 31 | GLCM_Dis g |
| 8 | BGRI | 20 | GLCM_ASM r | 32 | GLCM_Dis r |
| 9 | VDVI | 21 | CIVE | 33 | COM |
| 10 | WI | 22 | GLCM_Ent b | 34 | GLCM_Cor g |
| 11 | RGBRI | 23 | GLCM_ASM b | 35 | Shape index |
| 12 | RGBVI | 24 | GLCM_ASM g | 36 | Mean B |
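
The table above lists 36 ranked features, which matches the size of the optimized subset in Scenario 10 and so appears to be the outcome of recursive feature elimination (RFE). The general RFE loop can be sketched in a few lines of Python: fit a model, drop the least important feature, and repeat. To keep the sketch free of dependencies, the random forest used in the study is replaced here by a small logistic regression whose absolute weights act as importance scores; this substitution, the toy data, and the names `fit_logistic` and `rfe_ranking` are assumptions made for illustration only.

```python
import math


def fit_logistic(rows, labels, steps=200, rate=0.1):
    """Gradient descent for logistic regression without a bias term.

    Stand-in for the random forest of the study; assumes centred features.
    """
    weights = [0.0] * len(rows[0])
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for x, y in zip(rows, labels):
            score = sum(w * v for w, v in zip(weights, x))
            p = 1 / (1 + math.exp(-score))
            for j, v in enumerate(x):
                grad[j] += (p - y) * v
        weights = [w - rate * g / len(rows) for w, g in zip(weights, grad)]
    return weights


def rfe_ranking(rows, labels):
    """Return feature indices ordered from most to least important."""
    remaining = list(range(len(rows[0])))
    eliminated = []
    while len(remaining) > 1:
        subset = [[x[j] for j in remaining] for x in rows]
        weights = fit_logistic(subset, labels)  # refit on surviving features
        weakest = min(range(len(remaining)), key=lambda k: abs(weights[k]))
        eliminated.append(remaining.pop(weakest))
    return remaining + eliminated[::-1]


# Toy data: feature 0 separates the classes, feature 1 agrees with the
# class three times out of four, feature 2 is unrelated to the class.
rows, labels = [], []
for label, sign in ((1, 1.0), (0, -1.0)):
    for partial in (1.0, 1.0, 1.0, -1.0):
        for noise in (1.0, -1.0):
            rows.append([sign, sign * partial, noise])
            labels.append(label)

ranking = rfe_ranking(rows, labels)
print(ranking)
```

Refitting after every removal is what distinguishes RFE from a one-off importance ranking: the importance of the surviving features is re-estimated once a redundant or irrelevant feature has been dropped.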

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, R.; Yang, C.; Li, E.; Cai, X.; Yang, J.; Xia, Y. Object-Based Wetland Vegetation Classification Using Multi-Feature Selection of Unoccupied Aerial Vehicle RGB Imagery. Remote Sens. 2021, 13, 4910. https://doi.org/10.3390/rs13234910
