Towards a Deep-Learning-Based Framework of Sentinel-2 Imagery for Automated Active Fire Detection

Abstract

1. Introduction

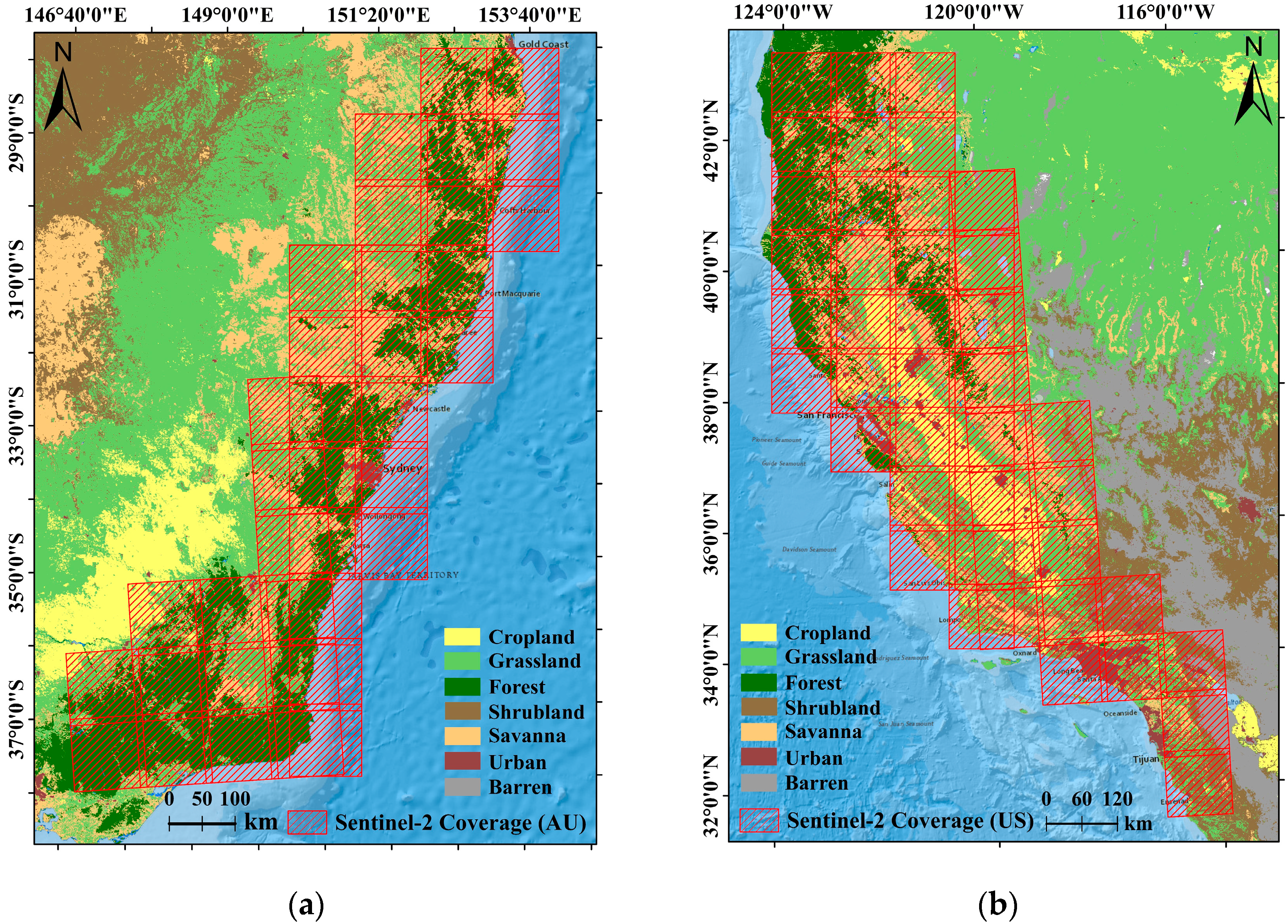

2. Test Sites and Datasets

3. Methodology

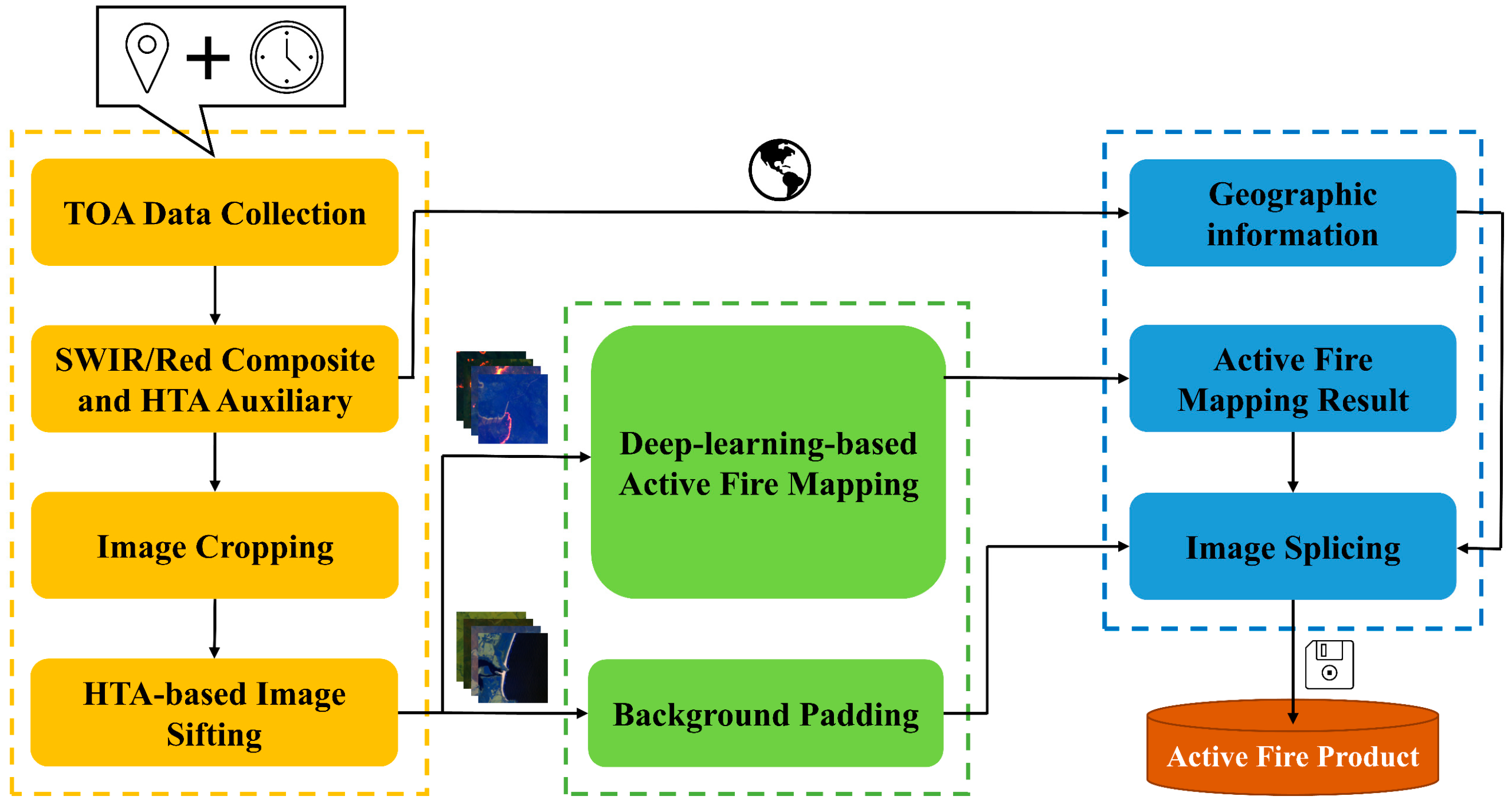

3.1. Automated Active Fire Detection Framework

3.2. Deep-Learning-Based Active Fire Detection

3.3. Evaluation Metrics

3.4. Implementation Details

4. Experiment Results

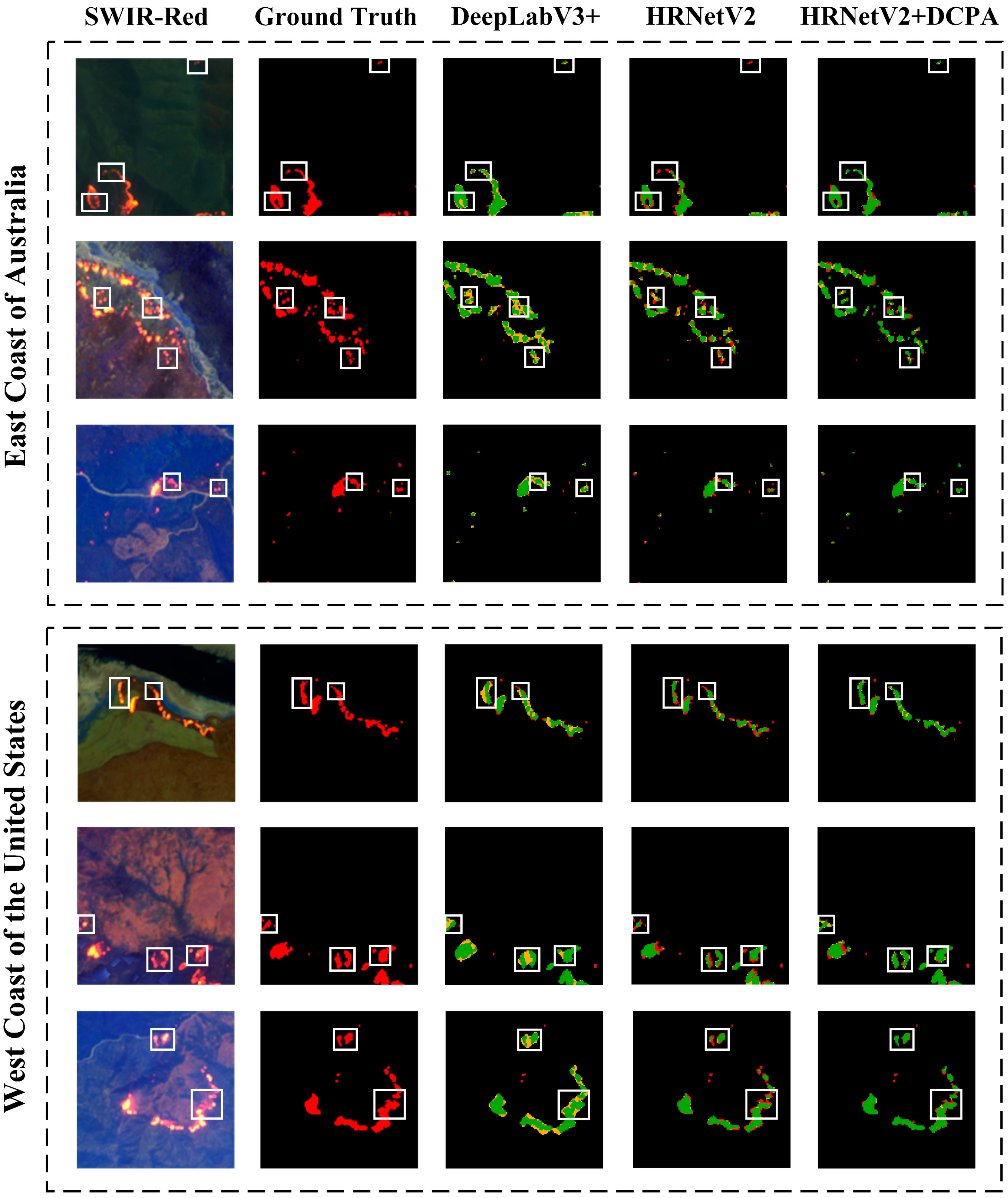

4.1. Deep-Learning-Based Active Fire Detection

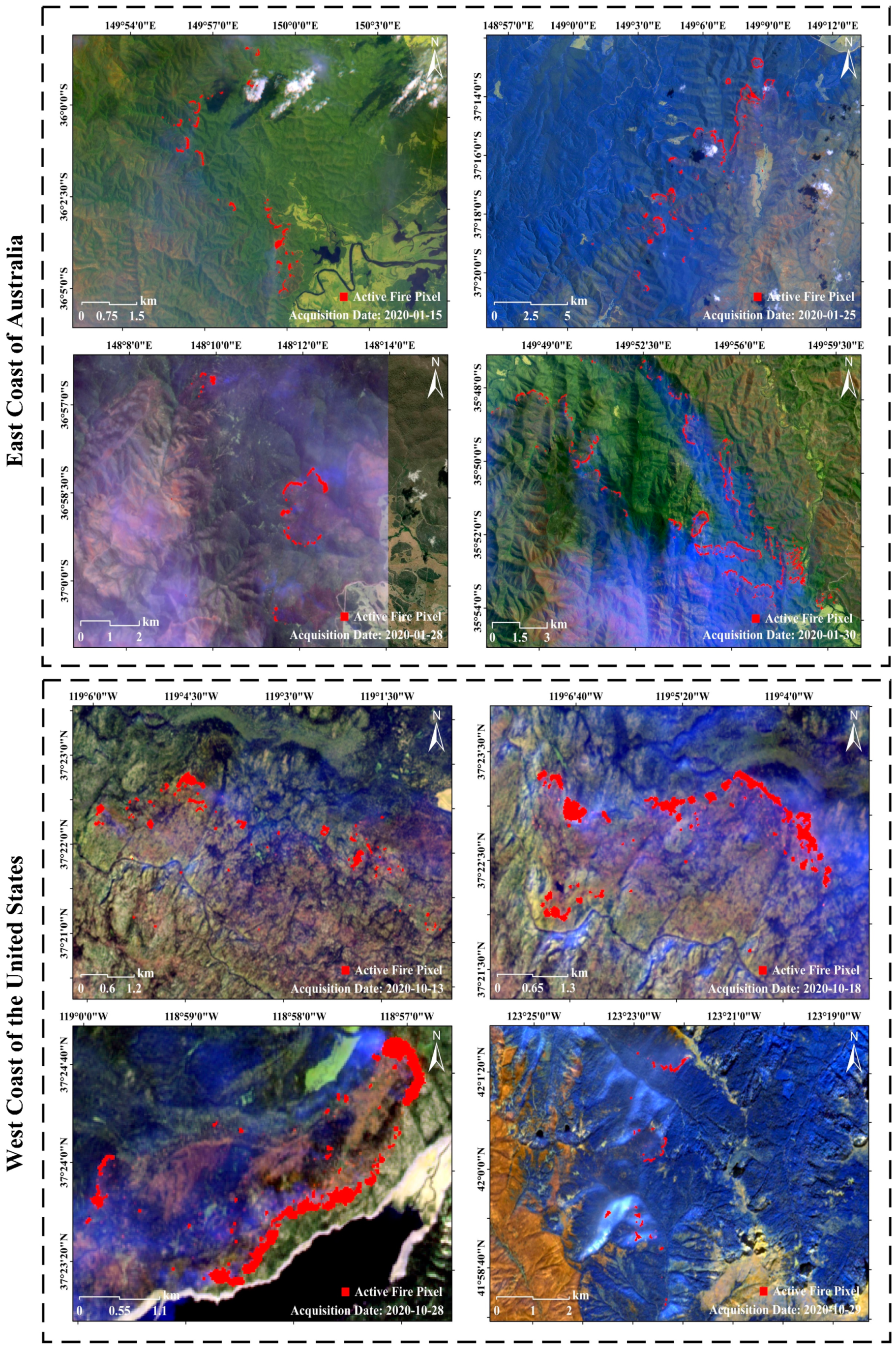

4.2. Automated Active Fire Detection Framework

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Channel | Sentinel-2A Central Wavelength | Sentinel-2A Bandwidth | Sentinel-2B Central Wavelength | Sentinel-2B Bandwidth | Spatial Resolution |
|---|---|---|---|---|---|
| 4 (Red) | 664.6 nm | 31 nm | 664.9 nm | 31 nm | 10 m |
| 8A (Narrow NIR) | 864.7 nm | 21 nm | 864.0 nm | 22 nm | 20 m |
| 11 (SWIR 1) | 1613.7 nm | 91 nm | 1610.4 nm | 94 nm | 20 m |
| 12 (SWIR 2) | 2202.4 nm | 175 nm | 2185.7 nm | 185 nm | 20 m |
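The four channels in the table above come at two native resolutions: 10 m for band 4 and 20 m for bands 8A, 11 and 12. Before they can be fed to a segmentation network as one input tensor, they have to share a grid. A minimal sketch, assuming the rasters are already co-registered NumPy arrays and that the 10 m band has exactly twice as many rows and columns as the 20 m bands, averages each 2×2 block of band 4 and stacks the result with the other three bands. The helper names `downsample_2x_mean` and `stack_to_20m` are hypothetical, and mean pooling to 20 m is an assumed choice rather than the authors' documented preprocessing; resampling the 20 m bands up to 10 m would be an equally reasonable alternative.

```python
import numpy as np


def downsample_2x_mean(band_10m: np.ndarray) -> np.ndarray:
    """Average each non-overlapping 2x2 block of a 10 m band to get a 20 m band."""
    rows, cols = band_10m.shape
    if rows % 2 or cols % 2:
        raise ValueError("10 m band must have an even number of rows and columns")
    # Reshape to (rows/2, 2, cols/2, 2): axes 1 and 3 index the pixels inside each block.
    blocks = band_10m.reshape(rows // 2, 2, cols // 2, 2)
    return blocks.mean(axis=(1, 3))


def stack_to_20m(b4_10m, b8a_20m, b11_20m, b12_20m) -> np.ndarray:
    """Hypothetical helper: stack bands 4, 8A, 11 and 12 into a (4, H, W) array on the 20 m grid.

    Assumes co-registered rasters where the 10 m band covers exactly twice
    as many rows and columns as each 20 m band.
    """
    b4_20m = downsample_2x_mean(np.asarray(b4_10m, dtype=np.float32))
    bands_20m = [np.asarray(b, dtype=np.float32) for b in (b8a_20m, b11_20m, b12_20m)]
    for b in bands_20m:
        if b.shape != b4_20m.shape:
            raise ValueError(f"shape mismatch: {b.shape} vs {b4_20m.shape}")
    return np.stack([b4_20m, *bands_20m], axis=0)


# Toy usage: a 4x4 "10 m" band and 2x2 "20 m" bands.
b4 = np.arange(16, dtype=np.float32).reshape(4, 4)
other = np.ones((2, 2), dtype=np.float32)
cube = stack_to_20m(b4, other, other, other)
print(cube.shape)  # (4, 2, 2); band 4 block means are 2.5, 4.5, 10.5 and 12.5
```

The reshape-and-average pattern pools non-overlapping blocks without an explicit loop; it only works when both dimensions are even, which is why the function checks that first.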

| Classified / Reference | Active Fire | Background |
|---|---|---|
| Active Fire | TP | FP |
| Background | FN | TN |
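Reading the confusion matrix above with rows as the classified label and columns as the reference label, omission error (OE), commission error (CE) and intersection over union (IoU) for the active-fire class can be written as OE = FN/(TP + FN), CE = FP/(TP + FP) and IoU = TP/(TP + FP + FN). These standard definitions are assumed here; they are consistent with the OE, CE and IoU values reported in the results table. The sketch below counts the four cells from a predicted and a reference binary mask with NumPy. `confusion_counts` and `fire_metrics` are hypothetical helper names, and the values returned when a denominator is zero are an illustrative convention.

```python
import numpy as np


def confusion_counts(pred: np.ndarray, ref: np.ndarray) -> dict:
    """Count TP, FP, FN and TN for binary masks (True/1 = active fire)."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    if pred.shape != ref.shape:
        raise ValueError("prediction and reference masks must have the same shape")
    return {
        "TP": int(np.sum(pred & ref)),    # classified fire, reference fire
        "FP": int(np.sum(pred & ~ref)),   # classified fire, reference background
        "FN": int(np.sum(~pred & ref)),   # classified background, reference fire
        "TN": int(np.sum(~pred & ~ref)),  # classified background, reference background
    }


def fire_metrics(pred: np.ndarray, ref: np.ndarray) -> dict:
    """Omission error, commission error and IoU for the active-fire class."""
    c = confusion_counts(pred, ref)
    tp, fp, fn = c["TP"], c["FP"], c["FN"]
    # Assumed convention for empty denominators (e.g. a tile with no fire at all).
    oe = fn / (tp + fn) if tp + fn else 0.0
    ce = fp / (tp + fp) if tp + fp else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    return {"OE": oe, "CE": ce, "IoU": iou}


ref = np.array([[1, 1, 0], [0, 1, 0], [0, 0, 0]])   # toy reference fire mask
pred = np.array([[1, 0, 0], [0, 1, 1], [0, 0, 0]])  # toy classified fire mask
print(confusion_counts(pred, ref))  # {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 5}
print(fire_metrics(pred, ref))      # OE = CE = 1/3, IoU = 0.5
```

Because TN never enters OE, CE or IoU, these metrics are insensitive to the very large number of background pixels in a scene, which is why they suit a rare class such as active fire better than overall accuracy.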

| Model | Backbone | OE (a) | CE (a) | IoU (a) | OE (b) | CE (b) | IoU (b) |
|---|---|---|---|---|---|---|---|
| DeepLabV3+ | Xception-71 | 7.6% | 28.1% | 67.8% | 9.1% | 25.3% | 69.5% |
| HRNetV2 | HRNetV2-W48 | 18.5% | 14.2% | 71.8% | 19.0% | 11.5% | 73.2% |
| DCPA+HRNetV2 | HRNetV2-W48 | 17.3% | 13.1% | 73.4% | 17.4% | 9.2% | 76.2% |
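If OE, CE and IoU in the table above are all computed from one pooled confusion matrix with the standard definitions (OE = FN/(TP + FN), CE = FP/(TP + FP), IoU = TP/(TP + FP + FN)), then IoU is fixed by the two error rates. Writing R = TP + FN gives TP = (1 − OE)R, FN = OE·R and FP = TP·CE/(1 − CE), so IoU = (1 − OE)(1 − CE)/(1 − OE·CE). The short check below is a sketch under that pooling assumption, not a procedure taken from the paper; it applies the identity to the reported percentages. Each implied IoU falls within about 0.15 percentage points of the tabulated value, a gap small enough to be plausibly explained by rounding the inputs to one decimal place.

```python
# Reported (OE %, CE %, IoU %) for the "a" and "b" column groups of the results table.
REPORTED = {
    ("DeepLabV3+", "a"): (7.6, 28.1, 67.8),
    ("DeepLabV3+", "b"): (9.1, 25.3, 69.5),
    ("HRNetV2", "a"): (18.5, 14.2, 71.8),
    ("HRNetV2", "b"): (19.0, 11.5, 73.2),
    ("DCPA+HRNetV2", "a"): (17.3, 13.1, 73.4),
    ("DCPA+HRNetV2", "b"): (17.4, 9.2, 76.2),
}


def iou_from_errors(oe: float, ce: float) -> float:
    """IoU implied by omission and commission error, both given as fractions in [0, 1).

    With R = TP + FN: TP = (1 - OE) R, FN = OE R, FP = TP CE / (1 - CE),
    hence IoU = TP / (TP + FP + FN) = (1 - OE)(1 - CE) / (1 - OE * CE).
    """
    return (1.0 - oe) * (1.0 - ce) / (1.0 - oe * ce)


for (model, col), (oe, ce, iou) in REPORTED.items():
    implied = 100.0 * iou_from_errors(oe / 100.0, ce / 100.0)
    print(f"{model:13s} {col}: reported IoU {iou:.1f}%, implied {implied:.2f}%")
```

The identity also makes the trade-off in the table explicit: DeepLabV3+ has the lowest omission error but its much higher commission error pulls its IoU below both HRNetV2 variants.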

| East Coast of Australia | ||||

| Acquisition Date | 2020-01-15 | 2020-01-25 | 2020-01-28 | 2020-01-30 |

| Top Left a | −35.5, 148.7 | −37.3, 148.5 | −36.3, 147.6 | −34.8, 148.7 |

| Top Right a | −35.5, 150.2 | −37.3, 149.2 | −36.3, 148.7 | −34.8, 150.6 |

| Bottom Left a | −36.8, 148.7 | −37.8, 148.5 | −36.9, 147.6 | −37.6, 148.7 |

| Bottom Right a | −36.8, 150.2 | −37.8, 149.2 | −36.9, 148.7 | −37.6, 150.6 |

| IoU | 71.4% | 71.1% | 70.2% | 68.7% |

| Time Cost | 358 s | 105 s | 133 s | 839 s |

| West Coast of the United States | ||||

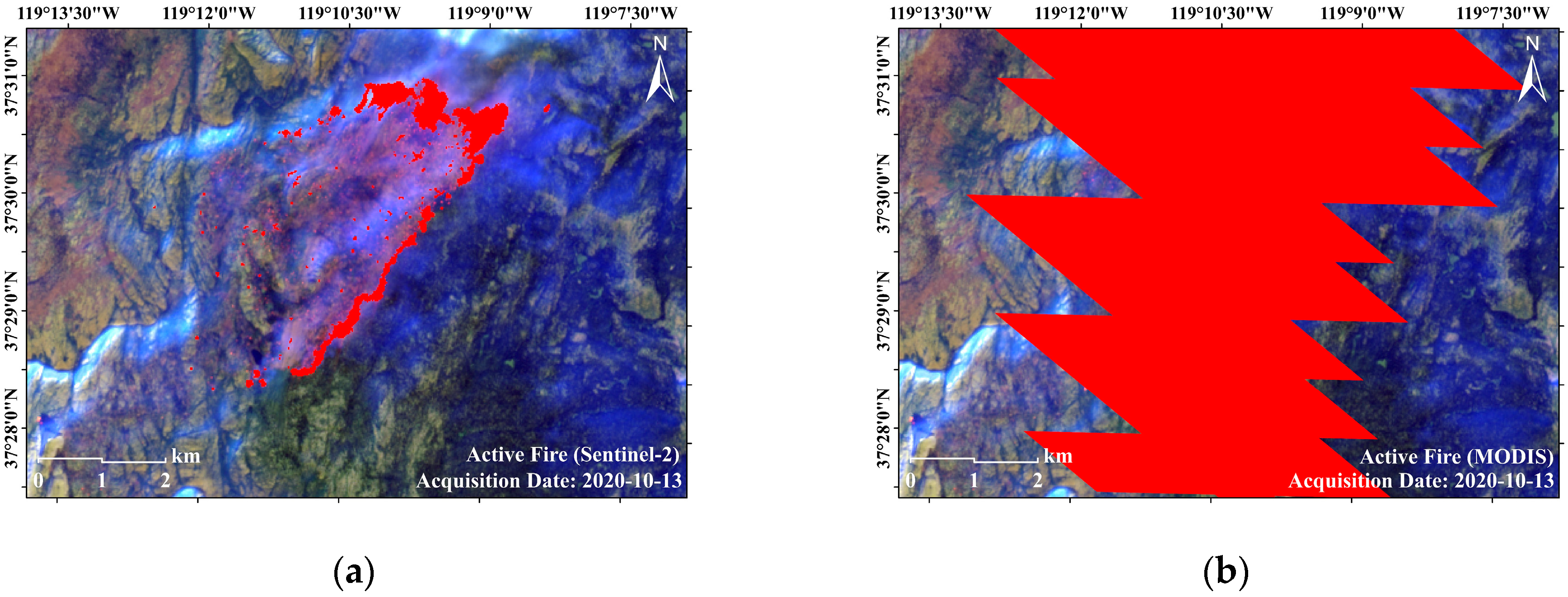

| Acquisition Date | 2020-10-13 | 2020-10-18 | 2020-10-28 | 2020-10-29 |

| Top Left a | 37.2, −119.4 | 37.6, −118.9 | 37.6, −119.4 | 41.9, −123.7 |

| Top Right a | 37.2, −118.6 | 37.6, −118.2 | 37.6, −118.9 | 41.9, −123.3 |

| Bottom Left a | 36.7, −119.4 | 37.3, −118.9 | 37.3, −119.4 | 41.0, −123.7 |

| Bottom Right a | 36.7, −118.6 | 37.3, −118.2 | 37.6, −118.9 | 41.0, −123.3 |

| IoU | 72.6% | 71.3% | 73.0% | 70.8% |

| Time Cost | 58 s | 51 s | 46 s | 132 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Q.; Ge, L.; Zhang, R.; Metternicht, G.I.; Liu, C.; Du, Z. Towards a Deep-Learning-Based Framework of Sentinel-2 Imagery for Automated Active Fire Detection. Remote Sens. 2021, 13, 4790. https://doi.org/10.3390/rs13234790
