Seamless Vehicle Positioning by Lidar-GNSS Integration: Standalone and Multi-Epoch Scenarios

Abstract

1. Introduction

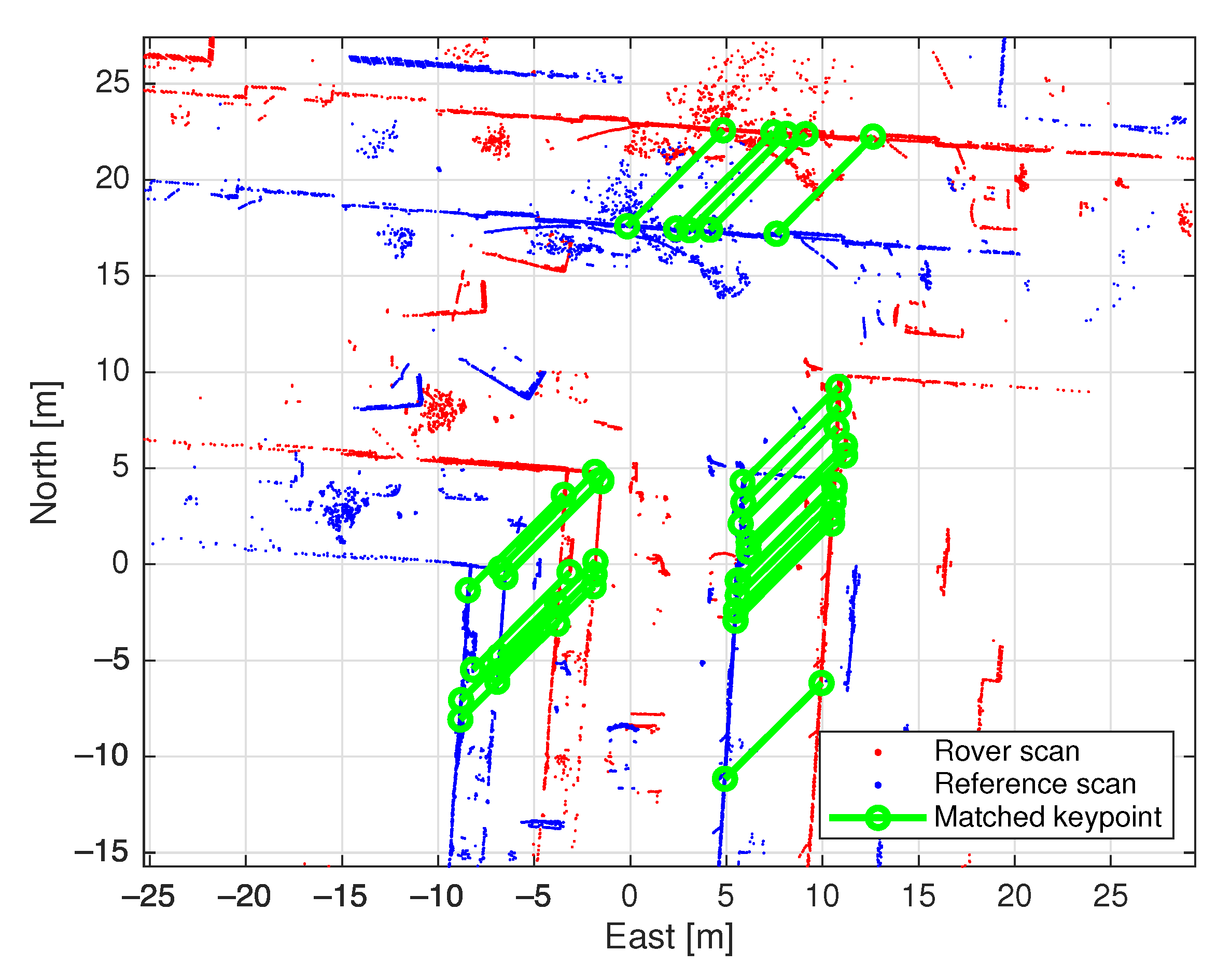

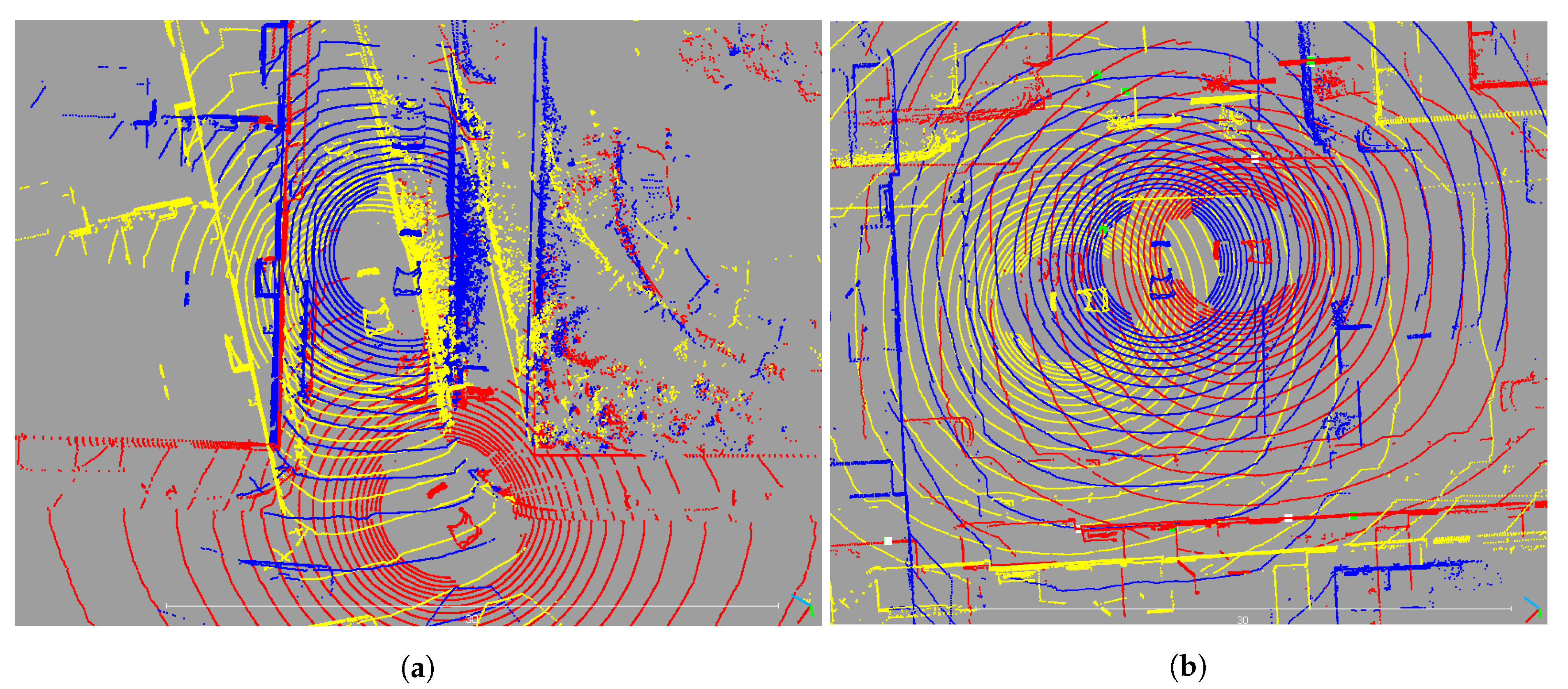

2. Lidar Positioning by Point Cloud Registration

2.1. HD Map Definition

2.2. Deep Learning Model Training and Inference

2.3. Estimating Vehicle Position
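Matched keypoints can be turned into a vehicle position with a common, generic recipe. What follows is a sketch under stated assumptions, not the authors' exact procedure. It assumes the deep learning model has already produced putative keypoint correspondences between the current lidar scan (sensor frame) and the HD map (map frame). It then fits a rigid transform with a RANSAC loop around a least-squares (Kabsch/SVD) solution. The function names `kabsch` and `ransac_rigid`, the 0.3 m inlier threshold and the 500 iterations are hypothetical choices.

```python
import numpy as np


def kabsch(src, dst):
    """Least-squares rigid fit: R, t minimising sum |R @ src_i + t - dst_i|^2."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # -1 flips the last axis to avoid a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c


def ransac_rigid(src, dst, thresh=0.3, iters=500, seed=0):
    """RANSAC over minimal 3-point samples, then a refit on the best inlier set.

    src: (N, 3) scan keypoints in the sensor frame (hypothetical input);
    dst: (N, 3) matched HD-map keypoints in the map frame; row i is one match.
    """
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = kabsch(src[idx], dst[idx])
        inliers = np.linalg.norm(src @ R.T + t - dst, axis=1) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 3:
        raise ValueError("too few inlier matches: treat as a keypoint-matching failure")
    R, t = kabsch(src[best], dst[best])          # refit on all inliers
    return R, t, best                            # t = sensor origin in the map frame
```

Because the scan keypoints are expressed in the sensor frame, the returned translation `t` is the sensor position in the map frame. Raising an error when too few inliers survive is one way to model an epoch in which keypoint matching fails.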

3. EKF Formulation via a Mixed Measurement Model

3.1. Lidar and GNSS Observation Equations

3.2. Filter Setup

3.3. Filter Time-Update

3.4. Filter Measurement-Update
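A generic mixed-measurement EKF step can be sketched as follows. This illustration rests on simplifying assumptions and does not reproduce the paper's model. The state is reduced to position plus receiver clock bias, with a random-walk time update. GNSS enters as code pseudoranges, and the lidar is assumed to contribute a direct 3D position fix. The paper's lidar observation equations and the Jacobians of Appendix A may be formulated differently. The function names, noise values and state layout are hypothetical.

```python
import numpy as np


def time_update(x, P, q, dt):
    """Random-walk prediction (assumed model): state carried over, covariance grows."""
    return x.copy(), P + np.diag(q) * dt


def measurement_update(x, P, sats, pr, sigma_pr, lidar_pos=None, sigma_lidar=0.1):
    """EKF update with GNSS pseudoranges and, if available, a lidar position fix.

    State x = [X, Y, Z, c*dt] in metres; sats is (m, 3), pr is (m,).
    """
    p, cdt = x[:3], x[3]
    ranges = np.linalg.norm(sats - p, axis=1)
    # GNSS rows: h(x) = |s_i - p| + c*dt, Jacobian row [(p - s_i)/|s_i - p|, 1]
    z, h = [pr], [ranges + cdt]
    H = [np.hstack([(p - sats) / ranges[:, None], np.ones((len(pr), 1))])]
    var = [np.full(len(pr), sigma_pr**2)]
    if lidar_pos is not None:  # skipped at epochs where keypoint matching fails
        # Lidar rows (assumed here): h(x) = p, Jacobian [I_3, 0]
        z.append(lidar_pos)
        h.append(p)
        H.append(np.hstack([np.eye(3), np.zeros((3, 1))]))
        var.append(np.full(3, sigma_lidar**2))
    z, h, H = np.concatenate(z), np.concatenate(h), np.vstack(H)
    S = H @ P @ H.T + np.diag(np.concatenate(var))  # innovation covariance
    K = np.linalg.solve(S, H @ P).T                 # gain P H^T S^-1 (S, P symmetric)
    x_new = x + K @ (z - h)
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new
```

Calling `measurement_update` with `lidar_pos=None` reduces it to a GNSS-only update. A filter of this kind can use that path to keep producing solutions at epochs where keypoint matching fails.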

4. Experimental Setup and Results

- Lidar keypoint matching success rate is defined as the proportion of the epochs with successfully identified corresponding keypoints which contribute to lidar measurements;

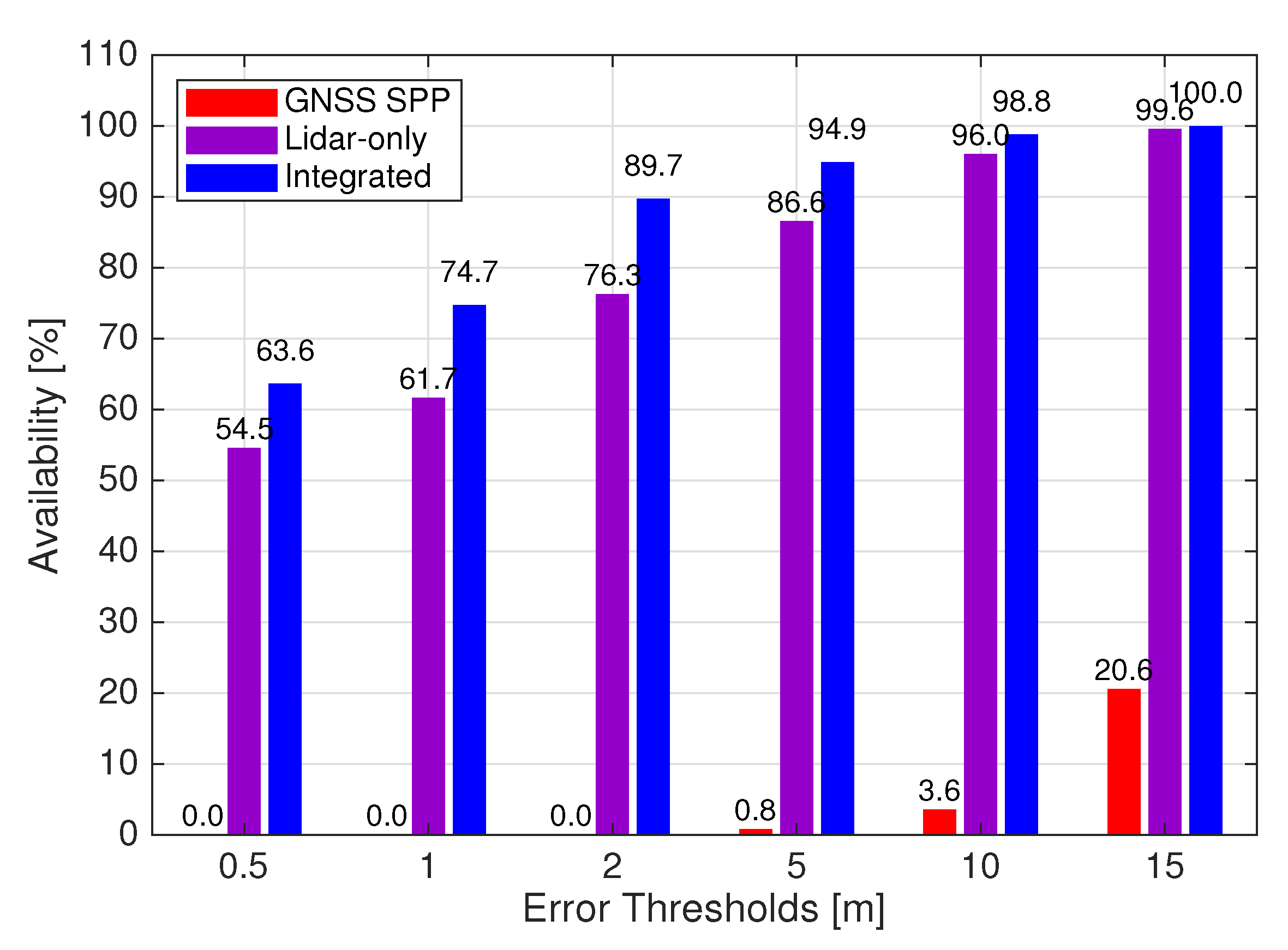

- Availability is defined as the proportion of the epochs with positioning solutions under a specified error threshold;

- Accuracy is measured by the Root Mean Squared Error (RMSE) of the offsets of the positioning solutions from the ground truth.

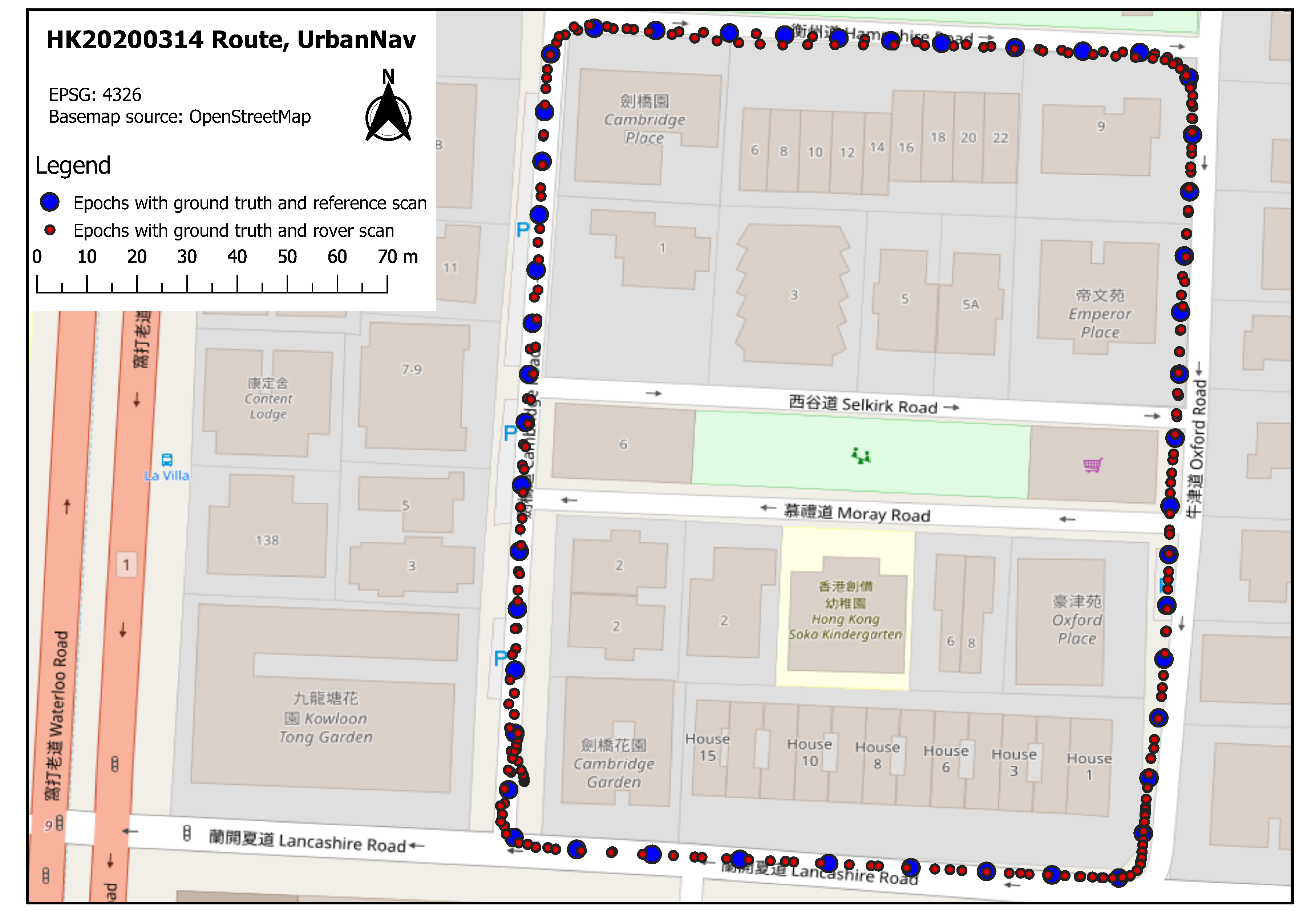
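The three metrics above can be computed from per-epoch results roughly as follows. The sketch assumes positions in a local east-north-up frame, so the first two components give the 2D error. Epochs without a solution are NaN rows. RMSE is taken only over epochs that have a solution, and availability is judged on the 3D error. The function name `evaluate` and the 1 m default threshold are hypothetical, because the threshold used in the paper is not stated here.

```python
import numpy as np


def evaluate(est, truth, matched, threshold=1.0):
    """Score per-epoch solutions against ground truth.

    est, truth: (N, 3) arrays in an assumed local ENU frame [m]; NaN rows in
    `est` mark epochs without a positioning solution.
    matched: (N,) bools, True where lidar keypoint matching succeeded.
    """
    err = est - truth
    has_sol = ~np.isnan(err).any(axis=1)
    e2d = np.linalg.norm(err[has_sol, :2], axis=1)  # horizontal (E, N) error
    e3d = np.linalg.norm(err[has_sol], axis=1)      # full 3D error
    return {
        "match_rate": matched.mean(),                            # success rate
        "availability": (e3d < threshold).sum() / len(est),     # over all epochs
        "rmse_2d": np.sqrt(np.mean(e2d**2)),
        "rmse_3d": np.sqrt(np.mean(e3d**2)),
        "min_3d": e3d.min(),
        "max_3d": e3d.max(),
    }
```

Availability is divided by the total number of epochs, so epochs without any solution count against it. This matches the definition above.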

4.1. Experimental Setup

- Velodyne HDL-32E lidar sensor;

- Xsens MTi-10 IMU;

- u-blox M8T GNSS receiver;

- RGB camera;

- SPAN-CPT GNSS-RTK/INS integrated system.

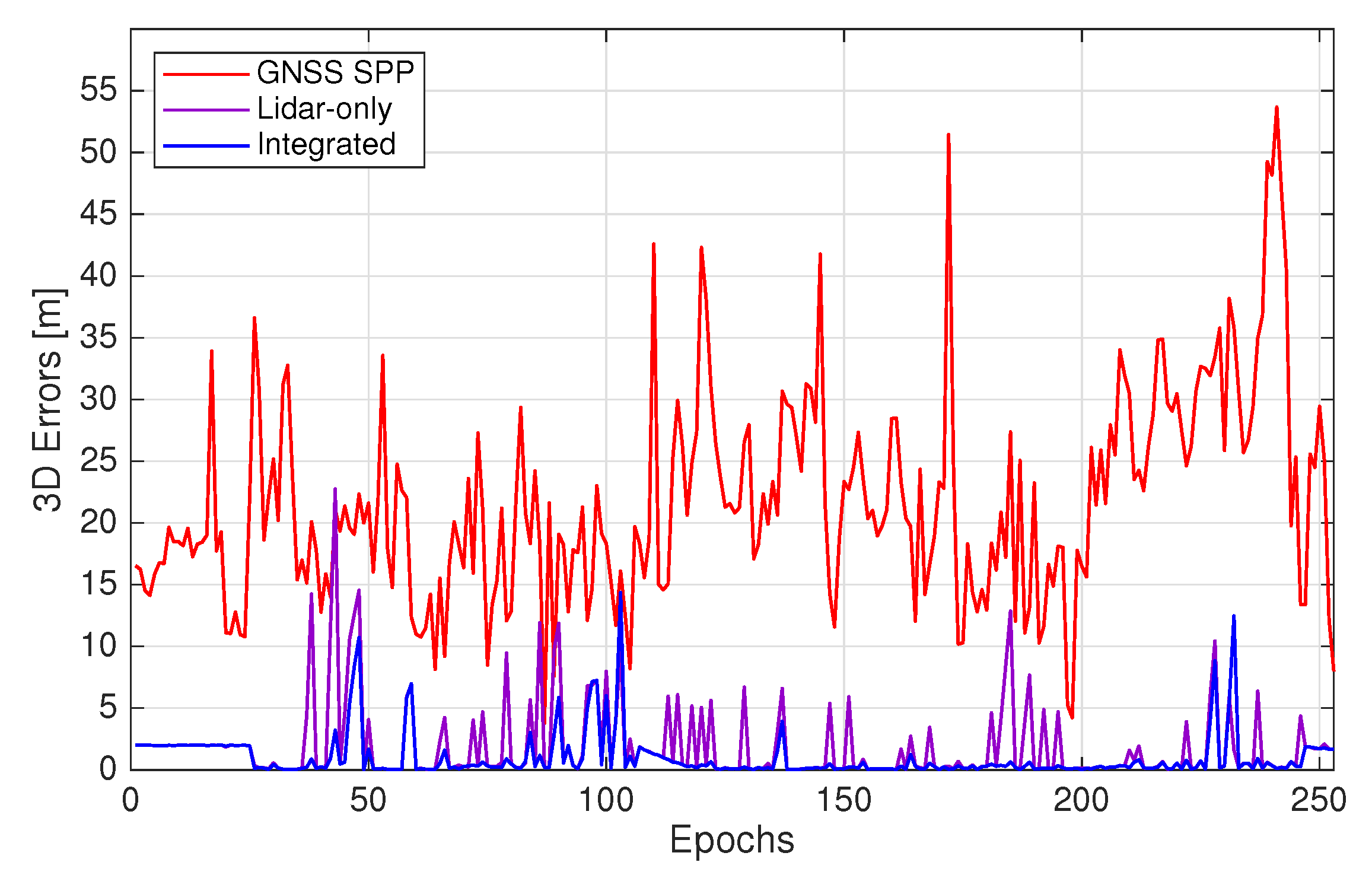

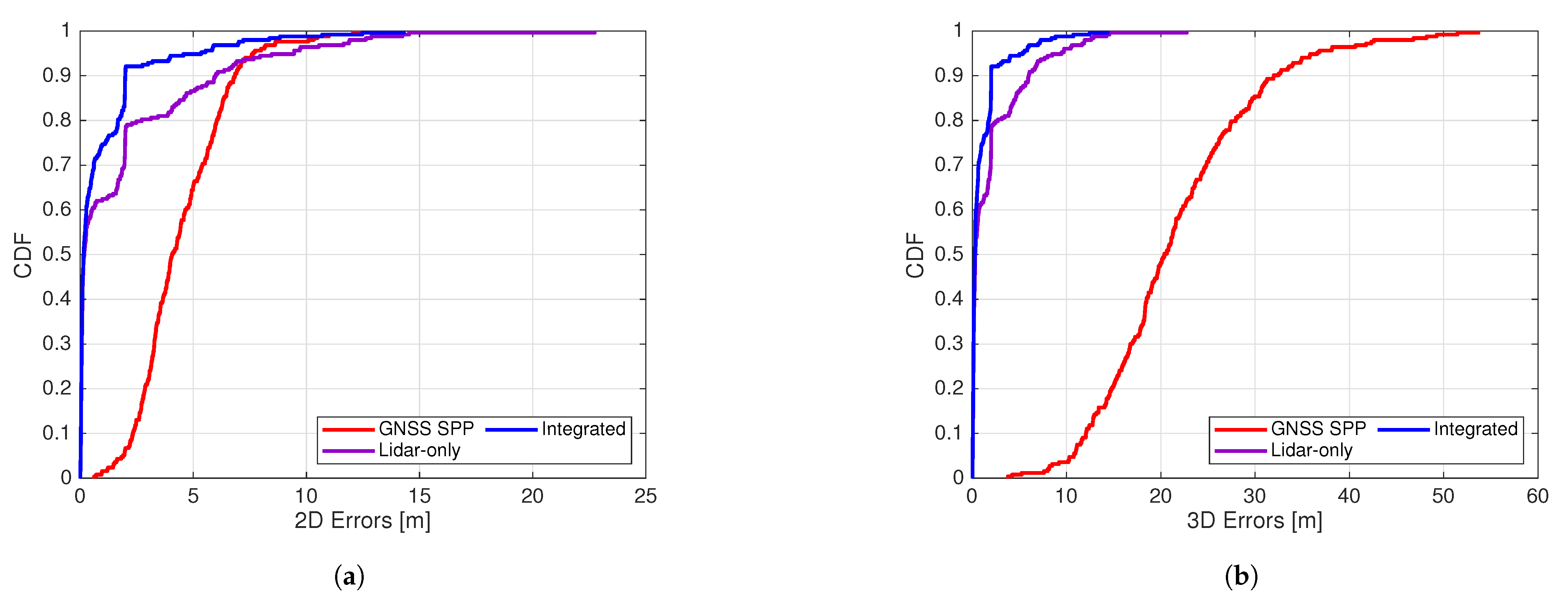

4.2. Positioning Results under Ideal Lidar Conditions

4.3. Positioning Results in Realistic Environments

5. Discussion

5.1. Significant GNSS Code Errors

5.2. Keypoint Matching Errors and Failure

5.3. Accuracy and Availability Improvements Brought by the Integration

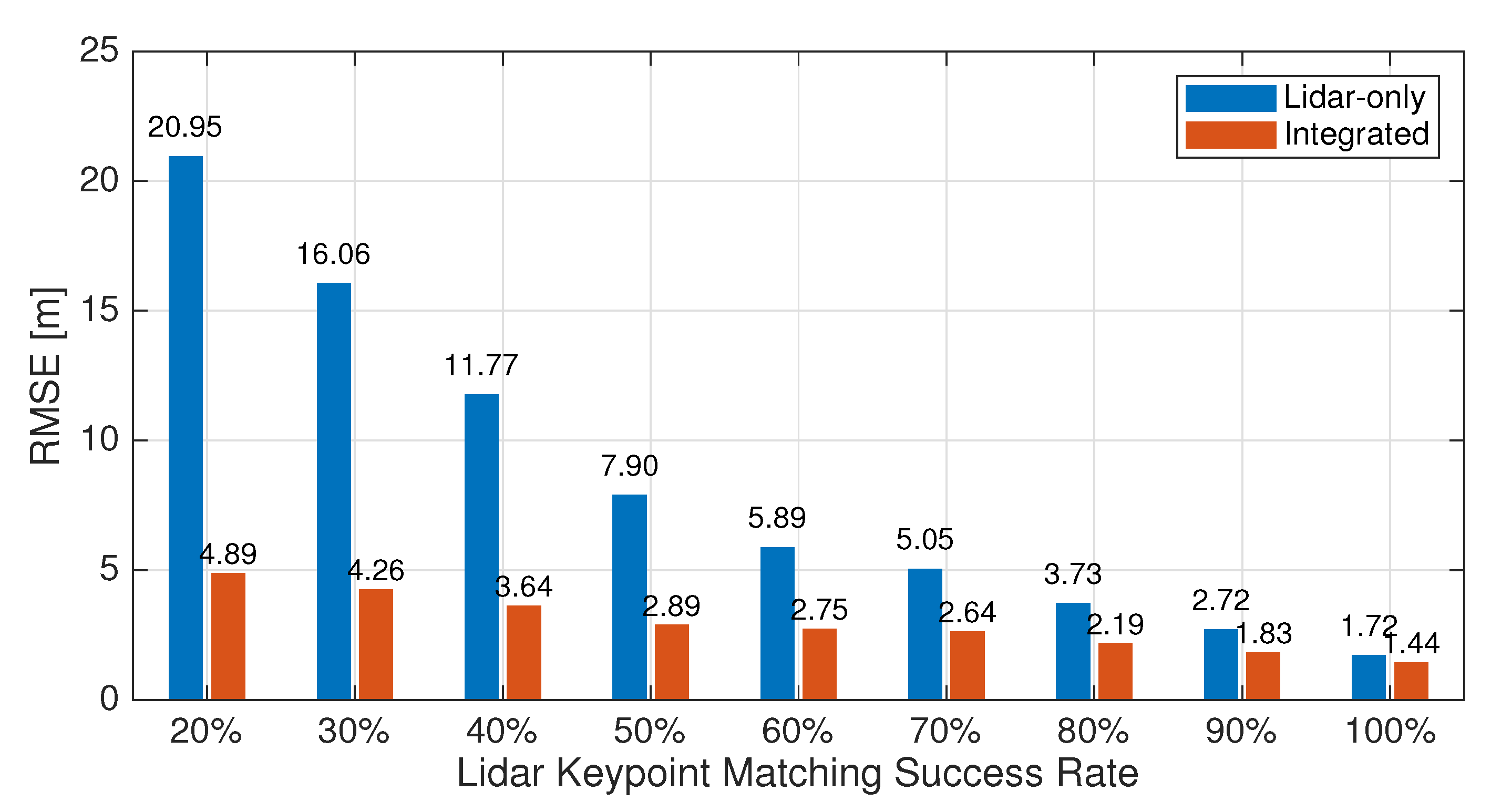

5.4. Keypoint Matching Success Rate Simulation and Comparison

5.5. Runtime Efficiency

6. Conclusions

- Lidar measurements for positioning are generated by deep-learning-based point cloud registration against a pre-built HD map;

- It was demonstrated that the proposed positioning approach (Integrated) can achieve centimeter- to meter-level 3D accuracy throughout the entire drive in densely built-up urban environments, where GNSS code measurements are of low accuracy and standalone lidar positioning may not always be available;

- When the keypoint matching success rate is low, as can be expected in realistic scenarios, the proposed Integrated approach provides the best accuracy of the three approaches compared while maintaining 100% availability of positioning solutions.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EKF | Extended Kalman Filter |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| HD | High Definition |
| ICP | Iterative Closest Point |
| IMU | Inertial Measurement Unit |
| Lidar | Light Detection and Ranging |
| RANSAC | Random Sample Consensus |
| RMSE | Root Mean Squared Error |
| RTK | Real-Time Kinematic |
| SLAM | Simultaneous Localization and Mapping |
| SPP | Standard Point Positioning |
| UERE | User Equivalent Range Error |
| WLS | Weighted Least-Squares |

Appendix A. Jacobian Matrices of the Mixed Models (6) and (11)


Lidar keypoint matching success rate = 100%:

| Approach | 2D RMSE [m] | 3D RMSE [m] | Min. 3D Error [m] | Max. 3D Error [m] |
|---|---|---|---|---|
| GNSS SPP | 4.888 | 23.197 | 3.770 | 53.685 |
| Lidar-only | 1.671 | 1.716 | 0.011 | 10.444 |
| Integrated | 1.423 | 1.445 | 0.014 | 8.831 |

Lidar keypoint matching success rate = 80%:

| Approach | 2D RMSE [m] | 3D RMSE [m] | Min. 3D Error [m] | Max. 3D Error [m] |
|---|---|---|---|---|
| GNSS SPP | 4.888 | 23.197 | 3.770 | 53.685 |
| Lidar-only | 5.024 | 5.050 | 0.019 | 22.748 |
| Integrated | 2.168 | 2.187 | 0.019 | 14.359 |
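The two tables can be compared directly by computing the relative 3D RMSE reduction of the Integrated solution. The short script below uses only the tabulated values. The helper name `reduction` is hypothetical.

```python
# 3D RMSE values [m] copied from the two tables above, keyed by success rate [%]
RMSE_3D = {
    100: {"GNSS SPP": 23.197, "Lidar-only": 1.716, "Integrated": 1.445},
    80: {"GNSS SPP": 23.197, "Lidar-only": 5.050, "Integrated": 2.187},
}


def reduction(ref: float, new: float) -> float:
    """Relative reduction of RMSE `new` with respect to `ref`, in percent."""
    return 100.0 * (ref - new) / ref


for rate, row in RMSE_3D.items():
    vs_lidar = reduction(row["Lidar-only"], row["Integrated"])
    vs_spp = reduction(row["GNSS SPP"], row["Integrated"])
    print(f"{rate}%: Integrated vs Lidar-only {vs_lidar:.1f}%, vs GNSS SPP {vs_spp:.1f}%")
```

Relative to Lidar-only, the reduction is about 15.8% at a 100% success rate and about 56.7% at 80%. Relative to GNSS SPP, it is 93.8% and 90.6%. The benefit of integration over standalone lidar is therefore largest when keypoint matching is less reliable.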

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Khoshelham, K.; Khodabandeh, A. Seamless Vehicle Positioning by Lidar-GNSS Integration: Standalone and Multi-Epoch Scenarios. Remote Sens. 2021, 13, 4525. https://doi.org/10.3390/rs13224525
