Learning to Identify Illegal Landfills through Scene Classification in Aerial Images

Abstract

1. Introduction

- Intra-class diversity: in our scenario, this corresponds to the variations of the type of garbage present in the scene (plastics, tires, wood, building material), of its disposition (scattered, collected in dumpsters, trucks, or sheds), as well as to the different geographical contexts (e.g., urban, rural).

- Inter-class similarity: this derives from the fact that the negative class represents all the “other” configurations of the territory (e.g., residential areas, sports campuses, open fields), some of which carry a high visual similarity with the positive class scenes (e.g., industrial districts, legal landfills, cemeteries).

- Object/scene variable scale: the detection of objects might need a varying degree of context (e.g., garbage stored in dumpsters vs. scattered in a large area). Therefore, the classifier should extract relevant features at different scales depending on the type of scene.

- Limited samples: collecting the ground truth is a difficulty in all supervised learning methods. In the addressed scenario, this problem is even more relevant due to the sensitivity of the domain, which may prevent the disclosure of open datasets.

- Cross-domain adaptation: as in all aerial image scene classification tasks, the evaluation of waste classification is limited by the use of training and testing data from the same domain (geographical region, acquisition device, employed sensor).

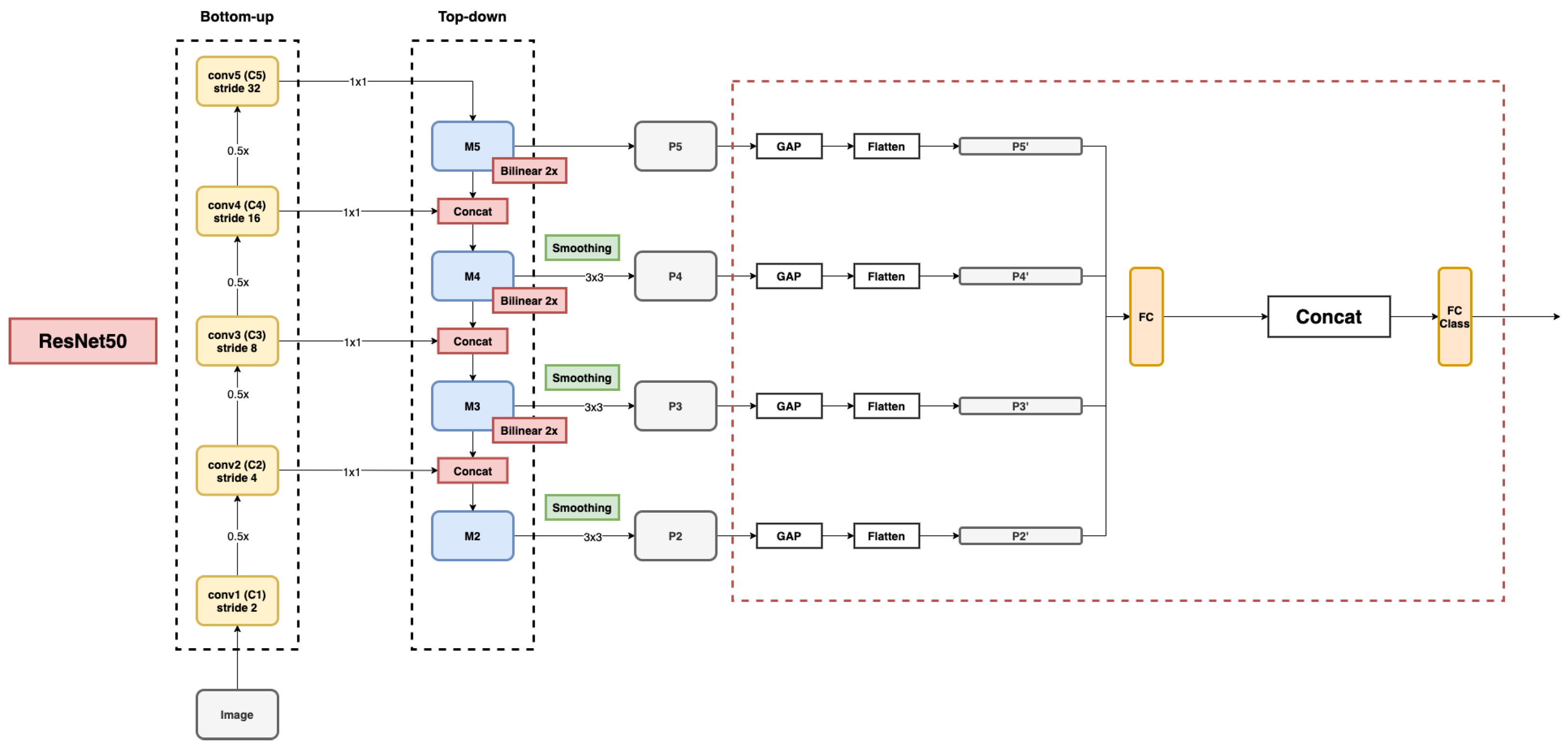

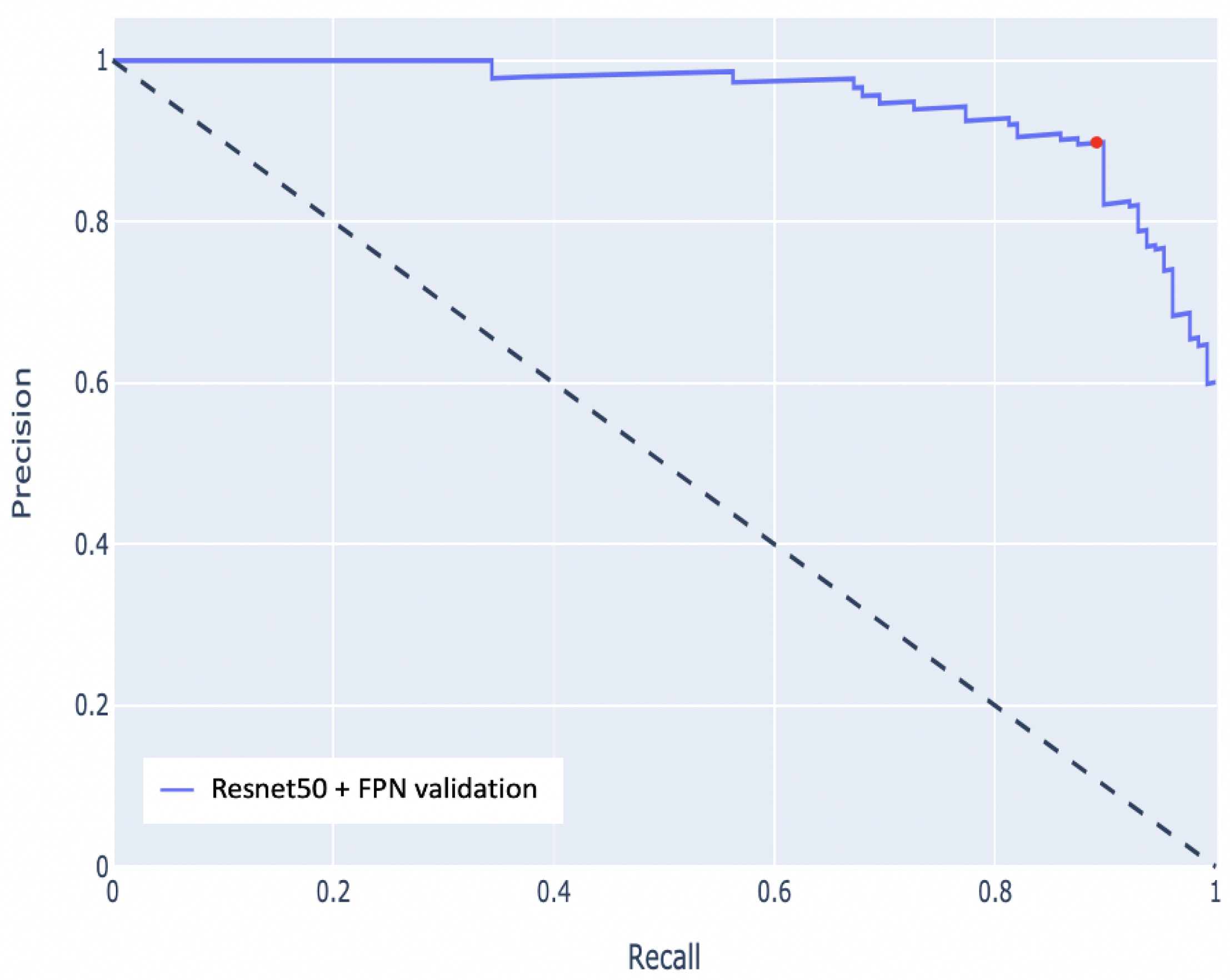

- We train a binary CNN classifier for the task of illegal landfill detection. The proposed architecture uses a ResNet50 backbone augmented with Feature Pyramid Network (FPN) links [16], a technique used in object detection tasks to improve the identification of items at different scales. We evaluate the architecture on a test set of 337 images. The classifier achieves 94.5% average precision and an 88.2% F1 score, with 88.6% precision at 87.7% recall. This result improves on the accuracy of object detection methods without requiring the manual creation of bounding boxes;

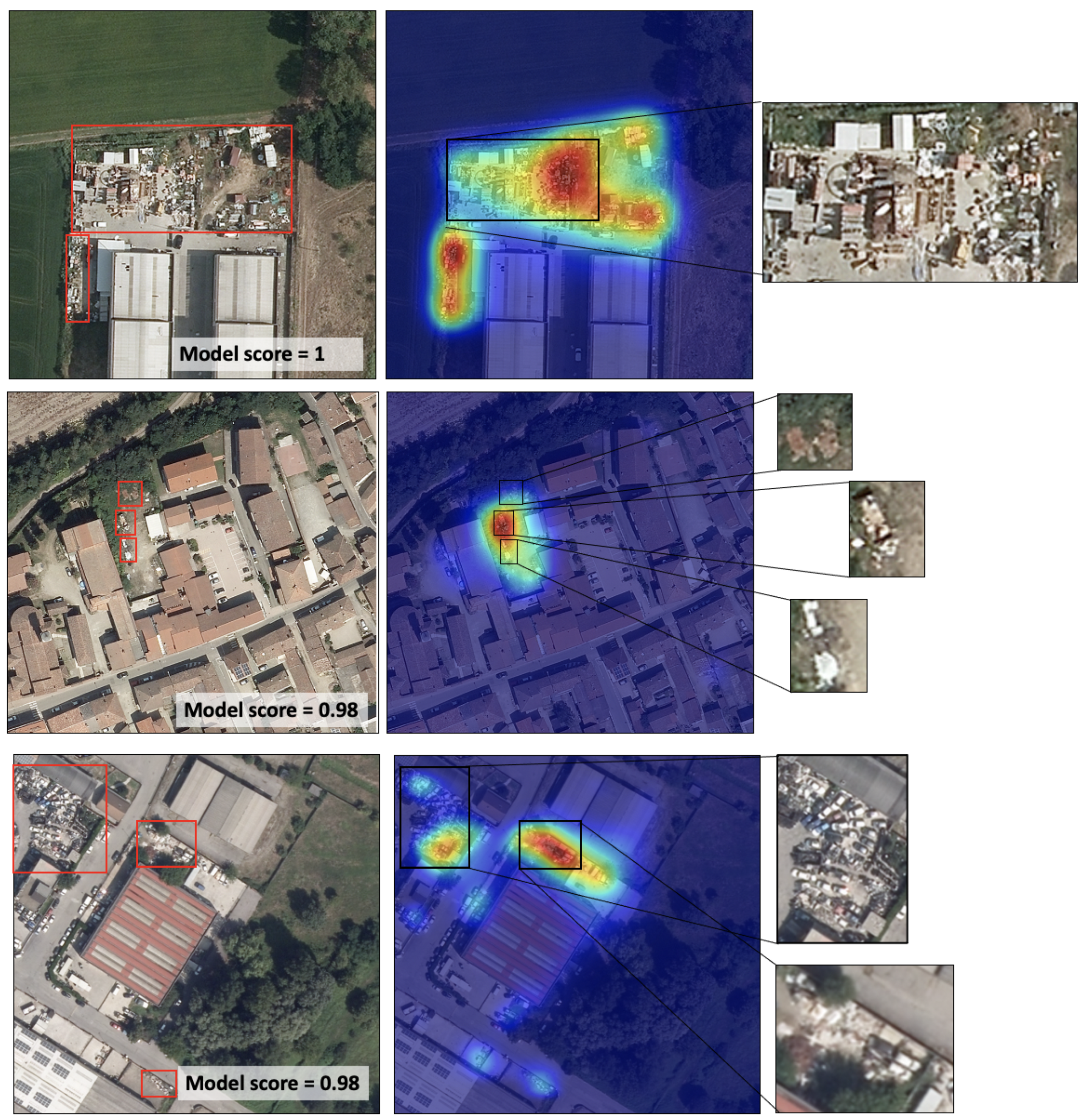

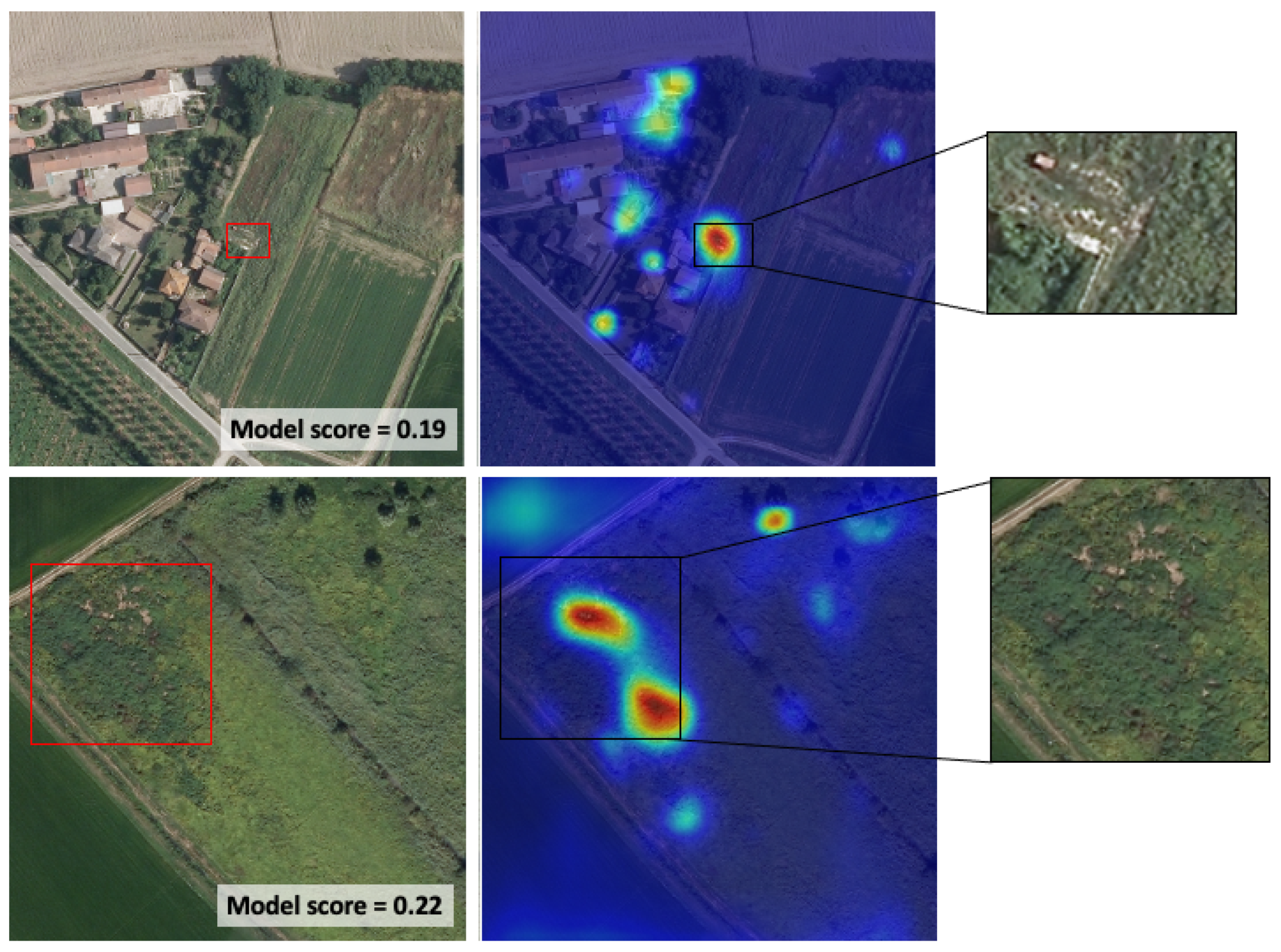

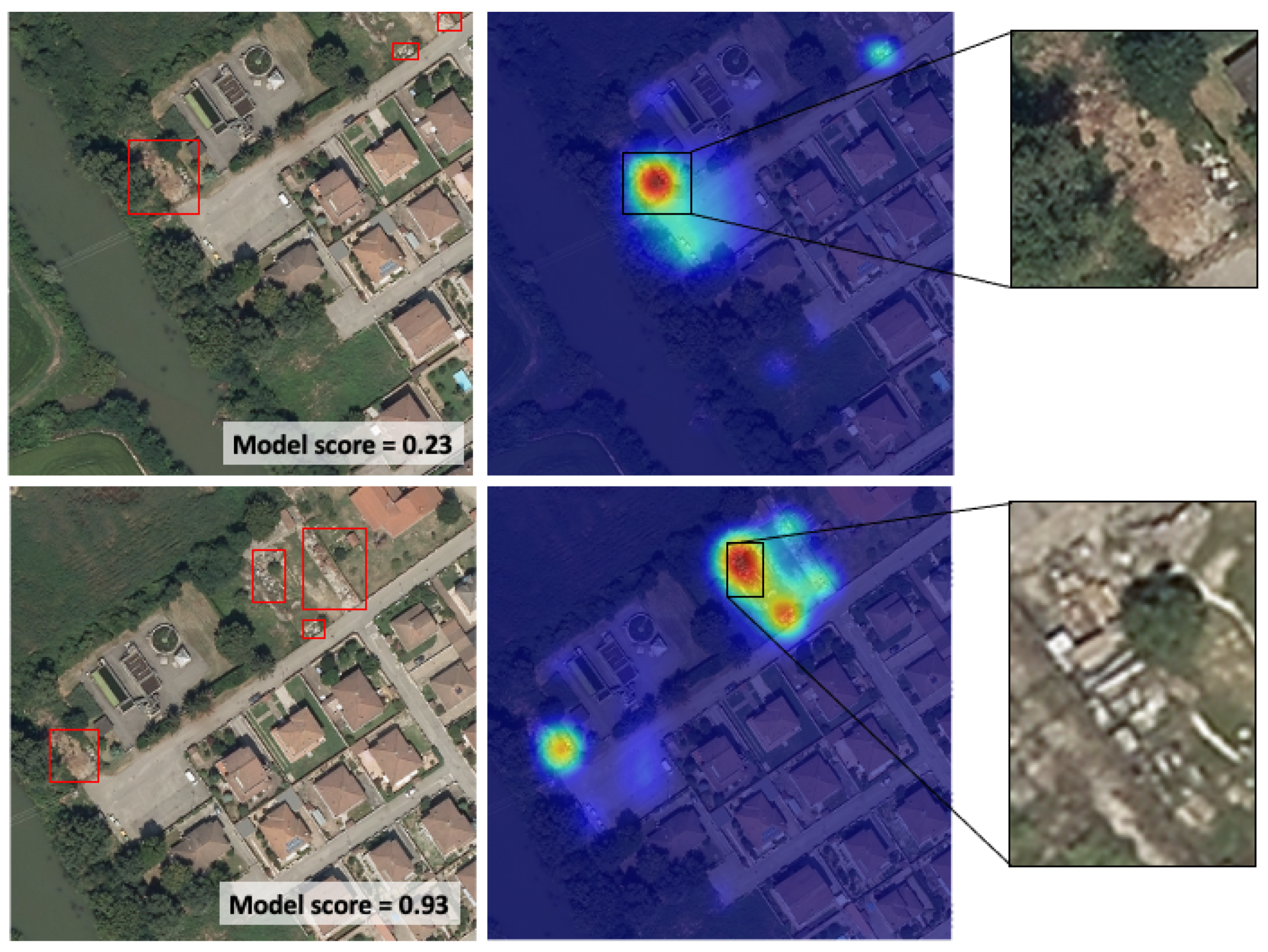

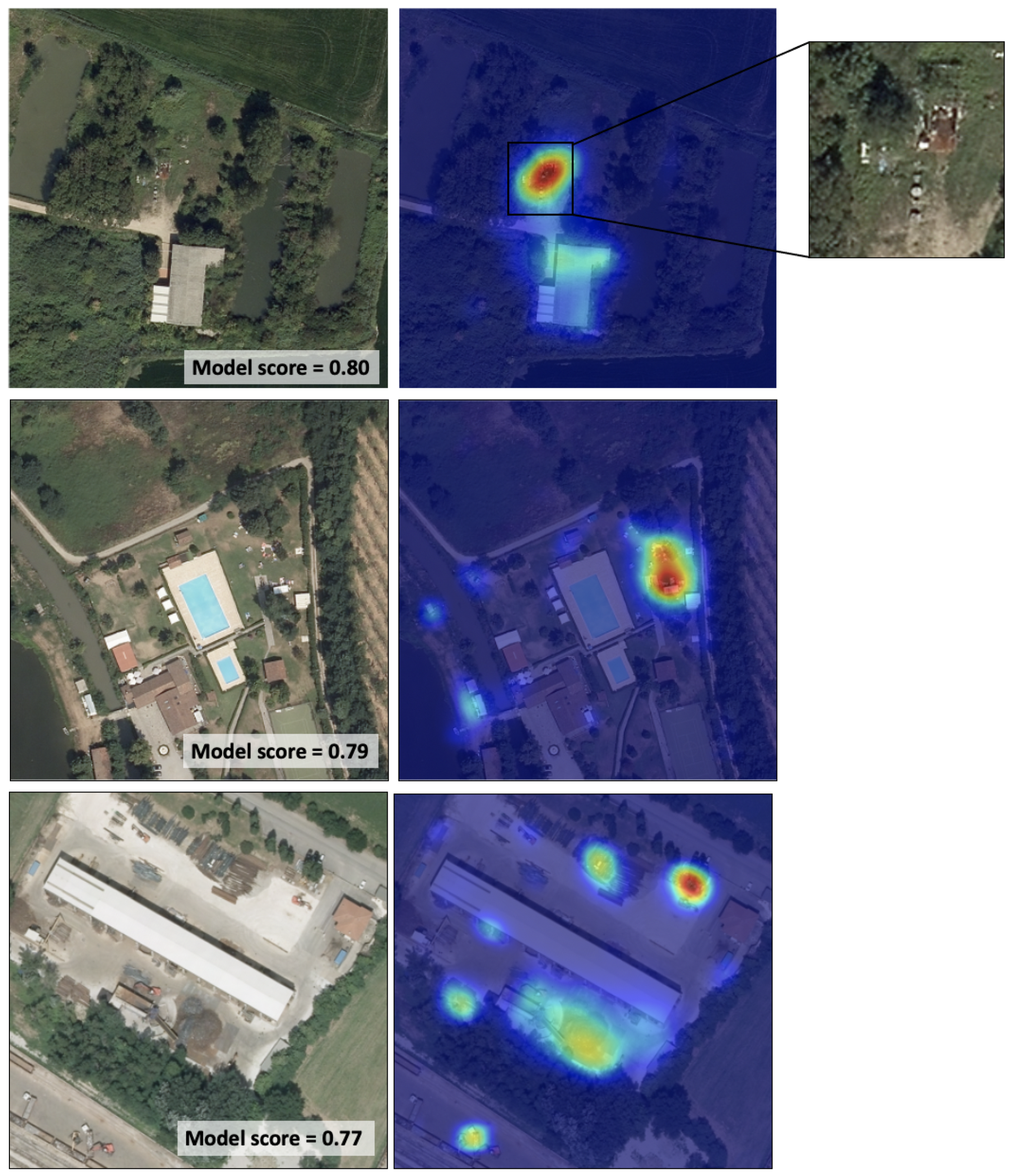

- We analyze the output of the classifier qualitatively using visual understanding and interpretability techniques, specifically Class Activation Maps (CAMs) [17]. This procedure identifies the representative image regions on which the classifier focuses its attention.
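As a rough illustration of the interpretability technique mentioned in the last item, the sketch below computes a class activation map in the formulation of [17]: the map for a class is the weighted sum of the final convolutional feature maps, using the weights that connect each globally average-pooled channel to that class. The feature maps and weights are toy values, and the function names `class_activation_map` and `normalize` are hypothetical. This is a sketch under those assumptions, not the authors' implementation, and applying it to an FPN-augmented network may require further choices that are not shown here.

```python
from typing import List

Matrix = List[List[float]]


def class_activation_map(feature_maps: List[Matrix], class_weights: List[float]) -> Matrix:
    """Weighted sum of K feature maps (each H x W), one weight per channel."""
    if len(feature_maps) != len(class_weights):
        raise ValueError("one weight per feature map is required")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


def normalize(cam: Matrix) -> Matrix:
    """Rescale to [0, 1] so the map can be overlaid on the image as a heatmap."""
    flat = [value for row in cam for value in row]
    low, high = min(flat), max(flat)
    span = high - low
    if span == 0:
        return [[0.0 for _ in row] for row in cam]
    return [[(value - low) / span for value in row] for row in cam]


# Toy example: two 2x2 feature maps and hypothetical class weights.
toy_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
toy_weights = [0.5, 1.5]
heatmap = normalize(class_activation_map(toy_maps, toy_weights))
```

In the toy example the bottom-right cell receives the largest weighted activation, so it is the region the heatmap highlights most strongly.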

2. Related Work

- Data: the input data to the landfill identification process can include structured data (e.g., cadastral and administrative databases), Geographic Information System (GIS) data (e.g., land use maps, road networks), remote sensing data (optical, multispectral, or hyperspectral), in particular unmanned aerial vehicle (UAV) images and videos, and street-level images and videos (e.g., from surveillance cameras).

- Time: data can represent a snapshot at a given time or a series acquired over a period.

- Output: the output depends on how the problem is specified. It can be formulated as a classification task over geographic locations or images, in which an observation is labeled based on the presence or absence of illegal landfills. Alternatively, it can be defined as a computer vision (CV) localization task (object detection, image semantic segmentation), in which the result is a bounding box or a mask indicating the region of the image that belongs to the illegal landfill area. Based on these formulations, the output can be a set of positive geographical locations or images, object bounding boxes, or image segmentation masks.

- Method: the methods can be manual, e.g., human interpretation of digital data, heuristic, or data-driven. In data-driven methods, the relevant features can be hand-crafted or learned from the data [40]. Data-driven methods in the cited works are primarily supervised and can be further distinguished based on their statistical learning approach (e.g., support vector machines (SVM), deep neural networks, CNNs).

- Range: studies can be small-range analyses, focusing on the in-depth investigation of a specific landfill or small region, or large-scale surveys over a broad geographical area.

- Validation: results can be validated qualitatively (e.g., by experts) or quantitatively with the aid of ground truth data (e.g., collections of images or geographic locations corresponding to known waste disposal sites).

2.1. Landfill and Waste Dump Detection from Remote Sensing Data

2.2. Landfill and Waste Dump Detection from GIS and Other Structured Data

2.3. Image Classification for Street-Level Visual Content

2.4. Deep Learning for RS Scene Classification

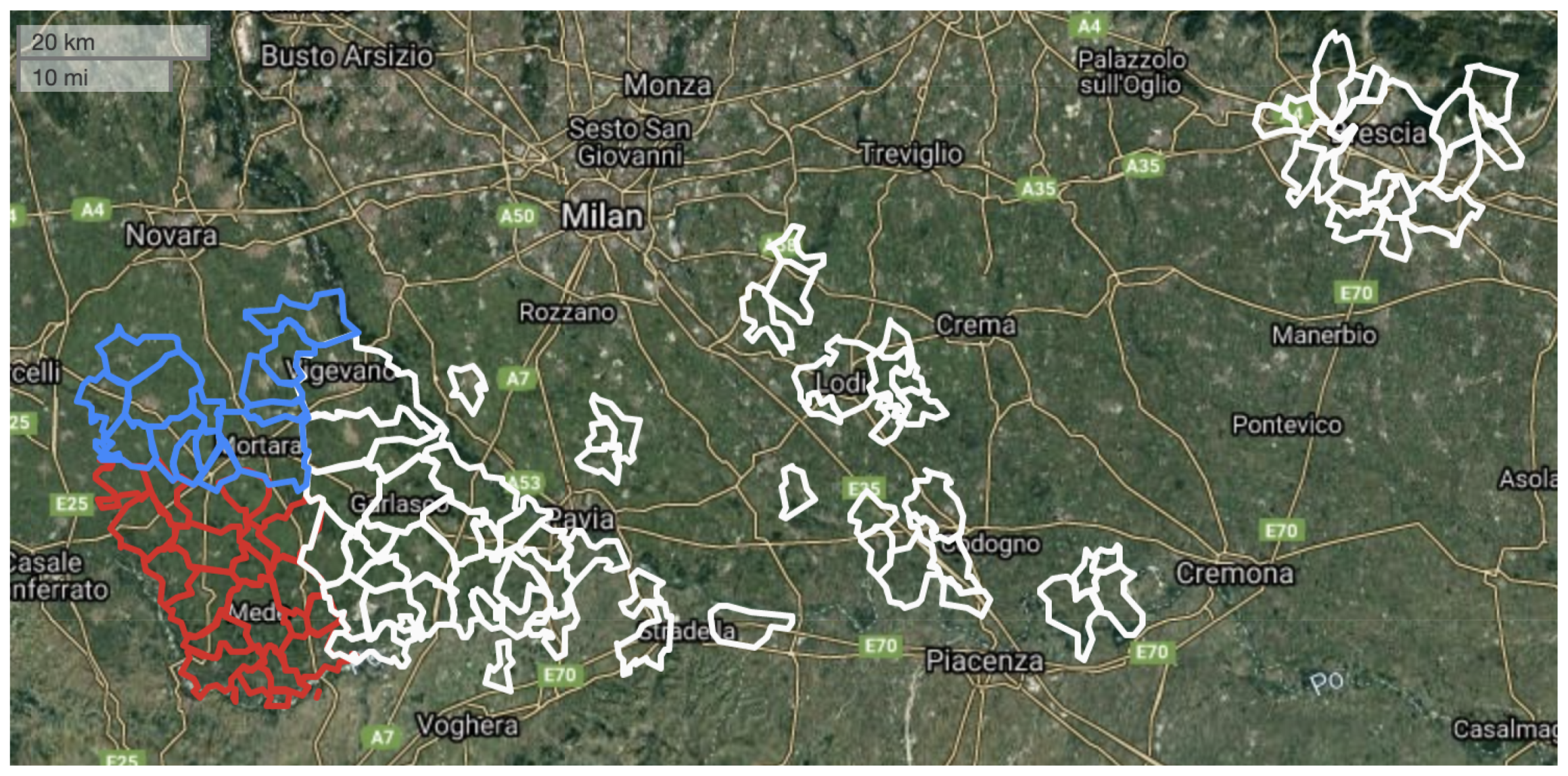

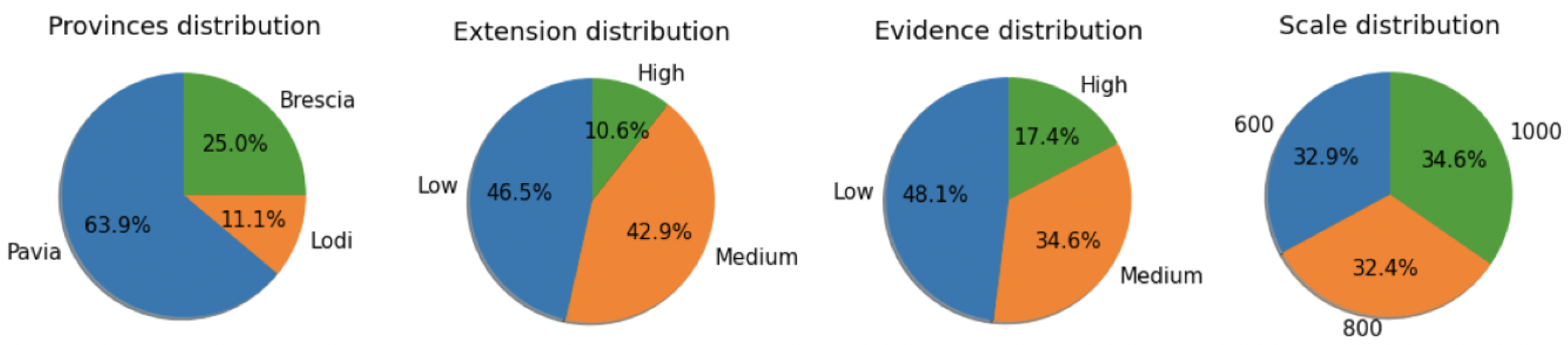

3. Dataset

4. Classification Approach

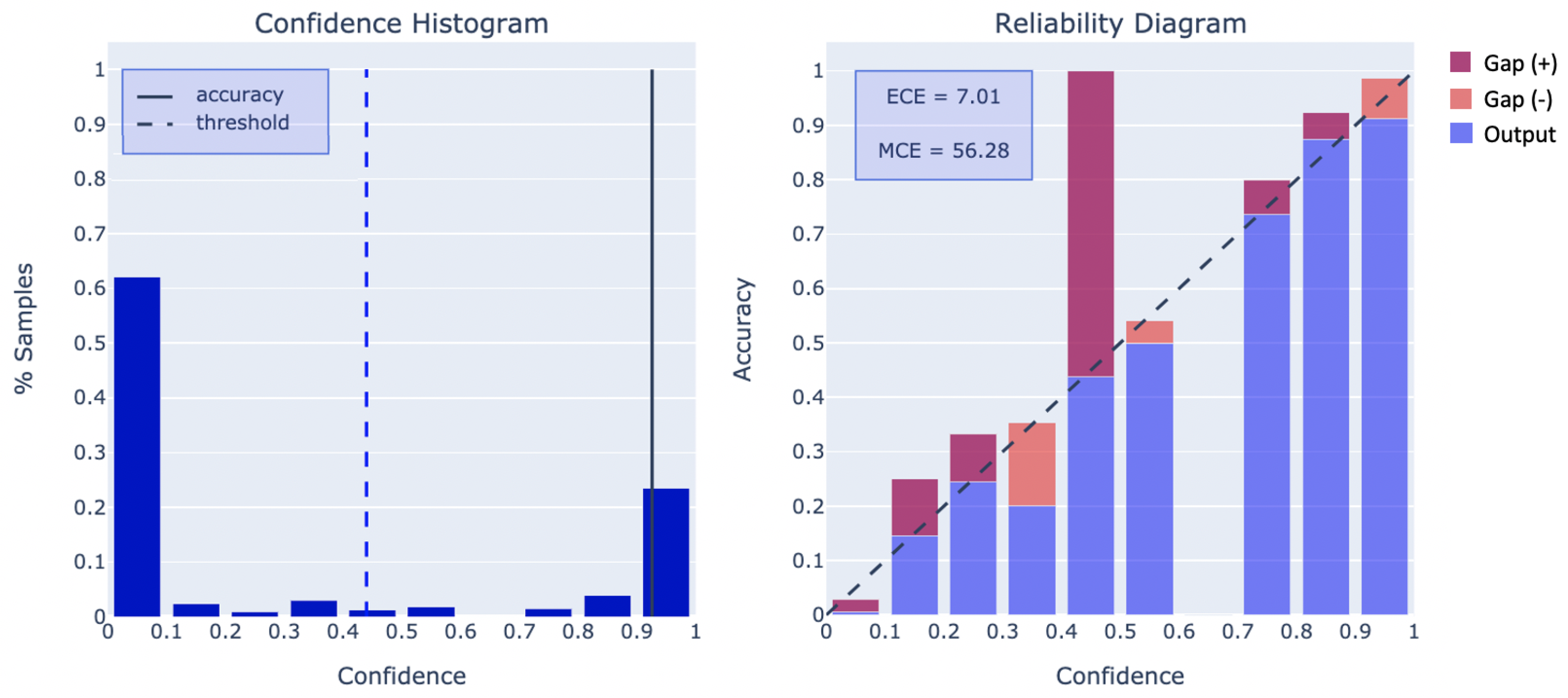

5. Quantitative Analysis

6. Qualitative Analysis

6.1. Examples of True Positives

6.2. Examples of False Negatives

6.3. False Positive Analysis

7. Conclusions and Future Work

- Dataset extension. As analysts inspect new territories, their findings will be incorporated into the dataset, improving the model. Specifically, complex negative examples will be sought. In the present work, negative examples were sampled randomly, but choosing them based on semantic information (e.g., vicinity to “difficult” contexts, such as swimming pools and cemeteries) could reduce false positives substantially;

- Different imagery. The described analysis was executed on a single type of image with a resolution of 20 cm per pixel. Experimenting with other resolutions and with remote sensing products beyond the visible band could lead to more accurate classification, e.g., including the NIR band to exploit the presence of stressed vegetation as a clue for buried waste;

- Classification of waste types. The type of waste present at a location is a clue that helps the analyst categorize a site. Examples include plastic, tires, grouped cars, bulky waste, sludge, or manure. Moreover, waste treatment plants might intentionally misclassify waste to deceive law enforcement authorities, e.g., by using non-hazardous waste codes for hazardous materials. In this scenario, classifying images based on the type of waste is extremely useful;

- Weakly supervised segmentation. Understanding the extent of relevant objects could help estimate the level of risk associated with a detected site, which would help prioritize interventions. Object detection and instance segmentation tools output bounding boxes and masks from which the area of a waste dump can be computed. However, training an object detection or instance segmentation model requires a costly and time-consuming ground truth production process. Weakly supervised methods have attracted interest in recent years as a way to reduce the effort of ground truth creation. Illegal landfill detection could be a good use case for state-of-the-art weakly supervised approaches;

- Multi-temporal analysis. Analyzing images taken at different dates could provide information on the site activity, e.g., growing or shrinking;

- Model efficiency. The ultimate goal of automating the photo interpretation task is to enable the complete scanning of the territory at a vast scale in a limited amount of time, or even near real-time alerting on the onset of waste-related risks. This objective requires a substantial reduction in inference time coupled with a limited loss in prediction reliability.
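To make the area estimate mentioned under weakly supervised segmentation concrete, the following sketch converts a binary mask into a ground area. It assumes the 20 cm per pixel resolution stated above, so each pixel covers 0.2 m × 0.2 m = 0.04 m². The mask values and the function name `mask_area_m2` are illustrative assumptions and do not come from the paper.

```python
from typing import Sequence


def mask_area_m2(mask: Sequence[Sequence[int]], metres_per_pixel: float = 0.2) -> float:
    """Ground area covered by the non-zero pixels of a binary mask."""
    positive_pixels = sum(1 for row in mask for value in row if value)
    return positive_pixels * metres_per_pixel ** 2


# Hypothetical 3 x 4 mask with 6 positive pixels.
toy_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 1, 0],
]
area = mask_area_m2(toy_mask)
```

For the toy mask the result is about 0.24 m². With real imagery the same computation would only be as reliable as the mask itself and the assumed ground sampling distance.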

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Association, E.S. Rethinking Waste Crime. 2017. Available online: http://www.esauk.org/application/files/7515/3589/6448/20170502_Rethinking_Waste_Crime.pdf (accessed on 25 May 2021).

- Rocco, G.; Petitti, T.; Martucci, N.; Piccirillo, M.C.; La Rocca, A.; La Manna, C.; De Luca, G.; Morabito, A.; Chirico, A.; Franco, R.; et al. Survival after surgical treatment of lung cancer arising in the population exposed to illegal dumping of toxic waste in the land of fires (‘Terra dei Fuochi’) of Southern Italy. Anticancer Res. 2016, 36, 2119–2124.

- Schrab, G.E.; Brown, K.W.; Donnelly, K. Acute and genetic toxicity of municipal landfill leachate. Water Air Soil Pollut. 1993, 69, 99–112.

- Limoli, A.; Garzia, E.; De Pretto, A.; De Muri, C. Illegal landfill in Italy (EU)—A multidisciplinary approach. Environ. Forensics 2019, 20, 26–38.

- Jordá-Borrell, R.; Ruiz-Rodríguez, F.; Lucendo-Monedero, Á.L. Factor analysis and geographic information system for determining probability areas of presence of illegal landfills. Ecol. Indic. 2014, 37, 151–160.

- Quesada-Ruiz, L.C.; Rodriguez-Galiano, V.; Jordá-Borrell, R. Characterization and mapping of illegal landfill potential occurrence in the Canary Islands. Waste Manag. 2019, 85, 506–518.

- Slonecker, T.; Fisher, G.B.; Aiello, D.P.; Haack, B. Visible and infrared remote imaging of hazardous waste: A review. Remote Sens. 2010, 2, 2474–2508.

- Youme, O.; Bayet, T.; Dembele, J.M.; Cambier, C. Deep Learning and Remote Sensing: Detection of Dumping Waste Using UAV. Procedia Comput. Sci. 2021, 185, 361–369.

- Wurm, M.; Stark, T.; Zhu, X.X.; Weigand, M.; Taubenböck, H. Semantic segmentation of slums in satellite images using transfer learning on fully convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2019, 150, 59–69.

- Helber, P.; Bischke, B.; Dengel, A.; Borth, D. Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2217–2226.

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Abdukhamet, S. Landfill Detection in Satellite Images Using Deep Learning. Master’s Thesis, Shanghai Jiao Tong University, Shanghai, China, 2019.

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.S. Remote Sensing Image Scene Classification Meets Deep Learning: Challenges, Methods, Benchmarks, and Opportunities. arXiv 2020, arXiv:2005.01094.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Lin, D.; Fu, K.; Wang, Y.; Xu, G.; Sun, X. MARTA GANs: Unsupervised representation learning for remote sensing image classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2092–2096.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- Kazaryan, M.; Simonyan, A.; Simavoryan, S.; Ulitina, E.; Aramyan, R. Waste disposal facilities monitoring based on high-resolution information features of space images. E3S Web Conf. 2020, 157, 02029.

- De Carolis, B.; Ladogana, F.; Macchiarulo, N. YOLO TrashNet: Garbage Detection in Video Streams. In Proceedings of the 2020 IEEE Conference on Evolving and Adaptive Intelligent Systems (EAIS), Bari, Italy, 27–29 May 2020; pp. 1–7.

- Gill, J.; Faisal, K.; Shaker, A.; Yan, W.Y. Detection of waste dumping locations in landfill using multi-temporal Landsat thermal images. Waste Manag. Res. 2019, 37, 386–393.

- Jakiel, M.; Bernatek-Jakiel, A.; Gajda, A.; Filiks, M.; Pufelska, M. Spatial and temporal distribution of illegal dumping sites in the nature protected area: The Ojców National Park, Poland. J. Environ. Plan. Manag. 2019, 62, 286–305.

- Alfarrarjeh, A.; Kim, S.H.; Agrawal, S.; Ashok, M.; Kim, S.Y.; Shahabi, C. Image classification to determine the level of street cleanliness: A case study. In Proceedings of the IEEE Fourth International Conference on Multimedia Big Data (BigMM), Xi’an, China, 13–18 September 2018; pp. 1–5.

- Anjum, M.; Umar, M.S. Garbage localization based on weakly supervised learning in Deep Convolutional Neural Network. In Proceedings of the 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 12–13 October 2018; pp. 1108–1113.

- Angelino, C.; Focareta, M.; Parrilli, S.; Cicala, L.; Piacquadio, G.; Meoli, G.; De Mizio, M. A case study on the detection of illegal dumps with GIS and remote sensing images. In Earth Resources and Environmental Remote Sensing/GIS Applications IX; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10790, p. 107900M.

- Rad, M.S.; von Kaenel, A.; Droux, A.; Tieche, F.; Ouerhani, N.; Ekenel, H.K.; Thiran, J.P. A computer vision system to localize and classify wastes on the streets. In Proceedings of the International Conference on Computer Vision Systems, Las Vegas, NV, USA, 27–30 June 2017; pp. 195–204.

- Manzo, C.; Mei, A.; Zampetti, E.; Bassani, C.; Paciucci, L.; Manetti, P. Top-down approach from satellite to terrestrial rover application for environmental monitoring of landfills. Sci. Total Environ. 2017, 584, 1333–1348.

- Selani, L. Mapping Illegal Dumping Using a High Resolution Remote Sensing Image Case Study: Soweto Township in South Africa. Ph.D. Thesis, University of the Witwatersrand, Johannesburg, South Africa, 2017.

- Begur, H.; Dhawade, M.; Gaur, N.; Dureja, P.; Gao, J.; Mahmoud, M.; Huang, J.; Chen, S.; Ding, X. An edge-based smart mobile service system for illegal dumping detection and monitoring in San Jose. In Proceedings of the 2017 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computed, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), San Francisco, CA, USA, 4–8 August 2017; pp. 1–6.

- Dabholkar, A.; Muthiyan, B.; Srinivasan, S.; Ravi, S.; Jeon, H.; Gao, J. Smart illegal dumping detection. In Proceedings of the 2017 IEEE Third International Conference on Big Data Computing Service and Applications (BigDataService), San Francisco, CA, USA, 6–9 April 2017; pp. 255–260.

- Mittal, G.; Yagnik, K.B.; Garg, M.; Krishnan, N.C. Spotgarbage: Smartphone app to detect garbage using deep learning. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 940–945.

- Lucendo-Monedero, A.L.; Jordá-Borrell, R.; Ruiz-Rodríguez, F. Predictive model for areas with illegal landfills using logistic regression. J. Environ. Plan. Manag. 2015, 58, 1309–1326.

- Viezzoli, A.; Edsen, A.; Auken, E.; Silvestri, S. The Use of Satellite Remote Sensing and Helicopter Tem Data for the Identification and Characterization of Contaminated. In Proceedings of the Near Surface 2009-15th EAGE European Meeting of Environmental and Engineering Geophysics. European Association of Geoscientists & Engineers, Dublin, Ireland, 17–19 September 2009; p. cp–134.

- Chinatsu, Y. Possibility of monitoring of waste disposal site using satellite imagery. JIFS 2009, 6, 23–28.

- Biotto, G.; Silvestri, S.; Gobbo, L.; Furlan, E.; Valenti, S.; Rosselli, R. GIS, multi-criteria and multi-factor spatial analysis for the probability assessment of the existence of illegal landfills. Int. J. Geogr. Inf. Sci. 2009, 23, 1233–1244.

- Silvestri, S.; Omri, M. A method for the remote sensing identification of uncontrolled landfills: Formulation and validation. Int. J. Remote Sens. 2008, 29, 975–989.

- Notarnicola, C.; Angiulli, M.; Giasi, C.I. Southern Italy illegal dumps detection based on spectral analysis of remotely sensed data and land-cover maps. In Remote Sensing for Environmental Monitoring, GIS Applications, and Geology III; International Society for Optics and Photonics: Bellingham, WA, USA, 2004; Volume 5239, pp. 483–493.

- Salleh, J.B.; Tsudagawa, M. Classification of industrial disposal illegal dumping site images by using spatial and spectral information together. In Proceedings of the 19th IEEE Instrumentation and Measurement Technology Conference (IEEE Cat. No. 00CH37276), IMTC/200, Anchorage, AK, USA, 21–23 May 2002; Volume 1, pp. 559–563.

- Lyon, J. Use of maps, aerial photographs, and other remote sensor data for practical evaluations of hazardous waste sites. Photogramm. Eng. Remote Sens. 1987, 53, 515–519.

- Erb, T.L.; Philipson, W.R.; Teng, W.L.; Liang, T. Analysis of landfills with historic airphotos. Photogramm. Eng. Remote Sens. 1981, 47, 1363–1369.

- Nanni, L.; Ghidoni, S.; Brahnam, S. Handcrafted vs. non-handcrafted features for computer vision classification. Pattern Recognit. 2017, 71, 158–172.

- Garofalo, D.; Wobber, F. Solid waste and remote sensing. Photogramm. Eng. 1974, 40, 45–59.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–27 July 2017; pp. 4700–4708.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–15 June 2015; pp. 1–9.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Wang, Y.; Zhang, X. Autonomous garbage detection for intelligent urban management. In Proceedings of the MATEC Web of Conferences. EDP Sciences, Shanghai, China, 12–14 October 2018; Volume 232, p. 01056.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Yun, K.; Kwon, Y.; Oh, S.; Moon, J.; Park, J. Vision-based garbage dumping action detection for real-world surveillance platform. ETRI J. 2019, 41, 494–505.

- Blaschke, T.; Strobl, J. What’s wrong with pixels? Some recent developments interfacing remote sensing and GIS. Z. Geoinformationssysteme 2001, 14, 12–17.

- Schmitt, M.; Wu, Y.L. Remote Sensing Image Classification with the SEN12MS Dataset. arXiv 2021, arXiv:2104.00704.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- Penatti, O.A.; Nogueira, K.; Dos Santos, J.A. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–15 June 2015; pp. 44–51.

- Zhao, B.; Zhong, Y.; Xia, G.S.; Zhang, L. Dirichlet-derived multiple topic scene classification model for high spatial resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2015, 54, 2108–2123.

- Zou, Q.; Ni, L.; Zhang, T.; Wang, Q. Deep learning based feature selection for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2321–2325.

- Zhao, L.; Tang, P.; Huo, L. Feature significance-based multibag-of-visual-words model for remote sensing image scene classification. J. Appl. Remote Sens. 2016, 10, 035004.

- Xu, S.; Fang, T.; Li, D.; Wang, S. Object classification of aerial images with bag-of-visual words. IEEE Geosci. Remote Sens. Lett. 2009, 7, 366–370.

- Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

- Li, H.; Dou, X.; Tao, C.; Hou, Z.; Chen, J.; Peng, J.; Deng, M.; Zhao, L. RSI-CB: A large scale remote sensing image classification benchmark via crowdsource data. Sensors 2020, 20, 1594.

- Wang, Q.; Liu, S.; Chanussot, J.; Li, X. Scene classification with recurrent attention of VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1155–1167.

- Sumbul, G.; Charfuelan, M.; Demir, B.; Markl, V. Bigearthnet: A large-scale benchmark archive for remote sensing image understanding. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5901–5904.

- Qi, X.; Zhu, P.; Wang, Y.; Zhang, L.; Peng, J.; Wu, M.; Chen, J.; Zhao, X.; Zang, N.; Mathiopoulos, P.T. MLRSNet: A multi-label high spatial resolution remote sensing dataset for semantic scene understanding. ISPRS J. Photogramm. Remote Sens. 2020, 169, 337–350.

- Hua, Y.; Mou, L.; Jin, P.; Zhu, X.X. MultiScene: A Large-scale Dataset and Benchmark for Multi-scene Recognition in Single Aerial Images. arXiv 2021, arXiv:2104.02846.

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the 22nd ACM International Conference on Multimedia, New York, NY, USA, 3–7 November 2014; pp. 675–678.

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. Overfeat: Integrated recognition, localization and detection using convolutional networks. arXiv 2013, arXiv:1312.6229.

- Castelluccio, M.; Poggi, G.; Sansone, C.; Verdoliva, L. Land use classification in remote sensing images by convolutional neural networks. arXiv 2015, arXiv:1508.00092.

- Tong, W.; Chen, W.; Han, W.; Li, X.; Wang, L. Channel-attention-based DenseNet network for remote sensing image scene classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4121–4132.

- Zhao, Z.; Li, J.; Luo, Z.; Li, J.; Chen, C. Remote Sensing Image Scene Classification Based on an Enhanced Attention Module. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1926–1930.

- Li, L.; Liang, P.; Ma, J.; Jiao, L.; Guo, X.; Liu, F.; Sun, C. A Multiscale Self-Adaptive Attention Network for Remote Sensing Scene Classification. Remote Sens. 2020, 12, 2209.

- Liu, S.; Wang, Q.; Li, X. Attention based network for remote sensing scene classification. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 4740–4743.

- Xie, J.; He, N.; Fang, L.; Plaza, A. Scale-free convolutional neural network for remote sensing scene classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6916–6928.

- Zhang, X.; Wang, Y.; Zhang, N.; Xu, D.; Chen, B. Research on Scene Classification Method of High-Resolution Remote Sensing Images Based on RFPNet. Appl. Sci. 2019, 9, 2028.

- Wang, X.; Wang, S.; Ning, C.; Zhou, H. Enhanced Feature Pyramid Network with Deep Semantic Embedding for Remote Sensing Scene Classification. IEEE Trans. Geosci. Remote Sens. 2021, 1–15.

- Yu, Y.; Li, X.; Liu, F. Attention GANs: Unsupervised deep feature learning for aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2019, 58, 519–531.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–27 July 2017; pp. 2117–2125.

- Rahimzadeh, M.; Attar, A.; Sakhaei, S.M. A Fully Automated Deep Learning-based Network for Detecting COVID-19 from a New And Large Lung CT Scan Dataset. 2020. Available online: https://www.medrxiv.org/content/early/2020/09/01/2020.06.08.20121541 (accessed on 27 September 2021).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Pendharkar, P.C. A threshold-varying artificial neural network approach for classification and its application to bankruptcy prediction problem. Comput. Oper. Res. 2005, 32, 2561–2582.

- Z-Flores, E.; Trujillo, L.; Schütze, O.; Legrand, P. A local search approach to genetic programming for binary classification. In Proceedings of the 2015 Annual Conference on Genetic and Evolutionary Computation, Madrid, Spain, 11–15 July 2015; pp. 1151–1158.

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240.

- Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 1321–1330.

| Work | Input: GIS | Input: RS | Input: UAV | Input: Street-Level Img. | Output: Location Classif. | Output: Img. Classif. | Output: Img. Object Det. | Method: Manual | Method: Heuristic | Method: Data-Driven (Classical ML) | Method: Data-Driven (DL/CNN) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Deep Learning and Remote Sensing: Detection of Dumping Waste Using UAV [8] | no | no | yes | no | no | no | yes | no | no | no | yes |
| Waste disposal facilities monitoring based on high-resolution information features of space images [18] | no | yes | no | no | yes | yes | no | no | yes | no | no |
| YOLO TrashNet: Garbage Detection in Video Streams [19] | no | no | no | yes | no | no | yes | no | no | no | yes |
| Landfill Detection in Satellite Images Using Deep Learning [12] | no | yes | no | no | no | no | yes | no | no | no | yes |
| Characterization and mapping of illegal landfill potential occurrence in the Canary Islands [6] | yes | yes | no | no | yes | no | no | no | no | yes | no |
| Detection of waste dumping locations in landfill using multi-temporal Landsat thermal images [20] | no | yes | no | no | yes | no | no | no | yes | no | no |
| Spatial and temporal distribution of illegal dumping sites in the nature protected area: the Ojców National Park [21] | yes | no | no | no | yes | no | no | yes | yes | no | no |
| Image classification to determine the level of street cleanliness: A case study [22] | no | no | no | yes | no | yes | no | no | no | yes | no |
| Garbage localization based on weakly supervised learning in DCNN [23] | no | no | no | yes | no | no | yes | no | no | no | yes |
| A case study on the detection of illegal dumps with GIS and RS images [24] | no | yes | no | no | yes | no | no | yes | no | no | no |
| A computer vision system to localize and classify wastes on the streets [25] | no | no | no | yes | no | no | yes | no | no | no | yes |
| Top-down approach from satellite to terrestrial rover application for monitoring of landfills [26] | yes | yes | no | no | yes | no | no | yes | yes | no | no |
| Mapping illegal dumping using a high resolution RS image case study [27] | no | yes | no | no | no | yes | no | no | no | yes | no |
| An edge-based smart mobile service system for illegal dumping detection and monitoring [28] | no | no | no | yes | no | yes | yes | no | no | no | yes |
| Smart illegal dumping detection [29] | no | no | no | yes | no | yes | no | no | no | no | yes |
| Spotgarbage: smartphone app to detect garbage using deep learning [30] | no | no | no | yes | no | no | yes | no | no | no | yes |
| Predictive model for areas with illegal landfills using logistic regression [31] | yes | yes | no | no | yes | no | no | no | no | yes | no |
| Factor analysis and GIS for determining probability areas of presence of illegal landfills [5] | yes | no | no | no | yes | no | no | no | no | yes | no |
| The Use of Satellite RS and Helicopter Tem Data for the Identification and Characterization of Contaminated [32] | yes | yes | no | no | yes | no | no | yes | yes | no | no |
| Possibility of monitoring of waste disposal site using satellite imagery [33] | no | yes | no | no | yes | no | no | yes | no | no | no |
| GIS, multi-criteria and multi-factor spatial analysis for the probability assessment of illegal landfills [34] | yes | yes | no | no | yes | no | no | yes | no | yes | no |
| A method for the RS identification of uncontrolled landfills [35] | yes | yes | no | no | yes | no | no | yes | no | yes | no |
| Southern Italy illegal dumps detection based on spectral analysis of remotely sensed data and land-cover maps [36] | no | yes | no | no | no | yes | no | yes | no | yes | no |
| Classification of industrial disposal illegal dumping site images by using spatial and spectral information together [37] | no | yes | no | no | no | yes | no | no | no | yes | no |
| Use of maps, aerial photographs, and other RS data for practical evaluations of hazardous waste sites [38] | no | yes | no | no | yes | no | no | yes | no | no | no |
| Analysis of landfills with historic airphotos [39] | no | yes | no | no | yes | no | no | yes | no | no | no |

| Dataset | Scene Categories | Images per Class | Total Images | Year |
|---|---|---|---|---|
| UC-Merced [52] | 21 | 100 | 2100 | 2010 |
| WHU-RS19 [54] | 19 | 50 | 950 | 2012 |
| RSSCN7 [55] | 7 | 400 | 2800 | 2015 |
| Brazilian Coffee Scene [53] | 2 | 1438 | 2876 | 2015 |
| SIRI-WHU [54] | 12 | 200 | 2400 | 2015 |
| RSC11 [56] | 11 | 112 | 1232 | 2016 |
| AID [57,58] | 30 | 220/420 | 10,000 | 2017 |
| NWPU-RESISC45 [51] | 45 | 700 | 31,500 | 2017 |
| RSI-CB256 [59] | 35 | 690 | 24,000 | 2017 |
| OPTIMAL-31 [60] | 31 | 60 | 1860 | 2018 |
| EuroSAT [10] | 10 | 2000/3000 | 27,000 | 2019 |
| BigEarthNet [61] | 44 | 328/217,119 | 590,326 | 2019 |
| MLRSNet [62] | 46 | 1500/2895 | 109,161 | 2020 |
| MultiScene [63] | 36 | 22/8628 | 14,000 | 2021 |
| SEN12MS [50] | 16 | 14/31,836 | 180,662 | 2021 |

| ResNet50 + FPN | Threshold | Average Precision | Accuracy | F1-Score | Precision | Recall | ECE |
|---|---|---|---|---|---|---|---|
| Validation (%) | 0.44 | 95.1 | 93.0 | 89.4 | 89.8 | 89.1 | 5.05 |
| Testing (%) | 0.44 | 94.5 | 92.6 | 88.2 | 88.6 | 87.7 | 7.01 |
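The columns of the table above can be related to each other with a short sketch. It assumes that the threshold is applied to the predicted probability of the positive class, uses the standard definitions of precision, recall, and F1, and computes the expected calibration error (ECE) over equal-width confidence bins in the spirit of Guo et al. (listed in the references). The number of bins, the use of 0.5 as the decision point inside the ECE, the toy scores, and all function names are assumptions made for illustration. This is not the authors' evaluation code.

```python
from typing import List, Tuple


def binary_metrics(probs: List[float], labels: List[int], threshold: float) -> Tuple[float, float, float]:
    """Precision, recall, and F1 after thresholding positive-class probabilities."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def expected_calibration_error(probs: List[float], labels: List[int], n_bins: int = 10) -> float:
    """Weighted mean gap between accuracy and confidence over equal-width bins."""
    bins: List[List[Tuple[float, float]]] = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        pred = 1 if p >= 0.5 else 0           # most likely class (assumption)
        conf = p if pred == 1 else 1.0 - p    # confidence in that class
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, 1.0 if pred == y else 0.0))
    total = len(probs)
    ece = 0.0
    for members in bins:
        if members:
            avg_conf = sum(c for c, _ in members) / len(members)
            avg_acc = sum(a for _, a in members) / len(members)
            ece += len(members) / total * abs(avg_acc - avg_conf)
    return ece


# Toy scores and labels (hypothetical), evaluated at the threshold from the table.
toy_probs = [0.9, 0.8, 0.6, 0.45, 0.3, 0.1]
toy_labels = [1, 1, 0, 1, 0, 0]
precision, recall, f1 = binary_metrics(toy_probs, toy_labels, threshold=0.44)
ece = expected_calibration_error(toy_probs, toy_labels)

# Harmonic mean of the reported test precision (88.6%) and recall (87.7%).
reported_f1 = 2 * 0.886 * 0.877 / (0.886 + 0.877)
```

Here `reported_f1` evaluates to roughly 0.881, which agrees with the reported 88.2% F1 score up to rounding of the precision and recall inputs.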

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Torres, R.N.; Fraternali, P. Learning to Identify Illegal Landfills through Scene Classification in Aerial Images. Remote Sens. 2021, 13, 4520. https://doi.org/10.3390/rs13224520