Author Contributions

Conceptualization, K.C.; methodology, K.C.; software, K.C.; validation, K.C.; formal analysis, K.C. and Z.Z.; investigation, Z.Z.; resources, K.C.; data curation, K.C.; writing—original draft preparation, K.C.; writing—review and editing, K.C. and Z.Z.; visualization, K.C.; supervision, Z.S.; project administration, Z.S.; funding acquisition, Z.S. All authors have read and agreed to the published version of the manuscript.

Figure 1.

Throughput (images with 512 × 512 pixels per second on a 2080Ti GPU) versus accuracy (IoU) on the WHU aerial building extraction test set. Only model inference time is measured; the time to read images is excluded. Our model (SST) outperforms the other segmentation methods by a clear margin. For SST, Base (S4), and Base (S5), the points on each line from left to right correspond to the models with ResNet50, VGG16, and ResNet18 CNN feature extractors, respectively.
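The measurement protocol in the caption (time only the forward pass, exclude image reading) can be sketched as follows. This is a minimal, framework-agnostic sketch under our own assumptions: `measure_throughput`, the warm-up count, and the preloaded batch list are illustrative and not the authors' benchmarking code. When timing a GPU model, the inference callable should also synchronize the device (for example with `torch.cuda.synchronize()` in PyTorch) before returning, because GPU kernels run asynchronously and the timer would otherwise stop too early.

```python
import time
from typing import Callable, Sequence


def measure_throughput(
    infer: Callable[[object], object],
    batches: Sequence[object],
    images_per_batch: int,
    warmup: int = 2,
) -> float:
    """Return images processed per second, timing only the `infer` calls.

    `batches` are assumed to be already loaded in memory, so image reading
    is excluded from the measurement (hypothetical helper, not the paper's code).
    """
    if not batches:
        raise ValueError("need at least one batch")
    # Untimed warm-up runs absorb one-off costs such as kernel selection.
    for batch in batches[:warmup]:
        infer(batch)
    start = time.perf_counter()
    for batch in batches:
        infer(batch)
    elapsed = time.perf_counter() - start
    return len(batches) * images_per_batch / elapsed
```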


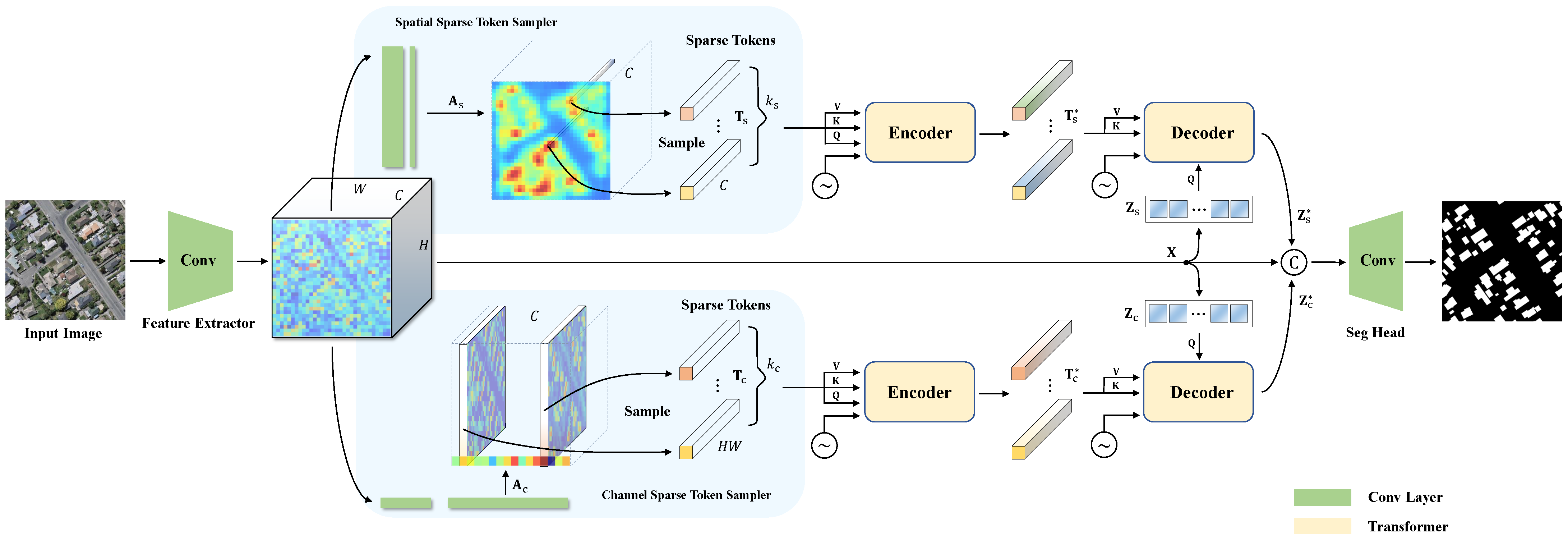

Figure 2.

An overview of the proposed method. Our method consists of a CNN feature extractor, a spatial/channel sparse token sampler, a transformer-based encoder/decoder, and a prediction head. In the transformer part, when modeling the long-range dependencies between different channels or spatial locations of the input image, we select only the most important spatial tokens and channel tokens instead of using all feature vectors. These sparse tokens greatly reduce computation and memory consumption. Based on the semantic tokens generated by the encoder, a transformer decoder refines the original features. Finally, the prediction head applies two upsampling layers to produce high-resolution building extraction results.
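The token selection step can be illustrated with NumPy. This sketch assumes the sampler keeps the k highest-scoring spatial positions (from an H × W spatial probabilistic map) and the k highest-scoring channels (from a length-C channel probabilistic map) of a C × H × W feature map. The function names are hypothetical, and the paper's sampler may differ, for instance in how gradients reach the probabilistic maps during training. The k values play the role of the token numbers (TN) ablated in Table 5.

```python
import numpy as np


def sample_spatial_tokens(feat: np.ndarray, prob: np.ndarray, k: int):
    """Keep the k spatial positions with the highest probability (assumed top-k rule).

    feat: (C, H, W) feature map; prob: (H, W) spatial probabilistic map.
    Returns (k, C) tokens and their flat indices into the H*W positions.
    """
    c = feat.shape[0]
    flat_feat = feat.reshape(c, -1).T                  # (H*W, C): one token per pixel
    idx = np.argsort(-prob.reshape(-1), kind="stable")[:k]  # highest scores first
    return flat_feat[idx], idx


def sample_channel_tokens(feat: np.ndarray, importance: np.ndarray, k: int):
    """Keep the k channels with the highest importance (assumed top-k rule).

    importance: (C,) channel probabilistic map.
    Returns (k, H*W) tokens (one flattened channel per token) and channel indices.
    """
    c = feat.shape[0]
    idx = np.argsort(-importance, kind="stable")[:k]
    return feat.reshape(c, -1)[idx], idx
```

Because the returned indices record where each token came from, the same indices could be used to gather position embeddings for the sampled tokens or to scatter refined tokens back into the full feature map.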


Figure 3.

Samples from the Wuhan University Aerial Building Dataset (a) and the Inria Aerial Image Labeling Dataset (b). Buildings vary in size, shape and color, and may be occluded by trees.


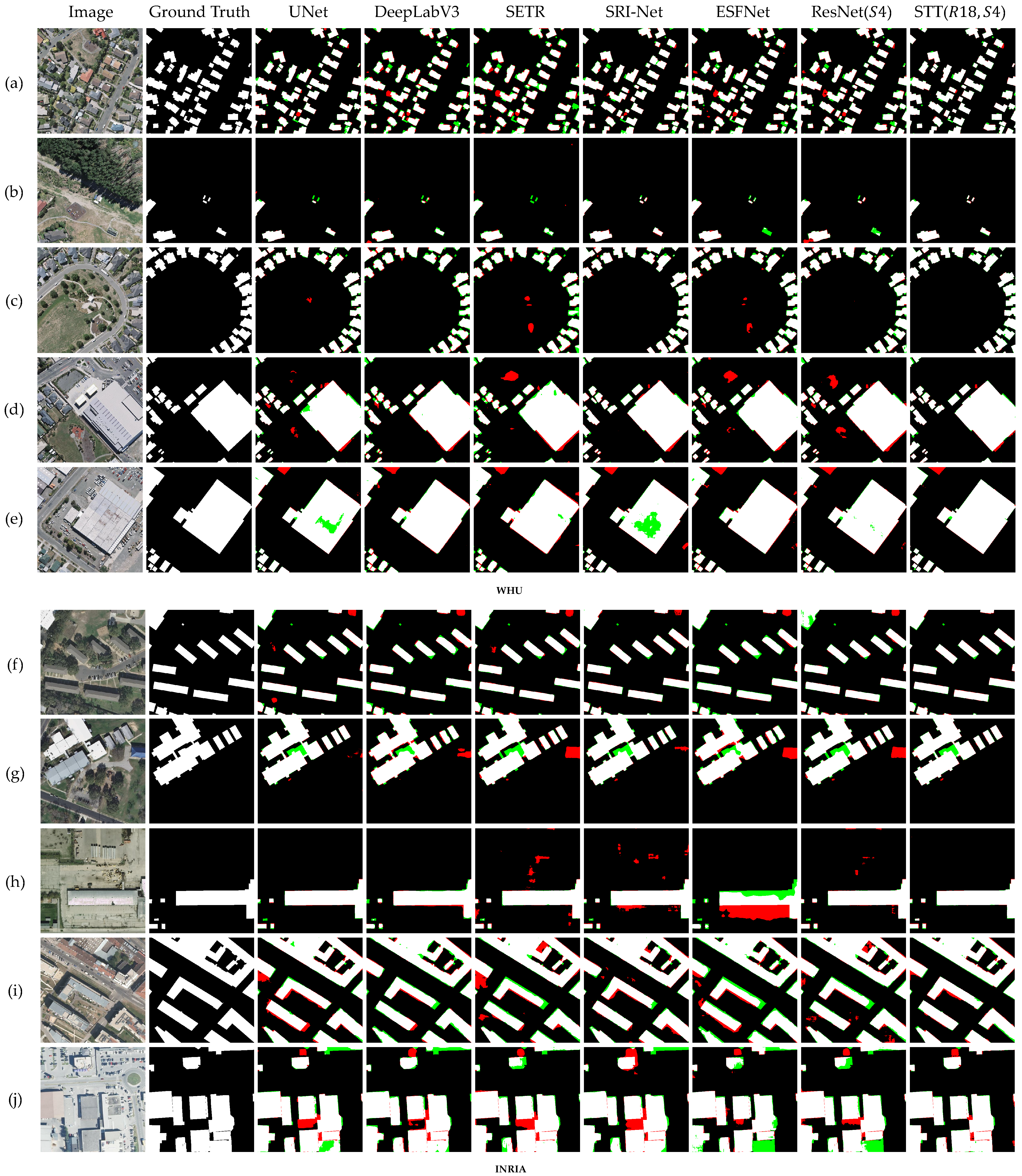

Figure 4.

Visualized results of different methods on samples from the WHU (a–e) and INRIA (f–j) building datasets. For easier viewing, pixels are color-coded: white denotes true positives, black true negatives, red false positives, and green false negatives.
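This color coding can be reproduced from a binary prediction and its ground-truth mask. The following is a minimal NumPy sketch; `error_map` is a hypothetical helper, and the exact RGB values for each color are our assumption.

```python
import numpy as np


def error_map(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Color-code a binary prediction against ground truth: (H, W) -> (H, W, 3).

    White = true positive, black = true negative,
    red = false positive, green = false negative.
    """
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    rgb = np.zeros(pred.shape + (3,), dtype=np.uint8)  # black everywhere (TN)
    rgb[pred & gt] = (255, 255, 255)   # true positives
    rgb[pred & ~gt] = (255, 0, 0)      # false positives
    rgb[~pred & gt] = (0, 255, 0)      # false negatives
    return rgb
```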


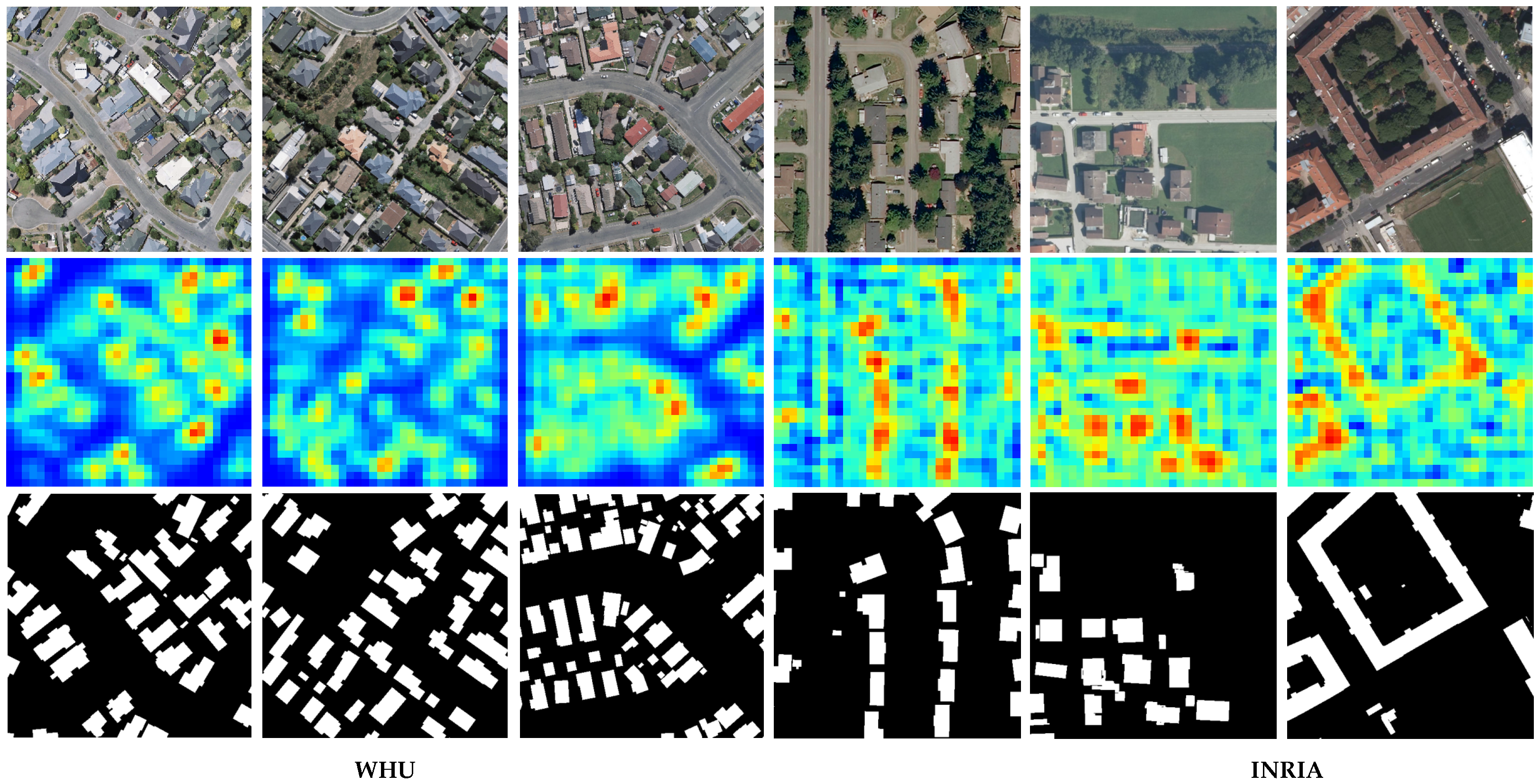

Figure 5.

Visualization of the spatial probabilistic maps. From top to bottom, the rows show the input images, the spatial probabilistic maps, and the ground-truth labels. High values are shown in red and low values in blue. The heatmaps are taken from the spatial map generator with a pixel resolution of .


Figure 6.

Visualization of the channel probabilistic maps and their importance values for four samples from the WHU and INRIA datasets. The leftmost column shows the input images, and the rightmost column shows the ground-truth labels. The channel importance values are plotted as bars below each image. The other five columns show heatmaps taken from the feature extractor with a pixel resolution of , selected according to the channel probabilistic map. Only the top-5 channels are shown.


Table 1.

Details of network layers for generating the spatial and channel probabilistic maps.


| Module | Input Size | Layer | Output Size |
|---|---|---|---|
| Spatial map generator | | Conv2d | |
| | | BN, LeakyReLU | |
| | | Conv2d | |
| | | Sigmoid | |
| Channel map generator | | Conv2d | |
| | | BN, LeakyReLU | |
| | | Conv2d | |
| | | Sigmoid | |
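The layer sequence of the spatial map generator (Conv2d, BN, LeakyReLU, Conv2d, Sigmoid) can be traced with a NumPy forward pass. The kernel sizes and tensor shapes in Table 1 did not survive extraction, so this sketch assumes 1 × 1 convolutions, an arbitrary hidden width, inference-mode batch normalization, and PyTorch's default LeakyReLU slope of 0.01. All names, including the parameter dictionary keys, are hypothetical.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1x1 convolution: x (C_in, H, W), w (C_out, C_in), b (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def batch_norm_eval(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode batch norm using per-channel running statistics."""
    scale = gamma / np.sqrt(var + eps)
    return (x - mean[:, None, None]) * scale[:, None, None] + beta[:, None, None]


def leaky_relu(x, slope=0.01):
    return np.where(x > 0, x, slope * x)


def spatial_map_generator(x: np.ndarray, p: dict) -> np.ndarray:
    """Conv2d -> BN -> LeakyReLU -> Conv2d -> Sigmoid, giving a (1, H, W) map."""
    h = conv1x1(x, p["w1"], p["b1"])
    h = leaky_relu(batch_norm_eval(h, p["mean"], p["var"], p["gamma"], p["beta"]))
    h = conv1x1(h, p["w2"], p["b2"])   # single output channel
    return 1.0 / (1.0 + np.exp(-h))    # per-pixel probability in (0, 1)
```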

Table 2.

Layers of the prediction head. We use the PixelShuffle layer [68] for upsampling. The up1 layer is only applied in the ablation study, when the last feature map size is of the original resolution. DoubleConv denotes two convolutional layers followed by batch normalization and a ReLU activation function.


| Module | Input Size | Layer | Output Size |
|---|---|---|---|
| up1 | | PixelShuffle | |
| | | DoubleConv | |
| up2 | | PixelShuffle | |
| | | DoubleConv | |
| up3 | | PixelShuffle | |
| | | DoubleConv | |
| predict | | Conv2d | |
| | | BN, LeakyReLU | |
| | | Conv2d | |
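PixelShuffle [68] upsamples by moving groups of channels into space: a (C·r², H, W) tensor becomes (C, H·r, W·r). The NumPy function below is our own sketch of that rearrangement, following the index mapping of PyTorch's `torch.nn.PixelShuffle`. The upscale factor r of each up layer is not recoverable from the table above, so it is left as a parameter.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange (C*r*r, H, W) into (C, H*r, W*r).

    Output pixel (c, h*r + i, w*r + j) is taken from input channel c*r*r + i*r + j.
    """
    c_in, h, w = x.shape
    if c_in % (r * r):
        raise ValueError("channel count must be divisible by r**2")
    c = c_in // (r * r)
    return (
        x.reshape(c, r, r, h, w)       # split channels into (c, i, j)
        .transpose(0, 3, 1, 4, 2)      # reorder to (c, h, i, w, j)
        .reshape(c, h * r, w * r)      # merge (h, i) into rows and (w, j) into columns
    )
```

Since the operation only rearranges values, it has no learnable parameters; the learnable refinement after each upsampling step comes from the DoubleConv layers.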

Table 3.

Computational complexity of different network architectures. PT: Plain Transformer layer. ST: Spatial Transformer in SST. CT: Channel Transformer in SST. Q, K, and V are the query, key, and value matrices of a transformer layer, respectively, and the values listed under Q, K, and V are their dimensions. Complexity refers to memory consumption. Let be the number of query, key, and value elements in the plain transformer, respectively. In general, for self-attention and for cross-attention.


| Architecture | Q | K | V | Complexity |
|---|---|---|---|---|
| PT (baseline) | | | | |
| ST Encoder (ours) | | | | |
| ST Decoder (ours) | | | | |
| CT Encoder (ours) | | | | |
| CT Decoder (ours) | | | | |
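The memory cost of attention is dominated by the score matrix, which holds one entry per query-key pair. The arithmetic below contrasts plain self-attention with sparse-token attention. The 32 × 32 feature map and k = 64 tokens are hypothetical values chosen for illustration, and modeling the decoder as cross-attention from all N positions to the k encoder tokens is an assumption based on the overview in Figure 2, not an entry taken from the table above.

```python
def attention_map_elements(n_query: int, n_key: int, heads: int = 1) -> int:
    """Number of attention scores: one per (query, key) pair and per head."""
    return heads * n_query * n_key


# Hypothetical sizes for illustration only.
n = 32 * 32  # all spatial positions of a 32 x 32 feature map
k = 64       # sparse spatial tokens kept by the sampler

plain = attention_map_elements(n, n)    # plain self-attention over all positions
encoder = attention_map_elements(k, k)  # self-attention among the k sparse tokens
decoder = attention_map_elements(n, k)  # cross-attention: n queries, k keys
print(plain, encoder, decoder)  # 1048576 4096 65536
```

Under these assumptions, the score matrix shrinks from quadratic in N to quadratic in k for the encoder and linear in N for the decoder.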

Table 4.

Ablation study of context composition. We evaluate different feature compositions fed into the final prediction head. ST: Spatial Transformer output, . CT: Channel Transformer output, . OM: Original feature Map before the transformer, . †: Without the auxiliary loss used to generate the probabilistic maps.


| Model | ST | CT | OM | WHU IoU | WHU OA | WHU F | INRIA IoU | INRIA OA | INRIA F |
|---|---|---|---|---|---|---|---|---|---|
| ResNet18 | × | × | ✓ | 84.54 | 98.29 | 91.47 | 75.14 | 95.82 | 85.19 |
| ResNet18 | × | × | ✓ | 86.36 | 98.69 | 91.93 | 76.06 | 96.00 | 85.78 |
| SST | ✓ | × | × | 87.39 | 98.63 | 93.02 | 76.36 | 96.03 | 86.00 |
| SST | ✓ | × | ✓ | 88.75 | 98.76 | 93.99 | 76.57 | 96.09 | 86.11 |
| SST | × | ✓ | × | 87.73 | 98.68 | 93.25 | 76.41 | 96.03 | 86.04 |
| SST | × | ✓ | ✓ | 88.77 | 98.77 | 93.99 | 76.84 | 96.12 | 86.32 |
| SST | ✓ | ✓ | × | 88.82 | 98.77 | 94.04 | 76.99 | 96.14 | 86.43 |
| SST | ✓ | ✓ | ✓ | 89.01 | 98.80 | 94.13 | 77.12 | 96.19 | 86.49 |
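The IoU, OA, and F columns can be computed from pixel-level confusion counts for the building class. The sketch below uses the standard definitions and assumes F is the F1 score; whether the reported numbers accumulate counts over the whole test set or average per image is not stated in this section, and `binary_metrics` is a hypothetical helper.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """IoU, overall accuracy (OA) and F1 for the building class from pixel counts."""
    return {
        "IoU": tp / (tp + fp + fn),
        "OA": (tp + tn) / (tp + fp + fn + tn),
        # F1 = 2PR / (P + R), written in its equivalent count form
        "F1": 2 * tp / (2 * tp + fp + fn),
    }


print(binary_metrics(tp=80, fp=10, fn=10, tn=900))
```

For these toy counts, IoU is 0.8, OA is 0.98, and F1 is about 0.889. OA is dominated by the many background pixels, which is why it varies much less across rows than IoU does.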

Table 5.

Ablation study of the sparse token number (TN) in the spatial and channel transformers of SST. “Throughput” is the number of images (512 × 512 pixels) processed per second during inference.


| TN in ST | TN in CT | Throughput (images/s) | WHU IoU | WHU OA | WHU F | INRIA IoU | INRIA OA | INRIA F |
|---|---|---|---|---|---|---|---|---|
| 4 | 16 | 3194 | 87.73 | 98.68 | 93.25 | 76.72 | 96.10 | 86.24 |
| 16 | 16 | 3158 | 88.48 | 98.76 | 93.77 | 76.85 | 96.13 | 86.32 |
| 64 | 16 | 3134 | 89.01 | 98.80 | 94.13 | 77.12 | 96.19 | 86.49 |
| 256 | 16 | 2315 | 89.07 | 98.81 | 94.17 | 77.18 | 96.20 | 86.52 |
| 1024 | 16 | 688 | 88.96 | 98.79 | 94.11 | 77.02 | 96.17 | 86.45 |
| 64 | 4 | 3189 | 87.80 | 98.69 | 93.30 | 76.73 | 96.12 | 86.25 |
| 64 | 8 | 3157 | 88.59 | 98.74 | 93.91 | 76.97 | 96.12 | 86.43 |
| 64 | 32 | 3104 | 89.05 | 98.81 | 94.15 | 77.28 | 96.21 | 86.60 |
| 64 | 64 | 3077 | 89.04 | 98.81 | 94.16 | 77.09 | 96.17 | 86.50 |
| 4 | 4 | 3232 | 86.83 | 98.73 | 92.34 | 76.69 | 96.10 | 86.23 |
| 16 | 8 | 3144 | 88.18 | 98.73 | 93.55 | 76.78 | 96.12 | 86.26 |
| 256 | 32 | 2209 | 89.11 | 98.80 | 94.20 | 77.21 | 96.18 | 86.56 |
| 1024 | 64 | 665 | 88.95 | 98.79 | 94.11 | 76.97 | 96.14 | 86.41 |
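The speed/accuracy trade-off in this ablation can be quantified relative to the 64/16 setting, whose throughput and WHU IoU match the SST (ResNet18) entry in Table 9. The short calculation below uses only numbers copied from the table above; `relative_to` is a hypothetical helper.

```python
# (TN in ST, TN in CT) -> (throughput in images/s, WHU IoU), copied from Table 5.
TABLE5 = {
    (64, 16): (3134, 89.01),
    (256, 16): (2315, 89.07),
    (1024, 16): (688, 88.96),
}


def relative_to(rows: dict, base: tuple) -> dict:
    """Throughput change (%) and IoU change (points) of each row versus `base`."""
    base_tp, base_iou = rows[base]
    return {
        key: (round(100 * (tp / base_tp - 1), 1), round(iou - base_iou, 2))
        for key, (tp, iou) in rows.items()
    }


for key, (d_tp, d_iou) in relative_to(TABLE5, (64, 16)).items():
    print(key, f"throughput {d_tp:+.1f}%", f"IoU {d_iou:+.2f}")
```

Raising the spatial token number from 64 to 256 costs about 26.1% of the throughput for a 0.06-point IoU gain on WHU, while 1024 tokens cost about 78.0% of the throughput and lower IoU by 0.05 points.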

Table 6.

Ablation study of the position embeddings (PE) in the spatial and channel transformers on the two datasets. PE is evaluated in both the transformer encoder and the transformer decoder.


| ST: PE in Encoder | ST: PE in Decoder | CT: PE in Encoder | CT: PE in Decoder | WHU IoU | WHU OA | WHU F | INRIA IoU | INRIA OA | INRIA F |
|---|---|---|---|---|---|---|---|---|---|
| × | × | × | × | 88.41 | 98.75 | 93.76 | 76.79 | 96.11 | 86.28 |
| ✓ | × | ✓ | × | 88.83 | 98.79 | 94.02 | 76.94 | 96.12 | 86.38 |
| × | ✓ | × | ✓ | 88.80 | 98.77 | 94.01 | 77.06 | 96.17 | 86.46 |
| ✓ | ✓ | ✓ | ✓ | 89.01 | 98.80 | 94.13 | 77.12 | 96.19 | 86.49 |

Table 7.

Evaluation of different ways of generating the token probabilistic maps in our method. †: Without the auxiliary loss used to generate the probabilistic maps.


| Method | WHU IoU | WHU OA | WHU F | INRIA IoU | INRIA OA | INRIA F |
|---|---|---|---|---|---|---|
| ResNet18 | 84.54 | 98.29 | 91.47 | 75.14 | 95.82 | 85.19 |
| | 86.35 | 98.46 | 92.64 | 76.66 | 96.07 | 86.21 |
| | 85.65 | 98.42 | 92.14 | 75.71 | 95.96 | 85.69 |
| | 85.72 | 98.42 | 92.17 | 76.06 | 96.00 | 85.78 |
| | 89.01 | 98.80 | 94.13 | 77.12 | 96.19 | 86.49 |

Table 8.

Accuracy of SST with different loss balancing factors, with and without pretraining.


| Loss balancing factor | Pretrain | WHU IoU | WHU OA | WHU F | INRIA IoU | INRIA OA | INRIA F |
|---|---|---|---|---|---|---|---|
| 1 | × | 88.09 | 98.71 | 93.58 | 76.44 | 96.07 | 86.05 |
| 0.1 | × | 88.57 | 98.74 | 93.90 | 76.50 | 96.08 | 86.07 |
| 0.01 | × | 88.71 | 98.77 | 93.96 | 76.78 | 96.13 | 86.28 |
| 0.001 | × | 88.66 | 98.75 | 93.95 | 76.95 | 96.14 | 86.39 |
| 0.0001 | × | 88.24 | 98.71 | 93.68 | 76.64 | 96.09 | 86.19 |
| 1 | ✓ | 88.35 | 98.73 | 93.76 | 76.58 | 96.07 | 86.14 |
| 0.1 | ✓ | 88.77 | 98.78 | 93.99 | 76.76 | 96.11 | 86.26 |
| 0.01 | ✓ | 88.90 | 98.78 | 94.07 | 76.99 | 96.14 | 86.43 |
| 0.001 | ✓ | 89.01 | 98.80 | 94.13 | 77.12 | 96.19 | 86.49 |
| 0.0001 | ✓ | 88.84 | 98.79 | 94.03 | 76.69 | 96.10 | 86.21 |

Table 9.

Comparison with well-known image labeling methods and state-of-the-art building extraction methods on the WHU and INRIA building datasets. UNet, SegNet, DANet, and DeepLabV3 are widely used CNN-based segmentation methods, and SETR is a transformer-based segmentation method. The methods in the second group are CNN-based methods designed specifically for building extraction. To assess efficiency, we report the number of parameters (Params.), multiply-accumulate operations (MACs), and the number of 512 × 512 images processed per second on a 2080Ti GPU (Throughput).


| Model | Params. (M) | MACs (G) | Throughput (images/s) | WHU IoU | WHU OA | WHU F | INRIA IoU | INRIA OA | INRIA F |
|---|---|---|---|---|---|---|---|---|---|
| UNet [41] | 17.27 | 160.48 | 823 | 87.52 | 98.65 | 93.11 | 77.29 | 96.25 | 86.56 |
| SegNet [42] | 29.44 | 160.56 | 748 | 85.13 | 98.36 | 91.74 | 76.32 | 96.10 | 85.83 |
| DANet [63] | 17.39 | 22.33 | 5787 | 81.22 | 97.82 | 89.57 | 76.02 | 95.94 | 85.80 |
| DeepLabV3 [44] | 15.31 | 20.06 | 7162 | 80.69 | 97.76 | 89.24 | 73.90 | 95.54 | 84.39 |
| SETR [27] | 64.56 | 34.46 | 1105 | 75.92 | 97.13 | 87.75 | 70.34 | 94.87 | 82.06 |
| DAN-Net [62] | 1.98 | 75.29 | 183 | 87.69 | 98.80 | 92.81 | 76.63 | 96.08 | 86.17 |
| MAP-Net [46] | 24.02 | 94.38 | 231 | 88.99 | 98.82 | 94.12 | 76.91 | 96.13 | 86.34 |
| SRI-Net [38] | 13.86 | 176.64 | 257 | 88.84 | 98.73 | 93.98 | 76.84 | 96.12 | 86.32 |
| BRRNet [12] | 17.341 | 255.12 | 491 | 89.03 | 98.81 | 94.14 | 77.05 | 96.47 | 86.61 |
| ESFNet [56] | 0.55 | 89.26 | 9716 | 83.81 | 98.16 | 91.13 | 71.28 | 95.08 | 82.47 |
| VGG16 | 17.88 | 92.24 | 1952 | 85.20 | 98.35 | 91.77 | 77.90 | 96.31 | 87.02 |
| VGG16 | 7.85 | 81.96 | 2078 | 86.28 | 98.49 | 92.47 | 77.99 | 96.36 | 87.08 |
| SST (Ours) | 17.09 | 82.43 | 1632 | 89.37 | 98.84 | 94.11 | 79.15 | 96.56 | 87.81 |
| ResNet18 | 12.12 | 13.37 | 3924 | 84.14 | 98.18 | 91.33 | 74.80 | 95.74 | 85.00 |
| ResNet18 | 2.84 | 10.31 | 4385 | 84.54 | 98.29 | 91.47 | 75.14 | 95.82 | 85.19 |
| SST (Ours) | 12.01 | 10.71 | 3134 | 89.01 | 98.80 | 94.13 | 77.12 | 96.19 | 86.49 |
| ResNet50 | 38.49 | 70.39 | 1311 | 86.47 | 98.50 | 92.50 | 78.04 | 96.34 | 87.08 |
| ResNet50 | 9.37 | 51.65 | 1462 | 86.88 | 98.50 | 92.95 | 78.12 | 96.40 | 87.13 |
| SST (Ours) | 18.74 | 52.25 | 1166 | 90.48 | 98.97 | 94.97 | 79.42 | 96.59 | 87.99 |