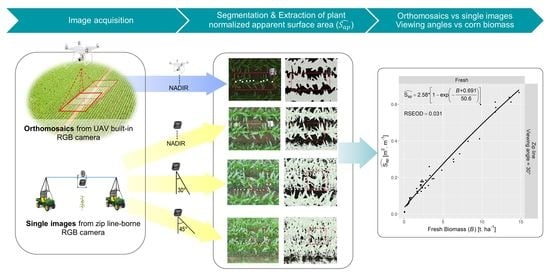

Use of Oblique RGB Imagery and Apparent Surface Area of Plants for Early Estimation of Above-Ground Corn Biomass

Abstract

1. Introduction

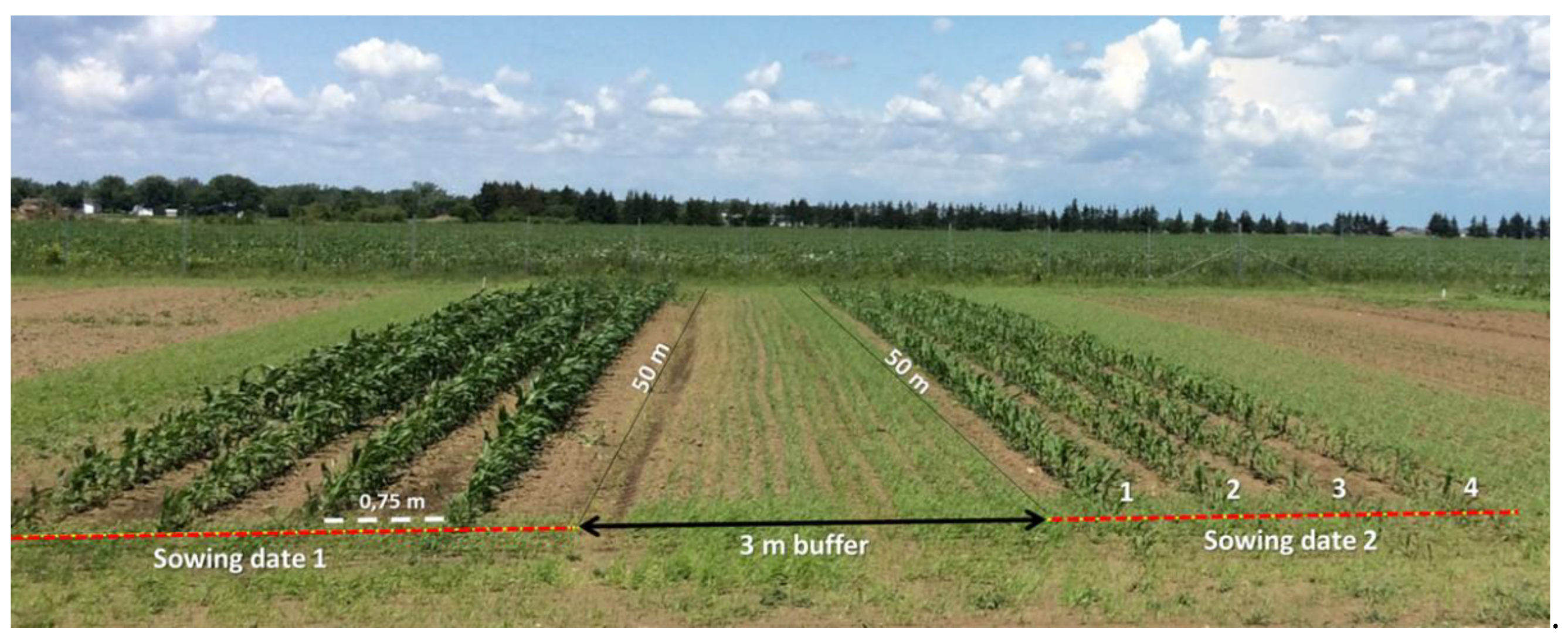

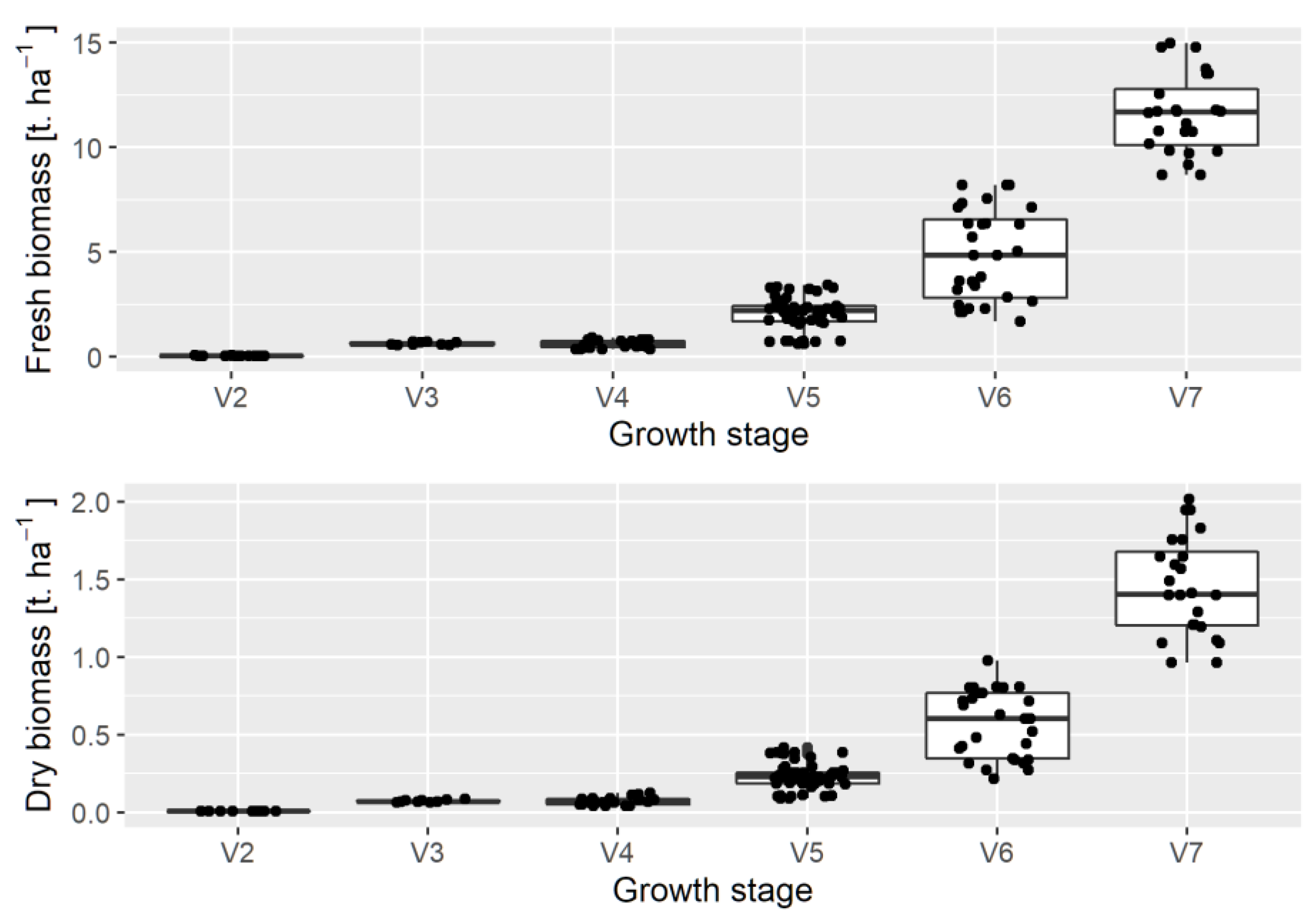

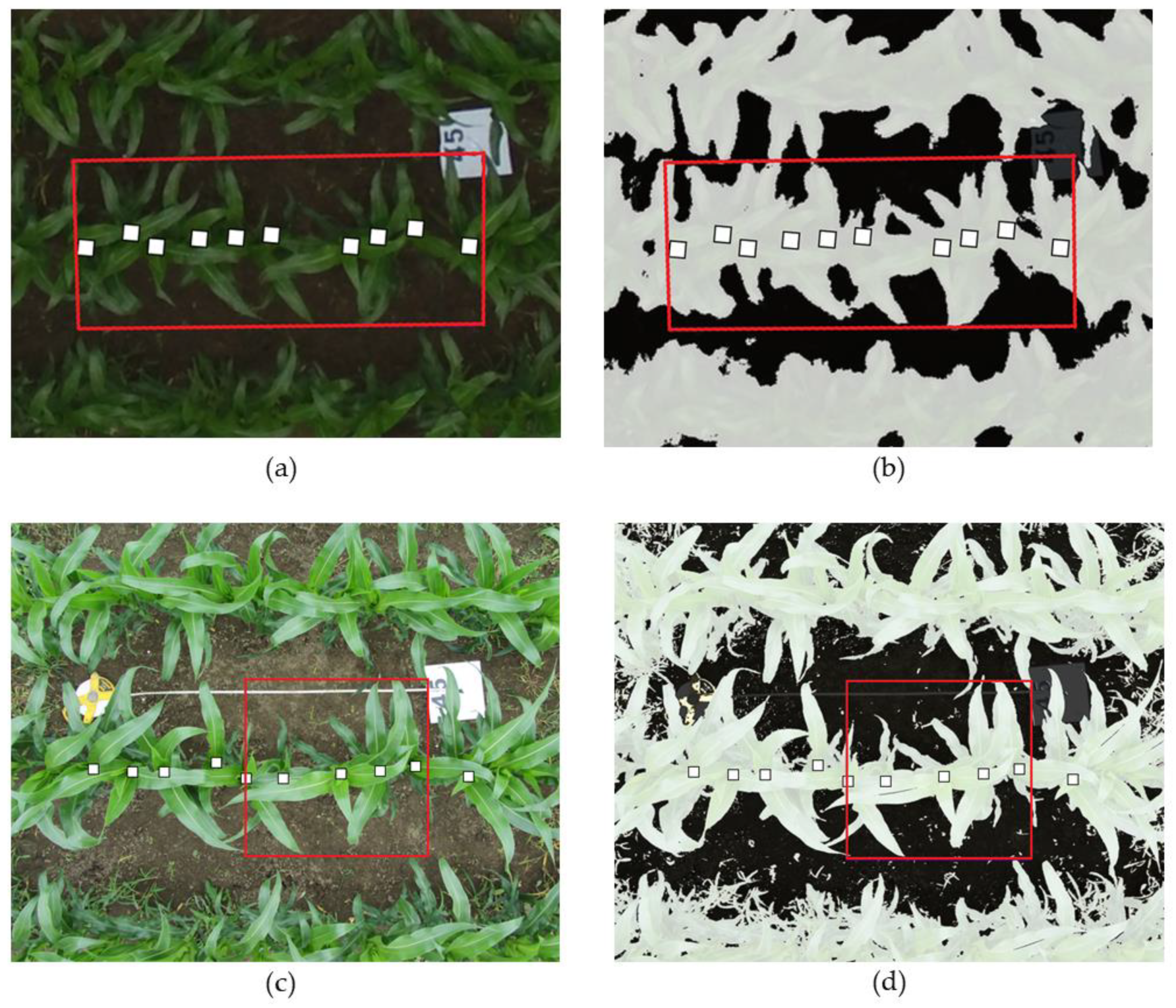

2. Materials and Methods

2.1. Study Site

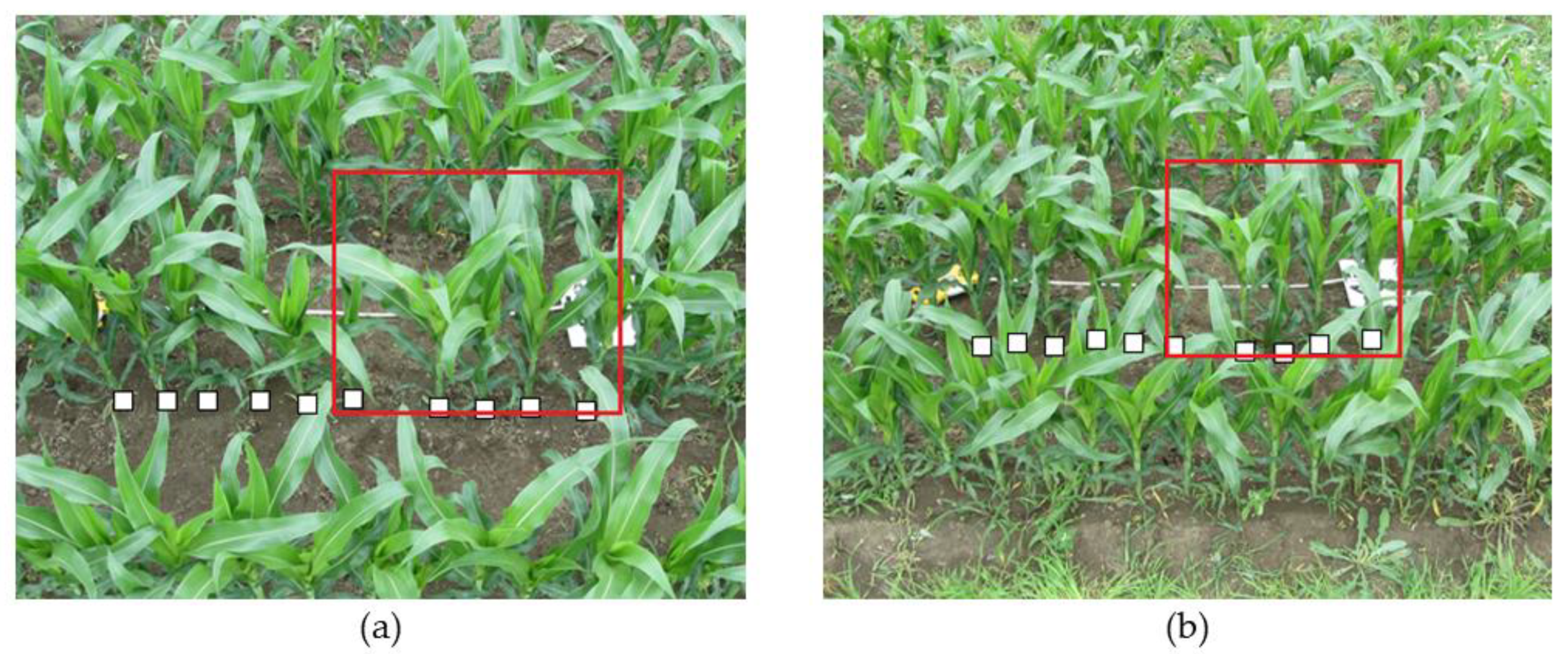

2.2. RGB Imagery Acquisition

2.3. Information Retrieval from RGB Imagery

2.4. Statistical Analysis

3. Results

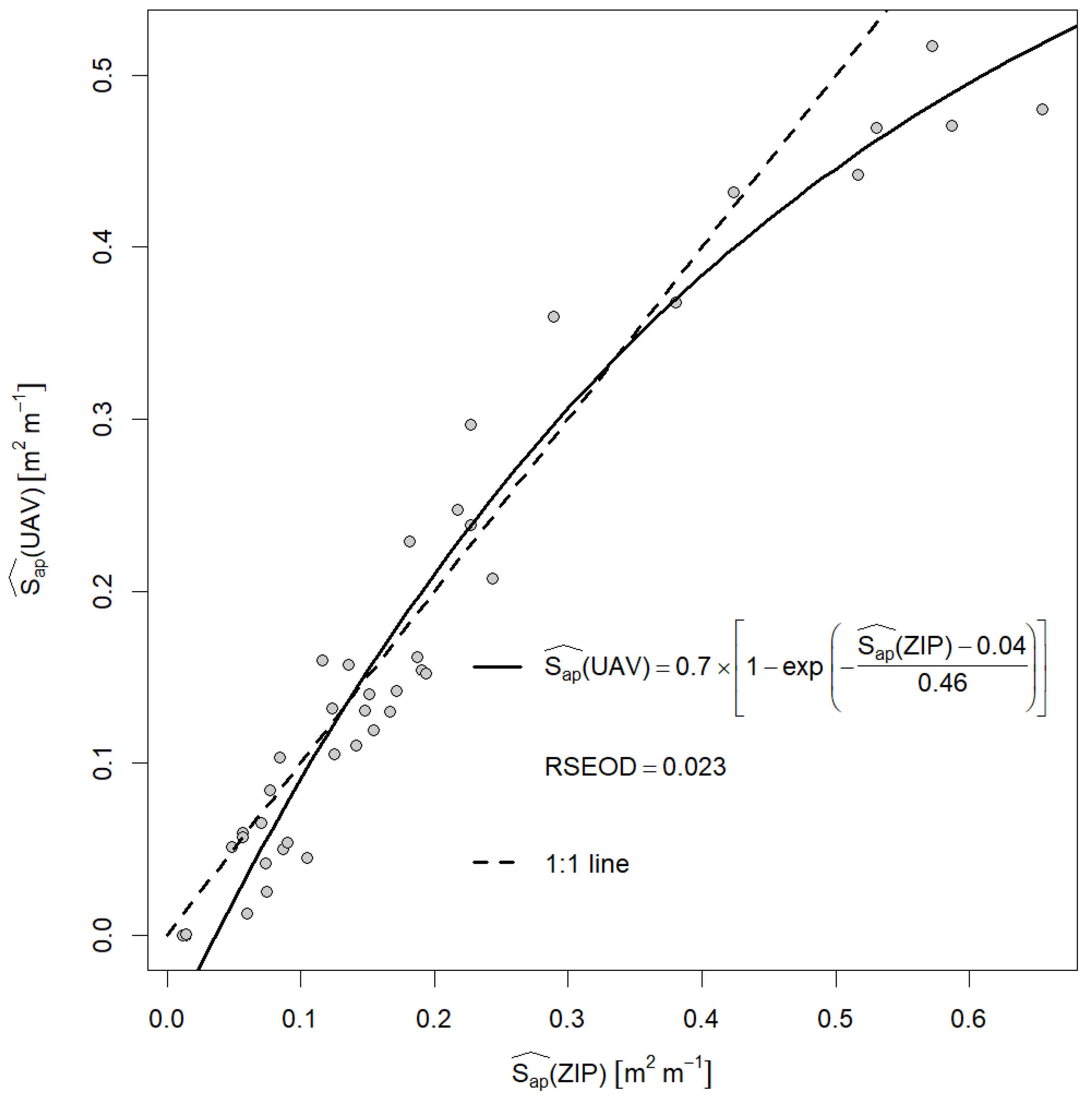

3.1. Comparing UAV and Zip Line at Nadir View

3.2. The Effect of Viewing Angles

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Platform | UAV | Zip Line |
| --- | --- | --- |
| Camera sensor | 1/2.3" CMOS | 1/2.3" CMOS |
| Sensor width (mm) | 6.30 | 6.16 |
| Sensor height (mm) | 4.73 | 4.62 |
| Image size (pixels) | 4000 × 3000 | 4000 × 3000 |
| Focal length (mm) | 3.6 | 5.0 |
| Diagonal field of view | 95° | 75° |
| Pixel dimension (μm) | 1.58 | 1.54 |
| Acquisition mode | Flight path (S-pattern) | Stationary |
| Acquisition altitude (m AGL) | 10 | 2.9 |
| Viewing angles | Nadir (0°) | Nadir (0°) and oblique (30° and 45°) |
| Generated products | Orthomosaics | Single images |
| GSD (mm) | 4.4 | 0.89 (viewing angle = 0°); 1.03 (viewing angle = 30°); 1.26 (viewing angle = 45°) |
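The derived rows of the table (pixel dimension, diagonal field of view, and GSD) can be cross-checked from the sensor size, image size, focal length, and acquisition altitude. This excerpt does not state the formulas the authors used, so the following is only a minimal sketch under two assumptions: a simple pinhole camera model, and, for oblique views, an object distance along the optical axis equal to altitude / cos(viewing angle). The function names are hypothetical and chosen for illustration.

```python
import math


def pixel_pitch_um(sensor_width_mm: float, image_width_px: int) -> float:
    """Pixel dimension in micrometres: sensor width divided by pixel count."""
    return sensor_width_mm / image_width_px * 1000.0


def diagonal_fov_deg(sensor_width_mm: float, sensor_height_mm: float,
                     focal_length_mm: float) -> float:
    """Diagonal field of view of a pinhole camera, in degrees."""
    diagonal_mm = math.hypot(sensor_width_mm, sensor_height_mm)
    return math.degrees(2.0 * math.atan(diagonal_mm / (2.0 * focal_length_mm)))


def gsd_mm(pitch_um: float, focal_length_mm: float, altitude_m: float,
           view_angle_deg: float = 0.0) -> float:
    """Ground sampling distance at the image centre, in millimetres.

    Assumption (not taken from the source): for an oblique view, the
    distance to the ground along the optical axis is altitude / cos(angle).
    """
    distance_mm = altitude_m * 1000.0 / math.cos(math.radians(view_angle_deg))
    return (pitch_um / 1000.0) * distance_mm / focal_length_mm


if __name__ == "__main__":
    # UAV: 6.30 x 4.73 mm sensor, 3.6 mm focal length, 10 m AGL, nadir only.
    print("UAV pixel pitch (um):", pixel_pitch_um(6.30, 4000))
    print("UAV diagonal FOV (deg):", diagonal_fov_deg(6.30, 4.73, 3.6))
    print("UAV GSD (mm):", gsd_mm(1.58, 3.6, 10.0))

    # Zip line: 6.16 x 4.62 mm sensor, 5.0 mm focal length, 2.9 m AGL.
    print("Zip line pixel pitch (um):", pixel_pitch_um(6.16, 4000))
    print("Zip line diagonal FOV (deg):", diagonal_fov_deg(6.16, 4.62, 5.0))
    for angle in (0.0, 30.0, 45.0):
        print(f"Zip line GSD at {angle:.0f} deg (mm):",
              gsd_mm(1.54, 5.0, 2.9, angle))
```

Under these assumptions the computed values round to the tabulated ones (4.4 mm for the UAV; 0.89, 1.03, and 1.26 mm for the zip line at 0°, 30°, and 45°). This suggests, but does not confirm, that the oblique GSD values in the table refer to the image centre.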

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khun, K.; Tremblay, N.; Panneton, B.; Vigneault, P.; Lord, E.; Cavayas, F.; Codjia, C. Use of Oblique RGB Imagery and Apparent Surface Area of Plants for Early Estimation of Above-Ground Corn Biomass. Remote Sens. 2021, 13, 4032. https://doi.org/10.3390/rs13204032
