Compression of Remotely Sensed Astronomical Image Using Wavelet-Based Compressed Sensing in Deep Space Exploration

Abstract

1. Introduction

2. Preliminaries

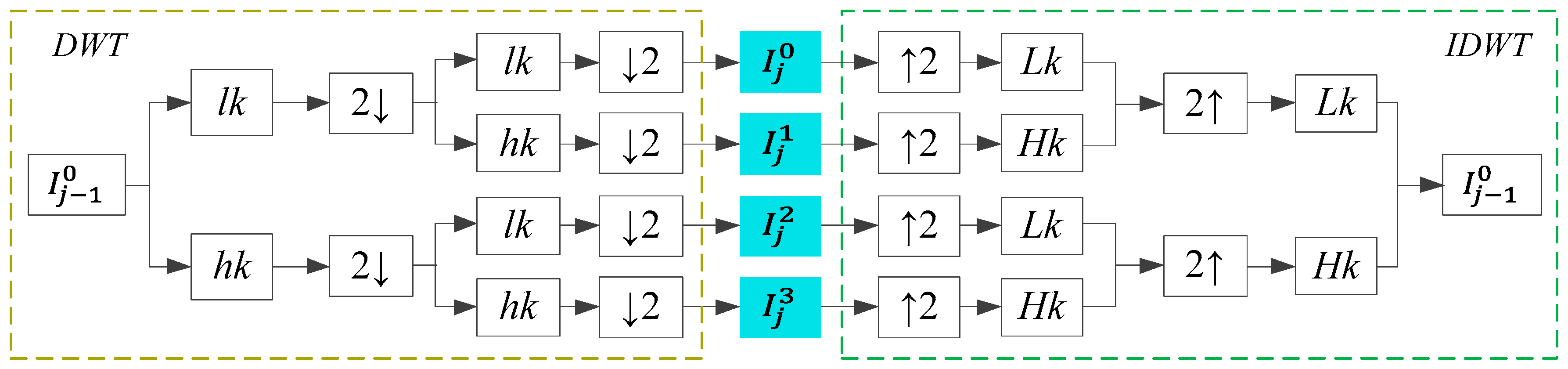

2.1. Discrete Wavelet Transform

2.2. Compressed Sensing

3. Proposed Technique

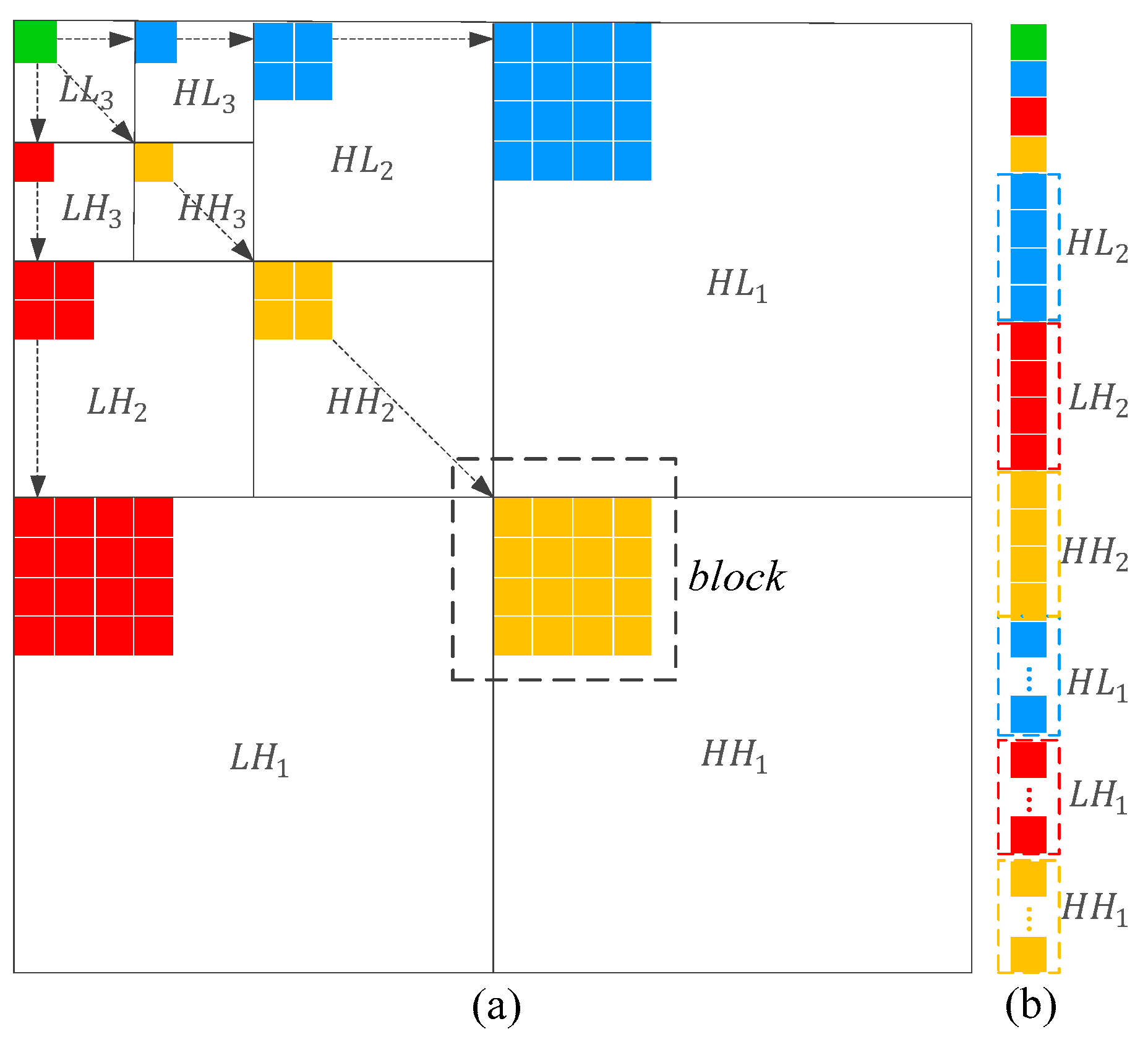

3.1. A New Sparse Vector Based on the Rearrangement of Wavelet Coefficients

3.2. Measurement Matrix with Double Allocation Strategy

- A random Gaussian matrix is constructed.

- In a sparse vector, the importance of the elements decreases from front to back. Increasing the coefficients of the first half of the measurement matrix therefore preserves more of the important image information. To do this, and to satisfy the incoherence requirement for any two columns of the measurement matrix, we introduce an optimized matrix, where and are different prime numbers. Since and are relatively prime, any two columns of are incoherent [45,46].

- The upper-left part of is dot-multiplied with , and the other coefficients of remain unchanged, to obtain an optimized measurement matrix . Because and , the coefficients of the upper-left part of are increased.

- is the size of . It is determined by the measurement rate of each sparse vector. When the total measurement rate is certain, the measurement rate is allocated according to the texture detail complexity of the image. We calculate as follows.

- The elements in the sparse vector correspond to the frequency information of the same area in the image. The high-frequency coefficients reflect the detail information. The energy of the high-frequency coefficients is used to describe the texture detail complexity of the sparse vector. Taking as an example, the energy of the high-frequency coefficients of is , where represents the i-th element in .

- If the total measurement rate is , then the total measurement number is . is divided into two parts: adaptive distribution and fixed distribution. The measurement number allocated adaptively according to the texture complexity is . The measurement number of fixed allocation is , i.e., is evenly allocated to each sparse vector.

- The higher the texture detail complexity, the higher the measurement rate allocated, so that more details are retained. Image areas with low texture detail complexity, such as the background and smooth surface areas, are allocated lower measurement rates. The measurement rate is thus allocated adaptively according to the texture detail complexity, and a linear measurement rate allocation scheme is established on this principle. The measurement number of the sparse vector is , where is the number of sparse vectors and is a proportionality coefficient. Then the measurement number of is , and the final measurement number of is , i.e.,

- The sparse vectors are compressed by the optimized measurement matrix, and the compressed value of is
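The steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The equations are not reproduced in this text, so the following are assumptions: the energy is taken as the sum of squared high-frequency elements; the first `n_low` elements of each sparse vector are treated as low-frequency; the split between the fixed and adaptive budgets (`adaptive_fraction`) is a hypothetical parameter; `weights` stands in for the prime-based optimized matrix, whose definition is not given here; and each vector is measured with the first rows of one shared matrix. All function names are illustrative.

```python
import numpy as np


def high_freq_energy(vector, n_low=1):
    """Energy of the high-frequency part of one sparse vector.

    Assumption: the first n_low elements are low-frequency and the
    energy is the sum of squares of the remaining elements.
    """
    v = np.asarray(vector, dtype=float)
    return float(np.sum(v[n_low:] ** 2))


def allocate_measurements(energies, vector_length, total_rate,
                          adaptive_fraction=0.5):
    """Split a total measurement budget across sparse vectors.

    One part is shared evenly (fixed allocation); the other is shared
    linearly in proportion to high-frequency energy (adaptive allocation).
    adaptive_fraction is a hypothetical knob, not a value from the paper.
    """
    energies = np.asarray(energies, dtype=float)
    n_vectors = energies.size
    total = int(round(total_rate * vector_length * n_vectors))
    adaptive_total = int(round(adaptive_fraction * total))
    fixed_each = (total - adaptive_total) // n_vectors
    energy_sum = energies.sum()
    if energy_sum > 0:
        shares = energies / energy_sum
    else:
        # No texture anywhere: fall back to an even split.
        shares = np.full(n_vectors, 1.0 / n_vectors)
    adaptive_each = np.floor(shares * adaptive_total).astype(int)
    # Keep every count between 1 and the vector length.
    return np.clip(fixed_each + adaptive_each, 1, vector_length)


def boost_upper_left(phi, weights):
    """Element-wise multiply the upper-left block of phi by weights."""
    weights = np.asarray(weights, dtype=float)
    boosted = np.array(phi, dtype=float, copy=True)
    rows, cols = weights.shape
    boosted[:rows, :cols] *= weights
    return boosted


# Usage: four random "sparse vectors" of length 16, measurement rate 0.5.
rng = np.random.default_rng(0)
vectors = rng.standard_normal((4, 16))
energies = [high_freq_energy(v) for v in vectors]
counts = allocate_measurements(energies, vector_length=16, total_rate=0.5)

phi = rng.standard_normal((16, 16))                     # random Gaussian matrix
phi_opt = boost_upper_left(phi, np.full((4, 8), 2.0))   # placeholder weights > 1
# Assumption: vector k is measured with the first counts[k] rows of phi_opt.
compressed = [phi_opt[:m] @ v for m, v in zip(counts, vectors)]
```

Vectors with more high-frequency energy receive longer compressed outputs, while smooth regions fall back towards the fixed share. Flooring the adaptive shares can leave a few measurements of the budget unused; a real encoder would need a rule for distributing that remainder.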

3.3. Image Reconstruction

4. Experimental Results and Analysis

4.1. Evaluation Standard

4.2. Experimental Data and Evaluation

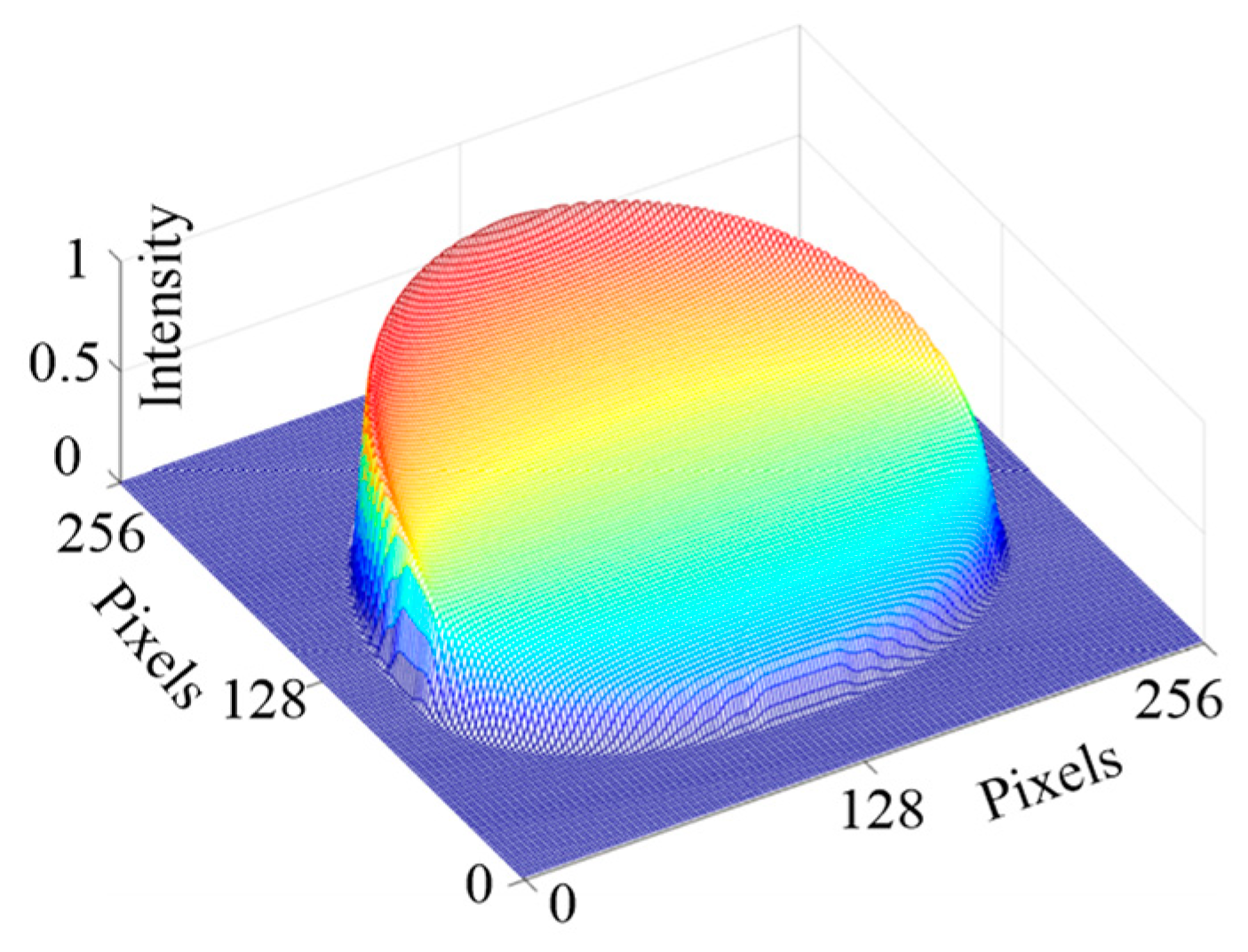

4.2.1. Simulated Images of Celestial Bodies

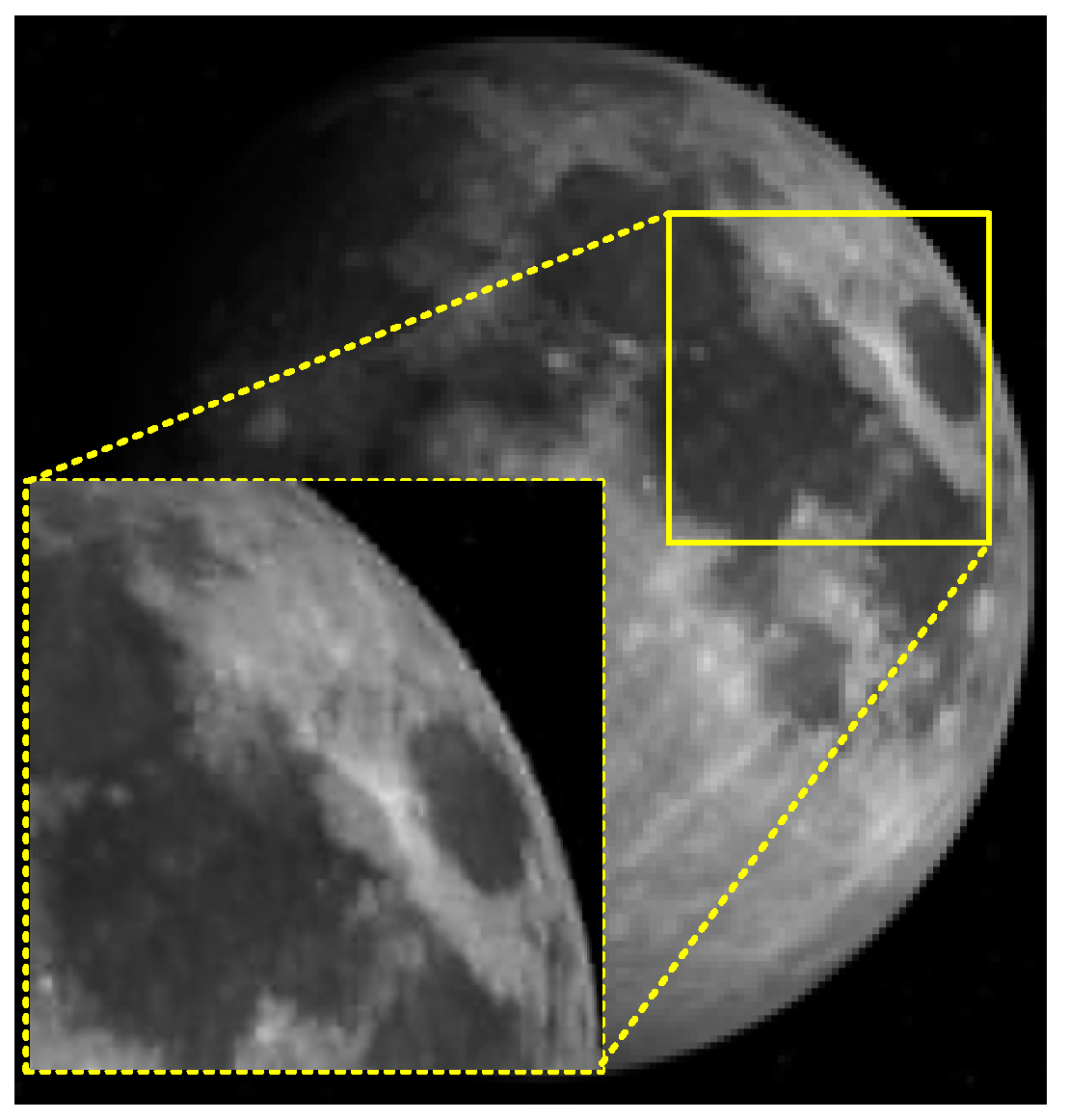

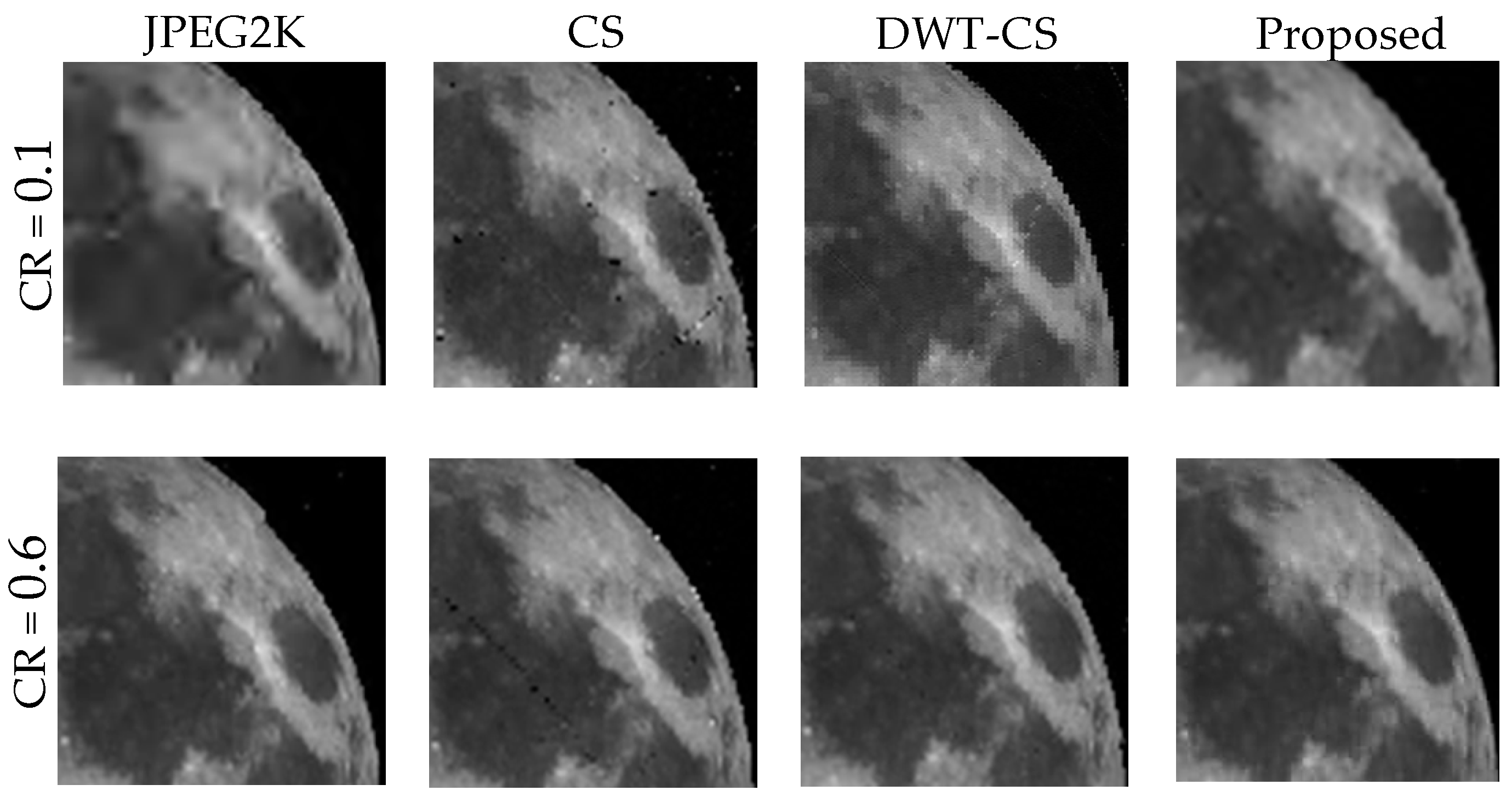

4.2.2. Moon Image

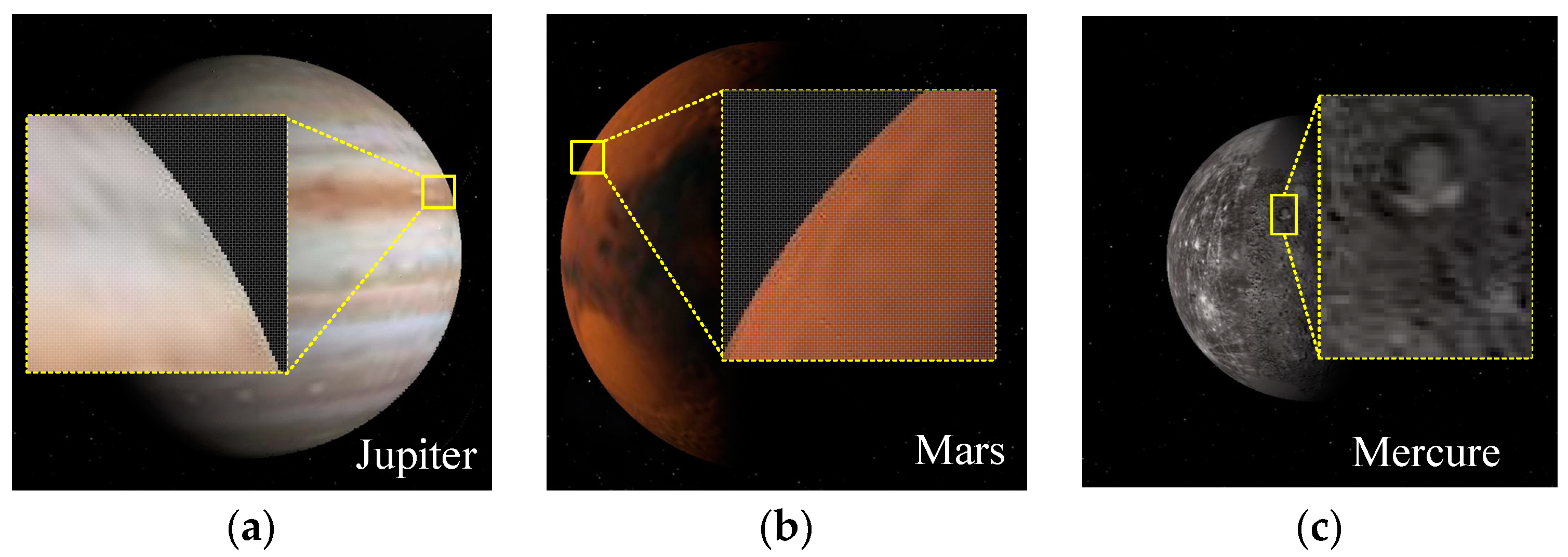

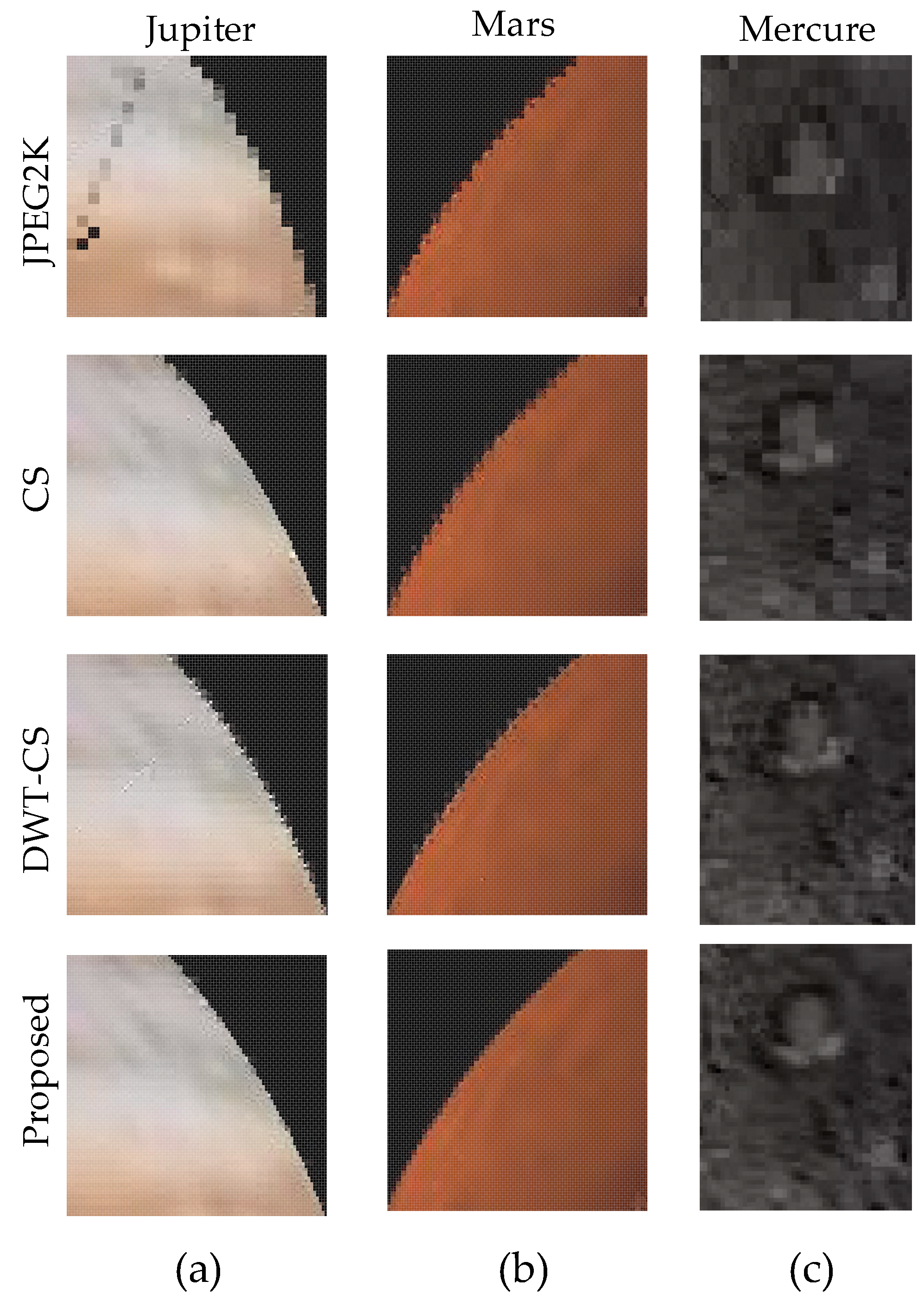

4.2.3. Planet Image

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ma, X.; Fang, J.; Ning, X. An overview of the autonomous navigation for a gravity-assist interplanetary spacecraft. Prog. Aerosp. Sci. 2013, 63, 56–66.

2. Jiang, J.; Wang, H.; Zhang, G. High-accuracy synchronous extraction algorithm of star and celestial body features for optical navigation sensor. IEEE Sens. J. 2018, 18, 713–723.

3. Zhang, Y.; Jiang, J.; Zhang, G.; Lu, Y. Accurate and Robust Synchronous Extraction Algorithm for Star Centroid and Nearby Celestial Body Edge. IEEE Access 2019, 7, 126742–126752.

4. Blanes, I.; Magli, E.; Serra-Sagristà, J. A Tutorial on Image Compression for Optical Space Imaging Systems. IEEE Geosci. Remote Sens. Mag. 2014, 2, 8–26.

5. Hernández-Cabronero, M.; Portell, J.; Blanes, I.; Serra-Sagristà, J. High-Performance lossless compression of hyperspectral remote sensing scenes based on spectral decorrelation. Remote Sens. 2020, 12, 2955.

6. Magli, E.; Valsesia, D.; Vitulli, R. Onboard payload data compression and processing for spaceborne imaging. Int. J. Remote Sens. 2018, 39, 1951–1952.

7. Báscones, D.; González, C.; Mozos, D. An FPGA accelerator for real-time lossy compression of hyperspectral images. Remote Sens. 2020, 12, 2563.

8. Rui, Z.; Zhaokui, W.; Yulin, Z. A person-following nanosatellite for in-cabin astronaut assistance: System design and deep-learning-based astronaut visual tracking implementation. Acta Astronaut. 2019, 162, 121–134.

9. Orlandić, M.; Fjeldtvedt, J.; Johansen, T. A Parallel FPGA Implementation of the CCSDS-123 Compression Algorithm. Remote Sens. 2019, 11, 673.

10. Christian, J.A. Optical Navigation for a Spacecraft in a Planetary System. Ph.D. Thesis, Department of Aerospace Engineering, The University of Texas, Austin, TX, USA, 2010.

11. Mortari, D.; de Dilectis, F.; Zanetti, R. Position estimation using the image derivative. Aerospace 2015, 2, 435–460.

12. Christian, J.A. Optical navigation using planet’s centroid and apparent diameter in image. J. Guid. Control Dyn. 2015, 38, 192–204.

13. Christian, J.A.; Glenn Lightsey, E. An on-board image processing algorithm for a spacecraft optical navigation sensor system. AIAA Sp. Conf. Expo. 2010, 2010.

14. Guerra, R.; Barrios, Y.; Díaz, M.; Santos, L.; López, S.; Sarmiento, R. A new algorithm for the on-board compression of hyperspectral images. Remote Sens. 2018, 10, 428.

15. Weinberger, M.J.; Seroussi, G.; Sapiro, G. The LOCO-I lossless image compression algorithm: Principles and standardization into JPEG-LS. IEEE Trans. Image Process. 2000, 9, 1309–1324.

16. Du, B.; Ye, Z.F. A novel method of lossless compression for 2-D astronomical spectra images. Exp. Astron. 2009, 27, 19–26.

17. Aiazzi, B.; Selva, M.; Arienzo, A.; Baronti, S. Influence of the system MTF on the on-board lossless compression of hyperspectral raw data. Remote Sens. 2019, 11, 791.

18. Starck, J.L.; Bobin, J. Astronomical data analysis and sparsity: From wavelets to compressed sensing. Proc. IEEE 2010, 98, 1021–1030.

19. Pata, P.; Schindler, J. Astronomical context coder for image compression. Exp. Astron. 2015, 39, 495–512.

20. Fischer, C.E.; Müller, D.; De Moortel, I. JPEG2000 Image Compression on Solar EUV Images. Sol. Phys. 2017, 292.

21. Shi, C.; Wang, L.; Zhang, J.; Miao, F.; He, P. Remote sensing image compression based on direction lifting-based block transform with content-driven quadtree coding adaptively. Remote Sens. 2018, 10, 999.

22. Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

23. Candès, E.J.; Wakin, M.B. An Introduction to Compressive Sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

24. Candès, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

25. Candès, E.J.; Romberg, J.K.; Tao, T. Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 2006, 59, 1207–1223.

26. Fiandrotti, A.; Fosson, S.M.; Ravazzi, C.; Magli, E. GPU-accelerated algorithms for compressed signals recovery with application to astronomical imagery deblurring. Int. J. Remote Sens. 2018, 39, 2043–2065.

27. Gallana, L.; Fraternale, F.; Iovieno, M.; Fosson, S.M.; Magli, E.; Opher, M.; Richardson, J.D.; Tordella, D. Voyager 2 solar plasma and magnetic field spectral analysis for intermediate data sparsity. J. Geophys. Res. A Sp. Phys. 2016, 121, 3905–3919.

28. Ma, J. Single-Pixel remote sensing. IEEE Geosci. Remote Sens. Lett. 2009, 6, 199–203.

29. Bobin, J.; Starck, J.L.; Ottensamer, R. Compressed sensing in astronomy. IEEE J. Sel. Top. Signal Process. 2008, 2, 718–726.

30. Ma, J.; Hussaini, M.Y. Extensions of compressed imaging: Flying sensor, coded mask, and fast decoding. IEEE Trans. Instrum. Meas. 2011, 60, 3128–3139.

31. Antonini, M.; Barlaud, M.; Mathieu, P.; Daubechies, I. Image coding using wavelet transform. IEEE Trans. Image Process. 1992, 1, 205–220.

32. Choi, H.; Jeong, J. Speckle noise reduction technique for SAR images using statistical characteristics of speckle noise and discrete wavelet transform. Remote Sens. 2019, 11, 1184.

33. Ruan, Q.; Ruan, Y. Digital Image Processing; Publishing House of Electronics Industry: Beijing, China, 2011; pp. 289–330.

34. Bianchi, T.; Bioglio, V.; Magli, E. Analysis of one-time random projections for privacy preserving compressed sensing. IEEE Trans. Inf. Forensics Secur. 2016, 11, 313–327.

35. Temlyakov, V.N. Greedy algorithms in Banach spaces. Adv. Comput. Math. 2001, 14, 277–292.

36. Tropp, J.A. Greed is good: Algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 2004, 50, 2231–2242.

37. Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

38. Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic Decomposition by Basis Pursuit. SIAM Rev. 2001, 43, 129–159.

39. Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

40. Qureshi, M.A.; Deriche, M. A new wavelet based efficient image compression algorithm using compressive sensing. Multimed. Tools Appl. 2016, 75, 6737–6754.

41. Lewis, A.S.; Knowles, G. Image Compression Using the 2-D Wavelet Transform. IEEE Trans. Image Process. 1992, 1, 244–250.

42. Tan, J.; Ma, Y.; Baron, D. Compressive Imaging via Approximate Message Passing with Image Denoising. IEEE Trans. Signal Process. 2015, 63, 2085–2092.

43. Do, T.T.; Gan, L.; Nguyen, N.; Tran, T.D. Sparsity adaptive matching pursuit algorithm for practical compressed sensing. In Proceedings of the 2008 42nd Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 26–29 October 2008; pp. 581–587.

44. Shi, C.; Zhang, J.; Zhang, Y. A novel vision-based adaptive scanning for the compression of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1336–1348.

45. Bajwa, W.U.; Haupt, J.D.; Raz, G.M.; Wright, S.J.; Nowak, R.D. Toeplitz-Structured Compressed Sensing Matrices. In Proceedings of the IEEE/SP Workshop on Statistical Signal Processing, Madison, WI, USA, 26–29 August 2007.

46. Do, T.T.; Tran, T.D.; Gan, L. Fast compressive sampling with structurally random matrices. In Proceedings of the IEEE International Conference on Acoustics, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 3369–3372.

47. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

48. Li, J.; Fu, Y.; Li, G.; Liu, Z. Remote Sensing Image Compression in Visible/Near-Infrared Range Using Heterogeneous Compressive Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4932–4938.

49. The Celestia Motherlode. Available online: http://www.celestiamotherlode.net/ (accessed on 8 January 2021).

50. Skodras, A.; Christopoulos, C.; Ebrahimi, T. The JPEG 2000 still image compression standard. IEEE Signal Process. Mag. 2001, 18, 36–58.

| Level | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|
| Number of vectors | mn/16 | mn/64 | mn/256 | mn/1024 | mn/4096 |
| Vector length | 16 | 64 | 256 | 1024 | 4096 |

| Method | CR = 0.1 | CR = 0.2 | CR = 0.3 | CR = 0.4 | CR = 0.5 | CR = 0.6 |
|---|---|---|---|---|---|---|
| JPEG2K | 25.3267 | 27.2289 | 31.5126 | 36.0962 | 43.1136 | 52.8420 |
| Compressed Sensing (CS) | 26.5761 | 28.5148 | 31.0717 | 35.7043 | 42.4761 | 46.2453 |
| DWT-CS | 28.3680 | 30.2784 | 33.9643 | 37.6612 | 44.2360 | 51.5039 |
| Proposed | 30.2346 | 32.1275 | 35.3267 | 41.9209 | 48.0359 | 55.9472 |

| Method | CR = 0.1 | CR = 0.2 | CR = 0.3 | CR = 0.4 | CR = 0.5 | CR = 0.6 |
|---|---|---|---|---|---|---|
| JPEG2K | 0.7810 | 0.7998 | 0.8355 | 0.8759 | 0.9196 | 0.9810 |
| CS | 0.7902 | 0.8098 | 0.8309 | 0.8646 | 0.9150 | 0.9617 |
| DWT-CS | 0.8004 | 0.8352 | 0.8583 | 0.8802 | 0.9284 | 0.9786 |
| Proposed | 0.8135 | 0.8490 | 0.8618 | 0.9028 | 0.9680 | 0.9987 |

| Method | CR = 0.1 | CR = 0.2 | CR = 0.3 | CR = 0.4 | CR = 0.5 | CR = 0.6 |
|---|---|---|---|---|---|---|
| JPEG2K | 21.6909 | 24.4795 | 26.9634 | 29.5922 | 33.6692 | 35.1282 |
| CS | 24.2437 | 26.2524 | 28.0572 | 30.1587 | 32.9893 | 34.8554 |
| DWT-CS | 25.8281 | 27.8570 | 29.2517 | 32.4487 | 34.3943 | 35.3095 |
| Proposed | 28.9721 | 31.5847 | 33.9489 | 34.7856 | 35.9889 | 37.0392 |

| Method | CR = 0.1 | CR = 0.2 | CR = 0.3 | CR = 0.4 | CR = 0.5 | CR = 0.6 |
|---|---|---|---|---|---|---|
| JPEG2K | 0.6476 | 0.6994 | 0.7455 | 0.7942 | 0.8698 | 0.8969 |
| CS | 0.6950 | 0.7323 | 0.7657 | 0.8047 | 0.8572 | 0.8918 |
| DWT-CS | 0.7244 | 0.7620 | 0.7879 | 0.8472 | 0.8833 | 0.9002 |
| Proposed | 0.7827 | 0.8312 | 0.8750 | 0.8905 | 0.9128 | 0.9323 |

| Method | Planet | CR = 0.1 | CR = 0.2 | CR = 0.3 | CR = 0.4 | CR = 0.5 | CR = 0.6 |
|---|---|---|---|---|---|---|---|
| JPEG2K | Jupiter | 0.6648 | 0.7193 | 0.7943 | 0.8641 | 0.8862 | 0.9012 |
| JPEG2K | Mars | 0.6935 | 0.7412 | 0.7822 | 0.8438 | 0.8910 | 0.9272 |
| JPEG2K | Mercury | 0.6231 | 0.7081 | 0.7765 | 0.8140 | 0.8742 | 0.9181 |
| CS | Jupiter | 0.6762 | 0.7457 | 0.8163 | 0.8660 | 0.8900 | 0.9132 |
| CS | Mars | 0.6942 | 0.7553 | 0.8044 | 0.8570 | 0.8783 | 0.9078 |
| CS | Mercury | 0.6555 | 0.7394 | 0.7841 | 0.8222 | 0.8876 | 0.9307 |
| DWT-CS | Jupiter | 0.7063 | 0.7911 | 0.8271 | 0.8713 | 0.8955 | 0.9369 |
| DWT-CS | Mars | 0.7420 | 0.7891 | 0.8293 | 0.8612 | 0.8852 | 0.9644 |
| DWT-CS | Mercury | 0.6721 | 0.7741 | 0.8316 | 0.8626 | 0.8930 | 0.9497 |
| Proposed | Jupiter | 0.7884 | 0.8112 | 0.8680 | 0.9019 | 0.9386 | 0.9697 |
| Proposed | Mars | 0.7700 | 0.8180 | 0.8436 | 0.8845 | 0.9281 | 0.9874 |
| Proposed | Mercury | 0.7291 | 0.8050 | 0.8504 | 0.8819 | 0.9218 | 0.9636 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Jiang, J.; Zhang, G. Compression of Remotely Sensed Astronomical Image Using Wavelet-Based Compressed Sensing in Deep Space Exploration. Remote Sens. 2021, 13, 288. https://doi.org/10.3390/rs13020288
