Satellite-Based Precipitation Datasets Evaluation Using Gauge Observation and Hydrological Modeling in a Typical Arid Land Watershed of Central Asia

Abstract

1. Introduction

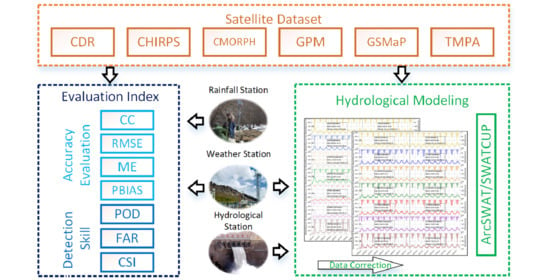

2. Data and Methods

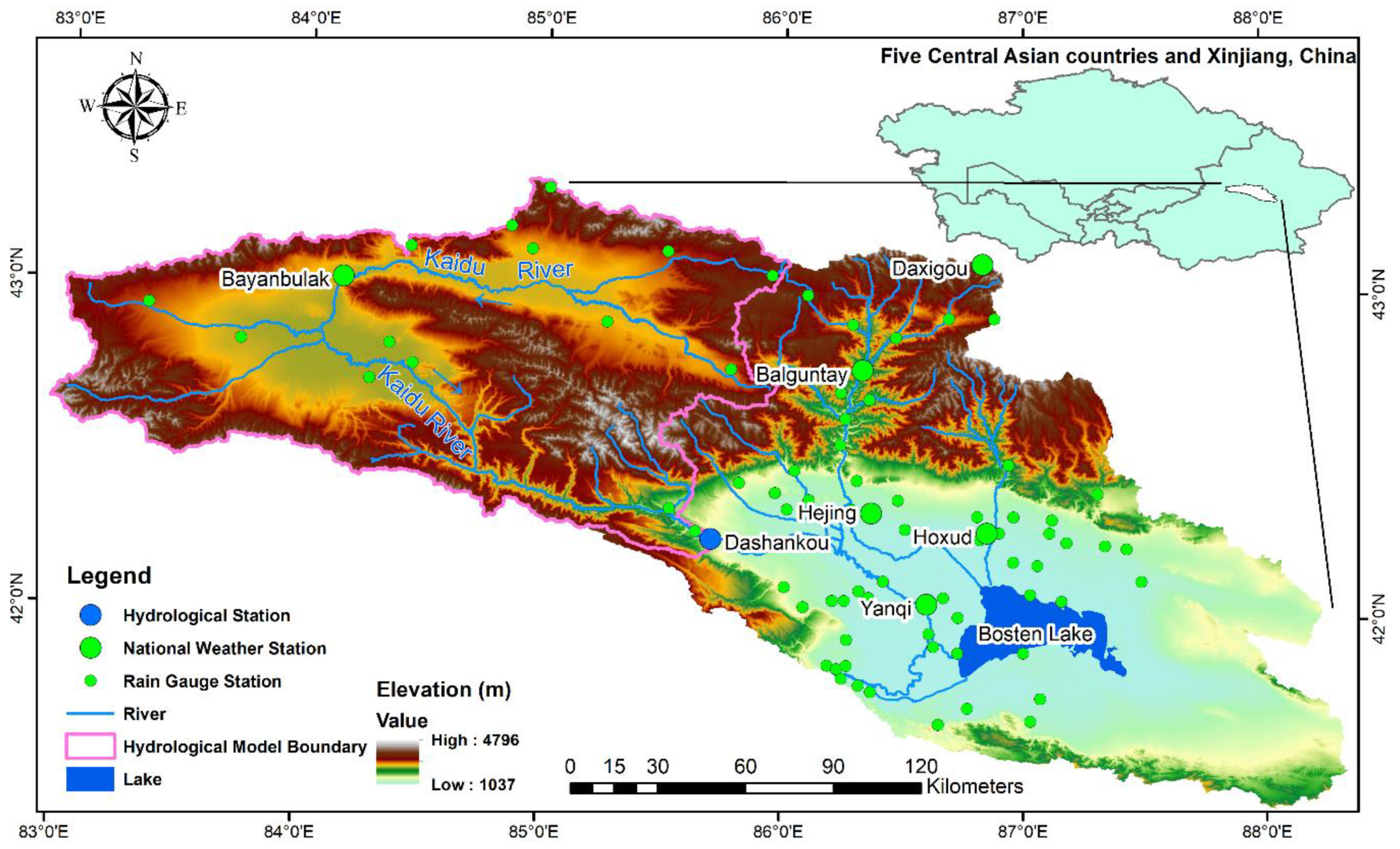

2.1. Study Area

2.2. Observed Data of Ground Stations

2.3. Satellite Precipitation Datasets

2.3.1. CDR

2.3.2. CHIRPS

2.3.3. CMORPH

2.3.4. GPM

2.3.5. GSMaP

2.3.6. TMPA

2.4. The SWAT Model

2.5. Evaluation Indexes and Correction Method

2.5.1. Evaluation Indexes of Datasets Accuracy

2.5.2. Evaluation Indexes of Hydrological Model

2.5.3. Datasets Correction Method

3. Results

3.1. Comparison Between RG Station Data and Satellite Precipitation Datasets

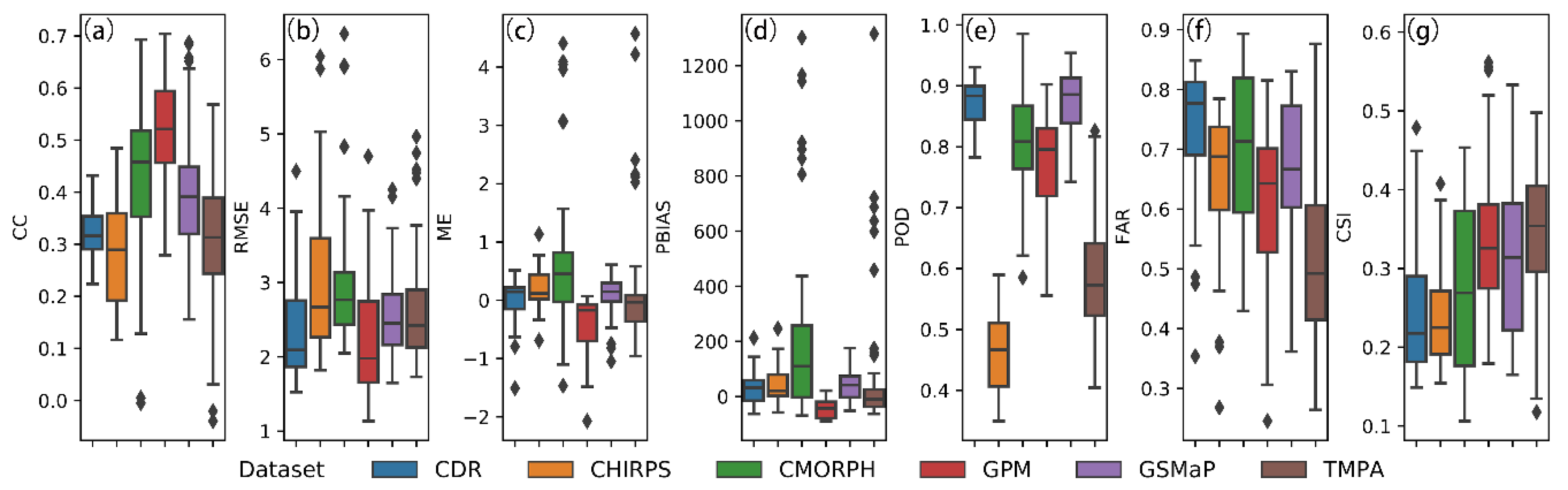

3.1.1. Evaluation Indexes Performance

3.1.2. Influence of Rainfall Intensity on the Evaluation Index

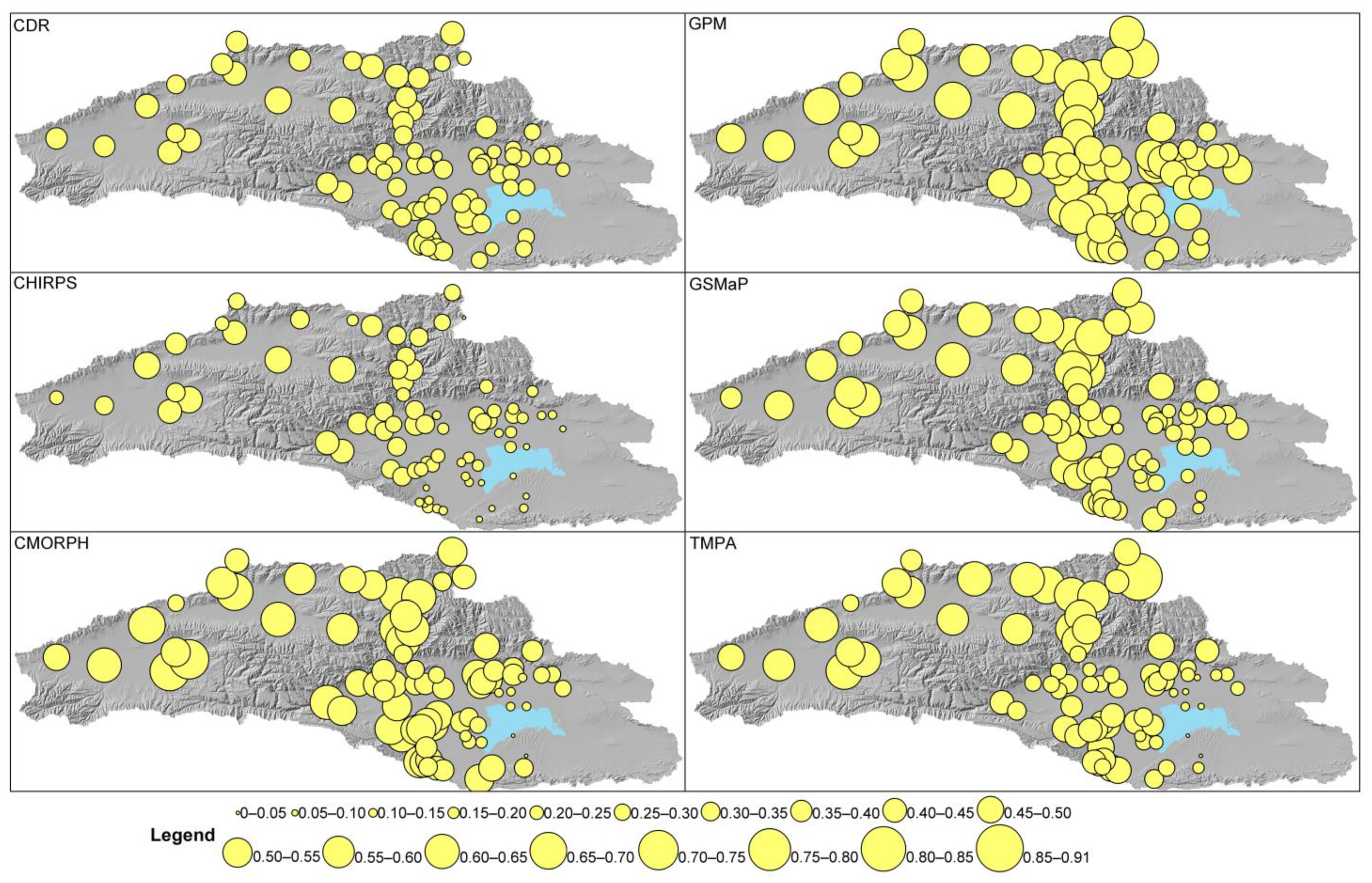

3.1.3. Spatial Distribution of Datasets Performance

3.2. Annual and Interannual Performance of Satellite Precipitation Datasets

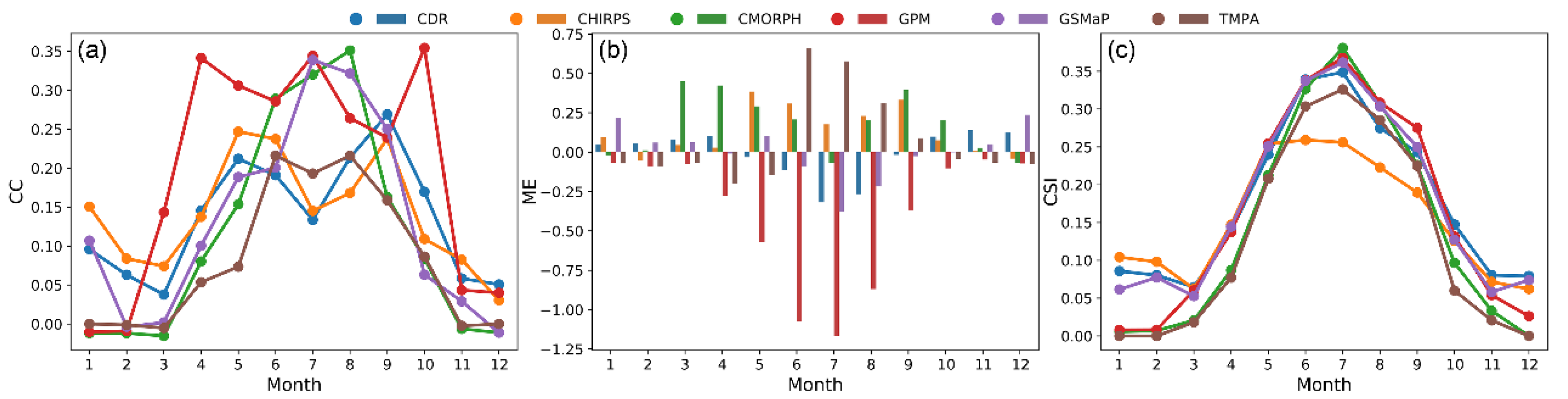

3.2.1. Performance Variation in Different Months

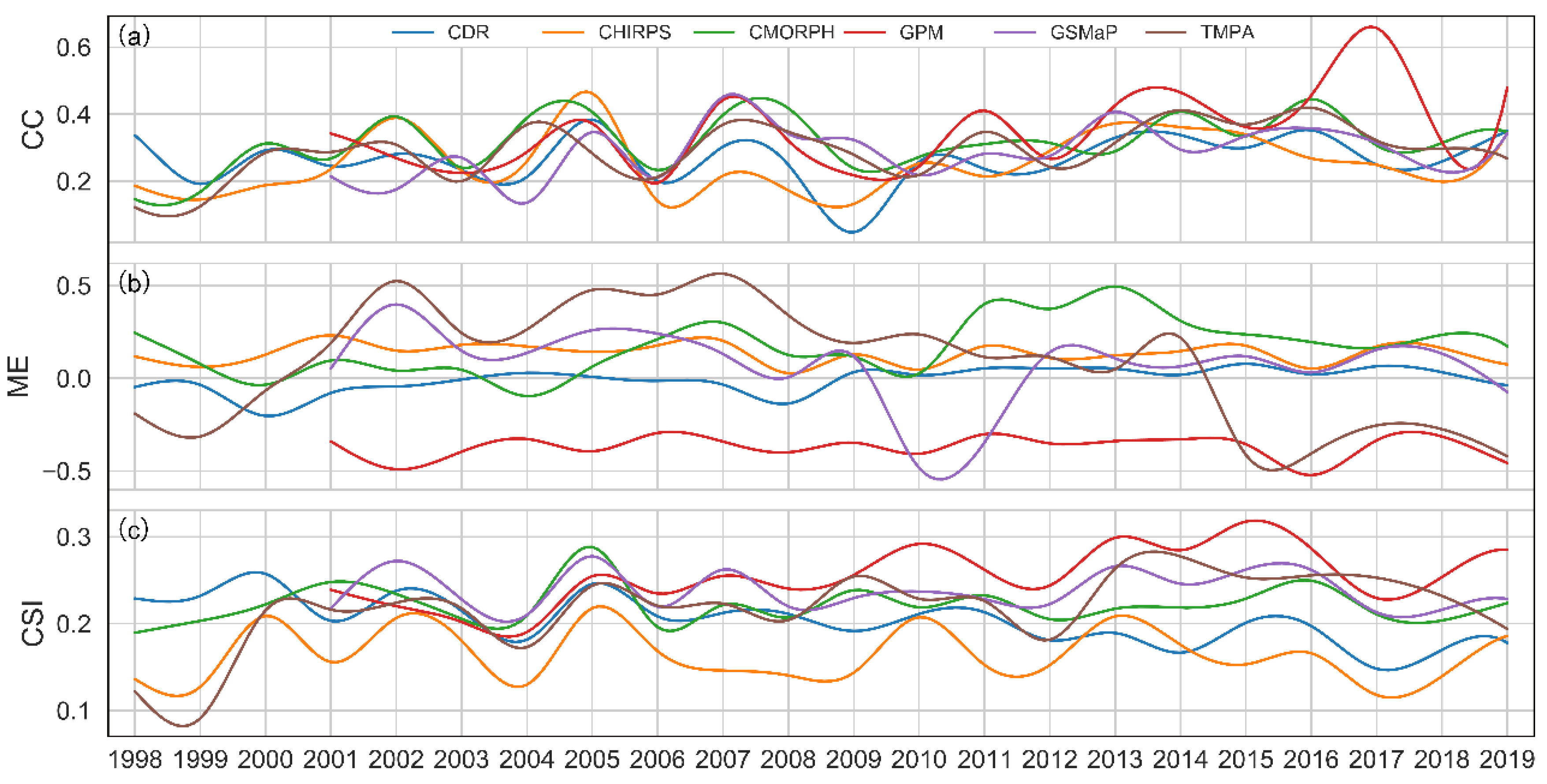

3.2.2. Trend of Datasets Performance Over the Years

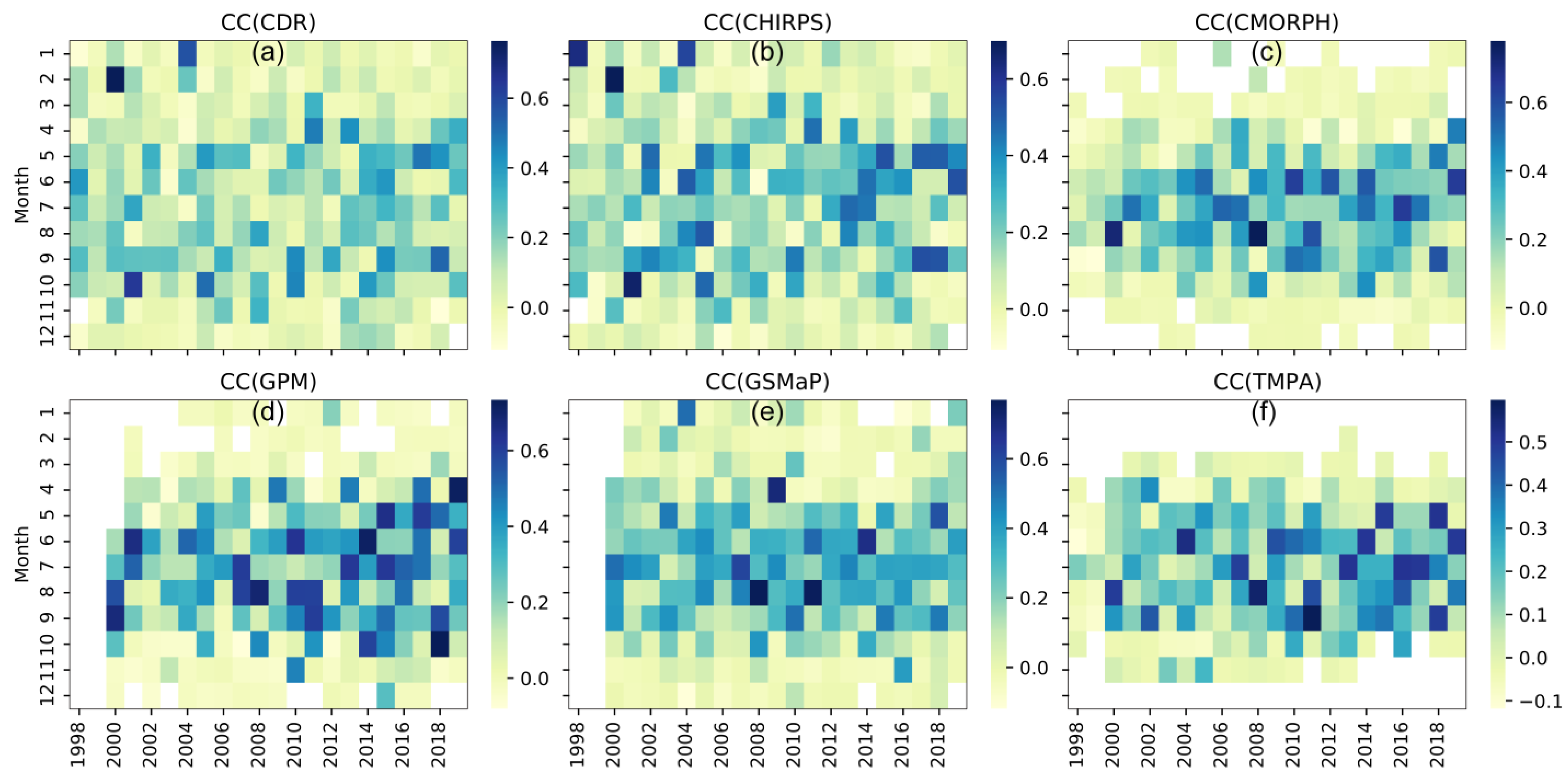

3.2.3. Multi-year Variation of Correlation Coefficient in Each Month

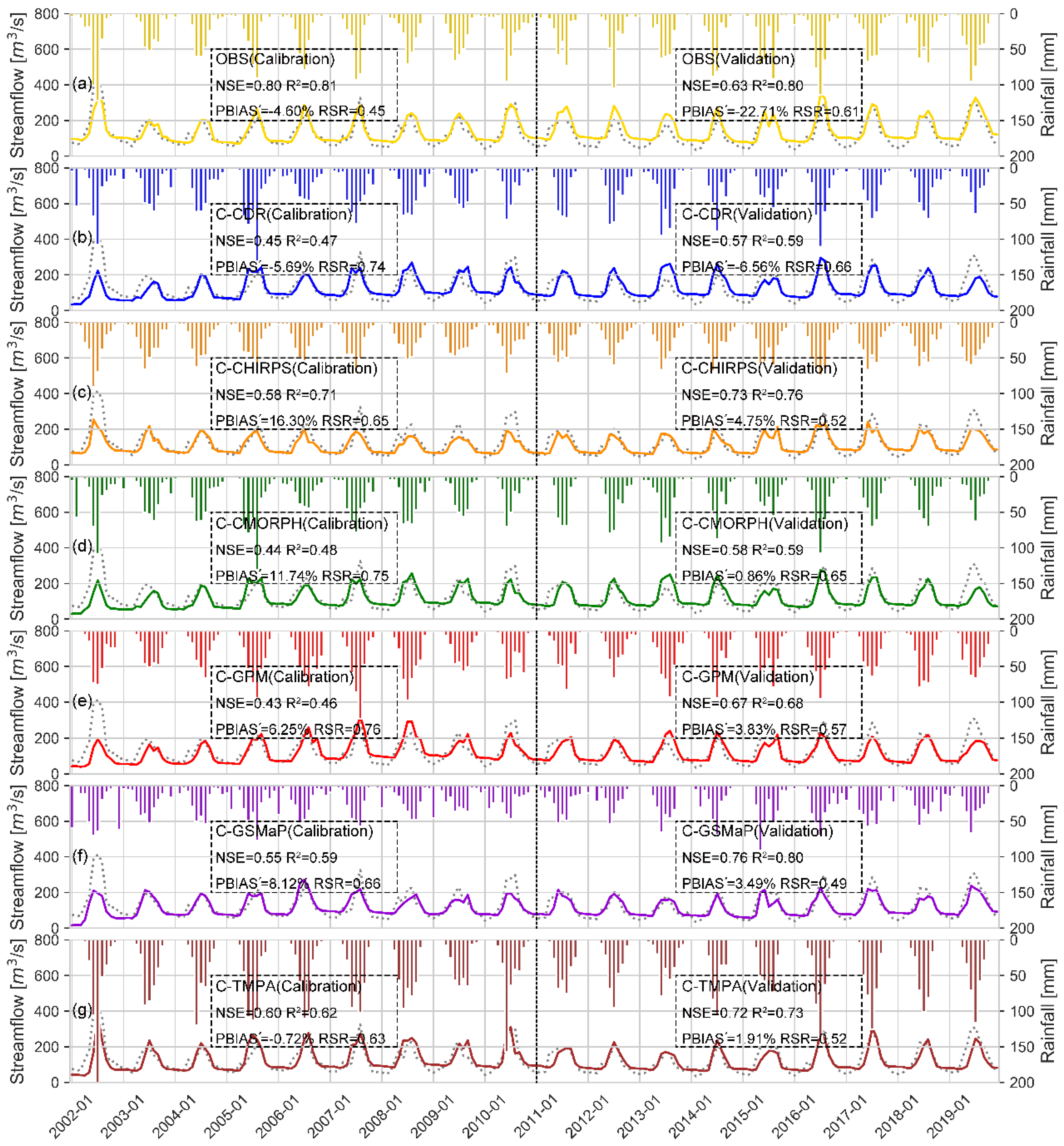

3.3. Performance in Hydrological Simulations

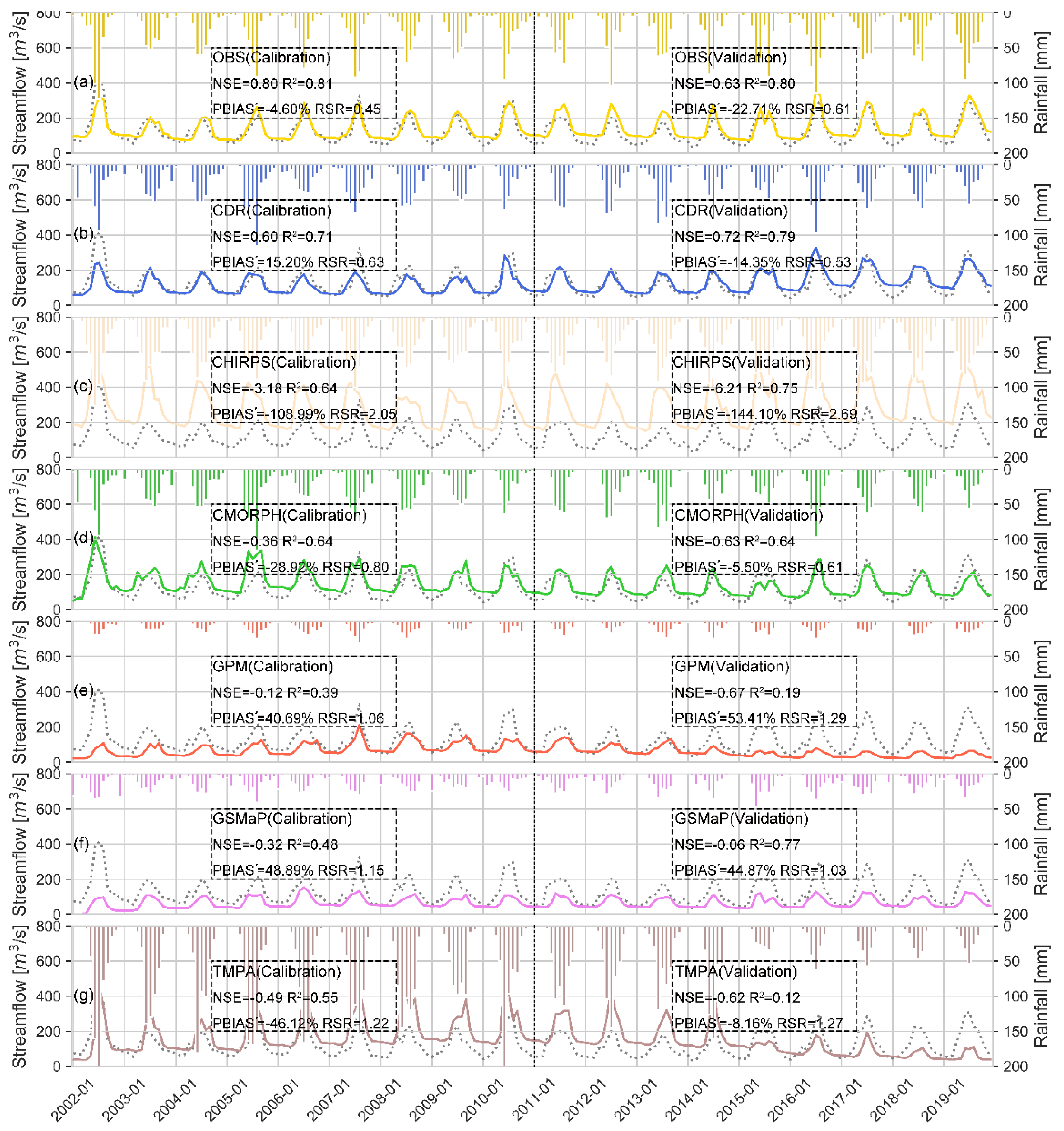

3.3.1. Streamflow Simulation of Raw Satellite Datasets

3.3.2. Streamflow Simulation of Corrected Satellite Datasets

4. Discussion

4.1. Outstanding Characteristics of Each Satellite Dataset

4.2. Similarity of the Satellite Datasets

4.3. Similarities and Differences in the Datasets Hydrological Application

4.4. Further Study

5. Conclusions

- GPM was the best dataset in the daily-scale rainfall evaluation. It had the highest correlation with observed data (CC = 0.52), the lowest RMSE (2.27 mm), a slight underestimation, and reasonably good rainfall detection ability. CHIRPS and CMORPH performed relatively poorly at the daily scale: CHIRPS had the worst rainfall detection skill (POD = 0.46), while CMORPH strongly overestimated rainfall;

- CDR was the best dataset in the monthly-scale rainfall evaluation, with the closest agreement with observed data (ranked first in CC, RMSE, ME, and PBIAS) and good rainfall detection ability. In contrast, CMORPH performed poorly because of its persistent overestimation. TMPA also scored poorly on several indices (ranked sixth in CC and fifth in RMSE and PBIAS) and was less effective in monthly rainfall estimation compared with the others;

- All six datasets tended to perform better in the wetter regions of the basin. CDR and GPM had the most spatially uniform performance: CDR had the smallest errors and the least variation in error across the basin, while GPM correlated well with gauge stations throughout the basin;

- In the multi-year evaluation, the correlation between each dataset and the NW stations improved over time, especially during the rainy season (April to October), with GPM showing the largest increase. Within the year, CDR and CHIRPS were the two best-performing datasets in winter, and all datasets tended to perform better in summer;

- In the hydrological model application, the CDR-driven model performed best among the raw satellite datasets and in some years even outperformed the model driven by observed data. Among the remaining datasets, the CHIRPS- and TMPA-driven models overestimated streamflow, the GPM- and GSMaP-driven models underestimated it, and CMORPH was the only one that came close to a “satisfactory” rating;

- After a simple correction, the datasets with large deviations achieved good results in hydrological modeling. Overall, satellite precipitation datasets can serve as an alternative data source for hydrological research in data-scarce areas.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Dataset Version | Short Name | Release Date | Resolution | Period |
|---|---|---|---|---|
| PERSIANN-CDR_V1_R1 | CDR | 2014 | 0.25°/1 d | 1983–present |
| CHIRPS_2.0 | CHIRPS | 2015 | 0.25°/1 d | 1981–present |
| CMORPH_IFlOODS_V1.0 | CMORPH | 2013 | 0.25°/1 d | 1998–2019 |
| GPM_IMERGF_V06 | GPM | 2019 | 0.10°/1 d | 2000–present |
| GSMaP_V6 | GSMaP | 2016 | 0.25°/1 d | 2000–present |
| TMPA_3B42_daily_V7 | TMPA | 2016 | 0.25°/1 d | 1998–2019 |

| Time Scale | Satellite Data | CC | RMSE (mm) | ME (mm) * | PBIAS (%) * | POD | FAR | CSI |
|---|---|---|---|---|---|---|---|---|
| Daily | CDR | 0.32 | 2.38 | 0.04 (0.25) | 29.92 (46.96) | 0.87 | 0.74 | 0.25 |
| | CHIRPS | 0.29 | 3.03 | 0.19 (0.25) | 40.60 (49.35) | 0.46 | 0.66 | 0.24 |
| | CMORPH | 0.43 | 3.22 | 0.86 (1.08) | 244.82 (260.23) | 0.81 | 0.70 | 0.27 |
| | GPM | 0.52 | 2.27 | −0.38 (0.38) | −43.28 (45.08) | 0.77 | 0.61 | 0.34 |
| | GSMaP | 0.40 | 2.56 | 0.11 (0.25) | 39.87 (49.70) | 0.87 | 0.67 | 0.32 |
| | TMPA | 0.31 | 2.79 | 0.11 (0.47) | 56.31 (93.87) | 0.59 | 0.53 | 0.34 |
| Monthly | CDR | 0.69 | 17.81 | 1.15 (7.69) | 30.98 (48.15) | 1.00 | 0.16 | 0.84 |
| | CHIRPS | 0.63 | 18.91 | 5.80 (7.77) | 40.97 (49.80) | 1.00 | 0.15 | 0.85 |
| | CMORPH | 0.48 | 45.07 | 27.40 (33.60) | 257.45 (271.97) | 1.00 | 0.15 | 0.85 |
| | GPM | 0.63 | 22.00 | −11.47 (11.63) | −43.26 (45.06) | 0.99 | 0.15 | 0.85 |
| | GSMaP | 0.59 | 19.58 | 3.46 (7.70) | 41.47 (51.52) | 1.00 | 0.15 | 0.85 |
| | TMPA | 0.42 | 33.27 | 3.40 (14.18) | 56.70 (94.24) | 0.89 | 0.10 | 0.80 |
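The indices in the table above follow standard definitions, and a minimal sketch of how they might be computed from paired gauge and satellite series is given here. Several choices are assumptions rather than details taken from this article: the function names are hypothetical, PBIAS is written so that positive values mean overestimation by the satellite product (consistent with the signs of ME in the table), and the 0.1 mm rain/no-rain threshold is only an illustrative default.

```python
import numpy as np


def continuous_indices(obs, sat):
    """CC, RMSE, ME and PBIAS for paired gauge (obs) and satellite (sat) series."""
    obs = np.asarray(obs, dtype=float)
    sat = np.asarray(sat, dtype=float)
    diff = sat - obs  # positive values mean the satellite overestimates
    return {
        "CC": float(np.corrcoef(obs, sat)[0, 1]),
        "RMSE": float(np.sqrt(np.mean(diff ** 2))),
        "ME": float(np.mean(diff)),
        "PBIAS": float(100.0 * diff.sum() / obs.sum()),
    }


def _ratio(numerator, denominator):
    # Return NaN instead of raising when no events fall in a category.
    return numerator / denominator if denominator else float("nan")


def detection_indices(obs, sat, threshold=0.1):
    """POD, FAR and CSI from a rain/no-rain contingency table.

    threshold is an assumed rain/no-rain cutoff in mm, not a value
    stated in the article.
    """
    obs_rain = np.asarray(obs, dtype=float) >= threshold
    sat_rain = np.asarray(sat, dtype=float) >= threshold
    hits = int(np.sum(obs_rain & sat_rain))
    misses = int(np.sum(obs_rain & ~sat_rain))
    false_alarms = int(np.sum(~obs_rain & sat_rain))
    return {
        "POD": _ratio(hits, hits + misses),
        "FAR": _ratio(false_alarms, hits + false_alarms),
        "CSI": _ratio(hits, hits + misses + false_alarms),
    }


if __name__ == "__main__":
    # Made-up daily values (mm), for illustration only.
    gauge = [0.0, 2.0, 0.0, 5.0, 1.0, 0.0]
    satellite = [0.5, 1.0, 0.0, 6.0, 0.0, 0.0]
    print(continuous_indices(gauge, satellite))
    print(detection_indices(gauge, satellite))
```

Keeping the continuous and categorical indices in separate functions mirrors how the table groups them: the first four columns measure magnitude agreement, while POD, FAR, and CSI depend only on whether rain was detected.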

| Dataset | Raw: Calibration | Raw: Validation | Corrected: Calibration | Corrected: Validation |
|---|---|---|---|---|
| OBS | Very good | Satisfactory | Very good | Satisfactory |
| CDR | Satisfactory | Good | Unsatisfactory | Satisfactory |
| CHIRPS | Unsatisfactory | Unsatisfactory | Satisfactory | Good |
| CMORPH | Unsatisfactory | Satisfactory | Unsatisfactory | Satisfactory |
| GPM | Unsatisfactory | Unsatisfactory | Unsatisfactory | Good |
| GSMaP | Unsatisfactory | Unsatisfactory | Satisfactory | Very good |
| TMPA | Unsatisfactory | Unsatisfactory | Satisfactory | Good |
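Qualitative ratings such as those in the table above are commonly derived from the Nash–Sutcliffe efficiency (NSE) of simulated streamflow. The sketch below is illustrative only: it assumes the widely used monthly-step NSE thresholds from the guidelines of Moriasi et al. (2007), whereas the ratings in this article may rest on additional indices or different cutoffs. The function names `nse` and `rate_nse` are hypothetical.

```python
import numpy as np


def nse(observed, simulated):
    """Nash-Sutcliffe efficiency: 1 minus residual variance over observed variance."""
    observed = np.asarray(observed, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    residual = np.sum((observed - simulated) ** 2)
    variance = np.sum((observed - observed.mean()) ** 2)
    return float(1.0 - residual / variance)


def rate_nse(value):
    """Map an NSE value to a rating class.

    Thresholds are the assumed monthly-step guideline values
    (NSE > 0.75, > 0.65, > 0.50); they are not quoted from this article.
    """
    if value > 0.75:
        return "Very good"
    if value > 0.65:
        return "Good"
    if value > 0.50:
        return "Satisfactory"
    return "Unsatisfactory"


if __name__ == "__main__":
    # Made-up monthly streamflow values, for illustration only.
    observed_flow = [10.0, 20.0, 30.0, 40.0]
    simulated_flow = [12.0, 18.0, 33.0, 39.0]
    score = nse(observed_flow, simulated_flow)
    print(round(score, 3), rate_nse(score))
```

An NSE of 1 indicates a perfect fit, and values at or below 0 mean the simulation is no better than using the observed mean as the prediction.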

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Peng, J.; Liu, T.; Huang, Y.; Ling, Y.; Li, Z.; Bao, A.; Chen, X.; Kurban, A.; De Maeyer, P. Satellite-Based Precipitation Datasets Evaluation Using Gauge Observation and Hydrological Modeling in a Typical Arid Land Watershed of Central Asia. Remote Sens. 2021, 13, 221. https://doi.org/10.3390/rs13020221