Abstract

Building change detection is a critical field for monitoring artificial structures using high-resolution multitemporal images. However, relief displacement, which depends on the azimuth and elevation angles of the sensor, causes numerous false alarms and misdetections of building changes. Therefore, this study proposes an effective object-based building change detection method that considers the azimuth and elevation angles of sensors in high-resolution images. To this end, segmentation images were generated from high-resolution images using a multiresolution technique, after which object-based building detection was performed. To detect building candidates, we calculated features that describe building objects, such as rectangular fit, gray-level co-occurrence matrix (GLCM) homogeneity, and area. Final building detection was then performed by considering the location relationship between building objects and their shadows using the Sun’s azimuth angle. Subsequently, building change detection of the final building objects was performed using three methods that consider the relationship between building object properties in the two images. First, only the overlap of objects between images was considered to detect changes. Second, the size difference between objects according to the sensor’s elevation angle was considered to detect building changes. Third, the direction between objects according to the sensor’s azimuth angle was analyzed to identify building changes. To confirm the effectiveness of the proposed object-based building change detection, two areas with dense buildings were selected as study sites. Site 1 was constructed using bitemporal images from a single sensor (KOMPSAT-3), whereas Site 2 consisted of multi-sensor images from KOMPSAT-3 and an unmanned aerial vehicle (UAV). The results from both sites revealed that considering additional shadow information yielded more accurate building detection than using feature information only.
Furthermore, the results of the three object-based change detection methods were compared and analyzed according to the characteristics of the study areas and the sensors. The proposed object-based change detection achieved higher accuracy than existing building change detection methods.

1. Introduction

High-resolution satellite images provide high value-added information in a wide range of fields such as land management, management of marine water resources, disaster monitoring, agricultural applications, and national security [1]. Therefore, satellites equipped with high-resolution sensors, such as WorldView, GeoEye, QuickBird, and KOMPSAT, have been launched worldwide, and high-resolution big data are used in various public services. High-resolution satellite images allow more information to be extracted effectively in spatial information fields, e.g., image fusion, object extraction, and change detection. Specifically, the use of object change detection for national territory monitoring is expanding because high-resolution images from multitemporal sensors have become easier to acquire [2,3]. Among these applications, extracting buildings and detecting their changes in urban areas is an active research topic. This is especially important in areas where high-rise buildings are concentrated, such as South Korea [4].

Building detection and building change detection in high-resolution images have been performed in several ways. One of the methods is to use light detection and ranging (LiDAR) data with high-resolution images [5]. Research was conducted to perform morphological analysis of the terrain using LiDAR data to classify the land and buildings [6]. Studies have automatically extracted buildings using LiDAR data fusion in point and grid-based features, and have generated multitemporal LiDAR digital surface models (DSMs) to perform building analysis [3,7]. Studies have also been actively conducted to detect building changes using synthetic aperture radar (SAR) images [8,9].

However, using LiDAR, DSM, and SAR data for building change analysis has the disadvantage that these data must be acquired in addition to multispectral images. Alternatively, spectral characteristics derived from the bands of multispectral images have been used for building detection. Typical building indices based on spectral properties include the urban index, the normalized difference built-up index [10], the index-based built-up index [11], and the enhanced built-up and bareness index [12]. However, most of these indices require shortwave infrared (SWIR) bands (approximately 1.4–3 µm). Therefore, they cannot be applied to many high-resolution satellites, such as KOMPSAT-3, RapidEye, and QuickBird, which provide only visible and near-infrared (NIR) bands (approximately 0.45–0.9 µm). Moreover, pixel-based building detection methods, including index-based approaches, tend to produce numerous falsely detected building pixels that appear as salt-and-pepper noise.

Building detection has also been conducted using shape and morphological characteristics without the SWIR band. For example, buildings with regular, standardized shapes were detected using shape properties [2,13]. Liu et al. [14] proposed a building change detection method using a line-constrained shape feature. An automated building change detection method based on a morphological attribute profile was proposed by Li et al. [15]. Moreover, a morphological building index (MBI) was introduced for extracting buildings and analyzing their changes [1]. For instance, a building change detection method based on MBI, spectral, and shape conditions was proposed [16]. In [17], MBI and slow feature analysis were combined to carry out building change detection. A multi-level approach for building change detection was proposed by utilizing MBI and mutual information together [18]. MBI-based multiple building change detection results were combined at the object level through the Dempster–Shafer theory [19]. Other studies have detected building changes through various methods. For example, a novel patch-based matching approach using densely connected conditional random field (CRF) optimization was introduced [20]. Cross-sharpened images were used to reduce change detection errors due to relief displacement [4,21].

Unlike pixel-based analysis, an object-based approach creates meaningful segments from groups of pixels with similar spectral properties. Consequently, object-based change detection is well suited to analyzing changes at the object level [22]. Object-based change detection can be performed by fusing the spatial features (e.g., texture, shape, and topology) of objects during the change detection process [22,23,24]. Im et al. [25] proposed an object-based change detection method based on image segmentation and correlation analysis. An unsupervised change detection approach focusing on individual building objects in an urban environment was proposed in [26]. Zhang et al. [27] carried out change detection with separate segmentation of multitemporal high-resolution imagery, focusing on building changes in an urban area. They segmented each multitemporal image individually and extracted change features to generate changed objects.

Other methods have extracted buildings using additional information that may exist around them, e.g., shadows. Most building detection approaches that use shadow information rely on the same fact: shadows efficiently indicate the existence of building structures because they are strong evidence of objects rising above the surface [28,29,30,31]. Using shadow information from high-rise buildings can reduce false building detections and even allows building heights to be estimated [32,33,34].

However, it is challenging to detect accurate building boundaries for two reasons. Buildings overlap with other objects, e.g., trees or adjacent shadows, and they have spectral characteristics similar to those of other objects, e.g., roads or parking lots [35]. Furthermore, high-rise buildings are subject to severe relief displacement that depends on the sensor’s azimuth and elevation angles in multitemporal satellite images. Therefore, the same building can appear with different sizes and shapes in different images. This degrades change detection performance, because the same building may be detected as different objects, and vice versa [4,36].

This study proposes an object-based building detection and building change detection approach for high-resolution images that considers the elevation and azimuth angles of the Sun and the sensor. A previous study conducted on a single study site indicated that considering the acquisition angles could improve the building change detection result [37]. In this study, we aim to demonstrate and further generalize the proposed method by applying it to sites constructed with single-sensor and multi-sensor images acquired at different elevation and azimuth angles of both the Sun and the sensors. In the proposed method, multiresolution segmentation was used to extract object features related to building shape and size. Additionally, the location relationship between buildings and their shadows, determined by the azimuth angle of the Sun, was used for accurate building detection. After the buildings in each image were detected, object-based building change detection was performed by considering the spatial characteristics of the objects associated with the sensor’s azimuth and elevation angles. Here, we apply three independent building change detection methods:

- simple overlay of objects,
- comparison of object sizes according to the sensor’s elevation angle, and
- analysis of the direction between objects according to the sensor’s azimuth angle.

To verify the effectiveness of the proposed building change detection method, two study sites mainly covered with high-rise buildings were selected. One site was constructed using KOMPSAT-3 satellite images from the same sensor, whereas the other was constructed using images from different sensors, namely KOMPSAT-3 and an unmanned aerial vehicle (UAV). The results were compared with those of pixel-based and MBI-based building change detection methods [19] to confirm the superiority of the proposed method.

2. Study Site and Evaluation Criteria

2.1. Study Site

In this study, two regions were selected as study sites for applying the proposed method. For the first study site, Sejong-si in South Korea (Site 1), both images were acquired by the high-resolution sensor mounted on the KOMPSAT-3 satellite. For the second study site (Site 2), images of Sangju-si in South Korea were acquired from two sensors: the KOMPSAT-3 satellite and an Inspire 2 UAV equipped with a Zenmuse X4S optical sensor. The KOMPSAT-3 images have red, green, blue, and NIR bands and a radiometric resolution of 14 bits. The UAV images have red, green, and blue bands and a radiometric resolution of 8 bits. Table 1 and Table 2 show the specifications of the KOMPSAT-3 satellite and the UAV used in the study.

Table 1.

Specification of KOMPSAT-3.

Table 2.

Specification of UAV and mounted camera.

Site 1 mainly consists of a residential area with high-rise buildings in Sejong-si, South Korea. The images were acquired by the KOMPSAT-3 satellite on 16 November 2013 and 26 February 2019. Multispectral images with a spatial resolution of 2.8 m were used. After co-registration, the images were 936 × 1076 pixels in size (Figure 1). Table 3 shows the azimuth and elevation angles of the Sun and the sensor when the images were taken. As shown in Figure 1, high-rise buildings lean in different directions in each image depending on the sensor’s azimuth and elevation angles. Moreover, the building shadows in the 2013 image are larger because of the lower elevation angle of the Sun at that time.

Figure 1.

KOMPSAT-3 images used for constructing Site 1 (a) acquired on 16 November 2013 and (b) acquired on 26 February 2019.

Table 3.

Specifications of images used for constructing Site 1.

Site 2 is presented in Figure 2. Figure 2a is the pre-change image acquired by the KOMPSAT-3 sensor, and Figure 2b is the post-change image acquired by the UAV. For the UAV data, an orthoimage was generated in Pix4D software from 153 overlapping images. These were acquired at an altitude of 100 m with forward overlap and side lap both set to 80%. Table 4 shows the azimuth and elevation angles of the Sun and the sensor when the images were taken. The azimuth (155.893°) and elevation (66.136°) angles of the Sun at the time the UAV image was taken were provided by the Korea Astronomy and Space Science Institute (KASI).

Figure 2.

KOMPSAT-3 and UAV images used for constructing Site 2 (a) KOMPSAT-3 image acquired on 18 May 2016 and (b) UAV image acquired on 26 April 2019.

Table 4.

Specifications of images used for constructing Site 2.

2.2. Evaluation Criteria

The building extraction results were assessed with two tools: a confusion matrix, which is a standard model performance evaluation tool, and the Kappa coefficient, which measures the agreement between binary images. In the confusion matrix, building objects and non-building objects in the reference data are defined as condition positive (CP) and condition negative (CN), respectively. Similarly, building and non-building objects in the detection results are defined as prediction positive (PP) and prediction negative (PN), respectively. The remaining labels are assigned as follows (Table 5):

- Objects that are buildings in both the reference data and the results are labeled as true positive (TP).
- Buildings in the reference data that are non-buildings in the results are labeled as false negative (FN).
- Non-building objects in the reference data that are also non-buildings in the results are labeled as true negative (TN).
- Non-building objects in the reference data that are classified as buildings by the method are labeled as false positive (FP).

Table 5.

Confusion matrix.

From the confusion matrix, we computed the false alarm rate, miss rate, and F1-score. The false alarm rate is the proportion of objects misdetected as buildings although they are not buildings (Equation (1)). The miss rate is the proportion of buildings that are detected as non-buildings (Equation (2)). Precision is the percentage of buildings in the classification results that are also buildings in the reference data. Recall is the percentage of buildings in the reference data that are also buildings in the classification results (Equation (3)). The F1-score is the harmonic mean of precision and recall. Additionally, the Kappa coefficient was calculated to assess the accuracy (Equation (4)). It measures how closely the results agree with the reference data beyond the agreement expected by chance.
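Equations (1)–(4) are not reproduced in this text, so the following Python sketch uses the textbook forms of these measures. It assumes that the false alarm rate is normalized by the number of condition negatives, FP/(FP+TN), and the miss rate by the number of condition positives, FN/(TP+FN). The study may normalize the false alarm differently. The function names are hypothetical.

```python
def _ratio(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def detection_scores(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy measures from a binary building / non-building confusion matrix."""
    total = tp + fp + fn + tn
    precision = _ratio(tp, tp + fp)  # detected buildings that are buildings in the reference
    recall = _ratio(tp, tp + fn)     # reference buildings that were detected
    # Cohen's kappa: observed agreement corrected for the agreement expected by chance,
    # where chance agreement is (PP * CP + PN * CN) / total^2.
    p_o = _ratio(tp + tn, total)
    p_e = _ratio((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn), total ** 2)
    return {
        "false_alarm": _ratio(fp, fp + tn),  # assumption: FP / CN
        "miss_rate": _ratio(fn, tp + fn),    # FN / CP
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "kappa": _ratio(p_o - p_e, 1 - p_e),
    }


# Example with made-up counts (not results from this study)
print(detection_scores(tp=80, fp=10, fn=20, tn=90))
```

The counts can be object counts or pixel counts. The formulas are the same either way.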

3. Methods

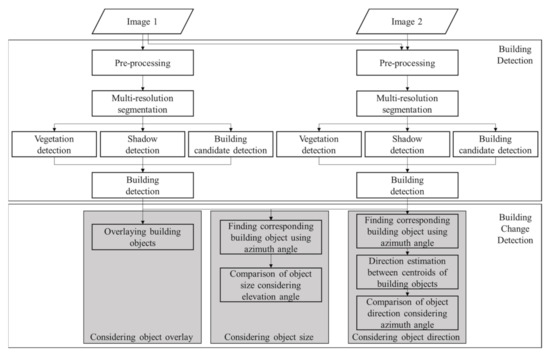

In this study, we used KOMPSAT-3 bitemporal images acquired in Sejong-si, South Korea (Site 1), and KOMPSAT-3 and UAV images acquired in Sangju-si, South Korea (Site 2), for object-based building detection and change detection. The proposed method consists of four steps: preprocessing, multiresolution segmentation, object-based building detection, and object-based building change detection. Figure 3 shows a flowchart of the proposed approach.

Figure 3.

Flowchart of the proposed object-based building change detection approach.

3.1. Preprocessing

To preprocess the Site 1 images, image registration using manually selected tie-points was carried out, followed by histogram matching. For Site 2, the spatial resolution of the KOMPSAT-3 multispectral image was improved from 2.8 m to 0.7 m by Gram–Schmidt pansharpening with the panchromatic image. This reduced the spatial resolution difference between the KOMPSAT-3 and UAV images. During image registration, the UAV orthophoto, whose spatial resolution was approximately 3 cm, was downsampled to 0.7 m to match the spatial resolution of the KOMPSAT-3 image.
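Histogram matching can be implemented in several ways, and the text does not state which variant was used. The sketch below assumes the common CDF-mapping approach, applied band by band with NumPy. Each value of the image being adjusted is mapped to the reference value that has the same cumulative frequency. The function names are hypothetical.

```python
import numpy as np


def match_band(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Map one band of `source` so that its cumulative histogram follows `reference`."""
    _, src_inverse, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True
    )
    ref_values, ref_counts = np.unique(reference.ravel(), return_counts=True)
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size
    # For each source quantile, look up the reference value at the same quantile
    matched_values = np.interp(src_cdf, ref_cdf, ref_values)
    return matched_values[src_inverse].reshape(source.shape)


def match_histograms(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Apply band-wise matching to co-registered arrays shaped (bands, rows, cols)."""
    return np.stack([match_band(s, r) for s, r in zip(source, reference)])
```

For Site 1, `source` would be one KOMPSAT-3 acquisition and `reference` the other, after tie-point registration.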

3.2. Multiresolution Segmentation

Object-based analysis can exploit the spatial feature information of each object. It can also minimize the salt-and-pepper noise that mainly arises in pixel-based analysis [36]. To conduct object-based change detection, this study produced segmentation images using the multiresolution segmentation method provided in eCognition software. This method considers both spatial and spectral characteristics when creating the segmentation image. It is a bottom-up approach that starts with single-pixel image objects and successively merges highly related adjacent objects into larger segments. The process starts from seed pixels and is repeated until all pixels in the image are grouped into objects. Each merge is chosen to maximize the homogeneity within objects and the heterogeneity between different objects. The merging behavior is controlled by three parameters: scale, shape, and compactness.

The scale parameter determines the maximum heterogeneity allowed within the resulting objects; larger values produce larger objects. The shape parameter is the complement of the color parameter (the two weights sum to 1). The color criterion is the sum of the weighted standard deviations of all bands in the image. The smaller the shape value, the greater the influence of the spectral characteristics on the segmentation. The compactness parameter sets how much the shape criterion relies on compactness rather than smoothness. Compactness relates an object’s border length to its area, computed as the border length divided by the square root of the number of pixels. The optimal segmentation parameters depend on the sensor’s characteristics, the purpose of the data analysis, and the characteristics of the object of interest.
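The fusion (merge) cost commonly described for multiresolution segmentation (Baatz and Schäpe) makes the role of the three parameters concrete. The sketch below approximates that criterion and is not eCognition’s actual code. It computes the increase in heterogeneity caused by merging two adjacent objects. A merge is allowed only if this cost stays below the squared scale parameter. The `SegmentStats` structure and the function names are hypothetical, and the caller is assumed to supply the statistics of the merged object.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class SegmentStats:
    n_pixels: int            # object size
    band_std: list[float]    # standard deviation of each band within the object
    border_length: float     # perimeter of the object
    bbox_perimeter: float    # perimeter of the object's bounding box


def fusion_cost(a: SegmentStats, b: SegmentStats, merged: SegmentStats,
                band_weights: list[float], shape_weight: float,
                compactness_weight: float) -> float:
    """Increase in weighted heterogeneity if objects a and b are merged."""
    # Color criterion: size-weighted change of band standard deviations
    h_color = sum(
        w * (merged.n_pixels * sm - (a.n_pixels * sa + b.n_pixels * sb))
        for w, sm, sa, sb in zip(band_weights, merged.band_std, a.band_std, b.band_std)
    )

    def compactness(s: SegmentStats) -> float:
        return s.n_pixels * s.border_length / math.sqrt(s.n_pixels)

    def smoothness(s: SegmentStats) -> float:
        return s.n_pixels * s.border_length / s.bbox_perimeter

    h_cmpct = compactness(merged) - (compactness(a) + compactness(b))
    h_smooth = smoothness(merged) - (smoothness(a) + smoothness(b))
    # The compactness parameter splits the shape criterion between compactness and smoothness
    h_shape = compactness_weight * h_cmpct + (1 - compactness_weight) * h_smooth
    # The shape parameter is the complement of the color weight
    return (1 - shape_weight) * h_color + shape_weight * h_shape


def may_merge(cost: float, scale: float) -> bool:
    """A merge is accepted only while the cost stays below the squared scale parameter."""
    return cost < scale ** 2
```

For example, merging two homogeneous 2 × 2 squares into a 2 × 4 rectangle has no color cost. With shape and compactness both set to 0.5, the merge costs about 0.485, so it is accepted for a scale of 1 but rejected for a scale of 0.5.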

3.3. Object-Based Building Detection

3.3.1. Vegetation and Shadow Detection

Before object features were extracted to detect building objects, additional information, namely vegetation and shadows, was extracted to support the detection. For example, removing vegetation areas before extracting building objects can improve the result. Shadow information is also useful because buildings and their shadows have a characteristic location relationship. Because Site 1 includes numerous vegetated areas, these areas were extracted first. Vegetation indices describe surface vegetation using its characteristic reflectance in each spectral band. Vigorous vegetation strongly absorbs red (R) wavelengths of the visible spectrum through chlorophyll and other pigments, and it strongly reflects NIR wavelengths. The vegetation at Site 1 was classified using the most widely used index, the normalized difference vegetation index (NDVI), calculated as Equation (5):

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - R}{\mathrm{NIR} + R} \qquad (5)$$

The spectral characteristics of shadows, which are critical clues when detecting building objects, are stable compared with those of other objects. In this study, shadow intensity (φ) was calculated as a ratio of the blue (B) and green (G) bands to detect shadow objects (Equation (6)). This ratio was chosen because shadow regions respond more strongly in the blue band than in the green band [38].

Thresholds for NDVI and shadow intensity were then calculated using the Otsu algorithm. Objects with an NDVI value greater than the Otsu-based threshold were classified as vegetation. Among the remaining unclassified objects, those with shadow intensity values greater than the Otsu threshold were classified as shadows.
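The vegetation and shadow classification can be sketched in plain Python as follows. Equation (6) is not reproduced in this text, so the sketch assumes that shadow intensity is the plain band ratio φ = B/G. NDVI follows the standard definition. Otsu’s method is implemented from scratch on per-object mean values, using 256 histogram bins (an assumption), to keep the example self-contained. Objects are plain dictionaries of mean band values, and all names are hypothetical.

```python
def otsu_threshold(values, n_bins=256):
    """Otsu threshold that maximizes the between-class variance of a 1-D sample."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / n_bins
    hist = [0] * n_bins
    for v in values:
        hist[min(int((v - lo) / width), n_bins - 1)] += 1
    centres = [lo + (k + 0.5) * width for k in range(n_bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centres))
    best_t, best_var = lo, -1.0
    w0, sum0 = 0, 0.0
    for k in range(n_bins - 1):          # candidate split after bin k
        w0 += hist[k]
        sum0 += hist[k] * centres[k]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2  # proportional to between-class variance
        if between > best_var:
            best_var, best_t = between, lo + (k + 1) * width
    return best_t


def ndvi(nir, red):
    return (nir - red) / (nir + red) if nir + red else 0.0


def shadow_intensity(blue, green):
    # ASSUMPTION: simple blue/green ratio standing in for Equation (6)
    return blue / green if green else 0.0


def classify_objects(objects):
    """Label objects as vegetation first, then shadow among the rest."""
    ndvi_vals = [ndvi(o["nir"], o["red"]) for o in objects]
    phi_vals = [shadow_intensity(o["blue"], o["green"]) for o in objects]
    t_ndvi, t_phi = otsu_threshold(ndvi_vals), otsu_threshold(phi_vals)
    labels = []
    for nv, phi in zip(ndvi_vals, phi_vals):
        if nv > t_ndvi:
            labels.append("vegetation")
        elif phi > t_phi:  # shadows are searched only among non-vegetation objects
            labels.append("shadow")
        else:
            labels.append("unclassified")
    return labels
```

Both thresholds are computed over all objects, and the shadow threshold is applied only to objects not already labeled as vegetation. The text does not say whether the shadow threshold was instead estimated on the remaining objects only.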

3.3.2. Building Candidate Detection

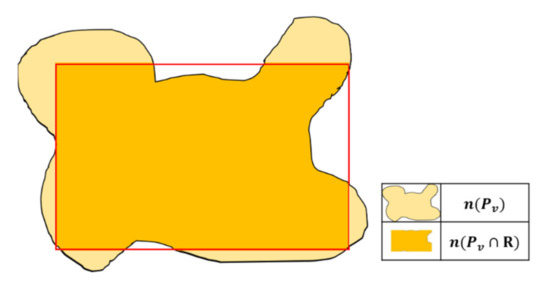

Most buildings in South Korea are hexahedral (box-shaped), so they appear rectangular when viewed from above by satellites or UAVs. Therefore, we considered morphological characteristics to detect candidate building objects. The rectangular fit was calculated for each segment; it describes how well an object fits into a rectangle of the same area as the object. Comparing the object with an equal-area rectangle drawn according to the object’s moments is a more robust alternative to comparison with the minimum bounding rectangle [39]. A value close to 1 indicates that the object fits a rectangle almost completely. As shown in Figure 4, a virtual rectangle of the same area as the object was drawn. The rectangular fit was then calculated by dividing the number of object pixels lying within the rectangle by the total number of pixels in the object (Equation (7)).

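$$\text{Rectangular fit}(v) = \frac{\#P_{v \cap R}}{\#P_{v}} \qquad (7)$$

where $\#P_{v}$ is the number of pixels within an object $v$, $R$ is a rectangle of the same size and proportion as the object $v$, and $\#P_{v \cap R}$ is the number of intersecting pixels between the object $v$ and the rectangle $R$.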
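The rectangular fit can be sketched with NumPy as follows. How eCognition constructs its equal-area rectangle is not described here. This sketch therefore assumes the rectangle is centred on the object’s centroid and aligned with its principal axes, with the aspect ratio taken from the second central moments. Its area equals the object’s pixel count. The function name is hypothetical.

```python
import numpy as np


def rectangular_fit(mask: np.ndarray) -> float:
    """Share of object pixels lying inside an equal-area, moment-oriented rectangle."""
    rows, cols = np.nonzero(mask)
    n = rows.size
    if n == 0:
        return 0.0
    pts = np.column_stack([cols, rows]).astype(float)
    centred = pts - pts.mean(axis=0)
    cov = centred.T @ centred / n               # second central moments
    eigvals, eigvecs = np.linalg.eigh(cov)      # ascending: minor axis first
    minor_var, major_var = eigvals
    # Aspect ratio from the moments; a one-pixel-wide line gets the extreme ratio n
    aspect = float(np.sqrt(major_var / minor_var)) if minor_var > 1e-12 else float(n)
    width = np.sqrt(n / aspect)                 # width * length == n (equal area)
    length = width * aspect
    proj = centred @ eigvecs                    # coordinates along (minor, major) axes
    inside = (np.abs(proj[:, 0]) <= width / 2) & (np.abs(proj[:, 1]) <= length / 2)
    return float(inside.mean())
```

A filled rectangle scores 1.0. An L-shaped object scores lower, because no rectangle of its own area can contain all of its pixels.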

Figure 4.

Rectangular fit of an image object v.

The GLCM [40] represents texture information based on statistical calculations and is generated by considering the relationship between pixels within the object. GLCM features describe the relationship between the gray level of a reference pixel and those of its neighboring pixels, using statistics such as correlation, mean, contrast, and homogeneity. In this study, building candidate objects were classified using GLCM homogeneity, which represents the uniformity of pixel values in an object. An artificial structure (i.e., a building) is generally constructed with the same material, so it is more homogeneous than other objects. In contrast, pixel values change notably at the transition to adjacent objects. Therefore, we used homogeneity to detect building candidate objects (Equation (8)).

$$\text{GLCM homogeneity} = \sum_{i,j=0}^{N-1} \frac{P_{i,j}}{1 + (i - j)^{2}} \qquad (8)$$

where $i$ and $j$ are the row and column indices of the GLCM, i.e., the gray levels of a reference pixel and its neighbor within the object. $P_{i,j}$ is the normalized GLCM value in cell $(i,j)$, and $N$ is the number of rows (equal to the number of columns) of the GLCM. After building candidates were extracted using the rectangular fit and GLCM homogeneity features, adjacent building objects were merged to generate one object for each building. Finally, the area of each building candidate object was calculated to eliminate objects that were too large or too small to be buildings [41]. Building candidates detected in this way tend to be over-detected because non-building objects can be included. Thus, building shadows were additionally exploited to exclude over-detected objects from the candidates.
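GLCM homogeneity for a single object can be sketched with NumPy as follows. The sketch makes three assumptions: gray values are first quantized to a small number of levels, pairs are counted in the four standard directions (0°, 45°, 90°, and 135°) at distance 1 into one symmetric matrix, and only pairs whose pixels both belong to the object are counted. The exact settings used in eCognition may differ, and the function names are hypothetical.

```python
import numpy as np

# Four standard GLCM directions as (row offset, column offset): 0°, 45°, 90°, 135°
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm_homogeneity(gray: np.ndarray, mask: np.ndarray, n_levels: int) -> float:
    """Homogeneity of the object `mask` in an image quantized to `n_levels` gray levels."""
    glcm = np.zeros((n_levels, n_levels), dtype=float)
    n_rows, n_cols = gray.shape
    for dr, dc in OFFSETS:
        for r in range(n_rows):
            for c in range(n_cols):
                r2, c2 = r + dr, c + dc
                # Count only pairs where both pixels belong to the object
                if 0 <= r2 < n_rows and 0 <= c2 < n_cols and mask[r, c] and mask[r2, c2]:
                    i, j = gray[r, c], gray[r2, c2]
                    glcm[i, j] += 1
                    glcm[j, i] += 1  # keep the matrix symmetric
    total = glcm.sum()
    if total == 0:
        return 0.0  # objects without any neighbor pair have no texture estimate
    p = glcm / total  # normalized GLCM, P_{i,j}
    i_idx, j_idx = np.indices(p.shape)
    return float((p / (1.0 + (i_idx - j_idx) ** 2)).sum())


def quantize(image: np.ndarray, n_levels: int) -> np.ndarray:
    """Linearly rescale image values to integer gray levels 0..n_levels-1."""
    lo, hi = float(image.min()), float(image.max())
    if hi == lo:
        return np.zeros(image.shape, dtype=int)
    scaled = (image - lo) / (hi - lo) * n_levels
    return np.clip(scaled.astype(int), 0, n_levels - 1)
```

A uniform object reaches the maximum homogeneity of 1.0. A two-level checkerboard scores 30/42 ≈ 0.714 because its horizontal and vertical neighbors always differ.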

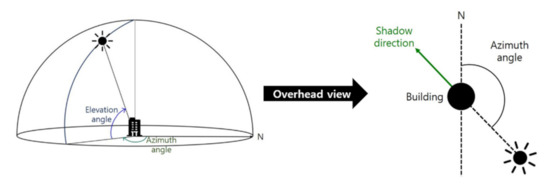

3.3.3. Final Building Detection Using Shadow Information

In high-resolution images, buildings and their shadows are inevitably adjacent. Within an image, all shadows cast by buildings fall in the same direction relative to the buildings. In this study, the shadow direction was used to select the final buildings among the building candidates. As in Figure 5, the Sun’s azimuth angle ($\phi_{\mathrm{sun}}$) determines the principal direction of the shadow ($\theta_{s}$), calculated as Equation (9):

$$\theta_{s} = (\phi_{\mathrm{sun}} + 180^{\circ}) \bmod 360^{\circ} \qquad (9)$$

A building candidate object was then determined to be a final building object if a shadow object existed within a certain distance along the principal direction ($\theta_{s}$) from the object’s centroid ($C_{b}$), as shown in Figure 6.
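The shadow test can be sketched in plain Python as follows. The sketch assumes a north-up image in which north is the decreasing row direction and east is the increasing column direction. It takes the principal shadow direction as the Sun’s azimuth plus 180° (mod 360°), which reproduces the 24.811° and 18.504° reported for Site 1 in Section 4.1.1. The search steps along the ray from the candidate’s centroid in half-pixel increments. That step size and all function names are hypothetical.

```python
import math


def shadow_direction(sun_azimuth_deg: float) -> float:
    """Shadows point away from the Sun: azimuth + 180°, wrapped to [0, 360)."""
    return (sun_azimuth_deg + 180.0) % 360.0


def has_shadow_along(shadow_mask, centroid_rc, direction_deg, max_dist_m,
                     pixel_size_m, step_px=0.5):
    """True if a shadow pixel lies on the ray from the centroid within max_dist_m."""
    n_rows, n_cols = len(shadow_mask), len(shadow_mask[0])
    theta = math.radians(direction_deg)
    # Azimuth is clockwise from north: north = -row, east = +col in a north-up image
    d_row, d_col = -math.cos(theta), math.sin(theta)
    max_px = max_dist_m / pixel_size_m
    t = 0.0
    while t <= max_px:
        r = math.floor(centroid_rc[0] + t * d_row + 0.5)
        c = math.floor(centroid_rc[1] + t * d_col + 0.5)
        if 0 <= r < n_rows and 0 <= c < n_cols and shadow_mask[r][c]:
            return True
        t += step_px
    return False


def is_final_building(shadow_mask, centroid_rc, sun_azimuth_deg, max_dist_m, pixel_size_m):
    """Keep a building candidate only if a shadow is found in the principal shadow direction."""
    direction = shadow_direction(sun_azimuth_deg)
    return has_shadow_along(shadow_mask, centroid_rc, direction, max_dist_m, pixel_size_m)
```

For the Site 1 image from 2013, a call such as `is_final_building(mask, centroid, 204.811, 25.0, 2.8)` would search about nine pixels toward 24.811°.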

Figure 5.

Basic concept of the Sun’s azimuth and elevation angles (left) and shadow direction according to the azimuth angle (right).

Figure 6.

The relationship between the building and its shadow.

3.4. Object-Based Building Change Detection

After detecting building objects in the two images, change detection between the objects was performed. Here, we employed three different building change detection methods.

The first method detects building changes by considering only the overlap of building objects between images. Specifically, when a building object in one image overlaps, even partially, a building object in the other image, it is determined to be a no-change object. Conversely, when a building object does not overlap any building object in the other image, it is judged to be a changed object.
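The overlap rule can be sketched in plain Python as follows. The sketch assumes each building object is a set of (row, column) pixel coordinates in the co-registered images. The function name is hypothetical.

```python
from typing import Iterable, List, Set, Tuple

Pixel = Tuple[int, int]


def overlap_change_flags(objects_a: List[Set[Pixel]],
                         objects_b: Iterable[Set[Pixel]]) -> List[bool]:
    """True marks an object in image A that overlaps no building object in image B."""
    pixels_b: Set[Pixel] = set().union(*objects_b)
    return [not (obj & pixels_b) for obj in objects_a]
```

Calling the function in both directions flags buildings that appear only in the first image and buildings that appear only in the second.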

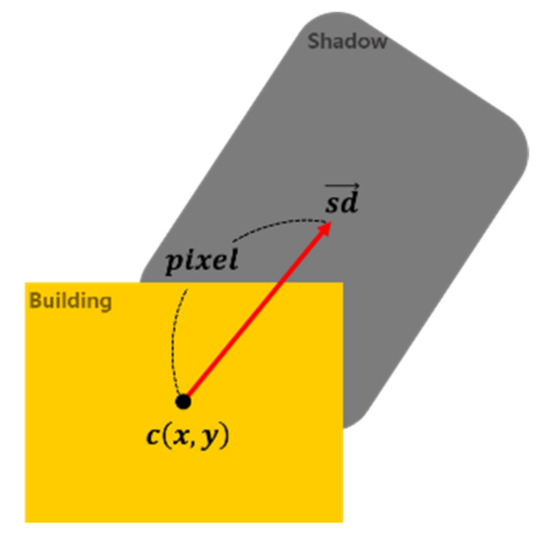

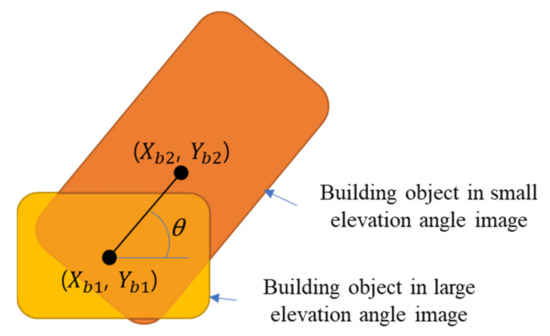

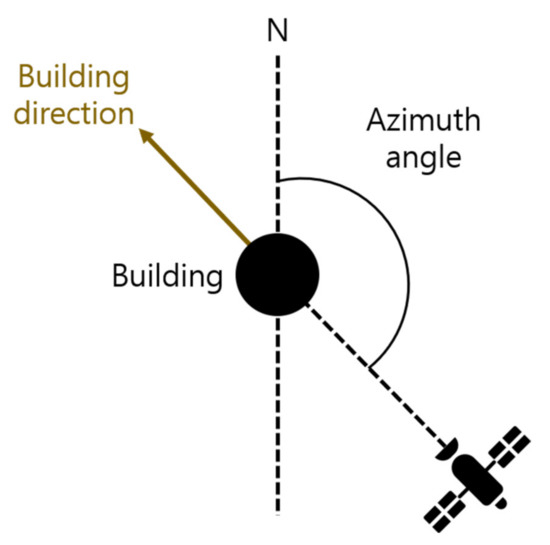

The second method compares the sizes of building objects by considering the sensor’s elevation angle. In an image acquired with a high sensor elevation angle (i.e., close to nadir), building objects tend to exhibit small relief displacement. Conversely, building objects in an image acquired at a low elevation angle exhibit severe relief displacement. The sides of the buildings then become visible in the image, resulting in larger building objects [42]. For example, as shown in Figure 7, the same building object can have different sizes in the two images, depending on the elevation angles at which they were acquired. Therefore, this method determines whether a building has changed by comparing the sizes of corresponding building objects while considering the elevation angles.

To this end, finding the corresponding building objects in the bitemporal images is essential. Analogous to the relationship between the shadow and the Sun’s azimuth angle, the sensor’s azimuth angle determines the principal direction of the building’s relief displacement in each image (Figure 8). The search starts from the centroid of a building object detected in the image acquired with the higher elevation angle. A building object in the other image is taken as its counterpart if it lies within a certain distance along the principal direction from that centroid.

After the corresponding building object is found, the sensor’s elevation angle is considered to determine whether the building object has changed. The lower the sensor’s elevation angle, the larger the building appears. The sizes of the corresponding building objects are therefore compared. The building is determined to be changed if the object in the image with the lower sensor elevation angle is smaller than the corresponding object in the other image.
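The correspondence search and size rule can be sketched in plain Python as follows. The sketch makes these assumptions:

- Centroids are given in map coordinates (x as easting, y as northing).
- The caller supplies the relief-displacement direction derived from the sensor azimuth. Whether it equals the azimuth or the azimuth plus 180° depends on the metadata convention.
- The angular tolerance `tol_deg` is a hypothetical parameter.
- Treating an object without a counterpart as changed is a hypothetical choice not stated in the text.

All function names are also hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x = easting, y = northing)


def bearing_deg(p_from: Point, p_to: Point) -> float:
    """Azimuth from p_from to p_to, clockwise from north, in [0, 360)."""
    return math.degrees(math.atan2(p_to[0] - p_from[0], p_to[1] - p_from[1])) % 360.0


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two directions in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def find_correspondence(centroid_high: Point, candidates_low: Sequence[Point],
                        displacement_deg: float, max_dist: float,
                        tol_deg: float) -> Optional[int]:
    """Index of the nearest candidate within max_dist along the displacement direction."""
    best, best_dist = None, math.inf
    for k, c in enumerate(candidates_low):
        dist = math.dist(centroid_high, c)
        if dist > max_dist:
            continue
        # A candidate at the same position needs no direction check
        if dist > 0 and angular_gap(bearing_deg(centroid_high, c), displacement_deg) > tol_deg:
            continue
        if dist < best_dist:
            best, best_dist = k, dist
    return best


def size_based_change(area_high: float, areas_low: Sequence[float],
                      match: Optional[int]) -> bool:
    """Changed if no counterpart was found (assumption) or the low-elevation object is smaller."""
    if match is None:
        return True
    return areas_low[match] < area_high
```

The nearest qualifying candidate is chosen so that each high-elevation object is paired with at most one counterpart.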

Figure 7.

Calculation of direction using centroid coordinates of two buildings.

Figure 8.

Direction of building’s relief displacement according to the azimuth angle of the sensor.

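The third method considers the direction between objects according to the sensor’s azimuth angle. As in the second method, it first finds the corresponding building objects in both images ($O_{1}$ and $O_{2}$). It then obtains their centroids ($x_{1}, y_{1}$) and ($x_{2}, y_{2}$), respectively (Figure 7). The principal direction ($\theta$) between the two objects is obtained using Equation (10):

$$\theta = \operatorname{atan2}\left(x_{2} - x_{1},\; y_{2} - y_{1}\right) \qquad (10)$$

where $x$ and $y$ are the easting and northing of the centroids, so that $\theta$ is measured clockwise from north, in the same way as the azimuth angles.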

Then, $\theta$ is compared with the azimuth angle of the sensor with the lower elevation angle. The object is determined to be a changed building when the difference between the two values is within an error range. The two values are $\theta$ from Equation (10) and the azimuth angle of the sensor with the lower elevation angle.
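The direction comparison can be sketched in plain Python as follows. The sketch assumes that `c1` is the centroid in the image with the higher sensor elevation angle and `c2` its counterpart in the other image. Both are in map coordinates (x as easting, y as northing). The sensor azimuth is compared directly, as in the text, although some metadata conventions may require a 180° offset. The change decision described above is then applied to the returned flag. The function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x = easting, y = northing)


def centroid_direction(c1: Point, c2: Point) -> float:
    """Azimuth of the vector from centroid c1 to centroid c2, clockwise from north."""
    return math.degrees(math.atan2(c2[0] - c1[0], c2[1] - c1[1])) % 360.0


def direction_within_error(c1: Point, c2: Point, sensor_azimuth_deg: float,
                           error_deg: float) -> bool:
    """True when the centroid direction agrees with the sensor azimuth within error_deg."""
    gap = abs(centroid_direction(c1, c2) - sensor_azimuth_deg) % 360.0
    return min(gap, 360.0 - gap) <= error_deg  # handles wrap-around at 0°/360°
```

The wrap-around handling matters when the azimuth is close to north. For example, a direction of 0° and an azimuth of 355° differ by only 5°.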

Threshold values for performing the three building change detection methods should be determined in consideration of the azimuth and elevation angles of the acquired bitemporal imagery. For example, the size of the same building will be different in images depending on the sensor’s elevation angle. Moreover, even if images were acquired from the same elevation angle, the direction of the relief displacement of the building is different depending on the azimuth angle of the sensor. Therefore, the thresholds must be determined differently by considering the properties of each image’s acquisition environments.

4. Experimental Results

4.1. Building Detection Results

4.1.1. Site 1: Single-Sensor Imagery

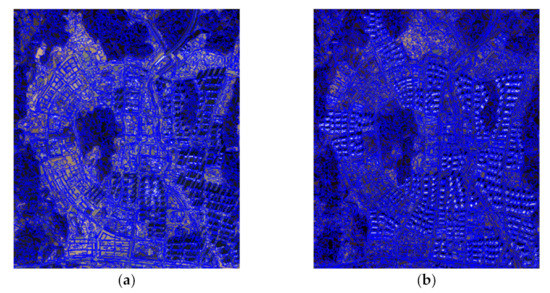

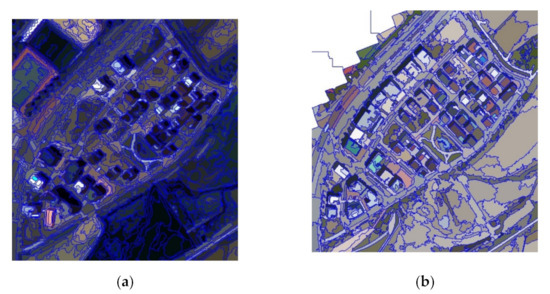

Multiresolution segmentation was applied to each KOMPSAT-3 image for performing object-based building detection. When generating the segmentation image, parameters were set to reveal the boundaries of the building object without one building being divided into too many objects. Therefore, the scale parameter was set to 90, and the compactness parameter was set to be greater than the shape parameter (Table 6). Figure 9 shows the segmented images of Site 1 overlaid with object boundaries.

Table 6.

Parameter values of multiresolution segmentation and number of generated objects of Site 1.

Figure 9.

Segmentation results of Site 1 (a) 2013 image (b) 2019 image.

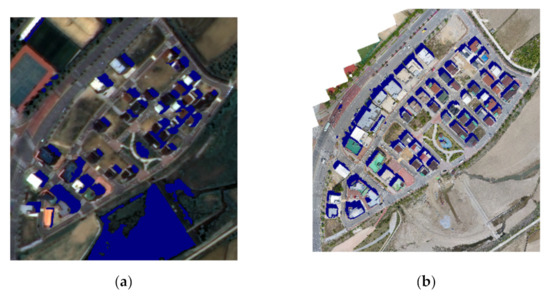

At Site 1, the NDVI and shadow intensity of each object were calculated to improve building detection performance. The Otsu algorithm was used to determine the thresholds for extracting vegetation and shadow objects. Objects with NDVI values greater than its threshold were classified as vegetation, and objects with shadow intensity values greater than its threshold were classified as shadows. The detection results for shadow and vegetation objects are presented in Figure 10. Some shadow objects were misdetected. However, they did not significantly affect building detection because only shadows adjacent to building objects were considered in this study.

Figure 10.

Vegetation (green) and shadow (navy) detection results of Site 1 (a) 2013 image (b) 2019 image.

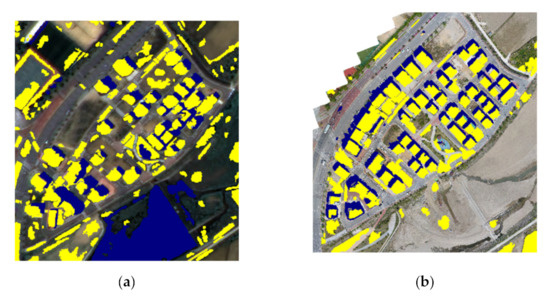

The rectangular fit, GLCM homogeneity, and area features were used to detect building candidate objects at Site 1. Each feature was computed for every object and used to extract building candidate objects based on thresholds selected through visual analysis and the Otsu algorithm. More specifically, an object was classified as a building candidate if its rectangular fit was greater than 0.8 and its GLCM homogeneity was above the threshold selected by the Otsu algorithm. Subsequently, adjacent objects among those classified as buildings were merged into one object. As a result, most areas misdetected as buildings were merged into single large objects, which could then be removed using the area feature. An object was regarded as a false detection if its area was larger than that of the largest building in the image or if it was too small to be a building. For the 2013 image, objects with an area greater than 4300 m² or less than 300 m² were removed from the building candidates. For the 2019 image, objects with an area greater than 9000 m² or less than 500 m² were removed. The area criteria differ between the images because the sensor’s elevation angle changes the apparent building size in each image through the relief displacement of high-rise buildings.
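The candidate rules in this paragraph can be written as two small predicates. The sketch assumes that an object’s area is its pixel count times 2.8² m², based on the 2.8 m multispectral pixel at Site 1, and that the caller supplies the Otsu homogeneity threshold. Merging adjacent candidates between the two tests is omitted. The function and constant names are hypothetical.

```python
PIXEL_AREA_M2 = 2.8 * 2.8  # KOMPSAT-3 multispectral pixel at Site 1 (2.8 m)

# Area limits (m²) used for Site 1; they differ because relief displacement changes apparent size
AREA_LIMITS_M2 = {2013: (300.0, 4300.0), 2019: (500.0, 9000.0)}


def passes_feature_rules(rect_fit: float, homogeneity: float,
                         homogeneity_threshold: float) -> bool:
    """Segment-level test: rectangular fit above 0.8 and homogeneity above the Otsu threshold."""
    return rect_fit > 0.8 and homogeneity > homogeneity_threshold


def passes_area_rule(n_pixels: int, year: int) -> bool:
    """Merged-object test: objects outside the area limits are discarded as false detections."""
    min_area, max_area = AREA_LIMITS_M2[year]
    return min_area <= n_pixels * PIXEL_AREA_M2 <= max_area
```

For example, a merged object of 40 pixels (about 314 m²) is kept in the 2013 image but removed in the 2019 image.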

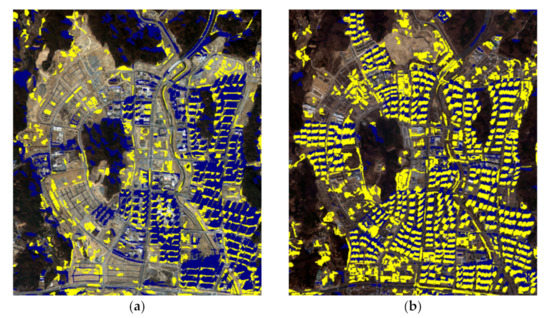

Figure 11 shows the building candidate detection results using the rectangular fit, GLCM homogeneity, and area features. Despite the removal of false detections using the area feature, some falsely detected objects remain among the building candidates. Shadow objects were used to remove them.

Figure 11.

Building candidates (yellow) overlaid with shadow detection results (navy) of Site 1 (a) 2013 image (b) 2019 image.

To remove the falsely detected building objects, the principal directions of the shadows were calculated from the Sun’s azimuth angles at the acquisition times of the KOMPSAT-3 multitemporal images. The Sun’s azimuth angles for the 2013 and 2019 images were 204.811° and 198.504°, respectively. Therefore, the principal directions of the shadows were calculated as 24.811° and 18.504°, respectively.

After the principal shadow directions were calculated, the final building objects were detected by considering the positional relationship between the building candidates and their corresponding shadows. The search distance was set by jointly considering the azimuth angles of the Sun and the sensor and the sensor’s elevation angle at the acquisition times. Accordingly, a building candidate was classified as a final building when a shadow was found along the principal shadow direction within a set distance of its centroid (Figure 12). This distance was 25 m for the 2013 image and 70 m for the 2019 image. Detection using only feature information produced numerous false detections. Considering the adjacency between buildings and their shadows reduced the number of falsely detected building objects.

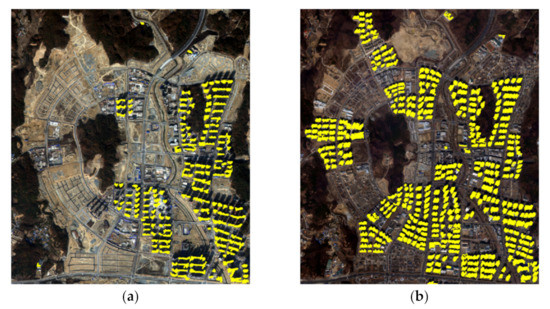

Figure 12.

Final building detection results of Site 1 (a) 2013 image (b) 2019 image.

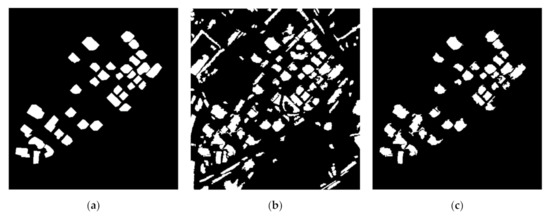

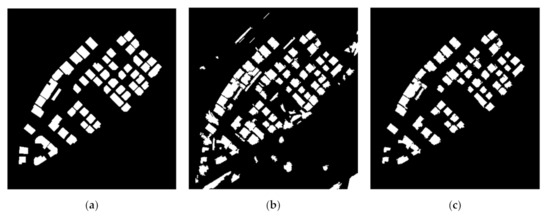

Building detection accuracy was evaluated both when using feature information only and when using both feature and shadow information. Figure 13 and Figure 14 show the building detection results, and Table 7 summarizes the accuracy evaluation. When shadows were considered, the false alarm rates of building detection in the 2013 and 2019 images were reduced by 30% and 90%, respectively. The miss rate, however, increased slightly because a few building objects were removed when considering the shadow information. The F1-score, which reflects the overall proportion of correctly detected objects, exceeded 0.8 when shadows were considered, and exceeded 0.9 for the 2019 image. The Kappa coefficients showed a similar tendency.
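
For reference, the accuracy measures reported in the tables can be computed from a binary reference map and a binary detection map as below. The definitions used here (false alarm rate = FP/(FP + TN), miss rate = FN/(TP + FN), F1-score, and Cohen’s Kappa) are common conventions and an assumption on our part; the paper’s exact definitions are not given in this section.

```python
def detection_metrics(reference, predicted):
    """Pixel-wise accuracy measures for two equally long sequences of 0/1 labels."""
    tp = fp = fn = tn = 0
    for r, p in zip(reference, predicted):
        if r and p:
            tp += 1
        elif p:
            fp += 1  # detected but absent in the reference
        elif r:
            fn += 1  # present in the reference but missed
        else:
            tn += 1
    n = tp + fp + fn + tn
    false_alarm = fp / (fp + tn) if fp + tn else 0.0
    miss_rate = fn / (tp + fn) if tp + fn else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    # Cohen's Kappa: observed agreement corrected for chance agreement.
    po = (tp + tn) / n
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (po - pe) / (1 - pe) if pe != 1 else 0.0
    return {"false_alarm": false_alarm, "miss_rate": miss_rate, "f1": f1, "kappa": kappa}


print(detection_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0]))
```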

Figure 13.

Building detection results of Site 1 in the 2013 image (a) Reference data (b) without considering shadows (c) considering shadows.

Figure 14.

Building detection results of Site 1 in the 2019 image (a) Reference data (b) without considering shadows (c) considering shadows.

Table 7.

Accuracy of building detection in Site 1.

4.1.2. Site 2: Multi-Sensor Imagery

To perform object-based building detection on the KOMPSAT-3 and UAV multi-sensor images, multiresolution segmentation was applied to each image (Figure 15). The KOMPSAT-3 image has a radiometric resolution of 14 bits, whereas the UAV image has 8 bits; with the same scale parameter, the KOMPSAT-3 image would therefore produce a larger number of segmentation objects. Thus, the scale parameter of the UAV image was set to 30, which is smaller than the value of 120 used for the KOMPSAT-3 image. The compactness parameter was set to a large value to delineate the boundaries of the buildings, which have rectangular shapes in Site 2 (Table 8).

Figure 15.

Segmentation results of Site 2 (a) KOMPSAT-3 image (b) UAV image.

Table 8.

Parameter values of multiresolution segmentation and number of generated objects of Site 2.

Objects with shadow intensity values larger than the threshold calculated using the Otsu algorithm were classified as shadows (navy in Figure 16). Although some water bodies were misdetected as shadows in the KOMPSAT-3 image, they were not adjacent to building objects and therefore did not significantly affect the building detection results.
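
Otsu’s method selects the threshold that maximizes the between-class variance of a histogram. A self-contained sketch over per-object values follows; the 256-bin histogram, the tie-breaking (first maximum wins), and the per-object `shadow_index` values in the usage example are assumptions for illustration, not values from the study.

```python
def otsu_threshold(values, n_bins=256):
    """Return the Otsu threshold (upper edge of the best split bin) for a list of numbers."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / n_bins
    hist = [0] * n_bins
    for v in values:
        hist[min(int((v - lo) / width), n_bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(n_bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))
    w0, sum0 = 0, 0.0
    best_var, best_t = -1.0, lo
    for i in range(n_bins - 1):
        w0 += hist[i]
        sum0 += hist[i] * centers[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2  # proportional to the between-class variance
        if var_between > best_var:
            best_var, best_t = var_between, lo + (i + 1) * width
    return best_t


# Hypothetical per-object shadow index: higher values mean darker, shadow-like objects.
shadow_index = {1: 0.11, 2: 0.83, 3: 0.27, 4: 0.95}
t = otsu_threshold(list(shadow_index.values()))
shadows = sorted(i for i, s in shadow_index.items() if s > t)
print(round(t, 3), shadows)
```

The same function could be applied to per-object GLCM homogeneity values to obtain the candidate threshold.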

Figure 16.

Shadow detection results of Site 2 (a) KOMPSAT-3 image (b) UAV image.

The rectangular fit, GLCM homogeneity, and area features were calculated for each object, after which the objects were classified as building or non-building candidate objects based on thresholds selected through visual analysis and the Otsu algorithm. Specifically, an object was classified as a building candidate when its rectangular fit was greater than 0.8 and its GLCM homogeneity was greater than the threshold selected by the Otsu algorithm. The Otsu thresholds for GLCM homogeneity were 0.628 for the KOMPSAT-3 image and 0.617 for the UAV image. Subsequently, adjacent building objects were merged into one object. Then, as with Site 1, the Sun’s and sensor’s azimuth and elevation angles were considered to determine appropriate area thresholds. An object was regarded as a misdetection and removed if its area was larger than that of the largest building estimated in the image or if it was too small to be a building. For the KOMPSAT-3 image, objects with an area greater than 500 m² or less than 25 m² were removed; for the UAV image, objects with an area greater than 588 m² or less than 15 m² were removed.

Figure 17 shows the building candidate extraction results overlaid with the shadow detection results. Despite the removal of false detections using the area feature, some objects were still misdetected as buildings. Therefore, shadow objects were used to remove them and improve the building detection result.

Figure 17.

Building candidates (yellow) overlaid with shadow detection results (navy) of Site 2 (a) KOMPSAT-3 image (b) UAV image.

To remove misdetected building objects, the search distance between the centroid of a building and its shadow was estimated by considering the Sun’s and sensor’s azimuth and elevation angles together. Accordingly, a building candidate object was classified as a final building object when a shadow object existed within 15 m (KOMPSAT-3 image) or 10 m (UAV image) of its centroid along the shadow direction. Figure 18 shows the building objects detected using these shadow constraints.

Figure 18.

Final building detection results of Site 2 (a) KOMPSAT-3 image (b) UAV image.

However, the edges of the buildings detected in the KOMPSAT-3 image did not clearly delineate the building boundaries (Figure 18a), whereas in the UAV image most buildings were detected with clear boundaries (Figure 18b). This problem in the KOMPSAT-3 image was caused by its lower contrast compared with the UAV image and by mixed pixels at building boundaries, where buildings adjoin shadows or roads, during segmentation.

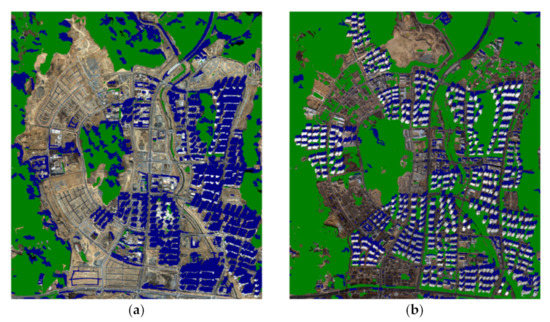

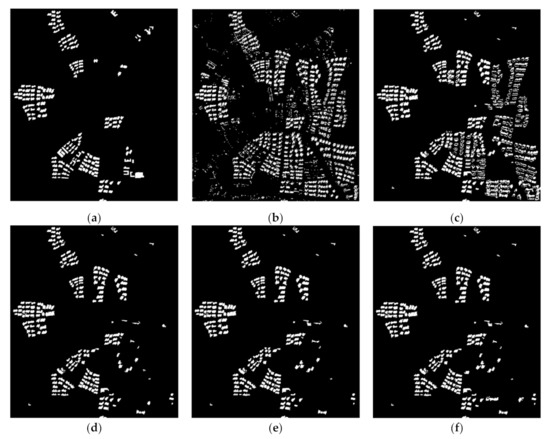

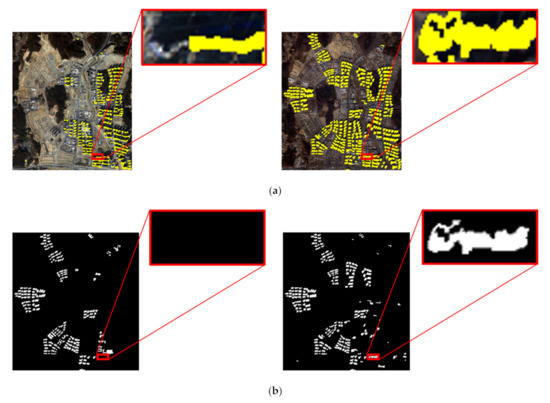

The building detection results of Site 2 are shown as binary images (Figure 19 and Figure 20). The accuracy evaluation results in Table 9 confirm that building detection in both the KOMPSAT-3 and UAV images was more accurate when shadow information was considered. The false alarm rates in the KOMPSAT-3 and UAV images were 0.152 and 0.065, respectively, when shadows were not considered, and 0.008 and 0.006, respectively, when they were. These results indicate that the building misdetection rate is significantly lower when shadows are considered. As in Site 1, the miss rate increased slightly because some building objects were removed when considering shadow information. Both the F1-score and the Kappa coefficient increased significantly when shadows were considered, indicating a substantial improvement in building detection. Based on the numerical assessment of building detection at both sites, we concluded that the building detection results of the proposed method are suitable as input to the building change detection step.

Figure 19.

Building detection results of Site 2 in KOMPSAT-3 image (a) Reference data (b) without considering shadows (c) considering shadows.

Figure 20.

Building detection results of Site 2 in UAV image (a) Reference data (b) without considering shadows (c) considering shadows.

Table 9.

Accuracy of building detection in Site 2.

4.2. Object-Based Building Change Detection Results

4.2.1. Site 1: Single-Sensor Imagery

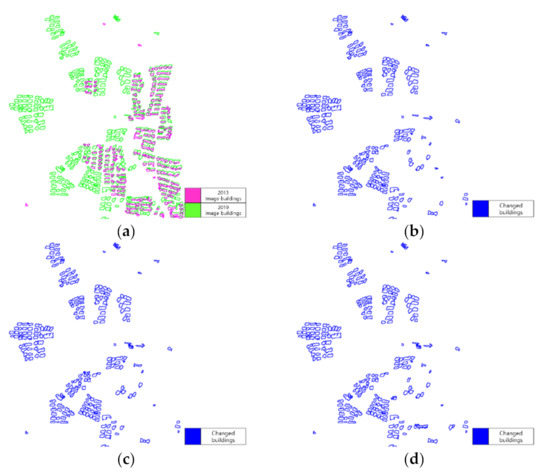

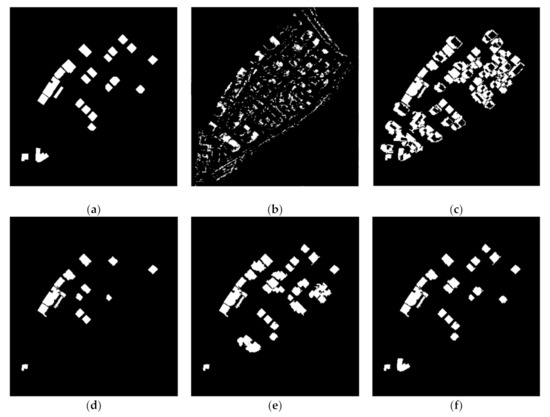

Based on the building detection results of the proposed method, object-based building change detection was conducted using the three different methods; the results are presented in Figure 21. Figure 21b shows the building change detection results obtained using the simple overlay rule. When detected building objects overlapped in the bitemporal images, they were deemed the same building and excluded from the changed building objects. Therefore, only detected building objects that did not overlap any building object in the other image were extracted as changed buildings.
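
The overlay rule amounts to flagging every object that shares no pixel with any building object in the other image. A minimal sketch, assuming each building object is given as a set of (row, col) pixel coordinates on a common co-registered grid (the data layout and function name are hypothetical):

```python
def overlay_changes(objs_t1, objs_t2):
    """Return ids of objects in each image that overlap no building object in the other image.

    objs_t1, objs_t2: dict object_id -> set of (row, col) pixels on a shared grid.
    """
    pix_t1 = set().union(*objs_t1.values())  # all building pixels in image 1
    pix_t2 = set().union(*objs_t2.values())  # all building pixels in image 2
    changed_t1 = sorted(i for i, p in objs_t1.items() if not (p & pix_t2))
    changed_t2 = sorted(i for i, p in objs_t2.items() if not (p & pix_t1))
    return changed_t1, changed_t2
```

Any partial overlap counts as a match, which is why, as discussed for Site 2, closely spaced buildings can mask real changes under this rule.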

Figure 21.

Object-based building change detection results of Site 1 (a) Overlaid building detection results with 2013 and 2019 images (b) Change detection results by overlaying (c) Change detection results considering object sizes according to the sensors’ elevation angle (d) Change detection results considering object direction according to the sensors’ azimuth angle.

The second method compared object sizes according to the sensor’s elevation angle. To this end, the sensor’s azimuth angle at the time of image acquisition was first used to identify the same buildings in the bitemporal images. According to the metadata, the sensor azimuth angle of the 2019 image is 207.623°, so the main direction of the buildings’ relief displacement is 27.623°. For the 2013 image, the relief displacement is negligible regardless of the azimuth angle because of the small incidence angle of 10.240°. Therefore, only the azimuth and elevation angles of the 2019 image were considered to identify the location relationship of buildings between the bitemporal images. After the same buildings were identified in the bitemporal images, the final changed buildings were detected by comparing the sizes of corresponding buildings according to the elevation angle. The elevation angles of the 2013 and 2019 images were 79.760° and 62.328°, respectively. Because the elevation angle of the 2019 image is lower, the same building should appear larger in the 2019 image than in the 2013 image. Figure 21c shows the building change detection results obtained by considering the sizes of the corresponding building objects.
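
The size argument follows from relief displacement: the roof of a building of height h is displaced horizontally by about h / tan(elevation angle) relative to its footprint, so a lower elevation angle exposes more of the façade and enlarges the detected object. The sketch below evaluates this for a hypothetical 50 m building at the two elevation angles and encodes the size rule as a simple inequality; the building height, the inequality without tolerance, and the function names are illustrative assumptions.

```python
import math


def relief_displacement(height_m, elevation_deg):
    """Approximate horizontal roof offset (m) for a building of height_m seen at elevation_deg."""
    return height_m / math.tan(math.radians(elevation_deg))


def size_consistent(area_high_elev, area_low_elev):
    """Assumed rule: the lower-elevation view should show the same building at least as large."""
    return area_low_elev >= area_high_elev


d_2013 = relief_displacement(50.0, 79.760)  # about 9.0 m
d_2019 = relief_displacement(50.0, 62.328)  # about 26.2 m
print(round(d_2013, 1), round(d_2019, 1))
```

A pair of corresponding objects that violates the inequality (the 2019 object smaller than the 2013 one) would then be flagged as a change.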

The third method identified corresponding building objects between the images using the sensor’s azimuth angle. The direction between the centroids of the corresponding objects was computed and compared with the azimuth angle of the 2019 image. Objects whose direction was within 3° of the azimuth angle of the 2019 image (i.e., 207.623° ± 3°) were determined to be unchanged and excluded from the changed building objects. Figure 21d shows the changed building objects detected using the direction information.
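
The direction test can be sketched as computing the bearing (clockwise from north) between the centroids of two corresponding objects and checking whether it lies within ±3° of the sensor’s azimuth. Assumptions: centroids are in map coordinates (east, north), and the caller chooses which centroid is the origin; the text does not state this convention, so `c_ref`, `c_other`, and the function names are hypothetical.

```python
import math


def bearing_deg(p_from, p_to):
    """Bearing from p_from to p_to in degrees clockwise from north; points are (east, north)."""
    de = p_to[0] - p_from[0]
    dn = p_to[1] - p_from[1]
    return math.degrees(math.atan2(de, dn)) % 360.0


def angular_diff(a, b):
    """Smallest absolute difference between two angles in degrees (handles the 0/360 wrap)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def direction_unchanged(c_ref, c_other, sensor_azimuth_deg, tol_deg=3.0):
    """True if the centroid-to-centroid direction matches the sensor azimuth within tol_deg."""
    return angular_diff(bearing_deg(c_ref, c_other), sensor_azimuth_deg) <= tol_deg
```

Because only centroids are compared, this test is insensitive to how well object boundaries are delineated, but a partially detected object can shift its centroid enough to break the match.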

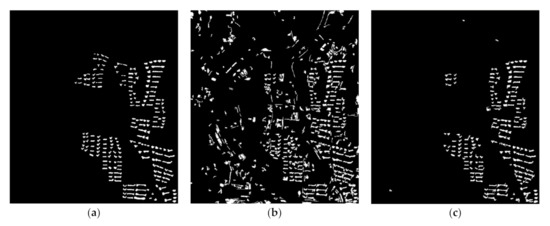

To compare the change detection results with the reference data, the results of the three methods are presented as binary images (Figure 22). For a relative evaluation of performance, the MBI-based building change detection method proposed in [19] and a pixel-by-pixel comparison of the building objects extracted by the proposed approach were also applied. Table 10 summarizes the quantitative results against the reference data. The MBI-based method showed the lowest F1-score and Kappa values, 0.4 and 0.358, respectively. The pixel-based comparison obtained an F1-score of 0.531 and a Kappa coefficient of 0.5. The three object-based change detection methods improved these values to up to 0.75. Their results showed no significant difference, although the direction-based method achieved slightly lower accuracy than the others. The results are analyzed in detail in the Discussion section.

Figure 22.

Building change detection results of Site 1 (a) Reference data (b) MBI [19] (c) pixel-based comparison (d) considering object overlay (e) considering object size by elevation angle (f) considering object direction by azimuth angle.

Table 10.

Accuracy of building change detection in Site 1.

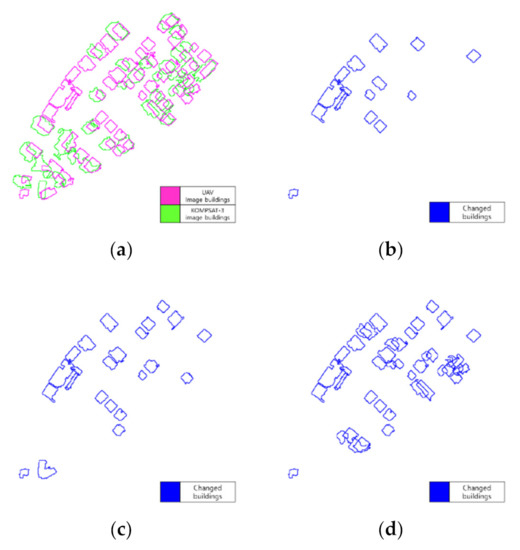

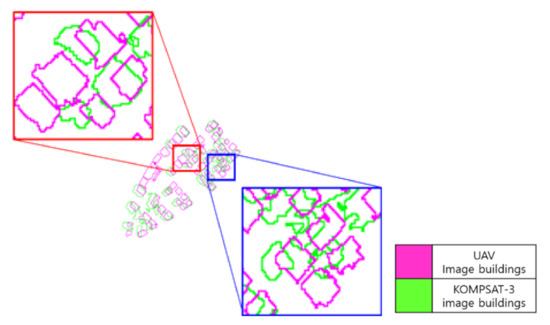

4.2.2. Site 2: Multi-Sensor Imagery

As in Site 1, building change detection in Site 2 was conducted using the three methods based on the extracted building objects. Figure 23 shows the results. With the first method, which considers object overlay (Figure 23b), changed objects were under-detected because any object that partially overlapped an object in the other image was determined to be unchanged. Because of the large relief displacement of buildings in the KOMPSAT-3 image due to its low elevation angle, closely located buildings overlapped one another even though they were not corresponding buildings between the images. Hence, accurate building change detection requires a step that identifies the same building objects among the building objects in the two images.

Figure 23.

Object-based building change detection results of Site 2 (a) Overlaid building detection results with KOMPSAT-3 and UAV images (b) Change detection results by overlaying (c) Change detection results by considering object sizes according to the sensors’ elevation angles (d) Change detection results by considering object direction according to the sensors’ azimuth angles.

The second, size-based method first finds the corresponding building objects using the azimuth angles of the KOMPSAT-3 and UAV images. Using the sensor’s azimuth angle, a building object located within 6 m of the principal direction between the images was classified as the corresponding building object. Subsequently, the final changed buildings were detected by comparing the sizes of corresponding building objects according to the elevation angles. Because the elevation angle of the KOMPSAT-3 image is lower than that of the UAV image, a building should appear larger in the KOMPSAT-3 image than in the UAV image. Figure 23c shows the final building change detection result.
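
The correspondence search and size comparison described above could be sketched as follows. We interpret “within 6 m of the principal direction” as a perpendicular offset of at most 6 m from a line through the KOMPSAT-3 centroid along that direction; this interpretation, the additional along-line limit `max_along_m`, the (east, north) coordinate layout, and all names are assumptions, not the authors’ specification.

```python
import math


def offsets(c_a, c_b, direction_deg):
    """Return (perpendicular, along) distances of c_b from the line through c_a.

    Coordinates are (east, north) in metres; direction_deg is clockwise from north.
    """
    a = math.radians(direction_deg)
    ue, un = math.sin(a), math.cos(a)        # unit vector along the direction
    de, dn = c_b[0] - c_a[0], c_b[1] - c_a[1]
    perpendicular = abs(de * un - dn * ue)   # magnitude of the 2-D cross product
    along = de * ue + dn * un                # signed projection onto the direction
    return perpendicular, along


def size_based_changes(k3_objs, uav_objs, direction_deg, max_offset_m=6.0, max_along_m=50.0):
    """Return ids of KOMPSAT-3 objects flagged as changed.

    k3_objs, uav_objs: dict id -> ((east, north) centroid, area in m^2).
    max_along_m is a hypothetical search limit along the direction.
    """
    changed = []
    for kid, (kc, k_area) in k3_objs.items():
        matches = []
        for uc, u_area in uav_objs.values():
            perp, along = offsets(kc, uc, direction_deg)
            if perp <= max_offset_m and abs(along) <= max_along_m:
                matches.append((perp, u_area))
        if not matches:
            changed.append(kid)  # no corresponding building found
            continue
        _, u_area = min(matches)  # candidate closest to the line
        if k_area < u_area:       # the lower-elevation KOMPSAT-3 view should not be smaller
            changed.append(kid)
    return sorted(changed)
```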

The third method identified the same buildings using the azimuth angles of the KOMPSAT-3 and UAV images. The centroid coordinates of each building were obtained, and the direction between the two building centroids was calculated and compared with the azimuth angle of the KOMPSAT-3 image (81.429°). Corresponding building objects whose calculated direction differed from the azimuth angle by less than 3° were classified as unchanged buildings (Figure 23d).

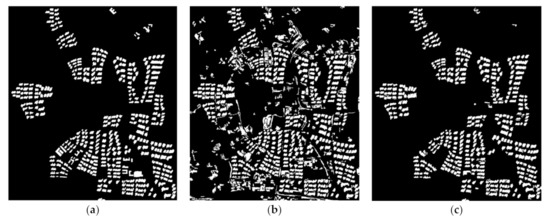

As with the building detection results, the building change detection results are presented as binary images in Figure 24, and the quantitative evaluation results are reported in Table 11. The MBI-based method and the pixel-based comparison had F1-scores of 0.369 and 0.470, respectively. The three object-based methods significantly improved accuracy but, unlike in Site 1, their accuracies differed from one another. The method comparing object sizes according to the elevation angles showed a relatively low F1-score of 0.635 because several adjacent buildings were falsely detected as changed. The method considering the direction between objects had the highest F1-score, 0.886. The building change detection performance in Site 2 is analyzed in detail in the Discussion section.

Figure 24.

Building change detection results of Site 2 (a) Reference data (b) MBI [19] (c) pixel-based comparison (d) considering object overlay (e) considering object size by elevation angle (f) considering object direction by azimuth angle.

Table 11.

Accuracy of building change detection in Site 2.

5. Discussion

5.1. Site 1: Single-Sensor Imagery

Although the building detection results for both the 2013 and 2019 images are reliable, the accuracy for the 2013 image is slightly lower than that for the 2019 image. As Figure 25 shows, some buildings were under construction and were not detected by the proposed method. Such ongoing building changes are difficult to identify because the buildings and their shadows are hard to recognize even by visual inspection.

Figure 25.

Detailed example of undetected buildings in the 2013 image (a) Reference data (b) building detection result overlaid with 2013 image (left) and binary building detection result (right).

As mentioned earlier, building detection results strongly affect building change detection results. If a building is not properly detected in one or both of the bitemporal images, the accuracy of building change detection decreases. Even when only part of a building object is missed, change detection accuracy decreases (Figure 26). In the case where the detected shapes of the same building differ between the two images, the first (overlay-based) and second (size-based) methods correctly excluded the objects from the change detection results. However, the third method, which considers the direction between two objects according to the sensor’s azimuth angle, determined these two objects to be different buildings, because the partially detected shape shifts the object centroid so that the direction between the centroids no longer matches the azimuth angle within the tolerance. This contributed to the over-detection of changed building objects.

Figure 26.

Example of falsely detected building shapes affecting object-based building change detection result by the direction-based method (a) 2013 and 2019 images overlaid with building detection results (b) Reference data of building change detection (left) and change detection result (right).

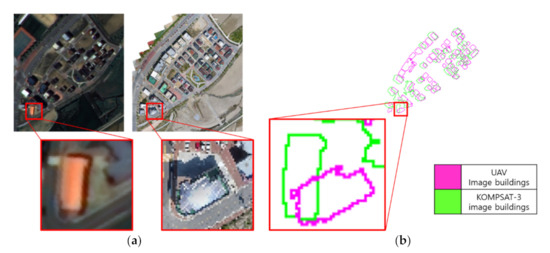

5.2. Site 2: Multi-Sensor Imagery

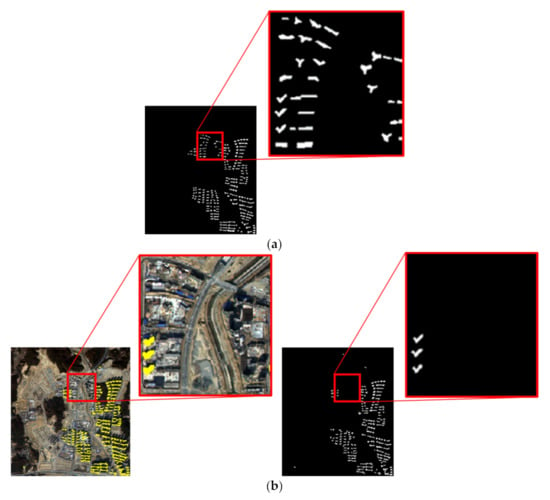

Unlike Site 1, Site 2 did not have any buildings under construction that were difficult to detect even by visual inspection. However, the buildings in the scene were located close to each other, resulting in one-to-many correspondences of building objects between the images. This resulted in poor building change detection performance when only the overlay of building objects was considered (Figure 27). This tendency is confirmed by the high miss rate of 0.459 and by F1-score and Kappa values below 0.68 for the change detection results of the overlay method.

Figure 27.

Detailed example of missed detection of building changes by the overlaying method.

The second method, which compares object sizes by considering the sensor’s elevation angle, was strongly affected by the building detection results. The shapes of some buildings in the KOMPSAT-3 image were not properly detected, as shown in Figure 28. The sizes of these objects were therefore calculated to be smaller than those of the same buildings in the UAV image, resulting in false change detections. Consequently, this method showed the lowest F1-score of the three methods, 0.655.

Figure 28.

Detailed example of falsely detected building changes by the object size-based method.

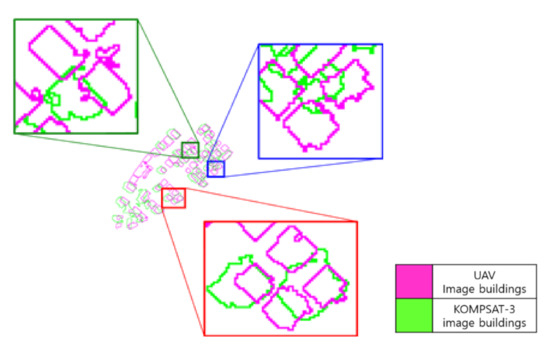

The third method, which considers the direction between objects according to the sensor’s azimuth angle, achieved the highest accuracy among the three methods. Unlike the overlay method, it detected changed buildings properly even when parts of the objects overlapped adjacent building objects. Unlike the size-based method, it achieved accurate change detection despite poorly delineated building boundaries, because it relies on the direction between object centroids rather than on object size. Moreover, the third method detected buildings that were demolished and reconstructed as changed buildings, as shown in Figure 29, whereas the other two methods did not.

Figure 29.

Detailed example of the reconstructed building (a) before and after reconstruction of the building in KOMPSAT-3 and UAV images (b) overlaid building detection results.

6. Conclusions

This study proposed an object-based building detection and building change detection method using the Sun’s and sensors’ azimuth and elevation angles. To evaluate the performance of the proposed method, bitemporal images acquired from a single sensor and from multiple sensors were used. Both study sites are densely populated with high-rise buildings that can cause significant relief displacement depending on the sensor’s azimuth and elevation angles. Before change detection, building candidate objects were extracted using feature information, and the final building objects were then detected using the position of each building’s shadow according to the Sun’s azimuth angle. Finally, object-based building change detection was conducted on the building detection results using three different methods: the simple overlaying method, the object size-based method, and the object direction-based method.

In Site 1, the change detection results of the three methods did not differ significantly. The main reason is that the sensor’s azimuth angles of the bitemporal images did not differ enough to affect the results, and there were no cases in which a building object overlapped multiple adjacent buildings. Furthermore, the difference in building object size according to the elevation angle was noticeable because the elevation angles of the bitemporal images differed significantly. Consequently, building change detection using simple overlay posed no significant problems, and comparing building sizes according to the elevation angles had no significant disadvantages. Similarly, comparing the direction between building objects according to the azimuth angles also yielded relatively accurate building change detection results because each building object was well separated from its neighbors in the bitemporal images. In conclusion, the three building change detection methods yielded similar quantitative results in Site 1. Where high-rise buildings are well separated, as in Site 1, the simple overlay method alone may be a reasonable choice from an efficiency perspective.

In Site 2, building objects in the bitemporal images overlapped multiple adjacent buildings because of the close spacing between buildings. Furthermore, the lack of clear boundaries between building objects and shadows, and between building objects and adjacent roads, caused building misdetections that prevented an accurate comparison of building sizes. Consequently, the third method, which considers the object direction using the sensor’s azimuth angle, achieved the highest building change detection accuracy among the three methods. Moreover, the third method correctly detected reconstructed buildings as changed buildings. Therefore, we conclude that the direction-based method using the centroids of corresponding building objects is the most suitable of the three methods across the image acquisition conditions examined in this study. The method showed reliable and stable results at both sites, constructed with single-sensor and multi-sensor images, respectively.

It should be noted that the proposed building change detection method depends strongly on building detection performance. Although we detected buildings using an object-based approach with the help of shadow information, any building detection method can be used to carry out the change detection. To further improve building change detection, future work will focus on more accurate building object detection. Further research will also include additional experiments using images acquired with different azimuth and elevation angles and covering large areas where high-rise buildings are concentrated.

Author Contributions

Conceptualization, Y.H.; validation, S.J.; formal analysis, S.J. and Y.H.; investigation, S.J.; resources, Y.H. and W.H.L.; data curation, Y.H. and S.J.; writing—original draft preparation, S.J.; writing—review and editing, Y.H. and W.H.L.; visualization, S.J.; funding acquisition, Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2021R1A2C2093671).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Huang, X.; Zhang, L. A multidirectional and multiscale morphological index for automatic building extraction from multispectral GeoEye-1 imagery. Photogramm. Eng. Remote Sens. 2011, 77, 721–732.
2. Attarzadeh, R.; Momeni, M. Object-based rule sets and its transferability for building extraction from high resolution satellite imagery. J. Indian Soc. Remote Sens. 2018, 46, 169–178.
3. Du, S.; Zhang, Y.; Zou, Z.; Xu, S.; He, X.; Chen, S. Automatic building extraction from LiDAR data fusion of point and grid-based features. ISPRS J. Photogramm. Remote Sens. 2017, 130, 294–307.
4. Park, H.; Choi, J.; Park, W.; Park, H. Modified S2CVA algorithm using cross-sharpened images for unsupervised change detection. Remote Sens. 2018, 10, 3301.
5. Pang, S.; Hu, X.; Wang, Z.; Lu, Y.; Balzter, H.; Blaschke, T.; Thenkabail, P.S. Object-based analysis of airborne LiDAR data for building change detection. Remote Sens. 2014, 6, 10733–10749.
6. Meng, X.; Currit, N.; Wang, L. Morphology-based building detection from airborne lidar data. In Proceedings of the American Society for Photogrammetry and Remote Sensing-ASPRS Annual Conference, Portland, OR, USA, 28 April–2 May 2008; Volume 2, pp. 484–492.
7. Yan, Y.; Tan, Z.; Su, N.; Zhao, C. Building extraction based on an optimized stacked sparse autoencoder of structure and training samples using LIDAR DSM and optical images. Sensors 2017, 17, 1957.
8. Saha, S.; Bovolo, F.; Bruzzone, L. Building change detection in VHR SAR images via unsupervised deep transcoding. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1917–1929.
9. Zhao, L.; Zhou, X.; Kuang, G. Building detection from urban SAR image using building characteristics and contextual information. EURASIP J. Adv. Signal Process. 2013, 2013, 1–16.
10. Zha, Y.; Gao, J.; Ni, S. Use of normalized difference built-up index in automatically mapping urban areas from TM imagery. Int. J. Remote Sens. 2003, 24, 583–594.
11. Xu, H. A new index for delineating built-up land features in satellite imagery. Int. J. Remote Sens. 2008, 29, 4269–4276.
12. As-syakur, A.R.; Adnyana, I.W.S.; Arthana, I.W.; Nuarsa, I.W. Enhanced built-UP and bareness index (EBBI) for mapping built-UP and bare land in an urban area. Remote Sens. 2012, 4, 2957–2970.
13. Sirmaçek, B.; Ünsalan, C. Building detection from aerial images using invariant color features and shadow information. In Proceedings of the 2008 23rd International Symposium on Computer and Information Sciences, Istanbul, Turkey, 27–29 October 2008; pp. 1–5.
14. Liu, H.; Yang, M.; Chen, J.; Hou, J.; Deng, M. Line-constrained shape feature for building change detection in VHR remote sensing imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 410.
15. Li, J.; Cao, J.; Feyissa, M.E.; Yang, X. Automatic building detection from very high-resolution images using multiscale morphological attribute profiles. Remote Sens. Lett. 2020, 11, 640–649.
16. Huang, X.; Zhang, L.; Zhu, T. Building change detection from multitemporal high-resolution remotely sensed images based on a morphological building index. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 7, 105–115.
17. Huang, X.; Zhu, T.; Zhang, L.; Tang, Y. A novel building change index for automatic building change detection from high-resolution remote sensing imagery. Remote Sens. Lett. 2014, 5, 713–722.
18. Sheikh, M.A.A.; Kole, A.; Maity, T. A multi-level approach for change detection of buildings using satellite imagery. Int. J. Artif. Intell. Tools 2018, 27, 1850031.
19. Javed, A.; Jung, S.; Lee, W.H.; Han, Y. Object-based building change detection by fusing pixel-level change detection results generated from morphological building index. Remote Sens. 2020, 12, 2952.
20. Gong, J.; Hu, X.; Pang, S.; Li, K. Patch matching and dense CRF-based co-refinement for building change detection from bi-temporal aerial images. Sensors 2019, 19, 1557.
21. Wang, B.; Choi, J.; Choi, S.; Lee, S.; Wu, P.; Gao, Y. Image fusion-based land cover change detection using multi-temporal high-resolution satellite images. Remote Sens. 2017, 9, 804.
22. Keyport, R.N.; Oommen, T.; Martha, T.R.; Sajinkumar, K.S.; Gierke, J.S. A comparative analysis of pixel- and object-based detection of landslides from very high-resolution images. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 1–11.
23. Tang, Y.; Zhang, L.; Huang, X. Object-oriented change detection based on the Kolmogorov–Smirnov test using high-resolution multispectral imagery. Int. J. Remote Sens. 2011, 32, 5719–5740.
24. Ma, L.; Li, M.; Blaschke, T.; Ma, X.; Tiede, D.; Cheng, L.; Chen, Z.; Chen, D. Object-based change detection in urban areas: The effects of segmentation strategy, scale, and feature space on unsupervised methods. Remote Sens. 2016, 8, 761.
25. Im, J.; Jensen, J.R.; Tullis, J.A. Object-based change detection using correlation image analysis and image segmentation. Int. J. Remote Sens. 2008, 29, 399–423.
26. Leichtle, T.; Geiß, C.; Wurm, M.; Lakes, T.; Taubenböck, H. Unsupervised change detection in VHR remote sensing imagery: An object-based clustering approach in a dynamic urban environment. Int. J. Appl. Earth Obs. Geoinf. 2017, 54, 15–27.
27. Zhang, X.; Xiao, P.; Feng, X.; Yuan, M. Separate segmentation of multi-temporal high-resolution remote sensing images for object-based change detection in urban area. Remote Sens. Environ. 2017, 201, 243–255.
28. Peng, J.; Liu, Y.C. Model and context-driven building extraction in dense urban aerial images. Int. J. Remote Sens. 2005, 26, 1289–1307.
29. Katartzis, A.; Sahli, H. A stochastic framework for the identification of building rooftops using a single remote sensing image. IEEE Trans. Geosci. Remote Sens. 2008, 46, 259–271.
30. Izadi, M.; Saeedi, P. Three-dimensional polygonal building model estimation from single satellite images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2254–2272.
31. Ok, A.O. Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts. ISPRS J. Photogramm. Remote Sens. 2013, 86, 21–40.
32. Gao, X.; Wang, M.; Yang, Y.; Li, G. Building extraction from RGB VHR images using shifted shadow algorithm. IEEE Access 2018, 6, 22034–22045.
33. Liasis, G.; Stavrou, S. Satellite images analysis for shadow detection and building height estimation. ISPRS J. Photogramm. Remote Sens. 2016, 119, 437–450.
34. Zhou, G.; Sha, H. Building shadow detection on ghost images. Remote Sens. 2020, 12, 679.
35. Hu, L.; Zheng, J.; Gao, F. A building extraction method using shadow in high resolution multispectral images. Int. Geosci. Remote Sens. Symp. 2011, 1862–1865.
36. Jung, S.J.; Kim, T.H.; Lee, W.H.; Han, Y.K. Object-based change detection using various pixel-based change detection results and registration noise. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2019, 37, 481–489.
37. Jung, S.; Park, J.; Lee, W.H.; Han, Y. Object-based building change detection using azimuth and elevation angles of sun and platform in the multi-sensor images. Korean J. Remote Sens. 2020, 36, 989–1006.
38. Benarchid, O.; Raissouni, N. Building extraction using object-based classification and shadow information in very high resolution multispectral images, a case study: Tetuan, Morocco. Can. J. Image Process. Comput. Vis. 2013, 4, 1–8.
39. Cooner, A.J.; Shao, Y.; Campbell, J.B. Detection of urban damage using remote sensing and machine learning algorithms: Revisiting the 2010 Haiti earthquake. Remote Sens. 2016, 8, 868.
40. Haralick, R.M.; Dinstein, I.; Shanmugam, K. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.
41. Sheen, D. A generalized Green’s theorem. Appl. Math. Lett. 1992, 5, 95–98.
42. Suliman, A.; Zhang, Y. Development of line-of-sight digital surface model for co-registering off-nadir VHR satellite imagery with elevation data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1913–1923.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).