Exploiting Structured CNNs for Semantic Segmentation of Unstructured Point Clouds from LiDAR Sensor

Abstract

1. Introduction

2. Related Work

2.1. Projection-Based Methods

2.2. Point-Based Methods

3. Proposed Method

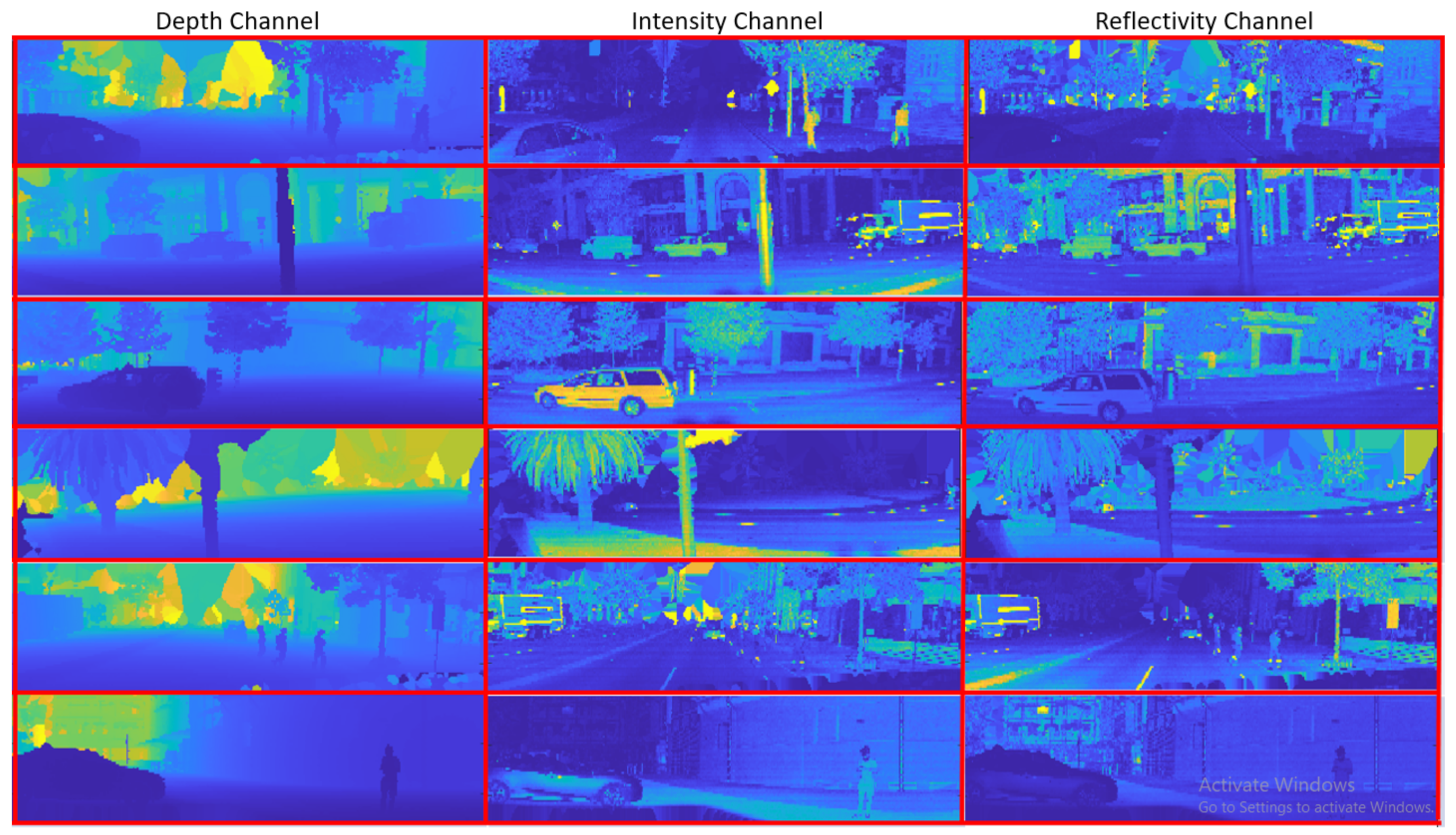

3.1. Constructing Pseudo Images from Point Clouds

3.1.1. Slice Extraction

3.1.2. 3D to 2D Projection

3.2. Image Enhancement

3.2.1. Normalization

3.2.2. Histogram Equalization

3.2.3. Decorrelation Stretch

3.3. 2D CNN Network

3.4. Back Projection to 3D

3.5. Fusion of 3D Sub-Point Clouds for Final Segmentation

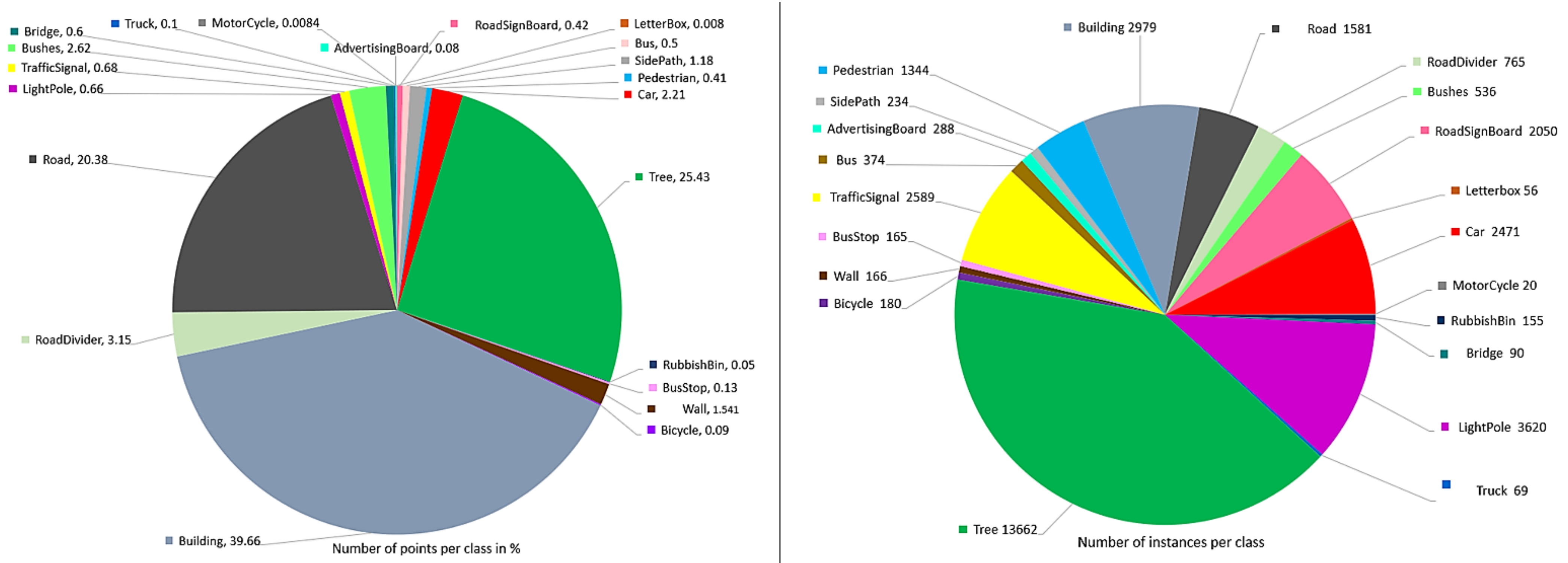

4. Proposed Dataset

4.1. Unlabeled Frames

4.2. Labeled Frames

5. Experiments

5.1. PC-Urban_V2 Dataset

Results on PC-Urban_V2

5.2. Semantic KITTI Dataset

Results on Semantic KITTI Dataset

5.3. Semantic3D Dataset

Results on Semantic3D

5.4. Audi Dataset

Results on Audi Dataset

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Angelina Uy, M.; Hee Lee, G. PointNetVLAD: Deep point cloud based retrieval for large-scale place recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4470–4479.

2. Hammoudi, K.; Dornaika, F.; Soheilian, B.; Paparoditis, N. Extracting wire-frame models of street facades from 3D point clouds and the corresponding cadastral map. IAPRS 2010, 38, 91–96.

3. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

4. Zhao, Y.; Birdal, T.; Deng, H.; Tombari, F. 3D Point Capsule Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1009–1018.

5. Lei, H.; Akhtar, N.; Mian, A. Octree Guided CNN With Spherical Kernels for 3D Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

6. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–7 December 2017; pp. 5099–5108.

7. Wu, W.; Qi, Z.; Fuxin, L. PointConv: Deep Convolutional Networks on 3D Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9621–9630.

8. Liu, X.; Qi, C.R.; Guibas, L.J. Flownet3d: Learning scene flow in 3d point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 529–537.

9. Komarichev, A.; Zhong, Z.; Hua, J. A-CNN: Annularly Convolutional Neural Networks on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7421–7430.

10. Yi, L.; Su, H.; Guo, X.; Guibas, L.J. Syncspeccnn: Synchronized spectral cnn for 3d shape segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2282–2290.

11. Simonovsky, M.; Komodakis, N. Dynamic edge-conditioned filters in convolutional neural networks on graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3693–3702.

12. Qi, X.; Liao, R.; Jia, J.; Fidler, S.; Urtasun, R. 3d graph neural networks for rgbd semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 21–26 July 2017; pp. 5199–5208.

13. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

14. Roveri, R.; Rahmann, L.; Oztireli, C.; Gross, M. A network architecture for point cloud classification via automatic depth images generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4176–4184.

15. Lawin, F.J.; Danelljan, M.; Tosteberg, P.; Bhat, G.; Khan, F.S.; Felsberg, M. Deep projective 3D semantic segmentation. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Ystad, Sweden, 22–24 August 2017; Springer: Cham, Switzerland, 2017; pp. 95–107.

16. Boulch, A.; Le Saux, B.; Audebert, N. Unstructured Point Cloud Semantic Labeling Using Deep Segmentation Networks. 3DOR Eurographics 2017, 2, 7.

17. Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3d semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1534–1543.

18. Audebert, N.; Le Saux, B.; Lefèvre, S. Semantic segmentation of earth observation data using multimodal and multi-scale deep networks. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Cham, Switzerland, 2016; pp. 180–196.

19. Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. Rangenet++: Fast and accurate lidar semantic segmentation. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), The Venetian Macao, Macau, China, 4–8 November 2019; pp. 4213–4220.

20. Wu, B.; Wan, A.; Yue, X.; Keutzer, K. Squeezeseg: Convolutional neural nets with recurrent crf for real-time road-object segmentation from 3d lidar point cloud. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–26 May 2018; pp. 1887–1893.

21. Ibrahim, M.; Akhtar, N.; Wise, M.; Mian, A. Annotation Tool and Urban Dataset for 3D Point Cloud Semantic Segmentation. IEEE Access 2021, 9, 35984–35996.

22. Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–3 November 2019.

23. Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. Semantic3d.net: A new large-scale point cloud classification benchmark. arXiv 2017, arXiv:1704.03847.

24. Geyer, J.; Kassahun, Y.; Mahmudi, M.; Ricou, X.; Durgesh, R.; Chung, A.S.; Hauswald, L.; Pham, V.H.; Mühlegg, M.; Dorn, S.; et al. A2D2: AEV Autonomous Driving Dataset. Available online: http://www.a2d2.audi (accessed on 14 April 2020).

25. Graham, B.; Engelcke, M.; Van Der Maaten, L. 3d semantic segmentation with submanifold sparse convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

26. Huang, J.; You, S. Point cloud labeling using 3d convolutional neural network. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2670–2675.

27. Dai, A.; Ritchie, D.; Bokeloh, M.; Reed, S.; Sturm, J.; Nießner, M. Scancomplete: Large-scale scene completion and semantic segmentation for 3d scans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4578–4587.

28. Rethage, D.; Wald, J.; Sturm, J.; Navab, N.; Tombari, F. Fully-convolutional point networks for large-scale point clouds. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 596–611.

29. Meng, H.Y.; Gao, L.; Lai, Y.K.; Manocha, D. Vv-net: Voxel vae net with group convolutions for point cloud segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 8500–8508.

30. Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

31. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–10 December 2015; pp. 91–99.

32. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

33. Takikawa, T.; Acuna, D.; Jampani, V.; Fidler, S. Gated-scnn: Gated shape cnns for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 5229–5238.

34. Min, L.; Cui, Q.; Jin, Z.; Zeng, T. Inhomogeneous image segmentation based on local constant and global smoothness priors. Digit. Signal Process. 2021, 111, 102989.

35. Fang, Y.; Zeng, T. Learning deep edge prior for image denoising. Comput. Vis. Image Underst. 2020, 200, 103044.

36. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

37. Tatarchenko, M.; Park, J.; Koltun, V.; Zhou, Q.Y. Tangent convolutions for dense prediction in 3d. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3887–3896.

38. Yang, B.; Luo, W.; Urtasun, R. Pixor: Real-time 3d object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7652–7660.

39. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

40. Wu, B.; Zhou, X.; Zhao, S.; Yue, X.; Keutzer, K. Squeezesegv2: Improved model structure and unsupervised domain adaptation for road-object segmentation from a lidar point cloud. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4376–4382.

41. Dai, A.; Nießner, M. 3dmv: Joint 3d-multi-view prediction for 3d semantic scene segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 452–468.

42. Chiang, H.Y.; Lin, Y.L.; Liu, Y.C.; Hsu, W.H. A unified point-based framework for 3d segmentation. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Québec City, QC, Canada, 16–19 September 2019; pp. 155–163.

43. Jaritz, M.; Gu, J.; Su, H. Multi-view pointnet for 3d scene understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

44. Xie, S.; Liu, S.; Chen, Z.; Tu, Z. Attentional shapecontextnet for point cloud recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4606–4615.

45. Belongie, S.; Malik, J.; Puzicha, J. Shape matching and object recognition using shape contexts. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 11, 509–522.

46. Qiu, S.; Anwar, S.; Barnes, N. Geometric back-projection network for point cloud classification. IEEE Trans. Multimed. 2021.

47. Qiu, S.; Anwar, S.; Barnes, N. Semantic Segmentation for Real Point Cloud Scenes via Bilateral Augmentation and Adaptive Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 1757–1767.

48. Qiu, S.; Anwar, S.; Barnes, N. Dense-resolution network for point cloud classification and segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3813–3822.

49. Deng, H.; Birdal, T.; Ilic, S. Ppfnet: Global context aware local features for robust 3d point matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 195–205.

50. Li, J.; Chen, B.M.; Hee Lee, G. So-net: Self-organizing network for point cloud analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9397–9406.

51. Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–7 December 2017; pp. 3856–3866.

52. Jiang, M.; Wu, Y.; Zhao, T.; Zhao, Z.; Lu, C. Pointsift: A sift-like network module for 3d point cloud semantic segmentation. arXiv 2018, arXiv:1807.00652.

53. Zhao, H.; Jiang, L.; Fu, C.W.; Jia, J. Pointweb: Enhancing local neighborhood features for point cloud processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5565–5573.

54. Zhang, Z.; Hua, B.S.; Yeung, S.K. Shellnet: Efficient point cloud convolutional neural networks using concentric shells statistics. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 1607–1616.

55. Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Randla-net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 11108–11117.

56. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

57. Yang, J.; Zhang, Q.; Ni, B.; Li, L.; Liu, J.; Zhou, M.; Tian, Q. Modeling point clouds with self-attention and gumbel subset sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3323–3332.

58. Chen, L.Z.; Li, X.Y.; Fan, D.P.; Wang, K.; Lu, S.P.; Cheng, M.M. LSANet: Feature learning on point sets by local spatial aware layer. arXiv 2019, arXiv:1905.05442.

59. Zhao, C.; Zhou, W.; Lu, L.; Zhao, Q. Pooling scores of neighboring points for improved 3D point cloud segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1475–1479.

60. Moenning, C.; Dodgson, N.A. Fast Marching Farthest Point Sampling; Technical Report; University of Cambridge, Computer Laboratory: Cambridge, UK, 2003.

61. Hua, B.S.; Tran, M.K.; Yeung, S.K. Pointwise convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 984–993.

62. Engelmann, F.; Kontogianni, T.; Leibe, B. Dilated point convolutions: On the receptive field size of point convolutions on 3D point clouds. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual, 31 May–31 August 2020; pp. 9463–9469.

63. Landrieu, L.; Simonovsky, M. Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4558–4567.

64. Li, Y.; Tarlow, D.; Brockschmidt, M.; Zemel, R. Gated graph sequence neural networks. arXiv 2015, arXiv:1511.05493.

65. Yang, Y.; Feng, C.; Shen, Y.; Tian, D. Foldingnet: Point cloud auto-encoder via deep grid deformation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 206–215.

66. Shen, Y.; Feng, C.; Yang, Y.; Tian, D. Mining point cloud local structures by kernel correlation and graph pooling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4548–4557.

67. Landrieu, L.; Boussaha, M. Point cloud oversegmentation with graph-structured deep metric learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7440–7449.

68. Zhiheng, K.; Ning, L. PyramNet: Point cloud pyramid attention network and graph embedding module for classification and segmentation. arXiv 2019, arXiv:1906.03299.

69. Wang, L.; Huang, Y.; Hou, Y.; Zhang, S.; Shan, J. Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10296–10305.

70. Ma, Y.; Guo, Y.; Liu, H.; Lei, Y.; Wen, G. Global context reasoning for semantic segmentation of 3D point clouds. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2931–2940.

71. Huang, Q.; Wang, W.; Neumann, U. Recurrent slice networks for 3d segmentation of point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2626–2635.

72. Engelmann, F.; Kontogianni, T.; Hermans, A.; Leibe, B. Exploring spatial context for 3D semantic segmentation of point clouds. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 716–724.

73. Zhao, Z.; Liu, M.; Ramani, K. DAR-Net: Dynamic aggregation network for semantic scene segmentation. arXiv 2019, arXiv:1907.12022.

74. Liu, F.; Li, S.; Zhang, L.; Zhou, C.; Ye, R.; Wang, Y.; Lu, J. 3DCNN-DQN-RNN: A deep reinforcement learning framework for semantic parsing of large-scale 3D point clouds. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5678–5687.

75. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3d point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

76. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

77. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 11621–11631.

78. Zolanvari, S.; Ruano, S.; Rana, A.; Cummins, A.; da Silva, R.E.; Rahbar, M.; Smolic, A. DublinCity: Annotated LiDAR Point Cloud and its Applications. arXiv 2019, arXiv:1909.03613.

79. Tan, W.; Qin, N.; Ma, L.; Li, Y.; Du, J.; Cai, G.; Yang, K.; Li, J. Toronto-3D: A Large-scale Mobile LiDAR Dataset for Semantic Segmentation of Urban Roadways. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020.

80. Roynard, X.; Deschaud, J.E.; Goulette, F. Paris-Lille-3D: A large and high-quality ground-truth urban point cloud dataset for automatic segmentation and classification. Int. J. Robot. Res. 2018, 37, 545–557.

81. Gehrung, J.; Hebel, M.; Arens, M.; Stilla, U. An approach to extract moving objects from MLS data using a volumetric background representation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 107.

82. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. The KITTI Vision Benchmark Suite. Available online: http://www.cvlibs.net/datasets/kitti (accessed on 29 July 2015).

83. Riemenschneider, H.; Bódis-Szomorú, A.; Weissenberg, J.; Van Gool, L. Learning where to classify in multi-view semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 516–532.

84. Vallet, B.; Brédif, M.; Serna, A.; Marcotegui, B.; Paparoditis, N. TerraMobilita/iQmulus urban point cloud analysis benchmark. Comput. Graph. 2015, 49, 126–133.

85. Serna, A.; Marcotegui, B.; Goulette, F.; Deschaud, J.E. Paris-Rue-Madame Database: A 3D Mobile Laser Scanner Dataset for Benchmarking Urban Detection, Segmentation and Classification Methods. Available online: https://hal.archives-ouvertes.fr/hal-00963812/document (accessed on 22 March 2014).

86. CC-BY-SA. 3D Semantic Segmentation on SemanticKITTI. Available online: https://paperswithcode.com/sota/3d-semantic-segmentation-on-semantickitti (accessed on 22 June 2018).

87. Xu, J.; Zhang, R.; Dou, J.; Zhu, Y.; Sun, J.; Pu, S. RPVNet: A Deep and Efficient Range-Point-Voxel Fusion Network for LiDAR Point Cloud Segmentation. arXiv 2021, arXiv:2103.12978.

88. Cheng, R.; Razani, R.; Taghavi, E.; Li, E.; Liu, B. 2-S3Net: Attentive Feature Fusion with Adaptive Feature Selection for Sparse Semantic Segmentation Network. arXiv 2021, arXiv:2102.04530.

89. Zhu, X.; Zhou, H.; Wang, T.; Hong, F.; Ma, Y.; Li, W.; Li, H.; Lin, D. Cylindrical and Asymmetrical 3D Convolution Networks for LiDAR Segmentation. arXiv 2020, arXiv:2011.10033.

90. Tang, H.; Liu, Z.; Zhao, S.; Lin, Y.; Lin, J.; Wang, H.; Han, S. Searching efficient 3d architectures with sparse point-voxel convolution. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020; pp. 685–702.

91. Yan, X.; Gao, J.; Li, J.; Zhang, R.; Li, Z.; Huang, R.; Cui, S. Sparse Single Sweep LiDAR Point Cloud Segmentation via Learning Contextual Shape Priors from Scene Completion. arXiv 2020, arXiv:2012.03762.

92. Liong, V.E.; Nguyen, T.N.T.; Widjaja, S.; Sharma, D.; Chong, Z.J. AMVNet: Assertion-based Multi-View Fusion Network for LiDAR Semantic Segmentation. arXiv 2020, arXiv:2012.04934.

93. Gerdzhev, M.; Razani, R.; Taghavi, E.; Liu, B. TORNADO-Net: MulTiview tOtal vaRiatioN semAntic segmentation with Diamond inceptiOn module. arXiv 2020, arXiv:2008.10544.

94. Zhang, F.; Fang, J.; Wah, B.; Torr, P. Deep fusionnet for point cloud semantic segmentation. In Proceedings of the Computer Vision - ECCV 2020: 16th European Conference, Virtual, 23–28 August 2020; Volume 2, p. 6.

95. Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 6411–6420.

96. Xu, C.; Wu, B.; Wang, Z.; Zhan, W.; Vajda, P.; Keutzer, K.; Tomizuka, M. Squeezesegv3: Spatially-adaptive convolution for efficient point-cloud segmentation. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020; pp. 1–19.

97. Yan, X.; Zheng, C.; Li, Z.; Wang, S.; Cui, S. Pointasnl: Robust point clouds processing using nonlocal neural networks with adaptive sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 5589–5598.

98. Wang, R.; Albooyeh, M.; Ravanbakhsh, S. Equivariant Maps for Hierarchical Structures. arXiv 2020, arXiv:2006.03627.

99. Boulch, A. ConvPoint: Continuous convolutions for point cloud processing. Comput. Graph. 2020, 88, 24–34.

100. Truong, G.; Gilani, S.Z.; Islam, S.M.S.; Suter, D. Fast point cloud registration using semantic segmentation. In Proceedings of the 2019 Digital Image Computing: Techniques and Applications (DICTA), Perth, Australia, 2–4 December 2019; pp. 1–8.

101. Liu, H.; Guo, Y.; Ma, Y.; Lei, Y.; Wen, G. Semantic Context Encoding for Accurate 3D Point Cloud Segmentation. IEEE Trans. Multimed. 2020, 23, 2045–2055.

102. Boulch, A.; Guerry, J.; Le Saux, B.; Audebert, N. SnapNet: 3D point cloud semantic labeling with 2D deep segmentation networks. Comput. Graph. 2018, 71, 189–198.

103. Contreras, J.; Denzler, J. Edge-Convolution Point Net for Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5236–5239.

104. Yanx. Pytorch Implementation of PointNet++. Available online: https://github.com/yanx27/Pointnet_Pointnet2_pytorch (accessed on 4 March 2019).

| Sub-Network | Layer Index | Layer Type | Input Shape | Output Shape | Kernel | Stride | Padding | ReLU | B.N | # Param |
|---|---|---|---|---|---|---|---|---|---|---|
| Encoder | 0 | Conv2D | (128, 352, 3) | (64, 176, 64) | 7 × 7 | 2 | Y | Y | Y | 9408 |
| Encoder | 11 | Conv2D | (64, 176, 64) | (32, 88, 64) | 3 × 3 | 1 | Y | Y | Y | 36,864 |
| Encoder | 34 | Conv2D | (34, 90, 64) | (32, 88, 64) | 3 × 3 | 1 | Y | Y | Y | 36,864 |
| Encoder | 39 | Conv2D | (34, 90, 64) | (16, 44, 128) | 3 × 3 | 2 | Y | Y | Y | 73,728 |
| Encoder | 67 | Conv2D | (18, 46, 128) | (18, 46, 128) | 3 × 3 | 1 | Y | Y | Y | 147,456 |
| Encoder | 76 | Conv2D | (18, 46, 128) | (8, 22, 256) | 3 × 3 | 2 | Y | Y | Y | 294,912 |
| Encoder | 87 | Conv2D | (10, 24, 256) | (8, 22, 256) | 3 × 3 | 1 | Y | Y | Y | 589,824 |
| Encoder | 133 | Conv2D | (10, 24, 256) | (4, 11, 512) | 3 × 3 | 2 | Y | Y | Y | 1,179,648 |
| Encoder | 156 | Conv2D | (6, 13, 512) | (4, 11, 512) | 3 × 3 | 1 | Y | Y | Y | 2,359,296 |
| Decoder | 160 | Upsamp | (4, 11, 512) | (8, 22, 512) | - | - | N | N | N | 0 |
| Decoder | 161 | Concat | (8, 22, 512) | (8, 22, 768) | - | - | N | N | N | 0 |
| Decoder | 162 | Conv2D | (8, 22, 768) | (8, 22, 256) | 1 × 1 | 1 | Y | Y | Y | 1,769,472 |
| Decoder | 168 | Upsamp | (8, 22, 256) | (16, 44, 256) | - | - | N | N | N | 0 |
| Decoder | 169 | Concat | (16, 44, 256) | (16, 44, 384) | - | - | N | N | N | 0 |
| Decoder | 170 | Conv2D | (16, 44, 384) | (16, 44, 128) | 1 × 1 | 1 | Y | Y | Y | 442,368 |
| Decoder | 176 | Upsamp | (16, 44, 128) | (32, 88, 128) | - | - | N | N | N | 0 |
| Decoder | 177 | Concat | (32, 88, 128) | (32, 88, 192) | - | - | N | N | N | 0 |
| Decoder | 178 | Conv2D | (32, 88, 192) | (32, 88, 64) | 1 × 1 | 1 | Y | Y | Y | 110,592 |
| Decoder | 184 | Upsamp | (32, 88, 64) | (64, 176, 64) | - | - | N | N | N | 0 |
| Decoder | 185 | Concat | (64, 176, 64) | (64, 176, 128) | - | - | N | N | N | 0 |
| Decoder | 186 | Conv2D | (64, 176, 128) | (64, 176, 32) | 1 × 1 | 1 | Y | Y | Y | 36,864 |
| Decoder | 192 | Upsamp | (64, 176, 32) | (128, 352, 32) | - | - | N | N | N | 0 |
| Decoder | 193 | Conv2D | (128, 352, 32) | (128, 352, 16) | 1 × 1 | 1 | Y | Y | Y | 4608 |
| | 200 | Conv2D | (128, 352, 16) | (128, 352, 30) | 1 × 1 | 1 | Y | Y | Y | 4350 |
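The "# Param" values in the encoder rows of the architecture table above are consistent with bias-free 2D convolutions, where the weight count is kernel height × kernel width × input channels × output channels. The following is a minimal sketch of that arithmetic; `conv2d_params` and `encoder_rows` are illustrative names, and the bias-free assumption is inferred from the numbers rather than stated in the table.

```python
def conv2d_params(kernel: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """Number of learnable parameters in a square-kernel 2D convolution."""
    weights = kernel * kernel * c_in * c_out
    return weights + (c_out if bias else 0)


# (layer index, kernel size, input channels, output channels, reported # Param)
# Values are copied from the encoder rows of the architecture table.
encoder_rows = [
    (0, 7, 3, 64, 9408),
    (11, 3, 64, 64, 36864),
    (34, 3, 64, 64, 36864),
    (39, 3, 64, 128, 73728),
    (67, 3, 128, 128, 147456),
    (76, 3, 128, 256, 294912),
    (87, 3, 256, 256, 589824),
    (133, 3, 256, 512, 1179648),
    (156, 3, 512, 512, 2359296),
]

for index, kernel, c_in, c_out, reported in encoder_rows:
    computed = conv2d_params(kernel, c_in, c_out)
    # Print the layer index, the computed count, and whether it matches the table.
    print(index, computed, computed == reported)
```

Each encoder row matches under this assumption, which gives a quick way to sanity-check the channel widths listed in the "Input Shape" and "Output Shape" columns.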

| Dataset | Classes | Points | Sensor | Annotation |
|---|---|---|---|---|
| nuScenes [77] | 23 | - | LiDAR | bounding box |
| A2D2 [24] | 38 | - | LiDAR | pixel mapping |
| SemanticKITTI [22] | 28 | 4500 M | LiDAR (Velodyne HDL-64E) | point-wise |
| PC-Urban [21] | 25 | 4000 M | LiDAR (Ouster OS-64) | point-wise |
| DublinCity [78] | 13 | 1400 M | LiDAR | coarse labeling |
| Toronto-3D MLS [79] | 8 | 78 M | LiDAR (32-line) | point-wise |
| Paris-Lille-3D [80] | 50 | 143 M | LiDAR (Velodyne HDL-32E) | point-wise |
| Semantic3D.Net [23] | 8 | 4000 M | LiDAR (Terrestrial) | point-wise |
| TUM City Campus [81] | 9 | 1700 M | LiDAR (Velodyne HDL-64E) | point-wise |
| KITTI [82] | 8 | - | LiDAR (Velodyne) | bounding box |
| RueMonge2014 [83] | 7 | 0.4 M | Structure from Motion (SfM) | mesh labeling |
| iQmulus [84] | 50 | 300 M | LiDAR (Q120i) | point-wise |
| Paris-rue-Madame [85] | 17 | 20 M | LiDAR (Velodyne HDL-32) | point-wise |
| PC-Urban_V2 (Proposed) | 25 | 8000 M | LiDAR (Ouster OS-64) | point-wise |

| Approach | mIoU | OA | Car | Truck | Ped | Motor-Cycle | Bus | Bridge | Tree | Bushes | Building | Road | Rubbish-Bin | Bus-Stop | Light-Pole | Wall | Traffic-Signal | RoadSignedBoard | Letter-Box |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Octree-based CNN [5] | 42.6 | 78.4 | 40.5 | 32.1 | 40.1 | 39.1 | 31.9 | 30.5 | 77.6 | 34.5 | 69.0 | 76.2 | 43.9 | 31.6 | 27.8 | 39. | 46.1 | 30.1 | 37.0 |
| PointConv [7] | 39.3 | 81.5 | 33.7 | 19.5 | 36.5 | 25.6 | 35.2 | 28.7 | 47.7 | 29.3 | 76.6 | 49.1 | 31.2 | 32.3 | 43.6 | 18.9 | 42.1 | 35.1 | 36.7 |
| PointNet++ [6] | 22.7 | 47.3 | 40.5 | 0.0 | 5.0 | 18.6 | 4.8 | 0.0 | 77.9 | 12.7 | 80.3 | 22.3 | 25.1 | 13.7 | 9.0 | 6.9 | 15.9 | 1.0 | 11.0 |
| PointNet [3] | 12.5 | 39.3 | 30.9 | 0.0 | 0.0 | 0.0 | 4.5 | 2.4 | 0.0 | 52.7 | 7.2 | 45.8 | 9.0 | 16.2 | 8.1 | 0.0 | 0.0 | 11.0 | 0.0 |
| Ours | 66.8 | 88.9 | 81.6 | 85.1 | 68.5 | 81.3 | 53.0 | 85.5 | 84.4 | 89.8 | 89.9 | 86.1 | 70.3 | 72.8 | 89.5 | 79.9 | 46.2 | 43.8 | 77.3 |

| Approach | mIoU | Car | Bicycle | Motorcycle | Truck | Other-Veh | Person | Bicyclist | Motorcyclist | Road | Parking | Sidewalk | O-Ground | Building | Fence | Vegetation | Trunk | Terrain | Pole | Traffic-Signal |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| RPVNet [87] | 70.3 | 97.6 | 68.4 | 68.7 | 44.2 | 61.1 | 75.9 | 74.4 | 43.4 | 93.4 | 70.3 | 80.7 | 33.3 | 93.5 | 72.1 | 86.5 | 75.1 | 71.7 | 64.8 | 61.4 |

| S3Net [88] | 69.7 | 94.5 | 65.4 | 86.8 | 39.2 | 41.1 | 80.7 | 80.4 | 74.3 | 91.3 | 68.8 | 72.5 | 53.5 | 87.9 | 63.2 | 70.2 | 68.5 | 53.7 | 61.5 | 71.0 |

| Cylinder3D [89] | 67.8 | 97.1 | 67.6 | 64.0 | 50.8 | 58.6 | 73.9 | 67.9 | 36.0 | 91.4 | 65.1 | 75.5 | 32.3 | 91.0 | 66.5 | 85.4 | 71.8 | 68.5 | 62.6 | 65.6 |

| SPVNAS [90] | 66.4 | 97.3 | 51.5 | 50.8 | 59.8 | 58.8 | 65.7 | 65.2 | 43.7 | 90.2 | 67.6 | 75.2 | 16.9 | 91.3 | 65.9 | 86.1 | 73.4 | 71.0 | 64.2 | 66.9 |

| JS3C-Net [91] | 66.0 | 95.8 | 59.3 | 52.9 | 54.3 | 46.0 | 69.5 | 65.4 | 39.9 | 88.8 | 61.9 | 72.1 | 31.9 | 92.5 | 70.8 | 84.5 | 69.8 | 68.0 | 60.7 | 68.7 |

| AMVNet [92] | 65.3 | 96.2 | 59.9 | 54.2 | 48.8 | 45.7 | 71.0 | 65.7 | 11.0 | 90.1 | 71.0 | 75.8 | 32.4 | 92.4 | 69.1 | 85.6 | 71.7 | 69.6 | 62.7 | 67.2 |

| TORNADONet [93] | 63.1 | 94.2 | 55.7 | 48.1 | 40.0 | 38.2 | 63.6 | 60.1 | 34.9 | 89.7 | 66.3 | 74.5 | 28.7 | 91.3 | 65.6 | 85.6 | 67.0 | 71.5 | 58.0 | 65.9 |

| FusionNet [94] | 61.3 | 95.3 | 47.5 | 37.7 | 41.8 | 34.5 | 59.5 | 56.8 | 11.9 | 91.8 | 68.8 | 77.1 | 30.8 | 92.5 | 69.4 | 84.5 | 69.8 | 68.5 | 60.4 | 66.5 |

| KPCONV [95] | 58.8 | 96.0 | 30.2 | 42.5 | 33.4 | 44.3 | 61.5 | 61.6 | 11.8 | 88.8 | 61.3 | 72.7 | 31.6 | 90.5 | 64.2 | 84.8 | 69.2 | 69.1 | 56.4 | 47.4 |

| SqueezeSegV3 [96] | 55.5 | 92.5 | 38.7 | 36.5 | 29.6 | 33.0 | 45.6 | 46.2 | 20.1 | 91.7 | 63.4 | 74.8 | 26.4 | 89.0 | 59.4 | 82.0 | 58.7 | 65.4 | 49.6 | 58.9 |

| RangeNet [19] | 52.2 | 91.4 | 25.7 | 34.4 | 25.7 | 23.0 | 38.3 | 38.8 | 4.8 | 91.8 | 65.0 | 75.2 | 27.8 | 87.4 | 58.6 | 80.5 | 55.1 | 64.6 | 47.9 | 55.9 |

| PointASNL [97] | 46.8 | 87.9 | 0.0 | 25.1 | 39.0 | 29.2 | 34.2 | 57.6 | 0.0 | 87.4 | 24.3 | 74.3 | 1.8 | 83.1 | 43.9 | 84.1 | 52.2 | 70.6 | 57.8 | 36.9 |

| TangentConv [37] | 40.9 | 86.8 | 1.3 | 12.7 | 11.6 | 10.2 | 17.1 | 20.2 | 0.5 | 82.9 | 15.2 | 61.7 | 9.0 | 82.8 | 44.2 | 75.5 | 42.5 | 55.5 | 30.2 | 22.2 |

| PointNet++ [6] | 20.1 | 53.7 | 1.9 | 0.2 | 0.9 | 0.2 | 0.9 | 1.0 | 0.0 | 72.0 | 18.7 | 41.8 | 5.6 | 62.3 | 16.9 | 46.5 | 13.8 | 30.0 | 6.0 | 8.9 |

| PointNet [3] | 14.6 | 46.3 | 1.3 | 0.3 | 0.1 | 0.8 | 0.2 | 0.2 | 0.0 | 61.6 | 15.8 | 35.7 | 1.4 | 41.4 | 12.9 | 31.0 | 4.6 | 17.6 | 2.4 | 3.7 |

| Ours | 67.1 | 88.0 | 70.5 | 53.2 | 65.7 | 78.8 | 59.8 | 81.8 | 39.4 | 84.2 | 54.7 | 50.3 | 62.8 | 82.8 | 74.6 | 76.6 | 52.2 | 68.1 | 58.3 | 49.3 |

| Methods | mIoU | OA | MM-Terrain | N-Terrain | H-Veg | L-Veg | Buildings | Hardscape | S-Art | Car |

|---|---|---|---|---|---|---|---|---|---|---|

| WreathProdNet [98] | 77.1 | 94.6 | 95.2 | 87.1 | 75.3 | 67.1 | 96.1 | 51.3 | 51.0 | 93.4 |

| Conv_pts [99] | 76.5 | 93.4 | 92.1 | 80.6 | 76.0 | 71.9 | 95.6 | 47.3 | 61.1 | 87.7 |

| SPGraph [63] | 76.2 | 92.9 | 91.5 | 75.6 | 78.3 | 71.7 | 94.4 | 56.8 | 52.9 | 88.4 |

| WOW [100] | 72.0 | 90.6 | 86.4 | 70.3 | 69.5 | 68.0 | 96.9 | 43.4 | 52.3 | 89.5 |

| PointConv_CE [101] | 71.0 | 92.3 | 92.4 | 79.6 | 72.7 | 62.0 | 93.7 | 40.6 | 44.6 | 82.5 |

| Att_conv [55] | 70.7 | 93.6 | 96.3 | 89.6 | 68.3 | 60.7 | 92.8 | 41.5 | 27.2 | 89.8 |

| PointGCR [70] | 69.5 | 92.1 | 93.8 | 80.0 | 64.4 | 66.4 | 93.2 | 39.2 | 34.3 | 85.3 |

| SnapNET [102] | 67.4 | 91.0 | 89.6 | 79.5 | 74.8 | 56.1 | 90.9 | 36.5 | 34.3 | 77.2 |

| Super_ss [103] | 64.4 | 89.6 | 91.1 | 69.5 | 65.0 | 56.0 | 89.7 | 30.0 | 43.8 | 69.7 |

| PointNet2_Demo [104] | 63.1 [75] | 85.7 | 81.9 | 78.1 | 64.3 | 51.7 | 75.9 | 36.4 | 43.7 | 72.6 |

| Ours | 77.2 | 91.9 | 70.0 | 75.3 | 77.8 | 70.5 | 67.9 | 82.9 | 95.0 | 75.0 |

| Method | Dataset | mIoU | OA | Recall | Car | Truck | Pedestrian | Bicycle | Ego Car | Small Vehicle | Utility Vehicles | Animals |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Ours | Audi (A2D2) [24] | 53.4 | 78.7 | 59.6 | 40.4 | 65.7 | 66.8 | 65.7 | 65.9 | 69.1 | 67.0 | 40.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ibrahim, M.; Akhtar, N.; Ullah, K.; Mian, A. Exploiting Structured CNNs for Semantic Segmentation of Unstructured Point Clouds from LiDAR Sensor. Remote Sens. 2021, 13, 3621. https://doi.org/10.3390/rs13183621
