Development and Testing of a UAV-Based Multi-Sensor System for Plant Phenotyping and Precision Agriculture

Abstract

1. Introduction

2. System Design

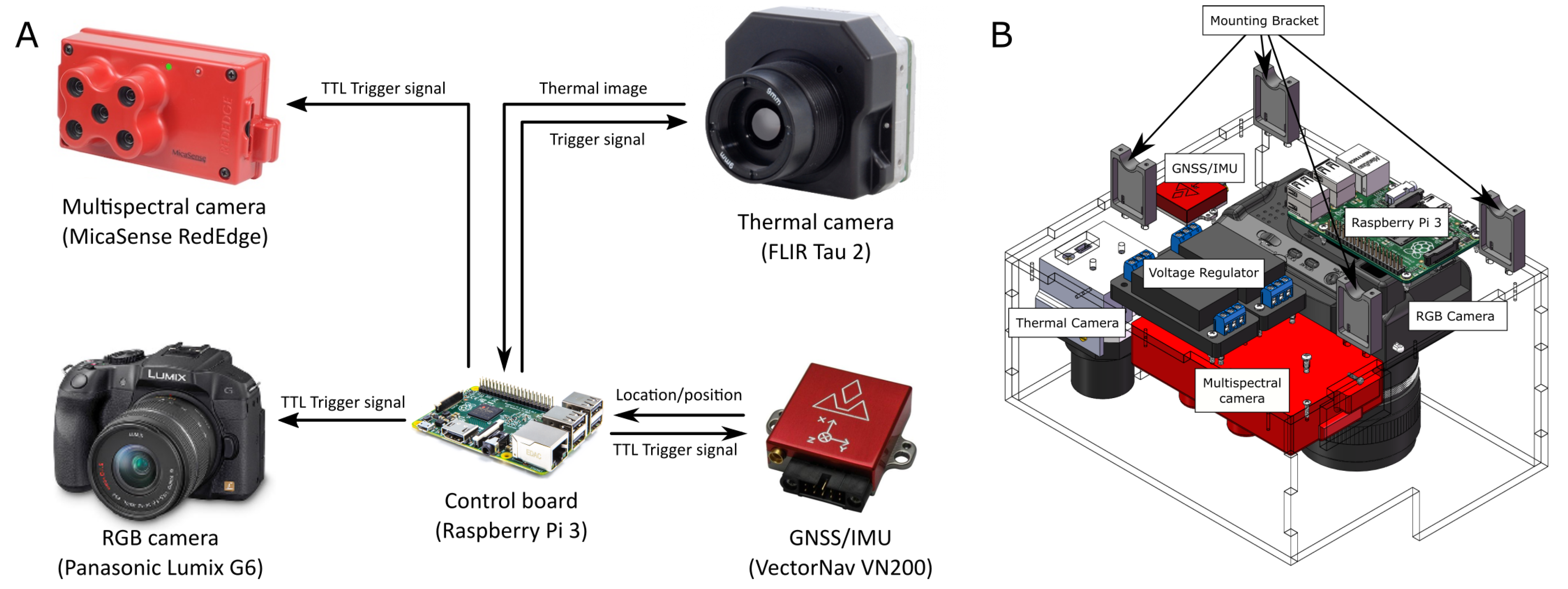

2.1. First Version of the Data Acquisition System

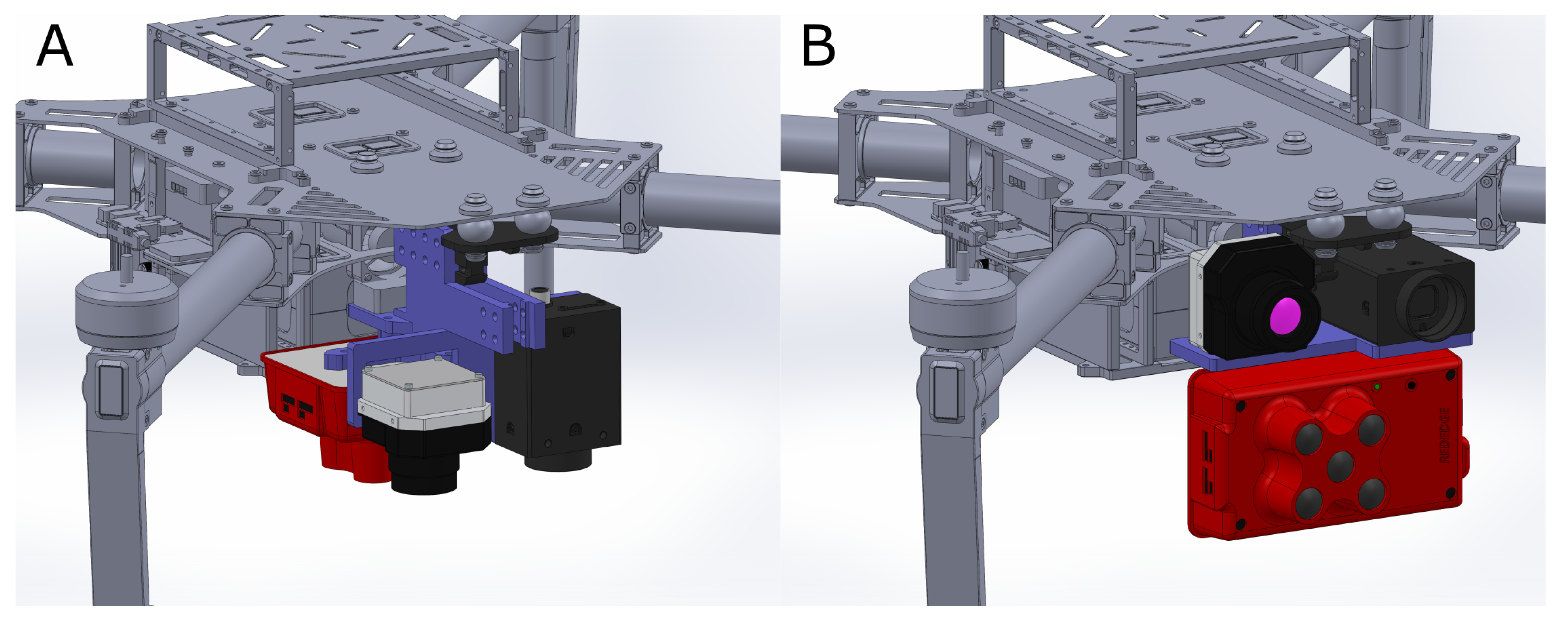

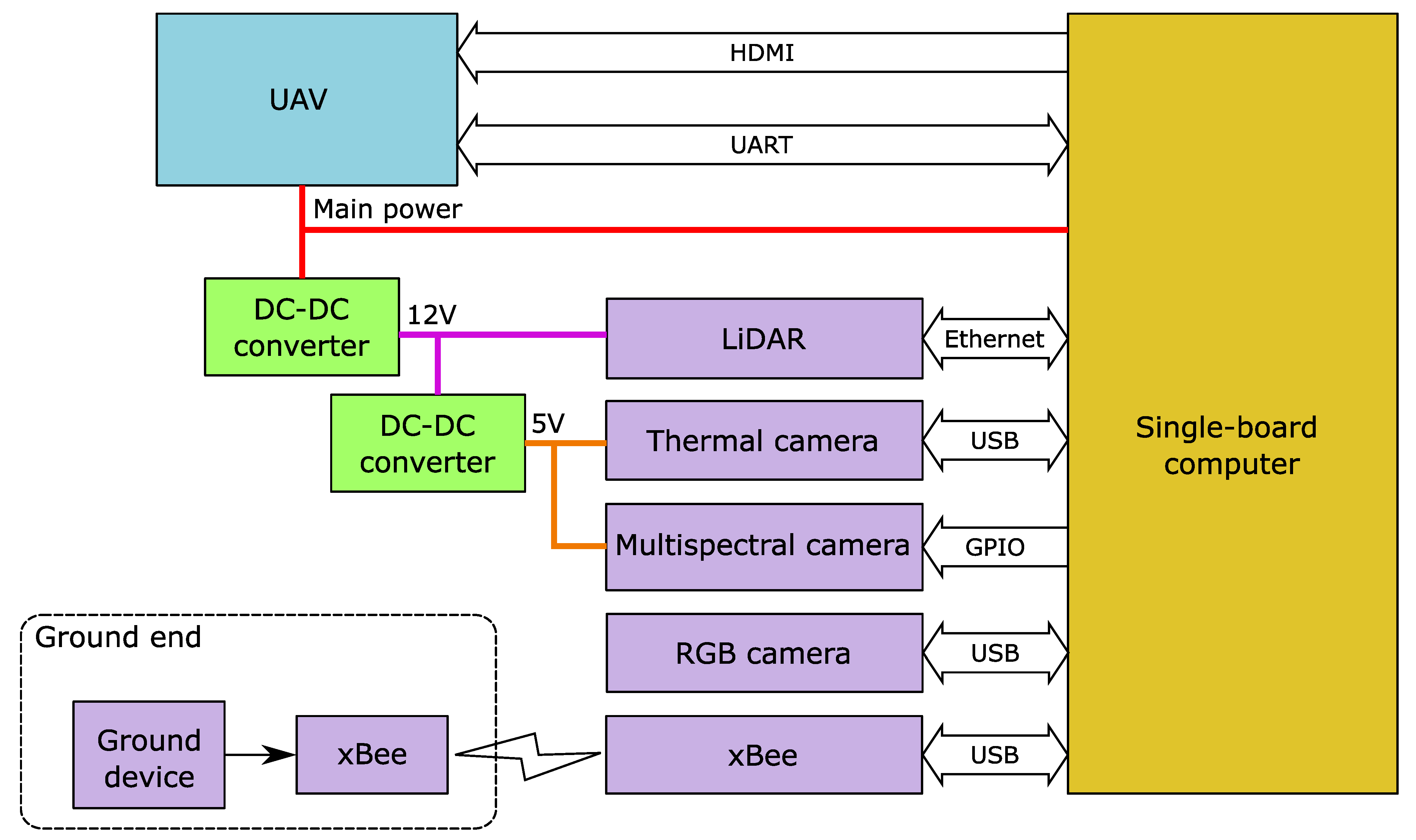

2.2. Second Version of the Data Acquisition System

2.2.1. Electronic Design

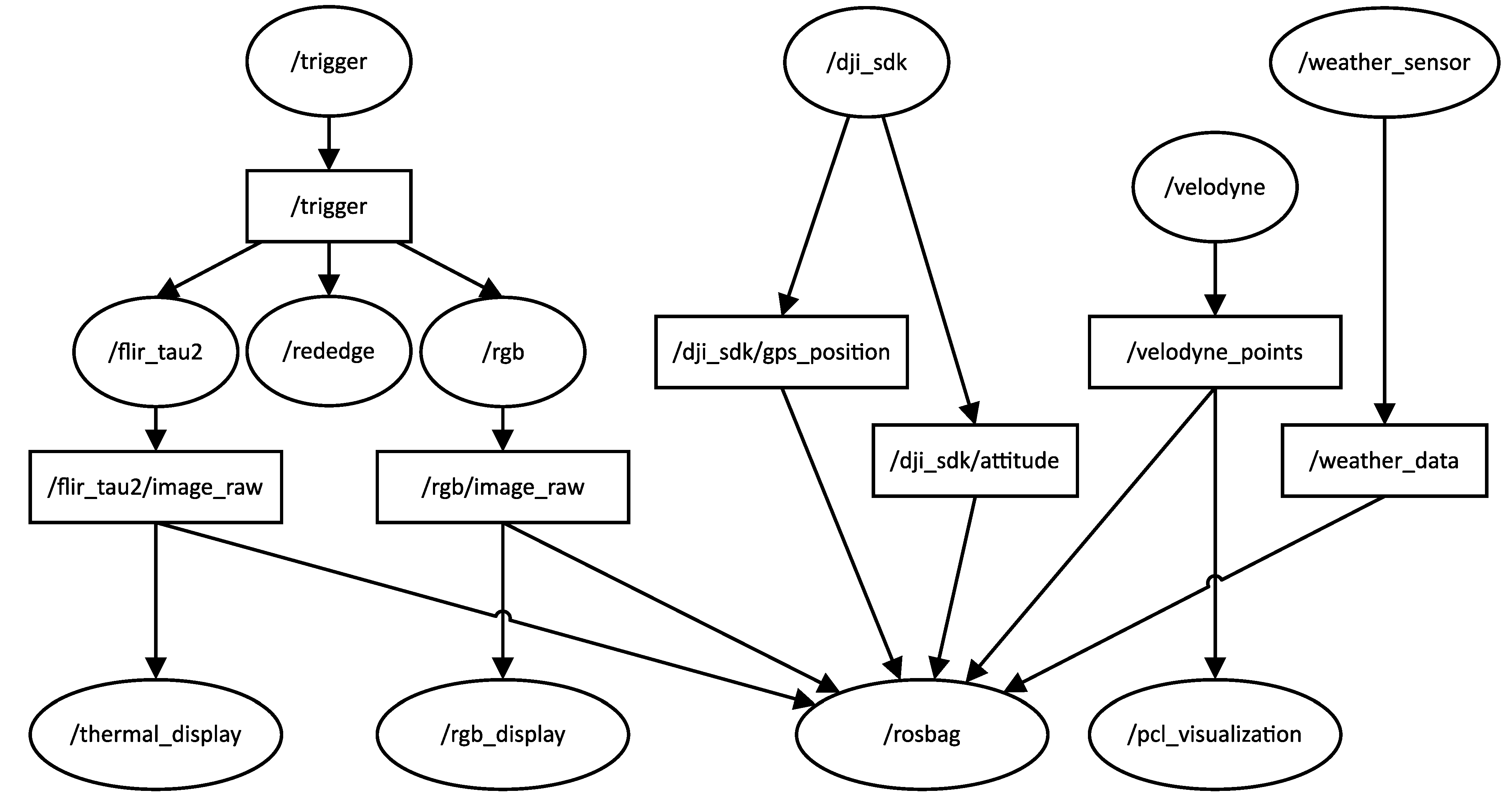

2.2.2. Software Design

3. Camera Calibration

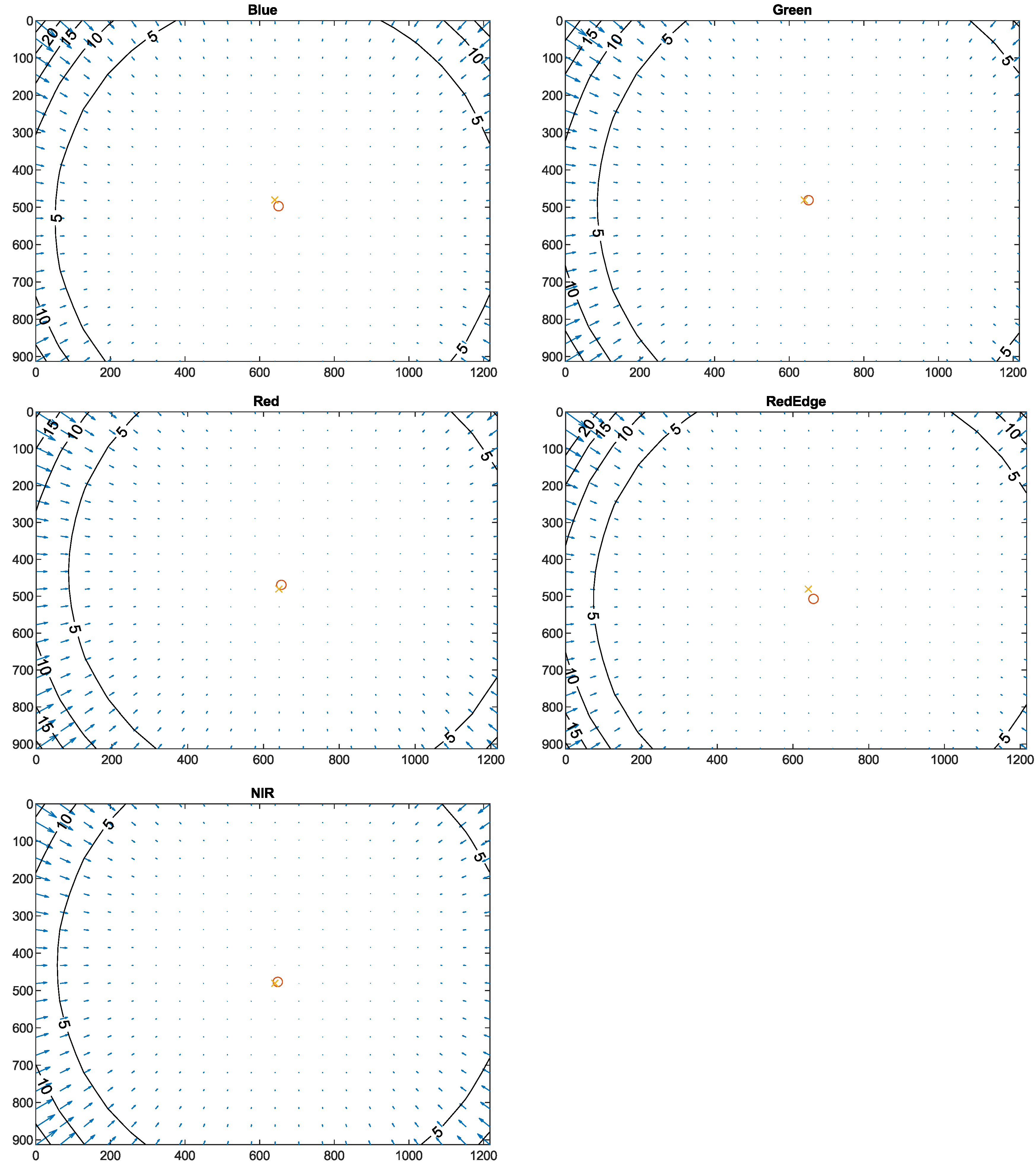

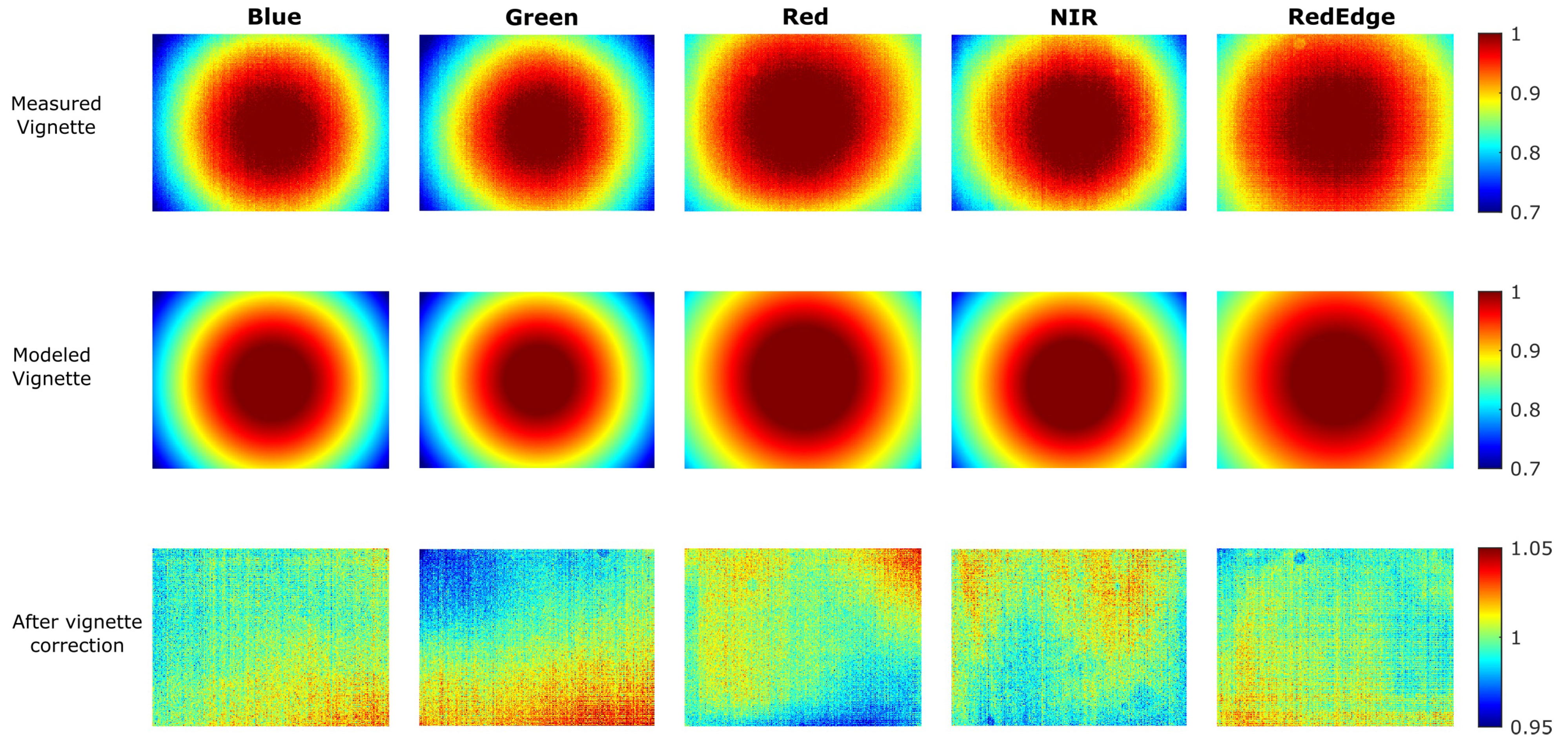

3.1. Geometric Calibration

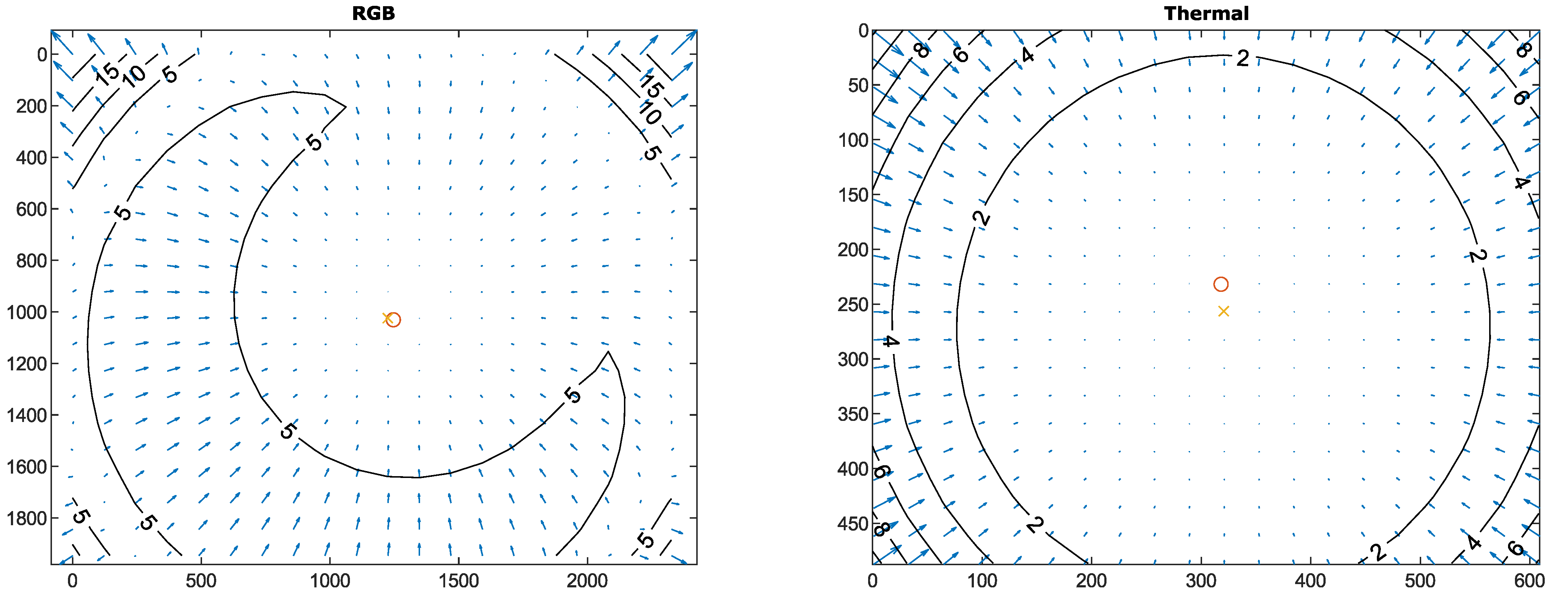

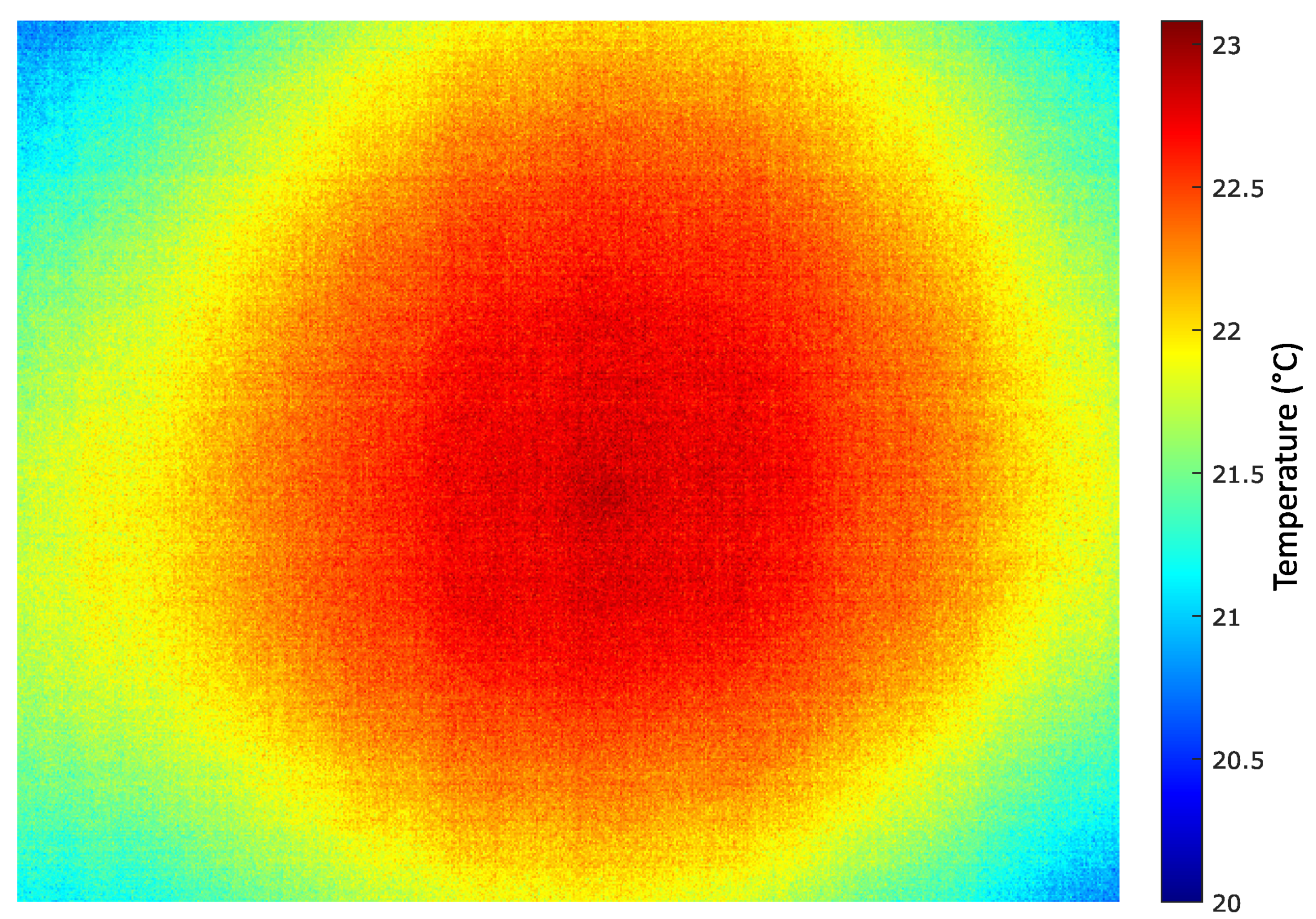

3.2. Vignetting Calibration

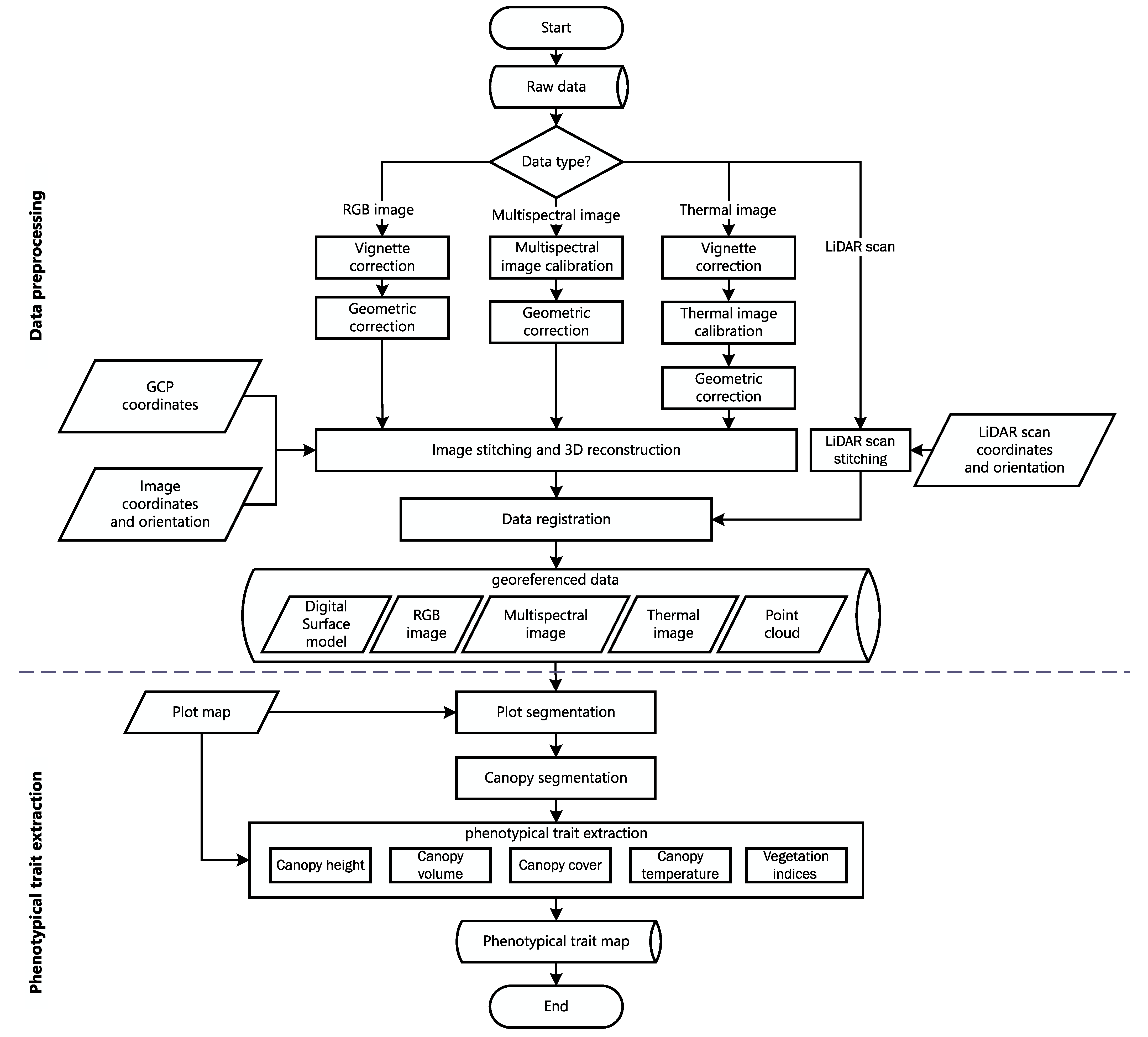

4. Data Processing

4.1. Overall Pipeline

4.2. Data Preprocessing

4.2.1. Vignetting Correction

4.2.2. Geometric Correction

4.2.3. Multispectral Image Calibration

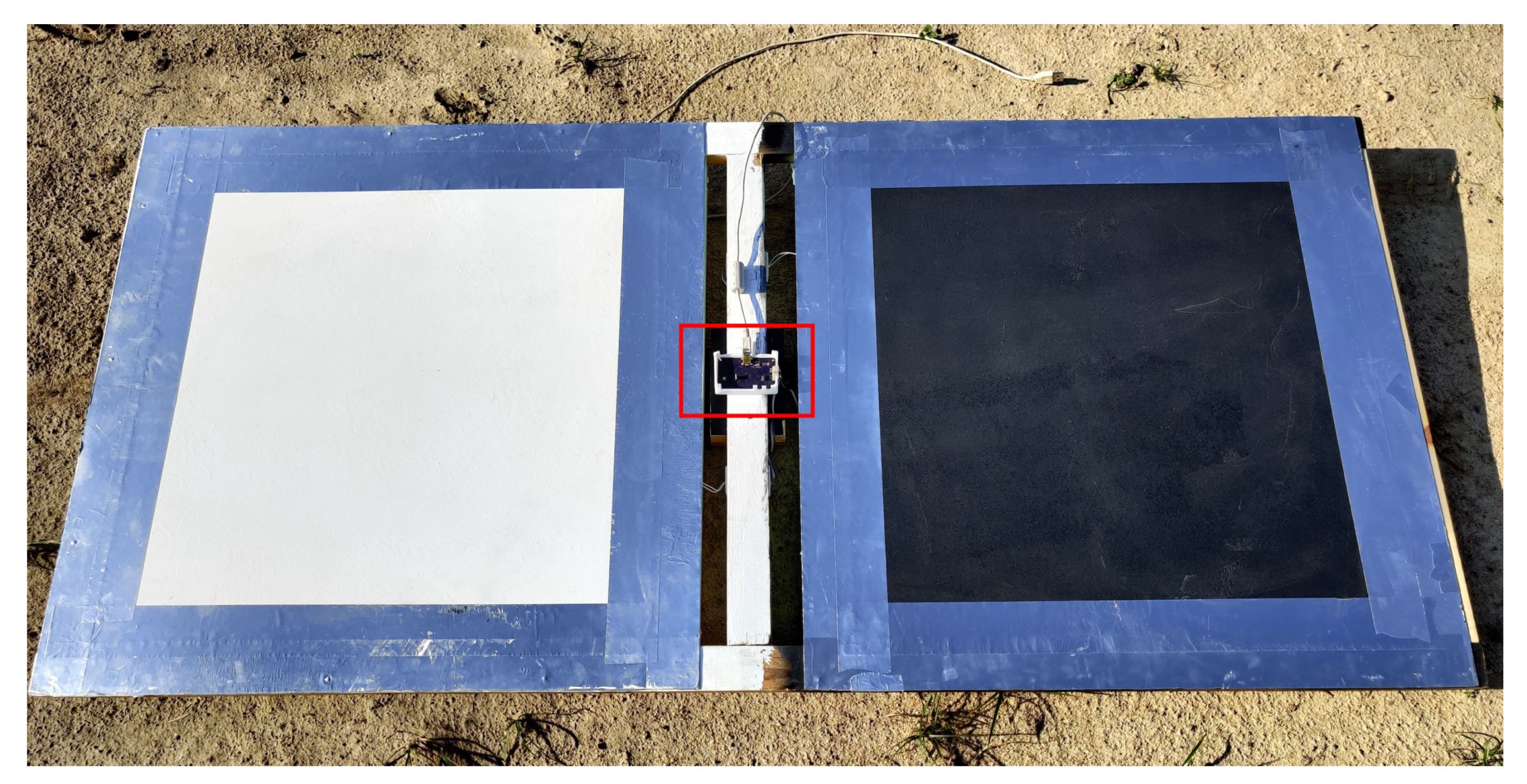

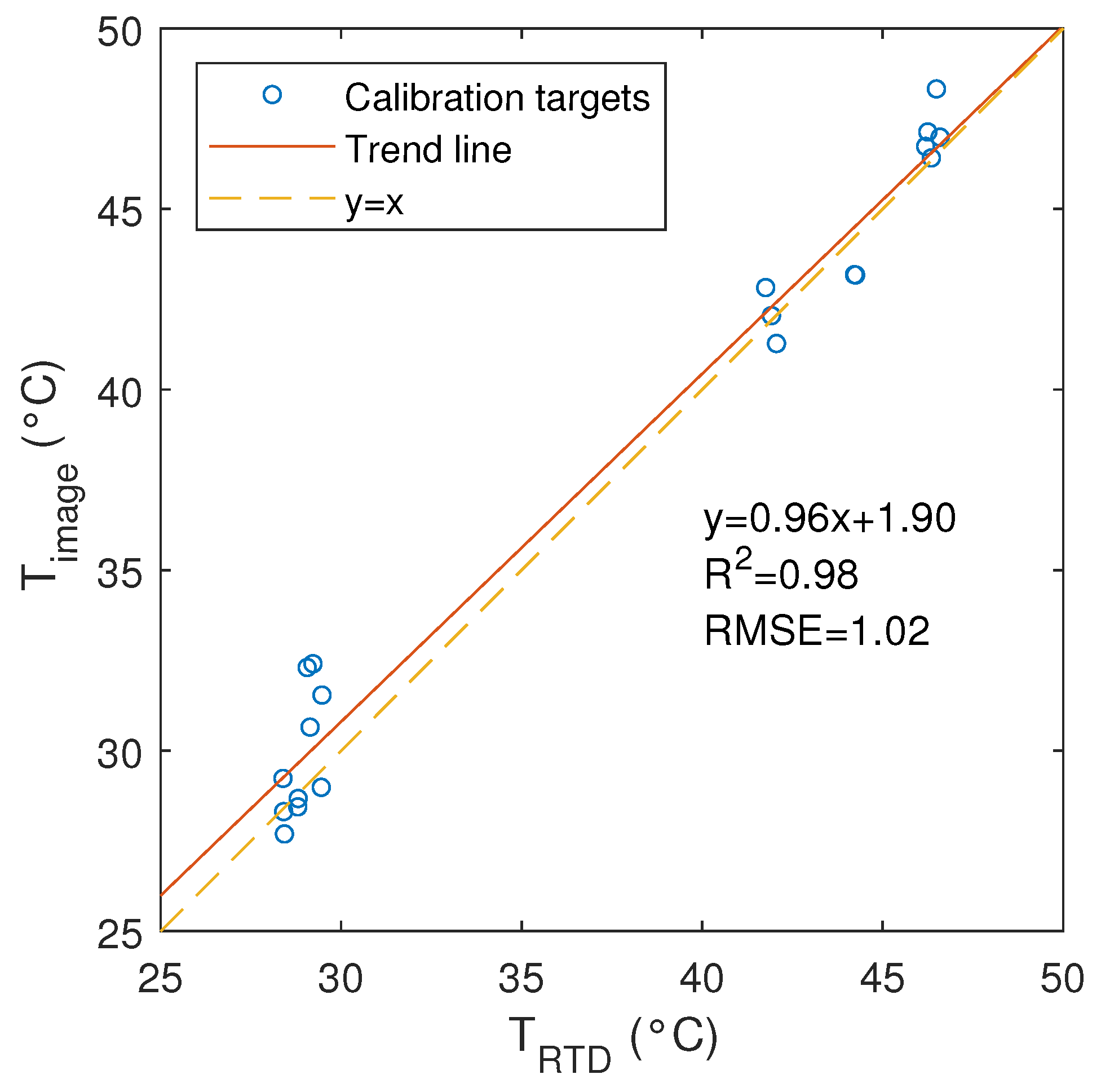

4.2.4. Thermal Image Calibration

4.2.5. LiDAR Data Processing

4.2.6. Orthomosaics and 3D Model

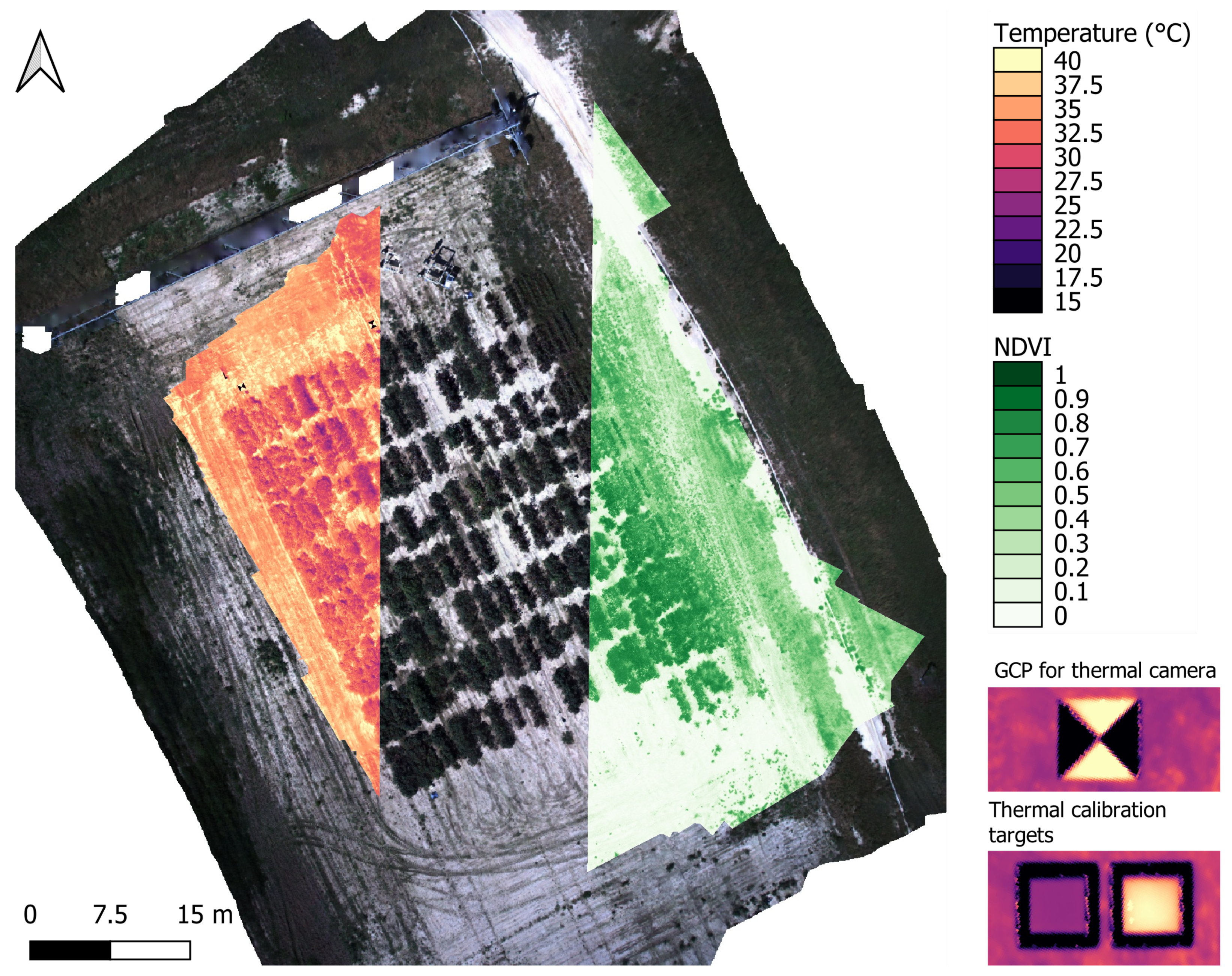

4.2.7. Data Registration

4.3. Extraction of Phenotypic Traits

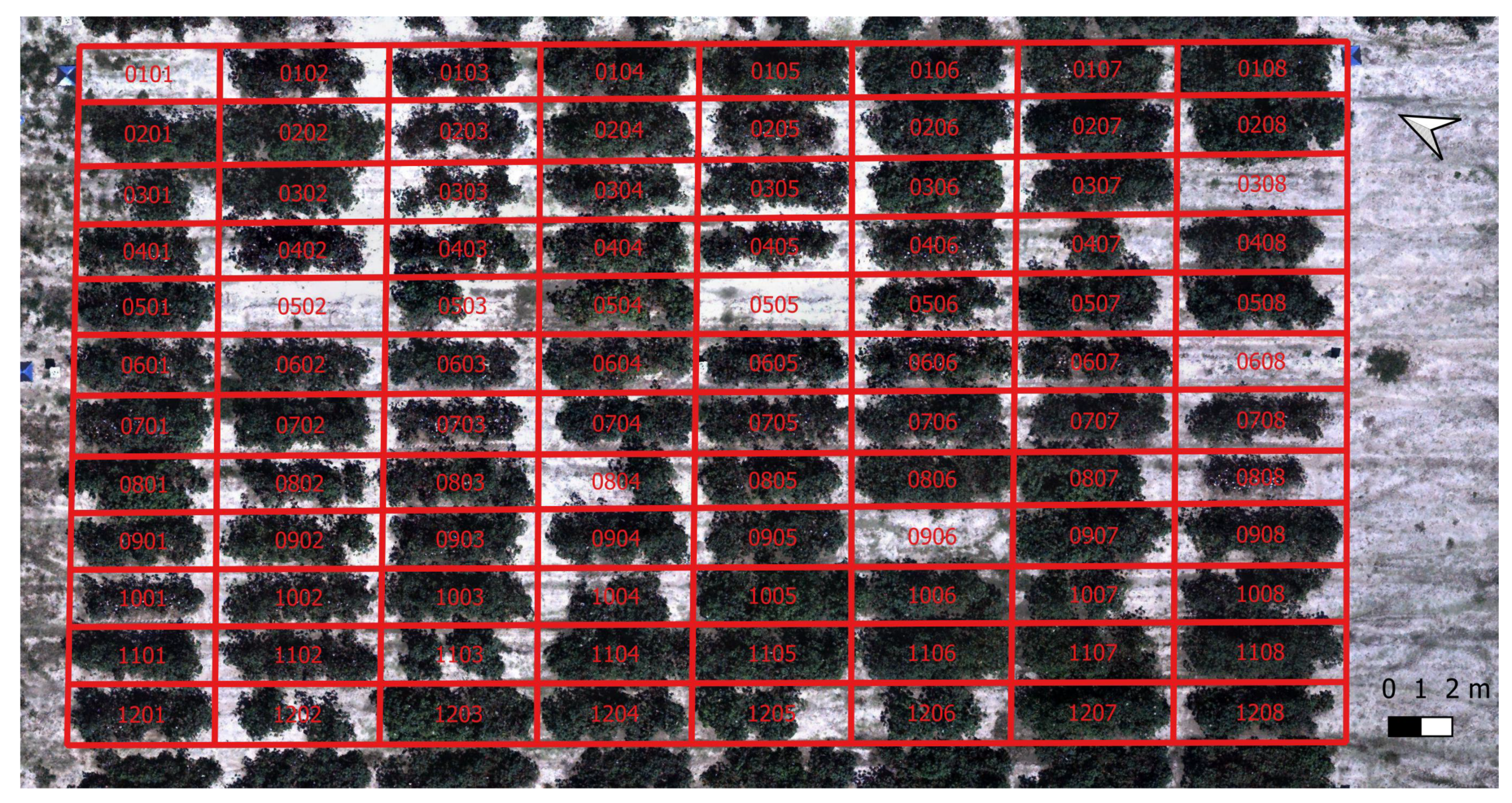

4.3.1. Plot Segmentation

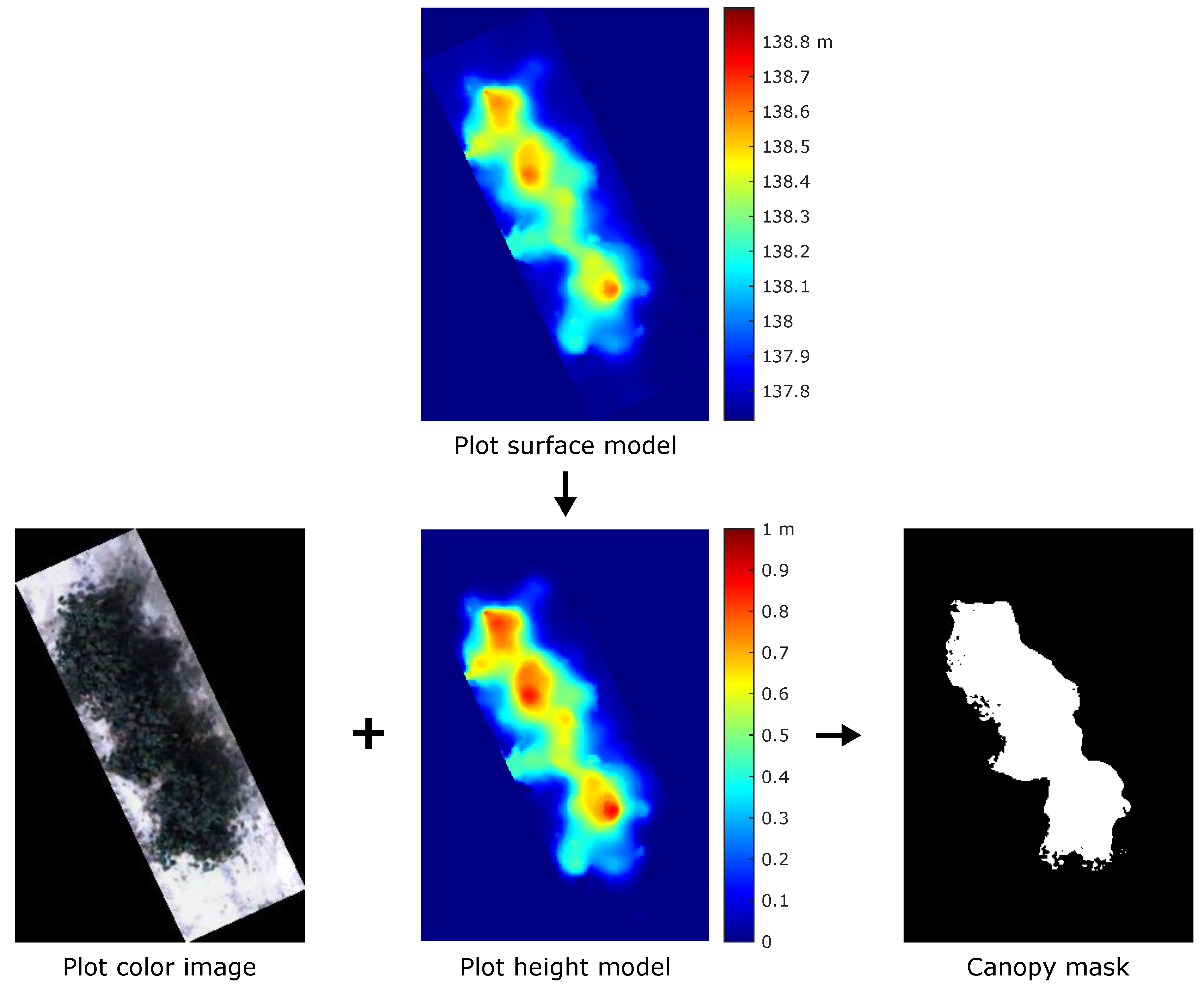

4.3.2. Canopy Segmentation

4.3.3. Morphological Traits

Canopy Height

Canopy Volume

Canopy Cover

4.3.4. Vegetation Indices

4.3.5. Canopy Temperature

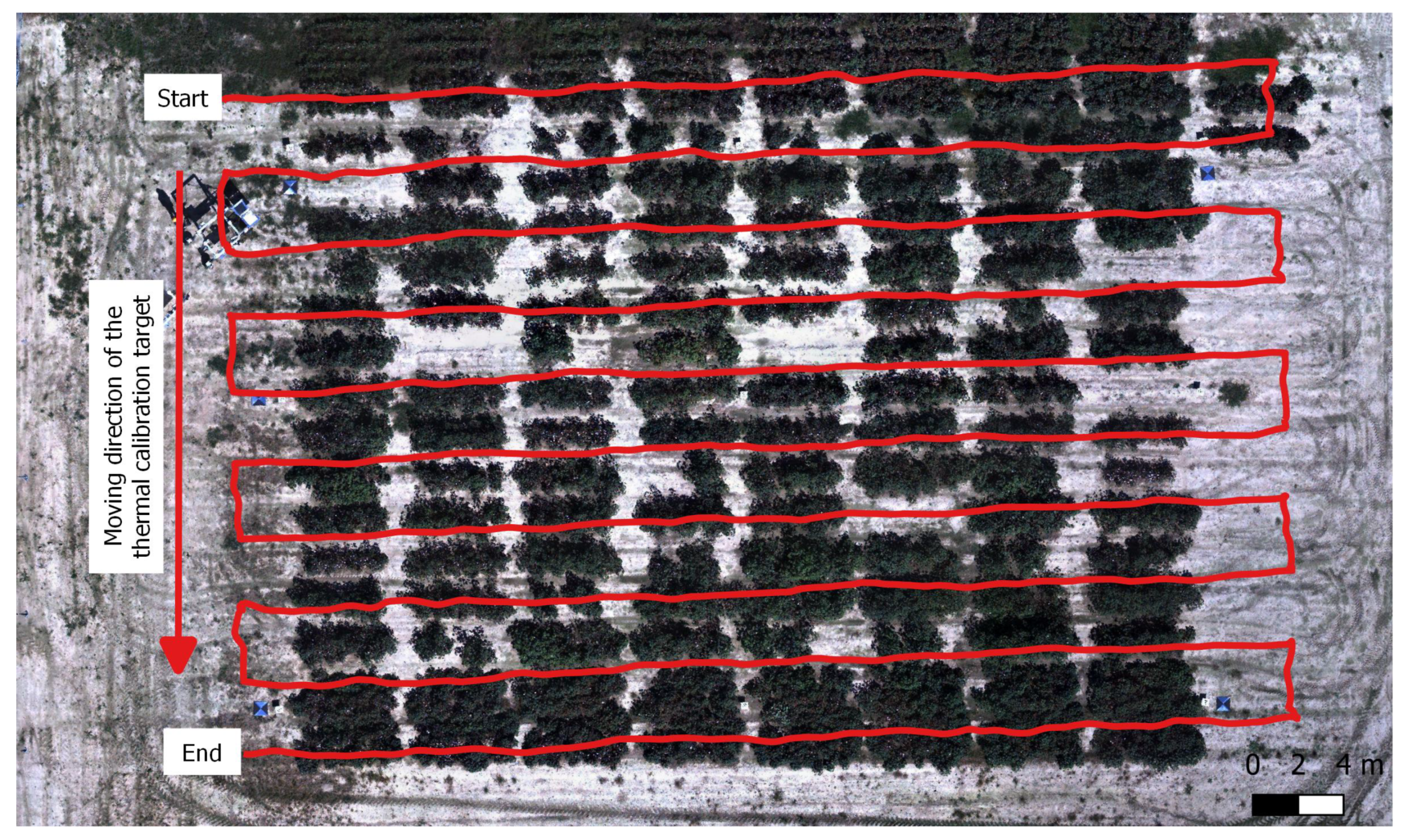

5. Data Collection

5.1. Testing Field

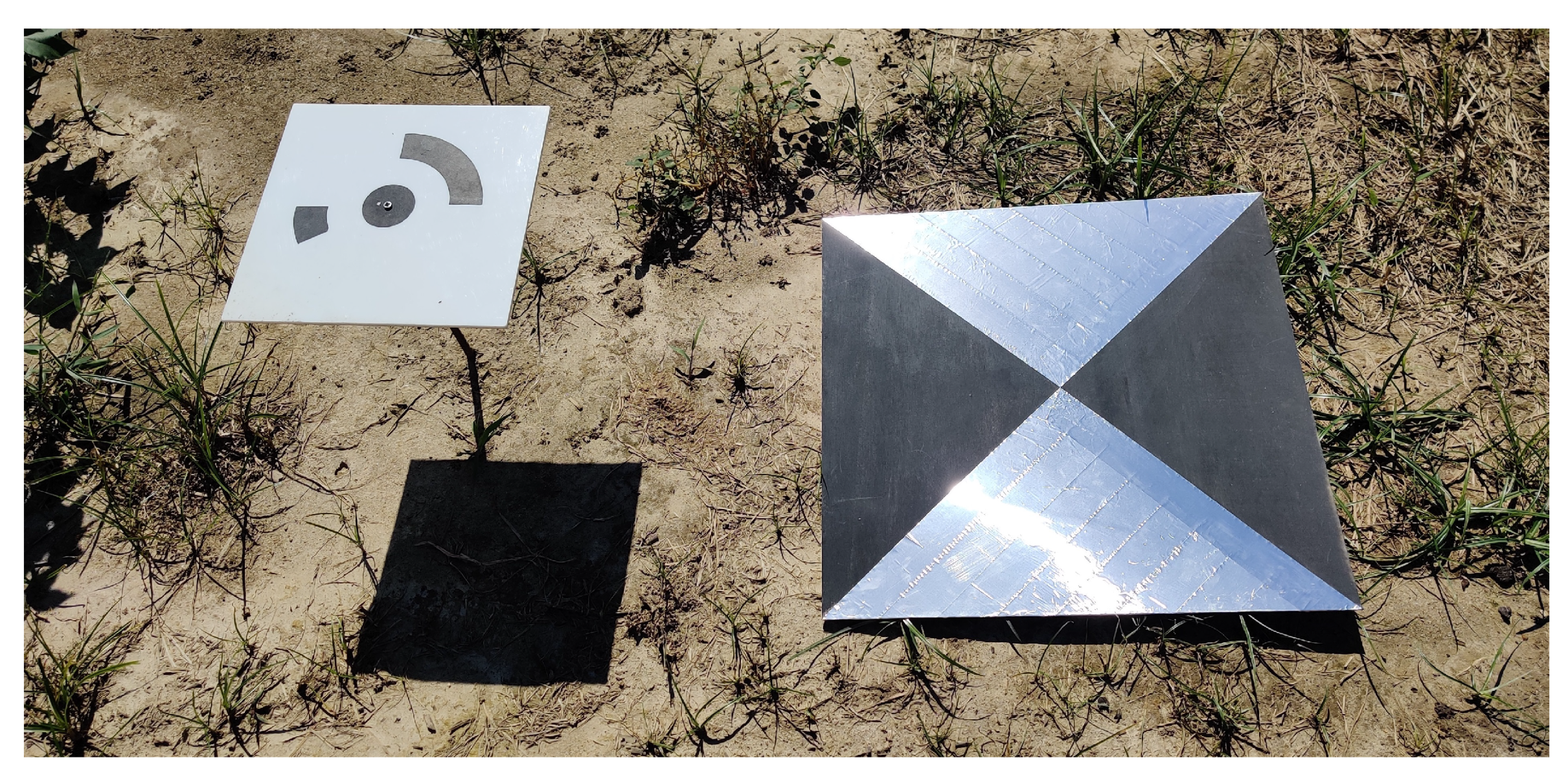

5.2. GCP Deployment and Field Mapping

5.3. Flight Path Planning

5.4. Flight Campaign

5.5. Ground Data Collection

6. Results

6.1. Camera Calibration

6.1.1. Camera Geometric Distortion

6.1.2. Camera Vignetting

6.2. Data Preprocessing

6.2.1. Accuracy of the Thermal Camera Calibration

6.2.2. Results of Orthomosaic Generation

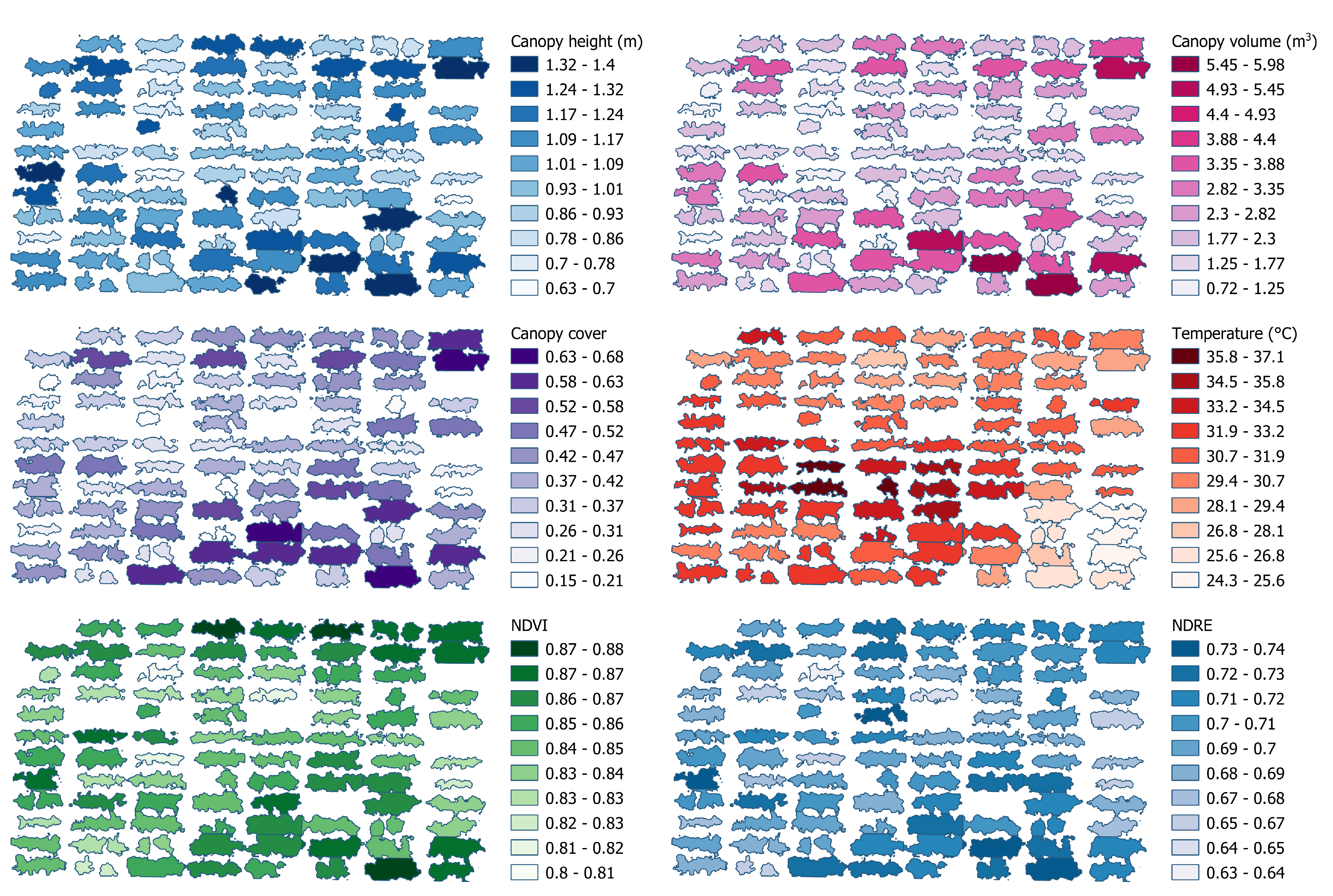

6.3. Phenotypic Traits Extraction

6.3.1. Results of the Canopy Segmentation

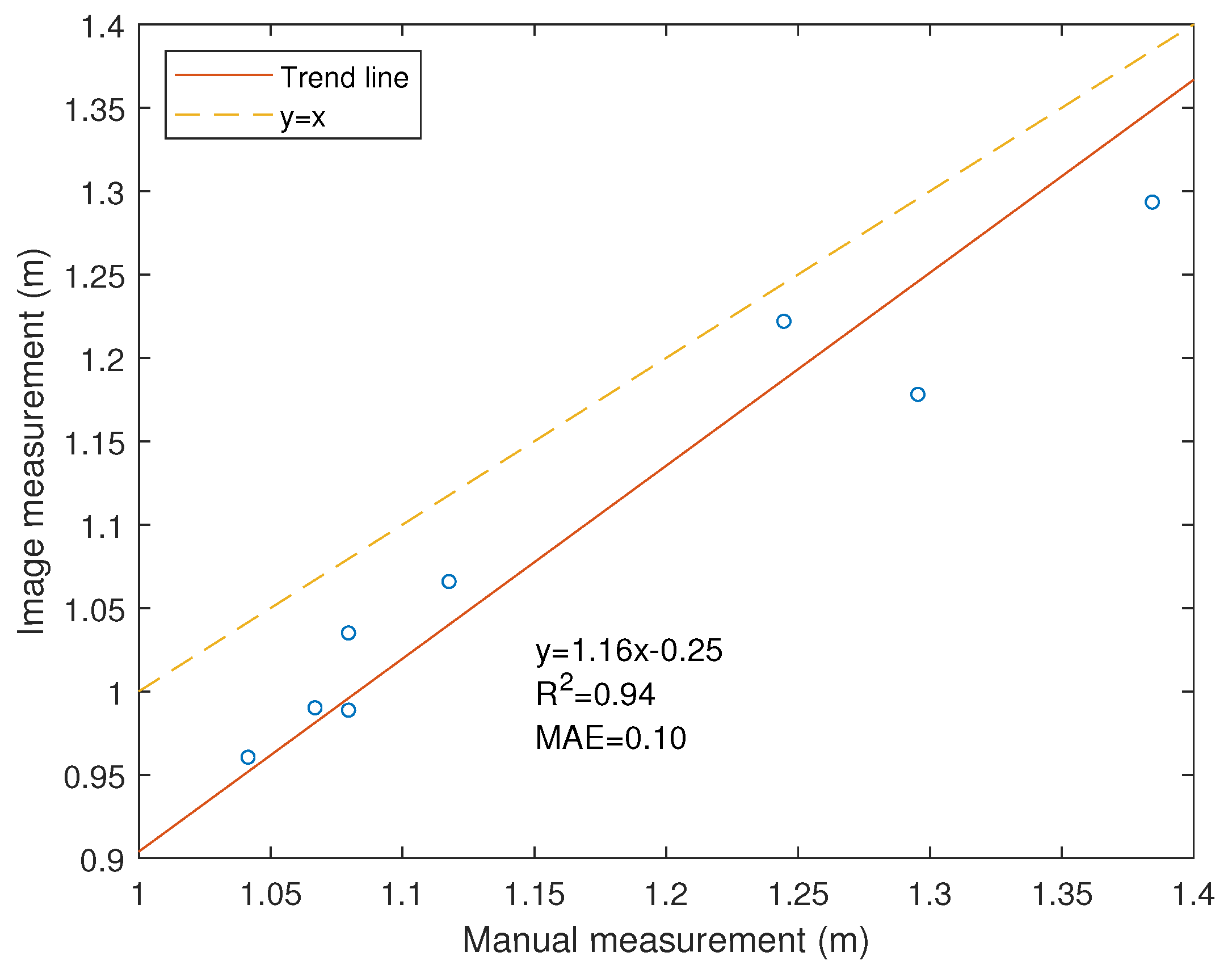

6.3.2. Accuracy of the Canopy Height

6.3.3. Accuracy of the Canopy Vegetation Index

6.3.4. Data Visualization

7. Discussion

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Thermal Camera | Multispectral Camera | DSLR RGB Camera | Industrial RGB Camera | LiDAR |
|---|---|---|---|---|---|
| Manufacturer | FLIR Systems | MicaSense | Panasonic | FLIR Systems | Velodyne |
| Model | Tau 2 | RedEdge | Lumix G6 | GrassHopper3 | VLP-16 |
| Dimensions (mm) | | | | | |
| Weight (g) | 112 | 150 | 390 | 90 | 830 |
| Resolution | | | | | Vertical: Horiz.: 0.1–0.4 |
| Focal length (mm) | 25 | 5.4 | 14–42 | 5 | N/A |
| Max FPS (Hz) | 30 | 1 | 1 | 75 | 20 |
| Spectral range (nm) | 7500–13,500 | 475, 560, 668, 717, 840 | N/A | N/A | N/A |
| Accuracy (cm) | N/A | N/A | N/A | N/A | Up to ±3 |

| | RGB Camera | Multispectral Camera (Blue) | Multispectral Camera (Green) | Multispectral Camera (Red) | Multispectral Camera (RedEdge) | Multispectral Camera (NIR) | Thermal Camera |
|---|---|---|---|---|---|---|---|
| Focal length (mm) | 5.004 | 5.470 | 5.513 | 5.469 | 5.477 | 5.499 | 25.099 |
| (mm) | 0.072 | 0.036 | 0.041 | 0.023 | 0.028 | 0.049 | −0.049 |
| (mm) | 0.020 | 0.061 | 0.000 | −0.045 | −0.015 | 0.098 | −0.240 |
| Skew angle (rad) | 8.26 | −1.00 | −1.87 | −7.75 | −1.14 | −5.82 | −3.12 |
| | −5.42 | −9.78 | −9.85 | −2.61 | −8.10 | −1.09 | −3.27 |
| | 1.08 | −1.57 | −1.27 | −5.42 | −2.61 | −7.20 | 1.77 |
| | −4.44 | −4.38 | −3.90 | −4.66 | 1.71 | −8.09 | −3.61 |
| | −1.34 | 2.08 | 5.72 | −1.55 | −2.10 | 6.55 | 8.50 |
| | 1.25 | 5.86 | 2.76 | 1.95 | 7.78 | 1.64 | 6.73 |

| | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| MAE (m) | 0.501 | 0.531 | 0.100 | 0.531 | 0.462 | 0.395 | 0.308 | 0.196 | 0.060 |
| | 0.742 | 0.799 | 0.691 | 0.799 | 0.651 | 0.543 | 0.481 | 0.619 | 0.766 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, R.; Li, C.; Bernardes, S. Development and Testing of a UAV-Based Multi-Sensor System for Plant Phenotyping and Precision Agriculture. Remote Sens. 2021, 13, 3517. https://doi.org/10.3390/rs13173517
